Post Syndicated from Geographics original https://www.youtube.com/watch?v=JMPrxUdMpRE

Armed to Boot: an enhancement to Arm’s Secure Boot chain

Post Syndicated from Derek Chamorro original https://blog.cloudflare.com/armed-to-boot/

Over the last few years, there has been a rise in the number of attacks that affect how a computer boots. Most modern computers use a specification called Unified Extensible Firmware Interface (UEFI) that defines a software interface between an operating system (e.g. Windows) and platform firmware (e.g. disk drives, video cards). There are security mechanisms built into UEFI that ensure that platform firmware can be cryptographically validated and boot securely through an application called a bootloader. This firmware is stored in non-volatile SPI flash memory on the motherboard, so it persists on the system even if the operating system is reinstalled and drives are replaced.

This creates a ‘trust anchor’ used to validate each stage of the boot process, but, unfortunately, this trust anchor is also a target for attack. In these UEFI attacks, malicious actions are loaded onto a compromised device early in the boot process. This means that malware can change configuration data, establish persistence by ‘implanting’ itself, and can bypass security measures that are only loaded at the operating system stage. So, while UEFI-anchored secure boot protects the bootloader from bootloader attacks, it does not protect the UEFI firmware itself.

Because of this growing trend of attacks, we began the process of cryptographically signing our UEFI firmware as a mitigation step. While our existing solution is platform specific to our x86 AMD server fleet, we did not have a similar solution to UEFI firmware signing for Arm. To determine what was missing, we had to take a deep dive into the Arm secure boot process.

Read on to learn about the world of Arm Trusted Firmware Secure Boot.

Arm Trusted Firmware Secure Boot

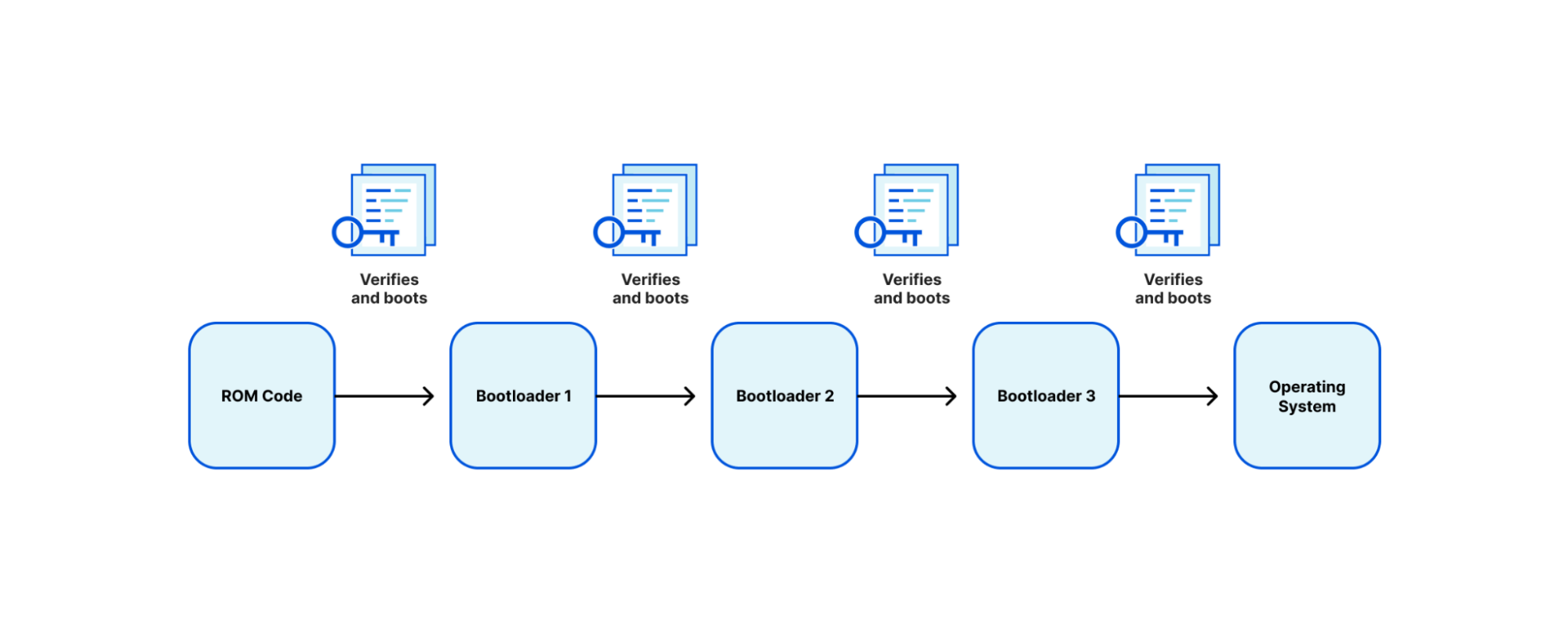

Arm defines a trusted boot process through an architecture called Trusted Board Boot Requirements (TBBR), or Arm Trusted Firmware (ATF) Secure Boot. TBBR works by authenticating a series of cryptographically signed binary images each containing a different stage or element in the system boot process to be loaded and executed. Every bootloader (BL) stage accomplishes a different stage in the initialization process:

BL1

BL1 defines the boot path (is this a cold boot or warm boot), initializes the architectures (exception vectors, CPU initialization, and control register setup), and initializes the platform (enables watchdog processes, MMU, and DDR initialization).

BL2

BL2 prepares initialization of the Arm Trusted Firmware (ATF), the stack responsible for setting up the secure boot process. After ATF setup, the console is initialized, memory is mapped for the MMU, and message buffers are set for the next bootloader.

BL3

The BL3 stage has multiple parts, the first being initialization of runtime services that are used in detecting system topology. After initialization, there is a handoff between the ATF ‘secure world’ boot stage to the ‘normal world’ boot stage that includes setup of UEFI firmware. Context is set up to ensure that no secure state information finds its way into the normal world execution state.

Each image is authenticated by a public key, which is stored in a signed certificate and can be traced back to a root key stored on the SoC in one time programmable (OTP) memory or ROM.

TBBR was originally designed for cell phones. This established a reference architecture on how to build a “Chain of Trust” from the first ROM executed (BL1) to the handoff to “normal world” firmware (BL3). While this creates a validated firmware signing chain, it has caveats:

- SoC manufacturers are heavily involved in the secure boot chain, while the customer has little involvement.

- A unique SoC SKU is required per customer. With one customer this could be easy, but most manufacturers have thousands of SKUs.

- The SoC manufacturer is primarily responsible for end-to-end signing and maintenance of the PKI chain. This adds complexity to the process requiring USB key fobs for signing.

- Doesn’t scale outside the manufacturer.

What this tells us is what was built for cell phones doesn’t scale for servers.

If we were involved 100% in the manufacturing process, then this wouldn’t be as much of an issue, but we are a customer and consumer. As a customer, we have a lot of control of our server and block design, so we looked at design partners that would take some of the concepts we were able to implement with AMD Platform Secure Boot and refine them to fit Arm CPUs.

Amping it up

We partnered with Ampere and tested their Altra Max single socket rack server CPU (code named Mystique), which provides high performance with incredible power efficiency per core, much of what we were looking for in reducing power consumption. This is only a small subset of the specs, but Ampere backported various features into the Altra Max, notably speculative attack mitigations that include Meltdown and Spectre (variants 1 and 2) from the Armv8.5 instruction set architecture, giving Altra the “+” designation in their ISA.

Ampere does implement a signed boot process similar to the ATF signing process mentioned above, but with some slight variations. We’ll explain it a bit to help set context for the modifications that we made.

Ampere Secure Boot

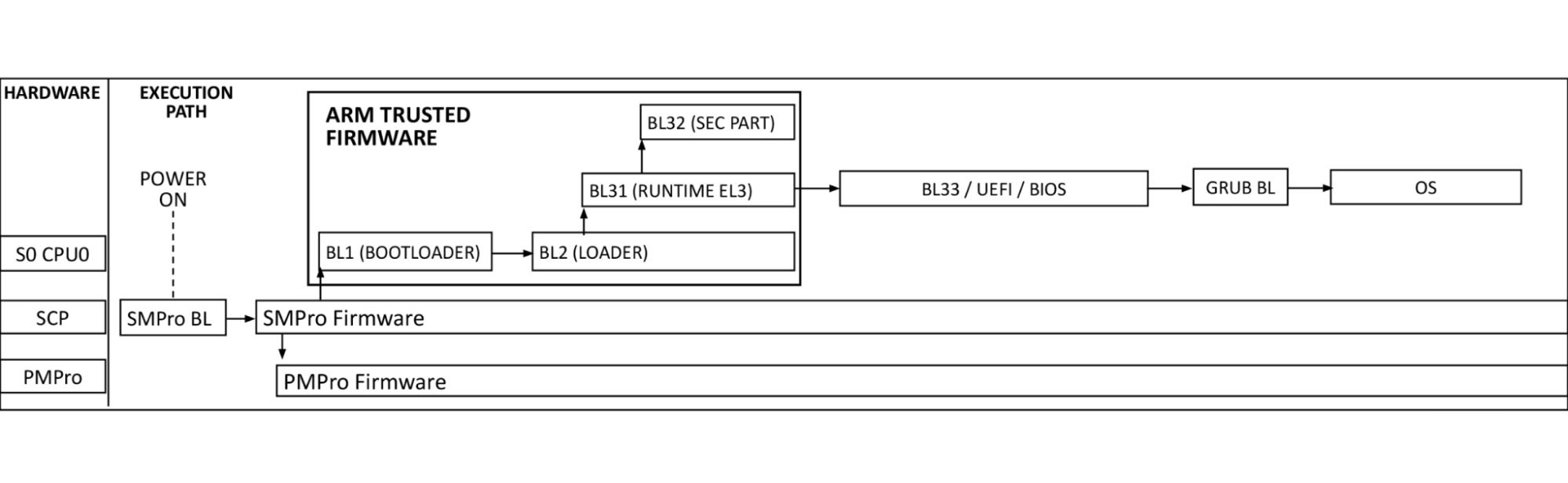

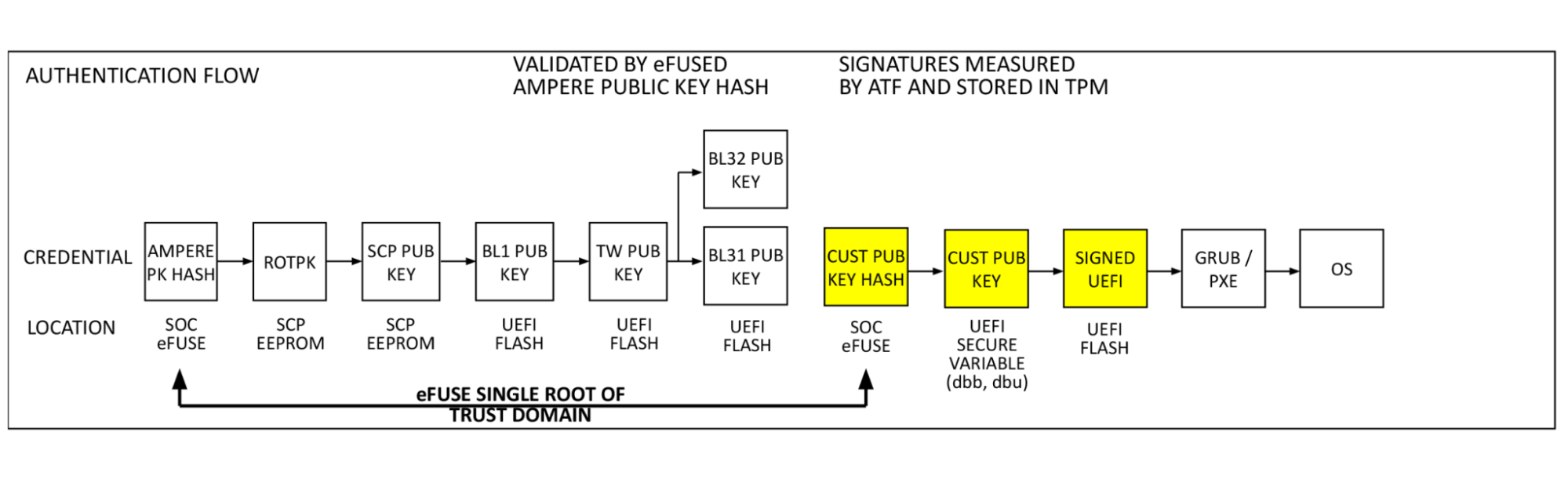

The diagram above shows the Arm processor boot sequence as implemented by Ampere. The System Control Processors (SCP) comprise the System Management Processor (SMpro) and the Power Management Processor (PMpro). The SMpro is responsible for features such as secure boot and BMC communication, while the PMpro is responsible for power features such as Dynamic Frequency Scaling and on-die thermal monitoring.

At power-on-reset, the SCP runs the system management bootloader from ROM and loads the SMpro firmware. After initialization, the SMpro spawns the power management stack on the PMpro and ATF threads. The ATF BL2 and BL31 bring up processor resources such as DRAM, and PCIe. After this, control is passed to BL33 BIOS.

Authentication flow

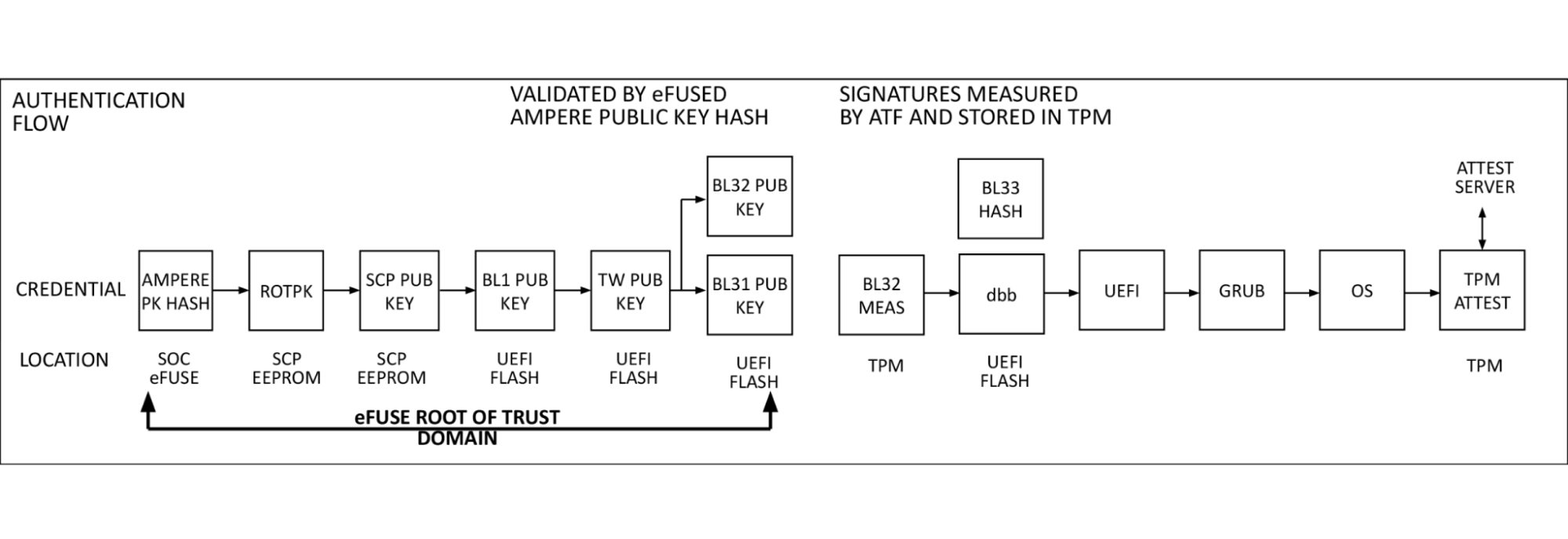

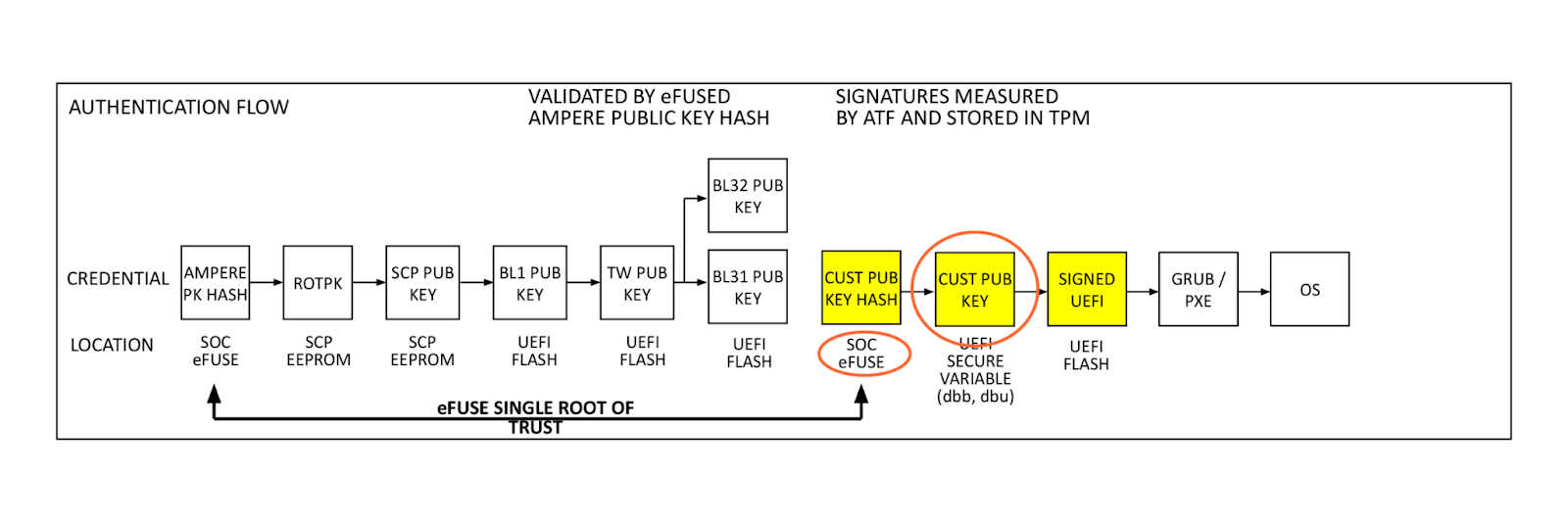

At power on, the SMpro firmware reads Ampere’s public key (ROTPK) from the SMpro key certificate in SCP EEPROM, computes a hash and compares this to Ampere’s public key hash stored in eFuse. Once authenticated, Ampere’s public key is used to decrypt key and content certificates for SMpro, PMpro, and ATF firmware, which are launched in the order described above.

The SMpro public key will be used to authenticate the SMpro and PMpro images and ATF keys, which in turn will authenticate ATF images. This cascading set of authentication originates with the Ampere root key, whose hash is stored in a chip called an electronic fuse, or eFuse. An eFuse can be programmed only once, which makes its content read-only so that it cannot be tampered with or modified.

This is the original hardware root of trust used for signing system, secure world firmware. When we looked at this, after referencing the signing process we had with AMD PSB and knowing there was a large enough one-time-programmable (OTP) region within the SoC, we thought: why can’t we insert our key hash in here?

Single Domain Secure Boot

Single Domain Secure Boot takes the same authentication flow and adds a hash of the customer public key (Cloudflare firmware signing key in this case) to the eFuse domain. This enables the verification of UEFI firmware by a hardware root of trust. This process is performed in the already validated ATF firmware by BL2. Our public key (dbb) is read from UEFI secure variable storage, a hash is computed and compared to the public key hash stored in eFuse. If they match, the validated public key is used to decrypt the BL33 content certificate, validating and launching the BIOS, and remaining boot items. This is the key feature added by SDSB. It validates the entire software boot chain with a single eFuse root of trust on the processor.
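The core of that check is a hash comparison. Here is a minimal Python sketch of it, purely for illustration: the real check runs inside ATF BL2, not in Python, and the use of SHA-256 is an assumption, since the hash algorithm is not named here. The function names and the sample key bytes are hypothetical.

```python
import hashlib
import hmac


def key_hash(public_key_der: bytes) -> bytes:
    """Hash a DER-encoded public key. SHA-256 is an assumption, not a stated fact."""
    return hashlib.sha256(public_key_der).digest()


def key_matches_fuse(public_key_der: bytes, fused_hash: bytes) -> bool:
    """Return True only if the key read from secure variable storage
    hashes to the value that was burned into eFuse."""
    return hmac.compare_digest(key_hash(public_key_der), fused_hash)


# Illustrative stand-ins, not real key material.
customer_key = b"example DER-encoded public key bytes"
fused_hash = key_hash(customer_key)  # provisioned once, at manufacturing time
tampered_key = b"attacker-supplied key bytes"

print(key_matches_fuse(customer_key, fused_hash))
print(key_matches_fuse(tampered_key, fused_hash))
```

Only a key that passes this comparison would go on to be used against the BL33 content certificate; that signature step is not modelled in the sketch.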

Building blocks

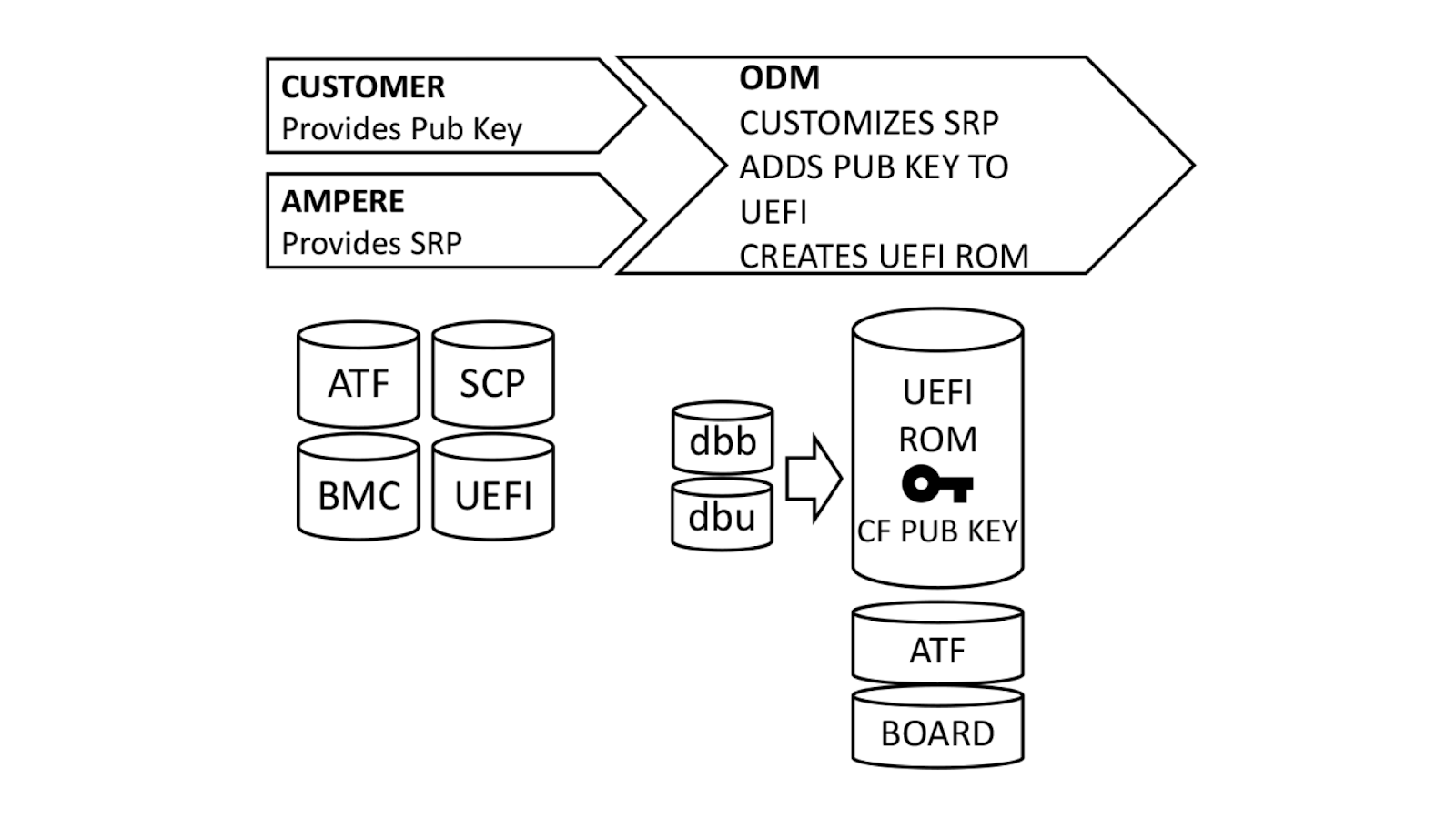

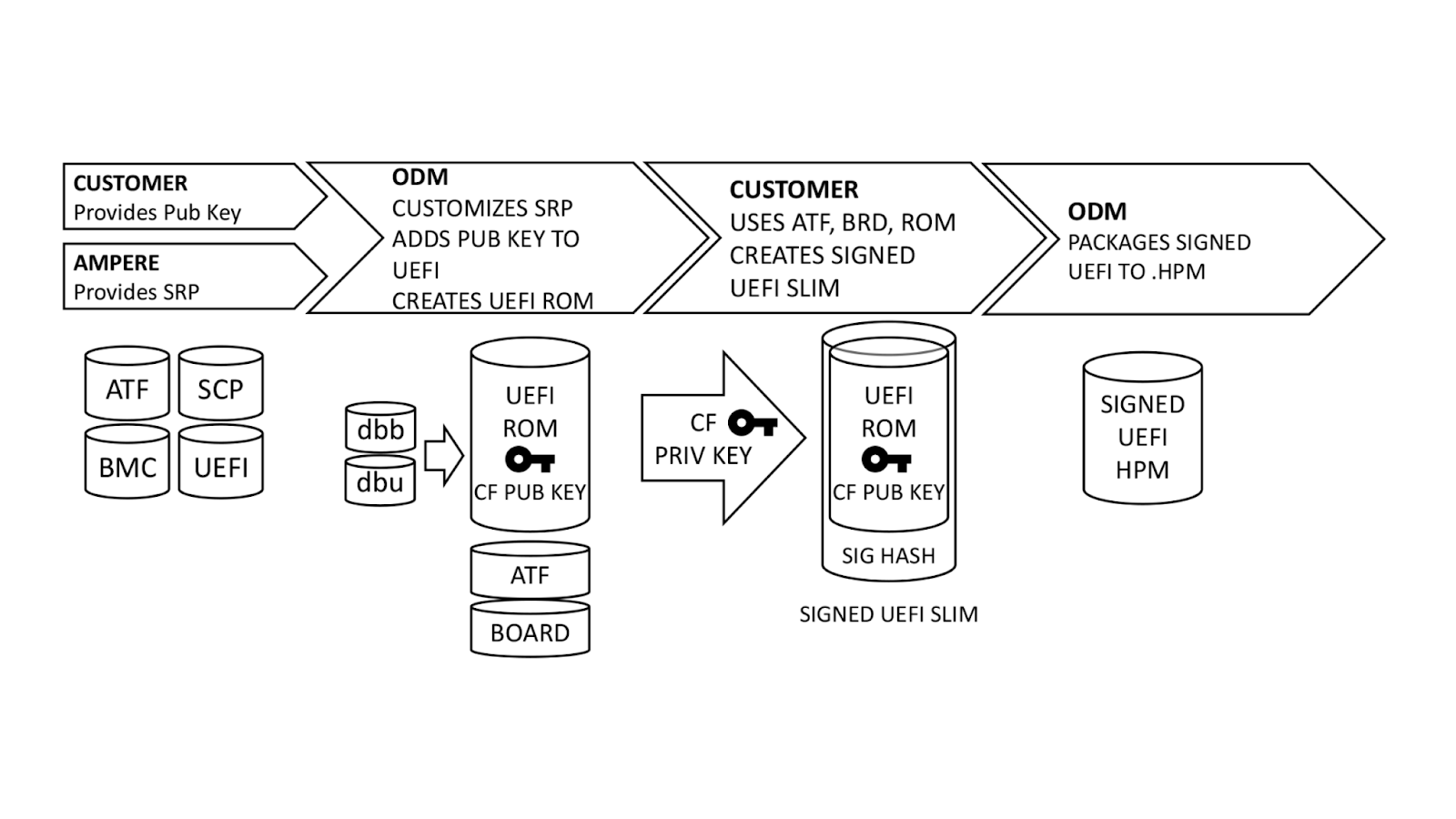

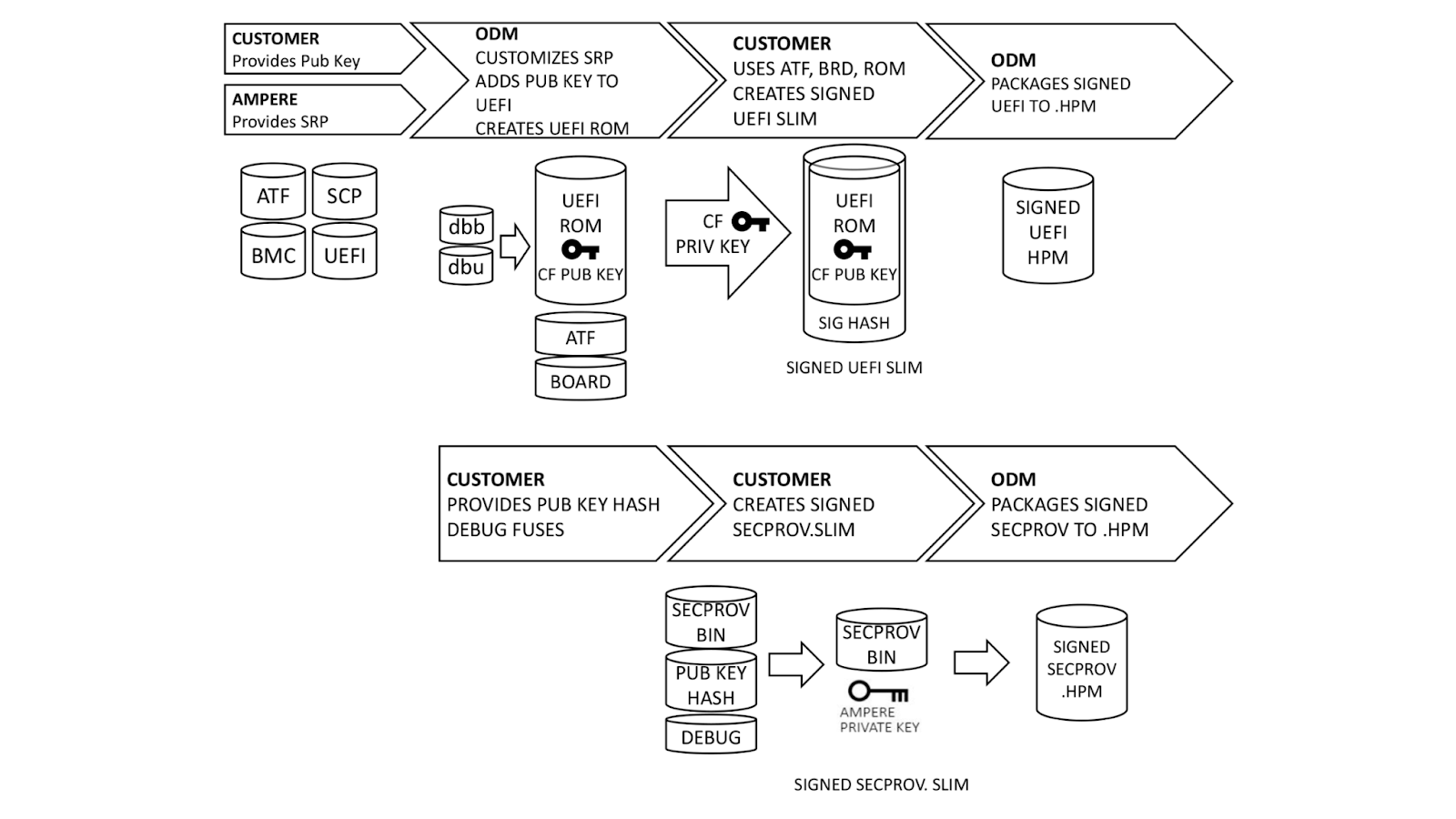

With a basic understanding of how Single Domain Secure Boot works, the next logical question is “How does it get implemented?”. We ensure that all UEFI firmware is signed at build time, but this process can be better understood if broken down into steps.

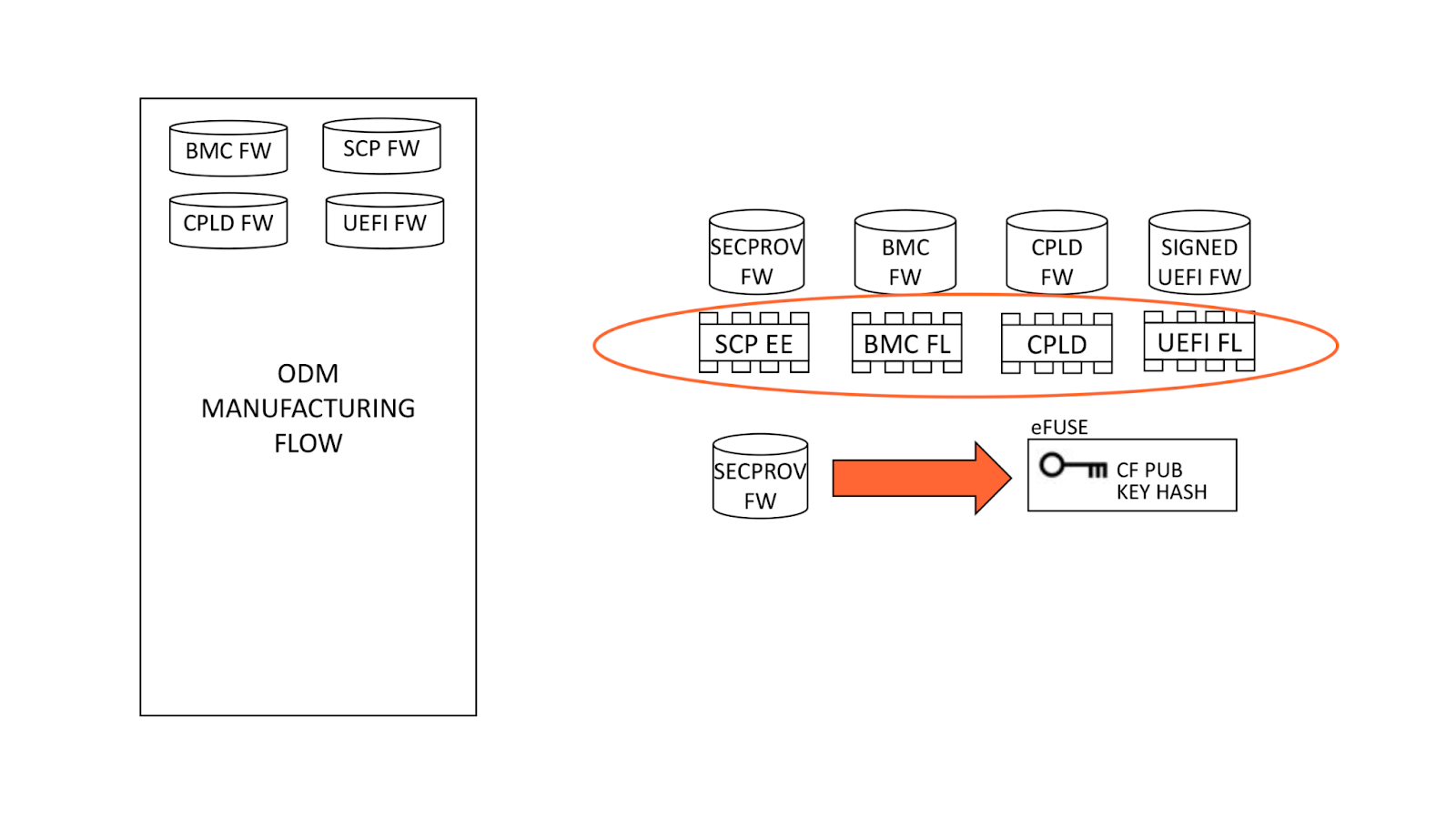

Ampere, our original design manufacturer (ODM), and we each play a role in the execution of SDSB. First, we generate certificates for a public-private key pair using our internal, secure PKI. The public key side is provided to the ODM as dbb.auth and dbu.auth in UEFI secure variable format. Ampere provides a reference Software Release Package (SRP) including the baseboard management controller, system control processor, UEFI, and complex programmable logic device (CPLD) firmware to the ODM, who customizes it for their platform. The ODM generates a board file describing the hardware configuration, and also customizes the UEFI to enroll dbb and dbu to secure variable storage on first boot.

Once this is done, we generate a UEFI.slim file using the ODM’s UEFI ROM image, Arm Trusted Firmware (ATF) and Board File. (Note: This differs from AMD PSB insofar as the entire image and ATF files are signed; with AMD PSB, only the first block of boot code is signed.) The entire .SLIM file is signed with our private key, producing a signature hash in the file. This can only be authenticated by the correct public key. Finally, the ODM packages the UEFI into .HPM format compatible with their platform BMC.

In parallel, we provide the debug fuse selection and hash of our DER-formatted public key. Ampere uses this information to create a special version of the SCP firmware known as Security Provisioning (SECPROV) .slim format. This firmware is run one time only, to program the debug fuse settings and public key hash into the SoC eFuses. Ampere delivers the SECPROV .slim file to the ODM, who packages it into a .hpm file compatible with the BMC firmware update tooling.

Fusing the keys

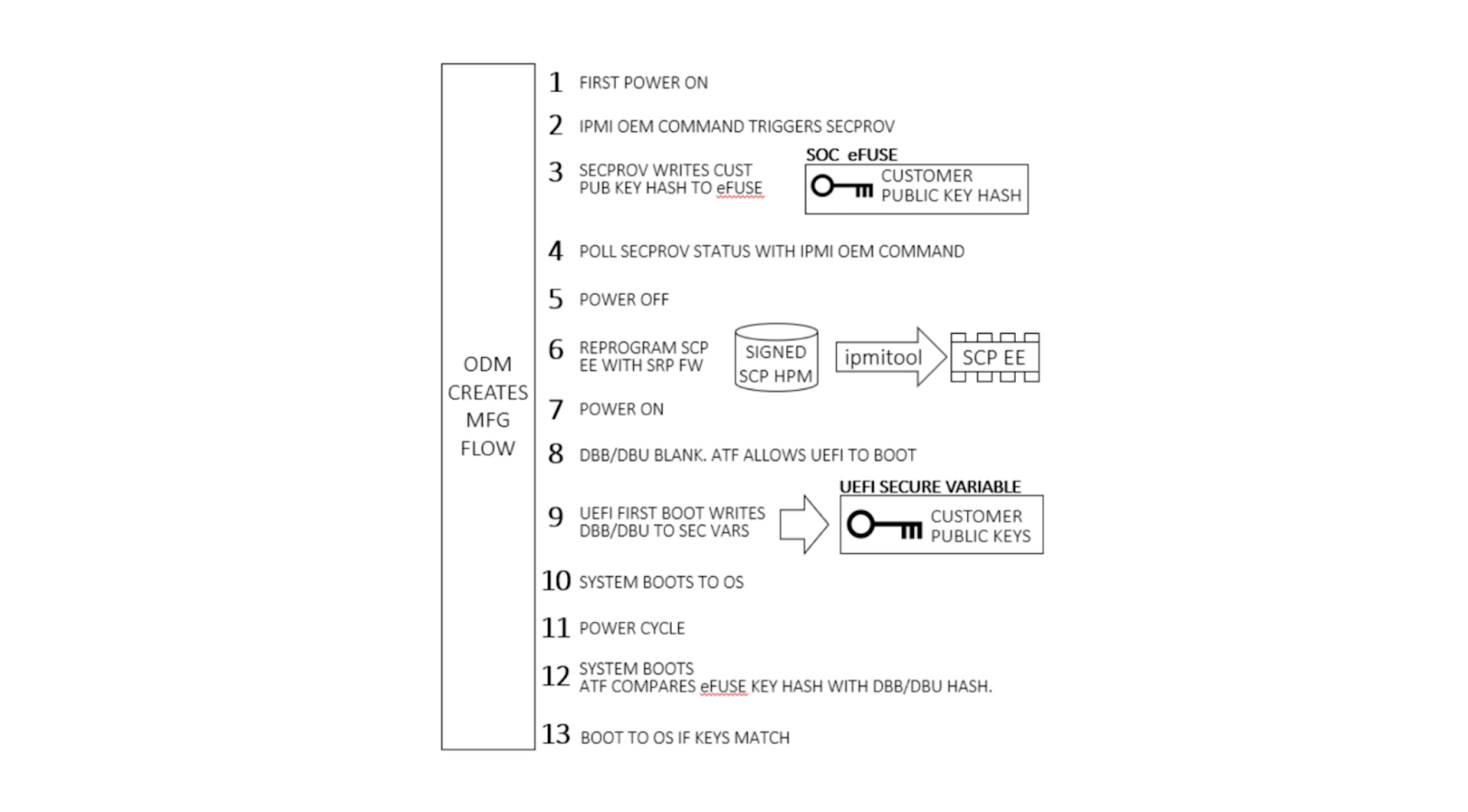

During system manufacturing, firmware is pre-programmed into storage ICs before placement on the motherboard. Note that the SCP EEPROM contains the SECPROV image, not standard SCP firmware. After a system is first powered on, an IPMI command is sent to the BMC which releases the Ampere processor from reset. This allows SECPROV firmware to run, burning the SoC eFuse with our public key hash and debug fuse settings.

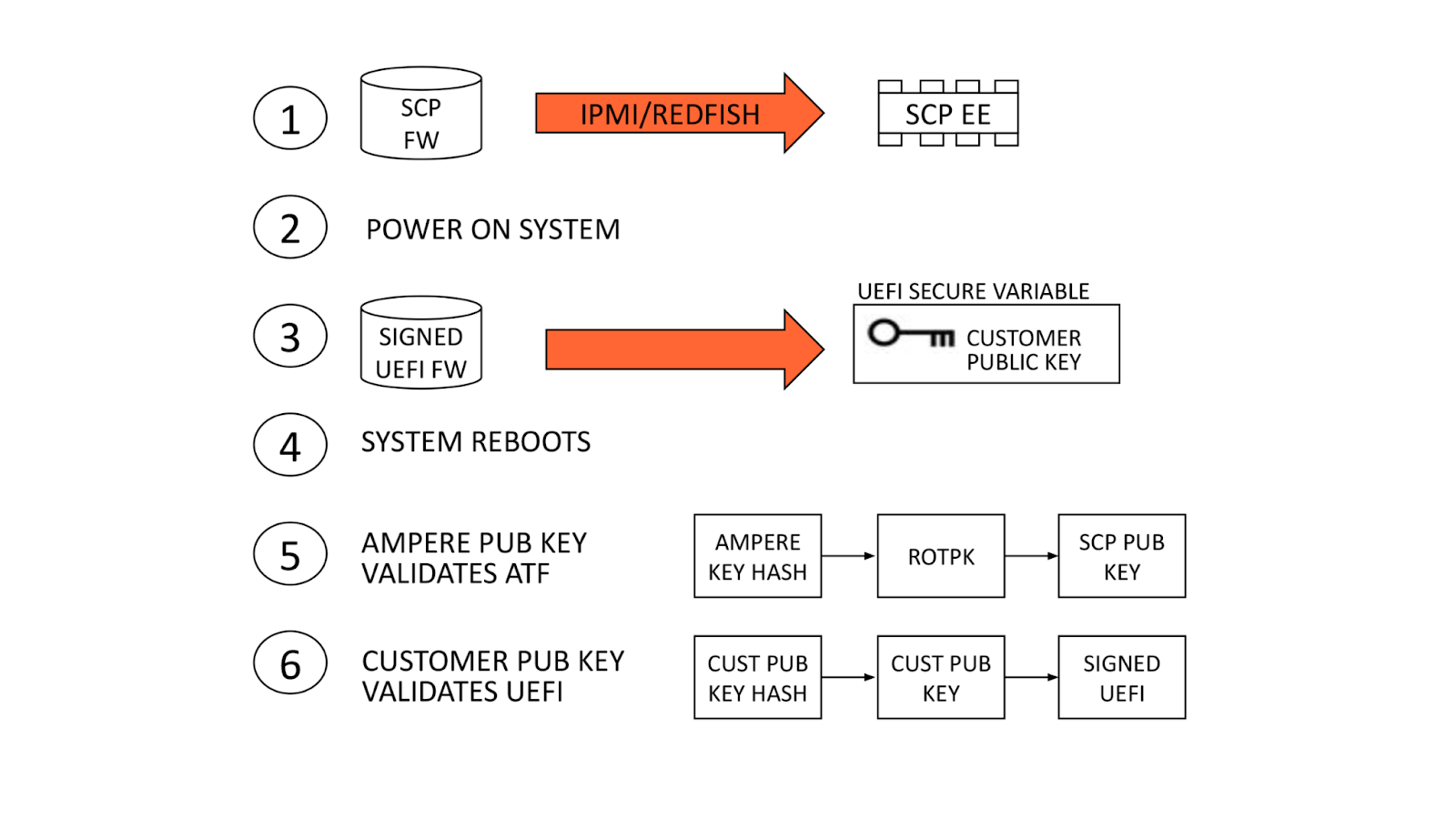

Final manufacturing flow

Once our public key has been provisioned, manufacturing proceeds by re-programming the SCP EEPROM with its regular firmware. Once the system powers on, ATF detects there are no keys present in secure variable storage and allows UEFI firmware to boot, regardless of signature. Since this is the first UEFI boot, it programs our public key into secure variable storage and reboots. ATF is validated by Ampere’s public key hash as usual. Since our public key is present in dbb, it is validated against our public key hash in eFuse and allows UEFI to boot.
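The first-boot behaviour described above can be read as a small decision procedure. The following Python sketch is a simplified model under stated assumptions: SHA-256 stands in for the unnamed hash algorithm, the `uefi_signature_ok` flag stands in for the actual signature verification (which is not implemented here), and the function name and return strings are hypothetical labels.

```python
import hashlib
import hmac
from typing import Optional


def boot_decision(dbb: Optional[bytes], fused_hash: bytes, uefi_signature_ok: bool) -> str:
    """Model the ATF decision for booting UEFI.

    dbb               -- public key from secure variable storage, or None if empty
    fused_hash        -- customer public key hash stored in eFuse
    uefi_signature_ok -- stand-in for verifying the UEFI image against dbb
    """
    if dbb is None:
        # No keys enrolled yet: UEFI is allowed to boot regardless of
        # signature, enrolls the key, and the system reboots.
        return "boot-unverified-then-enroll"
    computed = hashlib.sha256(dbb).digest()  # SHA-256 is an assumption
    if not hmac.compare_digest(computed, fused_hash):
        return "halt"  # enrolled key does not match the hardware root of trust
    return "boot" if uefi_signature_ok else "halt"


key = b"example public key bytes"
fuse = hashlib.sha256(key).digest()

print(boot_decision(None, fuse, False))  # very first UEFI boot
print(boot_decision(key, fuse, True))    # subsequent boots with signed UEFI
print(boot_decision(key, fuse, False))   # unsigned or wrongly signed UEFI
```

The model makes the one-time window explicit: signature checking is skipped only while secure variable storage holds no key.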

Validation

The first part of validation requires observing successful destruction of the eFuses. This imprints our public key hash into a dedicated, immutable memory region, not allowing the hash to be overwritten. Upon automatic or manual issue of an IPMI OEM command to the BMC, the BMC observes a signal from the SECPROV firmware, denoting eFuse programming completion. This can be probed with BMC commands.

When the eFuses have been blown, validation continues by observing the boot chain of the other firmware. Corruption of the SCP, ATF, or UEFI firmware obstructs boot flow and boot authentication and will cause the machine to fail booting to the OS. Once firmware is in place, happy path validation begins with booting the machine.

Upon first boot, firmware boots in the following order: BMC, SCP, ATF, and UEFI. The BMC, SCP, and ATF firmware can be observed via their respective serial consoles. The UEFI will automatically enroll the dbb and dbu files to the secure variable storage and trigger a reset of the system.

After observing the reset, the machine should successfully boot to the OS if the feature is executed correctly. For further validation, we can use the UEFI shell environment to extract the dbb file and compare the hash against the hash submitted to Ampere. After successfully validating the keys, we flash an unsigned UEFI image. An unsigned UEFI image causes authentication failure at bootloader stage BL3-2. The ATF firmware undergoes a boot loop as a result. Similar results will occur for a UEFI image signed with incorrect keys.

Updated authentication flow

On all subsequent boot cycles, the ATF will read secure variable dbb (our public key), compute a hash of the key, and compare it to the read-only Cloudflare public key hash in eFuse. If the computed and eFuse hashes match, our public key variable can be trusted and is used to authenticate the signed UEFI. After this, the system boots to the OS.

Let’s boot!

We were unable to get a machine without the feature enabled to demonstrate the set-up of the feature since we have the eFuse set at build time, but we can demonstrate what it looks like to go between an unsigned BIOS and a signed BIOS. What we would have observed with the set-up of the feature is a custom BMC command to instruct the SCP to burn the ROTPK into the SOC’s OTP fuses. From there, we would observe feedback to the BMC detailing whether burning the fuses was successful. Upon booting the UEFI image for the first time, the UEFI will write the dbb and dbu into secure storage.

As you can see, after flashing the unsigned BIOS, the machine fails to boot.

Despite the lack of visibility in failure to boot, there are a few things going on underneath the hood. The SCP (System Control Processor) still boots.

- The SCP image holds a key certificate with Ampere’s generated ROTPK and the SCP key hash. SCP will calculate the ROTPK hash and compare it against the burned OTP fuses. In the failure case, where the hash does not match, you will observe a failure as you saw earlier. If successful, the SCP firmware will proceed to boot the PMpro and SMpro. Both the PMpro and SMpro firmware will be verified and proceed with the ATF authentication flow.

- The conclusion of the SCP authentication is the passing of the BL1 key to the first stage bootloader via the SCP HOB (hand-off block) to proceed with the standard three stage bootloader ATF authentication mentioned previously.

- At BL2, the dbb is read out of the secure variable storage and used to authenticate the BL33 certificate and complete the boot process by booting the BL33 UEFI image.

Still more to do

In recent years, management interfaces on servers, like the BMC, have been the target of cyber attacks including ransomware, implants, and disruptive operations. Access to the BMC can be local or remote. With remote vectors open, there is potential for malware to be installed on the BMC via network interfaces. With compromised software on the BMC, malware or spyware could maintain persistence on the server. An attacker might be able to update the BMC directly using flashing tools such as flashrom or socflash without the same level of firmware resilience established at the UEFI level.

The future state involves using host CPU-agnostic infrastructure to enable a cryptographically secure host prior to boot time. We will look to incorporate a modular approach that has been proposed by the Open Compute Project’s Data Center Secure Control Module (DC-SCM) 2.0 specification. This will allow us to standardize our Root of Trust, sign our BMC, and assign physically unclonable function (PUF) based identity keys to components and peripherals to limit the use of OTP fusing. OTP fusing creates a problem with trying to “e-cycle” or reuse machines as you cannot truly remove a machine identity.

Animal Shelters: A History

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=t7jky9WX0t0

Combining computing and maths to teach primary learners about variables

Post Syndicated from Katharine Childs original https://www.raspberrypi.org/blog/variables-primary-school-computing-maths-education-seminar/

In our first seminar of 2023, we were delighted to welcome Dr Katie Rich and Carla Strickland. They spoke to us about teaching the programming construct of variables in Grade 3 and 4 (age 8 to 10).

We are hearing from a diverse range of speakers in our current series of monthly online research seminars focused on primary (K-5) computing education. Many of them work closely with educators to translate research findings into classroom practice to make sure that all our younger learners have positive first experiences of learning computing. An important goal of their research is to impact the development of pedagogy, resources, and professional development to support educators to deliver computing concepts with confidence.

Variables in computing and mathematics

Dr Katie Rich (American Institutes for Research) and Carla Strickland (UChicago STEM Education) are both part of a team that worked on a research project called Everyday Computing, which aims to integrate computational thinking into primary mathematics lessons. A key part of the Everyday Computing project was to develop coherent learning resources across a number of school years. During the seminar, Katie and Carla presented on a study in the project that revolved around teaching variables in Grade 3 and 4 (age 8 to 10) by linking this computing concept to mathematical concepts such as area, perimeter, and fractions.

Variables are used in both mathematics and computing, but in significantly different ways. In mathematics, a variable, often represented by a single letter such as x or y, corresponds to a quantity that stays the same for a given problem. However, in computing, a variable is an identifier used to label data that may change as a computer program is executed. A variable is one of the programming constructs that can be used to generalise programs to make them work for a range of inputs. Katie highlighted that the research team was keen to explore the synergies and tensions that arise when curriculum subjects share terms, as is the case for ‘variable’.

Defining a learning trajectory

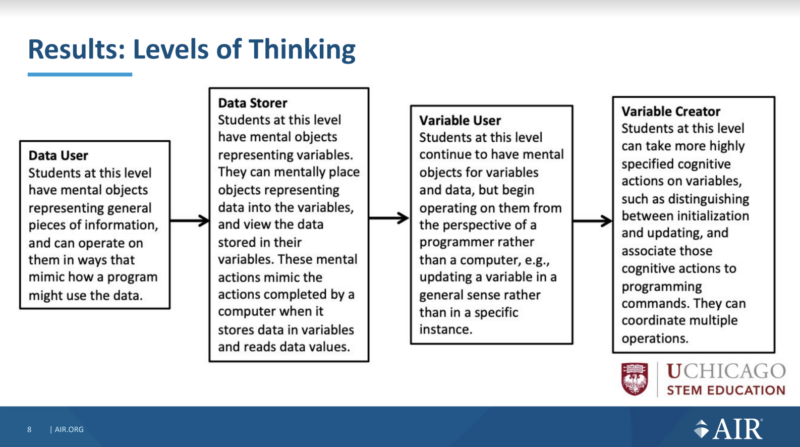

At the start of the project, in order to be able to develop coherent learning resources across school years, the team reviewed research papers related to teaching the programming construct of variables. In the papers, they found a variety of learning goals that related to facts (what learners need to know) and skills (what learners need to be able to do). They grouped these learning goals and arranged the groups into ‘levels of thinking’, which were then mapped onto a learning trajectory to show progression pathways for learning.

Learning materials about variables

Carla then shared three practical examples of learning resources their research team created that integrated the programming construct of variables into a maths curriculum. The three activities, described below, form part of a series of lessons called Action Fractions. You can read more about the series of lessons in this research paper.

Robot Boxes is an unplugged activity that is positioned at the Data User level of thinking. It relates to creating instructions for a fictional robot. Learners have to pay attention to different data the robot needs in order to draw a box, such as the length and width, and also to the value that the robot calculates as area of the box. The lesson uses boxes on paper as concrete representations of variables to which learners can physically add values.

Ambling Animals is set at the ‘Data Storer’ and ‘Variable Interpreter’ levels of thinking. It includes a Scratch project to help students to locate and compare fractions on number lines. During this lesson, learners find a variable that holds the value of the animal that represents the larger of two fractions.

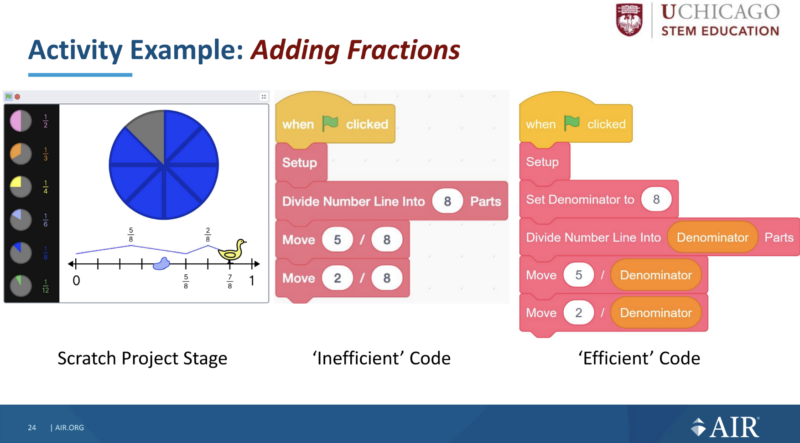

Adding Fractions draws on facts and skills from the ‘Variable Interpreter’ and ‘Variable Implementer’ levels of thinking and also includes a Scratch project. The Scratch project visualises adding fractions with the same denominator on a number line. The lesson starts to explain why variables are so important in computer programs by demonstrating how using a variable can make code more efficient.

Takeaways: Cross-curricular teaching, collaborative research

Teaching about the programming construct of variables can be challenging, as it requires young learners to understand abstract ideas. The research Katie and Carla presented shows how integrating these concepts into a mathematics curriculum is one way to highlight tangible uses of variables in everyday problems. The levels of thinking in the learning trajectory provide a structure helping teachers to support learners to develop their understanding and skills; the same levels of thinking could be used to introduce variables in other contexts and curricula.

Many primary teachers use cross-curricular learning to increase children’s engagement and highlight real-world examples. The seminar showed how important it is for teachers to pay attention to terms used across subjects, such as the word ‘variable’, and to explicitly explain a term’s different meanings. Katie and Carla shared a practical example of this when they suggested that computing teachers need to do more to stress the difference between equations such as xy = 45 in maths and assignment statements such as length = 45 in computing.
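To make that difference concrete, here is a small illustrative sketch. It uses Python rather than Scratch, which the lessons themselves use, and the `width` variable and `box_area` function are invented for this example, loosely following the length, width, and area of the Robot Boxes activity.

```python
# In maths, x * y = 45 states a relationship that holds for the whole problem.
# In computing, length = 45 is an instruction: store 45 under the label "length".
length = 45
width = 2
area = length * width  # calculated once, from the values stored right now

# Assignment can change the stored value. Read as a maths equation,
# "length = length + 5" would be nonsense; as an instruction it is fine.
length = length + 5
area_after = length * width  # "area" itself is not updated automatically


def box_area(length, width):
    """Variables let one piece of code work for a range of inputs."""
    return length * width


print(area, length, area_after, box_area(3, 4))
```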

The Everyday Computing project resources were created by a team of researchers and educators who worked together to translate research findings into curriculum materials. This type of collaboration can be really valuable in driving a research agenda to directly improve learning outcomes for young people in classrooms.

How can this research influence your classroom practice or other activities as an educator? Let us know your thoughts in the comments. We’ll be continuing to reflect on this question throughout the seminar series.

You can watch Katie’s and Carla’s full presentation here:

Join our seminar series on primary computing education

Our monthly seminar series on primary (K–5) teaching and learning is of interest to a global audience of educators, including those who want to understand the prior learning experiences of older learners.

We continue on Tuesday 7 February at 17.00 UK time, when we will hear from Dr Jean Salac, University of Washington. Jean will present her work in identifying inequities in elementary computing instruction and in developing a learning strategy, TIPP&SEE, to address these inequities. Sign up now, and we will send you a joining link for the session.

The post Combining computing and maths to teach primary learners about variables appeared first on Raspberry Pi.

US Cyber Command Operations During the 2022 Midterm Elections

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2023/01/us-cyber-command-operations-during-the-2022-midterm-elections.html

The head of both US Cyber Command and the NSA, Gen. Paul Nakasone, broadly discussed that first organization’s offensive cyber operations during the runup to the 2022 midterm elections. He didn’t name names, of course:

“We did conduct operations persistently to make sure that our foreign adversaries couldn’t utilize infrastructure to impact us,” said Nakasone. “We understood how foreign adversaries utilize infrastructure throughout the world. We had that mapped pretty well. And we wanted to make sure that we took it down at key times.”

Nakasone noted that Cybercom’s national mission force, aided by NSA, followed a “campaign plan” to deprive the hackers of their tools and networks. “Rest assured,” he said. “We were doing operations well before the midterms began, and we were doing operations likely on the day of the midterms.” And they continued until the elections were certified, he said.

We know Cybercom did similar things in 2018 and 2020, and presumably will again in two years.

A Quick Micron 9400 Pro Video

Post Syndicated from Patrick Kennedy original https://www.servethehome.com/a-quick-micron-9400-pro-video/

We have a quick video to go along with the Micron 9400 Pro 32TB PCIe Gen4 NVMe SSD review that we published last week.

The post A Quick Micron 9400 Pro Video appeared first on ServeTheHome.

Cloudflare Incident on January 24th, 2023

Post Syndicated from Kenny Johnson original https://blog.cloudflare.com/cloudflare-incident-on-january-24th-2023/

Several Cloudflare services became unavailable for 121 minutes on January 24th, 2023 due to an error releasing code that manages service tokens. The incident degraded a wide range of Cloudflare products including aspects of our Workers platform, our Zero Trust solution, and control plane functions in our content delivery network (CDN).

Cloudflare provides a service token functionality to allow automated services to authenticate to other services. Customers can use service tokens to secure the interaction between an application running in a data center and a resource in a public cloud provider, for example. As part of the release, we intended to introduce a feature that showed administrators the time that a token was last used, giving users the ability to safely clean up unused tokens. The change inadvertently overwrote other metadata about the service tokens and rendered the tokens of impacted accounts invalid for the duration of the incident.

A single release caused so much damage because Cloudflare runs on Cloudflare. Service tokens impact the ability for accounts to authenticate, and two of the impacted accounts power multiple Cloudflare services. When these accounts’ service tokens were overwritten, the services that run on these accounts began to experience failed requests and other unexpected errors.

We know this impacted several customers and we know the impact was painful. We’re documenting what went wrong so that you can understand why this happened and the steps we are taking to prevent this from occurring again.

What is a service token?

When users log into an application or identity provider, they typically input a username and a password. The password allows that user to demonstrate that they are in control of the username and that the service should allow them to proceed. Layers of additional authentication can be added, like hard keys or device posture, but the workflow consists of a human proving they are who they say they are to a service.

However, humans are not the only users that need to authenticate to a service. Applications frequently need to talk to other applications. For example, imagine you build an application that shows a user information about their upcoming travel plans.

The airline holds details about the flight and its duration in their own system. They do not want to make the details of every individual trip public on the Internet and they do not want to invite your application into their private network. Likewise, the hotel wants to make sure that they only send details of a room booking to a valid, approved third party service.

Your application needs a trusted way to authenticate with those external systems. Service tokens solve this problem by functioning as a kind of username and password for your service. Like usernames and passwords, service tokens come in two parts: a Client ID and a Client Secret. Both the ID and Secret must be sent with a request for authentication. Tokens are also assigned a duration, after which they become invalid and must be rotated. You can grant your application a service token and, if the upstream systems you need validate it, your service can grab airline and hotel information and present it to the end user in a joint report.

When administrators create Cloudflare service tokens, we generate the Client ID and the Client Secret pair. Customers can then configure their requesting services to send both values as HTTP headers when they need to reach a protected resource. The requesting service can run in any environment, including inside of Cloudflare’s network in the form of a Worker or in a separate location like a public cloud provider. Customers need to deploy the corresponding protected resource behind Cloudflare’s reverse proxy. Our network checks every request bound for a configured service for the HTTP headers. If present, Cloudflare validates their authenticity and either blocks the request or allows it to proceed. We also log the authentication event.
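As a rough sketch of the client side, the snippet below builds (but does not send) a request carrying both values as headers. The header names `CF-Access-Client-Id` and `CF-Access-Client-Secret` are the ones Cloudflare Access documents for service tokens; they are not stated in this post. The URL, the secret, and the function name are hypothetical placeholders.

```python
import urllib.request


def build_service_token_request(url: str, client_id: str, client_secret: str) -> urllib.request.Request:
    """Attach both halves of a service token to a request as HTTP headers."""
    request = urllib.request.Request(url)
    request.add_header("CF-Access-Client-Id", client_id)
    request.add_header("CF-Access-Client-Secret", client_secret)
    return request


# Placeholder values; a real secret should come from a secret store, not source code.
request = build_service_token_request(
    "https://app.example.com/",
    "6b12308372690a99277e970a3039343c.access",
    "example-client-secret",
)

# urllib normalizes header capitalization; HTTP header names are case-insensitive.
for name, value in request.header_items():
    print(name, "=", "<redacted>" if "secret" in name.lower() else value)
```

Sending the request would be a matter of passing it to `urllib.request.urlopen`; it is left out here so the sketch runs without a network.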

Incident Timeline

All Timestamps are UTC

At 2023-01-24 16:55 UTC the Access engineering team initiated the release that inadvertently began to overwrite service token metadata, causing the incident.

At 2023-01-24 17:05 UTC a member of the Access engineering team noticed an unrelated issue and rolled back the release which stopped any further overwrites of service token metadata.

Service token values are not updated across Cloudflare’s network until the service token itself is updated (more details below). This caused a staggered impact for the service tokens that had their metadata overwritten.

2023-01-24 17:50 UTC: The first invalid service token for Cloudflare WARP was synced to the edge. Impact began for WARP and Zero Trust users.

At 2023-01-24 18:12 an incident was declared due to the large drop in successful WARP device posture uploads.

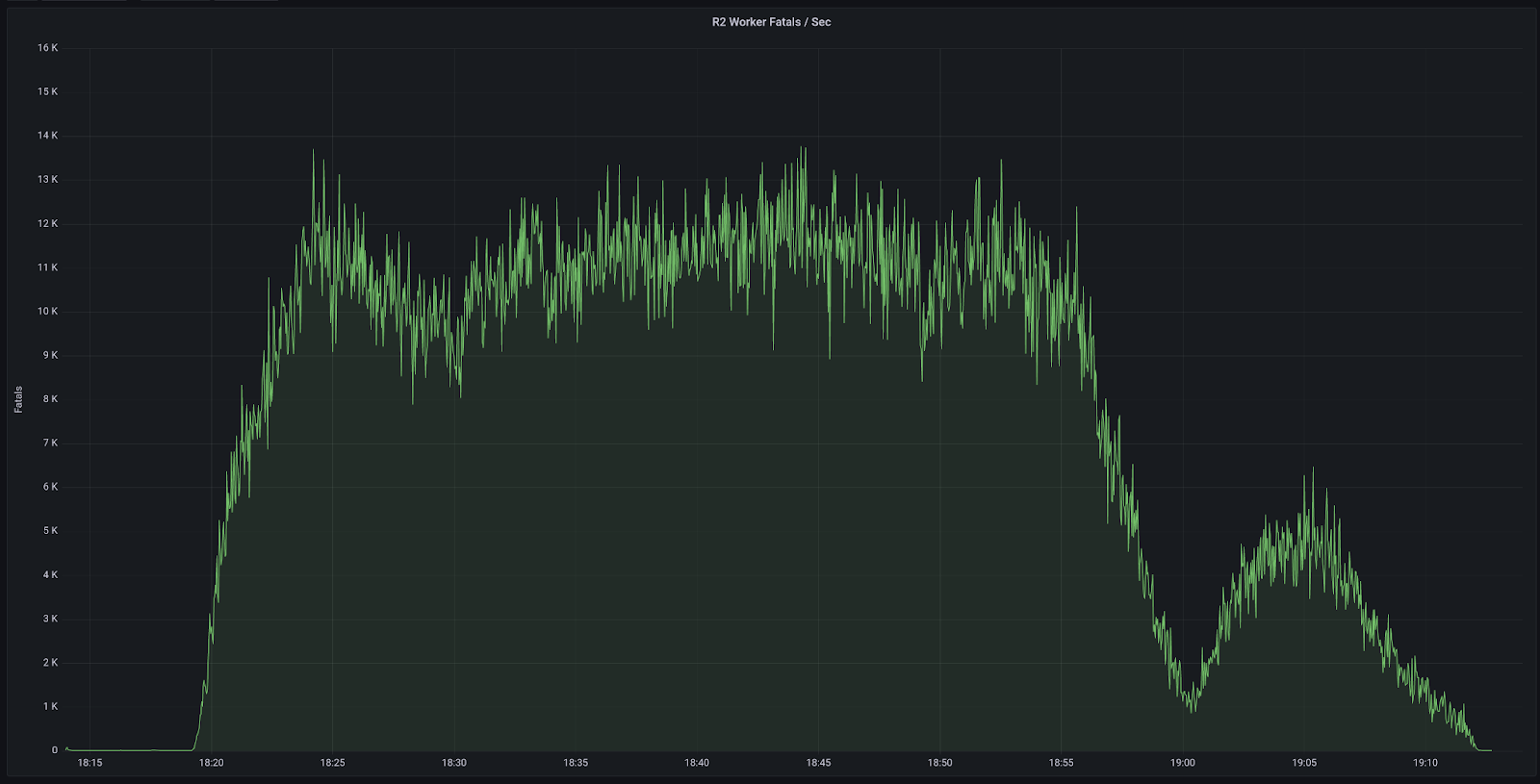

2023-01-24 18:19 UTC: The first invalid service token for the Cloudflare API was synced to the edge. Impact began for Cache Purge, Cache Reserve, Images and R2. Alerts were triggered for these products which identified a larger scope of the incident.

At 2023-01-24 18:21 the overwritten service tokens were discovered during the initial investigation.

At 2023-01-24 18:28 the incident was elevated to include all impacted products.

At 2023-01-24 18:51 an initial solution was identified and implemented to revert the service token to its original value for the Cloudflare WARP account, impacting WARP and Zero Trust. Impact ended for WARP and Zero Trust.

At 2023-01-24 18:56 the same solution was implemented on the Cloudflare API account, impacting Cache Purge, Cache Reserve, Images and R2. Impact ended for Cache Purge, Cache Reserve, Images and R2.

At 2023-01-24 19:00 an update was made to the Cloudflare API account which incorrectly overwrote the Cloudflare API account. Impact restarted for Cache Purge, Cache Reserve, Images and R2. All internal Cloudflare account changes were then locked until incident resolution.

At 2023-01-24 19:07 the Cloudflare API was updated to include the correct service token value. Impact ended for Cache Purge, Cache Reserve, Images and R2.

At 2023-01-24 19:51 all affected accounts had their service tokens restored from a database backup. Incident Ends.
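The durations quoted in this post can be checked against the timeline with a few lines of Python. This sketch assumes the 121 minutes is measured from the first invalid token reaching the edge (17:50) to the restore from backup (19:51); the post does not say so explicitly, but the numbers agree. The variable names are illustrative.

```python
from datetime import datetime, timezone

FMT = "%Y-%m-%d %H:%M"


def parse_utc(stamp: str) -> datetime:
    """Parse a timeline timestamp; all timestamps in the timeline are UTC."""
    return datetime.strptime(stamp, FMT).replace(tzinfo=timezone.utc)


release_start = parse_utc("2023-01-24 16:55")
rollback = parse_utc("2023-01-24 17:05")
impact_start = parse_utc("2023-01-24 17:50")
incident_end = parse_utc("2023-01-24 19:51")

release_window_minutes = int((rollback - release_start).total_seconds() // 60)
impact_minutes = int((incident_end - impact_start).total_seconds() // 60)

print(release_window_minutes)  # minutes the release was live
print(impact_minutes)          # minutes from first impact to full restoration
```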

What was released and how did it break?

The Access team was rolling out a new change to service tokens that added a “Last seen at” field. This was a popular feature request to help identify which service tokens were actively in use.

What went wrong?

The “last seen at” value was derived by scanning all new login events in an account’s login event Kafka queue. If a login event using a service token was detected, an update to the corresponding service token’s last seen value was initiated.

In order to update the service token’s “last seen at” value, a read-write transaction is made to collect the information about the corresponding service token. Service token read requests redact the “client secret” value by default for security reasons. The “last seen at” update then used the information from that read, which did not include the “client secret”, and updated the service token with an empty “client secret” on the write.
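The failure mode is a classic read-modify-write problem. The Python sketch below reproduces it with an in-memory dictionary standing in for the real token store; every name in it is hypothetical, and the "safe" variant is just one way to avoid the problem, not a description of the actual fix.

```python
import copy


def make_store() -> dict:
    """Hypothetical in-memory stand-in for the service token store."""
    return {"token-1": {"client_id": "abc.access",
                        "client_secret": "hashed-secret",
                        "last_seen_at": None}}


def read_token(store: dict, token_id: str) -> dict:
    """Read path that redacts the secret by default."""
    record = copy.deepcopy(store[token_id])
    record["client_secret"] = ""
    return record


def buggy_update_last_seen(store: dict, token_id: str, seen_at: int) -> None:
    record = read_token(store, token_id)  # secret is already redacted here
    record["last_seen_at"] = seen_at
    store[token_id] = record              # full overwrite writes "" back


def safe_update_last_seen(store: dict, token_id: str, seen_at: int) -> None:
    store[token_id]["last_seen_at"] = seen_at  # touch only the changed field


buggy_store, safe_store = make_store(), make_store()
buggy_update_last_seen(buggy_store, "token-1", 1674579300)
safe_update_last_seen(safe_store, "token-1", 1674579300)

print(repr(buggy_store["token-1"]["client_secret"]))
print(repr(safe_store["token-1"]["client_secret"]))
```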

An example of the correct and incorrect service token values is shown below:

Example Access Service Token values

{
  "1a4ddc9e-a1234-4acc-a623-7e775e579c87": {
    "client_id": "6b12308372690a99277e970a3039343c.access",
    "client_secret": "<hashed-value>", <-- what you would expect
    "expires_at": 1698331351
  },
  "23ade6c6-a123-4747-818a-cd7c20c83d15": {
    "client_id": "1ab44976dbbbdadc6d3e16453c096b00.access",
    "client_secret": "", <--- this is the problem
    "expires_at": 1670621577
  }
}

The database did have a “not null” check on the service token “client secret”; however, an empty text string is not a null value, so the check did not trigger in this situation.
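The difference between a “not null” check and an empty-string check can be shown with a small, self-contained sketch. It uses SQLite from the Python standard library purely as a stand-in; the table name, column names, and the `try_insert` helper are assumptions made for illustration and do not reflect Cloudflare's actual schema or database engine.

```python
import sqlite3

# Schema with only a NOT NULL constraint on the secret.
NOT_NULL_ONLY = (
    "CREATE TABLE service_tokens ("
    "client_id TEXT NOT NULL, "
    "client_secret TEXT NOT NULL)"
)

# Schema that additionally rejects the empty string.
NOT_NULL_AND_NON_EMPTY = (
    "CREATE TABLE service_tokens ("
    "client_id TEXT NOT NULL, "
    "client_secret TEXT NOT NULL CHECK (client_secret <> ''))"
)


def try_insert(schema_sql, secret):
    """Create a fresh in-memory table and try to insert one token.

    Returns True if the row was accepted, False if a constraint rejected it.
    """
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(schema_sql)
        conn.execute(
            "INSERT INTO service_tokens (client_id, client_secret) VALUES (?, ?)",
            ("example.access", secret),
        )
        return True
    except sqlite3.IntegrityError:
        return False
    finally:
        conn.close()
```

With `NOT_NULL_ONLY`, inserting `None` is rejected but inserting `""` is accepted; with `NOT_NULL_AND_NON_EMPTY`, both are rejected.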

As a result of the bug, any Cloudflare account that used a service token to authenticate during the 10 minutes that the “last seen at” release was live would have its “client secret” value set to an empty string. The service token then needed to be modified in order for the empty “client secret” to be used for authentication. There were a total of 4 accounts in this state, all of which are internal to Cloudflare.

How did we fix the issue?

As a temporary solution, we were able to manually restore the correct service token values for the accounts with overwritten service tokens. This stopped the immediate impact across the affected Cloudflare services.

The database team was then able to implement a solution to restore the service tokens of all impacted accounts from an older database copy. This concluded any impact from this incident.

Why did this impact other Cloudflare services?

Service tokens impact the ability for accounts to authenticate. Two of the impacted accounts power multiple Cloudflare services. When these accounts’ service tokens were overwritten, the services that run on these accounts began to experience failed requests and other unexpected errors.

Cloudflare WARP Enrollment

Cloudflare provides a mobile and desktop forward proxy, Cloudflare WARP (our “1.1.1.1” app), that any user can install on a device to improve the privacy of their Internet traffic. Any individual can install this service without the need for a Cloudflare account and we do not retain logs that map activity to a user.

When a user connects using WARP, Cloudflare validates the enrollment of a device by relying on a service that receives and validates the keys on the device. In turn, that service communicates with another system that tells our network to provide the newly enrolled device with access to our network.

During the incident, the enrollment service could no longer communicate with systems in our network that would validate the device. As a result, users could no longer register new devices and/or install the app on a new device, and may have experienced issues upgrading to a new version of the app (which also triggers re-registration).

Cloudflare Zero Trust Device Posture and Re-Auth Policies

Cloudflare provides a comprehensive Zero Trust solution that customers can deploy with or without an agent living on the device. Some use cases are only available when using the Cloudflare agent on the device. The agent is an enterprise version of the same Cloudflare WARP solution and experienced similar degradation anytime the agent needed to send or receive device state. This impacted three use cases in Cloudflare Zero Trust.

First, similar to the consumer product, new devices could not be enrolled and existing devices could not be revoked. Administrators were also unable to modify settings of enrolled devices. In all cases, errors would have been presented to the user.

Second, many customers who replace their existing private network with Cloudflare’s Zero Trust solution may add rules that continually validate a user’s identity through the use of session duration policies. The goal of these rules is to force users to reauthenticate in order to prevent stale sessions from having ongoing access to internal systems. The agent on the device prompts the user to reauthenticate based on signals from Cloudflare’s control plane. During the incident, the signals were not sent and users could not successfully reauthenticate.

Finally, customers who rely on device posture rules also experienced impact. Device posture rules allow customers who use Access or Gateway policies to rely on the WARP agent to continually enforce that a device meets corporate compliance rules.

The agent communicates these signals to a Cloudflare service responsible for maintaining the state of the device. Cloudflare’s Zero Trust access control product uses a service token to receive this signal and evaluate it along with other rules to determine if a user can access a given resource. During this incident those rules defaulted to a block action, meaning that traffic modified by these policies would appear broken to the user. In some cases this meant that all internet bound traffic from a device was completely blocked leaving users unable to access anything.

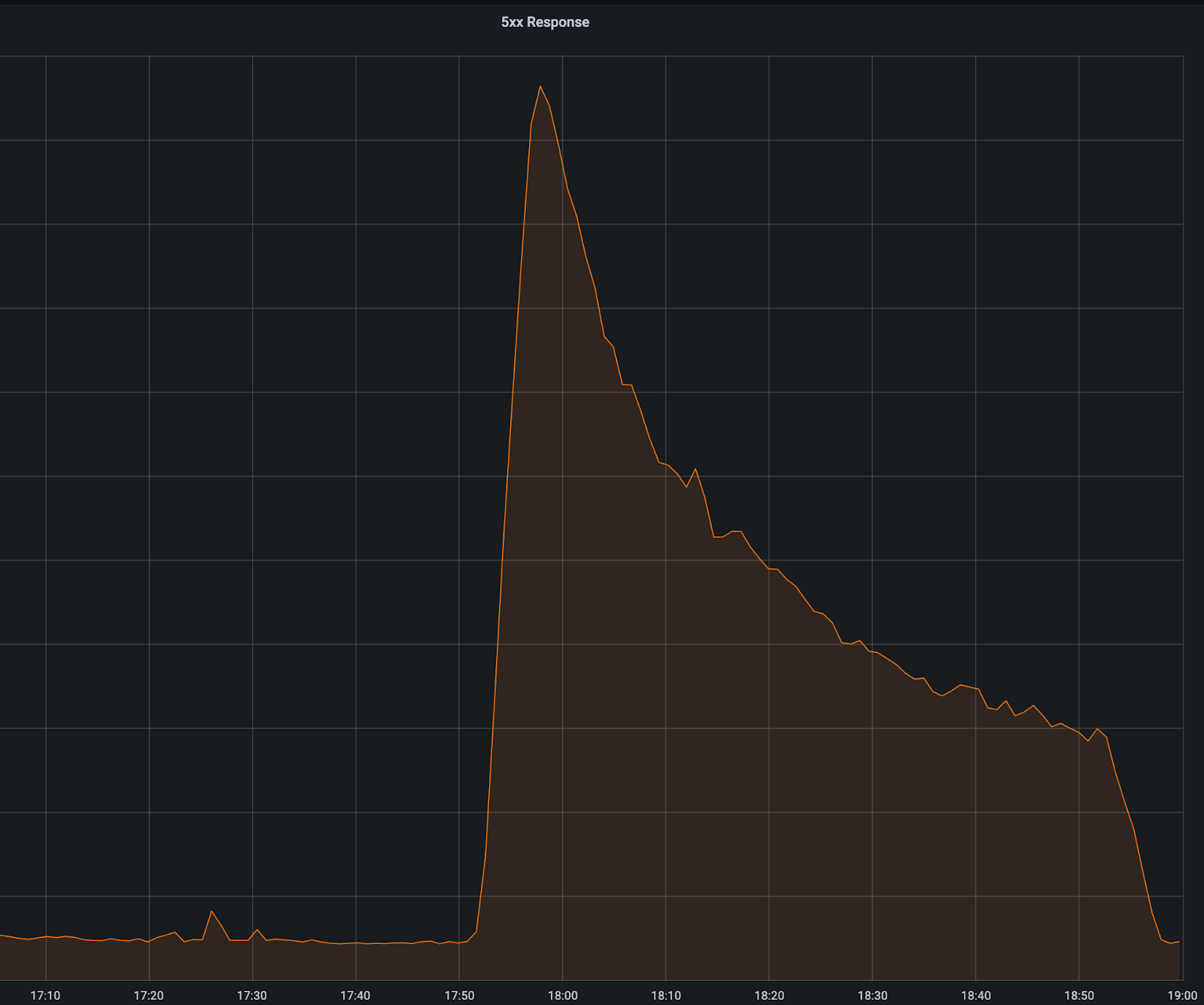

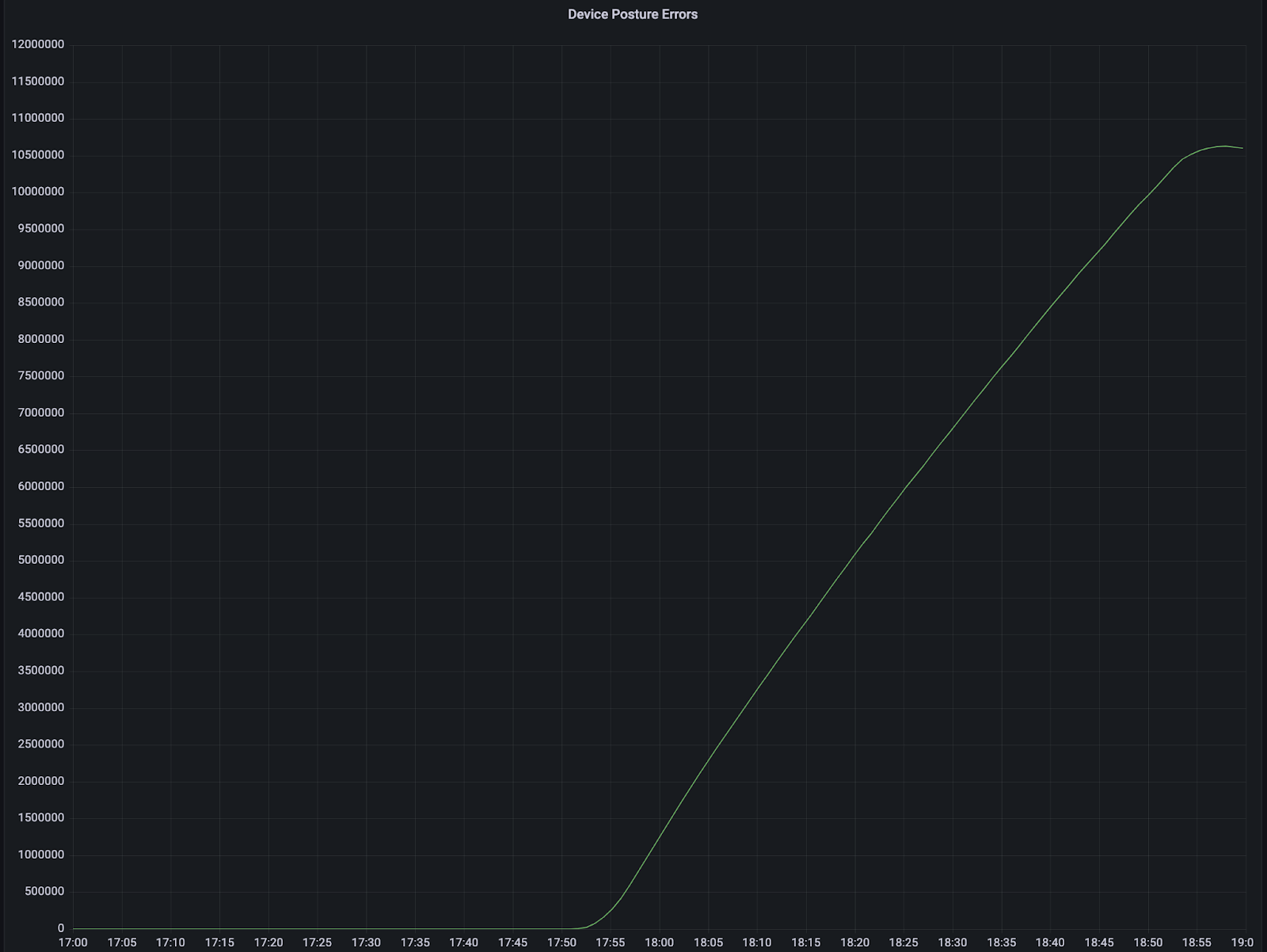

Cloudflare Gateway caches the device posture state for users every 5 minutes to apply Gateway policies. The device posture state is cached so Gateway can apply policies without having to verify device state on every request. Depending on which Gateway policy type was matched, the user would experience two different outcomes. If they matched a network policy the user would experience a dropped connection and for an HTTP policy they would see a 5XX error page. We peaked at over 50,000 5XX errors/minute over baseline and had over 10.5 million posture read errors until the incident was resolved.

Gateway 5XX errors per minute

Total count of Gateway Device posture errors

Cloudflare R2 Storage and Cache Reserve

Cloudflare R2 Storage allows developers to store large amounts of unstructured data without the costly egress bandwidth fees associated with typical cloud storage services.

During the incident, the R2 service was unable to make outbound API requests to other parts of the Cloudflare infrastructure. As a result, R2 users saw elevated request failure rates when making requests to R2.

Many Cloudflare products also depend on R2 for data storage and were also affected. For example, Cache Reserve users were impacted during this window and saw increased origin load for any items not in the primary cache. The majority of read and write operations to the Cache Reserve service were impacted during this incident causing entries into and out of Cache Reserve to fail. However, when Cache Reserve sees an R2 error, it falls back to the customer origin, so user traffic was still serviced during this period.

Cloudflare Cache Purge

Cloudflare’s content delivery network (CDN) caches the content of Internet properties on our network in our data centers around the world to reduce the distance that a user’s request needs to travel for a response. In some cases, customers want to purge what we cache and replace it with different data.

The Cloudflare control plane, the place where an administrator interacts with our network, uses a service token to authenticate and reach the cache purge service. During the incident, many purge requests failed while the service token was invalid. We saw an average impact of 20 purge requests/second failing and a maximum of 70 requests/second.

What are we doing to prevent this from happening again?

We take incidents like this seriously and recognize the impact it had. We have identified several steps we can take to address the risk of a similar problem occurring in the future. We are implementing the following remediation plan as a result of this incident:

Test: The Access engineering team will add unit tests that would automatically catch any similar issues with service token overwrites before any new features are launched.

Alert: The Access team will implement an automatic alert for any dramatic increase in failed service token authentication requests to catch issues before they are fully launched.

Process: The Access team has identified process improvements to allow for faster rollbacks for specific database tables.

Implementation: All relevant database fields will be updated to include checks for empty strings on top of existing “not null” checks.

We are sorry for the disruption this caused for our customers across a number of Cloudflare services. We are actively making these improvements to ensure improved stability moving forward and that this problem will not happen again.

Comic for 2023.01.25 – Skittles

Post Syndicated from Explosm.net original https://explosm.net/comics/skittles

New Cyanide and Happiness Comic

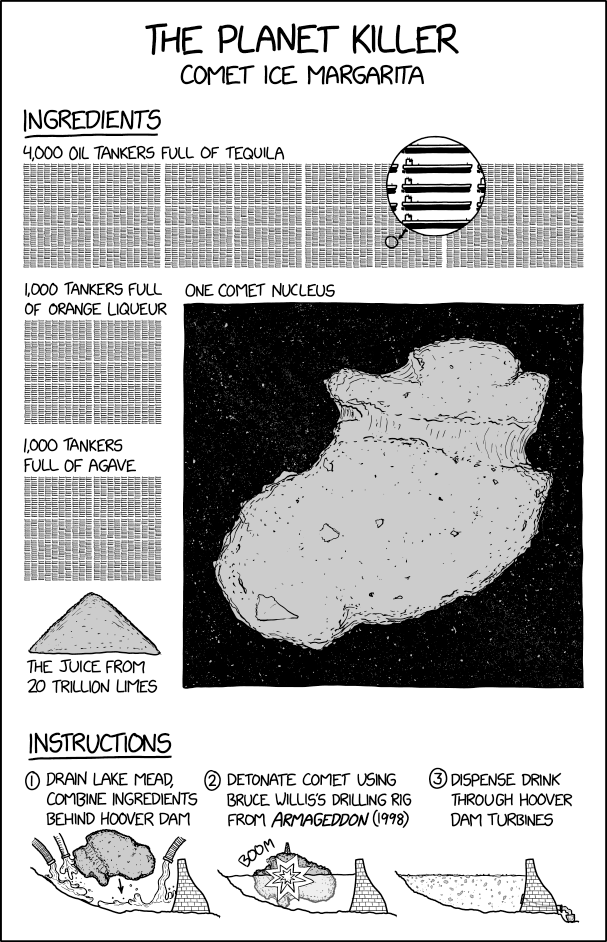

Planet Killer Comet Margarita

Post Syndicated from original https://xkcd.com/2729/

[$] Python packaging, visions, and unification

Post Syndicated from original https://lwn.net/Articles/920832/

The Python community is currently struggling with a longtime difficulty in its ecosystem: how to develop, package, distribute, and maintain libraries and applications. The current situation is sub-optimal in several dimensions due, at least in part, to the existence of multiple, non-interoperable mechanisms and tools to handle some of those needs. Last week, we had an overview of Python packaging as a prelude to starting to dig into the discussions. In this installment, we start to look at the kinds of problems that exist, and the barriers to solving them.

WINE 8.0 released

Post Syndicated from original https://lwn.net/Articles/921078/

Version 8.0 of the WINE Windows compatibility layer has been released. The headline feature appears to be the conversion to PE (“portable executable”) modules:

After 4 years of work, the PE conversion is finally complete: all modules can be built in PE format. This is an important milestone on the road to supporting various features such as copy protection, 32-bit applications on 64-bit hosts, Windows debuggers, x86 applications on ARM, etc.

Other changes include WoW64 support (allowing 32-bit modules to call into 64-bit libraries), Print Processor support, improved Direct3D support, and more.

Build a multi-Region and highly resilient modern data architecture using AWS Glue and AWS Lake Formation

Post Syndicated from Vivek Shrivastava original https://aws.amazon.com/blogs/big-data/build-a-multi-region-and-highly-resilient-modern-data-architecture-using-aws-glue-and-aws-lake-formation/

AWS Lake Formation helps with enterprise data governance and is important for a data mesh architecture. It works with the AWS Glue Data Catalog to enforce data access and governance. Both services provide reliable data storage, but some customers want replicated storage, catalog, and permissions for compliance purposes.

This post explains how to create a design that automatically backs up Amazon Simple Storage Service (Amazon S3), the AWS Glue Data Catalog, and Lake Formation permissions in different Regions and provides backup and restore options for disaster recovery. These mechanisms can be customized for your organization’s processes. The utility for cloning and experimentation is available in the open-sourced GitHub repository.

This solution only replicates metadata in the Data Catalog, not the actual underlying data. To have a redundant data lake using Lake Formation and AWS Glue in an additional Region, we recommend replicating the Amazon S3-based storage using S3 replication, S3 sync, aws-s3-copy-sync-using-batch or S3 Batch replication process. This ensures that the data lake will still be functional in another Region if Lake Formation has an availability issue. The Data Catalog setup (tables, databases, resource links) and Lake Formation setup (permissions, settings) must also be replicated in the backup Region.

Solution overview

This post shows how to create a backup of the Lake Formation permissions and AWS Glue Data Catalog from one Region to another in the same account. The solution doesn’t create or modify AWS Identity and Access Management (IAM) roles, which are available in all Regions. There are three steps to creating a multi-Region data lake:

- Migrate Lake Formation data permissions.

- Migrate AWS Glue databases and tables.

- Migrate Amazon S3 data.

In the following sections, we look at each migration step in more detail.

Lake Formation permissions

In Lake Formation, there are two types of permissions: metadata access and data access.

Metadata access permissions allow users to create, read, update, and delete metadata databases and tables in the Data Catalog.

Data access permissions allow users to read and write data to specific locations in Amazon S3. Data access permissions are managed using data location permissions, which allow users to create and alter metadata databases and tables that point to specific Amazon S3 locations.

When data is migrated from one Region to another, only the metadata access permissions are replicated. This means that if data is moved from a bucket in the source Region to another bucket in the target Region, the data access permissions need to be reapplied in the target Region.

AWS Glue Data Catalog

The AWS Glue Data Catalog is a central repository of metadata about data stored in your data lake. It contains references to data that is used as sources and targets in AWS Glue ETL (extract, transform, and load) jobs, and stores information about the location, schema, and runtime metrics of your data. The Data Catalog organizes this information in the form of metadata tables and databases. A table in the Data Catalog is a metadata definition that represents the data in a data lake, and databases are used to organize these metadata tables.

Lake Formation permissions can only be applied to objects that already exist in the Data Catalog in the target Region. Therefore, in order to apply these permissions, the underlying Data Catalog databases and tables must already exist in the target Region. To meet this requirement, this utility migrates both the AWS Glue databases and tables from the source Region to the target Region.

Amazon S3 data

The data that underlies an AWS Glue table can be stored in an S3 bucket in any Region, so replication of the data itself isn’t necessary. However, if the data has already been replicated to the target Region, this utility has the option to update the table’s location to point to the replicated data in the target Region. If the location of the data is changed, the utility updates the S3 bucket name and keeps the rest of the prefix hierarchy unchanged.
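The location update described above, swapping the bucket name while keeping the prefix hierarchy, can be sketched as follows. This is an illustrative helper under stated assumptions, not the utility's actual code: the function name `relocate_s3_uri` and the `bucket_map` argument are hypothetical.

```python
def relocate_s3_uri(location, bucket_map):
    """Replace the bucket in an s3:// URI and keep the key prefix unchanged.

    bucket_map maps source-Region bucket names to target-Region bucket names.
    Locations that are not s3:// URIs, or whose bucket is not in the map,
    are returned as-is.
    """
    scheme = "s3://"
    if not location.startswith(scheme):
        return location
    # Split "bucket/prefix/..." into the bucket and everything after it.
    bucket, sep, key = location[len(scheme):].partition("/")
    new_bucket = bucket_map.get(bucket, bucket)
    return f"{scheme}{new_bucket}{sep}{key}"
```

For example, with a mapping from `lake-us-east-1` to `lake-us-west-2` (both made-up bucket names), `s3://lake-us-east-1/sales/year=2023/` becomes `s3://lake-us-west-2/sales/year=2023/`.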

This utility doesn’t include the migration of data from the source Region to the target Region. Data migration must be performed separately using methods such as S3 replication, S3 sync, aws-s3-copy-sync-using-batch or S3 Batch replication.

This utility has two modes for replicating Lake Formation and Data Catalog metadata: on-demand and real-time. The on-demand mode is a batch replication that takes a snapshot of the metadata at a specific point in time and uses it to synchronize the metadata. The real-time mode replicates changes made to the Lake Formation permissions or Data Catalog in near-real time.

The on-demand mode of this utility is recommended for the initial copy of existing Lake Formation permissions and Data Catalogs because it replicates a snapshot of the metadata. After the Lake Formation permissions and Data Catalogs are synchronized, you can use real-time mode to replicate any ongoing changes. This creates a mirror image of the source Region in the target Region and keeps it up to date as changes are made in the source Region. These two modes can be used independently of each other, and the operations are idempotent.

The code for the on-demand and real-time modes is available in the GitHub repository. Let’s look at each mode in more detail.

On-demand mode

On-demand mode is used to copy the Lake Formation permissions and Data Catalog at a specific point in time. The code is deployed using the AWS Cloud Development Kit (AWS CDK). The following diagram shows the solution architecture for this mode.

The AWS CDK deploys an AWS Glue job to perform the replication. The job retrieves configuration information from a file stored in an S3 bucket. This file includes details such as the source and target Regions, an optional list of databases to replicate, and options for moving data to a different S3 bucket. More information about these options and deployment instructions is available in the GitHub repository.

The AWS Glue job retrieves the Lake Formation permissions and Data Catalog object metadata from the source Region and stores it in a JSON file in an S3 bucket. The same job then uses this file to create the Lake Formation permissions and Data Catalog databases and tables in the target Region.

This tool can be run on demand by running the AWS Glue job. It copies the Lake Formation permissions and Data Catalog object metadata from the source Region to the target Region. If you run the tool again after making changes to the target Region, the changes are replaced with the latest Lake Formation permissions and Data Catalog from the source Region.

This utility can detect any changes made to the Data Catalog metadata, databases, tables, and columns while replicating the Data Catalog from the source to the target Region. If a change is detected in the source Region, the latest version of the AWS Glue object is applied to the target Region. The utility reports the number of objects modified during its run.

The Lake Formation permissions are copied from the source to the target Region, so any new permissions are replicated in the target Region. If a permission is removed from the source Region, it is not removed from the target Region.

Real-time mode

Real-time mode replicates the Lake Formation permissions and Data Catalog at a regular interval. The default interval is 1 minute, but it can be modified during deployment. The code is deployed using the AWS CDK. The following diagram shows the solution architecture for this mode.

The AWS CDK deploys two AWS Lambda jobs and creates an Amazon DynamoDB table to store AWS CloudTrail events and an Amazon EventBridge rule to run the replication at a regular interval. The Lambda jobs retrieve the configuration information from a file stored in an S3 bucket. This file includes details such as the source and target Regions, options for moving data to a different S3 bucket, and the lookback period for CloudTrail in hours. More information about these options and deployment instructions is available in the GitHub repository.

The EventBridge rule triggers a Lambda job at a fixed interval. This job retrieves the configuration information and queries CloudTrail events related to the Data Catalog and Lake Formation that occurred in the past hour (the duration is configurable). All relevant events are then stored in a DynamoDB table.

After the event information is inserted into the DynamoDB table, another Lambda job is triggered. This job retrieves the configuration information and queries the DynamoDB table. It then applies all the changes to the target Region. If the tool is run again after making changes to the target Region, the changes are replaced with the latest Lake Formation permissions and Data Catalog from the source Region. Unlike on-demand mode, this utility also removes any Lake Formation permissions that were removed from the source Region from the target Region.
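The difference in how the two modes treat removed permissions can be illustrated with plain sets. This is a conceptual sketch only: the tuple representation of a permission and the function names are assumptions, and the real utility works against the Lake Formation APIs rather than in-memory sets.

```python
def sync_on_demand(source, target):
    """Snapshot copy: add every source permission to the target, revoke nothing."""
    return set(target) | set(source)


def sync_real_time(target, events):
    """Replay ordered (action, permission) change events, including revocations."""
    result = set(target)
    for action, permission in events:
        if action == "grant":
            result.add(permission)
        elif action == "revoke":
            result.discard(permission)
    return result
```

A permission here is a hypothetical `(principal, resource, privilege)` tuple. Running `sync_on_demand` never shrinks the target set, whereas `sync_real_time` removes a permission when a matching `"revoke"` event is replayed.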

Limitations

This utility is designed to replicate permissions within a single account only. The on-demand mode replicates a snapshot and doesn’t remove existing permissions, so it doesn’t perform delete operations. The API currently doesn’t support replicating changes to row and column permissions.

Conclusion

In this post, we showed how you can use this utility to migrate the AWS Glue Data Catalog and Lake Formation permissions from one Region to another. It can also keep the source and target Regions synchronized if any changes are made to the Data Catalog or the Lake Formation permissions. Implementing it across Regions (multi-Region) is a good option if you are looking for the most separation and complete independence of your globally diverse data workloads. Also consider the trade-offs: implementing and operating this strategy, particularly using multi-Region, can be more complicated and more expensive than other DR strategies.

To get started, check out the GitHub repo. For more resources, refer to the following:

About the authors

Vivek Shrivastava is a Principal Data Architect, Data Lake in AWS Professional Services. He is a big data enthusiast and holds 13 AWS Certifications. He is passionate about helping customers build scalable and high-performance data analytics solutions in the cloud. In his spare time, he loves reading and finding areas for home automation.


Raza Hafeez is a Senior Data Architect within the Shared Delivery Practice of AWS Professional Services. He has over 12 years of professional experience building and optimizing enterprise data warehouses and is passionate about enabling customers to realize the power of their data. He specializes in migrating enterprise data warehouses to AWS Modern Data Architecture.


Nivas Shankar is a Principal Product Manager for AWS Lake Formation. He works with customers around the globe to translate business and technical requirements into products that enable customers to improve how they manage, secure, and access their data lakes. He also leads several data and analytics initiatives within AWS, including support for Data Mesh.


The Formovie V10 could BREAK the home projector market.

Post Syndicated from The Hook Up original https://www.youtube.com/watch?v=Y4waPkVPa-Q

Build a serverless analytics application with Amazon Redshift and Amazon API Gateway

Post Syndicated from David Zhang original https://aws.amazon.com/blogs/big-data/build-a-serverless-analytics-application-with-amazon-redshift-and-amazon-api-gateway/

Serverless applications are a modernized way to perform analytics among business departments and engineering teams. Business teams can gain meaningful insights by simplifying their reporting through web applications and distributing it to a broader audience.

Use cases can include the following:

- Dashboarding – A webpage consisting of tables and charts where each component can offer insights to a specific business department.

- Reporting and analysis – An application where you can trigger large analytical queries with dynamic inputs and then view or download the results.

- Management systems – An application that provides a holistic view of the internal company resources and systems.

- ETL workflows – A webpage where internal company individuals can trigger specific extract, transform, and load (ETL) workloads in a user-friendly environment with dynamic inputs.

- Data abstraction – Decouple and refactor underlying data structure and infrastructure.

- Ease of use – An application where you want to give a large set of users controlled access to analytics without having to onboard each user to a technical platform. Query updates can be completed in an organized manner and maintenance has minimal overhead.

In this post, you will learn how to build a serverless analytics application using Amazon Redshift Data API and Amazon API Gateway WebSocket and REST APIs.

Amazon Redshift is fully managed by AWS, so you no longer need to worry about data warehouse management tasks such as hardware provisioning, software patching, setup, configuration, monitoring nodes and drives to recover from failures, or backups. The Data API simplifies access to Amazon Redshift because you don’t need to configure drivers and manage database connections. Instead, you can run SQL commands to an Amazon Redshift cluster by simply calling a secured API endpoint provided by the Data API. The Data API takes care of managing database connections and buffering data. The Data API is asynchronous, so you can retrieve your results later.

API Gateway is a fully managed service that makes it easy for developers to publish, maintain, monitor, and secure APIs at any scale. With API Gateway, you can create RESTful APIs and WebSocket APIs that enable real-time two-way communication applications. API Gateway supports containerized and serverless workloads, as well as web applications. API Gateway acts as a reverse proxy to many of the compute resources that AWS offers.

Event-driven model

Event-driven applications are increasingly popular among customers. Analytical reporting web applications can be implemented through an event-driven model. The applications run in response to events such as user actions and unpredictable query events. Decoupling the producer and consumer processes allows greater flexibility in application design. This design can be achieved with the Data API and API Gateway WebSocket and REST APIs.

Both REST API calls and WebSocket establish communication between the client and the backend. Due to the popularity of REST, you may wonder why WebSockets are present and how they contribute to an event-driven design.

What are WebSockets and why do we need them?

Unidirectional communication is customary when building analytical web solutions. In traditional environments, the client initiates a REST API call to run a query on the backend and either synchronously or asynchronously waits for the query to complete. The “wait” is typically implemented with polling: because the client doesn’t know when a backend process will complete, it repeatedly sends requests to the backend to check.

What is the problem with polling? Main challenges include the following:

- Increased network traffic – A large number of users performing empty checks consumes bandwidth and backend resources, and doesn’t scale well.

- Cost usage – Empty requests don’t deliver any value to the business. You pay for the unnecessary cost of resources.

- Delayed response – Polling is scheduled in time intervals. If the query is complete in-between these intervals, the user can only see the results after the next check. This delay impacts the user experience and, in some cases, may result in UI deadlocks.
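The cost and delay of polling listed above can be made concrete with a little arithmetic. The sketch below is a simplified model, assuming the client polls at fixed intervals starting one interval after submitting the query; the function name and the model itself are illustrative assumptions, not part of the solution.

```python
import math


def polling_stats(completion_time, interval):
    """Model a client that polls at interval, 2 * interval, 3 * interval, ...

    completion_time: seconds after submission at which the query finishes.
    interval: seconds between polls.
    Returns (number_of_requests, delay_after_completion_in_seconds).
    All requests except the last one are empty checks.
    """
    polls = max(1, math.ceil(completion_time / interval))
    seen_at = polls * interval
    return polls, seen_at - completion_time
```

For a query that finishes after 12 seconds with a 5-second polling interval, the client sends three requests (two of them empty) and only sees the result 3 seconds after it became available.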

For more information on polling, check out From Poll to Push: Transform APIs using Amazon API Gateway REST APIs and WebSockets.

WebSockets is another approach compared to REST when establishing communication between the front end and backend. WebSockets enable you to create a full duplex communication channel between the client and the server. In this bidirectional scenario, the client can make a request to the server and is notified when the process is complete. The connection remains open, with minimal network overhead, until the response is received.

You may wonder why REST is present, since you can transfer response data with WebSockets. WebSocket is a lightweight protocol designed for real-time messaging between systems. The protocol is not designed for handling large analytical query data, and in API Gateway each frame’s payload can only hold up to 32 KB. Therefore, the REST API performs large data retrieval.
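To illustrate why the frame size matters, the following sketch checks whether a serialized result would fit in a single 32 KB frame and otherwise falls back to a small notification that tells the client to fetch the data over REST. It is only an illustration of the constraint: the solution in this post always retrieves results through the REST API, and the function name and message fields here are hypothetical.

```python
import json

# Frame payload limit for API Gateway WebSocket APIs, as cited above.
MAX_FRAME_BYTES = 32 * 1024


def build_ws_message(statement_id, rows):
    """Return the bytes to send over the WebSocket for a finished query.

    If the rows fit in one frame they are sent inline; otherwise only a
    short notification is sent and the client is expected to call REST.
    """
    inline = json.dumps({"statementId": statement_id, "rows": rows}).encode("utf-8")
    if len(inline) <= MAX_FRAME_BYTES:
        return inline
    notice = {"statementId": statement_id, "resultsVia": "REST"}
    return json.dumps(notice).encode("utf-8")
```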

By using the Data API and API Gateway, you can build decoupled event-driven web applications for your data analytical needs. You can create WebSocket APIs with API Gateway and establish a connection between the client and your backend services. You can then initiate requests to perform analytical queries with the Data API. Due to the Data API’s asynchronous nature, the query completion generates an event to notify the client through the WebSocket channel. The client can decide to either retrieve the query results through a REST API call or perform other follow-up actions. The event-driven architecture enables bidirectional interoperable messages and data while keeping your system components agnostic.

Solution overview

In this post, we show how to create a serverless event-driven web application by querying with the Data API in the backend, establishing a bidirectional communication channel between the user and the backend with the WebSocket feature in API Gateway, and retrieving the results using its REST API feature. Instead of designing an application with long-running API calls, you can use the Data API. The Data API allows you to run SQL queries asynchronously, removing the need to hold long, persistent database connections.

The web application is protected using Amazon Cognito, which is used to authenticate the users before they can utilize the web app and also authorize the REST API calls when made from the application.

Other relevant AWS services in this solution include AWS Lambda and Amazon EventBridge. Lambda is a serverless, event-driven compute resource that enables you to run code without provisioning or managing servers. EventBridge is a serverless event bus allowing you to build event-driven applications.

The solution creates a lightweight WebSocket connection between the browser and the backend. When a user submits a request using WebSockets to the backend, a query is submitted to the Data API. When the query is complete, the Data API sends an event notification to EventBridge. EventBridge signals the system that the data is available and notifies the client. Afterwards, a REST API call is performed to retrieve the query results for the client to view.

We have published this solution on the AWS Samples GitHub repository and will be referencing it during the rest of this post.

The following architecture diagram highlights the end-to-end solution, which you can provision automatically with AWS CloudFormation templates run as part of the shell script with some parameter variables.

The application performs the following steps (note the corresponding numbered steps in the process flow):

- A web application is provisioned on AWS Amplify; the user needs to sign up first by providing their email and a password to access the site.

- The user verifies their credentials using a pin sent to their email. This step is mandatory for the user to then log in to the application and continue access to the other features of the application.

- After the user is signed up and verified, they can sign in to the application and requests data through their web or mobile clients with input parameters. This initiates a WebSocket connection in API Gateway. (Flow 1, 2)

- The connection request is handled by a Lambda function, OnConnect, which initiates an asynchronous database query in Amazon Redshift using the Data API. The SQL query is taken from a SQL script in Amazon Simple Storage Service (Amazon S3) with dynamic input from the client. (Flow 3, 4, 6, 7)

- In addition, the OnConnect Lambda function stores the connection, statement identifier, and topic name in an Amazon DynamoDB database. The topic name is an extra parameter that can be used if users want to implement multiple reports on the same webpage. This allows the front end to map responses to the correct report. (Flow 3, 4, 5)

- The Data API runs the query, mentioned in step 2. When the operation is complete, an event notification is sent to EventBridge. (Flow 8)

- EventBridge activates an event rule to redirect that event to another Lambda function, SendMessage. (Flow 9)

- The SendMessage function notifies the client that the SQL query is complete via API Gateway. (Flow 10, 11, 12)

- After the notification is received, the client performs a REST API call (GET) to fetch the results. (Flow 13, 14, 15, 16)

- The GetResult function is triggered, which retrieves the SQL query result and returns it to the client.

- The user is now able to view the results on the webpage.

- When clients disconnect from their browser, API Gateway automatically deletes the connection information from the DynamoDB table using the onDisconnect function. (Flow 17, 18, 19)
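The connect and notify steps above can be sketched in Python as follows. This is a minimal illustration rather than the code from the sample repository: the function signatures, the DynamoDB attribute names (statementId, connectionId, topicName), the use of statementId as the table key, and the topic query-string parameter are all assumptions made for the example. The AWS clients are passed in as arguments so the logic can be exercised without AWS access. The event fields read in send_message (statementId and state under detail) reflect the Data API completion event as we understand it and should be checked against a real event.

```python
import json


def on_connect(event, redshift_data, dynamodb, *, table_name, cluster_id,
               database, db_user, sql):
    """Start an asynchronous query and remember which connection asked for it."""
    connection_id = event["requestContext"]["connectionId"]
    # Hypothetical request shape: the topic arrives as a query-string parameter.
    topic = (event.get("queryStringParameters") or {}).get("topic", "default")

    # WithEvent=True asks the Data API to publish an EventBridge event
    # when the statement finishes, so nothing has to poll for completion.
    response = redshift_data.execute_statement(
        ClusterIdentifier=cluster_id,
        Database=database,
        DbUser=db_user,
        Sql=sql,
        WithEvent=True,
    )
    statement_id = response["Id"]

    # Assumed table layout: one item per statement, keyed by statementId.
    dynamodb.put_item(
        TableName=table_name,
        Item={
            "statementId": {"S": statement_id},
            "connectionId": {"S": connection_id},
            "topicName": {"S": topic},
        },
    )
    return {"statusCode": 200, "body": statement_id}


def send_message(event, dynamodb, apigw_management, *, table_name):
    """Handle the completion event and notify the client that is waiting."""
    detail = event["detail"]
    statement_id = detail["statementId"]
    item = dynamodb.get_item(
        TableName=table_name,
        Key={"statementId": {"S": statement_id}},
    ).get("Item")
    if item is None:
        return False  # the client disconnected before the query finished

    message = {
        "statementId": statement_id,
        "topicName": item["topicName"]["S"],
        "state": detail.get("state"),
    }
    apigw_management.post_to_connection(
        ConnectionId=item["connectionId"]["S"],
        Data=json.dumps(message).encode("utf-8"),
    )
    return True
```

In a deployed Lambda function, the clients would typically come from boto3.client("redshift-data"), boto3.client("dynamodb"), and boto3.client("apigatewaymanagementapi", endpoint_url=...), with the endpoint URL pointing at the WebSocket API stage.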

Prerequisites

Prior to deploying your event-driven web application, ensure you have the following:

- An Amazon Redshift cluster in your AWS environment – This is your backend data warehousing solution to run your analytical queries. For instructions to create your Amazon Redshift cluster, refer to Getting started with Amazon Redshift.

- After you create your Amazon Redshift cluster, add the AmazonS3ReadOnlyAccess permission to the associated cluster AWS Identity and Access Management (IAM) role.

- An S3 bucket that you have access to – The S3 bucket will be your object storage solution where you can store your SQL scripts. To create your S3 bucket, refer to Create your first S3 bucket.

Deploy CloudFormation templates

The code associated with the design is available in the following GitHub repository. You can clone the repository inside an AWS Cloud9 environment in your AWS account. The AWS Cloud9 environment comes with the AWS Command Line Interface (AWS CLI) installed, which is used to run the CloudFormation templates to set up the AWS infrastructure. Make sure that the jq command-line tool is installed; we use it to parse the JSON output during the run of the script.

The complete architecture is set up using three CloudFormation templates:

- cognito-setup.yaml – Creates the Amazon Cognito user pool and web app client, which are used for authentication and protecting the REST API

- backend-setup.yaml – Creates all the required Lambda functions and the WebSocket and REST APIs, and configures them on API Gateway

- webapp-setup.yaml – Creates the web application hosting using Amplify to connect and communicate with the WebSocket and REST APIs

These CloudFormation templates are run using the script.sh shell script, which takes care of all the dependencies as required.

A generic template is provided for you to customize your own DDL SQL scripts as well as your own query SQL scripts. We have created sample scripts for you to follow along.

- Download the sample DDL script and upload it to an existing S3 bucket.

- Change the IAM role value to your Amazon Redshift cluster’s IAM role that has the AmazonS3ReadOnlyAccess permission.

For this post, we copy the New York Taxi Data 2015 dataset from a public S3 bucket.

- Download the sample query script and upload it to an existing S3 bucket.

- Upload the modified sample DDL script and the sample query script into a preexisting S3 bucket that you own, and note down the S3 URI path.

If you want to run your own customized version, modify the DDL and query script to fit your scenario.

- Edit the script.sh file before you run it and set the values for the following parameters:

- RedshiftClusterEndpoint (aws_redshift_cluster_ep) – Your Amazon Redshift cluster endpoint available on the AWS Management Console

- DBUsername (aws_dbuser_name) – Your Amazon Redshift database user name

- DDBTableName (aws_ddbtable_name) – The name of the DynamoDB table that will be created

- WebsocketEndpointSSMParameterName (aws_wsep_param_name) – The parameter name that stores the WebSocket endpoint in AWS Systems Manager Parameter Store.

- RestApiEndpointSSMParameterName (aws_rapiep_param_name) – The parameter name that stores the REST API endpoint in Parameter Store.

- DDLScriptS3Path (aws_ddl_script_path) – The S3 URI to the DDL script that you uploaded.

- QueryScriptS3Path (aws_query_script_path) – The S3 URI to the query script that you uploaded.

- AWSRegion (aws_region) – The Region where the AWS infrastructure is being set up.

- CognitoPoolName (aws_user_pool_name) – The name you want to give to your Amazon Cognito user pool

- ClientAppName (aws_client_app_name) – The name of the client app to be configured for the web app to handle user authentication

The default acceptable values are already provided as part of the downloaded code.

- Run the script using the following command:

During deployment, AWS CloudFormation creates and triggers the Lambda function SetupRedshiftLambdaFunction, which sets up an Amazon Redshift database table and populates data into the table. The following diagram illustrates this process.

Use the demo app

When the shell script is complete, you can start interacting with the demo web app:

- On the Amplify console, under All apps in the navigation pane, choose DemoApp.

- Choose Run build.

The DemoApp web application goes through a phase of Provision, Build, Deploy.

- When it’s complete, use the URL provided to access the web application.

The following screenshot shows the web application page. It has minimal functionality: you can sign in, sign up, or verify a user.

- Choose Sign Up.

- For Email ID, enter an email.

- For Password, enter a password that is at least eight characters long, has at least one uppercase and lowercase letter, at least one number, and at least one special character.

- Choose Let’s Enroll.

The Verify your Login to Demo App page opens.

- Enter your email and the verification code sent to the email you specified.

- Choose Verify.

You’re redirected to a login page.

- Sign in using your credentials.

You’re redirected to the demoPage.html website.

- Choose Open Connection.

You now have an active WebSocket connection between your browser and your backend AWS environment.

- For Trip Month, specify a month (for this example, December) and choose Submit.

You have now defined the month and year for which you want to query your data. After a few seconds, you can see the output delivered from the WebSocket.

You may continue using the active WebSocket connection for additional queries—just choose a different month and choose Submit again.

- When you’re done, choose Close Connection to close the WebSocket connection.

For exploratory purposes, while your WebSocket connection is active, you can navigate to your DynamoDB table on the DynamoDB console to view the items that are currently stored. After the WebSocket connection is closed, the items stored in DynamoDB are deleted.
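The cleanup that happens when a connection closes could look like the following sketch. It assumes a table keyed by a statementId attribute with the connection ID stored as a regular attribute named connectionId; both names are illustrative, and the table layout in the sample repository may differ. A scan with a filter is used here for simplicity, and the DynamoDB client is passed in so the function can be tried against a stub.

```python
def on_disconnect(event, dynamodb, *, table_name):
    """Delete every item that belongs to the connection that just closed."""
    connection_id = event["requestContext"]["connectionId"]
    deleted = 0
    scan_kwargs = {
        "TableName": table_name,
        "FilterExpression": "connectionId = :c",
        "ExpressionAttributeValues": {":c": {"S": connection_id}},
    }
    while True:
        page = dynamodb.scan(**scan_kwargs)
        for item in page.get("Items", []):
            # Assumed key schema: statementId is the partition key.
            dynamodb.delete_item(
                TableName=table_name,
                Key={"statementId": item["statementId"]},
            )
            deleted += 1
        last_key = page.get("LastEvaluatedKey")
        if not last_key:
            return deleted
        # Continue the scan where the previous page stopped.
        scan_kwargs["ExclusiveStartKey"] = last_key
```

A scan reads the whole table, which is acceptable for a demo with a handful of open connections. For a larger table, a secondary index on the connection ID would avoid the full read.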

Clean up

To clean up your resources, complete the following steps:

- On the Amazon S3 console, navigate to the S3 bucket containing the sample DDL script and query script and delete them from the bucket.

- On the Amazon Redshift console, navigate to your Amazon Redshift cluster and delete the data you copied over from the sample DDL script.

- Run truncate nyc_yellow_taxi;

- Run drop table nyc_yellow_taxi;

- On the AWS CloudFormation console, navigate to the CloudFormation stacks and choose Delete. Delete the stacks in the following order:

- WebappSetup

- BackendSetup

- CognitoSetup

All resources created in this solution will be deleted.
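If you prefer to script the stack deletion instead of using the console, the ordering can be enforced with a small helper like the one below. This is a sketch under assumptions: the stack names are copied from the order given above and may carry a different prefix or suffix in your account, and the CloudFormation client is passed in as an argument (for example, boto3.client("cloudformation")).

```python
# Deletion order from the cleanup steps; adjust the names to match your stacks.
STACK_ORDER = ["WebappSetup", "BackendSetup", "CognitoSetup"]


def delete_stacks_in_order(cloudformation, stack_names):
    """Delete each stack and wait for it to finish before starting the next."""
    waiter = cloudformation.get_waiter("stack_delete_complete")
    for name in stack_names:
        cloudformation.delete_stack(StackName=name)
        # Block until this stack is gone so dependent stacks are removed first.
        waiter.wait(StackName=name)
    return list(stack_names)
```

Waiting between deletions matters because a later stack may export values or own resources that an earlier one still references.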

Monitoring

You can monitor your event-driven web application events, user activity, and API usage with Amazon CloudWatch and AWS CloudTrail. Most areas of this solution already have logging enabled. To view your API Gateway logs, you can turn on CloudWatch Logs. Lambda comes with default logging and monitoring and can be accessed with CloudWatch.

Security

You can secure access to the application using Amazon Cognito, which is a developer-centric and cost-effective customer authentication, authorization, and user management solution. It provides both identity store and federation options that can scale easily. Amazon Cognito supports logins with social identity providers and SAML or OIDC-based identity providers, and supports various compliance standards. It operates on open identity standards (OAuth2.0, SAML 2.0, and OpenID Connect). You can also integrate it with API Gateway to authenticate and authorize the REST API calls either using the Amazon Cognito client app or a Lambda function.

Considerations

By design, this application has a front-end client that initiates SQL queries against Amazon Redshift. An important consideration is the potential for malicious activity from the client, such as SQL injection. The current implementation does not allow this: the SQL queries preexist in your AWS environment and are DQL statements (they don’t alter the data or structure). However, as you develop this application to fit your business, you should evaluate these areas of risk.
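One way to keep client input away from the SQL text as you extend the application is to validate it against an allowlist and pass it as a named parameter. The sketch below illustrates this for the trip month used in the demo. The function name and the parameter name trip_month are hypothetical, and this is not necessarily how the sample code substitutes the client input.

```python
MONTHS = (
    "January", "February", "March", "April", "May", "June",
    "July", "August", "September", "October", "November", "December",
)
# Map each accepted month name to its number, for example "December" -> 12.
VALID_MONTHS = {name: number for number, name in enumerate(MONTHS, start=1)}


def build_query_parameters(trip_month):
    """Return Data API parameters for a month name, rejecting anything else."""
    month_number = VALID_MONTHS.get(str(trip_month).strip().capitalize())
    if month_number is None:
        raise ValueError(f"unsupported trip month: {trip_month!r}")
    # The Data API takes named parameters as name/value string pairs;
    # the SQL script would reference this one as :trip_month.
    return [{"name": "trip_month", "value": str(month_number)}]
```

The returned list can be passed as the Parameters argument of the Data API execute_statement call, so the client-supplied value is never concatenated into the query string.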

AWS offers a variety of security services to help you secure your workloads and applications in the cloud, including AWS Shield, AWS Network Firewall, AWS Web Application Firewall, and more. For more information and a full list, refer to Security, Identity, and Compliance on AWS.

Cost optimization

The AWS services that the CloudFormation templates provision in this solution are all serverless. In terms of cost optimization, you only pay for what you use. This model also allows you to scale without manual intervention. Review the following pages to determine the associated pricing for each service:

- Amazon API Gateway pricing

- AWS Lambda pricing

- Amazon EventBridge pricing

- Amazon S3 pricing

- Amazon DynamoDB pricing

- AWS Amplify pricing

- Amazon Cognito pricing

Conclusion

In this post, we showed you how to create an event-driven application using the Amazon Redshift Data API and API Gateway WebSocket and REST APIs. The solution helps you build data analytical web applications in an event-driven architecture, decouple your application, optimize long-running database query processes, and avoid unnecessary polling requests between the client and the backend.

You also used serverless technologies such as API Gateway, Lambda, DynamoDB, and EventBridge. You didn’t have to manage or provision any servers throughout this process.

This event-driven, serverless architecture offers greater extensibility and simplicity, making it easier to maintain and release new features. Adding new components or third-party products is also simplified.

With the instructions in this post and the generic CloudFormation templates we provided, you can customize your own event-driven application tailored to your business. For feedback or contributions, we welcome you to contact us through the AWS Samples GitHub Repository by creating an issue.

About the Authors

David Zhang is an AWS Data Architect in Global Financial Services. He specializes in designing and implementing serverless analytics infrastructure, data management, ETL, and big data systems. He helps customers modernize their data platforms on AWS. David is also an active speaker and contributor to AWS conferences, technical content, and open-source initiatives. During his free time, he enjoys volleyball, tennis, and weightlifting. Feel free to connect with him on LinkedIn.

Manash Deb is a Software Development Manager in the AWS Directory Service team. With over 18 years of software development experience, his passion is designing and delivering highly scalable, secure, zero-maintenance applications in the AWS identity and data analytics space. He loves mentoring and coaching others, acting as a catalyst and force multiplier, leading highly motivated engineering teams, and building large-scale distributed systems.

Pavan Kumar Vadupu Lakshman Manikya is an AWS Solutions Architect who helps customers design robust, scalable solutions across multiple industries. With a background in enterprise architecture and software development, Pavan has contributed to creating solutions that handle API security, API management, microservices, and geospatial information system use cases for his customers. He is passionate about learning new technologies and solving, automating, and simplifying customer problems using these solutions.

Build a Cloud Storage App in 30 Minutes

Post Syndicated from Pat Patterson original https://www.backblaze.com/blog/build-a-cloud-storage-app-in-30-minutes/