Post Syndicated from Kyaw Soe Hlaing original https://aws.amazon.com/blogs/architecture/field-notes-migrating-file-servers-to-amazon-fsx-and-integrating-with-aws-managed-microsoft-ad/

Amazon FSx provides AWS customers with the native compatibility of third-party file systems with feature sets for workloads such as Windows-based storage, high performance computing (HPC), machine learning, and electronic design automation (EDA). Amazon FSx automates time-consuming administration tasks such as hardware provisioning, software configuration, patching, and backups. Because Amazon FSx integrates these file systems with cloud-native AWS services, they become useful for a broader set of workloads.

Amazon FSx for Windows File Server provides fully managed file storage that is accessible over the industry-standard Server Message Block (SMB) protocol. Built on Windows Server, Amazon FSx delivers a wide range of administrative features such as data deduplication, end-user file restore, and Microsoft Active Directory (AD) integration.

In this post, I explain how to migrate files and file shares from on-premises servers to Amazon FSx with AWS DataSync in a domain migration scenario. Customers often migrate their file servers to Amazon FSx as part of a migration from an on-premises Active Directory to AWS Managed Microsoft AD, replacing the file servers with Amazon FSx during the directory migration.

Prerequisites

Before you begin, perform the steps outlined in this blog to migrate the user accounts and groups to the managed Active Directory.

Walkthrough

There are numerous ways to perform the Active Directory migration. Generally, the following five steps are taken:

- Establish a two-way forest trust between on-premises AD and AWS Managed AD

- Migrate user accounts and groups with the Active Directory Migration Tool (ADMT)

- Duplicate Access Control List (ACL) permissions in the file server

- Migrate files and folders with existing ACL to Amazon FSx using AWS DataSync

- Migrate user computers

In this post, I focus on duplicating ACL permissions and migrating files and folders using Amazon FSx and AWS DataSync. To duplicate ACL permissions on file servers, I use the SubInACL tool, which is available from the Microsoft website.

Duplication of the ACL is required because users want to seamlessly access file shares once their computers are migrated to AWS Managed AD. For that to work, all migrated files and folders must carry permissions for the Managed AD user and group objects. For enterprises, the migration of user computers does not happen overnight. Normally, migration takes place in batches or phases. With ACL duplication, both migrated and non-migrated users can access their respective file shares seamlessly during and after migration.

Duplication of Access Control List (ACL)

Before we proceed with ACL duplication, we must ensure that the migration of user accounts and groups has been completed. In my demo environment, I have already migrated on-premises users to the Managed Active Directory. For this walkthrough, I assume that the migrated users are identical to their on-premises counterparts. There might be a scenario where migrated user accounts are named differently, for example with a different sAMAccountName. In that case, we need to handle the difference during ACL duplication with SubInACL. For more information about syntax, refer to the SubInACL documentation.

As shown in the following screenshots, I have two users created in the on-premises Active Directory (onprem.local), and the same two users have been created in the Managed Active Directory (corp.example.com).

In the following screenshot, I have a shared folder called “HR_Documents” on an on-premises file server. Different users have different access rights to that folder. For example, John Smith has “Full Control” but Onprem User1 only has “Read & Execute”. Our plan is to grant the same access rights to the identical users from the Managed Active Directory, here corp.example.com, so that once John Smith is migrated to the Managed AD, he can access shared folders in Amazon FSx using his Managed Active Directory credentials.

Let’s verify the existing permission in the “HR_Documents” folder. Two users from onprem.local are found with different access rights.

Now it’s time to install SubInACL.

We install it on our on-premises file server. After the SubInACL tool is installed, it can be found in the “C:\Program Files (x86)\Windows Resource Kits\Tools” folder by default. To perform an ACL duplication, open a command prompt as administrator and run the following command:

Subinacl /outputlog=C:\temp\HR_document_log.txt /errorlog=C:\temp\HR_document_Err_log.txt /Subdirectories C:\HR_Documents\* /migratetodomain=onprem=corp

There are several parameters that I am using in the command:

- Outputlog = where log file is saved

- ErrorLog = where error log file is saved

- Subdirectories = to apply permissions including subfolders and files

- Migratetodomain= NetBIOS name of source domain and destination domain
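When many shares need the same treatment, the command above can be assembled from its parts rather than typed by hand. The following is a minimal Python sketch, not part of the original walkthrough: the helper name `build_subinacl_command` is hypothetical, and it only reproduces the four parameters described above without adding any other SubInACL switches.

```python
import subprocess  # only needed if you uncomment the run() call below


def build_subinacl_command(folder, source_netbios, dest_netbios,
                           output_log, error_log):
    """Return the argument list for the SubInACL call shown in this post.

    folder          -- folder whose contents receive the duplicated ACEs
    source_netbios  -- NetBIOS name of the on-premises domain
    dest_netbios    -- NetBIOS name of the Managed AD domain
    output_log      -- path of the output log file
    error_log       -- path of the error log file
    """
    return [
        "subinacl",
        f"/outputlog={output_log}",
        f"/errorlog={error_log}",
        "/subdirectories",
        folder.rstrip("\\") + "\\*",   # e.g. C:\HR_Documents\*
        f"/migratetodomain={source_netbios}={dest_netbios}",
    ]


cmd = build_subinacl_command(
    r"C:\HR_Documents", "onprem", "corp",
    r"C:\temp\HR_document_log.txt",
    r"C:\temp\HR_document_Err_log.txt",
)
print(" ".join(cmd))

# To execute on the file server from an elevated prompt (assumes
# subinacl.exe is on PATH):
# subprocess.run(cmd, check=True)
```

Building the arguments as a list keeps each share's log files separate and avoids quoting mistakes when folder names contain spaces.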

If the command runs successfully, you should be able to see a summary of the results. If there are no errors or failures, you can verify that the ACL permissions were duplicated as expected by looking at the folders and files. In our case, we can see that one ACL entry for the identical account from corp.example.com has been added.

Note: you will always see two ACL entries, one from onprem.local and another from the corp.example.com domain, on all the files and folders that were included in the migration. Permissions are now applied at both the folder level and the file level.
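Checking every folder by eye does not scale, so the "two entries per account" rule can also be expressed as a small check. The sketch below is illustrative only: it assumes you have already exported the ACL of a file or folder as `(account, rights)` pairs by some other means, and the account names `jsmith` and `user1` are hypothetical stand-ins for the users in the screenshots.

```python
def missing_duplicates(aces, source_domain, dest_domain):
    """Return source-domain ACEs that lack a matching destination-domain ACE.

    aces is an iterable of (account, rights) pairs, where account has the
    form "DOMAIN\\user". Domain and user names are compared
    case-insensitively; rights must match exactly.
    """
    src, dst = source_domain.upper(), dest_domain.upper()
    present = set()
    for account, rights in aces:
        domain, _, user = account.partition("\\")
        present.add((domain.upper(), user.lower(), rights))

    missing = []
    for domain, user, rights in sorted(present):
        if domain == src and (dst, user, rights) not in present:
            missing.append((f"{source_domain}\\{user}", rights))
    return missing


# Hypothetical ACL export for one folder.
acl = [
    ("ONPREM\\jsmith", "FullControl"),
    ("CORP\\jsmith", "FullControl"),
    ("ONPREM\\user1", "ReadAndExecute"),   # not yet duplicated
]
print(missing_duplicates(acl, "ONPREM", "CORP"))
```

An empty result means every on-premises entry has a counterpart from the Managed AD domain with the same rights.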

Migrate files and folders using AWS DataSync

AWS DataSync is an online data transfer service that simplifies, automates, and accelerates moving data between on-premises storage systems and AWS Storage services such as Amazon S3, Amazon Elastic File System (Amazon EFS), or Amazon FSx for Windows File Server. Manual tasks related to data transfers can slow down migrations and burden IT operations. AWS DataSync reduces or automatically handles many of these tasks, including scripting copy jobs, scheduling and monitoring transfers, validating data, and optimizing network utilization.

Create an AWS DataSync agent

An AWS DataSync agent is deployed as a virtual machine in an on-premises data center. The agent can run on ESXi, KVM, and Microsoft Hyper-V hypervisors. It is used to access on-premises storage systems and transfer data to the AWS DataSync managed service running on AWS. AWS DataSync always performs incremental copies by comparing the source with the destination and copying only files that are new or have changed.

AWS DataSync supports the following source location types to migrate data from:
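To make the idea of an incremental copy concrete, here is a conceptual Python sketch. It is not how AWS DataSync is implemented internally; it simply assumes each side can be summarized as a mapping from relative path to `(size, modification time)` and selects the entries that are new or differ.

```python
def files_to_transfer(source, destination):
    """Return the sorted paths that are new or changed on the source.

    Both arguments map a relative path to a (size_bytes, mtime) tuple.
    A path is selected when it is absent from the destination or when
    its tuple differs from the destination's tuple.
    """
    return sorted(
        path for path, meta in source.items()
        if destination.get(path) != meta
    )


source = {
    "a.txt": (10, 100),   # unchanged
    "b.txt": (20, 200),   # modified since the last run
    "c.txt": (5, 300),    # new file
}
destination = {
    "a.txt": (10, 100),
    "b.txt": (20, 150),
}
print(files_to_transfer(source, destination))  # ['b.txt', 'c.txt']
```

Because unchanged files are skipped, repeated runs after the first full copy move only the delta.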

- Network File System (NFS)

- Server Message Block (SMB)

In this post, I use SMB as the source location, since I am migrating from an on-premises Windows file server. AWS DataSync supports SMB 2.1 and SMB 3.0 protocols.

AWS DataSync saves metadata and special files when copying to and from file systems. When files are copied between an SMB file share and Amazon FSx for Windows File Server, AWS DataSync copies the following metadata:

- File timestamps: access time, modification time, and creation time

- File owner and file group security identifiers (SIDs)

- Standard file attributes

- NTFS discretionary access control lists (DACLs): access control entries (ACEs) that determine whether to grant access to an object
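If you create the task through the API instead of the console, the metadata listed above corresponds to fields in the task's `Options` structure. The following Python sketch shows one plausible set of values for an SMB-to-FSx transfer. The helper name is hypothetical, the choice of values (in particular setting the POSIX-related fields to `NONE`) is my assumption for this scenario, and you should confirm it against the DataSync API reference before use.

```python
def smb_to_fsx_options():
    """Return task options intended to carry over Windows file metadata.

    Assumed values for an SMB source and an FSx for Windows destination.
    """
    return {
        # Timestamps: Atime BEST_EFFORT is paired with Mtime PRESERVE.
        "Atime": "BEST_EFFORT",
        "Mtime": "PRESERVE",
        # Copy the owner SID and the NTFS DACL (but not the SACL).
        "SecurityDescriptorCopyFlags": "OWNER_DACL",
        # POSIX ownership and permissions do not apply to SMB shares.
        "Uid": "NONE",
        "Gid": "NONE",
        "PosixPermissions": "NONE",
        # Copy only data that differs, then verify the whole destination.
        "TransferMode": "CHANGED",
        "VerifyMode": "POINT_IN_TIME_CONSISTENT",
    }


options = smb_to_fsx_options()
print(options["SecurityDescriptorCopyFlags"])

# With boto3 installed and credentials configured, the options could be
# passed when creating the task (both ARNs are placeholders):
# import boto3
# boto3.client("datasync").create_task(
#     SourceLocationArn="<smb-location-arn>",
#     DestinationLocationArn="<fsx-location-arn>",
#     Options=options,
# )
```

`OWNER_DACL` is the setting that keeps the duplicated ACL entries intact at the destination, which is the point of the earlier SubInACL step.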

Data Synchronization with AWS DataSync

When a task starts, AWS DataSync goes through several stages. It begins by examining the file systems, followed by transferring data to the destination. Once the data transfer is completed, it verifies consistency between the source and destination file systems. You can review detailed information about the data synchronization stages.

DataSync Endpoints

You can activate your agent by using one of the following endpoint types:

- Public endpoints – If you use public endpoints, all communication from your DataSync agent to AWS occurs over the public internet.

- Federal Information Processing Standard (FIPS) endpoints – If you need to use FIPS 140-2 validated cryptographic modules when accessing the AWS GovCloud (US-East) or AWS GovCloud (US-West) Region, use this endpoint to activate your agent. You use the AWS CLI or API to access this endpoint.

- Virtual private cloud (VPC) endpoints – If you use a VPC endpoint, all communication from AWS DataSync to AWS services occurs through the VPC endpoint in your VPC in AWS. This approach provides a private connection between your self-managed data center, your VPC, and AWS services. It increases the security of your data as it is copied over the network.

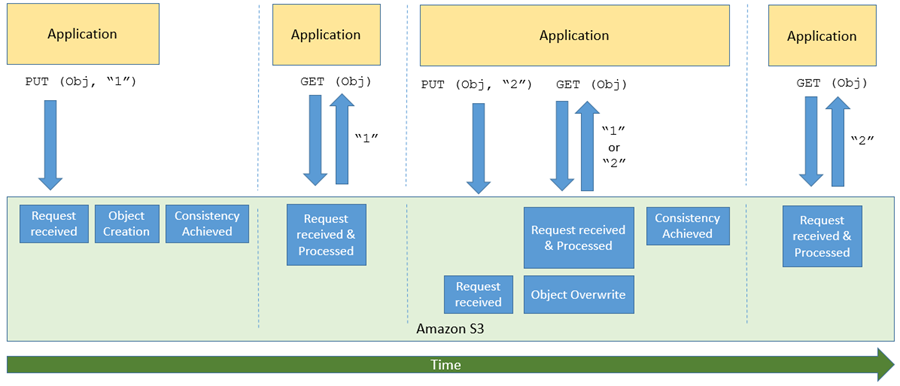

In my demo environment, I have implemented AWS DataSync as shown in the diagram. The DataSync agent can run on VMware ESXi, Microsoft Hyper-V, or KVM in the customer’s on-premises data center.

Once the AWS DataSync agent setup is completed and a task that defines the source file servers and the destination Amazon FSx server has been added, you can verify the agent status in the AWS Management Console.

Select Task and then choose Start to start copying files and folders. This starts the replication task; alternatively, you can wait for the next hourly scheduled run. You can check the History tab to see a history of the replication task executions.
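The same start-and-monitor flow can be scripted. The sketch below is a minimal illustration rather than a production tool: `run_task` is a hypothetical helper that accepts any client object exposing `start_task_execution` and `describe_task_execution` (as the boto3 DataSync client does), starts the task, and polls until it reports `SUCCESS` or `ERROR`, recording each status it passes through.

```python
import time

TERMINAL_STATUSES = {"SUCCESS", "ERROR"}


def run_task(client, task_arn, poll_seconds=30, sleep=time.sleep):
    """Start a DataSync task and poll until it finishes.

    Returns (final_status, statuses_seen), where statuses_seen lists each
    distinct status in the order it was observed.
    """
    response = client.start_task_execution(TaskArn=task_arn)
    execution_arn = response["TaskExecutionArn"]

    seen = []
    while True:
        status = client.describe_task_execution(
            TaskExecutionArn=execution_arn
        )["Status"]
        if not seen or seen[-1] != status:
            seen.append(status)       # record only status changes
        if status in TERMINAL_STATUSES:
            return status, seen
        sleep(poll_seconds)


# Usage with boto3 (the task ARN is a placeholder):
# import boto3
# status, seen = run_task(boto3.client("datasync"), "<task-arn>")
# print(status, seen)
```

Passing the client in as a parameter keeps the helper easy to exercise with a stub before pointing it at a real task.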

Congratulations! You have replicated the contents of an on-premises file server to Amazon FSx. Let’s make sure the ACL permissions are still intact at the destination after migration. As shown in the following screenshots, the ACL permissions on the Payroll folder remain unchanged, with entries for both on-premises users and Managed AD users. Once users’ computers are migrated to the Managed AD, they can access the same file share on the Amazon FSx server using their Managed AD credentials.

Cleaning up

If you are testing by following the preceding steps in your own account, delete the following resources to avoid incurring future charges:

- EC2 instances

- Managed AD

- Amazon FSx file system

- AWS DataSync

Conclusion

You have learned how to duplicate ACL permissions and shared folder permissions during migration of file servers to Amazon FSx. This process provides a seamless migration experience for users. Once users’ computers are migrated to the Managed AD, they only need to remap shared folders from Amazon FSx. This can be automated by pushing down shared folder mappings with a Group Policy. If new files or folders are created on the source file server, AWS DataSync synchronizes them to the Amazon FSx server.

For customers planning a domain migration from on-premises to AWS Managed Microsoft AD, migrating resources such as file servers is common. Handling ACL permissions plays a vital role in providing a seamless migration experience. Duplicating the ACLs is one option. Alternatively, ADMT can be used to migrate SID information from the source domain to the destination domain. To migrate SID history, SID filtering needs to be disabled during migration.

If you want to provide feedback about this post, you are welcome to submit it in the comments section below.