Post Syndicated from Adrianna Kurzela original https://aws.amazon.com/blogs/big-data/level-up-your-react-app-with-amazon-quicksight-how-to-embed-your-dashboard-for-anonymous-access/

Using embedded analytics from Amazon QuickSight can simplify the process of equipping your application with functional visualizations without any complex development. There are multiple ways to embed QuickSight dashboards into an application. In this post, we look at how to do it using React and the Amazon QuickSight Embedding SDK.

Dashboard consumers often don’t have a user in your AWS account and therefore lack access to the dashboard. To let them consume data, the dashboard needs to be accessible to anonymous users. Let’s look at the steps required to enable an unauthenticated user to view your QuickSight dashboard in your React application.

Solution overview

Our solution uses the following key services:

- Amazon API Gateway

- AWS Identity and Access Management (IAM)

- AWS Lambda

- Amazon QuickSight (with session capacity pricing enabled)

After the web page loads, the browser makes a call to API Gateway, which invokes a Lambda function that calls the QuickSight API to generate a dashboard URL for an anonymous user. The Lambda function needs to assume an IAM role with the required permissions. The following diagram shows an overview of the architecture.

Prerequisites

You must have the following prerequisites:

- An AWS account

- A QuickSight account with session capacity pricing enabled

- QuickSight dashboards (for setup instructions, refer to Tutorial: Create an Amazon QuickSight dashboard using sample data)

- A sample React application (to get started, refer to Start a New React Project)

Set up permissions for unauthenticated viewers

In your account, create an IAM policy for the role that your application will assume on behalf of the viewer:

- On the IAM console, choose Policies in the navigation pane.

- Choose Create policy.

- On the JSON tab, enter the following policy code:

Make sure to replace <YOUR_DASHBOARD_ID> with your dashboard ID. Note this ID to use in a later step as well.

The second statement object, which grants logs permissions, is optional. It allows the function to create a log group with the specified name, create a log stream for the specified log group, and upload a batch of log events to the specified log stream.

The first statement allows the GenerateEmbedUrlForAnonymousUser action on the dashboards whose IDs you insert in the placeholder.
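The policy listing itself is not reproduced here, so the following is only a minimal sketch of what a policy with these two statements could look like, written as a Python helper that prints the JSON to paste into the console. The function name `build_anonymous_embed_policy`, the wildcard Region and account fields in the ARNs, the `namespace/default` resource, and the `"Resource": "*"` scope on the logs statement are assumptions, not the original listing.

```python
import json


def build_anonymous_embed_policy(dashboard_ids):
    """Build an IAM policy document for anonymous dashboard embedding (sketch)."""
    # One ARN per dashboard; the wildcards leave Region and account open (assumption).
    dashboard_arns = [
        f"arn:aws:quicksight:*:*:dashboard/{dashboard_id}"
        for dashboard_id in dashboard_ids
    ]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Lets the role generate embed URLs for the listed dashboards.
                "Effect": "Allow",
                "Action": ["quicksight:GenerateEmbedUrlForAnonymousUser"],
                "Resource": ["arn:aws:quicksight:*:*:namespace/default"] + dashboard_arns,
            },
            {
                # Optional: lets the Lambda function write to CloudWatch Logs.
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": "*",  # assumption: narrow this to your log group if you prefer
            },
        ],
    }


if __name__ == "__main__":
    # Replace the placeholder with your own dashboard ID before using the output.
    print(json.dumps(build_anonymous_embed_policy(["<YOUR_DASHBOARD_ID>"]), indent=4))
```

Passing several IDs produces one dashboard ARN per ID, which matches the idea of a list of dashboard IDs in the placeholder.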

- Enter a name for your policy (for example, AnonymousEmbedPolicy) and choose Create policy.

Next, we create a role and attach this policy to the role.

- Choose Roles in the navigation pane, then choose Create role.

- Choose Lambda for the trusted entity.

- Search for and select AnonymousEmbedPolicy, then choose Next.

- Enter a name for your role, such as AnonymousEmbedRole.

- Make sure the policy name is included in the Add permissions section.

- Finish creating your role.

You have just created the AnonymousEmbedRole execution role. You can now move to the next step.

Generate an anonymous embed URL Lambda function

In this step, we create a Lambda function that calls QuickSight to generate an embed URL for an anonymous user. The domain that hosts your application needs to be allowed in QuickSight. There are two ways to do this:

- By adding the URL to the list of allowed domains in the Amazon QuickSight admin console (explained later in the [Optional] Add your domain in QuickSight section).

- [Recommended] By passing the allowed domain at runtime in the API call that generates the embed URL.

Use option 1 when you need to persist the allowed domains; domains passed at runtime are removed after 30 minutes, which is equivalent to the session duration. For other use cases, we recommend the second option (described and implemented below).

On the Lambda console, create a new function.

- Select Author from scratch.

- For Function name, enter a name, such as AnonymousEmbedFunction.

- For Runtime, choose Python 3.9.

- For Execution role, choose Use an existing role.

- Choose the role AnonymousEmbedRole.

- Choose Create function.

- On the function details page, navigate to the Code tab and enter the following code:

The code returns http://localhost:3000 as the allowed origin. If you don’t use localhost, replace it in the return values with the hostname of your application. Before you move to production, don’t forget to replace http://localhost:3000 with your domain.
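The function code is not reproduced here, so the following is a hedged sketch of a handler along the lines described, not the original listing. It uses the boto3 `generate_embed_url_for_anonymous_user` call and the `DashboardIdList` and `DashboardRegion` environment variables configured in the next steps. The comma-separated format of `DashboardIdList`, the `dashboard` query string parameter, the helper names, and the optional `quicksight` argument (added only so the handler can be exercised without AWS credentials) are assumptions.

```python
import json
import os

ALLOWED_ORIGIN = "http://localhost:3000"  # replace with your application's domain


def build_embed_request(account_id, region, dashboard_ids, initial_dashboard_id):
    """Assemble keyword arguments for generate_embed_url_for_anonymous_user."""
    if initial_dashboard_id not in dashboard_ids:
        raise ValueError(f"Dashboard {initial_dashboard_id!r} is not in DashboardIdList")
    return {
        "AwsAccountId": account_id,
        "Namespace": "default",
        "AuthorizedResourceArns": [
            f"arn:aws:quicksight:{region}:{account_id}:dashboard/{dashboard_id}"
            for dashboard_id in dashboard_ids
        ],
        "ExperienceConfiguration": {
            "Dashboard": {"InitialDashboardId": initial_dashboard_id}
        },
        # Allow the application's domain at runtime (the recommended option).
        "AllowedDomains": [ALLOWED_ORIGIN],
    }


def respond(status_code, payload):
    """Shape a Lambda proxy integration response with a CORS header."""
    return {
        "statusCode": status_code,
        "headers": {
            "Access-Control-Allow-Origin": ALLOWED_ORIGIN,
            "Content-Type": "application/json",
        },
        "body": json.dumps(payload),
    }


def lambda_handler(event, context, quicksight=None):
    region = os.environ["DashboardRegion"]
    # Assumption: DashboardIdList is a comma-separated string of dashboard IDs.
    dashboard_ids = [d.strip() for d in os.environ["DashboardIdList"].split(",") if d.strip()]
    # Assumption: the caller selects a dashboard with ?dashboard=<id>;
    # without it, the first configured dashboard is used.
    params = event.get("queryStringParameters") or {}
    dashboard_id = params.get("dashboard") or (dashboard_ids[0] if dashboard_ids else "")
    # The account ID is the fifth colon-separated field of the function's own ARN.
    account_id = context.invoked_function_arn.split(":")[4]
    try:
        request = build_embed_request(account_id, region, dashboard_ids, dashboard_id)
    except ValueError as error:
        return respond(400, {"error": str(error)})
    if quicksight is None:
        import boto3  # provided by the Lambda Python runtime
        quicksight = boto3.client("quicksight", region_name=region)
    result = quicksight.generate_embed_url_for_anonymous_user(**request)
    return respond(200, {"EmbedUrl": result["EmbedUrl"]})
```

Rejecting IDs that are not in `DashboardIdList` keeps callers from requesting dashboards the role was never meant to expose; the response shape (`statusCode`, `headers`, `body`) is what Lambda proxy integration expects.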

- On the Configuration tab, under General configuration, choose Edit.

- Increase the timeout from 3 seconds to 30 seconds, then choose Save.

- Under Environment variables, choose Edit.

- Add the following variables:

  - Add DashboardIdList and list your dashboard IDs.

  - Add DashboardRegion and enter the Region of your dashboard.

- Choose Save.

Your configuration should look similar to the following screenshot.

- On the Code tab, choose Deploy to deploy the function.

Set up API Gateway to invoke the Lambda function

To set up API Gateway to invoke the function you created, complete the following steps:

- On the API Gateway console, navigate to the REST API section and choose Build.

- Under Create new API, select New API.

- For API name, enter a name (for example, QuicksightAnonymousEmbed).

- Choose Create API.

- On the Actions menu, choose Create resource.

- For Resource name, enter a name (for example, anonymous-embed).

Now, let’s create a method.

- Choose the anonymous-embed resource and on the Actions menu, choose Create method.

- Choose GET under the resource name.

- For Integration type, select Lambda.

- Select Use Lambda Proxy Integration.

- For Lambda function, enter the name of the function you created.

- Choose Save, then choose OK.

Now we’re ready to deploy the API.

- On the Actions menu, choose Deploy API.

- For Deployment stage, select New stage.

- Enter a name for your stage, such as embed.

- Choose Deploy.
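Once the API is deployed, you can check the endpoint before wiring it into React. The following sketch assembles the invoke URL from its parts, following the URL pattern shown later in this post, and requests it with the Python standard library. The helper names and their parameters are hypothetical, and the shape of the JSON body depends on what your Lambda function returns.

```python
import json
import urllib.request


def build_invoke_url(api_id, region, stage, resource):
    """Compose the invoke URL for a deployed REST API stage and resource."""
    return f"https://{api_id}.execute-api.{region}.amazonaws.com/{stage}/{resource}"


def fetch_embed_response(invoke_url, timeout=30):
    """Send a GET request to the endpoint and return the parsed JSON body."""
    with urllib.request.urlopen(invoke_url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `build_invoke_url("<API_ID>", "<REGION>", "embed", "anonymous-embed")` gives the URL to pass to `fetch_embed_response`, using the stage and resource names chosen above.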

[Optional] Add your domain in QuickSight

If you added allowed domains in the Generate an anonymous embed URL Lambda function section, you can skip to the Turn on capacity pricing section.

To add your domain to the allowed domains in QuickSight, complete the following steps:

- On the QuickSight console, choose the user menu, then choose Manage QuickSight.

- Choose Domains and Embedding in the navigation pane.

- For Domain, enter your domain (http://localhost:<PortNumber>).

Make sure to replace <PortNumber> to match your local setup.

- Choose Add.

Make sure to replace the localhost domain with the one you will use after testing.

Turn on capacity pricing

If you don’t have session capacity pricing enabled, follow the steps in this section. This feature must be enabled before you can proceed.

Capacity pricing allows QuickSight customers to purchase reader sessions in bulk without having to provision individual readers in QuickSight. Capacity pricing is ideal for embedded applications or large-scale business intelligence (BI) deployments. For more information, visit Amazon QuickSight Pricing.

To turn on capacity pricing, complete the following steps:

- On the Manage QuickSight page, choose Your Subscriptions in the navigation pane.

- In the Capacity pricing section, select Get monthly subscription.

- Choose Confirm subscription.

To learn more about capacity pricing, see New in Amazon QuickSight – session capacity pricing for large scale deployments, embedding in public websites, and developer portal for embedded analytics.

Set up your React application

To set up your React application, complete the following steps:

- In your React project folder, go to your root directory and run npm i amazon-quicksight-embedding-sdk to install the amazon-quicksight-embedding-sdk package.

- In your App.js file, replace the following:

  - Replace YOUR_API_GATEWAY_INVOKE_URL/RESOURCE_NAME with your API Gateway invoke URL and your resource name (for example, https://xxxxxxxx.execute-api.xx-xxx-x.amazonaws.com/embed/anonymous-embed).

  - Replace YOUR_DASHBOARD1_ID with the first dashboardId from your DashboardIdList. This is the dashboard that will be shown on the initial render.

  - Replace YOUR_DASHBOARD2_ID with the second dashboardId from your DashboardIdList.

The following code snippet shows an example of the App.js file in your React project. The code is a React component that embeds a QuickSight dashboard based on the selected dashboard ID. The code contains the following key components:

- State hooks – Two state hooks are defined using the useState() hook from React:

  - dashboard – Holds the currently selected dashboard ID.

  - quickSightEmbedding – Holds the QuickSight embedding object returned by the embedDashboard() function.

- Ref hook – A ref hook is defined using the useRef() hook from React. It’s used to hold a reference to the DOM element where the QuickSight dashboard will be embedded.

- useEffect() hook – The useEffect() hook triggers the embedding of the QuickSight dashboard whenever the selected dashboard ID changes. It first fetches the dashboard URL for the selected ID from the API Gateway endpoint using the fetch() method. After it retrieves the URL, it calls the embed() function with the URL as the argument.

- Change handler – The changeDashboard() function is a simple event handler that updates the dashboard state whenever the user selects a different dashboard from the drop-down menu. As soon as a new dashboard ID is set, the useEffect() hook is triggered.

- 10-millisecond timeout – The timeout introduces a small delay of 10 milliseconds before the API call is made. This delay can be useful when you want to avoid immediate API calls or prevent excessive requests when the component renders frequently, because it gives the component some time to settle before initiating the API request. Because we’re building the application in development mode, the timeout helps avoid errors caused by the double run of useEffect within StrictMode. For more information, refer to Updates to Strict Mode.

See the following code:

Next, replace the contents of your App.css file, which is used to style and lay out your web page, with the content from the following code snippet:

Now it’s time to test your app. Start your application by running npm start in your terminal. The following screenshots show examples of your app as well as the dashboards it can display.

Conclusion

In this post, we showed you how to embed a QuickSight dashboard into a React application using the Amazon QuickSight Embedding SDK. Sharing your dashboard with anonymous users allows them to access your dashboard without granting them access to your AWS account. There are also other ways to share your dashboard anonymously, such as using 1-click public embedding.

Join the QuickSight Community to ask, answer, and learn with others and explore additional resources.

About the Author

Adrianna is a Solutions Architect at AWS Global Financial Services. Having been a part of Amazon since August 2018, she has had the chance to be involved in both the operations and the cloud business of the company. Currently, she builds software assets that demonstrate innovative use of AWS services, tailored to specific customer use cases. On a daily basis, she actively engages with various aspects of technology, but her true passion lies in the combination of web development and analytics.

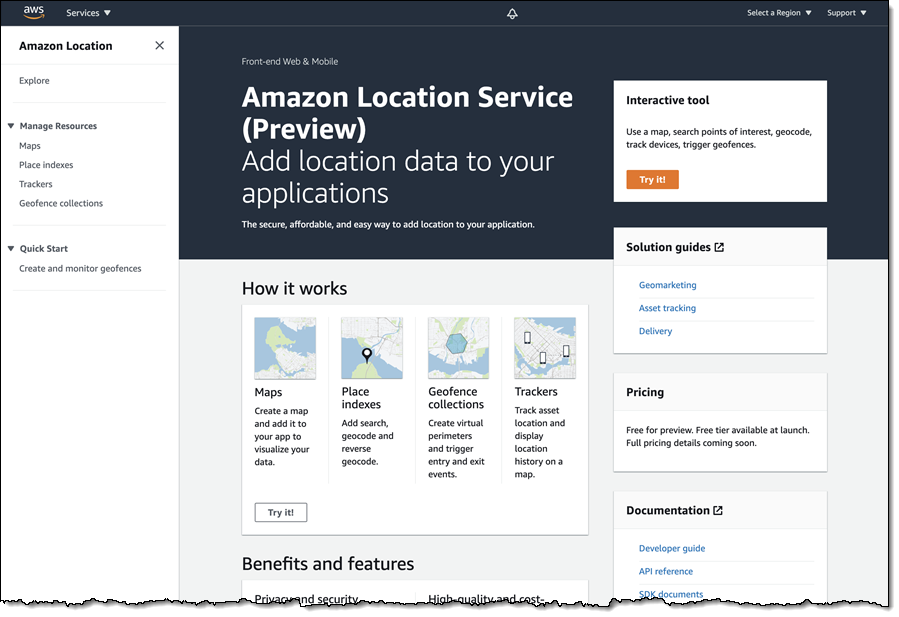

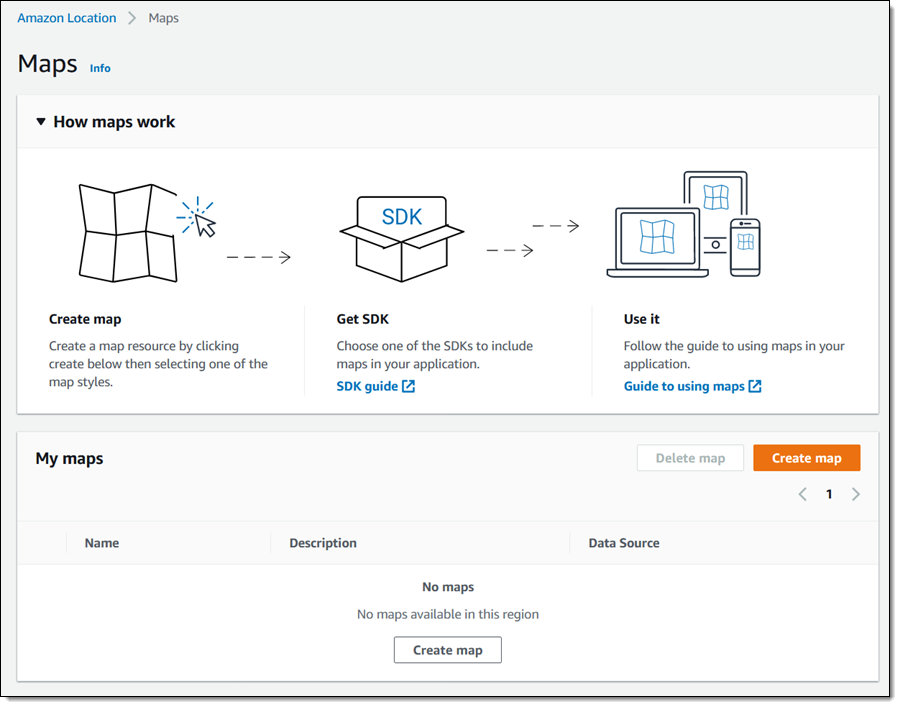

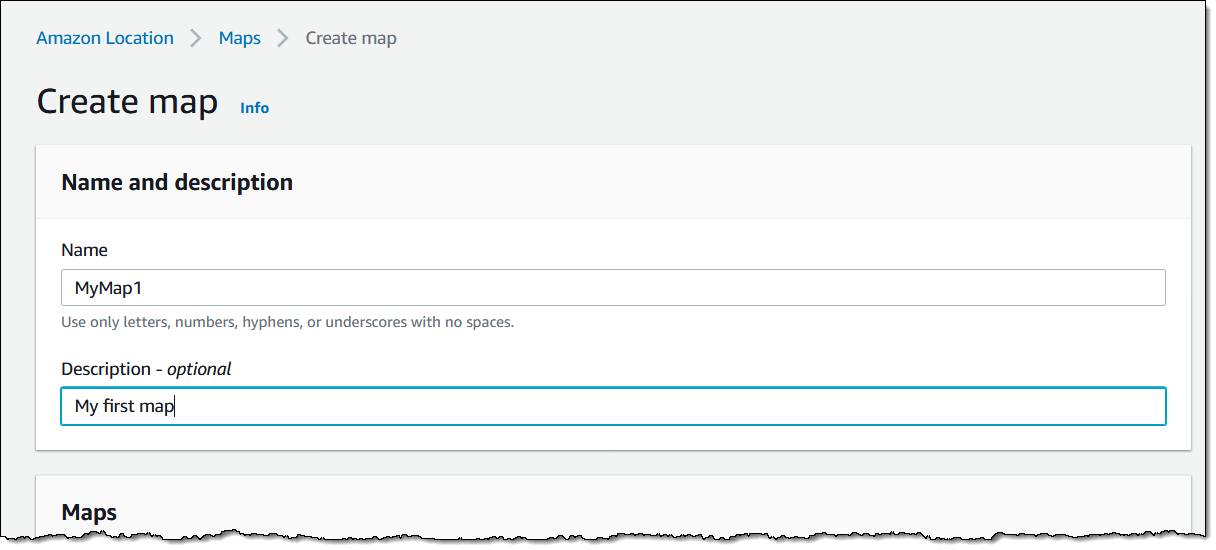

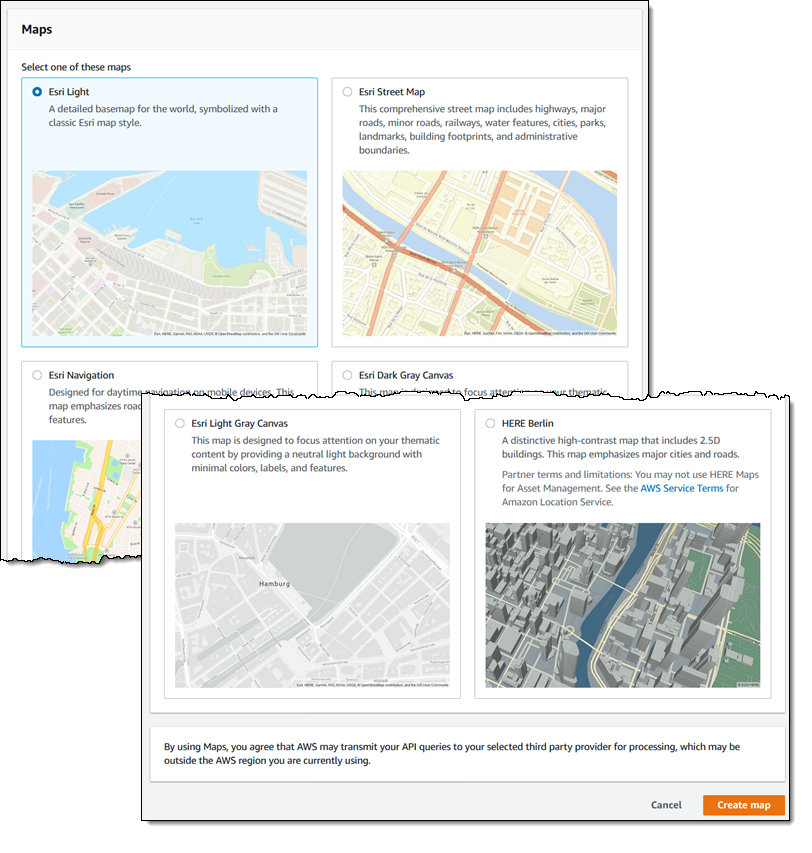

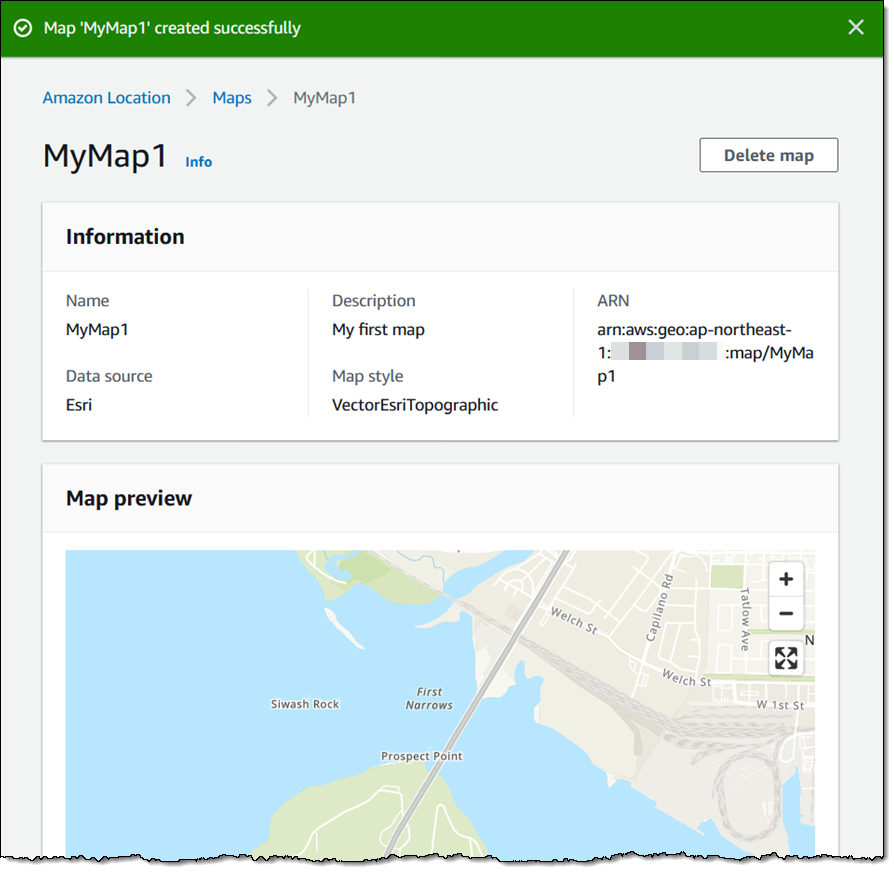
