Post Syndicated from The Hook Up original https://www.youtube.com/watch?v=hBEb_FCLRU8

A Name Resolver for the Distributed Web

Post Syndicated from Thibault Meunier original https://blog.cloudflare.com/cloudflare-distributed-web-resolver/

The Domain Name System (DNS) matches names to resources. Instead of typing 104.18.26.46 to access the Cloudflare Blog, you type blog.cloudflare.com and, using DNS, the domain name resolves to 104.18.26.46, the Cloudflare Blog IP address.

Similarly, distributed systems such as Ethereum and IPFS rely on a naming system to be usable. DNS could be used, but its resolvers’ attributes run contrary to properties valued in distributed Web (dWeb) systems. Namely, dWeb resolvers ideally (i) provide locally verifiable data, (ii) have built-in history, and (iii) have no single trust anchor.

At Cloudflare Research, we have been exploring alternative ways to resolve queries to responses that align with these attributes. We are proud to announce a new resolver for the Distributed Web, where IPFS content indexed by the Ethereum Name Service (ENS) can be accessed.

To discover how it has been built, and how you can use it today, read on.

Welcome to the Distributed Web

IPFS and its addressing system

The InterPlanetary FileSystem (IPFS) is a peer-to-peer network for storing content on a distributed file system. It is composed of a set of computers called nodes that store and relay content using a common addressing system.

This addressing system relies on the use of Content IDentifiers (CID). CIDs are self-describing identifiers, because the identifier is derived from the content itself. For example, QmXoypizjW3WknFiJnKLwHCnL72vedxjQkDDP1mXWo6uco is the CID version 0 (CIDv0) of the wikipedia-on-ipfs homepage.

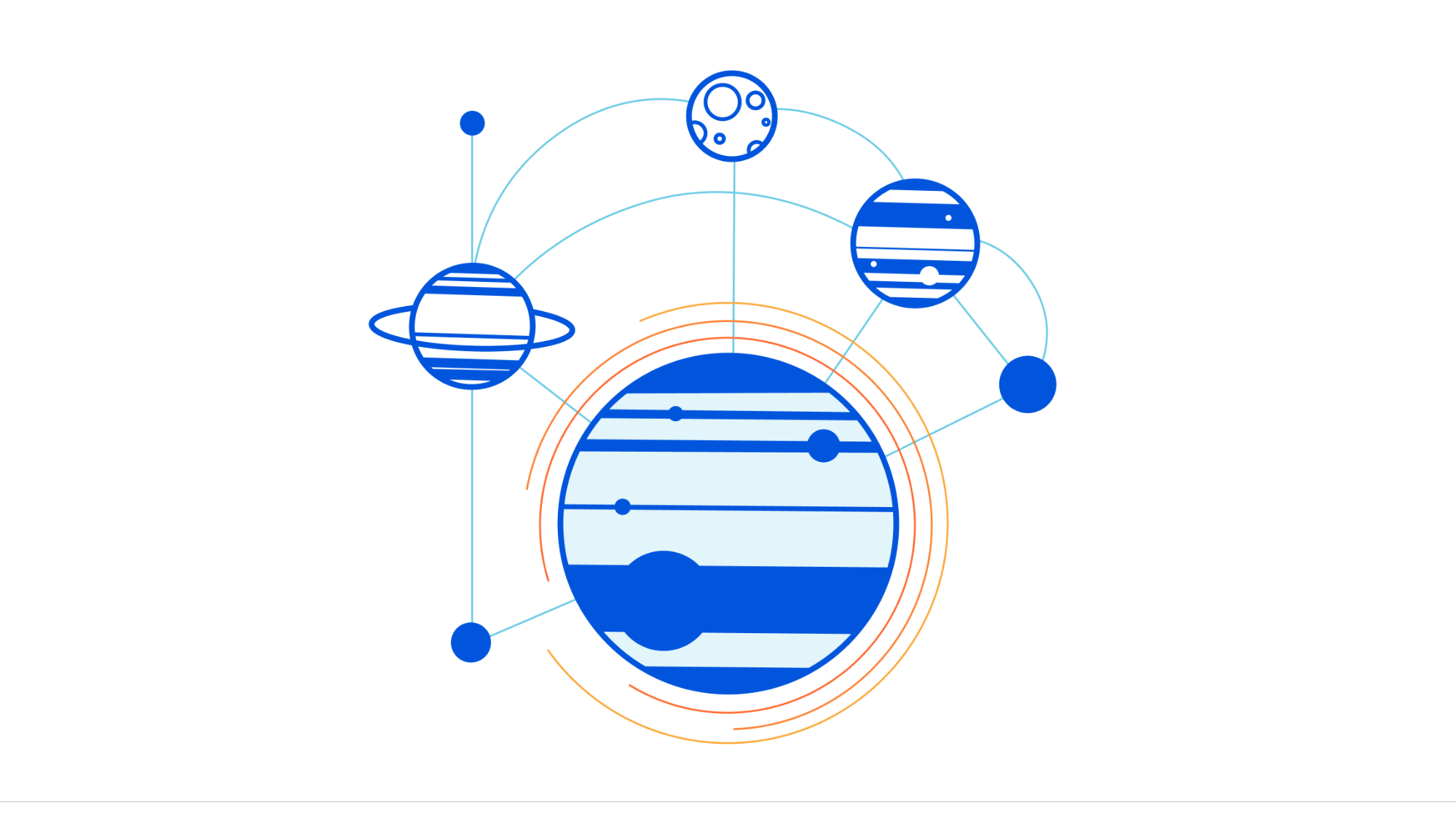

To understand why a CID is defined as self-describing, we can look at its binary representation. For QmXoypizjW3WknFiJnKLwHCnL72vedxjQkDDP1mXWo6uco, the CID looks like the following:

The first byte identifies the hash function used to generate the CID (sha2-256 in this case); next comes the length of the digest (32 bytes for a sha2-256 hash), and finally the digest of the content itself. Looking up the first byte in the multicodec table tells you how the content was hashed:

| Name | Code (in hexadecimal) |
|---|---|
| identity | 0x00 |
| sha1 | 0x11 |
| sha2-256 | 0x12 = 00010010 |
| keccak-256 | 0x1b |

This encoding mechanism is useful, because it creates a unique and upgradable content-addressing system across multiple protocols.
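To make the byte layout concrete, here is a minimal Python sketch that decodes a CIDv0 string (a base58btc-encoded multihash) and splits it into the hash-function code, the digest length, and the digest. It assumes CIDv0 only (CIDv1 adds version and codec prefixes that this sketch ignores), knows only the four codes from the table above, and its function names are illustrative rather than taken from any IPFS library.

```python
# Base58btc alphabet used by CIDv0 strings (no 0, O, I, or l).
B58_ALPHABET = "123456789ABCDEFGHJKLMNPQRSTUVWXYZabcdefghijkmnopqrstuvwxyz"

# Subset of the multicodec table covering hash functions.
MULTIHASH_NAMES = {0x00: "identity", 0x11: "sha1", 0x12: "sha2-256", 0x1B: "keccak-256"}


def b58decode(text: str) -> bytes:
    """Decode a base58btc string into raw bytes."""
    value = 0
    for ch in text:
        value = value * 58 + B58_ALPHABET.index(ch)  # ValueError on invalid chars
    body = value.to_bytes((value.bit_length() + 7) // 8, "big") if value else b""
    leading_zeros = len(text) - len(text.lstrip("1"))  # each leading '1' is a 0x00 byte
    return b"\x00" * leading_zeros + body


def read_uvarint(buf: bytes, pos: int) -> tuple[int, int]:
    """Read an unsigned varint (as used by multihash) starting at pos."""
    result, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if byte < 0x80:
            return result, pos
        shift += 7


def parse_cidv0(cid: str) -> tuple[str, int, bytes]:
    """Split a CIDv0 into (hash function name, digest length, digest)."""
    raw = b58decode(cid)
    code, pos = read_uvarint(raw, 0)
    length, pos = read_uvarint(raw, pos)
    digest = raw[pos:]
    if len(digest) != length:
        raise ValueError("digest length does not match the length prefix")
    return MULTIHASH_NAMES.get(code, hex(code)), length, digest


name, length, digest = parse_cidv0("QmXoypizjW3WknFiJnKLwHCnL72vedxjQkDDP1mXWo6uco")
print(name, length, digest.hex())
```

Every CIDv0 starts with the two bytes 0x12 0x20 (sha2-256, 32 bytes), which is why they all begin with "Qm" once base58-encoded.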

If you want to learn more, have a look at ProtoSchool’s tutorial.

Ethereum and decentralised applications

Ethereum is an account-based blockchain with smart contract capabilities. Being account-based, its state is a set of accounts, each identified by an address; these accounts are modified by transactions that are grouped in blocks and sealed by Ethereum’s consensus algorithm, Proof-of-Work.

There are two categories of accounts: user accounts and contract accounts. User accounts are controlled by a private key, which is used to sign transactions from the account. Contract accounts hold bytecode, which is executed by the network when a transaction is sent to their account. A transaction can include both funds and data, allowing for rich interaction between accounts.

When a transaction is created, it gets verified by each node on the network. For a transaction between two user accounts, the verification consists of checking the origin account signature. When the transaction is between a user and a smart contract, every node runs the smart contract bytecode on the Ethereum Virtual Machine (EVM). Therefore, all nodes perform the same suite of operations and end up in the same state. If one actor is malicious, nodes will not add its contribution. Since nodes have diverse ownership, they have an incentive to not cheat.

How to access IPFS content

As you may have noticed, while a CID describes a piece of content, it doesn’t describe where to find that content on the network. The location of the file is retrieved by querying an IPFS node.

An IPFS URL (Uniform Resource Locator) looks like this: ipfs://QmXoypizjW3WknFiJnKLwHCnL72vedxjQkDDP1mXWo6uco. Accessing this URL means retrieving QmXoypizjW3WknFiJnKLwHCnL72vedxjQkDDP1mXWo6uco using the IPFS protocol, denoted by ipfs://. However, typing such a URL is error-prone, and these URLs are not human-friendly, because there is no good way to remember such long strings. To get around this issue, you can use DNSLink, a way of specifying IPFS CIDs within a DNS TXT record. For instance, en.wikipedia-on-ipfs.org has the following TXT record:

```shell
$ dig +short TXT _dnslink.en.wikipedia-on-ipfs.org
"dnslink=/ipfs/QmXoypizjW3WknFiJnKLwHCnL72vedxjQkDDP1mXWo6uco"
```

In addition, its A record points to an IPFS gateway. This means that, when you access en.wikipedia-on-ipfs.org, your request is directed to an IPFS HTTP Gateway, which then looks up the CID using the domain’s TXT record and returns the content associated with this CID from the IPFS network.
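The gateway's lookup step can be sketched in a few lines of Python. This is an illustration under stated assumptions: TXT values follow the DNSLink convention `dnslink=/ipfs/<CID>`, the DNS query itself is left out (Python's standard library has no TXT resolver), and the record list is typed in by hand; the function names are hypothetical.

```python
from typing import Optional


def dnslink_path(txt_records: list) -> Optional[str]:
    """Return the content path from the first DNSLink TXT value, if any."""
    for record in txt_records:
        value = record.strip().strip('"')  # dig prints TXT strings in quotes
        if value.startswith("dnslink="):
            return value[len("dnslink="):]
    return None


def split_content_path(path: str) -> tuple[str, str, str]:
    """Split '/ipfs/<cid>/optional/sub/path' into (namespace, identifier, subpath)."""
    parts = path.strip("/").split("/", 2)
    if len(parts) < 2 or parts[0] not in ("ipfs", "ipns"):
        raise ValueError(f"unsupported DNSLink path: {path!r}")
    return parts[0], parts[1], parts[2] if len(parts) == 3 else ""


records = ['"dnslink=/ipfs/QmXoypizjW3WknFiJnKLwHCnL72vedxjQkDDP1mXWo6uco"']
print(split_content_path(dnslink_path(records)))
```

Once it has the CID, the gateway fetches the content over IPFS and serves it over plain HTTP, which is exactly the step the browser cannot check on its own.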

Relying on a gateway this way trades security for ease of access: the user’s web browser doesn’t verify the integrity of the content served. This could be because the browser does not implement IPFS, or because it has no way of validating the domain’s signature (DNSSEC). We wrote about this issue in our previous blog post on End-to-End Integrity.
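What a verifying client would do is hash the bytes it received and compare them with the digest carried in the CID. The sketch below assumes a sha2-256 multihash and that the client holds the exact block bytes the CID addresses; for files added through IPFS, that block is the encoded DAG node rather than the raw file, so hashing the file directly would not match. Function names are hypothetical.

```python
import hashlib

SHA2_256 = 0x12  # multicodec code for sha2-256


def sha256_multihash(block: bytes) -> bytes:
    """Build a sha2-256 multihash: <code><digest length><digest>."""
    digest = hashlib.sha256(block).digest()
    return bytes([SHA2_256, len(digest)]) + digest


def verify_block(block: bytes, multihash: bytes) -> bool:
    """Check that block hashes to the digest carried by a sha2-256 multihash."""
    if len(multihash) < 2 or multihash[0] != SHA2_256:
        raise ValueError("only sha2-256 multihashes are handled in this sketch")
    expected = multihash[2:2 + multihash[1]]
    return hashlib.sha256(block).digest() == expected


mh = sha256_multihash(b"hello")
print(verify_block(b"hello", mh), verify_block(b"tampered", mh))
```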

Human readable identifiers

DNS simplifies referring to IP addresses, in the same way that postal addresses are a way of referring to geolocation data, and contacts in your mobile phone abstract phone numbers. All these systems provide a human-readable format and reduce the error rate of an operation.

To verify these data, the trusted anchors, or “sources of truth”, are:

- Root DNS Keys for DNS.

- The government registry for postal addresses. In the UK, addresses are handled by cities, boroughs and local councils.

- When it comes to your contacts, you are the trust anchor.

Ethereum Name Service, an index for the Distributed Web

An account is identified by its address. An address starts with “0x” and is followed by 20 bytes written as 40 hexadecimal characters (ref 4.1 Ethereum yellow paper), for example: 0xf10326c1c6884b094e03d616cc8c7b920e3f73e0. This is not very readable, and can be pretty scary given that transactions are not reversible and one can easily mistype a single character.

A first mitigation strategy was to introduce a new notation that capitalises some letters based on the hash of the address: 0xF10326C1c6884b094E03d616Cc8c7b920E3F73E0. This can help detect typos, but it is still not readable. If I have to send a transaction to a friend, I have no way of confirming she hasn’t mistyped the address.
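The mixed-case notation is the EIP-55 checksum: hash the lowercase hex address with Keccak-256, then uppercase each letter whose corresponding hash nibble is 8 or more. Python's standard library has no Keccak-256 (`hashlib.sha3_256` uses different padding), so this sketch carries a compact, unoptimized Keccak for illustration only; in practice you would use a vetted library. The helper names are hypothetical.

```python
import hashlib

MASK64 = (1 << 64) - 1


def _rol64(value: int, shift: int) -> int:
    """Rotate a 64-bit lane left by shift bits."""
    shift %= 64
    return ((value << shift) | (value >> (64 - shift))) & MASK64


def _keccak_f1600(lanes: list) -> None:
    """Apply the 24-round Keccak-f[1600] permutation in place; lanes[x][y] are 64-bit ints."""
    lfsr = 1
    for _ in range(24):
        # theta: xor each lane with the parities of two neighbouring columns
        c = [lanes[x][0] ^ lanes[x][1] ^ lanes[x][2] ^ lanes[x][3] ^ lanes[x][4] for x in range(5)]
        d = [c[(x - 1) % 5] ^ _rol64(c[(x + 1) % 5], 1) for x in range(5)]
        for x in range(5):
            for y in range(5):
                lanes[x][y] ^= d[x]
        # rho + pi: rotate each lane and move it along the (x, y) -> (y, 2x + 3y) orbit
        x, y = 1, 0
        current = lanes[x][y]
        for t in range(24):
            x, y = y, (2 * x + 3 * y) % 5
            current, lanes[x][y] = lanes[x][y], _rol64(current, (t + 1) * (t + 2) // 2)
        # chi: non-linear mixing along each row
        for y in range(5):
            row = [lanes[x][y] for x in range(5)]
            for x in range(5):
                lanes[x][y] = row[x] ^ (~row[(x + 1) % 5] & row[(x + 2) % 5])
        # iota: xor round-constant bits produced by an 8-bit LFSR into lane (0, 0)
        for j in range(7):
            lfsr = ((lfsr << 1) ^ ((lfsr >> 7) * 0x71)) % 256
            if lfsr & 2:
                lanes[0][0] ^= 1 << ((1 << j) - 1)


def keccak(data: bytes, suffix: int = 0x01, rate: int = 136, out_len: int = 32) -> bytes:
    """Keccak sponge: suffix=0x01 gives Ethereum's Keccak-256, suffix=0x06 gives SHA3-256."""
    padded = bytearray(data)
    padded.append(suffix)  # domain-separation bits plus the first padding bit
    while len(padded) % rate:
        padded.append(0)
    padded[-1] |= 0x80  # final padding bit
    lanes = [[0] * 5 for _ in range(5)]
    for offset in range(0, len(padded), rate):  # absorb one rate-sized block at a time
        for i in range(rate // 8):
            word = padded[offset + 8 * i:offset + 8 * i + 8]
            lanes[i % 5][i // 5] ^= int.from_bytes(word, "little")
        _keccak_f1600(lanes)
    # squeeze: out_len <= rate here, so one permutation output is enough
    out = b"".join(lanes[i % 5][i // 5].to_bytes(8, "little") for i in range(rate // 8))
    return out[:out_len]


def to_checksum_address(address: str) -> str:
    """EIP-55: uppercase each hex letter whose Keccak-256 nibble at the same index is >= 8."""
    hex_addr = address.lower().removeprefix("0x")
    if len(hex_addr) != 40 or any(ch not in "0123456789abcdef" for ch in hex_addr):
        raise ValueError("expected 20 bytes written as 40 hex characters")
    nibbles = keccak(hex_addr.encode("ascii")).hex()
    return "0x" + "".join(
        ch.upper() if ch.isalpha() and int(nibbles[i], 16) >= 8 else ch
        for i, ch in enumerate(hex_addr)
    )


# Self-check: with the SHA-3 suffix, the same sponge must agree with hashlib.
assert keccak(b"abc", suffix=0x06) == hashlib.sha3_256(b"abc").digest()
print(to_checksum_address("0xf10326c1c6884b094e03d616cc8c7b920e3f73e0"))
```

A wallet can recompute the casing of a pasted address and reject it if any letter's case disagrees, which catches most single-character typos.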

The Ethereum Name Service (ENS) was created to tackle this issue. It is a system capable of turning human-readable names, referred to as domains, to blockchain addresses. For instance, the domain privacy-pass.eth points to the Ethereum address 0xF10326C1c6884b094E03d616Cc8c7b920E3F73E0.

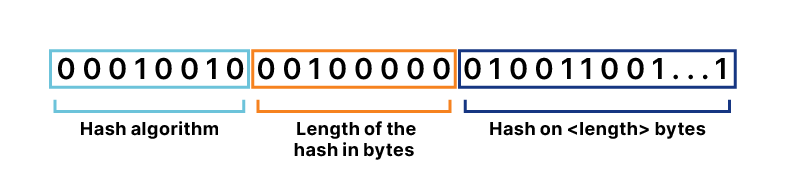

To achieve this, the system is organised in two components, registries and resolvers.

A registry is a smart contract that maintains a list of domains and some information about each domain: the domain owner and the domain resolver. The owner is the account allowed to manage the domain. They can create subdomains and change ownership of their domain, as well as modify the resolver associated with their domain.

Resolvers are responsible for keeping records. For instance, Public Resolver is a smart contract capable of associating not only a name to blockchain addresses, but also a name to an IPFS content identifier. The resolver address is stored in a registry. Users then contact the registry to retrieve the resolver associated with the name.

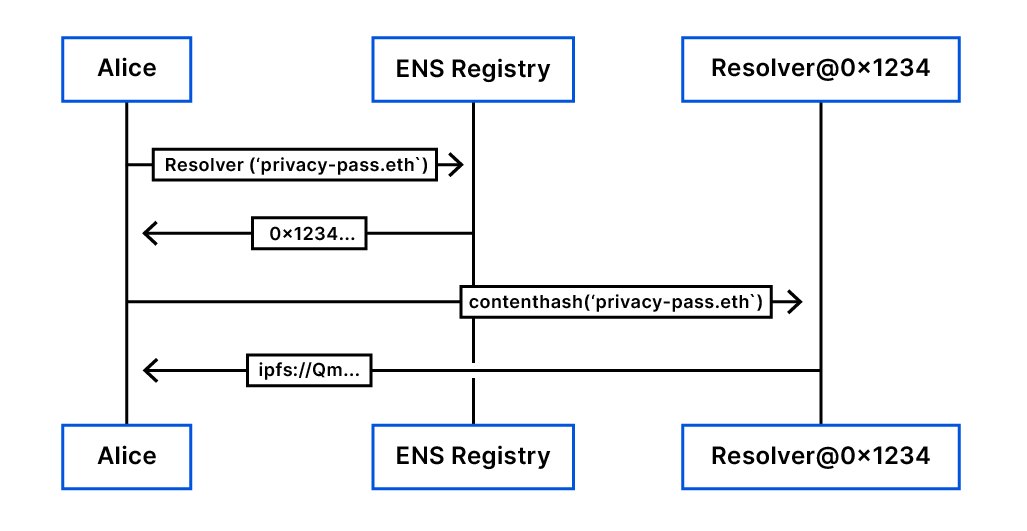

Consider a user, Alice, who has direct access to the Ethereum state. The flow goes as follows: Alice would like to get Privacy Pass’s Ethereum address, for which the domain is privacy-pass.eth. She looks for privacy-pass.eth in the ENS Registry and figures out the resolver for privacy-pass.eth is at 0x1234… . She now looks for the address of privacy-pass.eth at the resolver address, which turns out to be 0xf10326c….

Accessing the IPFS content identifier for privacy-pass.eth works in a similar way. The resolver is the same; only the accessed data differs, because Alice calls a different method on the smart contract.
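Alice's two-step lookup can be modelled with plain dictionaries standing in for the registry and resolver contracts. Everything here is hypothetical: the real ENS registry is keyed by the namehash of a name rather than the name itself, resolvers are contracts queried through an Ethereum node, and the owner, resolver address, and content identifier below are placeholders.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class RegistryEntry:
    owner: str     # account allowed to manage the domain
    resolver: str  # address of the resolver contract for the domain


@dataclass
class Resolver:
    # record kind ("addr", "contenthash", ...) -> name -> value
    records: dict = field(default_factory=dict)

    def get(self, kind: str, name: str) -> Optional[str]:
        return self.records.get(kind, {}).get(name)


def resolve(registry: dict, resolvers: dict, name: str, kind: str) -> Optional[str]:
    """Step 1: ask the registry for the resolver. Step 2: ask that resolver for the record."""
    entry = registry.get(name)
    if entry is None:
        return None
    return resolvers[entry.resolver].get(kind, name)


# Hypothetical state: placeholder owner, resolver address, and content identifier.
registry = {"privacy-pass.eth": RegistryEntry(owner="0xowner", resolver="0xresolver")}
resolvers = {
    "0xresolver": Resolver(records={
        "addr": {"privacy-pass.eth": "0xF10326C1c6884b094E03d616Cc8c7b920E3F73E0"},
        "contenthash": {"privacy-pass.eth": "ipfs://<placeholder-cid>"},
    })
}
print(resolve(registry, resolvers, "privacy-pass.eth", "addr"))
print(resolve(registry, resolvers, "privacy-pass.eth", "contenthash"))
```

Only the `kind` argument changes between the address lookup and the content-identifier lookup, mirroring the "different method, same resolver" point above.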

Cloudflare Distributed Web Resolver

The goal was to be able to use this new way of indexing IPFS content directly from your web browser. However, accessing the ENS registry requires access to the Ethereum state. To get access to IPFS, you would also need to access the IPFS network.

To tackle this, we are going to use Cloudflare’s Distributed Web Gateway. Cloudflare operates both an Ethereum Gateway and an IPFS Gateway, respectively available at cloudflare-eth.com and cloudflare-ipfs.com.

EthLink

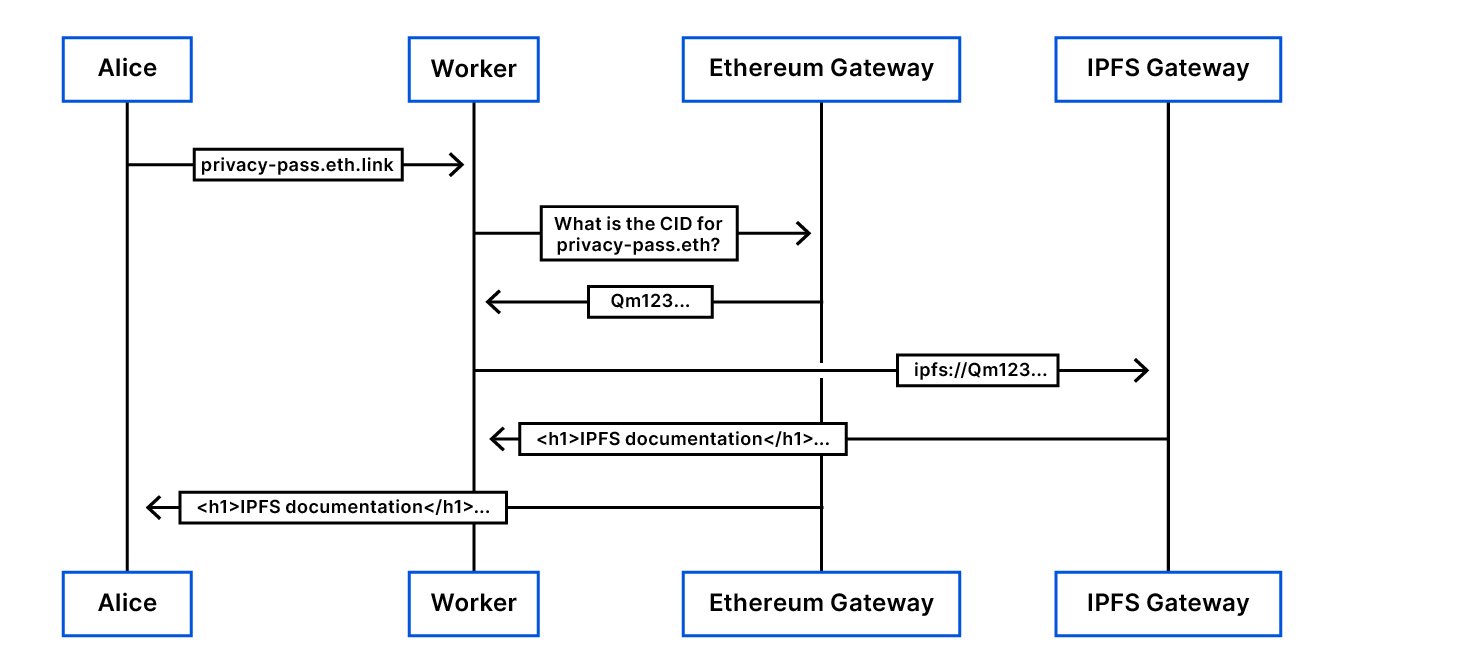

The first version of EthLink was built by Jim McDonald and is operated by True Name LTD at eth.link. Starting from next week, eth.link will transition to use the Cloudflare Distributed Web Resolver. To that end, we have built EthLink on top of Cloudflare Workers. This is a proxy to IPFS: it proxies all ENS-registered domains when .link is appended. For instance, privacy-pass.eth should render the Privacy Pass homepage, and from your web browser, https://privacy-pass.eth.link does just that.

The resolution is done at the Cloudflare edge using a Cloudflare Worker. Cloudflare Workers allows JavaScript code to be run on Cloudflare infrastructure, eliminating the need to maintain a server and increasing the reliability of the service. In addition, it follows the Service Workers API, so results returned from the resolver can be checked by end users if needed.

To do this, we set up a wildcard DNS record for *.eth.link to be proxied through Cloudflare and handled by a Cloudflare Worker. When a user Alice accesses privacy-pass.eth.link, the worker first retrieves the CID associated with privacy-pass.eth from Ethereum. Then, it requests the content matching this CID from IPFS, and returns it to Alice.
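The worker's request flow can be sketched as follows. The real worker is JavaScript running on Cloudflare Workers; this Python version only mirrors the steps, with `lookup_cid` and `fetch` as hypothetical stand-ins for the Ethereum Gateway query and the IPFS Gateway request. The `https://cloudflare-ipfs.com/ipfs/<cid>/<path>` URL shape assumes the usual path-style gateway layout.

```python
from typing import Callable, Optional


def ens_name_from_host(host: str) -> Optional[str]:
    """Map 'privacy-pass.eth.link' to the ENS name 'privacy-pass.eth'."""
    host = host.lower().rstrip(".")
    if not host.endswith(".eth.link"):
        return None
    return host[: -len(".link")]


def handle_request(
    host: str,
    path: str,
    lookup_cid: Callable[[str], Optional[str]],
    fetch: Callable[[str], bytes],
    gateway: str = "https://cloudflare-ipfs.com",
) -> tuple[int, bytes]:
    """Resolve the ENS name to a CID, then proxy the content from an IPFS gateway."""
    name = ens_name_from_host(host)
    if name is None:
        return 404, b"not an .eth.link host"
    cid = lookup_cid(name)  # step 1: ENS lookup via Ethereum
    if cid is None:
        return 404, b"no content hash set for " + name.encode()
    url = f"{gateway}/ipfs/{cid}/{path.lstrip('/')}"
    return 200, fetch(url)  # step 2: retrieve the content from IPFS


# Hypothetical fakes: a one-entry ENS table and a fetch that echoes the URL.
fake_lookup = lambda name: "QmExample" if name == "privacy-pass.eth" else None
fake_fetch = lambda url: url.encode()
print(handle_request("privacy-pass.eth.link", "/index.html", fake_lookup, fake_fetch))
```

Because both lookups are injected, the same flow works against a local Ethereum node and a local IPFS gateway.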

All parts can be run locally. The worker can be run in a service worker, the Ethereum Gateway can be replaced by a local Ethereum node, and the IPFS Gateway by the one provided by IPFS Companion. This means that while Cloudflare provides resolution-as-a-service, none of the components has to be trusted.

Final notes

So are we distributed yet? No, but we are getting closer, building bridges between emerging technologies and current web infrastructure. By providing a gateway dedicated to the distributed web, we hope to make these services more accessible to everyone.

We thank the ENS team for their support in expanding the distributed web with a new resolver. The ENS team has been running a similar service at https://eth.link. On January 18th, they will switch https://eth.link to using our new service.

These services benefit from the added speed and security of the Cloudflare Worker platform, while paving the way to run distributed protocols in browsers.

Supporting teachers and students with remote learning through free video lessons

Post Syndicated from original https://www.raspberrypi.org/blog/supporting-teachers-students-remote-learning-free-video-lessons/

Working with Oak National Academy, we’ve turned the materials from our Teach Computing Curriculum into more than 300 free, curriculum-mapped video lessons for remote learning.

A comprehensive set of free classroom materials

One of our biggest projects for teachers that we’ve worked on over the past two years is the Teach Computing Curriculum: a comprehensive set of free computing classroom materials for key stages 1 to 4 (learners aged 5 to 16). The materials comprise lesson plans, homework, progression mapping, and assessment materials. We’ve created these as part of the National Centre for Computing Education, but they are freely available for educators all over the world to download and use.

More than 300 free, curriculum-mapped video lessons

In the second half of 2020, in response to school closures, our team of experienced teachers produced over 100 hours of video to transform Teach Computing Curriculum materials into video lessons for learning at home. They are freely available for parents, educators, and learners to continue learning computing at home, wherever they are in the world.

You’ll find our videos for more than 300 hour-long lessons on the Oak National Academy website. The progression of the lessons is mapped out clearly, and the videos cover England’s computing national curriculum. There are video lessons for:

- Years 5 and 6 at key stage 2 (ages 7 to 11)

- Years 7, 8, and 9 at key stage 3 (ages 11 to 14)

- Examined (GCSE) as well as non-examined (Digital Literacy) at key stage 4 (ages 14 to 16)

To access the full set of classroom materials for teaching, visit the National Centre for Computing Education website.

The post Supporting teachers and students with remote learning through free video lessons appeared first on Raspberry Pi.

Comic for 2021.01.13

Post Syndicated from Explosm.net original http://explosm.net/comics/5767/

New Cyanide and Happiness Comic

AWS Managed Services by Anchor 2021-01-13 03:31:09

Post Syndicated from Douglas Chang original https://www.anchor.com.au/blog/2021/01/25624/

If you’re an IT Manager or Operations Manager who has considered moving your company’s online assets into the AWS cloud, you may have started by wondering, what is it truly going to involve?

One of the first decisions you will need to make is whether you are going to approach the cloud with the assistance of an AWS managed service provider (AWS MSP), or whether you intend to fully self-manage.

Whether or not a fully managed service is the right option for you comes down to two pivotal questions:

- Do you have the technical expertise required to properly deploy and maintain AWS cloud services?

- Do you, or your team, have the time/capacity to take this on – not just right now, but in an ongoing capacity too?

Below, we’ll briefly cover some of the considerations you’ll need to make when choosing between fully managed AWS Cloud Services and Self-Managed AWS Cloud Services.

Self-Managed AWS Services

Why outsource the management of your AWS when you can train your own in-house staff to do it?

With self-managed AWS services, you’re responsible for every aspect of the service from start to finish. Managing your own services gives you ultimate control, which may be beneficial if you require very specific deployment conditions or software versions to run your applications. It can also allow you to very gradually test your applications within their new infrastructure, and learn as you go.

This will result in knowing how to manage and control your own services on a closer level, but it comes with the downside of a very heavy learning curve and time investment if you have never entered the cloud environment before. In the context of a business or corporate environment, you’d also need to ensure that multiple staff members go through this process to ensure redundancy for staff availability and turnover. In either case, you’d also need to invest in continuous development to keep up with the latest best practices and security protocols, because the cloud, like any technical landscape, is fast-paced and ever-changing.

This can end up being a significant investment in training and staff development. As employees are never guaranteed to stay, there is the risk of that investment, or at least substantial portions of it, disappearing at some point.

At the time of writing, there are 450 items in the AWS learning library, for those looking to self-learn. In terms of taking exams to obtain official accreditation, AWS offers 3 levels of certification at present, starting with Foundational, through to Associate, and finally, Professional. To reach the Professional level, AWS requires “Two years of comprehensive experience designing, operating, and troubleshooting solutions using the AWS Cloud”.

Fully Managed AWS Services

Hand the reins over to accredited professionals.

Fully-managed AWS services mean you’ll reap all of the extensive benefits of moving your online infrastructure into the cloud, without taking on the responsibility of setting up or maintaining those services.

You will hand over the stress of managing backups, high availability, software versions, patches, fixes, dependencies, cost optimisation, network infrastructure, security, and various other aspects of keeping your cloud services secure and cost-effective. You won’t need to spend anything on staff training or development, and there is no risk of losing control of your services when internal staff come and go. Essentially, you will be handing the reins over to a team of experts who have already obtained their AWS certifications at the highest level, with collective decades of experience in all manner of business operations and requirements.

The main risk here is choosing the right place to outsource your AWS management. When choosing to outsource AWS cloud management, you’ll want to be sure the AWS partner you choose offers the level of support you are going to require and holds all relevant certifications. When partnered with the right AWS MSP team, you’ll also often find that the management fees pay for themselves due to the greater level of AWS cost optimisation that can be achieved by seasoned professionals.

If you’re interested in finding out an estimation of professional AWS cloud management costs for your business or discussing how your business operations could be improved or revolutionised through the AWS cloud platform, please don’t hesitate to get in touch with our expert team for a free consultation. Our expert team can conduct a thorough assessment of your current infrastructure and business, and provide you with a report on how your business can specifically benefit from a migration to the AWS cloud platform.

The post appeared first on AWS Managed Services by Anchor.

[$] Debian discusses vendoring—again

Post Syndicated from original https://lwn.net/Articles/842319/rss

The problems with “vendoring” in packages (bundling dependencies rather than getting them from other packages) seem to crop up frequently these days. We looked at Debian’s concerns about packaging Kubernetes and its myriad of Go dependencies back in October. A more recent discussion in that distribution’s community looks at another famously dependency-heavy ecosystem: JavaScript libraries from the npm repository. Even C-based ecosystems are not immune to the problem, as we saw with iproute2 and libbpf back in November; the discussion of vendoring seems likely to recur over the coming years.

Trident – Real-time event processing at scale

Post Syndicated from Grab Tech original https://engineering.grab.com/trident-real-time-event-processing-at-scale

Ever wondered what goes behind the scenes when you receive advisory messages on a confirmed booking? Or perhaps how you are awarded with rewards or points after completing a GrabPay payment transaction? At Grab, thousands of such campaigns targeting millions of users are operated daily by a backbone service called Trident. In this post, we share how Trident supports Grab’s daily business, the engineering challenges behind it, and how we solved them.

What is Trident?

Trident is essentially Grab’s in-house real-time if this, then that (IFTTT) engine, which automates various types of business workflows. The nature of these workflows could either be to create awareness or to incentivize users to use other Grab services.

If you are an active Grab user, you might have noticed new rewards or messages that appear in your Grab account. Most likely, these originate from a Trident campaign. Here are a few examples of types of campaigns that Trident could support:

- After a user makes a GrabExpress booking, Trident sends the user a message that says something like “Try out GrabMart too”.

- After a user makes multiple ride bookings in a week, Trident sends the user a food reward as a GrabFood incentive.

- After a user is dropped off at his office in the morning, Trident awards the user a ride reward to use on the way back home on the same evening.

- If a GrabMart order delivery takes over an hour of waiting time, Trident awards the user a free-delivery reward as compensation.

- If the driver cancels the booking, then Trident awards points to the user as compensation.

- With the current COVID pandemic, when a user makes a ride booking, Trident sends a message to both the passenger and driver reminding them about COVID protocols.

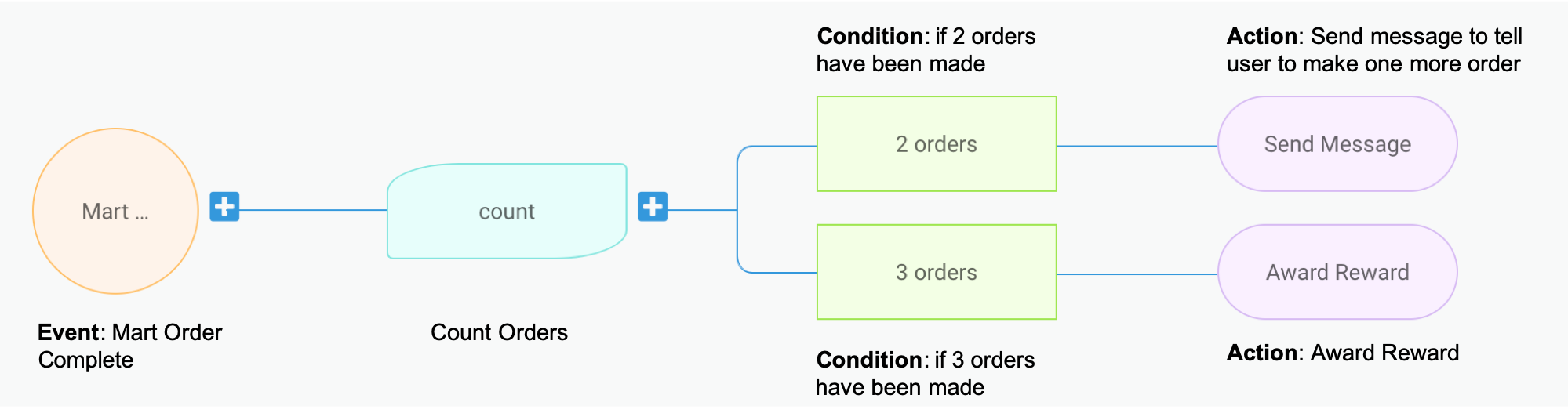

Trident processes events based on campaigns, which are basically a logic configuration on what event should trigger what actions under what conditions. To illustrate this better, let’s take a sample campaign as shown in the image below. This mock campaign setup is taken from the Trident Internal Management portal.

This sample setup basically translates to: for each user, count his/her number of completed GrabMart orders. Once he/she reaches 2 orders, send him/her a message saying “Make one more order to earn a reward”. And if the user reaches 3 orders, award him/her the reward and send a congratulatory message. 😁
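At its core, the sample campaign is a per-user counter with thresholds. A hypothetical in-memory sketch follows; the event name and action strings are made up, and in Trident the state lives in Redis and MySQL while actions are calls to other services.

```python
from collections import defaultdict


class CountingCampaign:
    """Count one event type per user and return the actions configured for each threshold."""

    def __init__(self, event_type: str, thresholds: dict):
        self.event_type = event_type
        self.thresholds = thresholds  # count -> list of action descriptions
        self.counts = defaultdict(int)

    def on_event(self, event_type: str, user_id: str) -> list:
        if event_type != self.event_type:
            return []  # this campaign is not triggered by other event types
        self.counts[user_id] += 1
        return self.thresholds.get(self.counts[user_id], [])


campaign = CountingCampaign(
    "grabmart.order_completed",  # hypothetical event name
    {
        2: ["message: Make one more order to earn a reward"],
        3: ["award: reward", "message: congratulations"],
    },
)
for _ in range(3):
    print(campaign.on_event("grabmart.order_completed", "user-1"))
```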

Other than the basic event, condition, and action, Trident also allows more fine-grained configurations, such as setting an overall budget for a campaign, adding limits to avoid over-awarding, running A/B experiments, delaying actions, and so on.

An IFTTT engine is nothing new or fancy, but building a high-throughput real-time IFTTT system poses a challenge due to the scale that Grab operates at. We need to handle billions of events and run thousands of campaigns on an average day. The number of actions triggered by Trident is also massive.

In the month of October 2020, more than 2,000 events were processed every single second during peak hours. Across the entire month, we awarded nearly half a billion rewards, and sent over 2.5 billion communications to our end-users.

Now that we have covered the importance of Trident to the business, let’s drill down into how we designed the Trident system to handle events at a massive scale and how we overcame the performance hurdles through optimization.

Architecture design

We designed the Trident architecture with the following goals in mind:

- Independence: It must run independently of other services, and must not bring performance impacts to other services.

- Robustness: All events must be processed exactly once (i.e. no event missed, no event gets double processed).

- Scalability: It must be able to scale up processing power when the event volume surges, and withstand the load when popular campaigns run.

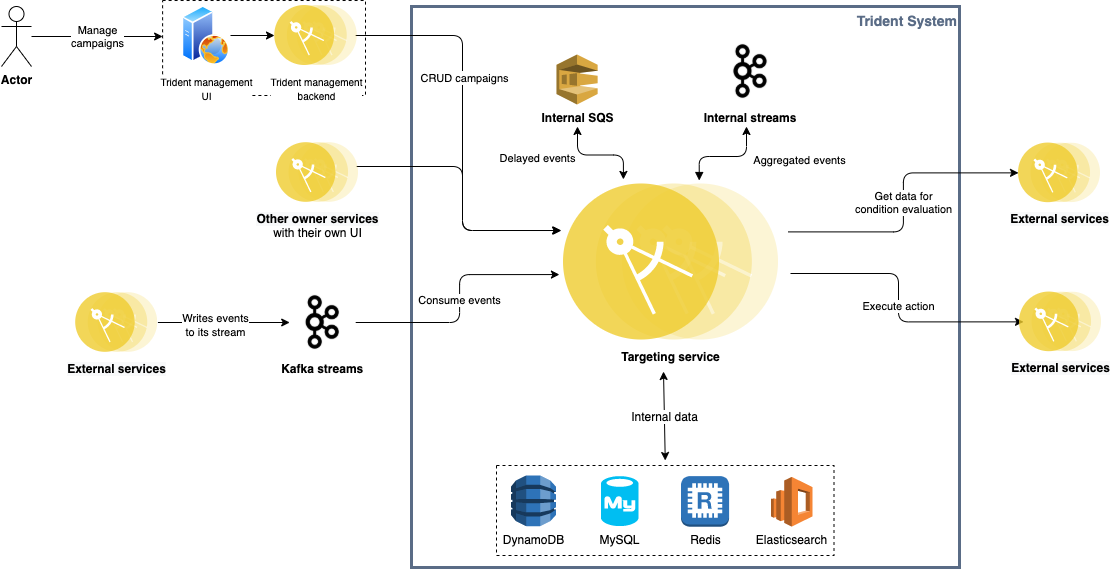

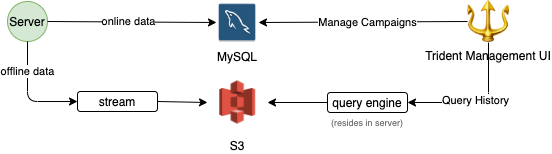

The following diagram shows what the overall system architecture looks like.

Trident consumes events from multiple Kafka streams published by various backend services across Grab (e.g. GrabFood orders, Transport rides, GrabPay payment processing, GrabAds events). Given the nature of Kafka streams, Trident is completely decoupled from all other upstream services.

Each processed event is given a unique event key and stored in Redis for 24 hours. For any event that triggers an action, its key is persisted in MySQL as well. Before storing records in both Redis and MySQL, we make sure any duplicate event is filtered out. Together with the at-least-once delivery guaranteed by Kafka, we achieve exactly-once event processing.
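The dedup step can be illustrated with an in-memory stand-in for Redis `SET key value NX EX 86400` (set only if absent, expire after 24 hours). This is a sketch under assumptions: the class, the event-key format, and `process_once` are hypothetical, a real deployment would rely on Redis's atomic `SET ... NX`, and the post does not say how Trident handles a failure between marking an event and acting on it.

```python
import time
from typing import Callable


class ExpiringKeySet:
    """Dict-based stand-in for Redis SET key NX EX ttl (Redis would expire keys itself)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._expiry = {}

    def add_if_absent(self, key: str) -> bool:
        now = self.clock()
        expires_at = self._expiry.get(key)
        if expires_at is not None and expires_at > now:
            return False  # key still live: this event was already processed
        self._expiry[key] = now + self.ttl
        return True


seen = ExpiringKeySet(ttl_seconds=24 * 3600)


def process_once(event_key: str, handler: Callable[[], None]) -> bool:
    """Run handler only the first time event_key is seen within the TTL."""
    if not seen.add_if_absent(event_key):
        return False  # duplicate delivery from an at-least-once stream
    handler()
    return True
```

Combined with at-least-once delivery, redelivered events hit the `False` branch, which is what turns "at least once" into "effectively once" processing.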

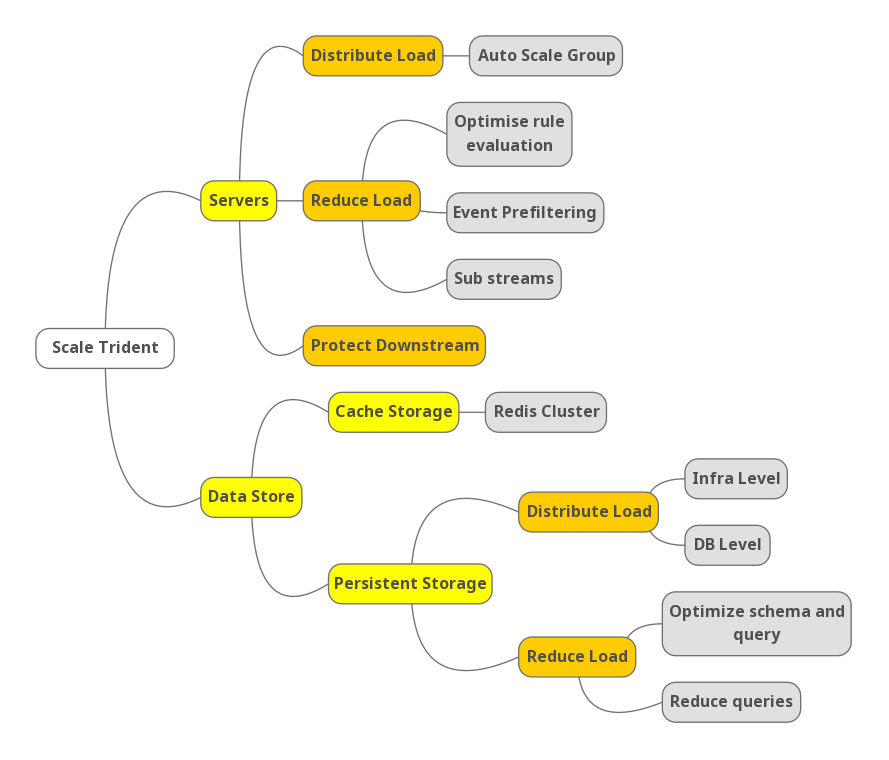

Scalability is a key challenge for Trident. To achieve high performance under massive event volume, we needed to scale on both the server level and data store level. The following mind map shows an outline of our strategies.

Scale servers

Our sources of events are Kafka streams. There are mostly three factors that could affect the load on our system:

- Number of events produced in the streams (more rides, food orders, etc. results in more events for us to process).

- Number of campaigns running.

- Nature of campaigns running. The campaigns that trigger actions for more users cause higher load on our system.

There are naturally two types of approaches to scale up server capacity:

- Distribute workload among server instances.

- Reduce load (i.e. reduce the amount of work required to process each event).

Distribute load

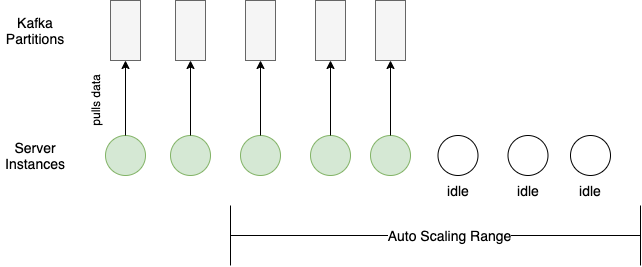

Distributing workload seems trivial with the load balancing and auto-horizontal scaling based on CPU usage that cloud providers offer. However, an additional server sits idle until it can consume from a Kafka partition.

Each Kafka partition can only be consumed by one consumer within the same consumer group (our auto-scaling server group in this case). Therefore, any scaling in or out requires matching the Kafka partition configuration with the server auto-scaling configuration.

Here’s an example of a bad case of load distribution:

And here’s an example of a good load distribution where the configurations for the Kafka partitions and the server auto-scaling match:
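The constraint described above can be checked with a toy assignment function. It spreads partitions round-robin over the consumers of one group, a simplification of Kafka's own assignors (which also handle rebalancing and multiple topics); consumer names are hypothetical.

```python
def assign_partitions(num_partitions: int, consumers: list) -> dict:
    """Round-robin partitions over the consumers of one consumer group (simplified)."""
    assignment = {c: [] for c in consumers}
    for p in range(num_partitions):
        assignment[consumers[p % len(consumers)]].append(p)
    return assignment


def idle_consumers(num_partitions: int, consumers: list) -> list:
    """Consumers that receive no partition and therefore sit idle."""
    return [c for c, parts in assign_partitions(num_partitions, consumers).items() if not parts]


print(idle_consumers(4, ["s1", "s2", "s3", "s4", "s5", "s6"]))  # -> ['s5', 's6']
print(assign_partitions(8, ["a", "b", "c", "d"]))
```

With 4 partitions, servers 5 and 6 add cost but no throughput; with 8 partitions over 4 servers, each server gets exactly two, which is why partition counts and auto-scaling limits need to be configured together.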

Within each server instance, we also tried to increase processing throughput while keeping the resource utilization rate in check. Each Kafka partition consumer has multiple goroutines processing events, and the number of active goroutines is dynamically adjusted according to the event volume from the partition and time of the day (peak/off-peak).

Reduce load

You may ask how we reduced the amount of processing work for each event. First, we needed to see where we spent most of the processing time. After performing some profiling, we identified that the rule evaluation logic was the major time consumer.

What is rule evaluation?

Recall that Trident needs to operate thousands of campaigns daily. Each campaign has a set of rules defined. When Trident receives an event, it needs to check through the rules for all the campaigns to see whether there is any match. This checking process is called rule evaluation.

More specifically, a rule consists of one or more conditions combined by AND/OR Boolean operators. A condition consists of an operator with a left-hand side (LHS) and a right-hand side (RHS). The left-hand side is the name of a variable, and the right-hand side a value. A sample rule in JSON:

Country is Singapore and taxi type is either JustGrab or GrabCar.

```json
{
  "operator": "and",
  "conditions": [
    {
      "operator": "eq",
      "lhs": "var.country",
      "rhs": "sg"
    },
    {
      "operator": "or",
      "conditions": [
        {
          "operator": "eq",
          "lhs": "var.taxi",
          "rhs": <taxi-type-id-for-justgrab>
        },
        {
          "operator": "eq",
          "lhs": "var.taxi",
          "rhs": <taxi-type-id-for-grabcar>
        }
      ]
    }
  ]
}
```

When evaluating the rule, our system loads the value of each LHS variable, compares it against the RHS value, and returns a result (true/false) indicating whether the rule passed.
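A minimal recursive evaluator for the JSON rule format above might look like this. It handles only `and`, `or`, and `eq`, looks up `var.` names in a flat dictionary of already-loaded values, and uses hypothetical numeric IDs in place of the taxi-type placeholders.

```python
def evaluate(rule: dict, variables: dict) -> bool:
    """Recursively evaluate an and/or/eq rule tree against variable values."""
    op = rule["operator"]
    if op == "and":
        return all(evaluate(c, variables) for c in rule["conditions"])
    if op == "or":
        return any(evaluate(c, variables) for c in rule["conditions"])
    if op == "eq":
        name = rule["lhs"].removeprefix("var.")  # "var.country" -> "country"
        return variables.get(name) == rule["rhs"]
    raise ValueError(f"unsupported operator: {op}")


JUSTGRAB, GRABCAR = 1, 2  # hypothetical taxi-type IDs
rule = {
    "operator": "and",
    "conditions": [
        {"operator": "eq", "lhs": "var.country", "rhs": "sg"},
        {"operator": "or", "conditions": [
            {"operator": "eq", "lhs": "var.taxi", "rhs": JUSTGRAB},
            {"operator": "eq", "lhs": "var.taxi", "rhs": GRABCAR},
        ]},
    ],
}
print(evaluate(rule, {"country": "sg", "taxi": GRABCAR}))  # True
```

Note that every variable is loaded up front here, which is exactly the cost the optimizations below try to avoid.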

To reduce the resources spent on rule evaluation, there are two types of strategies:

- Avoid unnecessary rule evaluation

- Evaluate “cheap” rules first

We implemented these two strategies with event prefiltering and weighted rule evaluation.

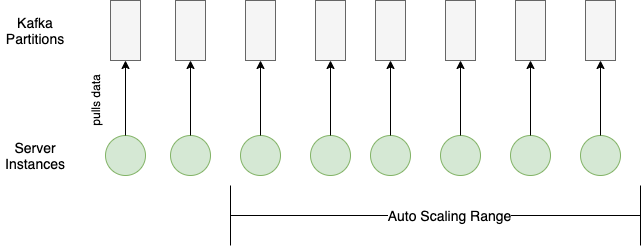

Event prefiltering

Just like a DB index helps speed up data look-up, having a pre-built map also helped us narrow down the range of campaigns to evaluate. We loaded active campaigns from the DB every few minutes and organized them into an in-memory hash map, with event type as key, and list of corresponding campaigns as the value. The reason we picked event type as the key is that it is very fast to determine (most of the time just a type assertion), and it can distribute events in a reasonably even way.

When processing events, we just looked up the map, and only ran rule evaluation on the campaigns in the matching hash bucket. This saved us at least 90% of the processing time.
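The prefilter map can be sketched as a plain dictionary from event type to campaigns. In Trident the key comes from a Go type assertion; this sketch assumes string event-type names, and the campaign IDs are hypothetical. The index would be rebuilt from the DB every few minutes.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Campaign:
    campaign_id: str
    event_type: str  # the event type that can trigger this campaign


def build_index(campaigns: list) -> dict:
    """Group active campaigns by the event type that can trigger them."""
    index = defaultdict(list)
    for campaign in campaigns:
        index[campaign.event_type].append(campaign)
    return dict(index)


def candidates(index: dict, event_type: str) -> list:
    """Only these campaigns need rule evaluation for an event of this type."""
    return index.get(event_type, [])


index = build_index([
    Campaign("c1", "grabmart.order_completed"),
    Campaign("c2", "ride.completed"),
    Campaign("c3", "grabmart.order_completed"),
])
print([c.campaign_id for c in candidates(index, "grabmart.order_completed")])
```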

Weighted rule evaluation

Evaluating different rules comes with different costs. This is because different variables (i.e. LHS) in the rule can have different sources of values:

- The value is already available in memory (already consumed from the event stream).

- The value is the result of a database query.

- The value is the result of a call to an external service.

These three sources are ranked by cost:

In-memory < database < external service

We aimed to maximally avoid evaluating expensive rules (i.e. those that require calling an external service or querying a DB) while ensuring the correctness of evaluation results.

First optimization – Lazy loading

Lazy loading is a common performance optimization technique, which literally means “don’t do it until it’s necessary”.

Take the following rule as an example:

A & B

If we load the variable values for both A and B before passing them to evaluation, then we are unnecessarily loading B if A is false. Since most of the time the rule evaluation fails early (for example, the transaction amount is less than the given minimum amount), there is no point in loading all the data beforehand. So we do lazy loading, i.e. load data only when evaluating that part of the rule.
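Lazy loading amounts to passing conditions as zero-argument callables that fetch their own data, and stopping at the first failure. A minimal sketch; the two checks are hypothetical and record their calls so the skipped load is visible.

```python
from typing import Callable


def lazy_and(*conditions: Callable[[], bool]) -> bool:
    """Evaluate conditions left to right and stop at the first False.

    Each condition loads its own data when called, so later loaders
    never run once an earlier condition has failed.
    """
    for condition in conditions:
        if not condition():
            return False
    return True


calls = []


def amount_at_least_min() -> bool:  # hypothetical in-memory check (A)
    calls.append("A")
    return False


def user_in_segment() -> bool:  # hypothetical external-service check (B)
    calls.append("B")
    return True


print(lazy_and(amount_at_least_min, user_in_segment), calls)  # False ['A']
```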

Second optimization – Add weight

Let’s take the same example as above, but in a different order.

B & A

The source of data for A is memory, and for B it is an external service.

Now, even with lazy loading, in this case we always load the external data, even though the rule may fail at the next condition, whose data is already in memory.

Since most of our campaigns are targeted, a popular condition is to check if a user is in a certain segment, which is usually the first condition that a campaign creator sets. This data resides in another service. So it becomes quite expensive to evaluate this condition first even though the next condition’s data can be already in memory (e.g. if the taxi type is JustGrab).

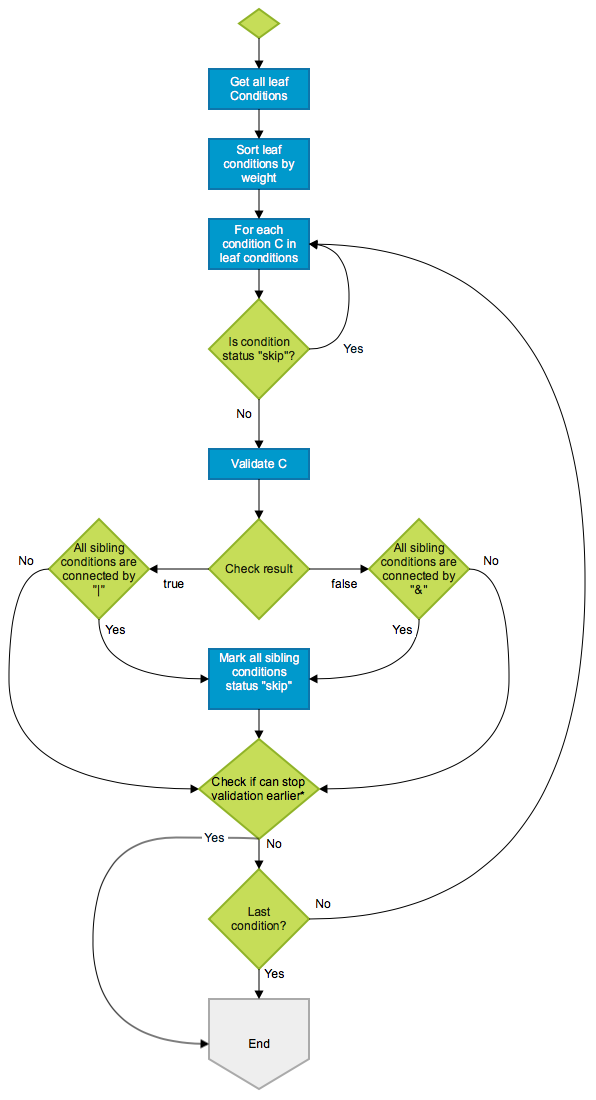

So, we did the next phase of optimization here by sorting the conditions based on the weight of their data source (low weight if the data is in memory, higher if it’s in our database, and highest if it’s in an external system). If AND were the only logical operator we supported, this would have been quite simple, but the presence of OR made it complex. We came up with an algorithm that orders the evaluation by weight while respecting the AND/OR structure. Here’s what the flowchart looks like:

An example:

Conditions: A & ( B | C ) & ( D | E )

Actual result: true & ( false | false ) & ( true | true ) --> false

Weight: B < D < E < C < A

Expected check order: B, D, C

First, we evaluate B, which is false. We cannot skip its sibling C, since B and C are connected by |. Next, we check D. D is true and its only sibling E is connected by |, so we can mark E “skip”. Then we reach E, but since E has been marked “skip”, we just skip it. We still cannot get the final result, so we continue by evaluating C, which is false. Now we know (B | C) is false, so the whole condition is false too, and we can stop without ever evaluating A.
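The walk-through above can be reproduced with a small sketch. It is a reconstruction, not Grab's flowchart: leaves are visited in global weight order, and a leaf is skipped when some enclosing AND/OR is already decided under three-valued (true/false/unknown) logic. The data structures are hypothetical; the leaf values and weights mirror the example.

```python
from dataclasses import dataclass
from typing import Callable, Iterator, Optional, Union


@dataclass
class Leaf:
    name: str
    weight: int               # lower = cheaper data source
    load: Callable[[], bool]  # fetches the data and evaluates the condition


@dataclass
class Node:
    op: str                   # "and" or "or"
    children: list


Tree = Union[Leaf, Node]


def partial(node: Tree, known: dict) -> Optional[bool]:
    """Three-valued evaluation: True, False, or None while still undecided."""
    if isinstance(node, Leaf):
        return known.get(node.name)
    values = [partial(child, known) for child in node.children]
    if node.op == "and":
        if False in values:
            return False
        return True if all(v is True for v in values) else None
    if True in values:
        return True
    return False if all(v is False for v in values) else None


def leaves(node: Tree, ancestors: tuple = ()) -> Iterator[tuple]:
    """Yield (leaf, enclosing nodes) pairs."""
    if isinstance(node, Leaf):
        yield node, ancestors
    else:
        for child in node.children:
            yield from leaves(child, ancestors + (node,))


def weighted_eval(root: Tree, checked: list) -> Optional[bool]:
    known = {}
    for leaf, ancestors in sorted(leaves(root), key=lambda pair: pair[0].weight):
        if partial(root, known) is not None:
            break  # overall result already decided
        if any(partial(a, known) is not None for a in ancestors):
            continue  # an enclosing AND/OR is decided, so this leaf is "skip"
        known[leaf.name] = leaf.load()
        checked.append(leaf.name)
    return partial(root, known)


values = {"A": True, "B": False, "C": False, "D": True, "E": True}
weights = {"B": 1, "D": 2, "E": 3, "C": 4, "A": 5}
leaf = {n: Leaf(n, weights[n], lambda n=n: values[n]) for n in values}
tree = Node("and", [leaf["A"], Node("or", [leaf["B"], leaf["C"]]), Node("or", [leaf["D"], leaf["E"]])])
checked = []
print(weighted_eval(tree, checked), checked)  # False ['B', 'D', 'C']
```

Re-evaluating the tree before each leaf is quadratic in the number of conditions, which is fine for the handful of conditions a campaign typically has.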

Sub-streams

After investigation, we learned that we consumed a particular stream that produced terabytes of data per hour. It caused our CPU usage to shoot up by 30%. We found out that we process only a handful of event types from that stream. So we introduced a sub-stream in between, which contains the event types we want to support. This stream is populated from the main stream by another server, thereby reducing the load on Trident.

Protect downstream

While we scaled up our servers aggressively, we needed to keep in mind that many downstream services would receive more traffic as a result. For example, we call the GrabRewards service for awarding rewards or the LocaleService for checking the user’s locale. It is crucial for us to have control over our outbound traffic to avoid causing any stability issues in Grab.

Therefore, we implemented rate limiting. There is a total rate limit configured for calling each downstream service, and the limit varies in different time ranges (e.g. tighter limit for calling critical service during peak hour).
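One way to get a limit that varies by time range is a token bucket whose refill rate is looked up by hour. The post does not name the algorithm Trident uses, so the token bucket, the UTC hour boundaries, and the rates below are all assumptions for illustration.

```python
import time
from typing import Callable


class TimeOfDayRateLimiter:
    """Token bucket whose refill rate (calls per second) depends on the UTC hour."""

    def __init__(self, rate_for_hour: Callable[[int], float],
                 clock: Callable[[], float] = time.time):
        self.rate_for_hour = rate_for_hour
        self.clock = clock
        self.last = clock()
        self.tokens = rate_for_hour(self._hour(self.last))

    @staticmethod
    def _hour(ts: float) -> int:
        return time.gmtime(ts).tm_hour

    def allow(self) -> bool:
        now = self.clock()
        rate = self.rate_for_hour(self._hour(now))
        # refill at the current rate; burst capacity is one second's worth of calls
        self.tokens = min(rate, self.tokens + (now - self.last) * rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Hypothetical limits: tighter for a critical downstream service during peak hours.
def rewards_rate(hour: int) -> float:
    return 2.0 if 18 <= hour <= 21 else 5.0
```

A limiter instance per downstream service, shared by all workers on a server, keeps the total outbound rate in check; a cluster-wide limit would need shared state, which this sketch does not cover.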

Scale data store

We have two types of storage in Trident: cache storage (Redis) and persistent storage (MySQL and others).

Scaling cache storage is straightforward, since Redis Cluster already offers everything we need:

- High performance: Known to be fast and efficient.

- Scaling capability: New shards can be added at any time to spread out the load.

- Fault tolerance: Data replication ensures that data is not lost when any single Redis instance fails, and an automatic election mechanism lets the cluster restore itself after any single instance failure.

All we needed to ensure was that our cache keys hash evenly across the shards.
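Redis Cluster assigns every key to one of 16,384 hash slots by taking the CRC16 of the key modulo 16384, and each shard owns a range of slots. The sketch below reproduces that mapping in Python so you can check how a key scheme spreads across slots; the example key names are hypothetical. One thing worth watching is hash tags: if a key contains a non-empty `{...}` section, only that part is hashed, so tagging every key of a hot campaign with the same `{campaign id}` would pin them all to one shard.

```python
REDIS_CLUSTER_SLOTS = 16384

def crc16_xmodem(data: bytes) -> int:
    """CRC-16/XMODEM (polynomial 0x1021, initial value 0), as used by Redis Cluster."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc

def key_slot(key: str) -> int:
    """Return the Redis Cluster hash slot for a key, honouring {hash tags}."""
    raw = key.encode("utf-8")
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end > start + 1:  # only a non-empty tag replaces the whole key
            raw = raw[start + 1:end]
    return crc16_xmodem(raw) % REDIS_CLUSTER_SLOTS


# Distinct keys spread over many slots; keys sharing a tag land in one slot.
spread = {key_slot(f"usage:campaign:42:user:{u}") for u in range(100)}
pinned = {key_slot(f"usage:{{campaign:42}}:user:{u}") for u in range(100)}
print(len(pinned))  # 1
```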

As for scaling persistent data storage, we tackled it in two ways just like we did for servers:

- Distribute load

- Reduce load (both overall and per query)

Distribute load

There are two levels of load distribution for persistent storage: infra level and DB level. On the infra level, we split data with different access patterns into different types of storage. Then on the DB level, we further distributed read/write load onto different DB instances.

Infra level

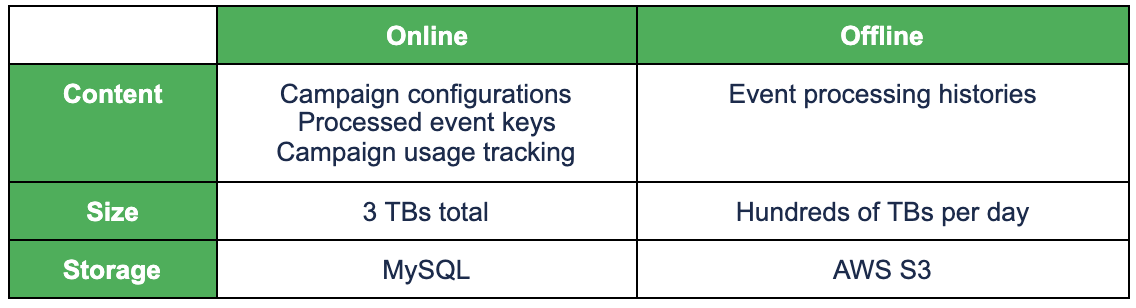

Just like any typical online service, Trident has two types of data in terms of access pattern:

- Online data: Frequent access. Requires quick access. Medium size.

- Offline data: Infrequent access. Tolerates slow access. Large size.

For online data, we need to use a high-performance database, while for offline data, we can just use cheap storage. The following table shows Trident’s online/offline data and the corresponding storage.

Offline data is written asynchronously to minimize the performance impact, as shown below.

The APIs that retrieve this data for users are configured with a high timeout.
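One common way to make writes asynchronous is a bounded in-memory queue drained by a background worker. The sketch below illustrates that approach under our own assumptions; the text does not say how Trident implements its asynchronous writes. `AsyncWriter` and `write_fn` are hypothetical names, and retries, batching and error handling are omitted, so an exception in `write_fn` would stop the worker.

```python
import queue
import threading
from typing import Callable, Optional

class AsyncWriter:
    """Queues offline-data writes and performs them on a background thread."""

    def __init__(self, write_fn: Callable[[dict], None], maxsize: int = 10000) -> None:
        self.write_fn = write_fn  # e.g. a write to cheap offline storage
        self.q: "queue.Queue[Optional[dict]]" = queue.Queue(maxsize=maxsize)
        self.worker = threading.Thread(target=self._run, daemon=True)
        self.worker.start()

    def submit(self, record: dict) -> None:
        # Only enqueues the record (blocking if the queue is full);
        # the write itself happens on the worker thread.
        self.q.put(record)

    def _run(self) -> None:
        while True:
            record = self.q.get()
            if record is None:  # sentinel: shut down after earlier records
                return
            self.write_fn(record)

    def close(self) -> None:
        """Flush everything queued so far, then stop the worker."""
        self.q.put(None)
        self.worker.join()
```

The request path only pays for a queue insertion, which is why reads of this data, rather than writes, are the place where a generous timeout is needed.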

DB level

We further distributed load at the MySQL DB level, mainly by introducing replicas and redirecting all read queries that can tolerate slightly outdated data to them. This relieved more than 30% of the load from the master instance.
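The read/write split can be sketched as a small router: writes, and reads that need fresh data, go to the master, while staleness-tolerant reads are spread across the replicas round-robin. The class and the connection names are hypothetical; a real setup would hand out connections or DSNs and would also account for replica health and replication lag.

```python
import itertools
from typing import Sequence

class ReadWriteRouter:
    """Routes queries to the master or to replicas based on freshness needs."""

    def __init__(self, master: str, replicas: Sequence[str]) -> None:
        self.master = master
        self._replicas = itertools.cycle(replicas) if replicas else None

    def pick(self, is_write: bool, tolerates_stale: bool = False) -> str:
        # Writes and freshness-critical reads must see the master's latest data.
        if is_write or not tolerates_stale or self._replicas is None:
            return self.master
        return next(self._replicas)  # round-robin across replicas
```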

Going forward, we plan to segregate the single MySQL database into multiple databases, based on table usage, to further distribute load if necessary.

Reduce load

To reduce the load on the DB, we reduced the overall number of queries and removed unnecessary ones. We also optimized the schema and queries so that each query completes faster.

Query reduction

We needed to track the usage of each campaign. The tracking simply increments a value against a unique key in the MySQL database. For a popular campaign, multiple increment queries (writes) may be made to the database for the same key, which can cause an IOPS burst. So we came up with the following algorithm to reduce the number of queries:

- Have a fixed number of threads per instance that can make such queries to the DB.

- Queue the increment queries into these threads.

- If a thread is idle (not busy querying the database), it writes to the database right away.

- If the thread is busy, accumulate the increment in memory instead.

- When the thread becomes free, it writes the accumulated sum to the database in a single increment.
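Here is a minimal Python sketch of these steps, assuming one in-memory pending map per writer thread and routing each key to a fixed thread by hash. `IncrementPool`, `IncrementWorker` and `write_fn` are hypothetical names; `write_fn(key, delta)` stands in for the real increment query. An idle worker is woken by a new increment and writes it right away; increments that arrive while it is writing are summed and flushed as one write per key.

```python
import threading
from collections import defaultdict
from typing import Callable, Dict

# Stands in for: INSERT ... ON DUPLICATE KEY UPDATE value = value + delta
WriteFn = Callable[[str, int], None]

class IncrementWorker:
    """One DB-writer thread; increments arriving while it is busy are summed in memory."""

    def __init__(self, write_fn: WriteFn) -> None:
        self.write_fn = write_fn
        self.cond = threading.Condition()
        self.pending: Dict[str, int] = defaultdict(int)
        self.closed = False
        self.thread = threading.Thread(target=self._run, daemon=True)
        self.thread.start()

    def submit(self, key: str, delta: int) -> None:
        with self.cond:
            self.pending[key] += delta  # merge with any not-yet-written increment
            self.cond.notify()

    def _run(self) -> None:
        while True:
            with self.cond:
                while not self.pending and not self.closed:
                    self.cond.wait()
                if not self.pending:  # closed and fully drained
                    return
                batch, self.pending = self.pending, defaultdict(int)
            # The lock is released while writing, so new increments keep accumulating.
            for key, delta in batch.items():
                self.write_fn(key, delta)

    def close(self) -> None:
        with self.cond:
            self.closed = True
            self.cond.notify()
        self.thread.join()


class IncrementPool:
    """A fixed number of writer threads per instance; a key always maps to the same one."""

    def __init__(self, n_workers: int, write_fn: WriteFn) -> None:
        self.workers = [IncrementWorker(write_fn) for _ in range(n_workers)]

    def increment(self, key: str, delta: int = 1) -> None:
        self.workers[hash(key) % len(self.workers)].submit(key, delta)

    def close(self) -> None:
        for worker in self.workers:
            worker.close()
```

The trade-off is that sums still held in memory are lost if the process dies before they are flushed.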

To prevent accidental over-awarding of benefits (rewards, points, etc.), we require campaign creators to set limits. However, some campaigns don’t need a limit, so their creators just specify a large number. Such popular campaigns can cause very high QPS to our database. We had a brilliant trick to address this issue: we just don’t track usage if the limit is high. Do you think people really want to limit usage when they set the per-user limit to 100,000? 😉

Query optimization

One of our requirements was to track the usage of a campaign, overall as well as per user (and more, such as daily overall and daily per user). We used the following query for this purpose:

INSERT INTO … ON DUPLICATE KEY UPDATE value = value + inc

The table had a unique key index (combining multiple columns) along with a usual auto-increment integer primary key. We encountered performance issues arising from MySQL gap locks when high write QPS hit this table (i.e. when popular campaigns ran). After testing out a few approaches, we ended up making the following changes to solve the problem:

- Removed the auto-increment integer primary key.

- Converted the secondary unique key to the primary key.
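To illustrate the table shape after the change, here is a sketch that uses Python’s built-in sqlite3 module as a stand-in for MySQL. The table and column names are hypothetical. SQLite’s `INSERT ... ON CONFLICT ... DO UPDATE` plays the role of MySQL’s `INSERT ... ON DUPLICATE KEY UPDATE`, and `WITHOUT ROWID` makes SQLite store rows keyed by the composite primary key rather than an implicit integer id. The sketch shows only the schema and the upsert; it does not reproduce InnoDB gap-lock behaviour, and the upsert syntax needs SQLite 3.24 or later.

```python
import sqlite3

def create_usage_table(conn: sqlite3.Connection) -> None:
    # The former secondary unique key is now the primary key; there is no
    # auto-increment id column. WITHOUT ROWID stores rows keyed by that key.
    conn.execute(
        """CREATE TABLE campaign_usage (
               campaign_id INTEGER NOT NULL,
               user_id     INTEGER NOT NULL,
               day         TEXT    NOT NULL,
               value       INTEGER NOT NULL DEFAULT 0,
               PRIMARY KEY (campaign_id, user_id, day)
           ) WITHOUT ROWID"""
    )

def add_usage(conn: sqlite3.Connection, campaign_id: int, user_id: int,
              day: str, inc: int) -> None:
    # SQLite counterpart of: INSERT INTO ... ON DUPLICATE KEY UPDATE value = value + inc
    conn.execute(
        """INSERT INTO campaign_usage (campaign_id, user_id, day, value)
           VALUES (?, ?, ?, ?)
           ON CONFLICT (campaign_id, user_id, day)
           DO UPDATE SET value = value + excluded.value""",
        (campaign_id, user_id, day, inc),
    )
```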

Conclusion

Trident is Grab’s in-house real-time IFTTT engine, which processes events and operates business mechanisms on a massive scale. In this article, we discussed the strategies we implemented to achieve large-scale, high-performance event processing. The overall ideas of distributing and reducing load may be straightforward, but putting them into practice took a lot of thought, and we have shared those lessons in detail. If you have any comments or questions about Trident, feel free to leave a comment below.

All the campaign examples given in this article are for demonstration purposes only; they are not real, live campaigns.

Join us

Grab is more than just the leading ride-hailing and mobile payments platform in Southeast Asia. We use data and technology to improve everything from transportation to payments and financial services across a region of more than 620 million people. We aspire to unlock the true potential of Southeast Asia and look for like-minded individuals to join us on this ride.

If you share our vision of driving Southeast Asia forward, apply to join our team today.

Unlocking LUKS2 volumes with TPM2, FIDO2, PKCS#11 Security Hardware on systemd 248

Post Syndicated from original http://0pointer.net/blog/unlocking-luks2-volumes-with-tpm2-fido2-pkcs11-security-hardware-on-systemd-248.html

TL;DR: It’s now easy to unlock your LUKS2 volume with a FIDO2 security token (e.g. YubiKey, Nitrokey FIDO2, AuthenTrend ATKey.Pro). And TPM2 unlocking is easy now too.

Blogging is a lot of work, and a lot less fun than hacking. I mostly focus on the latter because of that, but from time to time I guess stuff is just too interesting to not be blogged about. Hence here, finally, another blog story about exciting new features in systemd.

With the upcoming systemd v248, the systemd-cryptsetup component of systemd (which is responsible for assembling encrypted volumes during boot) has gained direct support for unlocking encrypted storage with three types of security hardware:

- Unlocking with FIDO2 security tokens (well, at least with those which implement the hmac-secret extension; most do), i.e. your YubiKeys (series 5 and above), Nitrokey FIDO2, AuthenTrend ATKey.Pro and such.

- Unlocking with TPM2 security chips (pretty ubiquitous on non-budget PCs/laptops/…).

- Unlocking with PKCS#11 security tokens, i.e. your smartcards and older YubiKeys (the ones that implement PIV). (Strictly speaking this was supported on older systemd already, but was a lot more “manual”.)

For completeness’ sake, let’s keep in mind that the component also allows unlocking with these more traditional mechanisms:

- Unlocking interactively with a user-entered passphrase (i.e. the way most people probably already deploy it, supported since about forever).

- Unlocking via a key file on disk (optionally on removable media plugged in at boot), supported since forever.

- Unlocking via a key acquired through trivial AF_UNIX/SOCK_STREAM socket IPC. (Also new in v248.)

- Unlocking via recovery keys. These are pretty much the same thing as a regular passphrase (and in fact can be entered wherever a passphrase is requested), the main difference being that they are always generated by the computer, and thus have guaranteed high entropy, typically higher than user-chosen passphrases. They are generated in a way that makes them easy to type, in many cases even if the local key map is misconfigured. (Also new in v248.)

In this blog story, let’s focus on the first three items, i.e. those that talk to specific types of hardware for implementing unlocking.

To make working with security tokens and TPM2 easy, a new, small tool was added to the systemd tool set: systemd-cryptenroll. Its only purpose is to make it easy to enroll your security token/chip of choice into an encrypted volume. It works with any LUKS2 volume, and embeds a tiny bit of meta-information into the LUKS2 header with parameters necessary for the unlock operation.

Unlocking with FIDO2

So, let’s see how this fits together in the FIDO2 case. Most likely this is what you want to use if you have one of these fancy FIDO2 tokens (which need to implement the hmac-secret extension, as mentioned). Let’s say you already have your LUKS2 volume set up, and previously unlocked it with a simple passphrase. Plug in your token, and run:

# systemd-cryptenroll --fido2-device=auto /dev/sda5

(Replace /dev/sda5 with the underlying block device of your volume).

This will enroll the key as an additional way to unlock the volume, and embed all necessary information for it in the LUKS2 volume header. Before we can unlock the volume with this at boot, we need to allow FIDO2 unlocking via /etc/crypttab. For that, find the right entry for your volume in that file, and edit it like so:

myvolume /dev/sda5 - fido2-device=auto

Replace myvolume and /dev/sda5 with the right volume name and underlying device, of course. Key here is the fido2-device=auto option you need to add to the fourth column in the file. It tells systemd-cryptsetup to use the FIDO2 metadata now embedded in the LUKS2 header, wait for the FIDO2 token to be plugged in at boot (utilizing systemd-udevd, …) and unlock the volume with it.

And that’s it already. Easy-peasy, no?
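If you manage several entries, the crypttab edit can be scripted. The sketch below is a hypothetical helper (not part of systemd) that appends an option to the fourth, comma-separated options column of one /etc/crypttab line, filling in “-” (no key file) when the third column is missing. It normalizes whitespace to single spaces and leaves comments and malformed lines untouched.

```python
def add_crypttab_option(line: str, option: str) -> str:
    """Append `option` to the options column of a single /etc/crypttab entry."""
    fields = line.split()  # name, device, key file, options
    if len(fields) < 2 or fields[0].startswith("#"):
        return line  # comment, blank or malformed line: leave untouched
    if len(fields) == 2:
        fields.append("-")  # third column: "-" means no key file
    if len(fields) == 3:
        fields.append(option)
    else:
        opts = fields[3].split(",")
        if option not in opts:  # keep the edit idempotent
            opts.append(option)
        fields[3] = ",".join(opts)
    return " ".join(fields)


print(add_crypttab_option("myvolume /dev/sda5 -", "fido2-device=auto"))
# myvolume /dev/sda5 - fido2-device=auto
```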

Note that all of this doesn’t modify the FIDO2 token itself in any way. Moreover, you can enroll the same token in as many volumes as you like. Since all enrollment information is stored in the LUKS2 header (and not on the token), there are no bounds on any of this. (OK, well, admittedly, there’s a cap on LUKS2 key slots per volume, i.e. you can’t enroll more than a bunch of keys per volume: LUKS2 allows at most 32.)
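Why enrollment leaves the token untouched becomes clearer with a simplified model of the hmac-secret extension: the token holds a per-credential secret and only ever computes HMAC-SHA-256 over that secret and a salt it is handed, so the salt (plus a credential ID) can live in the LUKS2 header. The sketch below simulates this with Python’s hmac module. `SimulatedFido2Token`, `enroll` and `unlock` are hypothetical, and the real CTAP2 exchange (credential creation, user presence, encrypted salt transport) as well as how systemd turns the result into a LUKS2 passphrase are left out.

```python
import hashlib
import hmac
import os
from typing import Dict, Tuple

class SimulatedFido2Token:
    """Stand-in for a FIDO2 token: its secret never leaves the device."""

    def __init__(self) -> None:
        self._cred_random = os.urandom(32)  # per-credential secret on the token

    def hmac_secret(self, salt: bytes) -> bytes:
        # hmac-secret returns HMAC-SHA-256(CredRandom, salt); no token state changes.
        return hmac.new(self._cred_random, salt, hashlib.sha256).digest()

def enroll(token: SimulatedFido2Token) -> Tuple[Dict[str, bytes], bytes]:
    """Return (header metadata to store on disk, secret used to add a key slot)."""
    salt = os.urandom(32)  # a fresh salt per volume
    return {"salt": salt}, token.hmac_secret(salt)

def unlock(token: SimulatedFido2Token, header: Dict[str, bytes]) -> bytes:
    """Re-derive the volume secret from the salt stored in the header."""
    return token.hmac_secret(header["salt"])
```

Each volume gets its own salt and therefore its own secret, which is why one token can be enrolled in any number of volumes.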

Unlocking with PKCS#11

Let’s now have a closer look at how the same works with a PKCS#11 compatible security token or smartcard. For this to work, you need a device that can store an RSA key pair. I figure most security tokens/smartcards that implement PIV qualify. How you actually get the keys onto the device might differ, though. Here’s how you do this for any YubiKey that implements the PIV feature:

# ykman piv reset

# ykman piv generate-key -a RSA2048 9d pubkey.pem

# ykman piv generate-certificate --subject "Knobelei" 9d pubkey.pem

# rm pubkey.pem

(This chain of commands erases whatever was stored in the PIV feature of your token before, so be careful!)

For tokens/smartcards from other vendors a different series of commands might work. Once you have a key pair on it, you can enroll it with a LUKS2 volume like so:

# systemd-cryptenroll --pkcs11-token-uri=auto /dev/sda5

Just like the same command’s invocation in the FIDO2 case, this enrolls the security token as an additional way to unlock the volume; any passphrases you already have enrolled remain enrolled.

For the PKCS#11 case you need to edit your /etc/crypttab entry like this:

myvolume /dev/sda5 - pkcs11-uri=auto

If you have a security token that implements both PKCS#11 PIV and FIDO2, I’d probably enroll it as a FIDO2 device, given it’s the more contemporary, future-proof standard. Moreover, it requires no special preparation in order to get an RSA key onto the device: FIDO2 keys typically just work.

Unlocking with TPM2

Most modern (non-budget) PC hardware (and other kinds of hardware too) nowadays comes with a TPM2 security chip. In many ways a TPM2 chip is a smartcard that is soldered onto the mainboard of your system. Unlike your usual USB-connected security tokens, you thus cannot remove them from your PC, which means they address quite a different security scenario: they aren’t immediately comparable to a physical key you can take with you that unlocks some door; rather, they are a key you leave at the door, but one that refuses to be turned by anyone but you.

Even though this sounds a lot weaker than the FIDO2/PKCS#11 model, TPM2 still brings benefits for securing your systems: because the cryptographic key material stored in TPM2 devices cannot be extracted (at least that’s the theory), binding your hard disk encryption to it means attackers cannot just copy your disk and analyze it offline. They always need access to the TPM2 chip too, to have a chance to acquire the necessary cryptographic keys. Thus, they can still steal your whole PC and analyze it, but they cannot just copy the disk without you noticing and analyze the copy.

Moreover, you can bind the ability to unlock the hard disk to specific software versions: for example, you could say that only your trusted Fedora Linux can unlock the device, but not any arbitrary OS some hacker might boot from a USB stick they plugged in. Thus, if you trust your OS vendor, you can entrust storage unlocking to the vendor’s OS together with your TPM2 device, and thus can be reasonably sure intruders cannot decrypt your data unless they both hack your OS vendor and steal/break your TPM2 chip.

Here’s how you enroll your LUKS2 volume with your TPM2 chip:

# systemd-cryptenroll --tpm2-device=auto --tpm2-pcrs=7 /dev/sda5

This looks almost as straightforward as the two earlier systemd-cryptenroll command lines, if it weren’t for the --tpm2-pcrs= part. With that option you can specify to which TPM2 PCRs you want to bind the enrollment. TPM2 PCRs are a set of (typically 24) hash values that every TPM2-equipped system calculates at boot from all the software that is invoked during the boot sequence, in a secure, unfakable way (this is called “measurement”). If you bind unlocking to a specific value of a specific PCR, you thus require the system to follow the same sequence of software at boot to re-acquire the disk encryption key. Sounds complex? Well, that’s because it is.
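The measurement idea can be illustrated with the PCR extend operation: a PCR is never written directly, only extended, and the new value is the hash of the old value concatenated with the digest of the measured item. The Python sketch below simulates one SHA-256 PCR. The component names are placeholders (PCR 7, used above, actually records Secure Boot state rather than kernel images), and the all-zero starting value holds for most, not all, PCRs.

```python
import hashlib
from typing import Iterable

def measure_boot(components: Iterable[bytes]) -> bytes:
    """Simulate extending a SHA-256 PCR with each boot component, in order."""
    pcr = bytes(32)  # most PCRs start out as all zeros after a reset
    for component in components:
        digest = hashlib.sha256(component).digest()
        # Extend: new value = SHA-256(old value || digest of the measured item)
        pcr = hashlib.sha256(pcr + digest).digest()
    return pcr


trusted = measure_boot([b"firmware", b"bootloader", b"kernel-5.10"])
updated = measure_boot([b"firmware", b"bootloader", b"kernel-5.11"])
print(trusted == updated)  # False
```

Because every step hashes the previous value, changing, adding or reordering any component yields a different final value, which is why a different boot sequence no longer unlocks the key.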

For now, let’s see how we have to modify your /etc/crypttab to unlock via TPM2:

myvolume /dev/sda5 - tpm2-device=auto

This part is easy again: the tpm2-device= option is what tells systemd-cryptsetup to use the TPM2 metadata from the LUKS2 header and to wait for the TPM2 device to show up.

Bonus: Recovery Key Enrollment

FIDO2, PKCS#11 and TPM2 security tokens and chips pair well with recovery keys: since you don’t need to type in your password every day anymore, it makes sense to get rid of it, and instead enroll a high-entropy recovery key you then print out or scan off screen and store in a safe, physical location. In other words, forget about good ol’ passphrase-based unlocking, go for FIDO2 plus recovery key instead!

Here’s how you do it:

# systemd-cryptenroll --recovery-key /dev/sda5

This will generate a key, enroll it in the LUKS2 volume, show it to you on screen and generate a QR code you may scan off screen if you like. The key has high entropy, and can be entered wherever you can enter a passphrase. Because of that, you don’t have to modify /etc/crypttab to make the recovery key work.
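The format below is modelled on what systemd-cryptenroll prints, under the assumption that it encodes 32 random bytes (256 bits) in Yubico’s “modhex” alphabet as eight dash-separated groups of eight characters; check the systemd-cryptenroll man page for the authoritative format. Modhex uses only letters intended to sit at the same position on most Latin keyboard layouts, which is what keeps such a key typeable when the key map is wrong. `make_recovery_key` is a hypothetical name.

```python
import secrets

# Yubico's "modhex" alphabet: 16 letters, one per 4-bit value.
MODHEX = "cbdefghijklnrtuv"

def make_recovery_key(raw_len: int = 32) -> str:
    """Generate raw_len random bytes and format them as dash-separated modhex groups."""
    raw = secrets.token_bytes(raw_len)
    chars = []
    for byte in raw:
        chars.append(MODHEX[byte >> 4])    # high nibble
        chars.append(MODHEX[byte & 0x0F])  # low nibble
    key = "".join(chars)
    return "-".join(key[i:i + 8] for i in range(0, len(key), 8))
```

With the default 32 bytes this yields 64 letters, i.e. 256 bits of entropy, far more than a typical user-chosen passphrase.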

Future

There’s still plenty of room for further improvement in all of this, in particular for the TPM2 case. What the text above doesn’t really mention is that binding your encrypted volume unlocking to specific software versions (i.e. kernel + initrd + OS versions) actually sucks hard: if you naively update your system to newer versions you might lose access to your TPM2 enrolled keys (which isn’t terrible: after all, you did enroll a recovery key, right? You can use that to regain access). To solve this, some more integration with distributions would be necessary: whenever they upgrade the system they’d have to make sure to enroll the TPM2 again, with the PCR hashes matching the new version. And whenever they remove an old version of the system they need to remove the old TPM2 enrollment. Alternatively, TPM2 also knows a concept of signed PCR hash values. In this mode the distro could just ship a set of PCR signatures which would unlock the TPM2 keys. (But quite frankly I don’t really see the point: whether you drop in a signature file on each system update, or enroll a new set of PCR hashes in the LUKS2 header, doesn’t make much of a difference.) Either way, to make TPM2 enrollment smooth, some more integration work with your distribution’s system update mechanisms needs to happen. And yes, because of this OS updating complexity, the example above (where I referenced your trusty Fedora Linux) doesn’t actually work IRL (yet? hopefully…). Nothing updates the enrollment automatically after you initially enrolled it, hence after the first kernel/initrd update you have to manually re-enroll things again, and again, and again … after every update.

The TPM2 could also be used for other kinds of key policies, which we might look into adding later too. For example, Windows uses TPM2 stuff to allow short (4 digits or so) “PINs” for unlocking the hard disk, i.e. kind of a low-entropy password you type in. The reason this is reasonably safe is that in this case the PIN is passed to the TPM2, which enforces that no more than a limited number of unlock attempts may be made within some time frame, and that after too many attempts the PIN is invalidated altogether. This makes dictionary attacks harder (which would normally be easy given the short length of the PINs).

Postscript

(BTW: Yubico sent me two YubiKeys for testing, Nitrokey a Nitrokey FIDO2, and AuthenTrend three ATKey.Pro tokens, thank you! That’s why you see all those references to YubiKey/Nitrokey/AuthenTrend devices in the text above: it’s the hardware I had to test this with. That said, I also tested the FIDO2 stuff with a SoloKey I bought, where it also worked fine. And yes, you!, other vendors!, who might be reading this, please send me your security tokens for free, too, and I might test things with them as well. No promises though. And I am not going to give them back if you do, sorry. ;-))

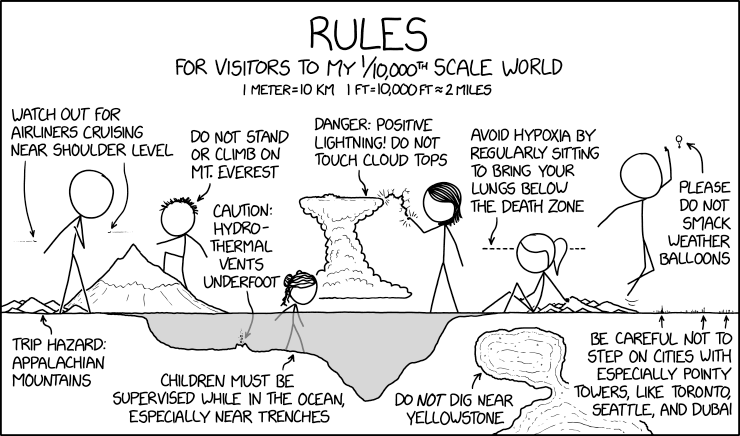

1/10,000th Scale World

Post Syndicated from original https://xkcd.com/2411/

Patch Tuesday – January 2021

Post Syndicated from Richard Tsang original https://blog.rapid7.com/2021/01/12/patch-tuesday-january-2021/

We arrive at the first Patch Tuesday of 2021 (2021-Jan) with 83 vulnerabilities across our standard spread of products. Windows Operating System vulnerabilities dominated this month’s advisories, followed by Microsoft Office (which includes the SharePoint family of products), and lastly some from less frequent products such as Microsoft System Center and Microsoft SQL Server.

Vulnerability Breakdown by Software Family

| Family | Vulnerability Count |
|---|---|
| Windows | 65 |
| ESU | 35 |
| Microsoft Office | 11 |
| Developer Tools | 5 |
| SQL Server | 1 |
| Apps | 1 |
| System Center | 1 |
| Azure | 1 |
| Browser | 1 |

Microsoft Defender Remote Code Execution Vulnerability (CVE-2021-1647)

CVE-2021-1647 is an actively exploited remote code execution vulnerability, rated CVSS 7.8, in the Microsoft Malware Protection Engine (mpengine.dll), affecting versions from 1.1.17600.5 up to 1.1.17700.4.

By default, Microsoft’s affected antimalware software automatically keeps the Microsoft Malware Protection Engine up to date, which means no further action is needed to resolve this vulnerability unless non-standard configurations are used.

This vulnerability affects Windows Defender and the supported Endpoint Protection products of the System Center family (2012, 2012 R2, and the namesake version, Microsoft System Center Endpoint Protection).

Patching Windows Operating Systems Next

Further confirming the standard advice to prioritize operating system patches whenever possible, 11 of the 13 top-scoring vulnerabilities (CVSSv3 8.8) addressed in this month’s Patch Tuesday would be covered immediately by patching the operating system. Interestingly, the Windows Remote Procedure Call (RPC) Runtime component appears to have received extra scrutiny this month: it accounts for 9 of those 13 top-scoring vulnerabilities, as well as half of the 10 Critical remote code execution vulnerabilities being addressed.

More Work to be Done

Lastly, two minor notes. This Patch Tuesday includes SQL Server, a family not typically covered on Patch Tuesdays. Arguably more notable, it is a reminder that Adobe Flash has officially reached end-of-life and should already have been actively removed from all browsers via Windows Update.

Summary Tables

Here are this month’s patched vulnerabilities, split by product family.

Azure Vulnerabilities

| CVE | Vulnerability Title | Exploited | Disclosed | CVSS3 | FAQ? |
|---|---|---|---|---|---|
| CVE-2021-1677 | Azure Active Directory Pod Identity Spoofing Vulnerability | No | No | 5.5 | Yes |

Browser Vulnerabilities

| CVE | Vulnerability Title | Exploited | Disclosed | CVSS3 | FAQ? |
|---|---|---|---|---|---|
| CVE-2021-1705 | Microsoft Edge (HTML-based) Memory Corruption Vulnerability | No | No | 4.2 | No |

Developer Tools Vulnerabilities

| CVE | Vulnerability Title | Exploited | Disclosed | CVSS3 | FAQ? |
|---|---|---|---|---|---|
| CVE-2020-26870 | Visual Studio Remote Code Execution Vulnerability | No | No | 7 | Yes |
| CVE-2021-1725 | Bot Framework SDK Information Disclosure Vulnerability | No | No | 5.5 | Yes |
| CVE-2021-1723 | ASP.NET Core and Visual Studio Denial of Service Vulnerability | No | No | 7.5 | No |

Developer Tools Windows Vulnerabilities

| CVE | Vulnerability Title | Exploited | Disclosed | CVSS3 | FAQ? |
|---|---|---|---|---|---|
| CVE-2021-1651 | Diagnostics Hub Standard Collector Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1680 | Diagnostics Hub Standard Collector Elevation of Privilege Vulnerability | No | No | 7.8 | No |

Microsoft Office Vulnerabilities

| CVE | Vulnerability Title | Exploited | Disclosed | CVSS3 | FAQ? |
|---|---|---|---|---|---|
| CVE-2021-1715 | Microsoft Word Remote Code Execution Vulnerability | No | No | 7.8 | Yes |
| CVE-2021-1716 | Microsoft Word Remote Code Execution Vulnerability | No | No | 7.8 | Yes |
| CVE-2021-1641 | Microsoft SharePoint Spoofing Vulnerability | No | No | 4.6 | No |
| CVE-2021-1717 | Microsoft SharePoint Spoofing Vulnerability | No | No | 4.6 | No |
| CVE-2021-1718 | Microsoft SharePoint Server Tampering Vulnerability | No | No | 8 | No |
| CVE-2021-1707 | Microsoft SharePoint Server Remote Code Execution Vulnerability | No | No | 8.8 | Yes |
| CVE-2021-1712 | Microsoft SharePoint Elevation of Privilege Vulnerability | No | No | 8 | No |
| CVE-2021-1719 | Microsoft SharePoint Elevation of Privilege Vulnerability | No | No | 8 | No |
| CVE-2021-1711 | Microsoft Office Remote Code Execution Vulnerability | No | No | 7.8 | Yes |
| CVE-2021-1713 | Microsoft Excel Remote Code Execution Vulnerability | No | No | 7.8 | Yes |
| CVE-2021-1714 | Microsoft Excel Remote Code Execution Vulnerability | No | No | 7.8 | Yes |

SQL Server Vulnerabilities

| CVE | Vulnerability Title | Exploited | Disclosed | CVSS3 | FAQ? |
|---|---|---|---|---|---|
| CVE-2021-1636 | Microsoft SQL Elevation of Privilege Vulnerability | No | No | 8.8 | Yes |

System Center Vulnerabilities

| CVE | Vulnerability Title | Exploited | Disclosed | CVSS3 | FAQ? |
|---|---|---|---|---|---|
| CVE-2021-1647 | Microsoft Defender Remote Code Execution Vulnerability | Yes | No | 7.8 | Yes |

Windows Vulnerabilities

| CVE | Vulnerability Title | Exploited | Disclosed | CVSS3 | FAQ? |
|---|---|---|---|---|---|
| CVE-2021-1681 | Windows WalletService Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1686 | Windows WalletService Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1687 | Windows WalletService Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1690 | Windows WalletService Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1646 | Windows WLAN Service Elevation of Privilege Vulnerability | No | No | 6.6 | No |
| CVE-2021-1650 | Windows Runtime C++ Template Library Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1663 | Windows Projected File System FS Filter Driver Information Disclosure Vulnerability | No | No | 5.5 | Yes |
| CVE-2021-1670 | Windows Projected File System FS Filter Driver Information Disclosure Vulnerability | No | No | 5.5 | Yes |
| CVE-2021-1672 | Windows Projected File System FS Filter Driver Information Disclosure Vulnerability | No | No | 5.5 | Yes |
| CVE-2021-1689 | Windows Multipoint Management Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1682 | Windows Kernel Elevation of Privilege Vulnerability | No | No | 7 | No |
| CVE-2021-1697 | Windows InstallService Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1662 | Windows Event Tracing Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1703 | Windows Event Logging Service Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1645 | Windows Docker Information Disclosure Vulnerability | No | No | 5 | Yes |
| CVE-2021-1637 | Windows DNS Query Information Disclosure Vulnerability | No | No | 5.5 | Yes |
| CVE-2021-1638 | Windows Bluetooth Security Feature Bypass Vulnerability | No | No | 7.7 | No |
| CVE-2021-1683 | Windows Bluetooth Security Feature Bypass Vulnerability | No | No | 5 | No |
| CVE-2021-1684 | Windows Bluetooth Security Feature Bypass Vulnerability | No | No | 5 | No |
| CVE-2021-1642 | Windows AppX Deployment Extensions Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1685 | Windows AppX Deployment Extensions Elevation of Privilege Vulnerability | No | No | 7.3 | No |
| CVE-2021-1648 | Microsoft splwow64 Elevation of Privilege Vulnerability | No | Yes | 7.8 | Yes |
| CVE-2021-1710 | Microsoft Windows Media Foundation Remote Code Execution Vulnerability | No | No | 7.8 | No |
| CVE-2021-1691 | Hyper-V Denial of Service Vulnerability | No | No | 7.7 | No |
| CVE-2021-1692 | Hyper-V Denial of Service Vulnerability | No | No | 7.7 | No |
| CVE-2021-1643 | HEVC Video Extensions Remote Code Execution Vulnerability | No | No | 7.8 | Yes |
| CVE-2021-1644 | HEVC Video Extensions Remote Code Execution Vulnerability | No | No | 7.8 | Yes |

Windows Apps Vulnerabilities

| CVE | Vulnerability Title | Exploited | Disclosed | CVSS3 | FAQ? |
|---|---|---|---|---|---|
| CVE-2021-1669 | Windows Remote Desktop Security Feature Bypass Vulnerability | No | No | 8.8 | Yes |

Windows ESU Vulnerabilities

| CVE | Vulnerability Title | Exploited | Disclosed | CVSS3 | FAQ? |
|---|---|---|---|---|---|
| CVE-2021-1709 | Windows Win32k Elevation of Privilege Vulnerability | No | No | 7 | No |
| CVE-2021-1694 | Windows Update Stack Elevation of Privilege Vulnerability | No | No | 7.5 | Yes |
| CVE-2021-1702 | Windows Remote Procedure Call Runtime Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1674 | Windows Remote Desktop Protocol Core Security Feature Bypass Vulnerability | No | No | 8.8 | No |
| CVE-2021-1695 | Windows Print Spooler Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1676 | Windows NT Lan Manager Datagram Receiver Driver Information Disclosure Vulnerability | No | No | 5.5 | Yes |
| CVE-2021-1706 | Windows LUAFV Elevation of Privilege Vulnerability | No | No | 7.3 | No |
| CVE-2021-1661 | Windows Installer Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1704 | Windows Hyper-V Elevation of Privilege Vulnerability | No | No | 7.3 | No |
| CVE-2021-1696 | Windows Graphics Component Information Disclosure Vulnerability | No | No | 5.5 | Yes |
| CVE-2021-1708 | Windows GDI+ Information Disclosure Vulnerability | No | No | 5.7 | Yes |
| CVE-2021-1657 | Windows Fax Compose Form Remote Code Execution Vulnerability | No | No | 7.8 | No |
| CVE-2021-1679 | Windows CryptoAPI Denial of Service Vulnerability | No | No | 6.5 | No |
| CVE-2021-1652 | Windows CSC Service Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1653 | Windows CSC Service Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1654 | Windows CSC Service Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1655 | Windows CSC Service Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1659 | Windows CSC Service Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1688 | Windows CSC Service Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1693 | Windows CSC Service Elevation of Privilege Vulnerability | No | No | 7.8 | No |
| CVE-2021-1699 | Windows (modem.sys) Information Disclosure Vulnerability | No | No | 5.5 | Yes |
| CVE-2021-1656 | TPM Device Driver Information Disclosure Vulnerability | No | No | 5.5 | Yes |
| CVE-2021-1658 | Remote Procedure Call Runtime Remote Code Execution Vulnerability | No | No | 8.8 | No |
| CVE-2021-1660 | Remote Procedure Call Runtime Remote Code Execution Vulnerability | No | No | 8.8 | No |
| CVE-2021-1666 | Remote Procedure Call Runtime Remote Code Execution Vulnerability | No | No | 8.8 | No |
| CVE-2021-1667 | Remote Procedure Call Runtime Remote Code Execution Vulnerability | No | No | 8.8 | No |
| CVE-2021-1673 | Remote Procedure Call Runtime Remote Code Execution Vulnerability | No | No | 8.8 | No |
| CVE-2021-1664 | Remote Procedure Call Runtime Remote Code Execution Vulnerability | No | No | 8.8 | No |
| CVE-2021-1671 | Remote Procedure Call Runtime Remote Code Execution Vulnerability | No | No | 8.8 | No |
| CVE-2021-1700 | Remote Procedure Call Runtime Remote Code Execution Vulnerability | No | No | 8.8 | No |
| CVE-2021-1701 | Remote Procedure Call Runtime Remote Code Execution Vulnerability | No | No | 8.8 | No |
| CVE-2021-1678 | NTLM Security Feature Bypass Vulnerability | No | No | 4.3 | No |
| CVE-2021-1668 | Microsoft DTV-DVD Video Decoder Remote Code Execution Vulnerability | No | No | 7.8 | No |
| CVE-2021-1665 | GDI+ Remote Code Execution Vulnerability | No | No | 7.8 | No |
| CVE-2021-1649 | Active Template Library Elevation of Privilege Vulnerability | No | No | 7.8 | No |

Summary Graphs

Note: Graph data is reflective of data presented by Microsoft’s CVRF at the time of writing.


Introducing message archiving and analytics for Amazon SNS

Post Syndicated from James Beswick original https://aws.amazon.com/blogs/compute/introducing-message-archiving-and-analytics-for-amazon-sns/

This blog post is courtesy of Sebastian Caceres (AWS Consultant, DevOps), Otavio Ferreira (Sr. Manager, Amazon SNS), Prachi Sharma and Mary Gao (Software Engineers, Amazon SNS).

Today, we are announcing the release of a message delivery protocol for Amazon SNS based on Amazon Kinesis Data Firehose. This is a new way to integrate SNS with storage and analytics services, without writing custom code.

SNS provides topics for push-based, many-to-many pub/sub messaging to help you decouple distributed systems, microservices, and event-driven serverless applications. As applications grow, so does the need to archive messages to meet compliance goals. These archives can also provide important operational and business insights.

Previously, custom code was required to create data pipelines, using general-purpose SNS subscription endpoints, such as Amazon SQS queues or AWS Lambda functions. You had to manage data transformation, data buffering, data compression, and the upload to data stores.

Overview

With the new native integration between SNS and Kinesis Data Firehose, you can send messages to storage and analytics services, using a purpose-built SNS subscription type.

Once you configure a subscription, messages published to the SNS topic are sent to the subscribed Kinesis Data Firehose delivery stream. The messages are then delivered to the destination endpoint configured in the delivery stream, which can be an Amazon S3 bucket, an Amazon Redshift table, or an Amazon Elasticsearch Service index.

You can also use a third-party service provider as the destination of a delivery stream, including Datadog, New Relic, MongoDB, and Splunk. No custom code is required to bridge the services. For more information, see Fanout to Kinesis Data Firehose streams in the SNS Developer Guide.

The new Kinesis Data Firehose subscription type and its destinations are part of the application-to-application (A2A) messaging offering of SNS. The addition of this subscription type expands the SNS A2A offering to include the following use cases:

- Run analytics on SNS messages, using Amazon Kinesis Data Analytics, Amazon Elasticsearch Service, or Amazon Redshift as a delivery stream destination. You can use this option to gain insights and detect anomalies in workloads.

- Index and search SNS messages, using Amazon Elasticsearch Service as a delivery stream destination. From there, you can create dashboards using Kibana, a data visualization and exploration tool.

- Store SNS messages for backup and auditing purposes, using S3 as a destination of choice. You can then use Amazon Athena to query the S3 bucket for analytics purposes.

- Apply transformations to SNS messages. For example, you can obfuscate personally identifiable information (PII) or protected health information (PHI) using a Lambda function invoked by the delivery stream.

- Feed SNS messages into cloud-based application monitoring and observability tools, using Datadog, New Relic, or Splunk as a destination. You can choose this option to enrich DevOps or marketing workflows.
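For the PII-obfuscation use case, the delivery stream invokes a Lambda function with a batch of base64-encoded records, and the function returns each record with its `recordId`, a `result` status, and the transformed `data`. The minimal sketch below masks anything that looks like an email address. The regular expression and the decision to redact only email addresses are assumptions for illustration, not a complete PII scrubber.

```python
import base64
import re

# Deliberately simple email-like pattern (an assumption: real PII detection
# needs much more than a single regular expression).
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def mask_pii(text: str) -> str:
    """Replace every email-like substring with a fixed placeholder."""
    return EMAIL_PATTERN.sub("[REDACTED]", text)


def handler(event, context):
    """Firehose data-transformation handler: decode, mask, and re-encode records."""
    output = []
    for record in event["records"]:
        try:
            payload = base64.b64decode(record["data"]).decode("utf-8")
        except ValueError:
            # binascii.Error and UnicodeDecodeError both subclass ValueError.
            # Mark the record as failed instead of silently dropping it.
            output.append({
                "recordId": record["recordId"],
                "result": "ProcessingFailed",
                "data": record["data"],
            })
            continue
        masked = mask_pii(payload)
        output.append({
            "recordId": record["recordId"],
            "result": "Ok",
            "data": base64.b64encode(masked.encode("utf-8")).decode("ascii"),
        })
    return {"records": output}
```

Because the pattern is applied to the decoded text as a whole, it works whether the record holds the raw message or the SNS notification envelope.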

As with all supported message delivery protocols, you can filter, monitor, and encrypt messages.

To simplify architecture and further avoid custom code, you can use an SNS subscription filter policy. This enables you to route only the relevant subset of SNS messages to the Kinesis Data Firehose delivery stream. For more information, see SNS message filtering.
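A filter policy is a JSON object that maps message attribute names to lists of accepted values. A message passes when every key in the policy is satisfied (AND across keys) and the attribute equals at least one listed value (OR within a key). The sketch below reproduces only that exact-string matching so the semantics can be tried locally; SNS also supports numeric, prefix, anything-but, and exists operators, which this sketch does not implement. The attribute names `ticket_type` and `route` are hypothetical.

```python
import json
from typing import Dict, List

# Hypothetical policy: only economy or business sales on international routes.
FILTER_POLICY: Dict[str, List[str]] = {
    "ticket_type": ["economy", "business"],
    "route": ["international"],
}


def matches(policy: Dict[str, List[str]], attributes: Dict[str, dict]) -> bool:
    """Exact-string subset of SNS filter-policy matching on message attributes.

    `attributes` uses the SNS MessageAttributes shape, for example
    {"route": {"DataType": "String", "StringValue": "international"}}.
    """
    for key, allowed in policy.items():
        attribute = attributes.get(key)
        if attribute is None:
            return False  # every policy key must be present (AND)
        if attribute.get("StringValue") not in allowed:
            return False  # value must equal one of the listed strings (OR)
    return True


# SNS stores the policy as a JSON string in the subscription's
# FilterPolicy attribute.
FILTER_POLICY_JSON = json.dumps(FILTER_POLICY)
```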

To monitor the throughput, you can check the NumberOfMessagesPublished and the NumberOfNotificationsDelivered metrics for SNS, and the IncomingBytes, IncomingRecords, DeliveryToS3.Records and DeliveryToS3.Success metrics for Kinesis Data Firehose. For additional information, see Monitoring SNS topics using CloudWatch and Monitoring Kinesis Data Firehose using CloudWatch.
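To compare these metrics side by side, you can request them in a single CloudWatch `GetMetricData` call. The sketch below only builds the `MetricDataQueries` list. It assumes the SNS metrics are read from the `AWS/SNS` namespace with the `TopicName` dimension and the Firehose metrics from `AWS/Firehose` with the `DeliveryStreamName` dimension; the topic and stream names you pass in are placeholders.

```python
from typing import Dict, List

SNS_METRICS = ["NumberOfMessagesPublished", "NumberOfNotificationsDelivered"]
FIREHOSE_METRICS = ["IncomingBytes", "IncomingRecords",
                    "DeliveryToS3.Records", "DeliveryToS3.Success"]


def _query(query_id: str, namespace: str, metric: str, dim_name: str,
           dim_value: str, stat: str, period: int) -> Dict:
    """One MetricDataQuery entry for a single metric and dimension."""
    return {
        "Id": query_id,  # must start with a lowercase letter
        "MetricStat": {
            "Metric": {
                "Namespace": namespace,
                "MetricName": metric,
                "Dimensions": [{"Name": dim_name, "Value": dim_value}],
            },
            "Period": period,
            "Stat": stat,
        },
    }


def build_metric_queries(topic_name: str, stream_name: str,
                         period: int = 300) -> List[Dict]:
    """Build GetMetricData queries for an SNS topic and its delivery stream."""
    queries = []
    for i, metric in enumerate(SNS_METRICS):
        queries.append(_query(f"sns{i}", "AWS/SNS", metric,
                              "TopicName", topic_name, "Sum", period))
    for i, metric in enumerate(FIREHOSE_METRICS):
        # DeliveryToS3.Success is a success ratio, so average it instead of summing.
        stat = "Average" if metric == "DeliveryToS3.Success" else "Sum"
        queries.append(_query(f"firehose{i}", "AWS/Firehose", metric,
                              "DeliveryStreamName", stream_name, stat, period))
    return queries
```

Pass the list as `MetricDataQueries` to `boto3.client("cloudwatch").get_metric_data(...)` together with `StartTime` and `EndTime`.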

For security purposes, you can choose to have data encrypted at rest using server-side encryption (SSE), in addition to being encrypted in transit using HTTPS. For more information, see SNS SSE, Kinesis Data Firehose SSE, and S3 SSE.
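Encryption at rest is configured separately on each service. The sketch below enables it on an existing topic, delivery stream, and bucket; the boto3 clients are passed in so the function can be exercised without AWS credentials. The key choices (the AWS managed SNS key `alias/aws/sns`, an AWS owned key for Kinesis Data Firehose, and SSE-S3 for the bucket) are assumptions; substitute customer managed KMS keys where your compliance rules require them.

```python
def enable_encryption_at_rest(sns, firehose, s3, topic_arn: str,
                              stream_name: str, bucket: str) -> None:
    """Turn on SSE for an SNS topic, a Firehose delivery stream, and an S3 bucket.

    `sns`, `firehose`, and `s3` are boto3 clients, or test doubles exposing
    the same methods. Key choices below are illustrative assumptions.
    """
    # SNS: encrypt stored messages with the AWS managed SNS key.
    sns.set_topic_attributes(
        TopicArn=topic_arn,
        AttributeName="KmsMasterKeyId",
        AttributeValue="alias/aws/sns",
    )
    # Kinesis Data Firehose: server-side encryption with an AWS owned key.
    firehose.start_delivery_stream_encryption(
        DeliveryStreamName=stream_name,
        DeliveryStreamEncryptionConfigurationInput={"KeyType": "AWS_OWNED_CMK"},
    )
    # S3: default bucket encryption with S3-managed keys (SSE-S3).
    s3.put_bucket_encryption(
        Bucket=bucket,
        ServerSideEncryptionConfiguration={
            "Rules": [
                {"ApplyServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}
            ]
        },
    )
```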

Applying SNS message archiving and analytics in a use case

For example, consider an airline ticketing platform that operates in a regulated environment. The compliance framework requires that the company archive all ticket sales for at least 5 years.

The platform is based on an event-driven serverless architecture. It has a ticket seller Lambda function that publishes an event to an SNS topic for every ticket sold. The SNS topic fans out the event to subscribed systems that are interested in processing this type of event. In the preceding diagram, two systems are interested: one focused on payment processing, and another on fraud control. Each subscribed system consists of an SQS queue and an event processing Lambda function that consumes messages from that queue.
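The ticket seller function could publish each sale roughly as follows. The event fields (`ticketId`, `ticketType`, `destination`, `passengerId`), the `{"sales": [...]}` input shape, the `TOPIC_ARN` environment variable, and the `ticket_type` message attribute are all hypothetical; attaching an attribute is what lets subscribers apply a filter policy. The SNS client is injected into `publish_ticket_sale` so the logic runs without AWS credentials.

```python
import json
import os
from typing import Any, Dict


def publish_ticket_sale(sns_client, topic_arn: str, sale: Dict[str, Any]) -> str:
    """Publish one ticket-sale event to the SNS topic and return its MessageId."""
    response = sns_client.publish(
        TopicArn=topic_arn,
        Message=json.dumps(sale),
        MessageAttributes={
            # String attribute that subscription filter policies can match on.
            "ticket_type": {"DataType": "String",
                            "StringValue": sale["ticketType"]},
        },
    )
    return response["MessageId"]


def handler(event, context):
    """Hypothetical Lambda entry point: one SNS message per ticket sold."""
    import boto3  # imported lazily; bundled with the Lambda Python runtime

    sns_client = boto3.client("sns")
    topic_arn = os.environ["TOPIC_ARN"]
    ids = [publish_ticket_sale(sns_client, topic_arn, sale)
           for sale in event["sales"]]
    return {"published": len(ids)}
```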

To meet the compliance goal on data retention, the airline company subscribes a Kinesis Data Firehose delivery stream to their existing SNS topic. They use an S3 bucket as the stream destination. After this, all events published to the SNS topic are archived in the S3 bucket.

The company can then use Athena to query the S3 bucket with standard SQL to run analytics and gain insights on ticket sales. For example, they can query for the most popular flight destinations or the most frequent flyers.
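Athena runs this kind of aggregation in SQL over the archived objects. As a local illustration of the same idea (not a replacement for Athena), the sketch below counts destinations and passengers in the text of one archived object. It assumes one JSON record per line, where each record is either the raw ticket event or an SNS notification envelope whose `Message` field holds that event; the `destination` and `passengerId` field names are hypothetical.

```python
import json
from collections import Counter
from typing import Iterable, Iterator, List, Tuple


def parse_records(lines: Iterable[str]) -> Iterator[dict]:
    """Yield ticket events, unwrapping SNS notification envelopes when present."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        record = json.loads(line)
        if record.get("Type") == "Notification" and "Message" in record:
            record = json.loads(record["Message"])
        yield record


def top_destinations_and_flyers(lines: Iterable[str], n: int = 3
                                ) -> Tuple[List[tuple], List[tuple]]:
    """Return the n most popular destinations and the n most frequent flyers."""
    destinations, flyers = Counter(), Counter()
    for event in parse_records(lines):
        destinations[event["destination"]] += 1
        flyers[event["passengerId"]] += 1
    return destinations.most_common(n), flyers.most_common(n)
```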

Subscribing a Kinesis Data Firehose stream to an SNS topic

You can set up a Kinesis Data Firehose subscription to an SNS topic using the AWS Management Console, the AWS CLI, or the AWS SDKs. You can also use AWS CloudFormation to automate the provisioning of these resources.
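With the AWS SDK for Python (Boto3), the equivalent of a Firehose subscription is a single `Subscribe` call with the `firehose` protocol and a `SubscriptionRoleArn` attribute. The sketch below assumes the topic, delivery stream, and subscription role already exist and that you pass in their ARNs; the client is injected so the request can be inspected without calling AWS.

```python
from typing import Dict


def subscribe_firehose(sns_client, topic_arn: str, stream_arn: str,
                       role_arn: str, raw_delivery: bool = False) -> str:
    """Subscribe a Kinesis Data Firehose delivery stream to an SNS topic.

    `role_arn` must name a role that SNS can assume and that allows
    firehose:PutRecord and firehose:PutRecordBatch on the stream.
    """
    attributes: Dict[str, str] = {"SubscriptionRoleArn": role_arn}
    if raw_delivery:
        # Deliver only the message body instead of the JSON notification envelope.
        attributes["RawMessageDelivery"] = "true"
    response = sns_client.subscribe(
        TopicArn=topic_arn,
        Protocol="firehose",
        Endpoint=stream_arn,
        Attributes=attributes,
        ReturnSubscriptionArn=True,
    )
    return response["SubscriptionArn"]
```

In practice, pass `boto3.client("sns")` as `sns_client`.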

We use CloudFormation for this example. The provided CloudFormation template creates the following resources:

- An SNS topic

- An S3 bucket

- A Kinesis Data Firehose delivery stream

- A Kinesis Data Firehose subscription in SNS

- Two SQS queues, with a queue policy that allows the SNS topic to send messages to them

- Two SQS subscriptions in SNS

- Two IAM roles with access to deliver messages:

  - From SNS to Kinesis Data Firehose

  - From Kinesis Data Firehose to S3

To provision the infrastructure, use the following template:

```yaml
---
AWSTemplateFormatVersion: '2010-09-09'
Description: Template for creating an SNS archiving use case
Resources:
  ticketUploadStream:
    DependsOn:
      - ticketUploadStreamRolePolicy
    Type: AWS::KinesisFirehose::DeliveryStream
    Properties:
      S3DestinationConfiguration:
        BucketARN: !Sub 'arn:${AWS::Partition}:s3:::${ticketArchiveBucket}'
        BufferingHints:
          IntervalInSeconds: 60
          SizeInMBs: 1
        CompressionFormat: UNCOMPRESSED
        RoleARN: !GetAtt ticketUploadStreamRole.Arn
  ticketArchiveBucket:
    Type: AWS::S3::Bucket
  ticketTopic:
    Type: AWS::SNS::Topic
  ticketPaymentQueue:
    Type: AWS::SQS::Queue
  ticketFraudQueue:
    Type: AWS::SQS::Queue
  ticketQueuePolicy:
    Type: AWS::SQS::QueuePolicy
    Properties:
      PolicyDocument:
        Statement:
          Effect: Allow
          Principal:
            Service: sns.amazonaws.com
          Action:
            - sqs:SendMessage
          Resource: '*'
          Condition:
            ArnEquals:
              aws:SourceArn: !Ref ticketTopic
      Queues:
        - !Ref ticketPaymentQueue
        - !Ref ticketFraudQueue
  ticketUploadStreamSubscription:
    Type: AWS::SNS::Subscription
    Properties:
      TopicArn: !Ref ticketTopic
      Endpoint: !GetAtt ticketUploadStream.Arn
      Protocol: firehose
      SubscriptionRoleArn: !GetAtt ticketUploadStreamSubscriptionRole.Arn
  ticketPaymentQueueSubscription:
    Type: AWS::SNS::Subscription
    Properties:
      TopicArn: !Ref ticketTopic
      Endpoint: !GetAtt ticketPaymentQueue.Arn
      Protocol: sqs
  ticketFraudQueueSubscription:
    Type: AWS::SNS::Subscription
    Properties:
      TopicArn: !Ref ticketTopic
      Endpoint: !GetAtt ticketFraudQueue.Arn
      Protocol: sqs
  ticketUploadStreamRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Sid: ''
            Effect: Allow
            Principal:
              Service: firehose.amazonaws.com
            Action: sts:AssumeRole
  ticketUploadStreamRolePolicy:
    Type: AWS::IAM::Policy
    Properties:
      PolicyName: FirehoseticketUploadStreamRolePolicy
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Action:
              - s3:AbortMultipartUpload
              - s3:GetBucketLocation
              - s3:GetObject
              - s3:ListBucket
              - s3:ListBucketMultipartUploads
              - s3:PutObject
            Resource:
              - !Sub 'arn:${AWS::Partition}:s3:::${ticketArchiveBucket}'
              - !Sub 'arn:${AWS::Partition}:s3:::${ticketArchiveBucket}/*'
      Roles:
        - !Ref ticketUploadStreamRole
  ticketUploadStreamSubscriptionRole:
    Type: AWS::IAM::Role
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Principal:
              Service:
                - sns.amazonaws.com
            Action:
              - sts:AssumeRole
      Policies:
        - PolicyName: SNSKinesisFirehoseAccessPolicy
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Action:
                  - firehose:DescribeDeliveryStream
                  - firehose:ListDeliveryStreams
                  - firehose:ListTagsForDeliveryStream
                  - firehose:PutRecord
                  - firehose:PutRecordBatch
                Effect: Allow
                Resource:
                  - !GetAtt ticketUploadStream.Arn
```

To test, publish a message to the SNS topic. After the delivery stream buffer interval of 60 seconds, the message appears in the destination S3 bucket. For information on message formats, see Amazon SNS message formats in Amazon Kinesis Data Firehose destinations.
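The test can be scripted: publish one message, then poll the bucket until the delivery stream flushes it. This sketch assumes you look up the topic ARN and bucket name from the stack resources yourself (the template lets CloudFormation generate both names). Clients and the sleep function are injected so it can run offline, and the 180-second timeout is an arbitrary margin over the 60-second buffer interval.

```python
import json
import time
from typing import Callable


def archived_object_count(s3_client, bucket: str) -> int:
    """Count objects on the first listing page (up to 1,000), enough for a fresh bucket."""
    return s3_client.list_objects_v2(Bucket=bucket).get("KeyCount", 0)


def smoke_test(sns_client, s3_client, topic_arn: str, bucket: str,
               timeout: float = 180.0, poll_every: float = 15.0,
               sleep: Callable[[float], None] = time.sleep) -> bool:
    """Publish a test message and wait until a new object appears in the bucket."""
    before = archived_object_count(s3_client, bucket)
    sns_client.publish(TopicArn=topic_arn, Message=json.dumps({"test": True}))
    waited = 0.0
    while waited < timeout:
        if archived_object_count(s3_client, bucket) > before:
            return True
        sleep(poll_every)
        waited += poll_every
    return False
```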

Cleaning up

After testing, avoid incurring usage charges by deleting the resources you created during the walkthrough. If you used the CloudFormation template, delete all the objects from the S3 bucket before deleting the stack.
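CloudFormation cannot delete a bucket that still contains objects, so the bucket must be emptied first. A minimal sketch with injected boto3 clients: list the objects page by page, delete each page with `delete_objects` (which accepts up to 1,000 keys per request), then delete the stack. The stack name is whatever you chose at creation, and the bucket is assumed to be unversioned, as in the template; a versioned bucket would also need its object versions removed.

```python
def empty_bucket(s3_client, bucket: str) -> int:
    """Delete every object in an unversioned bucket; return how many were deleted."""
    deleted = 0
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        keys = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if keys:
            # A list_objects_v2 page holds at most 1,000 keys, which matches
            # the per-request limit of delete_objects.
            s3_client.delete_objects(
                Bucket=bucket, Delete={"Objects": keys, "Quiet": True})
            deleted += len(keys)
    return deleted


def tear_down(s3_client, cfn_client, bucket: str, stack_name: str) -> None:
    """Empty the archive bucket, then delete the CloudFormation stack."""
    empty_bucket(s3_client, bucket)
    cfn_client.delete_stack(StackName=stack_name)
```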

Conclusion

In this post, we show how SNS delivery to Kinesis Data Firehose enables you to integrate SNS with storage and analytics services. The example subscribes a Kinesis Data Firehose delivery stream to an SNS topic to store SNS messages in an S3 bucket.

You can adapt this configuration for your needs for storage, encryption, data transformation, and data pipeline architecture. For more information, see Fanout to Kinesis Data Firehose streams in the SNS Developer Guide.

For details on pricing, see SNS pricing and Kinesis Data Firehose pricing. For more serverless learning resources, visit Serverless Land.

A set of stable kernels

Securing access to EMR clusters using AWS Systems Manager

Post Syndicated from Sai Sriparasa original https://aws.amazon.com/blogs/big-data/securing-access-to-emr-clusters-using-aws-systems-manager/

Organizations need to keep infrastructure secure while giving engineers the access they need to build applications. Opening inbound SSH ports on instances for engineer access introduces the risk of a malicious entity running unauthorized commands. A common approach is to use a bastion host (jump server) and open inbound SSH ports so that engineers can reach Amazon EMR cluster instances. In this post, we present a more secure way to access an EMR cluster launched in a private subnet that eliminates the need to open inbound ports or use a bastion host.

This post answers the following three questions:

- Why use AWS Systems Manager Session Manager with Amazon EMR?

- Who can use Session Manager?

- How can Session Manager be configured on Amazon EMR?

After answering these questions, we walk you through configuring Amazon EMR with Session Manager and creating an AWS Identity and Access Management (IAM) policy to enable Session Manager capabilities on Amazon EMR. We also walk you through the steps required to configure secure tunneling to access Hadoop application web interfaces such as YARN Resource Manager and Spark Job Server.

Creating an IAM role

AWS Systems Manager provides a unified user interface so you can view and manage your Amazon Elastic Compute Cloud (Amazon EC2) instances. Session Manager provides secure and auditable instance management. Systems Manager integration with IAM provides centralized access control to your EMR cluster. By default, Systems Manager doesn’t have permissions to perform actions on cluster instances. You must grant access by attaching an IAM role to the instances. Before you get started, create an IAM service role for the cluster EC2 instances with a least-privilege access policy.

- Create an IAM service role (Amazon EMR role for Amazon EC2) for cluster EC2 instances and attach the AWS managed Systems Manager core instance (AmazonSSMManagedInstanceCore) policy.

- Create an IAM policy with least privilege to allow the principal to initiate a Session Manager session on Amazon EMR cluster instances:

- Attach the least privilege policy to the IAM principal (role or user).
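As an illustration only, and not the policy from the original post, a least-privilege policy of this kind could be built as in the sketch below. It allows `ssm:StartSession` only on EC2 instances carrying a given resource tag, allows the `AWS-StartPortForwardingSession` document in a separate statement (documents do not carry the instance tag), and lets IAM users resume or terminate only their own sessions. The tag key `EMRClusterAccess` and its value are hypothetical; the sketch assumes the tag is set on the cluster, since EMR propagates cluster tags to the cluster's EC2 instances.

```python
import json
from typing import Any, Dict


def session_manager_policy(tag_key: str, tag_value: str) -> Dict[str, Any]:
    """Build an IAM policy that allows Session Manager access to tagged instances only."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Start sessions only on instances that carry the tag.
                "Effect": "Allow",
                "Action": "ssm:StartSession",
                "Resource": "arn:aws:ec2:*:*:instance/*",
                "Condition": {
                    "StringLike": {f"ssm:resourceTag/{tag_key}": [tag_value]}
                },
            },
            {
                # Allow the port-forwarding session document without a tag condition.
                "Effect": "Allow",
                "Action": "ssm:StartSession",
                "Resource": "arn:aws:ssm:*:*:document/AWS-StartPortForwardingSession",
            },
            {
                # IAM users may resume or end only the sessions they started.
                "Effect": "Allow",
                "Action": ["ssm:ResumeSession", "ssm:TerminateSession"],
                "Resource": "arn:aws:ssm:*:*:session/${aws:username}-*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(session_manager_policy("EMRClusterAccess", "analytics"), indent=2))
```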

How Amazon EMR works with AWS Systems Manager Agent

You can install and configure AWS Systems Manager Agent (SSM Agent) on Amazon EMR cluster nodes using bootstrap actions. SSM Agent makes it possible for Session Manager to update, manage, and configure these resources. Session Manager is available at no additional cost for managing Amazon EC2 instances; for the cost of additional features, see the Systems Manager pricing page. The agent processes requests from the Session Manager service in the AWS Cloud, and then runs them as specified in the user request. You can achieve dynamic port forwarding by installing the Session Manager plugin on a local computer. IAM policies provide centralized access control on the EMR cluster.
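With the Session Manager plugin installed locally, the AWS CLI can also forward one local port to a port on a cluster node. The sketch below builds that command for the YARN ResourceManager web UI, which listens on port 8088 on the primary node by default. It uses the `AWS-StartPortForwardingSession` document, which forwards a single fixed port rather than providing dynamic port forwarding; the instance ID in any call is a placeholder you supply.

```python
import json
import subprocess
from typing import List


def port_forward_command(instance_id: str, remote_port: int,
                         local_port: int) -> List[str]:
    """Build the AWS CLI command that forwards local_port to remote_port on the node."""
    parameters = {
        "portNumber": [str(remote_port)],
        "localPortNumber": [str(local_port)],
    }
    return [
        "aws", "ssm", "start-session",
        "--target", instance_id,
        "--document-name", "AWS-StartPortForwardingSession",
        "--parameters", json.dumps(parameters),
    ]


def open_tunnel(instance_id: str, remote_port: int = 8088,
                local_port: int = 8088) -> None:
    """Run the session in the foreground; then browse http://localhost:<local_port>."""
    subprocess.run(port_forward_command(instance_id, remote_port, local_port),
                   check=True)
```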

The following diagram illustrates, at a high level, how AWS Systems Manager interacts with an EMR cluster.

Configuring SSM Agent on an EMR cluster

To configure SSM Agent on your cluster, complete the following steps: