Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=IZHZbGb3PUA

Packard Bell Corner Computer: One of 1995’s Strangest PCs

Post Syndicated from LGR original https://www.youtube.com/watch?v=f1csOOMXANI

Security updates for Friday

Post Syndicated from original https://lwn.net/Articles/837105/rss

Security updates have been issued by Debian (libproxy, pacemaker, and thunderbird), Fedora (nss), openSUSE (kernel), Oracle (curl, librepo, qt and qt5-qtbase, and tomcat), Red Hat (firefox), SUSE (firefox, java-1_7_0-openjdk, and openldap2), and Ubuntu (apport, libmaxminddb, openjdk-8, openjdk-lts, and slirp).

Medina Modification Center Explosion, November 13, 1963

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=4doGizimjZs

Why Zabbix throttling preprocessing is a key point for high-frequency monitoring

Post Syndicated from Dmitry Lambert original https://blog.zabbix.com/why-zabbix-throttling-preprocessing-is-a-key-point-for-high-frequency-monitoring/12364/

Sometimes we need much more than generic data collected from our servers or network devices. For high-frequency monitoring, we need a way to relieve the core components of excessive load. Throttling does exactly that: it drops repetitive values at the preprocessing level so that only changing values are collected.

Contents

I. High-frequency monitoring (0:33)

1. High-frequency monitoring issues (2:25)

2. Throttling (5:55)

Throttling has been available since Zabbix 4.2 and is highly effective for high-frequency monitoring.

High-frequency monitoring

We have to set update intervals for all of the items we create in Configuration > Host > Items > Create item.

Setting update interval

The smallest update interval for regular items in Zabbix is one second. If we monitor all items, including memory usage, network bandwidth, or CPU load, once per second, this can already be considered a high-frequency interval. However, for industrial equipment or telemetry data, we’ll most likely need the data more often, for instance, every millisecond.

The easiest way to send data every millisecond is to use Zabbix sender — a small utility to send values to the Zabbix server or the proxy. But first, these values should be gathered.

High-frequency monitoring issues

Selecting an update interval for different items

We have to think about performance, as the more data we have, the more performance issues will arise and the more powerful hardware we’ll have to buy.

If the data collected from a host changes constantly, it makes sense to collect it every 10 or 100 milliseconds, for instance. Every time a new value arrives, we have to process it with the triggers, store it in the database, and display it in Latest data.

Other values rarely change, but without throttling we would still collect a new value every millisecond and run it through all our triggers and internal processes, even if the value stays the same for hours.

Throttling

The best way to solve this problem is throttling.

To illustrate it, in Configuration > Hosts, let’s create a ‘Throttling’ host and add it to a group.

Creating host

Then we’ll create an item to work as a Zabbix sender item.

Creating Zabbix sender item

NOTE. For a Zabbix sender item, the Type should always be ‘Zabbix trapper’.

Then open the CLI and reload the config cache:

zabbix_server -R config_cache_reload

Now we can send values with Zabbix sender, specifying the IP address of the Zabbix server, the hostname (which is case-sensitive), the key, and the value, here 1:

zabbix_sender -z 127.0.0.1 -s Throttling -k youtube -o 1

If we send the value “1” several times, every one of those values will be displayed in Monitoring > Latest data.
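To repeat that command from a script, for example to push many readings in a row, the call can be wrapped in a few lines of Python. This is a minimal sketch, assuming `zabbix_sender` is on the PATH; the helper names `sender_command` and `send` are hypothetical, and only the `-z`, `-s`, `-k`, and `-o` options shown above are used.

```python
import shlex
import subprocess


def sender_command(server, host, key, value):
    """Build the zabbix_sender argument list used in the example above."""
    return ["zabbix_sender", "-z", server, "-s", host, "-k", key, "-o", str(value)]


def send(server, host, key, value):
    """Run zabbix_sender and return its exit code (requires the binary to be installed)."""
    return subprocess.run(sender_command(server, host, key, value), check=False).returncode


# Print the command line that would be executed for the walkthrough's item.
cmd = sender_command("127.0.0.1", "Throttling", "youtube", 1)
print(shlex.join(cmd))
```

Calling `send(...)` in a loop reproduces the "spamming" scenario that throttling is meant to absorb.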

Displaying the values grabbed from the host

NOTE. It’s possible to filter the Latest data to display only the needed host and set a sufficient range of the last values to be displayed.

Sending values this way spams the Zabbix server. To avoid that, we can add throttling to our item in the Pre-processing tab in Configuration > Hosts.

NOTE. There are no other parameters to configure besides this Pre-processing step from the throttling menu.

Discard unchanged

Discard unchanged throttling option

With the ‘Discard unchanged’ throttling option, only new values will be processed by the server, while identical values will be ignored.

Throttling ignores identical values

Discard unchanged with a heartbeat

If we change the pre-processing step for our item in the Pre-processing tab in Configuration > Hosts to ‘Discard unchanged with a heartbeat’, we have one additional parameter to specify: the interval at which values are still passed on even if they are identical.

Discard unchanged with a heartbeat

So, if we specify 120 seconds, then in Monitoring > Latest data, we’ll get the values once per 120 seconds even if they are identical.

Displaying identical values with an interval

This throttling option is useful when we have nodata() triggers. With the Discard unchanged option, identical values are dropped, so a nodata() trigger will fire even though the item is still reporting. With Discard unchanged with a heartbeat, identical values are still stored once per heartbeat interval, so the trigger won’t fire.

In simpler words, the ‘Discard unchanged’ option drops all identical values, while ‘Discard unchanged with a heartbeat’ still passes identical values through at the specified interval.
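The difference between the two options can be sketched in a few lines of Python. This is an illustration of the behavior described above, not Zabbix's actual implementation; the `Throttle` class and `kept_timestamps` helper are hypothetical names, and timestamps are plain seconds.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Throttle:
    """Sketch of 'Discard unchanged', optionally with a heartbeat in seconds."""
    heartbeat: Optional[float] = None   # None means plain 'Discard unchanged'
    _last_value: Optional[str] = None
    _last_kept_at: Optional[float] = None

    def accept(self, value: str, now: float) -> bool:
        """Return True if the value is passed on, False if it is discarded."""
        changed = self._last_kept_at is None or value != self._last_value
        heartbeat_due = (
            self.heartbeat is not None
            and self._last_kept_at is not None
            and now - self._last_kept_at >= self.heartbeat
        )
        if changed or heartbeat_due:
            self._last_value = value
            self._last_kept_at = now
            return True
        return False


def kept_timestamps(throttle, samples):
    """Return the timestamps of the (timestamp, value) samples that survive throttling."""
    return [t for t, v in samples if throttle.accept(v, t)]


samples = [(0, "1"), (60, "1"), (120, "1"), (130, "2"), (200, "2"), (250, "2")]
print(kept_timestamps(Throttle(), samples))               # [0, 130]
print(kept_timestamps(Throttle(heartbeat=120), samples))  # [0, 120, 130, 250]
```

With no heartbeat, only the first value and the change to "2" are kept. With a 120-second heartbeat, an identical value is also kept whenever 120 seconds have passed since the last kept value, which is what keeps a nodata() trigger quiet.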

—

Watch the video.

New Zealand Election Fraud

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2020/11/new-zealand-election-fraud.html

It seems that this election season has not gone without fraud. In New Zealand, a vote for “Bird of the Year” has been marred by fraudulent votes:

More than 1,500 fraudulent votes were cast in the early hours of Monday in the country’s annual bird election, briefly pushing the Little-Spotted Kiwi to the top of the leaderboard, organizers and environmental organization Forest & Bird announced Tuesday.

Those votes — which were discovered by the election’s official scrutineers — have since been removed. According to election spokesperson Laura Keown, the votes were cast using fake email addresses that were all traced back to the same IP address in Auckland, New Zealand’s most populous city.

It feels like writing this story was a welcome distraction from writing about the US election:

“No one has to worry about the integrity of our bird election,” she told Radio New Zealand, adding that every vote would be counted.

Asked whether Russia had been involved, she denied any “overseas interference” in the vote.

I’m sure that’s a relief to everyone involved.

Automated Origin CA for Kubernetes

Post Syndicated from Terin Stock original https://blog.cloudflare.com/automated-origin-ca-for-kubernetes/

In 2016, we launched the Cloudflare Origin CA, a certificate authority optimized for making it easy to secure the connection between Cloudflare and an origin server. Running our own CA has allowed us to support fast issuance and renewal, simple and effective revocation, and wildcard certificates for our users.

Out of the box, managing TLS certificates and keys within Kubernetes can be challenging and error-prone. The secret resources have to be constructed correctly, as components expect secrets with specific fields. Some forms of domain verification require manually rotating secrets to pass. Once you’re successful, don’t forget to renew before the certificate expires!

cert-manager is a project to fill this operational gap, providing Kubernetes resources that manage the lifecycle of a certificate. Today we’re releasing origin-ca-issuer, an extension to cert-manager integrating with Cloudflare Origin CA to easily create and renew certificates for your account’s domains.

Origin CA Integration

Creating an Issuer

After installing cert-manager and origin-ca-issuer, you can create an OriginIssuer resource. This resource creates a binding between cert-manager and the Cloudflare API for an account. Different issuers may be connected to different Cloudflare accounts in the same Kubernetes cluster.

```yaml
apiVersion: cert-manager.k8s.cloudflare.com/v1
kind: OriginIssuer
metadata:
  name: prod-issuer
  namespace: default
spec:
  signatureType: OriginECC
  auth:
    serviceKeyRef:
      name: service-key
      key: key
```

This creates a new OriginIssuer named “prod-issuer” that issues certificates using ECDSA signatures, and the secret “service-key” in the same namespace is used to authenticate to the Cloudflare API.

Signing an Origin CA Certificate

After creating an OriginIssuer, we can now create a Certificate with cert-manager. This defines the domains, including wildcards, that the certificate should be issued for, how long the certificate should be valid, and when cert-manager should renew the certificate.

```yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: example-com
  namespace: default
spec:
  # The secret name where cert-manager
  # should store the signed certificate.
  secretName: example-com-tls
  dnsNames:
    - example.com
  # Duration of the certificate.
  duration: 168h
  # Renew a day before the certificate expiration.
  renewBefore: 24h
  # Reference the Origin CA Issuer you created above,
  # which must be in the same namespace.
  issuerRef:
    group: cert-manager.k8s.cloudflare.com
    kind: OriginIssuer
    name: prod-issuer
```

Once created, cert-manager begins managing the lifecycle of this certificate, including creating the key material, crafting a certificate signature request (CSR), and constructing a certificate request that will be processed by the origin-ca-issuer.

When signed by the Cloudflare API, the certificate will be made available, along with the private key, in the Kubernetes secret specified within the secretName field. You’ll be able to use this certificate on servers proxied behind Cloudflare.

Extra: Ingress Support

If you’re using an Ingress controller, you can use cert-manager’s Ingress support to automatically manage Certificate resources based on your Ingress resource.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    cert-manager.io/issuer: prod-issuer
    cert-manager.io/issuer-kind: OriginIssuer
    cert-manager.io/issuer-group: cert-manager.k8s.cloudflare.com
  name: example
  namespace: default
spec:
  rules:
    - host: example.com
      http:
        paths:
          - backend:
              service:
                name: examplesvc
                port:
                  number: 80
            path: /
            pathType: Prefix
  tls:
    # specifying a host in the TLS section will tell cert-manager
    # what DNS SANs should be on the created certificate.
    - hosts:
        - example.com
      # cert-manager will create this secret
      secretName: example-tls
```

Building an External cert-manager Issuer

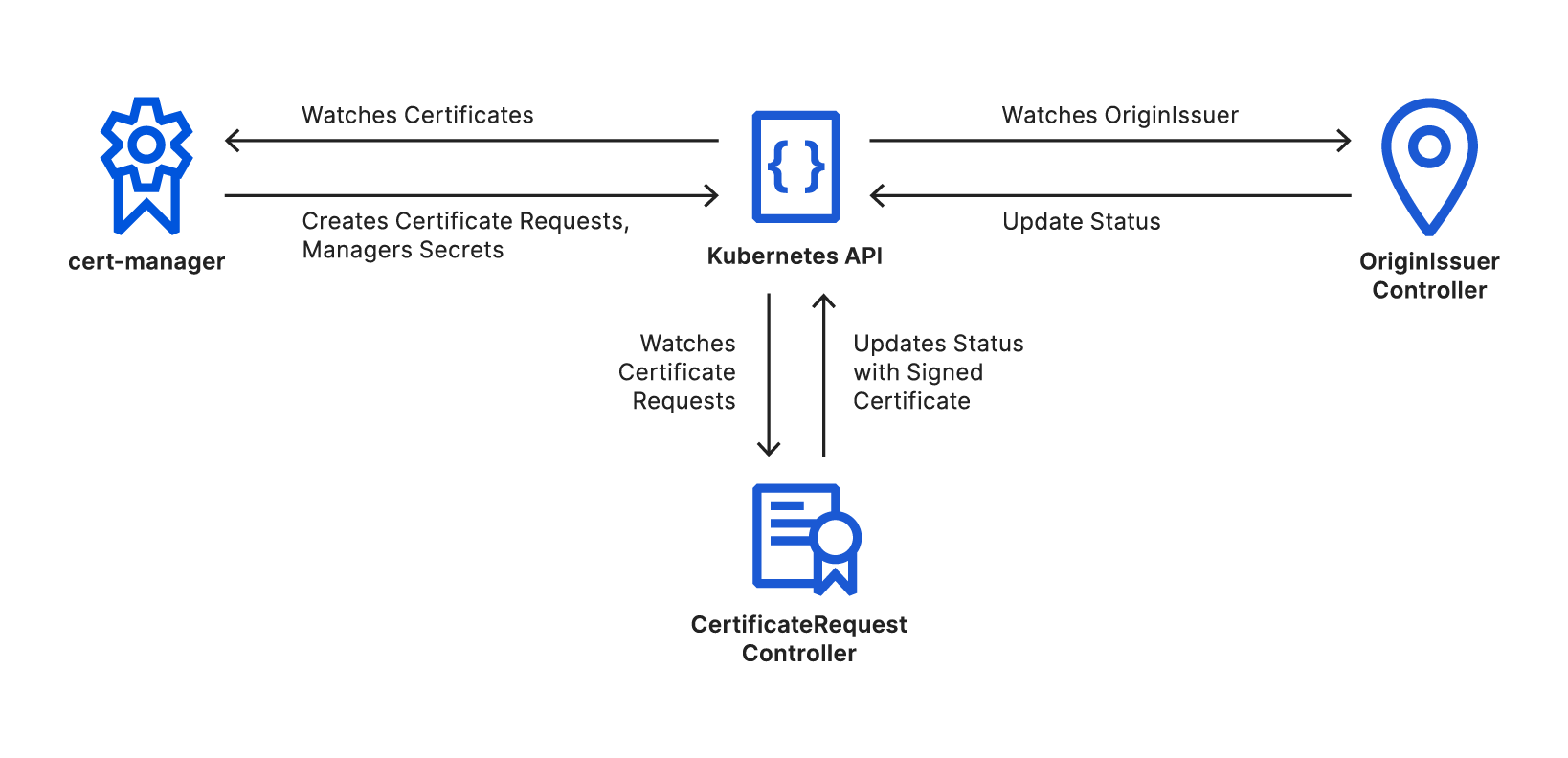

An external cert-manager issuer is a specialized Kubernetes controller. There’s no direct communication between cert-manager and external issuers at all; this means that you can use any existing tools and best practices for developing controllers to develop an external issuer.

We’ve decided to use the excellent controller-runtime project to build origin-ca-issuer, running two reconciliation controllers.

OriginIssuer Controller

The OriginIssuer controller watches for creation and modification of OriginIssuer custom resources. The controller creates a Cloudflare API client using the details and credentials referenced. This API client instance will later be used to sign certificates through the API. The controller will periodically retry creating the API client; once it is successful, it updates the OriginIssuer’s status to be ready.

CertificateRequest Controller

The CertificateRequest controller watches for the creation and modification of cert-manager’s CertificateRequest resources. These resources are created automatically by cert-manager as needed during a certificate’s lifecycle.

The controller looks for CertificateRequests that reference a known OriginIssuer (this reference is copied by cert-manager from the originating Certificate resource) and ignores all resources that do not match. The controller then verifies that the OriginIssuer is in the ready state before transforming the certificate request into an API request using the previously created client.

On a successful response, the signed certificate is added to the certificate request, which cert-manager will use to create or update the secret resource. On an unsuccessful request, the controller will periodically retry.
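The decision flow of the CertificateRequest controller can be sketched as follows. This is a simplified illustration in Python, not the origin-ca-issuer code (which is built on controller-runtime); the `IssuerRef`, `CertificateRequest`, `Issuer`, and `reconcile` names are hypothetical, and `sign` stands in for the Cloudflare API client.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple

GROUP = "cert-manager.k8s.cloudflare.com"
KIND = "OriginIssuer"


@dataclass
class IssuerRef:
    group: str
    kind: str
    name: str


@dataclass
class CertificateRequest:
    namespace: str
    issuer_ref: IssuerRef
    csr: str
    certificate: Optional[str] = None


@dataclass
class Issuer:
    ready: bool
    sign: Callable[[str], str]  # stand-in for the API client created earlier


def reconcile(req: CertificateRequest,
              issuers: Dict[Tuple[str, str], Issuer]) -> str:
    """Return 'ignored', 'retry', or 'signed' for one certificate request."""
    ref = req.issuer_ref
    if ref.group != GROUP or ref.kind != KIND:
        return "ignored"              # belongs to some other issuer
    issuer = issuers.get((req.namespace, ref.name))
    if issuer is None or not issuer.ready:
        return "retry"                # issuer unknown or not ready yet
    try:
        req.certificate = issuer.sign(req.csr)
    except Exception:
        return "retry"                # unsuccessful request: try again later
    return "signed"


issuers = {("default", "prod-issuer"): Issuer(ready=True, sign=lambda csr: "CERT(" + csr + ")")}
request = CertificateRequest("default", IssuerRef(GROUP, KIND, "prod-issuer"), "csr-bytes")
print(reconcile(request, issuers), request.certificate)
```

Requests that reference a different issuer group or kind are left untouched, which is what lets several issuers coexist in one cluster.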

Learn More

Up-to-date documentation and complete installation instructions can be found in our GitHub repository. Feedback and contributions are greatly appreciated. If you’re interested in Kubernetes at Cloudflare, including building controllers like these, we’re hiring.

PRIMM: encouraging talk in programming lessons

Post Syndicated from Oliver Quinlan original https://www.raspberrypi.org/blog/primm-talk-in-programming-lessons-research-seminar/

Whenever you learn a new subject or skill, at some point you need to pick up the particular language that goes with that domain. And the only way to really feel comfortable with this language is to practice using it. It’s exactly the same when learning programming.

In our latest research seminar, we focused on how we educators and our students can talk about programming. The seminar presentation was given by our Chief Learning Officer, Dr Sue Sentance. She shared the work she and her collaborators have done to develop a research-based approach to teaching programming called PRIMM, and to work with teachers to investigate the effects of PRIMM on students.

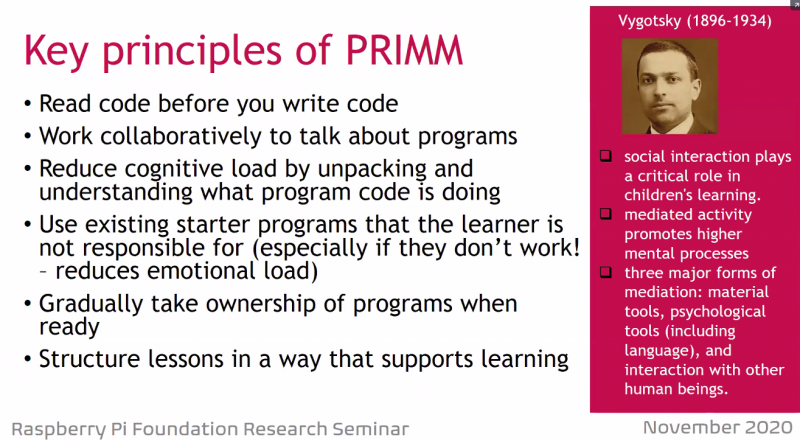

As well as providing a structure for programming lessons, Sue’s research on PRIMM helps us think about ways in which learners can investigate programs, start to understand how they work, and then gradually develop the language to talk about them themselves.

Productive talk for education

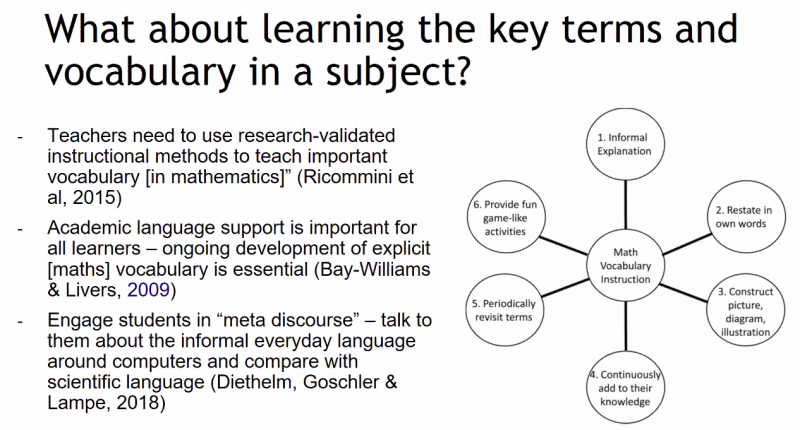

Sue began by taking us through the rich history of educational research into language and dialogue. This work has been heavily developed in science and mathematics education, as well as language and literacy.

In particular the work of Neil Mercer and colleagues has shown that students need guidance to develop and practice using language to reason, and that developing high-quality language improves understanding. The role of the teacher in this language development is vital.

Sue’s work draws on these insights to consider how language can be used to develop understanding in programming.

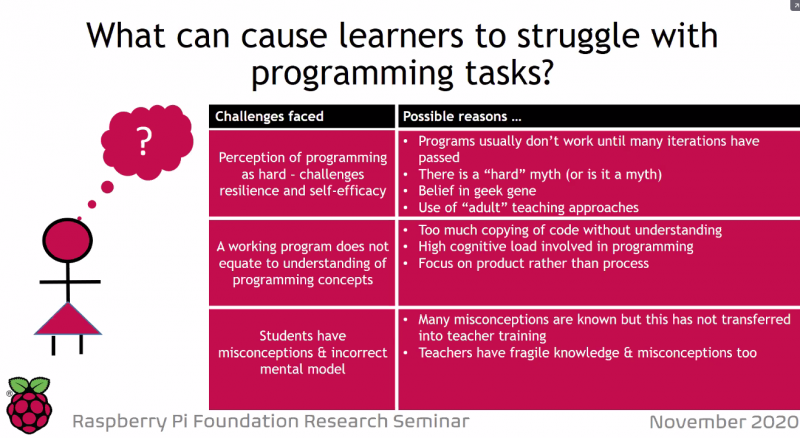

Why is programming challenging for beginners?

Sue identified shortcomings of some teaching approaches that are common in the computing classroom but may not be suitable for all beginners.

- ‘Copy code’ activities for learners take a long time, lead to dreaded syntax errors, and don’t necessarily build more understanding.

- When teachers model the process of writing a program, this can be very helpful, but for beginners there may still be a huge jump from being able to follow the modeling to being able to write a program from scratch themselves.

PRIMM was designed by Sue and her collaborators as a language-first approach where students begin not by writing code, but by reading it.

What is PRIMM?

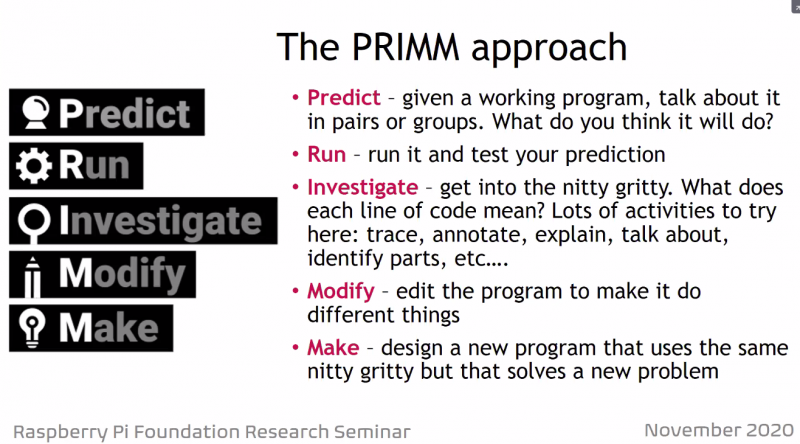

PRIMM stands for ‘Predict, Run, Investigate, Modify, Make’. In this approach, rather than copying code or writing programs from scratch, beginners instead start by focussing on reading working code.

In the Predict stage, the teacher provides learners with example code to read, discuss, and make output predictions about. Next, they run the code to see how the output compares to what they predicted. In the Investigate stage, the teacher sets activities for the learners to trace, annotate, explain, and talk about the code line by line, in order to help them understand what it does in detail.

In the seminar, Sue took us through a mini example of the stages of PRIMM where we predicted the output of Python Turtle code. You can follow along on the recording of the seminar to get the experience of what it feels like to work through this approach.

The impact of PRIMM on learning

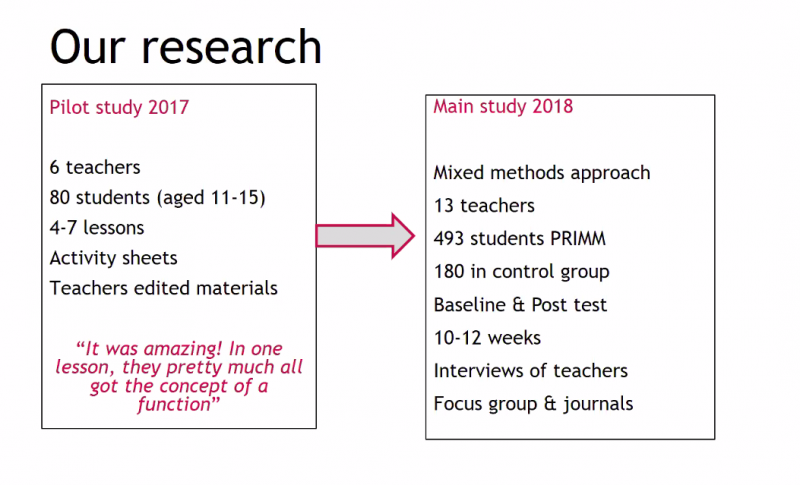

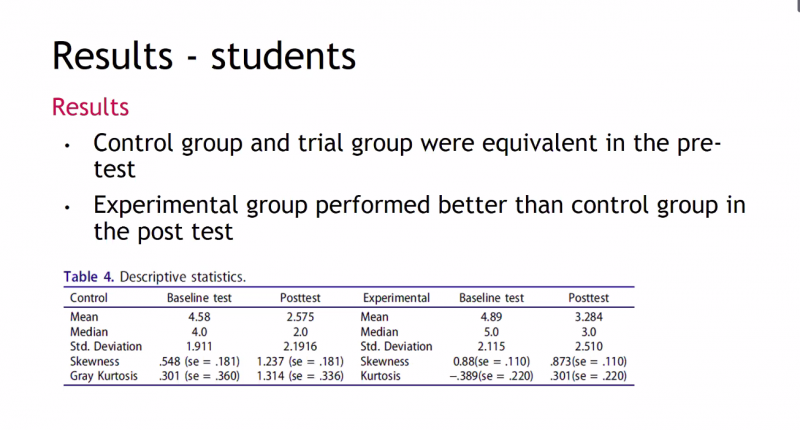

The PRIMM approach is informed by research, and it is also the subject of research by Sue and her collaborators. They’ve conducted two studies to measure the effectiveness of PRIMM: an initial pilot, and a larger mixed-methods study with 13 teachers and 493 students with a control group.

The larger study used a pre and post test, and found that the group who experienced a PRIMM approach performed better on the tests than the control group. The researchers also collected a wealth of qualitative feedback from teachers. The feedback suggested that the approach can help students to develop a language to express their understanding of programming, and that there was much more productive peer conversation in the PRIMM lessons (sometimes this meant less talk, but at a more advanced level).

The PRIMM structure also gave some teachers a greater capacity to talk about the process of teaching programming. It facilitated the discussion of teaching ideas and learning approaches for the teachers, as well as developing language approaches that students used to learn programming concepts.

The research results suggest that learners taught using PRIMM appear to be developing the language skills to talk coherently about their programming. The effectiveness of PRIMM is also evidenced by the number of teachers who have taken up the approach, building in their own activities and in some cases remixing the PRIMM terminology to develop their own take on a language-first approach to teaching programming.

Future research will investigate in detail how PRIMM encourages productive talk in the classroom, and will link the approach to other work on semantic waves. (For more on semantic waves in computing education, see this seminar by Jane Waite and this symposium talk by Paul Curzon.)

Resources for educators who want to try PRIMM

If you would like to try out PRIMM with your learners, use our free support materials:

- A brief introduction to using PRIMM to structure programming lessons

- Sue shares more about the Investigate stage of PRIMM in issue 14 of Hello World magazine

- Official website for PRIMM

- Online course: Programming Pedagogy in Primary Schools

- Online course: Programming Pedagogy in Secondary Schools

- The Programming units in the Teach Computing Curriculum we developed help you make use of PRIMM

Join our next seminar

If you missed the seminar, you can find the presentation slides alongside the recording of Sue’s talk on our seminars page.

In our next seminar, on Tuesday 1 December at 17:00–18:30 GMT / 12:00–13:30 EST / 9:00–10:30 PT / 18:00–19:30 CET, Dr David Weintrop from the University of Maryland will be presenting on the role of block-based programming in computer science education. To join, simply sign up with your name and email address.

Once you’ve signed up, we’ll email you the seminar meeting link and instructions for joining. If you attended this past seminar, the link remains the same.


Comic for 2020.11.13

Post Syndicated from Explosm.net original http://explosm.net/comics/5714/

New Cyanide and Happiness Comic

Unknown Soldiers

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=1yBiin-kTKE

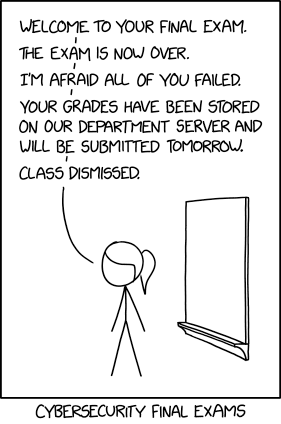

Final Exam

Post Syndicated from original https://xkcd.com/2385/

Migrating from Vertica to Amazon Redshift

Post Syndicated from Seetha Sarma original https://aws.amazon.com/blogs/big-data/migrating-from-vertica-to-amazon-redshift/

Amazon Redshift powers analytical workloads for Fortune 500 companies, startups, and everything in between. With Amazon Redshift, you can query petabytes of structured and semi-structured data across your data warehouse, operational database, and your data lake using standard SQL.

When you use Vertica, you have to install and upgrade Vertica database software and manage the cluster OS and hardware. Amazon Redshift is a fully managed cloud solution; you don’t have to install and upgrade database software and manage the OS and the hardware. In this post, we discuss the best practices for migrating from a self-managed Vertica cluster to the fully managed Amazon Redshift solution. We discuss how to plan for the migration, including sizing your Amazon Redshift cluster and strategies for data placement. We look at the tools for schema conversion and see how to choose the right keys for distributing and sorting your data. We also see how to speed up the data migration to Amazon Redshift based on your data size and network connectivity. Finally, we cover how cluster management on Amazon Redshift differs from Vertica.

Migration planning

When planning your migration, start with where you want to place the data. Your business use case drives what data gets loaded to Amazon Redshift and what data remains on the data lake. In this section, we discuss how to size the Amazon Redshift cluster based on the size of the Vertica dataset that you’re moving to Amazon Redshift. We also look at the Vertica schema and decide the best data distribution and sorting strategies to use for Amazon Redshift, if you choose to do it manually.

Data placement

Amazon Redshift powers the lake house architecture, which enables you to query data across your data warehouse, data lake, and operational databases to gain faster and deeper insights not possible otherwise. In a Vertica data warehouse, you plan the capacity for all your data, whereas with Amazon Redshift, you can plan your data warehouse capacity much more efficiently. If you have a huge historical dataset being shared by multiple compute platforms, then it’s a good candidate to keep on Amazon Simple Storage Service (Amazon S3) and utilize Amazon Redshift Spectrum. Also, streaming data coming from Kafka and Amazon Kinesis Data Streams can add new files to an existing external table by writing to Amazon S3 with no resource impact to Amazon Redshift. This has a positive impact on concurrency. Amazon Redshift Spectrum is good for heavy scan and aggregate work. For tables that are frequently accessed from a business intelligence (BI) reporting or dashboarding interface and for tables frequently joined with other Amazon Redshift tables, it’s optimal to have tables loaded in Amazon Redshift.

Vertica has Flex tables to handle JSON data. You don’t need to load the JSON data to Amazon Redshift. You can use external tables to query JSON data stored on Amazon S3 directly from Amazon Redshift. You create external tables in Amazon Redshift within an external schema.

Vertica users typically create a projection on a Vertica table to optimize for a particular query. If necessary, use materialized views in Amazon Redshift. Vertica also has aggregate projection, which acts like a synchronized materialized view. With materialized views in Amazon Redshift, you can store the pre-computed results of queries and efficiently maintain them by incrementally processing the latest changes made to the source tables. Subsequent queries referencing the materialized views use the pre-computed results to run much faster. You can create materialized views based on one or more source tables using filters, inner joins, aggregations, grouping, functions, and other SQL constructs.

Cluster sizing

When you create a cluster on the Amazon Redshift console, you can get a recommendation of your cluster configuration based on the size of your data and query characteristics (see the following screenshot).

Amazon Redshift offers different node types to accommodate your workloads. We recommend using RA3 nodes so you can size compute and storage independently to achieve improved price and performance. Amazon Redshift takes advantage of optimizations such as data block temperature, data block age, and workload patterns to optimize performance and manage automatic data placement across tiers of storage in the RA3 clusters.

ETL pipelines and BI reports typically use temporary tables that are only valid for a session. Vertica has local and global temporary tables. If you’re using Vertica local temporary tables, no change is required during migration. Vertica local tables and Amazon Redshift temporary tables have similar behavior. They’re visible only to the session and get dropped when the session ends. Vertica global tables persist across sessions until they are explicitly dropped. If you use them now, you have to change them to permanent tables in Amazon Redshift and drop them when they’re no longer needed.

Data distribution, sorting, and compression

Amazon Redshift optimizes for performance by distributing the data across compute nodes and sorting the data. Make sure to set the sort key, distribution style, and compression encoding of the tables to take full advantage of the massively parallel processing (MPP) capabilities. The choice of distribution style and sort keys varies based on the data model and access patterns. Use the data distribution and column order of the Vertica tables to help choose the distribution keys and sort keys on Amazon Redshift.

Distribution keys

Choose a column with high cardinality and evenly distributed values as the distribution key. Profile the data for the columns used for distribution keys. Vertica has segmentation that specifies how to distribute data for superprojections of a table, where the data to be hashed consists of one or more column values. The columns used in segmentation are most likely good candidates for distribution keys on Amazon Redshift. If you have multiple columns in segmentation, pick the column that provides the highest cardinality to reduce the possibility of high data skew.
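Profiling a candidate column can be as simple as counting distinct values and checking how evenly a hash of the values spreads across slices. The following is a rough sketch in Python on an in-memory sample; `profile_column` is a hypothetical helper, CRC32 is only a stand-in for whatever hash the database uses, and the slice count is an arbitrary assumption.

```python
import zlib
from collections import Counter


def profile_column(values, slices=4):
    """Report distinct count and skew (largest slice / average slice) for a column sample."""
    per_slice = Counter(zlib.crc32(str(v).encode()) % slices for v in values)
    counts = [per_slice.get(i, 0) for i in range(slices)]
    mean = len(values) / slices
    return {
        "distinct": len(set(values)),
        "rows_per_slice": counts,
        "skew": max(counts) / mean if mean else 0.0,
    }


# A low-cardinality column concentrates rows on very few slices...
print(profile_column(["US"] * 90 + ["NZ"] * 10))
# ...while a high-cardinality column has many more distinct hash inputs to spread.
print(profile_column(list(range(1000))))
```

A skew close to 1.0 means rows are spread evenly; a value near the slice count means most rows land on a single slice.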

Besides supporting data distribution by key, Amazon Redshift also supports other distribution styles: ALL, EVEN, and AUTO. Use ALL distribution for small dimension tables and EVEN distribution for larger tables, or use AUTO distribution, where Amazon Redshift changes the distribution style from ALL to EVEN as the table size reaches a threshold.

Sort keys

Amazon Redshift stores your data on disk in sorted order using the sort key. The Amazon Redshift query optimizer uses the sort order for optimal query plans. Review whether one of the raw columns used in the Vertica table’s Order By clause is the best column to use as the sort key in the Amazon Redshift table.

The Order By fields in Vertica superprojections are good candidates for a sort key in Amazon Redshift, but the design criteria for sort order in Amazon Redshift differ from those in Vertica. In a Vertica projection’s Order By clause, you place the low-cardinality columns that are likely to use RLE encoding before the high-cardinality columns. In Amazon Redshift, you can set the SORTKEY to AUTO, choose a single column as the SORTKEY, or define a compound sort key. You define compound sort keys using multiple columns, starting with the most frequently used column. All the columns in the compound sort key are used, in the order in which they are listed, to sort the data. You can use a compound sort key when query predicates use a subset of the sort key columns in order. Amazon Redshift stores the table rows on disk in sorted order and uses metadata, called a zone map, to track the minimum and maximum values for each 1 MB block. Amazon Redshift uses the zone map and the sort key to filter out blocks, thereby reducing the scanning cost to efficiently handle range-restricted predicates.
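To see why sorted data and zone maps cut scanning cost, consider this toy model in Python. It is a conceptual sketch only: blocks hold a fixed number of values rather than 1 MB of data, and `build_zone_map` and `blocks_to_scan` are hypothetical names.

```python
def build_zone_map(values, block_size):
    """Split values into fixed-size blocks and record (min, max) per block."""
    blocks = [values[i:i + block_size] for i in range(0, len(values), block_size)]
    return [(min(b), max(b)) for b in blocks]


def blocks_to_scan(zone_map, low, high):
    """Return indexes of blocks whose [min, max] range overlaps the predicate [low, high]."""
    return [i for i, (mn, mx) in enumerate(zone_map) if mx >= low and mn <= high]


sorted_values = list(range(100))            # table sorted on the sort key
zone_map = build_zone_map(sorted_values, 10)
print(blocks_to_scan(zone_map, 25, 42))     # [2, 3, 4]
```

For a range predicate of 25 to 42, only three of the ten blocks overlap the range, so the other seven are skipped without being read. If the same values were stored unsorted, most blocks would span a wide min/max range and few could be skipped.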

Profile the data for the columns used for sort keys. Make sure the first column of the sort key is not encoded. Choose timestamp columns or columns used in frequent range filtering, equality filtering, or joins as sort keys in Amazon Redshift.

Encoding

You don’t always have to select compression encodings; Amazon Redshift automatically assigns RAW compression for columns that are defined as sort keys, AZ64 compression for the numeric and timestamp columns, and LZO compression for the VARCHAR columns. When you select compression encodings manually, choose AZ64 for numeric and date/time data stored in Amazon Redshift. AZ64 encoding has consistently better performance and compression than LZO. Its compression is comparable to ZSTD, with much better performance.
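The default assignment described above can be written down as a small lookup, which is handy when reviewing converted DDL. This Python sketch only encodes the three rules stated in this section; `default_encoding` is a hypothetical helper, and types the text doesn't mention return `None` rather than a guess.

```python
# Numeric and date/time types covered by the AZ64 rule in this sketch.
AZ64_TYPES = {"SMALLINT", "INTEGER", "BIGINT", "DECIMAL", "DATE", "TIMESTAMP", "TIMESTAMPTZ"}


def default_encoding(column_type, is_sort_key=False):
    """Return the encoding the text says is assigned by default, or None if not covered."""
    base = column_type.upper().split("(")[0].strip()  # "DECIMAL(10,2)" -> "DECIMAL"
    if is_sort_key:
        return "RAW"
    if base in AZ64_TYPES:
        return "AZ64"
    if base == "VARCHAR":
        return "LZO"
    return None


for col_type, sort_key in [("timestamp", True), ("bigint", False), ("varchar(64)", False)]:
    print(col_type, sort_key, default_encoding(col_type, sort_key))
```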

Tooling

After we decide the data placement, cluster size, distribution keys, and sort keys, the next step is to look at the tooling for schema conversion and data migration.

You can use AWS Schema Conversion Tool (AWS SCT) to convert your schema, which can automate about 80% of the conversion, including the conversion of DISTKEY and SORTKEY, or you can choose to convert the Vertica DDLs to Amazon Redshift manually.

To efficiently migrate your data, choose the right tools for your data size. If you have a dataset that is smaller than a couple of terabytes, you can migrate your data using AWS Database Migration Service (AWS DMS) or AWS SCT data extraction agents. When you have more than a few terabytes of data, your tool choice depends on your network connectivity. When there is no dedicated network connection, you can run the AWS SCT data extraction agents to copy the data to AWS Snowball Edge and ship the device back to AWS to complete the data export to Amazon S3. If you have a dedicated network connection to AWS, you can run the S3EXPORT or S3EXPORT_PARTITION commands available in Vertica 9.x directly from the Vertica nodes to copy the data in parallel to the S3 bucket.
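The decision described above fits in a few lines of Python. This is only a sketch of the rule of thumb: `choose_migration_tool` is a hypothetical helper, and the 2 TB default for "a couple of terabytes" is an assumption you should adjust to your own situation.

```python
def choose_migration_tool(size_tb, dedicated_network, small_threshold_tb=2.0):
    """Map dataset size and network connectivity to the tooling suggested in the text."""
    if size_tb < small_threshold_tb:
        return "AWS DMS or AWS SCT data extraction agents"
    if dedicated_network:
        return "Vertica S3EXPORT / S3EXPORT_PARTITION to Amazon S3"
    return "AWS SCT data extraction agents with AWS Snowball Edge"


print(choose_migration_tool(0.5, dedicated_network=False))
print(choose_migration_tool(50, dedicated_network=True))
print(choose_migration_tool(50, dedicated_network=False))
```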

The following diagram visualizes the migration process.

Schema conversion

AWS SCT uses an extension pack schema to implement system functions of the source database that are required when writing your converted schema to your target database instance. Review the database migration assessment report for compatibility. AWS SCT can use source metadata and statistical information to determine the distribution key and sort key. AWS SCT adds a sort key in the Amazon Redshift table for the raw column used in the Vertica table’s Order By clause.

The following code is an example of Vertica CREATE TABLE and CREATE PROJECTION statements:

The following code is the corresponding Amazon Redshift CREATE TABLE statement:

Data migration

To significantly reduce the data migration time from large Vertica clusters (if you have a dedicated network connection from your premises to AWS with good bandwidth), run the S3EXPORT or S3EXPORT_PARTITION function in Vertica 9.x, which exports the data in parallel from the Vertica nodes directly to Amazon S3.

The Parquet files generated by S3EXPORT don’t have any partition key on them, because partitioning consumes time and resources on the database where the S3EXPORT runs, which is typically the Vertica production database. The following code is one command you can use:

The following code is another command option:

Performance

In this section, we look at best practices for ETL performance while copying the data from Amazon S3 to Amazon Redshift. We also discuss how to handle Vertica partition swapping and partition dropping scenarios in Amazon Redshift.

Copying using an Amazon S3 prefix

Make sure the ETL process is running from Amazon Elastic Compute Cloud (Amazon EC2) servers or other managed services within AWS. Exporting your data from Vertica as multiple files to Amazon S3 gives you the option to load your data in parallel to Amazon Redshift. While converting the Vertica ETL scripts, use the COPY command with an Amazon S3 object prefix to load an Amazon Redshift table in parallel from data files stored under that prefix on Amazon S3. See the following code:
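As a minimal sketch of such a load, the Python helper below assembles a COPY statement that targets an S3 prefix rather than a single file. The table name, bucket, prefix, and IAM role ARN are placeholders, `copy_from_prefix` is a hypothetical helper, and the Parquet format is an assumption about how the data was exported.

```python
def copy_from_prefix(table, s3_prefix, iam_role_arn):
    """Build a COPY statement that loads every file under an S3 prefix in parallel."""
    return (
        f"COPY {table}\n"
        f"FROM '{s3_prefix}'\n"
        f"IAM_ROLE '{iam_role_arn}'\n"
        f"FORMAT AS PARQUET;"
    )


# Placeholder names for illustration only.
print(copy_from_prefix(
    "public.sales",
    "s3://my-export-bucket/vertica/sales/",
    "arn:aws:iam::123456789012:role/MyRedshiftRole",
))
```

Because the FROM clause names a prefix, every exported file under it is picked up in one command, which is what allows the load to run in parallel.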

Loading data using Amazon Redshift Spectrum queries

When you want to transform the exported Vertica data before loading to Amazon Redshift, or when you want to load only a subset of data into Amazon Redshift, use an Amazon Redshift Spectrum query. Create an external table in Amazon Redshift pointing to the exported Vertica data stored in Amazon S3 within an external schema. Put your transformation logic in a SELECT query, and ingest the result into Amazon Redshift using a CREATE TABLE or SELECT INTO statement:

Handling Vertica partitions

Vertica has partitions, and the data loads use partition swapping and partition dropping. In Amazon Redshift, you can use the sort key, a staging table, and ALTER TABLE APPEND to achieve similar results. First, the Amazon Redshift ETL job should use the sort key as a filter condition to insert the incremental data into a staging table or a temporary table in Amazon Redshift, for example rows whose MyTimeStamp value falls between yesterday and today. The ETL job should then delete data from the primary table that matches the filter conditions. The delete operation is very efficient in Amazon Redshift because of the sort key on the source partition column. The Amazon Redshift ETL jobs can then use ALTER TABLE APPEND to move the new data to the primary table. See the following code:
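
A minimal sketch of that sequence follows. The table names and the landing table that holds the incoming rows are hypothetical; only the MyTimeStamp column comes from the text. The sketch uses a permanent staging table, because ALTER TABLE APPEND requires both the source and the target to be permanent tables, and it cannot run inside a transaction block.

```python
from datetime import date, timedelta
from typing import List


def build_partition_swap(primary: str, staging: str, source: str, today: date) -> List[str]:
    """Return statements that replace yesterday's rows in the primary table."""
    yesterday = today - timedelta(days=1)
    # Filter on the sort key column so the delete can skip unrelated blocks.
    window = (
        f"MyTimeStamp >= '{yesterday.isoformat()}' "
        f"AND MyTimeStamp < '{today.isoformat()}'"
    )
    return [
        # Permanent staging table with the same columns as the primary table.
        f"CREATE TABLE {staging} (LIKE {primary});",
        # Load only the incremental rows into staging.
        f"INSERT INTO {staging} SELECT * FROM {source} WHERE {window};",
        # Drop the matching "partition" from the primary table.
        f"DELETE FROM {primary} WHERE {window};",
        # Move the staged rows into the primary table (run outside a transaction).
        f"ALTER TABLE {primary} APPEND FROM {staging};",
        # The staging table is empty after the append and can be removed.
        f"DROP TABLE {staging};",
    ]


steps = build_partition_swap(
    primary="sales",
    staging="sales_stage",
    source="sales_landing",
    today=date(2020, 11, 13),
)
for sql in steps:
    print(sql)
```

Passing the date in as a parameter, rather than reading the clock inside the function, keeps reruns and backfills for an earlier day straightforward.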

Cluster management

When a Vertica node fails, Vertica remains queryable, but performance is degraded until all the data is restored to the recovered node. When an Amazon Redshift node fails, Amazon Redshift automatically detects and replaces the failed node in your data warehouse cluster and replays the read-only queries. Amazon Redshift makes your replacement node available immediately and loads your most frequently accessed data from Amazon S3 first so that you can resume querying your data as quickly as possible.

Vertica cluster resize, similar to Amazon Redshift classic resize, takes a few hours depending on data volume to rebalance the data when nodes are added or removed. With Amazon Redshift elastic resize, the cluster resize completes within minutes. We recommend elastic resize for most use cases to shorten the cluster downtime and schedule resizes to handle seasonal spikes in your workload.

Conclusion

This post shared some best practices for migrating your data warehouse from Vertica to Amazon Redshift. It also pointed out the differences between Amazon Redshift and Vertica in handling queries, data management, cluster management, and temporary tables. Create your cluster on the Amazon Redshift console and convert your schema using AWS SCT to start your migration to Amazon Redshift. If you have any questions or comments, please share your thoughts in the comments section.

About the Authors

Seetha Sarma is a Senior Database Solutions Architect with Amazon Web Services.

Veerendra Nayak is a Senior Database Solutions Architect with Amazon Web Services.

Who is covering for Gazprom? The responsible ministers are hiding information about TurkStream

Post Syndicated from Nikolay Marchenko original https://bivol.bg/%D1%80%D0%B5%D1%81%D0%BE%D1%80%D0%BD%D0%B8-%D0%BC%D0%B8%D0%BD%D0%B8%D1%81%D1%82%D1%80%D0%B8-%D0%BA%D1%80%D0%B8%D1%8F%D1%82-%D0%B8%D0%BD%D1%84%D0%BE%D1%80%D0%BC%D0%B0%D1%86%D0%B8%D1%8F-%D0%B7%D0%B0.html

The key ministers in Boyko Borisov’s government whose remit includes the implementation of the TurkStream 2 gas pipeline project have for weeks refused to give the investigative journalism site Bivol any information about the project. While we wait for answers about the contracts with Russian companies and about the status of Belarusian and Russian workers along the pipeline route, TASS has suddenly reported that construction of the pipe is finished and that it now reaches the Serbian border. The US Embassy, for its part, has also met Bivol’s questions with at least two weeks of silence regarding potential sanctions and the American company that supplied turbines for the compressor station near the village of Rasovo. The reasonable suspicion is that the purpose of this stalling was to let the project be completed. And this is so despite the protests of NGOs led by the civic movement “Bulgaria United with One Goal” (BOEC) and of political forces such as “Yes, Bulgaria” and the “Green Movement”.

At the end of October 2020, Bivol approached ministers Temenuzhka Petkova, Denitsa Sacheva, and Petya Stavreva with questions about the conditions under which the so-called “Balkan Stream” is being built on the territory of the Republic of Bulgaria.

Bivol duly sent its letters, addressed to these ministers, under the Access to Public Information Act (ZDOI). After the two-week statutory deadline expired, however, none of the three ministries had answered the substance of our outlet’s questions.

It turned out that the three ministers are using a typical bureaucratic trick, one employed successfully by Bulgaria’s prosecution service: the so-called “forwarding by competence”.

It is one thing to forward a request to subordinate institutions in one’s capacity as principal, as Deputy Minister of Energy Zhecho Stankov and Minister of Energy Temenuzhka Petkova did when they forwarded the inquiry to Bulgartransgaz.

It is another when the Minister of Regional Development forwards the ZDOI request not only to her subordinates at the Directorate for National Construction Control (DNSK), but also to colleagues at the Ministry of Labour and Social Policy (MTSP) and the Ministry of Health (MZ).

Ministers pass the ball to one another

The questions to Temenuzhka Petkova fall within her remit, since they concern the key energy project implemented by Bulgartransgaz, a company of the state-owned Bulgarian Energy Holding (BEH), whose principal is the Minister of Energy. Here is what Bivol wrote:

“Dear Madam Minister,

we request that we be provided with information under the Access to Public Information Act (ZDOI) on the contracts of Bulgartransgaz EAD with the companies Arkad Engineering and Construction, Arkad S.p.A., DZZD Gas Development and Expansion in Bulgaria, Completions Development S.a r.l. – Bulgaria Branch, IDC (Infrastructure Development and Construction), and OAO Beltruboprovodstroy regarding the construction of the extension of the Bulgarian gas transmission network to the Serbian border. Specifically, copies of the contracts and the annexes to them, with data redacted under GDPR.”

Only on 10 November 2020 did the press office of the Ministry of Energy (ME) inform Bivol’s reporter by phone about what was happening, or rather not happening, with the case file. As early as 30 October the team should have received by email the cover letter from Zhecho Stankov and Temenuzhka Petkova to the management of Bulgartransgaz, which is expected to provide answers within the statutory deadlines.

Evidently “the ministry has no information” about the contracts of Bulgartransgaz (BTG) with the companies building TurkStream 2. Since no such letter ever arrived, the ministry’s registry office forwarded it to Bivol again on 10 November 2020, after the call to the ME press office. The letter is addressed to BTG’s executive director Aleksandar Malinov (in the main photo with Prime Minister Boyko Borisov).

MTSP, for its part, received Bivol’s ZDOI request on 28 October 2020 and held it in its registry office for almost ten days. The content is the same, with the same questions:

“Dear Madam Minister,

we request that we be provided with information under the Access to Public Information Act (ZDOI) on the existence of inspections and oversight of the employment contracts concluded and the insurance contributions paid (pension and health insurance) by the Bulgarian companies Bulgartransgaz EAD, Glavbolgarstroy AD, Glavbolgarstroy International AD, Strimona Stroy, Turbo Machina Bulgaria EOOD, and Nova Stroy Team EOOD.

We are interested in how the labour legislation of the Republic of Bulgaria is being observed, given the thousands of workers from foreign companies present in the area where the extension of the Bulgarian gas transmission network to the Serbian border is being built.

How are health insurance contributions and compensation paid in the event of workplace accidents, of car crashes involving workers from the construction site, and of mass Covid-19 infection requiring workers to be isolated?

This includes companies from outside the EU (the Russian Federation, the Republic of Belarus, the Republic of Serbia, the Republic of Turkey, Saudi Arabia, Ukraine, and others). We are referring to the legal entities registered in Bulgaria to carry out the energy project: Bonatti S.p.A., Max Streicher S.p.A., Completions Development S.a r.l. – Bulgaria Branch, Arkad Engineering and Construction, Arkad S.p.A., IDC (Infrastructure Development and Construction), and OAO Beltruboprovodstroy.

Accordingly, we request copies of the documentation relating to the inspections of compliance with labour legislation following the accidents recorded at the construction sites of this energy project, the contracts concluded, the payment of insurance contributions and accident compensation, and the annexes to them, with data redacted under GDPR.”

On 6 November the request was forwarded “by competence” to the executive director of the General Labour Inspectorate Executive Agency, Rumyana Mihaylova, to the executive director of the National Revenue Agency, Galya Dimitrova, and to the governor of the National Social Security Institute (NOI), Ivaylo Ivanov:

“DEAR LADIES AND GENTLEMEN,

The Ministry of Labour and Social Policy has received a request, incoming ref. No. АУ-2-22 of 28.10.2020, from Mr Nikolay Sergeev Marchenko, a journalist at the site BIVOL. A request with identical content from the same applicant, incoming ref. No. АУ-2-24 of 06.11.2020, has also been received, forwarded by competence by the Ministry of Regional Development and Public Works.

I inform you that the Ministry of Labour and Social Policy does not administer the information requested in the above-mentioned requests.

Pursuant to Art. 32, para. 1 of the Access to Public Information Act, I am forwarding to you by competence, enclosed, the requests for access to public information received at the Ministry of Labour and Social Policy.”

The letter is signed by Milena Petrova, secretary general of Denitsa Sacheva’s ministry.

The ministry headed by Regional Minister Petya Stavreva, to whom Bivol also sent a request under the Access to Public Information Act (ZDOI), acted in the same way:

“Dear Madam Minister,

we request that we be provided with information under the Access to Public Information Act (ZDOI) on the existence of inspections and oversight of the construction activity of the Bulgarian and foreign companies acting as contractors and subcontractors in the area where the extension of the Bulgarian gas transmission network to the Serbian border is being built.

These are: Bulgartransgaz EAD, Glavbolgarstroy AD, Glavbolgarstroy International AD, Strimona Stroy, Turbo Machina Bulgaria EOOD, and Nova Stroy Team EOOD. This includes companies from outside the EU (the Russian Federation, the Republic of Belarus, the Republic of Serbia, the Republic of Turkey, Saudi Arabia, Ukraine, and others). We are referring to the legal entities registered in Bulgaria to carry out the energy project: Bonatti S.p.A., Max Streicher S.p.A., Completions Development S.a r.l. – Bulgaria Branch, Arkad Engineering and Construction, Arkad S.p.A., IDC (Infrastructure Development and Construction), and OAO Beltruboprovodstroy.

We are interested in how the construction legislation of the Republic of Bulgaria and the EU is being observed, given the thousands of workers from foreign companies.

How is construction oversight carried out, given the reports of unregulated night work, workplace accidents, car crashes involving workers, and cases of mass Covid-19 infection with dozens of workers isolated?

Accordingly, we request copies of the documentation relating to the existence of construction control and supervision over the sites and construction works of this energy project, and to the lawfulness of the contracts concluded and the annexes to them, with data redacted under GDPR.”

There the reply bears the signature of Boyanka Georgieva, director of the Legal Directorate at the Ministry of Regional Development and Public Works (MRRB), who forwarded the request “by competence” outside the ministry:

“DEAR LADIES AND GENTLEMEN,

Pursuant to Art. 32, para. 1 of the Access to Public Information Act, we are sending you by competence, enclosed, the request for access to public information received under incoming ref. No. 94-00-99/28.10.2020, submitted by Nikolay Marchenko, a journalist at the site Bivol.”

Our letter was forwarded not only to the Ministry of Labour and Social Policy (MTSP), which we had already approached, but also to the Ministry of Health and to the Directorate for National Construction Control (DNSK), the oversight institution subordinate to MRRB.

Washington is in no hurry with the sanctions

Interestingly, the Ambassador of the United States of America to Bulgaria, Herro Mustafa, is also in no hurry to answer the questions put by Bivol about the construction of the gas pipeline with the participation of American companies, which supplied not only heavy construction machinery but also turbines for the Rasovo compressor station.

Bivol’s official inquiry was sent on 30 October 2020 with the relevant information, links to our outlet’s investigations, and several general questions about the position of the USA and its plans for reacting to the project’s completion:

“Your Excellency,

we request that we be provided with information on the plans of the USA to add the contractors on the TurkStream 2 gas pipeline project to the sanctions list.

We draw your attention to the fact that the investigations by Bivol and the civic movement “Bulgaria United with One Goal” (BOEC) established that the state-owned concern Bulgartransgaz EAD has contracts with the European branch of the company Solar Turbines (USA), Solar Turbines Europe S.A. (Belgium), which delivered three compressors to the village of Rasovo for the Rasovo compressor station, for the needs of TurkStream 2. They were filmed with a drone by BOEC.

We also established the presence, on the linear section of the pipeline near the village of Makresh (Montana Province), of heavy machinery made by the American company Caterpillar and branded by Eltrak Group, the official distributor for Greece and Bulgaria (see: Dubious Bulgarian Companies Provide Cover Up Construction-Equipment for Turkstream 2). According to the Information System for Management and Monitoring of EU Funds in Bulgaria (ISUN), Eltrak Bulgaria is a supplier under an EU-funded project of the company Verda 008 OOD, whose American-made machinery can be seen on the pipeline’s construction sites.

On the Bulgarian side, the subcontractors are Glavbolgarstroy AD and Glavbolgarstroy International AD, in the consortium DZZD FERROSTAAL BALKANGAZ with Ferrostaal Industrieanlagen GmbH (Germany). Among the subcontractors they have declared are Turbo Machina Bulgaria EOOD and Himkomplekt Engineering AD.

We draw your attention to the fact that Turbo Machina Bulgaria EOOD has the same owners as the company Bright Engineering OOD (Varna), which in 2007, 2010, 2012, and 2016 was a subcontractor to the two thermal power plants owned by US companies: AES-3C Maritsa East I EOOD and ContourGlobal Maritsa East 3 AD.

Meanwhile, the companies from outside the EU and NATO on TurkStream 2 are Arkad Engineering and Construction (Saudi Arabia) and Arkad S.p.A. (Milan, Italy), control over which, according to our data, has passed into the hands of companies whose ultimate owner is OAO Gazprom (Russian Federation).

Also working on the site is DZZD Gas Development and Expansion in Bulgaria (GRRB), with the participation of Consorzio Varna 1 (Bonatti S.p.A. and Max Streicher S.p.A.). Regarding the Italian company Bonatti, we established (see Company Suspected of Ties with Cosa-nostra Wants to Build Turkstream 2 in Bulgaria), in partnership with the Investigative Reporting Project Italy (IRPI), that it is linked to the Sicilian organised crime group Cosa Nostra and is a subcontractor in Italy and Greece on the Trans Adriatic and Trans-Anatolian gas pipeline projects, which are backed by the USA and the EU.

As for the company IDC – Infrastructure Development and Construction (Belgrade, Serbia), it has been established that, through a network of Serbian and offshore legal entities, it is owned by Gazprom, which has long been under US and EU sanctions. The Belarusian state concern OAO Beltruboprovodstroy (see: Lukashenko is Building Tturkstream in bulgaria with European Money) is a long-standing subcontractor of Gazprom and was excluded by the Lithuanian state security agency from the Poland–Lithuania gas interconnector project because of its potential links to Belarusian and Russian special services.

Does the presence of American suppliers, equipment, and heavy machinery mean that Washington does not object to US companies taking part in Gazprom’s projects? Has Solar Turbines, through its Belgian branch, circumvented the sanctions announced by the State Department for Nord Stream 2 and TurkStream 2?

Will there be an inspection of the lawfulness of American companies’ cooperation with Bulgartransgaz and with subcontractors of Gazprom, which is under sanctions in the USA and the EU?

Can sanctions be imposed on Bulgarian companies such as Bulgarian Energy Holding EAD, Bulgartransgaz EAD, Glavbolgarstroy International AD, and others that are implementing TurkStream 2, a project contentious for the interests of the EU and NATO? Is the Republic of Bulgaria violating its NATO membership treaty by allowing representatives of Russian services, who used physical force against Bivol and BOEC while the site was being filmed, to guard the office and base of IDC and Arkad in the town of Brusartsi?

What is the Embassy’s position on the completed project: is it already subject to sanctions alongside Nord Stream 2, or is this yet to be decided within the announced 30-day period?”

On 30 October, Raisa Yordanova, Information Specialist at the US Embassy, replied to our outlet that our inquiry had been received.

“We are trying to find a person who can answer your questions,” reads the email from Herro Mustafa’s press office.

On 2 November, Raisa Yordanova did not comment on whether, and within what timeframe, an official answer could be expected from the American diplomatic mission in Bulgaria:

“Unfortunately, I cannot give a specific answer to your question.”

During a phone call on 10 November, our team was again told that it is not known when it can expect Ambassador Herro Mustafa’s answer regarding possible sanctions over TurkStream 2.

Is this lack of an official position the result of uncertainty over the outcome of the US election, in which the incumbent head of state, Donald Trump, is losing the battle for the White House according to initial figures?

Or is it connected with the desire of the outgoing president and his Secretary of State, Mike Pompeo, not to spoil relations with Russian President Vladimir Putin over a pipe that has been completed anyway?

Besides, every president and secretary of state has the right to replace ambassadors after taking office. So Herro Mustafa, too, could be replaced after January 2021 if Joe Biden takes office as president and Susan Rice, Barack Obama’s former adviser on international policy, becomes US Secretary of State.

In that case, too, the American diplomat may understandably have no wish to spoil her relations with her Russian counterpart in Sofia, Anatoly Makarov, for whom the completion of the rebranded South Stream in the form of TurkStream 2 may turn out to be grounds for a promotion in his diplomatic career.

The pipe is ready, the answers are missing

While this endless official correspondence was under way, on 9 November 2020 the main Russian state news agency suddenly reported that the gas pipeline through Bulgaria was already complete.

“The construction of Balkan Stream is de facto complete, and the Bulgarian section of the pipeline is connected to the one in Serbia. This was reported to TASS by an informed source involved in building the pipeline,” wrote the daily Sega, as did a number of other national media outlets.

It turned out that what most worries the authorities and the contractors is not whether the pipeline will be put into operation, given the risk of US sanctions, but whether there will be a lavish banquet to mark the end of the work.

“Balkan Stream is complete and is connected to a gas pipeline in Serbia; there are no official events to mark the completion of the pipeline because of the coronavirus pandemic, and the ceremony will probably take place later.”

This is what the unnamed source in Sofia told the TASS agency.

In October, Prime Minister Boyko Borisov announced that construction of the Balkan Stream gas pipeline in the country “is almost finished”.

The prime minister then stressed once again that, with the implementation of the Balkan Stream project, Bulgaria would “become a regional strategic gas distribution centre and guarantee diversification of gas supplies”.

In fact, Balkan Stream is a continuation of the TurkStream gas pipeline through the territory of Bulgaria, and for the US State Department it is one and the same pipeline. In official documents it is called the “project to expand the gas transmission infrastructure of the company Bulgartransgaz parallel to the northern main gas pipeline to the Bulgarian–Serbian border”.

The first section of TurkStream was launched on 8 January 2020; the pipe runs along the bottom of the Black Sea from Russia to the coast of Turkey. This has already cost Bulgartransgaz (BTG) transit fees, since Turkey, as the largest consumer, had for years received its blue fuel through the old Trans-Balkan gas pipeline, whose route runs Russia – Ukraine – Moldova – Romania – Bulgaria.

But since the start of 2020 Ankara has been receiving its main volumes from Gazprom through the offshore section of TurkStream. In this way Moscow manages to punish not only Ukraine but also the countries along the route, by cutting to a minimum the transit fees, which from now on Bulgaria receives only for transmission to small energy consumers such as Serbia and North Macedonia.

Bivol also asked for a position on the project from the deputy prime ministers responsible for EU funds, Tomislav Donchev, and for economic policy, Mariyana Nikolova: whether, in their view, it is normal for construction machinery bought with EU funds to be used on the TurkStream 2 sites, and whether the government is prepared to put the pipeline into operation despite the risk of US sanctions.

As expected, the two deputy prime ministers ignored our outlet’s questions.

Meanwhile, Gazprom’s Serbian subsidiary IDC has filed a report with the District Police Department in the town of Brusartsi over the entry of “unidentified persons” onto “its territory”, following the action by BOEC and Bivol in front of the company’s office at the beginning of October this year.

The district prosecutor of Lom, meanwhile, is still examining the complaints filed at the time by the Bivol and BOEC teams with the Brusartsi police station over the use of physical force by unknown Russian-speaking IDC employees, who tried to remove us beyond the barrier of the municipal land they use as a base.

On 9 November, BOEC also filed an official complaint with the Directorate for National Construction Control (DNSK) at the Ministry of Regional Development and Public Works (MRRB), requesting that work on the pipeline be halted because of the lack of adequate construction oversight and possible violations along the route:

“Pursuant to Art. 224 and Art. 225 of the Spatial Development Act (ZUT), we at BOEC request that DNSK open an inspection and proceed to the immediate suspension of construction activities at the compressor station near the village of Rasovo, Montana Province.”

“As well as along the route of TurkStream, until the inspection is completed and the irregularities found are remedied,” reads the complaint from the civic NGO.

Meet the newest AWS Heroes including the first DevTools Heroes!

Post Syndicated from Ross Barich original https://aws.amazon.com/blogs/aws/meet-the-newest-aws-heroes-including-the-first-devtools-heroes/

The AWS Heroes program recognizes individuals from around the world who have extensive AWS knowledge and go above and beyond to share their expertise with others. The program continues to grow, to better recognize the most influential community leaders across a variety of technical disciplines.

Introducing AWS DevTools Heroes

Today we are introducing AWS DevTools Heroes: passionate advocates of the developer experience on AWS and the tools that enable that experience. DevTools Heroes excel at sharing their knowledge and building community through open source contributions, blogging, speaking, community organizing, and social media. Through their feedback, content, and contributions DevTools Heroes help shape the AWS developer experience and evolve the AWS DevTools, such as the AWS Cloud Development Kit, the AWS SDKs, and AWS Code suite of services.

The first cohort of AWS DevTools Heroes includes:

Bhuvaneswari Subramani – Bengaluru, India

DevTools Hero Bhuvaneswari Subramani is Director Engineering Operations at Infor. With two decades of IT experience, specializing in Cloud Computing, DevOps, and Performance Testing, she is one of the community leaders of AWS User Group Bengaluru. She is also an active speaker at AWS community events and industry conferences, and delivers guest lectures on Cloud Computing for staff and students at engineering colleges across India. Her workshops, presentations, and blogs on AWS Developer Tools always stand out.

Jared Short – Washington DC, USA

DevTools Hero Jared Short is an engineer at Stedi, where they are using AWS native tooling and serverless services to build a global network for exchanging B2B transactions in a standard format. Jared’s work includes early contributions to the Serverless Framework. These days, his focus is working with AWS CDK and other toolsets to create intuitive and joyful developer experiences for teams on AWS.

Matt Coulter – Belfast, Northern Ireland

DevTools Hero Matt Coulter is a Technical Architect for Liberty IT, focused on creating the right environment for empowered teams to rapidly deliver business value in a well-architected, sustainable, and serverless-first way. Matt has been creating this environment by building CDK Patterns, an open source collection of serverless architecture patterns built using AWS CDK that reference the AWS Well-Architected Framework. Matt also created CDK Day, which was the first community-driven, global conference focused on everything CDK (AWS CDK, CDK for Terraform, CDK for Kubernetes, and others).

Paul Duvall – Washington DC, USA

DevTools Hero Paul Duvall is co-founder and former CTO of Stelligent. He is principal author of “Continuous Integration: Improving Software Quality and Reducing Risk,” and is also the author of many other publications including “Continuous Compliance on AWS,” “Continuous Encryption on AWS,” and “Continuous Security on AWS.” Paul hosted the DevOps on AWS Radio podcast for over three years and has been an enthusiastic user and advocate of AWS Developer Tools since their respective releases.

Sebastian Korfmann – Hamburg, Germany

DevTools Hero Sebastian Korfmann is an entrepreneurial Software Engineer with a current focus on Cloud Tooling, Infrastructure as Code, and the Cloud Development Kit (CDK) ecosystem in particular. He is a core contributor to the CDK for Terraform project, which enables users to define infrastructure using TypeScript, Python, and Java while leveraging the hundreds of providers and thousands of module definitions provided by Terraform and the Terraform ecosystem. With cdk.dev, Sebastian co-founded a community-driven hub for all things CDK, and he runs a weekly newsletter covering the growing CDK ecosystem.

Steve Gordon – East Sussex, United Kingdom

DevTools Hero Steve Gordon is a Pluralsight author and senior engineer who is passionate about community and all things .NET related, having worked with .NET for over 16 years. Steve has used AWS extensively for five years as a platform for running .NET microservices. He blogs regularly about running .NET on AWS, including deep dives into how the .NET SDK works, building cloud-native services, and how to deploy .NET containers to Amazon ECS. Steve founded .NET South East, a .NET Meetup group based in Brighton.

Thorsten Höger – Stuttgart, Germany

DevTools Hero Thorsten Höger is CEO and cloud consultant at Taimos, where he is advising customers on how to use AWS. Being a developer, he focuses on improving development processes and automating everything to build efficient deployment pipelines for customers of all sizes. As a supporter of open-source software, Thorsten is maintaining or contributing to several projects on GitHub, like test frameworks for AWS Lambda, Amazon Alexa, or developer tools for AWS. He is also the maintainer of the Jenkins AWS Pipeline plugin and one of the top three non-AWS contributors to AWS CDK.

Meet the rest of the new AWS Heroes

There is more good news! We are thrilled to introduce the remaining new AWS Heroes in this cohort, including the first Heroes from Argentina, Lebanon, and Saudi Arabia:

Ahmed Samir – Riyadh, Saudi Arabia

Community Hero Ahmed Samir is a Cloud Architect and mentor with more than 12 years of experience in IT. He is the leader of three Arabic Meetups in Riyadh: AWS, Amazon SageMaker, and Kubernetes, where he has organized and delivered over 40 Meetup events. Ahmed frequently shares knowledge and evangelizes AWS in Arabic through his social media accounts. He also holds AWS and Kubernetes certifications.

Anas Khattar – Beirut, Lebanon

Community Hero Anas Khattar is co-founder of Digico Solutions. He founded the AWS User Group Lebanon in 2018 and coordinates monthly meetups and workshops on a variety of cloud topics, which helped grow the group to more than 1,000 AWSome members. He also regularly speaks at tech conferences and authors tech blogs on Dev Community, sharing his AWS experiences and best practices. In close collaboration with the regional AWS community leaders and builders, Anas organized AWS Community Day MENA, which started in September 2020 with 12 User Groups from 10 countries, and hosted 27 speakers over 2 days.

Chris Gong – New York, USA

Community Hero Chris Gong is constantly exploring the different ways that cloud services can be applied in game development. Passionate about sharing his knowledge with the world, he routinely creates tutorials and educational videos on his YouTube channel, Flopperam, where the primary focus has been AWS Game Tech and Unreal Engine, specifically the multiplayer and networking aspects of game development. Although Amazon GameLift has been his biggest interest, Chris has plans to cover the usage of other AWS services in game development while exploring how they can be integrated with other game engines besides Unreal Engine.

Damian Olguin – Cordoba, Argentina

Community Hero Damian Olguin is a tech entrepreneur and one of the founders of Teracloud, an AWS APN Partner. As a community leader, he promotes knowledge-sharing experiences in user communities within LATAM. He is co-organizer of AWS User Group Cordoba and co-host of #DeepFridays, a Twitch streaming show that promotes AI/ML technology adoption by playing with DeepRacer, DeepLens, and DeepComposer. Damian is a public speaker who has spoken at AWS Community Day Buenos Aires 2019, AWS re:Invent 2019, and AWS Community Day LATAM 2020.

Denis Bauer – Sydney, Australia

Data Hero Denis Bauer is a Principal Research Scientist at Australia’s government research agency (CSIRO). Her open source products include VariantSpark, the Machine Learning tool for analysing ultra-high dimensional data, which was the first AWS Marketplace health product from a public sector organization. Denis is passionate about facilitating the digital transformation of the health and life-science sector by building a strong community of practice through open source technology, keynote presentations, and inclusive interdisciplinary collaborations. For example, the collaboration with her organization’s visionary Scientific Computing and Cloud Platforms experts as well as AWS Data Hero Lynn Langit has enabled the creation of cloud-based bioinformatics solutions used by 10,000 researchers annually.

Denis Dyack – St. Catharines, Canada

Community Hero Denis Dyack, a video game industry veteran of more than 30 years, is the Founder and CEO of Apocalypse Studios. His studio evangelizes using a cloud-first approach in game development and partners with AWS Game Tech to move the medium of the games industry forward. In his years of experience speaking at games conferences and within AWS communities, Denis has been an advocate for building on Amazon Lumberyard and more recently in moving over game development pipelines to AWS.

Emrah Şamdan – Ankara, Turkey

Serverless Hero Emrah Şamdan is the VP of Products at Thundra. To expand the serverless community globally during the pandemic, he co-organized the quarterly ServerlessDays Virtual. He’s also a local community organizer for AWS Community Day Turkey, ServerlessDays Istanbul, and bi-weekly meetups at Cloud and Serverless Turkey. He’s currently part of the core organizer team of global ServerlessDays and is continuously looking for ways to expand the community. He frequently writes about serverless and cloud-native microservices on Medium and on the Thundra blog.

Franck Pachot – Lausanne, Switzerland

Data Hero Franck Pachot is the Principal Consultant and Database Evangelist at dbi services (an AWS Select Partner) and is passionate about all databases. With over 20 years of experience in development, data modeling, infrastructure, and all DBA tasks, Franck is a recognized database expert across Oracle and AWS. Franck is also an AWS Academy educator for Powercoders, and holds AWS Certified Database Specialty and Oracle Certified Master certifications. Franck contributes to technical communities, educating customers on AWS Databases through his blog, Twitter, and podcast in French. He is active in the Data community, and enjoys talking and meeting other data enthusiasts at conferences.

Hiroko Nishimura – Washington DC, USA

Community Hero Hiroko Nishimura (Hiro) is the founder of AWS Newbies and Cloud Newbies, which help people with non-traditional technical backgrounds begin their explorations into the AWS Cloud. As a “career switcher” herself, she has been community building since 2018 to help others deconstruct Cloud Computing jargon so they, too, can begin a career in the Cloud. Finally putting her degrees in Special Education to good use, Hiro teaches “Introduction to AWS for Non-Engineers” courses at LinkedIn Learning, and introductory coding lessons at egghead.

Juliano Cristian – Santa Catarina, Brazil

Community Hero Juliano Cristian is CEO of Game Business Accelerator Academy and co-founder of Game Developers SC, which participates in the AWS APN program. He organizes the AWS Game Tech Lumberyard User Group in Florianópolis, Brazil, holding Meetups, Practical Labs, Game Jams, and Workshops. He also lectures at universities and has spoken at more than 90 educational institutions across Brazil, introducing students to cloud computing and AWS Game Tech services. Whenever he can, Juliano also participates in other AWS User Groups in Brazil and Latin America, working to build an increasingly motivated and productive community.

Jungyoul Yu – Seoul, Korea

Machine Learning Hero Jungyoul Yu works at Danggeun Market as a DevOps Engineer, and is a leader of the AWS DeepRacer Group, part of the AWS Korea User Group. He was one of the AWS DeepRacer League finalists in AWS Summit Seoul and AWS re:Invent 2019. Starting off with zero ML experience, Jungyoul used AWS DeepRacer to learn ML techniques and began sharing what he learned in the AWS DeepRacer Community, with User Groups, at AWS Community Day, and at various meetups. He has also shared many blog posts and sample code such as DeepRacer Reward Function Simulator, Rank Notifier, and Auto submit bot.

Juv Chan – Singapore

Machine Learning Hero Juv Chan is an AI automation engineer at UBS. He is the AWS DeepRacer League Singapore Summit 2019 champion and a re:Invent Championship Cup 2019 finalist. He is the lead organizer for the AWS DeepRacer Beginner Challenge global virtual community race in 2020. Juv also shares his DeepRacer and Machine Learning knowledge with the AWS Machine Learning community at both global and regional scale. Juv is a contributing writer for both Towards Data Science and Towards AI platforms, where he blogs about AWS AI/ML and cloud-related topics.