Post Syndicated from Jocelyn Woolbright original https://blog.cloudflare.com/cloudflares-athenian-project-expands-internationally/

Over the past few years, we’ve seen a wide variety of online threats to democratically held elections around the world. These threats range from attempts to restrict the availability of information, to efforts to control the dialogue around elections, to full disruptions of the voting process.

Some countries have shut down the Internet completely during elections. In 2020, Access Now’s #KeepItOn Campaign reported at least 155 Internet shutdowns in 29 countries such as Togo, Republic of the Congo, Niger and Benin. In 2021, Uganda’s government ordered the “Suspension Of The Operation Of Internet Gateways” the day before the country’s general election.

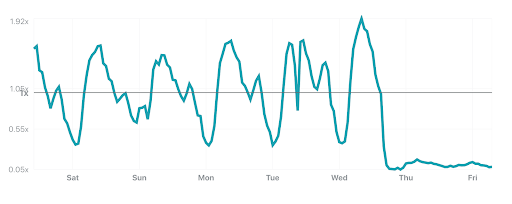

Even without a full Internet shutdown, election reporting and registration websites can face attacks from other nations and from parties seeking to disrupt the administration of an election or undermine trust in the electoral process. These cyberattacks target not only electronic voting and other election technologies, but also access to information and communication tools such as voter registration sites and websites that host election results. In 2014, a series of cyberattacks, including DDoS, malware, and phishing attacks, was launched against Ukraine’s Central Election Commission (CEC) ahead of the presidential election. These sophisticated attacks attempted to infiltrate the internal voting system and spread malware to deliver fake election results. Similar attacks were seen again in 2019, when Ukraine accused Russia of launching a DDoS attack against the CEC a month before the presidential election. Attacks that target electoral management agencies’ communication tools and public-facing websites have been on the rise in countries including Indonesia, North Macedonia, Georgia, and Estonia.

Three and a half years ago, Cloudflare launched the Athenian Project to provide free Enterprise-level services to state and local election websites in the United States. Through this project, we have protected more than 292 websites across 30 states at no cost to the entities administering them, including sites with information about voter registration, voting, and polling places, as well as sites publishing final results. However, as cyberattacks targeting election infrastructure continue to grow, it has become clear that election security is not a US-specific issue. Since we launched the Athenian Project in the United States, many people have asked: why don’t you extend these cybersecurity protections to election entities around the world?

Challenges, Solutions and Partnerships

The short answer is that we weren’t entirely sure whether Cloudflare, a US-based company, could provide a free set of upgraded security services to foreign election entities. Cloudflare is a global company with 16 offices around the world and a global network that spans more than 100 countries to provide security and performance tools. We are proud to create new and innovative products that enhance user privacy and security, but understanding the intricacies of local elections, the regulatory environment, and the political players is complicated, to say the least.

When we started the Athenian Project in 2017, we understood the environment and the gaps in coverage for state and local governments in the United States. The United States has a decentralized election administration system, which means that local election administrators may conduct elections differently from state to state. Because of the funding challenges that come with a decentralized system, state and local governments in all 50 states could benefit from free Enterprise-level services. More than three years after launching the project, we have learned a great deal about what types of threats election entities face, what products they need to secure their web infrastructure, and how to build trust with state and local governments in need of these types of protections.

As the Athenian Project and Cloudflare for Campaigns grew in the United States, we received inquiries from foreign election bodies, political parties and campaigns on whether they were eligible for protection under one of these projects. We turned to our Project Galileo partners for their advice and guidance.

Under Project Galileo, we partner with more than 40 civil society organizations to protect a range of sensitive players on the Internet in 111 countries, including human rights organizations, journalism and independent media, and organizations that focus on strengthening democracy. Many of these civil society partners work on election-related matters ranging from capacity building, strengthening democratic institutions, and supporting civil society organizations to equipping these groups with the tools they need to be safe and secure online. These partners, many of whom have personnel directly on the ground in these regions, understand the intricacies of the election landscape and the delicate nature of building trust among local election administrations, political parties, and other organizations, and they provide direct support and expertise when it comes to safeguarding elections.

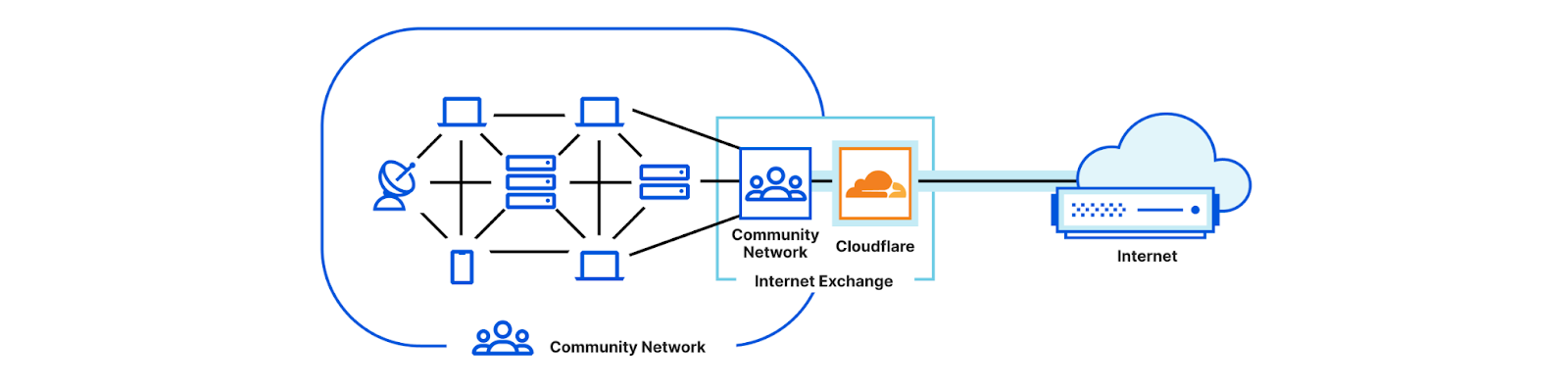

After many discussions and years of work, we are excited to announce our collaboration with the International Foundation for Electoral Systems, the National Democratic Institute, and the International Republican Institute to provide free Enterprise-level Cloudflare services to groups working on election reporting and to election management agencies, giving them the tools, resources, and expertise to stay online in the face of large-scale cyberattacks.

Partnership with International Foundation for Electoral Systems

As we worked with civil society organizations on election issues and on extending protections outside the United States, we frequently heard organizations mention IFES, the International Foundation for Electoral Systems, because of its expertise in promoting and protecting democracy. IFES is a nonpartisan, nonprofit organization that has worked in more than 145 countries, from developing to mature democracies.

Founded in 1987, IFES has evolved its work in promoting democracy and genuine elections to meet the challenges of today and tomorrow. IFES offers research, innovation, and technical assistance to support democratic elections and human rights, combat corruption, promote equal political participation, and ensure that information and technology advance, not undermine, democracy and elections.

One of the many reasons we wanted to work with IFES on expanding our election offering was the organization’s unique position in terms of technical expertise, understanding of the political landscapes in which it operates, and fundamental knowledge of the types of protections election management bodies (EMBs) need when preparing and conducting elections. Building trust in the election space is critical when providing support to EMBs. Given IFES’ years of hard work assisting with the implementation of election operations and providing direct assistance to support democratic development, along with the trust it has built with EMBs as a result, IFES was a logical partner.

IFES’ Center for Technology & Democracy, in collaboration with IFES program teams worldwide, provides cybersecurity and ICT assistance to EMBs and civil society organizations (CSOs). IFES incorporates leading cybersecurity and ICT practices and standards into its Holistic Exposure and Adaptation Testing (HEAT) methodology, with the aim of advancing EMBs’ and CSOs’ digital transformation while mitigating the associated risks.

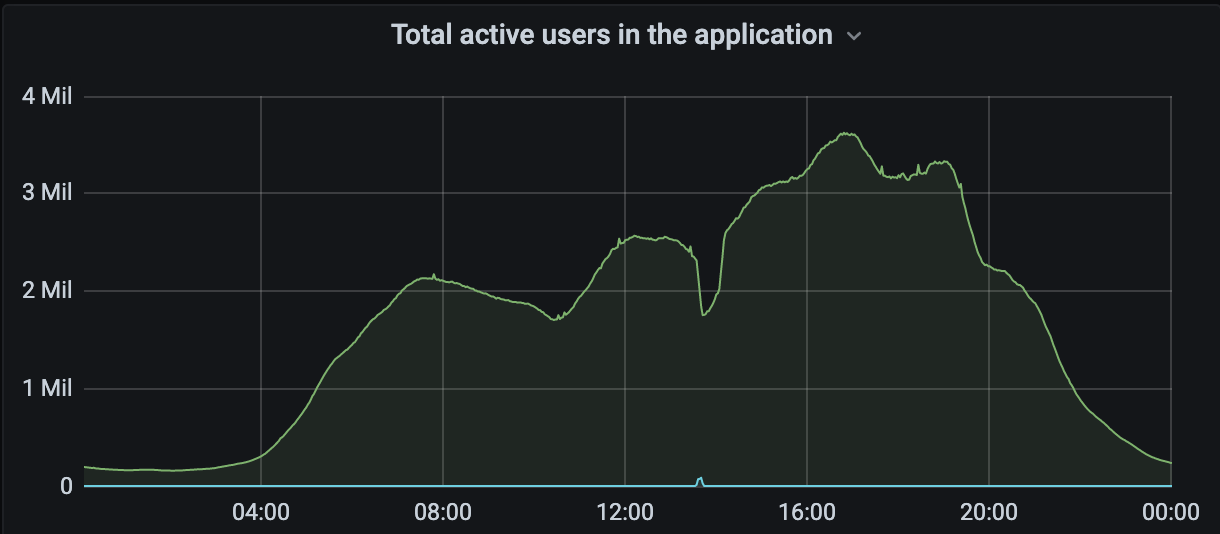

“Cloudflare has played an integral role in helping EMBs and CSOs protect their websites, prevent website defacement, and ensure that they are accessible during peak traffic spikes. This has allowed EMBs and CSOs to build internal and external stakeholder confidence while gaining access and building local capacity on cutting-edge cybersecurity solutions and good practices.”

— Stephen Boyce, Senior Global Election Technology & Cybersecurity Advisor at IFES.

As part of the partnership with IFES, Cloudflare provides its highest level of services to EMBs working with IFES, equipping their web infrastructure and internal teams with the cybersecurity tools needed to promote electoral integrity and stronger democracies. Along with these tools, Cloudflare will work closely with IFES on training and direct assistance to these election bodies, so they have the knowledge and expertise to conduct free, fair, and safe elections. Cloudflare has previously worked with IFES to provide services in support of elections in Georgia, and we look forward to extending these protections to other EMBs in the future.

Partnership with the National Democratic Institute, the International Republican Institute and the Design 4 Democracy Coalition

The National Democratic Institute and the International Republican Institute are two of the many Project Galileo partners we have worked with to provide cybersecurity tools to organizations that work on building and strengthening democratic institutions and increasing civic participation around the world. As we worked together on Project Galileo, our conversations often focused on the best way to extend these security tools to groups in the election space.

Cloudflare is excited to announce that we are partnering with the National Democratic Institute (NDI), the International Republican Institute (IRI), and the Design 4 Democracy Coalition (D4D) to expand our election support efforts. Through this initiative, Cloudflare will provide free services to vulnerable groups working on elections, as identified by NDI and IRI. Combining our expertise in cybersecurity with our partners’ expertise in election administration will benefit all of us as we navigate this space. As part of protecting this new set of election groups, Cloudflare will work with NDI and IRI to understand the global threats faced by democratic election institutions.

“Elections are being undermined by a wide range of malign actors. Through our partnership with Cloudflare, IRI has been able to ensure that the civil society and independent media organizations we support globally are able to defend themselves against cyber attacks and massive increases in web traffic – keeping them safe and online at the most critical moments for democratic integrity. We are excited to be working with Cloudflare, NDI, and the D4D Coalition to expand those offerings to election management bodies, political parties, and political campaigns – a critical step toward ensuring that political competition is fought in the sphere of policy and governance delivery, and not through information and cyber warfare.”

— Amy Studdart, Senior Advisor for Digital Democracy, Center for Global Impact at the International Republican Institute.

As part of our new initiative, when Cloudflare tests new products which would be particularly useful for election groups we will work with NDI, IRI and D4D to encourage these groups to adopt the new services. This might include passing along information and documentation on how to deploy them, offering webinars, and providing other specialized support. Piloting new products with this audience will also provide us with the opportunity to learn about needs and pain points for these groups.

“Safe, reliable access to the internet is fundamental to a free, open, and democratic electoral process in the modern era. Cloudflare’s sophisticated protections against various forms of cyberattack have provided invaluable support to at-risk campaigns and civic organizations through NDI and the D4D Coalition. This new initiative will go further to supporting one of the most fundamental of human rights: the vote.”

— Chris Doten, Chief Innovation Officer at the National Democratic Institute.

Extending Protection to State Parties in the United States with Defending Digital Campaigns

We didn’t forget our friends in the United States. I am excited to announce that, through our partnership with Defending Digital Campaigns (DDC), we are extending our support to provide a suite of Cloudflare products to eligible state parties in the United States. In January 2020, we announced our partnership with Defending Digital Campaigns, a nonprofit, nonpartisan organization that provides access to cybersecurity products, services, and information to eligible federal campaigns.

We have previously written about the regulatory challenges of providing free or discounted services to political campaigns. Under campaign finance regulations in the United States, private corporations are prohibited from providing contributions of either money or services to federal candidates or political party organizations. We partnered with DDC, which was granted special permission by the Federal Election Commission to provide eligible federal campaigns with free or reduced-cost cybersecurity services because of the heightened threat of foreign cyberattacks against party and candidate committees.

Since the start of our partnership, we have provided products to protect Presidential, Senate and House campaigns with tools like DDoS protection, web application firewall, SSL encryption, and bot protection. We have also offered campaigns cybersecurity tools to protect their internal networks, offering Cloudflare Access and Gateway to more than 75 campaigns in the 2020 U.S. election.

After the 2020 U.S. election, DDC extended their offering to protect state parties in select states.

“One of DDC’s core recommendations for any campaign or an organization like a State Party is protecting their websites from attacks or defacements,” said Michael Kaiser, President and CEO of Defending Digital Campaigns. “Our partnership with Cloudflare is critical to bringing this core protection to eligible entities and protecting our democracy.”

We are excited to further our partnership with Defending Digital Campaigns to provide our free suite of services to eligible state parties so they can better secure themselves against cyberattacks.

For more information on eligibility for these services under DDC and the next steps, please visit cloudflare.com/campaigns/usa.

To the future…

Recognizing the global nature of cyberthreats targeting election-related technologies, we are excited to be working with these groups to help players in the election space stay secure online. In addition to the goals already laid out, Cloudflare intends to build on these partnerships in the future. Eventually, we hope to assist with each of these partners’ programs as mentors and trainers, perhaps directly participating in assessments and training around critical elections. These groups’ expertise makes them fantastic partners in this space, and we look forward to the opportunity to expand our work with their guidance.