Post Syndicated from Max Peterson original https://aws.amazon.com/blogs/security/how-aws-is-helping-customers-achieve-their-digital-sovereignty-and-resilience-goals/

As we’ve innovated and expanded the Amazon Web Services (AWS) Cloud, we continue to prioritize making sure customers are in control and able to meet regulatory requirements anywhere they operate. With the AWS Digital Sovereignty Pledge, which is our commitment to offering all AWS customers the most advanced set of sovereignty controls and features available in the cloud, we are investing in an ambitious roadmap of capabilities for data residency, granular access restriction, encryption, and resilience. Today, I’ll focus on the resilience pillar of our pledge and share how customers are able to improve their resilience posture while meeting their digital sovereignty and resilience goals with AWS.

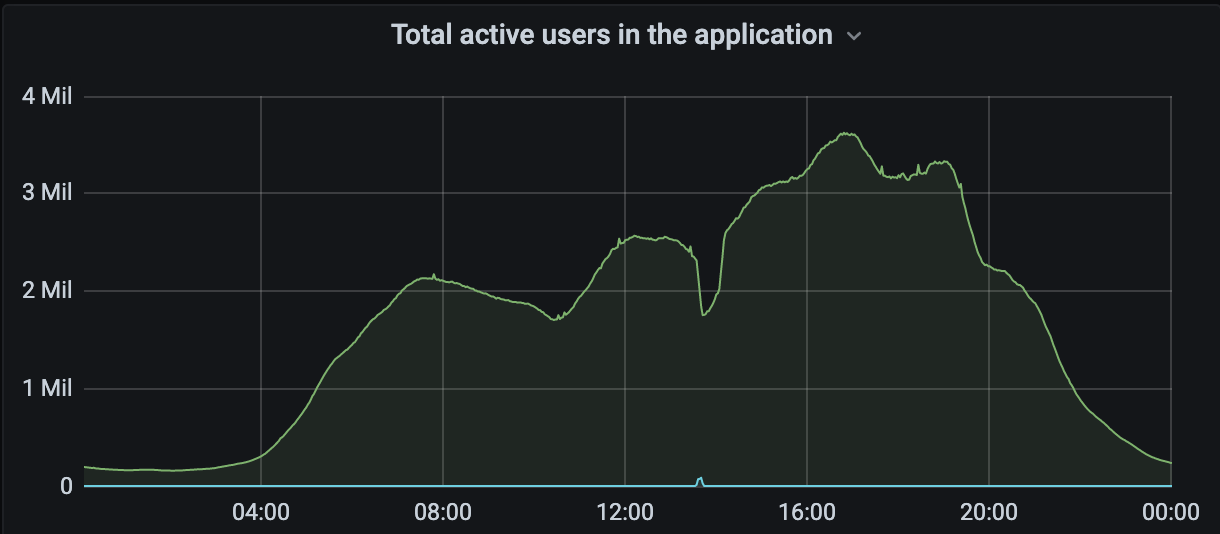

Resilience is the ability of any organization or government agency to respond to and recover from crises, disasters, or other disruptive events while maintaining its core functions and services. Resilience is a core component of sovereignty, and digital sovereignty cannot be achieved without it. Customers need to know that their workloads in the cloud will continue to operate in the face of natural disasters, network disruptions, and disruptions due to geopolitical crises. Public sector organizations and customers in highly regulated industries rely on AWS to provide the highest level of resilience and security to help meet their needs. AWS protects millions of active customers worldwide across diverse industries and use cases, including large enterprises, startups, schools, and government agencies. For example, the Swiss public transport organization BERNMOBIL improved its ability to protect data against ransomware attacks by using AWS.

Building resilience into everything we do

AWS has made significant investments in building and running the world’s most resilient cloud by building safeguards into our service design and deployment mechanisms and instilling resilience into our operational culture. We build to guard against outages and incidents, and account for them in the design of AWS services, so when disruptions do occur, their impact on customers and the continuity of services is as minimal as possible. To avoid single points of failure, we minimize interconnectedness within our global infrastructure. The AWS global infrastructure is geographically dispersed, spanning 105 Availability Zones (AZs) within 33 AWS Regions around the world. Each Region consists of multiple Availability Zones, and each AZ includes one or more discrete data centers with independent and redundant power infrastructure, networking, and connectivity. Availability Zones in a Region are meaningfully distant from each other, up to 60 miles (approximately 100 km), to help prevent correlated failures, but close enough to use synchronous replication with single-digit millisecond latency. AWS is the only cloud provider to offer three or more Availability Zones within each of its Regions, providing more redundancy and better isolation to contain issues. Common points of failure, such as generators and cooling equipment, aren’t shared across Availability Zones and are designed to be supplied by independent power substations. To better isolate issues and achieve high availability, customers can partition applications across multiple Availability Zones in the same Region. Learn more about how AWS maintains operational resilience and continuity of service.
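To make the idea of partitioning an application across Availability Zones concrete, here is a minimal sketch in plain Python. It doesn't call any AWS API; the zone names and the helper functions `spread_across_zones` and `capacity_after_zone_loss` are hypothetical and exist only for illustration. It shows how an even spread across three zones limits the capacity lost when a single zone is impaired.

```python
from itertools import cycle


def spread_across_zones(instance_count, zones):
    """Assign instances to zones round-robin so the spread stays even."""
    if not zones:
        raise ValueError("at least one zone is required")
    zone_cycle = cycle(zones)
    return [next(zone_cycle) for _ in range(instance_count)]


def capacity_after_zone_loss(placement, failed_zone):
    """Count the instances that remain if one zone becomes unavailable."""
    return sum(1 for zone in placement if zone != failed_zone)


# Hypothetical zone names; real Availability Zone names differ by Region.
zones = ["zone-a", "zone-b", "zone-c"]
placement = spread_across_zones(6, zones)

# With six instances over three zones, losing one zone leaves four running.
remaining = capacity_after_zone_loss(placement, "zone-b")
print(placement)
print(remaining)
```

With three zones, a single-zone failure removes about one third of capacity, so a workload provisioned with that headroom could keep serving traffic without launching anything new during the event.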

Resilience is deeply ingrained in how we design services. At AWS, the services we build must meet extremely high availability targets. We think carefully about the dependencies that our systems take. Our systems are designed to stay resilient even when those dependencies are impaired; we use what is called static stability to achieve this level of resilience. This means that systems operate in a static state and continue to operate as normal without needing to make changes during a failure or when dependencies are unavailable. For example, in Amazon Elastic Compute Cloud (Amazon EC2), after an instance is launched, it’s just as available as a physical server in a data center. The same property holds for other AWS resources such as virtual private clouds (VPCs), Amazon Simple Storage Service (Amazon S3) buckets and objects, and Amazon Elastic Block Store (Amazon EBS) volumes. Learn more in our Fault Isolation Boundaries whitepaper.
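The following sketch illustrates the static stability idea in plain Python. It is not how any AWS service is implemented; `FakeDependency`, `StaticallyStableRouter`, and the route data are hypothetical names chosen for the example. The point it shows is that the request path reads only local, last-known-good state, so it keeps working unchanged while the dependency is unavailable.

```python
class DependencyUnavailable(Exception):
    """Raised when the (hypothetical) dependency cannot be reached."""


class FakeDependency:
    """Stand-in for a control-plane style dependency that can be impaired."""

    def __init__(self, routes):
        self.routes = dict(routes)
        self.available = True

    def fetch_routes(self):
        if not self.available:
            raise DependencyUnavailable("dependency is impaired")
        return dict(self.routes)


class StaticallyStableRouter:
    """Serves requests from local state; refreshes are best effort."""

    def __init__(self, dependency):
        self._dependency = dependency
        # The initial load must succeed once, before traffic is served.
        self._routes = dependency.fetch_routes()

    def refresh(self):
        """Try to update local state; keep the last known good copy on failure."""
        try:
            self._routes = self._dependency.fetch_routes()
            return True
        except DependencyUnavailable:
            return False

    def route(self, key):
        # The request path never calls the dependency.
        return self._routes[key]


dependency = FakeDependency({"orders": "10.0.1.15"})
router = StaticallyStableRouter(dependency)

dependency.available = False      # simulate an impaired dependency
refreshed = router.refresh()      # refresh fails, state is left untouched
target = router.route("orders")   # requests are still served
print(refreshed, target)
```

The design choice worth noting is that nothing has to change during the failure: no failover step, no reconfiguration, and no new resources are needed for the router to continue operating.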

Information Services Group (ISG) cited strengthened resilience when naming AWS a Leader in their recent report, Provider Lens for Multi Public Cloud Services – Sovereign Cloud Infrastructure Services (EU), stating: “AWS delivers its services through multiple Availability Zones (AZs). Clients can partition applications across multiple AZs in the same AWS region to enhance the range of sovereign and resilient options. AWS enables its customers to seamlessly transport their encrypted data between regions. This ensures data sovereignty even during geopolitical instabilities.”

AWS empowers governments of all sizes to safeguard digital assets in the face of disruptions. We proudly worked with the Ukrainian government to securely migrate data and workloads to the cloud immediately following Russia’s invasion, preserving vital government services that will be critical as the country rebuilds. We supported the migration of over 10 petabytes of data. For context, that means we migrated data from 42 Ukrainian government authorities, 24 Ukrainian universities, a remote learning K–12 school serving hundreds of thousands of displaced children, and dozens of private sector companies.

For customers who are running workloads on premises or for remote use cases, we offer solutions such as AWS Local Zones, AWS Dedicated Local Zones, and AWS Outposts. Customers deploy these solutions to help meet their needs in highly regulated industries. For example, to help meet the rigorous performance, resilience, and regulatory demands of the capital markets, Nasdaq used AWS Outposts to provide market operators and participants with added agility to rapidly adjust operational systems and strategies to keep pace with evolving industry dynamics.

Enabling you to build resilience into everything you do

Millions of customers trust that AWS is the right place to build and run their business-critical and mission-critical applications. We provide a comprehensive set of purpose-built resilience services, strategies, and architectural best practices that you can use to improve your resilience posture and meet your sovereignty goals. These services, strategies, and best practices are outlined in the AWS Resilience Lifecycle Framework across five stages—Set Objectives, Design and Implement, Evaluate and Test, Operate, and Respond and Learn. The Resilience Lifecycle Framework is modeled after a standard software development lifecycle, so you can easily incorporate resilience into your existing processes.

For example, you can use the AWS Resilience Hub to set your resilience objectives, evaluate your resilience posture against those objectives, and implement recommendations for improvement based on the AWS Well-Architected Framework and AWS Trusted Advisor. Within Resilience Hub, you can create and run AWS Fault Injection Service experiments, which allow you to test how your application will respond to certain types of disruptions. Recently, Pearson, a global provider of educational content, assessment, and digital services to learners and enterprises, used Resilience Hub to improve their application resilience.
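As a rough illustration of what evaluating a resilience posture against objectives can look like, here is a small Python sketch. It is not the Resilience Hub API. It assumes that objectives are expressed as a recovery time objective (RTO) and a recovery point objective (RPO) in seconds, and the `Objective` class, the `unmet_objectives` function, and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Objective:
    """Recovery objectives in seconds (an assumed, simplified representation)."""
    rto_seconds: int  # how long recovery may take
    rpo_seconds: int  # how much recent data may be lost


def unmet_objectives(target, estimated):
    """Return the names of objectives whose estimate exceeds the target."""
    breaches = []
    if estimated.rto_seconds > target.rto_seconds:
        breaches.append("RTO")
    if estimated.rpo_seconds > target.rpo_seconds:
        breaches.append("RPO")
    return breaches


# Hypothetical targets and estimates for one application.
target = Objective(rto_seconds=3600, rpo_seconds=900)
estimated = Objective(rto_seconds=5400, rpo_seconds=300)

breaches = unmet_objectives(target, estimated)
print(breaches)
```

In this made-up case the estimated recovery time exceeds the target while the recovery point does not, which is the kind of gap an assessment would flag for improvement.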

Other AWS resilience services such as AWS Backup, AWS Elastic Disaster Recovery (AWS DRS), and Amazon Route 53 Application Recovery Controller (Route 53 ARC) can help you quickly respond to and recover from disruptions. When Thomson Reuters, an international media company that provides solutions for tax, law, media, and government to clients in over 100 countries, wanted to improve data protection and application recovery for one of its business units, they adopted AWS DRS. AWS DRS provides Thomson Reuters with continuous replication, so changes made in the source environment are updated in the disaster recovery site within seconds.

Achieve your resilience goals with AWS and our AWS Partners

AWS offers multiple ways for you to achieve your resilience goals, including assistance from AWS Partners and AWS Professional Services. AWS Resilience Competency Partners specialize in improving the availability and resilience of customers’ critical workloads in the cloud. AWS Professional Services offers Resilience Architecture Readiness Assessments, which assess customer capabilities in eight critical domains (change management, disaster recovery, durability, observability, operations, redundancy, scalability, and testing) to identify gaps and areas for improvement.

We remain committed to enhancing our range of sovereign and resilient options, allowing customers to sustain operations through disruption or disconnection. AWS will continue to innovate based on customer needs to help you build and run resilient applications in the cloud and keep up with a changing world.
