Post Syndicated from The Atlantic original https://www.youtube.com/watch?v=Yo5PDMviTCM

Analyze AWS WAF logs using Amazon OpenSearch Service anomaly detection built on Random Cut Forests

Post Syndicated from Umesh Ramesh original https://aws.amazon.com/blogs/security/analyze-aws-waf-logs-using-amazon-opensearch-service-anomaly-detection-built-on-random-cut-forests/

This blog post shows you how to use the machine learning capabilities of Amazon OpenSearch Service (successor to Amazon Elasticsearch Service) to detect and visualize anomalies in AWS WAF logs. AWS WAF logs are streamed to Amazon OpenSearch Service using Amazon Kinesis Data Firehose. Kinesis Data Firehose invokes an AWS Lambda function to transform incoming source data and deliver the transformed data to Amazon OpenSearch Service. You can implement this solution without any machine learning expertise. AWS WAF logs capture a number of attributes about the incoming web request, and you can analyze these attributes to detect anomalous behavior. This blog post focuses on the following two scenarios:

- Identifying anomalous behavior based on a high number of web requests coming from an unexpected country (Country Code is one of the request fields captured in AWS WAF logs).

- Identifying anomalous behavior based on HTTP method for a read-heavy application like a content media website that receives unexpected write requests.

Log analysis is essential for understanding the effectiveness of any security solution. It helps with day-to-day troubleshooting, and also with long-term understanding of how your security environment is performing.

AWS WAF is a web application firewall that helps protect your web applications from common web exploits which could affect application availability, compromise security, or consume excessive resources. AWS WAF gives you control over which traffic sent to your web applications is allowed or blocked, by defining customizable web security rules. AWS WAF lets you define multiple types of rules to block unauthorized traffic.

Machine learning can assist in identifying unusual or unexpected behavior. Amazon OpenSearch Service is a commonly used service that offers log analytics for monitoring service logs through dashboards and alerting mechanisms. Static, rule‑based analytics approaches are slow to adapt to evolving workloads, and can miss critical issues. With the announcement of real-time anomaly detection support in Amazon OpenSearch Service, you can use machine learning to detect anomalies in real‑time streaming data, and identify issues as they evolve so you can mitigate them quickly. Real‑time anomaly detection support uses Random Cut Forest (RCF), an unsupervised algorithm that continuously adapts to evolving data patterns. Simply stated, RCF takes a set of random data points, divides them into multiple groups, each with the same number of points, and then builds a collection of models. As an unsupervised algorithm, RCF uses cluster analysis to detect spikes in time series data, breaks in periodicity or seasonality, and data point exceptions. The anomaly detection feature is lightweight, with the computational load distributed across Amazon OpenSearch Service nodes. Figure 1 shows the architecture of the solution described in this blog post.

Figure 1: End-to-end architecture

The architecture flow shown in Figure 1 includes the following high-level steps:

- AWS WAF streams logs to Kinesis Data Firehose.

- Kinesis Data Firehose invokes a Lambda function to add attributes to the AWS WAF logs.

- Kinesis Data Firehose sends the transformed source records to Amazon OpenSearch Service.

- Amazon OpenSearch Service automatically detects anomalies.

- Amazon OpenSearch Service delivers anomaly alerts via Amazon Simple Notification Service (Amazon SNS).

Solution

Figure 2 shows examples of both an original and a modified AWS WAF log. The solution in this blog post focuses on Country and httpMethod. It uses a Lambda function to transform the AWS WAF log by adding fields, as shown in the snippet on the right side. The values of the newly added fields are evaluated based on the values of country and httpMethod in the AWS WAF log.

Figure 2: Sample processing done by a Lambda function

In this solution, you will use a Lambda function to introduce new fields to the incoming AWS WAF logs through Kinesis Data Firehose. You will introduce additional fields by using one-hot encoding to represent each incoming categorical value (such as a country code or an HTTP method) as a “1” or “0”.

Scenario 1

In this scenario, the goal is to detect traffic from unexpected countries when serving user traffic expected to be from the US and UK. The function adds three new fields:

usTraffic

ukTraffic

otherTraffic

As shown in the Lambda function code, the traffic_from_country function considers only requests whose action is ALLOW, and then checks the country code. If the value of the country field in the web request captured in the AWS WAF log is US, the usTraffic field in the transformed data is assigned the value 1, while otherTraffic and ukTraffic are assigned the value 0. The other two fields are transformed as shown in Table 1.

| Country (original AWS WAF log) | usTraffic (added) | ukTraffic (added) | otherTraffic (added) |
| --- | --- | --- | --- |
| US | 1 | 0 | 0 |
| UK | 0 | 1 | 0 |
| All other country codes | 0 | 0 | 1 |

Table 1: One-hot encoding field mapping for country
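The mapping in Table 1 can be sketched in a few lines of Python. This is only an illustration: the function name traffic_from_country comes from the post, but its signature and return shape are assumptions, and the literal "UK" simply mirrors Table 1.

```python
def traffic_from_country(country_code: str) -> dict:
    """One-hot encode a country code into the three fields from Table 1.

    Assumption: the code values "US" and "UK" mirror Table 1. Adjust the
    literals if your logs carry a different code for the same country
    (for example, the ISO 3166 code "GB").
    """
    return {
        "usTraffic": 1 if country_code == "US" else 0,
        "ukTraffic": 1 if country_code == "UK" else 0,
        # Every code other than the two expected ones counts as "other".
        "otherTraffic": 0 if country_code in ("US", "UK") else 1,
    }


print(traffic_from_country("FR"))
```

Exactly one of the three fields is 1 for any input, which is what lets the detector later sum otherTraffic per interval.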

Scenario 2

In the second scenario, you detect anomalous requests that use the POST HTTP method.

As shown in the Lambda function code, the filter_http_request_method function considers only requests whose action is ALLOW, and then checks the HTTP request method. If the value of the HTTP method in the AWS WAF log is GET, the getHttpMethod field is assigned the value 1, while headHttpMethod and postHttpMethod are assigned the value 0. The other two fields are transformed as shown in Table 2.

| HTTP method (original AWS WAF log) | getHttpMethod (added) | headHttpMethod (added) | postHttpMethod (added) |
| --- | --- | --- | --- |
| GET | 1 | 0 | 0 |
| HEAD | 0 | 1 | 0 |
| POST | 0 | 0 | 1 |

Table 2: One-hot encoding field mapping for HTTP method
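Table 2 can be expressed as a lookup from method name to field name. The sketch below is hypothetical: the function name is the one the post mentions, while the lookup table, the signature, and the choice to leave all three fields at 0 for methods that Table 2 does not list (such as PUT or DELETE) are assumptions.

```python
# Field added for each HTTP method listed in Table 2.
HTTP_METHOD_FIELDS = {
    "GET": "getHttpMethod",
    "HEAD": "headHttpMethod",
    "POST": "postHttpMethod",
}


def filter_http_request_method(http_method: str) -> dict:
    """One-hot encode an HTTP method into the three fields from Table 2."""
    fields = dict.fromkeys(HTTP_METHOD_FIELDS.values(), 0)
    field = HTTP_METHOD_FIELDS.get(http_method.upper())
    if field is not None:
        fields[field] = 1
    # Assumption: methods outside Table 2 leave every field at 0.
    return fields


print(filter_http_request_method("POST"))
```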

After adding these new fields, the transformed record from Lambda must contain the following parameters before the data is sent back to Kinesis Data Firehose:

| Parameter | Description |
| --- | --- |
| recordId | The transformed record must contain the same record ID that was received from Kinesis Data Firehose. |
| result | The status of the data transformation of the record (Ok, Dropped, or ProcessingFailed). |
| data | The transformed data payload, base64-encoded. |

AWS WAF logs are JSON files, and this anomaly detection feature works only on numeric data. This means that to use this feature for detecting anomalies in logs, you must pre-process your logs using a Lambda function.

Lambda function for one-hot encoding

Use the following Lambda function to transform the AWS WAF log by adding new attributes, as explained in Scenario 1 and Scenario 2.
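The function itself is not reproduced in this copy of the post. As a stand-in, here is a minimal sketch of a Kinesis Data Firehose transformation handler that follows the description above. It is not the authors' code: the helper one_hot_fields and the decision to leave all new fields at 0 for requests whose action is not ALLOW are assumptions. The record envelope (recordId, result, data, with base64-encoded payloads) is the standard Firehose transformation contract.

```python
import base64
import json

NEW_FIELDS = ("usTraffic", "ukTraffic", "otherTraffic",
              "getHttpMethod", "headHttpMethod", "postHttpMethod")
METHOD_FIELDS = {"GET": "getHttpMethod", "HEAD": "headHttpMethod",
                 "POST": "postHttpMethod"}


def one_hot_fields(log: dict) -> dict:
    """Hypothetical helper: compute the fields from Table 1 and Table 2."""
    fields = dict.fromkeys(NEW_FIELDS, 0)
    if log.get("action") != "ALLOW":
        return fields  # assumption: non-ALLOW requests keep every field at 0
    request = log.get("httpRequest", {})
    country = request.get("country")
    if country == "US":
        fields["usTraffic"] = 1
    elif country == "UK":  # mirrors Table 1
        fields["ukTraffic"] = 1
    else:
        fields["otherTraffic"] = 1
    method_field = METHOD_FIELDS.get(request.get("httpMethod"))
    if method_field:
        fields[method_field] = 1
    return fields


def lambda_handler(event, context):
    """Add the one-hot fields to every record and return them to Firehose."""
    output = []
    for record in event["records"]:
        log = json.loads(base64.b64decode(record["data"]))
        log.update(one_hot_fields(log))
        payload = json.dumps(log).encode("utf-8")
        output.append({
            "recordId": record["recordId"],  # must match the incoming ID
            "result": "Ok",
            "data": base64.b64encode(payload).decode("utf-8"),
        })
    return {"records": output}
```

A production function would also catch malformed records and return them with a ProcessingFailed result instead of raising.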

After the transformation, the data that’s delivered to Amazon OpenSearch Service will have additional fields, as described in Table 1 and Table 2 above. You can configure an anomaly detector in Amazon OpenSearch Service to monitor these additional fields. The algorithm computes an anomaly grade and confidence score value for each incoming data point. Anomaly detection uses these values to differentiate an anomaly from normal variations in your data. Anomaly detection and alerting are plugins that are included in the available set of Amazon OpenSearch Service plugins. You can use these two plugins to generate a notification as soon as an anomaly is detected.

Deployment steps

In this section, you complete five high-level steps to deploy the solution. In this blog post, we are deploying this solution in the us-east-1 Region. The solution assumes you already have an active web application protected by AWS WAF rules. If you’re looking for details on creating AWS WAF rules, refer to Working with web ACLs and sample examples for more information.

Note: When you associate a web ACL with Amazon CloudFront as a protected resource, make sure that the Kinesis Data Firehose delivery stream is deployed in the us-east-1 Region.

The steps are:

- Deploy an AWS CloudFormation template

- Enable AWS WAF logs

- Create an anomaly detector

- Set up alerts in Amazon OpenSearch Service

- Create a monitor for the alerts

Deploy a CloudFormation template

To start, deploy a CloudFormation template to create the following AWS resources:

- Amazon OpenSearch Service and Kibana (versions 1.5 to 7.10) with built-in AWS WAF dashboards.

- Kinesis Data Firehose streams

- A Lambda function for data transformation and an Amazon SNS topic with email subscription.

To deploy the CloudFormation template

- Download the CloudFormation template and save it locally as Amazon-ES-Stack.yaml.

- Go to the AWS Management Console and open the CloudFormation console.

- Choose Create Stack.

- On the Specify template page, choose Upload a template file. Then select Choose File, and select the template file that you downloaded in step 1.

- Choose Next.

- Provide the Parameters:

- Enter a unique name for your CloudFormation stack.

- Update the email address for UserEmail with the address you want alerts sent to.

- Choose Next.

- Review and choose Create stack.

- When the CloudFormation stack status changes to CREATE_COMPLETE, go to the Outputs tab and make note of the DashboardLinkOutput value. Also note the credentials you’ll receive by email (Subject: Your temporary password) and subscribe to the SNS topic for which you’ll also receive an email confirmation request.

Enable AWS WAF logs

Before enabling the AWS WAF logs, you should have AWS WAF web ACLs set up to protect your web application traffic. From the console, open the AWS WAF service and choose your existing web ACL. Open your web ACL resource, which can either be deployed on an Amazon CloudFront distribution or on an Application Load Balancer.

To enable AWS WAF logs

- From the AWS WAF home page, choose the web ACL for which you want to enable logging, as shown in Figure 3:

Figure 3 – Enabling WAF logging

- Go to the Logging and metrics tab, and then choose Enable Logging. The next page displays all the delivery streams that start with aws-waf-logs. Choose the Kinesis Data Firehose delivery stream that was created by the CloudFormation template, as shown in Figure 3 (in this example, aws-waf-logs-useast1). Don’t redact any fields or add filters. Select Save.

Create an Index template

Index templates let you initialize new indices with predefined mappings. For example, in this case you predefine the mapping for the timestamp field.

To create an Index template

- Log in to the Kibana dashboard. You can find the Kibana dashboard link in the Outputs tab of the CloudFormation stack. You should have received the username and temporary password by email, at the email address you entered as part of deploying the CloudFormation template (ignore the period (.) at the end of the temporary password). You will be logged in to the Kibana dashboard after setting a new password.

- Choose Dev Tools in the left menu panel to access Kibana’s console.

- The left pane in the console is the request pane, and the right pane is the response pane.

- Select the green arrow at the end of the command line to execute the following PUT command.

- You should see the following response:

The command creates a template named awswaf and applies it to any new index whose name matches the wildcard pattern awswaf-*.
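The PUT command and its response are not reproduced in this copy. The Python sketch below builds a request that matches the description: a template named awswaf, applied to awswaf-* indices, that maps timestamp as a date. The epoch_millis format and the typeless 7.x mapping layout are assumptions, so check them against your domain version before using the output in Dev Tools.

```python
import json

TEMPLATE_NAME = "awswaf"

# Assumption: AWS WAF timestamps are epoch milliseconds, so the field is
# mapped as a date with the epoch_millis format.
template_body = {
    "index_patterns": ["awswaf-*"],
    "mappings": {
        "properties": {
            "timestamp": {"type": "date", "format": "epoch_millis"}
        }
    },
}

# Text that can be pasted into the request pane of the Dev Tools console.
console_request = "PUT _template/{}\n{}".format(
    TEMPLATE_NAME, json.dumps(template_body, indent=2)
)
print(console_request)
```

A successful request is normally acknowledged with a short JSON response containing "acknowledged": true.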

Create an anomaly detector

A detector is an individual anomaly detection task. You can create multiple detectors, and all the detectors can run simultaneously, with each analyzing data from different sources.

To create an anomaly detector

- Select Anomaly Detection from the menu bar, select Detectors and Create Detector.

Figure 4 – Home page view with menu bar on the left

- To create a detector, enter the following values and features:

Name and description

Name: aws-waf-country

Description: Detect anomalies on other country values apart from “US” and “UK”

Data Source

Index: awswaf*

Timestamp field: timestamp

Data filter: Visual editor

Figure 5 – Detector features and their values

- For Detector operation settings, enter a value in minutes for the Detector interval to set the time interval at which the detector collects data. To add extra processing time for data collection, set a Window delay value (also in minutes). This tells the detector that the data isn’t ingested into Amazon OpenSearch Service in real time, but with a delay. The example in Figure 6 uses a 1-minute interval and a 2-minute delay.

Figure 6 – Detector operation settings

- Next, select Create.

- Once you create a detector, select Configure Model and add the following values to Model configuration:

Feature Name: waf-country-other

Feature State: Enable feature

Find anomalies based on: Field value

Aggregation method: sum()

Field: otherTraffic

The aggregation method determines what constitutes an anomaly. For example, if you choose min(), the detector focuses on finding anomalies based on the minimum values of your feature. If you choose average(), the detector finds anomalies based on the average values of your feature. For this scenario, you will use sum(). The value otherTraffic for Field is the transformed field in the Amazon OpenSearch Service logs that was added by the Lambda function.

Figure 7 – Detector Model configuration

- Under Advanced Settings on the Model configuration page, update the Window size to an appropriate interval (1 equals 1 minute) and choose Save and Start detector and Automatically start detector.

We recommend you choose this value based on your actual data. If you expect missing values in your data, or if you want the anomalies based on the current value, choose 1. If your data is continuously ingested and you want the anomalies based on multiple intervals, choose a larger window size.

Note: The detector takes 4 to 5 minutes to start.

Figure 8 – Detector window size
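The console settings above can also be written down as a detector definition. The sketch below collects them in a Python dictionary shaped like the request body of the anomaly detection plugin's create-detector API (POST _opendistro/_anomaly_detection/detectors). The field names reflect that API as best understood here and should be verified against the plugin documentation for your version. The aggregation key waf_country_other is an arbitrary, hypothetical name.

```python
import json

detector = {
    "name": "aws-waf-country",
    "description": "Detect anomalies on other country values apart from US and UK",
    "time_field": "timestamp",
    "indices": ["awswaf*"],
    "feature_attributes": [
        {
            "feature_name": "waf-country-other",
            "feature_enabled": True,
            # sum() over the one-hot field added by the Lambda function
            "aggregation_query": {
                "waf_country_other": {"sum": {"field": "otherTraffic"}}
            },
        }
    ],
    # 1-minute interval and 2-minute window delay, as in Figure 6
    "detection_interval": {"period": {"interval": 1, "unit": "Minutes"}},
    "window_delay": {"period": {"interval": 2, "unit": "Minutes"}},
}

print(json.dumps(detector, indent=2))
```

Because otherTraffic is 0 or 1 per request, the sum over each interval is simply the number of allowed requests from unexpected countries in that interval.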

Set up alerts

You’ll use Amazon SNS as a destination for alerts from Amazon OpenSearch Service.

Note: A destination is a reusable location for an action.

To set up alerts:

- Go to the Kibana main menu bar and select Alerting, and then navigate to the Destinations tab.

- Select Add destination and enter a unique name for the destination.

- For Type, choose Amazon SNS and provide the topic ARN that was created as part of the CloudFormation resources (captured in the Outputs tab).

- Provide the ARN for an IAM role that was created as part of the CloudFormation outputs (SNSAccessIAMRole-********) that has the following trust relationship and permissions (at a minimum):

Figure 9 – Destination

Note: For more information, see Adding IAM Identity Permissions in the IAM user guide.

- Choose Create.
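The trust relationship and permissions for the role in the steps above are not reproduced in this copy. The sketch below shows one plausible minimal pair of policy documents, assuming the role is assumed by the service principal es.amazonaws.com and only needs to publish to the alert topic. The function name and the example ARN are hypothetical; compare the output with the role the CloudFormation template created.

```python
import json


def sns_destination_policies(topic_arn: str) -> tuple:
    """Return (trust_policy, permissions_policy) for the alerting role."""
    trust_policy = {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            # Assumption: the OpenSearch Service principal assumes the role.
            "Principal": {"Service": "es.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }
    permissions_policy = {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": "sns:Publish",
            "Resource": topic_arn,
        }],
    }
    return trust_policy, permissions_policy


# Placeholder ARN for illustration only.
trust, permissions = sns_destination_policies(
    "arn:aws:sns:us-east-1:123456789012:example-topic"
)
print(json.dumps(trust, indent=2))
print(json.dumps(permissions, indent=2))
```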

Create a monitor

A monitor can be defined as a job that runs on a defined schedule and queries Amazon OpenSearch Service. The results of these queries are then used as input for one or more triggers.

To create a monitor for the alert

- Select Alerting on the Kibana main menu and navigate to the Monitors tab. Select Create monitor

- Create a new record with the following values:

Monitor Name: aws-waf-country-monitor

Method of definition: Define using anomaly detector

Detector: aws-waf-country

Monitor schedule: Every 2 minutes

- Select Create.

Figure 10 – Create monitor

- Choose Create Trigger to connect monitoring alert with the Amazon SNS topic using the below values:

Trigger Name: SNS_Trigger

Severity Level: 1

Trigger Type: Anomaly Detector grade and confidence

Under Configure Actions, set the following values:

Action Name: SNS-alert

Destination: select the name of the destination you created in the Set up alerts section

Message Subject: “Anomaly detected – Country”

Message: <Use the default message displayed>

- Select Create to create the trigger.

Figure 11 – Create trigger

Figure 12 – Configure actions

Test the solution

Now that you’ve deployed the solution, the AWS WAF logs will be sent to Amazon OpenSearch Service.

Kinesis Data Generator sample template

When testing the environment covered in this blog outside a production context, we used Kinesis Data Generator to generate sample user traffic with the template below, changing the country strings in different runs to reflect expected records or anomalous ones. Other tools are also available.
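The Kinesis Data Generator template is not reproduced in this copy. As a rough stand-in (not Kinesis Data Generator template syntax), the Python sketch below produces records that carry only the AWS WAF log fields this solution reads. The function names, the documentation-range IP addresses, and the omission of all other log fields are assumptions made for illustration.

```python
import json
import random
import time


def sample_waf_record(country: str, http_method: str = "GET", rng=random) -> dict:
    """Build a pared-down, hypothetical AWS WAF log entry."""
    return {
        "timestamp": int(time.time() * 1000),  # epoch milliseconds
        "action": "ALLOW",
        "httpRequest": {
            "clientIp": "192.0.2.{}".format(rng.randint(1, 254)),
            "country": country,
            "httpMethod": http_method,
            "uri": "/",
        },
    }


def sample_batch(countries, count, seed=0):
    """Generate `count` records with countries drawn from `countries`."""
    rng = random.Random(seed)
    return [sample_waf_record(rng.choice(countries), rng=rng) for _ in range(count)]


# Expected traffic for one run, then an anomalous run with other countries.
expected = sample_batch(["US", "UK"], 5)
anomalous = sample_batch(["FR", "BR", "IN"], 5)
print(json.dumps(anomalous[0]))
```

Sending an expected batch for a while and then switching to the anomalous one mirrors the approach of changing the country strings between runs.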

You will receive an email alert via Amazon SNS if the traffic contains any anomalous data. You should also be able to view the anomalies recorded in Amazon OpenSearch Service by selecting the detector and choosing Anomaly results for the detector, as shown in Figure 13.

Figure 13 – Anomaly results

Conclusion

In this post, you learned how you can discover anomalies in AWS WAF logs across parameters like Country and httpMethod defined by the attribute values. You can further expand your anomaly detection use cases with application logs and other AWS Service logs. To learn more about this feature with Amazon OpenSearch Service, we suggest reading the Amazon OpenSearch Service documentation. We look forward to hearing your questions, comments, and feedback.

If you found this post interesting and useful, you may be interested in https://aws.amazon.com/blogs/security/how-to-improve-visibility-into-aws-waf-with-anomaly-detection/ and https://aws.amazon.com/blogs/big-data/analyzing-aws-waf-logs-with-amazon-es-amazon-athena-and-amazon-quicksight/ as further alternative approaches.

If you have feedback about this post, submit comments in the Comments section below.

Want more AWS Security news? Follow us on Twitter.

Women in Film: A Conversation with Regina Hall

Post Syndicated from The Atlantic original https://www.youtube.com/watch?v=Fm9faUddc88

Film Talk: Call Jane

Post Syndicated from The Atlantic original https://www.youtube.com/watch?v=10P7Se3W6Qs

Film Talk: 892

Post Syndicated from The Atlantic original https://www.youtube.com/watch?v=s9igBgVu5TQ

Highlights from Git 2.35

Post Syndicated from Taylor Blau original https://github.blog/2022-01-24-highlights-from-git-2-35/

The open source Git project just released Git 2.35, with features and bug fixes from over 93 contributors, 35 of them new. We last caught up with you on the latest in Git back when 2.34 was released. To celebrate this most recent release, here’s GitHub’s look at some of the most interesting features and changes introduced since last time.

- When working on a complicated change, it can be useful to temporarily discard parts of your work in order to deal with them separately. To do this, we use the `git stash` tool, which stores away any changes to files tracked in your Git repository.

Using `git stash` this way makes it really easy to store all accumulated changes for later use. But what if you only want to store part of your changes in the stash? You could use `git stash -p` and interactively select hunks to stash or keep. But what if you already did that via an earlier `git add -p`? Perhaps when you started, you thought you were ready to commit something, but by the time you finished staging everything, you realized that you actually needed to stash it all away and work on something else.

`git stash`’s new `--staged` mode makes it easy to stash away what you already have in the staging area, and nothing else. You can think of it like `git commit` (which only writes staged changes), but instead of creating a new commit, it writes a new entry to the stash. Then, when you’re ready, you can recover your changes (with `git stash pop`) and keep working.

[source]

- `git log` has a rich set of `--format` options that you can use to customize the output of `git log`. These can be handy when sprucing up your terminal, but they are especially useful for making it easier to script around the output of `git log`.

In our blog post covering Git 2.33, we talked about a new `--format` specifier called `%(describe)`. This made it possible to include the output of `git describe` alongside the output of `git log`. When it was first released, you could pass additional options down through the `%(describe)` specifier, like matching or excluding certain tags by writing `--format=%(describe:match=<foo>,exclude=<bar>)`.

In 2.35, Git includes a couple of new ways to tweak the output of `git describe`. You can now control whether to use lightweight tags, and how many hexadecimal characters to use when abbreviating an object identifier.

You can try these out with `%(describe:tags=<bool>)` and `%(describe:abbrev=<n>)`, respectively. Here’s a goofy example that gives me the `git describe` output for the last 8 commits in my copy of `git.git`, using only non-release-candidate tags, and uses 13 characters to abbreviate their hashes:

    $ git log -8 --format='%(describe:exclude=*-rc*,abbrev=13)'
    v2.34.1-646-gaf4e5f569bc89
    v2.34.1-644-g0330edb239c24
    v2.33.1-641-g15f002812f858
    v2.34.1-643-g2b95d94b056ab
    v2.34.1-642-gb56bd95bbc8f7
    v2.34.1-203-gffb9f2980902d
    v2.34.1-640-gdf3c41adeb212
    v2.34.1-639-g36b65715a4132

Which is much cleaner than this alternative way to combine `git log` and `git describe`:

    $ git log -8 --format='%H' | xargs git describe --exclude='*-rc*' --abbrev=13

[source]

- In our last post, we talked about SSH signing: a new feature in Git that allows you to use the SSH key you likely already have in order to sign certain kinds of objects in Git.

This release includes a couple of new additions to SSH signing. Suppose you use SSH keys to sign objects in a project you work on. To track which SSH keys you trust, you use the allowed signers file to store the identities and public keys of signers you trust.

Now suppose that one of your collaborators rotates their key. What do you do? You could update their entry in the allowed signers file to point at their new key, but that would make it impossible to validate objects signed with the older key. You could store both keys, but that would mean that you would accept new objects signed with the old key.

Git 2.35 lets you take advantage of OpenSSH’s `valid-before` and `valid-after` directives by making sure that the object you’re verifying was signed using a signature that was valid when it was created. This allows individuals to rotate their SSH keys by keeping track of when each key was valid without invalidating any objects previously signed using an older key.

Git 2.35 also supports new key types in the `user.signingKey` configuration when you include the key verbatim (instead of storing the path of a file that contains the signing key). Previously, the rule for interpreting `user.signingKey` was to treat its value as a literal SSH key if it began with “ssh-”, and to treat it as a file path otherwise. You can now specify literal SSH keys with key types that don’t begin with “ssh-” (like ECDSA keys).

- If you’ve ever dealt with a merge conflict, you know that accurately resolving conflicts takes some careful thinking. You may not have heard of Git’s `merge.conflictStyle` setting, which makes resolving conflicts just a little bit easier.

The default value for this configuration is “merge”, which produces the merge conflict markers that you are likely familiar with. But there is a different mode, “diff3”, which shows the merge base in addition to the changes on either side.

Git 2.35 introduces a new mode, “zdiff3”, which zealously moves any lines in common at the beginning or end of a conflict outside of the conflicted area, which makes the conflict you have to resolve a little bit smaller.

For example, say I have a list with a placeholder comment, and I merge two branches that each add different content to fill in the placeholder. The usual merge conflict might look something like this:

    1,
    foo,
    bar,
    <<<<<<< HEAD
    =======
    quux,
    woot,
    >>>>>>> side
    baz,
    3,

Trying again with diff3-style conflict markers shows me the merge base (revealing a comment that I didn’t know was previously there) along with the full contents of either side, like so:

    1,
    <<<<<<< HEAD
    foo,
    bar,
    baz,
    ||||||| 60c6bd0
    # add more here
    =======
    foo,
    bar,
    quux,
    woot,
    baz,
    >>>>>>> side
    3,

The above gives us more detail, but notice that both sides add “foo” and “bar” at the beginning and “baz” at the end. Trying one last time with zdiff3-style conflict markers moves the “foo” and “bar” outside of the conflicted region altogether. The result is both more accurate (since it includes the merge base) and more concise (since it handles redundant parts of the conflict for us).

    1,
    foo,
    bar,
    <<<<<<< HEAD
    ||||||| 60c6bd0
    # add more here
    =======
    quux,
    woot,
    >>>>>>> side
    baz,
    3,

[source]

- You may (or may not!) know that Git supports a handful of different algorithms for generating a diff. The usual algorithm (and the one you are likely already familiar with) is the Myers diff algorithm. Another is the `--patience` diff algorithm and its cousin `--histogram`. These can often lead to more human-readable diffs (for example, by avoiding a common issue where adding a new function starts the diff by adding a closing brace to the function immediately preceding the new one).

In Git 2.35, `--histogram` got a nice performance boost, which should make it faster in many cases. The details are too complicated to include in full here, but you can check out the reference below and see all of the improvements and juicy performance numbers.

[source]

- If you’re a fan of performance improvements (and `diff` options!), here’s another one you might like. You may have heard of `git diff`’s `--color-moved` option (if you haven’t, we talked about it back in our Highlights from Git 2.17). You may not have heard of the related `--color-moved-ws`, which controls how whitespace is or isn’t ignored when colorizing diffs. You can think of it like the other space-ignoring options (like `--ignore-space-at-eol`, `--ignore-space-change`, or `--ignore-all-space`), but specifically for when you’re running diff in the `--color-moved` mode.

Like the above, Git 2.35 also includes a variety of performance improvements for `--color-moved-ws`. If you haven’t tried `--color-moved` yet, give it a try! If you already use it in your workflow, it should get faster just by upgrading to Git 2.35.

[source]

- Way back in our Highlights from Git 2.19, we talked about how a new feature in `git grep` allowed the `git jump` addon to populate your editor with the exact locations of `git grep` matches.

In case you aren’t familiar with `git jump`, here’s a quick refresher. `git jump` populates Vim’s quickfix list with the locations of merge conflicts, grep matches, or diff hunks (by running `git jump merge`, `git jump grep`, or `git jump diff`, respectively).

In Git 2.35, `git jump merge` learned how to narrow the set of merge conflicts using a pathspec. So if you’re working on resolving a big merge conflict, but you only want to work on a specific section, you can run:

    $ git jump merge -- foo

to only focus on conflicts in the `foo` directory. Alternatively, if you want to skip conflicts in a certain directory, you can use the special negative pathspec like so:

    # Skip any conflicts in the Documentation directory for now.
    $ git jump merge -- ':^Documentation'

[source]

- You might have heard of Git’s “clean” and “smudge” filters, which allow users to specify how to “clean” files when staging, or “smudge” them when populating the working copy. Git LFS makes extensive use of these filters to represent large files with stand-in “pointers.” Large files are converted to pointers when staging with the clean filter, and then back to large files when populating the working copy with the smudge filter.

Git has historically used the `size_t` and `unsigned long` types relatively interchangeably. This is understandable, since Git was originally written on Linux where these two types have the same width (and therefore, the same representable range of values).

But on Windows, which uses the LLP64 data model, the `unsigned long` type is only 4 bytes wide, whereas `size_t` is 8 bytes wide. Because the clean and smudge filters had previously used `unsigned long`, this meant that they were unable to process files larger than 4GB in size on platforms conforming to LLP64.

The effort to standardize on the correct `size_t` type to represent object length continues in Git 2.35, which makes it possible for filters to handle files larger than 4GB, even on LLP64 platforms like Windows [1].

[source]

- If you haven’t used Git in a patch-based workflow where patches are emailed back and forth, you may be unaware of the `git am` command, which extracts patches from a mailbox and applies them to your repository.

Previously, if you tried to `git am` an email which did not contain a patch, you would get dropped into a state like this:

    $ git am /path/to/mailbox
    Applying: [...]
    Patch is empty.
    When you have resolved this problem, run "git am --continue".
    If you prefer to skip this patch, run "git am --skip" instead.
    To restore the original branch and stop patching, run "git am --abort".

This can often happen when you save the entire contents of a patch series, including its cover letter (the customary first email in a series, which contains a description of the patches to come but does not itself contain a patch) and try to apply it.

In Git 2.35, you can specify how `git am` will behave should it encounter an empty commit with `--empty=<stop|drop|keep>`. These options instruct `am` to either halt applying patches entirely, drop any empty patches, or apply them as-is (creating an empty commit, but retaining the log message). If you forgot to specify an `--empty` behavior but tried to apply an empty patch, you can run `git am --allow-empty` to apply the current patch as-is and continue.

[source]

- Returning readers may remember our discussion of the sparse index, a Git feature that improves performance in repositories that use sparse-checkout. The aforementioned link describes the feature in detail, but the high-level gist is that it stores a compacted form of the index that grows along with the size of your checkout rather than the size of your repository.

In 2.34, the sparse index was integrated into a handful of commands, including `git status`, `git add`, and `git commit`. In 2.35, command support for the sparse index grew to include integrations with `git reset`, `git diff`, `git blame`, `git fetch`, `git pull`, and a new mode of `git ls-files`.

- Speaking of sparse-checkout, the `git sparse-checkout` builtin has deprecated the `git sparse-checkout init` subcommand in favor of using `git sparse-checkout set`. All of the options that were previously available in the `init` subcommand are still available in the `set` subcommand. For example, you can enable cone-mode sparse-checkout and include the directory `foo` with this command:

    $ git sparse-checkout set --cone foo

[source]

- Git stores references (such as branches and tags) in your repository in one of two ways: either “loose” as a file inside of `.git/refs` (like `.git/refs/heads/main`) or “packed” as an entry inside of the file at `.git/packed-refs`.

But for repositories with truly gigantic numbers of references, it can be inefficient to store them all together in a single file. The reftable proposal outlines the alternative way that JGit stores references in a block-oriented fashion. JGit has been using reftable for many years, but Git has not had its own implementation.

Reftable promises to improve reading and writing performance for repositories with a large number of references. Work has been underway for quite some time to bring an implementation of reftable to Git, and Git 2.35 comes with an initial import of the reftable backend. This new backend isn’t yet integrated with the refs, so you can’t start using reftable just yet, but we’ll keep you posted about any new developments in the future.

[source]

The rest of the iceberg

That’s just a sample of changes from the latest release. For more, check out the release notes for 2.35, or any previous version in the Git repository.

- Note that these patches shipped to Git for Windows via its 2.34 release, so technically this is old news! But we’ll still mention it anyway.

Evolve JSON Schemas in Amazon MSK and Amazon Kinesis Data Streams with the AWS Glue Schema Registry

Post Syndicated from Aditya Challa original https://aws.amazon.com/blogs/big-data/evolve-json-schemas-in-amazon-msk-and-amazon-kinesis-data-streams-with-the-aws-glue-schema-registry/

Data is being produced, streamed, and consumed at an immense rate, and that rate is projected to grow exponentially in the future. In particular, JSON is the most widely used data format across streaming technologies and workloads. As applications, websites, and machines increasingly adopt data streaming technologies such as Apache Kafka and Amazon Kinesis Data Streams, which serve as a highly available transport layer that decouples the data producers from data consumers, it can become progressively more challenging for teams to coordinate and evolve JSON Schemas. Adding or removing a field or changing the data type on one or more existing fields could introduce data quality issues and downstream application failures without careful data handling. Teams rely on custom tools, complex code, tedious processes, or unreliable documentation to protect against these schema changes. This puts heavy dependency on human oversight, which can make the change management processes error-prone. A common solution is a schema registry that enables data producers and consumers to perform validation of schema changes in a coordinated fashion. This allows for risk-free evolution as business demands change over time.

The AWS Glue Schema Registry, a serverless feature of AWS Glue, now enables you to validate and reliably evolve streaming data against JSON Schemas. The Schema Registry is a free feature that can significantly improve data quality and developer productivity. With it, you can eliminate defensive coding and cross-team coordination, reduce downstream application failures, and use a registry that is integrated across multiple AWS services. Each schema can be versioned within the guardrails of a compatibility mode, providing developers the flexibility to reliably evolve JSON Schemas. Additionally, the Schema Registry can serialize data into a compressed format, which helps you save on data transfer and storage costs.

This post shows you how to use the Schema Registry for JSON Schemas and provides examples of how to use it with both Kinesis Data Streams and Apache Kafka or Amazon Managed Streaming for Apache Kafka (Amazon MSK).

Overview of the solution

In this post, we walk you through a solution to store, validate, and evolve a JSON Schema in the AWS Glue Schema Registry. The schema is used by Apache Kafka and Kinesis Data Streams applications while producing and consuming JSON objects. We also show you what happens when a new version of the schema is created with a new field.

The following diagram illustrates our solution workflow:

The steps to implement this solution are as follows:

- Create a new registry and register a schema using an AWS CloudFormation template.

- Create a new version of the schema using the AWS Glue console that is backward-compatible with the previous version.

- Build a producer application to do the following:

  - Generate JSON objects that adhere to one of the schema versions.

  - Serialize the JSON objects into an array of bytes.

  - Obtain the corresponding schema version ID from the Schema Registry and encode the byte array with the same.

  - Send the encoded byte array through a Kinesis data stream or Apache Kafka topic.

- Build a consumer application to do the following:

  - Receive the encoded byte array through a Kinesis data stream or Apache Kafka topic.

  - Decode the schema version ID and obtain the corresponding schema from the Schema Registry.

  - Deserialize the array of bytes into the original JSON object.

  - Consume the JSON object as needed.
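The producer and consumer steps above can be sketched in a few lines of Python. This is only an illustration of the idea, not the AWS Glue Schema Registry library: the in-memory REGISTRY dict, the register_schema, encode, and decode helpers, the 16-byte ID prefix, and the sample record values are all assumptions made for this example and do not reproduce the library's actual wire format.

```python
import json
import uuid

# Hypothetical in-memory stand-in for the Schema Registry:
# schema version ID -> schema definition.
REGISTRY = {}


def register_schema(schema: dict) -> uuid.UUID:
    """Store a schema and return the version ID assigned to it."""
    version_id = uuid.uuid4()
    REGISTRY[version_id] = schema
    return version_id


def encode(record: dict, version_id: uuid.UUID) -> bytes:
    """Producer side: serialize the record and prefix the schema version ID."""
    payload = json.dumps(record).encode("utf-8")
    return version_id.bytes + payload  # 16-byte ID, then the JSON bytes


def decode(message: bytes):
    """Consumer side: read the ID, look up the schema, deserialize the record."""
    version_id = uuid.UUID(bytes=message[:16])
    schema = REGISTRY[version_id]  # raises KeyError for an unknown ID
    record = json.loads(message[16:].decode("utf-8"))
    return schema, record


# Made-up schema fragment and record, used only to exercise the helpers.
schema_v1 = {"type": "object", "required": ["location", "temperature", "timestamp"]}
vid = register_schema(schema_v1)
message = encode(
    {"location": {"city": "Phoenix", "state": "Arizona"},
     "temperature": 115, "timestamp": "2021-06-16T14:00:00Z"},
    vid,
)
schema, record = decode(message)
```

Because only the ID travels with each message, the schema itself is fetched by the consumer rather than sent with every record.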

Description of the schema used

For this post, we start with the following schema. The schema is of a weather report object that contains three main pieces of data: location, temperature, and timestamp. All three are required fields, but the schema does allow additional fields (indicated by the additionalProperties flag) such as windSpeed or precipitation if the producer wants to include them. The location field is an object with two string fields: city and state. Both are required fields and the schema doesn’t allow any additional fields within this object.

Using the above schema, a valid JSON object would look like this:
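The schema and sample listings are not reproduced in this copy of the post. As a rough stand-in, the following Python sketch builds a schema that matches the description above and checks two objects against it. The types chosen for temperature and timestamp, the sample values, and the is_valid helper are assumptions for illustration; the helper handles only the handful of keywords used here and is not a JSON Schema implementation.

```python
WEATHER_SCHEMA = {
    "type": "object",
    "properties": {
        "location": {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
                "state": {"type": "string"},
            },
            "required": ["city", "state"],
            "additionalProperties": False,
        },
        "temperature": {"type": "integer"},  # assumed type
        "timestamp": {"type": "string"},     # assumed type
    },
    "required": ["location", "temperature", "timestamp"],
    "additionalProperties": True,
}

TYPES = {"object": dict, "string": str, "integer": int}


def is_valid(value, schema) -> bool:
    """Check only: type, properties, required, additionalProperties."""
    if not isinstance(value, TYPES[schema["type"]]):
        return False
    if schema["type"] != "object":
        return True
    props = schema.get("properties", {})
    if any(name not in value for name in schema.get("required", [])):
        return False
    for name, item in value.items():
        if name in props:
            if not is_valid(item, props[name]):
                return False
        elif not schema.get("additionalProperties", True):
            return False
    return True


# Extra top-level fields such as windSpeed are allowed.
good = {"location": {"city": "Phoenix", "state": "Arizona"},
        "temperature": 115, "timestamp": "2021-06-16T14:00:00Z", "windSpeed": 10}
# Extra fields inside location are not allowed.
bad = {"location": {"city": "Phoenix", "state": "Arizona", "zip": "85001"},
       "temperature": 115, "timestamp": "2021-06-16T14:00:00Z"}
```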

Deploy with AWS CloudFormation

For a quick start, you can deploy the provided CloudFormation stack. The CloudFormation template generates the following resources in your account:

- Registry – A registry is a container of schemas. Registries allow you to organize your schemas, as well as manage access control for your applications. A registry has an Amazon Resource Name (ARN) to allow you to organize and set different access permissions to schema operations within the registry.

- Schema – A schema defines the structure and format of a data record. A schema is a versioned specification for reliable data publication, consumption, or storage. Each schema can have multiple versions. Versioning is governed by a compatibility rule that is applied on a schema. Requests to register new schema versions are checked against this rule by the Schema Registry before they can succeed.

To manually create these resources without using AWS CloudFormation, refer to Creating a Registry and Creating a Schema.

Prerequisites

Make sure to complete the following steps as prerequisites:

- Create an AWS account. For this post, you configure the required AWS resources in the us-east-1 or us-west-2 Region. If you haven’t signed up, complete the following tasks:

  - Create an account. For instructions, see Sign Up for AWS.

  - Create an AWS Identity and Access Management (IAM) user. For instructions, see Creating an IAM User in your AWS account.

- Choose Launch Stack to launch the CloudFormation stack:

Review the newly registered schema

Let’s review the registry and the schema on the AWS Glue console.

- Sign in to the AWS Glue console and choose the appropriate Region.

- Under Data Catalog, choose Schema registries.

- Choose the GsrBlogRegistry schema registry.

- Choose the GsrBlogSchema schema.

- Choose Version 1.

We can see the JSON Schema version details and its definition. Note that the compatibility mode chosen is Backward compatibility. We see the purpose of that in the next section.

Evolve the schema by creating a new backward-compatible version

In this section, we take what is created so far and add a new schema version to demonstrate how we can evolve our schema while keeping the integrity intact.

To add a new schema version, complete the following steps, continuing from the previous section:

- On the Schema version details page, choose Register new version.

- Inside the properties object within the location object (after the state field), add a new country field as follows:

Because the compatibility mode chosen for the schema is backward compatibility, it’s important that we don’t make this new field a required field. If we do that, the Schema Registry fails this new version.

- Choose Register version.

We now have a new version of the schema that allows the producers to include an optional country field within the location object if they choose to.
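The reasoning behind keeping the new field optional can be shown with a small Python sketch. It assumes a simplified rule: records written with the old version must still be acceptable under the new version, so the new version must not require fields that the old one did not. The breaks_backward_compatibility helper and the schema fragments are hypothetical, and the Schema Registry's real compatibility checks cover more cases than this.

```python
def breaks_backward_compatibility(old_schema: dict, new_schema: dict) -> bool:
    """Return True if the new version requires fields the old version did not."""
    old_required = set(old_schema.get("required", []))
    new_required = set(new_schema.get("required", []))
    return bool(new_required - old_required)


# Simplified fragments of the location object, before and after the change.
old_location = {
    "properties": {"city": {}, "state": {}},
    "required": ["city", "state"],
}
optional_country = {
    "properties": {"city": {}, "state": {}, "country": {}},
    "required": ["city", "state"],
}
required_country = {
    "properties": {"city": {}, "state": {}, "country": {}},
    "required": ["city", "state", "country"],
}

ok = breaks_backward_compatibility(old_location, optional_country)       # optional field
rejected = breaks_backward_compatibility(old_location, required_country)  # required field
```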

Use the AWS Glue Schema Registry

In this section, we walk through the steps to use the Schema Registry with Kinesis Data Streams or Apache Kafka.

Prerequisites

Make sure to complete the following steps as prerequisites:

- Configure your AWS credentials on your local machine.

- Install Maven on the local machine.

- Download the application code from the GitHub repo.

- Build the package:

Use the Schema Registry with Kinesis Data Streams

Run the Kinesis producer code to produce JSON messages that are associated with a schema ID assigned by the Schema Registry:

This command returns the following output:

Run the Kinesis consumer code to receive JSON messages with the schema ID, obtain the schema from the Schema Registry, and validate:

This command returns the following output with the JSON records received and decoded:

Use the Schema Registry with Apache Kafka

In the root of the downloaded GitHub repo folder, create a config file with the connection parameters for the Kafka cluster:

Run the Kafka producer code to produce JSON messages that are associated with a schema ID assigned by the Schema Registry:

This command returns the following output:

Run the Kafka consumer code to consume JSON messages with the schema ID, obtain the schema from the Schema Registry, and validate:

This command returns the following output with the JSON records received and decoded:

Clean up

Now to the final step, cleaning up the resources. Delete the CloudFormation stack to remove any resources you created as part of this walkthrough.

Schema Registry features

Let’s discuss the features the Schema Registry has to offer:

- Schema discovery – When a producer registers a schema change, metadata can be applied as a key-value pair to provide searchable information for administrators or developers. This metadata can indicate the original source of the data (source=MSK_west), the team’s name to contact (owner=DataEngineering), or AWS tags (environment=Production). You could potentially encrypt a field in your data on the producing client and use metadata to specify to potential consumer clients which public key fingerprint to use for decryption.

- Schema compatibility – The versioning of each schema is governed by a compatibility mode. If a new version of a schema is requested to be registered that breaks the specified compatibility mode, the request fails, and an exception is thrown. Compatibility checks enable developers building downstream applications to have a bounded set of scenarios to build applications against, which helps prepare for the changes without issue. Commonly used modes are FORWARD, BACKWARD, and FULL. For more information about mode definitions, see Schema Versioning and Compatibility.

- Schema validation – Schema Registry serializers work to validate that the data produced is compatible with the assigned schema. If it isn’t, the data producer receives an exception from the serializer. This ensures that potentially breaking changes are found earlier in development cycles and can also help prevent unintentional schema changes due to human error.

- Auto-registration of schemas – If configured to do so, the data producer can auto-register schema changes as they flow in the data stream. This is especially helpful for use cases where the source of the data is generated by a change data capture process (CDC) from the database.

- IAM support – Due to integrated IAM support, only authorized producers can change certain schemas. Furthermore, only those consumers authorized to read the schema can do so. Schema changes are typically performed deliberately and with care, so it’s important to use IAM to control who performs these changes. Additionally, access control to schemas is important in situations where you might have sensitive information included in the schema definition itself. In the previous examples, IAM roles are inferred via the AWS SDK for Java, so they are inherited from the Amazon Elastic Compute Cloud (Amazon EC2) instance’s role that the application runs on, if using Amazon EC2. You can also apply IAM roles to any other AWS service that could contain this code, such as containers or AWS Lambda functions.

- Secondary deserializer – If you have already registered schemas in another schema registry, there’s an option for specifying a secondary deserializer when performing schema lookups. This allows for migrations from other schema registries without having to start all over again. Any schema ID that is unknown to the Schema Registry is looked up in the registry tied to the secondary deserializer.

- Compression – Using a schema registry can reduce data payload by no longer needing to send and receive schemas with each message. Schema Registry libraries also provide an option for zlib compression, which can reduce data requirements even further by compressing the payload of the message. This varies by use case, but compression can reduce the size of the message significantly.

- Multiple data formats – The Schema Registry currently supports AVRO (v1.10.2) data format, JSON data format with JSON Schema format for the schema (specifications Draft-04, Draft-06, and Draft-07), and Java language support, with other data formats and languages to come.
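As a rough illustration of the compression point above, the following Python sketch compresses a JSON payload with the standard library's zlib module and restores it. The records are made up for the example, and the sketch does not use the Schema Registry libraries; the actual savings depend on your data.

```python
import json
import zlib

# Made-up, repetitive records similar in shape to the weather example.
records = [
    {"location": {"city": "Phoenix", "state": "Arizona"}, "temperature": 100 + i}
    for i in range(50)
]

raw = json.dumps(records).encode("utf-8")
compressed = zlib.compress(raw)

# The consumer reverses the steps: decompress, then parse the JSON.
restored = json.loads(zlib.decompress(compressed).decode("utf-8"))

print(f"raw={len(raw)} bytes, compressed={len(compressed)} bytes")
```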

Conclusion

In this post, we discussed the benefits of using the AWS Glue Schema Registry to register, validate, and evolve JSON Schemas for data streams as business needs change. We also provided examples of how to use the Schema Registry.

Learn more about Integrating with AWS Glue Schema Registry.

About the Author

Aditya Challa is a Senior Solutions Architect at Amazon Web Services. Aditya loves helping customers through their AWS journeys because he knows that journeys are always better when there’s company. He’s a big fan of travel, history, engineering marvels, and learning something new every day.


Handle fast-changing reference data in an AWS Glue streaming ETL job

Post Syndicated from Jerome Rajan original https://aws.amazon.com/blogs/big-data/handle-fast-changing-reference-data-in-an-aws-glue-streaming-etl-job/

Streaming ETL jobs in AWS Glue can consume data from streaming sources such as Amazon Kinesis and Apache Kafka, clean and transform those data streams in-flight, as well as continuously load the results into Amazon Simple Storage Service (Amazon S3) data lakes, data warehouses, or other data stores.

The always-on nature of streaming jobs poses a unique challenge when handling fast-changing reference data that is used to enrich data streams within the AWS Glue streaming ETL job. AWS Glue processes real-time data from Amazon Kinesis Data Streams using micro-batches. The foreachbatch method used to process micro-batches handles one data stream.

This post proposes a solution to enrich streaming data with frequently changing reference data in an AWS Glue streaming ETL job.

You can enrich data streams with changing reference data in the following ways:

- Read the reference dataset with every micro-batch, which can cause redundant reads and an increase in read requests. This approach is expensive, inefficient, and isn’t covered in this post.

- Design a method to tell the AWS Glue streaming job that the reference data has changed and refresh it only when needed. This approach is cost-effective and highly available. We recommend using this approach.

Solution overview

This post uses DynamoDB Streams to capture changes to reference data, as illustrated in the following architecture diagram. For more information about DynamoDB Streams, see DynamoDB Streams Use Cases and Design Patterns.

The workflow contains the following steps:

- A user or application updates or creates a new item in the DynamoDB table.

- DynamoDB Streams is used to identify changes in the reference data.

- A Lambda function is invoked every time a change occurs in the reference data.

- The Lambda function captures the event containing the changed record, creates a “change file” and places it in an Amazon S3 bucket.

- The AWS Glue job is designed to check for this change file in every micro-batch. The moment that it sees the change flag, AWS Glue initiates a refresh of the DynamoDB data before processing any further records in the stream.
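The refresh-on-change pattern in the last step can be sketched in plain Python. This is not the code of the AWS Glue job: local files stand in for the DynamoDB table and the change flag in Amazon S3, and the ReferenceData class, file names, and sample orders are hypothetical.

```python
import json
import tempfile
from pathlib import Path


class ReferenceData:
    """Caches reference data and reloads it only when a change-flag file exists."""

    def __init__(self, data_file: Path, flag_file: Path):
        self.data_file = data_file
        self.flag_file = flag_file
        self.reloads = 0
        self.data = self._load()

    def _load(self) -> dict:
        self.reloads += 1
        return json.loads(self.data_file.read_text())

    def refresh_if_changed(self) -> None:
        if self.flag_file.exists():
            self.data = self._load()
            self.flag_file.unlink()  # delete the flag to avoid redundant refreshes

    def enrich(self, batch: list) -> list:
        """Called once per micro-batch: refresh if flagged, then join."""
        self.refresh_if_changed()
        return [{**order, "priority": self.data.get(order["item"])} for order in batch]


workdir = Path(tempfile.mkdtemp())
data_file, flag_file = workdir / "priority.json", workdir / "change_flag"
data_file.write_text(json.dumps({"burger": "medium"}))

ref = ReferenceData(data_file, flag_file)
first = ref.enrich([{"item": "burger"}])

data_file.write_text(json.dumps({"burger": "high"}))
second = ref.enrich([{"item": "burger"}])  # no flag yet, so the cached value is used
flag_file.touch()
third = ref.enrich([{"item": "burger"}])   # flag seen, reference data reloaded
```

The reference data is read once at startup and once after the flag appears, rather than once per micro-batch.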

This post is accompanied by an AWS CloudFormation template that creates resources as described in the solution architecture:

- A DynamoDB table named ProductPriority with a few items loaded

- An S3 bucket named demo-bucket-<AWS AccountID>

- Two Lambda functions:

  - demo-glue-script-creator-lambda

  - demo-reference-data-change-handler

- A Kinesis data stream named SourceKinesisStream

- An AWS Glue Data Catalog database called my-database

- Two Data Catalog tables

- An AWS Glue job called demo-glue-job-<AWS AccountID>. The code for the AWS Glue job can be found at this link.

- Two AWS Identity and Access Management (IAM) roles:

  - A role for the Lambda functions to access Kinesis, Amazon S3, and DynamoDB Streams

  - A role for the AWS Glue job to access Kinesis, Amazon S3, and DynamoDB

- An Amazon Kinesis Data Generator (KDG) account with a user created through Amazon Cognito to generate a sample data stream

Prerequisites

For this walkthrough, you should have the following prerequisites:

- An AWS account

- An IAM user with permissions to create the required roles

- Permission to create a CloudFormation stack and the services we detailed

Create resources with AWS CloudFormation

To deploy the solution, complete the following steps:

- Choose Launch Stack:

- Set up an Amazon Cognito user pool and test if you can access the KDG URL specified in the stack’s output tab. Furthermore, validate if you can log in to KDG using the credentials provided while creating the stack.

You should now have the required resources available in your AWS account.

- Verify this list with the resources in the output section of the CloudFormation stack.

Sample data

Sample reference data has already been loaded into the reference data store. The following screenshot shows an example.

The priority value may change frequently based on the time of the day, the day of the week, or other factors that drive demand and supply.

The objective is to accommodate these changes to the reference data seamlessly into the pipeline.

Generate a randomized stream of events into Kinesis

Next, we simulate a sample stream of data into Kinesis. For detailed instructions, see Test Your Streaming Data Solution with the New Amazon Kinesis Data Generator. For this post, we define the structure of the simulated orders data using a parameterized template.

- On the KDG console, choose the Region where the source Kinesis stream is located.

- Choose your delivery stream.

- Enter the following template into the Record template field:

- Choose Test template, then choose Send data.

KDG should start sending a stream of randomly generated orders to the Kinesis data stream.

Run the AWS Glue streaming job

The CloudFormation stack created an AWS Glue job that reads from the Kinesis data stream through a Data Catalog table, joins with the reference data in DynamoDB, and writes the result to an S3 bucket. To run the job, complete the following steps:

- On the AWS Glue console, under ETL in the navigation pane, choose Jobs.

- Select the job demo-glue-job-<AWS AccountID>.

- On the Actions menu, choose Run job.

In addition to the enrichment, the job includes an additional check that monitors an Amazon S3 prefix for a “Change Flag” file. This file is created by the Lambda function, which is invoked by the DynamoDB stream whenever there is an update or a new reference item.
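A Lambda handler along these lines could create the change flag. This is a minimal sketch and not the function deployed by the stack: the bucket name placeholder, the object key, the item key attribute, and the optional s3_client parameter (added so the handler can be exercised without AWS access) are assumptions. The event fields (Records, eventName, dynamodb.Keys) follow the DynamoDB Streams event format, and put_object is the standard boto3 S3 call.

```python
import json


def handler(event, context, s3_client=None,
            bucket="demo-bucket-123456789012",   # placeholder account ID
            key="change-flag/flag.json"):        # hypothetical object key
    """Write a change-flag object when the stream reports inserted or modified items."""
    changed = [
        record["dynamodb"].get("Keys", {})
        for record in event.get("Records", [])
        if record.get("eventName") in ("INSERT", "MODIFY")
    ]
    if not changed:
        return {"flag_written": False}
    if s3_client is None:
        import boto3  # imported lazily so the sketch can be tested without AWS
        s3_client = boto3.client("s3")
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=json.dumps(changed).encode("utf-8"),
    )
    return {"flag_written": True}
```

Lambda calls the handler with only the event and context arguments, so the remaining parameters keep their defaults in that setting.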

Investigate the target data in Amazon S3

The following is a screenshot of the data being loaded in real time into the item=burger partition. The priority was set to medium in the reference data, and the orders go into the corresponding partition.

Update the reference data

Now we update the priority for burgers to high in the DynamoDB table through the console while the orders are streaming into the pipeline.

Use the following command to perform the update through Amazon CloudShell. Change the Region to the appropriate value.

Verify that the data got updated.

Navigate to the target S3 folder to confirm the contents. The AWS Glue job should have started sending the orders for burgers into the high partition.

The Lambda function is invoked by the DynamoDB stream and places a “Change Flag” file in an Amazon S3 bucket. The AWS Glue job refreshes the reference data and deletes the file to avoid redundant refreshes.

Using this pattern for reference data in Amazon S3

If the reference data is stored in an S3 bucket, create an Amazon S3 event notification that identifies changes to the prefix where the reference data is stored. The event notification invokes a Lambda function that inserts the change flag into the data stream.

Cleaning up

To avoid incurring future charges, delete the resources. You can do this by deleting the CloudFormation stack.

Conclusion

In this post, we discussed approaches to handle fast-changing reference data stored in DynamoDB or Amazon S3. We demonstrated a simple use case that implements this pattern.

Note that DynamoDB Streams writes stream records in near-real time. When designing your solution, account for a minor delay between the actual update in DynamoDB and the write into the DynamoDB stream.

About the Authors

Jerome Rajan is a Lead Data Analytics Consultant at AWS. He helps customers design & build scalable analytics solutions and migrate data pipelines and data warehouses into the cloud. In an alternate universe, he is a World Chess Champion!


Dipankar Ghosal is a Principal Architect at Amazon Web Services and is based out of Minneapolis, MN. He has a focus in analytics and enjoys helping customers solve their unique use cases. When he’s not working, he loves going hiking with his wife and daughter.


[$] The rest of the 5.17 merge window

Post Syndicated from original https://lwn.net/Articles/881597/rss

Linus Torvalds released 5.17-rc1 and closed the 5.17 merge window on January 23 after having pulled just over 11,000 non-merge changesets into the mainline repository. A little over 4,000 of those changesets arrived after our first-half merge-window summary was written. Activity thus slowed down, as expected, in the second half of the merge window, but there were still a number of significant changes that made it in for the next kernel release.

Migrating AWS Lambda functions to Arm-based AWS Graviton2 processors

Post Syndicated from Julian Wood original https://aws.amazon.com/blogs/compute/migrating-aws-lambda-functions-to-arm-based-aws-graviton2-processors/

AWS Lambda now allows you to configure new and existing functions to run on Arm-based AWS Graviton2 processors in addition to x86-based functions. Using this processor architecture option allows you to get up to 34% better price performance. This blog post highlights some considerations when moving from x86 to arm64 as the migration process is code and workload dependent.

Functions using the Arm architecture benefit from the performance and security built into the Graviton2 processor, which is designed to deliver up to 19% better performance for compute-intensive workloads. Workloads using multithreading and multiprocessing, or performing many I/O operations, can experience lower invocation time, which reduces costs.

Duration charges, billed with millisecond granularity, are 20 percent lower when compared to current x86 pricing. This also applies to duration charges when using Provisioned Concurrency. Compute Savings Plans supports Lambda functions powered by Graviton2.

The architecture change does not affect the way your functions are invoked or how they communicate their responses back. Integrations with APIs, services, applications, or tools are not affected by the new architecture and continue to work as before.

The following runtimes, which use Amazon Linux 2, are supported on Arm:

- Node.js 12 and 14

- Python 3.8 and 3.9

- Java 8 (java8.al2) and 11

- .NET Core 3.1

- Ruby 2.7

- Custom runtime (provided.al2)

Lambda@Edge does not support Arm as an architecture option.

You can create and manage Lambda functions powered by Graviton2 processor using the AWS Management Console, AWS Command Line Interface (AWS CLI), AWS CloudFormation, AWS Serverless Application Model (AWS SAM), and AWS Cloud Development Kit (AWS CDK). Support is also available through many AWS Lambda Partners.

Understanding Graviton2 processors

AWS Graviton processors are custom built by AWS. Generally, you don’t need to know about the specific Graviton processor architecture, unless your applications can benefit from specific features.

The Graviton2 processor uses the Neoverse-N1 core and supports Arm V8.2 (including CRC and crypto extensions) plus several other architectural extensions. In particular, Graviton2 supports the Large System Extensions (LSE), which improve locking and synchronization performance across large systems.

Migrating x86 Lambda functions to arm64

Many Lambda functions may only need a configuration change to take advantage of the price/performance of Graviton2. Other functions may require repackaging the Lambda function using Arm-specific dependencies, or rebuilding the function binary or container image.

You may not require an Arm processor on your development machine to create Arm-based functions. You can build, test, package, compile, and deploy Arm Lambda functions on x86 machines using AWS SAM and Docker Desktop. If you have an Arm-based system, such as an Apple M1 Mac, you can natively compile binaries.

Functions without architecture-specific dependencies or binaries

If your functions don’t use architecture-specific dependencies or binaries, you can switch from one architecture to the other with a single configuration change. Many functions using interpreted languages such as Node.js and Python, or functions compiled to Java bytecode, can switch without any changes. Ensure you check binaries in dependencies, Lambda layers, and Lambda extensions.

To switch functions from x86 to arm64, you can change the Architecture within the function runtime settings using the Lambda console.

If you want to display or log the processor architecture from within a Lambda function, you can use OS specific calls. For example, Node.js process.arch or Python platform.machine().
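For Python, a handler that logs the architecture might look like the following sketch. The handler name and the returned dictionary are illustrative choices; platform.machine() is part of the Python standard library and typically reports aarch64 on arm64 Linux and x86_64 on x86 Linux.

```python
import platform


def lambda_handler(event, context):
    """Report the processor architecture the function is running on."""
    arch = platform.machine()
    print(f"architecture={arch}")  # appears in the function's log output
    return {"architecture": arch}
```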

When using the AWS CLI to create a Lambda function, specify the --architectures option. If you do not specify the architecture, the default value is x86_64. For example, to create an arm64 function, specify --architectures arm64.

aws lambda create-function \
    --function-name MyArmFunction \
    --runtime nodejs14.x \
    --architectures arm64 \
    --memory-size 512 \
    --zip-file fileb://MyArmFunction.zip \
    --handler lambda.handler \
    --role arn:aws:iam::123456789012:role/service-role/MyArmFunction-role

When using AWS SAM or CloudFormation, add or amend the Architectures property within the function configuration.

MyArmFunction:
  Type: AWS::Lambda::Function
  Properties:
    Runtime: nodejs14.x
    Code: src/
    Architectures:
      - arm64
    Handler: lambda.handler
    MemorySize: 512

When initiating an AWS SAM application, you can specify:

sam init --architecture arm64

When building Lambda layers, you can specify CompatibleArchitectures.

MyArmLayer:
  Type: AWS::Lambda::LayerVersion
  Properties:
    ContentUri: layersrc/
    CompatibleArchitectures:
      - arm64

Building function code for Graviton2

If you have dependencies or binaries in your function packages, you must rebuild the function code for the architecture you want to use. Many packages and dependencies have arm64 equivalent versions. Test your own workloads against arm64 packages to see if your workloads are good migration candidates. Not all workloads show improved performance due to the different processor architecture features.

For compiled languages like Rust and Go, you can use the provided.al2 custom runtime, which supports Arm. You provide a binary that communicates with the Lambda Runtime API.

When compiling for Go, set GOARCH to arm64.

GOOS=linux GOARCH=arm64 go build

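When compiling for Rust, set the target triple. You can also pass the target CPU to the compiler through RUSTFLAGS.

RUSTFLAGS="-C target-cpu=neoverse-n1" cargo build --release --target aarch64-unknown-linux-gnu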

The default installation of Python pip on some Linux distributions is out of date (<19.3). To install binary wheel packages released for Graviton, upgrade the pip installation using:

sudo python3 -m pip install --upgrade pip

The Arm software ecosystem is continually improving. As a general rule, use later versions of compilers and language runtimes whenever possible. The AWS Graviton Getting Started GitHub repository includes known recent changes to popular packages that improve performance, including ffmpeg, PHP, .NET, PyTorch, and zlib.

You can use https://pkgs.org/ as a package repository search tool.

Sometimes code includes architecture-specific optimizations. These can include code optimized in assembly using specific instructions for CRC, or enabling a feature that works well on particular architectures. One way to see if any optimizations are missing for arm64 is to search the code for __x86_64__ ifdefs and see if there is corresponding arm64 code included. If not, consider alternative solutions.
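One way to automate that search is a small script such as the following Python sketch. It assumes C or C++ sources and treats __aarch64__ (the macro that GCC and Clang define for 64-bit Arm) as the sign of a corresponding arm64 path. The x86_only_guards helper and the sample snippets are hypothetical, and a text match like this is only a first pass, not a substitute for reading the code.

```python
import re

# Preprocessor lines that test for x86-64 or 64-bit Arm.
X86_GUARD = re.compile(r"#\s*(?:el)?if.*\b__x86_64__\b")
ARM64_GUARD = re.compile(r"#\s*(?:el)?if.*\b__aarch64__\b")


def x86_only_guards(source: str) -> bool:
    """True if the source tests __x86_64__ but never tests __aarch64__."""
    return bool(X86_GUARD.search(source)) and not ARM64_GUARD.search(source)


x86_only = "#ifdef __x86_64__\nfast_crc();\n#else\nslow_crc();\n#endif\n"
both = (
    "#if defined(__x86_64__)\nfast_crc_sse();\n"
    "#elif defined(__aarch64__)\nfast_crc_arm();\n#endif\n"
)

print(x86_only_guards(x86_only))  # this file deserves a closer look
print(x86_only_guards(both))      # an arm64 path is present
```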

For additional language-specific considerations, see the links within the GitHub repository.

The Graviton performance runbook is a performance profiling reference by the Graviton team to benchmark, debug, and optimize application code.

Building function packages as container images

Functions packaged as container images must be built for the architecture (x86 or arm64) they are going to use. There are arm64 architecture versions of the AWS provided base images for Lambda. To specify a container image for arm64, use the arm64 specific image tag, for example, for Node.js 14:

- public.ecr.aws/lambda/nodejs:14-arm64

- public.ecr.aws/lambda/nodejs:latest-arm64

- public.ecr.aws/lambda/nodejs:14.2021.10.01.16-arm64

Arm64 images are also available from Docker Hub.

You can also use arbitrary Linux base images in addition to the AWS provided Amazon Linux 2 images. Images that support arm64 include Alpine Linux 3.12.7 or later, Debian 10 and 11, Ubuntu 18.04 and 20.04. For more information and details of other supported Linux versions, see Operating systems available for Graviton based instances.

Migrating a function

Here is an example of how to migrate a Lambda function from x86 to arm64 and take advantage of newer software versions to improve price and performance. You can follow a similar approach to test your own code.

I have an existing Lambda function as part of an AWS SAM template configured without an Architectures property, which defaults to x86_64.

Imagex86Function:
  Type: AWS::Serverless::Function
  Properties:
    CodeUri: src/
    Handler: app.lambda_handler
    Runtime: python3.9

The Lambda function code performs some compute-intensive image manipulation. The code uses a dependency configured with the following version:

{
  "dependencies": {
    "imagechange": "^1.1.1"
  }
}

I duplicate the Lambda function within the AWS SAM template using the same source code and specify arm64 as the Architectures.

ImageArm64Function:
  Type: AWS::Serverless::Function
  Properties:
    CodeUri: src/
    Handler: app.lambda_handler
    Runtime: python3.9
    Architectures:
      - arm64

I use AWS SAM to build both Lambda functions. I specify the --use-container flag to build each function within its architecture-specific build container.

sam build --use-container

I can use sam local invoke to test the arm64 function locally even on an x86 system.

I then use sam deploy to deploy the functions to the AWS Cloud.

The AWS Lambda Power Tuning open-source project runs your functions using different settings to suggest a configuration to minimize costs and maximize performance. The tool allows you to compare two results on the same chart and incorporate arm64-based pricing. This is useful to compare two versions of the same function, one using x86 and the other arm64.

I compare the performance of the x86 and arm64 Lambda functions and see that the arm64 Lambda function is 12% cheaper to run.

I then upgrade the package dependency to use version 1.2.1, which has been optimized for arm64 processors.

    {
      "dependencies": {
        "imagechange": "^1.2.1"
      }
    }

I use sam build and sam deploy to redeploy the updated Lambda functions with the updated dependencies.

I compare the original x86 function with the updated arm64 function. Using arm64 with a newer dependency code version increases the performance by 30% and reduces the cost by 43%.
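To see how a shorter duration and a lower per-GB-second price combine into a cost reduction, here is a rough sketch of the arithmetic. The prices and durations are assumptions chosen for illustration, not the measurements from this test, and the helper names are hypothetical. Check current Lambda pricing for your Region before relying on any figure.

```python
def invocation_cost(duration_ms, memory_mb, price_per_gb_second):
    """Compute-only cost of one invocation; the per-request charge is ignored."""
    gb_seconds = (duration_ms / 1000.0) * (memory_mb / 1024.0)
    return gb_seconds * price_per_gb_second


def percent_saving(baseline_cost, candidate_cost):
    """Percentage by which candidate_cost undercuts baseline_cost."""
    return (baseline_cost - candidate_cost) / baseline_cost * 100.0


# Assumed per-GB-second prices, for illustration only.
X86_PRICE = 0.0000166667
ARM64_PRICE = 0.0000133334

# Hypothetical measurements: same memory setting, with the arm64 run
# finishing in 70% of the x86 duration.
x86_cost = invocation_cost(duration_ms=1000, memory_mb=1024,
                           price_per_gb_second=X86_PRICE)
arm64_cost = invocation_cost(duration_ms=700, memory_mb=1024,
                             price_per_gb_second=ARM64_PRICE)
saving = percent_saving(x86_cost, arm64_cost)
print(f"arm64 saving: {saving:.1f}%")
```

With these assumed inputs, the price difference alone accounts for about 20% and the shorter duration supplies the rest, which is why a dependency upgrade that speeds up the arm64 function can reduce cost by more than the price difference between the architectures.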

You can use Amazon CloudWatch statistics to view performance metrics such as duration. You can then compare average and p99 duration between the two architectures. Due to the Graviton2 architecture, functions may be able to use less memory. This could allow you to right-size function memory configuration, which also reduces costs.
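The comparison itself can be sketched in a few lines. This example assumes you have already exported per-invocation durations (for example, from CloudWatch) into two lists. The sample values and the `percentile` and `summarize` helpers are hypothetical, and the nearest-rank percentile used here may differ slightly from the method CloudWatch applies.

```python
from statistics import mean


def percentile(samples, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 1 <= pct <= 100:
        raise ValueError("pct must be between 1 and 100")
    ordered = sorted(samples)
    # Integer ceiling division avoids floating-point rounding surprises.
    rank = -(-pct * len(ordered) // 100)
    return ordered[rank - 1]


def summarize(durations_ms):
    """Average and p99 duration for one function."""
    return {"average": mean(durations_ms), "p99": percentile(durations_ms, 99)}


# Hypothetical duration samples in milliseconds.
x86_durations = [120, 118, 131, 125, 240, 122, 119, 127]
arm64_durations = [101, 99, 108, 104, 190, 100, 102, 105]

for name, durations in (("x86_64", x86_durations), ("arm64", arm64_durations)):
    print(name, summarize(durations))
```

Looking at p99 alongside the average helps show whether a change in architecture affects tail latency differently from typical invocations.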

Deploying arm64 functions in production

Once you have confirmed your Lambda function performs successfully on arm64, you can migrate your workloads. You can use function versions and aliases with weighted aliases to control the rollout. Traffic gradually shifts to the arm64 version or rolls back automatically if any specified CloudWatch alarms trigger.
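As an illustration of the weighted alias part, the sketch below builds the parameters for shifting a fraction of an alias's traffic to a new version. It assumes the parameter names accepted by the Lambda `UpdateAlias` API (`FunctionName`, `Name`, `FunctionVersion`, `RoutingConfig`). The function name, alias name, and version numbers are hypothetical. It does not cover the automatic, alarm-driven rollback, which needs a deployment tool on top of it.

```python
def alias_update_params(function_name, alias_name, stable_version,
                        candidate_version, candidate_weight):
    """Build UpdateAlias parameters that send candidate_weight of the traffic
    to candidate_version and the remainder to stable_version."""
    if not 0.0 <= candidate_weight <= 1.0:
        raise ValueError("candidate_weight must be between 0.0 and 1.0")

    if candidate_weight == 1.0:
        # Rollout complete: point the alias at the candidate and clear routing.
        primary, weights = candidate_version, {}
    elif candidate_weight == 0.0:
        # Rollback: all traffic returns to the stable version.
        primary, weights = stable_version, {}
    else:
        primary, weights = stable_version, {candidate_version: candidate_weight}

    return {
        "FunctionName": function_name,
        "Name": alias_name,
        "FunctionVersion": primary,
        "RoutingConfig": {"AdditionalVersionWeights": weights},
    }


# Hypothetical rollout: version "1" is the x86 build, version "2" is arm64.
for weight in (0.1, 0.5, 1.0):
    print(alias_update_params("ImageFunction", "live", "1", "2", weight))
```

Each dictionary could be passed as keyword arguments to an `update_alias` call on a boto3 Lambda client, with a pause between steps to watch error rates and duration before increasing the weight.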

AWS SAM supports gradual Lambda deployments with a feature called Safe Lambda deployments using AWS CodeDeploy. You can compile package binaries for arm64 using a number of CI/CD systems. AWS CodeBuild supports building Arm based applications natively. CircleCI also has Arm compute resource classes for deployment. GitHub Actions allows you to use self-hosted runners. You can also use AWS SAM within GitHub Actions and other CI/CD pipelines to create arm64 artifacts.

Conclusion

Lambda functions using the Arm/Graviton2 architecture provide up to 34 percent price performance improvement. This blog discusses a number of considerations to help you migrate functions to arm64.

Many functions can migrate seamlessly with a configuration change; others need to be rebuilt to use arm64 packages. I show how to migrate a function and how updating software to newer versions may improve your function performance on arm64. You can test your own functions using the AWS Lambda Power Tuning tool.

Start migrating your Lambda functions to Arm/Graviton2 today.

For more serverless learning resources, visit Serverless Land.

Netfilter project: Settlement with Patrick McHardy

Post Syndicated from original https://lwn.net/Articles/882397/rss

The netfilter project, which works on packet-filtering for the Linux kernel, has announced that it has reached a settlement (English translation) with Patrick McHardy that is “legally binding and it governs any legal enforcement activities” on netfilter programs and libraries as well as the kernel itself. McHardy has been employing questionable practices in doing GPL enforcement in Germany over the last six years or more. The practice has been called “copyright trolling” by some and is part of what led to the creation of The Principles of Community-Oriented GPL Enforcement.

This settlement establishes that any decision-making around netfilter-related enforcement activities should be based on a majority vote. Thus, each active coreteam member at the time of the enforcement request holds one right to vote. This settlement covers past and new enforcement, as well as the enforcement of contractual penalties related to past declarations to cease-and-desist.

Security updates for Monday

Post Syndicated from original https://lwn.net/Articles/882396/rss

Security updates have been issued by Debian (chromium, golang-1.7, golang-1.8, pillow, qtsvg-opensource-src, util-linux, and wordpress), Fedora (expat, harfbuzz, kernel, qt5-qtsvg, vim, webkit2gtk3, and zabbix), Mageia (glibc, kernel, and kernel-linus), openSUSE (bind, chromium, and zxing-cpp), Oracle (kernel), Red Hat (java-11-openjdk and kpatch-patch), Scientific Linux (java-11-openjdk), SUSE (bind, clamav, zsh, and zxing-cpp), and Ubuntu (aide, dbus, and thunderbird).

The Great Resignation: 4 Ways Cybersecurity Can Win

Post Syndicated from Amy Hunt original https://blog.rapid7.com/2022/01/24/the-great-resignation-4-ways-cybersecurity-can-win/

Pandemics change everything.

In the Middle Ages, the Black Death killed half of Europe’s population. It also killed off the feudal system of landowning lords exploiting laborer serfs. Rampant death caused an extreme labor shortage and forced the lords to pay wages. Eventually, serfs had bargaining power and escalating wages as aristocrats competed for people to work their lands.

Think we invented “The Great Resignation?” 14th-century peasants did.

Last year, more than 40 million Americans quit their jobs. The trend raged across Europe. Workers in China went freelance. The Harvard Business Review reports resignations are highest in tech and healthcare, both seriously strained by the pandemic. Of course, cybersecurity has had a talent shortage for years now. As 2022 and back-to-office plans take shape, expect another tidal wave.

Here are four ideas about how to prepare for it and win.

1. You’ll do better if you label it The Great Rethinking

COVID-19’s daily specter of illness and death has spurred existential questions. “If life is so short, what am I doing? Is this all there is?”

Isolated with family every day, month after month, some of us have decided we’re happier than ever. Others are causing a big spike in divorce and the baby bust. Either way, people are confronting the quality of their relationships. Some friendships have made it into our small, carefully considered “safety pods,” and others haven’t.

As we rethink our most profound human connections, we’re surely going to rethink work and how we spend most of our waking hours.

2. Focus on our collective search for meaning

A mere 17% of us say jobs or careers are a source of meaning in life. But here, security professionals have a rare advantage.

Nearly all cybercrime is conducted by highly organized criminal gangs and adversarial nation states. They’ve breached power grids and pipelines, air traffic, nuclear installations, hospitals, and the food supply. Roughly 1 in 20 people a year suffer identity theft, which can produce damaging personal consequences that drag on and on. In December, hackers shut down city bus service in Honolulu and the Handi-Van, which people with disabilities count on to get around.

How many jobs can be defined simply and accurately as good vs. evil? How many align everyday people with the aims of the FBI and the Department of Justice? With lower-wage workers leading the Great Resignation last year, the focus has been on salary and raises. But don’t underestimate meaning.

3. Winners know silos equal stress and will get rid of them

Along with meaning and good pay, consider ways to make your security operations center (SOC) a better place to be. Consolidate your tools. Integrate systems. Extend your visibility. Improve signal-to-noise ratio. The collision of security information and event management (SIEM) and extended detection and response (XDR) protects you from a whole lot more than malicious attacks.

Remote work, hybrid work, and far-flung digital infrastructure are here to stay. So are attackers who’ve thrived in the last two years, shattering all records. If you’re among the 76% of security professionals who admit they really don’t understand XDR, know you’re not alone – but also know that XDR will soon separate winners from losers. Transforming your SOC with it will change what work is like for both you and your staff, and give you a competitive advantage.

4. You can take this message to the C-suite

Lower-wage workers started the trend, but CEO resignations are surging now (and it’s not just Jeff Bezos and Jack Dorsey). They’re employees, too, and the Great Rethinking has also arrived in their homes. Maybe COVID-19 meant they finally spent real time with their kids, and they’d like more of it, please. Maybe they’re exhausted from communicating on Zoom for the last two years. Maybe they think a new deal is in order for everyone.

As you make the case for XDR, consider your ability to give new, compelling context to your recommendations. XDR is the ideal collaboration between humans and machines, each doing what they do best. It reduces the chance executives will have to explain themselves on the evening news. It helps create work-life balance. Of course it makes sense.

And what about when things get back to normal? The history of diseases is they don’t really leave and we don’t really return to “normal.” Things change. We change. You can draw a straight line from the Black Death, to the idea of a middle class, then to the Renaissance. Here’s hoping.

Want more info on how XDR can help you meet today’s challenges?

An Apologia for Big Sister Korni

Post Syndicated from original https://bivol.bg/%D0%B0%D0%BF%D0%BE%D0%BB%D0%BE%D0%B3%D0%B8%D1%8F-%D0%B7%D0%B0-%D0%BA%D0%B0%D0%BA%D0%B0-%D0%BA%D0%BE%D1%80%D0%BD%D0%B8.html

Because, you know, someone was going to take offense, so I was supposed to write politely. I'm sorry… No, actually, I'm not sorry at all. I simply refuse to write politely. Especially when it comes to the BSP –…

Burkina Faso experiencing second major Internet disruption this year

Post Syndicated from João Tomé original https://blog.cloudflare.com/internet-disruption-in-burkina-faso/

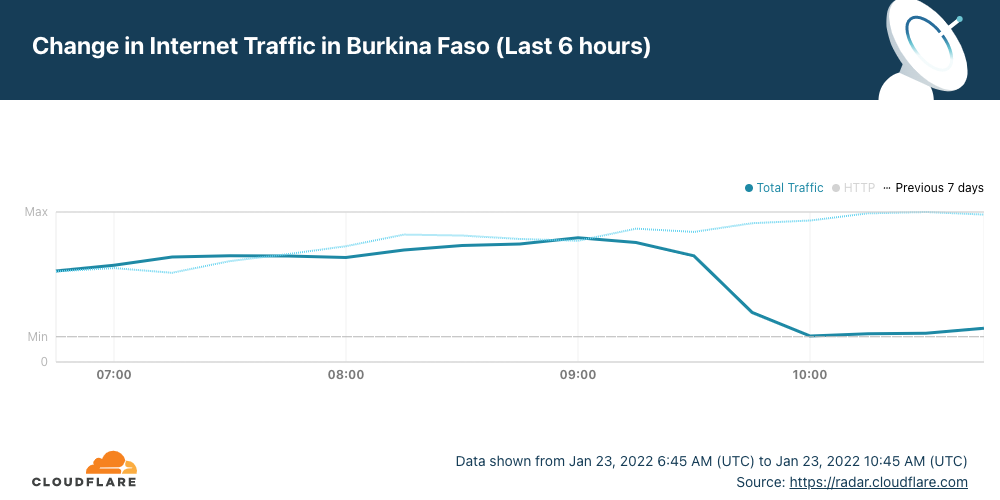

The early hours of Sunday, January 23, 2022, started in Burkina Faso with an Internet outage or shutdown. Heavy gunfire in an army mutiny could be related to the outage according to the New York Times (“mobile Internet services were shut down”). As of today, there are three countries affected by major Internet disruptions — Tonga and Yemen are the others.

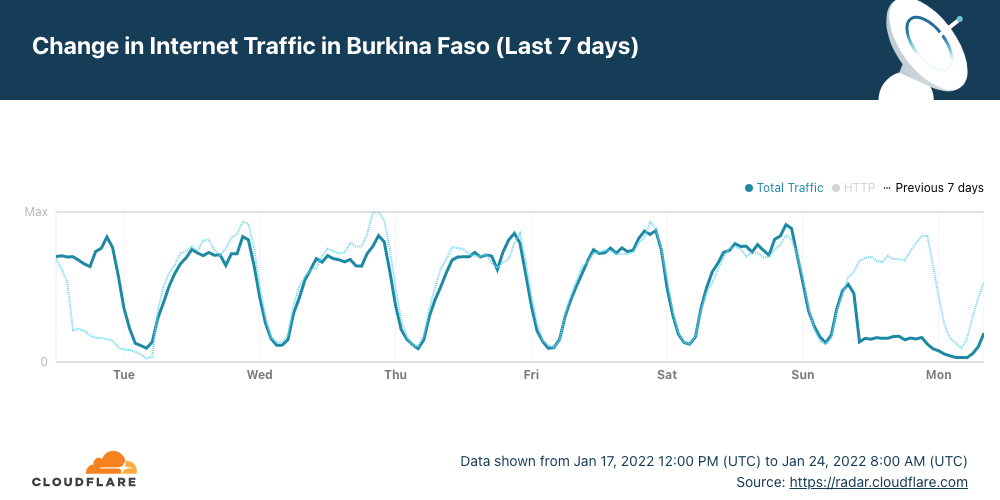

Cloudflare Radar shows that Internet traffic dropped significantly in the West African country after ~09:15 UTC (the same in local time) and remains low more than 24 hours later. Burkina Faso also had a mobile Internet shutdown on January 10, 2022, and another we reported in late November 2021.

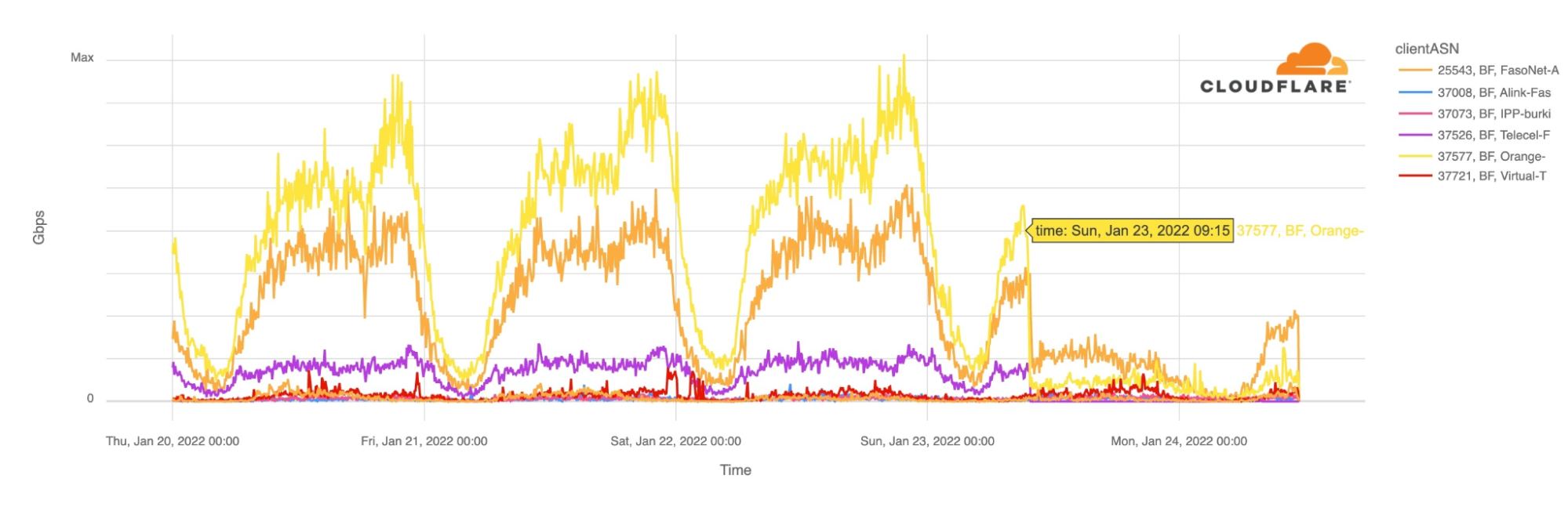

The main ISPs from Burkina Faso were affected. The two leading Internet Service Providers Orange and FasoNet lost Internet traffic after 09:15 UTC, but also Telecel Faso, as the next chart shows. This morning, at around 10:00 UTC there was some traffic from FasoNet but less than half of what we saw at the same time in preceding days.

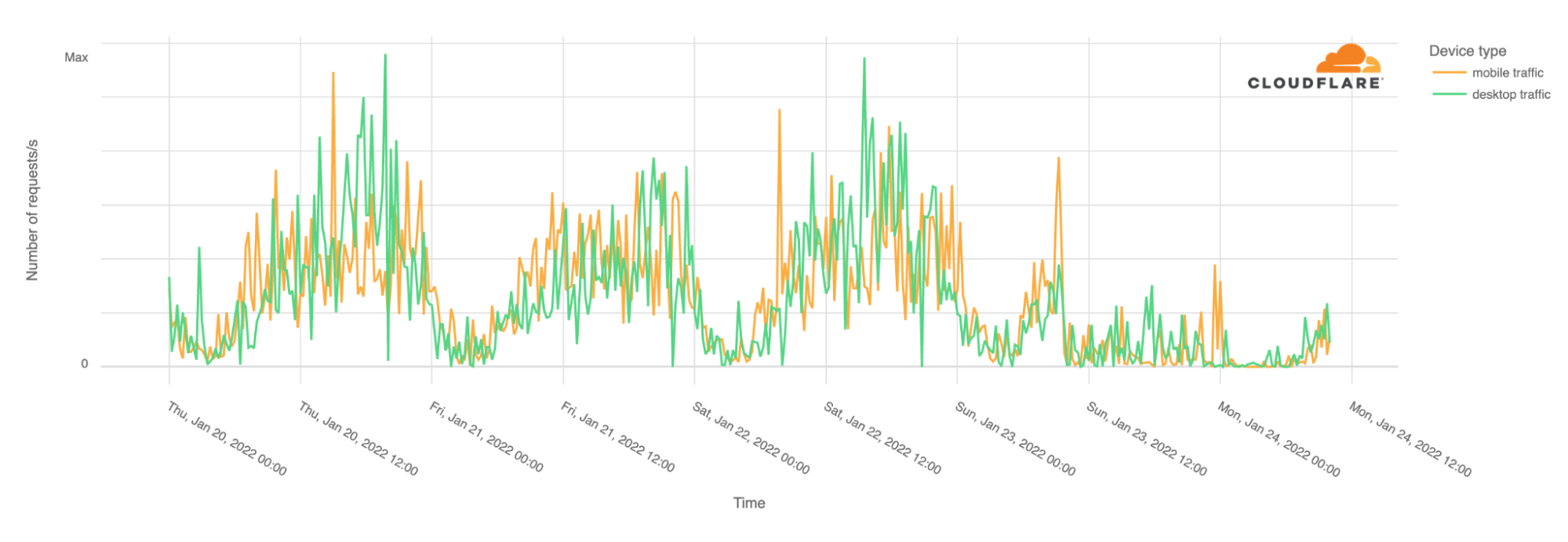

It’s not only mobile traffic that is affected. Desktop traffic is also impacted. In Burkina Faso, our data shows that mobile devices normally represent 70% of Internet traffic.

With the Burkina Faso disruption, three countries are currently mostly without access to the Internet for different reasons.

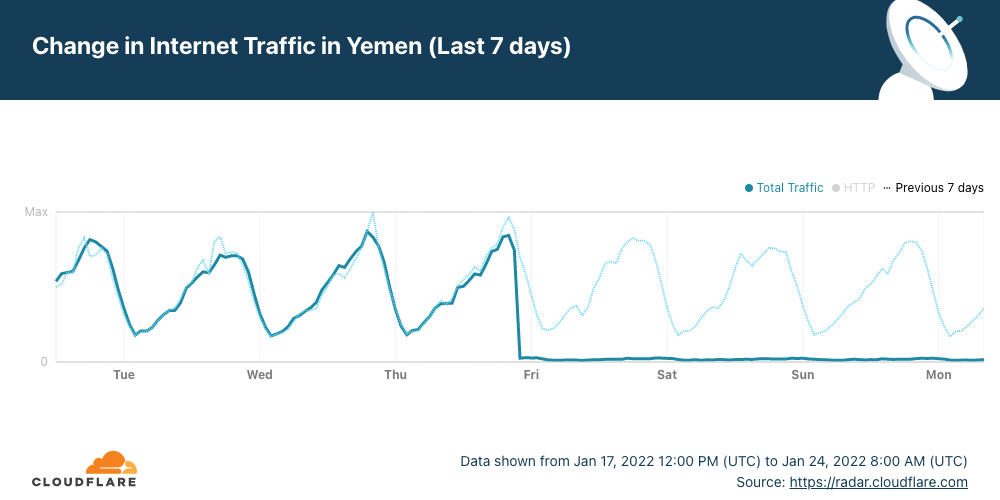

In Yemen, as we reported, the four-day-long outage is related to airstrikes that affected a telecommunications building in Al-Hudaydah where the FALCON undersea cable lands.

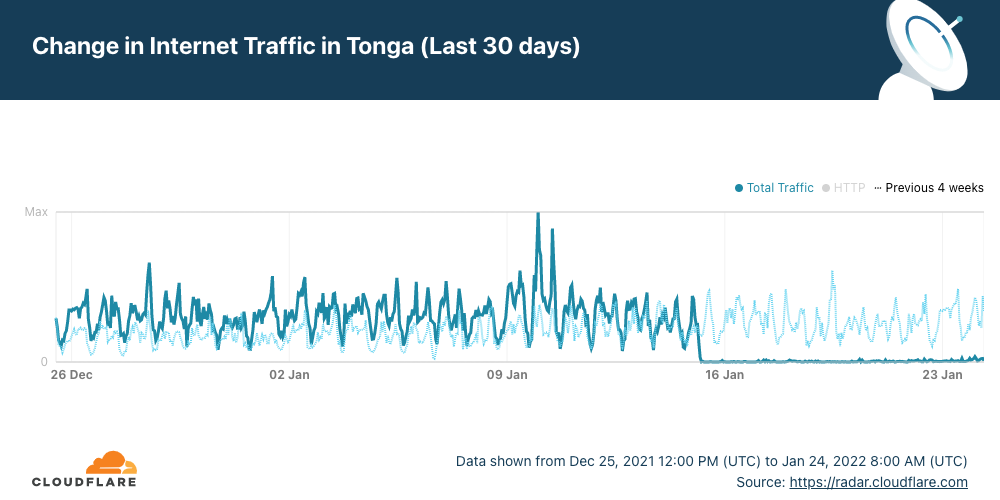

In Tonga, the nine-day-long outage that we also explained is related to problems in the undersea cable caused by the large volcanic eruption in the South Pacific archipelago.

Several significant Internet disruptions have already occurred in 2022 for different reasons:

1. An Internet outage that lasted a few hours in The Gambia because of a cable problem (on January 4).

2. A six-day Internet shutdown in Kazakhstan because of unrest (from January 5 to January 11).

3. A mobile Internet shutdown in Burkina Faso because of a coup plot (on January 10).

4. An Internet outage in Tonga because of a volcanic eruption (ongoing since January 15).

5. An Internet outage in Yemen because of airstrikes that affected a telecommunications building (ongoing since January 20).

6. This second Internet disruption in Burkina Faso is related to military unrest (ongoing since January 23).

You can keep an eye on Cloudflare Radar to monitor the Burkina Faso, Yemen and Tonga situations as they unfold.

Smoking Ban for Women

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=bLbPY_WwS6k

Linux-Targeted Malware Increased by 35%

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/01/linux-targeted-malware-increased-by-35.html

CrowdStrike is reporting that malware targeting Linux has increased considerably in 2021:

Malware targeting Linux systems increased by 35% in 2021 compared to 2020.

XorDDoS, Mirai and Mozi malware families accounted for over 22% of Linux-targeted threats observed by CrowdStrike in 2021.

Ten times more Mozi malware samples were observed in 2021 compared to 2020.

Lots of details in the report.

News article:

The Crowdstrike findings aren’t surprising as they confirm an ongoing trend that emerged in previous years.