Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2023/02/camera-the-size-of-a-grain-of-salt.html

Cameras are getting smaller and smaller, changing the scale and scope of surveillance.

Post Syndicated from Sebastian Hufnagel original https://blog.cloudflare.com/towards-a-global-framework-for-cross-border-data-flows-and-privacy-protection/

As our societies and economies rely more and more on digital technologies, there is an increased need to share and transfer data, including personal data, over the Internet. Cross-border data flows have become essential to international trade and global economic development. In fact, the digital transformation of the global economy could never have happened as it did without the open and global architecture of the Internet and the ability for data to transcend national borders. As we described in our blog post yesterday, data localization doesn’t necessarily improve data privacy. Actually, there can be real benefits to data security and – by extension – privacy if we are able to transfer data across borders. So with Data Privacy Day coming up tomorrow, we wanted to take this opportunity to drill down into the current environment for the transfer of personal data from the EU to the US, which is governed by the EU’s privacy regulation (GDPR). Looking to the future, we will make the case for a more stable, global cross-border data transfer framework, which will be critical for an open, more secure and more private Internet.

In the last decade, we have observed a growing tendency around the world to ring-fence the Internet and erect new barriers to international data flows, especially personal data. In some cases this has resulted in less choice and poorer performance for users of digital products and services. In other cases it has limited free access to information, and – paradoxically – in some cases this has resulted in even less data security and privacy, which is contrary to the very rationale of data protection regulations. The motives for these concerning developments are manifold, ranging from a lack of trust with regard to privacy protection in third countries, to asserting national security, to seeking economic self-determination.

In the European Union, for the last few years, even the most privacy-focused companies (like Cloudflare) have faced a drumbeat of speculation and concerns from some hardliner data protection authorities, privacy activists and others about whether data processed by US cloud service providers could really be processed in a manner that complies with the GDPR. Often, these concerns are purely legalistic and fail to take into account the actual risks associated with a specific data transfer, and, in Cloudflare’s case, the essential contribution of our services to the security and privacy of millions of European Internet users. In fact, official guidance from the European Data Protection Board (EDPB) has confirmed that EU personal data can still be processed in the US, but this has become quite complicated since the invalidation of the Privacy Shield framework by the European Court of Justice with its 2020 Schrems II judgment: data controllers must use legal transfer mechanisms such as EU standard contractual clauses as well as a host of additional legal, technical and organizational safeguards.

However, it is ultimately up to the competent data protection authorities to decide whether such measures are sufficient in a case-by-case interpretation. Since these cases are often quite complex, since every case is different, and since there are 45 data protection authorities across Europe alone, this approach simply doesn’t scale. Further, DPAs – sometimes even within the same EU country (Germany) – have disagreed in their interpretation of the law when it comes to third country transfers. And when it comes to an actual court ruling, it is our experience that the courts tend to be more pragmatic and balanced about data protection than the DPAs are. But it takes a long time and many resources before a data protection case ends up before a court. This is particularly problematic for small businesses that can’t afford lengthy legal battles. As a result, the theoretical threat of a hefty fine from a DPA may create enough of a deterrent for them to stop using services involving third-country data transfers altogether, even if those services provide greater security and privacy for the personal data they process, and make them more productive. This is clearly not in the interest of the European economy and most likely was not the intention of policy-makers when adopting the GDPR back in 2016.

While recent developments will not resolve all the challenges mentioned above, last December, after years of complex negotiations, international policy-makers took two important steps towards restoring legal certainty and trust relating to cross-border flows of personal data.

On December 13, 2022, the European Commission published its long-awaited preliminary assessment that the EU would consider that personal data transferred from the EU to the US under the future EU-US Data Privacy Framework (DPF) enjoys an adequate level of protection in the United States. The assessment follows the recent signing of Executive Order 14086 by US President Biden, which comprehensively addressed the concerns expressed by the European Court of Justice (ECJ) in its 2020 Schrems II decision. Notably, the US government will impose additional limits on US authorities’ use of bulk surveillance methods against non-US citizens and create an independent redress mechanism in the US that allows EU data subjects to exercise their data protection rights. While the Commission’s initial assessment is only the start of an EU ratification process that is expected to take about 4-6 months, experts are very optimistic that it will be adopted in the end.

Just one day later, the US, along with the 37 other OECD countries and the European Union, adopted a first-of-its-kind agreement to enhance trust in cross-border data flows between rule-of-law democratic systems, by articulating joint principles for safeguards to protect privacy and other human rights and freedoms when governments access personal data held by private entities on grounds of national security and law enforcement. Where legal frameworks require that transborder data flows are subject to safeguards, like in the case of GDPR in the EU, participants agreed to “take into account a destination country’s effective implementation of the principles as a positive contribution towards facilitating transborder data flows in the application of those rules.” (It’s also good to note that, in line with Cloudflare’s mission to help build a better Internet, the OECD declaration recalls members’ shared commitment to a “global, open, accessible, interconnected, interoperable, reliable and secure Internet”).

The EU-US DPF and the OECD Declaration are complementary to each other and both mark important steps to restore trust in cross-border data flows between countries that share common values like democracy and the rule of law, protecting privacy and other human rights and freedoms. However, both approaches come with their own limitations: the DPF is limited to personal data transfers from the EU to the US. In addition, it cannot be excluded that it will be invalidated by the ECJ again in a few years’ time, as privacy activists have already announced that they will legally challenge it again. The OECD Declaration, on the other hand, is global in scope, but limited to general principles for governments, which can be interpreted quite differently in practice.

This is why, in addition to these efforts, we need a stable, multilateral framework with specific privacy protection requirements, which cannot be invalidated unilaterally. One single global certification should suffice for participating companies to safely transfer personal data between participating countries worldwide. The emerging Global Cross Border Privacy Rules (CBPR) certification, which is already supported by several governments from North America and Asia, looks very promising in this regard.

European policy-makers will ultimately need to decide whether they want to continue on the present path, which risks leaving Europe behind as an isolated data island. Alternatively, the EU could revise its privacy regulation with a view to prevent Europe’s many national and regional data protection authorities from interpreting it in a way that is out of touch with reality. It could also make it interoperable with a global framework for cross-border data flows based on shared values and mutual trust.

Cloudflare will continue to actively engage with policy-makers globally to create awareness for the practical challenges our industry is facing and to work on sustainable policy solutions for an open and interconnected Internet that is more private and secure.

Data Privacy Day tomorrow provides a unique occasion for us all to celebrate the significant progress achieved so far to protect users’ privacy online. At the same time, we should use this day to reflect on how regulations can be adapted or enforced in a way that more meaningfully protects privacy, notably by prioritizing the use of security and privacy-enhancing technologies over prohibitive approaches that harm the economy without tangible privacy benefits.

Post Syndicated from Emily Hancock original https://blog.cloudflare.com/investing-in-security-to-protect-data-privacy/

If you’ve made it to 2023 without ever receiving a notice that your personal information was compromised in a security breach, consider yourself lucky. In a best case scenario, bad actors only got your email address and name – information that won’t cause you a huge amount of harm. Or in a worst-case scenario, maybe your profile on a dating app was breached and intimate details of your personal life were exposed publicly, with life-changing impacts. But there are also more hidden, insidious ways that your personal data can be exploited. For example, most of us use an Internet Service Provider (ISP) to connect to the Internet. Some of those ISPs are collecting information about your Internet viewing habits, your search histories, your location, etc. – all of which can impact the privacy of your personal information as you are targeted with ads based on your online habits.

You also probably haven’t made it to 2023 without hearing at least something about Internet privacy laws around the globe. In some jurisdictions, lawmakers are driven by a recognition that the right to privacy is a fundamental human right. In other locations, lawmakers are passing laws to address the harms their citizens are concerned about – data breaches and mining of data about private details of people’s lives to sell targeted advertising. At the core of most of this legislation is an effort to give users more control over their personal data. And many of these regulations require data controllers to ensure adequate protections are in place for cross-border data transfers. In recent years, we’ve seen an increasing number of regulators interpreting these regulations in a way that would leave no room for cross-border data transfers, however. These interpretations are problematic – not only are they harmful to global commerce, but they also disregard the idea that data might be more secure if cross-border data transfers are allowed. Some regulators instead assert that personal data will be safer if it stays within their borders because their law protects privacy better than that of another jurisdiction.

So with Data Privacy Day 2023 just a few days away on January 28, we think it’s important to focus on all the ways security measures and privacy-enhancing technologies help keep personal data private and why security measures are so much more critical to protecting privacy than merely implementing the requirements of data protection laws or keeping data in a jurisdiction because regulators think that jurisdiction has stronger laws than another.

Most data protection regulations recognize the role security plays in protecting the privacy of personal information. That’s not surprising. An entity’s efforts to follow a data protection law’s requirements for how personal data should be collected and used won’t mean much if a third party can access the data for their own malicious purposes.

The laws themselves provide few specifics about what security is required. For example, the EU General Data Protection Regulation (“GDPR”) and similar comprehensive privacy laws in other jurisdictions require data controllers (the entities that collect your data) to implement “reasonable and appropriate” security measures. But it’s almost impossible for regulators to require specific security measures because the security landscape changes so quickly. In the United States, state security breach laws don’t require notification if the data obtained is encrypted, suggesting that encryption is at least one way regulators think data should be protected.

Enforcement actions brought by regulators against companies that have experienced data breaches provide other clues for what regulators think are “best practices” for ensuring data protection. For example, on January 10 of this year, the U.S. Federal Trade Commission entered into a consent order with Drizly, an online alcohol sales and delivery platform, outlining a number of security failures that led to a data breach that exposed the personal information of about 2.5 million Drizly users and requiring Drizly to implement a comprehensive security program that includes a long list of intrusion detection and logging procedures. In particular, the FTC specifically requires Drizly to implement “…(c) data loss prevention tools; [and] (d) properly configured firewalls” among other measures.

What many regulatory post-breach enforcement actions have in common is the requirement of a comprehensive security program that includes a number of technical measures to protect data from third parties who might seek access to it. The enforcement actions tend to be data location-agnostic, however. It’s not important where the data might be stored – what is important is that the right security measures are in place. We couldn’t agree more wholeheartedly.

Cloudflare’s portfolio of products and services helps our customers put protections in place to thwart would-be attackers from accessing their websites or corporate networks. By making it less likely that users’ data will be accessed by malicious actors, Cloudflare’s services can help organizations save millions of dollars, protect their brand reputations, and build trust with their users. We also spend a great deal of time working to develop privacy-enhancing technologies that directly support the ability of individual users to have a more privacy-preserving experience on the Internet.

Cloudflare is most well-known for its application layer security services – Web Application Firewall (WAF), bot management, DDoS protection, SSL/TLS, Page Shield, and more. As the FTC noted in its Drizly consent order, firewalls can be a critical line of defense for any online application. Think about what happens when you go through security at an airport – your body and your bags are scanned for something bad that might be there (e.g. weapons or explosives), but the airport security personnel are not inventorying or recording the contents of your bags. They’re simply looking for dangerous content to make sure it doesn’t make its way onto an airplane. In the same way, the WAF looks at packets as they are being routed through Cloudflare’s network to make sure the Internet equivalent of weapons and explosives is not delivered to a web application. Governments around the globe have agreed that these quick security scans at the airport are necessary to protect us all from bad actors. Internet traffic is the same.

We embrace the critical importance of encryption in transit. In fact, we see encryption as so important that in 2014, Cloudflare introduced Universal SSL to support SSL (and now TLS) connections to every Cloudflare customer. And at the same time, we recognize that blindly passing along encrypted packets would undercut some of the very security that we’re trying to provide. Data privacy and security are a balance. If we let encrypted malicious code get to an end destination, then the malicious code may be used to access information that should otherwise have been protected. If data isn’t encrypted in transit, it’s at risk for interception. But by supporting encryption in transit and ensuring malicious code doesn’t get to its intended destination, we can protect private personal information even more effectively.

Let’s take another example – In June 2022, Atlassian released a Security Advisory relating to a remote code execution (RCE) vulnerability affecting Confluence Server and Confluence Data Center products. Cloudflare responded immediately to roll out a new WAF rule for all of our customers. For customers without this WAF protection, all the trade secret and personal information on their instances of Confluence were potentially vulnerable to data breach. These types of security measures are critical to protecting personal data. And it wouldn’t have mattered if the personal data were stored on a server in Australia, Germany, the U.S., or India – the RCE vulnerability would have exposed data wherever it was stored. Instead, the data was protected because a global network was able to roll out a WAF rule immediately to protect all of its customers globally.
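To make the idea of a centrally deployed WAF rule concrete, here is a minimal sketch in Python. It is an illustration only, not Cloudflare's actual rule or rule engine: the rule name, the pattern, and the `inspect` function are all hypothetical. The point is that the check runs on the request itself, so it applies no matter which country the origin server sits in.

```python
import re
from urllib.parse import unquote

# Hypothetical rule set: (rule name, pattern applied to the decoded request path).
# The pattern is a simplified stand-in for an expression-injection signature and
# is NOT the rule Cloudflare deployed.
RULES = [
    ("block-template-injection", re.compile(r"\$\{.*\}")),
]

def inspect(path: str):
    """Return ("block", rule_name) if any rule matches, else ("allow", None)."""
    decoded = unquote(path)  # attackers often percent-encode payloads
    for name, pattern in RULES:
        if pattern.search(decoded):
            return ("block", name)
    return ("allow", None)

if __name__ == "__main__":
    for path in ["/pages/viewpage.action?pageId=1", "/%24%7B1+1%7D/"]:
        print(path, "->", inspect(path))
```

Adding a new entry to `RULES` in one place protects every application behind the filter at once, which is the property the paragraph above relies on.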

The power of a large, global network is often overlooked when we think about using security measures to protect the privacy of personal data. Regulators who would seek to wall off their countries from the rest of the world as a method of protecting data privacy often miss how such a move can impact the security measures that are even more critical to keeping private data protected from bad actors.

Global knowledge is necessary to stop attacks that could come from anywhere in the world. Just as an international network of counterterrorism units helps to prevent physical threats, the same approach is needed to prevent cyberthreats. The most powerful security tools are built upon identified patterns of anomalous traffic, coming from all over the world. Cloudflare’s global network puts us in a unique position to understand the evolution of global threats and anomalous behaviors. To empower our customers with preventative and responsive cybersecurity, we transform global learnings into protections, while still maintaining the privacy of good-faith Internet users.

For example, Cloudflare’s tools to block threats at the DNS or HTTP level, including DDoS protection for websites and Gateway for enterprises, allow users to further secure their entities beyond customized traffic rules by screening for patterns of traffic known to contain phishing or malware content. We use our global network to improve our identification of vulnerabilities and malicious content and to roll out rules in real time that protect everyone. This ability to identify and instantly protect our customers from security vulnerabilities that they may not have yet had time to address reduces the possibility that their data will be compromised or that they will otherwise be subjected to nefarious activity.

Similarly, Cloudflare’s Bot Management product only increases in accuracy with continued use on the global network: it detects and blocks traffic coming from likely bots before feeding back learnings to the models backing the product. And most importantly, we minimize the amount of information used to detect these threats by fingerprinting traffic patterns and forgoing reliance on PII. Our Bot Management products are successful because of the sheer number of customers and amount of traffic on our network. With approximately 20 percent of all websites protected by Cloudflare, we are uniquely positioned to gather the signals that traffic is from a bad bot and interpret them into actionable intelligence. This diversity of signal and scale of data on a global platform is critical to help us continue to evolve our bot detection tools. If the Internet were fragmented – preventing data from one jurisdiction being used in another – more and more signals would be missed. We wouldn’t be able to apply learnings from bot trends in Asia to bot mitigation efforts in Europe, for example.

A global network is equally important for resilience and effective security protection, a reality that the war in Ukraine has brought into sharp relief. In order to keep their data safe, the Ukrainian government was required to change their laws to remove data localization requirements. As Ukraine’s infrastructure came under attack during Russia’s invasion, the Ukrainian government migrated their data to the cloud, allowing it to be preserved and easily moved to safety in other parts of Europe. Likewise, Cloudflare’s global network played an important role in helping maintain Internet access inside Ukraine. Sites in Ukraine at times came under heavy DDoS attack, even as infrastructure was being destroyed by physical attacks. With bandwidth limited, it was important that the traffic that was getting through inside Ukraine was useful traffic, not attack traffic. Instead of allowing attack traffic inside Ukraine, Cloudflare’s global network identified it and rejected it in the countries where the attacks originated. Without the ability to inspect and reject traffic outside of Ukraine, the attack traffic would have further congested networks inside Ukraine, limiting network capacity for critical wartime communications.

Although the situation in Ukraine reflects the country’s wartime posture, Cloudflare’s global network provides the same security benefits for all of our customers. We use our entire network to deliver DDoS mitigation, with a network capacity of over 172 Tbps, making it possible for our customers to stay online even in the face of the largest attacks. That enormous capacity to protect customers from attack is the result of the global nature of Cloudflare’s network, aided by the ability to restrict attack traffic to the countries where it originated. And a network that stays online is less likely to have to address the network intrusions and data loss that are frequently connected to successful DDoS attacks.

Some of the biggest data breaches in recent years have happened as a result of something pretty simple – an attacker uses a phishing email or social engineering to get an employee of a company to visit a site that infects the employee’s computer with malware or enter their credentials on a fake site that lets the bad actor capture the credentials and then use those to impersonate the employee and log into a company’s systems. Depending on the type of information compromised, these kinds of data breaches can have a huge impact on individuals’ privacy. For this reason, Cloudflare has invested in a number of technologies designed to protect corporate networks, and the personal data on those networks.

As we noted during our recent CIO week, the FBI’s latest Internet Crime Report shows that business email compromise and email account compromise, a subset of malicious phishing campaigns, are the most costly – with U.S. businesses losing nearly $2.4 billion. Cloudflare has invested in a number of Zero Trust solutions to help fight this very problem.

These Zero Trust tools, combined with the use of hardware keys for multi-factor authentication, were key in Cloudflare’s ability to prevent a breach by an SMS phishing attack that targeted more than 130 companies in July and August 2022. Many of these companies reported the disclosure of customer personal information as a result of employees falling victim to this SMS phishing effort.

And remember the Atlassian Confluence RCE vulnerability we mentioned earlier? Cloudflare remained protected not only due to our rapid update of our WAF rules, but also because we use our own Cloudflare Access solution (part of our Zero Trust suite) to ensure that only individuals with Cloudflare credentials are able to access our internal systems. Cloudflare Access verified every request made to a Confluence application to ensure it was coming from an authenticated user.
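The "verify every request" idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how Cloudflare Access is implemented: the shared secret, the token format, and the `sign`, `verify`, and `handle` helpers are all hypothetical. What it shows is that the application is never reached unless the request carries a credential the gatekeeper can validate.

```python
import hashlib
import hmac
from typing import Optional

# Demo-only secret; a real deployment would use managed keys, not a constant.
SECRET = b"demo-only-secret"

def sign(user: str) -> str:
    """Issue a hypothetical token of the form '<user>.<hex HMAC of user>'."""
    mac = hmac.new(SECRET, user.encode(), hashlib.sha256).hexdigest()
    return f"{user}.{mac}"

def verify(token: str) -> Optional[str]:
    """Return the user name if the token's MAC is valid, otherwise None."""
    user, _, mac = token.rpartition(".")
    expected = hmac.new(SECRET, user.encode(), hashlib.sha256).hexdigest()
    if user and hmac.compare_digest(mac.encode(), expected.encode()):
        return user
    return None

def handle(request: dict) -> int:
    """Check the credential on every request before the app is ever reached."""
    if verify(request.get("token", "")) is None:
        return 403  # unauthenticated: rejected at the gate
    return 200      # authenticated: forwarded to the internal application

if __name__ == "__main__":
    print(handle({"token": sign("alice")}))
    print(handle({"token": "alice.forged"}))
```

Because the check sits in front of the application, an unauthenticated attacker cannot even deliver an exploit payload to it, regardless of whether the application itself has been patched.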

All of these Zero Trust solutions require sophisticated machine learning to detect patterns of malicious activity, and none of them require data to be stored in a specific location to keep the data safe. Thwarting these kinds of security threats isn’t only important for protecting organizations’ internal networks from intrusion – it is critical for keeping large scale data sets private for the benefit of millions of individuals.

Cloudflare’s security services enable our customers to screen for cybersecurity risks on Cloudflare’s network before those risks can reach the customer’s internal network. This helps protect our customers and our customers’ data from a range of cyber threats. By doing so, Cloudflare’s services are essentially fulfilling a privacy-enhancing function in themselves. From the beginning, we have built our systems to ensure that data is kept private, even from us, and we have made public policy and contractual commitments about keeping that data private and secure. But beyond securing our network for the benefit of our customers, we’ve invested heavily in new technologies that aim to secure communications from bad actors; the prying eyes of ISPs or other man-in-the-middle machines that might find your Internet communications of interest for advertising purposes; or government entities that might want to crack down on individuals exercising their freedom of speech.

For example, Cloudflare operates part of Apple’s iCloud Private Relay system, which ensures that no single party handling user data has complete information on both who the user is and what they are trying to access. Instead, a user’s original IP address is visible to the access network (e.g. the coffee shop you’re sitting in, or your home ISP) and the first relay (operated by Apple), but the server or website name is encrypted and not visible to either. The first relay hands encrypted data to a second relay (e.g. Cloudflare), but is unable to see “inside” the traffic to Cloudflare. And the Cloudflare-operated relays know only that they are receiving traffic from a Private Relay user, but not specifically who the user is or what their client IP address is. Cloudflare relays then forward traffic on to the destination server.
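The split of knowledge between the two relays can be modelled with a small Python sketch. It is a toy model and not the Private Relay protocol: there is no real cryptography here, and the `Sealed` class and the relay functions are hypothetical stand-ins that only track who is able to read what.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Sealed:
    """Toy stand-in for ciphertext: only the named recipient can open it."""
    recipient: str
    payload: str = field(repr=False)

    def open(self, holder: str) -> str:
        if holder != self.recipient:
            raise PermissionError(f"{holder} cannot read data sealed for {self.recipient}")
        return self.payload

def client_send(client_ip: str, destination: str):
    # The client seals the destination so that only the second relay can read it.
    return client_ip, Sealed("second_relay", destination)

def first_relay(client_ip: str, sealed: Sealed):
    # Knows who is asking, but cannot see where the traffic is going.
    seen = {"client_ip": client_ip, "destination": None}
    return seen, sealed  # forwards the sealed data without the client IP

def second_relay(sealed: Sealed):
    # Knows where the traffic is going, but never receives the client IP.
    return {"client_ip": None, "destination": sealed.open("second_relay")}

if __name__ == "__main__":
    ip, sealed = client_send("203.0.113.7", "example.com")
    first_seen, forwarded = first_relay(ip, sealed)
    print("first relay sees:", first_seen)
    print("second relay sees:", second_relay(forwarded))
```

Neither dictionary ever holds both fields at once, which is the property the paragraph above describes: identifying a user's activity would require both relays to combine what they know.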

And of course any post on how security measures enable greater data privacy would be remiss if it failed to mention Cloudflare’s privacy-first 1.1.1.1 public resolver. By using 1.1.1.1, individuals can search the Internet without their ISPs seeing where they are going. Unlike most DNS resolvers, 1.1.1.1 does not sell user data to advertisers.

Together, these many technologies and security measures ensure the privacy of personal data from many types of threats to privacy – behavioral advertising, man-in-the-middle attacks, malicious code, and more. On this Data Privacy Day 2023, we urge regulators to recognize that the emphasis currently being placed on data localization has perhaps gone too far – and has foreclosed the many benefits cross-border data transfers can have for data security and, therefore, data privacy.

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2023/01/bulk-surveillance-of-money-transfers.html

Just another obscure warrantless surveillance program.

US law enforcement can access details of money transfers without a warrant through an obscure surveillance program the Arizona attorney general’s office created in 2014. A database stored at a nonprofit, the Transaction Record Analysis Center (TRAC), provides full names and amounts for larger transfers (above $500) sent between the US, Mexico and 22 other regions through services like Western Union, MoneyGram and Viamericas. The program covers data for numerous Caribbean and Latin American countries in addition to Canada, China, France, Malaysia, Spain, Thailand, Ukraine and the US Virgin Islands. Some domestic transfers also enter the data set.

[…]

You need to be a member of law enforcement with an active government email account to use the database, which is available through a publicly visible web portal. Leber told The Journal that there haven’t been any known breaches or instances of law enforcement misuse. However, Wyden noted that the surveillance program included more states and countries than previously mentioned in briefings. There have also been subpoenas for bulk money transfer data from Homeland Security Investigations (which withdrew its request after Wyden’s inquiry), the DEA and the FBI.

How is it that Arizona can be in charge of this?

Wall Street Journal podcast—with transcript—on the program. I think the original reporting was from last March, but I missed it back then.

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2023/01/the-fbi-identified-a-tor-user.html

No details, though:

According to the complaint against him, Al-Azhari allegedly visited a dark web site that hosts “unofficial propaganda and photographs related to ISIS” multiple times on May 14, 2019. In virtue of being a dark web site—that is, one hosted on the Tor anonymity network—it should have been difficult for the site owner’s or a third party to determine the real IP address of any of the site’s visitors.

Yet, that’s exactly what the FBI did. It found Al-Azhari allegedly visited the site from an IP address associated with Al-Azhari’s grandmother’s house in Riverside, California. The FBI also found what specific pages Al-Azhari visited, including a section on donating Bitcoin; another focused on military operations conducted by ISIS fighters in Iraq, Syria, and Nigeria; and another page that provided links to material from ISIS’s media arm. Without the FBI deploying some form of surveillance technique, or Al-Azhari using another method to visit the site which exposed their IP address, this should not have been possible.

There are lots of ways to de-anonymize Tor users. Someone at the NSA gave a presentation on this ten years ago. (I wrote about it for the Guardian in 2013, an essay that reads so dated in light of what we’ve learned since then.) It’s unlikely that the FBI uses the same sorts of broad surveillance techniques that the NSA does, but it’s certainly possible that the NSA did the surveillance and passed the information to the FBI.

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2023/01/threats-of-machine-generated-text.html

With the release of ChatGPT, I’ve read many random articles about this or that threat from the technology. This paper is a good survey of the field: what the threats are, how we might detect machine-generated text, directions for future research. It’s a solid grounding amongst all of the hype.

Machine Generated Text: A Comprehensive Survey of Threat Models and Detection Methods

Abstract: Advances in natural language generation (NLG) have resulted in machine generated text that is increasingly difficult to distinguish from human authored text. Powerful open-source models are freely available, and user-friendly tools democratizing access to generative models are proliferating. The great potential of state-of-the-art NLG systems is tempered by the multitude of avenues for abuse. Detection of machine generated text is a key countermeasure for reducing abuse of NLG models, with significant technical challenges and numerous open problems. We provide a survey that includes both 1) an extensive analysis of threat models posed by contemporary NLG systems, and 2) the most complete review of machine generated text detection methods to date. This survey places machine generated text within its cybersecurity and social context, and provides strong guidance for future work addressing the most critical threat models, and ensuring detection systems themselves demonstrate trustworthiness through fairness, robustness, and accountability.

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2023/01/experian-privacy-vulnerability.html

Brian Krebs is reporting on a vulnerability in Experian’s website:

Identity thieves have been exploiting a glaring security weakness in the website of Experian, one of the big three consumer credit reporting bureaus. Normally, Experian requires that those seeking a copy of their credit report successfully answer several multiple choice questions about their financial history. But until the end of 2022, Experian’s website allowed anyone to bypass these questions and go straight to the consumer’s report. All that was needed was the person’s name, address, birthday and Social Security number.

Post Syndicated from Mark Nottingham original https://blog.cloudflare.com/the-state-of-http-in-2022/

At over thirty years old, HTTP is still the foundation of the web and one of the Internet’s most popular protocols—not just for browsing, watching videos and listening to music, but also for apps, machine-to-machine communication, and even as a basis for building other protocols, forming what some refer to as a “second waist” in the classic Internet hourglass diagram.

What makes HTTP so successful? One answer is that it hits a “sweet spot” for most applications that need an application protocol. “Building Protocols with HTTP” (published in 2022 as a Best Current Practice RFC by the HTTP Working Group) argues that HTTP’s success can be attributed to factors like:

– familiarity by implementers, specifiers, administrators, developers, and users;

– availability of a variety of client, server, and proxy implementations;

– ease of use;

– availability of web browsers;

– reuse of existing mechanisms like authentication and encryption;

– presence of HTTP servers and clients in target deployments; and

– its ability to traverse firewalls.

Another important factor is the community of people using, implementing, and standardising HTTP. We work together to maintain and develop the protocol actively, to assure that it’s interoperable and meets today’s needs. If HTTP stagnates, another protocol will (justifiably) replace it, and we’ll lose all the community’s investment, shared understanding and interoperability.

Cloudflare and many others do this by sending engineers to participate in the IETF, where most Internet protocols are discussed and standardised. We also attend and sponsor community events like the HTTP Workshop to have conversations about what problems people have and what they need, and to understand what changes might help them.

So what happened at all of those working group meetings, specification documents, and side events in 2022? What are implementers and deployers of the web’s protocol doing? And what’s coming next?

Specification-wise, the biggest thing to happen in 2022 was the publication of HTTP/3, because it was an enormous step towards keeping up with the requirements of modern applications and sites by using the network more efficiently to unblock web performance.

Way back in the 90s, HTTP/0.9 and HTTP/1.0 used a new TCP connection for each request—an astoundingly inefficient use of the network. HTTP/1.1 introduced persistent connections (which were backported to HTTP/1.0 with the `Connection: Keep-Alive` header). This was an improvement that helped servers and the network cope with the explosive popularity of the web, but even back then, the community knew it had significant limitations—in particular, head-of-line blocking (where one outstanding request on a connection blocks others from completing).

That didn’t matter so much in the 90s and early 2000s, but today’s web pages and applications place demands on the network that make these limitations performance-critical. Pages often have hundreds of assets that all compete for network resources, and HTTP/1.1 wasn’t up to the task. After some false starts, the community finally addressed these issues with HTTP/2 in 2015.

However, removing head-of-line blocking in HTTP exposed the same problem one layer lower, in TCP. Because TCP is an in-order, reliable delivery protocol, loss of a single packet in a flow can block access to those after it—even if they’re sitting in the operating system’s buffers. This turns out to be a real issue for HTTP/2 deployment, especially on less-than-optimal networks.
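To make the TCP-level blocking concrete, here is a minimal sketch of an in-order receiver. It is a toy model rather than real TCP: the function name `in_order_delivery` and the use of small integer sequence numbers are assumptions made for illustration.

```python
def in_order_delivery(arrivals):
    """Return, for each arrival event, the packets handed to the application.

    `arrivals` is the order in which packet sequence numbers (starting at 0)
    reach the receiver. A packet is delivered only after every earlier packet
    has been delivered, which is how an in-order, reliable transport behaves.
    """
    buffered = set()
    next_expected = 0
    timeline = []
    for seq in arrivals:
        buffered.add(seq)
        delivered = []
        # Drain everything that is now contiguous with what was delivered.
        while next_expected in buffered:
            buffered.remove(next_expected)
            delivered.append(next_expected)
            next_expected += 1
        timeline.append(delivered)
    return timeline


# Packet 1 is lost and only arrives after a retransmission. Packets 2 and 3
# are already in the buffer but cannot be delivered until packet 1 shows up.
print(in_order_delivery([0, 2, 3, 1]))  # [[0], [], [], [1, 2, 3]]
```

Even if packets 2 and 3 belong to a different HTTP/2 stream than packet 1, they wait in the buffer all the same, because the transport has no notion of streams.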

The answer, of course, was to replace TCP—the venerable transport protocol that so much of the Internet is built upon. After much discussion and many drafts in the QUIC Working Group, QUIC version 1 was published as that replacement in 2021.

HTTP/3 is the version of HTTP that uses QUIC. While the working group effectively finished it in 2021 along with QUIC, its publication was held until 2022 to synchronise with the publication of other documents (see below). 2022 was also a milestone year for HTTP/3 deployment; Cloudflare saw increasing adoption and confidence in the new protocol.

While there was only a brief gap of a few years between HTTP/2 and HTTP/3, there isn’t much appetite for working on HTTP/4 in the community soon. QUIC and HTTP/3 are both new, and the world is still learning how best to implement the protocols, operate them, and build sites and applications using them. While we can’t rule out a limitation that will force a new version in the future, the IETF built these protocols based upon broad industry experience with modern networks, and they have significant extensibility available to ease any necessary changes.

The other headline event for HTTP specs in 2022 was the publication of its “core” documents — the heart of HTTP’s specification. The core comprises: HTTP Semantics – things like methods, headers, status codes, and the message format; HTTP Caching – how HTTP caches work; HTTP/1.1 – mapping semantics to the wire, using the format everyone knows and loves.

Additionally, HTTP/2 was republished to properly integrate with the Semantics document, and to fix a few outstanding issues.

This is the latest in a long series of revisions for these documents—in the past, we’ve had the RFC 723x series, the (perhaps most well-known) RFC 2616, RFC 2068, and the grandparent of them all, RFC 1945. Each revision has aimed to improve readability, fix errors, explain concepts better, and clarify protocol operation. Poorly specified (or implemented) features are deprecated; new features that improve protocol operation are added. See the ‘Changes from…’ appendix in each document for the details. And, importantly, always refer to the latest revisions linked above; the older RFCs are now obsolete.

HTTP/2 included server push, a feature designed to allow servers to “push” a request/response pair to clients when they knew the client was going to need something, so it could avoid the latency penalty of making a request and waiting for the response.

After HTTP/2 was finalised in 2015, Cloudflare and many other HTTP implementations soon rolled out server push in anticipation of big performance wins. Unfortunately, it turned out that’s harder than it looks; server push effectively requires the server to predict the future—not only what requests the client will send but also what the network conditions will be. And, when the server gets it wrong (“over-pushing”), the pushed requests directly compete with the real requests that the browser is making, representing a significant opportunity cost with real potential for harming performance, rather than helping it. The impact is even worse when the browser already has a copy in cache, so it doesn’t need the push at all.

As a result, Chrome removed HTTP/2 server push in 2022. Other browsers and servers might still support it, but the community seems to agree that it’s only suitable for specialised uses currently, like the browser notification-specific Web Push Protocol.

That doesn’t mean that we’re giving up, however. The 103 (Early Hints) status code was published as an Experimental RFC by the HTTP Working Group in 2017. It allows a server to send hints to the browser in a non-final response, before the “real” final response. That’s useful if you know that the content is going to include some links to resources that the browser will fetch, but need more time to get the response to the client (because it will take more time to generate, or because the server needs to fetch it from somewhere else, like a CDN does).
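As a rough illustration of what this looks like on the wire, the sketch below builds the bytes an HTTP/1.1 server might write for a single request: a 103 response carrying `Link` headers, followed by the final 200 response. The helper name `early_hints_exchange` and the example paths are hypothetical; a real server would send the first part immediately and the second only once the content is ready.

```python
def early_hints_exchange(preload_links, body):
    """Build a 103 (Early Hints) response followed by the final 200 response.

    `preload_links` is a list of (url, kind) pairs, e.g. ("/style.css", "style").
    """
    hint_lines = ["HTTP/1.1 103 Early Hints"]
    for url, kind in preload_links:
        hint_lines.append(f"Link: <{url}>; rel=preload; as={kind}")
    # A blank line ends the non-final response; it has no body.
    early = "\r\n".join(hint_lines) + "\r\n\r\n"

    payload = body.encode("utf-8")
    final = (
        "HTTP/1.1 200 OK\r\n"
        "Content-Type: text/html; charset=utf-8\r\n"
        f"Content-Length: {len(payload)}\r\n"
        "\r\n"
    )
    return early.encode("ascii") + final.encode("ascii") + payload


wire = early_hints_exchange(
    [("/style.css", "style"), ("/app.js", "script")],
    "<!doctype html><title>hello</title>",
)
print(wire.decode("utf-8"))
```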

Early Hints can be used in many situations that server push was designed for — for example, when you have CSS and JavaScript that a page is going to need to load. In theory, they’re not as optimal as server push, because they only allow hints to be sent when there’s an outstanding request, and because getting the hints to the client and acted upon adds some latency.

In practice, however, Cloudflare and our partners (like Shopify and Google) spent 2022 experimenting with Early Hints and finding them much safer to use, with promising performance benefits that include significant reductions in key web performance metrics.

We’re excited about the potential that Early Hints show; so excited that we’ve integrated them into Cloudflare Pages. We’re also evaluating new ways to improve performance using this new capability in the protocol.

For many, the most exciting HTTP protocol extensions in 2022 focused on intermediation—the ability to insert proxies, gateways, and similar components into the protocol to achieve specific goals, often focused on improving privacy.

The MASQUE Working Group, for example, is an effort to add new tunneling capabilities to HTTP, so that an intermediary can pass the tunneled traffic along to another server.

While CONNECT has enabled TCP tunnels for a long time, MASQUE enabled UDP tunnels, allowing more protocols to be tunneled more efficiently–including QUIC and HTTP/3.

At Cloudflare, we’re enthusiastic to be working with Apple to use MASQUE to implement iCloud Private Relay and enhance their customers’ privacy without relying solely on one company. We’re also very interested in the Working Group’s future work, including IP tunneling that will enable MASQUE-based VPNs.

Another intermediation-focused specification is Oblivious HTTP (or OHTTP). OHTTP uses sets of intermediaries to prevent the server from using connections or IP addresses to track clients, giving greater privacy assurances for things like collecting telemetry or other sensitive data. This specification is just finishing the standards process, and we’re using it to build an important new product, Privacy Gateway, to protect the privacy of our customers’ customers.

We and many others in the Internet community believe that this is just the start, because intermediation can partition communication, a valuable tool for improving privacy.

Finally, 2022 saw a lot of work on security-related aspects of HTTP. The Digest Fields specification is an update to the now-ancient `Digest` header field, allowing integrity digests to be added to messages. The HTTP Message Signatures specification enables cryptographic signatures on requests and responses — something that has widespread ad hoc deployment, but until now has lacked a standard. Both specifications are in the final stages of standardisation.
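For a sense of what an integrity digest looks like, here is a minimal sketch that computes a value for the `Content-Digest` field as we understand the Digest Fields specification: the algorithm name, then the base64-encoded hash wrapped in colons. The helper name `content_digest` is an assumption for illustration, and the specification remains the authority on the exact syntax.

```python
import base64
import hashlib


def content_digest(body: bytes) -> str:
    """Return a Content-Digest field value using SHA-256 over the body bytes."""
    digest = hashlib.sha256(body).digest()
    encoded = base64.b64encode(digest).decode("ascii")
    # The colons delimit a byte sequence in Structured Fields syntax.
    return f"sha-256=:{encoded}:"


body = b'{"hello": "world"}'
print("Content-Digest:", content_digest(body))
```

A recipient can recompute the hash over the content it received and compare the result with the field value to detect corruption.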

A revision of the Cookie specification also saw a lot of progress in 2022, and should be final soon. Since it’s not possible to get rid of cookies completely any time soon, much work has taken place to limit how they operate in order to improve privacy and security, including a new `SameSite` attribute.
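As a small example of the attribute in use, the sketch below builds a `Set-Cookie` header with Python’s standard `http.cookies` module, which accepts `samesite` from Python 3.8 onwards. The cookie name, value and helper name are made up for illustration.

```python
from http.cookies import SimpleCookie


def session_cookie_header(name, value, samesite="Lax"):
    """Return a Set-Cookie header line carrying a SameSite attribute."""
    cookie = SimpleCookie()
    cookie[name] = value
    cookie[name]["samesite"] = samesite  # restricts sending on cross-site requests
    cookie[name]["secure"] = True        # only send over HTTPS
    cookie[name]["httponly"] = True      # hide from page scripts
    return cookie.output()


print(session_cookie_header("session", "abc123"))
```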

Another set of security-related specifications that Cloudflare has invested in for many years is Privacy Pass, also known as “Private Access Tokens.” These are cryptographic tokens that can assure clients are real people, not bots, without using an intrusive CAPTCHA, and without tracking the user’s activity online. In HTTP, they take the form of a new authentication scheme.

While Privacy Pass is still not quite through the standards process, 2022 saw its broad deployment by Apple, a huge step forward. And since Cloudflare uses it in Turnstile, our CAPTCHA alternative, your users can have a better experience today.

So, what’s next? Besides the specifications above that aren’t quite finished, the HTTP Working Group has a few other works in progress, including a QUERY method (think GET but with a body), Resumable Uploads (based on tus), Variants (an improved Vary header for caching), improvements to Structured Fields (including a new Date type), and a way to retrofit existing headers into Structured Fields. We’ll write more about these as they progress in 2023.

At the 2022 HTTP Workshop, the community also talked about what new work we can take on to improve the protocol. Some ideas discussed included improving our shared protocol testing infrastructure (right now we have a few resources, but it could be much better), improving (or replacing) Alternative Services to allow more intelligent and correct connection management, and more radical changes, like alternative, binary serialisations of headers.

There’s also a continuing discussion in the community about whether HTTP should accommodate pub/sub, or whether it should be standardised to work over WebSockets (and soon, WebTransport). Although it’s hard to say now, adjacent work on Media over QUIC that just started might provide an opportunity to push this forward.

Of course, that’s not everything, and what happens to HTTP in 2023 (and beyond) remains to be seen. HTTP is still evolving, even as it stays compatible with the largest distributed hypertext system ever conceived—the World Wide Web.

Post Syndicated from Hunts Chen original https://blog.cloudflare.com/the-as112-project/

Today, we’re excited to announce that Cloudflare is participating in the AS112 project, becoming an operator of this community-operated, loosely-coordinated anycast deployment of DNS servers that primarily answer reverse DNS lookup queries that are misdirected and create significant, unwanted load on the Internet.

With the addition of Cloudflare’s global network, we can make huge improvements to the stability, reliability and performance of this distributed public service.

The AS112 project is a community effort to run an important network service intended to handle reverse DNS lookup queries for private-only use addresses that should never appear in the public DNS system. In the seven days leading up to publication of this blog post, for example, Cloudflare’s 1.1.1.1 resolver received more than 98 billion of these queries — all of which have no useful answer in the Domain Name System.

Some history is useful for context. Internet Protocol (IP) addresses are essential to network communication. Many networks make use of IPv4 addresses that are reserved for private use, and devices in the network are able to connect to the Internet with the use of network address translation (NAT), a process that maps one or more local private addresses to one or more global IP addresses and vice versa before transferring the information.

Your home Internet router most likely does this for you. You will likely find that, when at home, your computer has an IP address like 192.168.1.42. That’s an example of a private use address that is fine to use at home, but can’t be used on the public Internet. Your home router translates it, through NAT, to an address your ISP assigned to your home and that can be used on the Internet.

Here are the reserved “private use” addresses designated in RFC 1918.

| Address block | Address range | Number of addresses |
|---|---|---|
| 10.0.0.0/8 | 10.0.0.0 – 10.255.255.255 | 16,777,216 |
| 172.16.0.0/12 | 172.16.0.0 – 172.31.255.255 | 1,048,576 |
| 192.168.0.0/16 | 192.168.0.0 – 192.168.255.255 | 65,536 |
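The ranges and counts in the table can be reproduced with Python’s standard `ipaddress` module. This is only a quick check; the helper name `rfc1918_block` is ours.

```python
import ipaddress

# The three private use blocks designated in RFC 1918.
PRIVATE_BLOCKS = [
    ipaddress.ip_network(block)
    for block in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def rfc1918_block(address):
    """Return the private use block containing `address`, or None."""
    ip = ipaddress.ip_address(address)
    for block in PRIVATE_BLOCKS:
        if ip in block:
            return block
    return None


for block in PRIVATE_BLOCKS:
    # First address, last address and size of each block.
    print(block, block[0], block[-1], block.num_addresses)

print(rfc1918_block("192.168.1.42"))  # 192.168.0.0/16
print(rfc1918_block("172.32.0.1"))    # None, just outside 172.16.0.0/12
```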

Although the reserved addresses themselves are blocked from ever appearing on the public Internet, devices and programs in private environments may occasionally originate DNS queries corresponding to those addresses. These are called “reverse lookups” because they ask the DNS if there is a name associated with an address.

A reverse DNS lookup is the opposite of the more commonly used forward DNS lookup (which is used every day to translate a name like www.cloudflare.com to its corresponding IP address). It is a query to look up the domain name associated with a given IP address, in particular those addresses associated with routers and switches. For example, network administrators and researchers use reverse lookups to help understand paths being taken by data packets in the network, and it’s much easier to understand meaningful names than meaningless numbers.

A reverse lookup is accomplished by querying DNS servers for a pointer (PTR) record. The name of a PTR record is formed by reversing the segments of the IP address and appending “.in-addr.arpa”. For example, the PTR record for the IP address 192.0.2.1 is stored at 1.2.0.192.in-addr.arpa. For IPv6, the address is written out as reversed hexadecimal digits and PTR records are stored within the “.ip6.arpa” domain instead of “.in-addr.arpa”. Below are some query examples using the dig command line tool.

```
# Look up the domain name associated with IPv4 address 172.64.35.46
# The "+short" option makes dig output only the short form of answers
$ dig @1.1.1.1 PTR 46.35.64.172.in-addr.arpa +short
hunts.ns.cloudflare.com.

# Or use the shortcut "-x" for reverse lookups
$ dig @1.1.1.1 -x 172.64.35.46 +short
hunts.ns.cloudflare.com.

# Look up the domain name associated with IPv6 address 2606:4700:58::a29f:2c2e
$ dig @1.1.1.1 PTR e.2.c.2.f.9.2.a.0.0.0.0.0.0.0.0.0.0.0.0.8.5.0.0.0.0.7.4.6.0.6.2.ip6.arpa. +short
hunts.ns.cloudflare.com.

# Or use the shortcut "-x" for reverse lookups
$ dig @1.1.1.1 -x 2606:4700:58::a29f:2c2e +short
hunts.ns.cloudflare.com.
```
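The name construction that `dig -x` performs can be sketched in a few lines of Python. The helper `ptr_name` is ours, written out by hand to show the steps; the standard library already offers the same result through the `reverse_pointer` attribute of `ipaddress` objects.

```python
import ipaddress


def ptr_name(address):
    """Build the reverse lookup name for an IPv4 or IPv6 address."""
    ip = ipaddress.ip_address(address)
    if ip.version == 4:
        # Reverse the four decimal segments.
        labels = str(ip).split(".")[::-1]
        return ".".join(labels) + ".in-addr.arpa"
    # IPv6: expand to all 32 hexadecimal digits, then reverse digit by digit.
    nibbles = ip.exploded.replace(":", "")[::-1]
    return ".".join(nibbles) + ".ip6.arpa"


print(ptr_name("192.0.2.1"))     # 1.2.0.192.in-addr.arpa
print(ptr_name("172.64.35.46"))  # 46.35.64.172.in-addr.arpa
print(ptr_name("2606:4700:58::a29f:2c2e"))
```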

The private use addresses concerned have only local significance and cannot be resolved by the public DNS. In other words, there is no way for the public DNS to provide a useful answer to a question that has no global meaning. It is therefore a good practice for network administrators to ensure that queries for private use addresses are answered locally. However, it is not uncommon for such queries to follow the normal delegation path in the public DNS instead of being answered within the network. That creates unnecessary load.

Because these addresses are private use by definition, they have no owner in the public sphere, so there are no authoritative DNS servers to answer the queries. In the very beginning, the root servers responded to all of these queries, since they served the IN-ADDR.ARPA zone.

Over time, due to the wide deployment of private use addresses and the continuing growth of the Internet, traffic on the IN-ADDR.ARPA DNS infrastructure grew and the load due to these junk queries started to cause some concern. Therefore, the idea of offloading IN-ADDR.ARPA queries related to private use addresses was proposed. The use of anycast to distribute authoritative DNS service for those queries was subsequently proposed at a private meeting of root server operators. Eventually, the AS112 service was launched to provide an alternative target for the junk.

To deal with this problem, the Internet community set up special DNS servers called “blackhole servers” as the authoritative name servers that respond to the reverse lookup of the private use address blocks 10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16 and the link-local address block 169.254.0.0/16 (which also has only local significance). Since the relevant zones are directly delegated to the blackhole servers, this approach has come to be known as Direct Delegation.
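To see which delegated zone a given address falls under, here is a minimal sketch. It assumes the zone cuts implied by the blocks above: one zone for 10.0.0.0/8, and one zone per second octet for the other blocks. The function name `as112_zone` is hypothetical.

```python
import ipaddress

# Blocks whose reverse zones are delegated per second octet.
PER_OCTET_BLOCKS = [
    ipaddress.ip_network(block)
    for block in ("172.16.0.0/12", "192.168.0.0/16", "169.254.0.0/16")
]


def as112_zone(address):
    """Return the reverse zone handling `address`, or None if it is not covered."""
    ip = ipaddress.ip_address(address)
    if ip.version != 4:
        return None
    first, second = str(ip).split(".")[:2]
    if ip in ipaddress.ip_network("10.0.0.0/8"):
        return "10.in-addr.arpa"
    if any(ip in block for block in PER_OCTET_BLOCKS):
        return f"{second}.{first}.in-addr.arpa"
    return None


print(as112_zone("192.168.1.1"))  # 168.192.in-addr.arpa
print(as112_zone("172.20.3.4"))   # 20.172.in-addr.arpa
print(as112_zone("8.8.8.8"))      # None
```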

The first two blackhole servers set up by the project are: blackhole-1.iana.org and blackhole-2.iana.org.

Any server, including a DNS name server, needs an IP address to be reachable. The IP address must also be associated with an Autonomous System Number (ASN) so that networks can recognize other networks and route data packets to the destination IP address. To solve the junk query problem, a new authoritative DNS service would be created but, to make it work, the community would have to designate IP addresses for the servers and, to facilitate their availability, an AS number that network operators could use to reach (or provide) the new service.

The selected AS number (provided by the American Registry for Internet Numbers), and namesake of the project, was 112. The project was started by a small subset of root server operators and later grew into a group of volunteer name server operators that includes many other organizations. They run anycasted instances of the blackhole servers that, together, form a distributed sink for the reverse DNS lookups for private network and link-local addresses sent to the public Internet.

A reverse DNS lookup for a private use address would see responses like in the example below, where the name server blackhole-1.iana.org is authoritative for it and says the name does not exist, represented in DNS responses by NXDOMAIN.

```
$ dig @blackhole-1.iana.org -x 192.168.1.1 +nord

;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NXDOMAIN, id: 23870
;; flags: qr aa; QUERY: 1, ANSWER: 0, AUTHORITY: 1, ADDITIONAL: 1

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;1.1.168.192.in-addr.arpa. IN PTR

;; AUTHORITY SECTION:
168.192.in-addr.arpa. 10800 IN SOA 168.192.in-addr.arpa. nobody.localhost. 42 86400 43200 604800 10800
```

At the beginning of the project, node operators set up the service in the direct delegation fashion (RFC 7534). However, adding delegations to this service requires all AS112 servers to be updated, which is difficult to ensure in a system that is only loosely-coordinated. An alternative approach using DNAME redirection was subsequently introduced by RFC 7535 to allow new zones to be added to the system without reconfiguring the blackhole servers.

DNS zones are directly delegated to the blackhole servers in this approach.

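RFC 7534 defines the static set of reverse lookup zones for which AS112 name servers should answer authoritatively. They are as follows: 10.in-addr.arpa, 16.172.in-addr.arpa through 31.172.in-addr.arpa, 168.192.in-addr.arpa, and 254.169.in-addr.arpa.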

Zone files for these zones are quite simple because essentially they are empty apart from the required SOA and NS records. A template of the zone file is defined as:

```
; db.dd-empty
;
; Empty zone for direct delegation AS112 service.
;
$TTL 1W
@ IN SOA prisoner.iana.org. hostmaster.root-servers.org. (
        1       ; serial number
        1W      ; refresh
        1M      ; retry
        1W      ; expire
        1W )    ; negative caching TTL
;
        NS blackhole-1.iana.org.
        NS blackhole-2.iana.org.
```

IP addresses of the direct delegation name servers are covered by the single IPv4 prefix 192.175.48.0/24 and the IPv6 prefix 2620:4f:8000::/48.

| Name server | IPv4 address | IPv6 address |
|---|---|---|
| blackhole-1.iana.org | 192.175.48.6 | 2620:4f:8000::6 |
| blackhole-2.iana.org | 192.175.48.42 | 2620:4f:8000::42 |

First, what is DNAME? Introduced by RFC 6672, a DNAME record, or Delegation Name record, creates an alias for an entire subtree of the domain name tree. In contrast, a CNAME record creates an alias for a single name and not its subdomains. For a received DNS query, the DNAME record instructs the name server to replace the part of the query name that matches the left-hand side (the owner name) with the right-hand side (the alias name). The substituted query name, as with a CNAME, may live within the zone or outside it.

As with a CNAME record, the DNS lookup continues by retrying the lookup with the substituted name. For example, if there are two DNS zones as follows:

```
# zone: example.com
www.example.com. A 203.0.113.1
foo.example.com. DNAME example.net.

# zone: example.net
example.net. A 203.0.113.2
bar.example.net. A 203.0.113.3
```

The query resolution scenarios would look like this:

| Query (Type + Name) | Substitution | Final result |
|---|---|---|
| A www.example.com | (no DNAME, doesn’t apply) | 203.0.113.1 |
| DNAME foo.example.com | (doesn’t apply to the owner name itself) | example.net |
| A foo.example.com | (doesn’t apply to the owner name itself) | NODATA (the name exists but has no A record) |
| A bar.foo.example.com | bar.example.net | 203.0.113.3 |
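The substitution can be sketched as a toy resolver over the two example zones. This is a simplification for illustration only: `ZONES`, `find_dname` and `resolve` are hypothetical names, all records live in one dictionary, and the sketch ignores details such as CNAME synthesis and empty non-terminals. A query for a record type that an existing name does not hold is reported here as NODATA, since the name itself exists.

```python
# Records from the example.com and example.net zones, keyed by owner name.
ZONES = {
    "www.example.com": {"A": "203.0.113.1"},
    "foo.example.com": {"DNAME": "example.net"},
    "example.net": {"A": "203.0.113.2"},
    "bar.example.net": {"A": "203.0.113.3"},
}


def find_dname(qname):
    """Return (owner, target) for a DNAME at a strict ancestor of qname, if any."""
    labels = qname.split(".")
    for i in range(1, len(labels)):  # start at 1: the owner name itself is skipped
        owner = ".".join(labels[i:])
        target = ZONES.get(owner, {}).get("DNAME")
        if target:
            return owner, target
    return None


def resolve(qtype, qname, max_hops=8):
    """Answer a query, following DNAME substitutions where they apply."""
    for _ in range(max_hops):
        records = ZONES.get(qname)
        if records is not None:
            return records.get(qtype, "NODATA")
        match = find_dname(qname)
        if match is None:
            return "NXDOMAIN"
        owner, target = match
        # Replace the matching suffix (owner name) with the DNAME target.
        qname = qname[: -len(owner)] + target
    return "SERVFAIL"


print(resolve("A", "www.example.com"))      # 203.0.113.1
print(resolve("DNAME", "foo.example.com"))  # example.net
print(resolve("A", "foo.example.com"))      # NODATA
print(resolve("A", "bar.foo.example.com"))  # 203.0.113.3
```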

RFC 7535 specifies adding another special zone, empty.as112.arpa, to support DNAME redirection for AS112 nodes. When there are new zones to be added, there is no need for AS112 node operators to update their configuration: instead, the zones’ parents will set up DNAME records for the new domains with the target domain empty.as112.arpa. The redirection (which can be cached and reused) causes clients to send future queries to the blackhole server that is authoritative for the target zone.

Note that blackhole servers do not have to support DNAME records themselves, but they do need to serve the new zone to which root servers will redirect queries. Because some existing node operators may not update their name server configuration, and in order not to interrupt the service, the zone was delegated to a new blackhole server instead: blackhole.as112.arpa.

This name server uses a new pair of IPv4 and IPv6 addresses, 192.31.196.1 and 2001:4:112::1, so queries involving DNAME redirection will only land on those nodes operated by entities that also set up the new name server. Since it is not necessary for all AS112 participants to reconfigure their servers to serve empty.as112.arpa from this new server for this system to work, it is compatible with the loose coordination of the system as a whole.

The zone file for empty.as112.arpa is defined as:

```
; db.dr-empty
;
; Empty zone for DNAME redirection AS112 service.
;
$TTL 1W
@ IN SOA blackhole.as112.arpa. noc.dns.icann.org. (
        1       ; serial number
        1W      ; refresh
        1M      ; retry
        1W      ; expire
        1W )    ; negative caching TTL
;
        NS blackhole.as112.arpa.
```

The addresses of the new DNAME redirection name server are covered by the single IPv4 prefix 192.31.196.0/24 and the IPv6 prefix 2001:4:112::/48.

| Name server | IPv4 address | IPv6 address |
|---|---|---|
| blackhole.as112.arpa | 192.31.196.1 | 2001:4:112::1 |

RFC 7534 recommends that every AS112 node also host the following metadata zones: hostname.as112.net and hostname.as112.arpa.

These zones only host TXT records and serve as identifiers for querying metadata information about an AS112 node. At Cloudflare nodes, the zone files look like this:

```
$ORIGIN hostname.as112.net.
;
$TTL 604800
;
@ IN SOA ns3.cloudflare.com. dns.cloudflare.com. (
        1        ; serial number
        604800   ; refresh
        60       ; retry
        604800   ; expire
        604800 ) ; negative caching TTL
;
        NS blackhole-1.iana.org.
        NS blackhole-2.iana.org.
;
        TXT "Cloudflare DNS, <DATA_CENTER_AIRPORT_CODE>"
        TXT "See http://www.as112.net/ for more information."
;

$ORIGIN hostname.as112.arpa.
;
$TTL 604800
;
@ IN SOA ns3.cloudflare.com. dns.cloudflare.com. (
        1        ; serial number
        604800   ; refresh
        60       ; retry
        604800   ; expire
        604800 ) ; negative caching TTL
;
        NS blackhole.as112.arpa.
;
        TXT "Cloudflare DNS, <DATA_CENTER_AIRPORT_CODE>"
        TXT "See http://www.as112.net/ for more information."
;
```

As the AS112 project helps reduce the load on public DNS infrastructure, it plays a vital role in maintaining the stability and efficiency of the Internet. Being a part of this project aligns with Cloudflare’s mission to help build a better Internet.

Cloudflare runs one of the fastest global anycast networks on the planet, and operates one of the largest, most performant and most reliable DNS services. We run authoritative DNS for millions of Internet properties globally. We also operate the privacy- and performance-focused public DNS resolver 1.1.1.1 service. Given our network presence and scale of operations, we believe we can make a meaningful contribution to the AS112 project.

We’ve publicly talked about the Cloudflare in-house built authoritative DNS server software, rrDNS, several times in the past, but haven’t talked much about the software we built to power the Cloudflare public resolver – 1.1.1.1. This is an opportunity to shed some light on the technology we used to build 1.1.1.1, because this AS112 service is built on top of the same platform.

We’ve created a platform to run DNS workloads. Today, it powers 1.1.1.1, 1.1.1.1 for Families, Oblivious DNS over HTTPS (ODoH), Cloudflare WARP and Cloudflare Gateway.

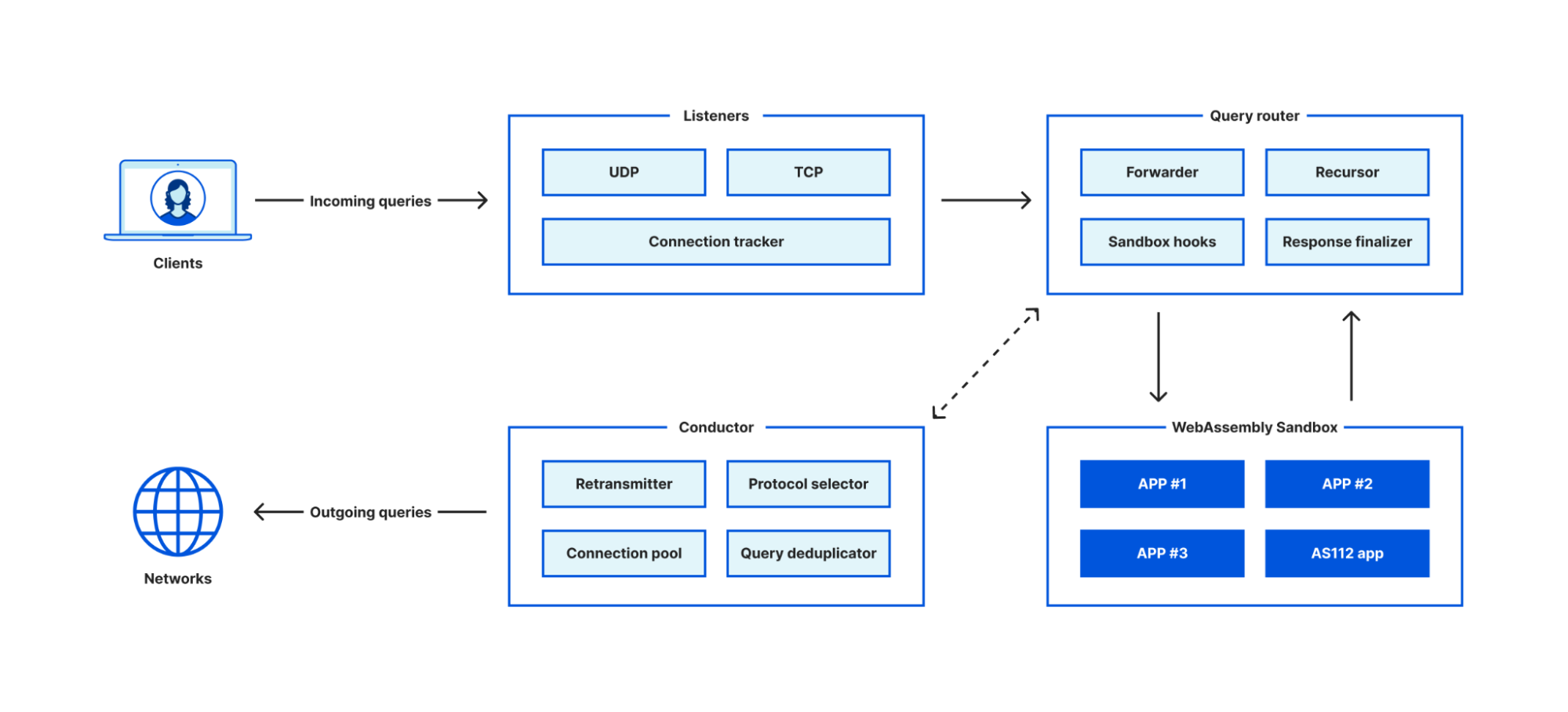

The core part of the platform is a non-traditional DNS server, which has a built-in DNS recursive resolver and a forwarder to forward queries to other servers. It consists of four key modules:

The DNS server itself doesn’t include any business logic. Instead, the guest applications that run in the sandbox environment implement concrete business logic such as request filtering, query processing, logging, attack mitigation, cache purging, etc.

The server is written in Rust and the sandbox environment is built on top of a WebAssembly runtime. The combination of Rust and WebAssembly allows us to implement highly efficient connection handling, request filtering and query dispatching modules, while having the flexibility to implement custom business logic in a safe and efficient manner.

The host exposes a set of APIs, called hostcalls, for the guest applications to accomplish a variety of tasks. You can think of them like syscalls on Linux. Here are a few examples of functions provided by the hostcalls:

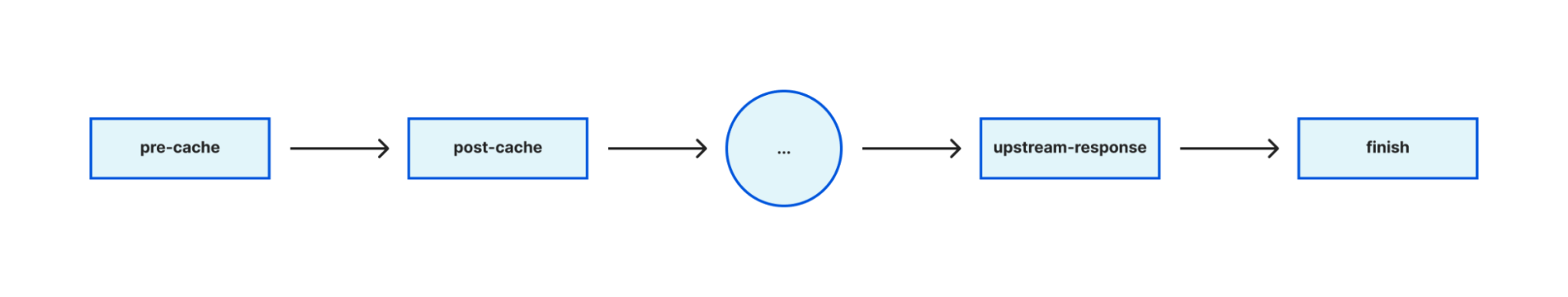

The DNS request lifecycle is broken down into phases. A request phase is a point in processing at which sandboxed apps can be called to change the course of request resolution. And each guest application can register callbacks for each phase.
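The phase and callback idea can be sketched as a small registry. This is a conceptual illustration in Python rather than the platform’s actual Rust and WebAssembly interface: the class name, the method names and the `pre-cache` phase are assumptions, and only `post-cache` is a phase name that appears in this post.

```python
from collections import defaultdict


class PhaseRegistry:
    """Run guest callbacks phase by phase until one of them produces a response."""

    def __init__(self, phases):
        self.phases = list(phases)
        self.callbacks = defaultdict(list)

    def register(self, phase, callback):
        if phase not in self.phases:
            raise ValueError(f"unknown phase: {phase}")
        self.callbacks[phase].append(callback)

    def run(self, request):
        for phase in self.phases:
            for callback in self.callbacks[phase]:
                response = callback(request)
                if response is not None:
                    # A callback changed the course of resolution.
                    return phase, response
        return None, None  # no app intervened; normal resolution continues


registry = PhaseRegistry(["pre-cache", "post-cache"])
registry.register(
    "post-cache",
    lambda qname: "NXDOMAIN" if qname.endswith(".in-addr.arpa") else None,
)
print(registry.run("1.1.168.192.in-addr.arpa"))  # ('post-cache', 'NXDOMAIN')
print(registry.run("example.com"))               # (None, None)
```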

The AS112 service is built as a guest application written in Rust and compiled to WebAssembly. The zones listed in RFC 7534 and RFC 7535 are loaded as static zones in memory and indexed as a tree data structure. Incoming queries are answered locally by looking up entries in the zone tree.
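As a rough picture of what indexing zones as a tree means, here is a minimal sketch of a label tree in which a query name is walked from the rightmost label towards the left to find the closest enclosing zone. The real application is written in Rust; the Python class and method names below are hypothetical.

```python
_APEX = object()  # sentinel key marking that a zone starts at this node


class ZoneTree:
    """Index zone names by label, from the rightmost label inwards."""

    def __init__(self):
        self.root = {}

    @staticmethod
    def _labels(name):
        return reversed(name.lower().rstrip(".").split("."))

    def add_zone(self, zone):
        node = self.root
        for label in self._labels(zone):
            node = node.setdefault(label, {})
        node[_APEX] = zone.lower().rstrip(".")

    def find_zone(self, qname):
        """Return the most specific zone that contains qname, or None."""
        node, best = self.root, None
        for label in self._labels(qname):
            node = node.get(label)
            if node is None:
                break
            best = node.get(_APEX, best)
        return best


tree = ZoneTree()
for zone in ("10.in-addr.arpa", "168.192.in-addr.arpa", "empty.as112.arpa"):
    tree.add_zone(zone)

print(tree.find_zone("1.1.168.192.in-addr.arpa."))  # 168.192.in-addr.arpa
print(tree.find_zone("example.com"))                # None
```

Once the enclosing zone is found, answering is simple because the zones are essentially empty: apart from the SOA and NS records at the apex, every name inside them does not exist.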

A router setting in the app manifest is added to tell the host what kind of DNS queries should be processed by the guest application, and a fallback_action setting is added to declare the expected fallback behavior.

```
# Declare what kind of queries the app handles.
router = [
    # The app is responsible for all the AS112 IP prefixes.
    "dst in { 192.31.196.0/24 192.175.48.0/24 2001:4:112::/48 2620:4f:8000::/48 }",
]

# If the app fails to handle the query, servfail should be returned.
fallback_action = "fail"
```

The guest application, along with its manifest, is then compiled and deployed through a deployment pipeline that leverages Quicksilver to store and replicate the assets worldwide.

The guest application is now up and running, but how does the DNS query traffic destined to the new IP prefixes reach the DNS server? Do we have to restart the DNS server every time we add a new guest application? Of course there is no need. We use software we developed and deployed earlier, called Tubular. It allows us to change the addresses of a service on the fly. With the help of Tubular, incoming packets destined to the AS112 service IP prefixes are dispatched to the right DNS server process without the need to make any change or release of the DNS server itself.

Meanwhile, in order to make the misdirected DNS queries land on the Cloudflare network in the first place, we use BYOIP (Bringing Your Own IPs to Cloudflare), a Cloudflare product that can announce customers’ own IP prefixes in all our locations. The four AS112 IP prefixes are onboarded onto the BYOIP system, and will be announced by it globally.

How can we ensure the service we set up does the right thing before we announce it to the public Internet? 1.1.1.1 processes more than 13 billion of these misdirected queries every day, and it has logic in place to directly return NXDOMAIN for them locally, which is a recommended practice per RFC 7534.

However, we are able to use a dynamic rule to change how the misdirected queries are handled in Cloudflare testing locations. For example, a rule like the following:

```
phase = post-cache and qtype in { PTR } and colo in { test1 test2 } and qname-suffix in { 10.in-addr.arpa 16.172.in-addr.arpa 17.172.in-addr.arpa 18.172.in-addr.arpa 19.172.in-addr.arpa 20.172.in-addr.arpa 21.172.in-addr.arpa 22.172.in-addr.arpa 23.172.in-addr.arpa 24.172.in-addr.arpa 25.172.in-addr.arpa 26.172.in-addr.arpa 27.172.in-addr.arpa 28.172.in-addr.arpa 29.172.in-addr.arpa 30.172.in-addr.arpa 31.172.in-addr.arpa 168.192.in-addr.arpa 254.169.in-addr.arpa } forward 192.175.48.6:53
```

The rule says that in data centers test1 and test2, when the DNS query type is PTR and the query name ends with one of the suffixes in the list, the query is forwarded to server 192.175.48.6 (one of the AS112 service IPs) on port 53.
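To make the matching logic concrete, here is a minimal Python sketch of how a rule like this could be evaluated. It is not Cloudflare's implementation: the `ForwardRule` type, the `route` function, and the choice to match suffixes only on DNS label boundaries are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ForwardRule:
    """Hypothetical in-memory form of the rule shown above."""
    phase: str
    qtypes: frozenset
    colos: frozenset
    qname_suffixes: tuple
    upstream: str


def suffix_matches(qname: str, suffix: str) -> bool:
    # Assumption: a suffix matches only on a label boundary, so
    # "110.in-addr.arpa" does not match the suffix "10.in-addr.arpa".
    name = qname.rstrip(".").lower()
    suffix = suffix.rstrip(".").lower()
    return name == suffix or name.endswith("." + suffix)


AS112_TEST_RULE = ForwardRule(
    phase="post-cache",
    qtypes=frozenset({"PTR"}),
    colos=frozenset({"test1", "test2"}),
    qname_suffixes=(
        "10.in-addr.arpa",
        *[f"{n}.172.in-addr.arpa" for n in range(16, 32)],
        "168.192.in-addr.arpa",
        "254.169.in-addr.arpa",
    ),
    upstream="192.175.48.6:53",
)


def route(phase: str, qtype: str, colo: str, qname: str,
          rule: ForwardRule = AS112_TEST_RULE) -> Optional[str]:
    """Return the upstream to forward to, or None if the rule does not apply."""
    if phase != rule.phase or qtype not in rule.qtypes or colo not in rule.colos:
        return None
    if any(suffix_matches(qname, s) for s in rule.qname_suffixes):
        return rule.upstream
    return None
```

With this sketch, a PTR query for `1.0.0.10.in-addr.arpa.` arriving in `test1` is routed to `192.175.48.6:53`, while the same query in any other data center falls through to the default handling.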

Because we’ve provisioned the AS112 IP prefixes in the same node, the new AS112 service will receive the queries and respond to the resolver.

It’s worth mentioning that the dynamic rule above, which intercepts a query at the post-cache phase and changes how the query gets processed, is itself executed by a guest application, named override. This app loads all dynamic rules, parses the DSL text, and registers callback functions at the phases declared by each rule. When an incoming query matches a rule’s expressions, the app executes the designated actions.
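The load, parse, and register cycle described here can be sketched roughly as follows. Everything in this example is an assumption: the grammar is inferred from the single rule shown above (clauses joined by `and`, followed by a `forward` action), and the `Override` class, `parse_rule`, and the dictionary-shaped query are hypothetical names, not the real guest application API.

```python
import re
from collections import defaultdict

# One clause: <field> = <value>   or   <field> in { v1 v2 ... }
CLAUSE = re.compile(r"([\w-]+)\s*(=|in)\s*(\{[^}]*\}|\S+)")


def parse_rule(text):
    """Split rule text into a {field: allowed values} map and an upstream."""
    head, sep, upstream = text.rpartition(" forward ")
    if not sep:
        raise ValueError("this sketch only handles the 'forward' action")
    conditions = {}
    for clause in head.split(" and "):
        m = CLAUSE.fullmatch(clause.strip())
        if m is None:
            raise ValueError(f"unrecognised clause: {clause!r}")
        field, _op, value = m.groups()
        values = value.strip("{} ").split() if value.startswith("{") else [value]
        conditions[field] = frozenset(values)
    return conditions, upstream.strip()


def matches(field, allowed, query):
    if field == "qname-suffix":
        # Assumption: suffixes match on DNS label boundaries only.
        name = query["qname"].rstrip(".").lower()
        return any(name == s or name.endswith("." + s) for s in allowed)
    return query.get(field) in allowed


class Override:
    """Hypothetical registry of per-phase callbacks built from rule text."""

    def __init__(self):
        self._hooks = defaultdict(list)

    def load(self, text):
        conditions, upstream = parse_rule(text)
        (phase,) = conditions.pop("phase")  # each rule declares one phase

        def callback(query):
            if all(matches(f, allowed, query) for f, allowed in conditions.items()):
                return ("forward", upstream)
            return None

        self._hooks[phase].append(callback)

    def run_phase(self, phase, query):
        """Run callbacks registered for a phase; the first action wins."""
        for callback in self._hooks[phase]:
            action = callback(query)
            if action is not None:
                return action
        return None
```

Registering callbacks per phase keeps the query path cheap in this sketch: a phase with no rules does no work, and rule text is parsed once at load time rather than on every query.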

We collect the following metrics to generate the public statistics that an AS112 operator is expected to share with the operator community:

We’ll serve the public statistics page on the Cloudflare Radar website. We are still working on implementing the required backend API and frontend of the page – we’ll share the link to this page once it is available.

We are going to announce the AS112 prefixes starting December 15, 2022.

After the service is launched, you can run a dig command to check if you are hitting an AS112 node operated by Cloudflare, like:

$ dig @blackhole-1.iana.org TXT hostname.as112.arpa +short

"Cloudflare DNS, SFO"

"See http://www.as112.net/ for more information."

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/12/security-vulnerabilities-in-eufy-cameras.html

Eufy cameras claim to be local only, but upload data to the cloud. The company is basically lying to reporters, despite being shown evidence to the contrary. The company’s behavior is so egregious that ReviewGeek is no longer recommending them.

This will be interesting to watch. If Eufy can ignore security researchers and the press without there being any repercussions in the market, others will follow suit. And we will lose public shaming as an incentive to improve security.

After further testing, we’re not seeing the VLC streams begin based solely on the camera detecting motion. We’re not sure if that’s a change since yesterday or something I got wrong in our initial report. It does appear that Eufy is making changes—it appears to have removed access to the method we were using to get the address of our streams, although an address we already obtained is still working.

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/12/the-decoupling-principle.html

This is a really interesting paper that discusses what the authors call the Decoupling Principle:

The idea is simple, yet previously not clearly articulated: to ensure privacy, information should be divided architecturally and institutionally such that each entity has only the information they need to perform their relevant function. Architectural decoupling entails splitting functionality for different fundamental actions in a system, such as decoupling authentication (proving who is allowed to use the network) from connectivity (establishing session state for communicating). Institutional decoupling entails splitting what information remains between non-colluding entities, such as distinct companies or network operators, or between a user and network peers. This decoupling makes service providers individually breach-proof, as they each have little or no sensitive data that can be lost to hackers. Put simply, the Decoupling Principle suggests always separating who you are from what you do.

Lots of interesting details in the paper.

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/11/computer-repair-technicians-are-stealing-your-data.html

Laptop technicians routinely violate the privacy of the people whose computers they repair:

Researchers at University of Guelph in Ontario, Canada, recovered logs from laptops after receiving overnight repairs from 12 commercial shops. The logs showed that technicians from six of the locations had accessed personal data and that two of those shops also copied data onto a personal device. Devices belonging to females were more likely to be snooped on, and that snooping tended to seek more sensitive data, including both sexually revealing and non-sexual pictures, documents, and financial information.

[…]

In three cases, Windows Quick Access or Recently Accessed Files had been deleted in what the researchers suspect was an attempt by the snooping technician to cover their tracks. As noted earlier, two of the visits resulted in the logs the researchers relied on being unrecoverable. In one, the researcher explained they had installed antivirus software and performed a disk cleanup to “remove multiple viruses on the device.” The researchers received no explanation in the other case.

[…]

The laptops were freshly imaged Windows 10 laptops. All were free of malware and other defects and in perfect working condition with one exception: the audio driver was disabled. The researchers chose that glitch because it required only a simple and inexpensive repair, was easy to create, and didn’t require access to users’ personal files.

Half of the laptops were configured to appear as if they belonged to a male and the other half to a female. All of the laptops were set up with email and gaming accounts and populated with browser history across several weeks. The researchers added documents, both sexually revealing and non-sexual pictures, and a cryptocurrency wallet with credentials.

A few notes. One: this is a very small study, with only twelve laptop repairs. Two: some of the results were inconclusive, which indicated (but did not prove) log tampering by the technicians. Three: this study was done in Canada. There would probably be more snooping by American repair technicians.

The moral isn’t a good one: if you bring your laptop in to be repaired, you should expect the technician to snoop through your hard drive, taking what they want.

Research paper.

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/11/apples-device-analytics-can-identify-icloud-users.html

Researchers claim that supposedly anonymous device analytics information can identify users:

On Twitter, security researchers Tommy Mysk and Talal Haj Bakry have found that Apple’s device analytics data includes an iCloud account and can be linked directly to a specific user, including their name, date of birth, email, and associated information stored on iCloud.

Apple has long claimed otherwise:

On Apple’s device analytics and privacy legal page, the company says no information collected from a device for analytics purposes is traceable back to a specific user. “iPhone Analytics may include details about hardware and operating system specifications, performance statistics, and data about how you use your devices and applications. None of the collected information identifies you personally,” the company claims.

Apple was just sued for tracking iOS users without their consent, even when they explicitly opt out of tracking.

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/11/nsa-over-surveillance.html

Here in 2022, we have a newly declassified 2016 Inspector General report, “Misuse of Sigint Systems,” about a 2013 NSA program that resulted in the unauthorized (that is, illegal) targeting of Americans.

Given all we learned from Edward Snowden, this feels like a minor coda. There’s nothing really interesting in the IG document, which is heavily redacted.

News story.

EDITED TO ADD (11/14): Non-paywalled copy of the Bloomberg link.

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/11/using-wi-fi-to-see-through-walls.html

This technique measures device response time to determine distance:

The scientists tested the exploit by modifying an off-the-shelf drone to create a flying scanning device, the Wi-Peep. The robotic aircraft sends several messages to each device as it flies around, establishing the positions of devices in each room. A thief using the drone could find vulnerable areas in a home or office by checking for the absence of security cameras and other signs that a room is monitored or occupied. It could also be used to follow a security guard, or even to help rival hotels spy on each other by gauging the number of rooms in use.

There have been attempts to exploit similar WiFi problems before, but the team says these typically require bulky and costly devices that would give away attempts. Wi-Peep only requires a small drone and about $15 US in equipment that includes two WiFi modules and a voltage regulator. An intruder could quickly scan a building without revealing their presence.
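As a rough illustration of how response time translates into distance, the sketch below applies the standard time-of-flight relation: subtract the target's turnaround delay from the measured round trip, then halve the remaining flight time. The function name and the fixed 10 microsecond turnaround (the 802.11 SIFS interval in the 2.4 GHz band) are assumptions for this example; real devices deviate from that value, and the paper's actual method may differ.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
SIFS_2_4_GHZ_S = 10e-6  # assumed turnaround delay before the target replies


def distance_from_round_trip(rtt_s: float,
                             turnaround_s: float = SIFS_2_4_GHZ_S) -> float:
    """Estimate the one-way distance in metres from a round-trip time."""
    flight_s = rtt_s - turnaround_s
    if flight_s < 0:
        raise ValueError("round trip is shorter than the assumed turnaround")
    # The signal travels out and back, so halve the flight time.
    return SPEED_OF_LIGHT_M_PER_S * flight_s / 2.0
```

The arithmetic also shows why precise timing matters: each nanosecond of timing error shifts the estimate by about 15 cm.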

Research paper.

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/11/irans-digital-surveillance-tools-leaked.html

It’s Iran’s turn to have its digital surveillance tools leaked:

According to these internal documents, SIAM is a computer system that works behind the scenes of Iranian cellular networks, providing its operators a broad menu of remote commands to alter, disrupt, and monitor how customers use their phones. The tools can slow their data connections to a crawl, break the encryption of phone calls, track the movements of individuals or large groups, and produce detailed metadata summaries of who spoke to whom, when, and where. Such a system could help the government invisibly quash the ongoing protests, or those of tomorrow, an expert who reviewed the SIAM documents told The Intercept.

[…]

SIAM gives the government’s Communications Regulatory Authority, Iran’s telecommunications regulator, turnkey access to the activities and capabilities of the country’s mobile users. “Based on CRA rules and regulations all telecom operators must provide CRA direct access to their system for query customers information and change their services via web service,” reads an English-language document obtained by The Intercept. (Neither the CRA nor Iran’s mission to the United Nations responded to a request for comment.)

Lots of details, and links to the leaked documents, at the Intercept webpage.

Post Syndicated from Mari Galicer original https://blog.cloudflare.com/building-privacy-into-internet-standards-and-how-to-make-your-app-more-private-today/

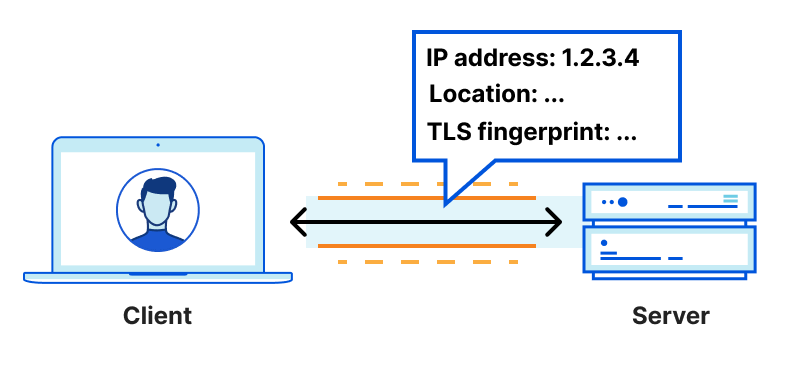

If you’re running a privacy-oriented application or service on the Internet, your options to provably protect users’ privacy are limited. You can minimize logs and data collection, but even then, at a network level, every HTTP request needs to come from somewhere. Information generated by HTTP requests, like users’ IP addresses and TLS fingerprints, can be sensitive, especially when combined with application data.

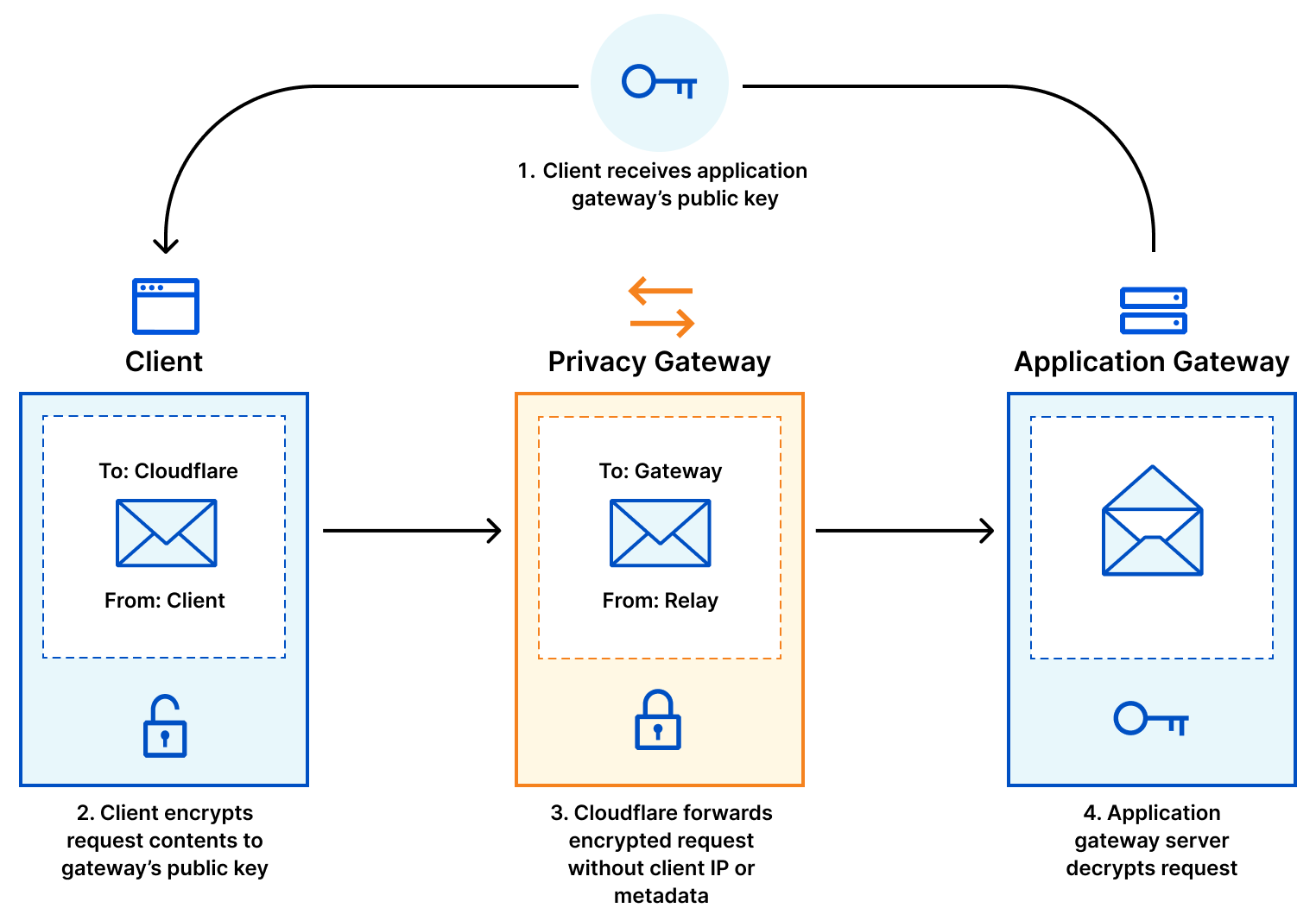

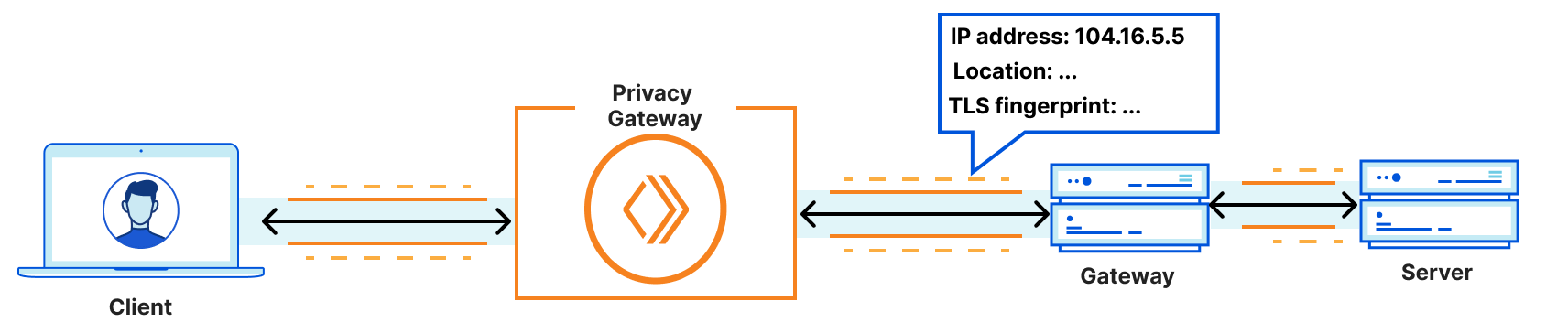

Meaningful improvements to your users’ privacy require a change in how HTTP requests are sent from client devices to the server that runs your application logic. This was the motivation for Privacy Gateway: a service that relays encrypted HTTP requests and responses between a client and application server. With Privacy Gateway, Cloudflare knows where the request is coming from, but not what it contains, and applications can see what the request contains, but not where it comes from. Neither Cloudflare nor the application server has the full picture, improving end-user privacy.

We recently deployed Privacy Gateway for Flo Health, a period tracking app, for the launch of their Anonymous Mode. With Privacy Gateway in place, all request data for Anonymous Mode users is encrypted between the app user and Flo, which prevents Flo from seeing the IP addresses of those users and Cloudflare from seeing the contents of that request data.

With Privacy Gateway in place, several other privacy-critical applications are possible:

The main innovation in the Oblivious HTTP standard – beyond a basic proxy service – is that these messages are encrypted to the application’s server, such that Privacy Gateway learns nothing of the application data beyond the source and destination of each message.

Privacy Gateway enables application developers and platforms, especially those with strong privacy requirements, to build something that closely resembles a “Mixnet”: an approach to obfuscating the source and destination of a message across a network. To that end, Privacy Gateway consists of three main components:

If you were to imagine request data as the contents of the envelope (a letter) and the IP address and request metadata as the address on the outside, with Privacy Gateway, Cloudflare is able to see the envelope’s address and safely forward it to its destination without being able to see what’s inside.

In slightly more detail, the data flow is as follows:

This interaction offers two types of privacy, which we informally refer to as request privacy and client privacy.