Post Syndicated from Ashley Whittaker original https://www.raspberrypi.org/blog/13-raspberry-pis-slosh-test-space-shuttle-tanks-in-zero-gravity/

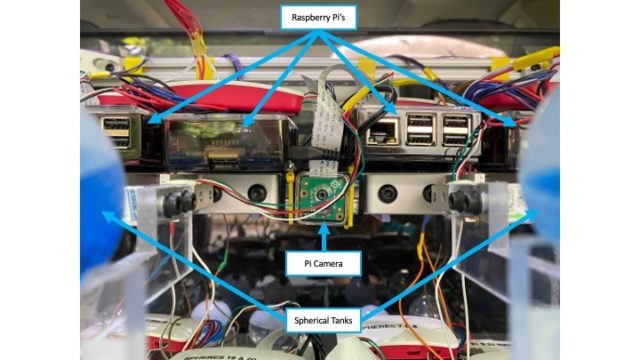

High-school student Eleanor Sigrest successfully crowdfunded her way onto a zero-G flight to test her latest Raspberry Pi-powered project. NASA Goddard engineers peer-reviewed Eleanor’s experimental design, which detects unwanted movement (or ‘slosh’) in spacecraft fluid tanks.

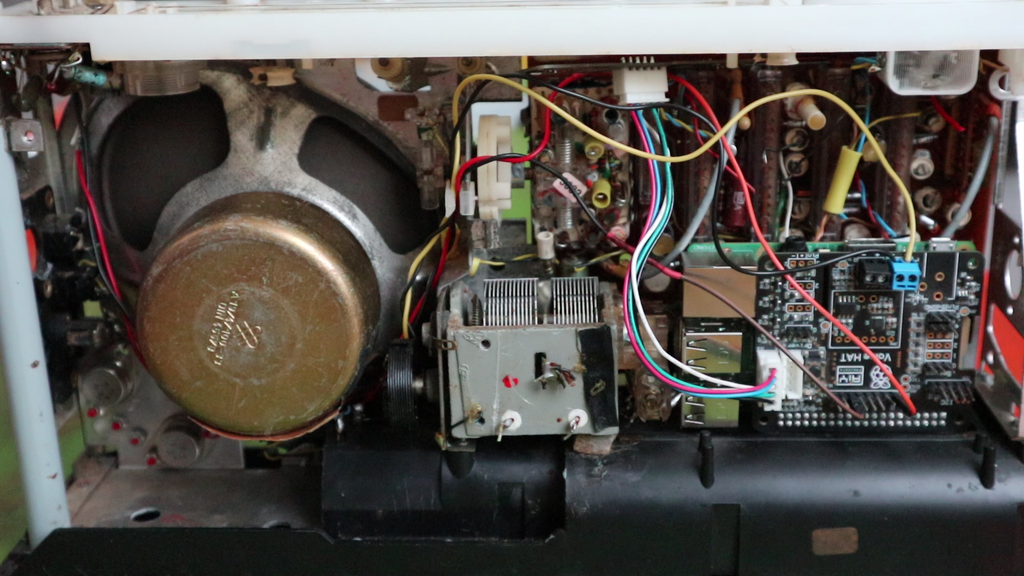

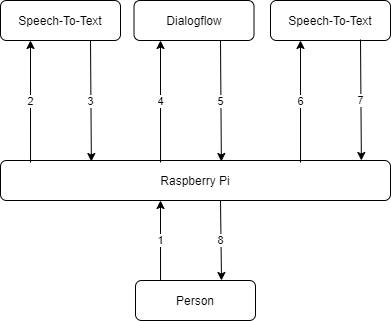

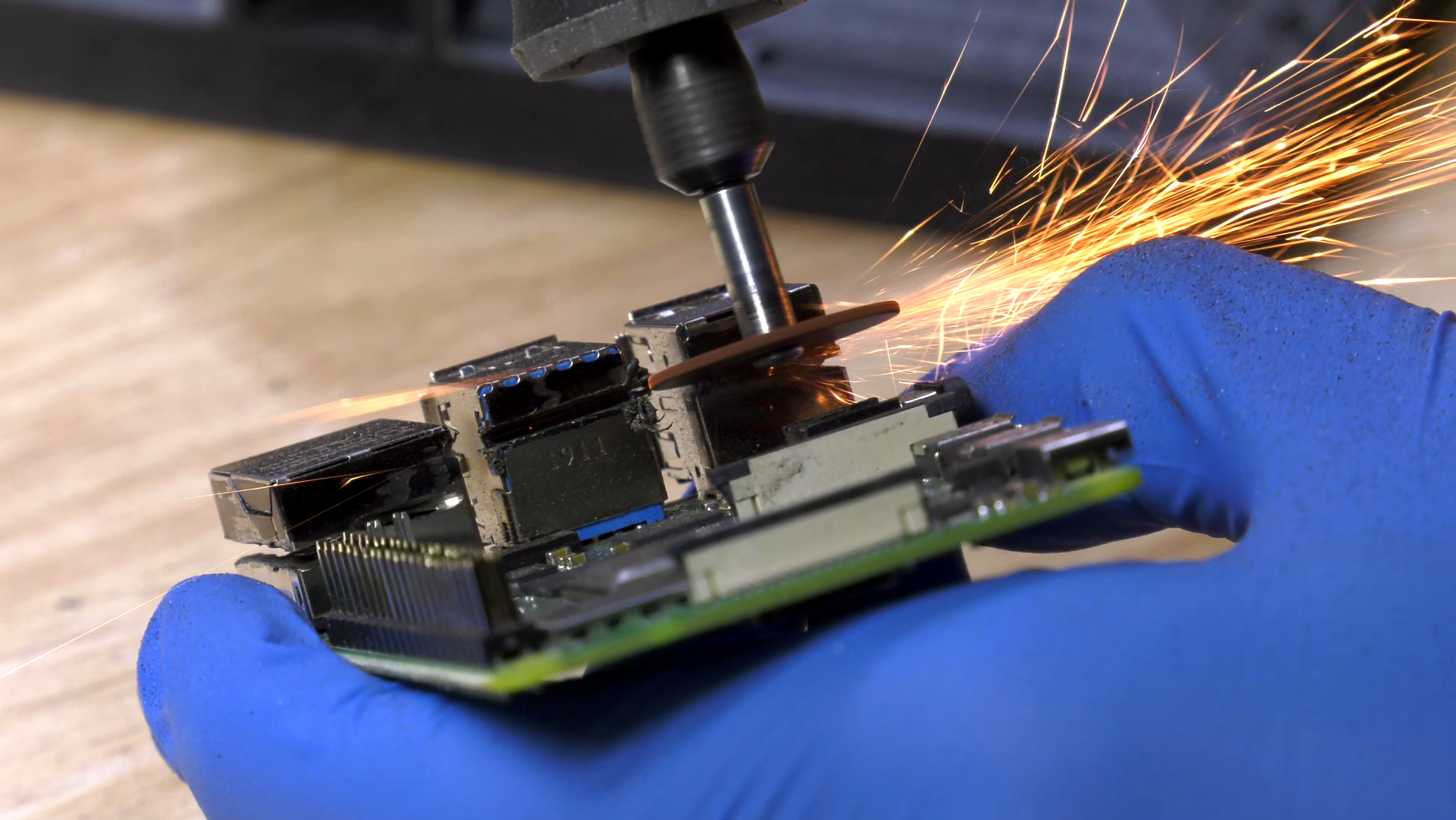

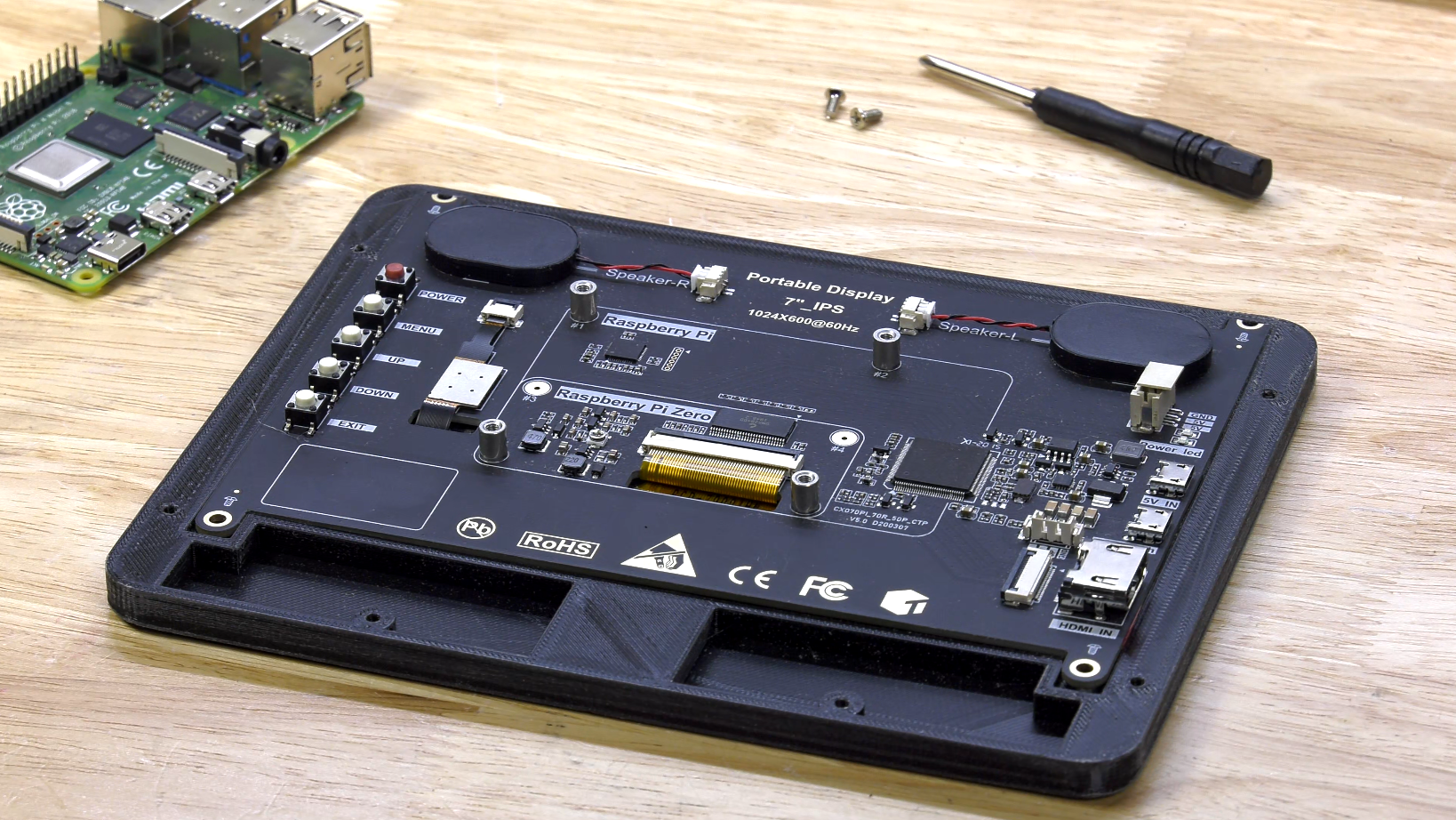

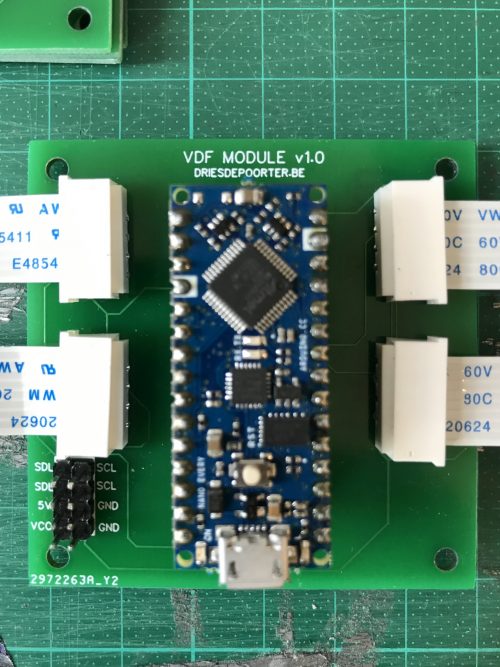

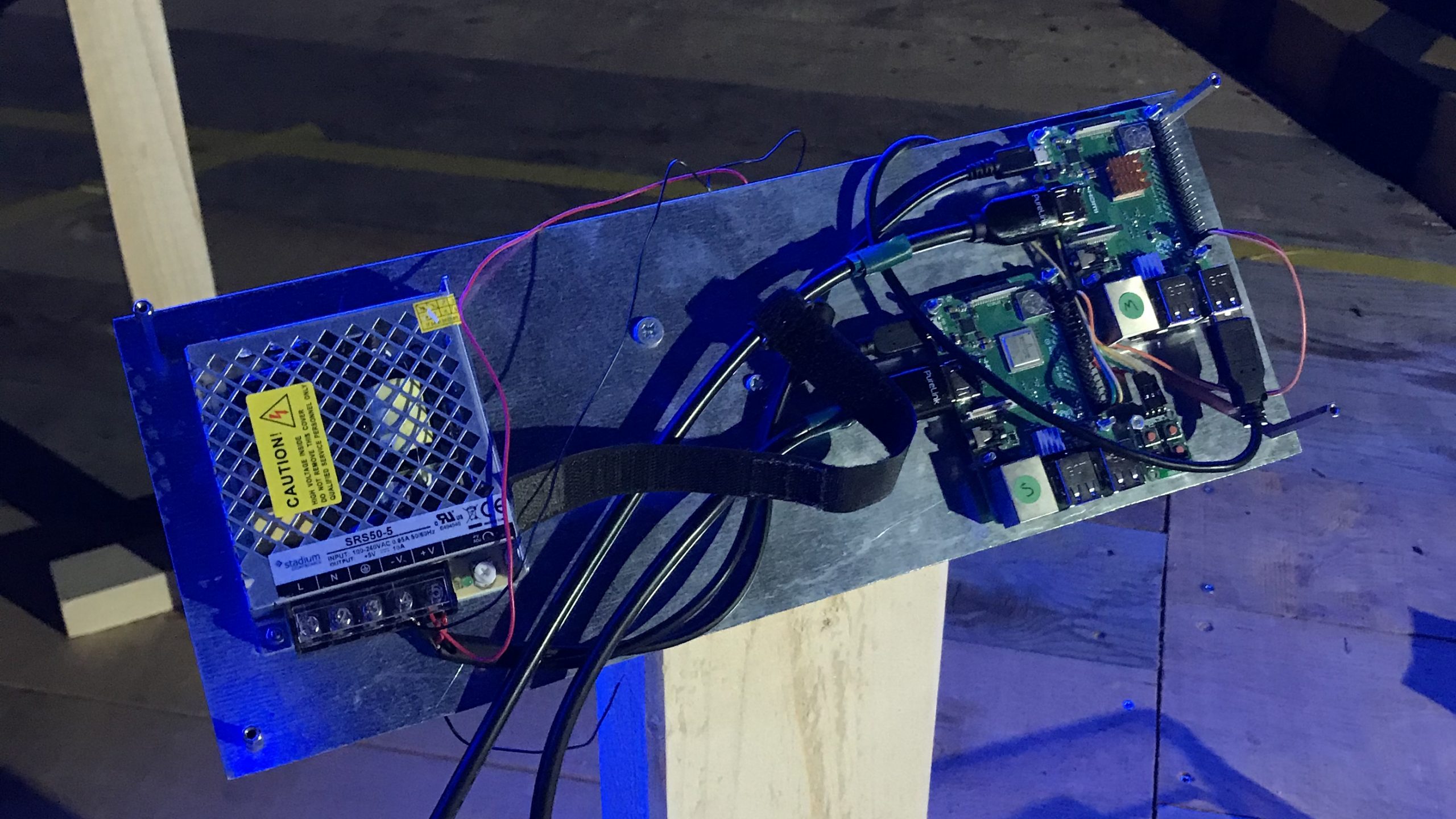

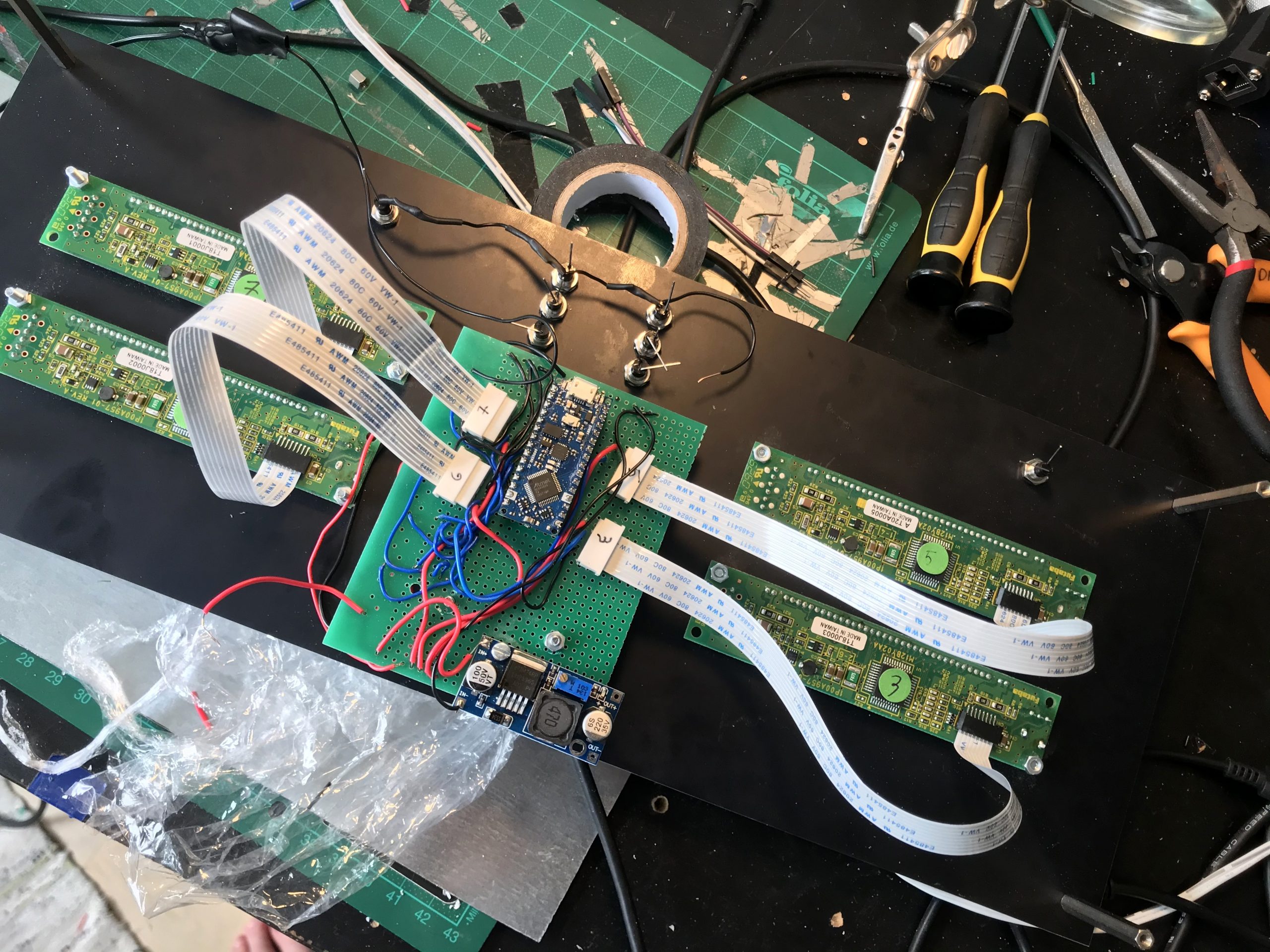

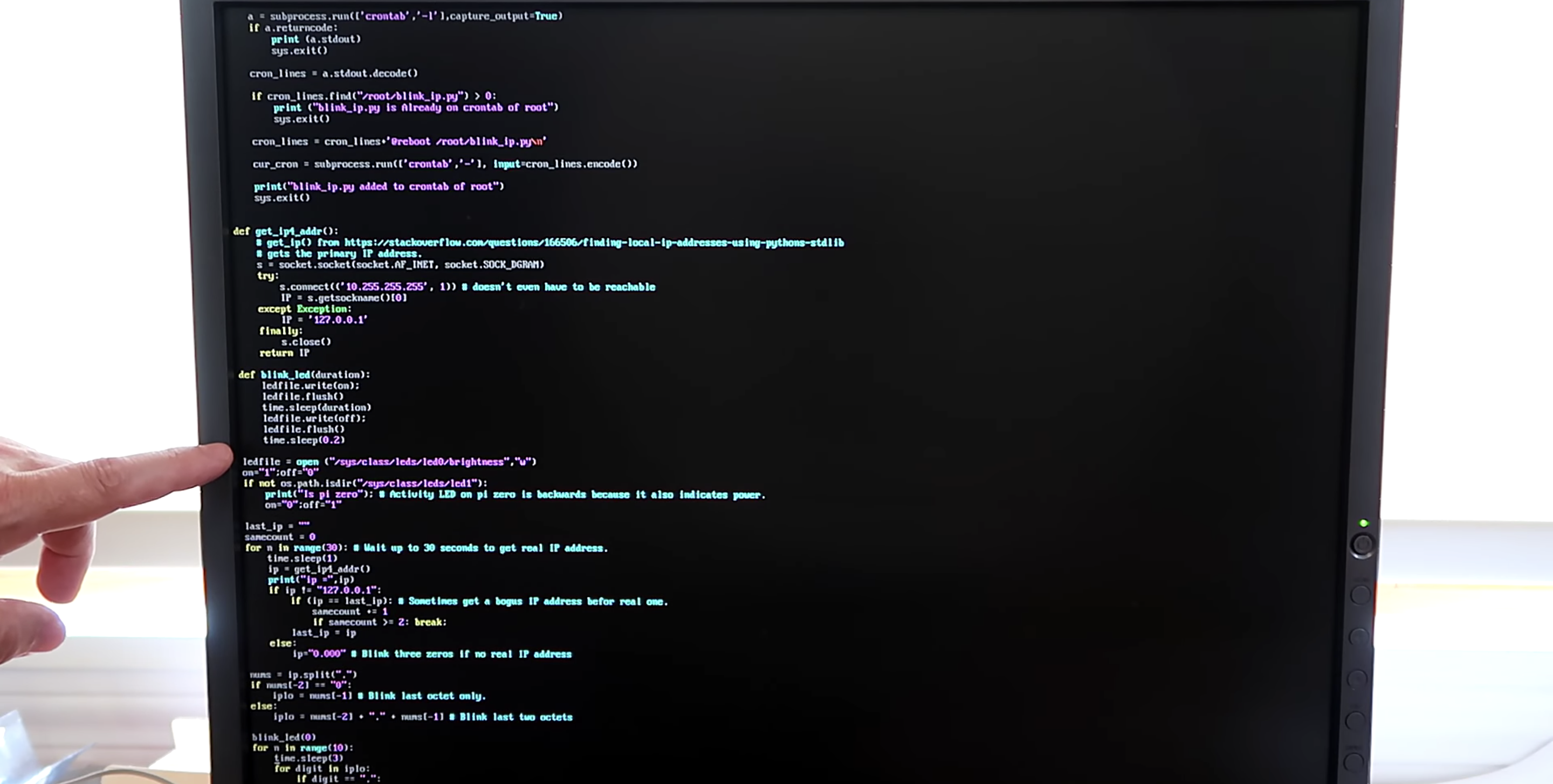

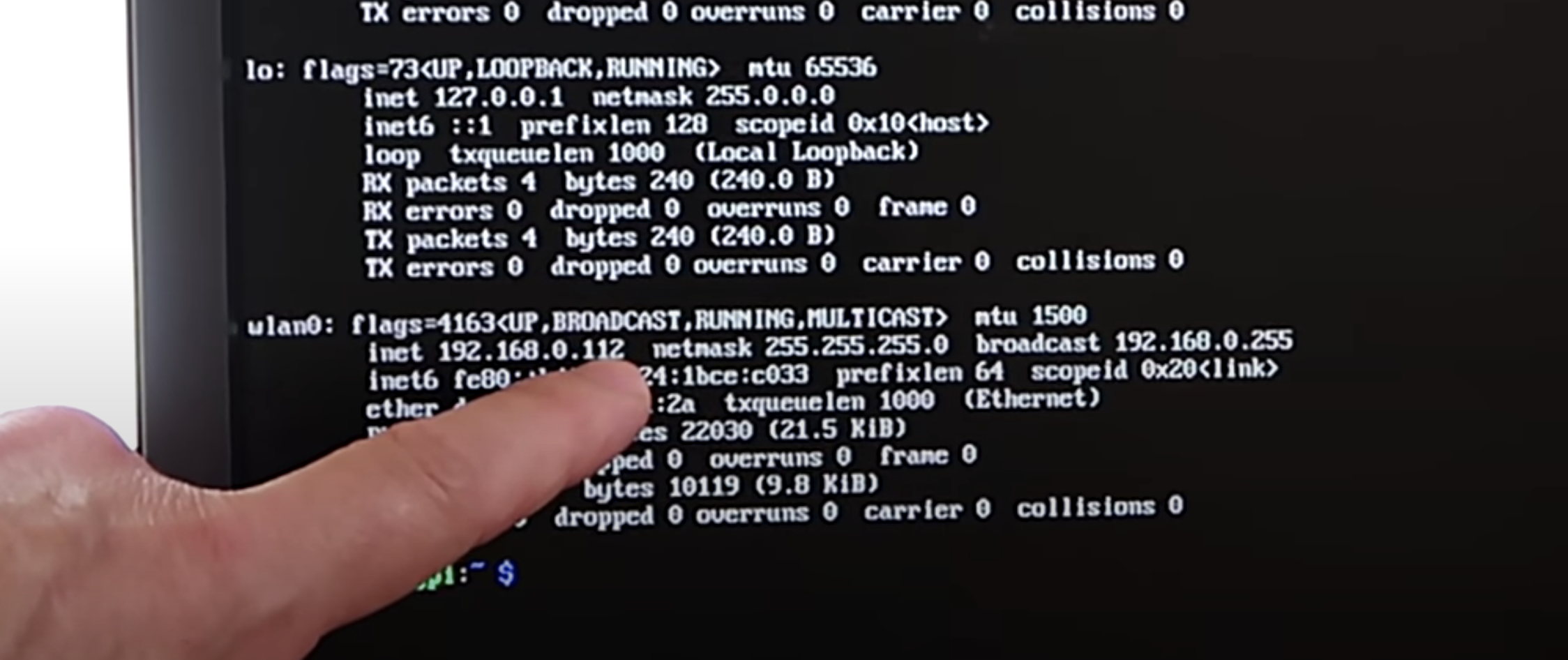

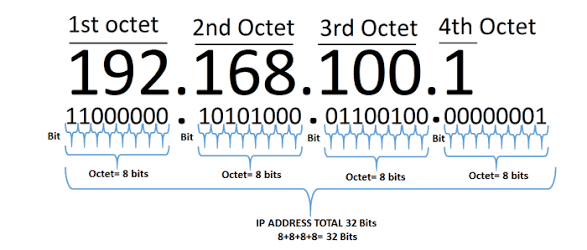

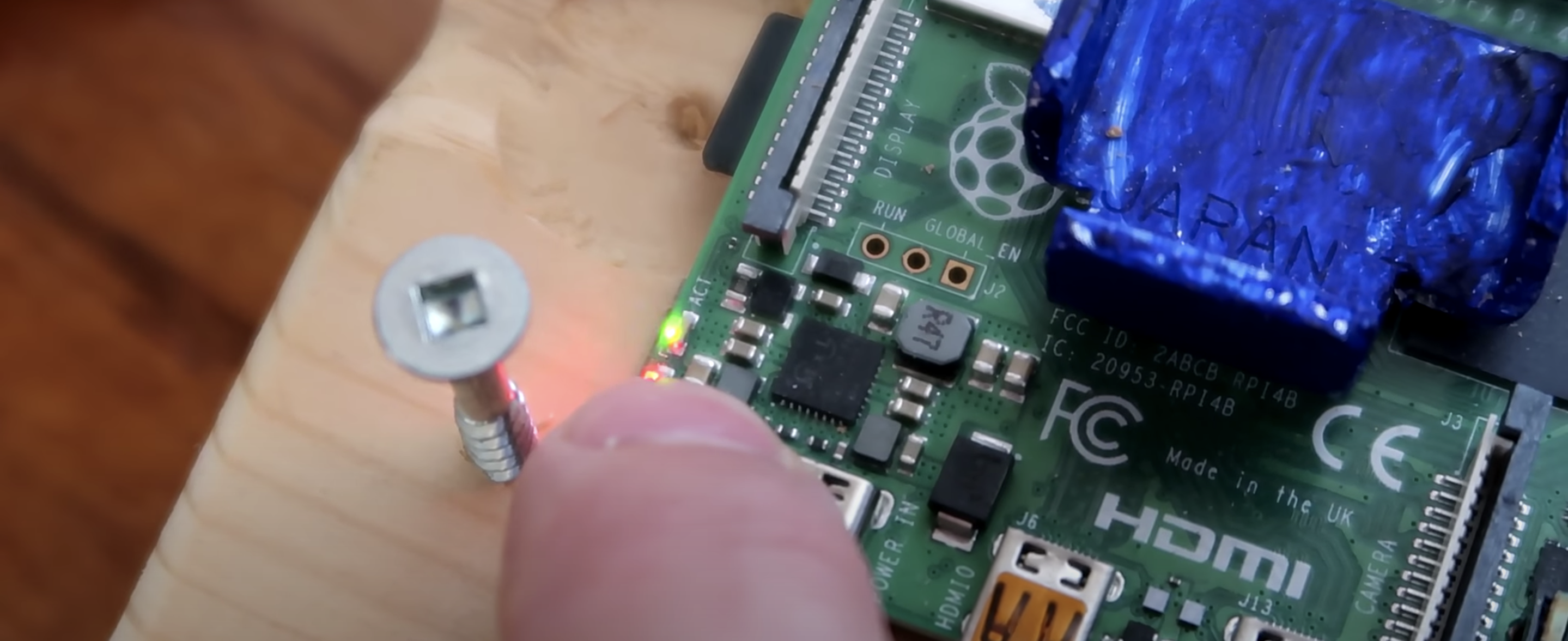

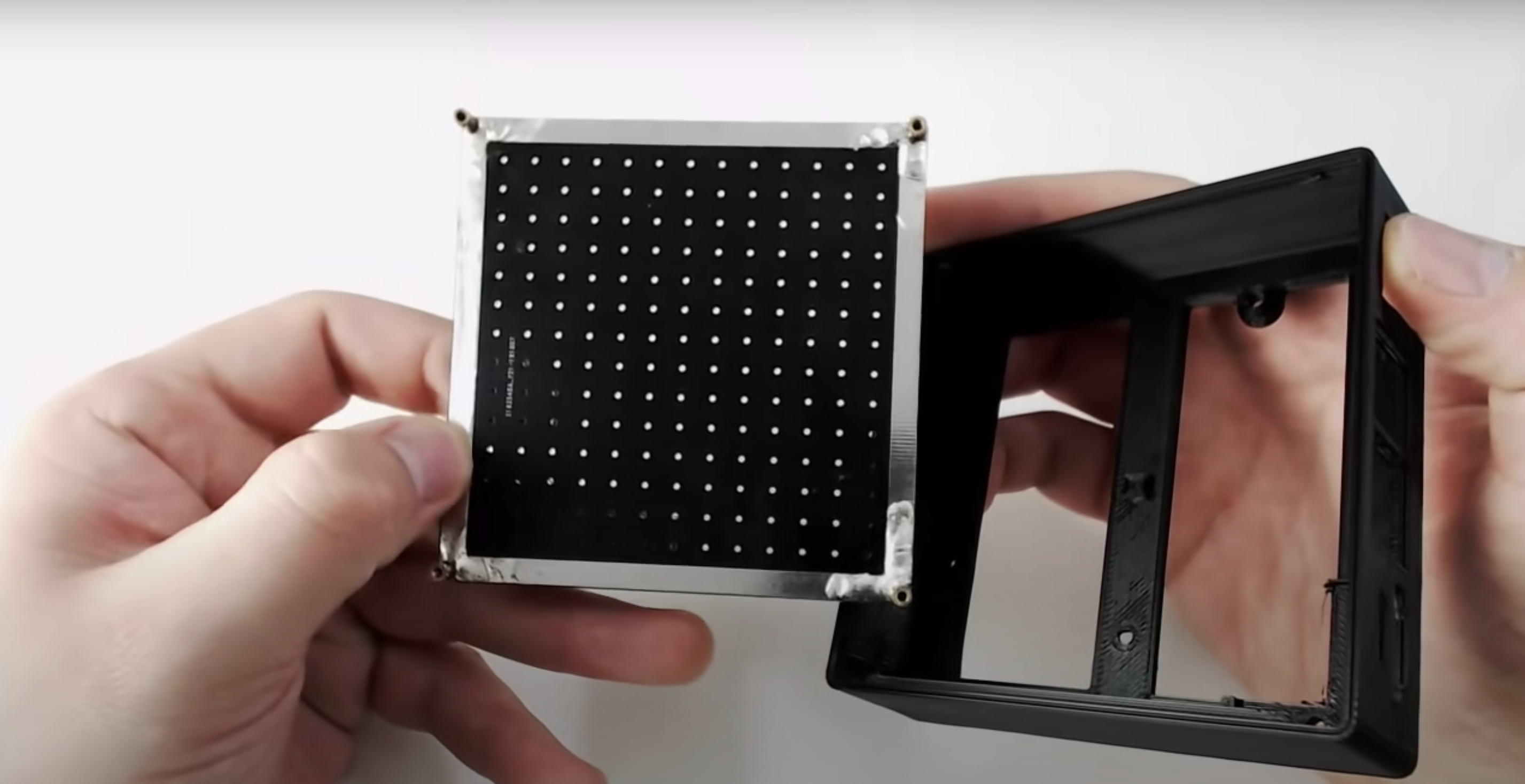

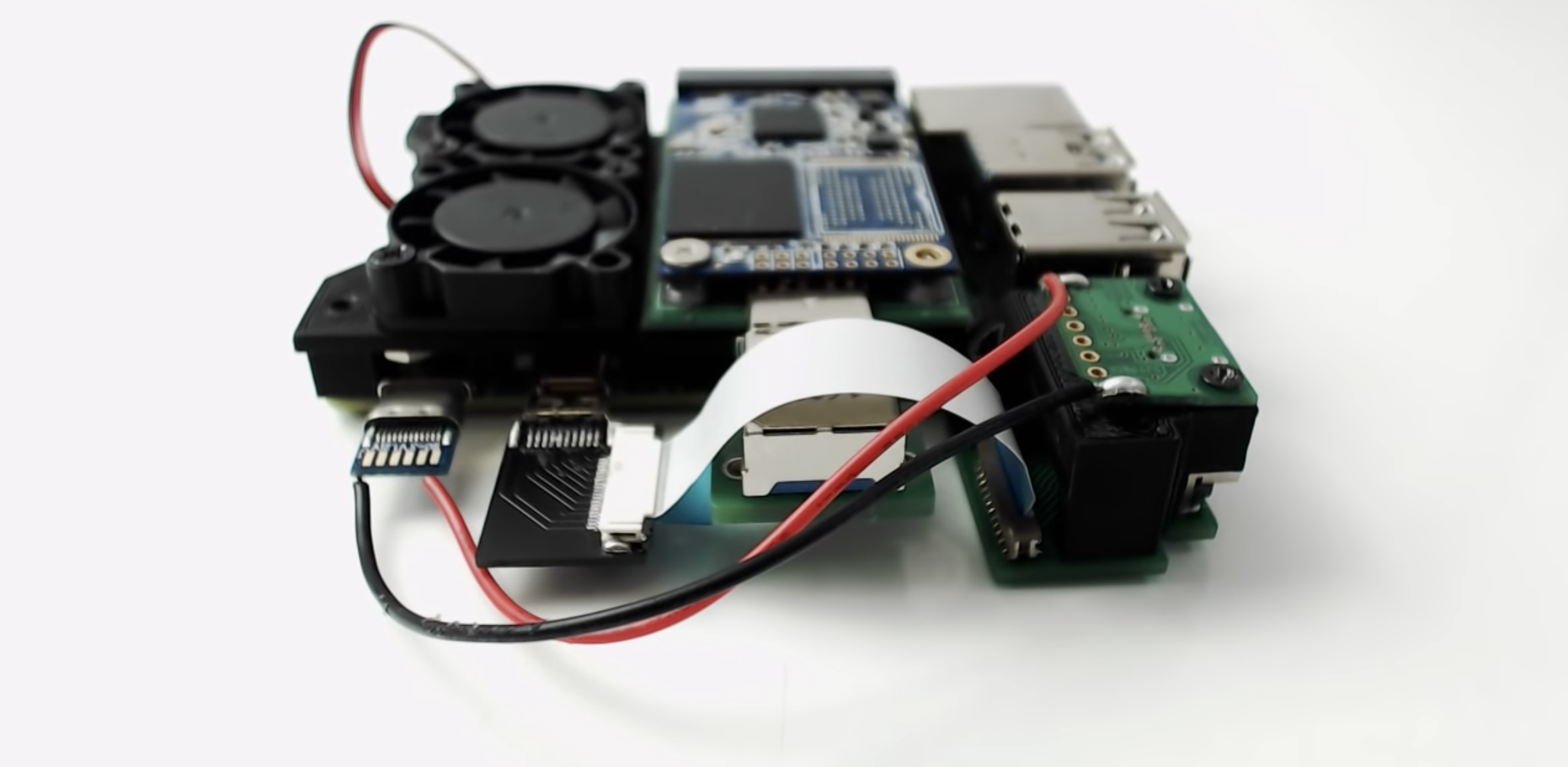

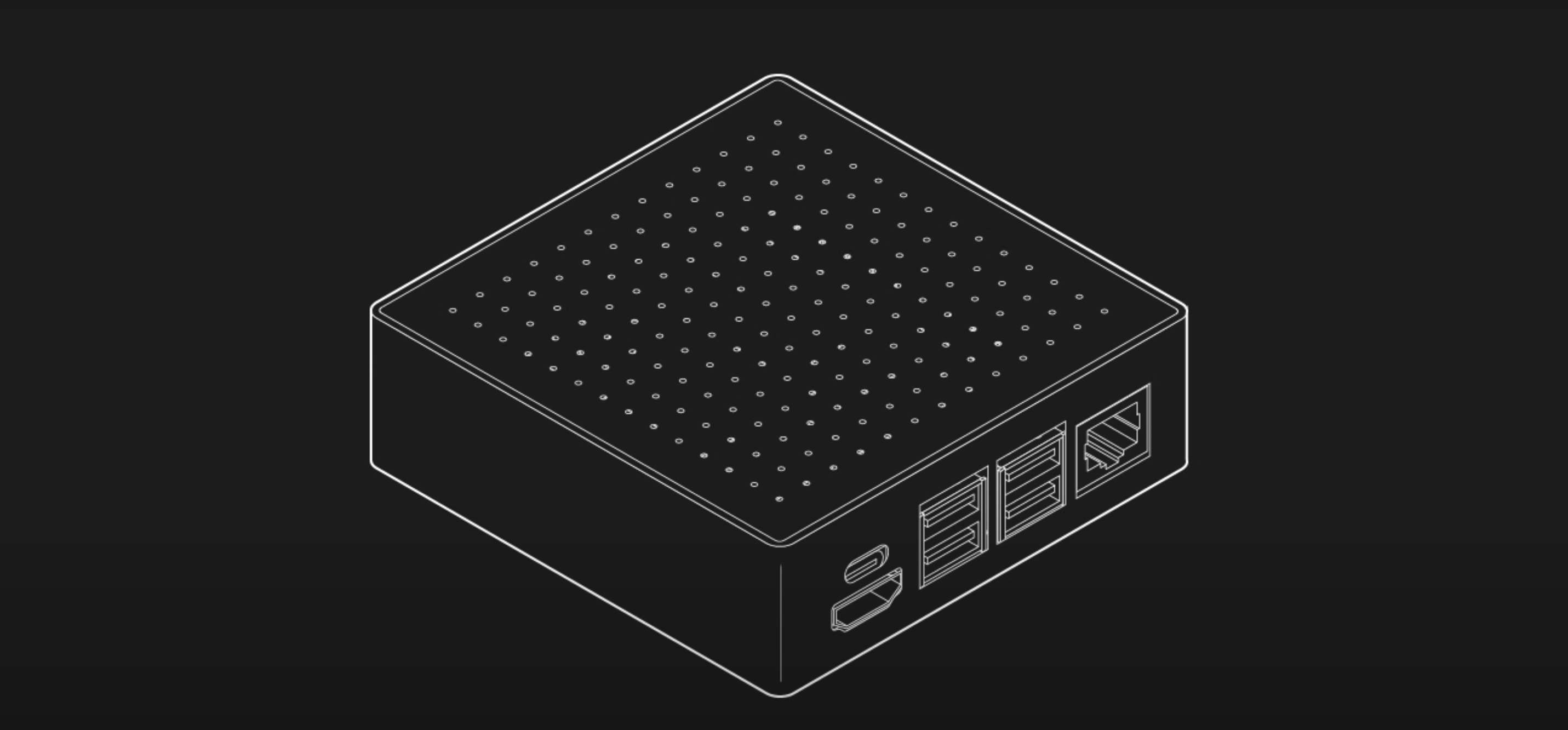

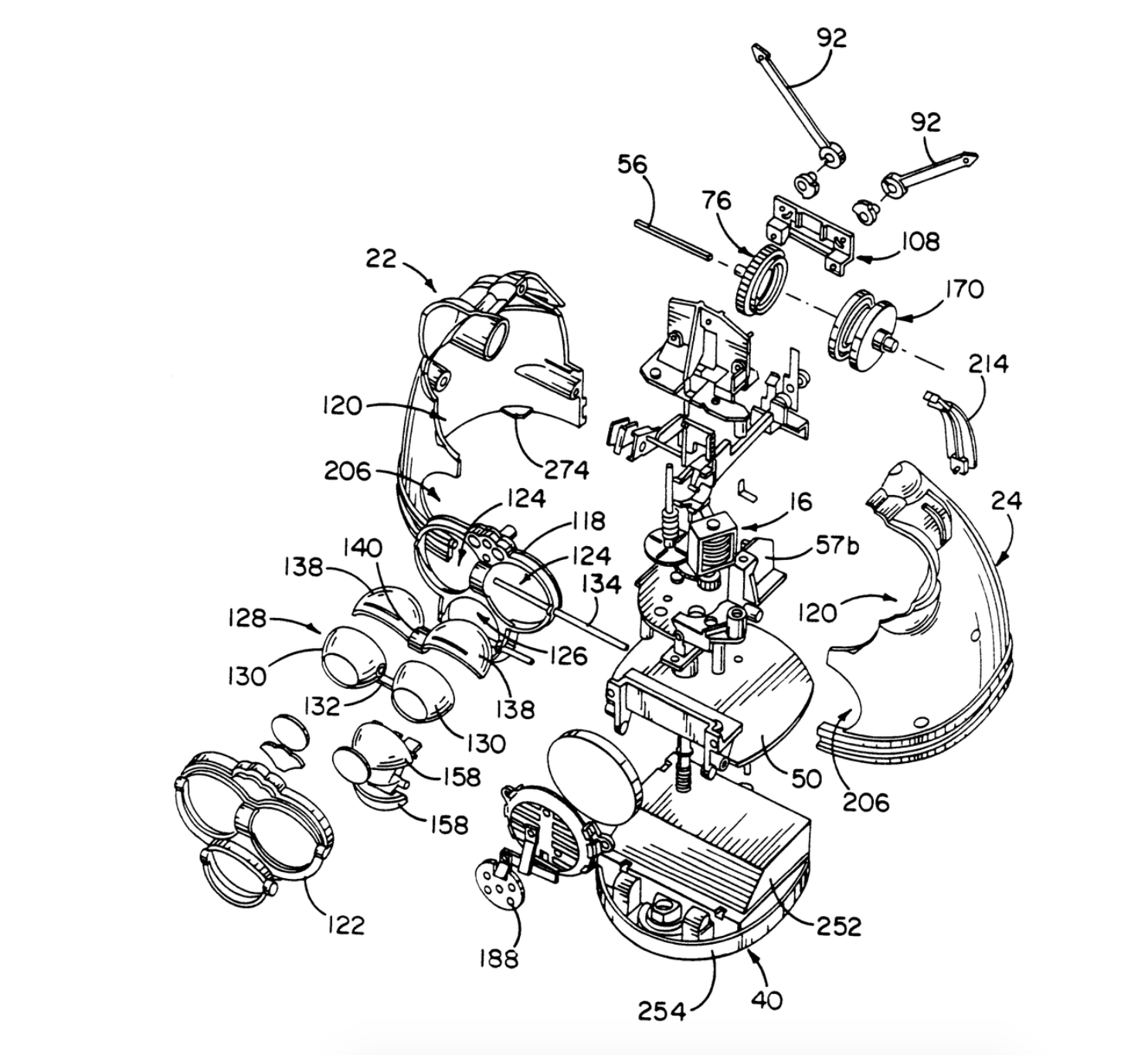

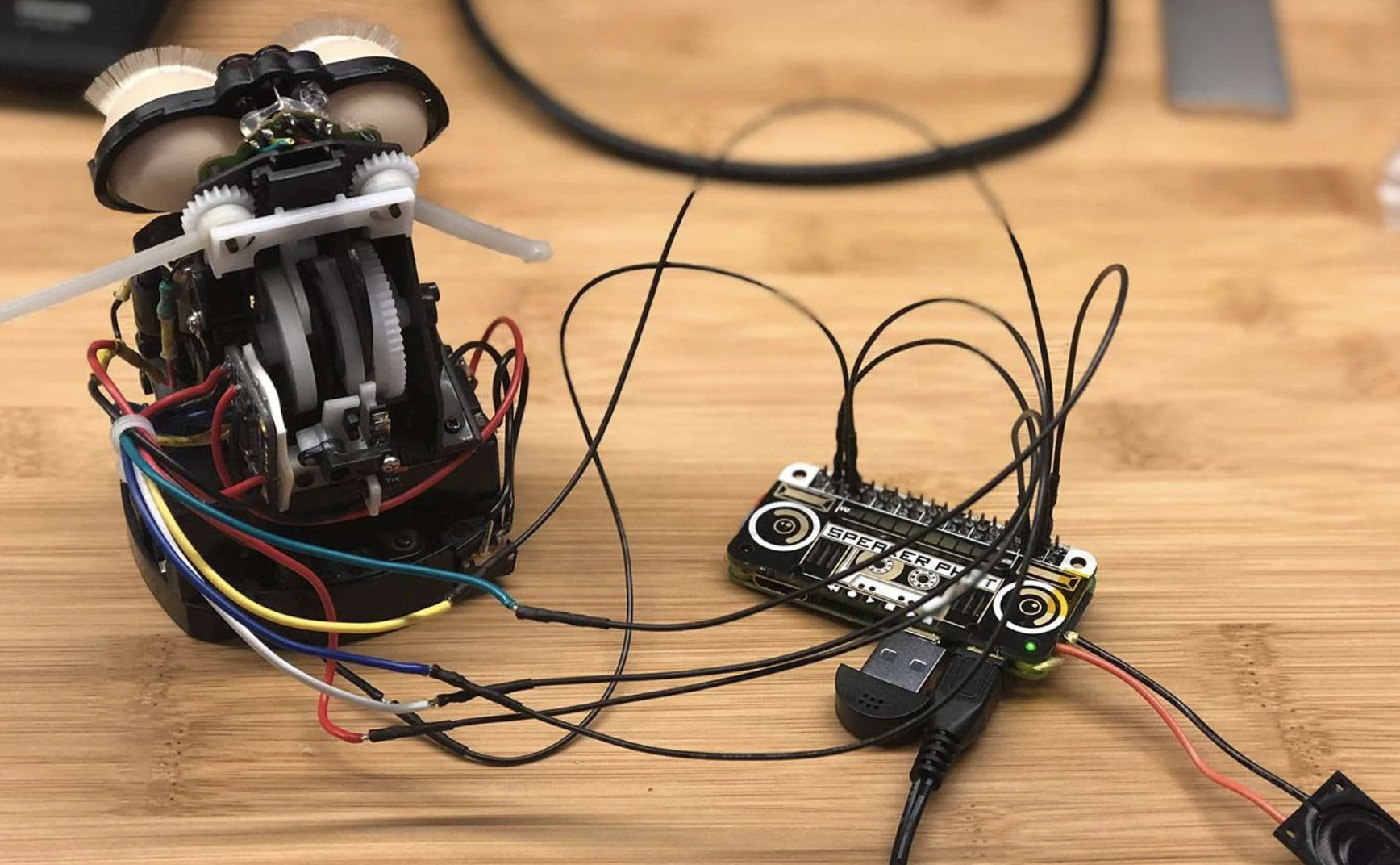

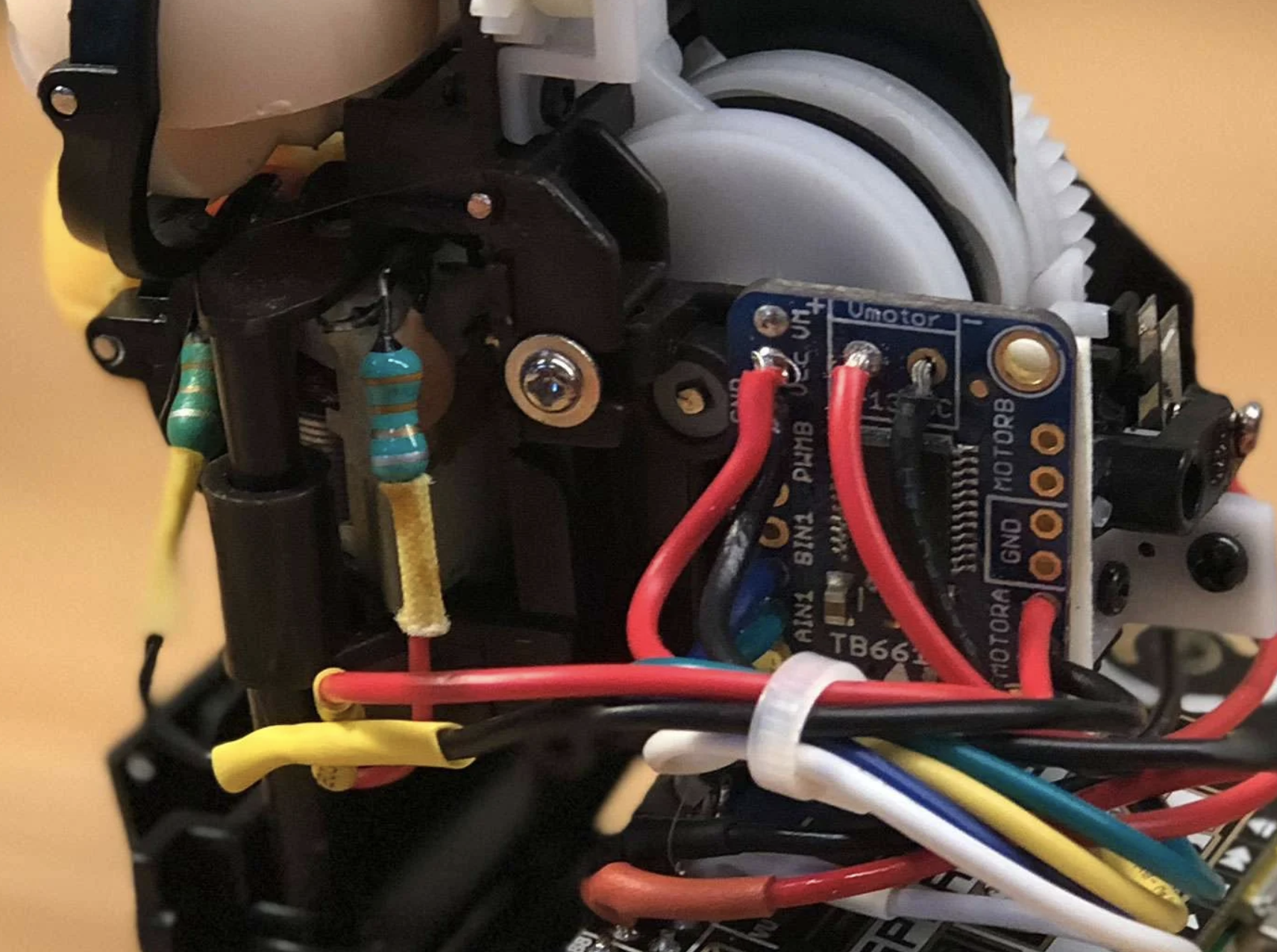

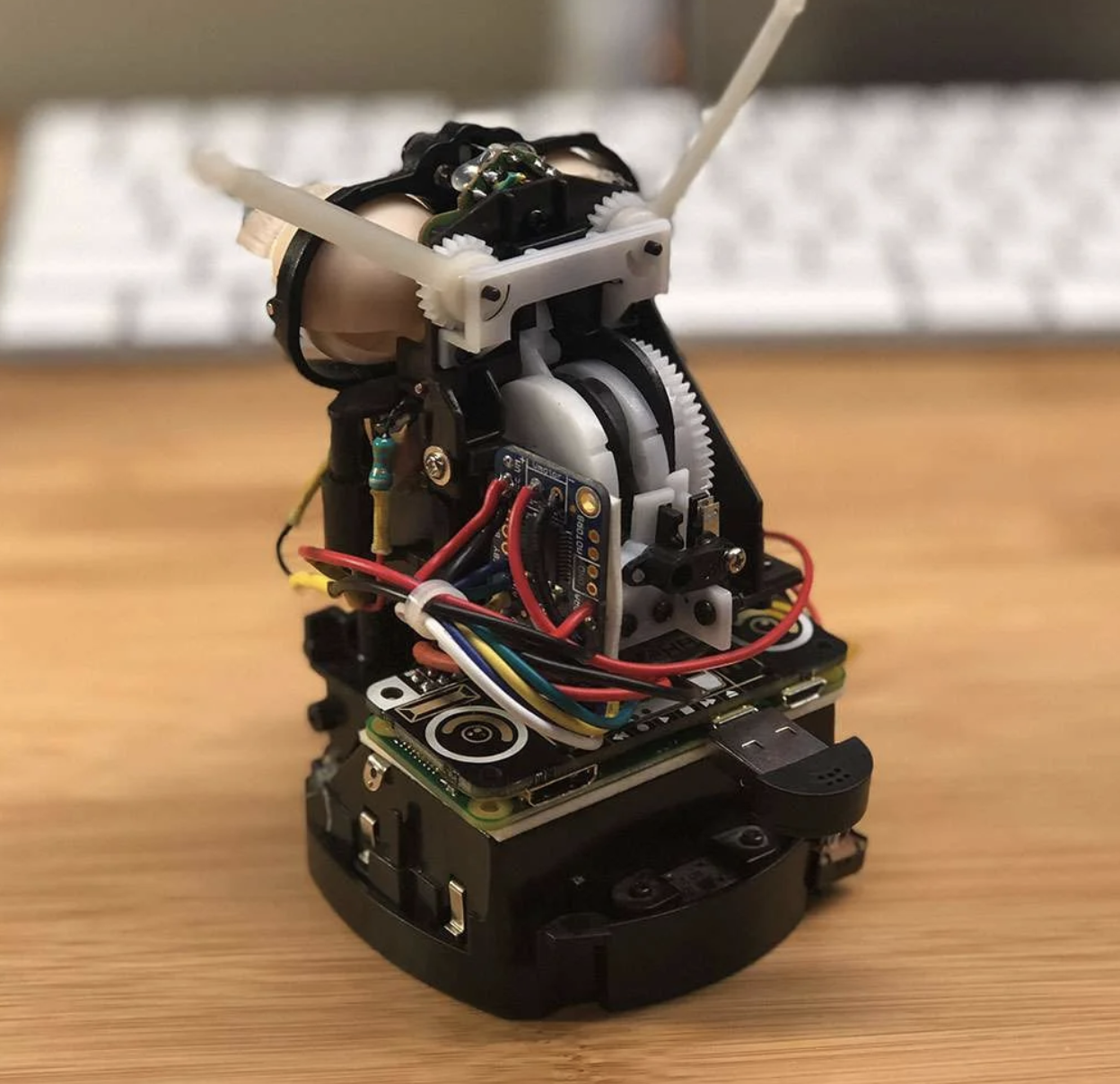

The apparatus features an accelerometer to precisely determine the moment of zero gravity, along with 13 Raspberry Pis and 12 Raspberry Pi cameras to capture the slosh movement.
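For readers wondering how an accelerometer can flag the moment of zero gravity, here is a minimal sketch. The post does not describe Eleanor's actual code, sensor, or thresholds, so everything below is an assumption for illustration: the function name `find_microgravity_onset`, the 0.05 g threshold, the three-sample confirmation window, and the made-up readings. The idea is simply to watch the magnitude of the acceleration vector and report the first sample of a sustained run near zero.

```python
import math

G = 9.81  # standard gravity in m/s^2


def find_microgravity_onset(samples, threshold_g=0.05, min_consecutive=3):
    """Return the index where a sustained low-g run begins, or None.

    samples: iterable of (ax, ay, az) readings in m/s^2.
    threshold_g and min_consecutive are illustrative values, not
    figures from the experiment.
    """
    run_start = None
    run_length = 0
    for i, (ax, ay, az) in enumerate(samples):
        # Magnitude of the acceleration vector, expressed in g
        magnitude_g = math.sqrt(ax * ax + ay * ay + az * az) / G
        if magnitude_g < threshold_g:
            if run_length == 0:
                run_start = i
            run_length += 1
            # Require several low readings in a row to ignore single-sample noise
            if run_length >= min_consecutive:
                return run_start
        else:
            run_length = 0
    return None


# Made-up readings: level flight, pull-up, then the weightless phase
samples = [
    (0.0, 0.0, 9.81),
    (0.0, 0.0, 17.0),
    (0.1, 0.0, 4.0),
    (0.1, 0.1, 0.2),
    (0.0, 0.1, 0.1),
    (0.1, 0.0, 0.1),
    (0.0, 0.0, 0.2),
]

onset = find_microgravity_onset(samples)
print(onset)
```

In a rig like the one described, an index such as this could be used to line up the camera footage with the start of weightlessness, though how the real apparatus synchronises its 12 cameras is not stated in the post.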

What’s wrong with slosh?

The Broadcom Foundation shared a pretty interesting minute-by-minute report on Eleanor’s first parabolic flight and how she got everything working. But, in a nutshell…

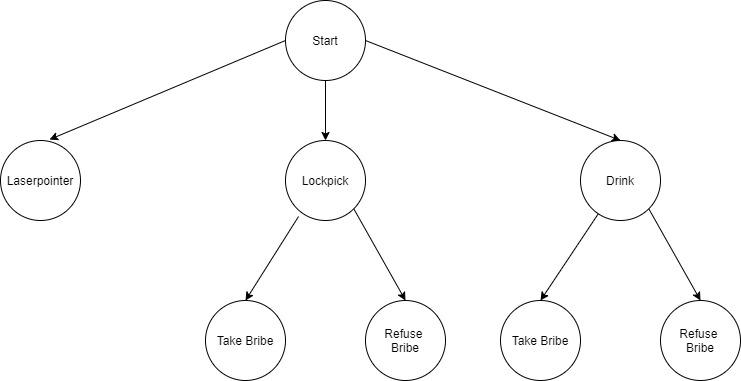

You don’t want the fluid in your space shuttle tanks sloshing around too much. It’s a mission-ending problem. Slosh occurs on take-off and also in microgravity during manoeuvres, so Eleanor devised this novel approach to managing it in place of the costly, heavy subsystems currently used on board spacecraft.

Eleanor wanted to prove that the fluid inside tanks treated with superhydrophobic and superhydrophilic coatings settles more quickly than the fluid in uncoated tanks. And she was right: settling times were reduced by 73% in some cases.

A continuation of this experiment is due to go up on Blue Origin’s New Shepard rocket – and yes, a patent is already pending.

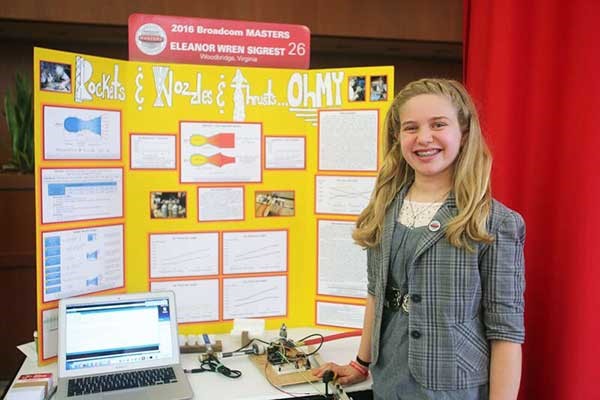

Curiosity, courage & compromise

At just 13 years old, Eleanor won the Samueli Prize at the 2016 Broadcom MASTERS for her mastery of STEM principles and team leadership during a rigorous week-long competition. High praise came from Paula Golden, President of Broadcom Foundation, who said: “Eleanor is the epitome of a young woman scientist and engineer. She combines insatiable curiosity with courage: two traits that are essential for a leader in these fields.”

That week-long experience also included a Raspberry Pi Challenge, and Eleanor explained: “During the Raspberry Pi Challenge, I learned that sometimes the simplest solutions are the best. I also learned it’s important to try everyone’s ideas because you never know which one might work the best. Sometimes it’s a compromise of different ideas, or a compromise between complicated and simple. The most important thing is to consider them all.”

Get this girl to Mars already.

The post 13 Raspberry Pis slosh-test space shuttle tanks in zero gravity appeared first on Raspberry Pi.