Post Syndicated from Crosstalk Solutions original https://www.youtube.com/watch?v=OJtMraZQf98

Security updates for Wednesday

Post Syndicated from original https://lwn.net/Articles/877284/rss

Security updates have been issued by Debian (rsync, rsyslog, and uriparser), Fedora (containerd, freeipa, golang-github-containerd-ttrpc, libdxfrw, libldb, librecad, mingw-speex, moby-engine, samba, and xen), Red Hat (kernel, kernel-rt, kpatch-patch, and samba), and Ubuntu (linux, linux-aws, linux-aws-5.11, linux-azure, linux-azure-5.11, linux-gcp, linux-gcp-5.11, linux-hwe-5.11, linux-kvm, linux-oracle, linux-oracle-5.11, linux-raspi, linux, linux-aws, linux-aws-5.4, linux-azure, linux-gcp, linux-gke, linux-gke-5.4, linux-gkeop, linux-gkeop-5.4, linux-hwe-5.4, linux-kvm, linux-oracle, linux-oracle-5.4, linux, linux-aws, linux-aws-hwe, linux-azure, linux-azure-4.15, linux-dell300x, linux-gcp-4.15, linux-hwe, linux-kvm, linux-oracle, linux-raspi2, linux-snapdragon, linux, linux-aws, linux-azure, linux-gcp, linux-kvm, linux-oem-5.13, linux-oracle, linux-raspi, and linux-oem-5.14).

23 – System Recordings – FreePBX 101 v15

Post Syndicated from Crosstalk Solutions original https://www.youtube.com/watch?v=t0UJiEXyHL8

No Neutral Zigbee 3.0 Switch Module & Zigbee2MQTT Custom Converter

Post Syndicated from BeardedTinker original https://www.youtube.com/watch?v=WV-S4jTBVMc

DIY Smart Garage Opener LOCAL Shelly Plus 1 (Bluetooth Bonus)

Post Syndicated from digiblurDIY original https://www.youtube.com/watch?v=nSrm6h7r-KE

Announcing winners of the AWS Graviton Challenge Contest and Hackathon

Post Syndicated from Neelay Thaker original https://aws.amazon.com/blogs/compute/announcing-winners-of-the-aws-graviton-challenge-contest-and-hackathon/

At AWS, we are constantly innovating on behalf of our customers so they can run virtually any workload, with optimal price and performance. Amazon EC2 now includes more than 475 instance types that offer a choice of compute, memory, networking, and storage to suit your workload needs. While we work closely with our silicon partners to offer instances based on their latest processors and accelerators, we also drive more choice for our customers by building our own silicon.

The AWS Graviton family of processors were built as part of that silicon innovation initiative with the goal of pushing the price performance envelope for a wide variety of customer workloads in EC2. We now have 12 EC2 instance families powered by AWS Graviton2 processors – general purpose (M6g, M6gd), burstable (T4g), compute optimized (C6g, C6gd, C6gn), memory optimized (R6g, R6gd, X2gd), storage optimized (Im4gn, Is4gen), and accelerated computing (G5g) available globally across 23 AWS Regions. We also announced the preview of Amazon EC2 C7g instances powered by the latest generation AWS Graviton3 processors that will provide the best price performance for compute-intensive workloads in EC2. Thousands of customers, including Discovery, DIRECTV, Epic Games, and Formula 1, have realized significant price performance benefits with AWS Graviton-based instances for a broad range of workloads. This year, AWS Graviton-based instances also powered much of Amazon Prime Day 2021 and supported 12 core retail services during the massive 2-day online shopping event.

To make it easy for customers to adopt Graviton-based instances, we launched a program called the Graviton Challenge. Working with customers, we saw that many successful adoptions of Graviton-based instances were the result of one or two developers taking a single workload and spending a few days to benchmark the price performance gains with Graviton2-based instances, before scaling it to more workloads. The Graviton Challenge provides a step-by-step plan that developers can follow to move their first workload to Graviton-based instances. With the Graviton Challenge, we also launched a Contest (US-only), and then a Hackathon (global), where developers could compete for prizes by building new applications or moving existing applications to run on Graviton2-based instances. More than a thousand participants, including enterprises, startups, individual developers, open-source developers, and Arm developers, registered and ran a variety of applications on Graviton-based instances with significant price performance benefits. We saw some fantastic entries and usage of Graviton2-based instances across a variety of use cases and want to highlight a few.

The Graviton Challenge Contest winners:

- Best Adoption – Enterprise and Most Impactful Adoption: VMware vRealize SRE team, who migrated 60 micro-services written in Java, Rust, and Golang to Graviton2-based general purpose and compute optimized instances and realized up to 48% latency reduction and 22% cost savings.

- Best Adoption – Startup: Kasm Technologies, who realized up to 48% better performance and 25% potential cost savings for its container streaming platform built on C/C++ and Python.

- Best New Workload adoption: Dustin Wilson, who built a dynamic tile server based on Golang and running on Graviton2-based memory-optimized instances that helps analysts query large geospatial datasets and benchmarked up to 1.8x performance gains over comparable x86-based instances.

- Most Innovative Adoption: Loroa, an application that translates any given text into spoken words from one language into multiple other languages using Graviton2-based instances, Amazon Polly, and Amazon Translate.

If you are attending AWS re:Invent 2021 in person, you can hear more details on their Graviton adoption experience by attending the CMP213: Lessons learned from customers who have adopted AWS Graviton chalk talk.

Winners for the Graviton Challenge Hackathon:

- Best New App: PickYourPlace, an open-source based data analytics platform to help users select a place to live based on property value, safety, and accessibility.

- Best Migrated App: Genie, an image credibility checker based on deep learning that makes predictions on photographic and tampered confidence of an image.

- Highest Potential Impact: Welly Tambunan, who’s also an AWS Community Builder, for porting big data platforms Spark, Dremio, and AirByte to Graviton2 instances so developers can leverage it to build big data capabilities into their applications.

- Most Creative Use Case: OXY, a low-cost custom Oximeter with mobile and web apps that enables continuous and remote monitoring to prevent deaths due to Silent Hypoxia.

- Best Technical Implementation: Apollonia Bot that plays songs, playlists, or podcasts on a Discord voice channel, so users can listen to it together.

It’s been incredibly exciting to see the enthusiasm and benefits realized by our customers. We are also thankful to our judges – Patrick Moorhead from Moor Insights, James Governor from RedMonk, and Jason Andrews from Arm, for their time and effort.

In addition to EC2, several AWS services for databases, analytics, and even serverless support options to run on Graviton-based instances. These include Amazon Aurora, Amazon RDS, Amazon MemoryDB, Amazon DocumentDB, Amazon Neptune, Amazon ElastiCache, Amazon OpenSearch, Amazon EMR, AWS Lambda, and most recently, AWS Fargate. By using these managed services on Graviton2-based instances, customers can get significant price performance gains with minimal or no code changes. We also added support for Graviton to key AWS infrastructure services such as Elastic Beanstalk, Amazon EKS, Amazon ECS, and Amazon CloudWatch to help customers build, run, and scale their applications on Graviton-based instances. Additionally, a large number of Linux and BSD-based operating systems, and partner software for security, monitoring, containers, CI/CD, and other use cases now support Graviton-based instances and we recently launched the AWS Graviton Ready program as part of the AWS Service Ready program to offer Graviton-certified and validated solutions to customers.

Congrats to all of our Contest and Hackathon winners! Full list of the Contest and Hackathon winners is available on the Graviton Challenge page.

P.S.: Even though the Contest and Hackathon have ended, developers can still access the step-by-step plan on the Graviton Challenge page to move their first workload to Graviton-based instances.

Julia 1.7 released

Post Syndicated from original https://lwn.net/Articles/877242/rss

Version

1.7 of the Julia programming language has been released. The list of

new features is long; see the release announcement and this LWN article for the details.

OWASP Top 10 Deep Dive: Identification and Authentication Failures

Post Syndicated from Nathaniel Hierseman original https://blog.rapid7.com/2021/12/01/owasp-top-10-deep-dive-identification-and-authentication-failures/

In the 2021 edition of the OWASP top 10 list, Broken Authentication was changed to Identification and Authentication Failures. This term bundles in a number of existing items like cryptography failures, session fixation, default login credentials, and brute-forcing access. Additionally, this vulnerability slid down the top 10 list from number 2 to number 7.

To be sure, security practitioners have made progress in recent years in mitigating authentication vulnerabilities. We should consider ourselves fortunate that most employees are no longer using default usernames and passwords, generic admin, or admin as credentials — many of these issues have been resolved with the availability of frameworks that help standardize against these types of vulnerabilities. Security teams have also started to feel the effects of maintaining multi-factor authentication (MFA) accounts across the multitudes of applications we use in our day-to-day lives. This, too, has helped contribute to this category going down in the OWASP top 10 list.

But that doesn’t mean security pros should take their eyes off the ball when it comes to identification and authentication failures. Let’s take a look at the issues that still remain in mitigating this family of threats.

The challenges with identification and authentication

Nearly every application and technology solution that we use in our lives has some sort of login associated with it. In your home, think about the WiFi routers you connect to — and the many devices and appliances that can now access that network.

Your workplace likely also has a wide variety of devices that reside on the network. Most of these devices have some form of login that allows them to make configuration changes. In addition, these devices almost always come with generic usernames and passwords that allow users to log into them for the first time.

Unfortunately, these credentials appear in every user guide and are publicly well-known. Vendors routinely use the same generic credentials across multiple different product types, which can compound the problem. Even if you change the password upon configuration of the device, the username is still known, and the password can be brute-forced with a variety of different testing tools.

Testing identification and authentication with InsightAppSec

InsightAppSec, Rapid7’s dynamic application security testing (DAST) solution, offers a single solution with an ability to detect and identify these risks across your environment. It allows you to test your applications and devices for identification and authentication failures throughout the enterprise.

InsightAppSec contains 101 different attack modules, with thousands of payloads to help identify vulnerabilities in your environment. You can utilize the default attack templates within the solution or build your own from scratch.

InsightAppSec also lets you prioritize these risks within the platform and gives you the information you need to provide details and context to the teams responsible for remediation. The platform gives detailed recommendations for how developers can fix vulnerabilities. Users can also replay the attacks in real time against the application and validate that common issues, such as using generic credentials to log in, have been resolved with the Rapid7 Chrome Plugin.

Final thought

As organizations continue to protect their applications from identification and authentication issues, there will be added mechanisms for authentication in place for protection. As a part of any good application security program, these applications will still be to be scanned and tested. Having a scanning solution in place to be able to authenticate appropriately through these security protections is essential for organizations to address their identity- and access-related vulnerabilities.

Check out our previous OWASP Top 10 Deep Dives on:

Summary of Zabbix Summit Online 2021, Zabbix 6.0 LTS release date and Zabbix Workshops

Post Syndicated from Arturs Lontons original https://blog.zabbix.com/summary-of-zabbix-summit-online-2021-zabbix-6-0-lts-release-date-and-zabbix-workshops/17155/

Now that the Zabbix Summit Online 2021 has concluded, we are thrilled to report we hosted attendees from over 3000 organizations from more than 130 countries all across the globe.

This year, the main focus of the speeches was the upcoming Zabbix 6.0 LTS release, as well as speeches focused on automating Zabbix data collection and configuration, Integrating Zabbix within existing company infrastructures, and migrating from legacy tools to Zabbix. 21 speakers in total presented their use cases and talked about new Zabbix features during the Summit with over 8 hours of content.

In case you missed the Summit or wish to come back to some of the speeches – both the presentations (in PDF format) and the videos of the speeches are available on the Zabbix Summit Online 2021 Event page.

Zabbix 6.0 LTS release date

As for Zabbix 6.0 LTS – as per our statement during the event, you can expect Zabbix 6.0 LTS to release in early 2022. At the time of this post, the latest pre-release version is Zabbix 6.0 Alpha 7, with the first Beta version scheduled for release VERY soon. Feel free to deploy the latest pre-release version and take a look at features such as Geomaps, Business Service monitoring, improved Audit log, UX improvements, Anomaly detection with Machine Learning, and more! The list of the latest released Zabbix 6.0 versions as well as the improvements and fixes they contain is available in the Release notes section of our website.

Zabbix 6.0 LTS Workshops

The workshops will focus on particular Zabbix 6.0 LTS features and will be available once the Zabbix 6.0 LTS is released. The workshops will provide a unique chance to learn and practice the configuration of specific Zabbix 6.0 LTS features under the guidance of a certified Zabbix trainer at absolutely no cost! Some of the topics covered in the workshops will include – Deploying Zabbix server HA cluster, Creating triggers for Baseline monitoring and Anomaly detection, Displaying your infrastructure status on Geomaps, Deploying Business Service monitoring with root cause analysis, and more!

Upcoming events

But there’s more! On December 9 2021 Zabbix will host PostgreSQL Monitoring Day with Zabbix & Postgres Pro. The speeches will focus on monitoring PostgreSQL databases, running Zabbix on PostgreSQL DB backends with TimescaleDB, and securing your Zabbix + PostgreSQL instances. If you’re currently using PostgreSQL DB backends r plan to do so in the future – you definitely don’t want to miss out!

As for 2022 – you can expect multiple meetups regarding Zabbix 6.0 LTS features and use cases, as well as events focused on specific monitoring use cases. More information will be publicly available with the release of Zabbix 6.0 LTS.

Comic for 2021.12.01

Post Syndicated from Explosm.net original http://explosm.net/comics/6043/

New Cyanide and Happiness Comic

Enhanced Amazon S3 Integration for Amazon FSx for Lustre

Post Syndicated from Sébastien Stormacq original https://aws.amazon.com/blogs/aws/enhanced-amazon-s3-integration-for-amazon-fsx-for-lustre/

Today, we are announcing two additional capabilities of Amazon FSx for Lustre. First, a full bi-directional synchronization of your file systems with Amazon Simple Storage Service (Amazon S3), including deleted files and objects. Second, the ability to synchronize your file systems with multiple S3 buckets or prefixes.

Lustre is a large scale, distributed parallel file system powering the workloads of most of the largest supercomputers. It is popular among AWS customers for high-performance computing workloads, such as meteorology, life-science, and engineering simulations. It is also used in media and entertainment, as well as the financial services industry.

I had my first hands-on Lustre file systems when I was working for Sun Microsystems. I was a pre-sales engineer and worked on some deals to sell multimillion-dollar compute and storage infrastructure to financial services companies. Back then, having access to a Lustre file system was a luxury. It required expensive compute, storage, and network hardware. We had to wait weeks for delivery. Furthermore, it required days to install and configure a cluster.

Fast forward to 2021, I may create a petabyte-scale Lustre cluster and attach the file system to compute resources running in the AWS cloud, on-demand, and only pay for what I use. There is no need to know about Storage Area Networks (SAN), Fiber Channel (FC) fabric, and other underlying technologies.

Modern applications use different storage options for different workloads. It is common to use S3 object storage for data transformation, preparation, or import/export tasks. Other workloads may require POSIX file-systems to access the data. FSx for Lustre lets you synchronize objects stored on S3 with the Lustre file system to meet these requirements.

When you link your S3 bucket to your file system, FSx for Lustre transparently presents S3 objects as files and lets you to write results back to S3.

Full Bi-Directional Synchronization with Multiple S3 Buckets

If your workloads require a fast, POSIX-compliant file system access to your S3 buckets, then you can use FSx for Lustre to link your S3 buckets to a file system and keep data synchronized between the file system and S3 in both directions. However, until today, there were a couple limitations. First, you had to manually configure a task to export data back from FSx for Lustre to S3. Second, deleted files on S3 were not automatically deleted from the file system. And third, an FSx for Lustre file system was synchronized with one S3 bucket only. We are addressing these three challenges with this launch.

Starting today, when you configure an automatic export policy for your data repository association, files on your FSx for Lustre file system are automatically exported to your data repository on S3. Next, deleted objects on S3 are now deleted from the FSx for Lustre file system. The opposite is also available: deleting files on FSx for Lustre triggers the deletion of corresponding objects on S3. Finally, you may now synchronize your FSx for Lustre file system with multiple S3 buckets. Each bucket has a different path at the root of your Lustre file system. For example your S3 bucket logs may be mapped to /fsx/logs and your other financial_data bucket may be mapped to /fsx/finance.

These new capabilities are useful when you must concurrently process data in S3 buckets using both a file-based and an object-based workflow, as well as share results in near real time between these workflows. For example, an application that accesses file data can do so by using an FSx for Lustre file system linked to your S3 bucket, while another application running on Amazon EMR may process the same files from S3.

Moreover, you may link multiple S3 buckets or prefixes to a single FSx for Lustre file system, thereby enabling a unified view across multiple datasets. Now you can create a single FSx for Lustre file system and easily link multiple S3 data repositories (S3 buckets or prefixes). This is convenient when you use multiple S3 buckets or prefixes to organize and manage access to your data lake, access files from a public S3 bucket (such as these hundreds of public datasets) and write job outputs to a different S3 bucket, or when you want to use a larger FSx for Lustre file system linked to multiple S3 datasets to achieve greater scale-out performance.

How It Works

Let’s create an FSx for Lustre file system and attach it to an Amazon Elastic Compute Cloud (Amazon EC2) instance. I make sure that the file system and instance are in the same VPC subnet to minimize data transfer costs. The file system security group must authorize access from the instance.

I open the AWS Management Console, navigate to FSx, and select Create file system. Then, I select Amazon FSx for Lustre. I am not going through all of the options to create a file system here, you can refer to the documentation to learn how to create a file system. I make sure that Import data from and export data to S3 is selected.

It takes a few minutes to create the file system. Once the status is

It takes a few minutes to create the file system. Once the status is  Available, I navigate to the Data repository tab, and then select Create data repository association.

Available, I navigate to the Data repository tab, and then select Create data repository association.

I choose a Data Repository path (my source S3 bucket) and a file system path (where in the file system that bucket will be imported).

Then, I choose the Import policy and Export policy. I may synchronize the creation of file/objects, their updates, and when they are deleted. I select Create.

When I use automatic import, I also make sure to provide an S3 bucket in the same AWS Region as the FSx for Lustre cluster. FSx for Lustre supports linking to an S3 bucket in a different AWS Region for automatic export and all other capabilities.

Using the console, I see the list of Data repository associations. I wait for the import task status to become  Succeeded. If I link the file system to an S3 bucket with a large number of objects, then I may choose to skip Importing metadata from repository while creating the data repository association, and then load metadata from selected prefixes in my S3 buckets that are required for my workload using an Import task.

Succeeded. If I link the file system to an S3 bucket with a large number of objects, then I may choose to skip Importing metadata from repository while creating the data repository association, and then load metadata from selected prefixes in my S3 buckets that are required for my workload using an Import task.

I create an EC2 instance in the same VPC subnet. Furthermore, I make sure that the FSx for Lustre cluster security group authorizes ingress traffic from the EC2 instance. I use SSH to connect to the instance, and then type the following commands (commands are prefixed with the $ sign that is part of my shell prompt).

# check kernel version, minimum version 4.14.104-95.84 is required

$ uname -r

4.14.252-195.483.amzn2.aarch64

# install lustre client

$ sudo amazon-linux-extras install -y lustre2.10

Installing lustre-client

...

Installed:

lustre-client.aarch64 0:2.10.8-5.amzn2

Complete!

# create a mount point

$ sudo mkdir /fsx

# mount the file system

$ sudo mount -t lustre -o noatime,flock fs-00...9d.fsx.us-east-1.amazonaws.com@tcp:/ny345bmv /fsx

# verify mount succeeded

$ mount

...

172.0.0.0@tcp:/ny345bmv on /fsx type lustre (rw,noatime,flock,lazystatfs)

Then, I verify that the file system contains the S3 objects, and I create a new file using the touch command.

I switch to the AWS Console, under S3 and then my bucket name, and I verify that the file has been synchronized.

Using the console, I delete the file from S3. And, unsurprisingly, after a few seconds, the file is also deleted from the FSx file system.

Pricing and Availability

These new capabilities are available at no additional cost on Amazon FSx for Lustre file systems. Automatic export and multiple repositories are only available on Persistent 2 file systems in US East (N. Virginia), US East (Ohio), US West (Oregon), Canada (Central), Asia Pacific (Tokyo), Europe (Frankfurt), and Europe (Ireland). Automatic import with support for deleted and moved objects in S3 is available on file systems created after July 23, 2020 in all regions where FSx for Lustre is available.

You can configure your file system to automatically import S3 updates by using the AWS Management Console, the AWS Command Line Interface (CLI), and AWS SDKs.

Learn more about using S3 data repositories with Amazon FSx for Lustre file systems.

One More Thing

One more thing while you are reading. Today, we also launched the next generation of FSx for Lustre file systems. FSx for Lustre next-gen file systems are built on AWS Graviton processors. They are designed to provide you with up to 5x higher throughput per terabyte (up to 1 GB/s per terabyte) and reduce your cost of throughput by up to 60% as compared to previous generation file systems. Give it a try today!

PS : my colleague Michael recorded a demo video to show you the enhanced S3 integration for FSx for Lustre in action. Check it out today.

New – Offline Tape Migration Using AWS Snowball Edge

Post Syndicated from Jeff Barr original https://aws.amazon.com/blogs/aws/new-offline-tape-migration-using-aws-snowball-edge/

Over the years, we have given you a succession of increasingly powerful tools to help you migrate your data to the AWS Cloud. Starting with AWS Import/Export back in 2009, followed by Snowball in 2015, Snowmobile and Snowball Edge in 2016, and Snowcone in 2020, each new device has given you additional features to simplify and expedite the migration process. All of the devices are designed to operate in environments that suffer from network constraints such as limited bandwidth, high connections costs, or high latency.

Offline Tape Migration

Today, we are taking another step forward by making it easier for you to migrate data stored offline on physical tapes. You can get rid of your large and expensive storage facility, send your tape robots out to pasture, and eliminate all of the time & effort involved in moving archived data to new formats and mediums every few years, all while retaining your existing tape-centric backup & recovery utilities and workflows.

This launch brings a tape migration capability to AWS Snowball Edge devices, and allows you to migrate up to 80 TB of data per device, making it suitable for your petabyte-scale migration efforts. Tapes can be stored in the Amazon S3 Glacier Flexible Retrieval or Amazon S3 Glacier Deep Archive storage classes, and then accessed from on-premises and cloud-based backup and recovery utilities.

Back in 2013 I showed you how to Create a Virtual Tape Library Using the AWS Storage Gateway. Today’s launch builds on that capability in two different ways. First, you create a Virtual Tape Library (VTL) on a Snowball Edge and copy your physical tapes to it. Second, after your tapes are in the cloud, you create a VTL on a Storage Gateway and use it to access your virtual tapes.

Getting Started

To get started, I open the Snow Family Console and create a new job. Then I select Import virtual tapes into AWS Storage Gateway and click Next:

Then I go through the remainder of the ordering sequence (enter my shipping address, name my job, choose a KMS key, and set up notification preferences), and place my order. I can track the status of the job in the console:

When my device arrives I tell the somewhat perplexed delivery person about data transfer, carry it down to my basement office, and ask Luna to check it out:

Back in the Snow Family console, I download the manifest file and copy the unlock code:

I connect the Snowball Edge to my “corporate” network:

Then I install AWS OpsHub for Snow Family on my laptop, power on the Snowball Edge, and wait for it to obtain & display an IP address:

I launch OpsHub, sign in, and accept the default name for my device:

I confirm that OpsHub has access to my device, and that the device is unlocked:

I view the list of services running on the device, and note that Tape Gateway is not running:

Before I start Tape Gateway, I create a Virtual Network Interface (VNI):

And then I start the Tape Gateway service on the Snow device:

Now that the service is running on the device, I am ready to create the Storage Gateway. I click Open Storage Gateway console from within OpsHub:

I select Snowball Edge as my host platform:

Then I give my gateway a name (MyTapeGateway), select my backup application (Veeam Backup & Replication in this case), and click Activate Gateway:

Then I configure CloudWatch logging:

And finally, I review the settings and click Finish to activate my new gateway:

The activation process takes a few minutes, just enough time to take Luna for a quick walk. When I return, the console shows that the gateway is activated and running, and I am all set:

Creating Tapes

The next step is to create some virtual tapes. I click Create tapes and enter the requested information, including the pool (Deep Archive or Glacier), and click Create tapes:

The next step is to copy data from my physical tapes to the Snowball Edge. I don’t have a data center and I don’t have any tapes, so I can’t show you how to do this part. The data is stored on the device, and my Internet connection is used only for management traffic between the Snowball Edge device and AWS. To learn more about this part of the process, check out our new animated explainer.

After I have copied the desired tapes to the device, I prepare it for shipment to AWS. I make sure that all of the virtual tapes in the Storage Gateway Console have the status In Transit to VTS (Virtual Tape Shelf), and then I power down the device.

The display on the device updates to show the shipping address, and I wait for the shipping company to pick up the device.

When the device arrives at AWS, the virtual tapes are imported, stored in the S3 storage class associated with the pool that I chose earlier, and can be accessed by retrieving them using an online tape gateway. The gateway can be deployed as a virtual machine or a hardware appliance.

Now Available

You can use AWS Snowball Edge for offline tape migration in the US East (N. Virginia), US East (Ohio), US West (Oregon), US West (N. California), Europe (Ireland), Europe (Frankfurt), Europe (London), Asia Pacific (Sydney) Regions. Start migrating petabytes of your physical tape data to AWS, today!

— Jeff;

Preview – AWS Backup Adds Support for Amazon S3

Post Syndicated from Sébastien Stormacq original https://aws.amazon.com/blogs/aws/preview-aws-backup-adds-support-for-amazon-s3/

Starting today, you can preview AWS Backup for Amazon Simple Storage Service (Amazon S3).

AWS Backup is a fully managed, policy-based service that lets you to centralize and automate the backup and restore of your applications spanning across 12 AWS services: Amazon Elastic Compute Cloud (Amazon EC2) instances, Amazon Elastic Block Store (EBS) volumes, Amazon Relational Database Service (RDS) databases (including Amazon Aurora clusters), Amazon DynamoDB tables, Amazon Neptune databases, Amazon DocumentDB (with MongoDB compatibility) databases, Amazon Elastic File System (Amazon EFS) file systems, Amazon FSx for Lustre file systems, Amazon FSx for Windows File Server file systems, AWS Storage Gateway volumes, and now Amazon S3 (in preview).

Modern workloads and systems are leveraging different storage options for different functionalities. In the 21st century, it is normal to build applications relying on non-relational and relational databases, shared file storage, and object storage, just to name of few. When operating and managing these applications, you told us that you wanted centralized protection and provable compliance for application data stored in S3 alongside other AWS services for storage, compute, and databases.

I can see three benefits when integrating Amazon Simple Storage Service (Amazon S3) with your data protection policies in AWS Backup.

First, it lets you centrally manage your applications backups: AWS Backup provides an automated solution to centrally configure backup policies, thereby helping you simplify backup lifecycle management. This also makes it easy to ensure that your application data across AWS services (including S3) is centrally backed up.

Second, it lets you easily restore your data: AWS Backup provides a single-click-restore experience for your S3 data. This lets you perform point-in-time restores of your S3 buckets and objects to a new or existing S3 bucket.

Finally, it improves backup compliance: AWS Backup provides built-in dashboards that let you to track backup and restore operations for S3.

AWS Backup for S3 (Preview) lets you create continuous point-in-time backups along with periodic backups of S3 buckets, including object data, object tags, access control lists (ACLs), and user-defined metadata. The first backup is a full snapshot, while subsequent backups are incremental. If there is a data disruption event, then you choose a backup from the backup vault, and restore an S3 bucket (or individual S3 objects) to a new or existing S3 bucket. AWS Backup is integrated with AWS Organizations, which let you use a single policy across AWS accounts (within your Organizations) to automate backup creation and backup access management.

Furthermore, you can turn on AWS Backup Vault Lock to enable delete protection of the data that you protect with AWS Backup, and thereby improving protection of your immutable backups from accidental deletion or malicious re-encryption.

How to Get Started

AWS Backup works with versioned S3 buckets. Before you get started, turn on S3 Versioning on your buckets to backup.

I must enable S3 in AWS Backup Settings when I use this feature for the first time. Using the AWS Management Console, I navigate to AWS Backup, then select Settings and Configure resources. I enable S3, and select Confirm. This is a one-time operation.

For this demo, I already have an existing backup plan, and I want to add an S3 bucket to this plan. If you want to create a new backup plan, then you can refer to AWS Backup‘s technical documentation.

To start including my S3 objects in my backup plan, I open the AWS Management Console, navigate to Backup plans, and select Assign resources.

I give a name to my Resource assignment. I select Include specific resources types, then I select S3 as Resource type and one or several S3 Bucket names. When I am done, I select Assign resources.

Alternatively, I may use tags or resource IDs to assign S3 resources.

If you have thousands of S3 buckets, I recommend using tags to assign the S3 buckets to a backup plan. AWS Backup matches the tags in S3 buckets to the ones assigned to the backup plan, and it centrally backs up the S3 resources along with other AWS services that your application uses.

The other options are not different from what you know already.

The Bucket names list in the previous screenshot only shows the S3 buckets in the same Region.

Alternatively, I may also create on-demand backups. I navigate to the Protected resources section, and select Create on-demand backup.

I select S3 as the Resource type, and select the Bucket name. As per usual, I choose a Backup Window, a Retention period, a Backup vault, and an IAM role. Then, I select Create on-demand backup.

After a while, depending on the size of my bucket, the backup is

After a while, depending on the size of my bucket, the backup is  Completed.

Completed.

All of the backups are encrypted and stored securely in a backup vault that I selected in the backup plan.

A backup vault (or backup storage vault) is an encrypted logical construct in my AWS account that stores and organizes my backups (recovery points). I may create new backup vaults in every AWS Region where AWS Backup is available. I may enable AWS Backup Vault Lock (delete-protection capability) on the backup vault to avoid accidental deletions and prevent malicious actors from re-encrypting my data. AWS Backup stores my continuous backups and periodic snapshots in the backup vault of my preference, and it lets me browse and restore as per my requirements.

How to Restore Objects

Let’s try to restore this backup.

The restore operation is very flexible. I may restore entire S3 buckets or individual S3 objects. I may restore the backups to the source S3 bucket, or to another existing bucket. Furthermore, I may create a new S3 bucket during restore. The S3 buckets must have Versioning enabled. Also, I may change the encryption key during restore.

I navigate to Backup vaults to restore the S3 bucket I just backed up. In the Backups section, I select the Recovery point ID that I want to restore, and I select Restore from the Actions menu.

Before starting the restore, I may select a few options:

- The Restore time: I may restore my continuous backup to a point-in-time in the last 35 days, while I can restore my periodic backups to their original state.

- The Restore type: I may choose to restore the entire bucket or a subset of objects within it.

- The Restore destination: I may choose to restore on the same bucket, on another one, or create a new bucket during restore.

- The Restored object encryption: this lets me select the key I want to use to encrypt the restored objects in the bucket.

I select Restore backup to start the restore.

I can monitor the progress in the Jobs section, under the Restore jobs tab.

I can monitor the progress in the Jobs section, under the Restore jobs tab.

When the status turns green to  Completed, my objects are ready to use!

Completed, my objects are ready to use!

Generally, the most comprehensive data-protection strategies include regular testing and validation of your restore procedures before you need them. Testing your restores also helps to prepare and maintain recovery runbooks. In turn, that ensures operational readiness during a disaster recovery exercise, or an actual data loss scenario.

Availability and Pricing

The preview is available in the US West (Oregon) Region only.

During the preview, there are no charges for creating and storing backups. You will pay the AWS charges for underlying resources, such as S3 storage, API usage, and versioning.

Send us an email at [email protected] including your AWS account ID to register for the preview.

Amazon S3 Glacier is the Best Place to Archive Your Data – Introducing the S3 Glacier Instant Retrieval Storage Class

Post Syndicated from Marcia Villalba original https://aws.amazon.com/blogs/aws/amazon-s3-glacier-is-the-best-place-to-archive-your-data-introducing-the-s3-glacier-instant-retrieval-storage-class/

Today we are announcing the Amazon S3 Glacier Instant Retrieval storage class. This new archive storage class delivers the lowest cost storage for long-lived data that is rarely accessed and requires millisecond retrieval.

We are also excited to announce that S3 Intelligent-Tiering now automatically optimizes storage costs for rarely accessed data that needs immediate retrieval with the new Archive Instant Access tier, which is ideal for data with unknown or changing access patterns. For existing customers, this will provide an immediate savings of 68 percent for data that hasn’t been accessed for more than 90 days, with no action needed. The Frequent, Infrequent, and now Archive Instant Access tiers are designed for the same milliseconds access time and high-throughput performance.

In addition, we are announcing the new name for the existing Amazon S3 Glacier storage class and several price reductions.

Amazon S3 Glacier Instant Retrieval

The Amazon S3 Glacier storage classes are extremely low-cost and built for data archiving. They are secure and durable, and they are designed to provide the lowest cost for data that does not require immediate access, with retrieval options from minutes to hours.

Many customers need to store rarely accessed data for several years. However the data must be highly available and immediately accessible. Today, these customers use the S3 Standard-Infrequent Access (S3 Standard-IA) storage class. This storage class offers low cost for storage and allows customers to retrieve their data instantly.

S3 Glacier Instant Retrieval is a new storage class that delivers the fastest access to archive storage, with the same low latency and high-throughput performance as the S3 Standard and S3 Standard-IA storage classes. You can save up to 68 percent on storage costs as compared with using the S3 Standard-IA storage class when you use the S3 Glacier Instant Retrieval storage class and pay a low price to retrieve data. For example, in the US East (N. Virginia) Region, S3 Glacier Instant Retrieval storage pricing is $0.004 per GB-month and data retrieval is $0.03 per GB. Learn more about pricing for your Region.

Media archives, medical images, or user-generated content are just a few examples of ideal use cases for S3 Glacier Instant Retrieval. Once created, this content is rarely accessed, but when it is needed it must be available in milliseconds.

To get started using the new storage class from the Amazon S3 console, upload an object as you would normally, and select the S3 Glacier Instant Retrieval storage class.

This feature is available programmatically from AWS SDKs, AWS Command Line Interface (CLI), and AWS CloudFormation.

In my opinion, the easiest way to store data in S3 Glacier Instant Retrieval is to use the S3 PUT API using the CLI. When using this API, set the storage class to GLACIER_IR.

aws s3api put-object --bucket <bucket-name> --key <object-key> --body <name-file> --storage-class GLACIER_IR

When the object is uploaded to Amazon S3, verify the storage class in the list of objects or on the object details page.

For data that already exists in Amazon S3, you can use S3 Lifecycle to transition data from the S3 Standard and S3 Standard-IA storage classes into S3 Glacier Instant Retrieval.

New Archive Instant Access Tier in S3 Intelligent-Tiering

S3 Intelligent-Tiering is a storage class that automatically moves objects between access tiers to optimize costs. This is the recommended storage class for data with unpredictable or changing access patterns, such as in data lakes, analytics, or user-generated content.

Until today, there were two low latency access tiers optimized for frequent and infrequent access, and two optional archive access tiers designed for asynchronous access optimized for rare access at a low cost.

Beginning today, the Archive Instant Access tier is added as a new access tier in the S3 Intelligent-Tiering storage class. You will start seeing automatic costs savings for your storage in S3 Intelligent-Tiering for rarely accessed objects.

The Archive Instant Access tier joins the group of low latency access tiers. This new tier is optimized for data that is not accessed for months at a time but, when it is needed, is available within milliseconds.

S3 Intelligent-Tiering automatically stores objects in three access tiers that deliver the same performance as the S3 Standard storage class:

- Frequent Access tier

- Infrequent Access tier

- Archive Instant Access (new)

For a small monitoring and automation charge, S3 Intelligent-Tiering monitors access patterns and moves objects between the different access tiers. Objects that have not been accessed for 30 consecutive days are moved from the Frequent Access tier to the Infrequent Access tier for savings of 40 percent. When an object hasn’t been accessed for 90 consecutive days, S3 Intelligent-Tiering will move the object from the Infrequent Access tier to the Archive Instant Access tier, with a savings of 68 percent. If the data is accessed later, it is automatically moved back to the Frequent Access tier. No tiering charges apply when objects are moved between access tiers within the S3 Intelligent-Tiering storage class.

To get started with this new access tier, select Intelligent-Tiering as the storage class for an object when uploading an object using the S3 console. After 90 days of inactivity (30 days in Frequent Access tier and 60 days in Infrequent Access tier), S3 Intelligent-Tiering will automatically move the object to the Archive Instant Access tier. The introduction of the new Archive Instant Access tier has no impact on performance when you retrieve objects.

New name for the Amazon S3 Glacier storage class – S3 Glacier Flexible Retrieval

The existing Amazon S3 Glacier storage class is now named S3 Glacier Flexible Retrieval. This storage class now has free bulk retrievals in 5 to 12 hours, and the storage price has been reduced by 10 percent in all Regions, effective December 1, 2021. S3 Glacier Flexible Retrieval is now even more cost-effective, and the free bulk retrievals make it ideal for retrieving large data volumes.

These are the Amazon S3 archive storage classes:

- S3 Glacier Instant Retrieval: The newest storage class is optimized for long-lived data that is rarely accessed (typically once per quarter). However when data is needed, it is available within milliseconds. For example, medical images and news media assets are perfect for this storage class.

- S3 Glacier Flexible Retrieval: This newly renamed storage class is optimized for archiving data that can be retrieved in minutes or with free bulk retrievals in 5 to 12 hours. This storage class is ideal for backups and disaster recovery use cases, where you have large amounts of long-term, rarely accessed data, and you don’t want to worry about retrieval costs when you need the data.

- S3 Glacier Deep Archive: This storage class is the lowest-cost storage in the cloud and is optimized for archiving data that can be restored in at least 12 hours. It’s great for storing your compliance archives or for digital media preservation.

Amazon S3 has reduced storage prices!

We are excited to announce that Amazon S3 has reduced storage prices of up to 31 percent in the S3 Standard-IA and S3 One Zone-IA storage classes across 9 AWS Regions: US West (N. California), Asia Pacific (Hong Kong), Asia Pacific (Mumbai), Asia Pacific (Osaka), Asia Pacific (Seoul), Asia Pacific (Singapore), Asia Pacific (Sydney), Asia Pacific (Tokyo), and South America (São Paulo). These price reductions are effective December 1, 2021.

Learn more about price reduction details.

Available Now

The new storage class, S3 Glacier Instant Retrieval, and the new Archive Instant Access tier in S3 Intelligent-Tiering are available today (November 30, 2021) in all AWS Regions.

The price cut for S3 Glacier and free bulk retrievals in all AWS Regions, and the S3 Standard-Infrequent Access/One Zone-Infrequent storage class in nine Regions will be effective on December 1, 2021.

Learn more about the storage classes changes and all the storage classes.

— Marcia

New – Simplify Access Management for Data Stored in Amazon S3

Post Syndicated from Marcia Villalba original https://aws.amazon.com/blogs/aws/new-simplify-access-management-for-data-stored-in-amazon-s3/

Today, we are introducing a couple new features that simplify access management for data stored in Amazon Simple Storage Service (Amazon S3). First, we are introducing a new Amazon S3 Object Ownership setting that lets you disable access control lists (ACLs) to simplify access management for data stored in Amazon S3. Second, the Amazon S3 console policy editor now reports security warnings, errors, and suggestions powered by IAM Access Analyzer as you author your S3 policies.

Since launching 15 years ago, Amazon S3 buckets have been private by default. At first, the only way to grant access to objects was using ACLs. In 2011, AWS Identity and Access Management (IAM) was announced, which allowed the use of policies to define permissions and control access to buckets and objects in Amazon S3. Nowadays, you have several ways to control access to your data in Amazon S3, including IAM policies, S3 bucket policies, S3 Access Points policies, S3 Block Public Access, and ACLs.

ACLs are an access control mechanism in which each bucket and object has an ACL attached to it. ACLs define which AWS accounts or groups are granted access as well as the type of access. When an object is created, the ownership of it belongs to the creator. This ownership information is embedded in the object ACL. When you upload an object to a bucket owned by another AWS account, and you want the bucket owner to access the object, then permissions need to be granted in the ACL. In many cases, ACLs and other kinds of policies are used within the same bucket.

The new Amazon S3 Object Ownership setting, Bucket owner enforced, lets you disable all of the ACLs associated with a bucket and the objects in it. When you apply this bucket-level setting, all of the objects in the bucket become owned by the AWS account that created the bucket, and ACLs are no longer used to grant access. Once applied, ownership changes automatically, and applications that write data to the bucket no longer need to specify any ACL. As a result, access to your data is based on policies. This simplifies access management for data stored in Amazon S3.

With this launch, when creating a new bucket in the Amazon S3 console, you can choose whether ACLs are enabled or disabled. In the Amazon S3 console, when you create a bucket, the default selection is that ACLs are disabled. If you wish to keep ACLs enabled, you can choose other configurations for Object Ownership, specifically:

- Bucket owner preferred: All new objects written to this bucket with the

bucket-owner-full-controlledcanned ACL will be owned by the bucket owner. ACLs are still used for access control. - Object writer: The object writer remains the object owner. ACLs are still used for access control.

For existing buckets, you can view and manage this setting in the Permissions tab.

Before enabling the Bucket owner enforced setting for Object Ownership on an existing bucket, you must migrate access granted to other AWS accounts from the bucket ACL to the bucket policy. Otherwise, you will receive an error when enabling the setting. This helps you ensure applications writing data to your bucket are uninterrupted. Make sure to test your applications after you migrate the access.

Policy validation in the Amazon S3 console

We are also introducing policy validation in the Amazon S3 console to help you out when writing resource-based policies for Amazon S3. This simplifies authoring access control policies for Amazon S3 buckets and access points with over 100 actionable policy checks powered by IAM Access Analyzer.

To access policy validation in the Amazon S3 console, first go to the detail page for a bucket. Then, go to the Permissions tab and edit the bucket policy.

When you start writing your policy, you see that, as you type, different findings appear at the bottom of the screen. Policy checks from IAM Access Analyzer are designed to validate your policies and report security warnings, errors, and suggestions as findings based on their impact to help you make your policy more secure.

When you start writing your policy, you see that, as you type, different findings appear at the bottom of the screen. Policy checks from IAM Access Analyzer are designed to validate your policies and report security warnings, errors, and suggestions as findings based on their impact to help you make your policy more secure.

You can also perform these checks and validations using the IAM Access Analyzer’s ValidatePolicy API.

Availability

Amazon S3 Object Ownership is available at no additional cost in all AWS Regions, excluding the AWS China Regions and AWS GovCloud Regions. IAM Access Analyzer policy validation in the Amazon S3 console is available at no additional cost in all AWS Regions, including the AWS China Regions and AWS GovCloud Regions.

Get started with Amazon S3 Object Ownership through the Amazon S3 console, AWS Command Line Interface (CLI), Amazon S3 REST API, AWS SDKs, or AWS CloudFormation. Learn more about this feature on the documentation page.

And to learn more and get started with policy validation in the Amazon S3 console, see the Access Analyzer policy validation documentation.

— Marcia

New for AWS Backup – Support for VMware and VMware Cloud on AWS

Post Syndicated from Danilo Poccia original https://aws.amazon.com/blogs/aws/new-for-aws-backup-support-for-vmware-and-vmware-cloud-on-aws/

Today, I am happy to announce AWS Backup support for VMware, a new capability that enables you to centralize and automate data protection of virtual machines (VMs) running on VMware on premises and VMware CloudTM on AWS. You can now use a single, centrally managed policy in AWS Backup to protect these VMware environments together with 12 AWS compute, storage, and database services already supported by AWS Backup. You can then use AWS Backup to restore VMware workloads to on-premises data centers and VMware Cloud on AWS.

While doing so, AWS Backup Audit Manager lets you consistently demonstrate compliance by monitoring backup, copy, and restore operations and generating auditor-ready reports to satisfy your data governance and regulatory requirements.

Let’s see how this works in practice.

Using AWS Backup Support for VMware

There are three steps to back up VMware virtual machines (VMs) with AWS Backup:

- Create a gateway to connect AWS Backup to your hypervisor.

- Connect to your hypervisor through the gateway.

- Assign virtual machines managed by your hypervisor to a backup plan.

On the left pane of the AWS Backup console, there is a new External resources section. There, I choose Gateways and then Create gateway. This AWS Backup gateway helps with discovery of the on-premises VMware environment and acts as a cloud gateway to send and receive data.

I download the Open Virtualization Format (OVF) file of the AWS Backup gateway and follow the instructions to deploy the gateway using the VMware vSphere client. I am using an internal test and development VMware environment for this walkthrough.

After deploying the gateway in my VMware environment, I come back to the AWS Backup console. I write a name for the gateway (for simplicity, I use the same name of the gateway VM) and the IP address of the gateway VM. Optionally, I can add tags to help organize and track my setup. I go on and create the gateway.

Now, I choose Add hypervisor. I write a name for the hypervisor and the IP address of the VMware vCenter server host.

I enter the username and password of a service account that I created for AWS Backup on the Active Directory domain. The username should include the domain (for example, username@domain). Then, I choose the encryption key to protect the service account credentials. If I don’t choose my own AWS Key Management Service (KMS) key, AWS Backup encrypts the username and password using a key that AWS owns and manages.

I select the gateway to connect to the hypervisor and choose Test gateway connection. This test helps ensure that the gateway can communicate with the hypervisor before I complete the configuration. Optionally, I can add tags to help organize and track my setup. I go on and add the hypervisor.

After a few minutes, the hypervisor is online, and I see the VMs managed by vCenter in the AWS Backup console. I can now use these virtual machines as resources in my backup plans in the same way as the other AWS compute, storage, and database resources supported by AWS Backup.

I create a new backup plan and start with a template. The rules of the template enforce daily backups with five weeks of retention and monthly backups with one year of retention. I can customize these rules based on my requirements.

Then, I choose to assign resources to the backup plan, and I select three VMs.

If you need, you can create an on-demand backup in the Protected resources section of the console. For example, here I am starting the on-demand backup for one of the VMs.

When a backup is complete, VMs are added to the list of the protected resources, and I can initiate a restore.

I select the backup and choose Restore. Then, I enter the restore location, which can be the same VMware environment I used for the backup or another (for example, on VMware Cloud on AWS). Below, I specify name, path, compute resource name, and datastore to use for the restore. Then, I choose Restore backup.

I monitor the status of my backup and restore jobs from the AWS Backup console. To monitor backup and restore metrics over a period of time, I can use Amazon CloudWatch metrics, logs, and alarms. I can also send events to Amazon EventBridge to receive notifications once a job completes or fails.

Availability and Pricing

AWS Backup support for VMware is available in the US East (N. Virginia, Ohio), US West (N. California, Oregon), GovCloud (US-East, US-West), Canada (Central), Europe (Frankfurt, Ireland, London, Milan, Paris, Stockholm), South America (São Paulo), Asia Pacific (Hong Kong, Mumbai, Seoul, Singapore, Sydney, Tokyo, Osaka), Middle East (Bahrain), and Africa (Cape Town) Regions. Please see the AWS Regional Services List for more information.

AWS Backup supports VMware ESXi 6.7.x and 7.0.x VMs running on NFS, VMFS, and VSAN data stores on premises and in VMware Cloud on AWS. In addition, AWS Backup supports both SCSI Hot-Add and Network Block Device (NBD) transport modes for copying data from source VMs to AWS.

With AWS Backup support for VMware, you pay using the same dimensions that AWS Backup uses today: backup storage, restore, and cross-region data transfer. For more information, see the AWS Backup pricing page.

Your VM backups are stored in a backup vault. All backups stored and managed by AWS Backup are replicated to 3 Availability Zones (AZs) in the Region and designed for 99.999999999 percent (11 9s) durability and 99.99 percent (4 9s) of service availability.

AWS Backup supports first full, then incremental-forever, backups of VMs that you can create on-demand or via a schedule configured in your backup plan. AWS Backup always does full restores even though backups are stored as incremental, enabling you to benefit from storage efficiency cost savings while easily performing restores.

Centrally protect your VMware environments and your AWS compute, storage, and database resources with AWS Backup.

— Danilo

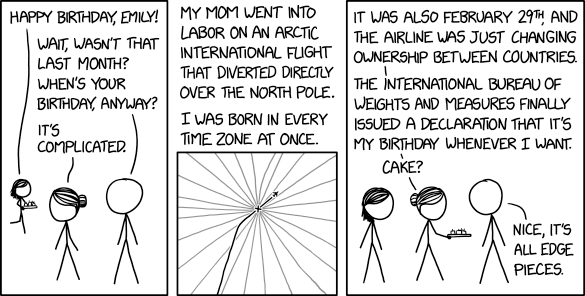

Edge Cake

Post Syndicated from original https://xkcd.com/2549/

New – Amazon FSx for OpenZFS

Post Syndicated from Jeff Barr original https://aws.amazon.com/blogs/aws/new-amazon-fsx-for-openzfs/

Last month, my colleague Bill Vass said that we are “slowly adding additional file systems” to Amazon FSx. I’d question Bill’s definition of slow, given that his team has launched Amazon FSx for Lustre, Amazon FSx for Windows File Server, and Amazon FSx for NetApp ONTAP in less than three years.

Amazon FSx for OpenZFS

Today I am happy to announce Amazon FSx for OpenZFS, the newest addition to the Amazon FSx family. Just like the other members of the family, this new addition lets you use a popular file system without having to deal with hardware provisioning, software configuration, patching, backups, and the like. You can create a file system in minutes and begin to enjoy the benefits of OpenZFS right away: transparent compression, continuous integrity verification, snapshots, and copy-on-write. Even better, you get all of these benefits without having to develop the specialized expertise that has traditionally been needed to set up and administer OpenZFS.

FSx for OpenZFS is powered by the AWS Graviton family processors and AWS SRD (Scalable Reliable Datagram) Networking, and can deliver up to 1 million IOPS with latencies of 100-200 microseconds, along with up to 4 GB/second of uncompressed throughput, up to 12 GB/second of compressed throughput, and up to 12.5 GB/second throughput to cached data. FSx for OpenZFS supports the OpenZFS Adaptive Replacement Cache (ARC) and uses memory in the file server to provide faster performance. It also supports advanced NFS performance features such as session trunking and NFS delegation, allowing you to get very high throughput and IOPS from a single client, while still safely caching frequently accessed data on the client side.

FSx for OpenZFS volumes can be accessed from cloud or on-premises Linux, MacOS, and Windows clients via industry-standard NFS protocols (v3, v4, v4.1, and v4.2). Cloud clients can be Amazon Elastic Compute Cloud (Amazon EC2) instances, Amazon Elastic Container Service (Amazon ECS) and Amazon Elastic Kubernetes Service (EKS) clusters, Amazon WorkSpaces virtual desktops, and VMware Cloud on AWS. Your data is stored in encrypted form and replicated within an AWS Availability Zone, with components replaced automatically and transparently as necessary.

You can use FSx for OpenZFS to address your highly demanding machine learning, EDA (Electronic Design Automation), media processing, financial analytics, code repository, DevOps, and web content management workloads. With performance that is close to local storage, FSx for OpenZFS is great for these and other latency-sensitive workloads that manipulate and sequentially access many small files. Finally, because you can create, mount, use, and delete file systems as needed, you can now use OpenZFS in a dynamic, agile fashion.

Using Amazon FSx for OpenZFS

I can create an OpenZFS file system using the AWS Management Console, CLI, APIs, or AWS CloudFormation. From the FSx Console I click Create file system and choose Amazon FSx for OpenZFS:

I can choose Quick create (and use recommended best-practice configurations), or Standard create (and set all of the configuration options myself). I’ll take the easy route and use the recommended best practices to get started. I enter a name (Jeff-OpenZFS) select the amount of SSD storage that I need, choose a VPC & subnet, and click Next:

The console shows me that I can edit many of the attributes of my file system later if necessary. I review the settings and click Create file system:

My file system is ready within a minute or two, and I click Attach to get the proper commands to mount it to my client:

To be more precise, I am mounting the root volume (/fsx) of my file system. Once it is mounted, I can use it as I would any other file system. After I add some files to it, I can use the Action menu in the console to create a backup:

I can restore the backup to a new file system:

As I noted earlier, each file system can deliver up to 4 gigabytes per second of throughput for uncompressed data. I can look at total throughput and other metrics in the console:

I can set throughput capacity of each volume when I create it, and then change it later if necessary:

Changes take effect within minutes. The file system remains active and mounted while the change is put into effect, but some operations may pause momentarily:

A single OpenZFS file system can contain multiple volumes, each with separate quotas (overall volume storage, per-user storage, and per-group storage) and compression settings. When I use the quick create option a root volume named fsx is created for me; I can click Create volume to create more volumes at any time:

The new volume exists within the namespace hierarchy of the parent, and can be mounted separately or accessed from the parent.

Things to Know

Here are a couple of quick facts and to wrap up this post:

Pricing – Pricing is based on the provisioned storage capacity, throughput, and IOPS.

Regions – Amazon FSx for OpenZFS is available in the US East (N. Virginia), US East (Ohio), US West (Oregon), Europe (Ireland), Canada (Central), Asia Pacific (Tokyo), and Europe (Frankfurt) Regions.

In the Works – We are working on additional features including storage scaling, IOPS scaling, a high availability option and another storage class.

Now Available

Amazon FSx for OpenZFS is available now and you can start using it today!

— Jeff;

22 – IVR Design – FreePBX 101 v15

Post Syndicated from Crosstalk Solutions original https://www.youtube.com/watch?v=wx2iza8xx8o

Hubble and Webb Space Telescopes, the story so far…

Post Syndicated from Curious Droid original https://www.youtube.com/watch?v=TjW4qKn0aNo