Post Syndicated from original https://xkcd.com/2544/

![Was this your car? [looping 'image loading' animation] Was this your car? [looping 'image loading' animation]](https://imgs.xkcd.com/comics/heart_stopping_texts.png)

Post Syndicated from Rick Armstrong original https://aws.amazon.com/blogs/compute/setting-up-ec2-mac-instances-as-shared-remote-development-environments/

This post is written by: Michael Meidlinger, Solutions Architect

In December 2020, we announced a macOS-based Amazon Elastic Compute Cloud (Amazon EC2) instance. Amazon EC2 Mac instances let developers build, test, and package their applications for every Apple platform, including macOS, iOS, iPadOS, tvOS, and watchOS. Customers have been using these instances to automate their build pipelines for the Apple platform and to integrate them with build tools such as Jenkins and GitLab.

Aside from build automation, more and more customers are looking to utilize EC2 Mac instances for interactive development. Several advantages exist when utilizing remote development environments over installations on local developer machines:

On top of that, this approach promotes cost efficiency, as it enables EC2 Mac instances to be shared and used by multiple developers concurrently. This is particularly relevant for EC2 Mac instances, as they run on dedicated Mac mini hosts with a minimum tenancy of 24 hours. Therefore, handing out full instances to individual developers is often impractical.

Interactive remote development environments are also facilitated by code editors, such as VSCode, which provide a modern GUI based experience on the developer’s local machine while having source code files and terminal sessions for testing and debugging in the remote environment context.

This post will demonstrate how EC2 Mac instances can be set up as remote development servers that multiple developers can access concurrently in order to compile and run their code interactively via command line access. The proposed setup features centralized user management based on AWS Directory Service and shared network storage utilizing Amazon Elastic File System (Amazon EFS), thereby decoupling those aspects from the development server instances. As a result, new instances can easily be added when needed, and existing instances can be updated to the newest OS and development toolchain version without affecting developer workflow.

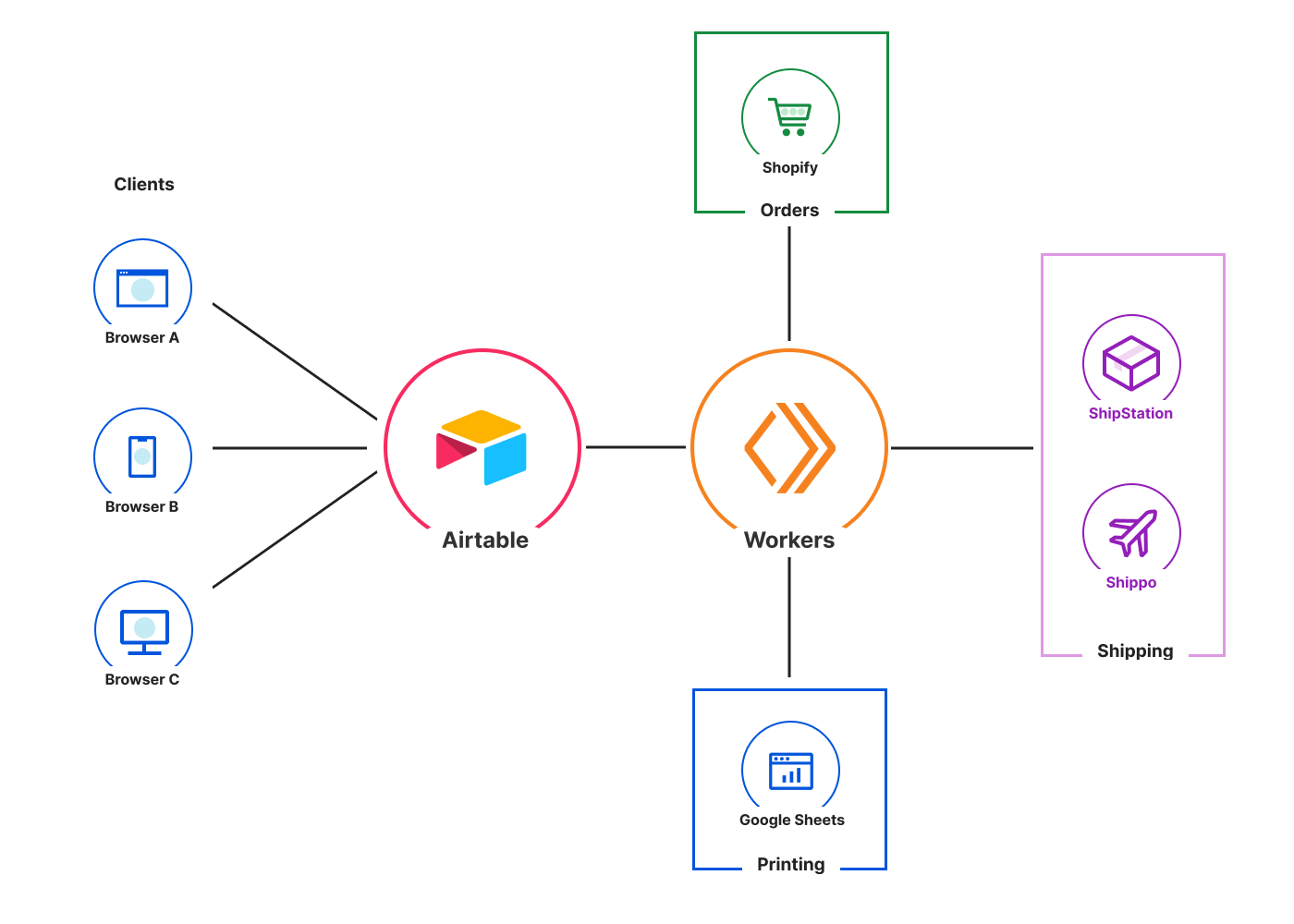

The following diagram shows the architecture rolled out in the context of this blog.

The compute layer consists of two EC2 Mac instances running in isolated private subnets in different Availability Zones. In a production setup, these instances are provisioned with every necessary tool and software needed by developers to build and test their code for Apple platforms. Provisioning can be accomplished by creating custom Amazon Machine Images (AMIs) for the EC2 Mac instances or by bootstrapping them with setup scripts. This post utilizes Amazon-provided AMIs with macOS Big Sur without custom software. Once set up, developers gain command line access to the instances via SSH and utilize them as remote development environments.

The architecture promotes the decoupling of compute and storage so that EC2 Mac instances can be updated with new OS and/or software versions without affecting the developer experience or data. Home directories reside on a highly available Amazon EFS file system, and they can be consistently accessed from all EC2 Mac instances. From a user perspective, any two EC2 Mac instances are alike, in that the user experiences the same configuration and environment (e.g., shell configurations such as .zshrc, VSCode remote extensions .vscode-server, or other tools and configurations installed within the user’s home directory). The file system is exposed to the private subnets via redundant mount target ENIs and persistently mounted on the Mac instances.

For centralized user and access management, all instances in the architecture are part of a common Active Directory domain based on AWS Managed Microsoft AD. This is exposed via redundant ENIs to the private subnets containing the Mac instances.

To manage and configure the Active Directory domain, a Windows Instance (MGMT01) is deployed. For this post, we will connect to this instance for setting up Active Directory users. Note: other than that, this instance is not required for operating the solution, and it can be shut down both for reasons of cost efficiency and security.

The access layer constitutes the entry and exit point of the setup. For this post, it is comprised of an internet-facing bastion host connecting authorized Active Directory users to the Mac instances, as well as redundant NAT gateways providing outbound internet connectivity.

Depending on customer requirements, the access layer can be realized in various ways. For example, it can provide access to customer on-premises networks by using AWS Direct Connect or AWS Virtual Private Network (AWS VPN), or to services in different Virtual Private Cloud (VPC) networks by using AWS PrivateLink. This means that you can integrate your Mac development environment with pre-existing development-related services, such as source code and software repositories or build and test services.

We utilize AWS CloudFormation to automatically deploy the entire setup in the preceding description. All templates and code can be obtained from the blog’s GitHub repository. To complete the setup, you need

Warning: Deploying this example will incur AWS service charges of at least $50 due to the fact that EC2 Mac instances can only be released 24 hours after allocation.

In this section, we provide a step-by-step guide for deploying the solution. We will mostly rely on AWS CLI and shell scripts provided along with the CloudFormation templates and use the AWS Management Console for checking and verification only.

1. Get the Code: Obtain the CloudFormation templates and all relevant scripts and assets via git:

2. Create an Amazon Simple Storage Service (Amazon S3) deployment bucket and upload assets for deployment: CloudFormation templates and other assets are uploaded to this bucket in order to deploy them. To achieve this, run the upload.sh script in the repository root, accepting the default bucket configuration as suggested by the script:

3. Create an SSH Keypair for admin Access: To access the instances deployed by CloudFormation, create an SSH keypair with name mac-admin, and then import it with EC2:

4. Create CloudFormation Parameters file: Initialize the JSON file by copying the provided template parameters-template.json:

Substitute the following placeholders:

a. <YourS3BucketName>: The unique name of the S3 bucket you created in step 2.

b. <YourSecurePassword>: Active Directory domain admin password. This must be 8-32 characters long and can contain numbers, letters and symbols.

c. <YourMacOSAmiID>: We used the latest macOS Big Sur AMI at the time of writing with AMI ID ami-0c84d9da210c1110b in the us-east-2 Region. You can obtain other AMI IDs for your desired AWS Region and macOS version from the console.

d. <MacHost1ID> and <MacHost2ID>: See step 5 for how to allocate Dedicated Hosts and obtain the host IDs.

5. Allocate Dedicated Hosts: EC2 Mac Instances run on Dedicated Hosts. Therefore, prior to being able to deploy instances, Dedicated Hosts must be allocated. We utilize us-east-2 as the target Region, and we allocate the hosts in the Availability Zones us-east-2b and us-east-2c:

Substitute the host IDs returned from those commands in the parameters.json file as instructed in step 4.

6. Deploy the CloudFormation Stack: To deploy the stack with the name ec2-mac-remote-dev-env, run the provided sh script as follows:

Stack deployment can take up to 1.5 hours because the AWS Managed Microsoft AD directory, the Windows MGMT01 instance, and the Mac instances are created sequentially. Check the CloudFormation Console to see whether the stack has finished deploying. In the console, under Stacks, select the stack name from the preceding code (ec2-mac-remote-dev-env), and then navigate to the Outputs tab. Once deployment has finished, this tab displays the public DNS name of the bastion host, as well as the private IPs of the Mac instances. You need this information in the upcoming section in order to connect and test your setup.

Now you can log in and explore the setup. We will start out by creating a developer account within Active Directory and configure an SSH key in order for it to grant access.

First, we create a new SSH key for the developer Active Directory user. Utilize OpenSSH CLI,

Furthermore, utilizing the connection information from the CloudFormation output, set up your ~/.ssh/config to contain the following entries, where $BASTION_HOST_PUBLIC_DNS, $MAC1_PRIVATE_IP and $MAC2_PRIVATE_IP must be replaced accordingly:

Now log in to the bastion host, forwarding port 3389 to the MGMT01 host in order to gain Remote Desktop access to the Windows management instance:

While this connection is open, launch your Remote Desktop client and connect to localhost with the username admin and the password specified earlier in the CloudFormation parameters. Then create the developer user as follows:

1. Once connected to the instance, open Control Panel>System and Security>Administrative Tools and click Active Directory Users and Computers.

2. In the window that appears, enable View>Advanced Features.

3. Select Active Directory Users and Computers>example.com>example>Users, and click Create a new User. If you haven’t changed the Active Directory domain name explicitly in CloudFormation, the default domain name is example.com with the corresponding NetBIOS name example.

4. In the resulting wizard, set the Full name and User logon name fields to developer, and proceed to set a password to create the user.

5. Right-click the developer user, and select Properties>Attribute Editor.

6. Search for the altSecurityIdentities property, copy-paste the developer public SSH key (contained in ~/.ssh/mac-developer.pub) into the Value to add field, click Add, and then click OK.

7. In the Properties window, save your changes by clicking Apply and OK.

The following figure illustrates the process just described:

Now that the developer account is set up, you can connect to either of the two EC2 Mac instances from your local machine with the Active Directory account:

When you connect via the preceding command, your local machine first establishes an SSH connection to the bastion host, which authorizes the request against the key we just stored in Active Directory. Upon success, the bastion host forwards the connection to the macos1 instance, which again authorizes against Active Directory and launches a terminal session upon success. The following figure illustrates the login with the macos1 instance, showcasing both the integration with AD (EXAMPLE\Domain Users group membership) as well as with the EFS share, which is mounted at /opt/nfsshare and symlinked to the developer’s home directory.

Likewise, you can create folders and files in the developer’s home directory such as the test-project folder depicted in the screenshot.

Lastly, let’s utilize VS Code’s remote plugin and connect to the other macos2 instance. Select the Remote Explorer on the left-hand pane and click to open the macos2 host as shown in the following screenshot:

A new window will be opened with the context of the remote server, as shown in the next figure. As you can see, we have access to the same files seen previously on the macos1 host.

From the repository root, run the provided destroy.sh script in order to destroy all resources created by CloudFormation, specifying the stack name as input parameter:

Check the CloudFormation Console to confirm that the stack and its resources are properly deleted.

Lastly, in the EC2 Console, release the dedicated Mac Hosts that you allocated in the beginning. Note that this is only possible 24 hours after allocation.

This post has shown how EC2 Mac instances can be set up as remote development environments, thereby allowing developers to create software for Apple platforms regardless of their local hardware and software setup. Aside from increased flexibility and maintainability, this setup also saves cost because multiple developers can work interactively with the same EC2 Mac instance. We have rolled out an architecture that integrates EC2 Mac instances with AWS Directory Service for centralized user and access management as well as Amazon EFS to store developer home directories in a durable and highly available manner. This has resulted in an architecture where instances can easily be added, removed, or updated without affecting developer workflow. Now, irrespective of your client machine, you are all set to start coding with your local editor while leveraging EC2 Mac instances in the AWS Cloud to provide you with a macOS environment! To get started and learn more about EC2 Mac instances, please visit the product page.

Post Syndicated from João Bozelli original https://aws.amazon.com/blogs/architecture/field-notes-building-on-demand-disaster-recovery-for-ibm-db2-on-aws/

With the increased adoption of critical applications running in the cloud, customers often find themselves revisiting traditional strategies that were adopted for on-premises workloads. When it comes to IBM DB2, one of the first decisions is which backup and restore method to use.

In this blog post, we will show you how IT architects, database administrators, and cloud administrators can use AWS services such as Amazon Machine Images (AMIs) and Amazon Simple Storage Service (Amazon S3) to build on-demand disaster recovery. This is useful for organizations who are flexible in their Recovery Time Objective (RTO) to reduce cost by only provisioning the target environment when needed.

Figure 1. Architecture of AWS services used in this blog post

Figure 1 shows the Amazon Elastic Compute Cloud (Amazon EC2) instance running the DB2 database in the primary Region (São Paulo, in this example) and performing backups to Amazon S3 by a script initiated by AWS Systems Manager. The backups in Amazon S3 are then replicated to the secondary Region (N. Virginia, in this example) by the S3 Cross-Region Replication (CRR) feature of Amazon S3.

AWS Backup automates the AMI backups and, in a similar fashion to the database backups, copies the AMIs to the secondary Region as well. You can further enhance the backup mechanism by activating monitoring through Amazon CloudWatch and using Amazon Simple Notification Service (Amazon SNS) to send out alerts in the event of failures. The architectural considerations will be outlined in detail.

Database backups are stored in Amazon S3, which replicates the backups inside a Region by default and can be replicated to another Region using CRR. Since version 11.1, IBM DB2 running on Linux natively supports data backups to Amazon S3. To create this architecture, follow these steps:

cd /db2/db2<sid>/

mkdir .keystore

gsk8capicmd_64 -keydb -create -db "/db2/db2<sid>/.keystore/db6-s3.p12" -pw "<password>" -type pkcs12 -stash

db2 "update dbm cfg using keystore_location /db2/db2<sid>/.keystore/db6-s3.p12 keystore_type pkcs12"

db2 get dbm cfg |grep -i KEYSTORE

Keystore type (KEYSTORE_TYPE) = PKCS12

Keystore location (KEYSTORE_LOCATION) = /db2/db2<sid>/.keystore/db6-s3.p12

Figure 2. Example bucket for storing backups

In this example, the primary folder for this database is SBX. The folder data will store the data backups, the folder config will store the configuration parameters, the folder keystore will store the backup of the keystore, and the folder logs will store the database logs.

db2 "catalog storage access alias <alias_name> vendor S3 server <S3 endpoint> user '<access_key>' password '<secret_access_key>' container '<bucket_name>'"

db2set DB2_OBJECT_STORAGE_LOCAL_STAGING_PATH=/backup/staging/data

db2set |grep -i STAGING

DB2_OBJECT_STORAGE_LOCAL_STAGING_PATH=/backup/staging/data

Note: make sure that the target is DB2REMOTE as follows:

db2 BACKUP DATABASE <instance> TO DB2REMOTE://<alias>//<path>/<additional path> compress without prompting

While the backup is running, you will see data being stored in the staging directory (for this example: /backup/staging/data), and then uploaded to Amazon S3.

The backup script can be integrated with AWS Systems Manager maintenance windows to run on schedule to allow control and visibility. When combined with Amazon SNS, you can send out notifications in case of success, failures, or both.

There are different options when it comes to storing the database logs into Amazon S3. In this example, we’re using a very simple script initiated by AWS Systems Manager to sync the logs from the staging disk to Amazon S3. This, combined with CRR, increases the durability of the backup by replicating the logs to another Region of your choice. The same backup method for the logs is applied to the IBM DB2 configuration files (parameters and variables) and the keystore. Figure 3 shows the CRR configured on the target bucket, which is then automatically replicating the data to a secondary Region (us-east-1).

Figure 3. Example buckets for IBM DB2 backup and disaster recovery, respectively

Figure 4. Amazon S3 Replication rules configured from sa-east-1 to us-east-1 (São Paulo to N. Virginia)

Figure 5. IBM DB2 logs backed up in São Paulo (sa-east-1) and replicated to N. Virginia (us-east-1)

For this use case, we have defined a lifecycle policy that keeps the objects (full and log backups) in Amazon S3 Standard for 30 days and then moves them to Amazon S3 Standard-IA. After 30 days in Amazon S3 Standard-IA, the objects are deleted. When used in the context of a database, this allows you to automatically manage the lifecycle of your backups. If you have compliance needs to store specific backups with longer retention times, you can back up to a separate folder (prefix) with a different lifecycle rule.
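To make the retention timeline concrete, here is a minimal Python sketch of a lifecycle configuration along these lines. It assumes the policy means 30 days in Standard followed by 30 days in Standard-IA, so objects expire 60 days after creation. The function name, the rule ID, and the use of the SBX/ prefix are illustrative choices, not taken from the original setup.

```python
import json


def build_backup_lifecycle(prefix: str,
                           days_in_standard: int = 30,
                           days_in_ia: int = 30,
                           rule_id: str = "db2-backup-retention") -> dict:
    """Build a lifecycle configuration dict for backup objects under `prefix`.

    The rule ID and defaults are hypothetical; adjust them to your own policy.
    """
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                # Move objects to Standard-IA once the Standard period ends.
                "Transitions": [
                    {"Days": days_in_standard, "StorageClass": "STANDARD_IA"}
                ],
                # Expiration is counted from object creation, so it is the
                # sum of the Standard period and the Standard-IA period.
                "Expiration": {"Days": days_in_standard + days_in_ia},
            }
        ]
    }


if __name__ == "__main__":
    print(json.dumps(build_backup_lifecycle("SBX/"), indent=2))
```

A dictionary of this shape could be passed as the `LifecycleConfiguration` argument of boto3's `put_bucket_lifecycle_configuration`. A longer-retention compliance prefix would simply get a second rule with different day counts.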

Figure 6. Amazon S3 Lifecycle policy configured for buckets in São Paulo (sa-east-1) and N. Virginia (us-east-1)

Up to this point, this blog post has covered how you can manage the backups for a better Recovery Point Objective (RPO). However, let’s consider what happens in case of a disaster or if you have issues with the server running the IBM DB2 database. The Recovery Time Objective (RTO) will be higher because you will have to launch an EC2 instance, prepare the server, install the IBM DB2 database, and restore the full data and log backups.

To reduce your RTO, we recommend using automated AMI backups for your EC2 instance. AWS Backup helps you generate automated AMIs based on tags and resource IDs. AWS Backup can ship the AMI backup generated from your instance to another Region, for a multi-Region disaster recovery strategy.

In this example, we have created an AWS Backup plan to run twice a day and to ship a copy of the AMI from São Paulo (sa-east-1) to N. Virginia (us-east-1).

Figure 7. Automated AMIs copied from São Paulo (sa-east-1) to N. Virginia (us-east-1) by AWS Backup

It is important to discuss the factors that impact overall backup and restore performance, and ultimately the RTO.

We recommend using VPC endpoints to ensure that the traffic from your EC2 instance to Amazon S3 does not traverse the internet, and to provide improved throughput for data upload. Another important factor is the type of EBS volumes used for storing the IBM DB2 data files. In this example, the 170 GB database was stored on a GP2 volume that was not striped with Logical Volume Manager (LVM). Because the degree of parallelism (number of tablespaces read in parallel by the IBM DB2 backup process) can increase CPU usage, caution is warranted when running online backups so as not to cause too much overhead on your database server. When considering optimization for EBS volumes, note the maximum throughput and IOPS that can be reached by instance type.

A test was run using AWS Command Line Interface to sync 100 GB of logs (100 files of 1 GB) from Amazon S3 to the newly created instance. It took 16 minutes. The amount of logs will vary depending on the backup schedule implemented. The Amazon S3 costs will vary depending on the lifecycle policies implemented. For further details, refer to Amazon S3 pricing.

In our tests, the backup time for a 170 GB database took 38 minutes, with a restore time of 14 minutes.

The restore time can vary depending on the backup size, the amount of logs to roll forward, and disk type (mentioned previously in the Performance considerations section).

With the results of this test, the RTO was the restore time plus the time taken to launch the new server from the AMI backup.

| Disk Type | DB Size | Instance Type (Backup) | Parallel Channels (Backup) | Backup Time | Instance Type (Restore) | Parallel Channels (Restore) | Restore Time |
| --- | --- | --- | --- | --- | --- | --- | --- |
| GP2 | 170 GB | m5.4xlarge | 12 | 38 Minutes | m5.4xlarge | 12 | 14 Minutes |
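As a rough worked example, the following Python sketch turns the measured times into average throughput figures and an RTO estimate. It assumes 1 GB = 1,000 MB, and the 10-minute instance launch time is a placeholder, not a measured value from these tests. The function names are illustrative.

```python
def throughput_mb_per_s(size_gb: float, minutes: float) -> float:
    """Average throughput in MB/s, assuming 1 GB = 1,000 MB."""
    return size_gb * 1000 / (minutes * 60)


def estimated_rto_minutes(launch_minutes: float, restore_minutes: float) -> float:
    """RTO as described above: instance launch time plus restore time."""
    return launch_minutes + restore_minutes


if __name__ == "__main__":
    # Measured values from the tests described in this post.
    print(f"backup:   {throughput_mb_per_s(170, 38):.1f} MB/s")
    print(f"restore:  {throughput_mb_per_s(170, 14):.1f} MB/s")
    print(f"log sync: {throughput_mb_per_s(100, 16):.1f} MB/s")

    # Placeholder launch time (assumption); replace with your own measurement.
    assumed_launch_minutes = 10
    print(f"estimated RTO: {estimated_rto_minutes(assumed_launch_minutes, 14)} minutes")
```

Comparing these averages with the throughput limits of your instance type and EBS volume gives a first indication of where the bottleneck sits.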

To summarize, in this blog post we described how to configure IBM DB2 backups to Amazon S3, to build an on-demand strategy for backup and disaster recovery. By following these architecture design principles, you will continue to develop resilient business continuity. Let us know if you have any comments or questions. We value your feedback!

Post Syndicated from The Atlantic original https://www.youtube.com/watch?v=VXDuZFa-uJU

Post Syndicated from Crosstalk Solutions original https://www.youtube.com/watch?v=WLHaDTOZg6Q

Post Syndicated from Mahlon Duke original https://aws.amazon.com/blogs/big-data/accelo-uses-amazon-quicksight-to-accelerate-time-to-value-in-delivering-embedded-analytics-to-professional-services-businesses/

This is a guest post by Accelo. In their own words, “Accelo is the leading cloud-based platform for managing client work, from prospect to payment, for professional services companies. Each month, tens of thousands of Accelo users across 43 countries create more than 3 million activities, log 1.2 million hours of work, and generate over $140 million in invoices.”

Imagine driving a car with a blacked-out windshield. It sounds terrifying, but it’s the way things are for most small businesses. While they look into the rear-view mirror to see where they’ve been, they lack visibility into what’s ahead of them. The lack of real-time data and reliable forecasts leaves critical decisions like investment, hiring, and resourcing to “gut feel.” An industry survey conducted by Accelo shows 67% of senior leaders don’t have visibility into team utilization, and 54% of them can’t track client project budgets, much less profitability.

Professional services businesses generate most of their revenue directly from billable work they do for clients every day. Because no two clients, projects, or team members are the same, real-time and actionable insight is paramount to ensure happy clients and a successful, profitable business. A big part of the problem is that many businesses are trying to manage their client work with a cocktail of different, disconnected systems. No wonder KPMG found that 56% of CEOs have little confidence in the integrity of the data they’re using for decision-making.

Accelo’s mission is to solve this problem by giving businesses an integrated system to manage all their client work, from prospect to payment. By combining what have historically been disparate parts of the business—CRM, sales, project management, time tracking, client support, and billing—Accelo becomes the single source of truth for your business’s most important data.

Even with a trustworthy, automated and integrated system, decision makers still need to harness the data so they see what’s in front of them and can anticipate the future. Accelo devoted all our resources and expertise to building a complete client work management platform, made up of essential products to achieve the greatest profitability. We recognized that in order to make the platform most effective, users needed to be empowered with the strongest analytics and actionable insights for strategic decision making. This drove us to seek out a leading BI solution that could seamlessly integrate with our platform and create the greatest user experience. Our objective was to ensure that Accelo users had access to the best BI tool without requiring them to spend more of their valuable time learning yet another tool, not to mention another login. We needed a powerful embedded analytics solution.

We evaluated dozens of leading BI and embedded reporting solutions, and Amazon QuickSight was the clear winner. In this post, we discuss why, and how QuickSight accelerated our time to value in delivering embedded analytics to our users.

Even today, many organizations track their work manually. They extract data from different systems that don’t talk to each other, and manually manipulate it in spreadsheets, which wastes time and introduces the kinds of data integrity problems that cause CEOs to lose their confidence. As companies grow, these manual and error-prone approaches don’t scale with them, and the sheer level of effort required to keep data up to date can easily result in leaders just giving up.

With this in mind, Accelo’s embedded analytics solution was built from the ground up to grow with us and with our users. As a part of the AWS family, QuickSight eliminated one of the biggest hurdles for embedded analytics through its SPICE storage system. SPICE enables us to create unlimited, purpose-built datasets that are hosted in Amazon’s dynamic storage infrastructure. These smaller datasets load more quickly than your typical monolithic database, and can be updated as often as we need, all at an affordable per-gigabyte rate. This allows us to provide real-time analytics to our users swiftly, accurately, and economically.

“Being able to rely on Accelo to tell us everything about our projects saves us a lot of time, instead of having to go in and download a lot of information to create a spreadsheet to do any kind of analysis,” says Katherine Jonelis, Director of Operations, MHA Consulting. “My boss loves the dashboards. He loves just being able to look at that and instantly know, ‘Here’s where we are.’”

In addition to powering analytics for our users, QuickSight also helps our internal teams identify and track vital KPIs, which historically has been done via third-party apps. These metrics can cover anything, from calculating the effective billable rate across hundreds of projects and thousands of time entries, to determining how much time is left for the team to finish their tasks profitably and on budget. Because the reports are embedded directly in Accelo, which already houses all the data, it was easy for our team to adapt to the new reports, and they required minimal training.

One of the most important factors in our evaluation of BI platforms was the time to value. We asked ourselves two questions: How long would it take to have the solution up and running, and how long would it take for our users to see value from it?

While there are plenty of powerful third-party, integrated BI products out there, they often require a complete integration, adding authentication and configuration on top of basic data extraction and transformations. This makes them an unattractive option, especially in an increasingly security-focused landscape. Meanwhile, most of the embedded products we evaluated required a time to launch that numbered in the months—spending time on infrastructure, data sources, and more. And that’s without considering the infrastructure and engineering costs of ongoing maintenance. One key benefit that propels QuickSight above other products is that it allowed us to reduce that setup time from months to weeks, and completely eliminated any configuration work for the end-user. This is possible thanks to built-in tools like native connections for AWS data sources, row-level security for datasets, and a simple user provisioning process.

Developer hours can be expensive, and are always in high demand. Even in a responsive and agile development environment like Accelo’s, development work still requires lead time before it can be scheduled and completed. Engineering resources are also finite—if they’re working on one thing today, something else is probably going into the backlog. QuickSight enables us to eliminate this bottleneck by shifting the task of managing these analytics from developers to data analysts. We used QuickSight to easily create datasets and reports, and placed a simple API call to embed them for our clients so they can start using them instantly. Now we’re able to quickly respond to our users’ ever-changing needs without requiring developers. That further improves the speed and quality of our data by using both the analysts’ general expertise with data visualization and their unique knowledge of Accelo’s schema. Today, all of Accelo’s reports are created and deployed through QuickSight. We’re able to accommodate dozens of custom requests each month for improvements—major and minor—without ever needing to involve a developer.

Implementation and training were also key considerations during our evaluation. Our customers are busy running their businesses. The last thing they want is to get trained on a new tool, not to mention the typically high cost associated with implementation. As a turnkey solution that requires no configuration and minimal education, QuickSight was the clear winner.

It’s no secret that employees dislike timesheets and would rather spend time working with their clients. For many services companies, logged time is how they bill their clients and get paid. Therefore, it’s vital that employees log all their hours. To make that process as painless as possible, Accelo offers several tools that minimize the amount of work it takes an employee to log their time. For example, the Auto Scheduling tool automatically builds out employees’ schedules based on the work they’re assigned, and logs their time with a single click. Inevitably, however, someone always forgets to log their time, leading to lost revenue.

To address this issue, Accelo built the Missing Time report, which pulls hundreds of thousands of time entries, complex work schedules, and even holiday and PTO time together to offer answers to these questions: Who hasn’t logged their time? How much time is missing? And from what time period?

Every business needs to know whether they’re profitable. Professional services businesses are unique in that profitability is tied directly to their individual clients and the relationships with them. Some clients may generate high revenues but require so much extra maintenance that they become unprofitable. On the other hand, low-profile clients that don’t require a lot of attention can significantly contribute to the business’s bottom line. By having all the client data under one roof, these centralized and embedded reports can provide visibility into your budgets, time entries, work status, and team utilization. This makes it possible to make real-time, data-driven decisions without having to spend all day getting the data.

Clean and holistic data fosters deep insights that can lead to higher margins and profits. We’re excited to partner with AWS and QuickSight to provide professional services businesses with real-time insights into their operations so they can become truly data driven, effortlessly. Learn more about Accelo and Amazon QuickSight Embedded Analytics!

Post Syndicated from Talia Nassi original https://aws.amazon.com/blogs/compute/publishing-messages-in-batch-to-amazon-sns-topics/

This post is written by Heeki Park (Principal Solutions Architect, Serverless Specialist), Marc Pinaud (Senior Product Manager, Amazon SNS), Amir Eldesoky (Software Development Engineer, Amazon SNS), Jack Li (Software Development Engineer, Amazon SNS), and William Nguyen (Software Development Engineer, Amazon SNS).

Today, we are announcing the ability for AWS customers to publish messages in batch to Amazon SNS topics. Until now, you were only able to publish one message to an SNS topic per Publish API request. With the new PublishBatch API, you can send up to 10 messages at a time in a single API request. This reduces cost for API requests by up to 90%, as you need fewer API requests to publish the same number of messages.

Consider a log processing application where you process system logs and have different requirements for downstream processing. For example, you may want to do inference on incoming log data, populate an operational Amazon OpenSearch Service environment, and store log data in an enterprise data lake.

Systems send log data to a standard SNS topic, and Amazon SQS queues and Amazon Kinesis Data Firehose are configured as subscribers. An AWS Lambda function subscribes to the first SQS queue and uses machine learning models to perform inference to detect security incidents or system access anomalies. A Lambda function subscribes to the second SQS queue and emits those log entries to an Amazon OpenSearch Service cluster. The workload uses Kibana dashboards to visualize log data. An Amazon Kinesis Data Firehose delivery stream subscribes to the SNS topic and archives all log data into Amazon S3. This allows data scientists to conduct further investigation and research on those logs.

To do this, the following Java code publishes a set of log messages. In this code, you construct a publish request for a single message to an SNS topic and submit that request via the publish() method:

// tab 1: standard publish example

private static AmazonSNS snsClient;

private static final String MESSAGE_PAYLOAD = " 192.168.1.100 - - [28/Oct/2021:10:27:10 -0500] \"GET /index.html HTTP/1.1\" 200 3395";

PublishRequest request = new PublishRequest()

.withTopicArn(topicArn)

.withMessage(MESSAGE_PAYLOAD);

PublishResult response = snsClient.publish(request);

// tab 2: fifo publish example

private static AmazonSNS snsClient;

private static final String MESSAGE_PAYLOAD = " 192.168.1.100 - - [28/Oct/2021:10:27:10 -0500] \"GET /index.html HTTP/1.1\" 200 3395";

private static final String MESSAGE_FIFO_GROUP = "server1234";

PublishRequest request = new PublishRequest()

.withTopicArn(topicArn)

.withMessage(MESSAGE_PAYLOAD)

.withMessageGroupId(MESSAGE_FIFO_GROUP)

.withMessageDeduplicationId(UUID.randomUUID().toString());

PublishResult response = snsClient.publish(request);

If you extended the example above and had 10 log lines that each needed to be published as a message, you would have to write code to construct 10 publish requests, and subsequently submit each of those requests via the publish() method.

With the new ability to publish batch messages, you write the following new code. In the code below, you construct a list of publish entries first, then create a single publish batch request, and subsequently submit that batch request via the new publishBatch() method. In the code below, you use a sample helper method getLoggingPayload(i) to get the appropriate payload for the message, which you can replace with your own business logic.

// tab 1: standard publish example

private static final String MESSAGE_BATCH_ID_PREFIX = "server1234-batch-id-";

List<PublishBatchRequestEntry> entries = IntStream.range(0, 10)

.mapToObj(i -> {

return new PublishBatchRequestEntry()

.withId(MESSAGE_BATCH_ID_PREFIX + i)

.withMessage(getLoggingPayload(i));

})

.collect(Collectors.toList());

PublishBatchRequest request = new PublishBatchRequest()

.withTopicArn(topicArn)

.withPublishBatchRequestEntries(entries);

PublishBatchResult response = snsClient.publishBatch(request);

// tab 2: fifo publish example

private static final String MESSAGE_BATCH_ID_PREFIX = "server1234-batch-id-";

private static final String MESSAGE_FIFO_GROUP = "server1234";

List<PublishBatchRequestEntry> entries = IntStream.range(0, 10)

.mapToObj(i -> {

return new PublishBatchRequestEntry()

.withId(MESSAGE_BATCH_ID_PREFIX + i)

.withMessage(getLoggingPayload(i))

.withMessageGroupId(MESSAGE_FIFO_GROUP)

.withMessageDeduplicationId(UUID.randomUUID().toString());

})

.collect(Collectors.toList());

PublishBatchRequest request = new PublishBatchRequest()

.withTopicArn(topicArn)

.withPublishBatchRequestEntries(entries);

PublishBatchResult response = snsClient.publishBatch(request);

In the list of publish requests, the application must assign a unique batch ID (up to 80 characters) to each publish request within that batch. When the SNS service successfully receives a message, the SNS service assigns a unique message ID and returns that message ID in the response object.
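The batch ID rules described above (unique within the batch, up to 80 characters, at most 10 entries per request) can be checked locally before calling the service. The following is a minimal sketch; the class `BatchIdValidator` and its method are hypothetical helpers for illustration and are not part of the AWS SDK.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical pre-flight check for batch entry IDs (not an AWS SDK class). */
final class BatchIdValidator {
    static final int MAX_ID_LENGTH = 80;  // "up to 80 characters", as stated in the post
    static final int MAX_BATCH_SIZE = 10; // "up to 10 messages" per request

    /** Returns a list of problems found; an empty list means the IDs look valid. */
    static List<String> validate(List<String> ids) {
        List<String> problems = new ArrayList<>();
        if (ids.size() > MAX_BATCH_SIZE) {
            problems.add("batch has " + ids.size() + " entries, limit is " + MAX_BATCH_SIZE);
        }
        Set<String> seen = new HashSet<>();
        for (String id : ids) {
            if (id.isEmpty() || id.length() > MAX_ID_LENGTH) {
                problems.add("batch ID length out of range: " + id.length());
            }
            // Set.add returns false when the ID was already present in this batch.
            if (!seen.add(id)) {
                problems.add("duplicate batch ID: " + id);
            }
        }
        return problems;
    }
}
```

Running a check like this before the request is only a convenience: the service still performs its own validation.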

If publishing to a FIFO topic, the SNS service additionally returns a sequence number in the response. When publishing a batch of messages, the PublishBatchResult object returns a list of response objects for successful and failed messages. If you iterate through the list of response objects for successful messages, you might see the following:

// tab 1: standard publish output

{

"Id": "server1234-batch-id-0",

"MessageId": "fcaef5b3-e9e3-5c9e-b761-ac46c4a779bb",

...

}

// tab 2: fifo publish output

{

"Id": "server1234-batch-id-0",

"MessageId": "fcaef5b3-e9e3-5c9e-b761-ac46c4a779bb",

"SequenceNumber": "10000000000000003000",

...

}

When receiving the message from SNS in the SQS queue, the application reads the following message:

// tab 1: standard publish output

{

"Type" : "Notification",

"MessageId" : "fcaef5b3-e9e3-5c9e-b761-ac46c4a779bb",

"TopicArn" : "arn:aws:sns:us-east-1:112233445566:publishBatchTopic",

"Message" : "payload-0",

"Timestamp" : "2021-10-28T22:58:12.862Z",

"UnsubscribeURL" : "http://sns.us-east-1.amazon.com/?Action=Unsubscribe&SubscriptionArn=arn:aws:sns:us-east-1:112233445566:publishBatchTopic:ff78260a-0953-4b60-9c2c-122ebcb5fc96"

}

// tab 2: fifo publish output

{

"Type" : "Notification",

"MessageId" : "fcaef5b3-e9e3-5c9e-b761-ac46c4a779bb",

"SequenceNumber" : "10000000000000003000",

"TopicArn" : "arn:aws:sns:us-east-1:112233445566:publishBatchTopic",

"Message" : "payload-0",

"Timestamp" : "2021-10-28T22:58:12.862Z",

"UnsubscribeURL" : "http://sns.us-east-1.amazon.com/?Action=Unsubscribe&SubscriptionArn=arn:aws:sns:us-east-1:112233445566:publishBatchTopic.fifo:ff78260a-0953-4b60-9c2c-122ebcb5fc96"

}

In the standard publish example, the MessageId of fcaef5b3-e9e3-5c9e-b761-ac46c4a779bb is propagated down to the message in SQS. In the FIFO publish example, the SequenceNumber of 10000000000000003000 is also propagated down to the message in SQS.

When publishing messages in batch, the application must handle errors that may have occurred during the publish batch request. Errors can occur at two different levels. The first is when publishing the batch request to the SNS topic. For example, if the application does not specify a unique message batch ID, it fails with the following error:

com.amazonaws.services.sns.model.BatchEntryIdsNotDistinctException: Two or more batch entries in the request have the same Id. (Service: AmazonSNS; Status Code: 400; Error Code: BatchEntryIdsNotDistinct; Request ID: 44cdac03-eeac-5760-9264-f5f99f4914ad; Proxy: null)

The second is within the batch request at the message level. The application must inspect the returned PublishBatchResult object by iterating through successful and failed responses:

PublishBatchResult publishBatchResult = snsClient.publishBatch(request);

publishBatchResult.getSuccessful().forEach(entry -> {

System.out.println(entry.toString());

});

publishBatchResult.getFailed().forEach(entry -> {

System.out.println(entry.toString());

});

With respect to quotas, the overall message throughput for an SNS topic remains the same. For example, in US East (N. Virginia), standard topics support up to 30,000 messages per second. Before this feature, 30,000 messages also meant 30,000 API requests per second. Because SNS now supports up to 10 messages per request, you can publish the same number of messages using only 3,000 API requests. With FIFO topics, the message throughput remains the same at 300 messages per second, but you can now send that volume of messages using only 30 API requests, thus reducing your messaging costs with SNS.
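The request arithmetic above is a ceiling division by the batch size, and the same limit determines how a longer list of messages is split into batches. The following sketch shows both; `BatchMath` and its methods are hypothetical names used only for illustration.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper illustrating the 10-messages-per-request arithmetic. */
final class BatchMath {
    static final int MAX_BATCH_SIZE = 10;

    /** Number of batch requests needed for the given message count (ceiling division). */
    static long requestsNeeded(long messages) {
        return (messages + MAX_BATCH_SIZE - 1) / MAX_BATCH_SIZE;
    }

    /** Splits items into consecutive batches of at most MAX_BATCH_SIZE elements. */
    static <T> List<List<T>> chunk(List<T> items) {
        List<List<T>> batches = new ArrayList<>();
        for (int start = 0; start < items.size(); start += MAX_BATCH_SIZE) {
            int end = Math.min(start + MAX_BATCH_SIZE, items.size());
            batches.add(new ArrayList<>(items.subList(start, end)));
        }
        return batches;
    }

    public static void main(String[] args) {
        System.out.println(requestsNeeded(30_000)); // prints 3000
        System.out.println(requestsNeeded(300));    // prints 30
        System.out.println(requestsNeeded(25));     // prints 3
    }
}
```

Each list returned by `chunk` could then be turned into one batch request, with the last batch carrying whatever remains.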

SNS now supports the ability to publish up to 10 messages in a single API request, reducing costs for publishing messages into SNS. Your applications can validate the publish status of each of the messages sent in the batch and handle failed publish requests accordingly. Message throughput to SNS topics remains the same for both standard and FIFO topics.
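One way to handle failed publish requests is to resend only the entries that were reported as failed. The sketch below shows that loop in isolation. It assumes a caller-supplied function `send`, a hypothetical stand-in for code that wraps `publishBatch()` and returns the batch IDs found in the failed list; `BatchRetry` itself is not an SDK class, and a real implementation would likely also add backoff and skip errors that are not worth retrying.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

/** Hypothetical retry loop for batch entries that were reported as failed. */
final class BatchRetry {
    /**
     * Sends the entries, then resends only the ones reported as failed,
     * for at most maxAttempts rounds.
     *
     * @param entryIds    batch IDs of the entries to publish
     * @param send        assumed wrapper around publishBatch(); returns IDs that failed
     * @param maxAttempts maximum number of send rounds
     * @return the IDs that were still failing after the last round
     */
    static List<String> publishWithRetry(List<String> entryIds,
                                         Function<List<String>, List<String>> send,
                                         int maxAttempts) {
        List<String> pending = new ArrayList<>(entryIds);
        for (int attempt = 1; attempt <= maxAttempts && !pending.isEmpty(); attempt++) {
            // Only the entries that failed in the previous round are sent again.
            pending = new ArrayList<>(send.apply(pending));
        }
        return pending;
    }
}
```

An empty return value means every entry was eventually accepted; anything left over can be logged or routed to a dead-letter mechanism of your choice.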

Learn more about this ability in the SNS Developer Guide.

Learn more about the details of the API request in the SNS API reference.

Learn more about SNS quotas.

For more serverless learning resources, visit Serverless Land.

Post Syndicated from original https://lwn.net/Articles/876209/rss

The kernel provides a number of macros internally to allow code to generate warnings when something goes wrong. It does not, however, provide a lot of guidance regarding what should happen when a warning is issued. Alexander Popov recently posted a patch series adding an option for the system’s response to warnings; that series seems unlikely to be applied in anything close to its current form, but it did succeed in provoking a discussion on how warnings should be handled.

Post Syndicated from George Komninos original https://aws.amazon.com/blogs/big-data/design-and-build-a-data-vault-model-in-amazon-redshift-from-a-transactional-database/

Building a highly performant data model for an enterprise data warehouse (EDW) has historically involved significant design, development, administration, and operational effort. Furthermore, the data model must be agile and adaptable to change while handling the largest volumes of data efficiently.

Data Vault is a methodology for delivering project design and implementation to accelerate the build of data warehouse projects. Within the overall methodology, the Data Vault 2.0 data modeling standards are popular and widely used within the industry because they emphasize the business keys and their associations within the delivery of business processes. Data Vault facilitates the rapid build of data models via the following:

We always recommend working backwards from the business requirements to choose the most suitable data modelling pattern to use; there are times where Data Vault will not be the best choice for your enterprise data warehouse and another modelling pattern will be more suitable.

In this post, we demonstrate how to implement a Data Vault model in Amazon Redshift and query it efficiently by using the latest Amazon Redshift features, such as separation of compute from storage, seamless data sharing, automatic table optimizations, and materialized views.

A data warehouse platform built using Data Vault typically has the following architecture:

The architecture consists of four layers:

The following entity relationship diagram is a standard implementation of a transactional model that a sports ticket selling service could use:

The main entities within the schema are sporting events, customers, and tickets. A customer is a person, and a person can purchase one or multiple tickets for a sporting event. This business event is captured by the Ticket Purchase History intersection entity above. Finally, a sporting event has many tickets available to purchase and is staged within a single city.

To convert this source model to a Data Vault model, we start to identify the business keys, their descriptive attributes, and the business transactions. The three main entity types in the Raw Data Vault model are as follows:

The following example solution represents some of the sporting event entities when converted into the preceding Raw Data Vault objects.

The hub is the definitive list of business keys loaded into the Raw Data Vault layer from all of the source systems. A business key is used to uniquely identify a business entity and is never duplicated. In our example, the source system has assigned a surrogate key field called Id to represent the Business Key, so this is stored in a column on the Hub called sport_event_id. Some common additional columns on hubs include the Load DateTimeStamp, which records the date and time the business key was first discovered, and the Record Source, which records the name of the source system where this business key was first loaded. Although you don’t have to create a surrogate type (hash or sequence) for the primary key column, it is very common in Data Vault to hash the business key, so our example does this. Amazon Redshift supports multiple cryptographic hash functions like MD5, FNV, SHA1, and SHA2, which you can choose to generate your primary key column. See the following code:

Note the following:

_PK column.

The link object is the occurrence of two or more business keys undertaking a business transaction, for example purchasing a ticket for a sporting event. Each of the business keys is mastered in their respective hubs, and a primary key is generated for the link comprising all of the business keys (typically separated by a delimiter field like ‘^’). As with hubs, some common additional columns are added to links, including the Load DateTimeStamp which records the date and time the transaction was first discovered, and the Record Source which records the name of the source system where this transaction was first loaded. See the following code:

Note the following:

_PK column is hashed values of concatenated ticket and sporting event business keys from the source data feed, for example MD5(ticket_id||'^'||sporting_event_id)

_FK columns are foreign keys linked to the primary key of the respective hubs.

The history of data about the hub or link is stored in the satellite object. The Load DateTimeStamp is part of the compound key of the satellite along with the primary key of either the hub or link because data can change over time. There are choices within the Data Vault standards for how to store satellite data from multiple sources. A common approach is to append the name of the feed to the satellite name. This lets a single hub contain reference data from more than one source system, and for new sources to be added without impact to the existing design. See the following code:

Note the following:

sport_event_pk value is inherited from the hub.

sport_event_pk and load_dts columns. This allows history to be maintained.

Load_DTS is populated via the staging schema or ETL code.

If we take the three preceding Data Vault entity types, we can convert the source data model into a Data Vault data model as follows:

The Business Data Vault contains business-centric calculations and performance de-normalizations that are read by the Information Marts. Some of the object types that are created in the Business Vault layer include the following:

Load DateTimeStamp depending on when the data was loaded. A PIT table simplifies access to all of this data by creating a table or materialized view to present a single row with all of the relevant data to hand. The compound key of a PIT table is the primary key from the hub, plus a snapshot date or snapshot date and time for the frequency of the population. Once a day is the most popular, but equally the frequency could be every 15 minutes or once a month.

The architecture of Amazon Redshift allows flexibility in the design of the Data Vault platform by using the capabilities of the Amazon Redshift RA3 instance type to separate the compute resources from the data storage layer and the seamless ability to share data between different Amazon Redshift clusters. This flexibility allows highly performant and cost-effective Data Vault platforms to be built. For example, the Staging and Raw Data Vault Layers are populated 24-hours-a-day in micro batches by one Amazon Redshift cluster, the Business Data Vault layer can be built one-time-a-day and paused to save costs when completed, and any number of consumer Amazon Redshift clusters can access the results. Depending on the processing complexity of each layer, Amazon Redshift supports independently scaling the compute capacity required at each stage.
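Stepping back to the hashed primary keys used by the hubs and links in the Raw Data Vault, the idea of hashing one or more business keys joined by a '^' delimiter can be illustrated outside the database. The following Java sketch is an illustration only and is not the post's Redshift SQL; the class `VaultKeys` and the sample key values are hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;

/** Hypothetical helper that derives a hashed key from one or more business keys. */
final class VaultKeys {
    /** Joins the business keys with '^' and returns the MD5 digest as lowercase hex. */
    static String hashKey(String... businessKeys) {
        String joined = String.join("^", businessKeys);
        try {
            byte[] digest = MessageDigest.getInstance("MD5")
                    .digest(joined.getBytes(StandardCharsets.UTF_8));
            StringBuilder hex = new StringBuilder(digest.length * 2);
            for (byte b : digest) {
                hex.append(String.format("%02x", b)); // two hex digits per byte
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("MD5 is not available", e);
        }
    }

    public static void main(String[] args) {
        // Hub-style key from a single business key, link-style key from two.
        System.out.println(hashKey("1001"));
        System.out.println(hashKey("1001", "42"));
    }
}
```

Because the key is derived only from the business keys, the same input always yields the same 32-character value, and the delimiter keeps the order and boundaries of the keys significant.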

All of the underlying tables in Raw Data Vault can be loaded simultaneously. This makes great use of the massively parallel processing architecture in Amazon Redshift. For our business model, it makes sense to create a Business Data Vault layer, which can be read by an Information Mart to perform dimensional analysis on ticket sales. It can give us insights on the top home teams in fan attendance and how that correlates with specific sport locations or cities. Running these queries involves joining multiple tables. It’s important to design an optimal Business Data Vault layer to avoid excessive joins for deriving these insights.

For example, to get the number of tickets per city for June 2021, the SQL looks like the following code:

We can use the EXPLAIN command for the preceding query to get the Amazon Redshift query plan. The following plan shows that the specified joins require broadcasting data across nodes, since the join conditions are on different keys. This makes the query computationally expensive:

Let’s discuss the latest Amazon Redshift features that help optimize the performance of these queries on top of a Business Data Vault model.

Traditionally, to optimize joins in Amazon Redshift, it’s recommended to use distribution keys and styles to co-locate data in the same nodes, as based on common join predicates. The Raw Data Vault layer has a very well-defined pattern, which is ideal for determining the distribution keys. However, the broad range of SQL queries applicable to the Business Data Vault makes it hard to predict your consumption pattern that would drive your distribution strategy.

Automatic table optimization lets you get the fastest performance quickly without needing to invest time to manually tune and implement table optimizations. Automatic table optimization continuously observes how queries interact with tables, and it uses machine learning (ML) to select the best sort and distribution keys to optimize performance for the cluster’s workload. If Amazon Redshift determines that applying a key will improve cluster performance, then tables are automatically altered within hours without requiring administrator intervention.

Automatic Table Optimization provided the following recommendations for the preceding query, which gets the number of tickets per city for June 2021. The recommendations suggest modifying the distribution style and sort keys for tables involved in these queries.

After the recommended distribution keys and sort keys were applied by Automatic Table Optimization, the explain plan shows “DS_DIST_NONE” and no data redistribution was required anymore for this query. The data required for the joins was co-located across Amazon Redshift nodes.

The data analyst responsible for running this analysis benefits significantly by creating a materialized view in the Business Data Vault schema that pre-computes the results of the queries by running the following SQL:

To get the latest satellite values, we must include load_dts in our join. For simplicity, we don’t do that for this post.

You can optimize this query both in terms of code length and complexity to something as simple as the following:

The run plan in this case is as follows:

More importantly, Amazon Redshift can automatically use the materialized view even if that’s not explicitly stated.

The preceding scenario addresses the needs of a specific analysis because the resulting materialized view is an aggregate. In a more generic scenario, after reviewing our Data Vault ER diagram, you can observe that any query that involves ticket sales analysis per location requires a substantial number of joins, all of which use different join keys. Therefore, any such analysis comes at a significant cost regarding performance. For example, to get the count of tickets sold per city and stadium name, you must run a query like the following:

You can use the EXPLAIN command for the preceding query to get the explain plan and know how expensive such an operation is:

We can identify commonly joined tables, like hub_sport_event, hub_ticket and hub_location, and then boost the performance of queries by creating materialized views that implement these joins ahead of time. For example, we can create a materialized view to join tickets to sport locations:

If we don’t make any edits to the expensive query that we ran before, then the run plan is as follows:

Amazon Redshift now uses the materialized view for any future queries that involve joining tickets with sports locations. For example, a separate business intelligence (BI) team looking into the dates with the highest ticket sales can run a query like the following:

Amazon Redshift can implicitly understand that the query can be optimized by using the materialized view we already created, thereby avoiding joins that involve broadcasting data across nodes. This can be seen from the run plan:

If we drop the materialized view, then the preceding query results in the following plan:

End-users of the data warehouse don’t need to worry about refreshing the data in the materialized views. This is because we enabled automatic materialized view refresh. Future use cases involving new dimensions also benefit from the existence of materialized views.

Another type of query that we can run on top of the Business Data Vault schema is prepared statements with bind variables. It’s quite common to see user interfaces integrated with data warehouses, which lets users dynamically change the value of the variable through selection in a choice list or link in a cross-tab. When the variable changes, so do the query condition and the report or dashboard contents. The following query is a prepared statement to get the count of tickets sold per city and stadium name. It takes the stadium name as a variable and provides the number of tickets sold in that stadium.

Let’s run the query to see the city and tickets sold for different stadiums passed as a variable in this prepared statement:

Let’s dive into the explain plan of this prepared statement to understand if Amazon Redshift can implicitly understand that the query can be optimized by using the materialized view bridge_tickets_per_stadium_mv that was created earlier:

As noted in the explain plan, Amazon Redshift could optimize the explain plan of the query to implicitly use the materialized view created earlier, even for prepared statements.

In this post, we’ve demonstrated how to implement a Data Vault model in Amazon Redshift, leveraging its out-of-the-box features. We also discussed how Amazon Redshift features, such as seamless data sharing, automatic table optimization, materialized views, and automatic materialized view refresh, can help you build data models that meet high performance requirements.

George Komninos is a solutions architect for the AWS Data Lab. He helps customers convert their ideas to production-ready data products. Before AWS, he spent three years at Alexa Information as a data engineer. Outside of work, George is a football fan and supports the greatest team in the world, Olympiacos Piraeus.

Devika Singh is a Senior Solutions Architect at Amazon Web Services. Devika helps customers architect and build database and data analytics solutions to accelerate their path to production as part of the AWS Data Lab. She has expertise in database and data warehouse migrations to AWS, helping customers improve the value of their solutions with AWS.

Simon Dimaline has specialized in data warehousing and data modeling for more than 20 years. He currently works for the Data & Analytics practice within AWS Professional Services accelerating customers’ adoption of AWS analytics services.

Post Syndicated from original https://lwn.net/Articles/876425/rss

Greg Kroah-Hartman has released two more stable kernels. 5.14.20 reverts three patches from the 5.4.19 release, while 5.10.80 is one of the massive updates mentioned yesterday. The other massive release mentioned, 5.15.3, is still under review and can be expected in the next day or two. As usual, the kernels released contain important fixes and users should upgrade.

Post Syndicated from Srikanth Sopirala original https://aws.amazon.com/blogs/big-data/federate-amazon-redshift-access-with-secureauth-single-sign-on/

Amazon Redshift is the leading cloud data warehouse that delivers up to 3x better price performance compared to other cloud data warehouses by using massively parallel query execution, columnar storage on high-performance disks, and results caching. You can confidently run mission-critical workloads, even in highly regulated industries, because Amazon Redshift comes with out-of-the-box security and compliance.

You can use your corporate identity providers (IdPs), for example Azure AD, Active Directory Federation Services, Okta, or Ping Federate, with Amazon Redshift to provide single sign-on (SSO) to your users so they can use their IdP accounts to log in and access Amazon Redshift. With federation, you can centralize management and governance of authentication and permissions. For more information about the federation workflow using AWS Identity and Access Management (IAM) and an IdP, see Federate Database User Authentication Easily with IAM and Amazon Redshift.

This post shows you how to use the Amazon Redshift browser-based plugin with SecureAuth to enable federated SSO into Amazon Redshift.

To implement this solution, you complete the following high-level steps:

The process flow for federated authentication includes the following steps:

The following diagram illustrates this process flow.

Before starting this walkthrough, you must have the following:

For instructions on setting up your user data store integration with SecureAuth, see Add a User Data Store. The following screenshot shows a sample setup.

The next step is to create a new realm in your IdP.

Amazon Redshift).

http://localhost:7890/redshift/.

| Attribute Number | SAML Attributes | Value |
|---|---|---|
| Attribute 1 | https://aws.amazon.com/SAML/Attributes/Role | Full Group DN List |
| Attribute 2 | https://aws.amazon.com/SAML/Attributes/RoleSessionName | Authenticated User ID |
| Attribute 3 | https://redshift.amazon.com/SAML/Attributes/AutoCreate | Global Aux ID 1 |
| Attribute 4 | https://redshift.amazon.com/SAML/Attributes/DbUser | Authenticated User ID |

The value of Attribute 1 must be dynamically populated with the AWS roles associated with the user that you use to access the Amazon Redshift cluster. This can be a multi-valued SAML attribute to accommodate situations where a user belongs to multiple AD groups or AWS roles with access to the Amazon Redshift cluster. The format of the contents within Attribute 1 looks like arn:aws:iam::AWS-ACCOUNT-NUMBER:role/AWS-ROLE-NAME, arn:aws:iam::AWS-ACCOUNT-NUMBER:saml-provider/SAML-PROVIDER-NAME after it’s dynamically framed using the following steps.
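To make the target format concrete, the following Java sketch builds one role value per group name. It is an illustration only and is not the SecureAuth transformation script; the class `SamlRoleAttribute`, the sample account number, and the provider name are hypothetical, and the sketch joins the two ARNs with a plain comma.

```java
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical helper showing the shape of the SAML Role attribute values. */
final class SamlRoleAttribute {
    /** Builds "role ARN,SAML provider ARN" for a single IAM role. */
    static String roleValue(String accountId, String roleName, String samlProviderName) {
        return "arn:aws:iam::" + accountId + ":role/" + roleName
                + ",arn:aws:iam::" + accountId + ":saml-provider/" + samlProviderName;
    }

    /** Builds one value per AD group, assuming group name and IAM role name are identical. */
    static List<String> roleValues(String accountId, List<String> adGroups,
                                   String samlProviderName) {
        return adGroups.stream()
                .map(group -> roleValue(accountId, group, samlProviderName))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Sample values only; substitute your own account, groups, and provider.
        roleValues("123456789012", List.of("finance", "marketing"), "SecureAuth")
                .forEach(System.out::println);
    }
}
```

Each element of the returned list corresponds to one value of the multi-valued attribute.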

The dynamic population of Attribute 1 (https://aws.amazon.com/SAML/Attributes/Role) can be done using SecureAuth’s transformation engine.

We are assuming that the AD group name and IAM role name will be the same. This is key for the transformation to work.

You have to substitute the following in the script:

true.

When the setup is complete, your SecureAuth side settings should look something similar to the following screenshots.

The next step is to set up the service provider.

To create an IdP in IAM, complete the following steps:

You should see a page similar to the following after the IdP creation is complete.

For each Amazon Redshift DB group of users, we need to create an IAM role and the corresponding IAM policy. Repeat the steps in this section for each DB group.

http://localhost:7890/redshift/.

us-east-1.

Make sure that your IAM role name matches with the AD groups that you’re creating to support Amazon Redshift access. The transformation script that we discussed in the SecureAuth section is assuming that the AD group name and the Amazon Redshift access IAM role name are the same.

We use the AWS Command Line Interface (AWS CLI) to fetch the unique role identifier for the role you just created.

Replace <value> with the role-name that you just created. So, in this example, the command is:

RoleId.

RoleId fetched earlier.

You’ve now created an IAM role and policy corresponding to the DB group for which you’re trying to enable IAM-based access.

To log in to Amazon Redshift using your IdP-based credentials, complete the following steps:

jdbc:redshift:iam://REDSHIFT-CLUSTER-ENDPOINT:PORT#/DATABASE. For example, jdbc:redshift:iam://sample-redshift-cluster-1.cxqXXXXXXXXX.us-east-1.redshift.amazonaws.com:5439/dev.

com.amazon.redshift.plugin.BrowserSamlCredentialsProvider

https://XYZ.identity.secureauth.com/SecureAuth2/.

finance.

You should be redirected to your IdP in a browser window for authentication.

You’ll see the multi-factor authentication screen if it has been set up within SecureAuth.

You should see the successful login in the browser as well as in the SQL Workbench/J app.

select current_user, which should show the currently logged-in user.
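The connection settings from the steps above can also be assembled in code. The following is a minimal sketch that only builds the JDBC URL and the driver properties. It assumes the Amazon Redshift JDBC driver options `plugin_name` and `login_url`; the class `RedshiftSsoConfig` is a hypothetical helper, and the endpoint and login URL are the sample values from this post.

```java
import java.util.Properties;

/** Hypothetical helper that collects the browser SAML connection settings. */
final class RedshiftSsoConfig {
    /** Builds a URL of the form jdbc:redshift:iam://ENDPOINT:PORT/DATABASE. */
    static String jdbcUrl(String endpoint, int port, String database) {
        return "jdbc:redshift:iam://" + endpoint + ":" + port + "/" + database;
    }

    /** Driver properties selecting the browser-based SAML credentials provider. */
    static Properties browserSamlProperties(String loginUrl) {
        Properties props = new Properties();
        props.setProperty("plugin_name",
                "com.amazon.redshift.plugin.BrowserSamlCredentialsProvider");
        props.setProperty("login_url", loginUrl);
        return props;
    }

    public static void main(String[] args) {
        String url = jdbcUrl(
                "sample-redshift-cluster-1.cxqXXXXXXXXX.us-east-1.redshift.amazonaws.com",
                5439, "dev");
        Properties props =
                browserSamlProperties("https://XYZ.identity.secureauth.com/SecureAuth2/");
        System.out.println(url);
        System.out.println(props.getProperty("plugin_name"));
    }
}
```

With the Redshift JDBC driver on the classpath, the URL and properties could be passed to `java.sql.DriverManager.getConnection(url, props)`, which is expected to open the browser window for the IdP login described above.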

Amazon Redshift supports stringent compliance and security requirements with no extra cost, which makes it ideal for highly regulated industries. With federation, you can centralize management and governance of authentication and permissions by managing users and groups within the enterprise IdP and use them to authenticate to Amazon Redshift. SSO enables users to have a seamless user experience while accessing various applications in the organization.

In this post, we walked you through a step-by-step guide to configure and use SecureAuth as your IdP and enabled federated SSO to an Amazon Redshift cluster. You can follow these steps to set up federated SSO for your organization and manage access privileges based on read/write privileges or by business function and passing group membership defined in your SecureAuth IdP to your Amazon Redshift cluster.

If you have any questions or suggestions, please leave a comment.

Srikanth Sopirala is a Principal Analytics Specialist Solutions Architect at AWS. He is a seasoned leader who is passionate about helping customers build scalable data and analytics solutions to gain timely insights and make critical business decisions. In his spare time, he enjoys reading, spending time with his family, and road biking.

Sandeep Veldi is a Sr. Solutions Architect at AWS. He helps AWS customers with prescriptive architectural guidance based on their use cases and navigating their cloud journey. In his spare time, he loves to spend time with his family.

BP Yau is a Sr Analytics Specialist Solutions Architect at AWS. His role is to help customers architect big data solutions to process data at scale. Before AWS, he helped Amazon.com Supply Chain Optimization Technologies migrate the Oracle Data Warehouse to Amazon Redshift and built the next generation big data analytics platform using AWS technologies.

Jamey Munroe is Head of Database Sales, Global Verticals and Strategic Accounts. He joined AWS as one of its first Database and Analytics Sales Specialists, and he’s passionate about helping customers drive bottom-line business value throughout the full lifecycle of data. In his spare time, he enjoys solving home improvement DIY project challenges, fishing, and competitive cycling.

Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=o0IG0Vjma10

Post Syndicated from Molly Clancy original https://www.backblaze.com/blog/what-is-kubernetes/

Do you remember when “Pokémon Go” came out in 2016? Suddenly it was everywhere. It was a world-wide obsession, with over 10 million downloads in its first week and 500 million downloads in six months. System load rapidly escalated to 50 times the anticipated demand. How could the game architecture support such out-of-control hypergrowth?

The answer: At release time, Pokémon Go was “The largest Kubernetes deployment on Google Container Engine.” Kubernetes is a container orchestration tool that manages resources for dynamic web-scale applications, like “Pokémon Go.”

In this post, we’ll take a look at what Kubernetes does, how it works, and how it could be applicable in your environment.

You may be familiar with containers. They’re conceptually similar to lightweight virtual machines. Instead of simulating computer hardware and running an entire operating system (OS) on that simulated computer, the container runs applications under a parent OS with almost no overhead. Containers allow developers and system administrators to develop, test, and deploy software and applications much faster than VMs, and most applications today are built with them.

But what happens if one of your containers goes down, or your ecommerce store experiences high demand, or if you release a viral sensation like “Pokémon Go”? You don’t want your application to crash, and you definitely don’t want your store to go down during the Christmas crush. Unfortunately, containers don’t solve those problems. You could implement intelligence in your application to scale as needed, but that would make your application a lot more complex and expensive to implement. It would be simpler and faster if you could use a drop-in layer of management—a “fleet manager” of sorts—to coordinate your swarm of containers. That’s Kubernetes.

Kubernetes implements a fairly straightforward hierarchy of components and concepts:

You have a few options to run Kubernetes. The minikube utility launches and runs a small single-node cluster locally for testing purposes. And you can control Kubernetes with any of several control interfaces: the kubectl command provides a command-line interface, and library APIs and REST endpoints provide programmable interfaces.

Modern web-based applications are commonly implemented with “microservices,” each of which embodies one part of the desired application behavior. Kubernetes distributes the microservices across Pods. Pods can be used two ways—to run a single container (the most common use case) or to run multiple containers (like a pod of peas or a pod of whales—a more advanced use case). Kubernetes operates on the Pods, which act as a sort of wrapper around the container(s) rather than the containers themselves. As the microservices run, Kubernetes is responsible for managing the application’s execution. Kubernetes “orchestrates” the Pods, including:

The beauty of this model is that the applications don’t have to know about the Kubernetes management. You don’t have to write load-balancing functionality into every application, or autoscaling, or other orchestration logic. The applications just run simplified microservices in a simple environment, and Kubernetes handles all the management complexity.
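To make the idea of orchestration concrete, here is a minimal sketch of a single reconciliation step: compare the desired number of Pods with what is running, discard crashed Pods, and start or stop Pods to close the gap. The `PodState` type, the `reconcile` function, and the `web-N` naming are hypothetical and exist only for illustration; this is not the Kubernetes API, and a real control loop covers far more cases.

```typescript
// Hypothetical, simplified model of one reconciliation step; not the Kubernetes API.
interface PodState {
  name: string;
  healthy: boolean;
}

interface ReconcileResult {
  pods: PodState[];
  started: string[];
  stopped: string[];
}

// Compare the desired replica count with the healthy Pods that exist,
// then discard crashed Pods and add or remove Pods to close the gap.
function reconcile(desiredReplicas: number, current: PodState[], nextId: number): ReconcileResult {
  const started: string[] = [];
  const stopped: string[] = [];

  // Crashed Pods are discarded rather than repaired.
  const healthy = current.filter((pod) => pod.healthy);
  for (const pod of current) {
    if (!pod.healthy) stopped.push(pod.name);
  }

  // Scale down: drop surplus Pods from the end of the list.
  while (healthy.length > desiredReplicas) {
    const removed = healthy.pop();
    if (removed) stopped.push(removed.name);
  }

  // Scale up: start new Pods until the desired count is reached.
  let id = nextId;
  while (healthy.length < desiredReplicas) {
    const podName = `web-${id++}`;
    healthy.push({ name: podName, healthy: true });
    started.push(podName);
  }

  return { pods: healthy, started, stopped };
}

// Two Pods exist, one has crashed, and three replicas are wanted.
const reconcileDemo = reconcile(3, [
  { name: 'web-1', healthy: true },
  { name: 'web-2', healthy: false },
], 3);
console.log(reconcileDemo.started, reconcileDemo.stopped);
```

The application code never appears in this loop, which is the point: the microservice only serves requests, and the decision about how many copies run is made outside it.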

As an example: You write a small reusable application (say, a simple database) on a Debian Linux system. Then you could transfer that code to an Ubuntu system and run it, without any changes, in a Debian container. (Or, maybe you just download a database container from the Docker library.) Then you create a new application that calls the database application. When you wrote the original database on Debian, you might not have anticipated it would be used on an Ubuntu system. You might not have known that the database would be interacting with other application components. Fortunately, you didn’t have to anticipate the new usage paradigm. Kubernetes and containers isolate your code from the messy details.

Keep in mind, Kubernetes is not the only orchestration solution—there’s Docker Swarm, HashiCorp’s Nomad, and others. Cycle.io, for example, offers a simple container orchestration solution that focuses on ease for the most common container use cases.

Kubernetes spins up and spins down Pods as needed. Each Pod can host its own internal storage (as shown in the diagram above), but that’s not often used. A Pod might get discarded because the load has dropped, or the process crashed, or for other reasons. The Pods (and their enclosed containers and volumes) are ephemeral, meaning that their state is lost when they are destroyed. But most applications are stateful. They couldn’t function in a transitory environment like this. In order to work in a Kubernetes environment, the application must store its state information externally, outside the Pod. A new instance (a new Pod) must fetch the current state from the external storage when it starts up, and update the external storage as it executes.
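The pattern described above (fetch state on startup, write it back as you go) can be sketched in a few lines. The `StateStore` interface, `InMemoryStore`, and `CounterWorker` below are hypothetical names; the in-memory map only stands in for real external storage such as a database, an object store, or a mounted volume.

```typescript
// Hypothetical stand-in for external storage that outlives any single Pod.
interface StateStore {
  load(key: string): number | undefined;
  save(key: string, value: number): void;
}

class InMemoryStore implements StateStore {
  private data = new Map<string, number>();

  load(key: string): number | undefined {
    return this.data.get(key);
  }

  save(key: string, value: number): void {
    this.data.set(key, value);
  }
}

// A worker that keeps no durable state of its own.
class CounterWorker {
  private store: StateStore;
  private key: string;
  private count: number;

  constructor(store: StateStore, key: string) {
    this.store = store;
    this.key = key;
    // Fetch the current state from external storage on startup.
    this.count = store.load(key) ?? 0;
  }

  handleRequest(): number {
    this.count += 1;
    // Write every change back so a replacement Pod can pick it up.
    this.store.save(this.key, this.count);
    return this.count;
  }
}

const externalStore = new InMemoryStore();
const firstPod = new CounterWorker(externalStore, 'visits');
firstPod.handleRequest();
firstPod.handleRequest();

// firstPod is discarded; a new Pod starts and resumes from the stored state.
const replacementPod = new CounterWorker(externalStore, 'visits');
const visitsAfterRestart = replacementPod.handleRequest();
console.log(visitsAfterRestart); // 3
```

Because the count lives in the store rather than in the worker, discarding `firstPod` loses nothing.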

You can specify the external storage when you create the Pod, essentially mounting the external volume in the container. The container running in the Pod accesses the external storage transparently, like any other local storage. Unlike local storage, though, cloud-based object storage is designed to scale almost infinitely right alongside your Kubernetes deployment. That’s what makes object storage an ideal match for applications running on Kubernetes.

When you start up a Pod, you can specify the location of the external storage. Any container in the Pod can then access the external storage like any other mounted file system.

While there’s no doubt a learning curve involved (Kubernetes has sometimes been described as “not for normal humans”), container orchestrators like Kubernetes, Cycle.io, and others can greatly simplify the management of your applications. If you use a microservice model, or if you work with similar cloud-based architectures, a container orchestrator can help you prepare for success from day one by setting your application up to scale seamlessly.

The post What Is Kubernetes? appeared first on Backblaze Blog | Cloud Storage & Cloud Backup.

Post Syndicated from Arturs Lontons original https://blog.zabbix.com/handy-tips-12-optimizing-zabbix-database-size-with-custom-data-storage-periods/17396/

Post Syndicated from James Beswick original https://aws.amazon.com/blogs/compute/deploying-aws-lambda-layers-automatically-across-multiple-regions/

This post is written by Ben Freiberg, Solutions Architect, and Markus Ziller, Solutions Architect.

Many developers import libraries and dependencies into their AWS Lambda functions. These dependencies can be zipped and uploaded as part of the build and deployment process but it’s often easier to use Lambda layers instead.

A Lambda layer is an archive containing additional code, such as libraries or dependencies. Layers are deployed as immutable versions, and the version number increments each time you publish a new layer. When you include a layer in a function, you specify the layer version you want to use.

Lambda layers simplify and speed up the development process by providing common dependencies and reducing the deployment size of your Lambda functions. To learn more, refer to Using Lambda layers to simplify your development process.

Many customers build Lambda layers for use across multiple Regions. But maintaining up-to-date and consistent layer versions across multiple Regions is a manual process. Layers are set as private automatically but they can be shared with other AWS accounts or shared publicly. Permissions only apply to a single version of a layer. This solution automates the creation and deployment of Lambda layers across multiple Regions from a centralized pipeline.
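As a rough illustration of why consistency across Regions takes effort: a function references a layer by the ARN of one specific version in one specific Region. Layers are regional resources, so the version numbers in different Regions can drift apart. The sketch below assumes the standard `aws` partition; the account ID, the layer name, and the version numbers are placeholders.

```typescript
// Build the ARN that identifies one version of a layer in one Region.
// Assumes the standard "aws" partition; other partitions use a different prefix.
function layerVersionArn(region: string, accountId: string, layerName: string, version: number): string {
  return `arn:aws:lambda:${region}:${accountId}:layer:${layerName}:${version}`;
}

// Hypothetical record of the latest version published in each Region.
const publishedLayers = [
  { region: 'us-east-1', version: 4 },
  { region: 'eu-central-1', version: 3 },
];

// Placeholder account ID and layer name.
const layerArns = publishedLayers.map((entry) =>
  layerVersionArn(entry.region, '123456789012', 'lambda-layer', entry.version)
);
console.log(layerArns);
```

A function in `eu-central-1` would have to reference the `eu-central-1` ARN with its own version number, which is the bookkeeping a centralized pipeline takes off your hands.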

This solution uses AWS Lambda, AWS CodeCommit, AWS CodeBuild and AWS CodePipeline.

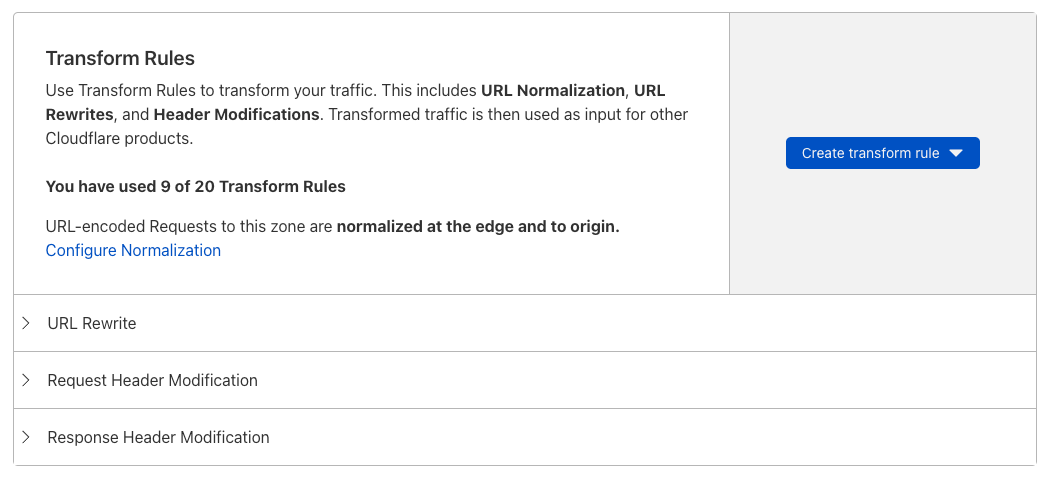

This diagram outlines the workflow implemented in this blog:

The following walkthrough explains the components and how the provisioning can be automated via CDK. For this walkthrough, you need:

To deploy the sample stack:

git clone https://github.com/aws-samples/multi-region-lambda-layers

The source code of the Lambda layers is stored in AWS CodeCommit. This is a secure, highly scalable, managed source control service that hosts private Git repositories. This example initializes a new repository as part of the CDK stack:

    // Package the local res folder as an asset that the CDK uploads to S3.
    const asset = new Asset(this, 'SampleAsset', {
      path: path.join(__dirname, '../../res')
    });

    // Create the CodeCommit repository and seed its main branch from that asset.
    const cfnRepository = new codecommit.CfnRepository(this, 'lambda-layer-source', {
      repositoryName: 'lambda-layer-source',
      repositoryDescription: 'Contains the source code for a nodejs12+14 Lambda layer.',
      code: {
        branchName: 'main',
        s3: {
          bucket: asset.s3BucketName,
          key: asset.s3ObjectKey
        }
      },
    });

This code uploads the contents of the ./cdk/res/ folder to an S3 bucket that is managed by the CDK. The CDK then initializes a new CodeCommit repository with the contents of the bucket. In this case, the repository gets initialized with the following files:

The default package.json in the sample code defines a Lambda layer with the latest version of the AWS SDK for JavaScript:

    {
      "name": "lambda-layer",
      "version": "1.0.0",
      "description": "Sample AWS Lambda layer",
      "dependencies": {
        "aws-sdk": "latest"
      }
    }

To see what is included in the layer, run npm install in the ./cdk/res/ directory. This shows the files that are bundled into the Lambda layer. The contents of this folder initialize the CodeCommit repository, so delete node_modules and package-lock.json after inspecting these files.

This blog post uses a new CodeCommit repository as the source but you can adapt this to other providers. CodePipeline also supports repositories on GitHub and Bitbucket. To connect to those providers, see the documentation.

CodePipeline automates the process of building and distributing Lambda layers across Regions for every change to the main branch of the source repository. It is a fully managed continuous delivery service that helps you automate your release pipelines for fast and reliable application and infrastructure updates. CodePipeline automates the build, test, and deploy phases of your release process every time there is a code change, based on the release model you define.

The CDK creates a pipeline in CodePipeline and configures it so that every change to the code base of the Lambda layer runs through the following three stages:

    new codepipeline.Pipeline(this, 'Pipeline', {
      pipelineName: 'LambdaLayerBuilderPipeline',
      stages: [
        {
          stageName: 'Source',
          actions: [sourceAction]
        },
        {
          stageName: 'Build',
          actions: [buildAction]
        },
        {
          stageName: 'Distribute',
          actions: parallel,
        }
      ]
    });
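The excerpt does not show how the list of actions for the Distribute stage is assembled. One plausible approach, sketched here with plain objects rather than CDK constructs, is to map a list of target Regions to one action each. The `DistributeAction` type, the Region list, and the action names are assumptions made for illustration, not the sample's actual code.

```typescript
// Plain-object stand-in for a pipeline action; a real stack would use CDK action constructs.
interface DistributeAction {
  actionName: string;
  region: string;
  runOrder: number;
}

// Hypothetical list of Regions that should receive the layer.
const targetRegions = ['us-east-1', 'eu-central-1', 'ap-southeast-1'];

// One action per Region. Actions that share the same runOrder within a
// stage are run in parallel by CodePipeline.
const parallelActions: DistributeAction[] = targetRegions.map((region) => ({
  actionName: `Distribute-${region}`,
  region: region,
  runOrder: 1,
}));

console.log(parallelActions.map((action) => action.actionName));
```

Adding a Region then means adding one entry to the list instead of writing another action by hand.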

The source phase is the first phase of every run of the pipeline. It is typically triggered by a new commit to the main branch of the source repository. You can also start the source phase manually with the following AWS CLI command:

    aws codepipeline start-pipeline-execution --name LambdaLayerBuilderPipeline

When started manually, the current head of the main branch is used. Otherwise CodePipeline checks out the code in the revision of the commit that triggered the pipeline execution.

CodePipeline stores the code locally and uses it as an output artifact of the Source stage. Stages use input and output artifacts that are stored in the Amazon S3 artifact bucket you chose when you created the pipeline. CodePipeline zips and transfers the files for input or output artifacts as appropriate for the action type in the stage.