Post Syndicated from Bas Westerbaan original https://blog.cloudflare.com/nist-post-quantum-surprise/

On Tuesday, the US National Institute of Standards and Technology (NIST) announced which post-quantum cryptography they will standardize. We were already drafting this post with an educated guess on the choice NIST would make. We almost got it right, except for a single choice we didn’t expect—and which changes everything.

At Cloudflare, post-quantum cryptography is a topic close to our heart, as the future of a secure and private Internet is on the line. We have been working towards this day for many years, by implementing post-quantum cryptography, contributing to standards, and testing post-quantum cryptography in practice, and we are excited to share our perspective.

In this long blog post, we explain how we got here, what NIST chose to standardize, what it will mean for the Internet, and what you need to know to get started with your own post-quantum preparations.

How we got here

Shor’s algorithm

Our story starts in 1994, when mathematician Peter Shor discovered a marvelous algorithm that efficiently factors numbers and computes discrete logarithms. With it, you can break nearly all public-key cryptography deployed today, including RSA and elliptic curve cryptography. Luckily, Shor’s algorithm doesn’t run on just any computer: it needs a quantum computer. Back in 1994, quantum computers existed only on paper.

But in the years since, physicists started building actual quantum computers. Initially, these machines were (and still are) too small and too error-prone to be threatening to the integrity of public-key cryptography, but there is a clear and impending danger: it only seems a matter of time now before a quantum computer is built that has the capability to break public-key cryptography. So what can we do?

Encryption, key agreement and signatures

To understand the risk, we need to distinguish between the three cryptographic primitives that are used to protect your connection when browsing on the Internet:

Symmetric encryption. With a symmetric cipher there is one key to encrypt and decrypt a message. They’re the workhorse of cryptography: they’re fast, well understood and luckily, as far as known, secure against quantum attacks. (We’ll touch on this later when we get to security levels.) Examples are AES and ChaCha20.

Symmetric encryption alone is not enough: which key do we use when visiting a website for the first time? We can’t just pick a random key and send it along in the clear, as then anyone surveilling that session would know that key as well. You’d think it’s impossible to communicate securely without ever having met, but there is some clever math to solve this.

Key agreement, also called a key exchange, allows two parties that never met to agree on a shared key. Even if someone is snooping, they are not able to figure out the agreed key. Examples include Diffie–Hellman over elliptic curves, such as X25519.

The key agreement prevents a passive observer from reading the contents of a session, but it doesn’t help defend against an attacker who sits in the middle and does two separate key agreements: one with you and one with the website you want to visit. To solve this, we need the final piece of cryptography:

Digital signatures, such as RSA, allow you to check that you’re actually talking to the right website with a chain of certificates going up to a certificate authority.

Shor’s algorithm breaks all widely deployed key agreement and digital signature schemes, which are both critical to the security of the Internet. However, the urgency and mitigation challenges between them are quite different.

Impact

Most signatures on the Internet have a relatively short lifespan. If we replace them before quantum computers can crack them, we’re golden. We shouldn’t be too complacent here: signatures aren’t that easy to replace as we will see later on.

More urgently, though, an attacker can store traffic today and decrypt later by breaking the key agreement using a quantum computer. Everything that’s sent on the Internet today (personal information, credit card numbers, keys, messages) is at risk.

NIST Competition

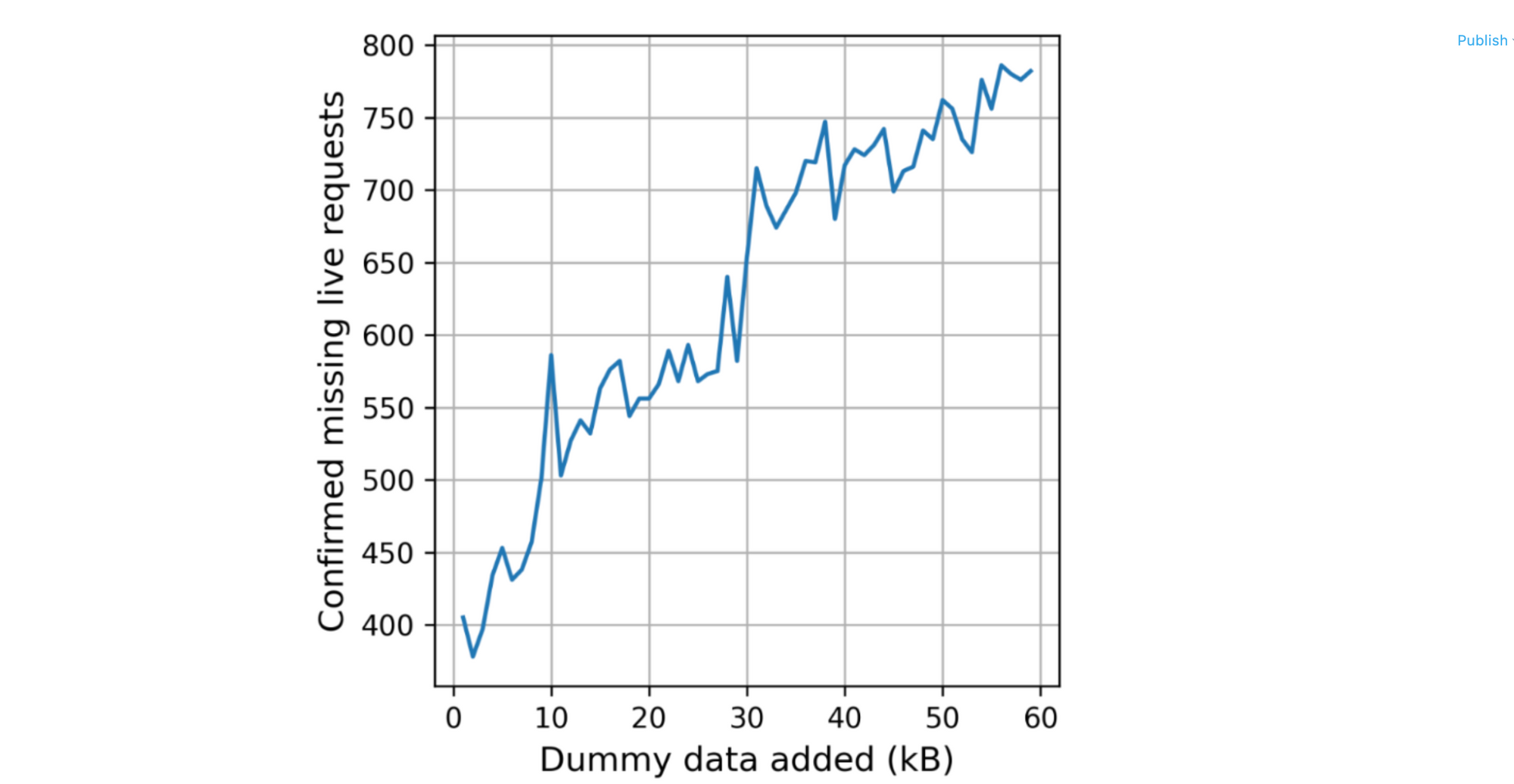

Luckily cryptographers took note of Shor’s work early on and started working on post-quantum cryptography: cryptography not broken by quantum algorithms. In 2016, NIST, known for standardizing AES and SHA, opened a public competition to select which post-quantum algorithms they will standardize. Cryptographers from all over the world submitted algorithms and publicly scrutinized each other’s submissions. To focus attention, the list of potential candidates was whittled down over three rounds. From the original 82 submissions, eight made it into the final third round. From those eight, NIST chose one key agreement scheme and three signature schemes. Let’s have a look at the key agreement first.

What NIST announced

Key agreement

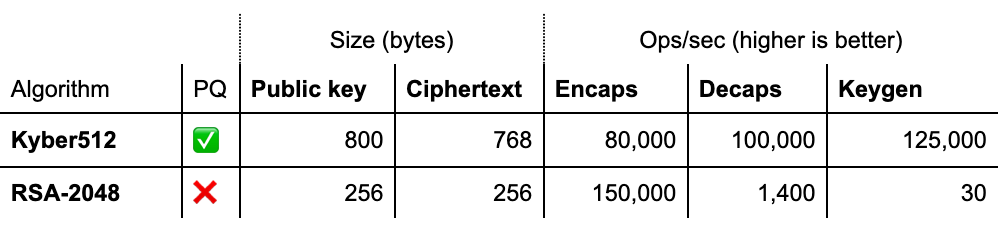

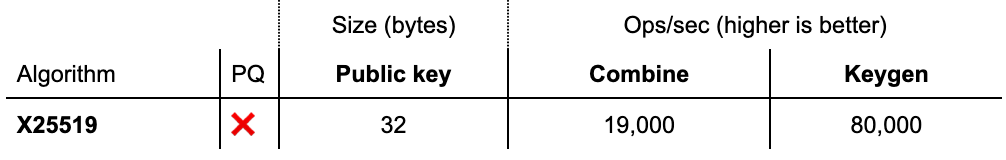

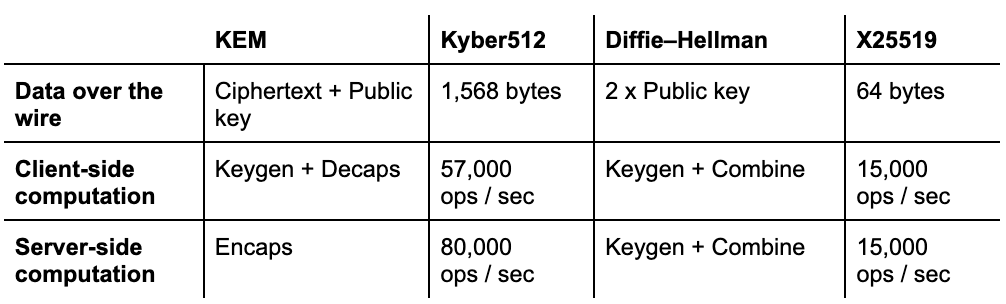

For key agreement, NIST picked only Kyber, which is a Key Encapsulation Mechanism (KEM). Let’s compare it side-by-side to an RSA-based KEM and the X25519 Diffie–Hellman key agreement:

KEM versus Diffie–Hellman

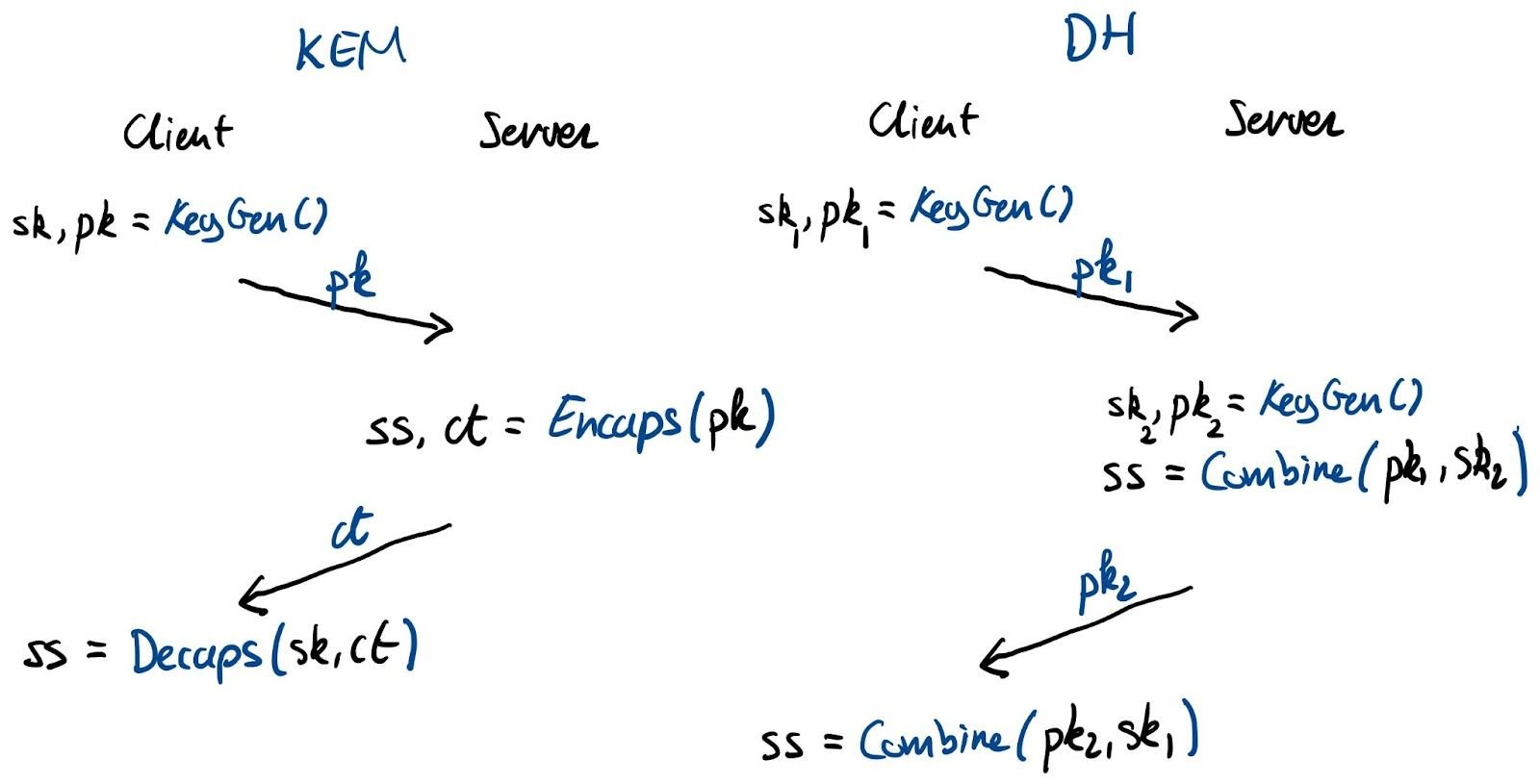

To properly compare these numbers, we have to explain how KEM and Diffie–Hellman key agreements are different.

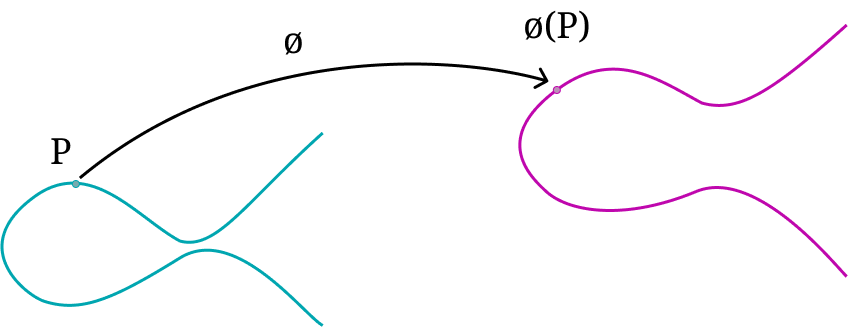

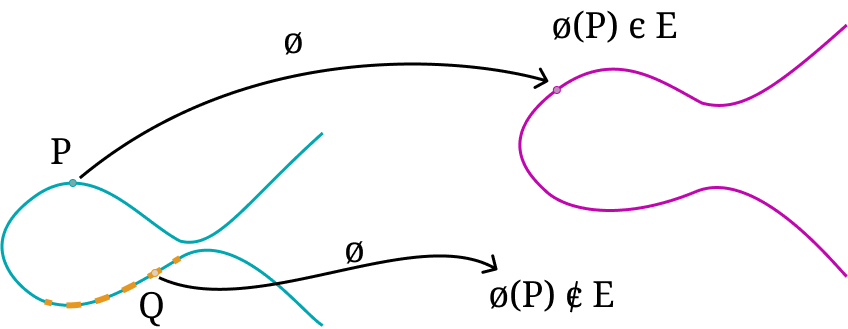

Let’s start with the KEM. A KEM is essentially a Public-Key Encryption (PKE) scheme tailored to encrypt shared secrets. To agree on a key, the initiator, typically the client, generates a fresh keypair and sends the public key over. The receiver, typically the server, generates a shared secret and encrypts (“encapsulates”) it for the initiator’s public key. It returns the ciphertext to the initiator, who finally decrypts (“decapsulates”) the shared secret with its private key.

With Diffie–Hellman, both parties generate a keypair. Because of the magic of Diffie–Hellman, there is a unique shared secret between every combination of a public and private key. Again, the initiator sends its public key. The receiver combines the received public key with its own private key to create the shared secret and returns its public key with which the initiator can also compute the shared secret.

Interactive versus non-interactive key agreement

As an aside, in this simple key agreement (such as in TLS), there is not a big difference between using a KEM or Diffie–Hellman: the number of round-trips is exactly the same. In fact, we’re using Diffie–Hellman essentially as a KEM. This, however, is not the case for all protocols: for instance, the X3DH handshake of Signal can’t be done with plain KEMs and requires the full flexibility of Diffie–Hellman.
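To make the "Diffie–Hellman used as a KEM" remark concrete, here is a minimal sketch using X25519 from Go's standard `crypto/ecdh` package. The function names `encapsulate`, `decapsulate` and `roundTrip` are our own for illustration and are not part of any library; a real protocol would also run the raw shared secret through a key derivation function.

```go
package main

import (
	"bytes"
	"crypto/ecdh"
	"crypto/rand"
	"fmt"
)

// encapsulate plays the receiver: it generates an ephemeral X25519 keypair,
// derives the shared secret against the initiator's public key, and returns
// its own public key, which takes the role of the KEM "ciphertext".
func encapsulate(initiatorPub *ecdh.PublicKey) (ciphertext, sharedSecret []byte, err error) {
	ephemeral, err := ecdh.X25519().GenerateKey(rand.Reader)
	if err != nil {
		return nil, nil, err
	}
	sharedSecret, err = ephemeral.ECDH(initiatorPub)
	if err != nil {
		return nil, nil, err
	}
	return ephemeral.PublicKey().Bytes(), sharedSecret, nil
}

// decapsulate plays the initiator: it recovers the shared secret from the
// "ciphertext" (the receiver's public key) and its own private key.
func decapsulate(initiatorPriv *ecdh.PrivateKey, ciphertext []byte) ([]byte, error) {
	receiverPub, err := ecdh.X25519().NewPublicKey(ciphertext)
	if err != nil {
		return nil, err
	}
	return initiatorPriv.ECDH(receiverPub)
}

// roundTrip runs one full key agreement and reports whether both sides
// ended up with the same shared secret.
func roundTrip() (bool, error) {
	initiator, err := ecdh.X25519().GenerateKey(rand.Reader)
	if err != nil {
		return false, err
	}
	ct, receiverSecret, err := encapsulate(initiator.PublicKey())
	if err != nil {
		return false, err
	}
	initiatorSecret, err := decapsulate(initiator, ct)
	if err != nil {
		return false, err
	}
	return bytes.Equal(initiatorSecret, receiverSecret), nil
}

func main() {
	ok, err := roundTrip()
	if err != nil {
		panic(err)
	}
	fmt.Println("shared secrets match:", ok)
}
```

The message flow is the same as for a KEM: one public key goes out, one reply comes back, and both ends hold the secret.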

Now that we know how to compare KEMs and Diffie–Hellman, how does Kyber measure up?

Kyber

Kyber is a balanced post-quantum KEM. It is very fast: much faster than X25519, which is already known for its speed. Its main drawback, common to many post-quantum KEMs, is that Kyber has relatively large ciphertext and key sizes: compared to X25519 it adds 1,504 bytes. Is this problematic?
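The 1,504-byte figure can be reproduced with a little arithmetic. The sketch below assumes the round-3 Kyber512 sizes (800-byte public key, 768-byte ciphertext) and the 32-byte X25519 public keys sent in each direction; the constant and function names are ours.

```go
package main

import "fmt"

// Sizes in bytes. The Kyber512 values are the round-3 parameters and are
// stated here as an assumption, since the text only quotes the difference.
const (
	x25519PublicKey    = 32
	kyber512PublicKey  = 800
	kyber512Ciphertext = 768
)

// extraBytes returns how many more bytes a Kyber512 key agreement puts on
// the wire than an X25519 key agreement.
func extraBytes() int {
	x25519Total := 2 * x25519PublicKey // each side sends one public key
	kyberTotal := kyber512PublicKey + kyber512Ciphertext
	return kyberTotal - x25519Total
}

func main() {
	fmt.Println("additional bytes:", extraBytes()) // 1568 - 64 = 1504
}
```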

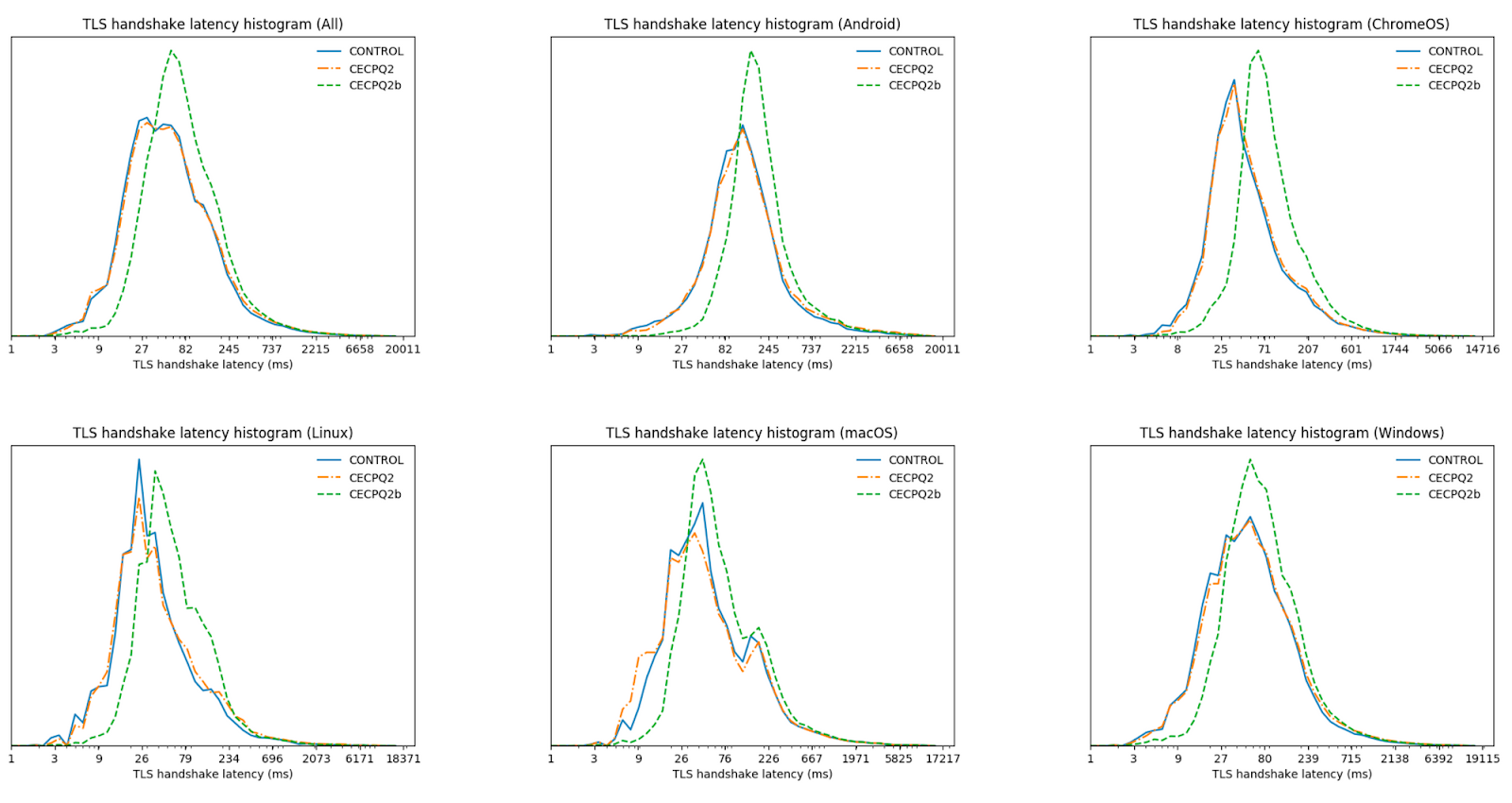

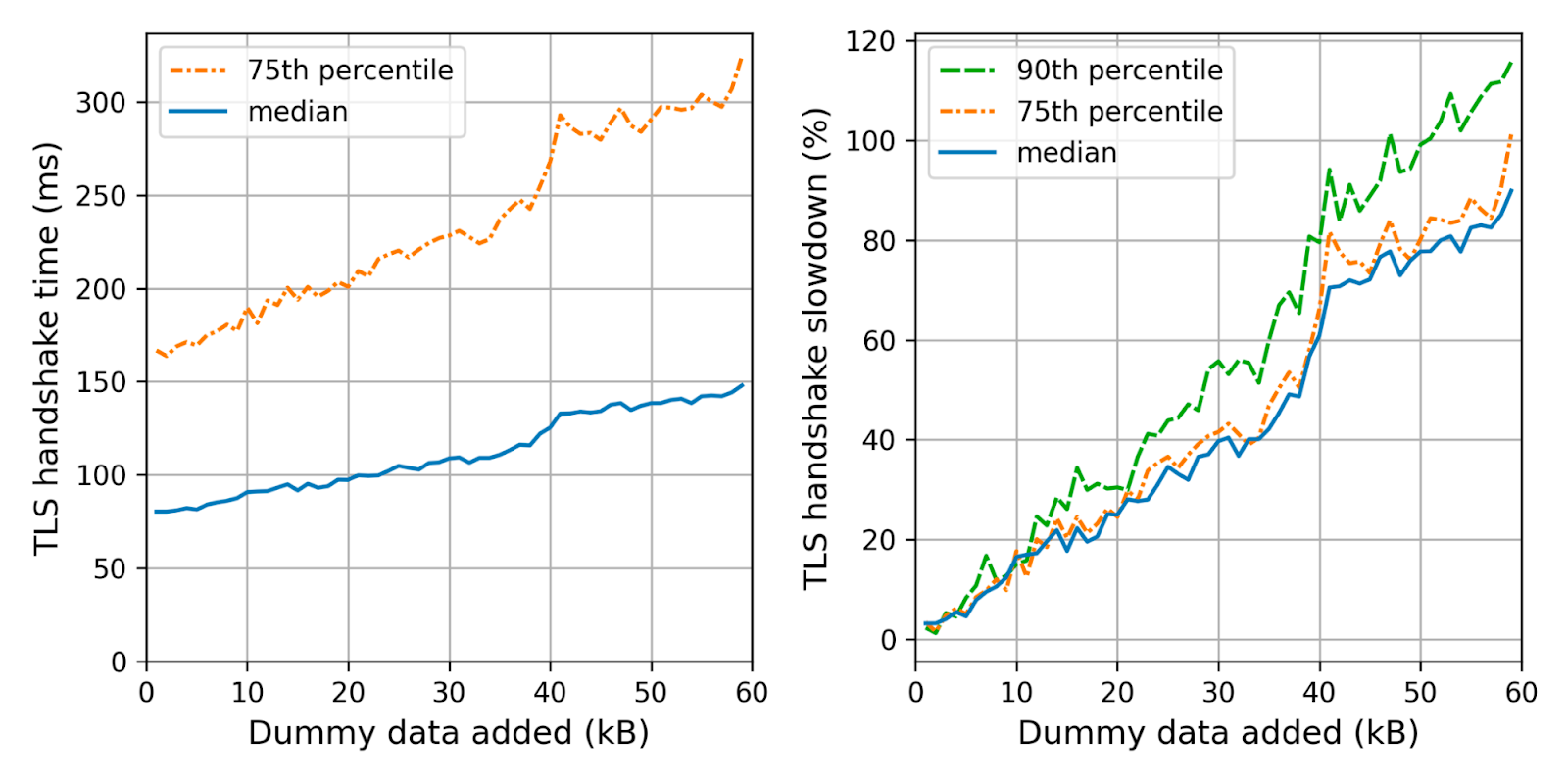

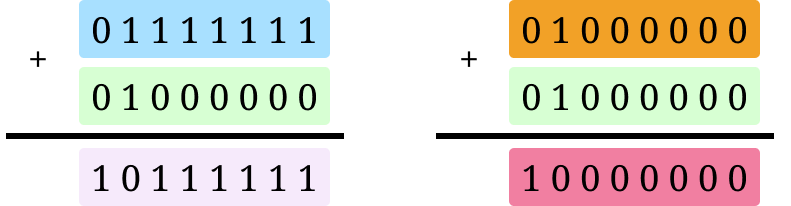

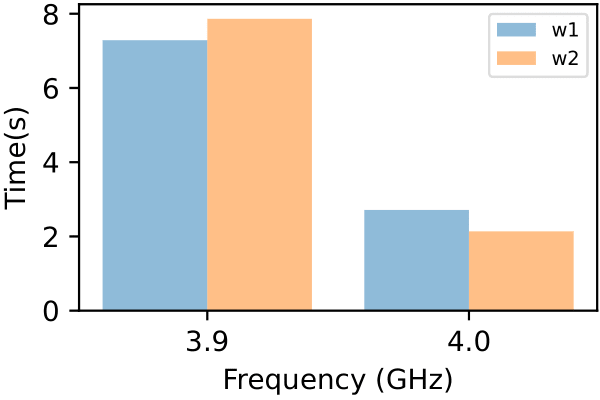

We have some indirect data. Back in 2019 together with Google we tested two post-quantum KEMs, NTRU-HRSS and SIKE in Chrome. SIKE has very small keys, but is computationally very expensive. NTRU-HRSS, on the other hand, has similar performance characteristics to Kyber, but is slightly bigger and slower. This is what we found:

In this experiment we used a combination (a hybrid) of the post-quantum KEM and X25519. Thus NTRU-HRSS couldn’t benefit from its speed compared to X25519. Even with this disadvantage, the difference in performance is very small. Thus we expect that switching to a hybrid of Kyber and X25519 will have little performance impact.

So can we switch to post-quantum TLS today? We would love to. However, we have to be a bit careful: some TLS implementations are brittle and crash on the larger KeyShare message that contains the bigger post-quantum keys. We will work hard to find ways to mitigate these issues, as was done to deploy TLS 1.3. Stay tuned!

The other finalists

It’s interesting to have a look at the KEMs that didn’t make the cut. NIST intends to standardize some of these in a fourth round. One reason is to increase the diversity in security assumptions in case there is a breakthrough in attacks on structured lattices on which Kyber is based. Another reason is that some of these schemes have specialized, but very useful applications. Finally, some of these schemes might be standardized outside of NIST.

| Structured lattices | Backup | Specialists |
|---|---|---|
| NTRU | BIKE 4️⃣ | Classic McEliece 4️⃣ |
| NTRU Prime | HQC 4️⃣ | SIKE 4️⃣ |
| SABER | FrodoKEM | |

The finalists and candidates of the third round of the competition. The ones marked with 4️⃣ are proceeding to a fourth round and might yet be standardized.

The structured lattice generalists

Just like Kyber, the KEMs SABER, NTRU and NTRU Prime are all structured lattice schemes that are very similar in performance to Kyber. There are some finer differences, but any one of these KEMs would’ve been a great pick. And they still are: OpenSSH 9.0 chose to implement NTRU Prime.

The backup generalists

BIKE, HQC and FrodoKEM are also balanced KEMs, but they’re based on three different underlying hard problems. Unfortunately they’re noticeably less efficient, both in key sizes and computation. A breakthrough in the cryptanalysis of structured lattices is possible, though, and in that case it’s nice to have backups. Thus NIST is advancing BIKE and HQC to a fourth round.

While NIST chose not to advance FrodoKEM, which is based on unstructured lattices, Germany’s BSI prefers it.

The specialists

The last group of post-quantum cryptographic algorithms under NIST’s consideration are the specialists. We’re happy that both are advancing to the fourth round as they can be of great value in just the right application.

First up is Classic McEliece: it has rather unbalanced performance characteristics with its large public key (261kB) and small ciphertexts (128 bytes). This makes McEliece unsuitable for the ephemeral key exchange of TLS, where we need to transmit the public key. On the other hand, McEliece is ideal when the public key is distributed out-of-band anyway, as is often the case in applications and mobile apps that pin certificates. To use McEliece in this way, we need to change TLS a bit. Normally the server authenticates itself by sending a signature on the handshake. Instead, the client can encrypt a challenge to the KEM public key of the server. Being able to decrypt it is an implicit authentication. This variation of TLS is known as KEMTLS and also works great with Kyber when the public key isn’t known beforehand.

Finally, there is SIKE, which is based on supersingular isogenies. It has very small key and ciphertext sizes. Unfortunately, it is computationally more expensive than the other contenders.

Digital signatures

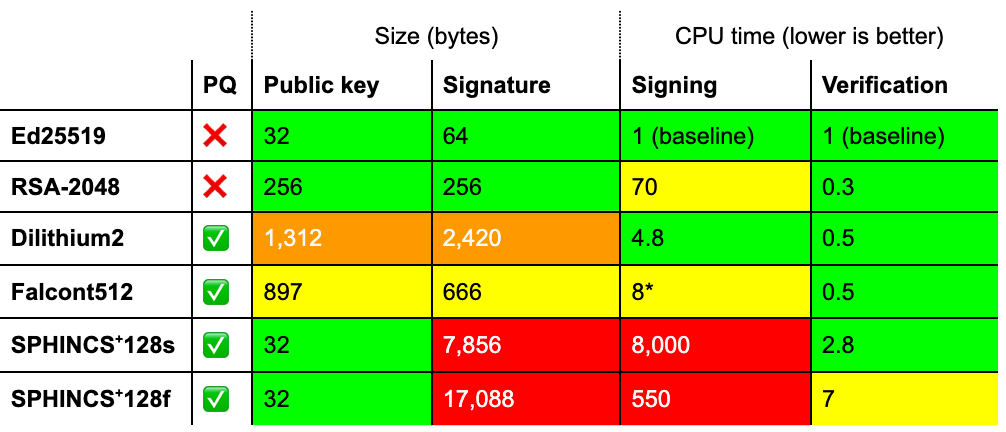

As we just saw, the situation for post-quantum key agreement isn’t too bad: Kyber, the chosen scheme, is somewhat larger, but it offers computational efficiency in return. The situation for post-quantum signatures is worse: none of the schemes fit the bill on their own, each for different reasons. We discussed these issues at length for ten of them in a deep-dive last year. Let’s restrict ourselves for the moment to the schemes that were most likely to be standardized and compare them against Ed25519 and RSA-2048, the schemes that are in common use today.

Performance characteristics of NIST’s chosen signature schemes compared to Ed25519 and RSA-2048. We compare instances of security level 1, see below. Timings vary considerably by platform and implementation constraints and should be taken as a rough indication only. SPHINCS+ was timed with simple haraka as the underlying hash function. (*) Falcon requires a suitable double-precision floating-point unit for fast signing.

Floating points: Falcon’s Achilles heel

All of these schemes have much larger signatures than those commonly used today. Looking at just these numbers, Falcon is the best of the worst. It, however, has a weakness that this table doesn’t show: it requires fast constant-time double-precision floating-point arithmetic to have acceptable signing performance.

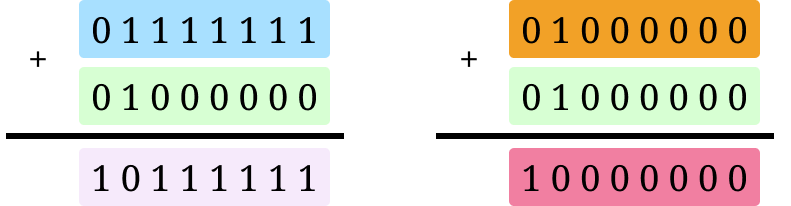

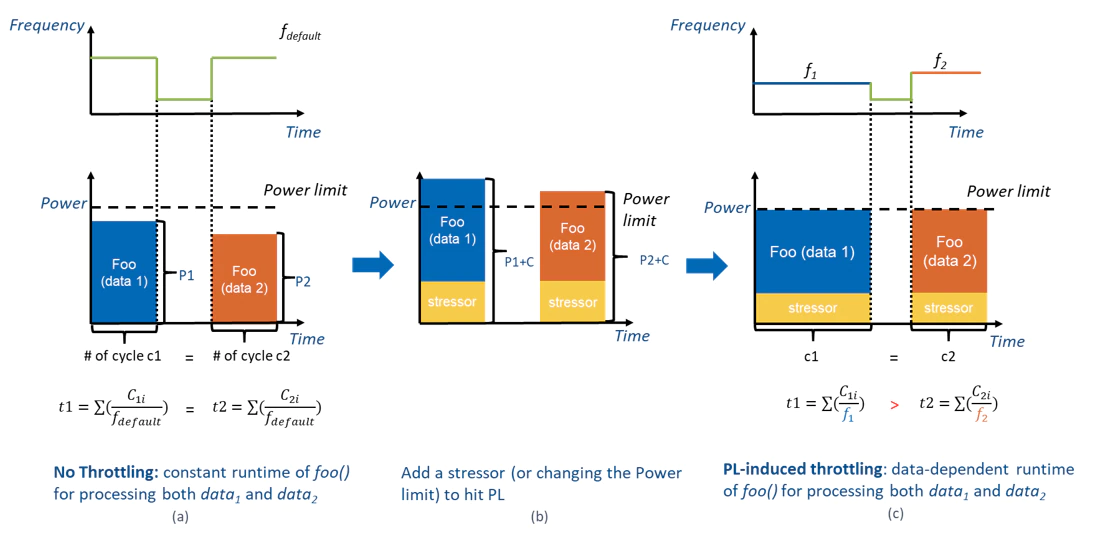

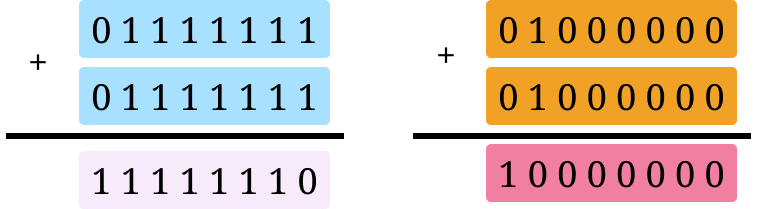

Let’s break that down. Constant time means that the time the operation takes does not depend on the data processed. If the time to create a signature depends on the private key, then the private key can often be recovered by measuring how long it takes to create a signature. Writing constant-time code is hard, but over the years cryptographers have got it figured out for integer arithmetic.
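As a small illustration of the difference, compare a naive byte comparison with the constant-time one from Go's standard `crypto/subtle` package. This is only a sketch of the idea on a simple integer operation; it says nothing about Falcon's floating-point code, and the function names `leakyEqual` and `constantTimeEqual` are ours.

```go
package main

import (
	"crypto/subtle"
	"fmt"
)

// leakyEqual returns as soon as it finds a mismatch, so its running time
// reveals how long the matching prefix of the two inputs is.
func leakyEqual(a, b []byte) bool {
	if len(a) != len(b) {
		return false
	}
	for i := range a {
		if a[i] != b[i] {
			return false // early exit: timing depends on the data
		}
	}
	return true
}

// constantTimeEqual inspects every byte of equal-length inputs regardless
// of where they differ, so timing does not depend on their contents.
func constantTimeEqual(a, b []byte) bool {
	return subtle.ConstantTimeCompare(a, b) == 1
}

func main() {
	secret := []byte("correct horse battery staple")
	guess := []byte("correct horse battery stapler")[:len(secret)]
	fmt.Println(leakyEqual(secret, guess), constantTimeEqual(secret, guess))
}
```

Both functions return the same answers; only the second avoids leaking where the first mismatch occurs.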

Falcon, crucially, is the first big cryptographic algorithm to use double-precision floating-point arithmetic. Initially it wasn’t clear at all whether Falcon could be implemented in constant-time, but impressively, Falcon was implemented in constant-time for several different CPUs, which required several clever workarounds for certain CPU instructions.

Despite this achievement, Falcon’s constant-timeness is built on shaky grounds. The next generation of Intel CPUs might add an optimization that breaks Falcon’s constant-timeness. Also, many CPUs today do not even have fast constant-time double-precision operations. And then still, there might be an obscure bug that has been overlooked.

In time it might be figured out how to do constant-time arithmetic on the FPU robustly, but we feel it’s too early to deploy Falcon where the timing of signature minting can be measured. Notwithstanding, Falcon is a great choice for offline signatures such as those in certificates.

Dilithium’s size

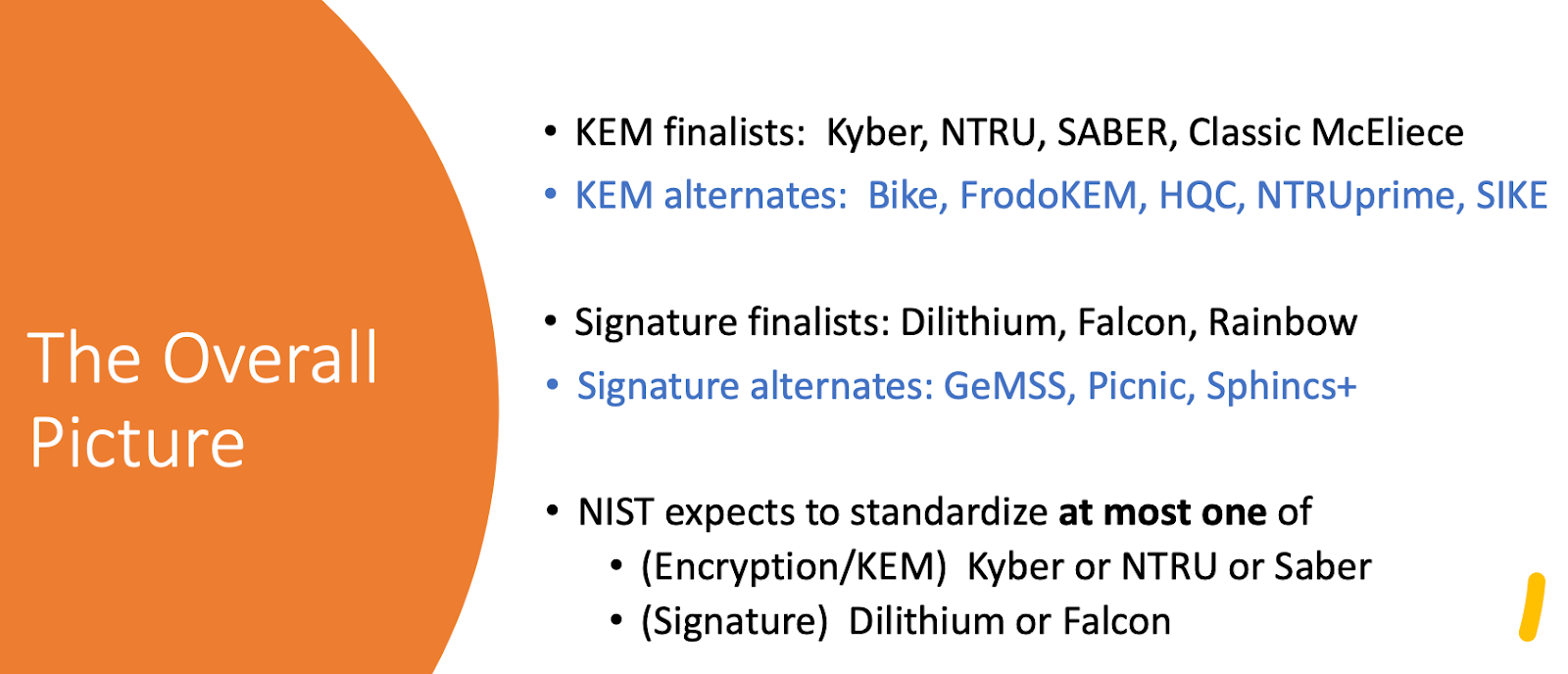

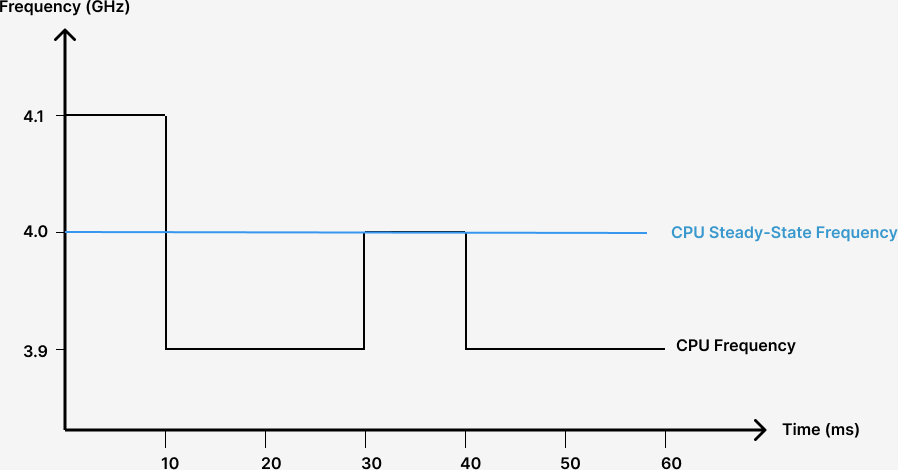

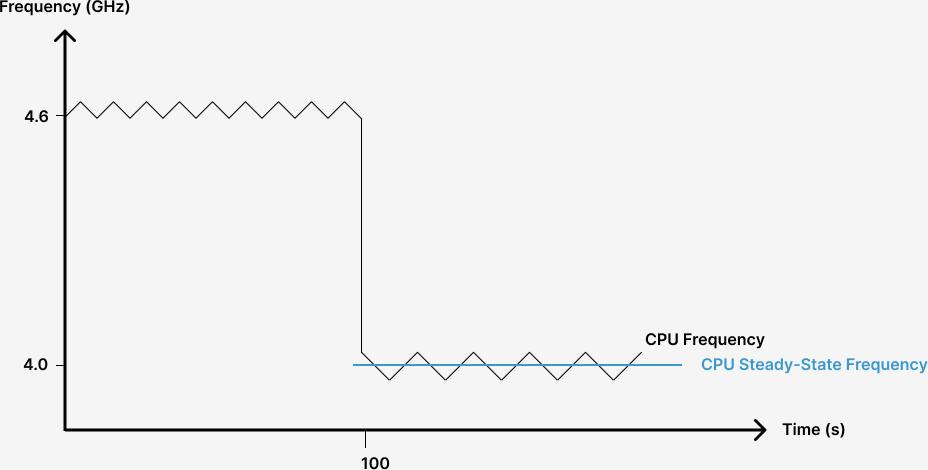

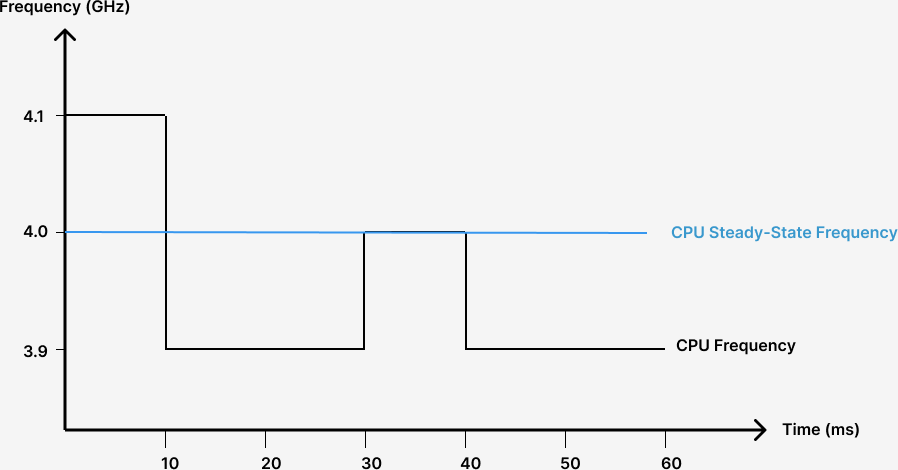

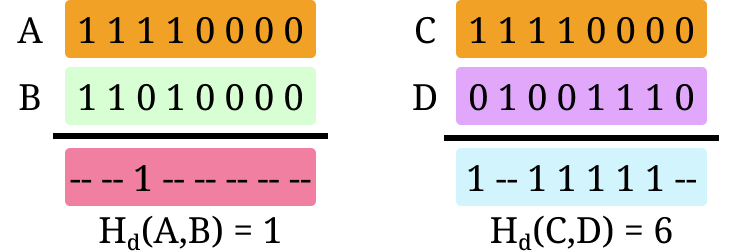

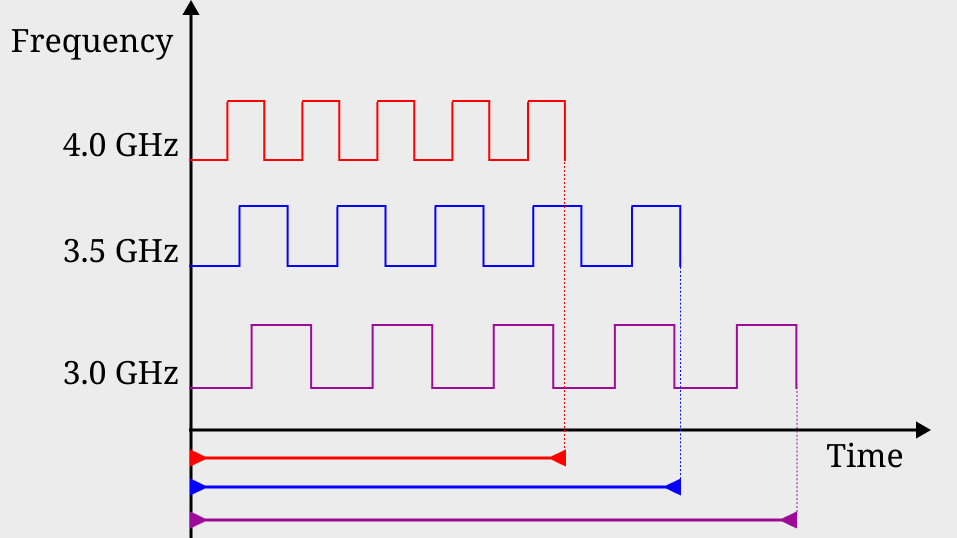

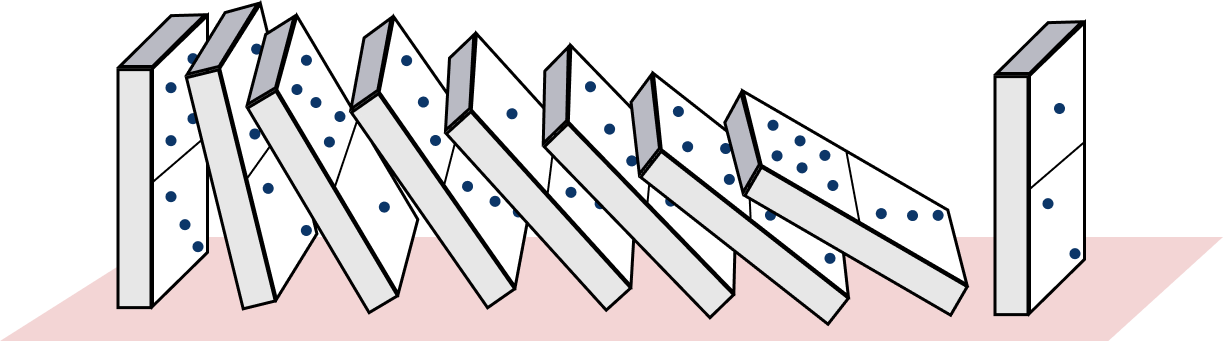

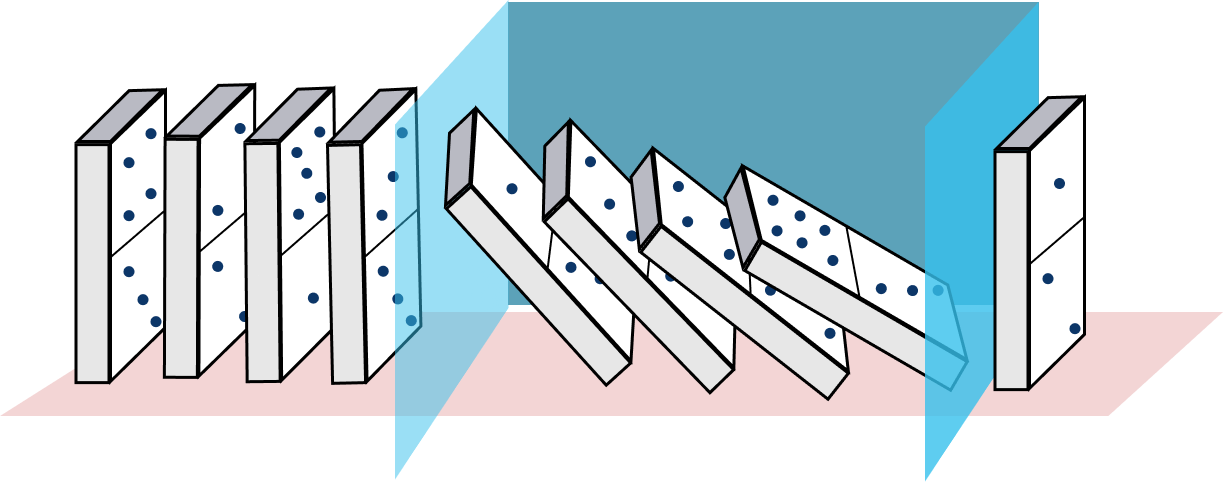

This brings us to Dilithium. Compared to Falcon it’s easy to implement safely and has better signing performance to boot. Its signatures and public keys are much larger though, which is problematic. For example, to each browser visiting this very page, we sent six signatures and two public keys. If we’d replace them all with Dilithium2 we would be looking at 17kB of additional data. Last year, we ran an experiment to see the impact of additional data in the TLS handshake:

There are some caveats to point out: first, we used a big 30-segment initial congestion window (icwnd). With a normal icwnd, the bump at 40KB moves to 10KB. Secondly, the height of this bump is the round-trip time (RTT), which due to our broadly distributed network, is very low for us. Thus, switching to Dilithium alone might well double your TLS handshake times. More disturbingly, we saw that some connections stopped working when we added too much data:

We expect this was caused by misbehaving middleboxes. Taken together, we concluded that early adoption of post-quantum signatures on the Internet would likely be more successful if those six signatures and two public keys would fit in 9KB. This can be achieved by using Dilithium for the handshake signature and Falcon for the other (offline) signatures.
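The numbers above can be checked with a rough byte count. This sketch assumes the round-3 sizes for Dilithium2 (2,420-byte signature, 1,312-byte public key) and Falcon-512 (666-byte signature, 897-byte public key). It also assumes a particular split, which the text does not spell out: the handshake signature and the leaf public key use the online scheme, while the other five signatures and the second public key use the offline scheme.

```go
package main

import "fmt"

// scheme holds the signature and public-key sizes of a signature scheme, in bytes.
type scheme struct {
	name      string
	signature int
	publicKey int
}

// Round-3 parameter sizes, stated as assumptions.
var (
	dilithium2 = scheme{name: "Dilithium2", signature: 2420, publicKey: 1312}
	falcon512  = scheme{name: "Falcon-512", signature: 666, publicKey: 897}
)

// handshakeBytes totals six signatures and two public keys: one signature
// and one public key from the online scheme, five signatures and one public
// key from the offline scheme.
func handshakeBytes(online, offline scheme) int {
	const offlineSignatures = 5
	return online.signature + online.publicKey +
		offlineSignatures*offline.signature + offline.publicKey
}

func main() {
	fmt.Println("Dilithium2 everywhere:", handshakeBytes(dilithium2, dilithium2))
	fmt.Println("Dilithium2 + Falcon-512:", handshakeBytes(dilithium2, falcon512))
}
```

Under these assumptions, Dilithium2 everywhere comes to 17,144 bytes, while the mixed setup comes to 7,959 bytes and so fits within the 9KB budget.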

At most one of Dilithium or Falcon

Unfortunately, NIST stated on several occasions that it would choose only two signature schemes, but not both Falcon and Dilithium:

The reason given is that both Dilithium and Falcon are based on structured lattices and thus do not add more security diversity. Because of the difficulty of implementing Falcon correctly, we expected NIST to standardize Dilithium and as a backup SPHINCS+. With that guess, we saw a big challenge ahead: to keep the Internet fast we would need some difficult and rigorous changes to the protocols.

The twist

However, to everyone’s surprise, NIST picked both! NIST chose to standardize Dilithium, Falcon and SPHINCS+. This is a very pleasant surprise for the Internet: it means that post-quantum authentication will be much simpler to adopt.

SPHINCS+, the conservative choice

In the excitement of the fight between Dilithium and Falcon, we could almost forget about SPHINCS+, a stateless hash-based signature. Its big advantage is that its security is based on the second-preimage resistance of the underlying hash-function, which is well understood. It is not a stretch to say that SPHINCS+ is the most conservative choice for a signature scheme, post-quantum or otherwise. But even as a co-submitter of SPHINCS+, I have to admit that its performance isn’t that great.

There is a lot of flexibility in the parameter choices for SPHINCS+: there are tradeoffs between signature size, signing time, verification time and the maximum number of signatures that can be minted. Of the current parameter sets, the “s” are optimized for size and “f” for signing speed; both chosen to allow 2^64 signatures. NIST has hinted at reducing the signature limit, which would improve performance. A custom choice of parameters for a particular application would improve it even more, but would still trail Dilithium.

Having discussed NIST choices, let’s have a look at those that were left out.

The other finalists

There were three other finalists: GeMSS, Picnic and Rainbow. None of these are progressing to a fourth round.

Picnic is a conservative choice similar to SPHINCS+. Its construction is interesting: it is based on the secure multiparty computation of a block cipher. To be efficient, a non-standard block cipher is chosen. This makes Picnic’s assumptions a bit less conservative, which is why NIST preferred SPHINCS+.

GeMSS and Rainbow are specialists: they have large public key sizes (hundreds of kilobytes), but very small signatures (33–66 bytes). They would be great for applications where the public key can be distributed out of band, such as for the Signed Certificate Timestamps included in certificates for Certificate Transparency. Unfortunately, both turned out to be broken.

Signature schemes on the horizon

Although we expect Falcon and Dilithium to be practical for the Internet, there is ample room for improvement. Many new signature schemes have been proposed after the start of the competition, which could help out a lot. NIST recognizes this and is opening a new competition for post-quantum signature schemes.

A few schemes that have caught our eye already are UOV, which has similar performance trade-offs to those for GeMSS and Rainbow; SQISign, which has small signatures, but is computationally expensive; and MAYO, which looks like it might be a great general-purpose signature scheme.

Stateful hash-based signatures

Finally, we’d be remiss not to mention the post-quantum signature schemes that have already been standardized by NIST: the stateful hash-based signature schemes LMS and XMSS. They share the same conservative security as their sibling SPHINCS+, but have much better performance. The rub is that for each keypair there is a finite number of signature slots and each signature slot can only be used once. If a slot is used twice, the scheme is insecure. This is why they are called stateful: the signer must remember the state of all slots that have been used in the past, and any mistake is fatal. Keeping the state perfectly can be very challenging.
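To give a feel for what "keeping state" means, here is a toy slot counter. It is not LMS or XMSS and does no cryptography; it only sketches the bookkeeping a stateful signer has to get right. The type `slotCounter` and its method `reserve` are hypothetical names for this illustration.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

var errExhausted = errors.New("all signature slots have been used")

// slotCounter hands out each one-time signature slot exactly once.
type slotCounter struct {
	mu    sync.Mutex
	next  uint32 // index of the next unused slot
	total uint32 // number of slots the keypair supports
}

// reserve returns the next unused slot index, or an error once the keypair
// is exhausted. A real signer must durably persist the updated counter
// before releasing a signature, or a crash or restored backup could cause
// a slot to be reused.
func (c *slotCounter) reserve() (uint32, error) {
	c.mu.Lock()
	defer c.mu.Unlock()
	if c.next >= c.total {
		return 0, errExhausted
	}
	slot := c.next
	c.next++
	return slot, nil
}

func main() {
	c := &slotCounter{total: 2}
	for i := 0; i < 3; i++ {
		slot, err := c.reserve()
		fmt.Println(slot, err)
	}
}
```

The hard part in practice is not the counter itself but guaranteeing it never goes backwards across crashes, backups, virtual machine snapshots and replicas.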

What else

What’s next?

NIST will draft standards for the selected schemes and request public feedback on them. There might be changes to the algorithms, but we do not expect anything major. The standards are expected to be finalized in 2024.

In the coming months, many languages, libraries and protocols will already add preliminary support for the current version of Kyber and the other post-quantum algorithms. We’re helping out to make post-quantum available to the Internet as soon as possible: we’re working within the IETF to add Kyber to TLS and will contribute upstream support to popular open-source libraries.

Start experimenting with Kyber today

Now is a good time for you to try out Kyber in your software stacks. We were lucky to correctly guess Kyber would be picked and have experience running it internally. Our tests so far show it performs great. Your requirements might differ, so try it out yourself.

The reference implementation in C is excellent. The Open Quantum Safe project integrates it with various TLS libraries, but beware: the algorithm identifiers and scheme might still change, so be ready to migrate.

Our CIRCL library has a fast independent implementation of Kyber in Go. We implemented Kyber ourselves so that we could help tease out any implementation bugs or subtle underspecification.

Experimenting with post-quantum signatures

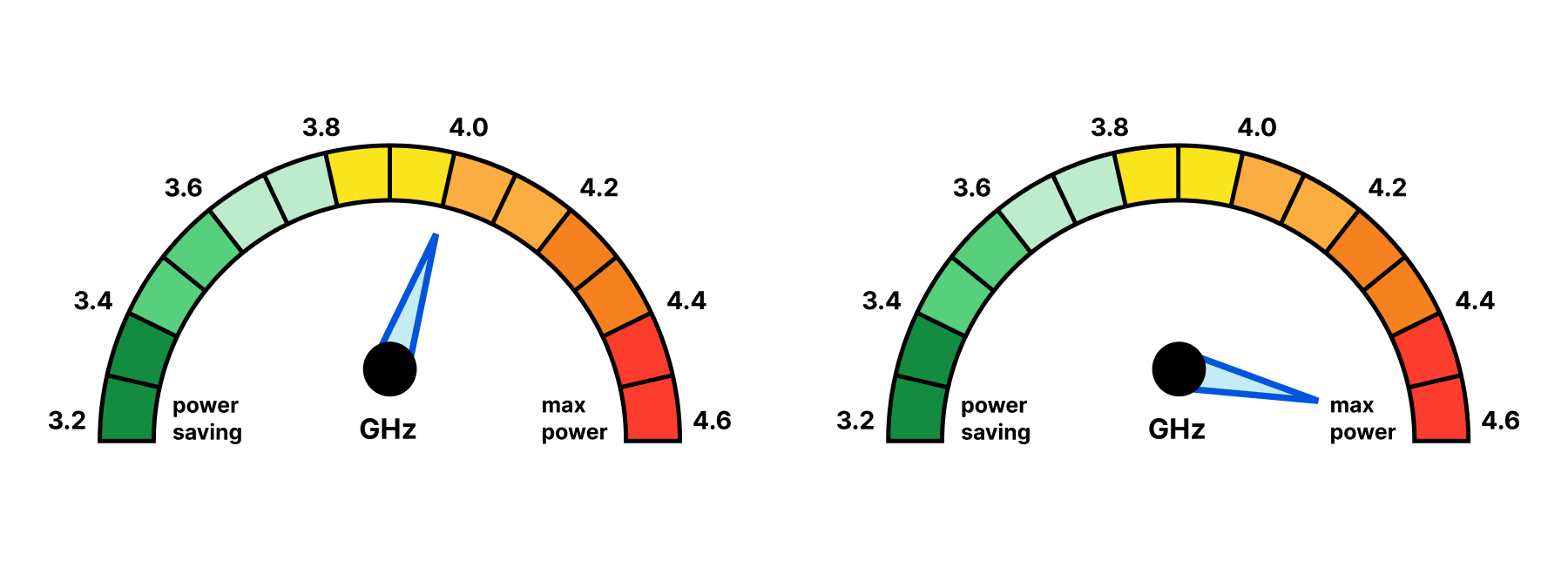

Post-quantum signatures are not as urgent, but might require more engineering to get right. First off, which signature scheme to pick?

- Are large signatures and slow operations acceptable? Go for SPHINCS+.
- Do you need more performance?
  - Can your signature generation be timed, for instance when generated on-the-fly? Then go for (a hybrid, see below, with) Dilithium.
  - For offline signatures, go for (a hybrid with) Falcon.
- If you can keep a state perfectly, check out XMSS/LMS.

Open Quantum Safe can be used to test these out. Our CIRCL library also has a fast independent implementation of Dilithium in Go. We’ll add Falcon and SPHINCS+ soon.

Hybrids

A hybrid is a combination of a classical and a post-quantum scheme. For instance, we can combine Kyber512 with X25519 to create a single Kyber512X key agreement. The advantage of a hybrid is that the data remains secure against non-quantum attackers even if Kyber512 turns out broken. It is important to note that it’s not just about the algorithm, but also the implementation: Kyber512 might be perfectly secure, but an implementation might leak via side-channels. The downside is that two key-exchanges are performed, which takes more CPU cycles and bytes on the wire. For the moment, we prefer sticking with hybrids, but we will revisit this soon.
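One simple way to picture a hybrid key agreement is to hash the two shared secrets together, as in the sketch below. This is an illustrative combiner under our own assumptions, not the construction of any particular standard: it assumes both secrets have a fixed length, and real protocols such as TLS would feed the concatenated secrets into their own key schedule. The function name `combine` is ours.

```go
package main

import (
	"crypto/sha256"
	"fmt"
)

// combine derives one session key from a classical and a post-quantum
// shared secret by hashing their concatenation. Both inputs are assumed to
// have a fixed, known length so the concatenation is unambiguous.
func combine(classical, postQuantum []byte) [32]byte {
	input := make([]byte, 0, len(classical)+len(postQuantum))
	input = append(input, classical...)
	input = append(input, postQuantum...)
	return sha256.Sum256(input)
}

func main() {
	classical := make([]byte, 32)   // stand-in for an X25519 shared secret
	postQuantum := make([]byte, 32) // stand-in for a Kyber512 shared secret
	postQuantum[0] = 1

	key := combine(classical, postQuantum)
	fmt.Printf("session key: %x\n", key)
}
```

The intent is that an attacker needs both input secrets to compute the session key, so a break of only one of the two schemes is not enough.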

Post-quantum security levels

Each algorithm has different parameters targeting various post-quantum security levels. Up till now we’ve only discussed the performance characteristics of security level 1 (or 2 in case of Dilithium, which doesn’t have level 1 parameters.) The definition of the security levels is rather interesting: they’re defined as being as hard to crack by a classical or quantum attacker as specific instances of AES and SHA:

| Level | Definition, at least as hard to break as … |
|---|---|
| 1 | To recover the key of AES-128 by exhaustive search |
| 2 | To find a collision in SHA256 by exhaustive search |
| 3 | To recover the key of AES-192 by exhaustive search |
| 4 | To find a collision in SHA384 by exhaustive search |
| 5 | To recover the key of AES-256 by exhaustive search |

So which security level should we pick? Is level 1 good enough? We’d need to understand how hard it is for a quantum computer to crack AES-128.

Grover’s algorithm

In 1996, two years after Shor’s paper, Lov Grover published his quantum search algorithm. With it, you can find the AES-128 key (given known plain and ciphertext) with only 2^64 executions of the cipher in superposition. That sounds much faster than the 2^127 tries on average for a classical brute-force attempt. In fact, it sounds like security level 1 isn’t that secure at all. Don’t be alarmed: level 1 is much more secure than it sounds, but it requires some context.

To start, a classical brute-force attempt can be parallelized: millions of machines can participate, sharing the work. Grover’s algorithm, on the other hand, doesn’t parallelize well because the quadratic speedup disappears over that portion. To wit, a billion quantum computers would still have to do 2^49 iterations each to crack AES-128.

Then each iteration requires many gates. It’s estimated that these 2^49 operations take roughly 2^64 noiseless quantum gates. If each of our billion quantum computers could execute a billion noiseless quantum gates per second, then it’d still take 500 years.
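The arithmetic behind these estimates can be sketched as follows. It assumes the search space of 2^128 keys is split evenly over the machines, so that each machine needs about the square root of its share in Grover iterations, and it takes the figure of 2^64 gates per machine as given. The function names are ours.

```go
package main

import (
	"fmt"
	"math"
)

// groverIterationsLog2 returns log2 of the Grover iterations each machine
// needs when a search over 2^keyBits keys is split evenly across the given
// number of quantum computers: sqrt(2^keyBits / machines).
func groverIterationsLog2(keyBits, machines float64) float64 {
	return (keyBits - math.Log2(machines)) / 2
}

// yearsToRun converts 2^gatesLog2 sequential gates into years at the given
// gate rate.
func yearsToRun(gatesLog2, gatesPerSecond float64) float64 {
	const secondsPerYear = 365.25 * 24 * 3600
	return math.Exp2(gatesLog2) / gatesPerSecond / secondsPerYear
}

func main() {
	fmt.Printf("iterations per machine: about 2^%.0f\n", groverIterationsLog2(128, 1e9))
	fmt.Printf("years at 1e9 gates per second: about %.0f\n", yearsToRun(64, 1e9))
}
```

With a billion machines this gives roughly 2^49 iterations each, and 2^64 gates at a billion gates per second works out to a little under 600 years, in line with the rough figure of 500 years.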

That already sounds more secure, but we’re not done. Quantum computers do not execute noiseless quantum gates: they’re analogue machines. Every operation has a little bit of noise. Does this mean that quantum computing is hopeless? Not at all! There are clever algorithms to turn, say, a million noisy qubits into one less noisy qubit. It doesn’t just add qubits, but also extra gates. How much depends very much on the exact details of the quantum computer.

It is not inconceivable that in the future there will be quantum computers that effectively execute far more than a billion noiseless gates per second, but it will likely be decades after Shor’s algorithm is practical. This all is a long-winded way of saying that security level 1 seems solid for the foreseeable future.

Hedging against attacks

A different reason to pick a higher security level is to hedge against better attacks on the algorithm. This makes a lot of sense, but it is important to note that this isn’t a foolproof strategy:

- Not all attacks are small improvements. It’s possible that improvements in cryptanalysis break all security levels at once.

- Higher security levels do not protect against implementation flaws, such as (new) timing vulnerabilities.

A different aspect, that’s arguably more important than picking a high number, is crypto agility: being able to switch to a new algorithm or implementation in case of a break or other trouble. Let’s hope that we will not need it, but now that we’re going to switch anyway, it’s nice to make it easier in the future.

CIRCL is Post-Quantum Enabled

We already mentioned CIRCL a few times: it’s our optimized crypto-library for Go whose development we started in 2019. CIRCL already contains support for several post-quantum algorithms such as the KEMs Kyber, SIKE and FrodoKEM and the signature scheme Dilithium. The code is up to date and compliant with test vectors from the third round. CIRCL is readily usable in Go programs either as a library or natively as part of Go using this fork.

One goal of CIRCL is to enable experimentation with post-quantum algorithms in TLS. For instance, we ran a measurement study to evaluate the feasibility of the KEMTLS protocol for which we’ve adapted the TLS package of the Go library.

As an example, this code uses CIRCL to sign a message with eddilithium2, a hybrid signature scheme pairing Ed25519 with Dilithium mode 2.

```go
package main

import (
	"crypto"
	"crypto/rand"
	"fmt"

	"github.com/cloudflare/circl/sign/eddilithium2"
)

func main() {
	// Generating random keypair.
	pk, sk, err := eddilithium2.GenerateKey(rand.Reader)
	if err != nil {
		panic(err)
	}

	// Signing a message.
	msg := []byte("Signed with CIRCL using " + eddilithium2.Scheme().Name())
	signature, err := sk.Sign(rand.Reader, msg, crypto.Hash(0))

	// Verifying signature.
	valid := eddilithium2.Verify(pk, msg, signature[:])

	fmt.Printf("Message: %v\n", string(msg))
	fmt.Printf("Signature (%v bytes): %x...\n", len(signature), signature[:4])
	fmt.Printf("Signature Valid: %v\n", valid)
	fmt.Printf("Errors: %v\n", err)
}
```

```
Message: Signed with CIRCL using Ed25519-Dilithium2
Signature (2484 bytes): 84d6882a...
Signature Valid: true
Errors: <nil>
```

As can be seen the application programming interface is the same as the crypto.Signer interface from the standard library. Try it out, and we’re happy to hear your feedback.

Conclusion

This is a big moment for the Internet. From a set of excellent options for post-quantum key agreement, NIST chose Kyber. With it, we can secure the data on the Internet today against quantum adversaries of the future, without compromising on performance.

On the authentication side, NIST pleasantly surprised us by choosing both Falcon and Dilithium against their earlier statements. This was a great choice, as it will make post-quantum authentication more practical than we expected it would be.

Together with the cryptography community, we have our work cut out for us: we aim to make the Internet post-quantum secure as fast as possible.

Want to follow along? Keep an eye on this blog or have a look at research.cloudflare.com.

Want to help out? We’re hiring and open to research visits.
