Post Syndicated from original https://xkcd.com/2687/

Post Syndicated from original https://xkcd.com/2687/

Post Syndicated from Eric Johnson original https://aws.amazon.com/blogs/compute/propagating-valid-mtls-client-certificate-identity-to-downstream-services-using-amazon-api-gateway/

This blog written by Omkar Deshmane, Senior SA and Anton Aleksandrov, Principal SA, Serverless.

This blog shows how to use Amazon API Gateway with a custom authorizer to process incoming requests, validate the mTLS client certificate, extract the client certificate subject, and propagate it to the downstream application in a base64 encoded HTTP header.

This pattern allows you to terminate mTLS at the edge so downstream applications do not need to perform client certificate validation. With this approach, developers can focus on application logic and offload mTLS certificate management and validation to a dedicated service, such as API Gateway.

Authentication is one of the core security aspects that you must address when building a cloud application. Successful authentication proves you are who you are claiming to be. There are various common authentication patterns, such as cookie-based authentication, token-based authentication, or the topic of this blog – a certificate-based authentication.

Transport Layer Security (TLS) certificates are at the core of a secure and safe internet. TLS certificates secure the connection between the client and server by encrypting data, ensuring private communication. When using the TLS protocol, the server must prove its identity to the client using a certificate signed by a certificate authority trusted by the client.

Mutual TLS (mTLS) introduces an additional layer of security, in which both the client and server must prove their identities to each other. Developers commonly use mTLS for application-to-application authentication — using digital certificates to represent both client and server apps is a common authentication pattern for building such workflows. We highly recommended decoupling the mTLS implementation from the application business logic so that you do not have to update the application when changing the mTLS configuration. It is a common pattern to implement the mTLS authentication and termination in a network appliance at the edge, such as Amazon API Gateway.

In this solution, we show a pattern of using API Gateway with an authorizer implemented with AWS Lambda to validate the mTLS client certificate, extract the client certificate subject, and propagate it to the downstream application in a base64 encoded HTTP header.

While this blog describes how to implement this pattern for identities extracted from the mTLS client certificates, you can generalize it and apply to propagating information obtained via any other means of authentication.

This blog includes a sample application implemented using the AWS Serverless Application Model (AWS SAM). It creates a demo environment containing resources like API Gateway, a Lambda authorizer, and an Amazon EC2 instance, which simulates the backend application.

The EC2 instance is used for the backend application to mimic common customer scenarios. You can use any other type of compute, such as Lambda functions or containerized applications with Amazon Elastic Container Service (Amazon ECS) or Amazon Elastic Kubernetes Service (Amazon EKS), as a backend application layer as well.

The following diagram shows the solution architecture:

Some resources created as part of this sample architecture deployment have associated costs, both when running and idle. This includes resources like Amazon Virtual Private Cloud (Amazon VPC), VPC NAT Gateway, and EC2 instances. We recommend deleting the deployed stack after exploring the solution to avoid unexpected costs. See the Cleaning Up section for details.

Refer to the project code repository for instructions to deploy the solution using AWS SAM. The deployment provisions multiple resources, taking several minutes to complete.

Following the successful deployment, refer to the RestApiEndpoint variable in the Output section to locate the API Gateway endpoint. Note this value for testing later.

There are two key areas in the sample project code.

In src/authorizer/index.js, the Lambda authorizer code extracts the subject from the client certificate. It returns the value as part of the context object to API Gateway. This allows API Gateway to use this value in the subsequent integration request.

const crypto = require('crypto');

exports.handler = async (event) => {

console.log ('> handler', JSON.stringify(event, null, 4));

const clientCertPem = event.requestContext.identity.clientCert.clientCertPem;

const clientCert = new crypto.X509Certificate(clientCertPem);

const clientCertSub = clientCert.subject.replaceAll('\n', ',');

const response = {

principalId: clientCertSub,

context: { clientCertSub },

policyDocument: {

Version: '2012-10-17',

Statement: [{

Action: 'execute-api:Invoke',

Effect: 'allow',

Resource: event.methodArn

}]

}

};

console.log('Authorizer Response', JSON.stringify(response, null, 4));

return response;

};

In template.yaml, API Gateway injects the client certificate subject extracted by the Lambda authorizer previously into the integration request as X-Client-Cert-Sub HTTP header. X-Client-Cert-Sub is a custom header name and you can choose any other custom header name instead.

SayHelloGetMethod:

Type: AWS::ApiGateway::Method

Properties:

AuthorizationType: CUSTOM

AuthorizerId: !Ref CustomAuthorizer

HttpMethod: GET

ResourceId: !Ref SayHelloResource

RestApiId: !Ref RestApi

Integration:

Type: HTTP_PROXY

ConnectionType: VPC_LINK

ConnectionId: !Ref VpcLink

IntegrationHttpMethod: GET

Uri: !Sub 'http://${NetworkLoadBalancer.DNSName}:3000/'

RequestParameters:

'integration.request.header.X-Client-Cert-Sub': 'context.authorizer.clientCertSub' You create a client key and certificate during the deployment, which are stored in the /certificates directory. Use the curl command to make a request to the REST API endpoint using these files.

curl --cert certificates/client.pem --key certificates/client.key \

<use the RestApiEndpoint found in CloudFormation output>The client request to API Gateway uses mTLS with a client certificate supplied for mutual TLS authentication. API Gateway uses the Lambda authorizer to extract the certificate subject, and inject it into the Integration request.

The HTTP server runs on the EC2 instance, simulating the backend application. It accepts the incoming request and echoes it back, supplying request headers as part of the response body. The HTTP response received from the backend application contains a simple message and a copy of the request headers sent from API Gateway to the backend.

One header is x-client-cert-sub with the Common Name value you provided to the client certificate during creation. Verify that the value matches the Common Name that you provided when generating the client certificate.

API Gateway validated the mTLS client certificate, used the Lambda authorizer to extract the subject common name from the certificate, and forwarded it to the downstream application.

Use the sam delete command in the api-gateway-certificate-propagation directory to delete the sample application infrastructure:

sam deleteYou can also refer to the project code repository for the clean-up instructions.

This blog shows how to use the API Gateway with a Lambda authorizer for mTLS client certificate validation, custom field extraction, and downstream propagation to backend systems. This pattern allows you to terminate mTLS at the edge so that downstream applications are not responsible for client certificate validation.

For additional documentation, refer to Using API Gateway with Lambda Authorizer. Download the sample code from the project code repository. For more serverless learning resources, visit Serverless Land.

Post Syndicated from The Atlantic original https://www.youtube.com/watch?v=3AconLNL6iI

Post Syndicated from The Atlantic original https://www.youtube.com/watch?v=tcWvZzM50O0

Post Syndicated from Netflix Technology Blog original https://netflixtechblog.com/orchestrating-data-ml-workflows-at-scale-with-netflix-maestro-aaa2b41b800c

by Jun He, Akash Dwivedi, Natallia Dzenisenka, Snehal Chennuru, Praneeth Yenugutala, Pawan Dixit

At Netflix, Data and Machine Learning (ML) pipelines are widely used and have become central for the business, representing diverse use cases that go beyond recommendations, predictions and data transformations. A large number of batch workflows run daily to serve various business needs. These include ETL pipelines, ML model training workflows, batch jobs, etc. As Big data and ML became more prevalent and impactful, the scalability, reliability, and usability of the orchestrating ecosystem have increasingly become more important for our data scientists and the company.

In this blog post, we introduce and share learnings on Maestro, a workflow orchestrator that can schedule and manage workflows at a massive scale.

Scalability and usability are essential to enable large-scale workflows and support a wide range of use cases. Our existing orchestrator (Meson) has worked well for several years. It schedules around 70 thousands of workflows and half a million jobs per day. Due to its popularity, the number of workflows managed by the system has grown exponentially. We started seeing signs of scale issues, like:

With the high growth of workflows in the past few years — increasing at > 100% a year, the need for a scalable data workflow orchestrator has become paramount for Netflix’s business needs. After perusing the current landscape of workflow orchestrators, we decided to develop a next generation system that can scale horizontally to spread the jobs across the cluster consisting of 100’s of nodes. It addresses the key challenges we face with Meson and achieves operational excellence.

The orchestrator has to schedule hundreds of thousands of workflows, millions of jobs every day and operate with a strict SLO of less than 1 minute of scheduler introduced delay even when there are spikes in the traffic. At Netflix, the peak traffic load can be a few orders of magnitude higher than the average load. For example, a lot of our workflows are run around midnight UTC. Hence, the system has to withstand bursts in traffic while still maintaining the SLO requirements. Additionally, we would like to have a single scheduler cluster to manage most of user workflows for operational and usability reasons.

Another dimension of scalability to consider is the size of the workflow. In the data domain, it is common to have a super large number of jobs within a single workflow. For example, a workflow to backfill hourly data for the past five years can lead to 43800 jobs (24 * 365 * 5), each of which processes data for an hour. Similarly, ML model training workflows usually consist of tens of thousands of training jobs within a single workflow. Those large-scale workflows might create hotspots and overwhelm the orchestrator and downstream systems. Therefore, the orchestrator has to manage a workflow consisting of hundreds of thousands of jobs in a performant way, which is also quite challenging.

Netflix is a data-driven company, where key decisions are driven by data insights, from the pixel color used on the landing page to the renewal of a TV-series. Data scientists, engineers, non-engineers, and even content producers all run their data pipelines to get the necessary insights. Given the diverse backgrounds, usability is a cornerstone of a successful orchestrator at Netflix.

We would like our users to focus on their business logic and let the orchestrator solve cross-cutting concerns like scheduling, processing, error handling, security etc. It needs to provide different grains of abstractions for solving similar problems, high-level to cater to non-engineers and low-level for engineers to solve their specific problems. It should also provide all the knobs for configuring their workflows to suit their needs. In addition, it is critical for the system to be debuggable and surface all the errors for users to troubleshoot, as they improve the UX and reduce the operational burden.

Providing abstractions for the users is also needed to save valuable time on creating workflows and jobs. We want users to rely on shared templates and reuse their workflow definitions across their team, saving time and effort on creating the same functionality. Using job templates across the company also helps with upgrades and fixes: when the change is made in a template it’s automatically updated for all workflows that use it.

However, usability is challenging as it is often opinionated. Different users have different preferences and might ask for different features. Sometimes, the users might ask for the opposite features or ask for some niche cases, which might not necessarily be useful for a broader audience.

Maestro is the next generation Data Workflow Orchestration platform to meet the current and future needs of Netflix. It is a general-purpose workflow orchestrator that provides a fully managed workflow-as-a-service (WAAS) to the data platform at Netflix. It serves thousands of users, including data scientists, data engineers, machine learning engineers, software engineers, content producers, and business analysts, for various use cases.

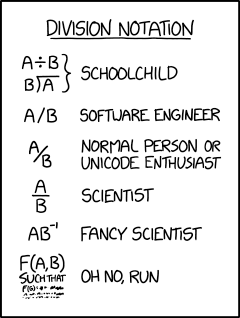

Maestro is highly scalable and extensible to support existing and new use cases and offers enhanced usability to end users. Figure 1 shows the high-level architecture.

In Maestro, a workflow is a DAG (Directed acyclic graph) of individual units of job definition called Steps. Steps can have dependencies, triggers, workflow parameters, metadata, step parameters, configurations, and branches (conditional or unconditional). In this blog, we use step and job interchangeably. A workflow instance is an execution of a workflow, similarly, an execution of a step is called a step instance. Instance data include the evaluated parameters and other information collected at runtime to provide different kinds of execution insights. The system consists of 3 main micro services which we will expand upon in the following sections.

Maestro ensures the business logic is run in isolation. Maestro launches a unit of work (a.k.a. Steps) in a container and ensures the container is launched with the users/applications identity. Launching with identity ensures the work is launched on-behalf-of the user/application, the identity is later used by the downstream systems to validate if an operation is allowed or not, for an example user/application identity is checked by the data warehouse to validate if a table read/write is allowed or not.

Workflow engine is the core component, which manages workflow definitions, the lifecycle of workflow instances, and step instances. It provides rich features to support:

We use Netflix open source project Conductor as a library to manage the workflow state machine in Maestro. It ensures to enqueue and dequeue each step defined in a workflow with at least once guarantee.

Time-based scheduling service starts new workflow instances at the scheduled time specified in workflow definitions. Users can define the schedule using cron expression or using periodic schedule templates like hourly, weekly etc;. This service is lightweight and provides an at-least-once scheduling guarantee. Maestro engine service will deduplicate the triggering requests to achieve an exact-once guarantee when scheduling workflows.

Time-based triggering is popular due to its simplicity and ease of management. But sometimes, it is not efficient. For example, the daily workflow should process the data when the data partition is ready, not always at midnight. Therefore, on top of manual and time-based triggering, we also provide event-driven triggering.

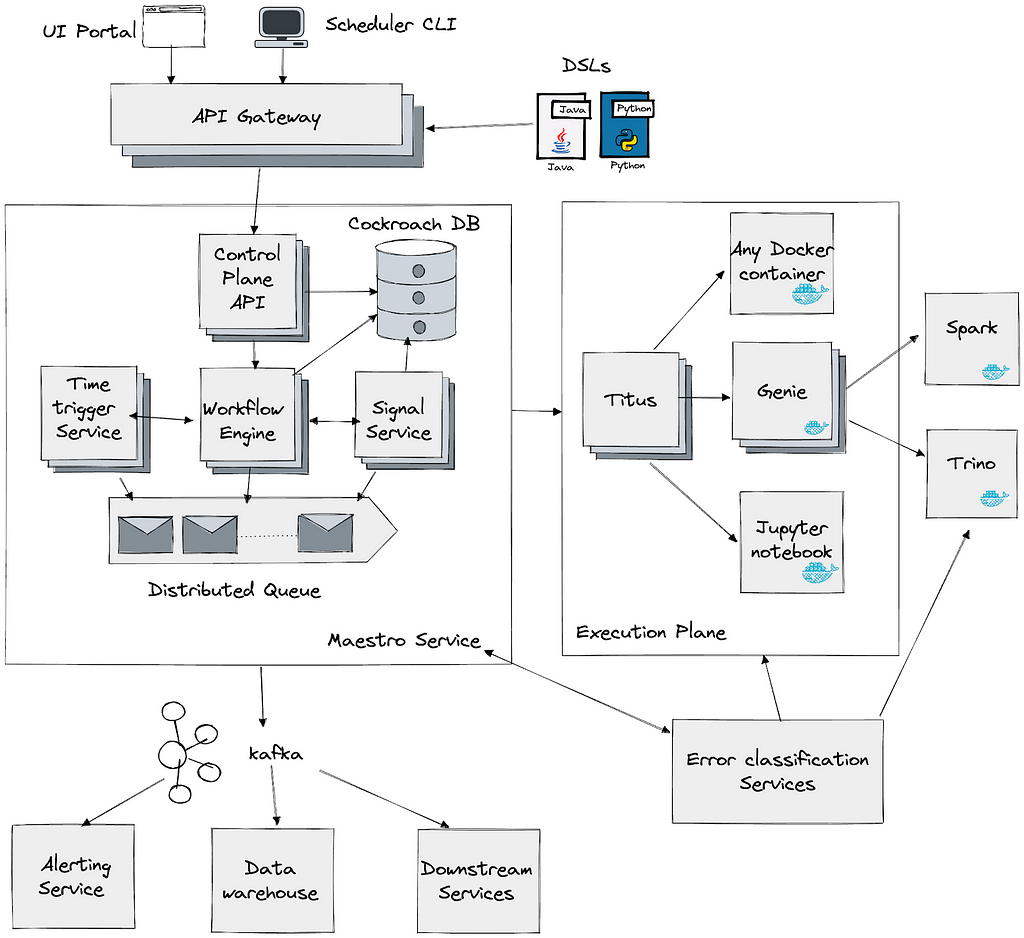

Maestro supports event-driven triggering over signals, which are pieces of messages carrying information such as parameter values. Signal triggering is efficient and accurate because we don’t waste resources checking if the workflow is ready to run, instead we only execute the workflow when a condition is met.

Signals are used in two ways:

Signal service goals are to

The maestro signal service consumes all the signals from different sources, e.g. all the warehouse table updates, S3 events, a workflow releasing a signal, and then generates the corresponding triggers by correlating a signal with its subscribed workflows. In addition to the transformation between external signals and workflow triggers, this service is also responsible for step dependencies by looking up the received signals in the history. Like the scheduling service, the signal service together with Maestro engine achieves exactly-once triggering guarantees.

Signal service also provides the signal lineage, which is useful in many cases. For example, a table updated by a workflow could lead to a chain of downstream workflow executions. Most of the time the workflows are owned by different teams, the signal lineage helps the upstream and downstream workflow owners to see who depends on whom.

All services in the Maestro system are stateless and can be horizontally scaled out. All the requests are processed via distributed queues for message passing. By having a shared nothing architecture, Maestro can horizontally scale to manage the states of millions of workflow and step instances at the same time.

CockroachDB is used for persisting workflow definitions and instance state. We chose CockroachDB as it is an open-source distributed SQL database that provides strong consistency guarantees that can be scaled horizontally without much operational overhead.

It is hard to support super large workflows in general. For example, a workflow definition can explicitly define a DAG consisting of millions of nodes. With that number of nodes in a DAG, UI cannot render it well. We have to enforce some constraints and support valid use cases consisting of hundreds of thousands (or even millions) of step instances in a workflow instance.

Based on our findings and user feedback, we found that in practice

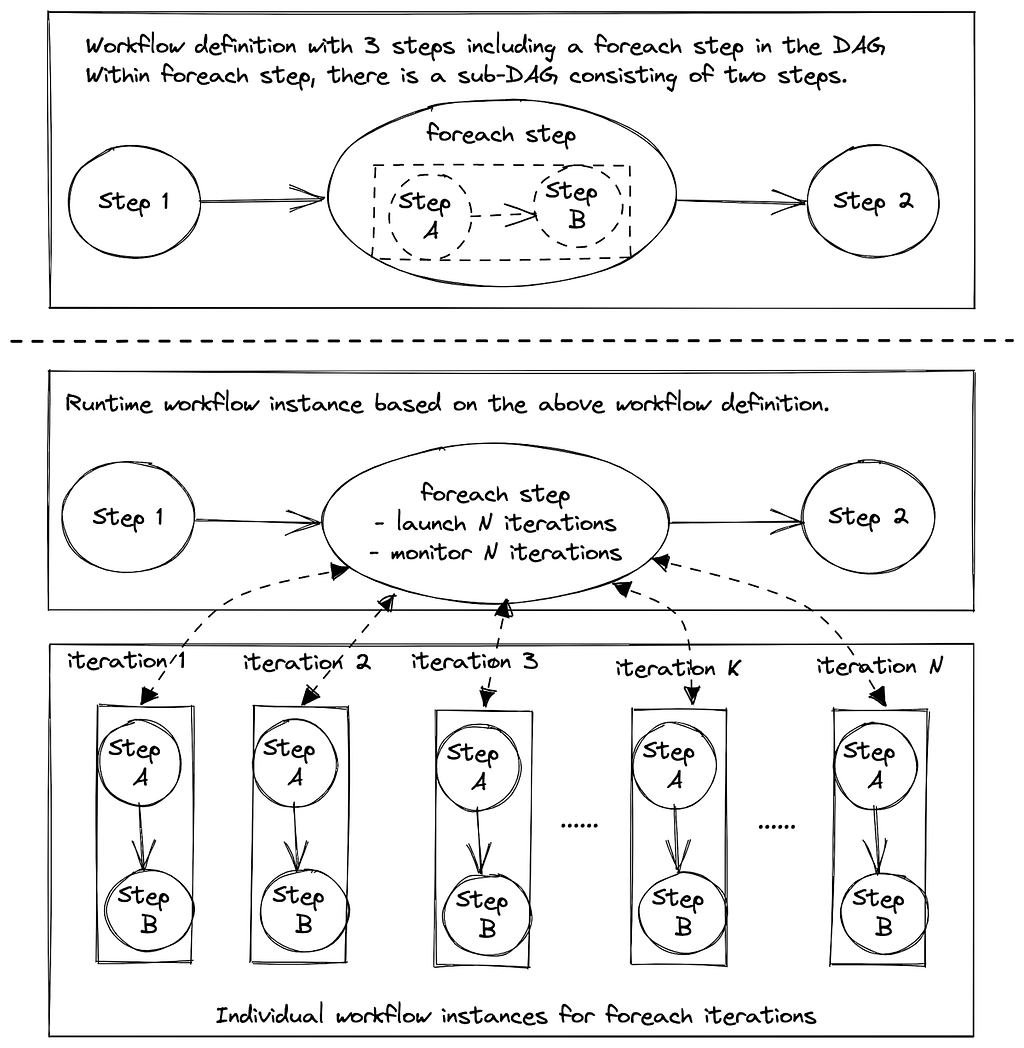

Therefore, we enforce a workflow DAG size limit (e.g. 1K) and we provide a foreach pattern that allows users to define a sub DAG within a foreach block and iterate the sub DAG with a larger limit (e.g. 100K). Note that foreach can be nested by another foreach. So users can run millions or billions of steps in a single workflow instance.

In Maestro, foreach itself is a step in the original workflow definition. Foreach is internally treated as another workflow which scales similarly as any other Maestro workflow based on the number of step executions in the foreach loop. The execution of sub DAG within foreach will be delegated to a separate workflow instance. Foreach step will then monitor and collect status of those foreach workflow instances, each of which manages the execution of one iteration.

With this design, foreach pattern supports sequential loop and nested loop with high scalability. It is easy to manage and troubleshoot as users can see the overall loop status at the foreach step or view each iteration separately.

We aim to make Maestro user friendly and easy to learn for users with different backgrounds. We made some assumptions about user proficiency in programming languages and they can bring their business logic in multiple ways, including but not limited to, a bash script, a Jupyter notebook, a Java jar, a docker image, a SQL statement, or a few clicks in the UI using parameterized workflow templates.

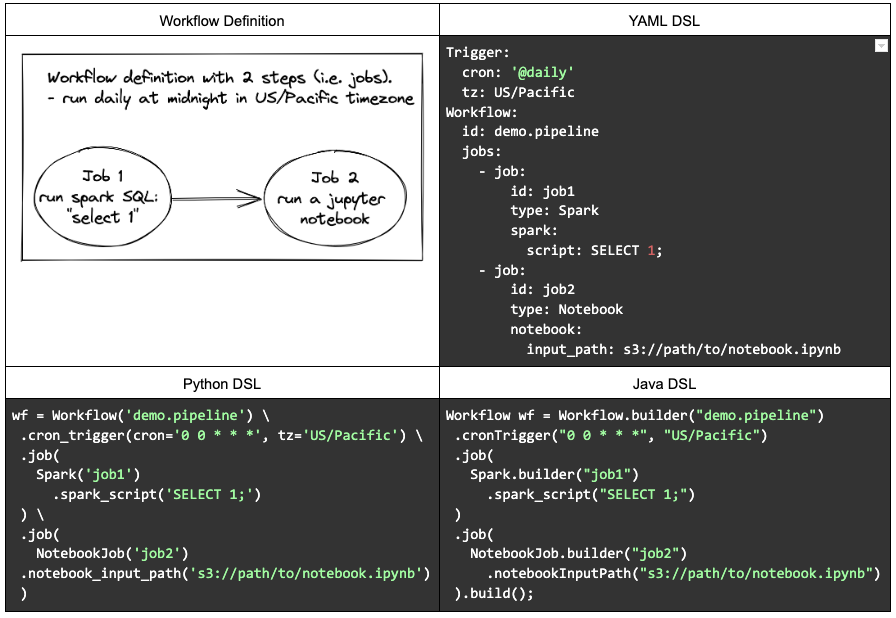

Maestro provides multiple domain specific languages (DSLs) including YAML, Python, and Java, for end users to define their workflows, which are decoupled from their business logic. Users can also directly talk to Maestro API to create workflows using the JSON data model. We found that human readable DSL is popular and plays an important role to support different use cases. YAML DSL is the most popular one due to its simplicity and readability.

Here is an example workflow defined by different DSLs.

Additionally, users can also generate certain types of workflows on UI or use other libraries, e.g.

Lots of times, users want to define a dynamic workflow to adapt to different scenarios. Based on our experiences, a completely dynamic workflow is less favorable and hard to maintain and troubleshooting. Instead, Maestro provides three features to assist users to define a parameterized workflow

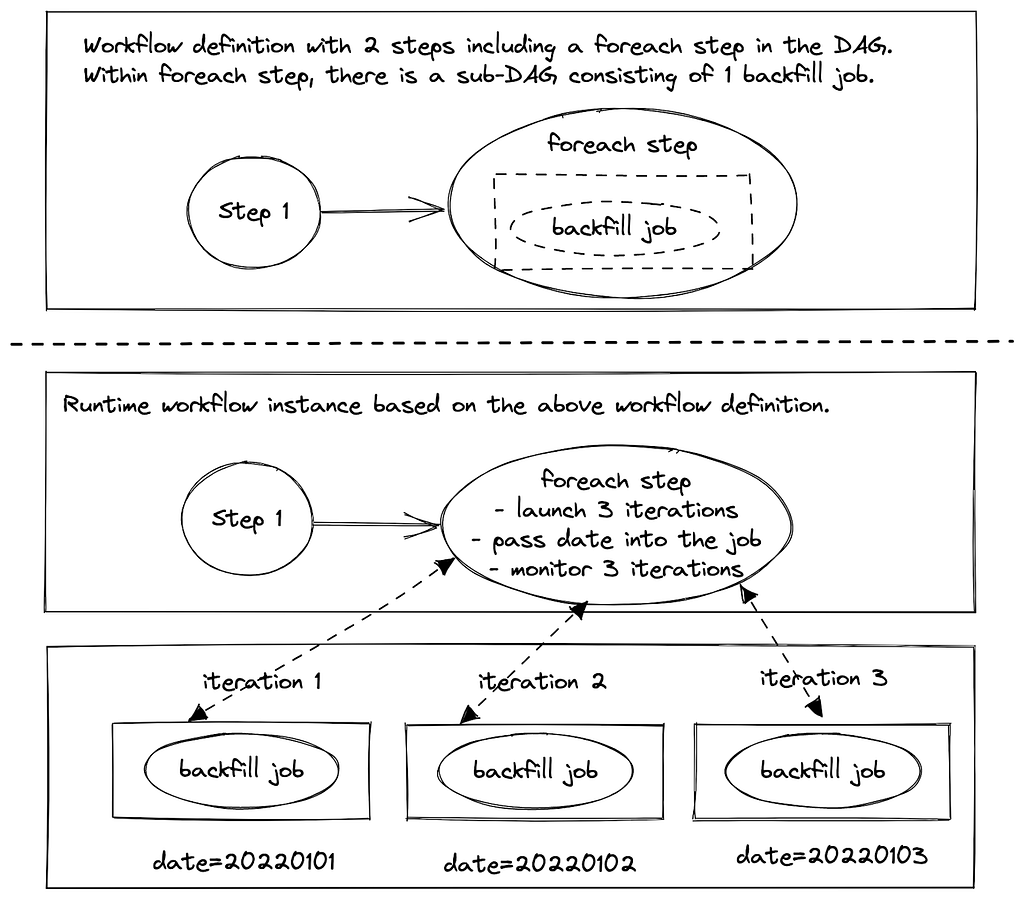

Instead of dynamically changing the workflow DAG at runtime, users can define those changes as sub workflows and then invoke the appropriate sub workflow at runtime because the sub workflow id is a parameter, which is evaluated at runtime. Additionally, using the output parameter, users can produce different results from the upstream job step and then iterate through those within the foreach, pass it to the sub workflow, or use it in the downstream steps.

Here is an example (using YAML DSL) of backfill workflow with 2 steps. In step1, the step computes the backfill ranges and returns the dates back. Next, foreach step uses the dates from step1 to create foreach iterations. Finally, each of the backfill jobs gets the date from the foreach and backfills the data based on the date.

Workflow:

id: demo.pipeline

jobs:

- job:

id: step1

type: NoOp

'!dates': return new int[]{20220101,20220102,20220103}; #SEL

- foreach:

id: step2

params:

date: ${dates@step1} #reference a upstream step parameter

jobs:

- job:

id: backfill

type: Notebook

notebook:

input_path: s3://path/to/notebook.ipynb

arg1: $date #pass the foreach parameter into notebook

The parameter system in Maestro is completely dynamic with code injection support. Users can write the code in Java syntax as the parameter definition. We developed our own secured expression language (SEL) to ensure security. It only exposes limited functionality and includes additional checks (e.g. the number of iteration in the loop statement, etc.) in the language parser.

Maestro provides multiple levels of execution abstractions. Users can choose to use the provided step type and set its parameters. This helps to encapsulate the business logic of commonly used operations, making it very easy for users to create jobs. For example, for spark step type, all users have to do is just specify needed parameters like spark sql query, memory requirements, etc, and Maestro will do all behind-the-scenes to create the step. If we have to make a change in the business logic of a certain step, we can do so seamlessly for users of that step type.

If provided step types are not enough, users can also develop their own business logic in a Jupyter notebook and then pass it to Maestro. Advanced users can develop their own well-tuned docker image and let Maestro handle the scheduling and execution.

Additionally, we abstract the common functions or reusable patterns from various use cases and add them to the Maestro in a loosely coupled way by introducing job templates, which are parameterized notebooks. This is different from step types, as templates provide a combination of various steps. Advanced users also leverage this feature to ship common patterns for their own teams. While creating a new template, users can define the list of required/optional parameters with the types and register the template with Maestro. Maestro validates the parameters and types at the push and run time. In the future, we plan to extend this functionality to make it very easy for users to define templates for their teams and for all employees. In some cases, sub-workflows are also used to define common sub DAGs to achieve multi-step functions.

We are taking Big Data Orchestration to the next level and constantly solving new problems and challenges, please stay tuned. If you are motivated to solve large scale orchestration problems, please join us as we are hiring.

Orchestrating Data/ML Workflows at Scale With Netflix Maestro was originally published in Netflix TechBlog on Medium, where people are continuing the conversation by highlighting and responding to this story.

Post Syndicated from Curious Droid original https://www.youtube.com/watch?v=0BcQudh2cME

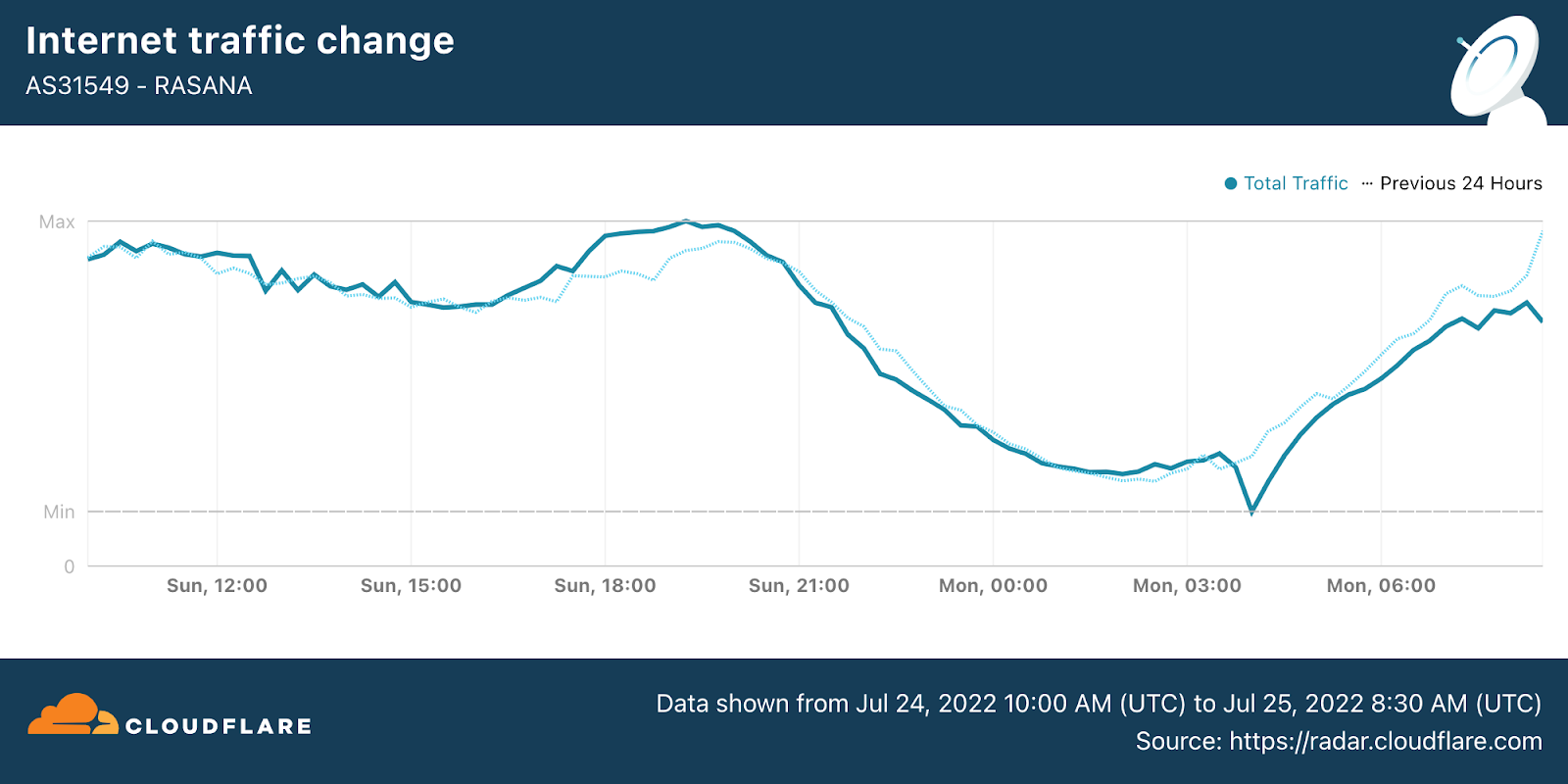

Post Syndicated from David Belson original https://blog.cloudflare.com/q3-2022-internet-disruption-summary/

Cloudflare operates in more than 275 cities in over 100 countries, where we interconnect with over 10,000 network providers in order to provide a broad range of services to millions of customers. The breadth of both our network and our customer base provides us with a unique perspective on Internet resilience, enabling us to observe the impact of Internet disruptions. In many cases, these disruptions can be attributed to a physical event, while in other cases, they are due to an intentional government-directed shutdown. In this post, we review selected Internet disruptions observed by Cloudflare during the third quarter of 2022, supported by traffic graphs from Cloudflare Radar and other internal Cloudflare tools, and grouped by associated cause or common geography. The new Cloudflare Radar Outage Center provides additional information on these, and other historical, disruptions.

Unfortunately, for the last decade, governments around the world have turned to shutting down the Internet as a means of controlling or limiting communication among citizens and with the outside world. In the third quarter, this was an all too popular cause of observed disruptions, impacting countries and regions in Africa, the Middle East, Asia, and the Caribbean.

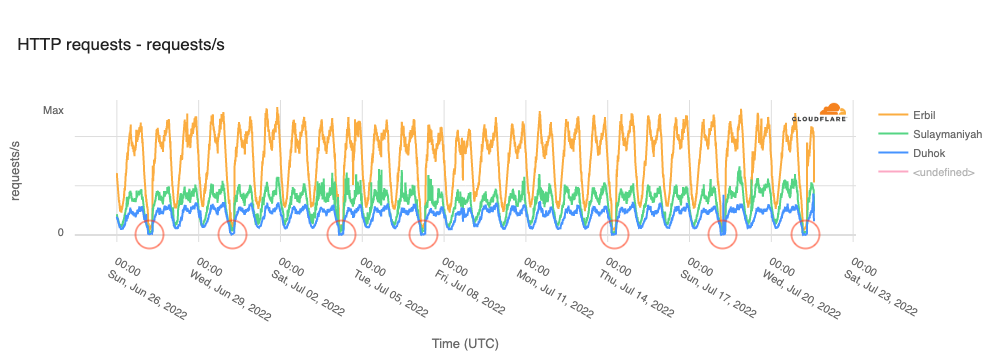

As mentioned in our Q2 summary blog post, on June 27, the Kurdistan Regional Government in Iraq began to implement twice-weekly (Mondays and Thursday) multi-hour regional Internet shutdowns over the following four weeks, intended to prevent cheating on high school final exams. As seen in the figure below, these shutdowns occurred as expected each Monday and Thursday through July 21, with the exception of July 21. They impacted three governorates in Iraq, and lasted from 0630–1030 local time (0330–0730 UTC) each day.

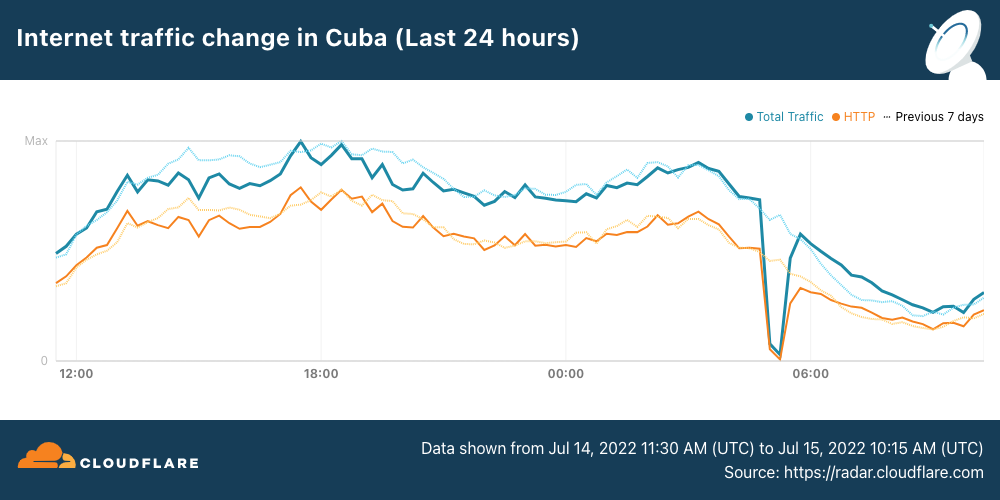

In Cuba, an Internet disruption was observed between 0055-0150 local time (0455-0550 UTC) on July 15 amid reported anti-government protests in Los Palacios and Pinar del Rio.

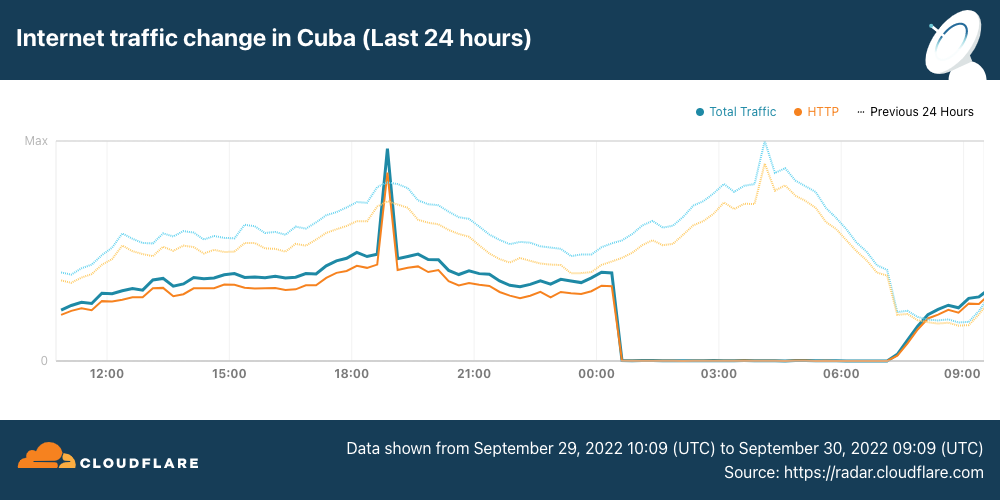

Closing out the quarter, another significant disruption was observed in Cuba, reportedly in response to protests over the lack of electricity in the wake of Hurricane Ian. A complete outage is visible in the figure below between 2030 on September 29 and 0315 on September 30 local time (0030-0715 UTC on September 30).

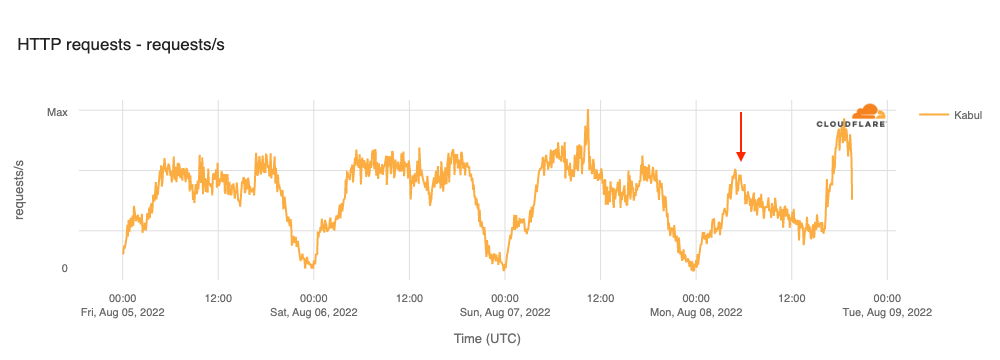

Telecommunications services were reportedly shut down in part of Kabul, Afghanistan on the morning of August 8. The figure below shows traffic dropping starting around 0930 local time (0500 UTC), recovering 11 hours later, around 2030 local time (1600 UTC).

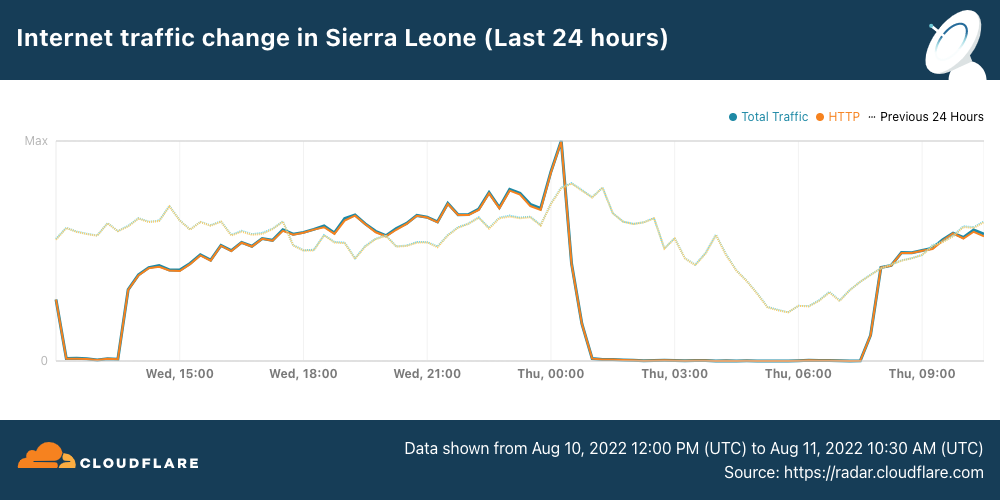

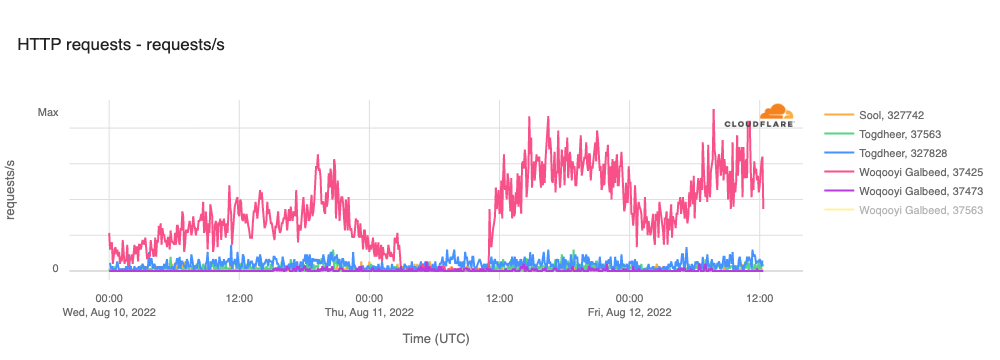

Protests in Freetown, Sierra Leone over the rising cost of living likely drove the Internet disruptions observed within the country on August 10 & 11. The first one occurred between 1200-1400 local time (1200-1400 UTC) on August 10. While this outage is believed to have been government directed as a means of quelling the protests, Zoodlabs, which manages Sierra Leone Cable Limited, claimed that the outage was the result of “emergency technical maintenance on some of our international routes”.

A second longer outage was observed between 0100-0730 local time (0100-0730 UTC) on August 11, as seen in the figure below. These shutdowns follow similar behavior in years past, where Internet connectivity was shut off following elections within the country.

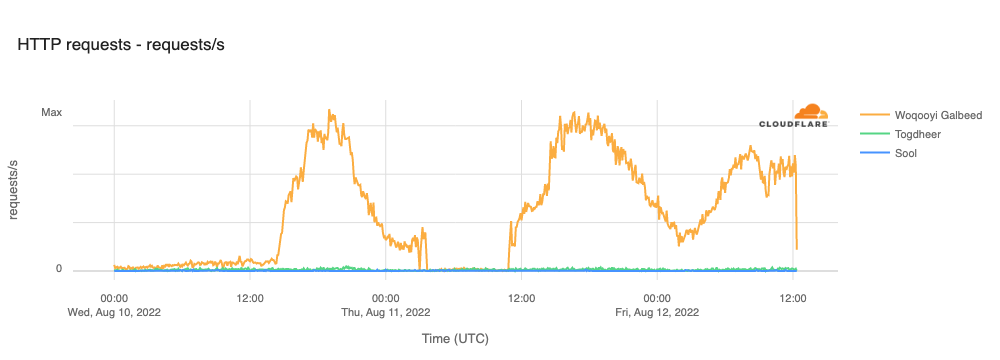

In Somaliland, local authorities reportedly cut off Internet service on August 11 ahead of scheduled opposition demonstrations. The figure below shows a complete Internet outage in Woqooyi Galbeed between 0645-1355 local time (0345-1055 UTC.)

At a network level, the observed outage was due to a loss of traffic from AS37425 (SomCable) and AS37563 (Somtel), as shown in the figures below. Somtel is a mobile services provider, while SomCable is focused on providing wireline Internet access.

India is no stranger to government-directed Internet shutdowns, taking such action hundreds of times over the last decade. This may be changing in the future, however, as the country’s Supreme Court ordered the Ministry of Electronics and Information Technology (MEITY) to reveal the grounds upon which it imposes or approves Internet shutdowns. Until this issue is resolved, we will continue to see regional shutdowns across the country.

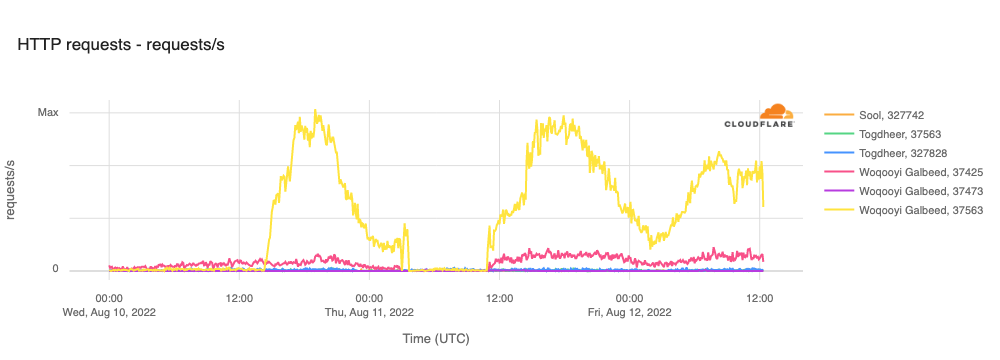

One such example occurred in Assam, where mobile Internet connectivity was shut down to prevent cheating on exams. The figure below shows that these shutdowns were implemented twice daily on August 21 and August 28. While the shutdowns were officially scheduled to take place between 1000-1200 and 1400-1600 local time (0430-0630 and 0830-1030 UTC), some providers reportedly suspended connectivity starting in the early morning.

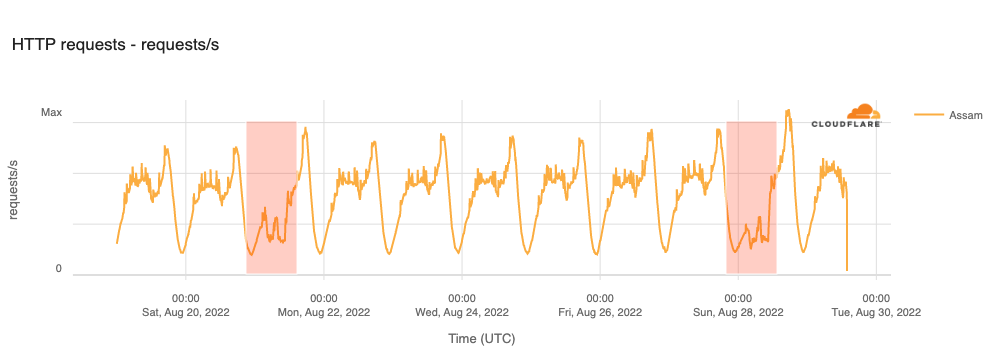

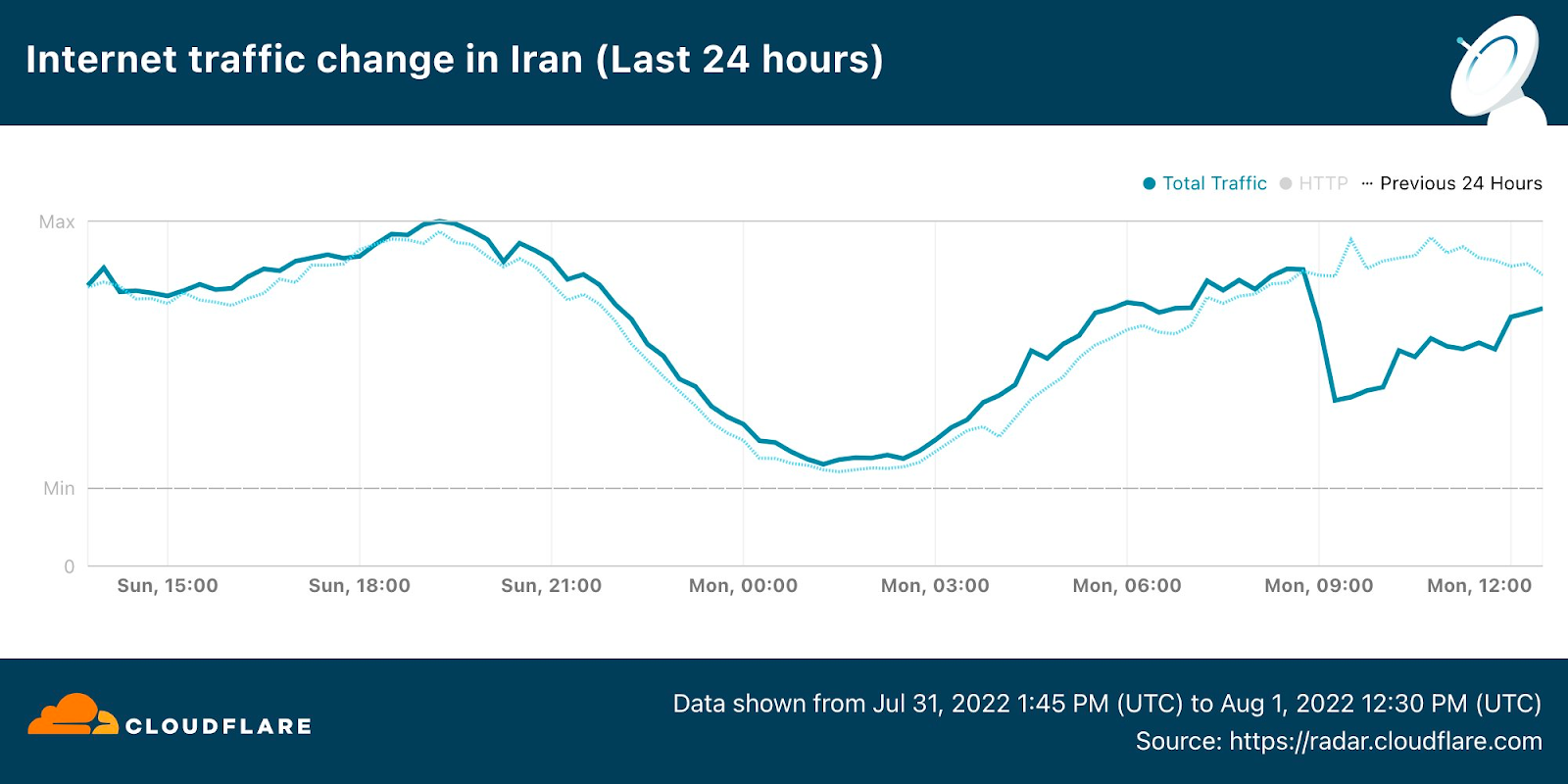

In late September, protests and demonstrations have erupted across Iran in response to the death of Mahsa Amini. Amini was a 22-year-old woman from the Kurdistan Province of Iran, and was arrested on September 13, 2022, in Tehran by Iran’s “morality police”, a unit that enforces strict dress codes for women. She died on September 16 while in police custody. In response to these protests and demonstrations, Internet connectivity across the country experienced multiple waves of disruptions.

In addition to multi-hour outages in Sanadij and Tehran province on September 19 and 21 that were covered in a blog post, three mobile network providers — AS44244 (Irancell), AS57218 (RighTel), and AS197207 (MCCI) — implemented daily Internet “curfews”, generally taking place between 1600 and midnight local time (1230-2030 UTC), although the start times varied on several days. These regular shutdowns are clearly visible in the figure below, and continued into early October.

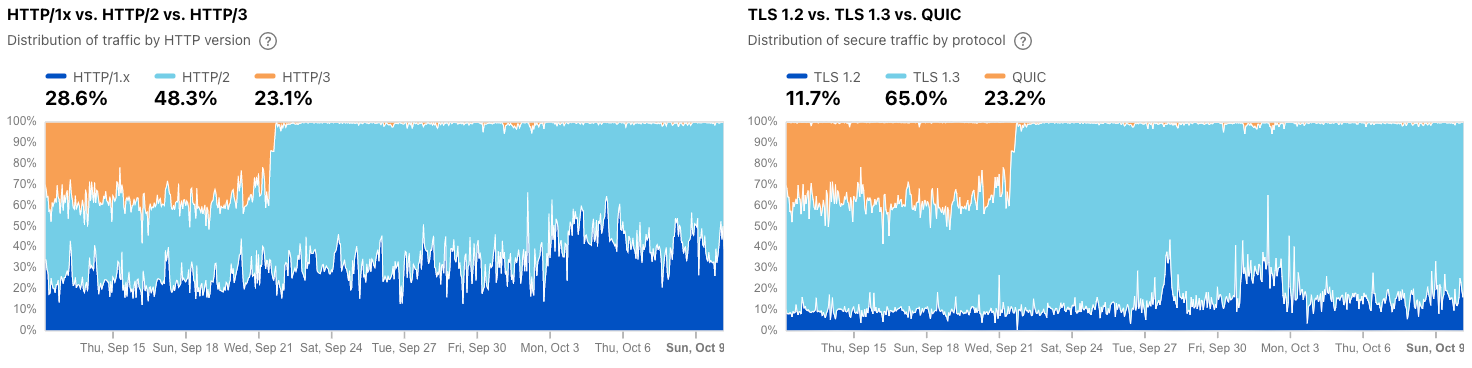

As noted in the blog post, access to DNS-over-HTTPS (DoH) and DNS-over-TLS (DoT) services was also blocked in Iran starting on September 20, and in a move that is likely related, connections over HTTP/3 and QUIC were blocked starting on September 22, as shown in the figure below from Cloudflare Radar.

Natural disasters such as earthquakes and hurricanes wreak havoc on impacted geographies, often causing loss of life, as well as significant structural damage to buildings of all types. Infrastructure damage is also extremely common, with widespread loss of both electrical power and telecommunications infrastructure.

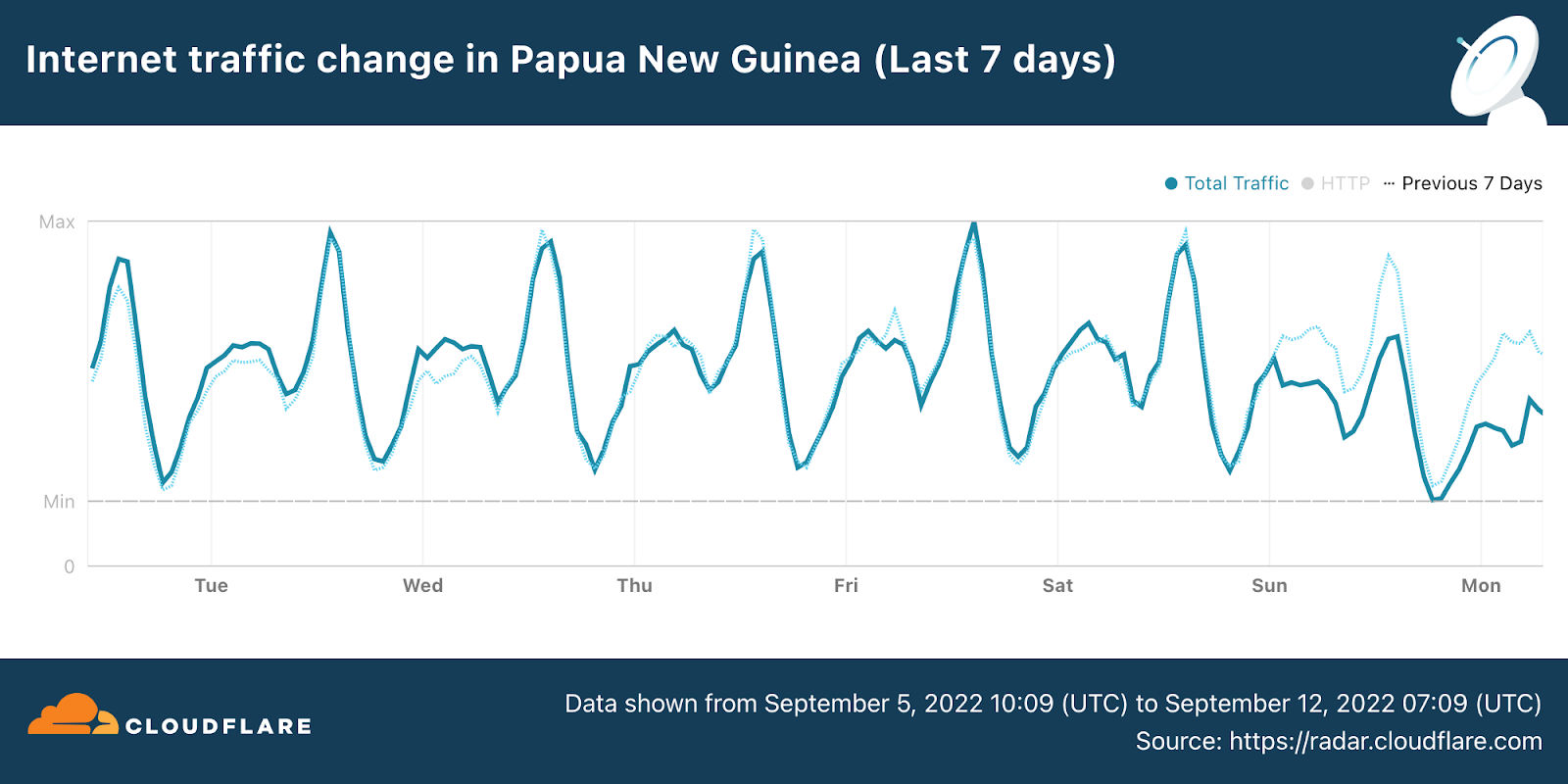

On September 11, a 7.6 magnitude earthquake struck Papua New Guinea, resulting in landslides, cracked roads, and Internet connectivity disruptions. Traffic to the country dropped by 26% just after 1100 local time (0100 UTC) . The figure below shows that traffic volumes remained lower into the following day as well. An announcement from PNG DataCo, a local provider, noted that the earthquake “has affected the operations of the Kumul Submarine Cable Network (KSCN) Express Link between Port Moresby and Madang and the PPC-1 Cable between Madang and Sydney.” This damage, they stated, resulted in the observed outage and degraded service.

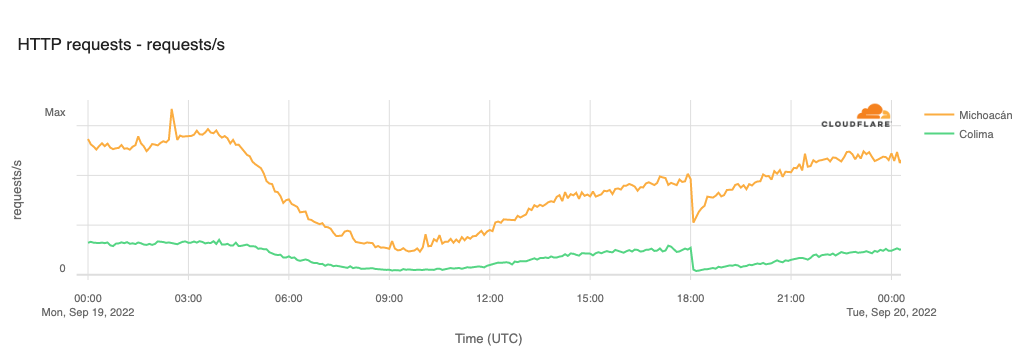

Just over a week later, a 7.6 magnitude earthquake struck the Colima-Michoacan border region in Mexico at 1305 local time (1805 UTC). As shown in the figure below, traffic dropped over 50% in the impacted states immediately after the quake occurred, but recovered fairly quickly, returning to normal levels by around 1600 local time (2100 UTC).

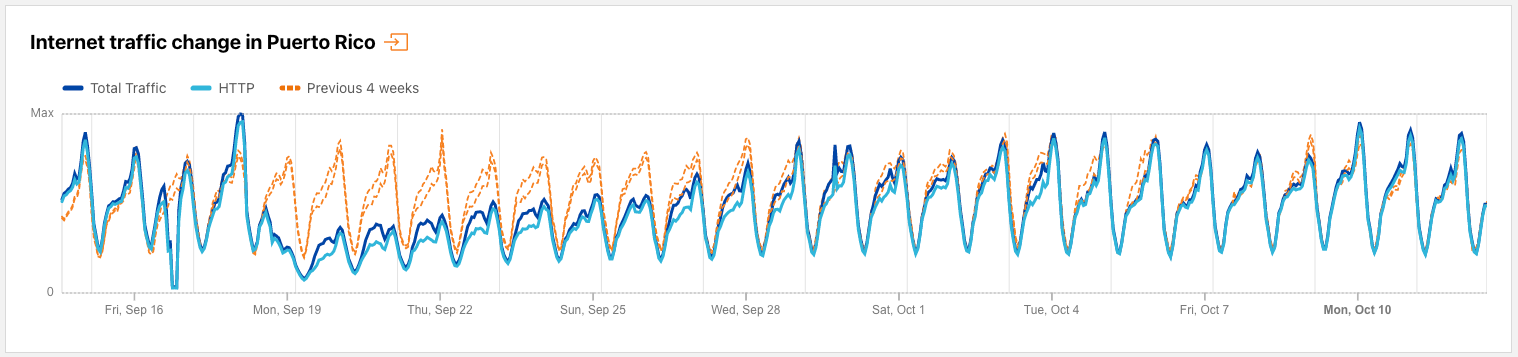

Several major hurricanes plowed their way up the east coast of North America in late September, causing significant damage, resulting in Internet disruptions. On September 18, island-wide power outages caused by Hurricane Fiona disrupted Internet connectivity on Puerto Rico. As the figure below illustrates, it took over 10 days for traffic volumes to return to expected levels. Luma Energy, the local power company, kept customers apprised of repair progress through regular updates to its Twitter feed.

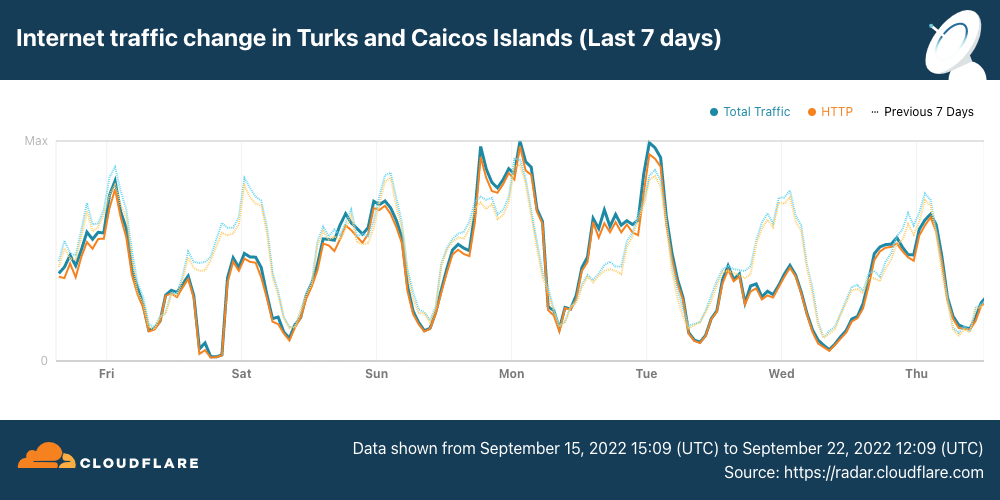

Two days later, Hurricane Fiona slammed the Turks and Caicos islands, causing flooding and significant damage, as well as disrupting Internet connectivity. The figure below shows traffic starting to drop below expected levels around 1245 local time (1645 UTC) on September 20. Recovery took approximately a day, with traffic returning to expected levels around 1100 local time (1500 UTC) on September 21.

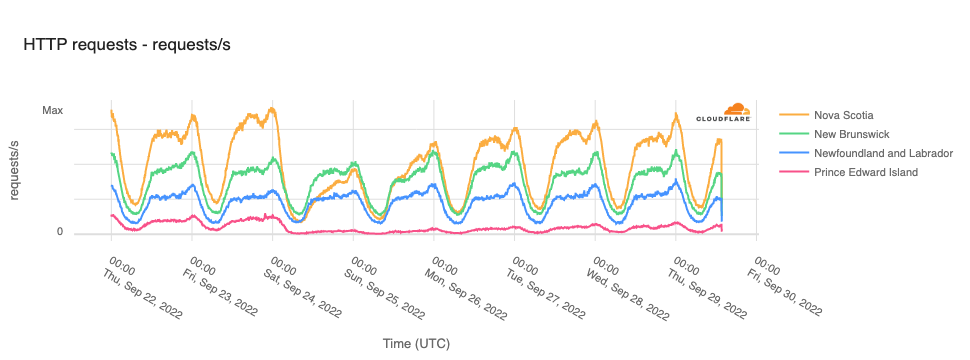

Continuing to head north, Hurricane Fiona ultimately made landfall in the Canadian province of Nova Scotia on September 24, causing power outages and disrupting Internet connectivity. The figure below shows that the most significant impact was seen in Nova Scotia. As Nova Scotia Power worked to restore service to customers, traffic volumes gradually increased, as seen in the figure below. By September 29, traffic volumes on the island had returned to normal levels.

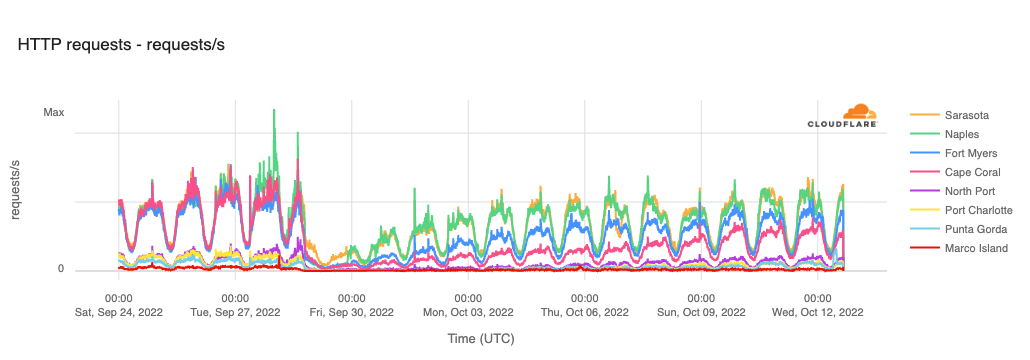

On September 28, Hurricane Ian made landfall in Florida, and was the strongest hurricane to hit Florida since Hurricane Michael in 2018. With over four million customers losing power due to damage from the storm, a number of cities experienced associated Internet disruptions. Traffic from impacted cities dropped significantly starting around 1500 local time (1900 UTC), and as the figure below shows, recovery has been slow, with traffic levels still not back to pre-storm volumes more than two weeks later.

In addition to power outages caused by earthquakes and hurricanes, a number of other power outages caused multi-hour Internet disruptions during the third quarter.

A reported power outage in a key data center building disrupted Internet connectivity for customers of local ISP Shatel in Iran on July 25. As seen in the figure below, traffic dropped significantly at approximately 0715 local time (0345 UTC). Recovery began almost immediately, with traffic nearing expected levels by 0830 local time (0500 UTC).

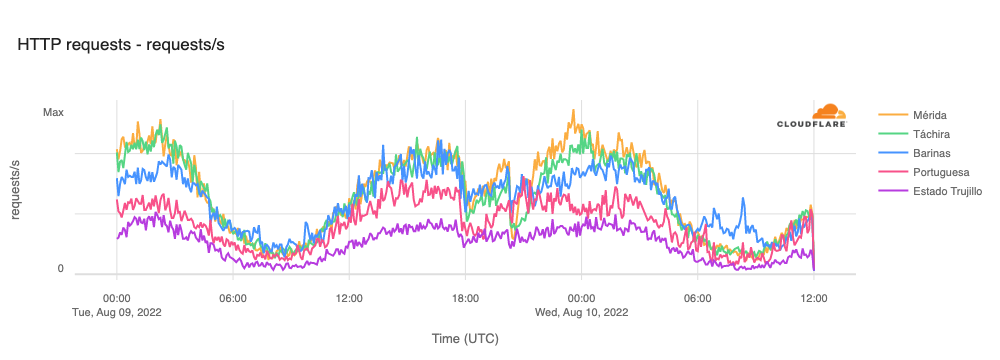

Electrical issues frequently disrupt Internet connectivity in Venezuela, and the independent @vesinfiltro Twitter account tracks these events closely. One such example occurred on August 9, when electrical issues disrupted connectivity across multiple states, including Mérida, Táchira, Barinas, Portuguesa, and Estado Trujillo. The figure below shows evidence of two disruptions, the first around 1340 local time (1740 UTC) and the second a few hours later, starting at around 1615 local time (2015 UTC). In both cases, traffic volumes appeared to recover fairly quickly.

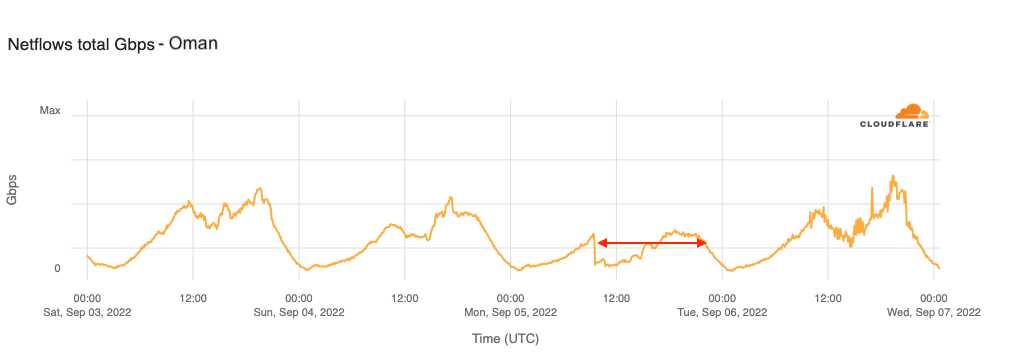

On September 5, a power outage in Oman impacted energy, aviation, and telecommunications services. The latter is evident in the figure below, which shows the country’s traffic volume dropping nearly 60% when the outage began just before 1515 local time (0915 UTC). Although authorities claimed that “the electricity network would be restored within four hours,” traffic did not fully return to normal levels until 0400 local time on September 6 (2200 UTC on September 5) the following day, approximately 11 hours later.

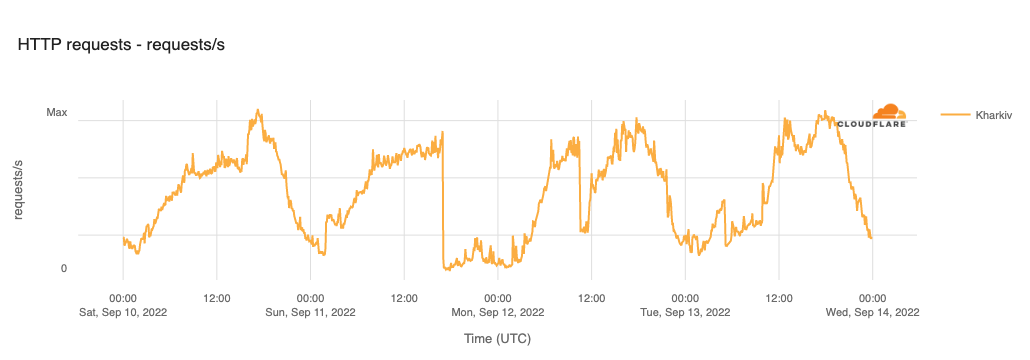

Over the last seven-plus months of war in Ukraine, we have observed multiple Internet disruptions due to infrastructure damage and power outages related to the fighting. We have covered these disruptions in our first and second quarter summary blog posts, and continue to do so on our @CloudflareRadar Twitter account as they occur. Power outages were behind Internet disruptions observed in Kharkiv on September 11, 12, and 13.

The figure below shows that the first disruption started around 2000 local time (1700 UTC) on September 11. This near-complete outage lasted just over 12 hours, with traffic returning to normal levels around 0830 local time (0530 UTC) on the 12th. However, later that day, another partial outage occurred, with a 50% traffic drop seen at 1330 local time (1030 UTC). This one was much shorter, with recovery starting approximately an hour later. Finally, a nominal disruption is visible at 0800 local time (0500 UTC) on September 13, with lower than expected traffic volumes lasting for around five hours.

Damage to both terrestrial and submarine cables have caused many Internet disruptions over the years. The recent alleged sabotage of the sub-sea Nord Stream natural gas pipelines has brought an increasing level of interest from European media (including Swiss and French publications) around just how important submarine cables are to the Internet, and an increasing level of concern among policymakers about the safety of these cable systems and the potential impact of damage to them. However, the three instances of cable damage reviewed below are all related to terrestrial cable.

On August 1, a reported “fiber optic cable” problem caused by a fire in a telecommunications manhole disrupted connectivity across multiple network providers, including AS31549 (Aria Shatel), AS58224 (TIC), AS43754 (Asiatech), AS44244 (Irancell), and AS197207 (MCCI). The disruption started around 1215 local time (0845 UTC) and lasted for approximately four hours. Because it impacted a number of major wireless and wireline networks, the impact was visible at a country level as well, as seen in the figure below.

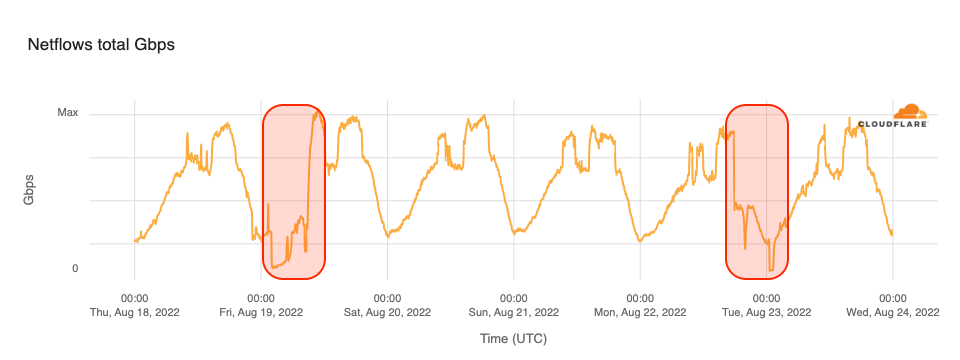

Cable damage due to heavy rains and flooding caused several Internet disruptions in Pakistan in August. The first notable disruption occurred on August 19, starting around 0700 local time (0200 UTC) and lasted just over six and a half hours. On August 22, another significant disruption is also visible, starting at 2250 local time (1750 UTC), with a further drop at 0530 local time (0030 UTC) on the 23rd. The second more significant drop was brief, lasting only 45 minutes, after which traffic began to recover.

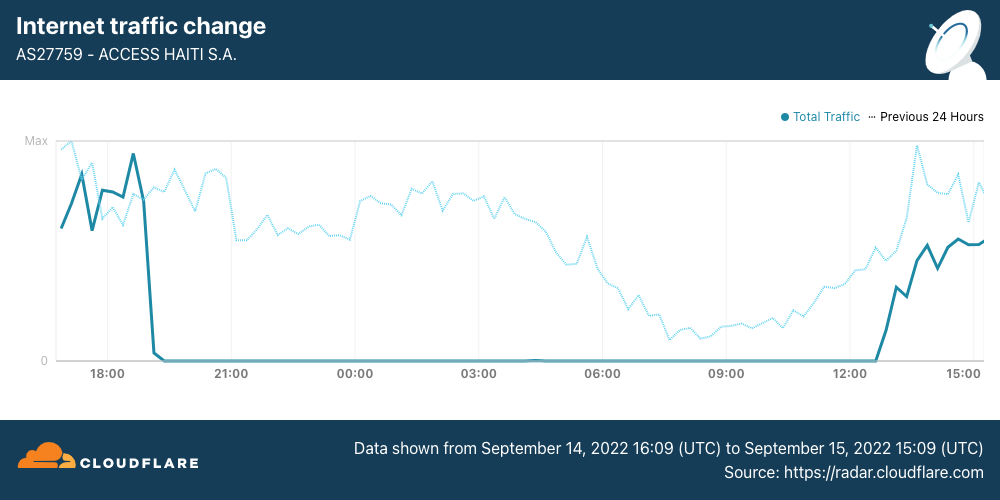

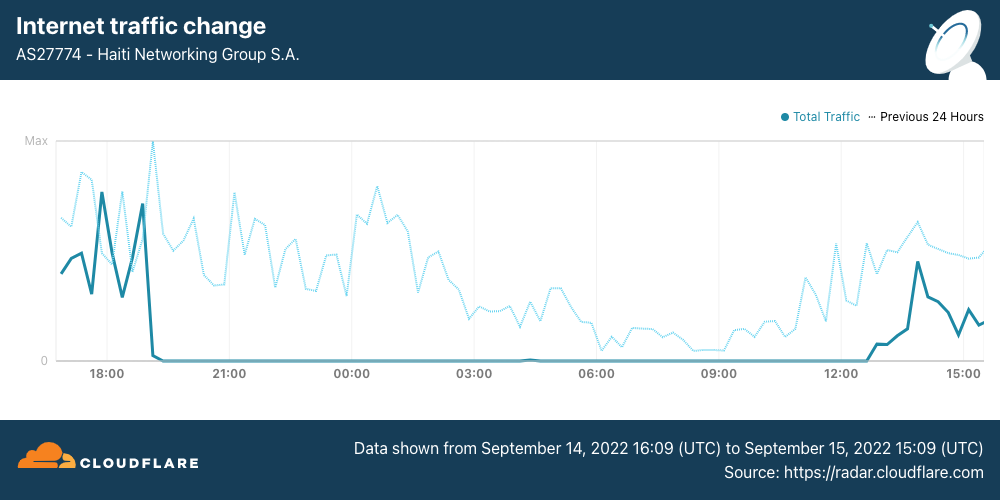

Amidst protests over fuel price hikes, fiber cuts in Haiti caused Internet outages on multiple network providers. Starting at 1500 local time (1900 UTC) on September 14, traffic on AS27759 (Access Haiti) fell to zero. According to a (translated) Twitter post from the provider, they had several fiber optic cables that were cut in various areas of the country, and blocked roads made it “really difficult” for their technicians to reach the problem areas. Repairs were eventually made, with traffic starting to increase again around 0830 local time (1230 UTC) on September 15, as shown in the figure below.

Access Haiti provides AS27774 (Haiti Networking Group) with Internet connectivity (as an “upstream” provider), so the fiber cut impacted their connectivity as well, causing the outage shown in the figure below.

As a heading, “technical problems” can be a catch-all, referring to multiple types of issues, including misconfigurations and routing problems. However, it is also sometimes the official explanation given by a government or telecommunications company for an observed Internet disruption.

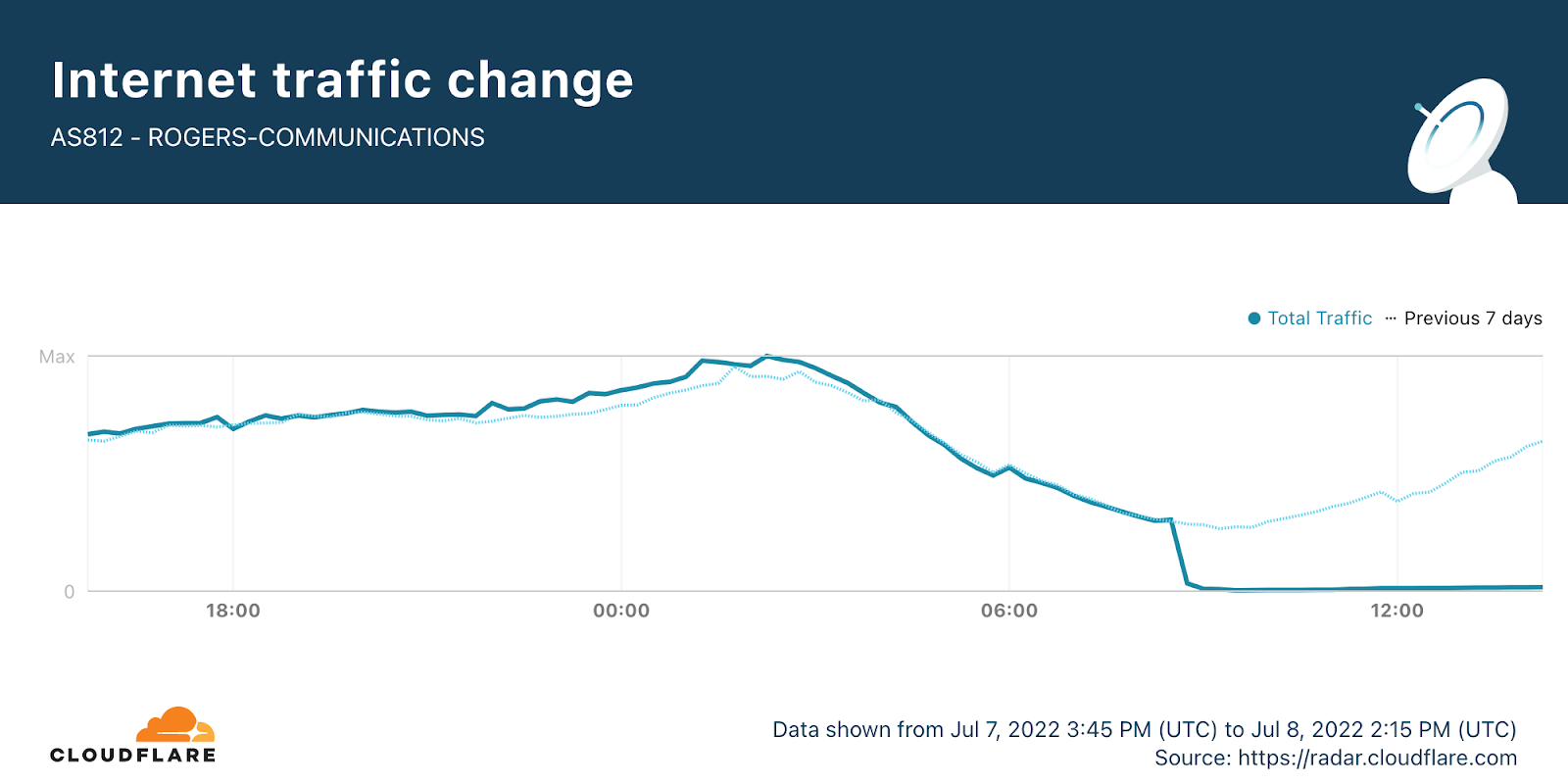

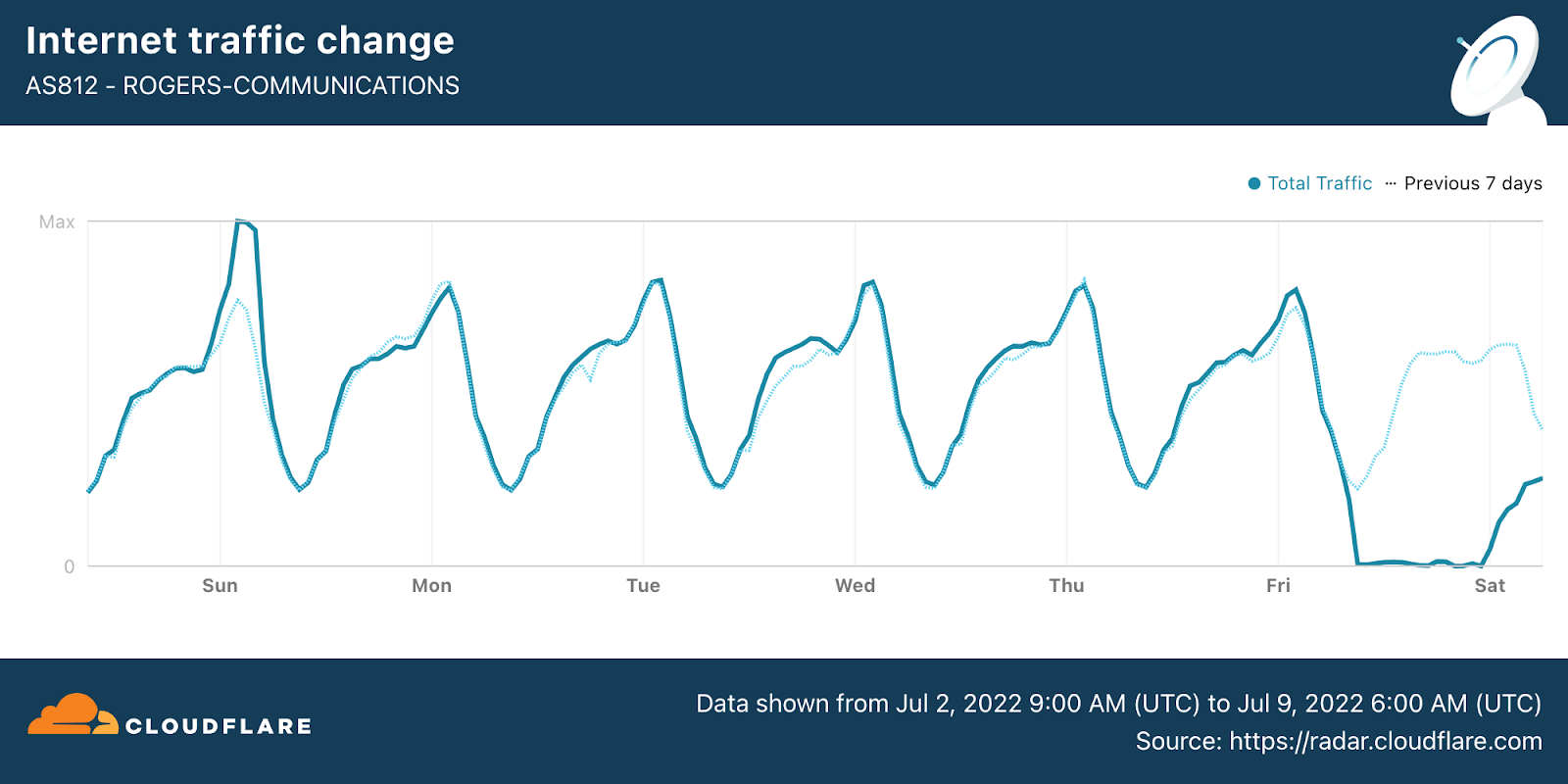

Arguably the most significant Internet disruption so far this year took place on AS812 (Rogers), one of Canada’s largest Internet service providers. At around 0845 UTC on July 8, a near complete loss of traffic was observed, as seen in the figure below.

The figure below shows that small amounts of traffic were seen from the network over the course of the outage, but it took nearly 24 hours for traffic to return to normal levels.

A notice posted by the Rogers CEO explained that “We now believe we’ve narrowed the cause to a network system failure following a maintenance update in our core network, which caused some of our routers to malfunction early Friday morning. We disconnected the specific equipment and redirected traffic, which allowed our network and services to come back online over time as we managed traffic volumes returning to normal levels.” A Cloudflare blog post covered the Rogers outage in real-time, highlighting related BGP activity and small increases of traffic.

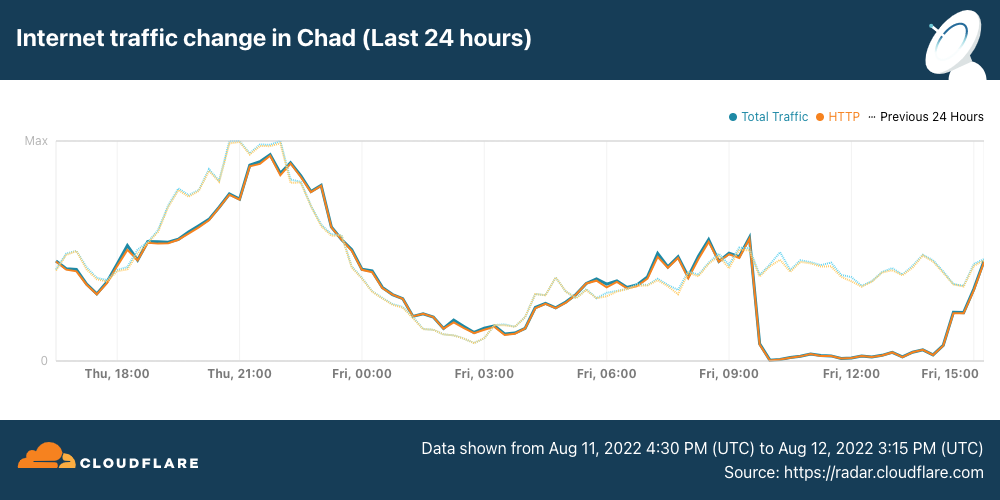

A four-hour near-complete Internet outage took place in Chad on August 12, occurring between 1045 and 1300 local time (0945 to 1400 UTC). Authorities in Chad said that the disruption was due to a “technical problem” on connections between Sudachad and networks in Cameroon and Sudan.

In many cases, observed Internet disruptions are attributed to underlying causes thanks to statements by service providers, government officials, or media coverage of an associated event. However, for some disruptions, no published explanation or associated event could be found.

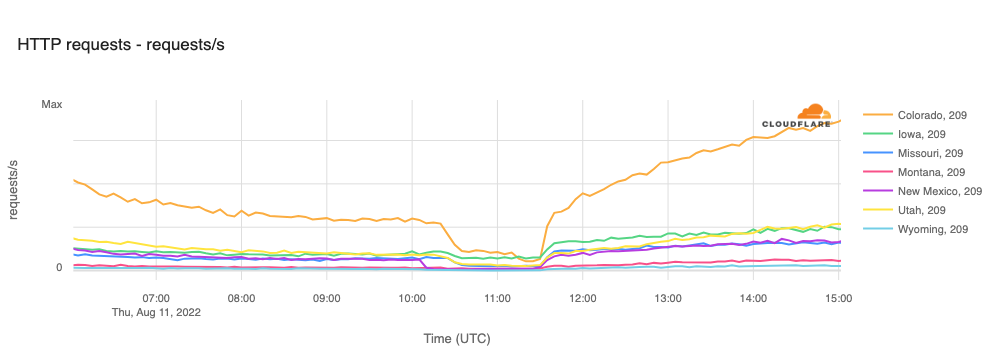

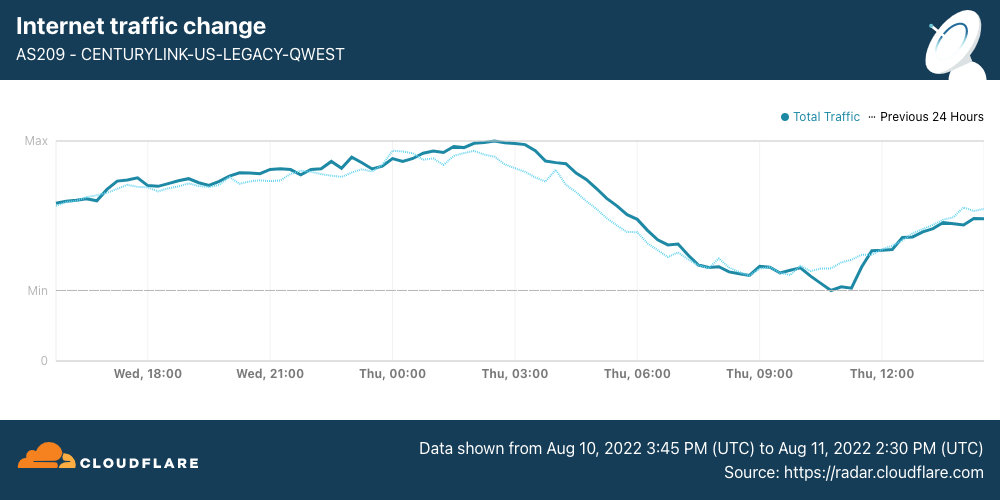

On August 11, a multi-hour outage impacted customers of US telecommunications provider Centurylink in states including Colorado, Iowa, Missouri, Montana, New Mexico, Utah, and Wyoming, as shown in the figure below. The outage was also visible in a traffic graph for AS209, the associated autonomous system.

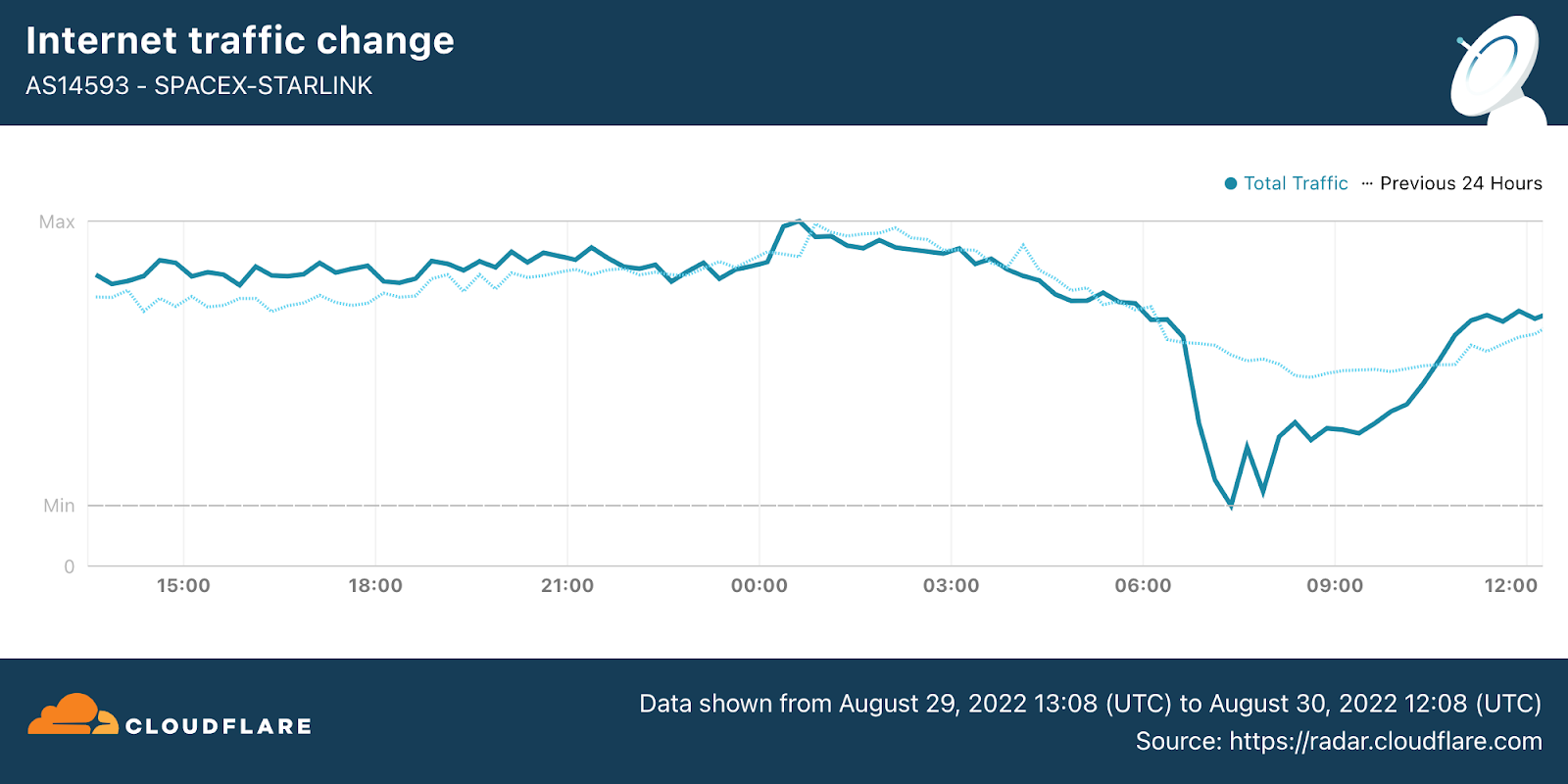

On August 30, satellite Internet provider suffered a global service disruption, lasting between 0630-1030 UTC as seen in the figure below.

As part of Cloudflare’s Birthday Week at the end of September, we launched the Cloudflare Radar Outage Center (CROC). The CROC is a section of our new Radar 2.0 site that archives information about observed Internet disruptions. The underlying data that powers the CROC is also available through an API, enabling interested parties to incorporate data into their own tools, sites, and applications. For regular updates on Internet disruptions as they occur and other Internet trends, follow @CloudflareRadar on Twitter.

Post Syndicated from Jennifer Newman original https://www.backblaze.com/blog/backblaze-at-educause-22-fueling-innovation-in-higher-education/

Like many industries, higher education has spent the last decade discovering the transformative power of the cloud and moving into the next century. The cost savings of running a more efficient tech stack and easier access to the vital data it contains have allowed those institutions to pursue the practical and academic discoveries they were built for.

Across the board, colleges and universities are pushing the boundaries of what cloud storage can do—and their creativity is paying huge dividends for their efficiency and security. We’ve included a few examples below that show just how these institutions have been able to maximize their cloud storage capabilities to reduce costs, modernize outdated operations, protect sensitive student and research data, and extend their ability to provide knowledge to a wider audience.

Pittsburg State: Located in Kansas, this university found themselves with nearly five decades of data in harm’s way due to the constant threat of tornadoes. Adding off-premises storage with Backblaze B2 not only gave them the geographical separation they needed, but the addition of a virtual air gap through Object Lock quadrupled their protection against ransomware.

Coast Community College District: CCCD aiming to update its data management system and eliminate costly delays from tape backups. Their existing tapes needed to be physically chauffeured between the three colleges in the district—Coastline Community College, Golden West College, and Orange Coast College—in friendly L.A. traffic. Backblaze B2’s S3 Compatible APIs made for a seamless integration with Cohesity backup.

UCSC–Silicon Valley: A 22-person video production team at the university’s online learning program, UC–Scout had quickly reached their storage capacity after archiving thousands of videos. By leveraging Backblaze B2, their IT team was able to streamline the entire production process, saving money and unleashing the team’s full creative potential.

Kanopy: The “Netflix for libraries” overhauled its tech stack in order to share its massive selection of more than 25,000 videos with thousands of schools and public libraries. After migrating to Backblaze B2, Kanopy was able to scale efficiently and rapidly accelerate content onboarding.

Gladstone Institutes: Gladstone Institutes needed an affordable, reliable backup system that would allow their researchers to focus on the life-saving developments they were pursuing in the lab. Cloud storage’s increased reliability allowed them to move away from LTO, and off-premise storage shielded their findings from the potential for natural disasters.

If you’re planning to attend EduCause ’22, you can learn more about the many possibilities Backblaze opens up in higher education. Through a new partnership between Backblaze and Carahsoft, public sector customers can now leverage their existing state, local, and federal buying programs to access Backblaze B2 Cloud Storage.

In addition to a live demo of Instant Recovery in the Veeam booth, we’re proud to sponsor the Carahsoft Happy Hour Reception. With special cocktails you won’t find anywhere else (try the “Backblaze Special;” you’ll love it), this is a great opportunity to network with fellow educators and learn more about how Backblaze can help you leverage your tech stack.

The post Backblaze at Educause ’22: Fueling Innovation in Higher Education appeared first on Backblaze Blog | Cloud Storage & Cloud Backup.

Post Syndicated from Deral Heiland original https://blog.rapid7.com/2022/10/18/hands-on-iot-hacking-rapid7-at-def-con-30-iot-village-part-1/

Rapid7 was back this year at DEF CON 30 participating at the IoT Village with another hands-on hardware hacking exercise, with the goal of teaching attendees’ various concepts and methods for IoT hacking. Over the years, these exercises have covered several different embedded device topics, including how to use a Logic Analyzer, extracting firmware, and gaining root access to an embedded IoT device.

Like last year, we had many IoT Village attendees request a copy of our exercise manual, so again I decided to create an in-depth write-up about the exercise we ran, with some expanded context to answer several questions and expand on the discussion we had with attendees at this year’s DEF CON IoT Village.

This year’s exercise focused on the following key areas:

The goal of this year’s hands-on hardware hacking exercise was to gain root access to a Arris SB6190 Cable modem without needing to install any external code. To do this, the user interacted with the device via a PHISON PS8211-0 embedded multimedia controller (eMMC) to mount up and gain access to the NAND flash memory storage. With NAND flash memory access, the user was able to identify the partitions of interest and extract those partitions using the Linux dd command.

Next, the user extracted the filesystem from the partition binary files and was then able to modify key elements to enable SSH access over the ethernet connection. After the modification where completed the filesystems were repacked and written back to the modem device. Finally, the attendee was able to power up the device and login over ethernet using SSH with root access and default device password.

In this first section of the exercise, we focused on understanding the process of gaining access to the NAND flash memory by interacting with a PHISON PS8211-0 embedded multimedia controller (eMMC).

To interact with typical eMMC devices, we typically need the following connections.

As shown in the above bullets, there are typically two different voltages required to interact with eMMC chips. However, in this case, we determined that the PHISON PS8211-0 eMMC chip did not have a different controller voltage for VCCq, meaning that the voltage used was only 3.3v for this example.

When connecting to and interacting with an eMMC device, we usually can utilize the internal power supply of the device. This often works well when different VCC and VCCq voltages are required, but in those cases, we also have to hold the microcontroller unit (MCU) at reset state to prevent the processor from causing interruption when trying to read memory. In this example, we found that the PHISON eMMC chip and NAND memory could be powered by supplying the voltage externally via the SD Card reader.

When using an SD Card reader to supply voltage, we must avoid hooking up the device’s normal source of power also. Hooking both sources – normal and SD Card – into the devices will lead to permanent damage to the device.

When it comes to soldering the needed wiring for this exercise, we realized allowing attendees to do the soldering connection would be much more complex than we could support. So, all the wiring was presoldered before the IoT Village event using 30-gauge color-coded wirewrap wire. This wiring was then attached to a SD Card breakout board as shown below in Figure 1:

Also, as you can see in the above images, the wires do not run parallel against each other, but have a reasonable gap between them and pass over each other perpendicularly when they cross over. This is because we found during testing that if we ran wires directly next to each other, it caused the partitions to fail to mount properly, most likely because noise was induced into the lines from the other lines affecting the signal.

Note: If you are looking to do your own wiring, the 30-gauge wirewrap wire I used is a Polyvinylidene fluoride coated insulation wire under the brand name of Kynar. The benefit of using Kynar wirewrap is that this insulation does not melt or shrink as easily from heat from the solder iron. When heated by a solder iron, standard plastic-coated insulation will shrink back, exposing uninsulated wire. This can lead to wires shorting out on the circuit board.

With the modem wired up to SD Card breakout as shown above we can mount NAND flash memory by connecting a SD Card reader. Note, not all SD Card readers will work, I used a simple trial and error method with several SD Card readers I had in my possession until I found that an inexpensive DYNEX brand reader worked. It should be attached as shown below in Figure 2:

Once plugged in, the various partitions on the Cable modem NAND Flash memory should start loading. In this case a total of seven partitions mounted up. This can take a few minutes to complete. If your system opened each one of the volumes as it mounted, I typically shut them down to avoid all the confusion on your system desktop. To see the layout of the various partitions on the NAND Flash and gather information as needed for reading and writing to the correct partitions. We used the Linux application Disks. Once Disks is opened you can click on the 118 MB Drive in the left column, and it will show all of the partitions and should look something like Figure 3 below:

In our second installment of this 4-part blog series, we’ll discuss the step of extracting partition data. Check back with us next week!

Post Syndicated from Stefano Sandona original https://aws.amazon.com/blogs/big-data/introducing-runtime-roles-for-amazon-emr-steps-use-iam-roles-and-aws-lake-formation-for-access-control-with-amazon-emr/

You can use the Amazon EMR Steps API to submit Apache Hive, Apache Spark, and others types of applications to an EMR cluster. You can invoke the Steps API using Apache Airflow, AWS Steps Functions, the AWS Command Line Interface (AWS CLI), all the AWS SDKs, and the AWS Management Console. Jobs submitted with the Steps API use the Amazon Elastic Compute Cloud (Amazon EC2) instance profile to access AWS resources such as Amazon Simple Storage Service (Amazon S3) buckets, AWS Glue tables, and Amazon DynamoDB tables from the cluster.

Previously, if a step needed access to a specific S3 bucket and another step needed access to a specific DynamoDB table, the AWS Identity and Access Management (IAM) policy attached to the instance profile had to allow access to both the S3 bucket and the DynamoDB table. This meant that the IAM policies you assigned to the instance profile had to contain a union of all the permissions for every step that ran on an EMR cluster.

We’re happy to introduce runtime roles for EMR steps. A runtime role is an IAM role that you associate with an EMR step, and jobs use this role to access AWS resources. With runtime roles for EMR steps, you can now specify different IAM roles for the Spark and the Hive jobs, thereby scoping down access at a job level. This allows you to simplify access controls on a single EMR cluster that is shared between multiple tenants, wherein each tenant can be easily isolated using IAM roles.

The ability to specify an IAM role with a job is also available on Amazon EMR on EKS and Amazon EMR Serverless. You can also use AWS Lake Formation to apply table- and column-level permission for Apache Hive and Apache Spark jobs that are submitted with EMR steps. For more information, refer to Configure runtime roles for Amazon EMR steps.

In this post, we dive deeper into runtime roles for EMR steps, helping you understand how the various pieces work together, and how each step is isolated on an EMR cluster.

In this post, we walk through the following:

Amazon EMR security configurations simplify applying consistent security, authorization, and authentication options across your clusters. You can create a security configuration on the Amazon EMR console or via the AWS CLI or AWS SDK. When you attach a security configuration to a cluster, Amazon EMR applies the settings in the security configuration to the cluster. You can attach a security configuration to multiple clusters at creation time, but can’t apply them to a running cluster.

To enable runtime roles for EMR steps, we have to create a security configuration as shown in the following code and enable the runtime roles property (configured via EnableApplicationScopedIAMRole). In addition to the runtime roles, we’re enabling propagation of the source identity (configured via PropagateSourceIdentity) and support for Lake Formation (configured via LakeFormationConfiguration). The source identity is a mechanism to monitor and control actions taken with assumed roles. Enabling Propagate source identity allows you to audit actions performed using the runtime role. Lake Formation is an AWS service to securely manage a data lake, which includes defining and enforcing central access control policies for your data lake.

Create a file called step-runtime-roles-sec-cfg.json with the following content:

Create the Amazon EMR security configuration:

You can also do the same via the Amazon console:

The security configuration appears in your security configuration list. You can also see that the authorization mechanism listed here is the runtime role instead of the instance profile.

Now we launch an EMR cluster and specify the security configuration we created. For more information, refer to Specify a security configuration for a cluster.

The following code provides the AWS CLI command for launching an EMR cluster with the appropriate security configuration. Note that this cluster is launched on the default VPC and public subnet with the default IAM roles. In addition, the cluster is launched with one primary and one core instance of the specified instance type. For more details on how to customize the launch parameters, refer to create-cluster.

If the default EMR roles EMR_EC2_DefaultRole and EMR_DefaultRole don’t exist in IAM in your account (this is the first time you’re launching an EMR cluster with those), before launching the cluster, use the following command to create them:

Create the cluster with the following code:

When the cluster is fully provisioned (Waiting state), let’s try to run a step on it with runtime roles for EMR steps enabled:

After launching the command, we receive the following as output:

The step failed, asking us to provide a runtime role. In the next section, we set up two IAM roles with different permissions and use them as the runtime roles for EMR steps.

Any IAM role that you want to use as a runtime role for EMR steps must have a trust policy that allows the EMR cluster’s EC2 instance profile to assume it. In our setup, we’re using the default IAM role EMR_EC2_DefaultRole as the instance profile role. In addition, we create two IAM roles called test-emr-demo1 and test-emr-demo2 that we use as runtime roles for EMR steps.

The following code is the trust policy for both of the IAM roles, which lets the EMR cluster’s EC2 instance profile role, EMR_EC2_DefaultRole, assume these roles and set the source identity and LakeFormationAuthorizedCaller tag on the role sessions. The TagSession permission is needed so that Amazon EMR can authorize to Lake Formation. The SetSourceIdentity statement is needed for the propagate source identity feature.

Create a file called trust-policy.json with the following content (replace 123456789012 with your AWS account ID):

Use that policy to create the two IAM roles, test-emr-demo1 and test-emr-demo2:

The IAM principal submitting the EMR steps needs to have permissions to invoke the AddJobFlowSteps API. In addition, you can use the Condition key elasticmapreduce:ExecutionRoleArn to control access to specific IAM roles. For example, the following policy allows the IAM principal to only use IAM roles test-emr-demo1 and test-emr-demo2 as the runtime roles for EMR steps.

job-submitter-policy.json file with the following content (replace 123456789012 with your AWS account ID):

john with the IAM user you use to submit your EMR steps):

IAM user john can now submit steps using arn:aws:iam::123456789012:role/test-emr-demo1 and arn:aws:iam::123456789012:role/test-emr-demo2 as the step runtime roles.

We now prepare our setup to show runtime roles for EMR steps in action.

To prepare your Amazon S3 data, complete the following steps:

test.csv with the following content:

For our initial test, we use a PySpark application called test.py with the following contents:

In the script, we’re trying to access the CSV file present under three different prefixes in the test bucket.

test.csv file but in a different location:

To show how runtime roles for EMR steps works, we assign to the roles we created different IAM permissions to access Amazon S3. The following table summarizes the grants we provide to each role (emr-steps-roles-new-us-east-1 is the bucket you configured in the previous section).

| S3 locations \ IAM Roles | test-emr-demo1 | test-emr-demo2 |

| s3://emr-steps-roles-new-us-east-1/* | No Access | No Access |

| s3://emr-steps-roles-new-us-east-1/demo1/* | Full Access | No Access |

| s3://emr-steps-roles-new-us-east-1/demo2/* | No Access | Full Access |

| s3://emr-steps-roles-new-us-east-1/scripts/* | Read Access | Read Access |

demo1-policy.json with the following content (substitute emr-steps-roles-new-us-east-1 with your bucket name):

demo2-policy.json with the following content (substitute emr-steps-roles-new-us-east-1 with your bucket name):

To use runtime roles with Amazon EMR steps, we need to add the following policy to our EMR cluster’s EC2 instance profile (in this example EMR_EC2_DefaultRole). With this policy, the underlying EC2 instances for the EMR cluster can assume the runtime role and apply a tag to that runtime role.

runtime-roles-policy.json with the following content (replace 123456789012 with your AWS account ID):

EMR_EC2_DefaultRole:

We’re now ready to perform our first test. We run the test.py script, previously uploaded to Amazon S3, two times as Spark steps: first using the test-emr-demo1 role and then using the test-emr-demo2 role as the runtime roles.

To run an EMR step specifying a runtime role, you need the latest version of the AWS CLI. For more details about updating the AWS CLI, refer to Installing or updating the latest version of the AWS CLI.

Let’s submit a step specifying test-emr-demo1 as the runtime role:

This command returns an EMR step ID. To check our step output logs, we can proceed two different ways:

stdout.$(LOG_URI)/steps/<stepID>/stdout.gz.The logs could take a couple of minutes to populate after the step is marked as Completed.

The following is the output of the EMR step with test-emr-demo1 as the runtime role:

As we can see, only the demo1 folder was accessible by our application.

Diving deeper into the step stderr logs, we can see that the related YARN application application_1656350436159_0017 was launched with the user 6GC64F33KUW4Q2JY6LKR7UAHWETKKXYL. We can confirm this by connecting to the EMR primary instance using SSH and using the YARN CLI:

Please note that in your case, the YARN application ID and the user will be different.

Now we submit the same script again as a new EMR step, but this time with the role test-emr-demo2 as the runtime role:

The following is the output of the EMR step with test-emr-demo2 as the runtime role:

As we can see, only the demo2 folder was accessible by our application.

Diving deeper into the step stderr logs, we can see that the related YARN application application_1656350436159_0018 was launched with a different user 7T2ORHE6Z4Q7PHLN725C2CVWILZWYOLE. We can confirm this by using the YARN CLI:

Each step was able to only access the CSV file that was allowed by the runtime role, so the first step was able to only access s3://emr-steps-roles-new-us-east-1/demo1/test.csv and the second step was only able to access s3://emr-steps-roles-new-us-east-1/demo2/test.csv. In addition, we observed that Amazon EMR created a unique user for the steps, and used the user to run the jobs. Please note that both roles need at least read access to the S3 location where the step scripts are located (for example, s3://emr-steps-roles-demo-bucket/scripts/test.py).

Now that we have seen how runtime roles for EMR steps work, let’s look at how we can use Lake Formation to apply fine-grained access controls with EMR steps.

You can use Lake Formation to apply table- and column-level permissions with Apache Spark and Apache Hive jobs submitted as EMR steps. First, the data lake admin in Lake Formation needs to register Amazon EMR as the AuthorizedSessionTagValue to enforce Lake Formation permissions on EMR. Lake Formation uses this session tag to authorize callers and provide access to the data lake. The Amazon EMR value is referenced inside the step-runtime-roles-sec-cfg.json file we used earlier when we created the EMR security configuration, and inside the trust-policy.json file we used to create the two runtime roles test-emr-demo1 and test-emr-demo2.

We can do so on the Lake Formation console in the External data filtering section (replace 123456789012 with your AWS account ID).

On the IAM runtime roles’ trust policy, we already have the sts:TagSession permission with the condition “aws:RequestTag/LakeFormationAuthorizedCaller": "Amazon EMR". So we’re ready to proceed.

To demonstrate how Lake Formation works with EMR steps, we create one database named entities with two tables named users and products, and we assign in Lake Formation the grants summarized in the following table.

| IAM Roles \ Tables | entities (DB) |

|

| users (Table) |

products (Table) |

|

| test-emr-demo1 | Full Read Access | No Access |

| test-emr-demo2 | Read Access on Columns: uid, state | Full Read Access |

We first prepare our Amazon S3 files.

users.csv file with the following content:

We can create our entities database by using the AWS Glue APIs.

entities-db.json file with the following content (substitute emr-steps-roles-new-us-east-1 with your bucket name):

We also use the AWS Glue APIs to create the tables users and products.

users-table.json file with the following content (substitute emr-steps-roles-new-us-east-1 with your bucket name):

products-table.json file with the following content (substitute emr-steps-roles-new-us-east-1 with your bucket name):

To access our tables data in Amazon S3, Lake Formation needs read/write access to them. To achieve that, we have to register Amazon S3 locations where our data resides and specify for them which IAM role to obtain credentials from.

Let’s create our IAM role for the data access.

trust-policy-data-access-role.json with the following content:

role emr-demo-lf-data-access-role:

data-access-role-policy.json with the following content (substitute emr-steps-roles-new-us-east-1 with your bucket name):

emr-demo-lf-data-access-role the created policy (replace 123456789012 with your AWS account ID):

We can now register our data location in Lake Formation.

emr-demo-lf-data-access-role IAM role, which has read/write access to that location.

For more details about adding an Amazon S3 location to your data lake and configuring your IAM data access roles, refer to Adding an Amazon S3 location to your data lake.

To be sure we’re using Lake Formation permissions, we should confirm that we don’t have any grants set up for the principal IAMAllowedPrincipals. The IAMAllowedPrincipals group includes any IAM users and roles that are allowed access to your Data Catalog resources by your IAM policies, and it’s used to maintain backward compatibility with AWS Glue.

To confirm Lake Formations permissions are enforced, navigate to the Lake Formation console and choose Data lake permissions in the navigation pane. Filter permissions by “Database”:“entities” and remove all the permissions given to the principal IAMAllowedPrincipals.

For more details on IAMAllowedPrincipals and backward compatibility with AWS Glue, refer to Changing the default security settings for your data lake.

To allow our IAM runtime roles to properly interact with Lake Formation, we should provide them the lakeformation:GetDataAccess and glue:Get* grants.

Lake Formation permissions control access to Data Catalog resources, Amazon S3 locations, and the underlying data at those locations. IAM permissions control access to the Lake Formation and AWS Glue APIs and resources. Therefore, although you might have the Lake Formation permission to access a table in the Data Catalog (SELECT), your operation fails if you don’t have the IAM permission on the glue:Get* API.

For more details about Lake Formation access control, refer to Lake Formation access control overview.

emr-runtime-roles-lake-formation-policy.json file with the following content:

We now set up the permission in Lake Formation for the two runtime roles.

users-grants-test-emr-demo1.json with the following content to grant SELECT access to all columns in the entities.users table to test-emr-demo1:

users-grants-test-emr-demo2.json with the following content to grant SELECT access to the uid and state columns in the entities.users table to test-emr-demo2:

products-grants-test-emr-demo2.json with the following content to grant SELECT access to all columns in the entities.products table to test-emr-demo2:

“Database”:“entities”.For our test, we use a PySpark application called test-lake-formation.py with the following content:

In the script, we’re trying to access the tables users and products. Let’s upload our Spark application in the same S3 bucket that we used earlier:

We’re now ready to perform our test. We run the test-lake-formation.py script first using the test-emr-demo1 role and then using the test-emr-demo2 role as the runtime roles.

Let’s submit a step specifying test-emr-demo1 as the runtime role:

The following is the output of the EMR step with test-emr-demo1 as the runtime role:

As we can see, our application was only able to access the users table.

Submit the same script again as a new EMR step, but this time with the role test-emr-demo2 as the runtime role:

The following is the output of the EMR step with test-emr-demo2 as the runtime role:

As we can see, our application was able to access a subset of columns for the users table and all the columns for the products table.

We can conclude that the permissions while accessing the Data Catalog are being enforced based on the runtime role used with the EMR step.

The source identity is a mechanism to monitor and control actions taken with assumed roles. The Propagate source identity feature similarly allows you to monitor and control actions taken using runtime roles by the jobs submitted with EMR steps.

We already configured EMR_EC2_defaultRole with "sts:SetSourceIdentity" on our two runtime roles. Also, both runtime roles let EMR_EC2_DefaultRole to SetSourceIdentity in their trust policy. So we’re ready to proceed.

We now see the Propagate source identity feature in action with a simple example.

We configure the IAM role job-submitter-1, which is assumed specifying the source identity and which is used to submit the EMR steps. In this example, we allow the IAM user paul to assume this role and set the source identity. Please note you can use any IAM principal here.

trust-policy-2.json with the following content (replace 123456789012 with your AWS account ID):

job-submitter-1:

We use now the same emr-runtime-roles-submitter-policy policy we defined before to allow the role to submit EMR steps using the test-emr-demo1 and test-emr-demo2 runtime roles.

job-submitter-1 (replace 123456789012 with your AWS account ID):

To show how propagation of source identity works with Amazon EMR, we generate a role session with the source identity test-ad-user.

With the IAM user paul (or with the IAM principal you configured), we first perform the impersonation (replace 123456789012 with your AWS account ID):

The following code is the output received:

We use the temporary AWS security credentials of the role session, to submit an EMR step along with the runtime role test-emr-demo1:

In a few minutes, we can see events appearing in the AWS CloudTrail log file. We can see all the AWS APIs that the jobs invoked using the runtime role. In the following snippet, we can see that the step performed the sts:AssumeRole and lakeformation:GetDataAccess actions. It’s worth noting how the source identity test-ad-user has been preserved in the events.

You can now delete the EMR cluster you created.

iam-passthrough-cluster, then choose Terminate.Alternatively, you can delete the cluster by using the Amazon EMR CLI with the following command (replace the EMR cluster ID with the one returned by the previously run aws emr create-cluster command):

In this post, we discussed how you can control data access on Amazon EMR on EC2 clusters by using runtime roles with EMR steps. We discussed how the feature works, how you can use Lake Formation to apply fine-grained access controls, and how to monitor and control actions using a source identity. To learn more about this feature, refer to Configure runtime roles for Amazon EMR steps.

Stefano Sandona is an Analytics Specialist Solution Architect with AWS. He loves data, distributed systems and security. He helps customers around the world architecting their data platforms. He has a strong focus on Amazon EMR and all the security aspects around it.

Stefano Sandona is an Analytics Specialist Solution Architect with AWS. He loves data, distributed systems and security. He helps customers around the world architecting their data platforms. He has a strong focus on Amazon EMR and all the security aspects around it.

Sharad Kala is a senior engineer at AWS working with the EMR team. He focuses on the security aspects of the applications running on EMR. He has a keen interest in working and learning about distributed systems.

Sharad Kala is a senior engineer at AWS working with the EMR team. He focuses on the security aspects of the applications running on EMR. He has a keen interest in working and learning about distributed systems.

Post Syndicated from original https://lwn.net/Articles/910766/

Since its inclusion in the Linux kernel, the WireGuard VPN tunnel has become

increasingly popular. In general, WireGuard is simpler to configure than

other VPNs, but the approach that it takes to authentication can present

some challenges. Each node in a WireGuard network has a cryptographic key

that serves as the node’s identity;

nodes that do not know each other’s keys cannot directly communicate.

Keeping

track of these keys and distributing them to the other nodes

in a mesh network quickly becomes a chore as the network grows.

Fortunately, there are now

several open-source

tools that can automate the management of these keys and make using

WireGuard easier for both administrators and end users.

Post Syndicated from Matt Granger original https://www.youtube.com/watch?v=B2PXiYOVQI4

Post Syndicated from original https://lwn.net/Articles/911563/

Version

106.0 of the Firefox browser has been released. There are several new

features, including PDF editing, Firefox

View (an overview of recently closed tabs), and a set of new color

schemes.

Post Syndicated from original https://lwn.net/Articles/911562/

Security updates have been issued by Debian (glibc and libksba), Fedora (dhcp and kernel), Red Hat (.NET 6.0, .NET Core 3.1, compat-expat1, kpatch-patch, and nodejs:16), Slackware (xorg), SUSE (exiv2, expat, kernel, libreoffice, python, python-numpy, squid, and virtualbox), and Ubuntu (linux-azure and zlib).

Post Syndicated from Ron Bowes original https://blog.rapid7.com/2022/10/18/flexlm-and-citrix-adm-denial-of-service-vulnerability/

On June 27, 2022, Citrix released an advisory for CVE-2022-27511 and CVE-2022-27512, which affect Citrix ADM (Application Delivery Management).

Rapid7 investigated these issues to better understand their impact, and found that the patch is not sufficient to prevent exploitation. We also determined that the worst outcome of this vulnerability is a denial of service – the licensing server can be told to shut down (even with the patch). We were not able to find a way to reset the admin password, as the original bulletin indicated.

In the course of investigating CVE-2022-27511 and CVE-2022-27512, we determined that the root cause of the issues in Citrix ADM was a vulnerable implementation of popular licensing software FLEXlm, also known as FlexNet Publisher. This disclosure addresses both the core issue in FLEXlm and Citrix ADM’s implementation of it (which resulted in both the original CVEs and later the patch bypass our research team discovered). Rapid7 coordinated disclosure with both companies and CERT/CC.

As of this publication, these issues remain unpatched, so IT defenders are urged to reach out to Revenera and Citrix for direct guidence on mitigating these denial of service vulnerabilities and CVE assignment.

FLEXlm is a license management application that is part of FlexNet licensing, provided by Revenera’s Flexnet Software, and is used for license provisioning on many popular network applications, including Citrix ADM. You can read more about FlexNet at the vendor’s website.

Citrix ADM is an application provisioning solution from Citrix, which uses FLEXlm for license management. You can read more about Citrix ADM at the vendor’s website.

This issue was discovered by Ron Bowes of Rapid7 while researching CVE-2022-27511 in Citrix ADM. It is being disclosed in accordance with Rapid7’s vulnerability disclosure policy.

Citrix ADM runs on FreeBSD, and remote administrative logins are possible. Using that, we compared two different versions of the Citrix ADM server – before and after the patch.

Eventually, we went through each network service, one by one, to check what each one did and whether the patch may have fixed something. When we got to TCP port 27000, we found that lmgrd was running. Looking up lmgrd, we determined that it’s a licensing server made by FLEXlm called FlexNet Licensing (among other names), made by Revenera. Since the bulletin calls out licensing disruption, this seemed like a sensible place to look; from the bulletin:

Temporary disruption of the ADM license service. The impact of this includes preventing new licenses from being issued or renewed by Citrix ADM.

If we look at how lmgrd is executed before and after the patch, we find that the command line arguments changed; before:

bash-3.2# ps aux | grep lmgrd

root 3506 0.0 0.0 10176 6408 - S 19:22 0:09.67 /netscaler/lmgrd -l /var/log/license.log -c /mpsconfig/license

And after:

bash-3.2# ps aux | grep lmgrd

root 5493 0.0 0.0 10176 5572 - S 13:15 0:02.45 /netscaler/lmgrd -2 -p -local -l /var/log/license.log -c /mpsconfig/license

If we look at some online documentation, we see that the -2 -p flags are security-related:

-2 -p Restricts usage of lmdown, lmreread, and lmremove to a FLEXlm administrator who is by default root. [...]

We tested a Linux copy of FlexNet 11.18.3.1, which allowed us to execute and debug Flex locally. Helpfully, the various command line utilities that FlexNet uses to perform actions (accessible via lmutil) use a TCP connection to localhost, allowing us to analyze the traffic. For example, the following command:

$ ./lmutil lmreread -c ./license/citrix_startup.lic

lmutil - Copyright (c) 1989-2021 Flexera. All Rights Reserved.

lmreread successful

Generates a lot of traffic going to localhost:27000, including:

Sent:

00000000 2f 4c 0f b0 00 40 01 02 63 05 2c 85 00 00 00 00 /L...@.. c.,.....

00000010 00 00 00 02 01 04 0b 12 00 54 00 78 00 02 0b af ........ .T.x....

00000020 72 6f 6e 00 66 65 64 6f 72 61 00 2f 64 65 76 2f ron.fedo ra./dev/

00000030 70 74 73 2f 32 00 00 78 36 34 5f 6c 73 62 00 01 pts/2..x 64_lsb..

Received:

00000000 2f 8f 09 c6 00 26 01 0e 63 05 2c 85 41 00 00 00 /....&.. c.,.A...

00000010 00 00 00 02 0b 12 01 04 00 66 65 64 6f 72 61 00 ........ .fedora.

00000020 6c 6d 67 72 64 00 lmgrd.

Sent:

00000040 2f 23 34 78 00 24 01 07 63 05 2c 86 00 00 00 00 /#4x.$.. c.,.....

00000050 00 00 00 02 72 6f 6e 00 66 65 64 6f 72 61 00 00 ....ron. fedora..

00000060 92 00 00 0a ....

Received:

00000026 2f 54 18 b9 00 a8 00 4f 63 05 2c 86 41 00 00 00 /T.....O c.,.A...

00000036 00 00 00 02 4f 4f 00 00 00 00 00 00 00 00 00 00 ....OO.. ........

00000046 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ........ ........

00000056 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ........ ........

00000066 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ........ ........

00000076 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ........ ........

00000086 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ........ ........

00000096 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ........ ........