Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=b4NTGitP8_Y

What we served up for the last Birthday Week before we’re a teenager

Post Syndicated from John Graham-Cumming original https://blog.cloudflare.com/what-we-served-up-for-the-last-birthday-week-before-were-a-teenager/

Almost a teen. With Cloudflare’s 12th birthday last Tuesday, we’re officially into our thirteenth year. And what a birthday we had!

36 announcements ranging from SIM cards to post quantum encryption via hardware keys and so much more. Here’s a review of everything we announced this week.

Monday

| What | In a sentence… |

|---|---|

| The First Zero Trust SIM | We’re bringing Zero Trust security controls to the humble SIM card, rethinking how mobile device security is done, with the Cloudflare SIM: the world’s first Zero Trust SIM. |

| Securing the Internet of Things | We’ve been defending customers from Internet of Things botnets for years now, and it’s time to turn the tides: we’re bringing the same security behind our Zero Trust platform to IoT. |

| Bringing Zero Trust to mobile network operators | Helping bring the power of Cloudflare’s Zero Trust platform to mobile operators and their subscribers. |

Tuesday

| What | In a sentence… |

|---|---|

| Workers Launchpad | Leading venture capital firms to provide up to $1.25 BILLION to back startups built on Cloudflare Workers. |

| Startup Plan v2.0 | Increasing the scope, eligibility and products we include under our Startup Plan, enabling more developers and startups to build the next big thing on top of Cloudflare. |

| workerd: the Open Source Workers runtime | workerd, the JavaScript/Wasm runtime based on the same code that powers Cloudflare Workers. workerd is open source under the Apache License version 2.0. |

| Cloudflare Calls | A new product that lets developers build real-time audio/video apps. Cloudflare Calls exposes a set of APIs to build video conferencing, screen sharing, and group calling apps on our network. |

| Cloudflare Queues | Queues is a global message queuing service that allows applications to reliably send and receive messages using Cloudflare Workers. It offers at-least-once message delivery, supports batching of messages, and charges no bandwidth egress fees. |

| What’s new with D1 | Improving the developer experience of D1 with CLI support for backups, snapshots and local development. |

| WebRTC live streaming | Cloudflare Stream now supports live video streaming over WebRTC, with sub-second latency, to unlimited concurrent viewers. |

| The future of Page Rules | Our plan to replace Page Rules with four dedicated products, offering increased rules quota, more functionality, and better granularity. |

| Cache Rules | Evolving rules-based caching on Cloudflare with more configurable Cache Rules. |

| Configuration Rules | Configuration Rules enable new use-cases that previously were impossible without writing custom code in a Cloudflare Worker, including A/B testing configuration, enabling features for a set of file extensions and much more. |

| Origin Rules | A new product which allows for overriding the host header, the Server Name Indication (SNI), destination port and DNS resolution of matching HTTP requests. |

| Dynamic URL redirects | Users can redirect visitors to another webpage or website based upon hundreds of options such as the visitor’s country of origin or language, without having to write a single line of code. |

| Cloudflare named a Leader in WAF by Forrester | Forrester has recognised Cloudflare as a Leader in The Forrester Wave™: Web Application Firewalls, Q3 2022 report. |

Wednesday

| What | In a sentence… |

|---|---|

| Turnstile, a user-friendly, privacy-preserving alternative to CAPTCHA | Turnstile is an invisible alternative to CAPTCHA. Anyone, anywhere on the Internet, who wants to replace CAPTCHA on their site will be able to call a simple API, without having to be a Cloudflare customer or sending traffic through the Cloudflare global network. |

| Magic Network Monitoring for everyone | Magic Network Monitoring will be available to everyone, and now features a powerful analytics dashboard, self-serve configuration, and a step-by-step onboarding wizard. |

| Botnet Threat Feed for service providers | The Botnet Threat Feed will give ISPs threat intelligence on their own IP addresses that have participated in HTTP DDoS attacks as observed from the Cloudflare network — allowing them to reduce their abuse-driven costs, and ultimately reduce the amount and force of DDoS attacks across the Internet. |

| Build privacy-preserving products with Privacy Edge | Privacy Edge, including Code Auditability, Privacy Gateway, Privacy Proxy, and Cooperative Analytics, is a suite of products that make it easy for site owners and developers to build privacy into their products, by default. |

| Quick search in the dashboard | Our first release of quick search for the Cloudflare dashboard, a beta version of our first ever cross-dashboard search tool to help you navigate our products and features. |

Thursday

| What | In a sentence… |

|---|---|

| Making phishing defense seamless with Cloudflare Zero Trust and Yubico | An exclusive program for Cloudflare customers that makes hardware keys more accessible and economical than ever. This program is made possible through a new collaboration with Yubico, the industry’s leading hardware security key vendor and provides Cloudflare customers with exclusive “Good for the Internet” pricing. |

| How Cloudflare implemented hardware keys to prevent phishing | How Cloudflare uses hardware keys, built on FIDO2 and Webauthn, to become phish proof and more easily enforce least privilege access control. |

| Role Based Access Controls for every Cloudflare plan | Role based access controls, and all of our additional roles, will be rolled out to users on every plan. |

| Email Link Isolation | Bringing Browser Isolation to potentially unsafe links in email with Zero Trust and Area 1. |

| Unmetered Rate Limiting | Today, we are announcing that Free, Pro and Business plans include Rate Limiting rules without extra charges, including an updated version that is built on the powerful ruleset engine and allows building rules like in Custom Rules. |

Friday

| What | In a sentence… |

|---|---|

| Gateway + CASB | When CASB, Cloudflare’s API-driven SaaS security scanning tool, discovers a problem, it’s now possible to easily create a corresponding Gateway policy in as few as three clicks. |

| Project A11Y | How we upgraded Cloudflare’s dashboard to adhere to industry accessibility standards. |

| Bringing (free) Stream to Pro and Business plans | Beginning December 1, 2022, if you have a Business or Pro subscription, you will receive a complimentary allocation of Cloudflare Stream: you can store up to 100 minutes of video content and deliver up to 10,000 minutes of video content each month at no additional cost. |

| Workers Analytics Engine public beta | Workers Analytics Engine is a new way for developers to store and analyze time series analytics about anything using Cloudflare Workers, and it’s now in open beta! |

| Radar 2.0 | On the second anniversary of Cloudflare Radar, we are launching Cloudflare Radar 2.0 in beta. It makes it easier to find insights and explore data, see more insights, and share them with others. |

| Cloudflare Radar Outage Center | The new Cloudflare Radar Outage Center (CROC), launched today as part of Radar 2.0, is intended to be an archive of Internet outages around the world. |

| Radar Domain Rankings | Radar Domain Rankings is a new dataset for exploring the most popular domains on the Internet. The dataset aims to identify the top most popular domains based on how people use the Internet globally, without tracking individuals’ Internet use. |

One More Thing

We had so much over the week that we had to add just one more day, with a big focus on cryptography: not only how clients connect to our network, but also how Cloudflare connects to customer origins.

| What | In a sentence… |

|---|---|

| Bringing post quantum cryptography to Cloudflare customers | As a beta service, all websites and APIs served through Cloudflare support post-quantum hybrid key agreement. This is on by default; no need for an opt-in. This means that if your browser/app supports it, the connection to our network is also secure against any future quantum computer. |

| Cloudflare Tunnel goes post quantum | Cloudflare Tunnel gets a new option to use post-quantum connections. |

| Securing Origin Connectivity | Cloudflare will automatically find the most secure connection possible to origin servers and use it automatically. |

Next

And that’s it for Birthday Week 2022. But it’s not over for Cloudflare Innovation Weeks this year; stay tuned for a week of developer goodies coming soon.

Security updates for Monday

Post Syndicated from original https://lwn.net/Articles/910161/

Security updates have been issued by Debian (chromium, gdal, kernel, libdatetime-timezone-perl, libhttp-daemon-perl, lighttpd, mariadb-10.3, node-thenify, snakeyaml, tinyxml, and tzdata), Fedora (enlightenment, kitty, and thunderbird), Mageia (expat, firejail, libjpeg, nodejs, perl-HTTP-Daemon, python-mako, squid, and thunderbird), Scientific Linux (firefox and thunderbird), SUSE (buildah, connman, cosign, expat, ImageMagick, python36, python39, slurm, and webkit2gtk3), and Ubuntu (linux, linux-aws, linux-kvm, linux-lts-xenial and linux-gke-5.15).

Adding Smart blinds to my home – Yoolax and Smartwings Zigbee Shades

Post Syndicated from digiblurDIY original https://www.youtube.com/watch?v=vLkvTTNnI_s

Defending against future threats: Cloudflare goes post-quantum

Post Syndicated from Bas Westerbaan original https://blog.cloudflare.com/post-quantum-for-all/

There is an expiration date on the cryptography we use every day. It’s not easy to read, but somewhere between 15 and 40 years from now, a sufficiently powerful quantum computer is expected to be built that will be able to decrypt essentially any encrypted data on the Internet today.

Luckily, there is a solution: post-quantum (PQ) cryptography has been designed to be secure against the threat of quantum computers. Just three months ago, in July 2022, after a six-year worldwide competition, the US National Institute of Standards and Technology (NIST), known for AES and SHA2, announced which post-quantum cryptography they will standardize. NIST plans to publish the final standards in 2024, but we want to help drive early adoption of post-quantum cryptography.

Starting today, as a beta service, all websites and APIs served through Cloudflare support post-quantum hybrid key agreement. This is on by default¹; no need for an opt-in. This means that if your browser/app supports it, the connection to our network is also secure against any future quantum computer.

We offer this post-quantum cryptography free of charge: we believe that post-quantum security should be the new baseline for the Internet.

Deploying post-quantum cryptography seems like a no-brainer with quantum computers on the horizon, but it’s not without risks. To start, this is new cryptography: even with years of scrutiny, it is not inconceivable that a catastrophic attack might still be discovered. That is why we are deploying hybrids: a combination of a tried and tested key agreement together with a new one that adds post-quantum security.

We are primarily worried about what might seem mere practicalities. Even though the protocols used to secure the Internet are designed to allow smooth transitions like this, in reality there is a lot of buggy code out there: trying to create a post-quantum secure connection might fail for many reasons — for example a middlebox being confused about the larger post-quantum keys and other reasons we have yet to observe because these post-quantum key agreements are brand new. It’s because of these issues that we feel it is important to deploy post-quantum cryptography early, so that together with browsers and other clients we can find and work around these issues.

In this blog post we will explain how TLS, the protocol used to secure the Internet, is designed to allow a smooth and secure migration of the cryptography it uses. Then we will discuss the technical details of the post-quantum cryptography we have deployed, and how, in practice, this migration might not be that smooth at all. We finish this blog post by explaining how you can build a better, post-quantum secure, Internet by helping us test this new generation of cryptography.

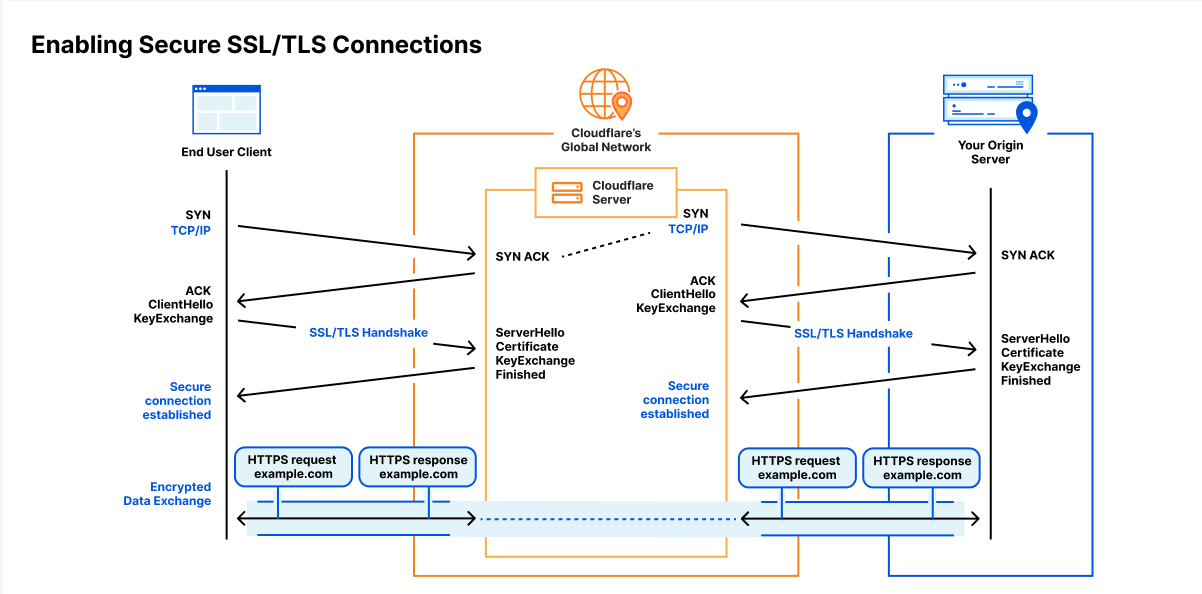

TLS: Transport Layer Security

When you’re browsing a website using a secure connection, whether that’s using HTTP/1.1 or QUIC, you are using the Transport Layer Security (TLS) protocol under the hood. There are two major versions of TLS in common use today: the new TLS 1.3 (~90%) and the older TLS 1.2 (~10%), which is on the decline.

TLS 1.3 is a huge improvement over TLS 1.2: it’s faster, more secure, simpler and more flexible in just the right places. This makes it easier to add post-quantum security to TLS 1.3 compared to 1.2. For the moment, we will leave it at that: we’ve only added post-quantum support to TLS 1.3.

So, what is TLS all about? The goal is to set up a connection between a browser and website that provides:

- Confidentiality and integrity: no one can read along or tamper with the data undetected.

- Authenticity: you know you’re connected to the right website; not an imposter.

Building blocks: AEAD, key agreement and signatures

Three different types of cryptography are used in TLS to reach this goal.

- Symmetric encryption, or more precisely Authenticated Encryption With Associated Data (AEAD), is the workhorse of cryptography: it’s used to ensure confidentiality and integrity. This is a straight-forward kind of encryption: there is a single key that is used to encrypt and decrypt the data. Without the right key you cannot decrypt the data and any tampering with the encrypted data results in an error while decrypting.

In TLS 1.3, ChaCha20-Poly1305 and AES128-GCM are in common use today.

What about quantum attacks? At first glance, it looks like we need to switch to 256-bit symmetric keys to defend against Grover’s algorithm. In practice, however, Grover’s algorithm doesn’t parallelize well, so the currently deployed AEADs will serve just fine.

So if we can agree on a shared key to use with symmetric encryption, we’re golden. But how to get to a shared key? You can’t just pick a key and send it to the server: anyone listening in would know the key as well. One might think it’s an impossible task, but this is where the magic of asymmetric cryptography helps out:

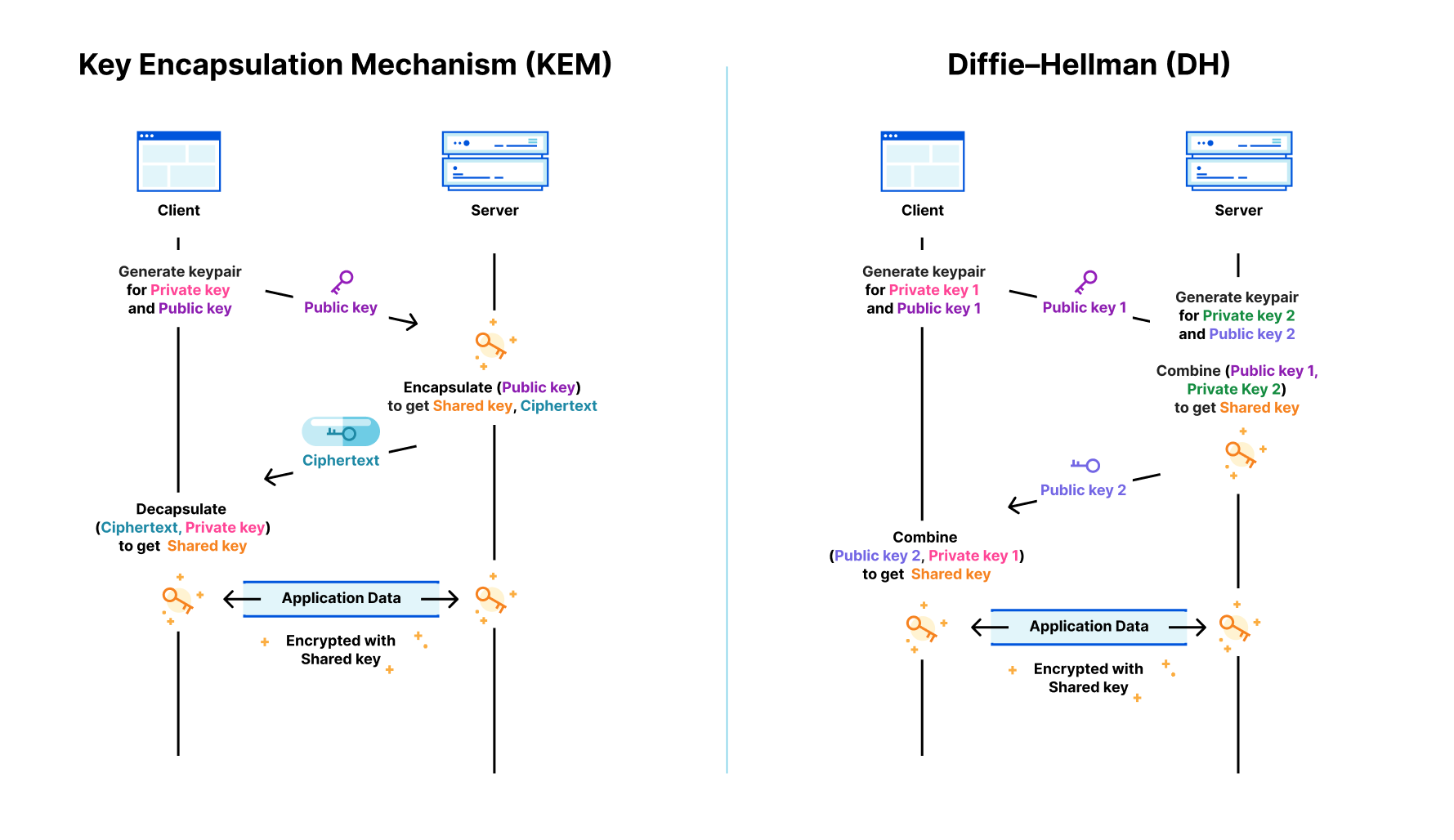

- A key agreement, also called key exchange or key distribution, is a cryptographic protocol with which two parties can agree on a shared key without an eavesdropper being able to learn anything. Today the X25519 Elliptic Curve Diffie–Hellman protocol (ECDH) is the de facto standard key agreement used in TLS 1.3. The security of X25519 is based on the discrete logarithm problem for elliptic curves, which is vulnerable to quantum attacks, as it is easily solved by a cryptographically relevant quantum computer using Shor’s algorithm. The solution is to use a post-quantum key agreement, such as Kyber.

A key agreement only protects against a passive attacker. An active attacker, that can intercept and modify messages (MitM), can establish separate shared keys with both the server and the browser, re-encrypting all data passing through. To solve this problem, we need the final piece of cryptography.

- With a digital signature algorithm, such as RSA or ECDSA, there are two keys: a public and a private key. Only with the private key, one can create a signature for a message. Anyone with the corresponding public key can check whether a signature is indeed valid for a given message. These digital signatures are at the heart of TLS certificates that are used to authenticate websites.

Both RSA and ECDSA are vulnerable to quantum attacks. We haven’t replaced those with post-quantum signatures, yet. The reason is that authentication is less urgent: we only need to have them replaced by the time a sufficiently large quantum computer is built, whereas any data secured by a vulnerable key agreement today can be stored and decrypted in the future. Even though we have more time, deploying post-quantum authentication will be quite challenging.

So, how do these building blocks come together to create TLS?

High-level overview of TLS 1.3

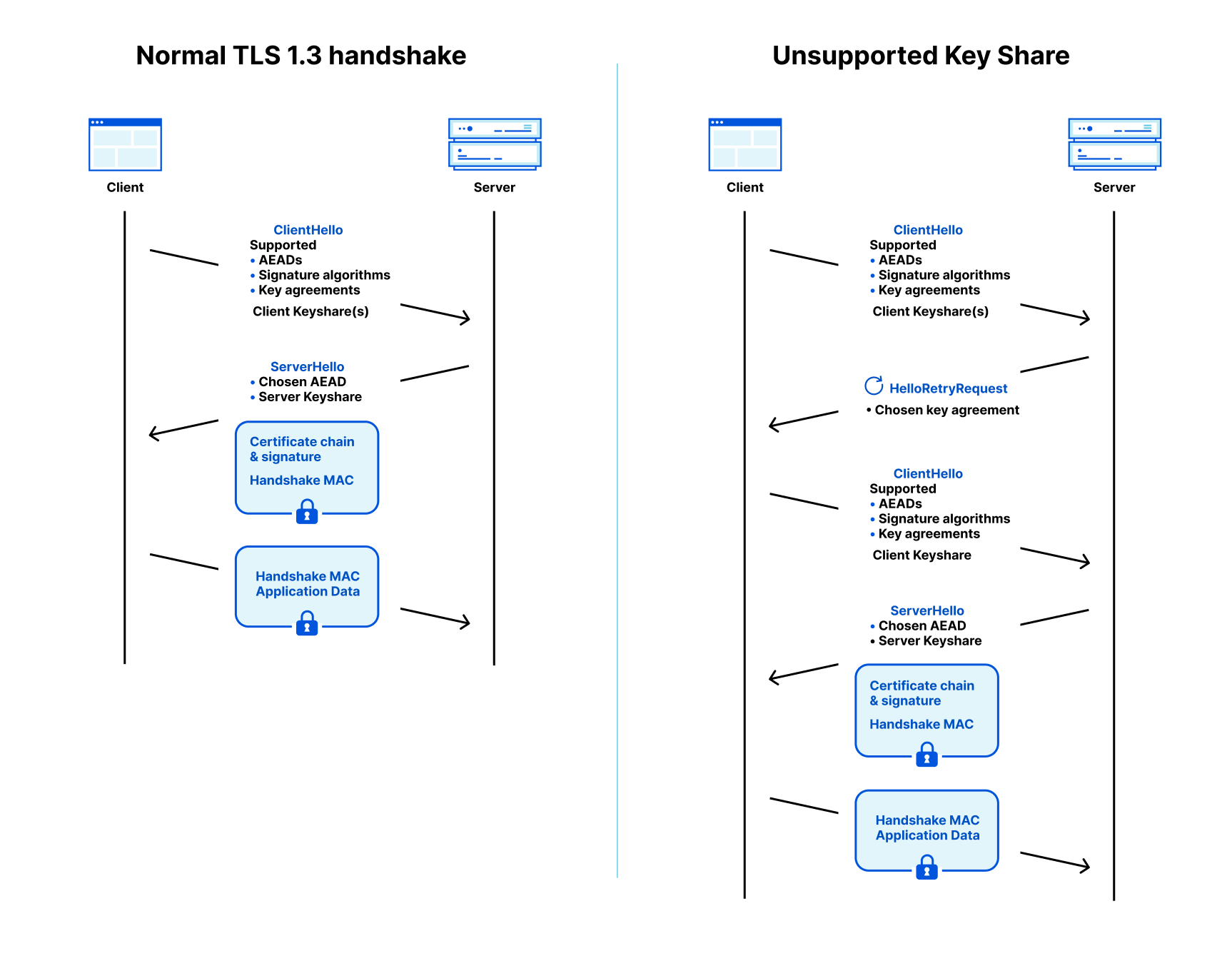

A TLS connection starts with a handshake which is used to authenticate the server and derive a shared key. The browser (client) starts by sending a ClientHello message that contains a list of the AEADs, signature algorithms, and key agreement methods it supports. To remove a roundtrip, the client is allowed to make a guess of what the server supports and start the key agreement by sending one or more client keyshares. That guess might be correct (on the left in the diagram above) or the client has to retry (on the right).

Key agreement

Before we explain the rest of this interaction, let’s dig into the key agreement: what is a keyshare? The key agreements for Kyber and X25519 work differently: the former is a Key Encapsulation Mechanism (KEM), while the latter is a Diffie–Hellman (DH) style agreement. The DH style is more flexible, but for TLS it doesn’t make a difference.

In both cases the client sends a client keyshare to the server. From this client keyshare the server generates the shared key. The server then returns a server keyshare with which the client can also compute the shared key.
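The keyshare exchange described above can be sketched with a toy Diffie–Hellman over integers. This is only an illustration of the message flow: it is neither X25519 nor Kyber, the group parameters are chosen for brevity rather than security, and the function names are hypothetical.

```python
import hashlib
import secrets

# Toy group parameters (assumption for illustration only, NOT secure):
# a well-known prime modulus and a small generator.
P = 2**255 - 19
G = 2


def generate_keyshare():
    """Return (private secret, public keyshare) for one party."""
    secret = secrets.randbelow(P - 2) + 1
    share = pow(G, secret, P)
    return secret, share


def derive_shared_key(own_secret, peer_share):
    """Combine our secret with the peer's keyshare into a 32-byte key."""
    shared = pow(peer_share, own_secret, P)
    return hashlib.sha256(shared.to_bytes(32, "big")).digest()


# The client sends its keyshare in the ClientHello.
client_secret, client_share = generate_keyshare()

# The server derives the shared key and replies with its own keyshare.
server_secret, server_share = generate_keyshare()
server_key = derive_shared_key(server_secret, client_share)

# The client can now compute the same shared key.
client_key = derive_shared_key(client_secret, server_share)
```

An eavesdropper sees only `client_share` and `server_share`, never the secrets. With a KEM such as Kyber the messages travel in the same direction, but the server's reply is a ciphertext encapsulated against the client's public key rather than a symmetric DH share.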

Going back to the TLS 1.3 flow: when the server receives the ClientHello message it picks an AEAD (cipher), signature algorithm and client keyshare that it supports. It replies with a ServerHello message that contains the chosen AEAD and the server keyshare for the selected key agreement. With the AEAD and shared key locked in, the server starts encrypting data (shown with blue boxes).

Authentication

Together with the AEAD and server keyshare, the server sends a signature, the handshake signature, on the transcript of the communication so far together with a certificate (chain) for the public key that it used to create the signature. This allows the client to authenticate the server: it checks whether it trusts the certificate authority (e.g. Let’s Encrypt) that certified the public key and whether the signature verifies for the messages it sent and received so far. This not only authenticates the server, but it also protects against downgrade attacks.

Downgrade protection

We cannot upgrade all clients and servers to post-quantum cryptography at once. Instead, there will be a transition period where only some clients and some servers support post-quantum cryptography. The key agreement negotiation in TLS 1.3 allows this: during the transition servers and clients will still support non-post-quantum key agreements, and can fall back to them if necessary.

This flexibility is great, but also scary: if both client and server support post-quantum key agreement, we want to be sure that they also negotiate the post-quantum key agreement. This is the case in TLS 1.3, but it is not obvious: the keyshares, the chosen keyshare and the list of supported key agreements are all sent in plain text. Isn’t it possible for an attacker in the middle to remove the post-quantum key agreements? This is called a downgrade attack.

This is where the transcript comes in: the handshake signature is taken over all messages received and sent by the server so far. This includes the supported key agreements and the key agreement that was picked. If an attacker changes the list of supported key agreements that the client sends, then the server will not notice. However, the client checks the server’s handshake signature against the list of supported key agreements it has actually sent and thus will detect the mischief.
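A minimal sketch of why the transcript check catches a downgrade, under simplifying assumptions: messages are plain byte strings rather than real TLS encodings, and the handshake signature is modeled only by the transcript hash it would cover. The helper names and the attacker function are hypothetical.

```python
import hashlib


def transcript_hash(messages):
    """Hash a list of handshake messages, length-prefixing each one."""
    h = hashlib.sha256()
    for message in messages:
        h.update(len(message).to_bytes(4, "big"))
        h.update(message)
    return h.digest()


def client_hello(groups):
    """Simplified ClientHello carrying only the supported key agreements."""
    return b"ClientHello:" + ",".join(groups).encode()


def strip_post_quantum(hello):
    """Hypothetical attacker in the middle removing post-quantum groups."""
    prefix, groups = hello.split(b":", 1)
    kept = [g for g in groups.split(b",") if b"Kyber" not in g]
    return prefix + b":" + b",".join(kept)


sent = client_hello(["X25519Kyber768Draft00", "X25519"])
received = strip_post_quantum(sent)  # what the server actually sees
server_hello = b"ServerHello:X25519"

# The server signs the transcript as it saw it; the client checks the
# signature against the transcript as it sent and received it.
signed_by_server = transcript_hash([received, server_hello])
expected_by_client = transcript_hash([sent, server_hello])
downgrade_detected = signed_by_server != expected_by_client
```

In real TLS 1.3 the client verifies the signature with the server's public key over its own view of the transcript, so a mismatch like this one makes verification fail and the handshake is aborted.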

The downgrade attack problems are much more complicated for TLS 1.2, which is one of the reasons we’re hesitant to retrofit post-quantum security in TLS 1.2.

Wrapping up the handshake

The last part of the server’s response is “server finished”, a message authentication code (MAC) on the whole transcript so far. Most of the work has been done by the handshake signature, but in other operating modes of TLS without a handshake signature, such as session resumption, it’s important.

With the chosen AEAD and server keyshare, the client can compute the shared key and decrypt and verify the certificate chain, handshake signature and handshake MAC. We did not mention it before, but the shared key is not used directly for encryption. Instead, for good measure, it’s mixed together with communication transcripts, to derive several specific keys for use during the handshake and the main connection afterwards.
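To illustrate the idea of deriving several specific keys from one shared key and the transcript, here is a simplified sketch built on HKDF (RFC 5869) with SHA-256. It is not the actual TLS 1.3 key schedule: the labels, the all-zero salt, and the `derive_keys` helper are assumptions made for the example.

```python
import hashlib
import hmac


def hkdf_extract(salt, input_key_material):
    """HKDF-Extract: condense input key material into a pseudorandom key."""
    return hmac.new(salt, input_key_material, hashlib.sha256).digest()


def hkdf_expand(pseudorandom_key, info, length):
    """HKDF-Expand: stretch a pseudorandom key into `length` output bytes."""
    output = b""
    block = b""
    counter = 1
    while len(output) < length:
        block = hmac.new(
            pseudorandom_key, block + info + bytes([counter]), hashlib.sha256
        ).digest()
        output += block
        counter += 1
    return output[:length]


def derive_keys(shared_key, transcript):
    """Derive one 32-byte key per (hypothetical) purpose label."""
    transcript_digest = hashlib.sha256(transcript).digest()
    prk = hkdf_extract(b"\x00" * 32, shared_key)
    labels = (
        "client handshake",
        "server handshake",
        "client application",
        "server application",
    )
    return {
        label: hkdf_expand(prk, label.encode() + transcript_digest, 32)
        for label in labels
    }


keys = derive_keys(b"\x42" * 32, b"ClientHello|ServerHello")
```

Because the transcript digest is part of every derivation, two connections that agree on the same shared key but differ anywhere in the handshake still end up with unrelated keys.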

To wrap up the handshake, the client sends its own handshake MAC, and can then proceed to send application-specific data encrypted with the keys derived during the handshake.

Hello! Retry Request?

What we just sketched is the desirable flow where the client sends a keyshare that is supported by the server. That might not be the case. If the server doesn’t accept any key agreements advertised by the client, then it will tell the client and abort the connection.

If there is a key agreement that both support, but for which the client did not send a keyshare, then the server will respond with a HelloRetryRequest (HRR) message requesting a keyshare of a specific key agreement that the client supports as shown on the diagram on the right. In turn, the client responds with a new ClientHello with the selected keyshare.

This is not the whole story: a server is also allowed to send a HelloRetryRequest to request a different key agreement that it prefers over those for which the client sent shares. For instance, a server can send a HelloRetryRequest to a post-quantum key agreement if the client supports it, but didn’t send a keyshare for it.
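The three possible server responses can be sketched as a small decision function. This is a simplified model, not code from any TLS library: the function name and return values are hypothetical, and it assumes the server always insists on its most preferred mutually supported key agreement, which the text above describes as allowed rather than required.

```python
def negotiate(server_preferences, client_supported, client_keyshares):
    """Decide how a server answers a ClientHello.

    server_preferences: key agreements the server supports, best first.
    client_supported:   key agreements the client advertised.
    client_keyshares:   key agreements the client already sent a keyshare for.
    """
    common = [g for g in server_preferences if g in client_supported]
    if not common:
        # Nothing in common: tell the client and abort the connection.
        return ("abort", None)
    preferred = common[0]
    if preferred in client_keyshares:
        # The client's guess was right: continue with ServerHello.
        return ("server_hello", preferred)
    # Supported by both, but no keyshare was sent: ask the client to retry.
    return ("hello_retry_request", preferred)


server_preferences = ["X25519Kyber768Draft00", "X25519"]

# The client supports the hybrid but only sent an X25519 keyshare.
result = negotiate(
    server_preferences,
    client_supported={"X25519Kyber768Draft00", "X25519"},
    client_keyshares={"X25519"},
)
```

Here `result` asks for a retry with the hybrid key agreement, at the cost of one extra round trip. A server that prefers to avoid the round trip could instead accept the X25519 keyshare it already has.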

HelloRetryRequests are rare today. Almost every server supports the X25519 key agreement and almost every client (98% today) sends an X25519 keyshare. Earlier, P-256 was the de facto standard, and for a long time many browsers would send both a P-256 and an X25519 keyshare to prevent a HelloRetryRequest. As we will discuss later, we might not have the luxury to send two post-quantum keyshares.

That’s the theory

TLS 1.3 is designed to be flexible in the cryptography it uses without sacrificing security or performance, which is convenient for our migration to post-quantum cryptography. That is the theory, but there are some serious issues in practice — we’ll go into detail later on. But first, let’s check out the post-quantum key agreements we’ve deployed.

What we deployed

Today we have enabled support for the X25519Kyber512Draft00 and X25519Kyber768Draft00 key agreements using TLS identifiers 0xfe30 and 0xfe31 respectively. These are exactly the same key agreements we enabled on a limited number of zones this July.

These two key agreements are a combination, a hybrid, of the classical X25519 and the new post-quantum Kyber512 and Kyber768 respectively and in that order. That means that even if Kyber turns out to be insecure, the connection remains as secure as X25519.
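The hybrid idea can be sketched as follows, assuming the two shared secrets are simply concatenated (X25519 first, then Kyber) before being fed into key derivation. The secrets here are random placeholders rather than the output of real key agreements, and SHA-256 stands in for the TLS key schedule.

```python
import hashlib
import secrets


def combine_hybrid_secret(x25519_secret, kyber_secret):
    """Derive one key from both secrets, classical part first."""
    return hashlib.sha256(x25519_secret + kyber_secret).digest()


# Placeholder secrets (assumption): real ones come from X25519 and Kyber.
x25519_secret = secrets.token_bytes(32)
kyber_secret = secrets.token_bytes(32)

hybrid_key = combine_hybrid_secret(x25519_secret, kyber_secret)

# An attacker who somehow learned the Kyber secret still has to guess
# the X25519 secret to reproduce the key.
wrong_guess = combine_hybrid_secret(bytes(32), kyber_secret)
```

Since the derived key depends on both inputs, recovering it requires breaking both key agreements, which is the property the hybrid is meant to provide.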

Kyber, for now, is the only key agreement that NIST has selected for standardization. Kyber is very light on the CPU: it is faster than X25519 which is already known for its speed. On the other hand, its keyshares are much bigger:

| Algorithm | PQ | Client keyshare (bytes) | Server keyshare (bytes) | Client ops/sec (higher is better) | Server ops/sec (higher is better) |
|---|---|---|---|---|---|
| Kyber512 | ✅ | 800 | 768 | 50,000 | 100,000 |
| Kyber768 | ✅ | 1,184 | 1,088 | 31,000 | 70,000 |
| X25519 | ❌ | 32 | 32 | 17,000 | 17,000 |

Size and CPU performance compared between X25519 and Kyber. Performance varies considerably by hardware platform and implementation constraints and should be taken as a rough indication only.

Kyber is expected to change in minor, but backwards-incompatible ways, before final standardization by NIST in 2024. Also, the integration with TLS, including the choice and details of the hybrid key agreement, is not yet finalized by the TLS working group. Once it is, we will adopt it promptly.

Because of this, we will not support the preliminary key agreements announced today for the long term; they’re provided as a beta service. We will post updates on our deployment on pq.cloudflareresearch.com and announce it on the IETF PQC mailing list.

Now that we know how TLS negotiation works in theory, and which key agreements we’re adding, how could it fail?

Where things might break in practice

Protocol ossification

Protocols are often designed with flexibility in mind, but if that flexibility is not exercised in practice, it’s often lost. This is called protocol ossification. The roll-out of TLS 1.3 was difficult because of several instances of ossification. One striking example is TLS’ version negotiation: there is a version field in the ClientHello message that indicates the latest version supported by the client. A new version was assigned to TLS 1.3, but in testing it turned out that many servers would not fall back properly to TLS 1.2, but would crash the connection instead. How do we deal with ossification?

Workaround

Today, TLS 1.3 masquerades itself as TLS 1.2 down to including many legacy fields in the ClientHello. The actual version negotiation is moved into a new extension to the message. A TLS 1.2 server will ignore the new extension and ignorantly continue with TLS 1.2, while a TLS 1.3 server picks up on the extension and continues with TLS 1.3 proper.
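The workaround can be sketched with a ClientHello modeled as a dictionary. The version numbers (0x0303 for TLS 1.2, 0x0304 for TLS 1.3) and the `supported_versions` extension name come from the TLS specifications; the dictionary layout and the two server functions are simplifications invented for this example.

```python
TLS_1_2 = 0x0303
TLS_1_3 = 0x0304


def build_client_hello():
    """A TLS 1.3 ClientHello that masquerades as TLS 1.2."""
    return {
        "legacy_version": TLS_1_2,
        "extensions": {"supported_versions": [TLS_1_3, TLS_1_2]},
    }


def tls12_server_pick(hello):
    """A TLS 1.2 server only reads the legacy field and skips the extension."""
    return min(hello["legacy_version"], TLS_1_2)


def tls13_server_pick(hello):
    """A TLS 1.3 server prefers the extension when it is present."""
    offered = hello["extensions"].get("supported_versions")
    if offered is None:
        return min(hello["legacy_version"], TLS_1_2)
    for version in (TLS_1_3, TLS_1_2):
        if version in offered:
            return version
    return None


hello = build_client_hello()
old_server_choice = tls12_server_pick(hello)
new_server_choice = tls13_server_pick(hello)
```

The same ClientHello therefore yields TLS 1.2 on an older server and TLS 1.3 on a newer one, without the older server ever seeing a version number it does not recognize in the legacy field.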

Protocol grease

How do we prevent ossification? Having learnt from this experience, browsers will regularly advertise dummy versions in this new version field, so that misbehaving servers are caught early on. This is not only done for the new version field, but in many other places in the TLS handshake, and presciently also for the key agreement identifiers. Today, 40% of browsers send two client keyshares: one X25519 keyshare and one bogus 1-byte keyshare, to keep key agreement flexibility.

This behavior is standardized in RFC 8701: Generate Random Extensions And Sustain Extensibility (GREASE) and we call it protocol greasing, as in “greasing the joints” from Adam Langley’s metaphor of protocols having rusty joints in need of oil.

This keyshare grease helps, but it is not perfect, because it is the size of the keyshare that in this case causes the most concern.
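As a rough sketch of keyshare greasing: RFC 8701 reserves sixteen values of the form 0x0A0A, 0x1A1A, through 0xFAFA, and 0x001D is the TLS identifier for X25519. Everything else here is assumed for illustration, including the function names and the random bytes standing in for a real X25519 public key.

```python
import secrets

# The sixteen reserved GREASE values: 0x0A0A, 0x1A1A, ..., 0xFAFA.
GREASE_VALUES = [(((n << 4) | 0x0A) << 8) | ((n << 4) | 0x0A) for n in range(16)]

X25519 = 0x001D


def client_keyshares():
    """Keyshares as a greasing client might send them."""
    grease_group = secrets.choice(GREASE_VALUES)
    return [
        (grease_group, b"\x00"),  # bogus 1-byte keyshare
        (X25519, secrets.token_bytes(32)),  # placeholder for a real public key
    ]


def server_usable_keyshares(keyshares, supported_groups):
    """A well-behaved server ignores keyshares for groups it does not know."""
    return [(group, share) for group, share in keyshares if group in supported_groups]


shares = client_keyshares()
usable = server_usable_keyshares(shares, supported_groups={X25519})
```

A server that crashes or rejects the connection on the unknown identifier is caught immediately, long before a real new key agreement is deployed. The bogus share is only one byte, though, so it does not exercise the handling of large keyshares.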

Fragmented ClientHello

Post-quantum keyshares are big. The two Kyber hybrids are 832 and 1,216 bytes. Compared to that, X25519 is tiny with only 32 bytes. It is not unlikely that some implementations will fail when seeing such large keyshares.

Our biggest concern is with the larger Kyber768 based keyshare. A ClientHello with the smaller 832 byte Kyber512-based keyshare will just barely fit in a typical network packet. On the other hand, the larger 1,216 byte Kyber768-keyshare will typically fragment the ClientHello into two packets.
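The sizes can be checked with a little arithmetic. The keyshare sizes come from the table above; the 1,500-byte MTU, the 60 bytes of IPv6 and TCP headers, and the 500 bytes for the rest of the ClientHello are assumptions picked for illustration, and real values vary by client and network.

```python
X25519_SHARE = 32
KYBER_CLIENT_SHARE = {"Kyber512": 800, "Kyber768": 1184}

# Assumed values, for illustration only.
ASSUMED_MTU = 1500
ASSUMED_IP_TCP_HEADERS = 40 + 20
ASSUMED_OTHER_CLIENTHELLO_BYTES = 500


def hybrid_keyshare_size(kyber_variant):
    """Size of the hybrid client keyshare: X25519 share plus Kyber share."""
    return X25519_SHARE + KYBER_CLIENT_SHARE[kyber_variant]


def packets_needed(keyshare_bytes):
    """Number of packets a ClientHello with this keyshare would occupy."""
    payload_per_packet = ASSUMED_MTU - ASSUMED_IP_TCP_HEADERS
    total = ASSUMED_OTHER_CLIENTHELLO_BYTES + keyshare_bytes
    return -(-total // payload_per_packet)  # ceiling division


sizes = {name: hybrid_keyshare_size(name) for name in KYBER_CLIENT_SHARE}
packets = {name: packets_needed(size) for name, size in sizes.items()}
```

Under these assumptions the Kyber512 hybrid ClientHello fits in one packet with about 100 bytes to spare, while the Kyber768 hybrid spills into a second packet.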

Assembling packets together isn’t free: it requires keeping the partial messages around. Usually this is done transparently by the operating system’s TCP stack, but optimized middleboxes and load balancers that look at each packet separately have to keep track of the connections themselves (and might not).

QUIC

The situation for HTTP/3, which is built on QUIC, is particularly interesting. Instead of a simple port number chosen by the client (as in TCP), a QUIC packet from the client contains a connection ID that is chosen by the server. Think of it as “your reference” and “our reference” in snailmail. This allows a QUIC load-balancer to encode the particular machine handling the connection into the connection ID.

When opening a connection, the QUIC client doesn’t know which connection ID the server would like and sends a random one instead. If the client needs multiple initial packets, such as with a big ClientHello, then the client will use the same random connection ID. Even though multiple initial packets are allowed by the QUIC standard, a QUIC load balancer might not expect this, and won’t be able to refer to an underlying TCP connection.

Performance

Aside from these hard failures, soft failures such as performance degradation are also of concern: if it’s too slow to load, a website might as well have been broken to begin with.

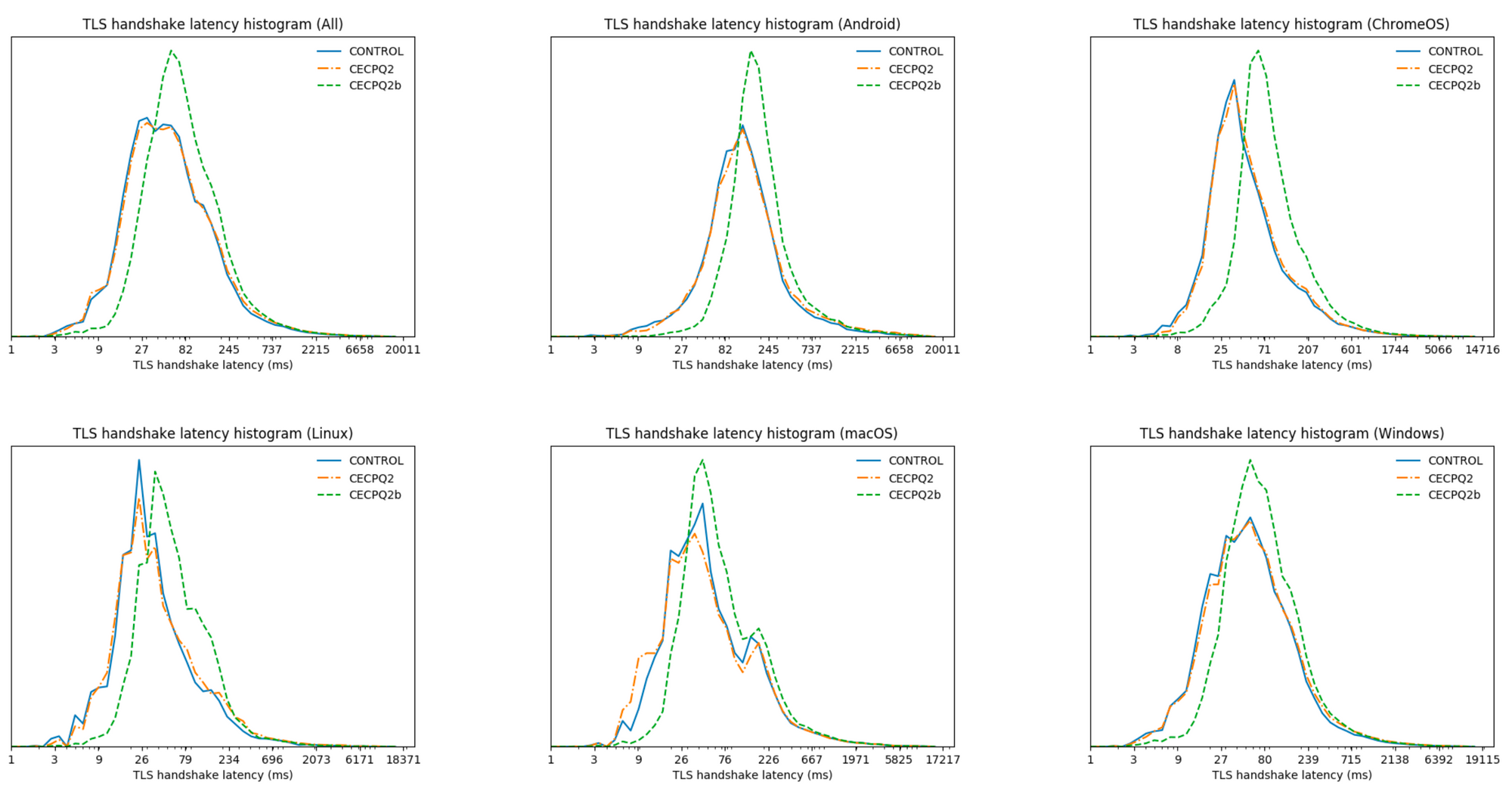

Back in 2019 in a joint experiment with Google, we deployed two post-quantum key agreements: CECPQ2, based on NTRU-HRSS, and CECPQ2b, based on SIKE. NTRU-HRSS is very similar to Kyber: it’s a bit larger and slower. Results from 2019 are very promising: X25519+NTRU-HRSS (orange line) is hard to distinguish from X25519 on its own (blue line).

We will continue to keep a close eye on performance, especially on the tail performance: we want a smooth transition for everyone, from the fastest to the slowest clients on the Internet.

How to help out

The Internet is a very heterogeneous system. To find all issues, we need sufficient numbers of diverse testers. We are working with browsers to add support for these key agreements, but there may not be one of these browsers in every network.

So, to help the Internet out, try and switch a small part of your traffic to Cloudflare domains to use these new key agreement methods. We have open-sourced forks for BoringSSL, Go and quic-go. For BoringSSL and Go, check out the sample code here. If you have any issues, please let us know at [email protected]. We will be discussing any issues and workarounds at the IETF TLS working group.

Outlook

The transition to a post-quantum secure Internet is urgent, but not without challenges. Today we have deployed a preliminary post-quantum key agreement on all our servers — a sizable portion of the Internet — so that we can all start testing the big migration today. We hope that come 2024, when NIST puts a bow on Kyber, we will all have laid the groundwork for a smooth transition to a Post-Quantum Internet.

…..

¹ We only support these post-quantum key agreements in protocols based on TLS 1.3 including HTTP/3. There is one exception: for the moment we disable these hybrid key exchanges for websites in FIPS-mode.

Automatic (secure) transmission: taking the pain out of origin connection security

Post Syndicated from Alex Krivit original https://blog.cloudflare.com/securing-origin-connectivity/

In 2014, Cloudflare set out to encrypt the Internet by introducing Universal SSL. It made getting an SSL/TLS certificate free and easy at a time when doing so was neither free, nor easy. Overnight millions of websites had a secure connection between the user’s browser and Cloudflare.

But getting the connection encrypted from Cloudflare to the customer’s origin server was more complex. Since Cloudflare and all browsers supported SSL/TLS, the connection between the browser and Cloudflare could be instantly secured. But back in 2014 configuring an origin server with an SSL/TLS certificate was complex, expensive, and sometimes not even possible.

And so we relied on users to configure the best security level for their origin server. Later we added a service that detects and recommends the highest level of security for the connection between Cloudflare and the origin server. We also introduced free origin server certificates for customers who didn’t want to get a certificate elsewhere.

Today, we’re going even further. Cloudflare will shortly find the most secure connection possible to our customers’ origin servers and use it, automatically. Doing this correctly, at scale, while not breaking a customer’s service is very complicated. This blog post explains how we are automatically achieving that highest level of security possible for those customers who don’t want to spend time configuring their SSL/TLS set up manually.

Why configuring origin SSL automatically is so hard

When we announced Universal SSL, we knew the backend security of the connection between Cloudflare and the origin was a different and harder problem to solve.

In order to configure the tightest security, customers had to procure a certificate from a third party and upload it to their origin. Then they had to indicate to Cloudflare that we should use this certificate to verify the identity of the server while also indicating the connection security capabilities of their origin. This could be an expensive and tedious process. To help alleviate this high set up cost, in 2015 Cloudflare launched a beta Origin CA service in which we provided free limited-function certificates to customer origin servers. We also provided guidance on how to correctly configure and upload the certificates, so that secure connections between Cloudflare and a customer’s origin could be established quickly and easily.

What we discovered though, is that while this service was useful to customers, it still required a lot of configuration. We didn’t see the change we did with Universal SSL because customers still had to fight with their origins in order to upload certificates and test to make sure that they had configured everything correctly. And when you throw things like load balancers into the mix or servers mapped to different subdomains, handling server-side SSL/TLS gets even more complicated.

Around the same time as that announcement, Let’s Encrypt and other services began offering certificates as a public CA for free, making TLS easier and paving the way for widespread adoption. Let’s Encrypt and Cloudflare had come to the same conclusion: by offering certificates for free, simplifying server configuration for the user, and working to streamline certificate renewal, they could make a tangible impact on the overall security of the web.

The announcements of free and easy to configure certificates correlated with an increase in attention on origin-facing security. Cloudflare customers began requesting more documentation to configure origin-facing certificates and SSL/TLS communication that were performant and intuitive. In response, in 2016 we announced the GA of origin certificate authority to provide cheap and easy origin certificates along with guidance on how to best configure backend security for any website.

The increased customer demand and attention helped pave the way for additional features that focused on backend security on Cloudflare. For example, authenticated origin pull ensures that only HTTPS requests from Cloudflare will receive a response from your origin, preventing an origin response from requests outside of Cloudflare. Another option, Cloudflare Tunnel can be set up to run on the origin servers, proactively establishing secure and private tunnels to the nearest Cloudflare data center. This configuration allows customers to completely lock down their origin servers to only receive requests routed through our network. For customers unable to lock down their origins using this method, we still encourage adopting the strongest possible security when configuring how Cloudflare should connect to an origin server.

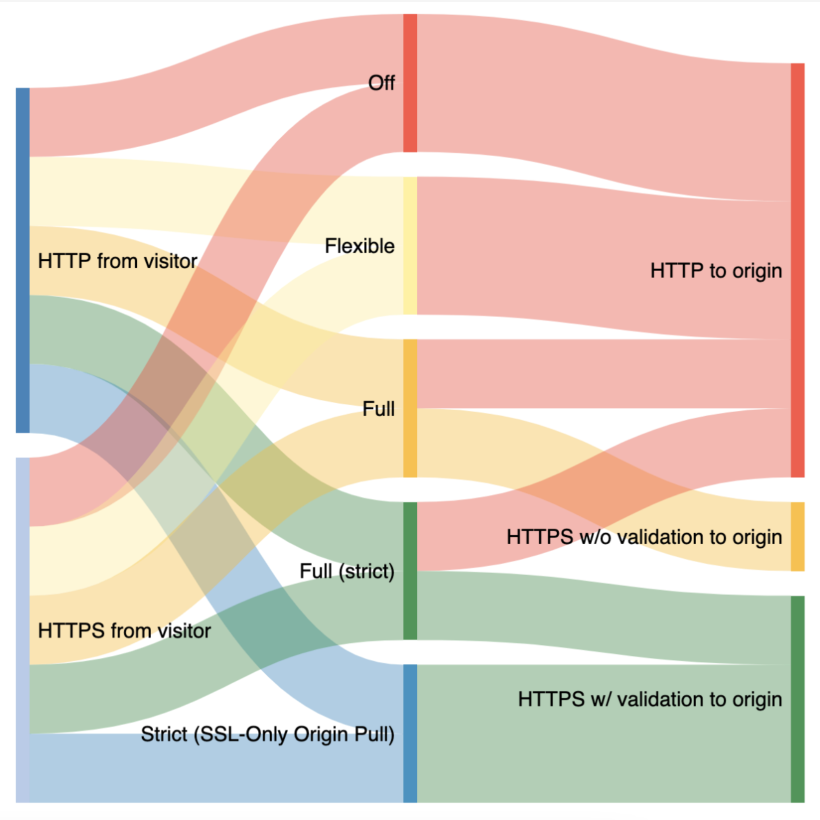

Cloudflare currently offers five options for SSL/TLS configurability that we use when communicating with origins:

- In Off mode, as you might expect, traffic from browsers to Cloudflare and from Cloudflare to origins is not encrypted and will use plain text HTTP.

- In Flexible mode, traffic from browsers to Cloudflare can be encrypted via HTTPS, but traffic from Cloudflare to the site’s origin server is not. This is a common selection for origins that cannot support TLS, even though we recommend upgrading this origin configuration wherever possible. A guide for upgrading can be found here.

- In Full mode, Cloudflare follows whatever is happening with the browser request and uses that same option to connect to the origin. For example, if the browser uses HTTP to connect to Cloudflare, we’ll establish a connection with the origin over HTTP. If the browser uses HTTPS, we’ll use HTTPS to communicate with the origin; however we will not validate the certificate on the origin to prove the identity and trustworthiness of the server.

- In Full (strict) mode, traffic between Cloudflare and the origin follows the same pattern as in Full mode; however, Full (strict) mode adds validation of the origin server’s certificate. The origin certificate can either be issued by a public CA like Let’s Encrypt or by Cloudflare Origin CA.

- In Strict mode, traffic from the browser to Cloudflare that is HTTP or HTTPS will always be connected to the origin over HTTPS with a validation of the origin server’s certificate.
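The five modes above can be summarized as a small decision function. This is only a sketch of the behavior as described in the list, not Cloudflare's implementation; the `OriginConnection` type and the `origin_connection` function are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OriginConnection:
    """How Cloudflare would talk to the origin (illustrative type)."""
    scheme: str                 # "http" or "https"
    validate_certificate: bool  # whether the origin certificate is checked


def origin_connection(mode: str, browser_scheme: str) -> OriginConnection:
    """Map an SSL/TLS mode and the browser's scheme to the origin connection."""
    mode = mode.lower()
    if mode in ("off", "flexible"):
        # The origin is always reached over plain HTTP.
        return OriginConnection("http", False)
    if mode == "full":
        # Mirror the browser's scheme, never validate the certificate.
        return OriginConnection(browser_scheme, False)
    if mode == "full (strict)":
        # Mirror the browser's scheme, validate when HTTPS is used.
        return OriginConnection(browser_scheme, browser_scheme == "https")
    if mode == "strict":
        # Always HTTPS with certificate validation.
        return OriginConnection("https", True)
    raise ValueError(f"unknown SSL/TLS mode: {mode!r}")


if __name__ == "__main__":
    for m in ("Off", "Flexible", "Full", "Full (strict)", "Strict"):
        print(m, origin_connection(m, "http"), origin_connection(m, "https"))
```

Only Strict mode upgrades a plain HTTP browser request to HTTPS on the origin side, which is why it is the most demanding setting for the origin.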

What we have found in a lot of cases is that when customers initially signed up for Cloudflare, the origin they were using could not support the most advanced versions of encryption, resulting in origin-facing communication using unencrypted HTTP. These default values persisted over time, even though the origin has become more capable. We think the time is ripe to re-evaluate the entire concept of default SSL/TLS levels.

That’s why we will reduce the configuration burden for origin-facing security by automatically managing this on behalf of our customers. Cloudflare will provide a zero configuration option for how we will communicate with origins: we will simply look at an origin and use the most-secure option available to communicate with it.

Re-evaluating default SSL/TLS modes is only the beginning. Not only will we automatically upgrade sites to their best security setting, we will also open up all SSL/TLS modes to all plan levels. Historically, Strict mode was reserved for enterprise customers only. This was because we released this mode in 2014 when few people had origins that were able to communicate over SSL/TLS, and we were nervous about customers breaking their configurations. But this is 2022, and we think that Strict mode should be available to anyone who wants to use it. So we will be opening it up to everyone with the launch of the automatic upgrades.

How will automatic upgrading work?

To upgrade the origin-facing security of websites, we first need to determine the highest security level the origin can use. To make this determination, we will use the SSL/TLS Recommender tool that we released a year ago.

The recommender performs a series of requests from Cloudflare to the customer’s origin(s) to determine if the backend communication can be upgraded beyond what is currently configured. The recommender accomplishes this by:

- Crawling the website to collect links on different pages of the site. For websites with large numbers of links, the recommender will only examine a subset. Similarly, for sites where the crawl turns up an insufficient number of links, we augment our results with a sample of links from recent visitors’ requests to the zone. All of this is to get a representative sample of where requests are going in order to know how responses are served from the origin.

- The crawler uses the user agent Cloudflare-SSLDetector and has been added to Cloudflare’s list of known “good bots”.

- Next, the recommender downloads the content of each link over both HTTP and HTTPS. The recommender makes only idempotent GET requests when scanning origin servers to avoid modifying server resource state.

- Following this, the recommender runs a content similarity algorithm to determine if the content collected over HTTP and HTTPS matches.

- If the content that is downloaded over HTTP matches the content downloaded over HTTPS, then it’s known that we can upgrade the security of the website without negative consequences.

- If the website is already configured to Full mode, we will perform a certificate validation (without the additional need for crawling the site) to determine whether it can be updated to Full (strict) mode or higher.

If it can be determined that the customer’s origin is able to be upgraded without breaking, we will upgrade the origin-facing security automatically.
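The comparison step can be sketched roughly as follows. The post does not say which similarity algorithm or threshold the recommender uses, so this example substitutes Python's `difflib.SequenceMatcher` and an arbitrary threshold; `ASSUMED_SIMILARITY_THRESHOLD`, `content_matches`, and `can_upgrade` are hypothetical names.

```python
from difflib import SequenceMatcher
from typing import Sequence, Tuple

# Assumption: the real threshold is not published; 0.95 is a placeholder.
ASSUMED_SIMILARITY_THRESHOLD = 0.95


def content_matches(http_body: str, https_body: str,
                    threshold: float = ASSUMED_SIMILARITY_THRESHOLD) -> bool:
    """Return True when the two response bodies are similar enough."""
    ratio = SequenceMatcher(None, http_body, https_body).ratio()
    return ratio >= threshold


def can_upgrade(samples: Sequence[Tuple[str, str]]) -> bool:
    """Decide whether an origin looks safe to upgrade.

    `samples` holds (http_body, https_body) pairs for the sampled links.
    With no samples at all we stay conservative and do not upgrade.
    """
    return bool(samples) and all(content_matches(h, s) for h, s in samples)


if __name__ == "__main__":
    page = "<html><body>Welcome to example.com</body></html>"
    print(can_upgrade([(page, page)]))
    print(can_upgrade([(page, "502 Bad Gateway")]))
```

Requiring every sampled link to match is a deliberately cautious choice for this sketch: a single page that differs over HTTPS is treated as a reason not to upgrade.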

But that’s not all. Not only are we removing the configuration burden for services on Cloudflare, but we’re also providing more precise security settings by moving from per-zone SSL/TLS settings to per-origin SSL/TLS settings.

The current implementation of the backend SSL/TLS service is related to an entire website, which works well for those with a single origin. For those that have more complex setups however, this can mean that origin-facing security is defined by the lowest capable origin serving a part of the traffic for that service. For example, if a website uses img.example.com and api.example.com, and these subdomains are served by different origins that have different security capabilities, we would not want to limit the SSL/TLS capabilities of both subdomains to the least secure origin. By using our new service, we will be able to set per-origin security more precisely to allow us to maximize the security posture of each origin.
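To make the difference concrete, here is a minimal sketch comparing a single per-zone setting with per-origin settings. The ordering of modes follows the list earlier in this post; the function names and the example capabilities are assumptions made for illustration.

```python
from typing import Dict

# Modes ordered from least to most secure, following the list in this post.
MODES = ["off", "flexible", "full", "full (strict)", "strict"]


def per_zone_mode(capabilities: Dict[str, str]) -> str:
    """One setting for the whole zone: limited by the least capable origin."""
    return min(capabilities.values(), key=MODES.index)


def per_origin_modes(capabilities: Dict[str, str]) -> Dict[str, str]:
    """One setting per origin: each origin uses the best mode it supports."""
    return dict(capabilities)


if __name__ == "__main__":
    # Hypothetical capabilities for the two subdomains from the example above.
    caps = {"img.example.com": "flexible", "api.example.com": "full (strict)"}
    print("per-zone:", per_zone_mode(caps))
    print("per-origin:", per_origin_modes(caps))
```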

The goal of this is to maximize the origin-facing security of everything on Cloudflare. However, if an origin that we attempt to scan blocks the SSL recommender or is non-functional, or if the customer opts out of this service, we will not complete the scans and will not be able to upgrade security. Details on how to opt out will be provided via email announcements soon.

Opting out

There are a number of reasons why someone might want to configure a lower-than-optimal security setting for their website. One common reason customers provide is a fear that having higher security settings will negatively impact the performance of their site. Others may want to set a suboptimal security setting for testing purposes or to debug some behavior. Whatever the reason, we will provide the tools needed to continue to configure the SSL/TLS mode you want, even if that’s different from what we think is the best.

When is this going to happen?

We will begin to roll this change out before the end of the year. If you read this and want to make sure you’re at the highest level of backend security already, we recommend Full (strict) or Strict mode. If you prefer to wait for us to automatically upgrade your origin security for you, please keep your eyes peeled to your inbox for the date we will begin rolling out this change for your group.

At Cloudflare, we believe that the Internet needs to be secure and private. If you’d like to help us achieve that, we’re hiring across the engineering organization.

Introducing post-quantum Cloudflare Tunnel

Post Syndicated from Bas Westerbaan original https://blog.cloudflare.com/post-quantum-tunnel/

Undoubtedly, one of the big themes in IT for the next decade will be the migration to post-quantum cryptography. From tech giants to small businesses: we will all have to make sure our hardware and software is updated so that our data is protected against the arrival of quantum computers. It seems far away, but it’s not a problem for later: any encrypted data captured today (not protected by post-quantum cryptography) can be broken by a sufficiently powerful quantum computer in the future.

Luckily we’re almost there: after a tremendous worldwide effort by the cryptographic community, we know what will be the gold standard of post-quantum cryptography for the next decades. Release date: somewhere in 2024. Hopefully, for most, the transition will be a simple software update then, but it will not be that simple for everyone: not all software is maintained, and it could well be that hardware needs an upgrade as well. Taking a step back, many companies don’t even have a full list of all software running on their network.

For Cloudflare Tunnel customers, this migration will be much simpler: introducing Post-Quantum Cloudflare Tunnel. In this blog post, first we give an overview of how Cloudflare Tunnel works and explain how it can help you with your post-quantum migration. Then we’ll explain how to get started and finish with the nitty-gritty technical details.

Cloudflare Tunnel

With Cloudflare Tunnel you can securely expose a server sitting within an internal network to the Internet by running the cloudflared service next to it. For instance, after having installed cloudflared on your internal network, you can expose your on-prem webapp on the Internet under, say, example.com, so that remote workers can access it from anywhere.

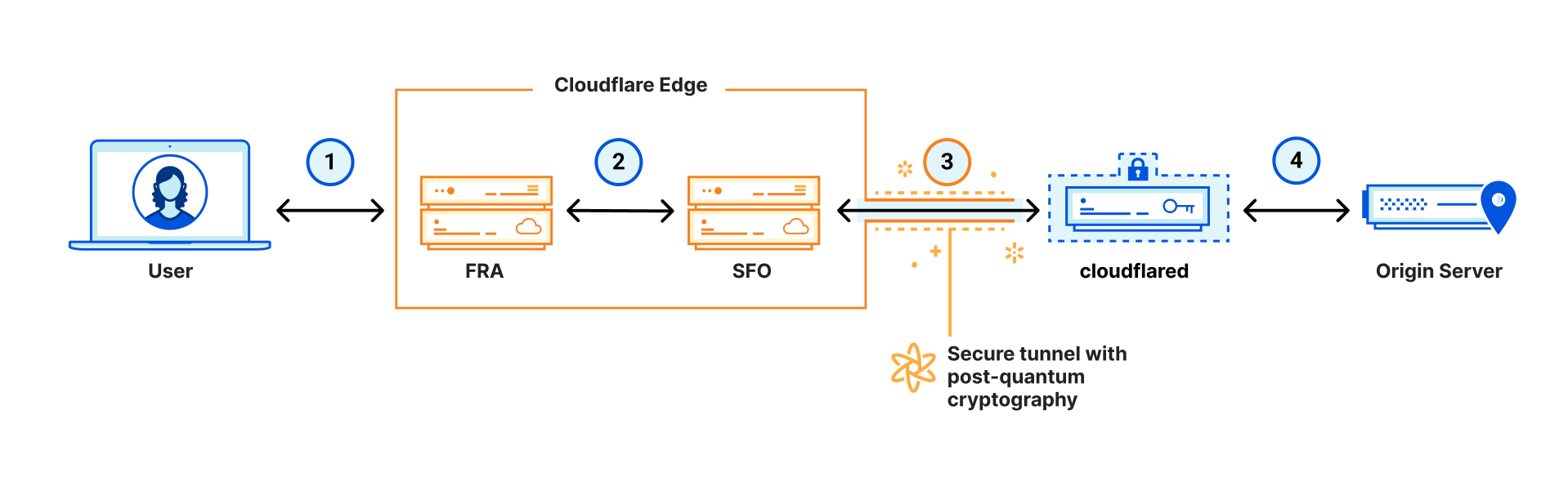

How does it work? cloudflared creates long-running connections to two nearby Cloudflare data centers, for instance San Francisco (connection 3) and one other. When your employee visits your domain, they connect (1) to a Cloudflare server close to them, say in Frankfurt. That server knows that this is a Cloudflare Tunnel and that your cloudflared has a connection to a server in San Francisco, and thus it relays (2) the request to it. In turn, via the reverse connection, the request ends up at cloudflared, which passes it (4) to the webapp via your internal network.

In essence, Cloudflare Tunnel is a simple but convenient tool, but the magic is in what you can do on top of it: you get Cloudflare’s DDoS protection for free, fine-grained access control with Cloudflare Access (even if the application didn’t support it), and request logs, just to name a few. And let’s not forget the matter at hand:

Post-quantum tunnels

Our goal is to make it easy for everyone to have a fully post-quantum secure connection from users to origin. For this, Post-Quantum Cloudflare Tunnel is a powerful tool, because with it, your users can benefit from a post-quantum secure connection without upgrading your application (connection 4 in the diagram).

Today, we make two important steps towards this goal: cloudflared 2022.9.1 adds the --post-quantum flag, which, when given, makes the connection from cloudflared to our network (connection 3) post-quantum secure.

Also today, we have announced support for post-quantum browser connections (connection 1).

We aren’t there yet: browsers (and other HTTP clients) do not support the post-quantum security offered by our network, yet, and we still have to make the connections between our data centers (connection 2) post-quantum secure.

An attacker only needs to have access to one vulnerable connection, but attackers don’t have access everywhere: with every connection we make post-quantum secure, we remove one opportunity for compromise.

We are eager to make post-quantum tunnels the default, but for now it is a beta feature. The reason is that the cryptography used and its integration into the network protocol are not yet final. Making post-quantum the default now would require users to update cloudflared more often than we can reasonably expect them to.

Getting started

Are frequent updates to cloudflared not a problem for you? Then please do give post-quantum Cloudflare Tunnel a try. Make sure you’re on at least 2022.9.1 and simply run cloudflared with the --post-quantum flag:

$ cloudflared tunnel run --post-quantum tunnel-name

2022-09-23T11:44:42Z INF Starting tunnel tunnelID=[...]

2022-09-23T11:44:42Z INF Version 2022.9.1

2022-09-23T11:44:42Z INF GOOS: darwin, GOVersion: go1.19.1, GoArch: amd64

2022-09-23T11:44:42Z INF Settings: map[post-quantum:true pq:true]

2022-09-23T11:44:42Z INF Generated Connector ID: [...]

2022-09-23T11:44:42Z INF cloudflared will not automatically update if installed by a package manager.

2022-09-23T11:44:42Z INF Initial protocol quic

2022-09-23T11:44:42Z INF Using experimental hybrid post-quantum key agreement X25519Kyber768Draft00

2022-09-23T11:44:42Z INF Starting metrics server on 127.0.0.1:53533/metrics

2022-09-23T11:44:42Z INF Connection [...] registered connIndex=0 ip=[...] location=AMS

2022-09-23T11:44:43Z INF Connection [...] registered connIndex=1 ip=[...] location=AMS

2022-09-23T11:44:44Z INF Connection [...] registered connIndex=2 ip=[...] location=AMS

2022-09-23T11:44:45Z INF Connection [...] registered connIndex=3 ip=[...] location=AMS

If you run cloudflared as a service, you can turn on post-quantum by adding post-quantum: true to the tunnel configuration file. Conveniently, the cloudflared service will automatically update itself if not installed by a package manager.

If, for some reason, creating a post-quantum tunnel fails, you’ll see an error message like

2022-09-22T17:30:39Z INF Starting tunnel tunnelID=[...]

2022-09-22T17:30:39Z INF Version 2022.9.1

2022-09-22T17:30:39Z INF GOOS: darwin, GOVersion: go1.19.1, GoArch: amd64

2022-09-22T17:30:39Z INF Settings: map[post-quantum:true pq:true]

2022-09-22T17:30:39Z INF Generated Connector ID: [...]

2022-09-22T17:30:39Z INF cloudflared will not automatically update if installed by a package manager.

2022-09-22T17:30:39Z INF Initial protocol quic

2022-09-22T17:30:39Z INF Using experimental hybrid post-quantum key agreement X25519Kyber512Draft00

2022-09-22T17:30:39Z INF Starting metrics server on 127.0.0.1:55889/metrics

2022-09-22T17:30:39Z INF

===================================================================================

You are hitting an error while using the experimental post-quantum tunnels feature.

Please check:

https://pqtunnels.cloudflareresearch.com

for known problems.

===================================================================================

2022-09-22T17:30:39Z ERR Failed to create new quic connection error="failed to dial to edge with quic: CRYPTO_ERROR (0x128): tls: handshake failure" connIndex=0 ip=[...]

When the post-quantum flag is given, cloudflared will not fall back to a non post-quantum connection.

What to look for

The setup phase is the crucial part: once established, the tunnel is the same as a normal tunnel. That means that performance and reliability should be identical once the tunnel is established.

The post-quantum cryptography we use is very fast, but requires roughly a kilobyte of extra data to be exchanged during the handshake. The difference will be hard to notice in practice.

Our biggest concern is that some network equipment/middleboxes might be confused by the bigger handshake. If the post-quantum Cloudflare Tunnel isn’t working for you, we’d love to hear about it. Contact us at [email protected] and tell us which middleboxes or ISP you’re using.

Under the hood

When the --post-quantum flag is given, cloudflared restricts itself to the QUIC transport for the tunnel connection to our network and will only allow the post-quantum hybrid key exchanges X25519Kyber512Draft00 and X25519Kyber768Draft00 with TLS identifiers 0xfe30 and 0xfe31 respectively. These are hybrid key exchanges between the classical X25519 and the post-quantum secure Kyber. Thus, on the off-chance that Kyber turns out to be insecure, we can still rely on the non-post quantum security of X25519. These are the same key exchanges supported on our network.

cloudflared randomly picks one of these two key exchanges. The reason is that the latter usually requires two initial packets for the TLS ClientHello whereas the former only requires one. That allows us to test whether a fragmented ClientHello causes trouble.
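A rough size calculation shows why the two key exchanges behave differently. The client key share is the 32-byte X25519 public key followed by the Kyber public key (800 bytes for Kyber512, 1184 bytes for Kyber768). The packet budget and the size of the rest of the ClientHello below are assumptions chosen for illustration, not values taken from cloudflared.

```python
import secrets

X25519_PUBLIC_KEY = 32                                   # bytes
KYBER_PUBLIC_KEY = {"Kyber512": 800, "Kyber768": 1184}   # bytes

# Name -> (TLS identifier, Kyber parameter set), as given in the text above.
KEY_EXCHANGES = {
    "X25519Kyber512Draft00": (0xFE30, "Kyber512"),
    "X25519Kyber768Draft00": (0xFE31, "Kyber768"),
}

# Assumptions for illustration only: usable bytes per initial packet and
# the size of everything in the ClientHello besides the key share.
ASSUMED_PACKET_BUDGET = 1200
ASSUMED_OTHER_CLIENTHELLO_BYTES = 250


def client_key_share_size(name: str) -> int:
    """Size in bytes of the hybrid key share sent by the client."""
    _, kyber = KEY_EXCHANGES[name]
    return X25519_PUBLIC_KEY + KYBER_PUBLIC_KEY[kyber]


def initial_packets_needed(name: str) -> int:
    """Packets needed for the ClientHello under the assumed budget."""
    total = client_key_share_size(name) + ASSUMED_OTHER_CLIENTHELLO_BYTES
    return -(-total // ASSUMED_PACKET_BUDGET)  # ceiling division


def pick_key_exchange() -> str:
    """Pick one of the two key exchanges at random, as the text describes."""
    return secrets.choice(sorted(KEY_EXCHANGES))


if __name__ == "__main__":
    for name, (tls_id, _) in KEY_EXCHANGES.items():
        print(name, hex(tls_id), client_key_share_size(name),
              initial_packets_needed(name))
    print("picked:", pick_key_exchange())
```

Under these assumptions the Kyber512 hybrid fits in one packet while the Kyber768 hybrid spills into a second, which matches the behavior described above.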

When cloudflared fails to set up the post-quantum connection, it will report the attempted key exchange, cloudflared version and error to pqtunnels.cloudflareresearch.com so that we have visibility into network issues. Have a look at that page for updates on our post-quantum tunnel deployment.

The control connection and authentication of the tunnel between cloudflared and our network are not post-quantum secure yet. This is less urgent than the store-now-decrypt-later issue of the data on the tunnel itself.

We have open-sourced support for these post-quantum QUIC key exchanges in Go.

Outlook

In the coming decade the industry will roll out post-quantum data protection. Some cases will be as simple as a software update and others will be much more difficult. Post-Quantum Cloudflare Tunnel will secure the connection between Cloudflare’s network and your origin in a simple and user-friendly way — an important step towards the Post-Quantum Internet, so that everyone may continue to enjoy a private and secure Internet.

Best of the History Guy: Presidents

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=hfHPCspCmKA

Detecting Deepfake Audio by Modeling the Human Acoustic Tract

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/10/detecting-deepfake-audio-by-modeling-the-human-acoustic-tract.html

This is interesting research:

In this paper, we develop a new mechanism for detecting audio deepfakes using techniques from the field of articulatory phonetics. Specifically, we apply fluid dynamics to estimate the arrangement of the human vocal tract during speech generation and show that deepfakes often model impossible or highly-unlikely anatomical arrangements. When parameterized to achieve 99.9% precision, our detection mechanism achieves a recall of 99.5%, correctly identifying all but one deepfake sample in our dataset.

From an article by two of the researchers:

The first step in differentiating speech produced by humans from speech generated by deepfakes is understanding how to acoustically model the vocal tract. Luckily scientists have techniques to estimate what someone—or some being such as a dinosaur—would sound like based on anatomical measurements of its vocal tract.

We did the reverse. By inverting many of these same techniques, we were able to extract an approximation of a speaker’s vocal tract during a segment of speech. This allowed us to effectively peer into the anatomy of the speaker who created the audio sample.

From here, we hypothesized that deepfake audio samples would fail to be constrained by the same anatomical limitations humans have. In other words, the analysis of deepfaked audio samples simulated vocal tract shapes that do not exist in people.

Our testing results not only confirmed our hypothesis but revealed something interesting. When extracting vocal tract estimations from deepfake audio, we found that the estimations were often comically incorrect. For instance, it was common for deepfake audio to result in vocal tracts with the same relative diameter and consistency as a drinking straw, in contrast to human vocal tracts, which are much wider and more variable in shape.

This is, of course, not the last word. Deepfake generators will figure out how to use these techniques to create harder-to-detect fake voices. And the deepfake detectors will figure out another, better, detection technique. And the arms race will continue.

Slashdot thread.

ICYMI: Serverless Q3 2022

Post Syndicated from David Boyne original https://aws.amazon.com/blogs/compute/serverless-icymi-q3-2022/

Welcome to the 19th edition of the AWS Serverless ICYMI (in case you missed it) quarterly recap. Every quarter, we share all the most recent product launches, feature enhancements, blog posts, webinars, Twitch live streams, and other interesting things that you might have missed!

In case you missed our last ICYMI, check out what happened last quarter here.

AWS Lambda

AWS has now introduced tiered pricing for Lambda. With tiered pricing, customers who run large workloads on Lambda can automatically save on their monthly costs. Tiered pricing is based on compute duration measured in GB-seconds. The tiered pricing breaks down as follows:

With tiered pricing, you can save on the compute duration portion of your monthly Lambda bills. This allows you to architect, build, and run large-scale applications on Lambda and take advantage of these tiered prices automatically. To learn more about Lambda cost optimizations, watch the new serverless office hours video.
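As a rough illustration of how a tiered charge is computed, the sketch below walks usage through successive tiers. The tier sizes and prices in `ASSUMED_TIERS` are made-up round numbers, not AWS's published rates, and `tiered_cost` is a hypothetical helper.

```python
from typing import List, Optional, Tuple

# Placeholder tiers: (tier size in GB-seconds, price per GB-second).
# A size of None means "everything beyond the previous tiers".
# These numbers are illustrative only and are NOT AWS's actual prices.
ASSUMED_TIERS: List[Tuple[Optional[int], float]] = [
    (1_000, 0.010),
    (2_000, 0.009),
    (None, 0.008),
]


def tiered_cost(gb_seconds: float,
                tiers: List[Tuple[Optional[int], float]] = ASSUMED_TIERS) -> float:
    """Charge each slice of usage at the price of the tier it falls into."""
    remaining = gb_seconds
    cost = 0.0
    for size, price in tiers:
        used = remaining if size is None else min(remaining, size)
        cost += used * price
        remaining -= used
        if remaining <= 0:
            break
    return cost


if __name__ == "__main__":
    for usage in (500, 1_000, 4_000):
        print(usage, round(tiered_cost(usage), 4))
```

Only the usage above each threshold is billed at the lower rate, so the saving grows gradually with volume rather than applying to the whole bill at once.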

Developers are using AWS SAM CLI to simplify serverless development making it easier to build, test, package, and deploy their serverless applications. For JavaScript and TypeScript developers, you can now simplify your Lambda development further using esbuild in the AWS SAM CLI.

Together with esbuild and SAM Accelerate, you can rapidly iterate on your code changes in the AWS Cloud. You can approximate the same levels of productivity as when testing locally, while testing against a realistic application environment in the cloud. esbuild helps simplify Lambda development with support for tree shaking, minification, source maps, and loaders. To learn more about this feature, read the documentation.

Lambda announced support for Attribute-Based Access Control (ABAC). ABAC is designed to simplify permission management using access permissions based on tags. These can be attached to IAM resources, such as IAM users, and roles. ABAC support for Lambda functions allows you to scale your permissions as your organization innovates. It gives granular access to developers without requiring a policy update when a user or project is added, removed, or updated. To learn more about ABAC, read about ABAC for Lambda.
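The idea behind tag-based permissions can be sketched in a few lines. This is a simplified model, not IAM's evaluation logic: in a real policy the comparison would be expressed with condition keys such as aws:PrincipalTag and aws:ResourceTag. The `abac_allows` function and the `project` tag key are assumptions for illustration.

```python
from typing import Dict


def abac_allows(principal_tags: Dict[str, str],
                resource_tags: Dict[str, str],
                tag_key: str = "project") -> bool:
    """Allow access only when principal and resource carry the same tag value."""
    value = principal_tags.get(tag_key)
    return value is not None and resource_tags.get(tag_key) == value


if __name__ == "__main__":
    developer = {"project": "checkout"}
    print(abac_allows(developer, {"project": "checkout"}))
    print(abac_allows(developer, {"project": "billing"}))
```

Adding a new function to a project then only requires tagging it; the access rule itself does not change.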

AWS Lambda Powertools is an open-source library to help customers discover and incorporate serverless best practices more easily. Powertools for TypeScript is now generally available and currently focused on three observability features: distributed tracing (Tracer), structured logging (Logger), and asynchronous business and application metrics (Metrics). Powertools is helping builders around the world, with more than 10M downloads. It is also available for Python and Java.

To learn more:

- Watch AWS Lambda Powertools for TypeScript/Node.js on Serverless office hours

- Explore the documentation for Lambda Powertools and utilities for Python, Java and TypeScript.

AWS Step Functions

Amazon States Language (ASL) provides a set of functions known as intrinsics that perform basic data transformations. Customers have asked for additional intrinsics to perform more data transformation tasks, such as formatting JSON strings, creating arrays, generating UUIDs, and encoding data. Step Functions has now added 14 new intrinsic functions, which can be grouped into six categories:

- Intrinsics for arrays

- Intrinsics for JSON data manipulation

- Intrinsics for data encoding and decoding

- Intrinsics for math operations

- Intrinsic for string operations

- Intrinsic for unique identifier generation

Intrinsic functions allow you to reduce the use of other services to perform basic data manipulations in your workflow. Read the release blog for use-cases and more details.
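As an illustration, the sketch below builds a Pass state that uses one intrinsic from several of these categories and prints it as ASL JSON. The state and the input field names ($.items, $.payload, $.count, $.csv) are hypothetical; only the intrinsic function names are taken from Step Functions.

```python
import json

# A Pass state whose Parameters call intrinsic functions. Keys ending in
# ".$" tell Step Functions to evaluate the value instead of using it as text.
pass_state = {
    "Type": "Pass",
    "Parameters": {
        "requestId.$": "States.UUID()",                     # unique identifier
        "batches.$": "States.ArrayPartition($.items, 2)",   # arrays
        "encoded.$": "States.Base64Encode($.payload)",      # encoding
        "total.$": "States.MathAdd($.count, 1)",            # math
        "parts.$": "States.StringSplit($.csv, ',')",        # strings
    },
    "End": True,
}

rendered = json.dumps(pass_state, indent=2)

if __name__ == "__main__":
    print(rendered)
```

Each of these transformations would otherwise typically need a separate task, such as a small Lambda function, in the workflow.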

Step Functions expanded its AWS SDK integrations with support for Amazon Pinpoint API 2.0, AWS Billing Conductor, Amazon GameSparks, and 195 more AWS API actions. This brings the total to 223 AWS Services and 10,000+ API Actions.

Amazon EventBridge

EventBridge released support for bidirectional event integrations with Salesforce, allowing customers to consume Salesforce events directly into their AWS accounts. Customers can also utilize API Destinations to send EventBridge events back to Salesforce, completing the bidirectional event integrations between Salesforce and AWS.

EventBridge also released the ability to start receiving events from GitHub, Stripe, and Twilio using quick starts. Customers can subscribe to events from these SaaS applications and receive them directly onto their EventBridge event bus for further processing. With Quick Starts, you can use AWS CloudFormation templates to create HTTP endpoints for your event bus that are configured with security best practices.

To learn more:

- Read the feature announcement for Amazon EventBridge Salesforce integration

- Watch the EventBridge team talk through Salesforce integration on serverless office hours

- Learn how to start receiving events from GitHub, Stripe, and Twilio with quick starts.

Amazon DynamoDB

DynamoDB now supports bulk imports from Amazon S3 into new DynamoDB tables. You can use bulk imports to help you migrate data from other systems, load test your applications, facilitate data sharing between tables and accounts, or simplify your disaster recovery and business continuity plans. Bulk imports support CSV, DynamoDB JSON, and Amazon Ion as input formats. You can get started with DynamoDB import via API calls or the AWS Management Console. To learn more, read the documentation or follow this guide.

DynamoDB now supports up to 100 actions per transaction. With Amazon DynamoDB transactions, you can group multiple actions together and submit them as a single all-or-nothing operation. The maximum number of actions in a single transaction has now increased from 25 to 100. The previous limit of 25 actions per transaction would sometimes require writing additional code to break transactions into multiple parts. Now with 100 actions per transaction, builders will encounter this limit much less frequently. To learn more about best practices for transactions, read the documentation.
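The kind of additional code mentioned above usually looks like a chunking helper. The sketch below is a generic example with hypothetical names; it does not call DynamoDB, and the action dictionaries are placeholders.

```python
from typing import Any, Dict, List

MAX_ACTIONS_PER_TRANSACTION = 100  # the new limit; it was previously 25


def chunk_actions(actions: List[Dict[str, Any]],
                  limit: int = MAX_ACTIONS_PER_TRANSACTION
                  ) -> List[List[Dict[str, Any]]]:
    """Split a list of transaction actions into groups of at most `limit`."""
    if limit < 1:
        raise ValueError("limit must be at least 1")
    return [actions[i:i + limit] for i in range(0, len(actions), limit)]


if __name__ == "__main__":
    # Placeholder actions; real ones would be Put, Update, Delete or
    # ConditionCheck entries.
    actions = [{"Put": {"id": n}} for n in range(250)]
    print([len(c) for c in chunk_actions(actions)])
    print(len(chunk_actions(actions, limit=25)))
```

Note that each chunk is its own all-or-nothing operation, so splitting gives up atomicity across chunks. The higher limit matters because more workloads now fit in a single transaction.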

Amazon SNS

SNS has introduced the public preview of message data protection to help customers discover and protect sensitive data in motion without writing custom code. With message data protection for SNS, you can scan messages in real time for PII/PHI data and receive audit reports containing scan results. You can also prevent applications from receiving sensitive data by blocking inbound messages to an SNS topic or outbound messages to an SNS subscription. These scans include people’s names, addresses, social security numbers, credit card numbers, and prescription drug codes.

To learn more:

- Read the introduction blog for message data protection with Amazon SNS

- Read the documentation for message data protection

EDA Day – London 2022

The Serverless DA team hosted the world’s first event-driven architecture (EDA) day in London on September 1. This brought together prominent figures in the event-driven architecture community, AWS and customer speakers, and AWS product leadership from EventBridge and Step Functions.

EDA Day covered 13 sessions, 3 workshops, and a Q&A panel. The conference was keynoted by Gregor Hohpe and speakers included Sheen Brisals and Sarah Hamilton from Lego, Toli Apostolidis from Cinch, David Boyne and Marcia Villalba from Serverless DA, and the AWS product team leadership for the panel. Customers could also interact with EDA experts at the Serverlesspresso bar and the Ask the Experts whiteboard.

Serverless snippets collection added to Serverless Land

Serverless Land is a website that is maintained by the Serverless Developer Advocate team to help you build with workshops, patterns, blogs, and videos. The team has extended Serverless Land and introduced the new AWS Serverless snippets collection. Builders can use serverless snippets to find and integrate tools, code examples, and Amazon CloudWatch Logs Insights queries to help with their development workflow.

Serverless Blog Posts

July

Jul 13 – Optimizing Node.js dependencies in AWS Lambda

Jul 15 – Simplifying serverless best practices with AWS Lambda Powertools for TypeScript

Jul 15 – Creating a serverless Apache Kafka publisher using AWS Lambda

Jul 18 – Understanding AWS Lambda scaling and throughput

Jul 19 – Introducing Amazon CodeWhisperer in the AWS Lambda console (In preview)

Jul 19 – Scaling AWS Lambda permissions with Attribute-Based Access Control (ABAC)

Jul 25 – Migrating mainframe JCL jobs to serverless using AWS Step Functions

Jul 28 – Using AWS Lambda to run external transactions on Db2 for IBM i

August

Aug 1 – Using certificate-based authentication for iOS applications with Amazon SNS

Aug 4 – Introducing tiered pricing for AWS Lambda

Aug 5 – Securely retrieving secrets with AWS Lambda

Aug 8 – Estimating cost for Amazon SQS message processing using AWS Lambda

Aug 9 – Building AWS Lambda governance and guardrails

Aug 11 – Introducing the new AWS Serverless Snippets Collection

Aug 12 – Introducing bidirectional event integrations with Salesforce and Amazon EventBridge

Aug 17 – Using custom consumer group ID support for AWS Lambda event sources for MSK and self-managed Kafka

Aug 24 – Speeding up incremental changes with AWS SAM Accelerate and nested stacks

Aug 29 – Deploying AWS Lambda functions using AWS Controllers for Kubernetes (ACK)

Aug 30 – Building cost-effective AWS Step Functions workflows

September

Sep 05 – Introducing new intrinsic functions for AWS Step Functions

Sep 08 – Introducing message data protection for Amazon SNS

Sep 14 – Lifting and shifting a web application to AWS Serverless: Part 1

Sep 14 – Lifting and shifting a web application to AWS Serverless: Part 2

Videos

Serverless Office Hours – Tues 10AM PT

Weekly live virtual office hours. In each session we talk about a specific topic or technology related to serverless and open it up to helping you with your real serverless challenges and issues. Ask us anything you want about serverless technologies and applications.

YouTube: youtube.com/serverlessland

Twitch: twitch.tv/aws

July

Jul 5 – AWS SAM Accelerate GA + more!

Jul 12 – Infrastructure as actual code

Jul 19 – The AWS Step Functions Workflows Collection

Jul 26 – AWS Lambda Attribute-Based Access Control (ABAC)

August

Aug 2 – AWS Lambda Powertools for TypeScript/Node.js

Aug 9 – AWS CloudFormation Hooks

Aug 16 – Java on Lambda best-practices

Aug 30 – Alex DeBrie: DynamoDB Misconceptions

September

Sep 06 – AWS Lambda Cost Optimization

Sep 13 – Amazon EventBridge Salesforce integration

Sep 20 – .NET on AWS Lambda best practices

FooBar Serverless YouTube channel

Marcia Villalba frequently publishes new videos on her popular serverless YouTube channel. You can view all of Marcia’s videos at https://www.youtube.com/c/FooBar_codes.

July

Jul 7 – Amazon Cognito – Add authentication and authorization to your web apps

Jul 14 – Add Amazon Cognito to an existing application – NodeJS-Express and React

Jul 21 – Introduction to Amazon CloudFront – Add CDN to your applications

Jul 28 – Add Amazon S3 storage and use a CDN in an existing application

August

Aug 04 – Testing serverless application locally – Demo with Node.js, Express, and React

Aug 11 – Building Amazon CloudWatch dashboards with AWS CDK

Aug 19 – Let’s code – Lift and Shift migration to Serverless of Node.js, Express, React and Mongo app

Aug 25 – Let’s code – Lift and Shift migration to Serverless, migrating Authentication and Authorization

Aug 29 – Deploying AWS Lambda functions using AWS Controllers for Kubernetes (ACK)

September

Sep 1 – Run Artillery in a Lambda function | Load test your serverless applications

Sep 8 – Let’s code – Lift and Shift migration to Serverless, migrating Storage with Amazon S3 and CloudFront

Sep 15 – What are Event-Driven Architectures? Why we care?

Sep 22 – Queues – Point to Point Messaging – Exploring Event-Driven Patterns

Still looking for more?

The Serverless landing page has more information. The Lambda resources page contains case studies, webinars, whitepapers, customer stories, reference architectures, and even more Getting Started tutorials.

You can also follow the Serverless Developer Advocacy team on Twitter to see the latest news, follow conversations, and interact with the team.

- Eric Johnson: @edjgeek

- James Beswick: @jbesw

- Ben Smith: @benjamin_l_s

- Julian Wood: @julian_wood

- Marcia Villalba: @mavi888uy

- David Boyne: @boyney123

Museums: Last Week Tonight with John Oliver (HBO)

Post Syndicated from LastWeekTonight original https://www.youtube.com/watch?v=eJPLiT1kCSM

Kernel 6.0 released

Post Syndicated from original https://lwn.net/Articles/910087/

Linus has released the 6.0 kernel as expected.

So, as is hopefully clear to everybody, the major version number change is more about me running out of fingers and toes than it is about any big fundamental changes. But of course there’s a lot of various changes in 6.0 – we’ve got over 15k non-merge commits in there in total, after all, and as such 6.0 is one of the bigger releases at least in numbers of commits in a while.

Headline features in 6.0 include a number of io_uring improvements including support for buffered writes to XFS filesystems and zero-copy network transmission, an io_uring-based block driver mechanism, the runtime verification subsystem, and much more; see the LWN merge-window summaries (part 1, part 2) for more information.

Battery Life

Post Syndicated from original https://xkcd.com/2680/

Debian’s firmware vote results

Post Syndicated from original https://lwn.net/Articles/910065/

The results are in on the Debian project’s general-resolution vote regarding non-free firmware in the installer image. The winning option allows the installer image to include firmware necessary to use the system:

We will include non-free firmware packages from the “non-free-firmware” section of the Debian archive on our official media (installer images and live images). The included firmware binaries will normally be enabled by default where the system determines that they are required, but where possible we will include ways for users to disable this at boot (boot menu option, kernel command line etc.).

The vote also changes the Debian Social Contract to make it clear that including non-free firmware in this manner is allowed.

Best Ultra Short Throw Projectors 2022 || AWOL LTV-3500, FORMOVIE Theater, XGIMI AURA

Post Syndicated from The Hook Up original https://www.youtube.com/watch?v=od1W43Oua4w

Tips to “Bivol”: €15 in hand from an SUV. How they vote for DPS in Moldova

Post Syndicated from Николай Марченко original https://bivol.bg/%D0%BF%D0%BE-e15-%D0%BD%D0%B0-%D1%80%D1%8A%D0%BA%D0%B0-%D0%BE%D1%82-%D0%B4%D0%B6%D0%B8%D0%BF%D0%BA%D0%B0-%D0%BA%D0%B0%D0%BA-%D0%B3%D0%BB%D0%B0%D1%81%D1%83%D0%B2%D0%B0%D1%82-%D0%B7%D0%B0-%D0%B4%D0%BF.html

The price of a vote for the Movement for Rights and Freedoms (DPS) in the Republic of Moldova has not changed. Just as in 2021, in the Autonomous Republic of Gagauzia, populated by…

Bedknobs

Post Syndicated from Oglaf! -- Comics. Often dirty. original https://www.oglaf.com/bedknobs/

Fuji 100s vs Hasselblad X2D – 102mp Medium Format

Post Syndicated from Matt Granger original https://www.youtube.com/watch?v=V_WqYcx1Ps4

Christina Ho | Be Bold & Fly with Passion | Talks at Google

Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=1PN2I_6DoAI

The Madness of Vintage Computer Festival Midwest 2022

Post Syndicated from LGR original https://www.youtube.com/watch?v=bICt3WRH4Bs