Post Syndicated from Explosm.net original http://explosm.net/comics/5966/

New Cyanide and Happiness Comic

Post Syndicated from Jeremy Ber original https://aws.amazon.com/blogs/big-data/kinesis-data-firehose-now-supports-dynamic-partitioning-to-amazon-s3/

Amazon Kinesis Data Firehose provides a convenient way to reliably load streaming data into data lakes, data stores, and analytics services. It can capture, transform, and deliver streaming data to Amazon Simple Storage Service (Amazon S3), Amazon Redshift, Amazon Elasticsearch Service, generic HTTP endpoints, and service providers like Datadog, New Relic, MongoDB, and Splunk. It is a fully managed service that automatically scales to match the throughput of your data and requires no ongoing administration. It can also batch, compress, transform, and encrypt your data streams before loading, which minimizes the amount of storage used and increases security.

Customers who use Amazon Kinesis Data Firehose often want to partition their incoming data dynamically based on information that is contained within each record, before sending the data to a destination for analysis. An example of this would be segmenting incoming Internet of Things (IoT) data based on what type of device generated it: Android, iOS, FireTV, and so on. Previously, customers would need to run an entirely separate job to repartition their data after it lands in Amazon S3 to achieve this functionality.

Kinesis Data Firehose data partitioning simplifies the ingestion of streaming data into Amazon S3 data lakes, by automatically partitioning data in transit before it’s delivered to Amazon S3. This makes the datasets immediately available for analytics tools to run their queries efficiently and enhances fine-grained access control for data. For example, marketing automation customers can partition data on the fly by customer ID, which allows customer-specific queries to query smaller datasets and deliver results faster. IT operations or security monitoring customers can create groupings based on event timestamps that are embedded in logs, so they can query smaller datasets and get results faster.

In this post, we’ll discuss the new Kinesis Data Firehose dynamic partitioning feature, how to create a dynamic partitioning delivery stream, and walk through a real-world scenario where dynamically partitioning data that is delivered into Amazon S3 could improve the performance and scalability of the overall system. We’ll then discuss some best practices around what makes a good partition key, how to handle nested fields, and integrating with Lambda for preprocessing and error handling. Finally, we’ll cover the limits and quotas of Kinesis Data Firehose dynamic partitioning, and some pricing scenarios.

First, let’s discuss why you might want to use dynamic partitioning instead of Kinesis Data Firehose’s standard timestamp-based data partitioning. Consider a scenario where your analytical data lake in Amazon S3 needs to be filtered according to a specific field, such as a customer identification—customer_id. Using the standard timestamp-based strategy, your data will look something like this, where <DOC-EXAMPLE-BUCKET> stands for your bucket name.

The difficulty in identifying particular customers within this array of data is that a full file scan will be required to locate any individual customer. Now consider the data partitioned by the identifying field, customer_id.

In this data partitioning scheme, you only need to scan one folder to find data related to a particular customer. This is how analytics query engines like Amazon Athena, Amazon Redshift Spectrum, or Presto are designed to work: they prune unneeded partitions during query execution, thereby reducing the amount of data that is scanned and transferred.
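To make the two layouts concrete, the following is a minimal sketch of how object keys might look under each scheme. The function names, the object name, and the customer_id=<value> folder naming are assumptions made for illustration; the timestamp layout assumes a UTC YYYY/MM/dd/HH prefix.

```python
from datetime import datetime, timezone


def timestamp_key(bucket: str, arrival: datetime, object_name: str) -> str:
    """Key under a timestamp-based prefix (assumed here to be UTC YYYY/MM/dd/HH)."""
    return f"s3://{bucket}/{arrival:%Y/%m/%d/%H}/{object_name}"


def customer_key(bucket: str, customer_id: str, object_name: str) -> str:
    """Key under a prefix derived from the record's customer_id field."""
    return f"s3://{bucket}/customer_id={customer_id}/{object_name}"


if __name__ == "__main__":
    arrival = datetime(2021, 8, 31, 14, 5, tzinfo=timezone.utc)
    # Every customer's records share the same hourly folder.
    print(timestamp_key("DOC-EXAMPLE-BUCKET", arrival, "part-0001"))
    # Each customer gets its own folder, so a query engine can skip the rest.
    print(customer_key("DOC-EXAMPLE-BUCKET", "1234567890", "part-0001"))
```

With the second layout, a query that filters on customer_id only has to read the objects under one prefix.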

With the launch of Kinesis Data Firehose Dynamic Partitioning, you can now enable data partitioning to be dynamic, based on data content within the AWS Management Console, AWS Command Line Interface (AWS CLI), or AWS SDK when you create or update an existing Kinesis Data Firehose delivery stream.

At a high level, Kinesis Data Firehose Dynamic Partitioning allows for easy extraction of keys from incoming records into your delivery stream by allowing you to select and extract JSON data fields in an easy-to-use query engine.

Kinesis Data Firehose Dynamic Partitioning and key extraction will result in larger file sizes landing in Amazon S3, in addition to allowing for columnar data formats, like Apache Parquet, that query engines prefer.

With Kinesis Data Firehose Dynamic Partitioning, you have the ability to specify delimiters to detect or add on to your incoming records. This makes it possible to clean and organize data in a way that a query engine like Amazon Athena or AWS Glue would expect. This not only saves time but also cuts down on additional processes after the fact, potentially reducing costs in the process.

Kinesis Data Firehose has built-in support for extracting the keys for partitioning records that are in JSON format. You can select and extract the JSON data fields to be used in partitioning by using JSONPath syntax. These fields will then dictate how the data is partitioned when it’s delivered to Amazon S3. As we’ll discuss in the walkthrough later in this post, extracting a well-distributed set of partition keys is critical to optimizing your Kinesis Data Firehose delivery stream that uses dynamic partitioning.

If the incoming data is compressed, encrypted, or in any other file format, you can include the data fields for partitioning in the PutRecord or PutRecordBatch API calls. You can also use the integrated Lambda function with your own custom code to decompress, decrypt, or transform the records to extract and return the data fields that are needed for partitioning. This is an expansion of the existing transform Lambda function that is available today with Kinesis Data Firehose. You can transform, parse, and return the data fields by using the same Lambda function.

In order to achieve larger file sizes when it sinks data to Amazon S3, Kinesis Data Firehose buffers incoming streaming data to a specified size or time period before it delivers to Amazon S3. Buffer sizes range from 1 MB to 4 GB when data is delivered to Amazon S3, and the buffering interval ranges from 1 minute to 1 hour.

Consider the following clickstream event.

You can now partition your data by customer_id, so Kinesis Data Firehose will automatically group all events with the same customer_id and deliver them to separate folders in your S3 destination bucket. The new folders will be created dynamically—you only specify which JSON field will act as dynamic partition key.

Assume that you want to have the following folder structure in your S3 data lake.

The Kinesis Data Firehose configuration for the preceding example will look like the one shown in the following screenshot.

Kinesis Data Firehose evaluates the prefix expression at runtime. It groups records that match the same evaluated S3 prefix expression into a single dataset. Kinesis Data Firehose then delivers each dataset to the evaluated S3 prefix. The frequency of dataset delivery to S3 is determined by the delivery stream buffer setting.
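The grouping behavior described above can be approximated locally. The sketch below is not the service's implementation; it only mimics the idea of evaluating a prefix expression per record and collecting records that share the same evaluated prefix. The expression string follows the !{partitionKeyFromQuery:...} form used for inline parsing, and the helper names are hypothetical.

```python
import json
import re
from collections import defaultdict

# Prefix expression in the style used for inline parsing.
PREFIX_EXPRESSION = "customer_id=!{partitionKeyFromQuery:customer_id}/"
_PLACEHOLDER = re.compile(r"!\{partitionKeyFromQuery:([A-Za-z0-9_]+)\}")


def evaluate_prefix(expression: str, partition_keys: dict) -> str:
    """Substitute each placeholder with the extracted partition key value."""
    return _PLACEHOLDER.sub(lambda m: str(partition_keys[m.group(1)]), expression)


def group_by_prefix(raw_records, expression: str = PREFIX_EXPRESSION) -> dict:
    """Group JSON records into datasets keyed by their evaluated prefix."""
    datasets = defaultdict(list)
    for raw in raw_records:
        record = json.loads(raw)
        keys = {"customer_id": record["customer_id"]}
        datasets[evaluate_prefix(expression, keys)].append(record)
    return dict(datasets)


if __name__ == "__main__":
    records = [
        '{"customer_id": "123", "event": "click"}',
        '{"customer_id": "124", "event": "view"}',
        '{"customer_id": "123", "event": "submit"}',
    ]
    for prefix, dataset in group_by_prefix(records).items():
        print(prefix, len(dataset))
```

Each resulting dataset corresponds to what would be delivered as an object under its own prefix once the buffer conditions are met.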

You can do even more with the jq JSON processor, including accessing nested fields and creating complex queries to identify specific keys among the data.

In the following example, I decide to store events in a way that will allow me to scan events from the mobile devices of a particular customer.

Given the same event, I’ll use both the device and customer_id fields in the Kinesis Data Firehose prefix expression, as shown in the following screenshot. Notice that the device is a nested JSON field.

The generated S3 folder structure will be as follows, where <DOC-EXAMPLE-BUCKET> is your bucket name.
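As a rough illustration of the nested-field case, the following sketch resolves a jq-style dotted path against a record and builds a two-level prefix. The sample event, the .type.device path, and the folder naming are assumptions for the example, and the helper handles only plain dotted paths, not the full jq language.

```python
from functools import reduce


def extract(record: dict, path: str):
    """Resolve a simple dotted path such as '.type.device' against a dict."""
    return reduce(lambda node, key: node[key], path.lstrip(".").split("."), record)


def nested_prefix(record: dict) -> str:
    """Build a prefix from a top-level field and a nested field."""
    customer_id = extract(record, ".customer_id")
    device = extract(record, ".type.device")
    return f"customer_id={customer_id}/device={device}/"


if __name__ == "__main__":
    # Hypothetical clickstream event with a nested device field.
    event = {
        "type": {"device": "mobile", "event": "user_clicked_submit_button"},
        "customer_id": "1234567890",
    }
    print(nested_prefix(event))
```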

Now assume that you want to partition your data based on the time when the event actually was sent, as opposed to using Kinesis Data Firehose native support for ApproximateArrivalTimestamp, which represents the time in UTC when the record was successfully received and stored in the stream. The time in the event_timestamp field might be in a different time zone.

With Kinesis Data Firehose Dynamic Partitioning, you can extract and transform field values on the fly. I’ll use the event_timestamp field to partition the events by year, month, and day, as shown in the following screenshot.

The preceding expression will produce the following S3 folder structure, where <DOC-EXAMPLE-BUCKET> is your bucket name.
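The same year, month, and day derivation can be sketched in Python. This assumes that event_timestamp holds epoch seconds and that the partitions are computed in UTC; both are assumptions for illustration, as are the folder names.

```python
from datetime import datetime, timezone


def time_partition_prefix(event: dict) -> str:
    """Derive a year/month/day prefix from the event's own timestamp."""
    ts = datetime.fromtimestamp(event["event_timestamp"], tz=timezone.utc)
    return f"year={ts:%Y}/month={ts:%m}/day={ts:%d}/"


if __name__ == "__main__":
    # 1565308800 is 2019-08-09 00:00:00 UTC.
    print(time_partition_prefix({"event_timestamp": 1565308800}))
```

Because the prefix comes from the event itself rather than the arrival time, late-arriving records still land in the folder for the day they were generated.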

To begin delivering dynamically partitioned data into Amazon S3, navigate to the Amazon Kinesis console page by searching for or selecting Kinesis.

From there, choose Create Delivery Stream, and then select your source and sink.

For this example, you will receive data from a Kinesis Data Stream, but you can also choose Direct PUT or other sources as the source of your delivery stream.

For the destination, choose Amazon S3.

Next, choose the Kinesis Data Stream source to read from. If you have a Kinesis Data Stream previously created, simply choose Browse and select from the list. If not, follow this guide on how to create a Kinesis Data Stream.

Give your delivery stream a name and continue on to the Transform and convert records section of the create wizard.

In order to transform your source records with AWS Lambda, you can enable data transformation. This process will be covered in the next section, and we’ll leave both the AWS Lambda transformation as well as the record format conversion disabled for simplicity.

For your S3 destination, select or create an S3 bucket that your delivery stream has permissions to.

Below that setting, you can now enable dynamic partitioning on the incoming data in order to deliver data to different S3 bucket prefixes based on your specified JSONPath query.

You now have the option to enable the following features, shown in the screenshot below:

This can be useful for data that comes in to your delivery stream in a specific format, but needs to be reformatted according to the downstream analysis engine.

You can simply add your key/value pairs, then choose Apply dynamic partitioning keys to apply the partitioning scheme to your S3 bucket prefix. Keep in mind that you will also need to supply an error prefix for your S3 bucket before continuing.

Set your S3 buffering conditions appropriately for your use case. In my example, I’ve lowered the buffering to the minimum of 1 MiB of data delivered, or 60 seconds before delivering data to Amazon S3.

Keep the defaults for the remaining settings, and then choose Create delivery stream.

After data begins flowing through your pipeline, within the buffer interval you will see data appear in S3, partitioned according to the configurations within your Kinesis Data Firehose.

For delivery streams without the dynamic partitioning feature enabled, there will be one buffer across all incoming data. When data partitioning is enabled, Kinesis Data Firehose will have a buffer per partition, based on incoming records. The delivery stream will deliver each buffer of data as a single object when the size or interval limit has been reached, independent of other data partitions.

If the data flowing through a Kinesis Data Firehose is compressed, encrypted, or in any non-JSON file format, the dynamic partitioning feature won’t be able to parse individual fields by using the JSONPath syntax specified previously. To use the dynamic partitioning feature with non-JSON records, use the integrated Lambda function with Kinesis Data Firehose to transform and extract the fields needed to properly partition the data by using JSONPath.

The following Lambda function will decode a user payload, extract the necessary fields for the Kinesis Data Firehose dynamic partitioning keys, and return a proper Kinesis Data Firehose file, with the partition keys encapsulated in the outer payload.
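A minimal sketch of such a function is shown below. It assumes that each record's payload is base64-encoded JSON containing a customer_id field; real payloads may also need decompression or decryption first, and the field name is only an example. The response follows the transformation contract of recordId, result, and data, with the partition keys returned under metadata.partitionKeys.

```python
import base64
import json


def lambda_handler(event, context):
    """Decode each record, extract the partition key, and return it in metadata."""
    output = []
    for record in event["records"]:
        payload = json.loads(base64.b64decode(record["data"]))

        # Records without the expected key are marked as failed (assumed policy).
        if not isinstance(payload, dict) or "customer_id" not in payload:
            output.append({
                "recordId": record["recordId"],
                "result": "ProcessingFailed",
                "data": record["data"],
            })
            continue

        encoded = base64.b64encode(json.dumps(payload).encode("utf-8")).decode("utf-8")
        output.append({
            "recordId": record["recordId"],
            "result": "Ok",
            "data": encoded,
            # Partition keys travel alongside the payload, not inside it.
            "metadata": {"partitionKeys": {"customer_id": str(payload["customer_id"])}},
        })
    return {"records": output}
```

With keys returned this way, the S3 prefix would reference them with an expression such as customer_id=!{partitionKeyFromLambda:customer_id}/.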

Using Lambda to extract the necessary fields for dynamic partitioning provides both the benefit of encrypted and compressed data and the benefit of dynamically partitioning data based on record fields.

Kinesis Data Firehose Dynamic Partitioning has a limit of 500 active partitions per delivery stream while it is actively buffering data—in other words, how many active partitions exist in the delivery stream during the configured buffering hints. This limit is adjustable, and if you want to increase it, you’ll need to submit a support ticket for a limit increase.

Each new value that is determined by the JSONPath select query will result in a new partition in the Kinesis Data Firehose delivery stream. The partition has an associated buffer of data that will be delivered to Amazon S3 in the evaluated partition prefix. Upon delivery to Amazon S3, the buffer that previously held that data and the associated partition will be deleted and deducted from the active partitions count in Kinesis Data Firehose.
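The lifecycle of a partition and its buffer can be modeled with a small class. This is a simplified sketch, not the service's behavior in detail: it flushes on size only (the buffering interval is left out), and the class and attribute names are hypothetical.

```python
from collections import defaultdict


class PartitionBuffers:
    """Toy model of one buffer per active partition, flushed on a size limit."""

    def __init__(self, size_limit_bytes: int):
        self.size_limit_bytes = size_limit_bytes
        self._buffers = defaultdict(list)
        self._sizes = defaultdict(int)
        self.delivered = []  # (prefix, records) tuples, in delivery order

    def add(self, prefix: str, record: bytes) -> None:
        """Buffer a record; deliver the partition once it reaches the limit."""
        self._buffers[prefix].append(record)
        self._sizes[prefix] += len(record)
        if self._sizes[prefix] >= self.size_limit_bytes:
            self.flush(prefix)

    def flush(self, prefix: str) -> None:
        """Deliver one partition's buffer and drop it from the active set."""
        if prefix in self._buffers:
            self.delivered.append((prefix, self._buffers.pop(prefix)))
            self._sizes.pop(prefix, None)

    @property
    def active_partitions(self) -> int:
        return len(self._buffers)


if __name__ == "__main__":
    buffers = PartitionBuffers(size_limit_bytes=10)
    buffers.add("customer_id=123/", b"12345")
    buffers.add("customer_id=124/", b"12345")
    print(buffers.active_partitions)  # two partitions are buffering
    buffers.add("customer_id=123/", b"123456")  # reaches the limit, gets delivered
    print(buffers.active_partitions)
```

Note that each partition is delivered independently, so a busy key does not force delivery of a quiet one.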

Consider the following records that were ingested to my delivery stream.

If I decide to use customer_id for my dynamic data partitioning and deliver records to different prefixes, I’ll have three active partitions if the records keep ingesting for all of my customers. When there are no more records for "customer_id": "123", Kinesis Data Firehose will delete the buffer and will keep only two active partitions.

If you exceed the maximum number of active partitions, the rest of the records in the delivery stream will be delivered to the S3 error prefix. For more information, see the Error Handling section of this blog post.

A maximum throughput of 25 MB per second is supported for each active partition. This limit is not adjustable. You can monitor the throughput with the new metric called PerPartitionThroughput.

The right partitioning can help you to save costs related to the amount of data that is scanned by analytics services like Amazon Athena. On the other hand, over-partitioning may lead to the creation of smaller objects and wipe out the initial benefit of cost and performance. See Top 10 Performance Tuning Tips for Amazon Athena.

We advise you to align your partitioning keys with your analysis queries downstream to promote compatibility between the two systems. At the same time, take into consideration how high cardinality can impact the dynamic partitioning active partition limit.

When you decide which fields to use for the dynamic data partitioning, it’s a fine balance between picking fields that match your business case and taking into consideration the partition count limits. You can monitor the number of active partitions with the new metric PartitionCount, as well as the number of partitions that have exceeded the limit with the metric PartitionCountExceeded.

Another way to optimize cost is to aggregate multiple events into each record that you send with a PutRecord or PutRecordBatch API call. Kinesis Data Firehose is billed per GB of data ingested, which is calculated as the number of data records you send to the service times the size of each record rounded up to the nearest 5 KB. Packing more data into each record therefore reduces the billed volume that is lost to rounding.
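The effect of the 5 KB rounding is easy to see with a little arithmetic. The sketch below only computes billed bytes, taking 5 KB as 5,120 bytes; it does not model prices, and the function name is hypothetical.

```python
import math

INCREMENT_BYTES = 5 * 1024  # each record is rounded up to the nearest 5 KB


def billed_bytes(record_sizes) -> int:
    """Sum of record sizes after rounding each one up to a 5 KB increment."""
    return sum(math.ceil(size / INCREMENT_BYTES) * INCREMENT_BYTES for size in record_sizes)


if __name__ == "__main__":
    separate = billed_bytes([1024] * 5)  # five 1 KB events sent as five records
    combined = billed_bytes([5 * 1024])  # the same events aggregated into one record
    print(separate, combined)
```

In this example, five separate 1 KB records are billed as 25,600 bytes, while one aggregated 5 KB record is billed as 5,120 bytes.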

Data partition functionality is run after data is de-aggregated, so each event will be sent to the corresponding Amazon S3 prefix based on the partitionKey field within each event.

Imagine that the following record enters your Kinesis Data Firehose delivery stream.

When your dynamic partition query scans over this record, it will be unable to locate the specified key of customer_id, and therefore will result in an error. In this scenario, we suggest using an S3 error prefix when you create or modify your Kinesis Data Firehose stream.

All failed records will be delivered to the error prefix. The records you might find there are the events without the field you specified as your partition key.
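The routing decision can be sketched as a single function. The error prefix value and the function name are hypothetical, and the sketch treats unparseable records the same way as records that lack the key, which is an assumption made for simplicity.

```python
import json

ERROR_PREFIX = "errors/"  # hypothetical error prefix


def route(raw_record: str, key: str = "customer_id") -> str:
    """Return the destination prefix, falling back to the error prefix."""
    try:
        record = json.loads(raw_record)
    except json.JSONDecodeError:
        return ERROR_PREFIX
    if not isinstance(record, dict) or key not in record:
        return ERROR_PREFIX
    return f"{key}={record[key]}/"


if __name__ == "__main__":
    print(route('{"customer_id": "123", "event": "click"}'))
    print(route('{"event": "click"}'))  # no customer_id, goes to the error prefix
```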

Kinesis Data Firehose Dynamic Partitioning is billed per GB of partitioned data delivered to S3, per object, and optionally per jq processing hour for data parsing. The cost can vary based on the AWS Region where you decide to create your stream.

For more information, see our pricing page.

In this post, we discussed the Kinesis Data Firehose Dynamic Partitioning feature, and explored the use cases where this feature can help improve pipeline performance. We also covered how to develop and optimize a Kinesis Data Firehose pipeline by using dynamic partitioning and the best practices around building a reliable delivery stream.

Kinesis Data Firehose dynamic partitioning will be available in all Regions at launch, and we urge you to try the new feature to see how it can simplify your delivery stream and query engine performance. Be sure to provide us with any feedback you have about the new feature.

Jeremy Ber has been working in the telemetry data space for the past 5 years as a Software Engineer, Machine Learning Engineer, and most recently a Data Engineer. In the past, Jeremy has supported and built systems that stream in terabytes of data per day and process complex machine learning algorithms in real time. At AWS, he is a Solutions Architect Streaming Specialist, supporting both Amazon Managed Streaming for Apache Kafka (Amazon MSK) and Amazon Kinesis.

Michael Greenshtein’s career started in software development and shifted to DevOps. Michael worked with AWS services to build complex data projects involving real-time, ETLs, and batch processing. Now he works in AWS as Solutions Architect in the Europe, Middle East, and Africa (EMEA) region, supporting a variety of customer use cases.

Post Syndicated from original https://xkcd.com/2511/

Post Syndicated from Kyle Hart original https://aws.amazon.com/blogs/security/how-us-federal-agencies-can-authenticate-to-aws-with-multi-factor-authentication/

This post is part of a series about how AWS can help your US federal agency meet the requirements of the President’s Executive Order on Improving the Nation’s Cybersecurity. We recognize that government agencies have varying degrees of identity management and cloud maturity and that the requirement to implement multi-factor, risk-based authentication across an entire enterprise is a vast undertaking. This post specifically focuses on how you can use AWS information security practices to help meet the requirement to “establish multi-factor, risk-based authentication and conditional access across the enterprise” as it applies to your AWS environment.

This post focuses on the best practices for enterprise authentication to AWS, specifically federated access via an existing enterprise identity provider (IdP).

Many federal customers use authentication factors on their Personal Identity Verification (PIV) or Common Access Cards (CAC) to authenticate to an existing enterprise identity service which can support Security Assertion Markup Language (SAML), which is then used to grant user access to AWS. SAML is an industry-standard protocol and most IdPs support a range of authentication methods, so if you’re not using a PIV or CAC, the concepts will still work for your organization’s multi-factor authentication (MFA) requirements.

There are two categories we want to look at for authentication to AWS services:

There is also a third category of services where authentication occurs in AWS that is beyond the scope of this post: applications that you build on AWS that authenticate internal or external end users to those applications. For this category, multi-factor authentication is still important, but will vary based on the specifics of the application architecture. Workloads that sit behind an AWS Application Load Balancer can use the ALB to authenticate users through an OpenID Connect or SAML IdP that enforces MFA upstream.

AWS recommends that you use SAML and an IdP that enforces MFA as your means of granting users access to AWS. Many government customers achieve AWS federated authentication with Active Directory Federation Services (AD FS). The IdP used by our federal government customers should enforce usage of CAC/PIV to achieve MFA and be the sole means of access to AWS.

Federated authentication uses SAML to assume an AWS Identity and Access Management (IAM) role for access to AWS resources. A role doesn’t have standard long-term credentials such as a password or access keys associated with it. Instead, when you assume a role, it provides you with temporary security credentials for your role session.

AWS accounts in all AWS Regions, including AWS GovCloud (US) Regions, have the same authentication options for IAM roles through identity federation with a SAML IdP. The AWS Single Sign-On (AWS SSO) service is another way to implement federated authentication to the AWS APIs in Regions where it is available.

In AWS Regions excluding AWS GovCloud (US), you can consider using the AWS CloudShell service, which is an interactive shell environment that runs in your web browser and uses the same authentication pipeline that you use to access the AWS Management Console—thus inheriting MFA enforcement from your SAML IdP.

If you need to use federated authentication with MFA for the CLI on your own workstation, you’ll need to retrieve and present the SAML assertion token. For information about how you can do this in Windows environments, see the blog post How to Set Up Federated API Access to AWS by Using Windows PowerShell. For information about how to do this with Python, see How to Implement a General Solution for Federated API/CLI Access Using SAML 2.0.

IAM permissions policies support conditional access. Common use cases include allowing certain actions only from a specified, trusted range of IP addresses; granting access only to specified AWS Regions; and granting access only to resources with specific tags. You should create your IAM policies to provide least-privilege access across a number of attributes. For example, you can grant an administrator access to launch or terminate an EC2 instance only if the request originates from a certain IP address and is tagged with an appropriate tag.
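As an illustration of the last example, the following sketch builds a policy document that allows stopping or terminating EC2 instances only when the request comes from a given IP range and the instance carries a given tag. The CIDR range, tag key, tag value, and chosen actions are placeholders, and the resource ARN uses wildcards for brevity; treat it as a starting point rather than a recommended policy.

```python
import json


def ec2_conditional_policy(cidr: str, tag_key: str, tag_value: str) -> dict:
    """Allow stop/terminate only from a trusted CIDR and on tagged instances."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": ["ec2:StopInstances", "ec2:TerminateInstances"],
            "Resource": "arn:aws:ec2:*:*:instance/*",
            # Both condition operators must match for the statement to apply.
            "Condition": {
                "IpAddress": {"aws:SourceIp": cidr},
                "StringEquals": {f"aws:ResourceTag/{tag_key}": tag_value},
            },
        }],
    }


if __name__ == "__main__":
    # 203.0.113.0/24 is a documentation range used here as a placeholder.
    print(json.dumps(ec2_conditional_policy("203.0.113.0/24", "Environment", "dev"), indent=2))
```

In AWS GovCloud (US) Regions, the ARN partition is aws-us-gov rather than aws.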

You can also implement conditional access controls using SAML session tags provided by your IdP and passed through the SAML assertion to be consumed by AWS. This means two separate users from separate departments can assume the same IAM role but have tailored, dynamic permissions. As an example, the SAML IdP can provide each individual’s cost center as a session tag on the role assertion. IAM policy statements can be written to allow the user from cost center A the ability to administer resources from cost center A, but not resources from cost center B.
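One common way to express the cost center example is to compare a resource tag with the principal's session tag by using a policy variable. The sketch below assumes a session tag named CostCenter and a matching resource tag of the same name; the tag name and the chosen actions are illustrative only.

```python
import json


def cost_center_policy(tag_key: str = "CostCenter") -> dict:
    """Allow instance administration only when resource and session tags match."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": ["ec2:StartInstances", "ec2:StopInstances"],
            "Resource": "*",
            "Condition": {
                "StringEquals": {
                    # The policy variable is resolved per session at request time.
                    f"aws:ResourceTag/{tag_key}": f"${{aws:PrincipalTag/{tag_key}}}"
                }
            },
        }],
    }


if __name__ == "__main__":
    print(json.dumps(cost_center_policy(), indent=2))
```

Because the comparison is resolved per session, one role and one policy can serve users from every cost center.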

Many customers ask about how to limit control plane access to certain IP addresses. AWS supports this, but there is an important caveat to highlight. Some AWS services, such as AWS CloudFormation, perform actions on behalf of an authorized user or role, and execute from within the AWS cloud’s own IP address ranges. See this document for an example of a policy statement using the aws:ViaAWSService condition key to exclude AWS services from your IP address restrictions to avoid unexpected authorization failures.
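A statement of that kind might look like the following sketch, which denies requests from outside the trusted ranges unless an AWS service is making the call on the principal's behalf. The CIDR ranges are placeholders, and the function name is hypothetical.

```python
import json


def ip_restriction_policy(trusted_cidrs) -> dict:
    """Deny requests from untrusted IPs, except calls made via an AWS service."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Deny",
            "Action": "*",
            "Resource": "*",
            "Condition": {
                "NotIpAddress": {"aws:SourceIp": list(trusted_cidrs)},
                # Without this, services acting on the user's behalf would be
                # denied because they call from AWS-owned address ranges.
                "Bool": {"aws:ViaAWSService": "false"},
            },
        }],
    }


if __name__ == "__main__":
    print(json.dumps(ip_restriction_policy(["192.0.2.0/24", "203.0.113.0/24"]), indent=2))
```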

You can launch resources such as Amazon WorkSpaces, AppStream 2.0, Redshift, and EC2 instances that you configure to require MFA. The Amazon WorkSpaces Streaming Protocol (WSP) supports CAC/PIV authentication for pre-authentication, and in-session access to the smart card. For more information, see Use smart cards for authentication. To see a short video of it in action, see the blog post Amazon WorkSpaces supports CAC/PIV smart card authentication. Redshift and AppStream 2.0 support SAML 2.0 natively, so you can configure those services to work with your SAML IdP similarly to how you configure AWS Console access and inherit the MFA enforced by the upstream IdP.

MFA access to EC2 instances can occur via the existing methods and enterprise directories used in your on-premises environments. You can, of course, implement other systems that enforce MFA access to an operating system such as RADIUS or other third-party directory or MFA token solutions.

An alternative method for MFA for shell access to EC2 instances is to use the Session Manager feature of AWS Systems Manager. Session Manager uses the Systems Manager management agent to provide role-based access to a shell (PowerShell on Windows) on an instance. Users can access Session Manager from the AWS Console or from the command line with the Session Manager AWS CLI plugin. Similar to using CloudShell for CLI access, accessing EC2 hosts via Session Manager uses the same authentication pipeline you use for accessing the AWS control plane. Your interactive session on that host can be configured for audit logging.

The focus of this blog is on integrating an agency’s existing MFA-enabled enterprise authentication service; but to make it easier for you to view the entire security picture, you might be interested in IAM security best practices. You can enforce these best-practice security configurations with AWS Organizations Service Control Policies.

This post covered methods your federal agency should consider in your efforts to apply the multi-factor authentication (MFA) requirements in the Executive Order on Improving the Nation’s Cybersecurity to your AWS environment. To learn more about how AWS can help you meet the requirements of the executive order, see the other posts in this series:

If you have feedback about this post, submit comments in the Comments section below.

Want more AWS Security how-to content, news, and feature announcements? Follow us on Twitter.

Post Syndicated from Leonardo Pêpe original https://aws.amazon.com/blogs/big-data/how-moia-built-a-fully-automated-gdpr-compliant-data-lake-using-aws-lake-formation-aws-glue-and-aws-codepipeline/

This is a guest blog post co-written by Leonardo Pêpe, a Data Engineer at MOIA.

MOIA is an independent company of the Volkswagen Group with locations in Berlin and Hamburg, and operates its own ride pooling services in Hamburg and Hanover. The company was founded in 2016 and develops mobility services independently or in partnership with cities and existing transport systems. MOIA’s focus is on ride pooling and the holistic development of the software and hardware for it. In October 2017, MOIA started a pilot project in Hanover to test a ride pooling service, which was brought into public operation in July 2018. MOIA covers the entire value chain in the area of ride pooling. MOIA has developed a ridesharing system to avoid individual car traffic and use the road infrastructure more efficiently.

In this post, we discuss how MOIA uses AWS Lake Formation, AWS Glue, and AWS CodePipeline to store and process gigabytes of data on a daily basis to serve 20 different teams with individual user data access and implement fine-grained control of the data lake to comply with General Data Protection Regulation (GDPR) guidelines. This involves controlling access to data at a granular level. The solution enables MOIA’s fast pace of innovation to automatically adapt user permissions to new tables and datasets as they become available.

Each MOIA vehicle can carry six passengers. Customers interact with the MOIA app to book a trip, cancel a trip, and give feedback. The highly distributed system prepares multiple offers to reach their destination with different pickup points and prices. Customers select an option and are picked up from their chosen location. All interactions between the customers and the app, as well as all the interactions between internal components and systems (the backend’s and vehicle’s IoT components), are sent to MOIA’s data lake.

Data from the vehicle, app, and backend must be centralized to have the synchronization between trips planned and implemented, and then to collect passenger feedback. To provide different pricing and routing options, MOIA needed centralized data. MOIA decided to build and secure its Amazon Simple Storage Service (Amazon S3) based Data Lake using AWS Lake Formation.

Different MOIA teams, including data analysts, data scientists, and data engineers, need to access centralized data from different sources for the development and operations of the application workloads. It’s a legal requirement to control the access and format of the data to these different teams. The app development team needs to understand customer feedback in an anonymized way, pricing-related data must be accessed only by the business analytics team, vehicle data is meant to be used only by the vehicle maintenance team, and the routing team needs access to customer location and destination.

The following diagram illustrates MOIA’s solution architecture.

The solution has the following components:

MOIA wants to continuously evolve its ML models for routing, demand prediction, and business models. This requires MOIA to constantly review and update the models, so power users such as data administrators and engineers frequently redesign the table schemas in the AWS Glue Data Catalog as part of the data engineering workflow. This highly dynamic metadata transformation requires an equally dynamic governance pipeline that can assign the right user permissions to all tables and adapt to these changes transparently, without disruptions to end users. GDPR requirements leave no room for error, yet without automation, assigning GDPR-compliant permissions on the tables in a data lake with many terabytes of data is manual work. That manual work requires many developers for administration and introduces human error into the workflows, which is not acceptable. Manual administration and access management isn’t a scalable solution. MOIA needed to innovate faster, while remaining GDPR compliant, with a small team of developers.

Data schemas and data structures often change at MOIA, resulting in new tables being created in the data lake. To guarantee that new tables inherit the permissions granted to the same group of users who already have access to the entire database, MOIA uses an automated process that grants Lake Formation permissions to newly created tables, columns, and databases, as described in the next section.

The following diagram illustrates the continuous deployment loop using AWS CloudFormation.

The workflow contains the following steps:

This way, new CloudFormation templates are created, containing permissions for new or modified tables. This process guarantees a fully automated governance layer for the data lake. The generated CloudFormation template contains Lake Formation permission resources for each table, database, or column. The process of managing Lake Formation permissions on Data Catalog databases, tables, and columns is simplified by granting Data Catalog permissions using the Lake Formation tag-based access control method. The advantage of generating a CloudFormation template is auditability. When the new version of the CloudFormation stack is prepared with an access control set on new or modified tables, administrators can compare that stack with the older version to discover newly prepared and modified tables. MOIA can view the differences via the AWS CloudFormation console before new stack deployment.
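To give a feel for the approach, here is a small sketch that generates a template with one AWS::LakeFormation::Permissions resource per table and compares two versions to find newly covered tables. It is not MOIA's implementation: the function names, logical ID scheme, principal ARN, database, and table names are all hypothetical, and it covers table-level grants only.

```python
import json
import re


def logical_id(database: str, table: str) -> str:
    """Build an alphanumeric logical ID (name collisions are not handled here)."""
    return "Perm" + re.sub(r"[^A-Za-z0-9]", "", f"{database.title()}{table.title()}")


def build_template(principal_arn: str, database: str, tables, permissions=("SELECT",)) -> dict:
    """Generate one Lake Formation permission resource per table."""
    resources = {}
    for table in sorted(tables):
        resources[logical_id(database, table)] = {
            "Type": "AWS::LakeFormation::Permissions",
            "Properties": {
                "DataLakePrincipal": {"DataLakePrincipalIdentifier": principal_arn},
                "Resource": {"TableResource": {"DatabaseName": database, "Name": table}},
                "Permissions": list(permissions),
            },
        }
    return {"AWSTemplateFormatVersion": "2010-09-09", "Resources": resources}


def new_resources(old: dict, new: dict) -> list:
    """Logical IDs present in the new template but not in the old one."""
    return sorted(set(new["Resources"]) - set(old["Resources"]))


if __name__ == "__main__":
    arn = "arn:aws:iam::111122223333:role/analysts"  # placeholder principal
    old = build_template(arn, "rides", ["trips"])
    new = build_template(arn, "rides", ["trips", "feedback"])
    print(new_resources(old, new))
    print(json.dumps(new, indent=2))
```

Comparing the generated templates gives reviewers a list of what changed before the stack is deployed.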

This solution delivers the following benefits:

MOIA has created scalable, automated, and versioned permissions with a GDPR-supported, governed data lake using Lake Formation. This solution helps them bring new features and models to market faster, and reduces administrative and repetitive tasks. MOIA can focus on an average of 48 releases every month, contributing to a great customer experience and new data insights.

Leonardo Pêpe is a Data Engineer at MOIA. With a strong background in infrastructure and application support and operations, he is immersed in the DevOps philosophy. He’s helping MOIA build automated solutions for its data platform and enabling the teams to be more data-driven and agile. Outside of MOIA, Leonardo enjoys nature, Jiu-Jitsu and martial arts, and explores the good of life with his family.

Sushant Dhamnekar is a Solutions Architect at AWS. As a trusted advisor, Sushant helps automotive customers to build highly scalable, flexible, and resilient cloud architectures, and helps them follow the best practices around advanced cloud-based solutions. Outside of work, Sushant enjoys hiking, food, travel, and CrossFit workouts.

Shiv Narayanan is Global Business Development Manager for Data Lakes and Analytics solutions at AWS. He works with AWS customers across the globe to strategize, build, develop and deploy modern data platforms. Shiv loves music, travel, food and trying out new tech.

Post Syndicated from David Amatulli original https://aws.amazon.com/blogs/architecture/practical-entity-resolution-on-aws-to-reconcile-data-in-the-real-world/

This post was co-written with Mamoon Chowdry, Solutions Architect, previously at AWS.

Businesses and organizations from many industries often struggle to ensure that their data is accurate. Data often has to match people or things exactly in the real world, such as a customer name, an address, or a company. Matching our data is important to validate it, de-duplicate it, or link records in different systems together. Know Your Customer (KYC) regulations also mean that we must be confident in who or what our data is referring to. We must match millions of records from different data sources. Some of that data may have been entered manually and contain inconsistencies.

It can often be hard to match data with the entity it is supposed to represent. For example, if a customer enters their details as “Mr. John Doe, #1a 123 Main St.” and you have a prior record in your customer database for “J. Doe, Apt 1A, 123 Main Street”, are they referring to the same person or a different one?

In cases like this, we often have to manually update our data to make sure it accurately and consistently matches a real-world entity. You may want to have consistent company names across a list of business contacts. When there isn’t an exact match, we have to reconcile our data with the available facts we know about that entity. This reconciliation is commonly referred to as entity resolution (ER). This process can be labor-intensive and error-prone.

This blog will explore some of the common types of ER. We will share a basic architectural pattern for near real-time ER processing. You will see how ER using fuzzy text matching can reconcile manually entered names with reference data.

Entity resolution is a broad and deep topic, and a complete discussion would be beyond the scope of this blog. However, at a high level, there are four common approaches to matching ambiguous fields or records to known entities.

We may also examine more than one field. For example, we may compare a name and address. Is “Mr. J Doe, 123 Main St” likely to be the same person as “Mr John Doe, 123 Main Street”? If we compare multiple fields in a record and analyze all of their similarity scores, this is commonly called Pairwise comparison.
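As a rough illustration of pairwise comparison, the sketch below scores each field separately and combines the scores with weights. It uses Python's standard `difflib.SequenceMatcher` as a stand-in similarity measure; the field names, weights, and sample records are assumptions, not part of the solution described in this post.

```python
from difflib import SequenceMatcher


def field_similarity(left, right):
    """Similarity between two strings in [0, 1], ignoring case."""
    return SequenceMatcher(None, left.lower(), right.lower()).ratio()


def pairwise_score(record_a, record_b, weights):
    """Weighted average of per-field similarity scores."""
    total_weight = sum(weights.values())
    weighted = sum(
        weight * field_similarity(record_a[field], record_b[field])
        for field, weight in weights.items()
    )
    return weighted / total_weight


# Hypothetical weights: the name counts slightly more than the address.
WEIGHTS = {"name": 0.6, "address": 0.4}

candidate = {"name": "Mr. J Doe", "address": "123 Main St"}
reference = {"name": "Mr John Doe", "address": "123 Main Street"}
unrelated = {"name": "Ms Alice Smith", "address": "9 Elm Road"}

print(pairwise_score(candidate, reference, WEIGHTS))
print(pairwise_score(candidate, unrelated, WEIGHTS))
```

A threshold on the combined score then decides whether two records are treated as the same entity; where to set it depends on how costly false matches are for your data.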

2. Clustering. We can plot records in an n-dimensional space based on values computed from their fields. Their similarity to other reference records is then measured by calculating how close they are to each other in that space. Those that are clustered together are likely to refer to the same entity. Clustering is an effective method for grouping or segmenting data for computer vision, astronomy, or market segmentation. An example of this method is K-means clustering.

3. Graph networks. Graph networks are commonly used to store relationships between entities, such as people who are friends with each other, or residents of a particular address. When we need to resolve an ambiguous record, we can use a graph database to identify potential relationships to other records. For example, “J Doe, 123 Main St,” may be the same as “John Doe, 123 Main St,” because they have the same address and similar names.

Graph networks are especially helpful when dealing with complex relationships over millions of entities. For example, you can build a customer profile using web server logs and other data.
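The graph idea can be sketched without a graph database. In this simplified Python example, records are nodes, an edge is drawn when two records share an address and have similar names, and each connected component is treated as one entity. The threshold and sample records are assumptions; a production system would use a graph database and richer relationships.

```python
from difflib import SequenceMatcher
from itertools import combinations


def find_root(parent, node):
    """Follow parent links to the representative of a node's component."""
    while parent[node] != node:
        parent[node] = parent[parent[node]]  # path halving keeps trees shallow
        node = parent[node]
    return node


def resolve_entities(records, name_threshold=0.6):
    """Group records that share an address and have sufficiently similar names."""
    parent = list(range(len(records)))
    for i, j in combinations(range(len(records)), 2):
        same_address = records[i]["address"] == records[j]["address"]
        name_similarity = SequenceMatcher(
            None, records[i]["name"].lower(), records[j]["name"].lower()
        ).ratio()
        if same_address and name_similarity >= name_threshold:
            parent[find_root(parent, i)] = find_root(parent, j)  # add an edge
    groups = {}
    for i, record in enumerate(records):
        groups.setdefault(find_root(parent, i), []).append(record["name"])
    return sorted(groups.values())


# Hypothetical records for illustration.
records = [
    {"name": "J Doe", "address": "123 Main St"},
    {"name": "John Doe", "address": "123 Main St"},
    {"name": "Jane Roe", "address": "45 Oak Ave"},
]

print(resolve_entities(records))
```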

4. Commercial off-the-shelf (COTS) software. Enterprises can also deploy ER software, such as these offerings from the AWS Marketplace and Senzing entity resolution. This is helpful when companies may not have the skill or experience to implement a solution themselves. It is important to mention the role of Master Data Management (MDM) with ER. MDM involves having a single trusted source for your data. Tools, such as Informatica, can help ER with their MDM features.

Our architecture (shown in Figure 1) provides a low-cost, streamlined solution built on AWS serverless technology. It uses AWS Lambda, which allows you to run code without having to provision or manage servers. This code is invoked through an API, which is created with Amazon API Gateway. API Gateway is a fully managed service used by developers to create, publish, maintain, monitor, and secure API operations at any scale. Finally, we store our reference data in Amazon Simple Storage Service (Amazon S3).

We initially match manually entered strings to a list of reference strings. The strings we will try to match will be names of companies.

Figure 1. Example request dataflow through AWS

The reference data and index files were created from an export of the fuzzy match algorithm.

The algorithm in the AWS Lambda function works by converting each string to a collection of n-grams. N-grams are smaller substrings that are commonly used for analyzing free-form text.
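For readers new to n-grams, here is a minimal Python sketch of splitting a string into character trigrams. The normalization (lowercasing and collapsing whitespace) and the choice of n = 3 are assumptions; the post does not state which settings the Lambda function uses.

```python
def char_ngrams(text, n=3):
    """Split text into overlapping character n-grams after basic normalization."""
    normalized = " ".join(text.lower().split())  # lowercase, collapse whitespace
    if len(normalized) < n:
        return [normalized] if normalized else []
    return [normalized[i:i + n] for i in range(len(normalized) - n + 1)]


print(char_ngrams("Acme Inc"))
# ['acm', 'cme', 'me ', 'e i', ' in', 'inc']
```

Because a single typo only changes the few n-grams that overlap it, two spellings of the same company name still share most of their n-grams.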

The n-grams are then converted to a simple vector. Each vector is a numerical statistic that represents the Term Frequency – Inverse Document Frequency (TF-IDF). Both TF-IDF and n-grams are used to prepare text for searching. N-grams of strings that are similar in nature tend to have similar TF-IDF vectors. We can plot these vectors in a chart. This helps us find similar strings, as they are grouped or clustered together.

Comparing vectors to find similar strings can be fairly straightforward. But if you have numerous records, it can be computationally expensive and slow. To solve this, we use the NMSLIB library. This library indexes the vectors for faster similarity searching. It also gives us the degree of similarity between two strings. This is important because we may want to know the accuracy of a match we have found. For example, it can be helpful to filter out weak matches.
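The following self-contained sketch shows the underlying idea on a tiny scale: build TF-IDF vectors from character trigrams, compare them with cosine similarity, and discard weak matches. It deliberately uses a brute-force comparison in pure Python rather than NMSLIB, and the smoothing formula, the 0.3 threshold, and the company names are assumptions for illustration.

```python
import math
from collections import Counter


def char_ngrams(text, n=3):
    """Overlapping character n-grams of the lowercased, whitespace-collapsed text."""
    normalized = " ".join(text.lower().split())
    return [normalized[i:i + n] for i in range(max(len(normalized) - n + 1, 0))]


def build_idf(corpus):
    """Smoothed inverse document frequency for every n-gram in the corpus."""
    document_frequency = Counter()
    for document in corpus:
        document_frequency.update(set(char_ngrams(document)))
    total = len(corpus)
    return {gram: math.log((1 + total) / (1 + df)) + 1.0
            for gram, df in document_frequency.items()}


def tfidf_vector(text, idf):
    """L2-normalized sparse TF-IDF vector; n-grams unseen in the corpus are ignored."""
    counts = Counter(char_ngrams(text))
    vector = {gram: count * idf[gram] for gram, count in counts.items() if gram in idf}
    norm = math.sqrt(sum(value * value for value in vector.values()))
    return {gram: value / norm for gram, value in vector.items()} if norm else {}


def cosine(left, right):
    """Dot product of two normalized sparse vectors."""
    return sum(weight * right.get(gram, 0.0) for gram, weight in left.items())


def best_match(query, reference_names, min_score=0.3):
    """Return (name, score) for the closest reference name, or (None, score) if too weak."""
    idf = build_idf(reference_names)
    query_vector = tfidf_vector(query, idf)
    score, name = max(
        (cosine(query_vector, tfidf_vector(candidate, idf)), candidate)
        for candidate in reference_names
    )
    return (name, score) if score >= min_score else (None, score)


# Hypothetical reference data.
REFERENCE = ["Amazon Web Services", "Acme Corporation", "Globex Industries"]

print(best_match("Amazn Web Service", REFERENCE))
print(best_match("zzzz qqqq", REFERENCE))
```

This brute-force search compares the query against every reference string, which is exactly the cost that an approximate nearest neighbor index is meant to avoid for large reference sets.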

Using the NMSLIB library, which is loaded using Lambda layers, we initialize an index using Neighborhood APProximation (NAPP).

    # initialize the index
    newIndex = nmslib.init(method='napp', space='negdotprod_sparse_fast',
                           data_type=nmslib.DataType.SPARSE_VECTOR)

Next we imported the index and data files that were created from our reference data.

    # load the index file
    newIndex.loadIndex(DATA_DIR + 'index_company_names.bin',
                       load_data=True)

The input parameter companyName is then used to query the index to find the approximate nearest neighbor. By using the knnQueryBatch method, we distribute the work over a thread pool, which provides faster querying.

    # set the input variable and empty output list
    inputString = companyName
    outputList = []

    # Find the nearest neighbor for our company name
    # (K is the number of matches, set to 1)
    newQueryMatrix = vectorizer.transform(inputString)
    # query the index that was initialized and loaded above
    newNbrs = newIndex.knnQueryBatch(newQueryMatrix, k=K, num_threads=numThreads)

The best match is then returned as a JSON response.

    # return the match
    for i in range(K):
        outputList.append(orgNames[newNbrs[0][0][i]])

    return {
        'statusCode': '200',
        'body': json.dumps(outputList),
    }

Our solution is a combination of Amazon API Gateway, AWS Lambda, and Amazon S3 (hyperlinks are to pricing pages). As an example, let’s assume that the API will receive 10 million requests per month. We can estimate the costs of running the solution as:

| Service | Description | Cost |
|---|---|---|
| AWS Lambda | 10 million requests and associated compute costs | $161.80 |
| Amazon API Gateway | HTTP API requests, avg size of request (34 KB), avg message size (32 KB), requests (10 million/month) | $10.00 |
| Amazon S3 | S3 Standard storage (including data transfer costs) | $7.61 |
| Total | | $179.41 |

Table 1. Example monthly cost estimate (USD)
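The arithmetic behind Table 1 can be checked with a few lines of Python. The per-service figures come from the table; the cost per million requests is a derived number added here for illustration, and `Decimal` is used to avoid floating-point rounding in currency values.

```python
from decimal import Decimal

# Monthly estimates from Table 1 (USD).
MONTHLY_COSTS = {
    "AWS Lambda": Decimal("161.80"),
    "Amazon API Gateway": Decimal("10.00"),
    "Amazon S3": Decimal("7.61"),
}
REQUESTS_PER_MONTH = 10_000_000

total = sum(MONTHLY_COSTS.values(), Decimal("0"))
cost_per_million_requests = total / (REQUESTS_PER_MONTH // 1_000_000)

print(f"Total: ${total}")
print(f"Per million requests: ${cost_per_million_requests}")
```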

Using AWS services to reconcile your data with real-world entities helps make your data more accurate and consistent. You can automate a manual task that could have been laborious, expensive, and error-prone.

Where can you use ER in your organization? Do you have manually entered or inaccurate data? Have you struggled to match it with real-world entities? You can experiment with this architecture to continue to improve the accuracy of your own data.

Further reading:

Post Syndicated from Crosstalk Solutions original https://www.youtube.com/watch?v=pI3KHSn0ilY

Post Syndicated from Matt Granger original https://www.youtube.com/watch?v=3BdkxtgN5wo

Post Syndicated from Marcia Villalba original https://aws.amazon.com/blogs/aws/welcome-to-aws-storage-day-2021/

Welcome to the third annual AWS Storage Day 2021! During Storage Day 2020 and the first-ever Storage Day 2019 we made many impactful announcements for our customers and this year will be no different. The one-day, free AWS Storage Day 2021 virtual event will be hosted on the AWS channel on Twitch. You’ll hear from experts about announcements, leadership insights, and educational content related to AWS Storage services.

The first part of the day is the leadership track. Wayne Duso, VP of Storage, Edge, and Data Governance, will be presenting a live keynote. He’ll share information about what’s new in AWS Cloud Storage and how these services can help businesses increase agility and accelerate innovation. The keynote will be followed by live interviews with the AWS Storage leadership team, including Mai-Lan Tomsen Bukovec, VP of AWS Block and Object Storage.

The second part of the day is a technical track in which you’ll learn more about Amazon Simple Storage Service (Amazon S3), Amazon Elastic Block Store (EBS), Amazon Elastic File System (Amazon EFS), AWS Backup, Cloud Data Migration, AWS Transfer Family and Amazon FSx.

To register for the event, visit the AWS Storage Day 2021 event page.

Now as Jeff Barr likes to say, let’s get into the announcements.

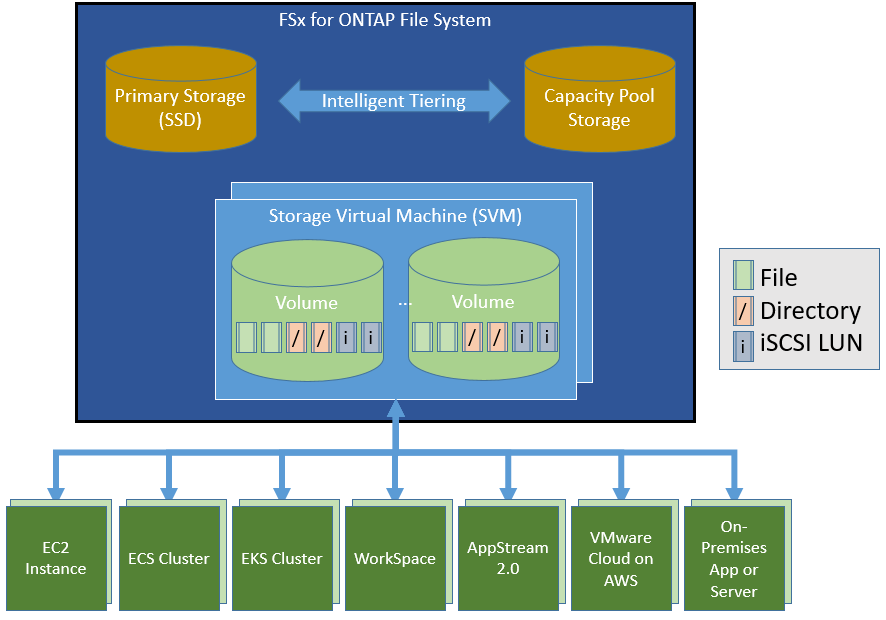

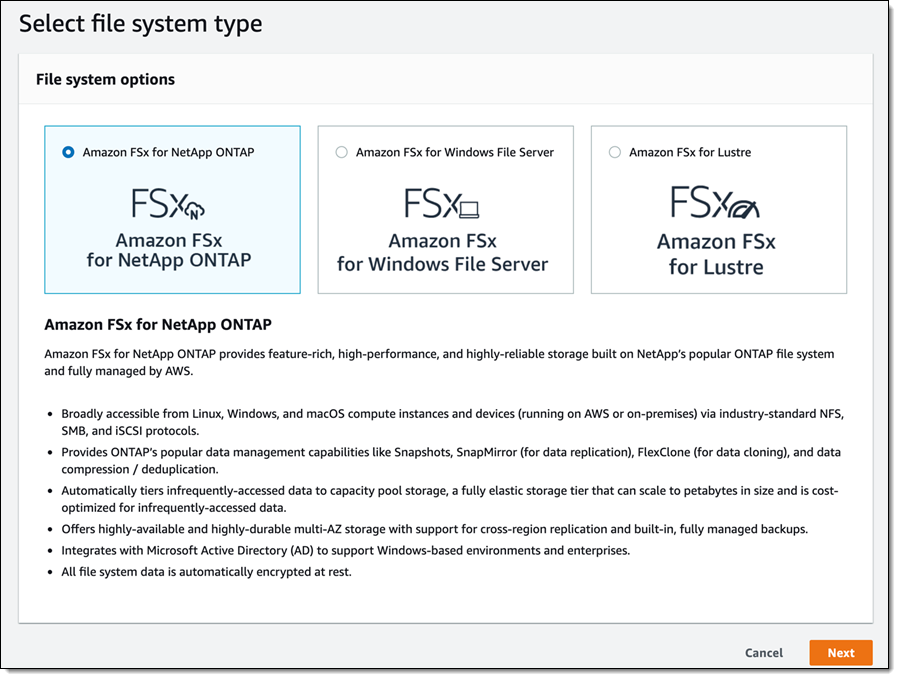

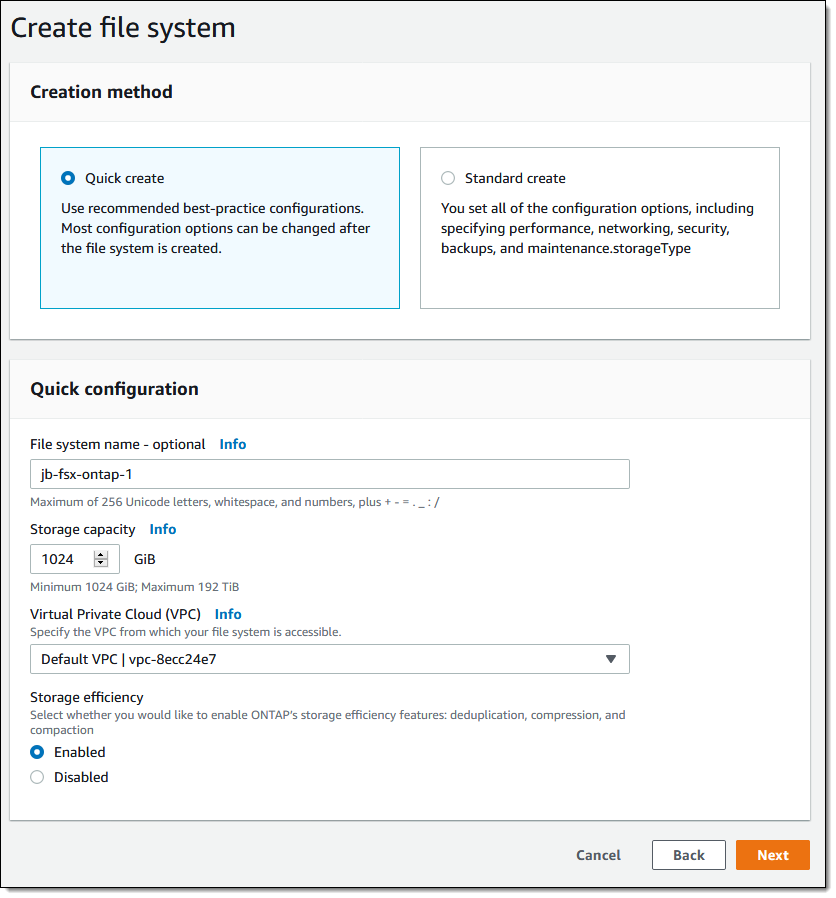

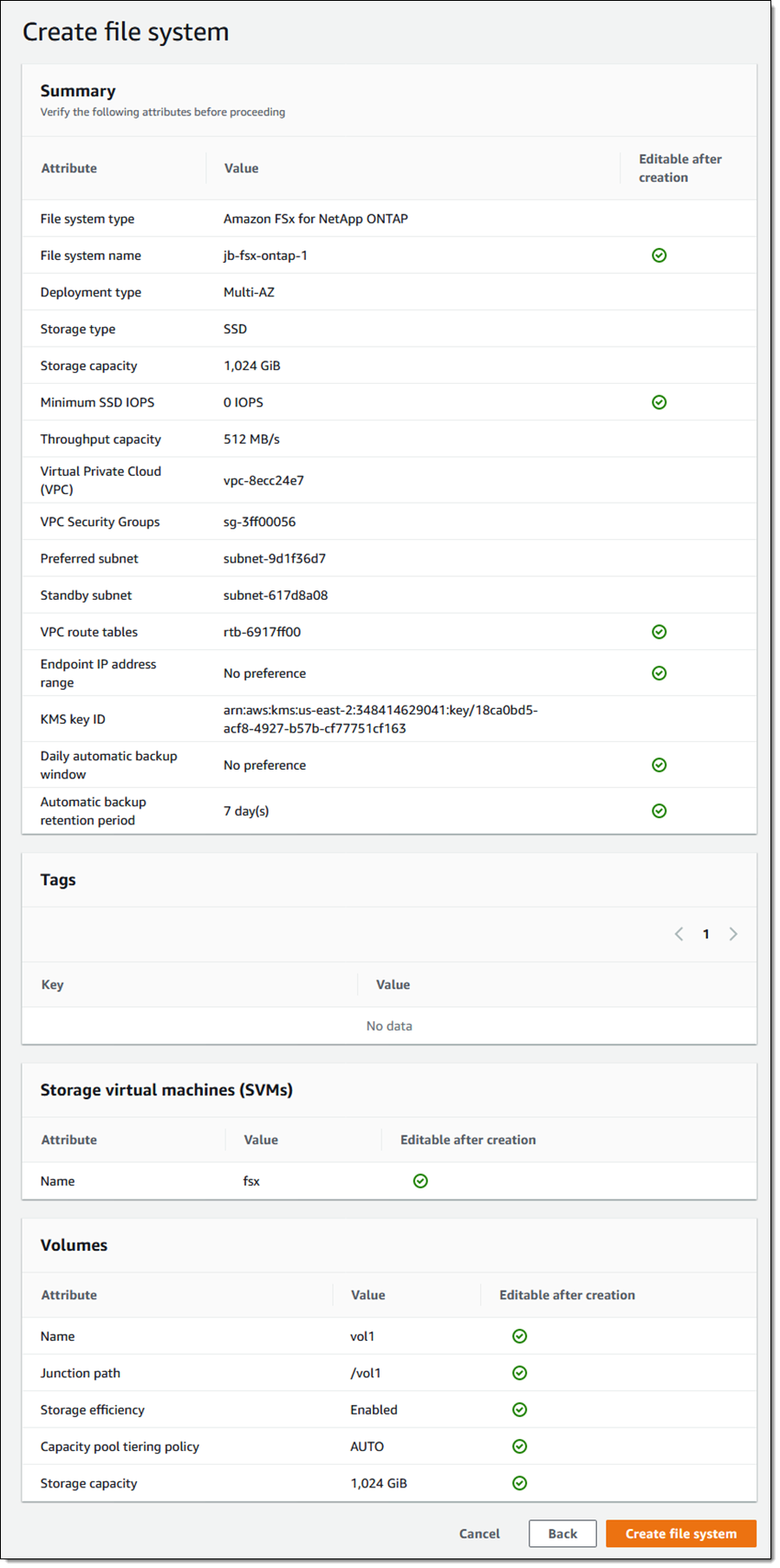

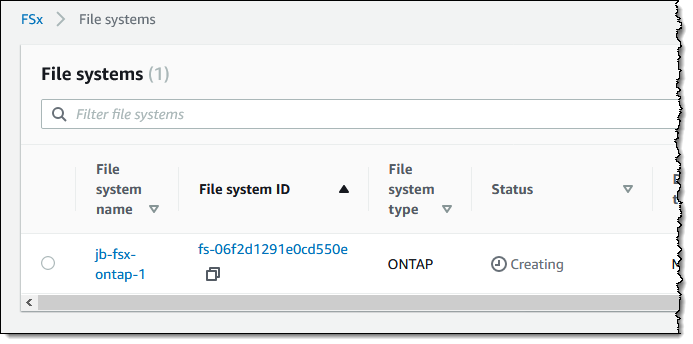

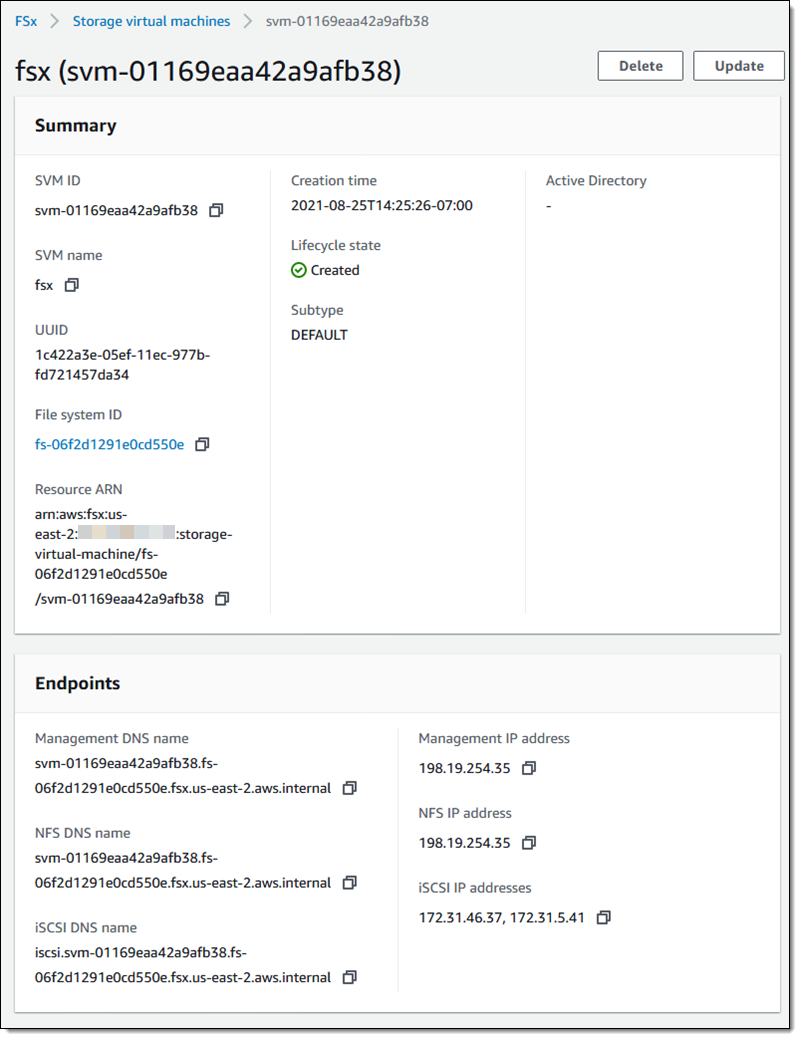

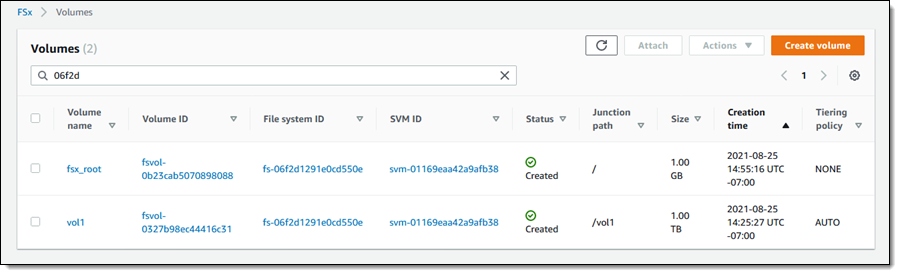

Amazon FSx for NetApp ONTAP

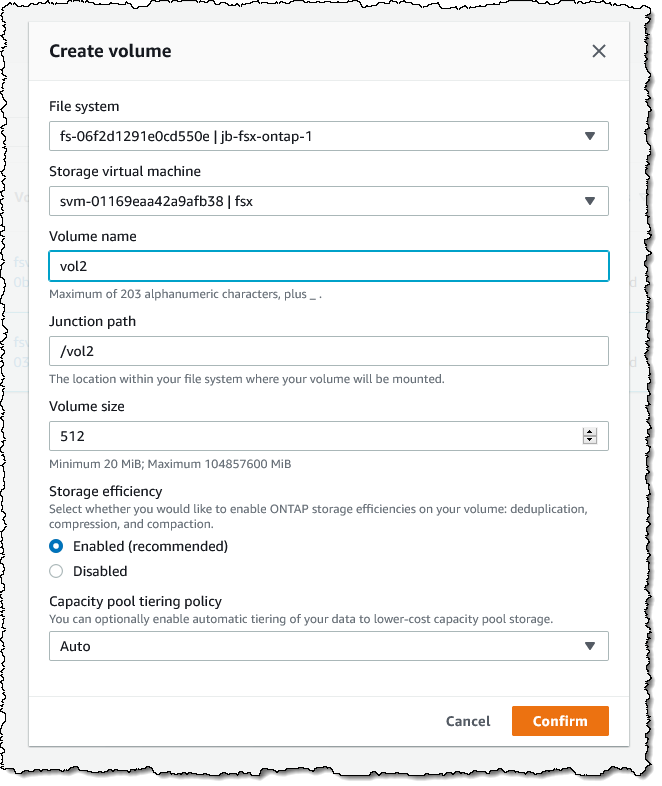

Today, we are pleased to announce Amazon FSx for NetApp ONTAP, a new storage service that allows you to launch and run fully managed NetApp ONTAP file systems in the cloud. Amazon FSx for NetApp ONTAP joins Amazon FSx for Lustre and Amazon FSx for Windows File Server as the newest file system offered by Amazon FSx.

Amazon FSx for NetApp ONTAP provides the full ONTAP experience with capabilities and APIs that make it easy to run applications that rely on NetApp or network-attached storage (NAS) appliances on AWS without changing your application code or how you manage your data. To learn more, read New – Amazon FSx for NetApp ONTAP.

Amazon S3

Amazon S3 Multi-Region Access Points is a new S3 feature that allows you to define global endpoints that span buckets in multiple AWS Regions. Using this feature, you can now build multi-region applications without adding complexity to your applications, with the same system architecture as if you were using a single AWS Region.

S3 Multi-Region Access Points is built on top of AWS Global Accelerator and routes S3 requests over the global AWS network. S3 Multi-Region Access Points dynamically routes your requests to the lowest latency copy of your data, so the upload and download performance can increase by up to 60 percent. It’s a great solution for applications that rely on reading files from S3 and also for applications like autonomous vehicles that need to write a lot of data to S3. To learn more about this new launch, read How to Accelerate Performance and Availability of Multi-Region Applications with Amazon S3 Multi-Region Access Points.

There’s also great news about the Amazon S3 Intelligent-Tiering storage class! The conditions of usage have been updated. There is no longer a minimum storage duration for all objects stored in S3 Intelligent-Tiering, and monitoring and automation charges for objects smaller than 128 KB have been removed. Smaller objects (128 KB or less) are not eligible for auto-tiering when stored in S3 Intelligent-Tiering. Now that there is no monitoring and automation charge for small objects and no minimum storage duration, you can use the S3 Intelligent-Tiering storage class by default for all your workloads with unknown or changing access patterns. To learn more about this announcement, read Amazon S3 Intelligent-Tiering – Improved Cost Optimizations for Short-Lived and Small Objects.

Amazon EFS

Amazon EFS Intelligent-Tiering is a new capability that makes it easier to optimize costs for shared file storage when access patterns change. When you enable Amazon EFS Intelligent-Tiering, it will store the files in the appropriate storage class at the right time. For example, if you have a file that is not used for a period of time, EFS Intelligent-Tiering will move the file to the Infrequent Access (IA) storage class. If the file is accessed again, Intelligent-Tiering will automatically move it back to the Standard storage class.

To get started with Intelligent-Tiering, enable lifecycle management in a new or existing file system and choose a lifecycle policy to automatically transition files between different storage classes. Amazon EFS Intelligent-Tiering is perfect for workloads with changing or unknown access patterns, such as machine learning inference and training, analytics, content management and media assets. To learn more about this launch, read Amazon EFS Intelligent-Tiering Optimizes Costs for Workloads with Changing Access Patterns.
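As a sketch of what such a lifecycle policy looks like in code, the Python snippet below builds the request for the EFS `PutLifecycleConfiguration` API. The 30-day threshold and the file system ID are assumptions, and the `efs_client` argument is expected to be a boto3 EFS client that you create yourself (for example with `boto3.client("efs")`).

```python
# Two policies: move files to Infrequent Access after 30 days without access,
# and move them back to the primary storage class on first access.
INTELLIGENT_TIERING_POLICIES = [
    {"TransitionToIA": "AFTER_30_DAYS"},
    {"TransitionToPrimaryStorageClass": "AFTER_1_ACCESS"},
]


def enable_intelligent_tiering(efs_client, file_system_id):
    """Apply both lifecycle policies to an existing file system."""
    return efs_client.put_lifecycle_configuration(
        FileSystemId=file_system_id,
        LifecyclePolicies=INTELLIGENT_TIERING_POLICIES,
    )


# Usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   enable_intelligent_tiering(boto3.client("efs"), "fs-0123456789abcdef0")
```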

AWS Backup

AWS Backup Audit Manager allows you to simplify data governance and compliance management of your backups across supported AWS services. It provides customizable controls and parameters, like backup frequency or retention period. You can also audit your backups to see if they satisfy your organizational and regulatory requirements. If one of your monitored backups drifts from your predefined parameters, AWS Backup Audit Manager will let you know so you can take corrective action. This new feature also enables you to generate reports to share with auditors and regulators. To learn more, read How to Monitor, Evaluate, and Demonstrate Backup Compliance with AWS Backup Audit Manager.

Amazon EBS

Amazon EBS direct APIs now support creating 64 TB EBS Snapshots directly from any block storage data, including on-premises. This was increased from 16 TB to 64 TB, allowing customers to create the largest snapshots and recover them to Amazon EBS io2 Block Express Volumes. To learn more, read Amazon EBS direct API documentation.

AWS Transfer Family

AWS Transfer Family Managed Workflows is a new feature that allows you to reduce the manual tasks of preprocessing your data. Managed Workflows does a lot of the heavy lifting for you, like setting up the infrastructure to run your code upon file arrival, continuously monitoring for errors, and verifying that all the changes to the data are logged. Managed Workflows helps you handle error scenarios so that failsafe modes trigger when needed.

AWS Transfer Family Managed Workflows allows you to configure all the necessary tasks at once so that tasks can automatically run in the background. Managed Workflows is available today in the AWS Transfer Family Management Console. To learn more, read Transfer Family FAQ.

Join us online for more!

Don’t forget to register and join us for the AWS Storage Day 2021 virtual event. The event will be live at 8:30 AM Pacific Time (11:30 AM Eastern Time) on September 2. The event will immediately re-stream for the Asia-Pacific audience with live Q&A moderators on Friday, September 3, at 8:30 AM Singapore Time. All sessions will be available on demand next week.

We look forward to seeing you there!

— Marcia

Post Syndicated from Molly Clancy original https://www.backblaze.com/blog/ransomware-economy/

Ransomware continues to proliferate for a simple reason—it’s profitable. And it’s profitable not just for the ransomware developers themselves—they’re just one part of the equation—but for a whole ecosystem of players who make up the ransomware economy. To understand the threats to small and medium-sized businesses (SMBs) and organizations today, it’s important to understand the scope and scale of what you’re up against.

Today, we’re digging into how the ransomware economy operates, including the broader ecosystem and the players involved, emerging threats to SMBs, and the overall financial footprint of ransomware worldwide.

This post is a part of our ongoing series on ransomware. Take a look at our other posts for more information on how businesses can defend themselves against a ransomware attack, and more.

Cybercriminals have long been described as operating in “gangs.” The label conjures images of hackers furiously tapping away at glowing workstations in a shadowy warehouse. But the work of the ransomware economy today is more likely to take place in a boardroom than a back alley. Cybercriminals have graduated from gangs to highly complex organized crime syndicates that operate ransomware brands as part of a sophisticated business model.

Operators of these syndicates are just as likely to be worrying about user experience and customer service as they are with building malicious code. A look at the branding on display on some syndicates’ leak sites makes the case plain that these groups are more than a collective of expert coders—they’re savvy businesspeople.

Ransomware operators are often synonymous with the software variant they brand, deploy, and sell. Many have rebranded over the years or splintered into affiliated organizations. Some of the top ransomware brands operating today, along with high profile attacks they have carried out, are shown in the infographic below:

The groups shown above do not constitute an exhaustive list. In June 2021, FBI Director Christopher Wray stated that the FBI was investigating 100 different ransomware variants, and new ones pop up every day. While some brands have existed for years (Ryuk, for example), the list is also likely obsolete as soon as it’s published. Ransomware brands bubble up, go bust, and reorganize, changing with the cybersecurity tides.

Chainalysis, a blockchain data platform, published their Ransomware 2021: Critical Mid-year Update that shows just how much brands fluctuate year to year and, they note, even month to month:

Ransomware operators may appear to be single entities, but there is a complex ecosystem of suppliers and ancillary providers behind them that exchange services with each other on the dark web. The flowchart below illustrates all the players and how they interact:

Cybercrime “gangs” could once be tracked down and caught like the David Levi Phishing Gang that was investigated and prosecuted in 2005. Today’s decentralized ecosystem, however, makes going after ransomware operators all the more difficult. These independent entities may never interact with each other outside of the dark web where they exchange services for cryptocurrency:

Beyond the collection of entities directly involved in the deployment of ransomware, the broader ecosystem includes other players on the victim’s side, who, for better or worse, stand to profit off of ransomware attacks. These include:

While these providers work on behalf of victims, they also perpetuate the cycle of ransomware. For example, insurance providers that cover businesses in the event of a ransomware attack often advise their customers to pay the ransom if they think it will minimize downtime as the cost of extended downtime can far exceed the cost of a ransom payment. This becomes problematic for a few reasons:

In the ransomware economy, operators and their affiliates are the threat actors that carry out attacks. This affiliate model where operators sell ransomware as a service (RaaS) represents one of the biggest threats to SMBs and organizations today.

Cybercrime syndicates realized they could essentially license and sell their tech to affiliates who then carry out their own misdeeds empowered by another criminal’s software. The syndicates, affiliates, and other entities each take a portion of the ransom.

Operators advertise these partner programs on the dark web and thoroughly vet affiliates before bringing them on to filter out law enforcement posing as low-level criminals. One advertisement by the REvil syndicate noted, “No doubt, in the FBI and other special services, there are people who speak Russian perfectly, but their level is certainly not the one native speakers have. Check these people by asking them questions about the history of Ukraine, Belarus, Kazakhstan or Russia, which cannot be googled. Authentic proverbs, expressions, etc.”

Though less sophisticated than some of the more notorious viruses, these “as a service” variants enable even amateur cybercriminals to carry out attacks. And they’re likely to carry those attacks out on the easiest prey—small businesses who don’t have the resources to implement adequate protections or weather extended downtime.

Hoping to increase their chances of being paid, low-level threat actors using RaaS typically demanded smaller ransoms, under $100,000, but that trend is changing. Coveware reported in August 2020 that affiliates are getting bolder in their demands. They reported the first six-figure payments to the Dharma ransomware group, an affiliate syndicate, in Q2 2020.

The one advantage savvy business owners have when it comes to RaaS: attacks are high volume (carried out against many thousands of targets) but low quality and easily identifiable by the time they are widely distributed. By staying on top of antivirus protections and detection, business owners can increase their chances of catching the attacks before it’s too late.

So, how much money do ransomware crime syndicates actually make? The short answer is that it’s difficult to know because so many ransomware attacks go unreported. To get some idea of the size of the ransomware economy, analysts have to do some sleuthing.

Chainalysis tracks transactions to blockchain addresses linked to ransomware attacks in order to capture the size of ransomware revenues. In their regular reporting on the cybercrime cryptocurrency landscape, they showed that the total amount paid by ransomware victims increased by 311% in 2020 to reach nearly $350 million worth of cryptocurrency. In May, they published an update after identifying new ransomware addresses that put the number over $406 million. They expect the number will only continue to grow.

Similarly, threat intel company Advanced Intelligence and cybersecurity firm HYAS tracked Bitcoin transactions to 61 addresses associated with the Ryuk syndicate. They estimate that the operator alone may be worth upwards of $150 million. Their analysis sheds some light on how ransomware operators turn their exploits and the ransoms paid into usable cash.

Extorted funds are gathered in holding accounts, passed to money laundering services, then either funneled back into the criminal market and used to pay for other criminal services or cashed out at real cryptocurrency exchanges. The process follows these steps, as illustrated below:

In an interesting development, the report found that Ryuk actually bypassed laundering services and cashed out some of their own cryptocurrency directly on exchanges using stolen identities—a brash move for any organized crime operation.

Even though the ransomware economy is ever-changing, having an awareness of where attacks come from and the threats you’re facing can prepare you if you ever face one yourself. To summarize:

We put this post together not to trade in fear, but to prepare SMBs and organizations with information in the fight against ransomware. And, you don’t have to fight it alone. Download our Complete Guide to Ransomware E-book and Guide for even more intel on ransomware today, plus steps to take to defend against ransomware, and how to respond if you do fall victim to an attack.

The post Introducing the Ransomware Economy appeared first on Backblaze Blog | Cloud Storage & Cloud Backup.

Post Syndicated from Megan O'Neil original https://aws.amazon.com/blogs/security/top-10-security-best-practices-for-securing-data-in-amazon-s3/

With more than 100 trillion objects in Amazon Simple Storage Service (Amazon S3) and an almost unimaginably broad set of use cases, securing data stored in Amazon S3 is important for every organization. So, we’ve curated the top 10 controls for securing your data in S3. By default, all S3 buckets are private and can be accessed only by users who are explicitly granted access through ACLs, S3 bucket policies, and identity-based policies. In this post, we review the latest S3 features and Amazon Web Services (AWS) services that you can use to help secure your data in S3, including organization-wide preventative controls such as AWS Organizations service control policies (SCPs). We also provide recommendations for S3 detective controls, such as Amazon GuardDuty for S3, AWS CloudTrail object-level logging, AWS Security Hub S3 controls, and CloudTrail configuration specific to S3 data events. In addition, we provide data protection options and considerations for encrypting data in S3. Finally, we review backup and recovery recommendations for data stored in S3. Given the broad set of use cases that S3 supports, you should determine the priority of controls applied in accordance with your specific use case and associated details.

Designate AWS accounts for public S3 use and prevent all other S3 buckets from inadvertently becoming public by enabling S3 Block Public Access. Use Organizations SCPs to confirm that the S3 Block Public Access setting cannot be changed. S3 Block Public Access provides a level of protection that works at the account level and also on individual buckets, including those that you create in the future. You have the ability to block existing public access—whether it was specified by an ACL or a policy—and to establish that public access isn’t granted to newly created items. This allows only designated AWS accounts to have public S3 buckets while blocking all other AWS accounts. To learn more about Organizations SCPs, see Service control policies.
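A minimal Python sketch of both halves of this control follows: the account-level Block Public Access settings, and an SCP that denies changes to them. The account ID and the statement ID are placeholders, and `s3control_client` is assumed to be a boto3 S3 Control client that you create yourself. Review any SCP carefully before attaching it, because it applies to every principal in the targeted accounts.

```python
import json

# All four settings enabled: block new public ACLs and policies,
# and ignore or restrict any that already exist.
BLOCK_ALL_PUBLIC_ACCESS = {
    "BlockPublicAcls": True,
    "IgnorePublicAcls": True,
    "BlockPublicPolicy": True,
    "RestrictPublicBuckets": True,
}


def enable_account_block_public_access(s3control_client, account_id):
    """Turn on S3 Block Public Access for a whole AWS account."""
    return s3control_client.put_public_access_block(
        AccountId=account_id,
        PublicAccessBlockConfiguration=BLOCK_ALL_PUBLIC_ACCESS,
    )


# Service control policy that prevents the account-level setting from being changed.
DENY_BLOCK_PUBLIC_ACCESS_CHANGES = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "DenyChangingS3BlockPublicAccess",  # placeholder statement ID
            "Effect": "Deny",
            "Action": ["s3:PutAccountPublicAccessBlock"],
            "Resource": "*",
        }
    ],
}

print(json.dumps(DENY_BLOCK_PUBLIC_ACCESS_CHANGES, indent=2))
```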

Check that the access granted in the Amazon S3 bucket policy is restricted to specific AWS principals, federated users, service principals, IP addresses, or VPCs that you provide. A bucket policy that allows a wildcard identity such as Principal “*” can potentially be accessed by anyone. A bucket policy that allows a wildcard action “*” can potentially allow a user to perform any action in the bucket. For more information, see Using bucket policies.
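A quick way to spot the two wildcard patterns described above is to scan the policy document itself. The Python sketch below is a simplified check, not a replacement for IAM Access Analyzer: it ignores `Condition` blocks, which can legitimately narrow a wildcard principal, and the sample policy is hypothetical.

```python
def _as_list(value):
    """Policy fields may hold a single value or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def wildcard_findings(policy):
    """Return (statement index, issue) pairs for Allow statements that use wildcards."""
    findings = []
    for index, statement in enumerate(_as_list(policy.get("Statement", []))):
        if statement.get("Effect") != "Allow":
            continue
        principal = statement.get("Principal")
        if principal == "*" or (
            isinstance(principal, dict) and "*" in _as_list(principal.get("AWS", []))
        ):
            findings.append((index, "wildcard principal"))
        actions = [action.lower() for action in _as_list(statement.get("Action", []))]
        if any(action in ("*", "s3:*") for action in actions):
            findings.append((index, "wildcard action"))
    return findings


# Hypothetical bucket policy with one problem per statement.
SAMPLE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Principal": "*", "Action": "s3:GetObject",
         "Resource": "arn:aws:s3:::example-bucket/*"},
        {"Effect": "Allow", "Principal": {"AWS": "arn:aws:iam::111122223333:role/app"},
         "Action": "s3:*", "Resource": "arn:aws:s3:::example-bucket/*"},
    ],
}

print(wildcard_findings(SAMPLE_POLICY))
```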

Identity policies are policies assigned to AWS Identity and Access Management (IAM) users and roles and should follow the principle of least privilege to help prevent inadvertent access or changes to resources. Establishing least privilege identity policies includes defining specific actions such as s3:GetObject or s3:PutObject instead of s3:*. In addition, you can use predefined AWS-wide condition keys and S3‐specific condition keys to specify additional controls on specific actions. An example of an AWS-wide condition key commonly used for S3 is IpAddress: { aws:SourceIp: “10.10.10.10”}, where you can specify your organization’s internal IP space for specific actions in S3. See IAM.1 in Monitor S3 using Security Hub and CloudWatch Logs to learn how to use Security Hub to detect policies with wildcard actions and wildcard resources in your accounts.
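To illustrate, here is a small Python helper that assembles a least-privilege identity policy with specific actions and a source IP condition. The bucket name, prefix, and CIDR range are placeholders chosen for the example.

```python
import json


def scoped_s3_policy(bucket, prefix, allowed_cidr):
    """Identity policy allowing only object reads and writes under one prefix, from one IP range."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadWriteFromInternalNetwork",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],  # specific actions, not s3:*
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
                "Condition": {"IpAddress": {"aws:SourceIp": allowed_cidr}},
            }
        ],
    }


# Placeholder values for illustration.
policy = scoped_s3_policy("example-bucket", "reports/", "10.10.10.0/24")
print(json.dumps(policy, indent=2))
```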

Consider splitting read, write, and delete access. Allow only write access to users or services that generate and write data to S3 but don’t need to read or delete objects. Define an S3 lifecycle policy to remove objects on a schedule instead of through manual intervention— see Managing your storage lifecycle. This allows you to remove delete actions from your identity-based policies. Verify your policies with the IAM policy simulator. Use IAM Access Analyzer to help you identify, review, and design S3 bucket policies or IAM policies that grant access to your S3 resources from outside of your AWS account.
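For example, a lifecycle rule that expires objects on a schedule might be built as in the Python sketch below. The prefix, the 30-day retention, and the rule ID format are assumptions, and `s3_client` is expected to be a boto3 S3 client that you create yourself. Note that this call replaces any lifecycle configuration already present on the bucket.

```python
def expiration_rule(prefix, days):
    """Lifecycle configuration that expires objects under a prefix after a number of days."""
    label = prefix.strip("/") or "all"
    return {
        "Rules": [
            {
                "ID": f"expire-{label}-after-{days}d",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Expiration": {"Days": days},
            }
        ]
    }


def apply_expiration_rule(s3_client, bucket, prefix, days):
    """Set the bucket's lifecycle configuration to the expiration rule."""
    return s3_client.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration=expiration_rule(prefix, days),
    )


print(expiration_rule("logs/", 30))
```

With a rule like this in place, the identities that write data no longer need `s3:DeleteObject` in their policies.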

In 2020, GuardDuty announced coverage for S3. Turning this on enables GuardDuty to continuously monitor and profile S3 data access events (data plane operations) and S3 configuration (control plane APIs) to detect suspicious activities, such as requests coming from unusual geolocations, the disabling of preventative controls, and API call patterns consistent with an attempt to discover misconfigured bucket permissions. To achieve this, GuardDuty uses a combination of anomaly detection, machine learning, and continuously updated threat intelligence. To learn more, including how to enable GuardDuty for S3, see Amazon S3 protection in Amazon GuardDuty.

In May of 2020, AWS re-launched Amazon Macie. Macie is a fully managed service that helps you discover and protect your sensitive data by using machine learning to automatically review and classify your data in S3. Enabling Macie organization wide is a straightforward and cost-efficient method for you to get a central, continuously updated view of your entire organization’s S3 environment and monitor your adherence to security best practices through a central console. Macie continually evaluates all buckets for encryption and access control, alerting you of buckets that are public, unencrypted, or shared or replicated outside of your organization. Macie evaluates sensitive data using a fully-managed list of common sensitive data types and custom data types you create, and then issues findings for any object where sensitive data is found.

There are four options for encrypting data in S3, including client-side and server-side options. With server-side encryption, S3 encrypts your data at the object level as it writes it to disks in AWS data centers and decrypts it when you access it. As long as you authenticate your request and you have access permissions, there is no difference in the way you access encrypted or unencrypted objects.

The first two options use AWS Key Management Service (AWS KMS). AWS KMS lets you create and manage cryptographic keys and control their use across a wide range of AWS services and their applications. There are options for managing which encryption key AWS uses to encrypt your S3 data.

Amazon S3 is designed for durability of 99.999999999 percent of objects across multiple Availability Zones, is resilient against events that impact an entire zone, and designed for 99.99 percent availability over a given year. In many cases, when it comes to strategies to back up your data in S3, it’s about protecting buckets and objects from accidental deletion, in which case S3 Versioning can be used to preserve, retrieve, and restore every version of every object stored in your buckets. S3 Versioning lets you keep multiple versions of an object in the same bucket and can help you recover objects from accidental deletion or overwrite. Keep in mind this feature has costs associated. You may consider S3 Versioning in selective scenarios such as S3 buckets that store critical backup data or sensitive data.

With S3 Versioning enabled on your S3 buckets, you can optionally add another layer of security by configuring a bucket to enable multi-factor authentication (MFA) delete. With this configuration, the bucket owner must include two forms of authentication in any request to delete a version or to change the versioning state of the bucket.

S3 Object Lock is a feature that helps you mitigate data loss by storing objects using a write-once-read-many (WORM) model. By using Object Lock, you can prevent an object from being overwritten or deleted for a fixed time or indefinitely. Keep in mind that there are specific use cases for Object Lock, including scenarios where it is imperative that data is not changed or deleted after it has been written.
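To make the WORM model more concrete, here is a minimal sketch of a default retention configuration in the shape that the S3 Object Lock configuration accepts, plus a simplified check of whether an object version is still inside its retention period. The 30-day period and the helper names are assumptions for illustration. The check deliberately ignores legal holds and the bypass permission available in governance mode.

```python
from datetime import datetime, timedelta, timezone


def default_retention_config(mode: str, days: int) -> dict:
    """Build an Object Lock configuration with a default retention rule."""
    if mode not in ("GOVERNANCE", "COMPLIANCE"):
        raise ValueError("mode must be GOVERNANCE or COMPLIANCE")
    return {
        "ObjectLockEnabled": "Enabled",
        "Rule": {"DefaultRetention": {"Mode": mode, "Days": days}},
    }


def retain_until(written_at: datetime, days: int) -> datetime:
    """Date until which a version written at `written_at` stays protected."""
    return written_at + timedelta(days=days)


def is_locked(now: datetime, written_at: datetime, days: int) -> bool:
    """Simplified view: a version is locked until its retention period ends."""
    return now < retain_until(written_at, days)


if __name__ == "__main__":
    written = datetime(2021, 9, 1, tzinfo=timezone.utc)
    print(default_retention_config("COMPLIANCE", 30))
    print(retain_until(written, 30).isoformat())
```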

Amazon S3 is integrated with CloudTrail. CloudTrail captures a subset of API calls, including calls from the S3 console and code calls to the S3 APIs. In addition, you can enable CloudTrail data events for all your buckets or for a list of specific buckets. Keep in mind that a very active S3 bucket can generate a large amount of log data and increase CloudTrail costs. If cost is a concern, consider enabling this additional logging only for S3 buckets with critical data.

Server access logging provides detailed records of the requests that are made to a bucket. Server access logs can assist you in security and access audits.

Although S3 stores your data across multiple geographically diverse Availability Zones by default, your compliance requirements might dictate that you store data at even greater distances. Cross-region replication (CRR) allows you to replicate data between distant AWS Regions to help satisfy these requirements. CRR enables automatic, asynchronous copying of objects across buckets in different AWS Regions. For more information on object replication, see Replicating objects. Keep in mind that this feature has costs associated. You might consider CRR in selective scenarios such as S3 buckets that store critical backup data or sensitive data.

Security Hub provides you with a comprehensive view of your security state in AWS and helps you check your environment against security industry standards and best practices. Security Hub collects security data from across AWS accounts, services, and supported third-party partner products and helps you analyze your security trends and identify the highest priority security issues.

The AWS Foundational Security Best Practices standard is a set of controls that detect when your deployed accounts and resources deviate from security best practices, and provides clear remediation steps. The controls contain best practices from across multiple AWS services, including S3. We recommend you enable the AWS Foundational Security Best Practices as it includes the following detective controls for S3 and IAM:

IAM.1: IAM policies should not allow full “*” administrative privileges.

S3.1: Block Public Access setting should be enabled

S3.2: S3 buckets should prohibit public read access

S3.3: S3 buckets should prohibit public write access

S3.4: S3 buckets should have server-side encryption enabled

S3.5: S3 buckets should require requests to use Secure Socket Layer

S3.6: Amazon S3 permissions granted to other AWS accounts in bucket policies should be restricted

S3.8: S3 Block Public Access setting should be enabled at the bucket level

For details of each control, including remediation steps, please review the AWS Foundational Security Best Practices controls.
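As one example of what remediation can look like, control S3.5 is commonly addressed with a bucket policy that denies any request not sent over TLS. The sketch below builds such a policy document using the aws:SecureTransport condition key. The bucket name and function name are hypothetical, and you should follow the remediation steps in the control documentation rather than treat this as the official fix.

```python
import json


def deny_insecure_transport_policy(bucket: str) -> dict:
    """Bucket policy that denies every S3 action when the request is not sent over TLS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both bucket-level and object-level requests.
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


if __name__ == "__main__":
    # "example-bucket" is a placeholder name.
    print(json.dumps(deny_insecure_transport_policy("example-bucket"), indent=2))
```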

If there is a specific S3 API activity not covered above that you’d like to be alerted on, you can use CloudTrail Logs together with Amazon CloudWatch for S3 to do so. CloudTrail integration with CloudWatch Logs delivers S3 bucket-level API activity captured by CloudTrail to a CloudWatch log stream in the CloudWatch log group that you specify. You create CloudWatch alarms for monitoring specific API activity and receive email notifications when the specific API activity occurs.
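A CloudWatch alarm of this kind is typically driven by a metric filter on the log group. The following sketch assembles a JSON-style filter pattern that matches CloudTrail records for a chosen set of S3 API calls. The event names passed in are only examples, and the helper name is made up for this illustration. Check the resulting pattern against your own log data before relying on it.

```python
def s3_event_filter_pattern(event_names: list) -> str:
    """Build a CloudWatch Logs filter pattern matching the given S3 API calls."""
    if not event_names:
        raise ValueError("at least one event name is required")
    # One clause per API call, combined with logical OR.
    names = " || ".join(f'($.eventName = "{name}")' for name in event_names)
    return f'{{ ($.eventSource = "s3.amazonaws.com") && ({names}) }}'


if __name__ == "__main__":
    # Example: alert on changes to bucket ACLs and bucket policies.
    print(s3_event_filter_pattern(["PutBucketAcl", "PutBucketPolicy"]))
```

You would then attach the pattern to a metric filter on the CloudWatch log group that receives your CloudTrail logs, and create an alarm on the resulting metric.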

By using the ten practices described in this blog post, you can build strong protection mechanisms for your data in Amazon S3, including least privilege access, encryption of data at rest, blocking public access, logging, monitoring, and configuration checks.

Depending on your use case, you should consider additional protection mechanisms. For example, there are security-related controls available for large shared datasets in S3 such as Access Points, which you can use to decompose one large bucket policy into separate, discrete access point policies for each application that needs to access the shared data set. To learn more about S3 security, see Amazon S3 Security documentation.

Now that you’ve reviewed the top 10 security best practices to make your data in S3 more secure, make sure you have these controls set up in your AWS accounts—and go build securely!

If you have feedback about this post, submit comments in the Comments section below. If you have questions about this post, start a new thread on the Amazon S3 forum or contact AWS Support.


Post Syndicated from Caitlin Condon original https://blog.rapid7.com/2021/09/02/active-exploitation-of-confluence-server-cve-2021-26084/

On August 25, 2021, Atlassian published details on CVE-2021-26084, a critical remote code execution vulnerability in Confluence Server and Confluence Data Center. The vulnerability arises from an OGNL injection flaw and allows authenticated attackers, “and in some instances an unauthenticated user,” to execute arbitrary code on Confluence Server or Data Center instances.

The vulnerable endpoints can be accessed by a non-administrator user or unauthenticated user if “Allow people to sign up to create their account” is enabled. To check whether this is enabled, go to COG > User Management > User Signup Options. The affected versions are before version 6.13.23, from version 6.14.0 before 7.4.11, from version 7.5.0 before 7.11.6, and from version 7.12.0 before 7.12.5.
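The affected version ranges listed above can be turned into a quick check. This is a minimal sketch that assumes plain dotted numeric version strings such as 7.12.4; the function names are illustrative, and it is not a substitute for Atlassian’s advisory or a vulnerability scanner.

```python
def parse_version(version: str) -> tuple:
    """Convert '7.4.11' into (7, 4, 11), padding missing parts with zeros."""
    parts = [int(p) for p in version.split(".")]
    parts += [0] * (3 - len(parts))
    return tuple(parts)


# (inclusive lower bound, exclusive upper bound); None means "no lower bound".
AFFECTED_RANGES = [
    (None, "6.13.23"),
    ("6.14.0", "7.4.11"),
    ("7.5.0", "7.11.6"),
    ("7.12.0", "7.12.5"),
]


def is_affected(version: str) -> bool:
    """Return True if the version falls inside one of the affected ranges."""
    v = parse_version(version)
    for low, high in AFFECTED_RANGES:
        above_low = low is None or v >= parse_version(low)
        if above_low and v < parse_version(high):
            return True
    return False


if __name__ == "__main__":
    for candidate in ("6.13.22", "6.13.23", "7.4.10", "7.12.4", "7.12.5"):
        print(candidate, is_affected(candidate))
```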

Proof-of-concept exploit code has been publicly available since August 31, 2021, and active exploitation has been reported as of September 2. Confluence Server and Data Center customers who have not already done so should update to a fixed version immediately, without waiting for their typical patch cycles. For a complete list of fixed versions, see Atlassian’s advisory here.

For full vulnerability analysis, including triggers and check information, see Rapid7’s analysis in AttackerKB.

InsightVM and Nexpose customers can assess their exposure to CVE-2021-26084 with remote vulnerability checks as of the August 26, 2021 content release.

Post Syndicated from Channy Yun original https://aws.amazon.com/blogs/aws/new-amazon-efs-intelligent-tiering-optimizes-costs-for-workloads-with-changing-access-patterns/

Amazon Elastic File System (Amazon EFS) offers four storage classes: two Standard storage classes, Amazon EFS Standard and Amazon EFS Standard-Infrequent Access (EFS Standard-IA), and two One Zone storage classes, Amazon EFS One Zone and Amazon EFS One Zone-Infrequent Access (EFS One Zone-IA). Standard storage classes store data within and across multiple Availability Zones (AZs). One Zone storage classes store data redundantly within a single AZ, at a 47 percent lower price compared to file systems using Standard storage classes, for workloads that don’t require multi-AZ resilience.

The EFS Standard and EFS One Zone storage classes are performance-optimized to deliver lower latency. The Infrequent Access (IA) storage classes are cost-optimized for files that are not accessed every day. With EFS lifecycle management, you can move files that have not been accessed for the duration of the lifecycle policy (7, 14, 30, 60, or 90 days) to the IA storage classes. This will reduce the cost of your storage by up to 92 percent compared to EFS Standard and EFS One Zone storage classes respectively.

Customers love the cost savings provided by the IA storage classes, but they also want to ensure that they won’t get unexpected data access charges if access patterns change and files that have transitioned to IA are accessed frequently. Reading from or writing data to the IA storage classes incurs a data access charge for every access.

Today, we are launching Amazon EFS Intelligent-Tiering, a new EFS lifecycle management feature that automatically optimizes costs for shared file storage when data access patterns change, without operational overhead.

With EFS Intelligent-Tiering, lifecycle management monitors the access patterns of your file system and moves files that have not been accessed for the duration of the lifecycle policy from EFS Standard or EFS One Zone to EFS Standard-IA or EFS One Zone-IA, depending on whether your file system uses EFS Standard or EFS One Zone storage classes. If the file is accessed again, it is moved back to EFS Standard or EFS One Zone storage classes.

EFS Intelligent-Tiering optimizes your costs even if your workload file data access patterns change. You’ll never have to worry about unbounded data access charges because you only pay for data access charges for transitions between storage classes.
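The tiering behavior described above can be modeled in a few lines. This is only a simplified sketch of the decision rule, assuming whole-day granularity and that an access resets the idle clock. The function name is hypothetical, and the service’s actual transition timing may differ.

```python
from datetime import date


def storage_class_after(last_access: date, today: date,
                        transition_to_ia_days: int,
                        primary: str = "EFS Standard") -> str:
    """Return the storage class a file would be in under the simplified model."""
    idle_days = (today - last_access).days
    if idle_days >= transition_to_ia_days:
        return f"{primary}-IA"  # not accessed for the policy duration
    return primary  # accessed recently, stays in (or returns to) the primary class


if __name__ == "__main__":
    today = date(2021, 9, 2)
    # Idle for 32 days with a 30-day policy: moves to the IA class.
    print(storage_class_after(date(2021, 8, 1), today, 30))
    # Accessed today: with "On first access", it is back in the primary class.
    print(storage_class_after(today, today, 30, primary="EFS One Zone"))
```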

Getting started with EFS Intelligent-Tiering

To get started with EFS Intelligent-Tiering, create a file system using the AWS Management Console, enable lifecycle management, and set two lifecycle policies.

Choose a Transition into IA option to move infrequently accessed files to the IA storage classes. From the drop down list, you can choose lifecycle policies of 7, 14, 30, 60, or 90 days. Additionally, choose a Transition out of IA option and select On first access to move files back to EFS Standard or EFS One Zone storage classes on access.

For an existing file system, you can click the Edit button on your file system to enable or change lifecycle management and EFS Intelligent-Tiering.

Also, you can use the PutLifecycleConfiguration API action or put-lifecycle-configuration command specifying the file system ID of the file system for which you are enabling lifecycle management and the two policies for EFS Intelligent-Tiering.

$ aws efs put-lifecycle-configuration \
    --file-system-id File-System-ID \
    --lifecycle-policies '[{"TransitionToIA":"AFTER_30_DAYS"},{"TransitionToPrimaryStorageClass":"AFTER_1_ACCESS"}]' \
    --region us-west-2 \
    --profile adminuser

You get the following response:

{
    "LifecyclePolicies": [
        {
            "TransitionToIA": "AFTER_30_DAYS"
        },
        {
            "TransitionToPrimaryStorageClass": "AFTER_1_ACCESS"
        }
    ]
}

To disable EFS Intelligent-Tiering, set both the Transition into IA and Transition out of IA options to None. This will disable lifecycle management, and your files will remain on the storage class they’re on.

Any files that have already started to move between storage classes at the time that you disabled EFS Intelligent-Tiering will complete moving to their new storage class. You can disable transition policies independently of each other.
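If you script these settings, a small helper can build the value passed to --lifecycle-policies and reject durations that the console does not offer. This is a sketch with hypothetical function and parameter names. Treating an empty list as "both options set to None" is an assumption here, so confirm it against the Amazon EFS User Guide before using it to disable lifecycle management.

```python
import json

# Durations offered for the Transition into IA option.
ALLOWED_DAYS = (7, 14, 30, 60, 90)


def lifecycle_policies(transition_to_ia_days=None, on_first_access=False) -> list:
    """Build the lifecycle policy list; each policy can be set independently."""
    policies = []
    if transition_to_ia_days is not None:
        if transition_to_ia_days not in ALLOWED_DAYS:
            raise ValueError(f"days must be one of {ALLOWED_DAYS}")
        policies.append({"TransitionToIA": f"AFTER_{transition_to_ia_days}_DAYS"})
    if on_first_access:
        policies.append({"TransitionToPrimaryStorageClass": "AFTER_1_ACCESS"})
    return policies


if __name__ == "__main__":
    # Both policies set: the EFS Intelligent-Tiering configuration.
    print(json.dumps(lifecycle_policies(30, on_first_access=True)))
    # Neither policy set (assumed to correspond to both options being None).
    print(json.dumps(lifecycle_policies()))
```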

For more information, see Amazon EFS lifecycle management in the Amazon EFS User Guide.

Now Available

Amazon EFS Intelligent-Tiering is available in all AWS Regions where Amazon EFS is available. To learn more, join us for the third annual and completely free-to-attend AWS Storage Day 2021 and tune in to our livestream on the AWS Twitch channel today.

You can send feedback to the AWS forum for Amazon EFS or through your usual AWS Support contacts.

– Channy

Post Syndicated from Alex Casalboni original https://aws.amazon.com/blogs/aws/s3-multi-region-access-points-accelerate-performance-availability/

Building multi-region applications allows you to improve latency for end users, achieve higher availability and resiliency in case of unexpected disasters, and adhere to business requirements related to data durability and data residency. For example, you might want to reduce the overall latency of dynamic API calls to your backend services. Or you might want to extend a single-region deployment to handle internet routing issues, failures of submarine cables, or regional connectivity issues – and therefore avoid costly downtime. Today, thanks to multi-region data replication functions such as Amazon DynamoDB global tables, Amazon Aurora global database, Amazon ElastiCache global datastore, and Amazon Simple Storage Service (Amazon S3) cross-region replication, you can build multi-region applications across 25 AWS Regions worldwide.

Yet, when it comes to implementing multi-region applications, you often have to make your code region-aware and take care of the heavy lifting of interacting with the correct regional resources, whether it’s the closest or the most available. For example, you might have three S3 buckets with object replication across three AWS Regions. Your application code needs to be aware of how many copies of the bucket exist and where they are located, which bucket is the closest to the caller, and how to fall back to other buckets in case of issues. The complexity grows when you add new regions to your multi-region architecture and redeploy your stack in each region whenever a global configuration changes.
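To give a sense of that heavy lifting, here is a sketch of the region-aware selection logic an application might otherwise carry itself: pick the lowest-latency healthy replica and fall back to the next one when a region has issues. The Region list, bucket names, latency figures, and health flags are all made-up inputs for illustration.

```python
def pick_bucket(replicas: dict, latency_ms: dict, healthy: dict) -> str:
    """Return the bucket in the closest healthy Region, falling back as needed."""
    candidates = [region for region in replicas if healthy.get(region, False)]
    if not candidates:
        raise RuntimeError("no healthy replica available")
    closest = min(candidates, key=lambda region: latency_ms[region])
    return replicas[closest]


if __name__ == "__main__":
    # Hypothetical replicated buckets, one per Region.
    replicas = {
        "us-east-1": "example-bucket-use1",
        "eu-west-1": "example-bucket-euw1",
        "ap-southeast-2": "example-bucket-apse2",
    }
    latency_ms = {"us-east-1": 120, "eu-west-1": 20, "ap-southeast-2": 280}
    healthy = {"us-east-1": True, "eu-west-1": False, "ap-southeast-2": True}
    # eu-west-1 is closest but unhealthy, so the caller falls back to us-east-1.
    print(pick_bucket(replicas, latency_ms, healthy))
```

Every new Region adds another entry to each of these maps, which is the kind of configuration that has to be redeployed whenever the global setup changes.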