Post Syndicated from LGR original https://www.youtube.com/watch?v=avYaKrL3YiY

Security updates for Friday

Post Syndicated from original https://lwn.net/Articles/895202/

Security updates have been issued by Debian (chromium, postgresql-11, postgresql-13, and waitress), Fedora (curl, java-1.8.0-openjdk-aarch32, keylime, and pcre2), Oracle (gzip and zlib), Red Hat (subversion:1.10), SUSE (clamav, documentation-suse-openstack-cloud, kibana, openstack-keystone, openstack-monasca-notification, e2fsprogs, gzip, and kernel), and Ubuntu (libvorbis and rsyslog).

Update for CIS Google Cloud Platform Foundation Benchmarks – Version 1.3.0

Post Syndicated from Ryan Blanchard original https://blog.rapid7.com/2022/05/13/update-for-cis-google-cloud-platform-foundation-benchmarks-version-1-3-0/

The Center for Internet Security (CIS) recently released an updated version of their Google Cloud Platform Foundation Benchmarks – Version 1.3.0. Expanding on previous iterations, the update adds 21 new benchmarks covering best practices for securing Google Cloud environments.

The updates were broad in scope, with recommendations covering configurations and policies ranging from resource segregation to Compute and Storage. In this post, we’ll briefly cover what CIS Benchmarks are, dig into a few key highlights from the newly released version, and show how Rapid7 InsightCloudSec can help your teams implement and maintain compliance with new guidance as it becomes available.

What are CIS Benchmarks?

In the rare case that you’ve never come across them, the CIS Benchmarks are a set of recommendations and best practices, developed by contributors across the cybersecurity community, that give organizations and security practitioners a baseline of configurations and policies to better protect their applications, infrastructure, and data.

While not a regulatory requirement, the CIS Benchmarks provide a foundation for establishing a strong security posture, and as a result, many organizations use them to guide the creation of their own internal policies. As new benchmarks are created and updates are announced, many throughout the industry sift through the recommendations to determine whether or not they should be implementing the guidelines in their own environments.

CIS Benchmarks can be even more beneficial to practitioners taking on emerging technology areas where they may not have the background knowledge or experience to confidently implement security programs and policies. The GCP Foundation Benchmarks, for example, can be a vital asset for people who are getting started in cloud security or who are taking on the added responsibility of their organization’s cloud environments.

Key highlights from CIS GCP Foundation Benchmarks 1.3.0

Compared with benchmarks for more established security areas such as endpoint operating systems and Linux, those developed for cloud service providers (CSPs) are relatively new. As a result, their updates tend to add a substantial number of new recommendations. Let’s dig into some of the key highlights from version 1.3.0 and why they’re worth considering for your own environment.

2.13 – Ensure Cloud Asset Inventory is enabled

Enabling Cloud Asset Inventory is critical to maintaining visibility into your entire environment, providing a real-time and retroactive (5 weeks of history retained) view of all assets across your cloud estate. This matters because, to effectively secure your cloud assets and data, you first need insight into everything that’s running within your environment. Beyond providing an inventory snapshot, Cloud Asset Inventory also surfaces metadata related to those assets, providing added context when assessing the sensitivity and/or integrity of your cloud resources.
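As a rough illustration of what an automated check for 2.13 might look like, the sketch below assumes you have already fetched each project’s enabled services (for example with `gcloud services list --enabled --format=json`) and reduced them to an array of service names. The function names and input shape are hypothetical; the check simply looks for the Cloud Asset API, `cloudasset.googleapis.com`.

```javascript
// Service name of the Cloud Asset Inventory API
const CLOUD_ASSET_API = "cloudasset.googleapis.com";

/**
 * Hypothetical check for CIS GCP 2.13: true when the Cloud Asset API
 * appears in a project's list of enabled service names.
 * @param {string[]} enabledServices e.g. ["compute.googleapis.com", ...]
 */
function isCloudAssetInventoryEnabled(enabledServices) {
  return enabledServices.includes(CLOUD_ASSET_API);
}

// Evaluate several projects and return the IDs of non-compliant ones
function findNonCompliantProjects(servicesByProject) {
  return Object.entries(servicesByProject)
    .filter(([, services]) => !isCloudAssetInventoryEnabled(services))
    .map(([projectId]) => projectId);
}

const report = findNonCompliantProjects({
  "prod-project": ["compute.googleapis.com", "cloudasset.googleapis.com"],
  "dev-project": ["compute.googleapis.com"],
});
console.log(report); // [ 'dev-project' ]
```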

4.11 – Ensure that compute instances have Confidential Computing enabled

This is a powerful new configuration that enables organizations to secure their mission-critical data throughout its lifecycle, including while it is actively in use. Typically, encryption is only available while data is either at rest or in transit. Using AMD Secure Encrypted Virtualization (SEV), Confidential Computing keeps customer data encrypted in memory even while it is being processed, for example while it is being indexed or queried.
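An audit for 4.11 can be sketched as a filter over Compute Engine instance resources. This assumes instance objects shaped like the Compute API’s JSON, where Confidential Computing is reported as `confidentialInstanceConfig.enableConfidentialCompute`; the function name and sample data are hypothetical, and a missing field is treated as "not enabled".

```javascript
/**
 * Hypothetical check for CIS GCP 4.11: return the names of instances
 * that do not have Confidential Computing enabled.
 */
function instancesWithoutConfidentialCompute(instances) {
  return instances
    .filter((inst) => inst.confidentialInstanceConfig?.enableConfidentialCompute !== true)
    .map((inst) => inst.name);
}

const sample = [
  { name: "db-1", confidentialInstanceConfig: { enableConfidentialCompute: true } },
  { name: "web-1" }, // field absent: treated as non-compliant
  { name: "batch-1", confidentialInstanceConfig: { enableConfidentialCompute: false } },
];

console.log(instancesWithoutConfidentialCompute(sample)); // [ 'web-1', 'batch-1' ]
```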

A dozen new recommendations for securing GCP databases

The new benchmarks add 12 recommendations targeted at securing GCP databases, each of which is geared toward addressing issues related to data loss or leakage. This aligns with Verizon’s most recent Data Breach Investigations Report, which found that data stores remained the most commonly exploited cloud service, with more than 40% of breaches resulting from misconfiguration of cloud data stores such as AWS S3 buckets, Azure Blob Storage, and Google Cloud Storage buckets.
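To make "data leakage" concrete, here is one data-exposure check of the kind these recommendations cover: flagging Cloud SQL instances that have a public IP and admit any IPv4 address (`0.0.0.0/0`). It assumes objects shaped like the Cloud SQL Admin API `DatabaseInstance` resource (only the fields used here); the function name and sample instances are hypothetical.

```javascript
/**
 * Hypothetical check: an instance is "open to the world" when a public
 * IPv4 address is enabled and an authorized network is 0.0.0.0/0.
 */
function openToTheWorld(instance) {
  const ipConfig = instance.settings?.ipConfiguration ?? {};
  const networks = ipConfig.authorizedNetworks ?? [];
  return ipConfig.ipv4Enabled === true && networks.some((n) => n.value === "0.0.0.0/0");
}

const instances = [
  { name: "orders-db", settings: { ipConfiguration: { ipv4Enabled: true, authorizedNetworks: [{ value: "0.0.0.0/0" }] } } },
  { name: "users-db", settings: { ipConfiguration: { ipv4Enabled: true, authorizedNetworks: [{ value: "203.0.113.0/24" }] } } },
  { name: "private-db", settings: { ipConfiguration: { ipv4Enabled: false } } },
];

console.log(instances.filter(openToTheWorld).map((i) => i.name)); // [ 'orders-db' ]
```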

How InsightCloudSec can help your team align to new CIS Benchmarks

In response to the recent update, Rapid7 has released a new compliance pack, GCP 1.3.0, for InsightCloudSec so that security teams can easily check their environment for adherence to the new benchmarks. The new pack contains 57 Insights to help organizations reconcile their existing GCP configurations against the new recommendations and best practices. Should your team need to make any adjustments based on the benchmarks, InsightCloudSec users can leverage bots to notify the necessary team(s) or to make the changes automatically.

In subsequent releases, we will continue to update the pack as more filters and Insights are available. If you have specific questions on this capability or a supported GCP resource, reach out to us through the Customer Portal.

Additional reading:

- Is Your Kubernetes Cluster Ready for Version 1.24?

- Cloud-Native Application Protection (CNAPP): What’s Behind the Hype?

- 2022 Cloud Misconfigurations Report: A Quick Look at the Latest Cloud Security Breaches and Attack Trends

- InsightCloudSec Supports the Recently Updated NSA/CISA Kubernetes Hardening Guide

Announcing the Cloudflare Images Sourcing Kit

Post Syndicated from Paulo Costa original https://blog.cloudflare.com/cloudflare-images-sourcing-kit/

When we announced Cloudflare Images to the world, we introduced a way to store images within the product and help customers move away from the egress fees incurred when using remote sources for their deliveries via Cloudflare.

To store the images in Cloudflare, customers can upload them via UI with a simple drag and drop, or via API for scenarios with a high number of objects for which scripting their way through the upload process makes more sense.

To add flexibility in how images are imported, we’ve recently also added the ability to upload via URL and to define custom names and paths for your images, allowing a simple mapping between customer repositories and the objects in Cloudflare. It’s also possible to serve images from a custom hostname, which gives you control over the path your end users see, can improve delivery performance by removing the need for an additional TLS negotiation, and strengthens brand recognition through URL consistency.
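For reference, an upload-by-URL with a custom name can be sketched against the Cloudflare Images API endpoint `POST /client/v4/accounts/{account_id}/images/v1`, using the multipart form fields `url` (where Cloudflare fetches the image) and `id` (the custom name/path). The account ID, token, and example image are placeholders, and `buildUploadByUrlRequest` is a hypothetical helper; it only builds the request, which you would then send with `fetch`.

```javascript
/**
 * Sketch: build a Cloudflare Images upload-by-URL request with a custom ID.
 * accountId and apiToken are placeholders. Send with `await fetch(request)`
 * (Node 18+, browsers, or Workers).
 */
function buildUploadByUrlRequest({ accountId, apiToken, sourceUrl, customId }) {
  const form = new FormData();
  form.append("url", sourceUrl); // Cloudflare fetches the image from this URL
  if (customId) form.append("id", customId); // optional custom name/path
  return new Request(
    `https://api.cloudflare.com/client/v4/accounts/${accountId}/images/v1`,
    {
      method: "POST",
      headers: { Authorization: `Bearer ${apiToken}` },
      body: form, // the multipart/form-data boundary is set automatically
    }
  );
}

const req = buildUploadByUrlRequest({
  accountId: "ACCOUNT_ID",
  apiToken: "API_TOKEN",
  sourceUrl: "https://my-bucket.s3.us-west-2.amazonaws.com/folderA/puppy.png",
  customId: "folderA/puppy.png",
});
console.log(req.method, req.url);
```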

Still, there was no simple way to tell our product: “Tens of millions of images are in this repository URL. Go and grab them all from me”.

In some scenarios, our customers have buckets with millions of images to upload to Cloudflare Images. Their goal is to migrate all objects to Cloudflare through a one-time process, allowing them to drop the external storage altogether.

In another common scenario, different departments in larger companies use independent systems configured with varying storage repositories, each fed at different times with uneven upload volumes. Ideally, they could reuse source definitions to bring all new images into Cloudflare, keeping the portfolio up to date without paying hefty egress fees for serving the public directly from those multiple storage providers.

These situations required the upload process to Cloudflare Images to include logistical coordination and scripting knowledge. Until now.

Announcing the Cloudflare Images Sourcing Kit

Today, we are happy to share with you our Sourcing Kit, where you can define one or more sources containing the objects you want to migrate to Cloudflare Images.

But what exactly is Sourcing? In industries like manufacturing, it covers a number of operations, from selecting suppliers to vetting raw materials and delivering reports to the process owners.

So, we borrowed that definition and translated it into a set of Cloudflare Images capabilities allowing you to:

- Define one or multiple repositories of images to bulk import;

- Reuse those sources and import only new images;

- Make sure that only actual usable images are imported and not other objects or file types that exist in that source;

- Define the target path and filename for imported images;

- Obtain logs for the bulk operations.
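Two of those capabilities, importing only usable images and importing only new ones on a re-run, can be illustrated with a small planning function. The kit’s own validation is not described in this post, so this sketch approximates "usable image" by file extension (an assumption), and `selectNewImages` is a hypothetical name.

```javascript
// Extensions treated as usable images in this sketch (an assumption;
// adjust to the formats you actually need)
const IMAGE_EXTENSIONS = new Set(["png", "jpg", "jpeg", "gif", "webp", "svg"]);

function isImageKey(key) {
  const dot = key.lastIndexOf(".");
  return dot !== -1 && IMAGE_EXTENSIONS.has(key.slice(dot + 1).toLowerCase());
}

/**
 * Hypothetical re-run planner: from all object keys in a source, keep
 * only image files that were not imported previously.
 */
function selectNewImages(sourceKeys, alreadyImported) {
  const seen = new Set(alreadyImported);
  return sourceKeys.filter((key) => isImageKey(key) && !seen.has(key));
}

const keys = ["folderA/puppy.png", "folderA/notes.txt", "folderB/kitten.JPG", "folderA/old.jpg"];
console.log(selectNewImages(keys, ["folderA/old.jpg"]));
// [ 'folderA/puppy.png', 'folderB/kitten.JPG' ]
```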

The new kit does it all. So let’s go through it.

How the Cloudflare Images Sourcing Kit works

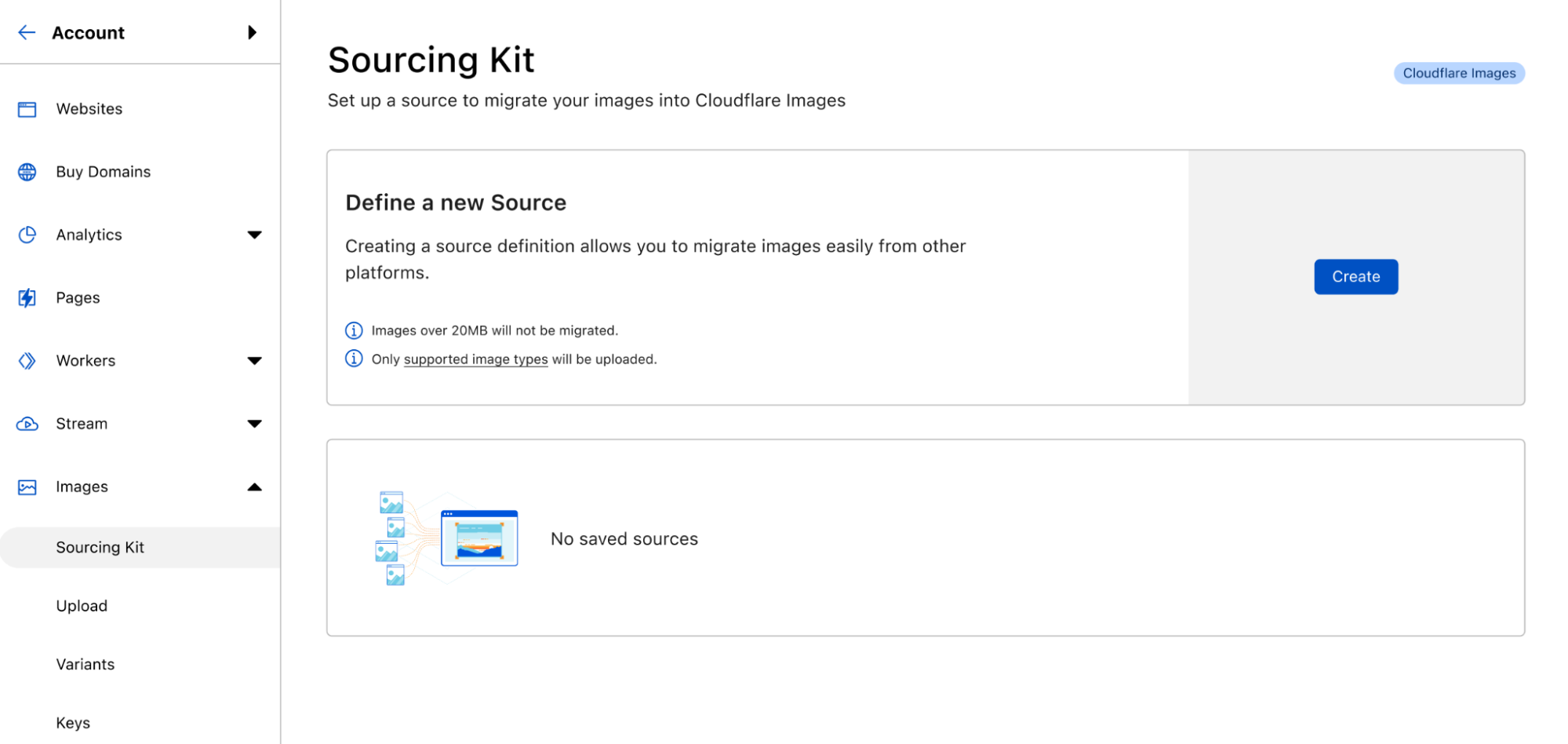

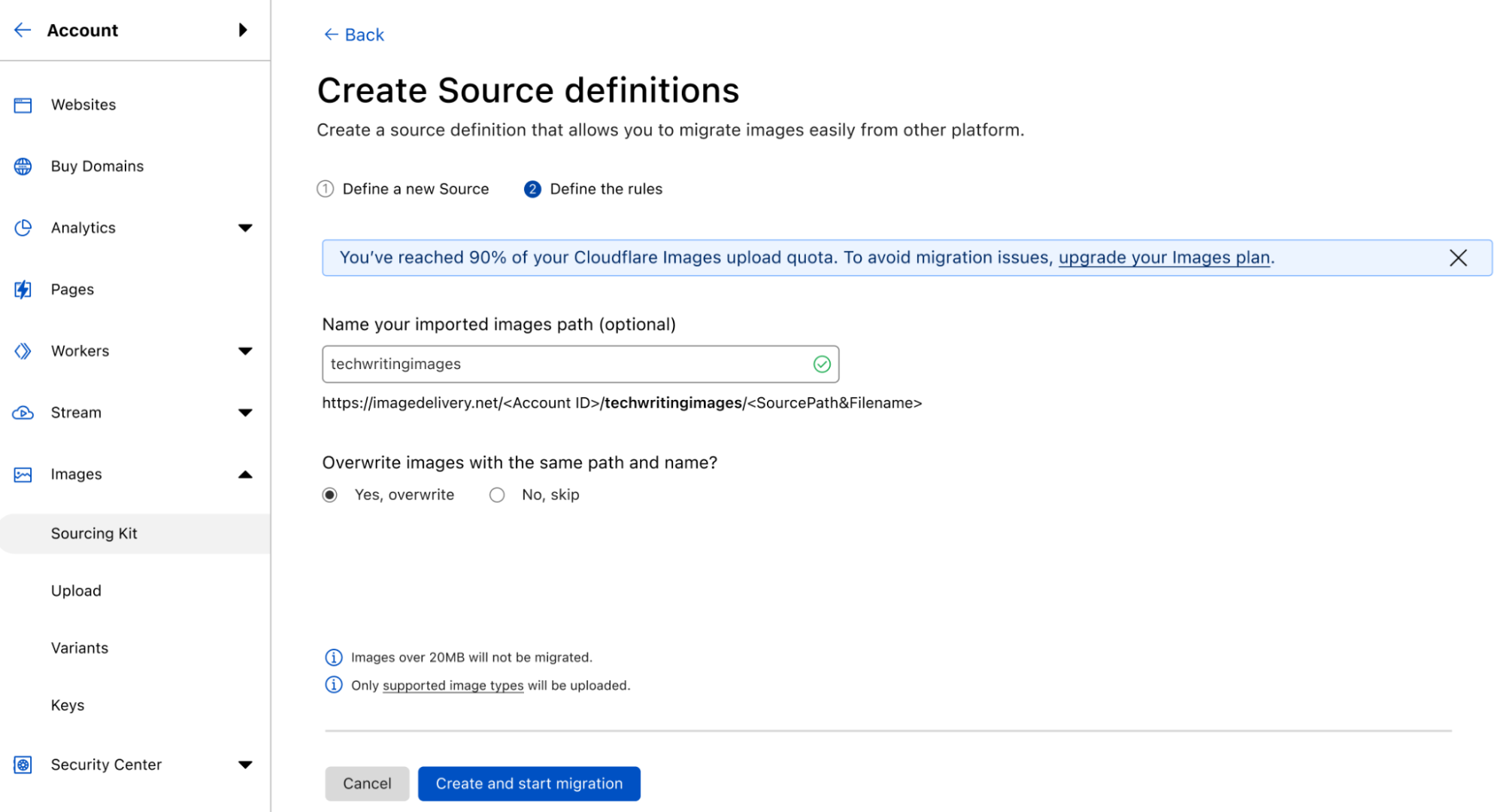

In the Cloudflare Dashboard, you will soon find the Sourcing Kit under Images.

In it, you will be able to create a new source definition, view existing ones, and view the status of the last operations.

Clicking on the create button will launch the wizard that will guide you through the first bulk import from your defined source:

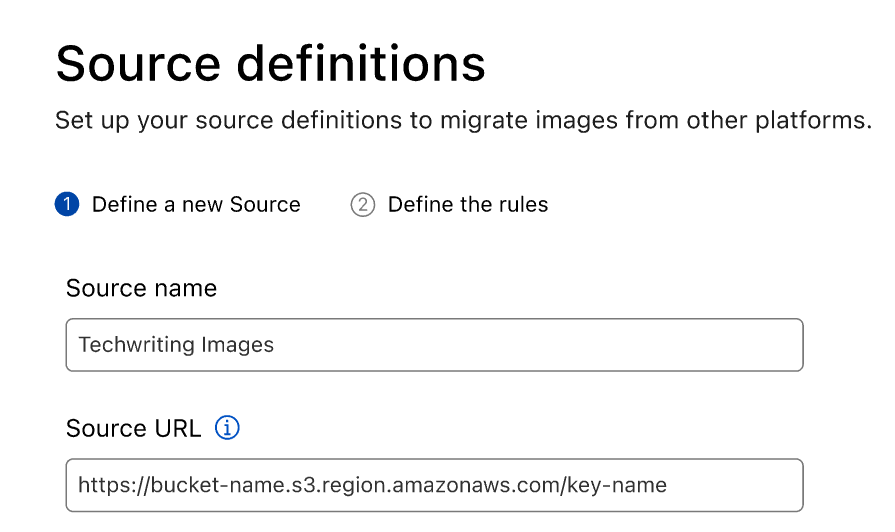

First, you will need to input the Name of the Source and the URL for accessing it. You’ll be able to save the definitions and reuse the source whenever you wish.

After running the necessary validations, you’ll be able to define the rules for the import process.

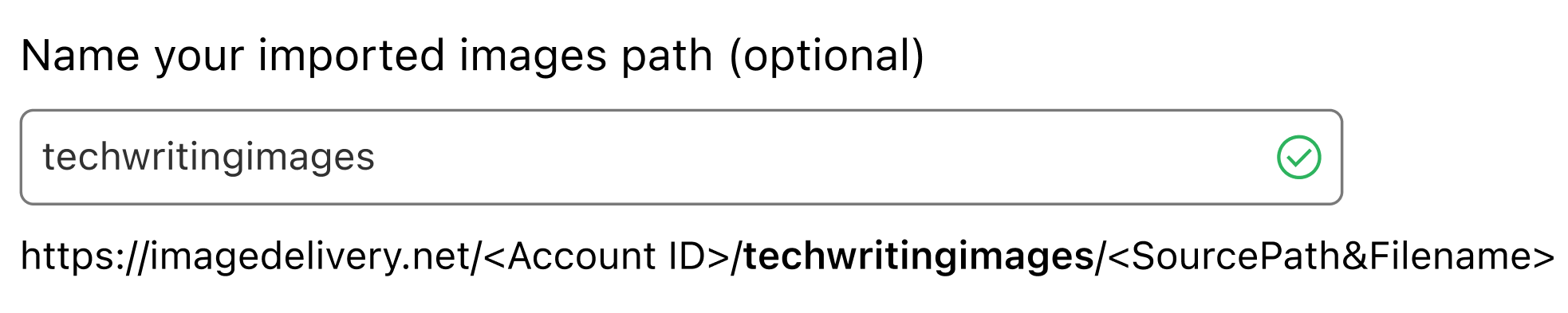

The first option is an optional Path Prefix. Defining a prefix adds a unique identifier to the images uploaded from this particular source, differentiating them from images imported from other sources.

The naming rule in place respects the source image name and path already, so let’s assume there’s a puppy image to be retrieved at:

https://my-bucket.s3.us-west-2.amazonaws.com/folderA/puppy.png

When imported without any Path Prefix, you’ll find the image at

https://imagedelivery.net/<AccountId>/folderA/puppy.png

Now, you might want to create an additional Path Prefix to identify the source, for example by mentioning that this bucket is from the Technical Writing department. In the puppy case, the result would be:

https://imagedelivery.net/<AccountId>/techwriting/folderA/puppy.png
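The naming rule in these examples can be expressed as a short function: keep the source object’s path and filename, and optionally put a prefix in front, under `imagedelivery.net/<AccountId>/`. This is a sketch that mirrors the example URLs above; `deliveryUrl` is a hypothetical name, not part of the kit.

```javascript
/**
 * Sketch of the naming rule shown above: source path + filename,
 * with an optional path prefix. Function name is hypothetical.
 */
function deliveryUrl(sourceUrl, accountId, prefix = "") {
  const objectPath = new URL(sourceUrl).pathname.replace(/^\/+/, ""); // "folderA/puppy.png"
  const cleanPrefix = prefix.replace(/^\/+|\/+$/g, ""); // trim slashes at both ends
  const path = cleanPrefix ? `${cleanPrefix}/${objectPath}` : objectPath;
  return `https://imagedelivery.net/${accountId}/${path}`;
}

const src = "https://my-bucket.s3.us-west-2.amazonaws.com/folderA/puppy.png";
console.log(deliveryUrl(src, "<AccountId>"));
// https://imagedelivery.net/<AccountId>/folderA/puppy.png
console.log(deliveryUrl(src, "<AccountId>", "techwriting"));
// https://imagedelivery.net/<AccountId>/techwriting/folderA/puppy.png
```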

Custom Path prefixes also provide a way to prevent name clashes coming from other sources.

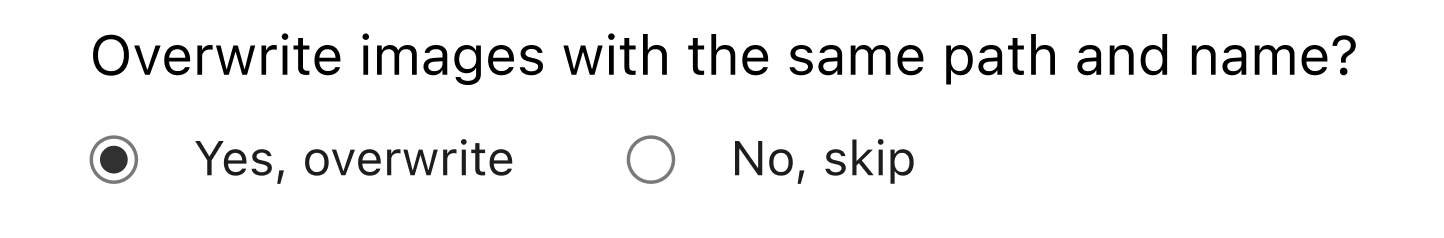

Still, there will be times when customers don’t want to use them. And when a source is reused to import images, two images may end up with the same path and filename, causing a clash.

By default, we don’t overwrite existing images, but you can select that option to refresh the catalog already present in the Cloudflare pipeline.
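The clash rule can be sketched as a planner that decides, for each target path, whether to upload, skip, or overwrite. This is a hypothetical illustration of the default-skip behavior and the overwrite option described above, not the kit’s implementation.

```javascript
/**
 * Hypothetical planner: new paths are uploaded; existing paths are
 * skipped by default, or overwritten when the option is enabled.
 */
function planImports(targetPaths, existingPaths, { overwrite = false } = {}) {
  const existing = new Set(existingPaths);
  return targetPaths.map((path) => ({
    path,
    action: !existing.has(path) ? "upload" : overwrite ? "overwrite" : "skip",
  }));
}

const targets = ["folderA/puppy.png", "folderA/kitten.png"];
const existing = ["folderA/puppy.png"];

// Default: puppy.png is skipped, kitten.png is uploaded
console.log(planImports(targets, existing));
// With overwrite: puppy.png is overwritten instead
console.log(planImports(targets, existing, { overwrite: true }));
```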

Once these inputs are defined, a click on the Create and start migration button at the bottom will trigger the upload process.

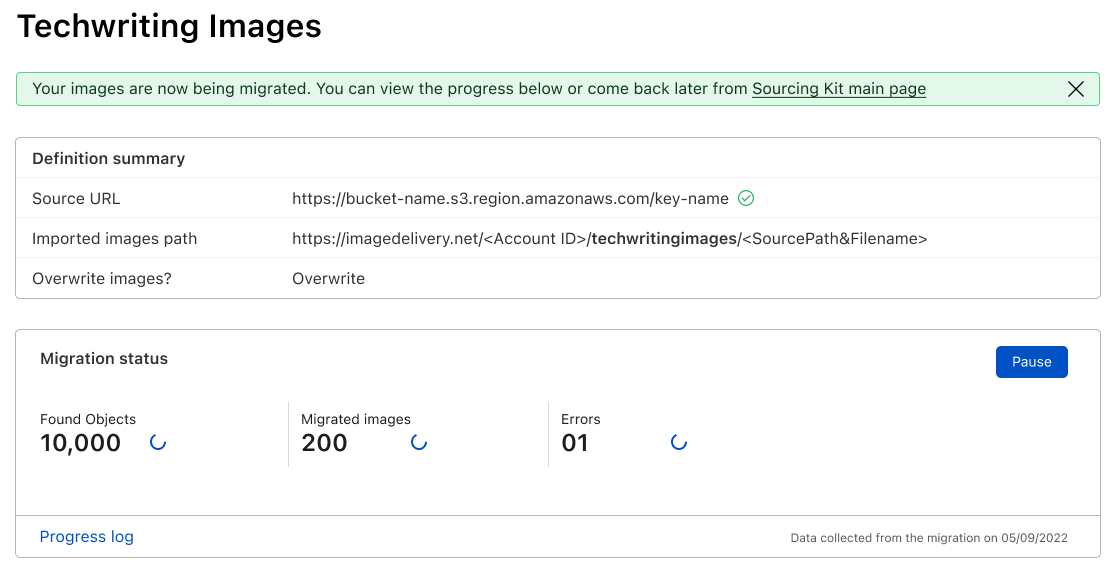

This action will show the final wizard screen, where the migration status is displayed. The progress log will report any errors obtained during the upload and is also available to download.

You can reuse, edit or delete source definitions when no operations are running, and at any point, from the home page of the kit, it’s possible to access the status and return to the ongoing or last migration report.

What’s next?

With the Beta version of the Cloudflare Images Sourcing Kit, we will allow you to define AWS S3 buckets as a source for the imports. In the following versions, we will enable definitions for other common repositories, such as the ones from Azure Storage Accounts or Google Cloud Storage.

And while we’re aiming for this to be a simple UI, we also plan to make everything available through a CLI: from defining the repository URL to starting the upload process and retrieving a final report.

Apply for the Beta version

We will be releasing the Beta version of this kit in the coming weeks, allowing you to source your images from third-party repositories to Cloudflare.

If you want to be the first to use Sourcing Kit, request to join the waitlist on the Cloudflare Images dashboard.

Send email using Workers with MailChannels

Post Syndicated from Erwin van der Koogh original https://blog.cloudflare.com/sending-email-from-workers-with-mailchannels/

Here at Cloudflare we often talk about HTTP and related protocols as we work to help build a better Internet. However, the Simple Mail Transfer Protocol (SMTP) — used to send emails — is still a massive part of the Internet too.

Even though SMTP is turning 40 years old this year, most businesses still rely on email to validate user accounts, send notifications, announce new features, and more.

Sending an email is simple from a technical standpoint, but getting an email actually delivered to an inbox can be extremely tricky. Because of the enormous amount of spam that is sent every single day, all major email providers are very wary of things like new domains and IP addresses that start sending emails.

That is why we are delighted to announce a partnership with MailChannels. MailChannels has created an email sending service specifically for Cloudflare Workers that removes all the friction associated with sending emails. To use their service, you do not need to validate a domain or create a separate account. MailChannels filters spam before sending out an email, so you can feel safe putting user-submitted content in an email and be confident that it won’t ruin your domain reputation with email providers. But the absolute best part? Thanks to our friends at MailChannels, it is completely free to send email.

In the words of their CEO Ken Simpson: “Cloudflare Workers and Pages are changing the game when it comes to ease of use and removing friction to get started. So when we sat down to see what friction we could remove from sending out emails, it turns out that with our incredible anti-spam and anti-phishing, the answer is ‘everything’. We can’t wait to see what applications the community is going to build on top of this.”

The only constraint currently is that the integration only works when the request comes from a Cloudflare IP address. So it won’t work yet when you are developing on your local machine or running a test on your build server.

First, let’s walk through how to send your first email using a Worker.

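```javascript
export default {
  async fetch(request) {
    // Build the MailChannels send request (JSON body in MailChannels' format)
    const sendRequest = new Request('https://api.mailchannels.net/tx/v1/send', {
      method: 'POST',
      headers: {
        'content-type': 'application/json',
      },
      body: JSON.stringify({
        personalizations: [
          {
            // Placeholder recipient: replace with a real address
            to: [{ email: 'test@example.com', name: 'Test Recipient' }],
          },
        ],
        from: {
          // Placeholder sender: replace with an address on your domain
          email: 'sender@example.com',
          name: 'Workers - MailChannels integration',
        },
        subject: 'Look! No servers',
        content: [
          {
            type: 'text/plain',
            value: 'And no email service accounts and all for free too!',
          },
        ],
      }),
    });

    // Send the email and relay MailChannels' status back to the caller
    const resp = await fetch(sendRequest);
    return new Response(await resp.text(), { status: resp.status });
  },
};
```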

That is all there is to it. You can modify the example to make it send whatever email you want.
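One way to modify it is to pull the payload into a helper so the same send code can deliver different messages. The helper names below are hypothetical; the body layout (`personalizations`, `from`, `subject`, `content`) is the one used in the Worker above, and the send function only works when called from a Cloudflare IP address, per the constraint mentioned earlier.

```javascript
/**
 * Hypothetical helper: build the MailChannels JSON body for one
 * recipient and a plain-text message.
 */
function buildMailChannelsBody({ to, from, subject, text }) {
  return {
    personalizations: [{ to: [to] }],
    from,
    subject,
    content: [{ type: "text/plain", value: text }],
  };
}

// Hypothetical sender; returns the HTTP status from MailChannels
async function sendViaMailChannels(message) {
  const resp = await fetch("https://api.mailchannels.net/tx/v1/send", {
    method: "POST",
    headers: { "content-type": "application/json" },
    body: JSON.stringify(buildMailChannelsBody(message)),
  });
  return resp.status;
}

const body = buildMailChannelsBody({
  to: { email: "test@example.com", name: "Test Recipient" },
  from: { email: "sender@example.com", name: "Workers - MailChannels integration" },
  subject: "Look! No servers",
  text: "And no email service accounts and all for free too!",
});
console.log(JSON.stringify(body, null, 2));
```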

The MailChannels integration makes it easy to send emails to and from anywhere with Workers. However, we also wanted to make it easier to send emails to yourself from a form on your website. This is perfect for quickly and painlessly setting up pages such as “Contact Us” forms, landing pages, and sales inquiries.

With the Pages Plugins framework that we announced earlier this week, you can let other people email you without exposing your email address.

The only thing you need to do is copy and paste the following code snippet into your /functions/_middleware.ts file. Every form that has the data-static-form-name attribute will then automatically be emailed to you.

```ts
import mailchannelsPlugin from "@cloudflare/pages-plugin-mailchannels";

export const onRequest = mailchannelsPlugin({
  personalizations: [
    {
      // Placeholder: the inbox that should receive form submissions
      to: [{ name: "ACME Support", email: "support@example.com" }],
    },
  ],
  // Placeholder sender address
  from: { name: "Enquiry", email: "no-reply@example.com" },
  // After the email is sent, redirect the visitor to a thank-you page
  respondWith: () =>
    new Response(null, {
      status: 302,
      headers: { Location: "/thank-you" },
    }),
});
```

Here is an example of what such a form would look like. You can make the form as complex as you like; the only thing it needs is the data-static-form-name attribute. You can give it any name you like, which lets you distinguish between different forms.

```html
<!DOCTYPE html>
<html>
  <body>
    <h1>Contact</h1>
    <form data-static-form-name="contact">
      <div>
        <label>Name<input type="text" name="name" /></label>
      </div>
      <div>
        <label>Email<input type="email" name="email" /></label>
      </div>
      <div>
        <label>Message<textarea name="message"></textarea></label>
      </div>
      <button type="submit">Send!</button>
    </form>
  </body>
</html>
```
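To give a feel for what happens to a submission, here is an illustrative sketch of turning the fields of a form like this one into a plain-text email body. It is not the plugin’s implementation; `formToEmailText` is a hypothetical name, and it assumes the form name (here `contact`) is available alongside the submitted `FormData`.

```javascript
/**
 * Illustrative only: format submitted form fields as plain-text email.
 * Not the MailChannels plugin's actual code.
 */
function formToEmailText(formName, formData) {
  const lines = [`New submission from form "${formName}"`, ""];
  for (const [field, value] of formData.entries()) {
    lines.push(`${field}: ${value}`);
  }
  return lines.join("\n");
}

const data = new FormData();
data.append("name", "Ada");
data.append("email", "ada@example.com");
data.append("message", "Hello!");
console.log(formToEmailText("contact", data));
```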

As you can see, there is no barrier left when it comes to sending emails. You can copy and paste the above Worker or Pages code into your projects and immediately start sending email for free.

If you have any questions about using MailChannels in your Workers, or want to learn more about Workers in general, please join our Cloudflare Developer Discord server.

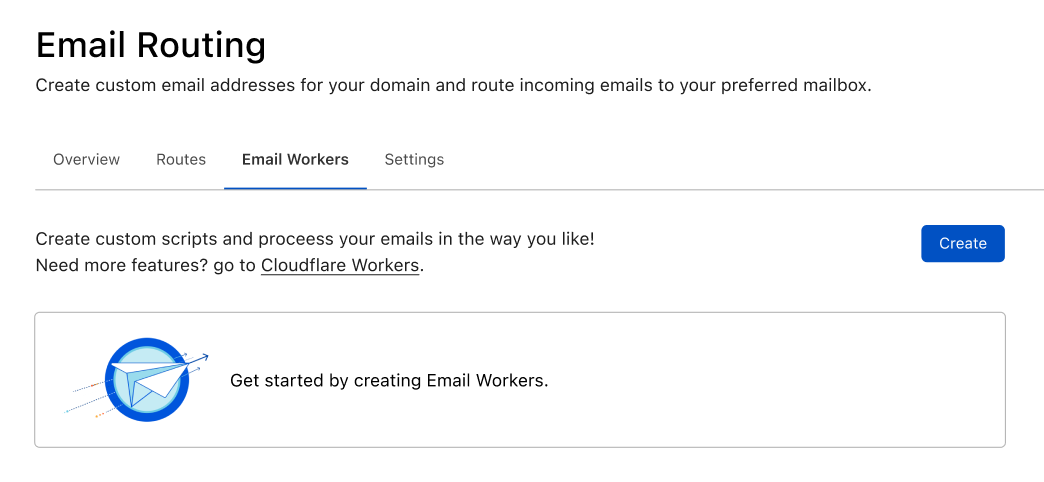

Route to Workers, automate your email processing

Post Syndicated from Joao Sousa Botto original https://blog.cloudflare.com/announcing-route-to-workers/

Cloudflare Email Routing has quickly grown to a few hundred thousand users, and we’re incredibly excited by the number of feature requests that reach our product team every week. We hear you, we love the feedback, and we want to give you all that you’ve been asking for. What we don’t like is making you wait, or making you feel like your needs are too unique to be addressed.

That’s why we’re taking a different approach – we’re giving you the power tools that you need to implement any logic you can dream of to process your emails in the fastest, most scalable way possible.

Today we’re announcing Route to Workers, for which we’ll start a closed beta soon. You can join the waitlist today.

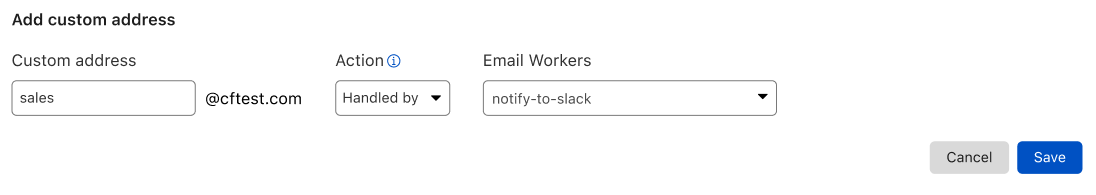

How this works

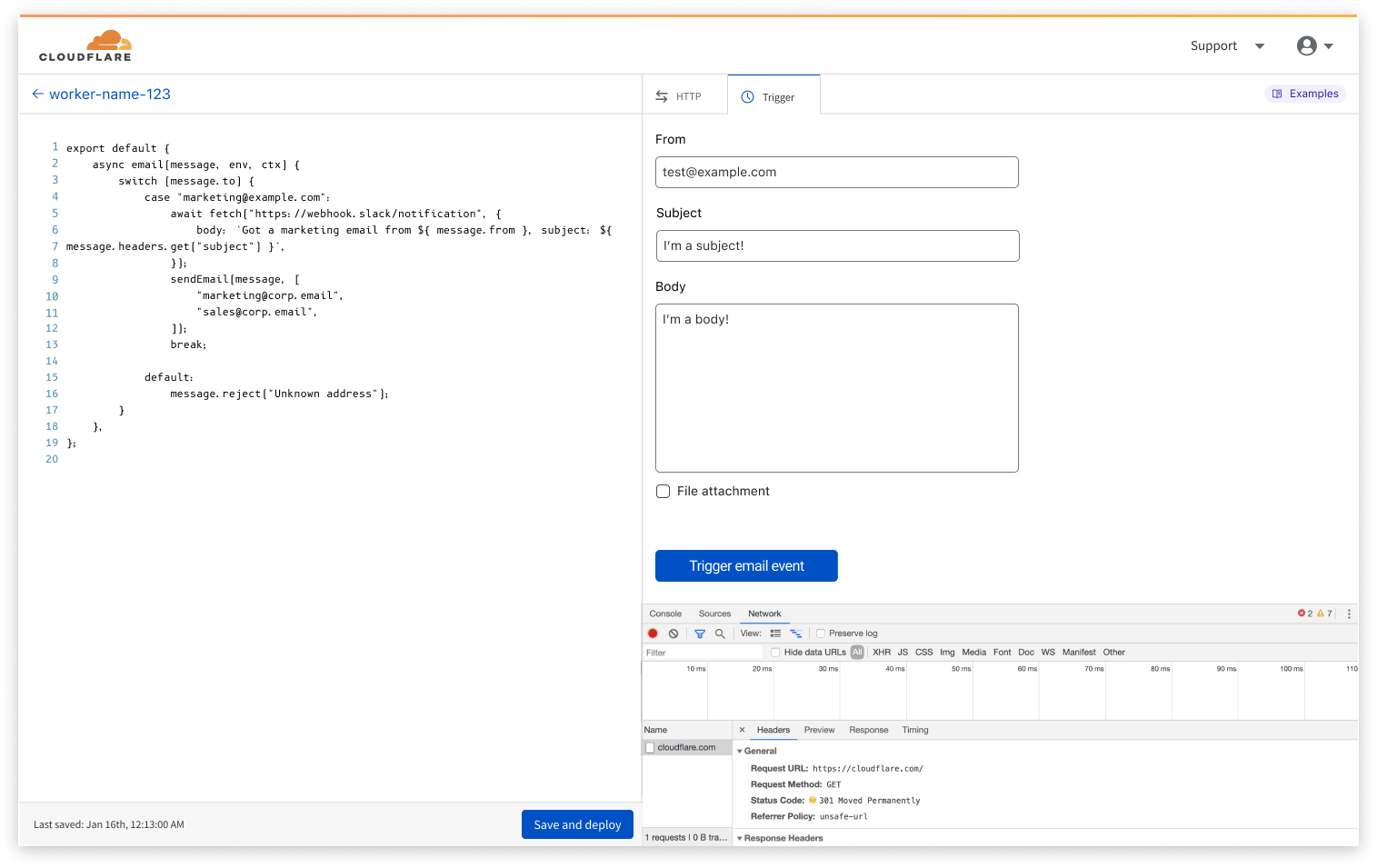

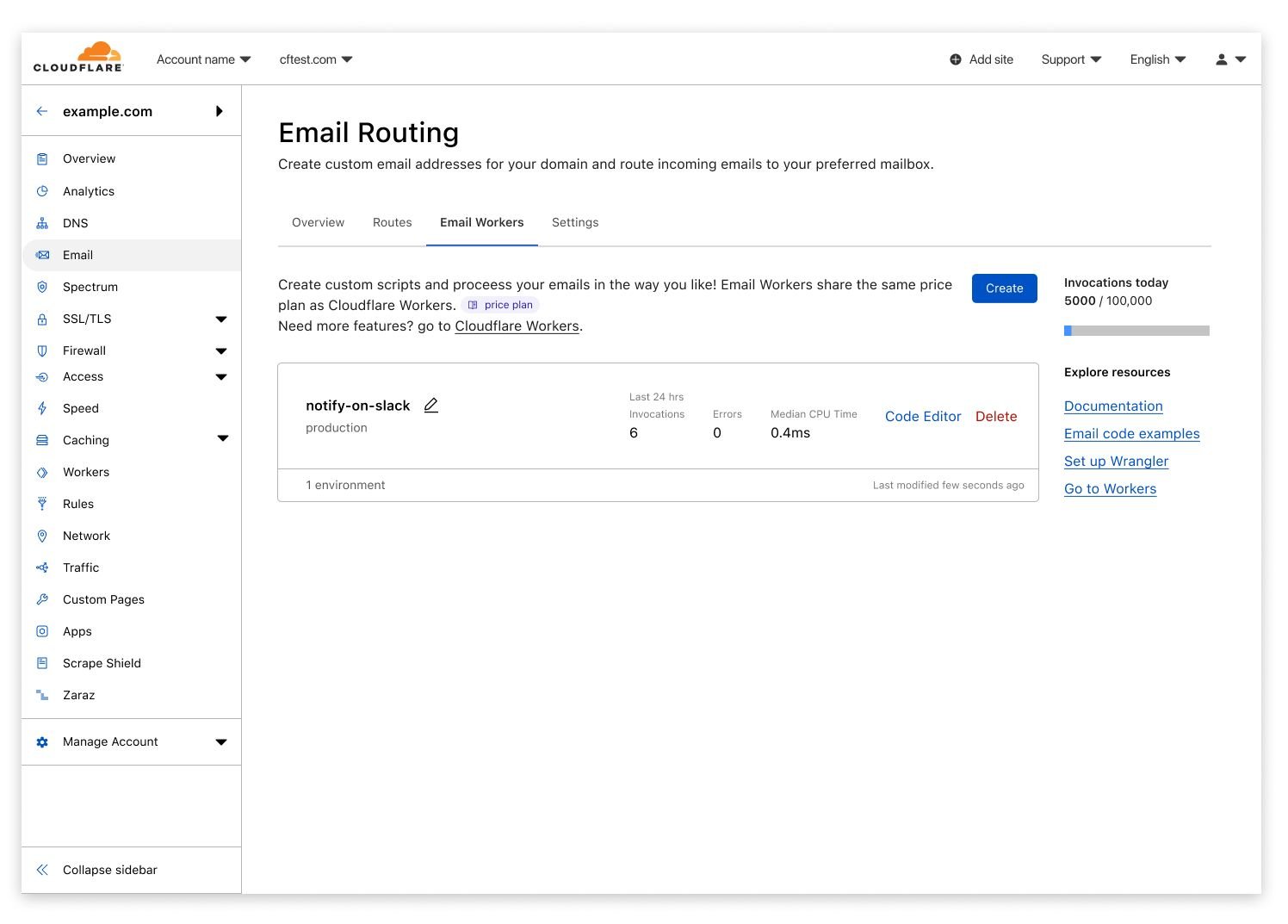

When using Route to Workers, your Email Routing rules can have a Worker process the messages reaching any of your custom email addresses.

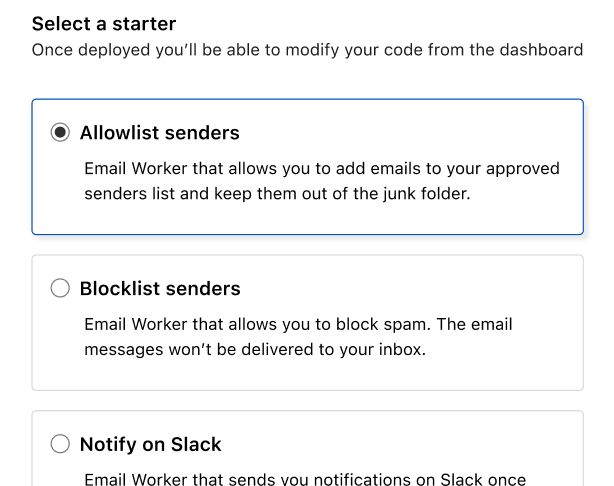

Even if you haven’t used Cloudflare Workers before, we are making onboarding as easy as can be. You can start creating Workers straight from the Email Routing dashboard, with just one click.

After clicking Create, you will be able to choose a starter that allows you to get up and running with minimal effort. Starters are templates that pre-populate your Worker with the code you would write for popular use cases such as creating a blocklist or allowlist, content-based filtering, tagging messages, pinging you on Slack for urgent emails, etc.
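As an idea of what an allowlist starter might contain, the sketch below forwards mail from approved senders and rejects everything else. It assumes the incoming message exposes `from`, `forward()`, and `setReject()` as in the Email Workers runtime API as later released (the beta described here may differ); the allowlist and destination addresses are hypothetical placeholders.

```javascript
// Hypothetical allowlist and destination; the destination must be one of
// your verified Email Routing destination addresses.
const ALLOWED_SENDERS = new Set(["alice@example.com", "bob@example.com"]);
const DESTINATION = "me@example.com";

const allowlistWorker = {
  async email(message, env, ctx) {
    if (ALLOWED_SENDERS.has(message.from.toLowerCase())) {
      await message.forward(DESTINATION); // deliver to your inbox
    } else {
      message.setReject("Sender is not on the allowlist"); // bounce
    }
  },
};

// In a Worker module you would write: export default allowlistWorker;
```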

You can then use the code editor to make your new Worker process emails in exactly the way you want it to – the options are endless.

And for those of you who prefer to jump right into writing your own code, you can go straight to the editor without using a starter. You can write Workers in a language you likely already know: Cloudflare built Workers to execute JavaScript and WebAssembly and has continuously added support for new languages.

The Workers you’ll use for processing emails are just regular Workers that listen to incoming events, implement some logic, and reply accordingly. You can use all the features that a normal Worker would.

The main difference is that instead of:

```javascript
export default {
  async fetch(request, env, ctx) {
    return handleRequest(request);
  },
};
```

You’ll have:

```javascript
export default {
  async email(message, env, ctx) {
    await handleEmail(message);
  },
};
```

The new `email` event will provide you with the “from” and “to” fields, the full headers, and the raw body of the message. You can then use them in any way that fits your use case, including calling other APIs and orchestrating complex decision workflows. In the end, you can decide what action to take, including rejecting the email or forwarding it to one of your Email Routing destination addresses.

With these capabilities you can easily create logic that, for example, only accepts messages sent to one specific address and, when a message matches, forwards it to one or more of your verified destination addresses while also immediately alerting you on Slack. Code for such a feature could be as simple as this:

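```javascript
export default {
  async email(message, env, ctx) {
    switch (message.to) {
      case "marketing@example.com": // placeholder: one of your custom addresses
        // Alert a Slack channel (placeholder incoming-webhook URL)
        await fetch("https://webhook.slack/notification", {
          method: "POST",
          headers: { "content-type": "application/json" },
          body: JSON.stringify({
            text: `Got a marketing email from ${message.from}, subject: ${message.headers.get("subject")}`,
          }),
        });
        // Forward to each verified destination address
        for (const destination of ["marketing@corp", "sales@corp"]) {
          await message.forward(destination);
        }
        break;
      default:
        // Bounce messages sent to any other address
        message.setReject("Unknown address");
    }
  },
};
```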

Route to Workers enables everyone to programmatically process their emails and use them as triggers for any other action. We think this is pretty powerful.

Process up to 100,000 emails/day for free

The first 100,000 Worker requests (or Email Triggers) each day are free, and paid plans start at just $5 per 10 million requests. You will be able to keep track of your Email Workers usage right from the Email Routing dashboard.

Join the Waitlist

You can join the waitlist today by going to the Email section of your dashboard, navigating to the Email Workers tab, and clicking the Join Waitlist button.

We are expecting to start the closed beta in just a few weeks, and can’t wait to hear about what you’ll build with it!

As usual, if you have any questions or feedback about Email Routing, please come see us in the Cloudflare Community and the Cloudflare Discord.

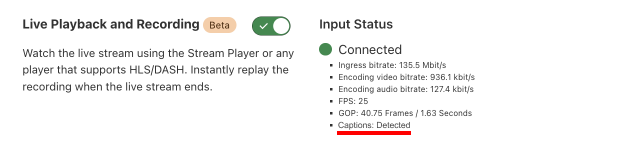

Closed Caption support coming to Stream Live

Post Syndicated from Mickie Betz original https://blog.cloudflare.com/stream-live-captions/

Building inclusive technology is core to the Cloudflare mission. Cloudflare Stream has supported captions for on-demand videos for several years. Soon, Stream will auto-detect embedded captions and include them in the live stream delivered to your viewers.

Thousands of Cloudflare customers use the Stream product to build video functionality into their apps. With live caption support, Stream customers can better serve their users with a more comprehensive viewing experience.

Enabling Closed Captions in Stream Live

Stream Live scans for CEA-608 and CEA-708 captions in incoming live streams ingested via SRT and RTMPS. Assuming the live streams you are pushing to Cloudflare Stream contain captions, you don’t have to do anything further: the captions will simply get included in the manifest file.

If you are using the Stream Player, these captions will be rendered by the Stream Player. If you are using your own player, you simply have to configure your player to display captions.
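If you are building your own player, a quick way to confirm captions are present is to inspect the HLS master playlist. This sketch assumes in-band CEA-608/708 captions are advertised with `EXT-X-MEDIA` entries of `TYPE=CLOSED-CAPTIONS`, as the HLS specification (RFC 8216) defines; the exact manifest Stream produces is not shown in this post, and the sample playlist and function name are hypothetical.

```javascript
/**
 * Sketch: list closed-caption renditions declared in an HLS master playlist.
 */
function closedCaptionRenditions(masterPlaylist) {
  return masterPlaylist
    .split(/\r?\n/)
    .filter((line) => line.startsWith("#EXT-X-MEDIA:") && line.includes("TYPE=CLOSED-CAPTIONS"))
    .map((line) => {
      // Match NAME="..." only at an attribute boundary (after ":" or ",")
      const attr = (name) => line.match(new RegExp(`(?:^|[,:])${name}="([^"]*)"`))?.[1];
      return { name: attr("NAME"), instreamId: attr("INSTREAM-ID"), language: attr("LANGUAGE") };
    });
}

const master = [
  "#EXTM3U",
  '#EXT-X-MEDIA:TYPE=CLOSED-CAPTIONS,GROUP-ID="cc",NAME="English",LANGUAGE="en",INSTREAM-ID="CC1"',
  '#EXT-X-STREAM-INF:BANDWIDTH=2000000,CLOSED-CAPTIONS="cc"',
  "video/720p.m3u8",
].join("\n");

console.log(closedCaptionRenditions(master));
```

A player would then enable the rendition whose `INSTREAM-ID` (here `CC1`) it finds.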

Currently, Stream Live supports captions in a single language during the live event. Once the event completes and becomes an on-demand video, you can upload captions for multiple languages.

What is CEA-608 and CEA-708?

When captions were first introduced in 1973, they were open captions. This means the captions were literally overlaid on top of the picture in the video and therefore, could not be turned off. In 1982, we saw the introduction of closed captions during live television. Captions were no longer imprinted on the video and were instead passed via a separate feed and rendered on the video by the television set.

CEA-608 (also known as Line 21) and CEA-708 are well-established standards used to transmit captions. CEA-708 is a modern iteration of CEA-608, offering support for nearly every language and for text positioning, something CEA-608 does not support.

Availability

Live caption support will be available in closed beta next month. To request access, sign up for the closed beta.

Including captions in any video stream is critical to making your content more accessible. For example, the majority of live events are watched on mute, which increases the value of captions. While Stream Live does not generate live captions yet, we plan to build support for automatic live captions in the future.

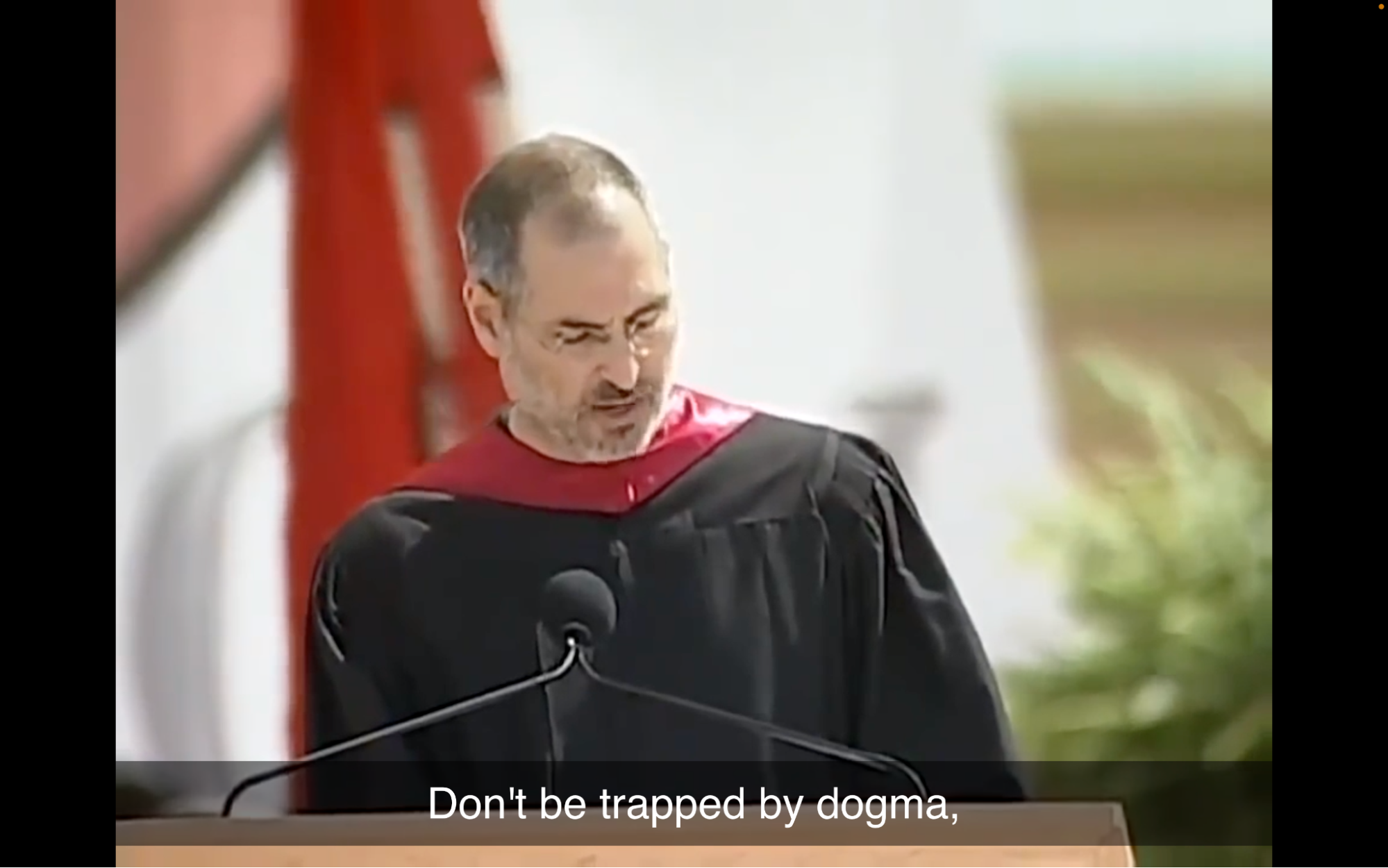

Stream with sub-second latency is like a magical HDMI cable to the cloud

Post Syndicated from J. Scott Miller original https://blog.cloudflare.com/magic-hdmi-cable/

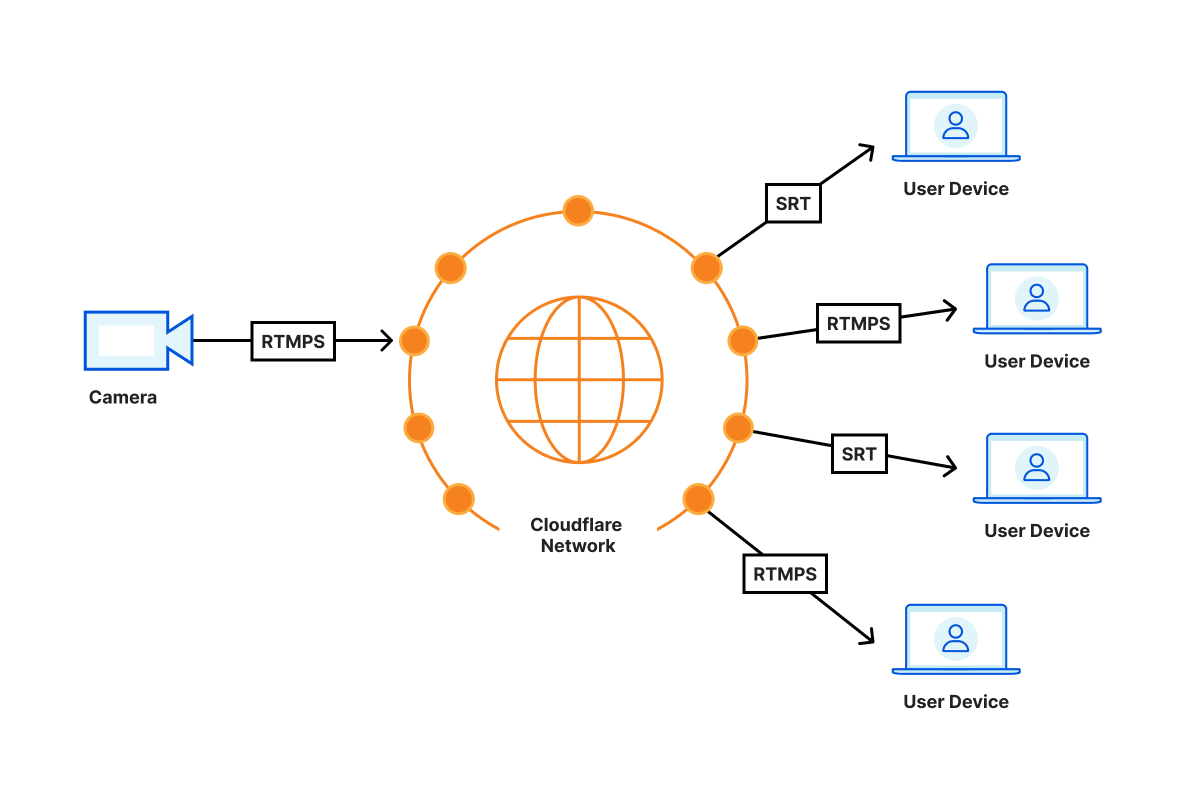

Starting today, in open beta, Cloudflare Stream supports video playback with sub-second latency over SRT or RTMPS at scale. Just like HLS and DASH formats, playback over RTMPS and SRT costs $1 per 1,000 minutes delivered regardless of video encoding settings used.
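As a worked example of that pricing, minutes delivered are viewers multiplied by minutes watched. The sketch assumes every viewer watches the whole event and considers only delivery charges, not other Stream costs; the function name is hypothetical.

```javascript
// Delivery price quoted above: $1 per 1,000 minutes delivered
const PRICE_PER_1000_MINUTES_USD = 1;

/** Estimate delivery cost: each viewer-minute is one minute delivered. */
function deliveryCostUsd(viewers, minutesWatchedPerViewer) {
  const minutesDelivered = viewers * minutesWatchedPerViewer;
  return (minutesDelivered / 1000) * PRICE_PER_1000_MINUTES_USD;
}

// 1,000 viewers watching a 60-minute stream = 60,000 minutes delivered
console.log(deliveryCostUsd(1000, 60)); // 60
```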

Stream is like a magic HDMI cable to the cloud. You can easily connect a video stream and display it from as many screens as you want wherever you want around the world.

What do we mean by sub-second?

Video latency is the time from when a camera captures something happening live to when viewers of the broadcast see the same thing on their screens. Although we like to think that what’s on TV happens in the studio and in your living room at the same time, this is not the case. Often, cable TV takes five seconds to reach your home.

On the Internet, latencies across different services vary widely, from multiple minutes down to a few seconds or less. Live streaming technologies like HLS and DASH, used by the most common video streaming websites, typically offer 10 to 30 seconds of latency, and this is what you can achieve with Stream Live today. However, this range does not feel natural for many use cases where viewers interact with the broadcaster. Imagine a text chat next to an esports live stream or a Q&A session in a remote webinar. These new ways of interacting with the broadcast won’t work with the latencies the industry is used to. You need one to two seconds at most to achieve the feeling that the viewer is in the same room as the broadcaster.

We expect Cloudflare Stream to deliver sub-second latencies reliably in most parts of the world by routing the video as much as possible within the Cloudflare network. For example, when you’re sending video from San Francisco on your Comcast home connection, the video travels directly to the nearest point where Comcast and Cloudflare connect, for example, San Jose. Whenever a viewer joins, say from Austin, the viewer connects to the Cloudflare location in Dallas, which then establishes a connection using the Cloudflare backbone to San Jose. This setup avoids unreliable long-distance connections and allows Cloudflare to monitor the reliability and latency of the video all the way from the broadcaster’s last mile to the viewer’s last mile.

Serverless, dynamic topology

With Cloudflare Stream, the latency of content from the source to the destination depends purely on the physical distance between them: with no centralized routing, each Cloudflare location talks to other Cloudflare locations and shares the video with them. This results in the minimum possible latency regardless of the locale you are broadcasting from.

We’ve measured about 500ms of glass-to-glass latency from San Francisco to London, with both the broadcaster and the viewer on residential networks. If both the broadcaster and the viewers were in California, this number would be lower, simply because the video would have less distance to travel at the speed of light. An early tester was able to achieve 300ms of latency by broadcasting with OBS via RTMPS to Cloudflare Stream and pulling down that content over SRT using ffplay.
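A back-of-the-envelope calculation shows how distance feeds into these numbers. The sketch assumes light in optical fiber travels at roughly 200,000 km/s (about two thirds of its speed in a vacuum) and uses approximate great-circle distances, so results are lower bounds; the remainder of a measured glass-to-glass figure comes from other stages such as encoding, buffering, and decoding.

```javascript
// Assumption: light in fiber covers about 200,000 km per second.
// Real routes are longer than great-circle distances, so this is a lower bound.
const FIBER_KM_PER_SECOND = 200_000;

function oneWayPropagationMs(distanceKm) {
  return (distanceKm * 1000) / FIBER_KM_PER_SECOND;
}

// Approximate distances (assumed values)
console.log(oneWayPropagationMs(8600)); // San Francisco to London: 43
console.log(oneWayPropagationMs(600)); // within California: 3
```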

Any server in the Cloudflare Anycast network can receive and publish low-latency video, which means that you’re automatically broadcasting to the nearest server with no configuration necessary. To minimize latency and avoid network congestion, we route video traffic between broadcaster and audience servers using the same network telemetry as Argo.

On top of this, we construct a dynamic distribution topology, unique to the stream, which grows to meet the capacity needs of the broadcast. We’re just getting started with low-latency video, and we will continue to focus on latency and playback reliability as our real-time video features grow.

An HDMI cable to the cloud

Most video on the Internet is delivered over HTTP, the protocol your browser uses to load websites. This has many advantages, such as easy interoperability across viewer devices. Maybe more importantly, HTTP can use existing infrastructure such as caches, which reduces the cost of video delivery.

Using HTTP has a cost in latency, as it is not a protocol built to deliver video. There have been many attempts to deliver low-latency video over HTTP, with some reducing latency to a few seconds, but none reach the levels achievable by protocols designed with video in mind. WebRTC and video delivery over QUIC have the potential to further reduce latency, but face inconsistent support across platforms today.

Video-oriented protocols, such as RTMPS and SRT, side-step some of the challenges above but often require custom client libraries and are not available in modern web browsers. While we support low-latency video over RTMPS and SRT today, we are actively exploring other delivery protocols.

There’s no silver bullet yet, and our goal is to make video delivery as easy as possible by supporting the set of protocols that enable our customers to meet their unique and creative needs. Today that can mean receiving RTMPS and delivering low-latency SRT, or ingesting SRT while publishing HLS. In the future, that may include ingesting WebRTC or publishing over QUIC, HTTP/3, or WebTransport. There are many interesting technologies on the horizon.

We’re excited to see new use cases emerge as low-latency video becomes easier to integrate and less costly to manage. A remote cycling instructor can ask her students to slow down in response to an increase in heart rate; an esports league can effortlessly repeat their live feed to remote broadcasters to provide timely, localized commentary while interacting with their audience.

Creative uses of low latency video

Viewer experience at events like a concert or a sporting event can be augmented with live video delivered in real time to participants’ phones. This way they can experience the event in real time and see the goal scored or the details of what’s happening on the stage.

In big cities, you can often hear people across the city cheering before you see the goal scored on your own screen. This goes away when every video screen shows the same content at the same time.

At esports games, large company meetings, or conferences, presenters and commentators can react in real time to comments in chat, eliminating the delay between a fan making a comment and seeing the reaction on the video stream.

Online exercise bikes can provide even more relevant and timely feedback from the live instructors, adding to the sense of community developed while riding them.

Participants in esports streams can be switched from a passive viewer to an active live participant easily as there is no delay in the broadcast.

Security cameras can be monitored from anywhere in the world without having to open ports or set up centralized servers to receive and relay video.

Getting Started

Get started by using your existing inputs on Cloudflare Stream. Without the need to reconnect, they will be available instantly for playback with the RTMPS/SRT playback URLs.

If you don’t have any inputs on Stream, sign up for $5/mo. You will get the ability to push live video, broadcast, record and now pull video with sub-second latency.

You will need to use a program like FFmpeg or OBS to push video. To play back RTMPS, you can use VLC; for SRT, you can use FFplay. To integrate playback into your native app, you can use FFmpeg wrappers such as ffmpeg-kit for iOS.
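For a scripted setup, the FFmpeg invocation for pushing a local file to an RTMPS ingest can be assembled programmatically. The ingest URL and stream key are placeholders for the values shown for your live input in the Stream dashboard, and the encoder settings are assumptions rather than Stream requirements; `ffmpegPushArgs` is a hypothetical helper.

```javascript
/**
 * Sketch: build FFmpeg arguments to push a local file to an RTMPS ingest.
 * ingestUrl and streamKey are placeholders from your Stream dashboard.
 */
function ffmpegPushArgs(inputFile, ingestUrl, streamKey) {
  return [
    "-re", // read input at its native frame rate, like a live source
    "-i", inputFile,
    "-c:v", "libx264", // H.264 video
    "-preset", "veryfast",
    "-c:a", "aac", // AAC audio
    "-f", "flv", // RTMP(S) carries an FLV-muxed stream
    ingestUrl + streamKey,
  ];
}

const args = ffmpegPushArgs("input.mp4", "rtmps://live.cloudflare.com:443/live/", "STREAM_KEY");
console.log(["ffmpeg", ...args].join(" "));
// In Node you could run it with require("child_process").spawn("ffmpeg", args)
```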

RTMPS and SRT playback work with the recently launched custom domain support, so you can use the domain of your choice and keep your branding.

Bring your own ingest domain to Stream Live

Post Syndicated from Zaid Farooqui original https://blog.cloudflare.com/bring-your-own-ingest-domain-to-stream-live/

The last two years have given rise to hundreds of live streaming platforms. Most live streaming platforms enable their creators to go live by providing them with a server and an RTMP/SRT key that they can configure in their broadcasting app.

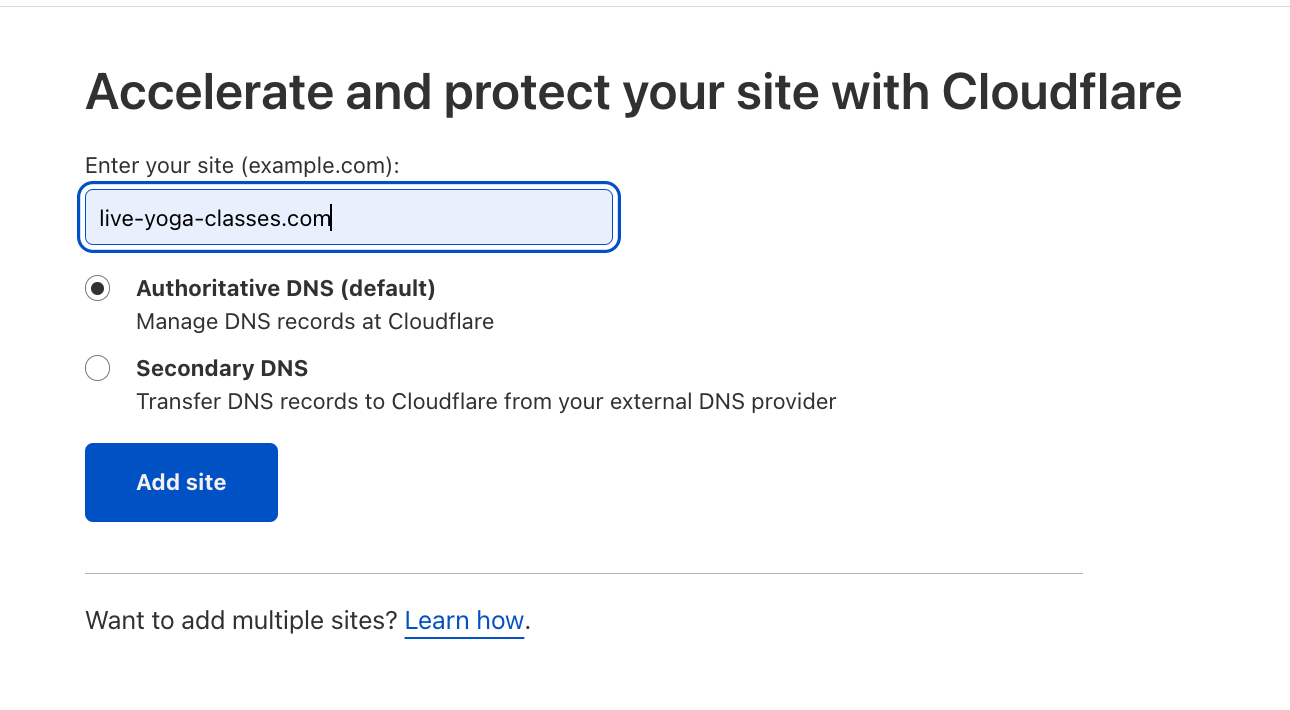

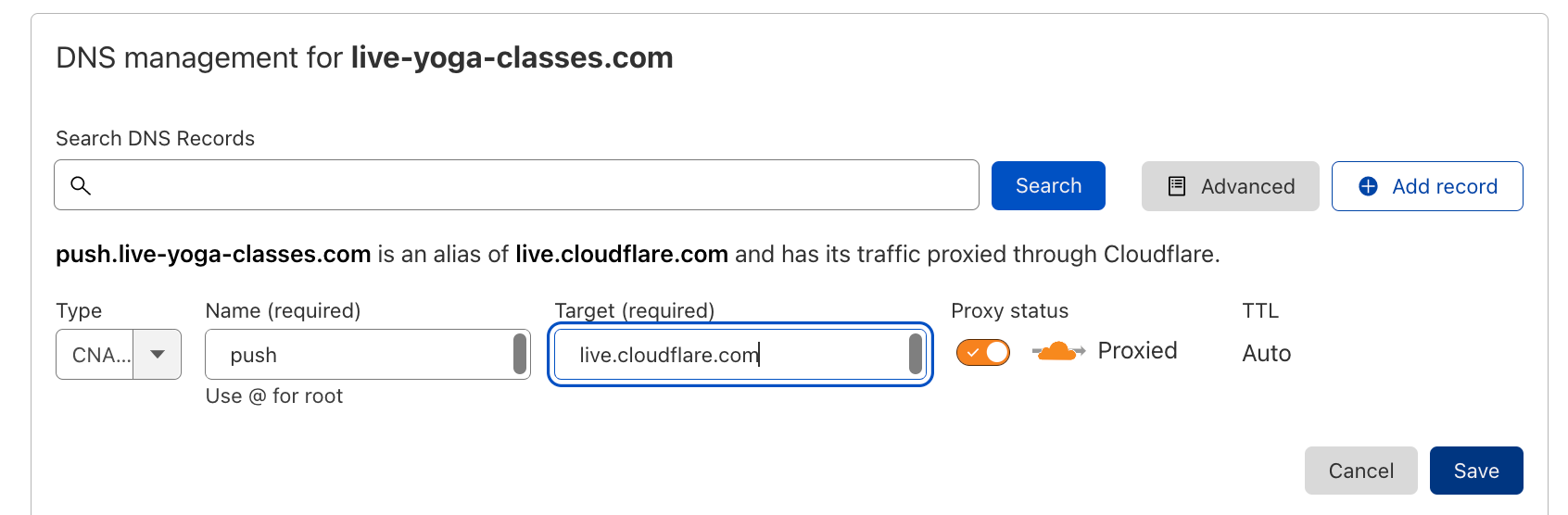

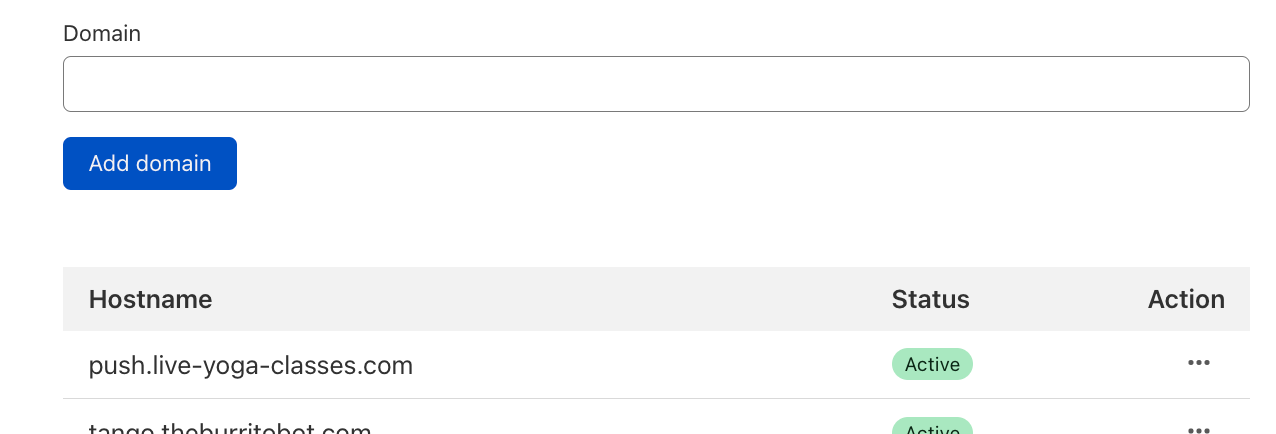

Until today, even if your live streaming platform was called live-yoga-classes.com, your users would need to push the RTMPS feed to live.cloudflare.com. Starting today, every Stream account can configure its own domain in the Stream dashboard, so your creators can broadcast to a domain such as push.live-yoga-classes.com.

This feature is available to all Stream accounts, including self-serve customers, at no additional cost. Every Cloudflare account with a Stream subscription can add up to five ingest domains.

Secure CNAMEing for live video ingestion

Cloudflare Stream only supports encrypted video ingestion using RTMPS and SRT protocols. These are secure protocols and, similar to HTTPS, ensure encryption between the broadcaster and Cloudflare servers. Unlike non-secure protocols like RTMP, secure RTMP (or RTMPS) protects your users from monster-in-the-middle attacks.

In an insecure world, you could simply CNAME a domain to another domain regardless of whether you own the domain you are sending traffic to. Because Stream Live intentionally does not support insecure live streams, you cannot simply CNAME your domain to live.cloudflare.com. So we leveraged other Cloudflare products such as Spectrum to natively support custom-branded domains in the Stream Live product without making the live streams less private for your broadcasters.

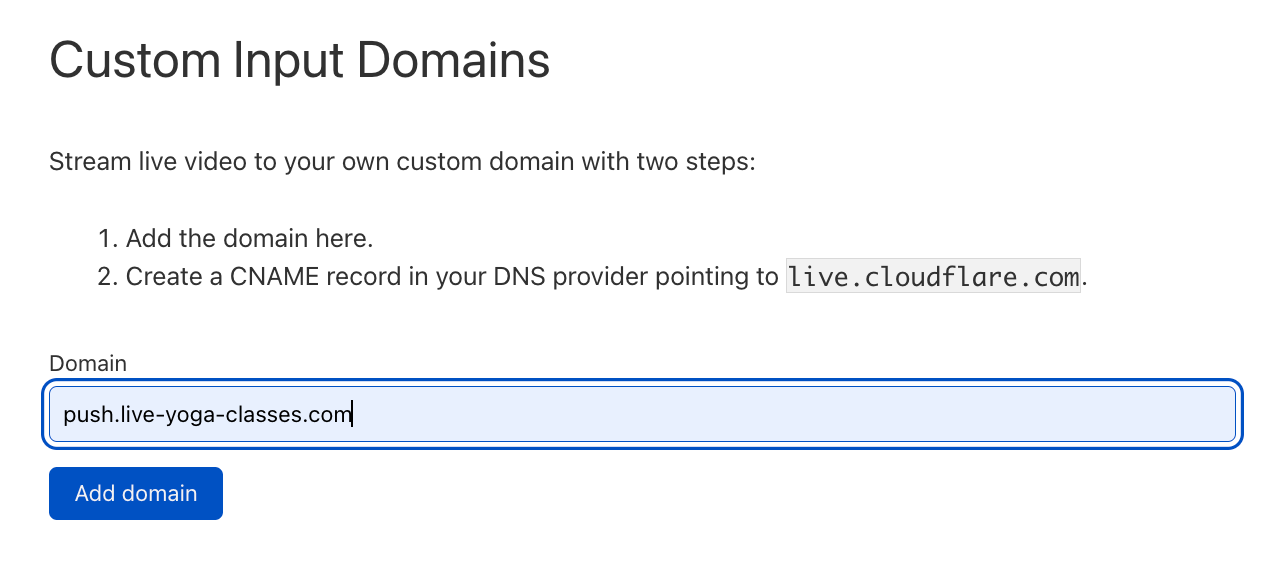

Configuring Custom Domain for Live Ingestion

To begin configuring your custom domain, add the domain to your Cloudflare account as a regular zone.

Next, CNAME the domain to live.cloudflare.com.

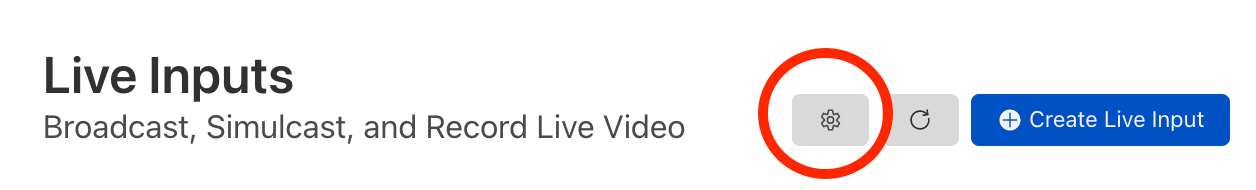

Assuming you have a Stream subscription, visit the Inputs page and click the Settings icon.

Next, add the domain you configured in the previous step as a Live Ingest Domain.

If your domain is successfully added, you will see a confirmation.

Once you’ve added your ingest domain, test it by changing your existing configuration in your broadcasting software to your ingest domain. You can read the complete docs and limitations in the Stream Live developer docs.
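If you manage many broadcaster configurations, switching to a custom ingest domain amounts to swapping the host in each ingest URL. The sketch below assumes the scheme, port, path, and query string stay the same and only the hostname changes; `withIngestDomain` is a hypothetical helper. Because Stream Live accepts only RTMPS and SRT, it rejects any other scheme.

```javascript
// Hypothetical helper: swaps the host in an RTMPS or SRT ingest URL for a custom ingest
// domain, keeping the scheme, port, path and query (e.g. SRT parameters) unchanged.
function withIngestDomain(ingestUrl, customDomain) {
  // Capture scheme, host, and everything after the host (":port/path?query").
  const match = /^(rtmps|srt):\/\/([^/:?]+)(.*)$/.exec(ingestUrl);
  if (!match) {
    // Plain RTMP and other schemes are not accepted for Stream Live ingest.
    throw new Error(`Unsupported ingest URL: ${ingestUrl}`);
  }
  const [, scheme, , rest] = match;
  return `${scheme}://${customDomain}${rest}`;
}

module.exports = { withIngestDomain };
```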

What’s Next

Besides the branding upside (you no longer have to instruct your users to configure a domain such as live.cloudflare.com), custom domains help you avoid vendor lock-in and migrate seamlessly. For example, if you have an existing live video pipeline that you are considering moving to Stream Live, custom domains make the migration one step easier because you no longer have to ask your users to change any settings in their broadcasting app.

A natural next step is to support custom keys. Currently, your users must still use keys that are provided by Stream Live. Soon, you will be able to bring your own keys. Custom domains combined with custom keys will help you migrate to Stream Live with zero breaking changes for your end users.

Cloudflare Stream simplifies creator management for creator platforms

Post Syndicated from Ben Krebsbach original https://blog.cloudflare.com/stream-creator-management/

Creator platforms across the world use Cloudflare Stream to rapidly build video experiences into their apps. These platforms serve a diverse range of creators, enabling them to share their passion with their audiences. While working with creator platforms, we learned that many Stream customers track video usage on a per-creator basis in order to answer critical questions such as:

- “Who are our fastest growing creators?”

- “How much do we charge or pay creators each month?”

- “What can we do more of in order to serve our creators?”

Introducing the Creator Property

Creator platforms enable artists, teachers and hobbyists to express themselves through various media, including video. We built Cloudflare Stream for these platforms, enabling them to rapidly build video use cases without needing to build and maintain a video pipeline at scale.

At its heart, every creator platform must manage ownership of user-generated content. When a video is uploaded to Stream, Stream returns a video ID. Platforms using Stream have traditionally had to maintain their own index to track content ownership. For example, when a user with internal user ID 83721759 uploads a video to Stream with video ID 06aadc28eb1897702d41b4841b85f322, the platform must maintain a database table of some sort to keep track of the fact that Stream video ID 06aadc28eb1897702d41b4841b85f322 belongs to internal user 83721759.

With the introduction of the creator property, platforms no longer need to maintain this index. Stream already has a direct creator upload feature to enable users to upload videos directly to Stream using tokenized URLs and without exposing account-wide auth information. You can now set the creator field with your user’s internal user ID at the time of requesting a tokenized upload URL:

curl -X POST "https://api.cloudflare.com/client/v4/accounts/023e105f4ecef8ad9ca31a8372d0c353/stream/direct_upload" \
  -H "X-Auth-Email: <EMAIL>" \
  -H "X-Auth-Key: c2547eb745079dac9320b638f5e225cf483cc5cfdda41" \
  -H "Content-Type: application/json" \
  --data '{"maxDurationSeconds":300,"expiry":"2021-01-02T02:20:00Z","creator": "<CREATOR_ID>", "thumbnailTimestampPct":0.529241,"allowedOrigins":["example.com"],"requireSignedURLs":true,"watermark":{"uid":"ea95132c15732412d22c1476fa83f27a"}}'

When the user uploads the video, the creator property is automatically set to your internal user ID and can be used for operations such as pulling analytics data for your creators.
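For platforms that request upload URLs from a backend, the same request can be made from Node.js. This is a minimal sketch under a few assumptions: it authenticates with an API token via an `Authorization: Bearer` header instead of the email and key shown above, it expects the standard Cloudflare API envelope (`success`, `errors`, `result`) with `result.uploadURL` and `result.uid`, and `createCreatorUpload` and its `fetchImpl` parameter are hypothetical names (the parameter lets you inject `fetch` on Node versions without a global one, or a stub in tests).

```javascript
// Minimal sketch: requests a direct upload URL with the creator field set.
// Assumptions: API-token auth, response envelope { success, errors, result: { uploadURL, uid } }.
async function createCreatorUpload({ accountId, apiToken, creatorId, maxDurationSeconds = 300, fetchImpl = fetch }) {
  const url = `https://api.cloudflare.com/client/v4/accounts/${accountId}/stream/direct_upload`;
  const res = await fetchImpl(url, {
    method: 'POST',
    headers: {
      Authorization: `Bearer ${apiToken}`,
      'Content-Type': 'application/json',
    },
    // The creator field ties the future upload to your internal user ID.
    body: JSON.stringify({ maxDurationSeconds, creator: creatorId }),
  });
  const payload = await res.json();
  if (!payload.success) {
    throw new Error(`direct_upload failed: ${JSON.stringify(payload.errors)}`);
  }
  // Hand uploadURL to the client; keep uid if you want to reference the video later.
  return { uploadURL: payload.result.uploadURL, uid: payload.result.uid };
}

module.exports = { createCreatorUpload };
```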

Query By Creator Property

Setting the creator property on your video uploads is just the beginning. You can now filter Stream Analytics via the Dashboard or the GraphQL API using the creator property.

Previously, if you wanted to generate a monthly report of all your creators and the number of minutes of watch time recorded for their videos, you’d likely use a scripting language such as Python to do the following:

- Call the Stream GraphQL API requesting a list of videos and their watch time

- Traverse through the list of videos and query your internal index to determine which creator each video belongs to

- Sum up the video watch time for each creator to get a clean report showing you video consumption grouped by the video creator
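For comparison, the manual approach boils down to a join and a group-by. In this sketch the per-video stats and the video-to-creator `Map` are hypothetical shapes standing in for the GraphQL response and the platform’s internal index.

```javascript
// Sketch of the manual approach: join per-video watch time with an internal
// video-to-creator index, then sum minutes per creator. Input shapes are hypothetical.
function minutesByCreator(videoStats, creatorIndex) {
  const totals = new Map();
  for (const { videoId, minutesViewed } of videoStats) {
    // Look up the owner in the platform's own index; unindexed videos are grouped as "unknown".
    const creator = creatorIndex.get(videoId) ?? 'unknown';
    totals.set(creator, (totals.get(creator) ?? 0) + minutesViewed);
  }
  // Return [creator, minutes] pairs sorted by total watch time, highest first.
  return [...totals.entries()].sort((a, b) => b[1] - a[1]);
}

module.exports = { minutesByCreator };
```

Every step here depends on keeping the index in sync with Stream, which is the bookkeeping the creator property removes.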

The creator property eliminates this three-step manual process. You can make a single call to the GraphQL API to request a list of creators and the consumption of their videos for a given time period. Here is an example GraphQL API query that returns minutes viewed by creator:

query {
  viewer {
    accounts(filter: { accountTag: "<ACCOUNT_ID>" }) {
      streamMinutesViewedAdaptiveGroups(
        limit: 10
        orderBy: [sum_minutesViewed_DESC]
        filter: { date_geq: "2022-04-01", date_lt: "2022-05-01" }
      ) {
        sum {
          minutesViewed
        }
        dimensions {
          creator
        }
      }
    }
  }
}
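The query above can also be sent from code. This sketch assumes an API token with analytics read access, the `https://api.cloudflare.com/client/v4/graphql` endpoint, and a standard GraphQL response with `data` and `errors`. It requests a date range with an inclusive start (`date_geq`) and an exclusive end (`date_lt`). `buildCreatorQuery`, `minutesViewedByCreator`, and the injected `fetchImpl` are hypothetical names.

```javascript
// Builds the "minutes viewed by creator" query. JSON.stringify produces a
// double-quoted string literal, which GraphQL also accepts.
function buildCreatorQuery(accountId, start, end) {
  return `query {
  viewer {
    accounts(filter: { accountTag: ${JSON.stringify(accountId)} }) {
      streamMinutesViewedAdaptiveGroups(
        limit: 10
        orderBy: [sum_minutesViewed_DESC]
        filter: { date_geq: ${JSON.stringify(start)}, date_lt: ${JSON.stringify(end)} }
      ) {
        sum { minutesViewed }
        dimensions { creator }
      }
    }
  }
}`;
}

// Sends the query and flattens the groups into { creator, minutesViewed } rows.
async function minutesViewedByCreator({ accountId, apiToken, start, end, fetchImpl = fetch }) {
  const res = await fetchImpl('https://api.cloudflare.com/client/v4/graphql', {
    method: 'POST',
    headers: { Authorization: `Bearer ${apiToken}`, 'Content-Type': 'application/json' },
    body: JSON.stringify({ query: buildCreatorQuery(accountId, start, end) }),
  });
  const { data, errors } = await res.json();
  if (errors && errors.length) {
    throw new Error(errors.map((e) => e.message).join('; '));
  }
  // Only one account was requested, so read the groups from the first entry.
  return data.viewer.accounts[0].streamMinutesViewedAdaptiveGroups.map((g) => ({
    creator: g.dimensions.creator,
    minutesViewed: g.sum.minutesViewed,
  }));
}

module.exports = { buildCreatorQuery, minutesViewedByCreator };
```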

Stream is focused on helping creator platforms innovate and scale. Matt Ober, CTO of NFT media management platform Piñata, says “By allowing us to upload and then query using creator IDs, large-scale analytics of Cloudflare Stream is about to get a lot easier.”

Getting Started

Read the docs to learn more about setting the creator property on new and previously uploaded videos. You can also set the creator property on live inputs, so the recorded videos generated from the live event will already have the creator field populated.

Being able to filter analytics is just the beginning. We can’t wait to enable more creator-level operations, so you can spend more time on what makes your idea unique and less time maintaining table stakes infrastructure.

History That Can Be Rewritten Can Also Be Denied: A Conversation with Tobias Flessenkemper

Post Syndicated from Слава Савова original https://toest.bg/tobias-flessenkemper-rheinischer-verein-interview/

Tobias Flessenkemper is a political scientist, head of the Council of Europe Office in Belgrade, and chair of the board of Rheinischer Verein, an association for the preservation of cultural monuments and the cultural landscape, headquartered in Cologne, Germany. It was founded in 1906, and today it has more than 4,000 volunteer members. The association acts as a mediator between citizens, institutions and professional organizations, and takes an active part in campaigns to protect cultural heritage at risk.

Rheinischer Verein has existed for more than a century. Tell us about how your initiative has developed.

In 1906, western Germany was going through intensive industrialization and urbanization on an enormous scale. Large infrastructure projects were being built (railways, dams, coal mines), accompanied by deforestation and the industrialization of agriculture and livestock farming. At that time, medieval fortifications were being torn down in many places and new city centers were being built. As in France, the monasteries that had dominated these lands for a millennium were being turned into factories. The urban and natural environment was changing completely, accompanied by a feeling of belonging to something that was growing and losing its roots. All of this was happening without any discussion of preservation.

It was under these conditions that the community that founded today’s association came together. Its members ranged from ordinary citizens to prominent public figures, from craftsmen to scholars, but all of them had a keen interest in flora and fauna. They decided that something had to be done to manage the region better and to keep these processes from running unchecked. It was a very early environmental movement, but also a very early urban planning movement.

Today our association brings together people from all walks of life. Here you can find a young anti-globalist with postcolonial views who has just graduated in anthropology, alongside a craftsman who restores woodcarvings in medieval villages, next to a politician, a mayor, or someone like me who is genuinely interested in history and culture. It is precisely this diversity that creates a strong and lasting community.

Last year’s winners of the most prestigious architecture award, the Pritzker Prize, were Anne Lacaton and Jean-Philippe Vassal, who focus on adapting existing buildings. It is an approach that requires minimal use of new resources and is key to tackling the climate crisis. But how applicable is it to postwar heritage, whose life cycle is often shorter?

Today we work together with the young Architects for Future movement, whose name references the Fridays for Future initiative. Their main recommendation is not to demolish buildings. A certain amount of energy has already been invested in building them, and it cannot be offset by other savings, even if they are replaced with “green” buildings. The postwar heritage is huge because of what happened in this region. Many of the towns and villages along the Rhine were destroyed during the Second World War and demolished further afterwards, because interest in new construction was very high; it is one of the most profitable activities in our economy.

Today, when a building no longer offers the comforts people need, it is simply replaced with a new one. This is especially common with certain types of buildings, such as offices. Their lifespan is tied to tax incentives and depreciation rules. If it can be shortened to 30 to 50 years, building new offices becomes attractive to investors, a kind of fast fashion. In Brussels this process has been perfected: the life of buildings is limited to 30 years. But whatever new argument comes up for building new offices, it makes no sense.

Take, for example, the large housing estates like those in Eastern Europe, with high-rise blocks and green spaces between them. They are very easy to renovate and adapt. A lot of energy has already been invested in improving them (thermal insulation, new windows, and so on), and the structure of the buildings is sound. The pressure to demolish them is much lower. In most cases they have already been renovated or are being renovated now.

The other type of building we are most engaged with is public infrastructure: libraries, town halls, schools and other public buildings, often located in city centers. The fate of these buildings and their sites depends on political decisions, and that is precisely what creates the most difficulties. Most often we encounter the typical pharaonic ambition of mayors and city councillors to build something they can bequeath to the city. It is a mausoleum mindset, for the sake of which valuable old buildings are demolished to make way for new “iconic” ones. And this often happens far too easily.

Today the “green” argument is used against postwar architecture, claiming that these buildings cannot be repaired. If the public coffers are not full, the decision is often made to tear down, say, the old theater and build a shopping center in its place. We constantly have to warn the authorities against such ill-considered decisions. We do not know how things will change in the near future. Adaptation could offer a completely different solution.

Removing an old building, even the most mundane postwar building, takes away our chance to create new experiences in that space and the freedom to let its functions change on their own. I would say the same about the old cultural centers, the palaces of culture. All that is needed is to open their doors and restore people’s access to these places, and then we will find out what can be done with them. But that kind of vision is often missing from the imagination of city governments. This may be the best solution in terms of energy efficiency, after which the building can be modernized. I think this is part of the tradition of heritage. Let us first study things carefully and then decide how to act.

Rheinischer Verein also acts as a mediator between citizens and institutions. Your association relies on the help of many volunteers but at the same time works at the institutional level. How do citizens take part in managing heritage?

We are in a region where preserving cultural heritage is an important part of public debate. This has its upsides and downsides, because we are surrounded by many artifacts and by the whole cultural and religious tradition connected with them.

People here often mobilize, but usually only when the environment they know starts to change. Then they need expert help, they come to us, and that is where the process we call mediation begins. The first step is to clarify which heritage is protected and how it is legally defined in our region, because not everything counts as heritage from a legal standpoint. We help people get in touch with the responsible authorities and start the procedure for granting protected status if there is none. When the building is privately owned, we help with the dialogue with the owner.

In other words, we help different people start a conversation. In this way, particular problems with the urban environment or a given building become visible. Monumental sgraffito and mosaic works, old neon signs and the like are now at the center of the debate because facades are being re-clad. These works from the 1950s are sometimes kitschy. But they are also charming. And if they disappear, part of the character of those places will be lost. They serve as a visual anchor for a place.

Mediation can also follow a different sequence. It can be part of a mobilization to protect a building from premature, ill-considered interventions. One example is Ebertplatz in Cologne. It is a multi-level square that connects various streets, pedestrian zones and metro stations through a single concrete structure. Our organization called for the square to be given protected status, and this caused a huge scandal: how could this place possibly deserve protection, it is ugly, just a hole of utilitarian architecture with no value. In fact, the goal was not protected status at any cost, but rather a way to start a discussion about why road, pedestrian and underground infrastructure should also be preserved. Thanks to the discussion about heritage, the whole process has turned 180 degrees over the past five years.

Let us end the conversation with the conclusion that definitions of heritage are a variable, subject to constant adjustment under the pull of political and economic forces…

That is an open question that has to be part of the debate. One of the most recent issues we are involved in is an amendment to the monument protection law in our region. The government is trying to change the procedure, which currently consists of two steps. The current procedure is a good anti-corruption safeguard, because the process follows the “four-eyes” principle. That is, if the municipality wants to change the status of certain sites, it has to coordinate this with a regional conservation agency. And if the two disagree, they have to reach an agreement; nothing can move in any direction without consent. This procedure allows more time to react, but it also prevents decisions from being made hastily.

The other element, very specific to Germany and especially to the western part of the country, is the role of the churches, which are still major property owners despite the secularization of the 18th and 19th centuries. They have special rules regarding places of worship and want to decide about their property on their own. This is, of course, a kind of discrimination and a very complicated case, because different owners end up with different rights.

Unlike in Eastern Europe, the residential neighborhoods built here in the 1950s and 1960s have many churches, often centrally located. From an economic point of view, it is probably better for the church to build something else on its sites, housing for example, since there are no longer as many worshippers. From our point of view this is a serious danger, because postwar church buildings are very important not only as architecture but also as landmarks in the neighborhoods built after the war. If we remove them or other important public buildings, the urban environment will change drastically.

As we know from the history of the 19th and 20th centuries, sometimes things start to change too quickly, and then we suddenly lose cultural heritage. That is why we cannot leave it to chance. The law is very important, and for monuments to be protected, they must carry significant legal weight. When the old buildings around us disappear, political risks appear as well. When history can be rewritten, it can also be denied: there will be no artifacts left, and everything can be reduced to practical solutions.

This is, for example, part of a discussion in Austria, initiated by the right-wing Freedom Party of Austria, about how many memorials are needed to commemorate the concentration camps. The party’s representatives argued that perhaps two would be enough, because something could be done with virtual reality. If the history of the camps becomes virtual reality, it will gradually turn into a different history. We need the places marked by these tragic moments. We also need a multilayered urban environment, full of its own contradictions.

Header photo: Visitors at the ruins of a castle in Bacharach. It is one of three castles the association bought soon after its founding in order to preserve them. © Rheinischer Verein

Venus De Milo: Disarming Beauty

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=98OFF4YLE7w

How the Interior Ministry Nurtures Its Own Anti-State Aggression

Post Syndicated from Светла Енчева original https://toest.bg/kak-mvr-si-otglezhda-antidurzhavna-agresiya/

Do you remember the fire at the Sofia mosque 11 years ago? In March 2011, the Ataka party began a protest in front of the Bulgarian National Television (BNT) building against the news in Turkish. Party activists occupied the space from No. 29 San Stefano Street to the metro underpass at Orlov Most and handed out leaflets to passersby. A month later they were still there. At that point I wrote an open letter to Mayor Yordanka Fandakova asking how long Sofia Municipality would tolerate Ataka’s action, which called on public television to disregard the law and restrict the right of some Bulgarian citizens to news in their own language. After some passing of the buck within the municipality, it became clear that Volen Siderov’s party had permission for its protest until the end of June, and that it had the right to hold it because it was a political party.

Another month later, Ataka’s protest, which had by then become a tradition, moved to Sofia’s Banya Bashi mosque to protest against the sound of its loudspeakers, again with the municipality’s permission. During Friday prayers, party activists attacked the worshipping Muslims with verbal abuse, eggs, stones, rods and pipes, and set fire to several of their prayer rugs. The police officers at the scene intervened only when the aggression got out of control. Five Muslims, five police officers, and the chin of Ataka MP Denitsa Gadzheva were injured. Because the institutions failed to respond adequately to the attack and to investigate it, Bulgaria was found in violation by the European Court of Human Rights in Strasbourg.

Similar acts of aggression, so far on a smaller scale, have been taking place recently under the indifferent gaze of the institutions.

This time the victims are not Muslims, because the line of conflict is different: supporters of Russia against supporters of Ukraine. Another difference from 2011 is that the responsibility of the Interior Ministry is now greater than that of the local authorities, because supporters of Putin’s regime do not even need official permission to get what they want with the help of law enforcement.

For the comfort of their small protest in front of the National Assembly, the supporters of “peace”, that is, of Russia’s invasion of Ukraine, have carte blanche from Sofia Municipality. Not so the planned event to wrap the Monument to the Soviet Army on 4 May. It was organized by the civic initiative for the dismantling of the monument and duly coordinated with the municipality and the Sofia Directorate of Internal Affairs (SDVR). Before it began, representatives of the Vazrazhdane party arrived at the monument.

Although Kostadinov’s party had no right to be at the site of a coordinated event, law enforcement did not send them away. What is more, the police helped them achieve their goal of disrupting the wrapping of the monument, allowing them to pelt the legitimate participants with various objects and to steal one of their Ukrainian flags. In the end, the police pushed out the lawful protest, leaving Kostadinov’s supporters on the square. A fight then broke out between members of the two groups in the underpass at Sofia University.

Ironically, Sofia Municipality itself has also fallen victim to the hatred it has tolerated for many years.

On 5 May, two Vazrazhdane MPs rode a cherry picker up to the balcony of the municipal building on Moskovska Street and took down the Ukrainian flag that had been placed there following a decision of the City Council. This happened in full view of police officers who did nothing. Their argument for not intervening was that the MPs had invoked their parliamentary immunity. By that logic, any politician with immunity is entitled to take whatever they like from other people’s property. Or rather, whatever they dislike. True, the municipal administration is public, but Vazrazhdane has neither representatives in it nor any authority to interfere in its decisions or to dispose of its property.

On 9 May, in front of the Russian Embassy, the police again let pro-Putin protesters in, even though the site was the end point of a march in support of Ukraine that had been coordinated with the municipality and the SDVR. A dozen people, guarded by several times as many police officers, undisturbed, played Soviet and Russian patriotic music loud enough to be heard across the Izgrev neighborhood all the way to Dianabad. Meanwhile, law enforcement did not allow the thousands of participants in the lawful march to leave toys smeared with red paint, a symbol of the children killed in Russia’s war against Ukraine.

Predictably, the Interior Ministry itself is now becoming a target of the aggression it tolerates.

On 11 May, Vazrazhdane’s protest against support for Ukraine, which began in front of parliament, veered off toward the Sofia Municipality building. There, party activists tried to take down the new Ukrainian flag as well, which the municipal administration had put up after the old one was stolen. This time law enforcement did not let them, and the anger of the assembled Russophile nationalists turned against the police. The protesters even tried to move a gendarmerie van by pushing it perpendicular to its direction of travel, perhaps in an attempt to bring it closer to the balcony with the flag. But the laws of physics prevailed over the zeal of the Vazrazhdane supporters, who achieved nothing except rocking the police vehicle.

Vazrazhdane’s frustration is understandable: lately the Interior Ministry has shown support for Russia’s supporters at the expense of Ukraine’s and has gone out of its way to make them comfortable. If, for example, the former are protesting in front of parliament and the latter behind it, the space behind the National Assembly is fenced off on almost every side, and those showing solidarity with Ukraine have to walk around for a long time before they find a way in, even though they vastly outnumber the other group.

Interior Minister Boyko Rashkov finally broke his silence about the Interior Ministry’s actions at a hearing in parliament.

“We at the Interior Ministry are critical of both sides. There were police officers in the underpass [at Sofia University, editor’s note], but a citizen was beaten there. We have reason to be dissatisfied with our officers, if only because it need not have come to this citizen’s head being split open, whether he loves or hates,” Rashkov said. He justified blocking the lawful event to wrap the Monument to the Soviet Army as follows: “Some are guarding the Monument to the Soviet Army, others want to destroy it. We have made a commitment to the Russian Embassy and other missions in our country to look after these monuments, and we are doing so.”

With these words, Rashkov displayed political bias and a rather cavalier interpretation of the functions of the ministry he heads. As part of the executive branch, its mission is to maintain public order while upholding the law and the rights of different groups and individuals. The Interior Ministry’s job is not to take a stance (critical or uncritical), much less to make commitments to embassies regarding monuments that are not their property. Nor is it to interpret symbolic art actions as destruction. Incidentally, if a legitimate decision is ever made to dismantle the Monument to the Soviet Army, will the interior minister prevent it from being carried out because he has a commitment to the Russian Embassy?

Meanwhile, Vazrazhdane’s rhetoric has long since overstepped even the most elastic notions of national security.

On 18 March, for example, the party’s MP Elena Guncheva warned on Facebook what could happen if Bulgaria sent military aid to Ukraine: “Don’t despair, there is some hope: Russia has high-pointed weapons, so it can hit the Council of Ministers precisely.” She also suggested that the US ambassador to Bulgaria become a target of a Russian attack: “We could even send them a link with Mustafa’s coordinates.” That Russia has no spare high-precision missiles (not “high-pointed” ones, as Guncheva puts it) to fire at Sofia is evident from the fact that it is sending more and more of its less accurate ones at Ukraine, where they land in residential areas. But calling on a foreign state to attack Bulgarian institutions and diplomatic missions in the country crosses every line.

Party chairman Kostadin Kostadinov sounds no more peaceful; paradoxically, he accuses the government of “serving foreign interests.” In early May he declared from the parliamentary rostrum: “This government wants war. Since the government wants this war, it will get it. We Bulgarians will give it to them.” Kostadinov is also calling for civil disobedience.

Right now, Russia is in no position to attack Bulgaria.

It has enough problems in Ukraine, where hopes of even a symbolic victory for its “special military operation” are melting away by the day. Calls for attack, violence, and a change to the constitutional order in this country, however, are anything but harmless. The Interior Ministry apparently has not learned that verbal aggression tends to escalate into physical aggression if it is not stopped in time.

Vazrazhdane is acting methodically, expanding its electoral support along with the boundaries of its extremist rhetoric. Boyko Rashkov is more loyal to his political roots in the BSP than to the policy of the government he serves in. The government itself, meanwhile, has enough problems without also having to bring Rashkov into line. All of this is clear. But when this fragile balance can no longer be held, let us not wonder where it came from.

Header photo: A still from Dnevnik’s broadcast of Minister Rashkov’s previous hearing before parliament on 13 April 2022.

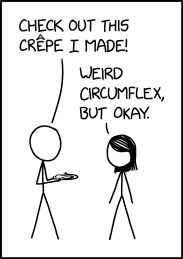

Crêpe

Post Syndicated from original https://xkcd.com/2619/

Surveillance by Driverless Car

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/05/surveillance-by-driverless-car.html

San Francisco police are using autonomous vehicles as mobile surveillance cameras.

Privacy advocates say the revelation that police are actively using AV footage is cause for alarm.

“This is very concerning,” Electronic Frontier Foundation (EFF) senior staff attorney Adam Schwartz told Motherboard. He said cars in general are troves of personal consumer data, but autonomous vehicles will have even more of that data from capturing the details of the world around them. “So when we see any police department identify AVs as a new source of evidence, that’s very concerning.”

Node.js 16.x runtime now available in AWS Lambda

Post Syndicated from James Beswick original https://aws.amazon.com/blogs/compute/node-js-16-x-runtime-now-available-in-aws-lambda/

This post is written by Dan Fox, Principal Specialist Solutions Architect, Serverless.

You can now develop AWS Lambda functions using the Node.js 16 runtime. This version is in active LTS status and is considered ready for general use. To use it, specify a runtime parameter value of nodejs16.x when creating or updating functions, or use the appropriate container base image.

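The Node.js 16 runtime includes support for ES modules and top-level await, which were added to the Node.js 14 runtime in January 2022. Top-level await is especially useful with Provisioned Concurrency: asynchronous initialization code written at the top level runs during the function’s initialization phase, which Provisioned Concurrency performs before invocations arrive, reducing cold start times.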

This runtime version is supported by functions running on either Arm-based AWS Graviton2 processors or x86-based processors. Using the Graviton2 processor architecture option allows you to get up to 34% better price performance.

We recognize that customers have been waiting for some time for this runtime release. We hear your feedback and plan to release the next Node.js runtime version in a timelier manner.

AWS SDK for JavaScript

The Node.js 16 managed runtimes and container base images bundle the AWS JavaScript SDK version 2. Using the bundled SDK is convenient for a few use cases. For example, developers writing short functions via the Lambda console or inline functions via CloudFormation templates may find it useful to reference the bundled SDK.

In general, however, including the SDK in the function’s deployment package is good practice. Including the SDK pins a function to a specific minor version of the package, insulating code from SDK API or behavior changes. To include the SDK, add the SDK package and version to the dependencies object of the function’s package.json file. Use a package manager such as npm or yarn to download and install the library locally before building your deployment package and deploying it to the AWS Cloud.

Customers who take this route should consider using the JavaScript SDK, version 3. This version of the SDK contains modular packages. This can reduce the size of your deployment package, improving your function’s performance. Additionally, version 3 contains improved TypeScript compatibility, and using it will maximize compatibility with future runtime releases.

Language updates

With this release, Lambda customers can take advantage of new Node.js 16 language features, including:

- Toolchain and compiler upgrades, including prebuilt binaries for Apple Silicon.

- Stable timers promises API.

- RegExp match indices.

- Faster calls with argument size mismatch.

- An update to V8 release 9.4.

Prebuilt binaries for Apple Silicon

Node.js 16 is the first Node.js release to ship prebuilt binaries for Apple Silicon. Customers using Apple computers with M1 processors can now develop Lambda functions locally with this runtime version.

Stable timers promises API

The timers promises API offers timer functions that return Promise objects, so code can await a delay instead of nesting callbacks. This API is available in both ES modules and CommonJS.

You may designate your function as an ES module by changing the file name extension of your handler file to .mjs, or by specifying “type” as “module” in the function’s package.json file. Learn more about using Node.js ES modules in AWS Lambda.

// index.mjs
import { setTimeout } from 'timers/promises';

export async function handler() {
  // Pause for 2,000 ms without blocking the event loop
  await setTimeout(2000);
  return;
}

RegExp match indices

The RegExp match indices feature gives developers the start and end indices of a match and of each of its capture groups. Add the d flag to a regular expression to enable this feature; the match result then includes an indices array of [start, end] pairs.

// handler.js
exports.lambdaHandler = async () => {
  // The d flag populates matcher.indices with [start, end] pairs
  const matcher = /(AWS )(Lambda)/d.exec('AWS Lambda');

  console.log('match: ' + matcher.indices[0]); // 0,10
  console.log('first capture group: ' + matcher.indices[1]); // 0,4
  console.log('second capture group: ' + matcher.indices[2]); // 4,10
};
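
Match indices also apply to named capture groups, whose positions appear under indices.groups. The sketch below uses a hypothetical helper, locateService, to return the position of the word that follows "AWS" in a string; the function name and pattern are illustrative only.

```javascript
// Sketch: with the d flag, named capture groups also get [start, end]
// pairs, exposed under match.indices.groups
function locateService(text) {
  const match = /(?<vendor>AWS) (?<service>\w+)/d.exec(text);
  if (match === null) {
    return null; // no "AWS <word>" in the input
  }
  const [start, end] = match.indices.groups.service;
  return { service: match.groups.service, start, end };
}

console.log(locateService('Deploy to AWS Lambda today'));
// { service: 'Lambda', start: 14, end: 20 }
```

The end index is exclusive, so text.slice(start, end) returns the captured substring.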

Working with TypeScript

Many developers using Node.js runtimes in Lambda develop their code using TypeScript. To better support TypeScript developers, we have recently published new documentation on using TypeScript with Lambda, and added beta TypeScript support to the AWS SAM CLI.

We are also working on a TypeScript version of Lambda Powertools, a suite of utilities that simplifies the adoption of best practices such as tracing, structured logging, custom metrics, and more. Currently, AWS Lambda Powertools for TypeScript is in beta developer preview.

Runtime updates

To help keep Lambda functions secure, AWS will update the Node.js 16 runtime with all minor updates released by the Node.js community for functions that use the zip archive format. For Lambda functions packaged as a container image, pull, rebuild, and deploy the latest base image from Docker Hub or the Amazon ECR Public Gallery.

Amazon Linux 2

The Node.js 16 managed runtime, like Node.js 14, Java 11, and Python 3.9, is based on an Amazon Linux 2 execution environment. Amazon Linux 2 provides a secure, stable, and high-performance execution environment to develop and run cloud and enterprise applications.

Conclusion

Lambda now supports Node.js 16. Get started building with Node.js 16 by specifying a runtime parameter value of nodejs16.x when creating your Lambda functions using the zip archive packaging format.

You can also build Lambda functions in Node.js 16 by deploying your function code as a container image using the Node.js 16 AWS base image for Lambda. You can read about the Node.js programming model in the AWS Lambda documentation to learn more about writing functions in Node.js 16.

For existing Node.js functions, review your code for compatibility with Node.js 16 including deprecations, then migrate to the new runtime by changing the function’s runtime configuration to nodejs16.x.

For more serverless learning resources, visit Serverless Land.

How Paytm modernized their data pipeline using Amazon EMR

Post Syndicated from Rajat Bhardwaj original https://aws.amazon.com/blogs/big-data/how-paytm-modernized-their-data-pipeline-using-amazon-emr/

This post was co-written by Rajat Bhardwaj, Senior Technical Account Manager at AWS, and Kunal Upadhyay, General Manager at Paytm.

Paytm is India’s leading payment platform, pioneering the digital payment era in India with 130 million active users. Paytm operates multiple lines of business, including banking, digital payments, bill recharges, e-wallet, stocks, insurance, lending, and mobile gaming. At Paytm, the Central Data Platform team is responsible for turning disparate data from multiple business units into insights and actions for executive management and merchants (small, medium, and large businesses that accept payments through Paytm platforms).

The Data Platform team modernized their legacy data pipeline with AWS services. The data pipeline collects data from different sources and runs analytical jobs, generating approximately 250K reports per day, which are consumed by Paytm executives and merchants. The legacy data pipeline was set up on premises using a proprietary solution and didn’t use open-source Hadoop stack components such as Spark or Hive. This legacy setup was resource-intensive, with high CPU and I/O requirements. Analytical jobs took approximately 8–10 hours to complete, which often led to service level agreement (SLA) breaches. The legacy solution was also prone to outages due to higher-than-expected hardware resource consumption, and its hardware and software limitations constrained the system’s ability to scale during peak load. Finally, the data models used in the legacy setup reprocessed the entire dataset on every run, which increased processing time.

In this post, we demonstrate how the Paytm Central Data Platform team migrated their data pipeline to AWS and modernized it using Amazon EMR, Amazon Simple Storage Service (Amazon S3), and the underlying AWS Cloud infrastructure, along with Apache Spark. We optimized hardware usage and reduced analytical processing time, resulting in shorter turnaround times for generating insightful reports, while maintaining operational stability and scaling regardless of the size of the daily ingested data.

Overview of solution

The key to modernizing a data pipeline is an incremental approach, which shortens the end-to-end cycle from ingesting data to getting meaningful insights from it. To achieve this, the pipeline must ingest incremental data, process only delta records, and keep analytical processing time low. We configured the data sources to carry forward unchanged records and tuned the Spark jobs to analyze only newly inserted or updated records. We used temporal data columns to hold the incremental datasets until they’re processed. Data-intensive Spark jobs run in an incremental, on-demand deduplicating mode. This eliminates redundant data tuples from the data lake and reduces the total data volume, which saves compute and storage capacity. We also restricted scans of raw tables to only the changed record set, which reduced scanning time by approximately 90%. Incremental data processing also reduces the total processing time.
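
The post does not include code for this step, so the following is only a minimal JavaScript sketch of the general watermark-based pattern: select rows changed since the last run, then upsert them so each key keeps its latest version while unchanged rows are carried forward. Paytm’s actual jobs are Spark jobs written in Scala; the record fields id and updatedAt and the watermark mechanism are assumptions made for illustration.

```javascript
// Hypothetical record shape: { id, updatedAt (epoch ms), ...payload }

// Keep only rows inserted or updated since the last successful run
function selectDelta(sourceRows, lastWatermark) {
  return sourceRows.filter((row) => row.updatedAt > lastWatermark);
}

// Upsert the delta into the current dataset, keeping one row per id
// (the most recent version); unchanged rows are carried forward as-is
function mergeDelta(currentRows, deltaRows) {
  const byId = new Map(currentRows.map((row) => [row.id, row]));
  for (const row of deltaRows) {
    const existing = byId.get(row.id);
    if (existing === undefined || row.updatedAt >= existing.updatedAt) {
      byId.set(row.id, row);
    }
  }
  return [...byId.values()];
}

function runIncremental(currentRows, sourceRows, lastWatermark) {
  const delta = selectDelta(sourceRows, lastWatermark);
  const merged = mergeDelta(currentRows, delta);
  // Advance the watermark to the newest change actually processed
  const newWatermark = delta.reduce(
    (max, row) => Math.max(max, row.updatedAt),
    lastWatermark
  );
  return { merged, processed: delta.length, newWatermark };
}
```

Only the delta is scanned and merged on each run, which is what keeps processing time proportional to the volume of changes rather than to the size of the whole dataset.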

At the time of this writing, the existing data pipeline has been operationally stable for 2 years. Although this modernization was vital, implementing the changes carried a risk of an operational outage. The new system also had to handle data skew with an appropriate scaling strategy. Stakeholders expected zero downtime, because Paytm’s CXOs, executive management, and merchants rely on the reports generated by this system every day.

The following diagram illustrates the data pipeline architecture.

Benefits of the solution

The Paytm Central Data Office team, consisting of 10 engineers, worked with the AWS team for approximately 4 months to complete this modernization and migration project.

Modernizing the data pipeline with Amazon EMR 6.3 helped efficiently scale the system at a lower cost. Amazon EMR managed scaling helped reduce the scale-in and scale-out time and increase the usage of Amazon Elastic Compute Cloud (Amazon EC2) Spot Instances for running the Spark jobs. Paytm is now able to utilize a Spot to On-Demand ratio of 80:20, resulting in higher cost savings. Amazon EMR managed scaling also helped automatically scale the EMR cluster based on YARN memory usage with the desired type of EC2 instances. This approach eliminates the need to configure multiple Amazon EMR scaling policies tied to specific types of EC2 instances as per the compute requirements for running the Spark jobs.
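
The post does not show the scaling configuration Paytm used. As a hedged sketch, one way to express an 80:20 Spot to On-Demand split with EMR managed scaling is to cap On-Demand capacity at 20% of the maximum, so that capacity above the cap comes from Spot instances (assuming the cluster’s instance groups or fleets are configured with Spot capacity). The helper name and its inputs are hypothetical; the ComputeLimits field names follow the EMR ManagedScalingPolicy API, but check the EMR documentation for validation rules before using such an object.

```javascript
// Hypothetical helper: builds a ManagedScalingPolicy object whose On-Demand
// cap is a fixed share of the maximum capacity (0.2 for an 80:20 split)
function buildManagedScalingPolicy({ minUnits, maxUnits, onDemandShare }) {
  if (!(minUnits >= 0 && maxUnits >= minUnits)) {
    throw new RangeError('expected 0 <= minUnits <= maxUnits');
  }
  if (!(onDemandShare >= 0 && onDemandShare <= 1)) {
    throw new RangeError('onDemandShare must be between 0 and 1');
  }
  return {
    ComputeLimits: {
      UnitType: 'Instances',
      MinimumCapacityUnits: minUnits,
      MaximumCapacityUnits: maxUnits,
      // Capacity above this cap is expected to come from Spot instances
      MaximumOnDemandCapacityUnits: Math.round(maxUnits * onDemandShare),
    },
  };
}
```

The resulting object could be supplied as the ManagedScalingPolicy parameter of the EMR PutManagedScalingPolicy API. The 80:20 ratio only holds once the cluster scales toward its maximum, which is an assumption about workload shape.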

In the following sections, we walk through the key tasks to modernize the data pipeline.

Migrate over 400 TB of data from the legacy storage to Amazon S3

The Paytm team built a proprietary data migration application in Scala using the open-source AWS SDK for Java for Amazon S3. The application can connect to multiple cloud providers and on-premises data centers and migrate the data to a central data lake built on Amazon S3.

Modernize the transformation jobs for over 40 data flows

Data flows are defined in the system for ingesting raw data, preprocessing it, and aggregating the data that the analytical jobs use for report generation. Data flows are developed in Scala on Apache Spark. We use Azkaban, a batch workflow job scheduler, to order and track the Spark job runs. Workflows are created on Amazon EMR to schedule these Spark jobs multiple times a day. We also implemented Spark optimizations to improve the operational efficiency of these jobs. We use Spark broadcast joins to handle data skew, which can otherwise lead to data spillage and extra storage needs. We also tuned the Spark jobs to avoid producing a large number of small files, a known problem with Spark if not handled effectively. This happens mainly because Spark is a parallel processing system: data is loaded by multiple tasks, and each task can write into multiple partitions. Data-intensive jobs are run using Spark stages.
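
To illustrate why a broadcast join sidesteps skew, here is a minimal JavaScript model of a broadcast hash join on in-memory arrays; it is not Spark code. In Spark, the same idea is requested with the broadcast hint from org.apache.spark.sql.functions. The table shapes (transactions and merchants) and the helper name are hypothetical.

```javascript
// Model of a broadcast hash join: the small table is turned into a hash map
// once, and every partition of the large table is joined against that map
// locally, so the large side never has to be shuffled by the join key.
function broadcastHashJoin(largePartitions, smallRows, key) {
  // Build phase: in Spark, this lookup structure is what gets broadcast
  const lookup = new Map(smallRows.map((row) => [row[key], row]));

  // Probe phase: each partition is processed independently (inner join)
  return largePartitions.map((partition) =>
    partition
      .filter((row) => lookup.has(row[key]))
      .map((row) => ({ ...lookup.get(row[key]), ...row }))
  );
}
```

A heavily skewed key, such as one merchant with many transactions, stays spread across whatever partitions it already occupies instead of being funneled into a single shuffle partition. This only works when the small side fits comfortably in executor memory.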

The following is the code snippet for the Scala jobs:

Validate the data

Accuracy of the data reports is vital for the modern data pipeline. The modernized pipeline has additional data reconciliation steps to improve the correctness of data across the platform, made possible by greater programmatic control over the processed data. In the legacy pipeline, we could only reconcile data after the entire data processing was complete. The modern data pipeline, however, reconciles all transactions at every processing step, which gives granular control over data validation and helps isolate the cause of any data processing errors. Before go-live, automated tests compared the data records generated by the legacy and modern systems to ensure the sanity of the data processed by the new system. Deduplication is done frequently via on-demand queries to eliminate redundant data, thereby reducing processing time. For example, transactions that have already been consumed by end clients but are still part of the dataset can be eliminated by deduplication, so that only newer transactions are processed for end-client consumption.
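
The automated legacy vs. modern comparison is not shown in the post. A minimal sketch of one common approach, comparing per-report row counts and totals and listing every report that differs, might look like this in JavaScript; the row shape (reportId, amount) and both helper names are hypothetical.

```javascript
// Hypothetical report row shape: { reportId, amount }, with amount in an
// integer minor unit (for example paise) so totals compare exactly
function summarize(rows) {
  const byReport = new Map();
  for (const { reportId, amount } of rows) {
    const summary = byReport.get(reportId) ?? { count: 0, total: 0 };
    summary.count += 1;
    summary.total += amount;
    byReport.set(reportId, summary);
  }
  return byReport;
}

// Compare per-report row counts and totals between the two pipelines
// and return every report whose figures differ
function reconcile(legacyRows, modernRows) {
  const legacy = summarize(legacyRows);
  const modern = summarize(modernRows);
  const empty = { count: 0, total: 0 };
  const mismatches = [];
  for (const reportId of new Set([...legacy.keys(), ...modern.keys()])) {
    const a = legacy.get(reportId) ?? empty;
    const b = modern.get(reportId) ?? empty;
    if (a.count !== b.count || a.total !== b.total) {
      mismatches.push({ reportId, legacy: a, modern: b });
    }
  }
  return mismatches;
}
```

An empty result means both pipelines agree on every report at this level of aggregation; a non-empty one points directly at the reports to investigate.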

The following sample query uses Spark SQL for on-demand deduplication of raw data at the reporting layer:

What we achieved as part of the modernization

With the new data pipeline, we reduced the compute infrastructure by approximately 75% (roughly a fourfold reduction), which saves compute cost. The legacy stack ran on over 6,000 virtual cores; optimization techniques let the same system run at an improved scale on approximately 1,500 virtual cores. After migrating to Amazon EMR, we also reduced the compute and storage capacity needed for 400 TB of data and 40 data flows. Spark optimizations reduced job runtime by 95% (from 8–10 hours to 20–30 minutes), CPU consumption by 95%, I/O by 98%, and overall computation time by 80%. The incremental data processing approach helped the system scale despite data skew, which wasn’t the case with the legacy solution.
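
The reported figures can be checked with simple arithmetic. The small helper below computes percentage reductions from the numbers in the paragraph above; pairing 8 hours with 20 minutes and 10 hours with 30 minutes, the endpoints of the reported ranges, is an assumption.

```javascript
// Percentage reduction from a "before" value to an "after" value
function percentReduction(before, after) {
  return ((before - after) / before) * 100;
}

console.log(percentReduction(6000, 1500)); // 75 (virtual cores)
console.log(6000 / 1500); // 4 (fourfold fewer cores)
console.log(percentReduction(8 * 60, 20)); // ≈ 95.8 (8 hours to 20 minutes)
console.log(percentReduction(10 * 60, 30)); // ≈ 95 (10 hours to 30 minutes)
```

A drop from 6,000 to 1,500 cores is therefore a 75% reduction, not 400%; the factor of 4 describes how many times smaller the new footprint is.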

Conclusion

In this post, we showed how Paytm modernized their data lake and data pipeline using Amazon EMR, Amazon S3, the underlying AWS Cloud infrastructure, and Apache Spark. These cloud and big data technologies helped address the challenges of operating a big data pipeline, in which the variety and volume of data from disparate sources add complexity to analytical processing.

By partnering with AWS, the Paytm Central Data Platform team built a modern data pipeline in a short amount of time. The pipeline reduces analytical processing time, scales efficiently, and generates high-quality reports for executive management and merchants every day.