Post Syndicated from Brendan Irvine-Broque original https://blog.cloudflare.com/stream-live-ga/

Today, we’re excited to announce that Stream Live is out of beta, available to everyone, and ready for production traffic at scale. Stream Live is a feature of Cloudflare Stream that allows developers to build live video features in websites and native apps.

Since its beta launch, developers have used Stream to broadcast live concerts from some of the world’s most popular artists directly to fans, build brand-new video creator platforms, operate a global 24/7 live OTT service, and more. While in beta, Stream has ingested millions of minutes of live video and delivered to viewers all over the world.

Bring your big live events, ambitious new video subscription service, or the next mobile video app with millions of users — we’re ready for it.

Streaming live video at scale is hard

Live video uses a massive amount of bandwidth. For example, a one-hour live stream at 1080p at 8Mbps is 3.6GB. At typical cloud provider egress prices, even a little egress can break the bank.

Live video must be encoded on-the-fly, in real-time. People expect to be able to watch live video on their phone, while connected to mobile networks with less bandwidth, higher latency and frequently interrupted connections. To support this, live video must be re-encoded in real-time into multiple resolutions, allowing someone’s phone to drop to a lower resolution and continue playback. This can be complex (Which bitrates? Which codecs? How many?) and costly: running a fleet of virtual machines doesn’t come cheap.

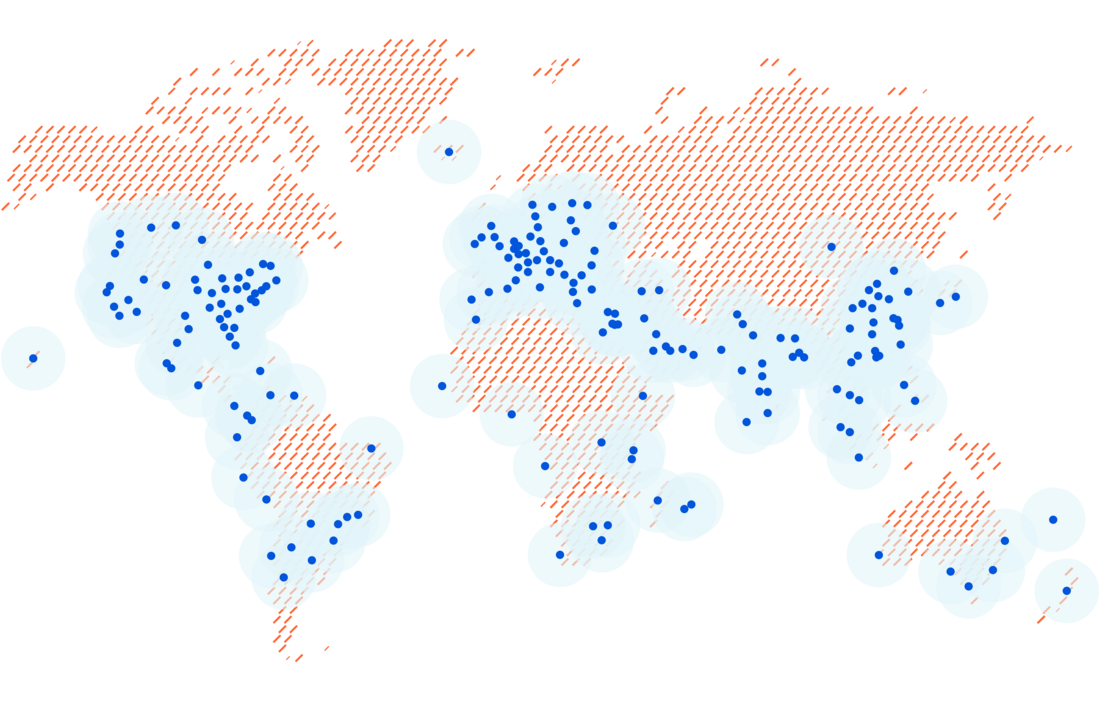

Ingest location matters — Live streaming protocols like RTMPS send video over TCP. If a single packet is dropped or lost, the entire connection is brought to a halt while the packet is found and re-transmitted. This is known as “head of line blocking”. The further away the broadcaster is from the ingest server, the more network hops, and the more likely packets will be dropped or lost, ultimately resulting in latency and buffering for viewers.

Delivery location matters — Live video must be cached and served from points of presence as close to viewers as possible. The longer the network round trips, the more likely videos will buffer or drop to a lower quality.

Broadcasting protocols are in flux — The most widely used protocol for streaming live video, RTMPS, has been abandoned since 2012, and dates back to the era of Flash video in the early 2000s. A new emerging standard, SRT, is not yet supported everywhere. And WebRTC has only recently evolved into an option for high definition one-to-many broadcasting at scale.

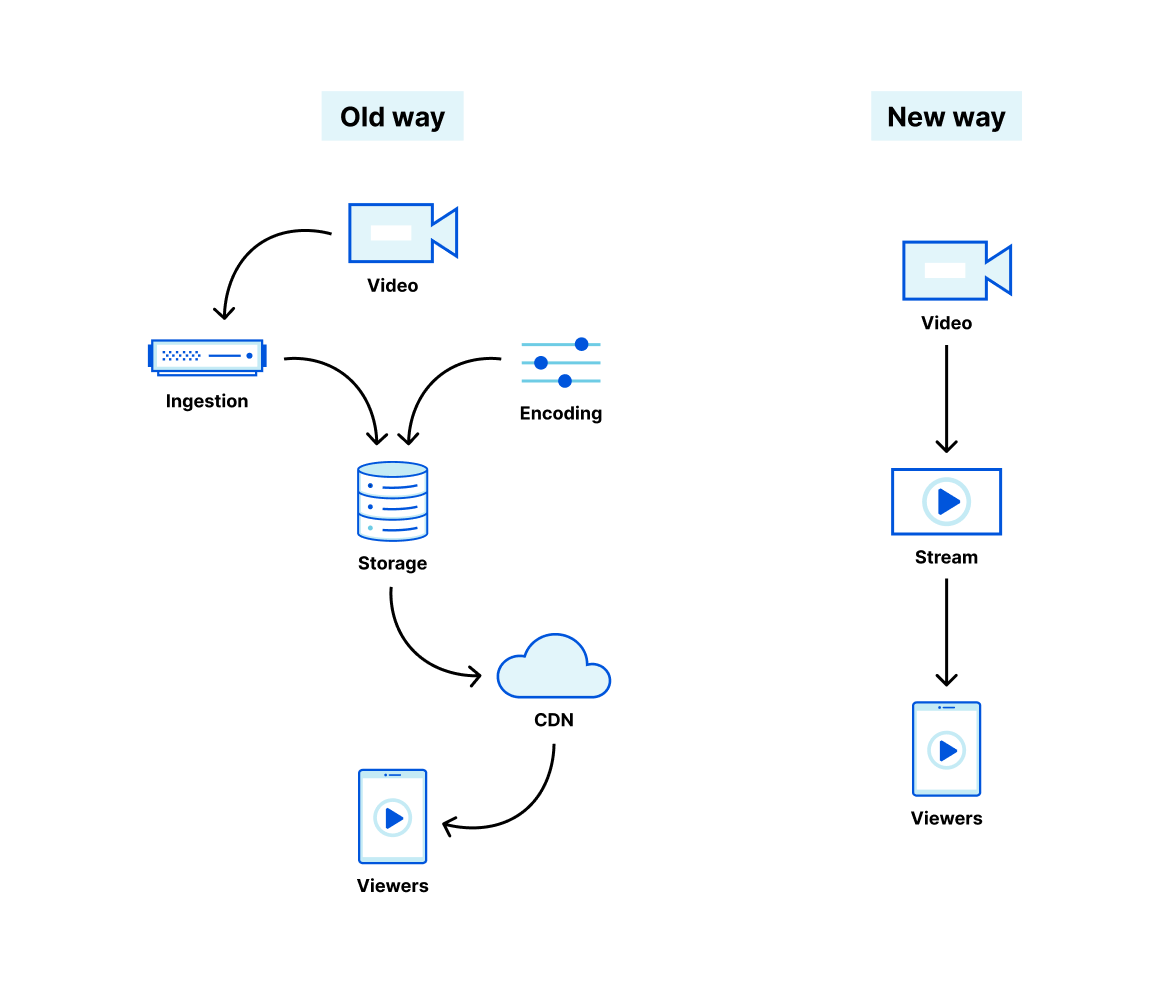

The old way to solve this has been to stitch together separate cloud services from different vendors. One vendor provides excellent content delivery, but no encoding. Another provides APIs or hardware to encode, but leaves you to fend for yourself and build your own storage layer. As a developer, you have to learn, manage and write a layer of glue code around the esoteric details of video streaming protocols, codecs, encoding settings and delivery pipelines.

We built Stream Live to make streaming live video easy, like adding an <img> tag to a website. Live video is now a fundamental building block of Internet content, and we think any developer should have the tools to add it to their website or native app.

With Stream, you or your users stream live video directly to Cloudflare, and Cloudflare delivers video directly to your viewers. You never have to manage internal encoding, storage, or delivery systems — it’s just live video in and live video out.

Our network, our hardware = a solution only Cloudflare can provide

We’re not the only ones building APIs for live video — but we are the only ones with our own global network and hardware that we control and optimize for video. That lets us do things that others can’t, like sub-second glass-to-glass latency using RTMPS and SRT playback at scale.

Newer video codecs require specialized hardware encoders, and while others are beholden to the hardware limitations of public cloud providers, we’re already hard at work installing the latest encoding hardware in our own racks, so that you can deliver high resolution video with even less bandwidth. Our goal is to make what is otherwise only available to video giants available directly to you — stay tuned for some exciting updates on this next week.

Most providers limit how many destinations you can restream or simulcast to. Because we operate our own network, we’ve never even worried about this, and let you restream to as many destinations as you need.

Operating our own network lets us price Stream based on minutes of video delivered — unlike others, we don’t pay someone else for bandwidth and then pass along their costs to you at a markup. The status quo of charging for bandwidth or per-GB storage penalizes you for delivering or storing high resolution content. If you ask why a few times, most of the time you’ll discover that others are pushing their own cost structures on to you.

Encoding video is compute-intensive, delivering video is bandwidth intensive, and location matters when ingesting live video. When you use Stream, you don’t need to worry about optimizing performance, finding a CDN, and/or tweaking configuration endlessly. Stream takes care of this for you.

Free your live video from the business models of big platforms

Nearly every business uses live video, whether to engage with customers, broadcast events or monetize live content. But few have the specialized engineering resources to deliver live video at scale on their own, and wire together multiple low level cloud services. To date, many of the largest content creators have been forced to depend on a shortlist of social media apps and streaming services to deliver live content at scale.

Unlike the status quo, who force you to put your live video in their apps and services and fit their business models, Stream gives you full control of your live video, on your website or app, on any device, at scale, without pushing your users to someone else’s service.

Free encoding. Free ingestion. Free analytics. Simple per-minute pricing.

| Others | Stream | |

|---|---|---|

| Encoding | $ per minute | Free |

| Ingestion | $ per GB | Free |

| Analytics | Separate product | Free |

| Live recordings | Minutes or hours later | Instant |

| Storage | $ per GB | per minute stored |

| Delivery | $ per GB | per minute delivered |

Other platforms charge for ingestion and encoding. Many even force you to consider where you’re streaming to and from, the bitrate and frames per second of your video, and even which of their datacenters you’re using.

With Stream, encoding and ingestion are free. Other platforms charge for delivery based on bandwidth, penalizing you for delivering high quality video to your viewers. If you stream at a high resolution, you pay more.

With Stream, you don’t pay a penalty for delivering high resolution video. Stream’s pricing is simple — minutes of video delivered and stored. Because you pay per minute, not per gigabyte, you can stream at the ideal resolution for your viewers without worrying about bandwidth costs.

Other platforms charge separately for analytics, requiring you to buy another product to get metrics about your live streams.

With Stream, analytics are free. Stream provides an API and Dashboard for both server-side and client-side analytics, that can be queried on a per-video, per-creator, per-country basis, and more. You can use analytics to identify which creators in your app have the most viewed live streams, inform how much to bill your customers for their own usage, identify where content is going viral, and more.

Other platforms tack on live recordings or DVR mode as a separate add-on feature, and recordings only become available minutes or even hours after a live stream ends.

With Stream, live recordings are a built-in feature, made available instantly after a live stream ends. Once a live stream is available, it works just like any other video uploaded to Stream, letting you seamlessly use the same APIs for managing both pre-recorded and live content.

Build live video into your website or app in minutes

Cloudflare Stream enables you or your users to go live using the same protocols and tools that broadcasters big and small use to go live to YouTube or Twitch, but gives you full control over access and presentation of live streams.

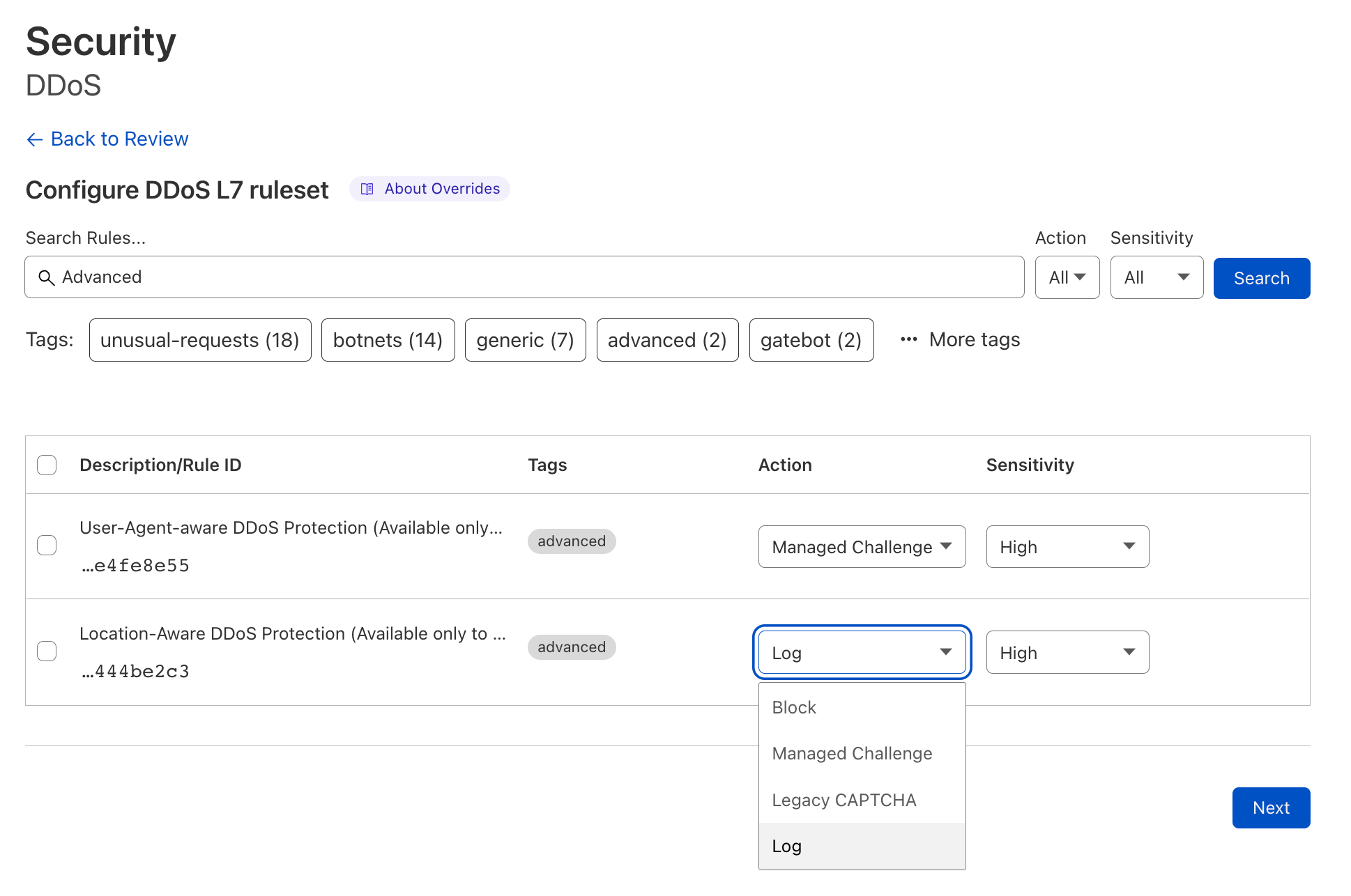

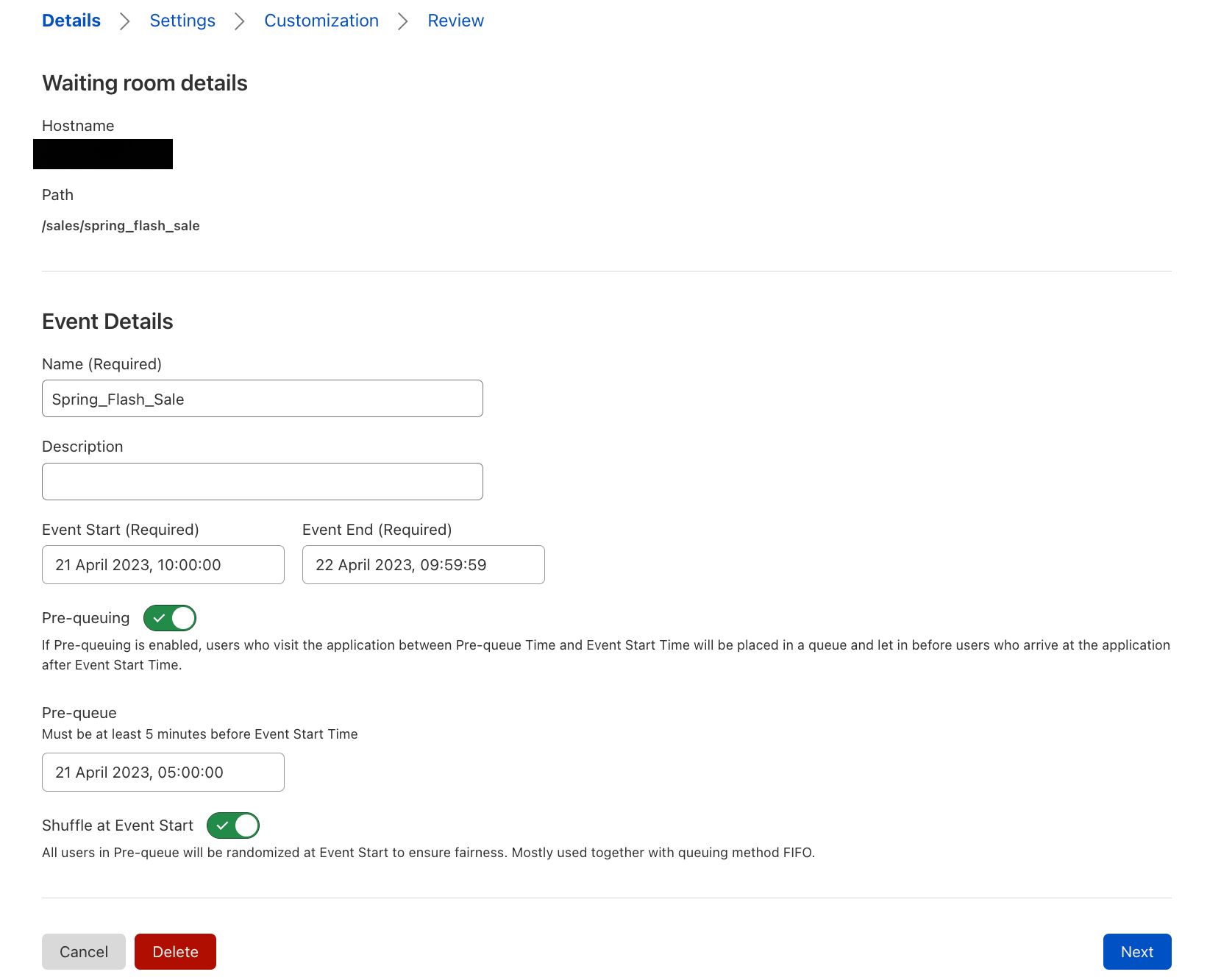

Step 1: Create a live input

Create a new live input from the Stream Dashboard or use use the Stream API:

Request

curl -X POST \

-H "Authorization: Bearer <YOUR_API_TOKEN>" \

-d "{"recording": { "mode": "automatic" } }" \

https://api.cloudflare.com/client/v4/accounts/<YOUR_CLOUDFLARE_ACCOUNT_ID>/stream/live_inputs

Response

{

"result": {

"uid": "<UID_OF_YOUR_LIVE_INPUT>",

"rtmps": {

"url": "rtmps://live.cloudflare.com:443/live/",

"streamKey": "<PRIVATE_RTMPS_STREAM_KEY>"

},

...

}

}

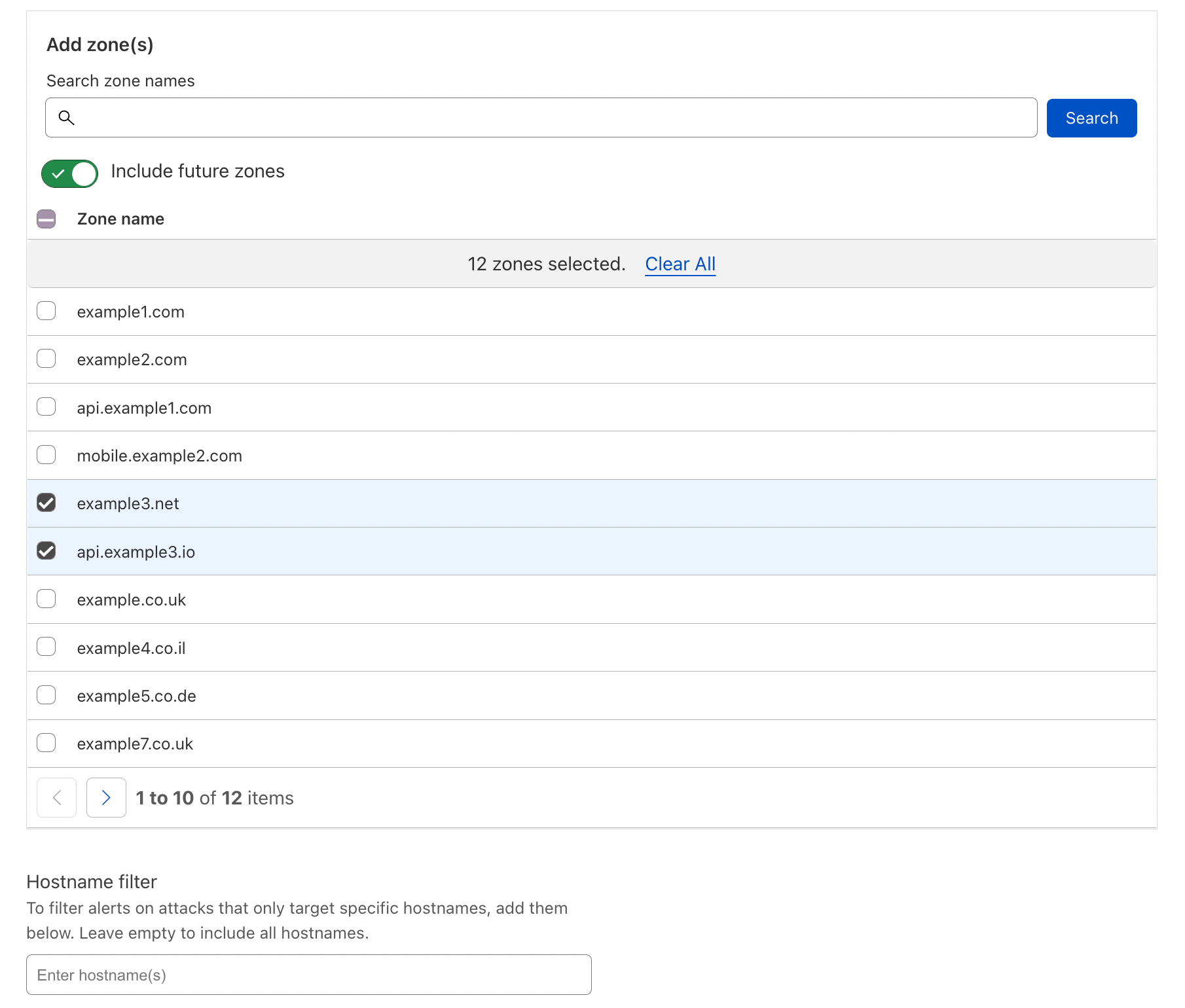

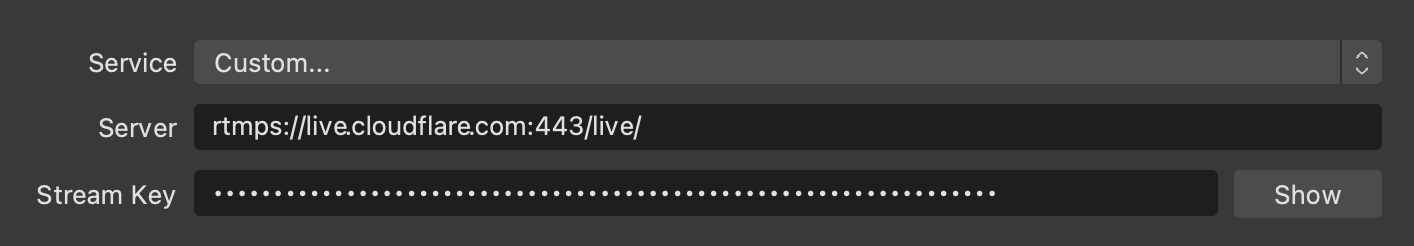

Step 2: Use the RTMPS key with any live broadcasting software, or in your own native app

Copy the RTMPS URL and key, and use them with your live streaming application. We recommend using Open Broadcaster Software (OBS) to get started, but any RTMPS or SRT compatible software should be able to interoperate with Stream Live.

Enter the Stream RTMPS URL and the Stream Key from Step 1:

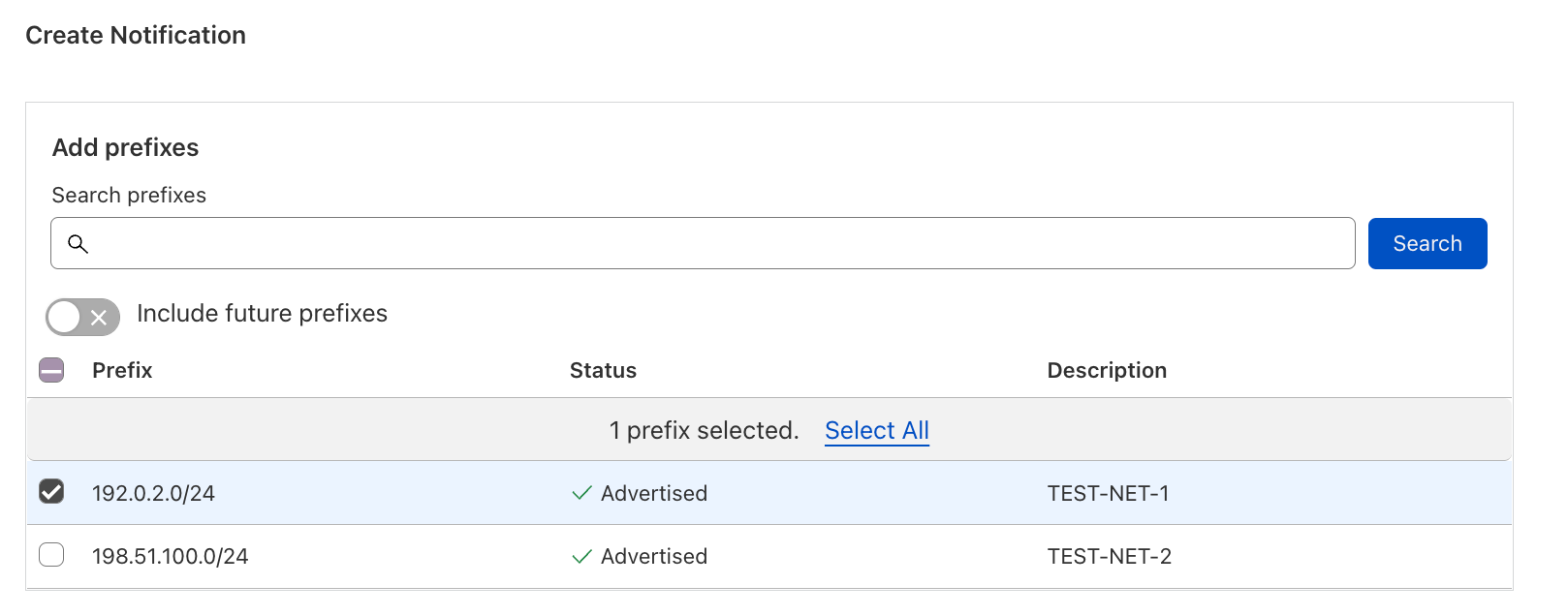

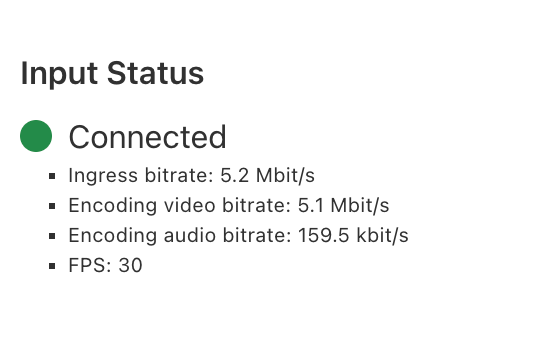

Step 3: Preview your live stream in the Cloudflare Dashboard

In the Stream Dashboard, within seconds of going live, you will see a preview of what your viewers will see, along with the real-time connection status of your live stream.

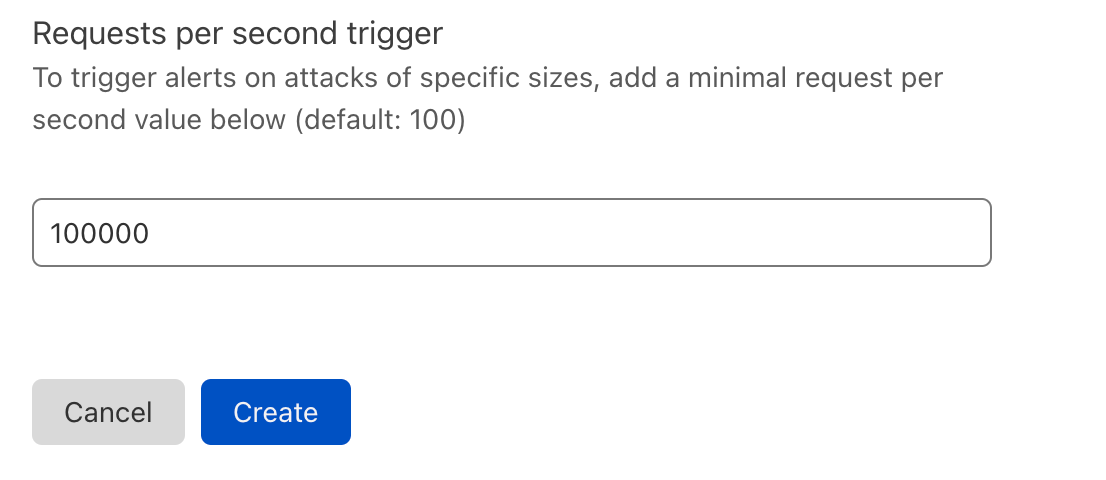

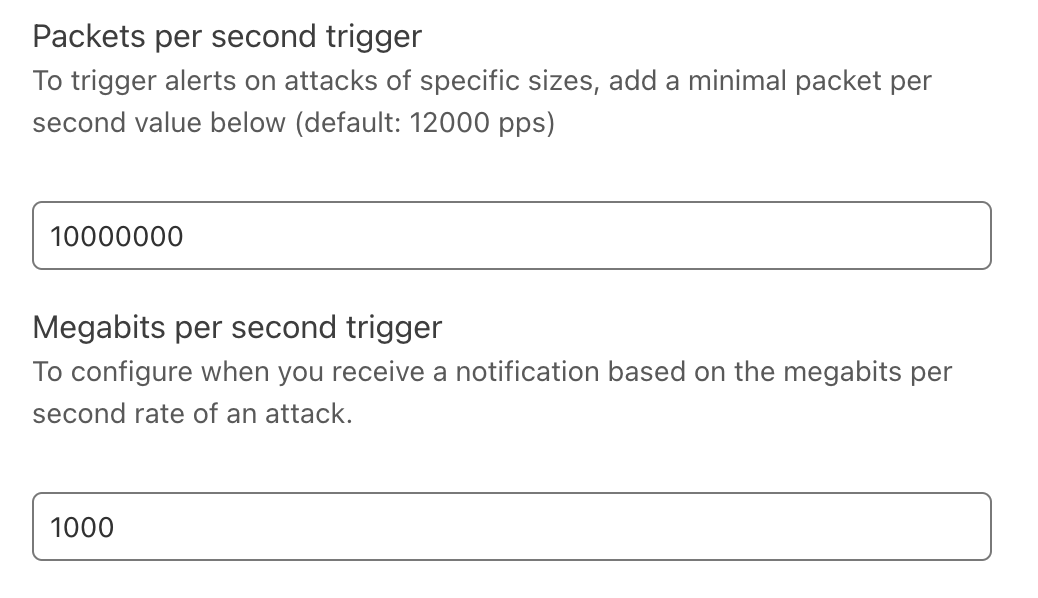

Step 4: Add live video playback to your website or app

Stream your video using our Stream Player embed code, or use any video player that supports HLS or DASH — live streams can be played in both websites or native iOS and Android apps.

For example, on iOS, all you need to do is provide AVPlayer with the URL to the HLS manifest for your live input, which you can find via the API or in the Stream Dashboard.

import SwiftUI

import AVKit

struct MyView: View {

// Change the url to the Cloudflare Stream HLS manifest URL

private let player = AVPlayer(url: URL(string: "https://customer-9cbb9x7nxdw5hb57.cloudflarestream.com/8f92fe7d2c1c0983767649e065e691fc/manifest/video.m3u8")!)

var body: some View {

VideoPlayer(player: player)

.onAppear() {

player.play()

}

}

}

struct MyView_Previews: PreviewProvider {

static var previews: some View {

MyView()

}

}

To run a complete example app in XCode, follow this guide in the Stream Developer docs.

Companies are building whole new video platforms on top of Stream

Developers want control, but most don’t have time to become video experts. And even video experts building innovative new platforms don’t want to manage live streaming infrastructure.

Switcher Studio’s whole business is live video — their iOS app allows creators and businesses to produce their own branded, multi camera live streams. Switcher uses Stream as an essential part of their live streaming infrastructure. In their own words:

“Since 2014, Switcher has helped creators connect to audiences with livestreams. Now, our users create over 100,000 streams per month. As we grew, we needed a scalable content delivery solution. Cloudflare offers secure, fast delivery, and even allowed us to offer new features, like multistreaming. Trusting Cloudflare Stream lets our team focus on the live production tools that make Switcher unique.”

While Stream Live has been in beta, we’ve worked with many customers like Switcher, where live video isn’t just one product feature, it is the core of their product. Even as experts in live video, they choose to use Stream, so that they can focus on the unique value they create for their customers, leaving the infrastructure of ingesting, encoding, recording and delivering live video to Cloudflare.

Start building live video into your website or app today

It takes just a few minutes to sign up and start your first live stream, using the Cloudflare Dashboard, with no code required to get started, but APIs for when you’re ready to start building your own live video features. Give it a try — we’re ready for you, no matter the size of your audience.