Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=UCoD2iccIWA

Phil Rosenthal | Somebody Feed Phil | Talks at Google

Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=JB9qUbaDPvI

The Linux Foundation’s “security mobilization plan”

Post Syndicated from original https://lwn.net/Articles/896244/

The Linux Foundation has posted an “Open Source Software Security Mobilization Plan” that aims to address a number of perceived security problems with the expenditure of nearly $140 million over two years.

While there are considerable ongoing efforts to secure the OSS supply chain, to achieve acceptable levels of resilience and risk, a more comprehensive series of investments to shift security from a largely reactive exercise to a proactive approach is required. Our objective is to evolve the systems and processes used to ensure a higher degree of security assurance and trust in the OSS supply chain.

This paper suggests a comprehensive portfolio of 10 initiatives which can start immediately to address three fundamental goals for hardening the software supply chain. Vulnerabilities and weaknesses in widely deployed software present systemic threats to the security and stability of modern society as government services, infrastructure providers, nonprofits and the vast majority of private businesses rely on software in order to function.

Manipulating Machine-Learning Systems through the Order of the Training Data

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/05/manipulating-machine-learning-systems-through-the-order-of-the-training-data.html

Yet another adversarial ML attack:

Most deep neural networks are trained by stochastic gradient descent. Now “stochastic” is a fancy Greek word for “random”; it means that the training data are fed into the model in random order.

So what happens if the bad guys can cause the order to be not random? You guessed it—all bets are off. Suppose for example a company or a country wanted to have a credit-scoring system that’s secretly sexist, but still be able to pretend that its training was actually fair. Well, they could assemble a set of financial data that was representative of the whole population, but start the model’s training on ten rich men and ten poor women drawn from that set then let initialisation bias do the rest of the work.

Does this generalise? Indeed it does. Previously, people had assumed that in order to poison a model or introduce backdoors, you needed to add adversarial samples to the training data. Our latest paper shows that’s not necessary at all. If an adversary can manipulate the order in which batches of training data are presented to the model, they can undermine both its integrity (by poisoning it) and its availability (by causing training to be less effective, or take longer). This is quite general across models that use stochastic gradient descent.

Research paper.
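The ordering effect described above can be illustrated with a toy model. The sketch below is not the attack from the paper; it only assumes a one-parameter model trained by plain SGD with a fixed learning rate (the function name `sgd_mean` and all values are made up for illustration). The training set is identical in both runs; only the order in which samples are presented changes.

```python
import random

def sgd_mean(samples, lr=0.1, w=0.5):
    """Fit a single parameter w to the samples with plain SGD on squared error."""
    for y in samples:
        grad = w - y          # derivative of 0.5 * (w - y)**2 with respect to w
        w -= lr * grad
    return w

# Same data in every run: fifty 0s and fifty 1s, so the true mean is 0.5.
data = [0.0] * 50 + [1.0] * 50

shuffled = data[:]
random.Random(0).shuffle(shuffled)   # honest, randomised presentation order
adversarial = sorted(data)           # attacker-chosen order: all 0s first, all 1s last

w_shuffled = sgd_mean(shuffled)
w_adversarial = sgd_mean(adversarial)

print(f"shuffled order:    w = {w_shuffled:.3f}")
print(f"adversarial order: w = {w_adversarial:.3f}")
```

With the attacker-chosen order the parameter ends up close to 1 rather than near the true mean, even though no sample was added, removed, or altered.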

My TOP 5 HACS components – integrations and front-end improvements

Post Syndicated from BeardedTinker original https://www.youtube.com/watch?v=jedZw1aIrbc

F-Droid: Our build and release infrastructure, and upcoming updates

Post Syndicated from original https://lwn.net/Articles/896240/

Here’s an update from F-Droid regarding upcoming changes to its build and distribution infrastructure.

If you have an app on f-droid.org, you might have noticed that all builds happen on a 5 year old Debian release: stretch. We are in the midst of a big effort to upgrade to the latest bullseye release right now. This is not just a simple apt-get upgrade, we are also taking this opportunity to overhaul the build process so that app builds work with a relatively plain Debian install as the base OS. We have to provide a platform to build thousands of apps, so we cannot just upgrade the base image as often as we like.

What It Takes to Securely Scale Cloud Environments at Tech Companies Today

Post Syndicated from Ben Austin original https://blog.rapid7.com/2022/05/25/what-it-takes-to-securely-scale-cloud-environments-at-tech-companies-today/

In January 2021, foreign trade marketing platform SocialArks was the target of a massive cyberattack. Security Magazine reported that the rapidly growing startup experienced a breach of over 214 million social media profiles and 400GB of data, exposing users’ names, phone numbers, email addresses, subscription data, and other sensitive information across Facebook, Instagram, and LinkedIn. According to Safety Detectives, the breach affected more than 318 million records in total, including those of high-profile influencers in the United States, China, the Netherlands, South Korea, and more.

The cause? A misconfigured database.

SocialArks’s Elasticsearch database contained scraped data from hundreds of millions of social media users from all around the world. The database was publicly exposed without password protection or encryption, meaning that any bad actor in possession of the company’s server IP address could easily access the private data.

What can tech companies learn from what happened to SocialArks?

A single misconfiguration can lead to major consequences, from reputational damage to revenue loss. As the cloud becomes increasingly pervasive and complex, tech companies know they must take advantage of innovative services to scale up. At the same time, DevOps and security teams must work together to ensure that they are using the cloud securely, from development to production.

Here are three ways to help empower your teams to take advantage of the many benefits of public cloud infrastructure without sacrificing security.

1. Improve visibility

Tech companies – probably more than those in any other industry – are keen to take advantage of the endless stream of new and innovative services coming from public cloud providers like AWS, Azure, and GCP. From more traditional cloud offerings like containers and databases to advanced machine learning, data analytics, and remote application delivery, developers at tech companies love to explore new cloud services as a means to spur innovation.

The challenge for security, of course, is that the sheer complexity of the average enterprise tech company’s cloud footprint is dizzying, not to mention the rapid rate of change. For example, a cloud environment with 10,000 compute instances can expect a daily churn of 20%, including auto-scaling groups, new and re-deployments of infrastructure and workloads, ongoing changes, and more. That means over the course of a year, security teams must monitor and apply guardrails to over 700,000 individual instances.
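The "over 700,000" figure follows from simple arithmetic, sketched below. The only assumption added here is that the 20% churn applies uniformly on every day of a 365-day year.

```python
instances = 10_000     # compute instances in the environment (from the text)
daily_churn = 0.20     # fraction of instances replaced or redeployed per day (from the text)
days = 365             # assumption: the churn rate is constant all year

churned_per_day = round(instances * daily_churn)
churned_per_year = churned_per_day * days

print(churned_per_day)    # 2000
print(churned_per_year)   # 730000
```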

It’s easy for security (and operations) teams to wind up without unified visibility into what cloud services their development teams are using at any given point in time. Without a purpose-built multicloud security solution in place, there’s just no way to continuously monitor cloud and container services and maintain insight into potential risks.

It is entirely possible, however, to gain visibility. More than that, it’s necessary if you want to continue to scale. In the cloud world, the old security adage applies: You can’t secure what you can’t see. Total visibility into all cloud resources can help security teams quickly detect changes that could open the organization up to risk. With visibility in place, you can more readily assess risks, identify and remediate issues, and ensure continuous compliance with relevant regulations.

2. Create a culture of security

No one wants their DevOps and security teams to be working in opposition, especially in a rapid growth period. When you uphold DevSecOps principles, you eliminate the friction between DevOps and security professionals. There’s no need to “circle back” after an initial release or “push pause” on a scheduled deployment when securing the cloud throughout the CI/CD pipeline is just part of how the business operates. A culture that values security is vital when it comes to rapid scaling. You can’t rely on each individual to “do the right thing,” so you’re much better off building security into your culture on a deep level.

When it comes to timing your culture shift, all signs point to now. Fortune notes that while the pandemic-era adoption of hybrid work provides unprecedented flexibility and accessibility, it also can create a “nightmare scenario” with “hundreds (or thousands) of new vectors through which malicious actors can gain a foothold in your network.” Gartner reports that cloud security saw the largest spending increase of any information security and risk management segment in 2021, ticking up by 41%. Yet, a survey by Cloud Security Alliance revealed that 76% of professionals polled fear that the risk of cloud misconfigurations will stay the same or increase.

Given these numbers, encouraging a culture of security is a present necessity, not a future concern. But how do you know when you’ve successfully created one?

The answer: When all parts of your team see cybersecurity as just another part of their job.

Of course, that’s easier said than done. Creating a culture of security requires processes that provide context and early feedback to developers, meaning that command and control is no longer security’s fallback position. Instead, collaboration should be the name of the game. Making security easy is what bridges the historical cultural divide between security and DevOps.

The utopian version of DevSecOps promises seamless collaboration – but each team has plenty on its own plate to worry about. How can tech companies foster a culture of security while optimizing their existing resources and workflows?

3. Focus on security by design

TrendMicro reports that simple cloud infrastructure misconfigurations account for 65% to 70% of all cloud security challenges. The Ponemon Institute and IBM found that the average cost of a data breach in 2021 was $4.24 million – the highest average cost ever recorded in the report’s 17-year history. That same report found that organizations with more mature cloud security practices were able to contain breaches on average 77 days faster than those with less mature strategies.

Security professionals are human, too. They can only be in so many places at once. With talent already scarce, you want your security team to focus on creating new strategies, without getting bogged down by simple fixes.

That’s why integrating security measures into the dev cycle framework can help you move towards achieving that balance between speed and security. Embedding checks within the development process is one way to empower early detection, saving your team’s time and resources.

This approach helps catch problems like policy violations or misconfigurations without sacrificing the speed that developers love or the safety that security professionals need. Plus, building security into your development processes will empower your dev teams to correct issues right away as they’re alerted, making that last deployment the breath of relief it should be.

When you integrate security and compliance checks early in the dev lifecycle, you can prevent the majority of vulnerabilities from cropping up in the first place — meaning your dev and sec teams can rest easy knowing that their infrastructure as code (IaC) templates are secure from the beginning.
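As a rough illustration of what an early, automated check might look like, the sketch below scans a list of resource definitions for the kind of misconfiguration described in the SocialArks example. The resource schema (`type`, `name`, `publicly_accessible`, `auth_enabled`, `encrypted`) and the function name are hypothetical and do not correspond to any particular IaC tool or product.

```python
def find_misconfigurations(resources):
    """Return human-readable findings for a list of resource definitions.

    The schema used here is hypothetical and exists only for this illustration.
    """
    findings = []
    for res in resources:
        if res.get("type") != "database":
            continue
        name = res.get("name", "<unnamed>")
        if res.get("publicly_accessible", False) and not res.get("auth_enabled", False):
            findings.append(f"{name}: publicly reachable without authentication")
        if not res.get("encrypted", False):
            findings.append(f"{name}: encryption at rest is disabled")
    return findings

template = [
    {"type": "database", "name": "profiles-db",
     "publicly_accessible": True, "auth_enabled": False, "encrypted": False},
    {"type": "database", "name": "orders-db",
     "publicly_accessible": False, "auth_enabled": True, "encrypted": True},
]

findings = find_misconfigurations(template)
for finding in findings:
    print(finding)

# A pipeline step could fail the build whenever any finding is present.
build_should_fail = bool(findings)
```

Running a check like this on every commit surfaces the problem to the developer who introduced it, before anything is deployed.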

How to get started: Empower secure development

Get your developers implementing security without having to onboard them to an entirely new role. By integrating and automating security checks into the workflows and tools your DevOps teams already know and love, you empower them to prioritize both speed and security.

Taking on even one of the three strategies described above can be intimidating. We suggest getting started by focusing on actionable steps, which we cover in depth in our eBook below.

Scaling securely is possible. Want to learn more? Read up on 6 Strategies to Empower Secure Innovation at Enterprise Tech Companies to tackle the unique cloud security challenges facing the tech industry.


Another set of stable kernel updates

Post Syndicated from original https://lwn.net/Articles/896220/

The 5.17.10, 5.15.42, 5.10.118, 5.4.196, 4.19.245, 4.14.281, and 4.9.316 stable kernel updates have all been released; each contains another set of important fixes.

Update: the 5.17.11 and 5.15.43 updates followed immediately thereafter with a single MPTCP networking fix.

When does buying real estate actually save our savings?

Post Syndicated from VassilKendov original http://kendov.com/saving-savings-from-inflation/

When do we save our savings by buying real estate? When is this justified, and are we protected from inflation?

Inflation certainly “eats away at savings”. If we want to be responsible, we have to protect them. In this case, passivity is an expensive choice.

For meetings and consultations about bank loans and related troubles, please use the form provided.


The form of protection most familiar to Bulgarian society is buying real estate.

As I like to say, there are other ways, but this one is the most widespread and the best known.

In which cases, however, is this justified, and in which not quite?

In every case, we have to decide whether we are making an investment or saving in the form of real estate.

The most important thing, however, is to stay liquid. That means having a rainy-day fund set aside (“white money for black days”). I usually advise that this amount to six months' salary. Everything above that can be withdrawn from the bank and invested.

New construction is preferable. It is more likely to hold its price during inflation.

The return from renting out the purchased property is directly proportional to the time invested. Short-term rentals bring better profits but require more time.

The yield on long-term rentals is around 3-4%, and on short-term rentals between 7% and 10%.

In any case, when inflation is high, it is not a good idea for your money to sit in a bank.

If you liked the video, please subscribe to the channel on YouTube or Telegram

https://www.youtube.com/channel/UChh1cOXj_FpK8D8C0tV9GKg

https://t.me/KendovCom

Vassil Kendov, financier

The post When does buying real estate actually save our savings? appeared first on Kendov.com.

Implementing lightweight on-premises API connectivity using inverting traffic proxy

Post Syndicated from Oleksiy Volkov original https://aws.amazon.com/blogs/architecture/implementing-lightweight-on-premises-api-connectivity-using-inverting-traffic-proxy/

This post explores the use of a lightweight inverting application proxy as a solution for multi-point hybrid or multi-cloud API-level connectivity in cases where AWS Direct Connect or VPN may not be practical. We then present a sample solution and explain how it addresses typical challenges in this space.

Defining the issue

Large ISV providers and integration vendors often need to have API-level integration between a central cloud-based system and a number of on-premises APIs. Use cases can range from refactoring/modernization initiatives to interfacing with legacy on-premises applications, which have no direct migration path to the cloud.

The typical approach is to use VPN or Direct Connect, as they can provide significant benefits in terms of latency and security. However, they are not always practical in situations involving multi-source systems deployed by various groups or organizations that may have significant budget, process, or timeline constraints.

Conceptual solution

An option that addresses the connectivity need is an inverting application proxy, which can be deployed as a lightweight executable on an on-premises backend. The locally deployed agent communicates with the proxy server on AWS using an inverted communication pattern: the agent establishes an outbound connection to the proxy and then uses that same connection to receive inbound requests. Figure 1 describes a sample architecture using the inverting proxy pattern with an Amazon API Gateway façade.

Figure 1. Inverting application proxy

The advantages of this approach include ease of deployment (a drop-in executable agent) and ease of configuration. Because the proxy inverts the direction of application connectivity so that it originates from the on-premises servers, the local firewall does not need to be reconfigured to open the additional inbound ports that a traditional proxy deployment requires.
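The inverted communication pattern can be sketched in a few lines. This is a minimal illustration over a local TCP socket, not the code of the sample solution: the line-based protocol, the function names, and the stand-in `backend` function are all assumptions made for the example, and TLS is omitted. The point it shows is that the agent is the side that dials out, while requests flow back to it over that same connection.

```python
import socket
import threading

def backend(request: str) -> str:
    """Stand-in for the on-premises API (hypothetical)."""
    return f"backend handled {request}"

def agent(proxy_addr):
    """On-premises side: dials OUT to the proxy, then serves requests over that link."""
    with socket.create_connection(proxy_addr) as conn, \
            conn.makefile("r", encoding="utf-8") as rfile, \
            conn.makefile("w", encoding="utf-8") as wfile:
        for line in rfile:                 # requests arrive on the outbound connection
            wfile.write(backend(line.strip()) + "\n")
            wfile.flush()

def proxy_forward(listener, requests):
    """Cloud side: accepts the agent's connection and pushes client requests down it."""
    conn, _ = listener.accept()
    responses = []
    with conn, conn.makefile("r", encoding="utf-8") as rfile, \
            conn.makefile("w", encoding="utf-8") as wfile:
        for req in requests:
            wfile.write(req + "\n")
            wfile.flush()
            responses.append(rfile.readline().strip())
    return responses

# The proxy only listens; no inbound port is opened on the agent side.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))            # port 0: let the OS pick a free port
listener.listen(1)

worker = threading.Thread(target=agent, args=(listener.getsockname(),), daemon=True)
worker.start()
responses = proxy_forward(listener, ["/orders/42", "/health"])
worker.join(timeout=5)
listener.close()
print(responses)
```

In this sketch the only listening socket belongs to the proxy, which mirrors why the on-premises firewall needs no new inbound rules.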

Realizing the solution on AWS

We have built a sample traffic routing solution based on the original open-source Inverting Proxy and Agent by Ian Maddox, Jason Cooke, and Omar Janjur. The solution is written in Go and leverages multiple AWS services to provide additional telemetry, security, and discoverability capabilities that address the common needs of enterprise customers.

The solution is comprised of an inverting proxy and a forwarding agent. The inverting proxy is deployed on AWS as a stand-alone executable running on Amazon Elastic Compute Cloud (EC2) and responsible for forwarding traffic to the agent. The agent can be deployed as a binary or container within the target on-premises system.

Upon starting, the agent establishes an outbound connection to the proxy and a connection to the local server application. Once the connection is established, the proxy uses it in reverse to forward all incoming client requests through the agent to the backend application. Transport Layer Security (TLS) protects communications between client and proxy and between agent and backend application.

This solution uses a unique backend ID and IAM user/role tags to identify different backend servers and control access to proxies. The backend ID is passed as a command-line parameter to the agent. The agent checks the IAM account or IAM role that the Amazon EC2 instance is running under for the tag “AllowedBackends”. The tag contains a comma-separated list of backend IDs that the agent is allowed to access. Connectivity is established only if the provided backend ID matches one of the values in the comma-separated list.
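The matching rule for the “AllowedBackends” tag can be sketched as follows. This is only an illustration of the comparison described above, not the agent's actual code; the function name is hypothetical, the tag value is made up, and trimming whitespace around each entry is an assumption.

```python
def is_backend_allowed(backend_id: str, allowed_backends_tag: str) -> bool:
    """Return True if backend_id appears in the comma-separated tag value."""
    # Assumption: surrounding whitespace is ignored and empty entries are dropped.
    allowed = {item.strip() for item in allowed_backends_tag.split(",") if item.strip()}
    return backend_id in allowed

tag_value = "SampleBackend, BillingBackend"   # hypothetical "AllowedBackends" tag value

print(is_backend_allowed("SampleBackend", tag_value))    # True
print(is_backend_allowed("UnknownBackend", tag_value))   # False
```

An exact, whole-entry comparison is used here so that a backend ID that is merely a substring of an allowed ID is not accepted.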

The solution supports native integration with AWS Cloud Map to enable automatic discoverability of remote API endpoints. Upon start and once the IAM access control checks are successfully validated, the agent can register the backend endpoints within AWS Cloud Map using a provided service name and service namespace ID.

The inverting proxy agent can collect telemetry and automatically publish it to Amazon CloudWatch using a custom namespace. This includes HTTP response codes and counts from the server application, aggregated by backend ID.

For a full list of options, features, and supported configurations, use the --help command-line parameter with both the agent and proxy executables.

Enabling highly resilient proxy deployment

For production scenarios that require high availability, deploy a pair of inverting proxies connecting to a pair of agents deployed on separate EC2 instances. The entire configuration is then placed behind Application Load Balancer to provide a single point of ingress, load-balancing, and health-checking functionality. Figure 2 demonstrates a highly resilient setup for critical workloads.

Figure 2. Highly resilient deployment diagram for inverting proxy

Additionally, for real-life production workloads dealing with sensitive data, we recommend following security and resilience best practices for Amazon EC2.

Deploying and running the solution

The solution includes a simple demo Node.js server application to simulate connectivity with an inverting proxy. A restrictive security group is used to simulate an on-premises data center.

Steps to deployment:

1. Create a “backend” Amazon EC2 server using the Amazon Linux 2 free-tier AMI. Ensure that port 443 (the inbound port for the sample server application) is blocked from external access via an appropriate security group.

2. Connect to the target server using SSH and run updates:

sudo yum update -y

3. Install development tools and dependencies:

sudo yum groupinstall "Development Tools" -y

4. Install Golang:

sudo yum install golang -y

5. Install Node.js:

curl -o- https://raw.githubusercontent.com/nvm-sh/nvm/v0.34.0/install.sh | bash

. ~/.nvm/nvm.sh

nvm install 16

6. Clone the inverting proxy GitHub repository to the “backend” EC2 instance.

7. From inverting-proxy folder, build the application by running:

mkdir /home/ec2-user/inverting-proxy/bin

export GOPATH=/home/ec2-user/inverting-proxy/bin

make

8. From the /simple-server folder, run the sample appTLS application in the background (see the commands below). Note: to enable TLS, you will need to generate a private key and certificate (server.key and server.crt) and place them in the simple-server folder.

npm install

node appTLS &

Example app listening at https://localhost:443

Confirm that the application is running by using ps -ef | grep node:

ec2-user 1700 30669 0 19:45 pts/0 00:00:00 node appTLS

ec2-user 1708 30669 0 19:45 pts/0 00:00:00 grep --color=auto node

9. For the backend Amazon EC2 server, navigate to the Amazon EC2 security settings and create an IAM role for the instance. Keep the default permissions and add an “AllowedBackends” tag with the backend ID as the tag value (the backend ID can be any string, as long as it matches the backend ID parameter in Step 13).

10. Create a proxy Amazon EC2 server using a Linux AMI in a public subnet and, once it is online, connect to it using SSH. Copy the contents of the bin folder from the agent EC2 instance, or clone the repository and follow the build instructions above (Steps 2-7).

Note: the agent will be establishing outbound connectivity to the proxy; open the appropriate port (443) in the proxy Amazon EC2 security group. The proxy server needs to be accessible by the backend Amazon EC2 and your client workstation, as you will use your local browser to test the application.

11. To enable TLS encryption on incoming connections to proxy, you will need to generate and upload the certificate and private key (server.crt and server.key) to the bin folder of the proxy deployment.

12. Navigate to the /bin folder of the inverting proxy and start the proxy by running:

sudo ./proxy --port 443 -tls

2021/12/19 19:56:46 Listening on [::]:443

13. Use SSH to connect to the backend Amazon EC2 server and configure the inverting proxy agent. Navigate to the /bin folder in the cloned repository and run the command below, replacing uppercase strings with the appropriate values. Note the required trailing slash after the proxy DNS URL.

./proxy-forwarding-agent -proxy https://YOUR_PROXYSERVER_PUBLIC_DNS/ -backend SampleBackend -host localhost:443 -scheme https

14. Use your local browser to navigate to proxy server public DNS name (https://YOUR_PROXYSERVER_PUBLIC_DNS). You should see the following response from your sample backend application:

Hello World!

Conclusion

The inverting proxy is a flexible, lightweight pattern that can be used for routing API traffic in non-trivial hybrid and multi-cloud scenarios that do not require low-latency connectivity. It can also be used for securing existing endpoints, refactoring legacy applications, and enabling visibility into legacy backends. The sample solution we have detailed can be customized to create unique implementations and provides out-of-the-box baseline integration with multiple AWS services.

2010 Haiti Earthquake: the Western Hemisphere’s Deadliest Quake

Post Syndicated from Geographics original https://www.youtube.com/watch?v=ciAGeSUF5lI

Security updates for Wednesday

Post Syndicated from original https://lwn.net/Articles/896216/

Security updates have been issued by Debian (lrzip and puma), Fedora (plantuml and plib), Oracle (kernel and kernel-container), Red Hat (firefox, kernel, kpatch-patch, subversion:1.14, and thunderbird), Scientific Linux (firefox and thunderbird), SUSE (kernel-firmware, libxml2, pcre2, and postgresql13), and Ubuntu (accountsservice, postgresql-10, postgresql-12, postgresql-13, postgresql-14, and rsyslog).

Introducing the PowerShell custom runtime for AWS Lambda

Post Syndicated from Julian Wood original https://aws.amazon.com/blogs/compute/introducing-the-powershell-custom-runtime-for-aws-lambda/

The new PowerShell custom runtime for AWS Lambda makes it even easier to run Lambda functions written in PowerShell to process events. Using this runtime, you can run native PowerShell code in Lambda without having to compile code, which simplifies deployment and testing. You can also now view PowerShell code in the AWS Management Console, and have more control over function output and logging.

Lambda has supported running PowerShell since 2018. However, the existing solution uses the .NET Core runtime implementation for PowerShell. It uses the additional AWSLambdaPSCore modules for deployment and publishing, which require compiling the PowerShell code into C# binaries to run on .NET. This adds additional steps to the development process.

This blog post explains the benefits of using a custom runtime and provides information about the runtime options. It also shows how to deploy and run an example native PowerShell function in Lambda.

PowerShell custom runtime benefits

The new custom runtime for PowerShell uses native PowerShell, instead of compiling PowerShell and hosting it on the .NET runtime. Using native PowerShell means the function runtime environment matches a standard PowerShell session, which simplifies the development and testing process.

You can now also view and edit PowerShell code within the Lambda console’s built-in code editor. You can embed PowerShell code within an AWS CloudFormation template, or other infrastructure as code tools.

This custom runtime returns everything placed on the pipeline as the function output, including the output of Write-Output. This gives you more control over the function output, error messages, and logging. With the previous .NET runtime implementation, your function returns only the last output from the PowerShell pipeline.

Building and packaging the custom runtime

The custom runtime is based on Lambda’s provided.al2 runtime, which runs in an Amazon Linux environment. This includes AWS credentials from an AWS Identity and Access Management (IAM) role that you manage. You can build and package the runtime as a Lambda layer, or include it in a container image. When packaging as a layer, you can add it to multiple functions, which can simplify deployment.

The runtime is based on the cross-platform PowerShell Core. This means that you can develop Lambda functions for PowerShell on Windows, Linux, or macOS.

You can build the custom runtime using a number of tools, including the AWS Command Line Interface (AWS CLI), AWS Tools for PowerShell, or with infrastructure as code tools such as AWS CloudFormation, AWS Serverless Application Model (AWS SAM), Serverless Framework, and AWS Cloud Development Kit (AWS CDK).

Showing the PowerShell custom runtime in action

In the following demo, you learn how to deploy the runtime and explore how it works with a PowerShell function.

The accompanying GitHub repository contains the code for the custom runtime, along with additional installation options and a number of examples.

This demo application uses AWS SAM to deploy the following resources:

- PowerShell custom runtime based on provided.al2 as a Lambda layer.

- Additional Lambda layer containing the AWS.Tools.Common module from AWS Tools for PowerShell.

- Both layers store their Amazon Resource Names (ARNs) as parameters in AWS Systems Manager Parameter Store which can be referenced in other templates.

- Lambda function with three different handler options.

To build the custom runtime and the AWS Tools for PowerShell layer for this example, AWS SAM uses a Makefile. This downloads the specified version of PowerShell from GitHub and the AWSTools.

Windows does not natively support Makefiles. When using Windows, you can use either Windows Subsystem for Linux (WSL), Docker Desktop, or native PowerShell.

Pre-requisites:

- AWS SAM

- If building on Windows:

Clone the repository and change into the example directory

git clone https://github.com/awslabs/aws-lambda-powershell-runtime

cd aws-lambda-powershell-runtime/examples/demo-runtime-layer-function

Use one of the following Build options, A, B, C, depending on your operating system and tools.

A) Build using Linux or WSL

Build the custom runtime, Lambda layer, and function packages using native Linux or WSL.

sam build --parallel

B) Build using Docker

You can build the custom runtime, Lambda layer, and function packages using Docker. This uses a Linux-based Lambda-like Docker container to build the packages. Use this option for Windows without WSL or as an isolated Mac/Linux build environment.

sam build --parallel --use-container

C) Build using PowerShell for Windows

You can use native PowerShell for Windows to download and extract the custom runtime and Lambda layer files. This performs the same file copy functionality as the Makefile. It adds the files to the source folders rather than a build location for subsequent deployment with AWS SAM. Use this option for Windows without WSL or Docker.

.\build-layers.ps1

Test the function locally

Once the build process is complete, you can use AWS SAM to test the function locally.

sam local invoke

This uses a Lambda-like environment to run the function locally and returns the function response, which is the result of Get-AWSRegion.

Deploying to the AWS Cloud

Use AWS SAM to deploy the resources to your AWS account. Run a guided deployment to set the default parameters for the first deploy.

sam deploy -g

For subsequent deployments you can use sam deploy.

Enter a Stack Name and accept the remaining initial defaults.

AWS SAM deploys the infrastructure and outputs the details of the resources.

View, edit, and invoke the function in the AWS Management Console

You can view, edit code, and invoke the Lambda function in the Lambda Console.

Navigate to the Functions page and choose the function specified in the sam deploy Outputs.

Using the built-in code editor, you can view the function code.

This function imports the AWS.Tools.Common module during the init process. The function handler runs and returns the output of Get-AWSRegion.

To invoke the function, select the Test button and create a test event. For more information on invoking a function with a test event, see the documentation.

You can see the results in the Execution result pane.

You can also view a snippet of the generated Function Logs below the Response. View the full logs in Amazon CloudWatch Logs. You can navigate directly via the Monitor tab.

Invoke the function using the AWS CLI

From a command prompt invoke the function. Amend the --function-name and --region values for your function. This should return "StatusCode": 200 for a successful invoke.

aws lambda invoke --function-name "aws-lambda-powershell-runtime-Function-6W3bn1znmW8G" --region us-east-1 invoke-result

View the function results, which are written to invoke-result.

cat invoke-result

Invoke the function using the AWS Tools for PowerShell

You can invoke the Lambda function using the AWS Tools for PowerShell and capture the response in a variable. The response is available in the Payload property of the $Response object, which can be read using the .NET StreamReader class.

$Response = Invoke-LMFunction -FunctionName aws-lambda-powershell-runtime-PowerShellFunction-HHdKLkXxnkUn -LogType Tail

[System.IO.StreamReader]::new($Response.Payload).ReadToEnd()

This outputs the result of Get-AWSRegion.

Cleanup

Use AWS SAM to delete the AWS resources created by this template.

sam delete

PowerShell runtime information

Variables

The runtime defines the following variables which are made available to the Lambda function.

$LambdaInput: A PSObject that contains the Lambda function input event data.

$LambdaContext: An object that provides methods and properties with information about the invocation, function, and runtime environment. For more information, see the GitHub repository.

PowerShell module support

You can include additional PowerShell modules via a Lambda layer, within your function code package, or in a container image. Using Lambda layers provides a convenient way to package and share modules that you can use with your Lambda functions. Layers reduce the size of uploaded deployment archives and make it faster to deploy your code.

The PSModulePath environment variable contains a list of folder locations that are searched to find user-supplied modules. This is configured during the runtime initialization. Folders are specified in the following order:

- Modules as part of function package in a /modules subfolder.

- Modules as part of Lambda layers in a /modules subfolder.

- Modules as part of the PowerShell custom runtime layer in a /modules subfolder.

Lambda handler options

There are three different Lambda handler formats supported with this runtime.

<script.ps1>

You provide a PowerShell script that is the handler. Lambda runs the entire script on each invoke.

<script.ps1>::<function_name>

You provide a PowerShell script that includes a PowerShell function name. The PowerShell function name is the handler. The PowerShell runtime dot-sources the specified <script.ps1>. This allows you to run PowerShell code during the function initialization cold start process. Lambda then invokes the PowerShell handler function <function_name>. On subsequent invokes using the same runtime environment, Lambda invokes only the handler function <function_name>.

Module::<module_name>::<function_name>

You provide a PowerShell module. You include a PowerShell function as the handler within the module. Add the PowerShell module using a Lambda Layer or by including the module in the Lambda function code package. The PowerShell runtime imports the specified <module_name>. This allows you to run PowerShell code during the module initialization cold start process. Lambda then invokes the PowerShell handler function <function_name> in a similar way to the <script.ps1>::<function_name> handler method.
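To make the three handler formats concrete, here is a minimal TypeScript sketch that classifies a handler string. It is only an illustration: the `parseHandler` function and the `Handler` type are hypothetical names, and this is not the runtime's actual parsing code.

```typescript
// Hypothetical sketch: classify a handler string into one of the three
// formats described above. This is not the runtime's own implementation.
type Handler =
  | { kind: "script"; script: string }
  | { kind: "function"; script: string; functionName: string }
  | { kind: "module"; moduleName: string; functionName: string };

function parseHandler(handler: string): Handler {
  const parts = handler.split("::");
  const [first = "", second = "", third = ""] = parts;
  const isScript = /\.ps1$/i.test(first);

  if (parts.length === 1 && isScript) {
    // <script.ps1>: the whole script runs on every invoke.
    return { kind: "script", script: first };
  }
  if (parts.length === 2 && isScript && second !== "") {
    // <script.ps1>::<function_name>: the script is dot-sourced once,
    // then only the named function runs on each invoke.
    return { kind: "function", script: first, functionName: second };
  }
  if (parts.length === 3 && first === "Module" && second !== "" && third !== "") {
    // Module::<module_name>::<function_name>: the module is imported once,
    // then the named function runs on each invoke.
    return { kind: "module", moduleName: second, functionName: third };
  }
  throw new Error("Unrecognized handler format: " + handler);
}
```

For example, `parseHandler("Module::examplemodule::handler")` yields the `module` variant, while a string with four `::`-separated parts is rejected.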

Function logging and metrics

Lambda automatically monitors Lambda functions on your behalf and sends function metrics to Amazon CloudWatch. Your Lambda function comes with a CloudWatch Logs log group and a log stream for each instance of your function. The Lambda runtime environment sends details about each invocation to the log stream, and relays logs and other output from your function’s code. For more information, see the documentation.

Output from Write-Host, Write-Verbose, Write-Warning, and Write-Error is written to the function log stream. The output from Write-Output is added to the pipeline, which you can use with your function response.

Error handling

The runtime can terminate your function because it ran out of time, detected a syntax error, or failed to marshal the response object into JSON.

Your function code can throw an exception or return an error object. Lambda writes the error to CloudWatch Logs and, for synchronous invocations, also returns the error in the function response output.

See the documentation on how to view Lambda function invocation errors for the PowerShell runtime using the Lambda console and the AWS CLI.

Conclusion

The PowerShell custom runtime for Lambda makes it even easier to run Lambda functions written in PowerShell.

The custom runtime runs native PowerShell. You can view and edit your code within the Lambda console. The runtime supports a number of different handler options, and you can include additional PowerShell modules.

See the accompanying GitHub repository which contains the code for the custom runtime, along with installation options and a number of examples. Start running PowerShell on Lambda today.

For more serverless learning resources, visit Serverless Land.

Acknowledgements

This custom runtime builds on the work of Norm Johanson, Kevin Marquette, Andrew Pearce, Jonathan Nunn, and Afroz Mohammed.

Dig through SERVFAILs with EDE

Post Syndicated from Stanley Chiang original https://blog.cloudflare.com/dig-through-servfails-with-ede/

It can be frustrating to get errors (SERVFAIL response codes) returned from your DNS queries. It can be even more frustrating if you don’t get enough information to understand why the error is occurring or what to do next. That’s why back in 2020, we launched support for Extended DNS Error (EDE) Codes to 1.1.1.1.

As a quick refresher, EDE codes are a proposed IETF standard enabled by the Extension Mechanisms for DNS (EDNS) spec. The codes return extra information about DNS or DNSSEC issues without touching the RCODE so that debugging is easier.

Now we’re happy to announce we will return more error code types and include additional helpful information to further improve your debugging experience. Let’s run through some examples of how these error codes can help you better understand the issues you may face.

To try for yourself, you’ll need to run the dig or kdig command in the terminal. For dig, please ensure you have v9.11.20 or above. If you are on macOS 12.1, by default you only have dig 9.10.6. Install an updated version of BIND to fix that.

Let’s start with the output of an example dig command without EDE support.

% dig @1.1.1.1 dnssec-failed.org +noedns

; <<>> DiG 9.18.0 <<>> @1.1.1.1 dnssec-failed.org +noedns

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: SERVFAIL, id: 8054

;; flags: qr rd ra; QUERY: 1, ANSWER: 0, AUTHORITY: 0, ADDITIONAL: 0

;; QUESTION SECTION:

;dnssec-failed.org. IN A

;; Query time: 23 msec

;; SERVER: 1.1.1.1#53(1.1.1.1) (UDP)

;; WHEN: Thu Mar 17 10:12:57 PDT 2022

;; MSG SIZE rcvd: 35

In the output above, we tried to do DNSSEC validation on dnssec-failed.org. It returns a SERVFAIL, but we don’t have context as to why.

Now let’s try that again with 1.1.1.1’s EDE support.

% dig @1.1.1.1 dnssec-failed.org +dnssec

; <<>> DiG 9.18.0 <<>> @1.1.1.1 dnssec-failed.org +dnssec

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: SERVFAIL, id: 34492

;; flags: qr rd ra; QUERY: 1, ANSWER: 0, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags: do; udp: 1232

; EDE: 9 (DNSKEY Missing): (no SEP matching the DS found for dnssec-failed.org.)

;; QUESTION SECTION:

;dnssec-failed.org. IN A

;; Query time: 15 msec

;; SERVER: 1.1.1.1#53(1.1.1.1) (UDP)

;; WHEN: Fri Mar 04 12:53:45 PST 2022

;; MSG SIZE rcvd: 103

We can see there is still a SERVFAIL. However, this time there is also an EDE Code 9, which stands for “DNSKEY Missing”. Accompanying that, we also have additional information saying “no SEP matching the DS found” for dnssec-failed.org. That’s better!

Another nifty feature is that we will return multiple errors when appropriate, so you can debug each one separately. In the example below, we returned a SERVFAIL with three different error codes: “Unsupported DNSKEY Algorithm”, “No Reachable Authority”, and “Network Error”.

% dig @1.1.1.1 [domain] +dnssec

; <<>> DiG 9.18.0 <<>> @1.1.1.1 [domain] +dnssec

; (1 server found)

;; global options: +cmd

;; Got answer:

;; ->>HEADER<<- opcode: QUERY, status: SERVFAIL, id: 55957

;; flags: qr rd ra; QUERY: 1, ANSWER: 0, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:

; EDNS: version: 0, flags: do; udp: 1232

; EDE: 1 (Unsupported DNSKEY Algorithm): (no supported DNSKEY algorithm for [domain].)

; EDE: 22 (No Reachable Authority): (at delegation [domain].)

; EDE: 23 (Network Error): (135.181.58.79:53 rcode=REFUSED for [domain] A)

;; QUESTION SECTION:

;[domain]. IN A

;; Query time: 1197 msec

;; SERVER: 1.1.1.1#53(1.1.1.1) (UDP)

;; WHEN: Wed Mar 02 13:41:30 PST 2022

;; MSG SIZE rcvd: 202
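If you want to process these errors in a script, the EDE lines can be pulled out of the dig output with a small parser. The TypeScript sketch below assumes the line layout shown in the examples above (`; EDE: <code> (<name>): (<extra text>)`); other dig versions may format the line differently, and the `parseEdeLines` function and `ExtendedDnsError` type are hypothetical names.

```typescript
interface ExtendedDnsError {
  code: number;      // EDE info code, e.g. 9
  name: string;      // human-readable name printed by dig
  extraText: string; // optional free-form text supplied by the resolver
}

// Assumed line layout, taken from the dig output above:
//   ; EDE: 9 (DNSKEY Missing): (no SEP matching the DS found for dnssec-failed.org.)
const EDE_LINE = /^;\s*EDE:\s*(\d+)\s*\(([^)]*)\)(?::\s*\((.*)\))?\s*$/;

function parseEdeLines(digOutput: string): ExtendedDnsError[] {
  const errors: ExtendedDnsError[] = [];
  for (const line of digOutput.split("\n")) {
    const match = EDE_LINE.exec(line.trim());
    if (match) {
      errors.push({
        code: Number(match[1]),
        name: match[2],
        extraText: match[3] || "",
      });
    }
  }
  return errors;
}
```

Because a response can carry several EDE options, the function returns a list rather than a single value.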

Here’s a list of the additional codes we now support:

| Error Code Number | Error Code Name |

|---|---|

| 1 | Unsupported DNSKEY Algorithm |

| 2 | Unsupported DS Digest Type |

| 5 | DNSSEC Indeterminate |

| 7 | Signature Expired |

| 8 | Signature Not Yet Valid |

| 9 | DNSKEY Missing |

| 10 | RRSIGs Missing |

| 11 | No Zone Key Bit Set |

| 12 | NSEC Missing |

We have documented all the error codes we currently support with additional information you may find helpful. Refer to our dev docs for more information.

How we improved DNS record build speed by more than 4,000x

Post Syndicated from Alex Fattouche original https://blog.cloudflare.com/dns-build-improvement/

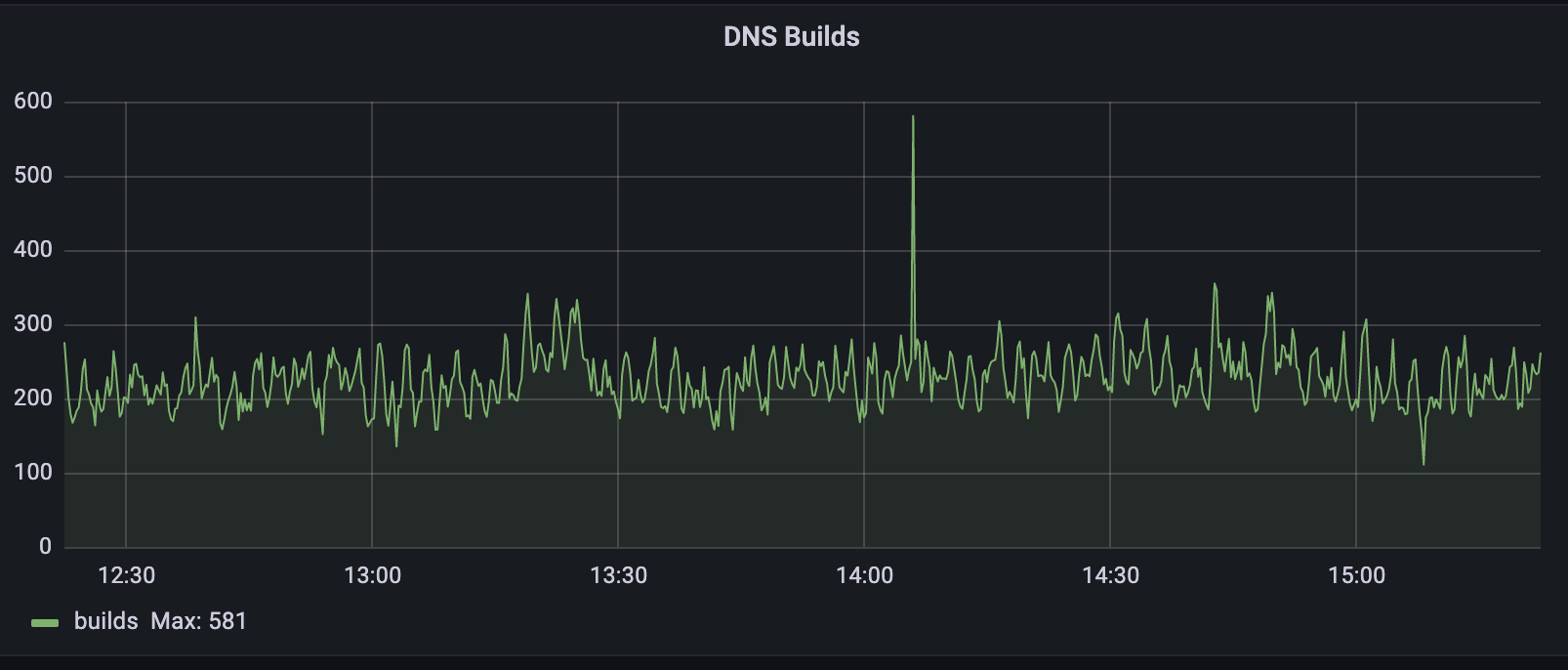

Since my previous blog about Secondary DNS, Cloudflare’s DNS traffic has more than doubled from 15.8 trillion DNS queries per month to 38.7 trillion. Our network now spans over 270 cities in over 100 countries, interconnecting with more than 10,000 networks globally. According to w3 stats, “Cloudflare is used as a DNS server provider by 15.3% of all the websites.” This means we have an enormous responsibility to serve DNS in the fastest and most reliable way possible.

Although the response time we have on DNS queries is the most important performance metric, there is another metric that sometimes goes unnoticed. DNS Record Propagation time is how long it takes changes submitted to our API to be reflected in our DNS query responses. Every millisecond counts here as it allows customers to quickly change configuration, making their systems much more agile. Although our DNS propagation pipeline was already known to be very fast, we had identified several improvements that, if implemented, would massively improve performance. In this blog post I’ll explain how we managed to drastically improve our DNS record propagation speed, and the impact it has on our customers.

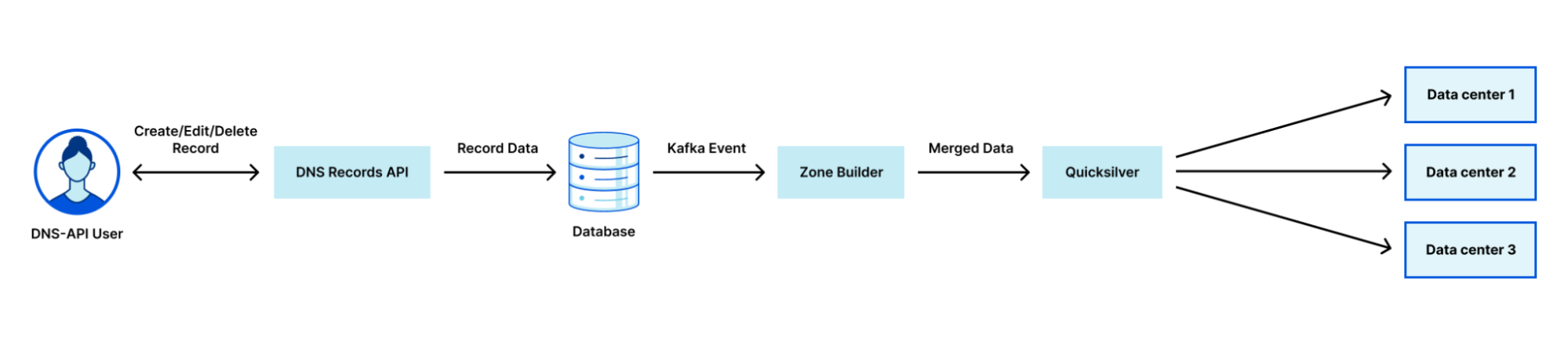

How DNS records are propagated

Cloudflare uses a multi-stage pipeline that takes our customers’ DNS record changes and pushes them to our global network, so they are available all over the world.

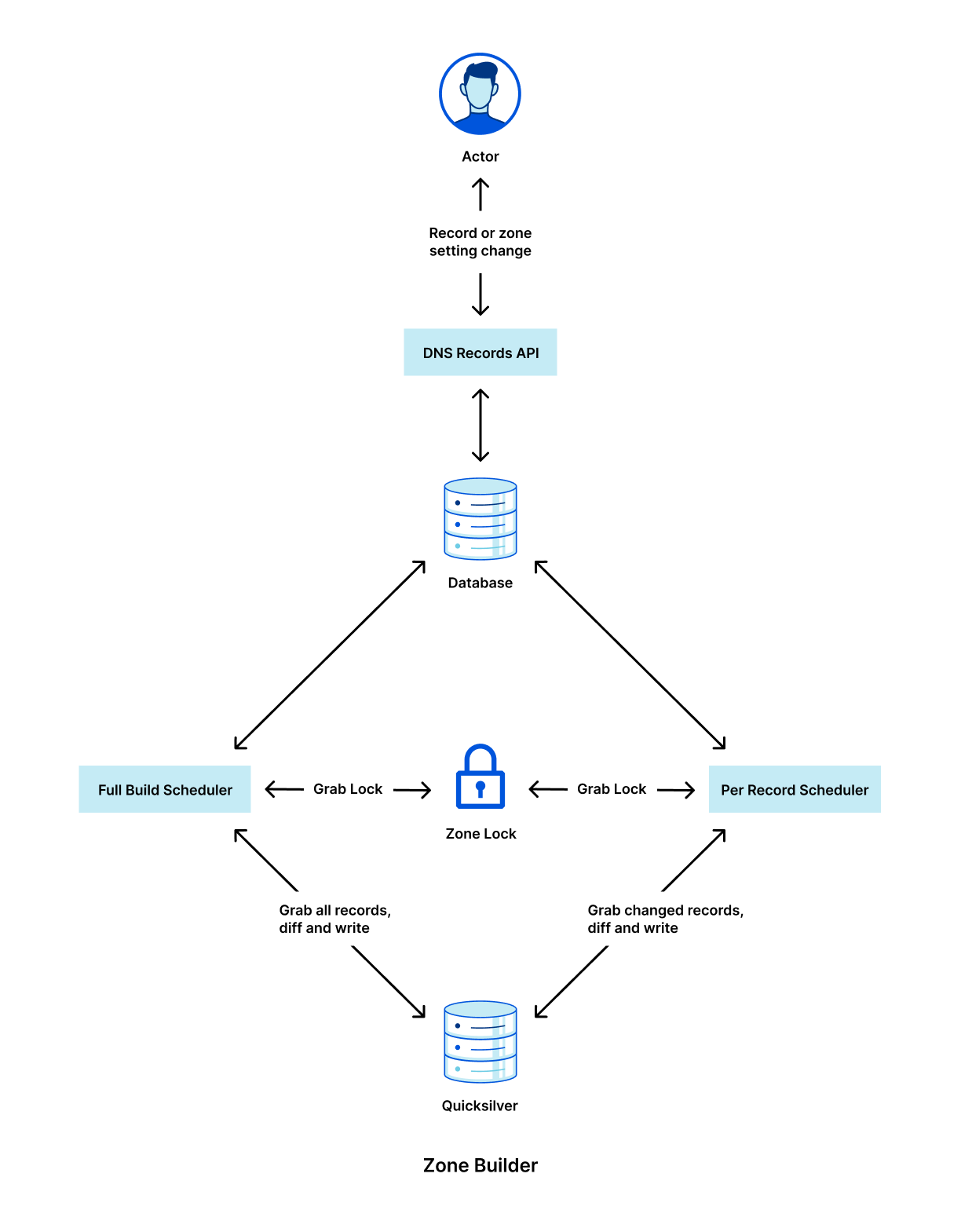

The steps shown in the diagram above are:

- Customer makes a change to a record via our DNS Records API (or UI).

- The change is persisted to the database.

- The database event triggers a Kafka message which is consumed by the Zone Builder.

- The Zone Builder takes the message, collects the contents of the zone from the database and pushes it to Quicksilver, our distributed KV store.

- Quicksilver then propagates this information to the network.

Of course, this is a simplified version of what is happening. In reality, our API receives thousands of requests per second. All POST/PUT/PATCH/DELETE requests ultimately result in a DNS record change. Each of these changes needs to be actioned so that the information we show through our API and in the Cloudflare dashboard is eventually consistent with the information we use to respond to DNS queries.

Historically, one of the largest bottlenecks in the DNS propagation pipeline was the Zone Builder, shown in step 4 above. Responsible for collecting and organizing records to be written to our global network, our Zone Builder often ate up most of the propagation time, especially for larger zones. As we continue to scale, it is important for us to remove any bottlenecks that may exist in our systems, and this was clearly identified as one such bottleneck.

Growing pains

When the pipeline shown above was first announced, the Zone Builder received somewhere between 5 and 10 DNS record changes per second. Although the Zone Builder at the time was a massive improvement on the previous system, it was not going to last long given the growth that Cloudflare was and still is experiencing. Fast-forward to today, we receive on average 250 DNS record changes per second, a staggering 25x to 50x growth from when the Zone Builder was first announced.

The way that the Zone Builder was initially designed was quite simple. When a zone changed, the Zone Builder would grab all the records from the database for that zone and compare them with the records stored in Quicksilver. Any differences were fixed to maintain consistency between the database and Quicksilver.

This is known as a full build. Full builds work great because each DNS record change corresponds to one zone change event. This means that multiple events can be batched and subsequently dropped if needed. For example, if a user makes 10 changes to their zone, this will result in 10 events. Since the Zone Builder grabs all the records for the zone anyway, there is no need to build the zone 10 times. We just need to build it once after the final change has been submitted.
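The batching idea can be sketched in a few lines of TypeScript. This is only an illustration of the principle, not the Zone Builder's code; the `ZoneChangeEvent` shape and its `sequence` field are assumptions made for the example.

```typescript
interface ZoneChangeEvent {
  zoneId: string;
  sequence: number; // hypothetical, monotonically increasing event number
}

// Collapse a batch of zone-change events so that each zone is built once,
// at the position of its most recent event. Earlier events for the same
// zone can be dropped because a full build reads every record anyway.
function coalesceFullBuilds(events: ZoneChangeEvent[]): ZoneChangeEvent[] {
  const latest: Record<string, ZoneChangeEvent> = {};
  for (const event of events) {
    const seen = latest[event.zoneId];
    if (seen === undefined || event.sequence > seen.sequence) {
      latest[event.zoneId] = event;
    }
  }
  return Object.keys(latest)
    .map((zoneId) => latest[zoneId])
    .sort((a, b) => a.sequence - b.sequence);
}
```

Ten events for one zone collapse into a single build triggered by the last of them.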

What happens if the zone contains one million records or 10 million records? This is a very real problem, because not only is Cloudflare scaling, but our customers are scaling with us. Today, our largest zone has millions of records. Although our database is optimized for performance, even one full build containing one million records took up to 35 seconds, largely caused by database query latency. In addition, when the Zone Builder compares the zone contents with the records stored in Quicksilver, we need to fetch all the records from Quicksilver for the zone, adding time. However, the impact doesn’t just stop at the single customer. This also eats up more resources from other services reading from the database and slows down the rate at which our Zone Builder can build other zones.

Per-record build: a new build type

Many of you might already have the solution to this problem in your head:

Why doesn’t the Zone Builder just query the database for the record that has changed and propagate just the single record?

Of course this is the correct solution, and the one we eventually ended up at. However, the road to get there was not as simple as it might seem.

Firstly, our database uses a series of functions that, at zone touch time, create a PostgreSQL Queue (PGQ) event that ultimately gets turned into a Kafka event. Initially, we had no distinction for individual record events, which meant our Zone Builder had no idea what had actually changed until it queried the database.

Next, the Zone Builder is still responsible for DNS zone settings in addition to records. Some examples of DNS zone settings include custom nameserver control and DNSSEC control. As a result, our Zone Builder needed to be aware of specific build types to ensure that they don’t step on each other. Furthermore, per-record builds cannot be batched in the same way that zone builds can because each event needs to be actioned separately.

As a result, a brand new scheduling system needed to be written. Lastly, Quicksilver interaction needed to be re-written to account for the different types of schedulers. These issues can be broken down as follows:

- Create a new Kafka event pipeline for record changes that contain information about the changed record.

- Separate the Zone Builder into a new type of scheduler that implements some defined scheduler interface.

- Implement the per-record scheduler to read events one by one in the correct order.

- Implement the new Quicksilver interface for the per-record scheduler.
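One way to picture the split described in the list above is a shared scheduler interface with two implementations. The TypeScript below is a sketch under assumptions: the `BuildScheduler` interface, the class names, and the `routeEvent` helper are invented for illustration and do not reflect the Zone Builder's real types.

```typescript
type BuildEvent =
  | { kind: "zone"; zoneId: string }
  | { kind: "record"; zoneId: string; recordName: string };

// Hypothetical interface that both scheduler types implement.
interface BuildScheduler {
  accepts(event: BuildEvent): boolean;
  enqueue(event: BuildEvent): void;
  next(): BuildEvent | undefined;
}

// Full builds can be batched: a zone waiting to be built is listed once.
class FullBuildScheduler implements BuildScheduler {
  private pending: string[] = [];
  accepts(event: BuildEvent): boolean {
    return event.kind === "zone";
  }
  enqueue(event: BuildEvent): void {
    if (this.pending.indexOf(event.zoneId) === -1) this.pending.push(event.zoneId);
  }
  next(): BuildEvent | undefined {
    const zoneId = this.pending.shift();
    return zoneId === undefined ? undefined : { kind: "zone", zoneId };
  }
}

// Per-record builds cannot be batched: every event is kept, in arrival order.
class PerRecordScheduler implements BuildScheduler {
  private queue: BuildEvent[] = [];
  accepts(event: BuildEvent): boolean {
    return event.kind === "record";
  }
  enqueue(event: BuildEvent): void {
    this.queue.push(event);
  }
  next(): BuildEvent | undefined {
    return this.queue.shift();
  }
}

// Hand each incoming event to the first scheduler that accepts it.
function routeEvent(event: BuildEvent, schedulers: BuildScheduler[]): void {
  for (const scheduler of schedulers) {
    if (scheduler.accepts(event)) {
      scheduler.enqueue(event);
      return;
    }
  }
  throw new Error("No scheduler accepts this event");
}
```

The sketch leaves out the Quicksilver writes and any coordination between the two schedulers; it only shows how one interface can carry two different queuing policies.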

Below is a high level diagram of how the new Zone Builder looks internally with the new scheduler types.

It is critically important that we lock between these two schedulers because it would otherwise be possible for the full build scheduler to overwrite the per-record scheduler’s changes with stale data.

It is important to note that none of this per-record architecture would be possible without the use of Cloudflare’s black lie approach to negative answers with DNSSEC. Normally, in order to properly serve negative answers with DNSSEC, all the records within the zone must be canonically sorted. This is needed in order to maintain a list of references from the apex record through all the records in the zone. With this normal approach to negative answers, a single record that has been added to the zone requires collecting all records to determine its insertion point within this sorted list of names.

Bugs

I would love to be able to write a Cloudflare blog where everything went smoothly; however, that is never the case. Bugs happen, but we need to be ready to react to them and set ourselves up so that next time this specific bug cannot happen.

In this case, the major bug we discovered was related to the cleanup of old records in Quicksilver. With the full Zone Builder, we have the luxury of knowing exactly what records exist in both the database and in Quicksilver. This makes writing and cleaning up a fairly simple task.
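The reason full builds make cleanup simple is that both sides of the comparison are available. A minimal TypeScript sketch of that comparison follows; the flat key/value `RecordSet` shape and the `planFullBuild` name are assumptions for illustration, not how records are actually stored in Quicksilver.

```typescript
// Hypothetical shape: record key (for example "www.example.com/A") -> value.
type RecordSet = Record<string, string>;

interface BuildPlan {
  writes: RecordSet; // keys to create or overwrite in the KV store
  deletes: string[]; // keys in the KV store that no longer exist in the database
}

function hasKey(records: RecordSet, key: string): boolean {
  return Object.prototype.hasOwnProperty.call(records, key);
}

// Compare the database view of a zone with the KV store view and work out
// what has to change so the KV store matches the database.
function planFullBuild(database: RecordSet, kvStore: RecordSet): BuildPlan {
  const writes: RecordSet = {};
  const deletes: string[] = [];
  for (const key of Object.keys(database)) {
    if (!hasKey(kvStore, key) || kvStore[key] !== database[key]) {
      writes[key] = database[key];
    }
  }
  for (const key of Object.keys(kvStore)) {
    if (!hasKey(database, key)) deletes.push(key);
  }
  return { writes, deletes };
}
```

A per-record build sees only the changed record, so it cannot derive the `deletes` list this way.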

When the per-record builds were introduced, record events such as creates, updates, and deletes all needed to be treated differently. Creates and deletes are fairly simple because you are either adding or removing a record from Quicksilver. Updates introduced an unforeseen issue due to the way that our PGQ was producing Kafka events. Record updates only contained the new record information, which meant that when the record name was changed, we had no way of knowing what to query for in Quicksilver in order to clean up the old record. This meant that any time a customer changed the name of a record in the DNS Records API, the old record would not be deleted. Ultimately, this was fixed by replacing those specific update events with both a creation and a deletion event so that the Zone Builder had the necessary information to clean up the stale records.
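The fix can be illustrated with a short TypeScript sketch. The event and record shapes below are hypothetical, and the delete-then-create ordering is an assumption for the example; the point is only that a deletion event carries the old name, which a bare update event did not.

```typescript
interface DnsRecord {
  name: string;
  type: string;
  content: string;
}

type RecordEvent =
  | { action: "create"; record: DnsRecord }
  | { action: "delete"; record: DnsRecord };

// Express an update as delete(old) followed by create(new), so the consumer
// always knows which stale key to remove, even if the record was renamed.
function splitUpdate(oldRecord: DnsRecord, newRecord: DnsRecord): RecordEvent[] {
  return [
    { action: "delete", record: oldRecord },
    { action: "create", record: newRecord },
  ];
}

// Apply events to a simple key/value store keyed by "<name>/<type>".
function applyEvents(store: Record<string, string>, events: RecordEvent[]): void {
  for (const event of events) {
    const key = event.record.name + "/" + event.record.type;
    if (event.action === "delete") {
      delete store[key];
    } else {
      store[key] = event.record.content;
    }
  }
}
```

With this shape, renaming `old.example.com` to `new.example.com` removes the old key instead of leaving it behind.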

None of this is rocket surgery, but we spend engineering effort to continuously improve our software so that it grows with the scaling of Cloudflare. And it’s challenging to change such a fundamental low-level part of Cloudflare when millions of domains depend on us.

Results

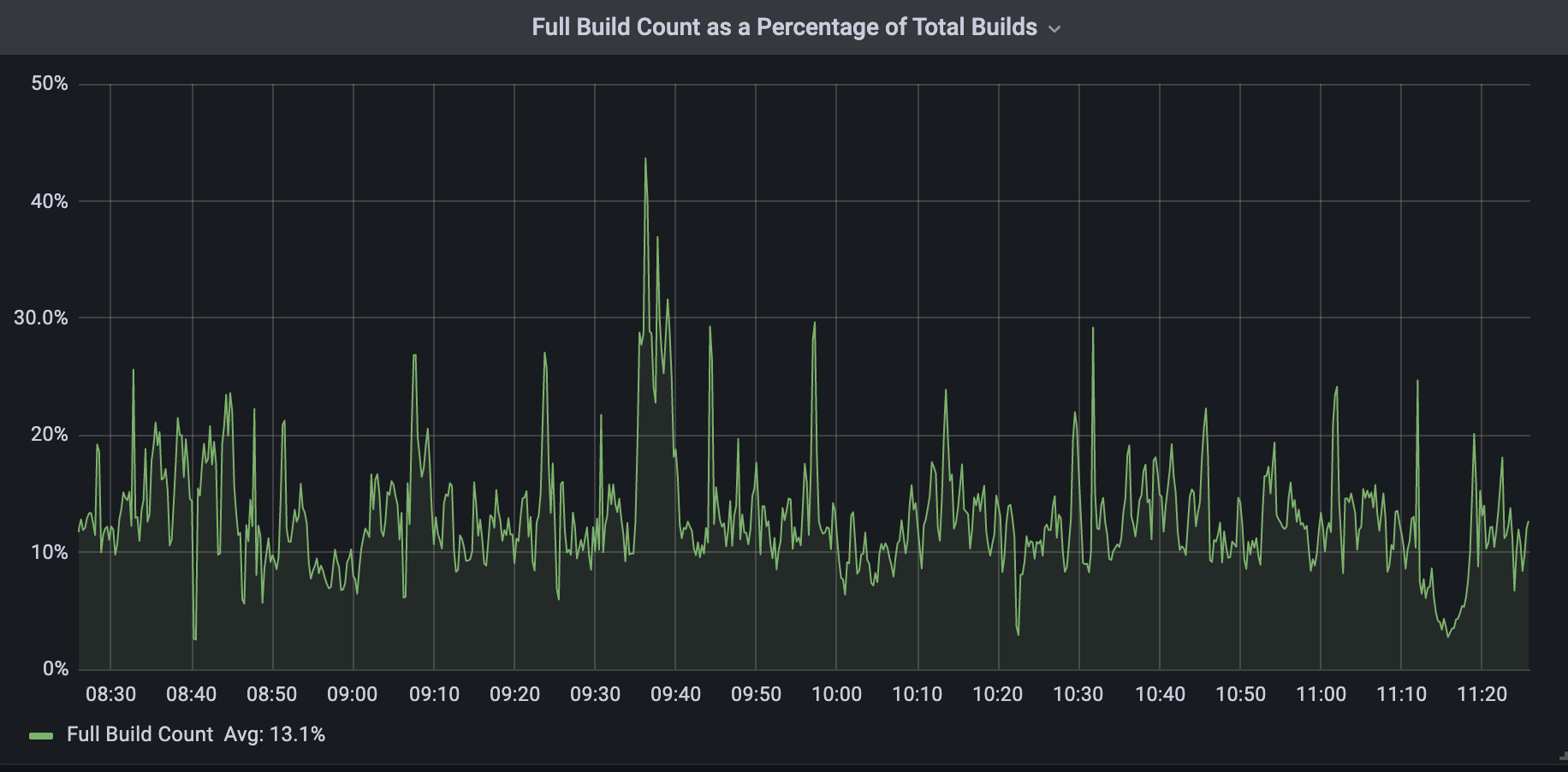

Today, all DNS Records API record changes are treated as per-record builds by the Zone Builder. As I previously mentioned, we have not been able to get rid of full builds entirely; however, they now represent about 13% of total DNS builds. This 13% corresponds to changes made to DNS settings that require knowledge of the entire zone’s contents.

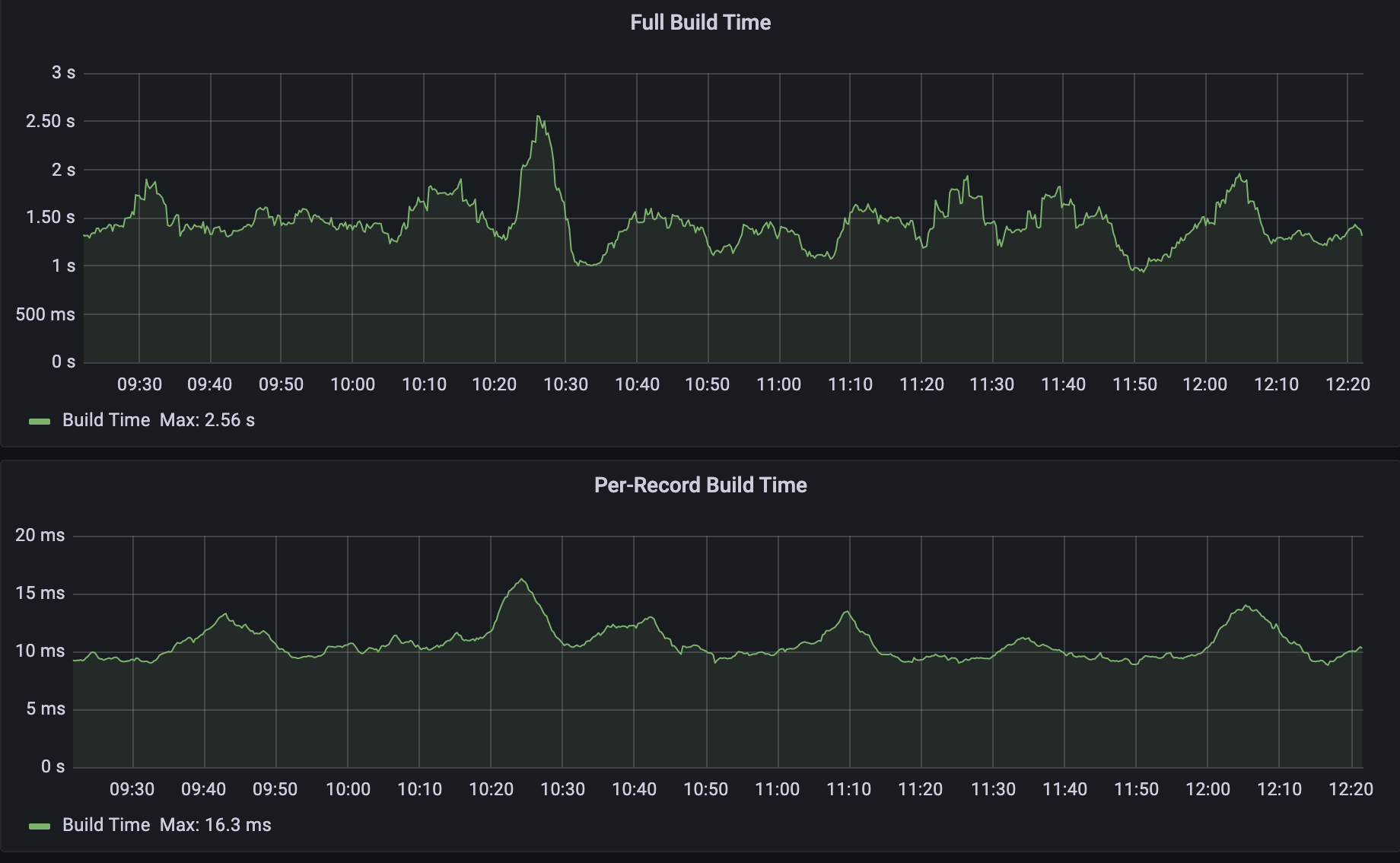

When we compare the two build types as shown below we can see that per-record builds are on average 150x faster than full builds. The build time below includes both database query time and Quicksilver write time.

From there, our records are propagated to our global network through Quicksilver.

The 150x improvement above is with respect to averages, but what about that 4000x that I mentioned at the start? As you can imagine, as the size of the zone increases, the difference between full build time and per-record build time also increases. I used a test zone of one million records and ran several per-record builds, followed by several full builds. The results are shown in the table below:

| Build Type | Build Time (ms) |

|---|---|

| Per Record #1 | 6 |

| Per Record #2 | 7 |

| Per Record #3 | 6 |

| Per Record #4 | 8 |

| Per Record #5 | 6 |

| Full #1 | 34032 |

| Full #2 | 33953 |

| Full #3 | 34271 |

| Full #4 | 34121 |

| Full #5 | 34093 |

We can see that, given five per-record builds, the build time was no more than 8ms. When running a full build however, the build time lasted on average 34 seconds. That is a build time reduction of 4250x!
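The arithmetic behind that figure can be reproduced from the table. The TypeScript snippet below uses only the measurements listed above and compares the average full build against the slowest per-record build, which is the conservative reading of the comparison.

```typescript
const perRecordMs = [6, 7, 6, 8, 6];
const fullMs = [34032, 33953, 34271, 34121, 34093];

function mean(values: number[]): number {
  return values.reduce((sum, value) => sum + value, 0) / values.length;
}

const slowestPerRecordMs = Math.max(...perRecordMs); // 8
const averageFullMs = mean(fullMs);                  // 34094
const speedup = averageFullMs / slowestPerRecordMs;  // 4261.75
const roundedSpeedup = 34000 / slowestPerRecordMs;   // 4250, using "34 seconds"

console.log(averageFullMs, speedup, roundedSpeedup);
```

Rounding the full build time to 34 seconds gives the 4250x quoted in the text; the unrounded average gives a slightly higher ratio.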

Given the full build times for both average-sized zones and large zones, it is apparent that all Cloudflare customers are benefitting from this improved performance, and the benefits only improve as the size of the zone increases. In addition, our Zone Builder uses fewer database and Quicksilver resources, meaning other Cloudflare systems are able to operate at increased capacity.

Next Steps

The results here have been very impactful, though we think that we can do even better. In the future, we plan to get rid of full builds altogether by replacing them with zone setting builds. Instead of fetching the zone settings in addition to all the records, the zone setting builder would just fetch the settings for the zone and propagate that to our global network via Quicksilver. Similar to the per-record builds, this is a difficult challenge due to the complexity of zone settings and the number of actors that touch it. Ultimately if this can be accomplished, we can officially retire the full builds and leave it as a reminder in our git history of the scale at which we have grown over the years.

In addition, we plan to introduce a batching system that will collect record changes into groups to minimize the number of queries we make to our database and Quicksilver.

Does solving these kinds of technical and operational challenges excite you? Cloudflare is always hiring for talented specialists and generalists within our Engineering and other teams.

On Disinformation and Large Online Platforms

Post Syndicated from Bozho original https://techblog.bozho.net/on-disinformation-and-large-online-platforms/

This week I was invited to be a panelist, together with other digital ministers, at a side event organized by Ukraine in Davos during the World Economic Forum. The topic was disinformation, and I’d like to share my thoughts on it. The video recording is here; what follows is not a transcript, but an expanded version.

Bulgaria is seemingly more susceptible to disinformation, for various reasons. We have a majority of the population that has positive sentiments about Russia, for historical reasons. And disinformation campaigns have been around both before and after the war started. The typical narratives that are being pushed every day are about the bad, decadent west; the Slavic, traditional, conservative Russian government; the evil and aggressive NATO; the great and powerful, but peaceful Russian army, and so on.

These disinformation campaigns are undermining public discourse and even public policy. COVID vaccination rates in Bulgaria are among the lowest in the world (and therefore the mortality rate is among the highest). Propaganda and conspiracy theories took hold in our society and literally killed our relatives and friends. The war is another example: Bulgaria ranks first when it comes to people thinking that the west (EU/NATO) is at fault for the war in Ukraine.

The Kremlin uses the same propaganda techniques developed in the Cold War, but applies them on the free internet, much more efficiently. They use European values of free speech to undermine those same European values.

Their main channels are social networks, which seem to remain blissfully ignorant of local contexts such as the one described above.

What we’ve seen, and what has been leaked and discussed for a long time is that troll factories amplify anonymous websites. They share content, like content, make it seem like it’s noteworthy to the algorithms.

We know how it works. But governments can’t just block a website because they think it’s false information. A government may easily go beyond good intentions and engage in censorship. In 4 years I won’t be a minister and the next government may decide I’m spreading “western propaganda” and block my profiles, my blogs, my interviews in the media.

I said all of that in front of the Bulgarian parliament last week. I also said that local measures are insufficient, and risky.

That’s why we have to act smart. We need to strike down the mechanisms for weaponizing social networks, the ones used for spreading disinformation to large portions of the population, rather than block the information itself. Brute force is dangerous, and it helps the Kremlin in their narrative about the bad, hypocritical west that talks about free speech, but has the power to shut you down if a bureaucrat says so.

The solution, in my opinion, is to regulate recommendation engines, on a European level. To make these algorithms find and demote these networks of trolls (they fail at that – Facebook claims they found 3 Russian-linked accounts in January).

How to do it? It’s hard to answer if we don’t know the data and the details of how they currently work. Social networks can try to cluster users by IPs, ASes, VPN exit nodes, content similarity, DNS and WHOIS data for websites, photo databases, etc. They can consult national media registers (if they exist), via APIs, to make sure something is a media outlet and not an auto-generated website with pre-written false content (which is what actually happens).

The regulation should focus social media platforms not on moderating everything, but on not promoting inauthentic behavior.

Europe and its partners must find a way to regulate algorithms without curbing freedom of expression. And I was in Brussels last week to underline that. We can use the Digital Services Act to do exactly that, and we have to do it wisely.

I’ve been criticized: why am I taking on this task when I could just do cool things like eID and eServices and removing bureaucracy? I’m doing those, of course, without delay.

But we are here as government officials to tackle the systemic risks. The eID I’ll introduce will do no good if we lose the hearts and minds of people to Kremlin propaganda.

The post On Disinformation and Large Online Platforms appeared first on Bozho's tech blog.

Manage application security and compliance with the AWS Cloud Development Kit and cdk-nag

Post Syndicated from Rodney Bozo original https://aws.amazon.com/blogs/devops/manage-application-security-and-compliance-with-the-aws-cloud-development-kit-and-cdk-nag/

Infrastructure as Code (IaC) is an important part of Cloud Applications. Developers rely on various Static Application Security Testing (SAST) tools to identify security/compliance issues and mitigate these issues early on, before releasing their applications to production. Additionally, SAST tools often provide reporting mechanisms that can help developers verify compliance during security reviews.

cdk-nag integrates directly into AWS Cloud Development Kit (AWS CDK) applications to provide identification and reporting mechanisms similar to SAST tooling.

This post demonstrates how to integrate cdk-nag into an AWS CDK application to provide continual feedback and help align your applications with best practices.

Overview of cdk-nag

cdk-nag (inspired by cfn_nag) validates that the state of constructs within a given scope complies with a given set of rules. Additionally, cdk-nag provides a rule suppression and compliance reporting system. cdk-nag validates constructs by extending AWS CDK Aspects. If you’re interested in learning more about the AWS CDK Aspect system, then you should check out this post.

cdk-nag includes several rule sets (NagPacks) to validate your application against. As of this post, cdk-nag includes the AWS Solutions, HIPAA Security, NIST 800-53 rev 4, NIST 800-53 rev 5, and PCI DSS 3.2.1 NagPacks. You can pick and choose different NagPacks and apply as many as you wish to a given scope.

cdk-nag rules can either be warnings or errors. Both warnings and errors will be displayed in the console and compliance reports. Only unsuppressed errors will prevent applications from deploying with the cdk deploy command.
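The gating behavior described above can be summarized in a few lines of TypeScript. This is a self-contained sketch of the rule only, not cdk-nag's implementation: the `Finding` type and `blockingFindings` function are hypothetical, and the example data reuses rule IDs from this post plus an invented warning.

```typescript
type RuleLevel = "Warning" | "Error";

interface Finding {
  ruleId: string;
  level: RuleLevel;
  suppressed: boolean;
}

// Only unsuppressed errors should stop a deployment. Warnings and
// suppressed findings are still reported, but they do not block.
function blockingFindings(findings: Finding[]): Finding[] {
  return findings.filter((finding) => finding.level === "Error" && !finding.suppressed);
}

const exampleFindings: Finding[] = [
  { ruleId: "AwsSolutions-S1", level: "Error", suppressed: true },
  { ruleId: "AwsSolutions-S3", level: "Error", suppressed: false },
  { ruleId: "Example-Warning", level: "Warning", suppressed: false }, // hypothetical rule ID
];

const blockers = blockingFindings(exampleFindings);
console.log(blockers.map((finding) => finding.ruleId));
```

In this example only AwsSolutions-S3 would prevent a deployment, because it is the only error that has not been suppressed.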

You can see which rules are implemented in each of the NagPacks in the Rules Documentation in the GitHub repository.

Walkthrough

This walkthrough will set up a minimal AWS CDK v2 application, as well as demonstrate how to apply a NagPack to the application, how to suppress rules, and how to view a report of the findings. Although cdk-nag has support for Python, TypeScript, Java, and .NET AWS CDK applications, we’ll use TypeScript for this walkthrough.

Prerequisites

For this walkthrough, you should have the following prerequisites:

- A local installation of and experience using the AWS CDK.

Create a baseline AWS CDK application

In this section you will create and synthesize a small AWS CDK v2 application with an Amazon Simple Storage Service (Amazon S3) bucket. If you are unfamiliar with using the AWS CDK, then learn how to install and set up the AWS CDK by looking at its open source GitHub repository.

- Run the following commands to create the AWS CDK application:

mkdir CdkTest

cd CdkTest

cdk init app --language typescript

- Replace the contents of lib/cdk_test-stack.ts with the following:

import { Stack, StackProps } from 'aws-cdk-lib';

import { Construct } from 'constructs';

import { Bucket } from 'aws-cdk-lib/aws-s3';

export class CdkTestStack extends Stack {

  constructor(scope: Construct, id: string, props?: StackProps) {

    super(scope, id, props);

    const bucket = new Bucket(this, 'Bucket')

  }

}

- Run the following commands to install dependencies and synthesize our sample app:

npm install

npx cdk synth

You should see an AWS CloudFormation template with an S3 bucket both in your terminal and in cdk.out/CdkTestStack.template.json.

Apply a NagPack in your application

In this section, you’ll install cdk-nag, include the AwsSolutions NagPack in your application, and view the results.

- Run the following command to install cdk-nag:

npm install cdk-nag

- Replace the contents of bin/cdk_test.ts with the following:

#!/usr/bin/env node

import 'source-map-support/register';

import * as cdk from 'aws-cdk-lib';

import { CdkTestStack } from '../lib/cdk_test-stack';

import { AwsSolutionsChecks } from 'cdk-nag'

import { Aspects } from 'aws-cdk-lib';

const app = new cdk.App();

// Add the cdk-nag AwsSolutions Pack with extra verbose logging enabled.

Aspects.of(app).add(new AwsSolutionsChecks({ verbose: true }))

new CdkTestStack(app, 'CdkTestStack', {});

- Run the following command to view the output and generate the compliance report:

npx cdk synth

The output should look similar to the following (Note: SSE stands for Server-side encryption):

[Error at /CdkTestStack/Bucket/Resource] AwsSolutions-S1: The S3 Bucket has server access logs disabled. The bucket should have server access logging enabled to provide detailed records for the requests that are made to the bucket.

[Error at /CdkTestStack/Bucket/Resource] AwsSolutions-S2: The S3 Bucket does not have public access restricted and blocked. The bucket should have public access restricted and blocked to prevent unauthorized access.

[Error at /CdkTestStack/Bucket/Resource] AwsSolutions-S3: The S3 Bucket does not default encryption enabled. The bucket should minimally have SSE enabled to help protect data-at-rest.

[Error at /CdkTestStack/Bucket/Resource] AwsSolutions-S10: The S3 Bucket does not require requests to use SSL. You can use HTTPS (TLS) to help prevent potential attackers from eavesdropping on or manipulating network traffic using person-in-the-middle or similar attacks. You should allow only encrypted connections over HTTPS (TLS) using the aws:SecureTransport condition on Amazon S3 bucket policies.

Found errorsNote that applying the AwsSolutions NagPack to the application rendered several errors in the console (AwsSolutions-S1, AwsSolutions-S2, AwsSolutions-S3, and AwsSolutions-S10). Furthermore, the cdk.out/AwsSolutions-CdkTestStack-NagReport.csv contains the errors as well:

Rule ID,Resource ID,Compliance,Exception Reason,Rule Level,Rule Info

"AwsSolutions-S1","CdkTestStack/Bucket/Resource","Non-Compliant","N/A","Error","The S3 Bucket has server access logs disabled."

"AwsSolutions-S2","CdkTestStack/Bucket/Resource","Non-Compliant","N/A","Error","The S3 Bucket does not have public access restricted and blocked."

"AwsSolutions-S3","CdkTestStack/Bucket/Resource","Non-Compliant","N/A","Error","The S3 Bucket does not default encryption enabled."

"AwsSolutions-S5","CdkTestStack/Bucket/Resource","Compliant","N/A","Error","The S3 static website bucket either has an open world bucket policy or does not use a CloudFront Origin Access Identity (OAI) in the bucket policy for limited getObject and/or putObject permissions."

"AwsSolutions-S10","CdkTestStack/Bucket/Resource","Non-Compliant","N/A","Error","The S3 Bucket does not require requests to use SSL."Remediating and suppressing errors

In this section, you’ll remediate the AwsSolutions-S10 error, suppress the AwsSolutions-S1 error on a Stack level, suppress the AwsSolutions-S2 error on a Resource level errors, and not remediate the AwsSolutions-S3 error and view the results.

- Replace the contents of lib/cdk_test-stack.ts with the following:

import { Stack, StackProps } from 'aws-cdk-lib';
import { Construct } from 'constructs';
import { Bucket } from 'aws-cdk-lib/aws-s3';
import { NagSuppressions } from 'cdk-nag';

export class CdkTestStack extends Stack {
  constructor(scope: Construct, id: string, props?: StackProps) {
    super(scope, id, props);
    // The local scope 'this' is the Stack.
    NagSuppressions.addStackSuppressions(this, [
      {
        id: 'AwsSolutions-S1',
        reason: 'Demonstrate a stack level suppression.',
      },
    ]);
    // Remediating AwsSolutions-S10 by enforcing SSL on the bucket.
    const bucket = new Bucket(this, 'Bucket', { enforceSSL: true });
    NagSuppressions.addResourceSuppressions(bucket, [
      {
        id: 'AwsSolutions-S2',
        reason: 'Demonstrate a resource level suppression.',
      },
    ]);
  }

}

- Run the cdk synth command again:

npx cdk synth

The output should look similar to the following:

[Error at /CdkTestStack/Bucket/Resource] AwsSolutions-S3: The S3 Bucket does not default encryption enabled. The bucket should minimally have SSE enabled to help protect data-at-rest.

Found errors

The cdk.out/AwsSolutions-CdkTestStack-NagReport.csv contains more details about rule compliance, non-compliance, and suppressions.

Rule ID,Resource ID,Compliance,Exception Reason,Rule Level,Rule Info

"AwsSolutions-S1","CdkTestStack/Bucket/Resource","Suppressed","Demonstrate a stack level suppression.","Error","The S3 Bucket has server access logs disabled."

"AwsSolutions-S2","CdkTestStack/Bucket/Resource","Suppressed","Demonstrate a resource level suppression.","Error","The S3 Bucket does not have public access restricted and blocked."

"AwsSolutions-S3","CdkTestStack/Bucket/Resource","Non-Compliant","N/A","Error","The S3 Bucket does not default encryption enabled."

"AwsSolutions-S5","CdkTestStack/Bucket/Resource","Compliant","N/A","Error","The S3 static website bucket either has an open world bucket policy or does not use a CloudFront Origin Access Identity (OAI) in the bucket policy for limited getObject and/or putObject permissions."

"AwsSolutions-S10","CdkTestStack/Bucket/Resource","Compliant","N/A","Error","The S3 Bucket does not require requests to use SSL."

Moreover, note that the resultant cdk.out/CdkTestStack.template.json template contains the cdk-nag suppression data. Because the suppression data is included in the stack and resource metadata, the template itself shows which rules weren’t applied to an application.

{
  "Metadata": {
    "cdk_nag": {
      "rules_to_suppress": [
        {
          "id": "AwsSolutions-S1",
          "reason": "Demonstrate a stack level suppression."
        }
      ]
    }
  },
  "Resources": {
    "BucketDEB6E181": {
      "Type": "AWS::S3::Bucket",
      "UpdateReplacePolicy": "Retain",
      "DeletionPolicy": "Retain",
      "Metadata": {
        "aws:cdk:path": "CdkTestStack/Bucket/Resource",
        "cdk_nag": {
          "rules_to_suppress": [
            {
              "id": "AwsSolutions-S2",
              "reason": "Demonstrate a resource level suppression."
            }
          ]
        }
      }
    },
    ...
  },
  ...

}

Reflecting on the Walkthrough

In this section, you learned how to apply a NagPack to your application, remediate or suppress warnings and errors, and review the compliance reports. The reporting and suppression systems give the development and security teams within an organization a way to work together to identify and mitigate potential security and compliance issues. Security teams can choose which NagPacks developers should apply to their applications. Developers can then use the feedback to quickly remediate issues, and security teams can use the reports to validate compliance. Furthermore, developers and security teams can work together to use suppressions to transparently document exceptions to rules that they’ve decided not to follow.
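Since suppressions are recorded in the synthesized template, they can be listed for review without any cdk-nag tooling. The following is a minimal sketch under stated assumptions: listSuppressions and the Template types are hypothetical names introduced here, and the sample object is trimmed from the template excerpt in the walkthrough rather than read from cdk.out.

```typescript
// Sketch: collect every cdk-nag suppression recorded in a synthesized template.
// The Metadata.cdk_nag.rules_to_suppress shape follows the walkthrough excerpt;
// the types and the listSuppressions helper are hypothetical, not a cdk-nag API.
interface Suppression { id: string; reason: string }
interface NagMetadata {
  'aws:cdk:path'?: string;
  cdk_nag?: { rules_to_suppress?: Suppression[] };
}
interface Template {
  Metadata?: NagMetadata;
  Resources?: Record<string, { Type: string; Metadata?: NagMetadata }>;
}
interface SuppressionEntry { scope: string; id: string; reason: string }

function listSuppressions(template: Template): SuppressionEntry[] {
  const entries: SuppressionEntry[] = [];
  // Stack-level suppressions live in the top-level Metadata.
  for (const s of template.Metadata?.cdk_nag?.rules_to_suppress ?? []) {
    entries.push({ scope: 'stack', id: s.id, reason: s.reason });
  }
  // Resource-level suppressions live in each resource's Metadata.
  const resources = template.Resources ?? {};
  for (const logicalId of Object.keys(resources)) {
    const resource = resources[logicalId];
    if (!resource) continue;
    // Prefer the construct path when present; fall back to the logical ID.
    const scope = resource.Metadata?.['aws:cdk:path'] ?? logicalId;
    for (const s of resource.Metadata?.cdk_nag?.rules_to_suppress ?? []) {
      entries.push({ scope, id: s.id, reason: s.reason });
    }
  }
  return entries;
}

// Sample trimmed from the walkthrough's CdkTestStack.template.json excerpt.
const sampleTemplate: Template = {
  Metadata: {
    cdk_nag: {
      rules_to_suppress: [
        { id: 'AwsSolutions-S1', reason: 'Demonstrate a stack level suppression.' },
      ],
    },
  },
  Resources: {
    BucketDEB6E181: {
      Type: 'AWS::S3::Bucket',
      Metadata: {
        'aws:cdk:path': 'CdkTestStack/Bucket/Resource',
        cdk_nag: {
          rules_to_suppress: [
            { id: 'AwsSolutions-S2', reason: 'Demonstrate a resource level suppression.' },
          ],
        },
      },
    },
  },
};

const suppressions = listSuppressions(sampleTemplate);
// suppressions holds two entries: AwsSolutions-S1 with scope 'stack', and
// AwsSolutions-S2 with scope 'CdkTestStack/Bucket/Resource'.
```

In a real project, the template object would presumably come from parsing cdk.out/CdkTestStack.template.json. The resulting list gives reviewers one place to see each exception and its stated reason.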

Advanced usage and further reading

This section briefly covers some advanced options for using cdk-nag.

Unit Testing with the AWS CDK Assertions Library

The Annotations submodule of the AWS CDK assertions library lets you check for cdk-nag warnings and errors without AWS credentials by integrating a NagPack into your application unit tests. Read this post for further information about the AWS CDK assertions module. The following is an example of using assertions with a TypeScript AWS CDK application and Jest for unit testing.

import { Annotations, Match } from 'aws-cdk-lib/assertions';
import { App, Aspects, Stack } from 'aws-cdk-lib';
import { AwsSolutionsChecks } from 'cdk-nag';
import { CdkTestStack } from '../lib/cdk_test-stack';

describe('cdk-nag AwsSolutions Pack', () => {
  let stack: Stack;
  let app: App;
  // In this case we can use beforeAll() over beforeEach() since our tests
  // do not modify the state of the application
  beforeAll(() => {
    // GIVEN
    app = new App();
    stack = new CdkTestStack(app, 'test');
    // WHEN
    Aspects.of(stack).add(new AwsSolutionsChecks());
  });

  // THEN
  test('No unsuppressed Warnings', () => {
    const warnings = Annotations.fromStack(stack).findWarning(
      '*',
      Match.stringLikeRegexp('AwsSolutions-.*')
    );
    expect(warnings).toHaveLength(0);
  });

  test('No unsuppressed Errors', () => {
    const errors = Annotations.fromStack(stack).findError(
      '*',
      Match.stringLikeRegexp('AwsSolutions-.*')
    );
    expect(errors).toHaveLength(0);
  });

});

Additionally, many testing frameworks include watch functionality: a background process that reruns tests when files in your project change, giving you fast feedback. For example, when using the AWS CDK in JavaScript/TypeScript, you can use the Jest CLI watch commands. When Jest watch detects a file change, it attempts to run unit tests related to the changed file. This can be used to automatically run cdk-nag-related tests when making changes to your AWS CDK application.

CDK Watch

When developing in non-production environments, consider using AWS CDK Watch with a NagPack for fast feedback. AWS CDK Watch attempts to synthesize and then deploy changes whenever you save changes to your files. Aspects are run during synthesis. Therefore, any NagPacks applied to your application will also run on save. As in the walkthrough, all of the unsuppressed errors will prevent deployments, all of the messages will be output to the console, and all of the compliance reports will be generated. Read this post for further information about AWS CDK Watch.
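Because the compliance report is regenerated on each synthesis, a short script can summarize it between saves. The following is a minimal sketch, not part of cdk-nag: parseCsvLine and summarizeReport are hypothetical helpers, and the sample rows are copied from the walkthrough report rather than read from cdk.out/AwsSolutions-CdkTestStack-NagReport.csv.

```typescript
// Sketch: summarize a cdk-nag CSV report by compliance status.
// parseCsvLine and summarizeReport are hypothetical helpers, not a cdk-nag API.
interface NagRow { ruleId: string; resourceId: string; compliance: string; reason: string }

// Split one CSV line into fields, honoring double-quoted fields:
// commas inside quotes are kept, and "" inside quotes is an escaped quote.
function parseCsvLine(line: string): string[] {
  const fields: string[] = [];
  let current = '';
  let inQuotes = false;
  for (let i = 0; i < line.length; i++) {
    const ch = line.charAt(i);
    if (inQuotes) {
      if (ch === '"' && line.charAt(i + 1) === '"') {
        current += '"';
        i++; // skip the second quote of the escaped pair
      } else if (ch === '"') {
        inQuotes = false;
      } else {
        current += ch;
      }
    } else if (ch === '"') {
      inQuotes = true;
    } else if (ch === ',') {
      fields.push(current);
      current = '';
    } else {
      current += ch;
    }
  }
  fields.push(current);
  return fields;
}

function summarizeReport(csv: string): { rows: NagRow[]; counts: Record<string, number> } {
  const lines = csv.split('\n').map((l) => l.trim()).filter((l) => l.length > 0);
  const rows: NagRow[] = [];
  const counts: Record<string, number> = {};
  // Skip the header row (Rule ID,Resource ID,Compliance,...).
  for (const line of lines.slice(1)) {
    const [ruleId = '', resourceId = '', compliance = '', reason = ''] = parseCsvLine(line);
    rows.push({ ruleId, resourceId, compliance, reason });
    counts[compliance] = (counts[compliance] ?? 0) + 1;
  }
  return { rows, counts };
}

// Rows copied from the walkthrough's report after remediation and suppression.
const sampleReport = `Rule ID,Resource ID,Compliance,Exception Reason,Rule Level,Rule Info
"AwsSolutions-S1","CdkTestStack/Bucket/Resource","Suppressed","Demonstrate a stack level suppression.","Error","The S3 Bucket has server access logs disabled."
"AwsSolutions-S2","CdkTestStack/Bucket/Resource","Suppressed","Demonstrate a resource level suppression.","Error","The S3 Bucket does not have public access restricted and blocked."
"AwsSolutions-S3","CdkTestStack/Bucket/Resource","Non-Compliant","N/A","Error","The S3 Bucket does not default encryption enabled."
"AwsSolutions-S5","CdkTestStack/Bucket/Resource","Compliant","N/A","Error","The S3 static website bucket either has an open world bucket policy or does not use a CloudFront Origin Access Identity (OAI) in the bucket policy for limited getObject and/or putObject permissions."
"AwsSolutions-S10","CdkTestStack/Bucket/Resource","Compliant","N/A","Error","The S3 Bucket does not require requests to use SSL."`;

const report = summarizeReport(sampleReport);
const nonCompliantRules = report.rows
  .filter((r) => r.compliance === 'Non-Compliant')
  .map((r) => r.ruleId);
// report.counts: Suppressed 2, Non-Compliant 1, Compliant 2
// nonCompliantRules: ['AwsSolutions-S3']
```

The parser is hand-rolled only to keep the sketch dependency-free; a dedicated CSV library would be a reasonable substitute in a real project.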

Conclusion

In this post, you learned how to use cdk-nag in your AWS CDK applications. To learn more about using cdk-nag in your applications, check out the README in the GitHub Repository. If you would like to learn how to create your own rules and NagPacks, then check out the developer documentation. The repository is open source and welcomes community contributions and feedback.

Author:

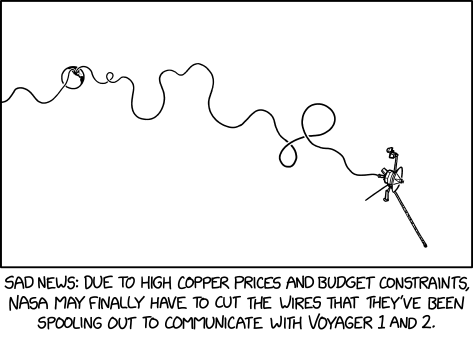

Voyager Wires

Post Syndicated from original https://xkcd.com/2624/

Spring 2022 SOC 2 Type I Privacy report now available

Post Syndicated from Nimesh Ravasa original https://aws.amazon.com/blogs/security/spring-2022-soc-2-type-i-privacy-report-now-available/

Your privacy considerations are at the core of our compliance work at Amazon Web Services (AWS), and we are focused on the protection of your content while using AWS services. Our Spring 2022 SOC 2 Type I Privacy report is now available, which provides customers with a third-party attestation of our system and the suitability of the design of our privacy controls. The SOC 2 Privacy Trust Service Criteria (TSC), developed by the American Institute of Certified Public Accountants (AICPA), establishes the criteria for evaluating controls that relate to how personal information is collected, used, retained, disclosed, and disposed of. For more information about our privacy commitments supporting our SOC 2 Type I report, see the AWS Customer Agreement.

The scope of the Spring 2022 SOC 2 Type I Privacy report includes information about how we handle the content that you upload to AWS, and how it is protected in all of the services and locations that are in scope for the latest AWS SOC reports. The SOC 2 Type I Privacy report is available to AWS customers through AWS Artifact in the AWS Management Console.

As always, we value your feedback and questions. Feel free to reach out to the compliance team through the Contact Us page. If you have feedback about this post, submit comments in the Comments section below.

Want more AWS Security how-to content, news, and feature announcements? Follow us on Twitter.

The Latest Cash Grab?? – Starlink for RVs – All Details!

Post Syndicated from Crosstalk Solutions original https://www.youtube.com/watch?v=UgZvizSRuLg