Improving AWS Java applications with Amazon CodeGuru Reviewer

Post Syndicated from Rajdeep Mukherjee original https://aws.amazon.com/blogs/devops/improving-aws-java-applications-with-amazon-codeguru-reviewer/

Amazon CodeGuru Reviewer is a machine learning (ML)-based AWS service that provides automated code review comments on your Java and Python applications. Powered by program analysis and ML, CodeGuru Reviewer detects hard-to-find bugs and inefficiencies in your code and applies best practices learned from millions of lines of open-source and Amazon code. You can start analyzing your code through pull requests and full repository analysis (for more information, see Automating code reviews and application profiling with Amazon CodeGuru).

The recommendations generated by CodeGuru Reviewer for Java fall into the following categories:

- AWS best practices

- Concurrency

- Security

- Resource leaks

- Other specialized categories such as sensitive information leaks, input validation, and code clones

- General best practices on data structures, control flow, exception handling, and more

We expect the recommendations to benefit beginners as well as expert Java programmers.

In this post, we showcase CodeGuru Reviewer recommendations related to using the AWS SDK for Java. For in-depth discussion of other specialized topics, see our posts on concurrency, security, and resource leaks. For Python applications, see Raising Python code quality using Amazon CodeGuru.

The AWS SDK for Java simplifies the use of AWS services by providing a set of features that are consistent and familiar for Java developers. The SDK has more than 250 AWS service clients, which are available on GitHub. Service clients include services like Amazon Simple Storage Service (Amazon S3), Amazon DynamoDB, Amazon Kinesis, Amazon Elastic Compute Cloud (Amazon EC2), AWS IoT, and Amazon SageMaker. These services constitute more than 6,000 operations, which you can use to access AWS services. With such rich and diverse services and APIs, developers may not always be aware of the nuances of AWS API usage. These nuances may not be important at the beginning, but become critical as the scale increases and the application evolves or becomes diverse. This is why CodeGuru Reviewer has a category of recommendations: AWS best practices. This category of recommendations enables you to become aware of certain features of AWS APIs so your code can be more correct and performant.

The first part of this post focuses on the key features of the AWS SDK for Java as well as API patterns in AWS services. The second part of this post demonstrates using CodeGuru Reviewer to improve code quality for Java applications that use the AWS SDK for Java.

AWS SDK for Java

The AWS SDK for Java supports higher-level abstractions for simplified development and provides support for cross-cutting concerns such as credential management, retries, data marshaling, and serialization. In this section, we describe a few key features that are supported in the AWS SDK for Java. Additionally, we discuss some key API patterns in AWS services, such as batching and pagination.

The AWS SDK for Java has the following features:

- Waiters – Waiters are utility methods that make it easy to wait for a resource to transition into a desired state. Waiters abstract the polling logic into a simple API call. The waiters interface provides a custom delay strategy to control the sleep time between retries, as well as a custom condition on whether polling of a resource should be retried. The AWS SDK for Java also offers an async variant of waiters.

- Exceptions – The AWS SDK for Java uses runtime (or unchecked) exceptions instead of checked exceptions in order to give you fine-grained control over the errors you want to handle and to prevent scalability issues inherent with checked exceptions in large applications. Broadly, the AWS SDK for Java has two types of exceptions:

- AmazonClientException – Indicates that a problem occurred inside the Java client code, either while trying to send a request to AWS or while trying to parse a response from AWS. For example, the AWS SDK for Java throws an AmazonClientException if no network connection is available when you try to call an operation on one of the clients.

- AmazonServiceException – Represents an error response from an AWS service. For example, if you try to terminate an EC2 instance that doesn’t exist, Amazon EC2 returns an error response, and all the details of that response are included in the AmazonServiceException that’s thrown. In some cases, a subclass of AmazonServiceException is thrown to give you fine-grained control over handling error cases through catch blocks.
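To make the waiter idea from the feature list above more concrete, here is a minimal sketch of what a waiter abstracts away: a bounded number of polls with a fixed delay between them. It uses only the Java standard library and is not the SDK's implementation. The names WaiterSketch and waitFor, and the simulated resource in main, are hypothetical and exist only for this illustration.

```java
import java.util.Optional;
import java.util.function.Predicate;
import java.util.function.Supplier;

class WaiterSketch {

    /**
     * Polls fetchState until accept matches or maxAttempts polls have been made.
     * Returns the accepted state, or Optional.empty() on timeout or interruption.
     */
    static <T> Optional<T> waitFor(Supplier<T> fetchState, Predicate<T> accept,
                                   int maxAttempts, long delayMillis) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            T state = fetchState.get();
            if (accept.test(state)) {
                return Optional.of(state);
            }
            if (attempt < maxAttempts) {
                try {
                    Thread.sleep(delayMillis); // fixed delay between attempts
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt(); // preserve the interrupt flag
                    return Optional.empty();
                }
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // Hypothetical resource that reports "terminated" on the third poll.
        int[] polls = {0};
        Supplier<String> describe = () -> (++polls[0] >= 3) ? "terminated" : "shutting-down";
        Predicate<String> isTerminated = "terminated"::equals;

        Optional<String> result = waitFor(describe, isTerminated, 5, 10);
        // Prints "terminated after 3 polls"
        System.out.println(result.orElse("timed out") + " after " + polls[0] + " polls");
    }
}
```

The caller supplies only the state lookup, the success condition, and the retry budget, which is the same division of labor the SDK waiters offer through a request, an acceptor, and a polling strategy.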

The API has the following patterns:

- Batching – A batch operation provides you with the ability to perform a single CRUD operation (create, read, update, delete) on multiple resources. Some typical use cases include the following:

- SendMessageBatch in Amazon Simple Queue Service (Amazon SQS), which allows you to send multiple messages to a single queue

- BatchGetItem in DynamoDB, which allows you to retrieve multiple items from different tables

- DeleteObjects (batch delete) in Amazon S3, which deletes more than one object in a single request

- Pagination – Many AWS operations return paginated results when the response object is too large to return in a single response. To enable you to perform pagination, the request and response objects for many service clients in the SDK provide a continuation token (typically named NextToken) to indicate additional results.
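The pagination pattern described above can be sketched without any AWS dependency. The following example is an illustration under stated assumptions: listNames is a hypothetical in-memory stand-in for a paginated list operation, and the token is simply the index of the next item, which is not how any particular AWS service encodes its tokens. The page size is assumed to be greater than zero.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class PaginationSketch {

    /** One page of results plus the token for the next page (null when done). */
    static final class Page {
        final List<String> items;
        final String nextToken;

        Page(List<String> items, String nextToken) {
            this.items = items;
            this.nextToken = nextToken;
        }
    }

    /** Hypothetical paginated call: returns at most pageSize names per request. */
    static Page listNames(List<String> all, String startToken, int pageSize) {
        int from = (startToken == null) ? 0 : Integer.parseInt(startToken);
        int to = Math.min(from + pageSize, all.size());
        String next = (to < all.size()) ? String.valueOf(to) : null;
        return new Page(new ArrayList<>(all.subList(from, to)), next);
    }

    /** Keeps requesting pages until the response no longer carries a token. */
    static List<String> fetchAll(List<String> all, int pageSize) {
        List<String> result = new ArrayList<>();
        String token = null;
        do {
            Page page = listNames(all, token, pageSize);
            result.addAll(page.items);
            token = page.nextToken;
        } while (token != null);
        return result;
    }

    public static void main(String[] args) {
        List<String> tables = Arrays.asList("orders", "users", "carts", "events", "audit");
        // A single call sees only the first page: [orders, users]
        System.out.println(listNames(tables, null, 2).items);
        // The loop collects every page: [orders, users, carts, events, audit]
        System.out.println(fetchAll(tables, 2));
    }
}
```

The first call in main shows the failure mode that a missing pagination loop produces: the caller silently receives a truncated list.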

AWS best practices

Now that we have summarized the SDK-specific features and API patterns, let’s look at the CodeGuru Reviewer recommendations on AWS API use.

The CodeGuru Reviewer recommendations for the AWS SDK for Java range from detecting outdated or deprecated APIs to warning about API misuse, missing pagination, authentication and exception scenarios, and using efficient API alternatives. In this section, we discuss a few examples patterned after real code.

Handling pagination

Over 1,000 APIs from more than 150 AWS services have pagination operations. The pagination best practice rule in CodeGuru covers all the pagination operations. In particular, the pagination rule checks if the Java application correctly fetches all the results of the pagination operation.

The response of a pagination operation in AWS SDK for Java 1.0 contains a token that has to be used to retrieve the next page of results. In the following code snippet, you make a call to listTables(), a DynamoDB ListTables operation, which can only return up to 100 table names per page. This code might not produce complete results because the operation returns paginated results instead of all results.

public void getDynamoDbTable() {
    AmazonDynamoDBClient client = new AmazonDynamoDBClient();
    // Only the first page (up to 100 table names) is read here.
    List<String> tables = client.listTables().getTableNames();
    System.out.println(tables);
}

CodeGuru Reviewer detects the missing pagination in the code snippet and recommends adding another call to check for additional results.

You can accept the recommendation and add the logic to get the next page of table names by checking if a token (LastEvaluatedTableName in ListTablesResponse) is included in each response page. If such a token is present, it’s used in a subsequent request to fetch the next page of results. See the following code:

public void getDynamoDbTable() {
    // The builder-style request and response below are AWS SDK for Java 2.x types,
    // so the 2.x client is used with them.
    DynamoDbClient client = DynamoDbClient.create();
    ListTablesRequest listTablesRequest = ListTablesRequest.builder().build();
    boolean done = false;
    while (!done) {
        ListTablesResponse listTablesResponse = client.listTables(listTablesRequest);
        System.out.println(listTablesResponse.tableNames());
        String lastEvaluatedTableName = listTablesResponse.lastEvaluatedTableName();
        if (lastEvaluatedTableName == null) {
            // No token in the response: this was the last page.
            done = true;
        } else {
            // Start the next page after the last table name of this page.
            listTablesRequest = listTablesRequest.toBuilder()
                .exclusiveStartTableName(lastEvaluatedTableName)
                .build();
        }
    }
}

Handling failures in batch operation calls

Batch operations are common with many AWS services that process bulk requests. Batch operations can succeed without throwing exceptions even if some items in the request fail. Therefore, a recommended practice is to explicitly check for any failures in the result of the batch APIs. Over 40 APIs from more than 20 AWS services have batch operations. The best practice rule in CodeGuru Reviewer covers all the batch operations. In the following code snippet, you make a call to sendMessageBatch, a batch operation from Amazon SQS, but it doesn’t handle any errors returned by that batch operation:

public void flush(final String sqsEndPoint,
                  final List<SendMessageBatchRequestEntry> batch) {
    // Default client built from the environment's credentials and Region.
    AmazonSQS sqsClient = AmazonSQSClientBuilder.defaultClient();
    if (batch.isEmpty()) {
        return;
    }
    // The result is ignored, so per-message failures go unnoticed.
    sqsClient.sendMessageBatch(sqsEndPoint, batch);
}

CodeGuru Reviewer detects this issue and recommends checking the return value for failures.

You can accept this recommendation and add logging for the complete list of messages that failed to send, in addition to throwing an SQSUpdateException. See the following code:

public void flush(final String sqsEndPoint,
                  final List<SendMessageBatchRequestEntry> batch) {
    // Default client built from the environment's credentials and Region.
    AmazonSQS sqsClient = AmazonSQSClientBuilder.defaultClient();
    if (batch.isEmpty()) {
        return;
    }
    SendMessageBatchResult result = sqsClient.sendMessageBatch(sqsEndPoint, batch);
    // The call can succeed overall while individual entries fail.
    final List<BatchResultErrorEntry> failed = result.getFailed();
    if (!failed.isEmpty()) {
        final String failedMessage = failed.stream()
            .map(batchResultErrorEntry ->
                String.format("…", batchResultErrorEntry.getId(),
                    batchResultErrorEntry.getMessage()))
            .collect(Collectors.joining(","));
        throw new SQSUpdateException(
            "Error occurred while sending messages to SQS::" + failedMessage);
    }
}

Exception handling best practices

Amazon S3 is one of the most popular AWS services with our customers. A frequent operation with this service is to upload a stream-based object through an Amazon S3 client. Stream-based uploads might encounter occasional network connectivity or timeout issues, and the best practice to address such a scenario is to properly handle the corresponding ResetException error. ResetException extends SdkClientException, which subsequently extends AmazonClientException. Consider the following code snippet, which lacks such exception handling:

private void uploadInputStreamToS3(String bucketName,
                                   InputStream input,
                                   String key, ObjectMetadata metadata)
        throws SdkClientException {
    final AmazonS3 amazonS3Client = AmazonS3ClientBuilder.defaultClient();
    PutObjectRequest putObjectRequest =
        new PutObjectRequest(bucketName, key, input, metadata);
    // No read limit is set, so a retried stream upload can fail with a ResetException.
    amazonS3Client.putObject(putObjectRequest);
}

In this case, CodeGuru Reviewer correctly detects the missing handling of the ResetException error and suggests possible solutions.

This recommendation is rich in that it provides alternatives to suit different use cases. The most common handling uses File or FileInputStream objects, but in other cases explicit handling of mark and reset operations is necessary to reliably avoid a ResetException.

You can fix the code by explicitly setting a predefined read limit using the setReadLimit method of RequestClientOptions. Its default value is 128 KB. Setting the read limit to one byte greater than the size of the stream reliably avoids a ResetException.

For example, if the maximum expected size of a stream is 100,000 bytes, set the read limit to 100,001 (100,000 + 1) bytes. The mark and reset always work for 100,000 bytes or less. However, this might cause some streams to buffer that number of bytes into memory.
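The mark and reset behavior behind this advice can be observed with the standard library alone. The following sketch is an analogy, not the SDK's internals: it uses java.io.BufferedInputStream with a deliberately tiny initial buffer so that the read limit passed to mark() decides whether reset() still works. The class and method names (MarkResetSketch, canRewind) are hypothetical.

```java
import java.io.BufferedInputStream;
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;

class MarkResetSketch {

    /**
     * Marks a stream with the given read limit, reads bytesToRead bytes one at a
     * time, then tries to rewind. Returns true if reset() succeeded.
     */
    static boolean canRewind(int readLimit, int bytesToRead) {
        byte[] payload = new byte[bytesToRead];
        // A 4-byte initial buffer, so the read limit (not the default 8 KB
        // buffer) decides how far the stream can be rewound.
        try (InputStream in = new BufferedInputStream(new ByteArrayInputStream(payload), 4)) {
            in.mark(readLimit);
            for (int i = 0; i < bytesToRead; i++) {
                in.read();
            }
            in.reset(); // throws IOException if the mark was invalidated
            return true;
        } catch (IOException markInvalidated) {
            return false;
        }
    }

    public static void main(String[] args) {
        int streamSize = 100_000;
        // Read limit one byte larger than the stream: the rewind works (prints true).
        System.out.println(canRewind(streamSize + 1, streamSize));
        // Read limit much smaller than the stream: the mark is lost (prints false).
        System.out.println(canRewind(1_000, streamSize));
    }
}
```

The successful case also shows the cost mentioned above: to keep the mark valid, the stream has to hold every byte read since the mark in memory.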

The fix reliably avoids ResetException when uploading an object of type InputStream to Amazon S3:

private void uploadInputStreamToS3(String bucketName, InputStream input,
                                   String key, ObjectMetadata metadata)
        throws SdkClientException {
    final AmazonS3 amazonS3Client = AmazonS3ClientBuilder.defaultClient();
    // One byte more than the largest expected stream (100,000 bytes).
    final int READ_LIMIT = 100_001;
    PutObjectRequest putObjectRequest =
        new PutObjectRequest(bucketName, key, input, metadata);
    putObjectRequest.getRequestClientOptions().setReadLimit(READ_LIMIT);
    amazonS3Client.putObject(putObjectRequest);
}

Replacing custom polling with waiters

A common activity when you’re working with services that are eventually consistent (such as DynamoDB) or have a lead time for creating resources (such as Amazon EC2) is to wait for a resource to transition into a desired state. The AWS SDK provides the Waiters API, a convenient and efficient feature for waiting that abstracts out the polling logic into a simple API call. If you’re not aware of this feature, you might write custom and potentially inefficient polling logic to determine whether a particular resource has transitioned into a desired state.

The following code appears to be waiting for the status of EC2 instances to change to shutting-down or terminated inside a while (true) loop:

private boolean terminateInstance(final String instanceId, final AmazonEC2 ec2Client)
        throws InterruptedException {
    long start = System.currentTimeMillis();
    while (true) {
        try {
            DescribeInstanceStatusResult describeInstanceStatusResult =
                ec2Client.describeInstanceStatus(new DescribeInstanceStatusRequest()
                    .withInstanceIds(instanceId).withIncludeAllInstances(true));
            List<InstanceStatus> instanceStatusList =
                describeInstanceStatusResult.getInstanceStatuses();
            long finish = System.currentTimeMillis();
            long timeElapsed = finish - start;
            // Give up once the overall timeout has elapsed.
            if (timeElapsed > INSTANCE_TERMINATION_TIMEOUT) {
                break;
            }
            // No status reported yet: sleep and poll again.
            if (instanceStatusList.size() < 1) {
                Thread.sleep(WAIT_FOR_TRANSITION_INTERVAL);
                continue;
            }
            String currentState = instanceStatusList.get(0).getInstanceState().getName();
            if ("shutting-down".equals(currentState) || "terminated".equals(currentState)) {
                return true;
            } else {
                Thread.sleep(WAIT_FOR_TRANSITION_INTERVAL);
            }
        } catch (AmazonServiceException ex) {
            throw ex;
        }
        …
}

CodeGuru Reviewer detects the polling scenario and recommends that you use the waiters feature to improve the efficiency of such programs.

Based on the recommendation, the following code uses the waiters feature that is available in the AWS SDK for Java. The custom polling logic is replaced with a waiter obtained from waiters(), which is then run with a call to waiter.run(…). The run method accepts the request and an optional custom polling strategy, and polls synchronously until the resource reaches the desired state or the waiter gives up. The SDK throws a WaiterTimedOutException if the resource doesn’t transition into the desired state after the configured number of retries. The fixed code is simpler and more efficient because the polling logic is reduced to a single API call:

public void terminateInstance(final String instanceId, final AmazonEC2 ec2Client)
        throws InterruptedException {
    Waiter<DescribeInstancesRequest> waiter = ec2Client.waiters().instanceTerminated();
    ec2Client.terminateInstances(new TerminateInstancesRequest().withInstanceIds(instanceId));
    try {
        waiter.run(new WaiterParameters()
            .withRequest(new DescribeInstancesRequest()
                .withInstanceIds(instanceId))
            .withPollingStrategy(new PollingStrategy(new MaxAttemptsRetryStrategy(60),
                new FixedDelayStrategy(5))));
    } catch (WaiterTimedOutException e) {
        List<InstanceStatus> instanceStatusList = ec2Client.describeInstanceStatus(
            new DescribeInstanceStatusRequest()
                .withInstanceIds(instanceId)
                .withIncludeAllInstances(true))
            .getInstanceStatuses();
        String state;
        if (instanceStatusList != null && instanceStatusList.size() > 0) {
            state = instanceStatusList.get(0).getInstanceState().getName();
        }
    }
}

Service-specific best practice recommendations

In addition to the SDK operation-specific recommendations discussed above, CodeGuru Reviewer provides service-specific best practice recommendations for the APIs of services such as Amazon S3, Amazon EC2, and DynamoDB, which help improve Java applications that use AWS service clients. For example, CodeGuru can detect the following:

- Resource leaks in Java applications that use high-level libraries, such as the Amazon S3 TransferManager

- Deprecated methods in various AWS services

- Missing null checks on the response of the GetItem API call in DynamoDB

- Missing error handling in the output of the PutRecords API call in Kinesis

- Anti-patterns such as binding the SNS subscribe or createTopic operation with the Publish operation
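Several of these checks, like the batch rule discussed earlier, come down to inspecting per-entry outcomes of a call that does not throw on partial failure. The following standard-library sketch illustrates that pattern under stated assumptions: sendBatch is a hypothetical stand-in for a batch API (it rejects blank payloads), and EntryResult and failedIds are names invented for this example rather than SDK types.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

class BatchResultSketch {

    /** Per-entry outcome of a batch call; a null errorMessage means success. */
    static final class EntryResult {
        final String id;
        final String errorMessage;

        EntryResult(String id, String errorMessage) {
            this.id = id;
            this.errorMessage = errorMessage;
        }

        boolean failed() {
            return errorMessage != null;
        }
    }

    /** Hypothetical batch call: rejects blank payloads but never throws. */
    static List<EntryResult> sendBatch(List<String> payloads) {
        List<EntryResult> results = new ArrayList<>();
        for (int i = 0; i < payloads.size(); i++) {
            String error = payloads.get(i).trim().isEmpty() ? "empty payload" : null;
            results.add(new EntryResult("msg-" + i, error));
        }
        return results;
    }

    /** Returns the IDs of entries that failed, so the caller can log or retry them. */
    static List<String> failedIds(List<EntryResult> results) {
        return results.stream()
                .filter(EntryResult::failed)
                .map(r -> r.id)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<EntryResult> results = sendBatch(Arrays.asList("hello", "", "world", " "));
        List<String> failed = failedIds(results);
        if (!failed.isEmpty()) {
            // Prints "Failed entries: msg-1,msg-3"
            System.out.println("Failed entries: " + String.join(",", failed));
        }
    }
}
```

Returning the failed IDs rather than a boolean keeps enough detail for the caller to retry only the entries that were rejected.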

Conclusion

This post introduced how to use CodeGuru Reviewer to improve the use of the AWS SDK in Java applications. CodeGuru is now available for you to try. For pricing information, see Amazon CodeGuru pricing.

Use new account assignment APIs for AWS SSO to automate multi-account access

Post Syndicated from Akhil Aendapally original https://aws.amazon.com/blogs/security/use-new-account-assignment-apis-for-aws-sso-to-automate-multi-account-access/

In this blog post, we’ll show how you can programmatically assign and audit access to multiple AWS accounts for your AWS Single Sign-On (SSO) users and groups, using the AWS Command Line Interface (AWS CLI) and AWS CloudFormation.

With AWS SSO, you can centrally manage access and user permissions to all of your accounts in AWS Organizations. You can assign user permissions based on common job functions, customize them to meet your specific security requirements, and assign the permissions to users or groups in the specific accounts where they need access. You can create, read, update, and delete permission sets in one place to have consistent role policies across your entire organization. You can then provide access by assigning permission sets to multiple users and groups in multiple accounts all in a single operation.

AWS SSO recently added new account assignment APIs and AWS CloudFormation support to automate access assignment across AWS Organizations accounts. This release addressed feedback from our customers with multi-account environments who wanted to adopt AWS SSO, but faced challenges related to managing AWS account permissions. To automate the previously manual process and save administration time, you can now use the new AWS SSO account assignment APIs, or AWS CloudFormation templates, to programmatically manage AWS account permission sets in multi-account environments.

With AWS SSO account assignment APIs, you can now build automation that assigns access for your users and groups to AWS accounts. You can also gain insights into who has access to which permission sets in which accounts across your entire AWS Organizations structure. With the account assignment APIs, your automation system can programmatically retrieve permission sets for audit and governance purposes, as shown in Figure 1.

Figure 1: Automating multi-account access with the AWS SSO API and AWS CloudFormation

Overview

In this walkthrough, we’ll illustrate how to create permission sets, assign permission sets to users and groups in AWS SSO, and grant access for users and groups to multiple AWS accounts by using the AWS Command Line Interface (AWS CLI) and AWS CloudFormation.

To grant user permissions to AWS resources with AWS SSO, you use permission sets. A permission set is a collection of AWS Identity and Access Management (IAM) policies. Permission sets can contain up to 10 AWS managed policies and a single custom policy stored in AWS SSO.

A policy is an object that defines a user’s permissions. Policies contain statements that represent individual access controls (allow or deny) for various tasks. This determines what tasks users can or cannot perform within the AWS account. AWS evaluates these policies when an IAM principal (a user or role) makes a request.

When you provision a permission set in the AWS account, AWS SSO creates a corresponding IAM role on that account, with a trust policy that allows users to assume the role through AWS SSO. With AWS SSO, you can assign more than one permission set to a user in the specific AWS account. Users who have multiple permission sets must choose one when they sign in through the user portal or the AWS CLI. Users will see these as IAM roles.

To learn more about IAM policies, see Policies and permissions in IAM. To learn more about permission sets, see Permission Sets.

Assume you have a company, Example.com, which has three AWS accounts: an organization management account (ExampleOrgMaster), a development account (ExampleOrgDev), and a test account (ExampleOrgTest). Example.com uses AWS Organizations to manage these accounts and has already enabled AWS SSO.

Example.com has the IT security lead, Frank Infosec, who needs PowerUserAccess to the test account (ExampleOrgTest) and SecurityAudit access to the development account (ExampleOrgDev). Alice Developer, the developer, needs full access to Amazon Elastic Compute Cloud (Amazon EC2) and Amazon Simple Storage Service (Amazon S3) through the development account (ExampleOrgDev). We’ll show you how to assign and audit the access for Alice and Frank centrally with AWS SSO, using the AWS CLI.

The flow includes the following steps:

- Create three permission sets:

- PowerUserAccess, with the PowerUserAccess policy attached.

- AuditAccess, with the SecurityAudit policy attached.

- EC2-S3-FullAccess, with the AmazonEC2FullAccess and AmazonS3FullAccess policies attached.

- Assign permission sets to the AWS account and AWS SSO users:

- Assign the PowerUserAccess and AuditAccess permission sets to Frank Infosec, to provide the required access to the ExampleOrgTest and ExampleOrgDev accounts, respectively.

- Assign the EC2-S3-FullAccess permission set to Alice Developer, to provide the required permissions to the ExampleOrgDev account.

- Retrieve the assigned permissions by using Account Entitlement APIs for audit and governance purposes.
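As a rough illustration of the target state that the steps above build toward, the following Java sketch writes the three planned assignments down as plain data and answers the kind of audit questions the walkthrough asks later. It does not call AWS SSO. The class and method names (AssignmentAuditSketch, plannedAssignments, permissionSetsIn, accountsFor, principalsFor) are hypothetical and exist only for this example.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

class AssignmentAuditSketch {

    /** One account assignment: a principal gets a permission set in an account. */
    static final class Assignment {
        final String principal;
        final String account;
        final String permissionSet;

        Assignment(String principal, String account, String permissionSet) {
            this.principal = principal;
            this.account = account;
            this.permissionSet = permissionSet;
        }
    }

    /** The walkthrough's planned assignments, written as data. */
    static List<Assignment> plannedAssignments() {
        return Arrays.asList(
                new Assignment("Frank Infosec", "ExampleOrgTest", "PowerUserAccess"),
                new Assignment("Frank Infosec", "ExampleOrgDev", "AuditAccess"),
                new Assignment("Alice Developer", "ExampleOrgDev", "EC2-S3-FullAccess"));
    }

    /** Which permission sets are assigned in the given account? */
    static Set<String> permissionSetsIn(List<Assignment> all, String account) {
        Set<String> result = new TreeSet<>();
        for (Assignment a : all) {
            if (a.account.equals(account)) {
                result.add(a.permissionSet);
            }
        }
        return result;
    }

    /** In which accounts is the given permission set assigned? */
    static Set<String> accountsFor(List<Assignment> all, String permissionSet) {
        Set<String> result = new TreeSet<>();
        for (Assignment a : all) {
            if (a.permissionSet.equals(permissionSet)) {
                result.add(a.account);
            }
        }
        return result;
    }

    /** Who holds the given permission set in the given account? */
    static Set<String> principalsFor(List<Assignment> all, String account, String permissionSet) {
        Set<String> result = new TreeSet<>();
        for (Assignment a : all) {
            if (a.account.equals(account) && a.permissionSet.equals(permissionSet)) {
                result.add(a.principal);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<Assignment> all = plannedAssignments();
        System.out.println(permissionSetsIn(all, "ExampleOrgDev"));   // [AuditAccess, EC2-S3-FullAccess]
        System.out.println(accountsFor(all, "PowerUserAccess"));      // [ExampleOrgTest]
        System.out.println(principalsFor(all, "ExampleOrgDev", "AuditAccess")); // [Frank Infosec]
    }
}
```

Keeping the intended assignments as data like this gives an automation script something to compare against the results that the audit calls return.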

Note: AWS SSO permission sets can contain either AWS managed policies or custom policies that are stored in AWS SSO. In this blog we attach AWS managed policies to the AWS SSO permission sets for simplicity. To help secure your AWS resources, follow the standard security advice of granting least privilege by using an AWS SSO custom policy when you create a permission set.

Figure 2: AWS Organizations accounts access for Alice and Frank

To help simplify administration of access permissions, we recommend that you assign access directly to groups rather than to individual users. With groups, you can grant or deny permissions to groups of users, rather than having to apply those permissions to each individual. For simplicity, in this blog you’ll assign permissions directly to the users.

Prerequisites

Before you start this walkthrough, complete these steps:

- Identify the AWS accounts to which you want to grant AWS SSO access, and add them to your organization. To learn more, see Managing the AWS accounts in your organization.

- Get the permissions that are required to use the AWS SSO console. To learn more, see Permissions Required to Use the AWS SSO Console.

- Sign in to the AWS Management Console of the AWS Organizations management account with AWS SSO administrator credentials. To learn more about AWS Organizations and the management account, see AWS Organizations FAQs.

- Enable AWS SSO for your AWS Organizations structure. To learn more, see Enable AWS SSO.

- Have your users and groups provisioned in AWS SSO. You can manage your users and groups in the AWS SSO internal identity store, connect AWS SSO to your Microsoft Active Directory, or integrate with an external identity provider using SAML 2.0 and SCIM 2.0. To learn more about AWS SSO identity store options, see Manage Your Identity Source.

Use the AWS SSO API from the AWS CLI

In order to call the AWS SSO account assignment API by using the AWS CLI, you need to install and configure AWS CLI v2. For more information about AWS CLI installation and configuration, see Installing the AWS CLI and Configuring the AWS CLI.

Step 1: Create permission sets

In this step, you learn how to create the EC2-S3-FullAccess, AuditAccess, and PowerUserAccess permission sets in AWS SSO from the AWS CLI.

Before you create the permission sets, run the following command to get the Amazon Resource Name (ARN) of the AWS SSO instance and the Identity Store ID, which you will need later in the process when you create and assign permission sets to AWS accounts and users or groups.

Figure 3 shows the results of running the command.

Figure 3: AWS SSO list instances

Next, create the permission sets for the security team (Frank) and the dev team (Alice), as follows.

Permission set for Alice Developer (EC2-S3-FullAccess)

Run the following command to create the EC2-S3-FullAccess permission set for Alice, as shown in Figure 4.

Figure 4: Creating the permission set EC2-S3-FullAccess

Permission set for Frank Infosec (AuditAccess)

Run the following command to create the AuditAccess permission set for Frank, as shown in Figure 5.

Figure 5: Creating the permission set AuditAccess

Permission set for Frank Infosec (PowerUserAccess)

Run the following command to create the PowerUserAccess permission set for Frank, as shown in Figure 6.

Figure 6: Creating the permission set PowerUserAccess

Copy the permission set ARN from these responses, which you will need when you attach the managed policies.

Step 2: Assign policies to permission sets

In this step, you learn how to assign managed policies to the permission sets that you created in step 1.

Attach policies to the EC2-S3-FullAccess permission set

Run the following command to attach the AmazonEC2FullAccess AWS managed policy to the EC2-S3-FullAccess permission set, as shown in Figure 7.

Figure 7: Attaching the AWS managed policy AmazonEC2FullAccess to the EC2-S3-FullAccess permission set

Run the following command to attach the AmazonS3FullAccess AWS managed policy to the EC2-S3-FullAccess permission set, as shown in Figure 8.

Figure 8: Attaching the AWS managed policy AmazonS3FullAccess to the EC2-S3-FullAccess permission set

Attach a policy to the AuditAccess permission set

Run the following command to attach the SecurityAudit managed policy to the AuditAccess permission set that you created earlier, as shown in Figure 9.

Figure 9: Attaching the AWS managed policy SecurityAudit to the AuditAccess permission set

Attach a policy to the PowerUserAccess permission set

The following command is similar to the previous command; it attaches the PowerUserAccess managed policy to the PowerUserAccess permission set, as shown in Figure 10.

Figure 10: Attaching AWS managed policy PowerUserAccess to the PowerUserAccess permission set

In the next step, you assign users (Frank Infosec and Alice Developer) to their respective permission sets and assign permission sets to accounts.

Step 3: Assign permission sets to users and groups and grant access to AWS accounts

In this step, you assign the AWS SSO permission sets you created to users and groups and AWS accounts, to grant the required access for these users and groups on respective AWS accounts.

To assign access to an AWS account for a user or group, using a permission set you already created, you need the following:

- The principal ID (the ID for the user or group)

- The AWS account ID to which you need to assign this permission set

To obtain a user’s or group’s principal ID (UserID or GroupID), you need to use the AWS SSO Identity Store API. The AWS SSO Identity Store service enables you to retrieve all of your identities (users and groups) from AWS SSO. See AWS SSO Identity Store API for more details.

Use the first two commands shown here to get the principal IDs for the two users, Alice and Frank, based on their AWS SSO user names.

Alice’s user ID

Run the following command to get Alice’s user ID, as shown in Figure 11.

Figure 11: Retrieving Alice’s user ID

Frank’s user ID

Run the following command to get Frank’s user ID, as shown in Figure 12.

Figure 12: Retrieving Frank’s user ID

Note: To get the principal ID for a group, use the following command.

Assign the EC2-S3-FullAccess permission set to Alice in the ExampleOrgDev account

Run the following command to assign Alice access to the ExampleOrgDev account using the EC2-S3-FullAccess permission set. This will give Alice full access to Amazon EC2 and S3 services in the ExampleOrgDev account.

Note: When you call the CreateAccountAssignment API, AWS SSO automatically provisions the specified permission set on the account in the form of an IAM policy attached to the AWS SSO–created IAM role. This role is immutable: it’s fully managed by AWS SSO, and it cannot be deleted or changed by the user even if the user has full administrative rights on the account. If the permission set is subsequently updated, the corresponding IAM policies attached to roles in your accounts won’t be updated automatically. In this case, you will need to call ProvisionPermissionSet to propagate these updates.

Figure 13: Assigning the EC2-S3-FullAccess permission set to Alice on the ExampleOrgDev account

Assign the AuditAccess permission set to Frank Infosec in the ExampleOrgDev account

Run the following command to assign Frank access to the ExampleOrgDev account using the AuditAccess permission set.

Figure 14: Assigning the AuditAccess permission set to Frank on the ExampleOrgDev account

Assign the PowerUserAccess permission set to Frank Infosec in the ExampleOrgTest account

Run the following command to assign Frank access to the ExampleOrgTest account using the PowerUserAccess permission set.

Figure 15: Assigning the PowerUserAccess permission set to Frank on the ExampleOrgTest account

To view the permission sets provisioned on the AWS account, run the following command, as shown in Figure 16.

Figure 16: View the permission sets (AuditAccess and EC2-S3-FullAccess) assigned to the ExampleOrgDev account

To review the created resources in the AWS Management Console, navigate to the AWS SSO console. In the list of permission sets on the AWS accounts tab, choose the EC2-S3-FullAccess permission set. Under AWS managed policies, the policies attached to the permission set are listed, as shown in Figure 17.

Figure 17: Review the permission set in the AWS SSO console

To see the AWS accounts where the EC2-S3-FullAccess permission set is currently provisioned, navigate to the AWS accounts tab, as shown in Figure 18.

Figure 18: Review permission set account assignment in the AWS SSO console

Step 4: Audit access

In this step, you learn how to audit access assigned to your users and groups by using the AWS SSO account assignment API. In this example, you’ll start from a permission set, review the permissions (AWS managed policies or a custom policy) attached to the permission set, get the users and groups associated with the permission set, and see which AWS accounts the permission set is provisioned to.

List the IAM managed policies for the permission set

Run the following command to list the IAM managed policies that are attached to a specified permission set, as shown in Figure 19.

Figure 19: View the managed policies attached to the permission set

List the assignee of the AWS account with the permission set

Run the following command to list the assignee (the user or group with the respective principal ID) of the specified AWS account with the specified permission set, as shown in Figure 20.

Figure 20: View the permission set and the user or group attached to the AWS account

List the accounts to which the permission set is provisioned

Run the following command to list the accounts that are associated with a specific permission set, as shown in Figure 21.

Figure 21: View AWS accounts to which the permission set is provisioned
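The three audit lookups above can also be made from a script. The following is a minimal sketch rather than the post's own commands: it assumes you pass in a boto3 client created with `boto3.client("sso-admin")`, the function name `audit_permission_set` is hypothetical, and pagination (`NextToken`) is ignored for brevity.

```python
def audit_permission_set(sso_admin, instance_arn, permission_set_arn):
    """Collect the policies, accounts, and assignees for one permission set.

    `sso_admin` is assumed to be a boto3 "sso-admin" client; only the
    first page of each response is read in this sketch.
    """
    # Managed policies attached to the permission set.
    policies = sso_admin.list_managed_policies_in_permission_set(
        InstanceArn=instance_arn,
        PermissionSetArn=permission_set_arn,
    )["AttachedManagedPolicies"]

    # Accounts to which the permission set is provisioned.
    account_ids = sso_admin.list_accounts_for_provisioned_permission_set(
        InstanceArn=instance_arn,
        PermissionSetArn=permission_set_arn,
    )["AccountIds"]

    # Users and groups assigned in each of those accounts.
    assignees = {}
    for account_id in account_ids:
        response = sso_admin.list_account_assignments(
            InstanceArn=instance_arn,
            AccountId=account_id,
            PermissionSetArn=permission_set_arn,
        )
        assignees[account_id] = [
            (a["PrincipalType"], a["PrincipalId"])
            for a in response["AccountAssignments"]
        ]

    return {
        "policies": [p["Name"] for p in policies],
        "accounts": account_ids,
        "assignees": assignees,
    }
```

Taking the client as a parameter keeps the function easy to exercise with a stub before pointing it at a real AWS SSO instance.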

In this section of the post, we’ve illustrated how to create a permission set, assign a managed policy to the permission set, and grant access for AWS SSO users or groups to AWS accounts by using this permission set. In the next section, we’ll show you how to do the same using AWS CloudFormation.

Use the AWS SSO API through AWS CloudFormation

In this section, you learn how to use CloudFormation templates to automate the creation of permission sets, attach managed policies, and use permission sets to assign access for a particular user or group to AWS accounts.

Sign in to your AWS Management Console and create a CloudFormation stack by using the following CloudFormation template. For more information on how to create a CloudFormation stack, see Creating a stack on the AWS CloudFormation console.

When you create the stack, provide the following information for setting the example permission sets for Frank Infosec and Alice Developer, as shown in Figure 22:

- The Alice Developer and Frank Infosec user IDs

- The ExampleOrgDev and ExampleOrgTest account IDs

- The AWS SSO instance ARN

Then launch the CloudFormation stack.

Figure 22: User inputs to launch the CloudFormation template

AWS CloudFormation creates the resources that are shown in Figure 23.

Figure 23: Resources created from the CloudFormation stack

Cleanup

To delete the resources you created by using the AWS CLI, use these commands.

Run the following command to delete the account assignment.

After the account assignment is deleted, run the following command to delete the permission set.
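Because the permission set is deleted only after the account assignment is gone, a script has to wait for the first deletion to finish. The sketch below shows one way to order the two calls. It assumes a boto3 `sso-admin` client is passed in; the function name and the polling parameters are hypothetical.

```python
import time


def delete_assignment_then_permission_set(
    sso_admin, instance_arn, account_id, permission_set_arn,
    principal_type, principal_id, poll_seconds=2.0, max_polls=30,
):
    """Delete an account assignment, wait for it, then delete the permission set."""
    # Deleting an assignment is asynchronous: the call returns a request ID.
    status = sso_admin.delete_account_assignment(
        InstanceArn=instance_arn,
        TargetId=account_id,
        TargetType="AWS_ACCOUNT",
        PermissionSetArn=permission_set_arn,
        PrincipalType=principal_type,   # "USER" or "GROUP"
        PrincipalId=principal_id,
    )["AccountAssignmentDeletionStatus"]
    request_id = status["RequestId"]

    # Poll until the deletion leaves the IN_PROGRESS state.
    polls = 0
    while status["Status"] == "IN_PROGRESS":
        if polls >= max_polls:
            raise TimeoutError("account assignment deletion did not finish")
        time.sleep(poll_seconds)
        polls += 1
        status = sso_admin.describe_account_assignment_deletion_status(
            InstanceArn=instance_arn,
            AccountAssignmentDeletionRequestId=request_id,
        )["AccountAssignmentDeletionStatus"]

    if status["Status"] != "SUCCEEDED":
        raise RuntimeError(f"deletion ended with status {status['Status']}")

    # Only now is it safe to remove the permission set itself.
    sso_admin.delete_permission_set(
        InstanceArn=instance_arn,
        PermissionSetArn=permission_set_arn,
    )
```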

To delete the resources that you created by using the CloudFormation template, go to the AWS CloudFormation console. Select the stack you created, and then choose Delete. Deleting the CloudFormation stack cleans up the resources that were created.

Summary

In this blog post, we showed how to use the AWS SSO account assignment API to automate the deployment of permission sets, how to add managed policies to permission sets, and how to assign access for AWS users and groups to AWS accounts by using specified permission sets.

To learn more about the AWS SSO APIs available for you, see the AWS Single Sign-On API Reference Guide.

If you have feedback about this post, submit comments in the Comments section below. If you have questions about this post, start a new thread on the AWS SSO forum or contact AWS Support.

Want more AWS Security how-to content, news, and feature announcements? Follow us on Twitter.

SonicWall Zero-Day

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2021/02/sonicwall-zero-day.html

Hackers are exploiting a zero-day in SonicWall:

In an email, an NCC Group spokeswoman wrote: “Our team has observed signs of an attempted exploitation of a vulnerability that affects the SonicWall SMA 100 series devices. We are working closely with SonicWall to investigate this in more depth.”

In Monday’s update, SonicWall representatives said the company’s engineering team confirmed that the submission by NCC Group included a “critical zero-day” in the SMA 100 series 10.x code. SonicWall is tracking it as SNWLID-2021-0001. The SMA 100 series is a line of secure remote access appliances.

The disclosure makes SonicWall at least the fifth large company to report in recent weeks that it was targeted by sophisticated hackers. Other companies include network management tool provider SolarWinds, Microsoft, FireEye, and Malwarebytes. CrowdStrike also reported being targeted but said the attack wasn’t successful.

Neither SonicWall nor NCC Group said that the hack involving the SonicWall zero-day was linked to the larger hack campaign involving SolarWinds. Based on the timing of the disclosure and some of the details in it, however, there is widespread speculation that the two are connected.

The speculation is just that — speculation. I have no opinion in the matter. This could easily be part of the SolarWinds campaign, which targeted other security companies. But there are a lot of “highly sophisticated threat actors” — that’s how NCC Group described them — out there, and this could easily be a coincidence.

Were I working for a national intelligence organization, I would try to disguise my operations as being part of the SolarWinds attack.

EDITED TO ADD (2/9): SonicWall has patched the vulnerability.

The Rust language gets a foundation

Post Syndicated from original https://lwn.net/Articles/845437/rss

The newly formed Rust Foundation has announced its existence. “Today, on behalf of the Rust Core team, I’m excited to announce the Rust Foundation, a new independent non-profit organization to steward the Rust programming language and ecosystem, with a unique focus on supporting the set of maintainers that govern and develop the project. The Rust Foundation will hold its first board meeting tomorrow, February 9th, at 4pm CT. The board of directors is composed of 5 directors from our Founding member companies, AWS, Huawei, Google, Microsoft, and Mozilla, as well as 5 directors from project leadership, 2 representing the Core Team, as well as 3 project areas: Reliability, Quality, and Collaboration.” Mozilla has transferred its trademarks and domains for Rust over to the foundation.

[$] The burstable CFS bandwidth controller

Post Syndicated from original https://lwn.net/Articles/844976/rss

The kernel’s CFS bandwidth controller is an effective way of controlling just how much CPU time is available to each control group. It can keep processes from consuming too much CPU time and ensure that adequate time is available for all processes that need it. That said, it’s not entirely surprising that the bandwidth controller is not perfect for every workload out there. This patch set from Huaixin Chang aims to make it work better for bursty, latency-sensitive workloads.

Highlights: Kati Morton | Are U Okay? | Talks at Google

Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=Tt8gsoZJda8

Four stable kernels

Post Syndicated from original https://lwn.net/Articles/845427/rss

Stable kernels 5.10.14, 5.4.96, 4.19.174, and 4.14.220 have been released. They all contain important fixes and users should upgrade.

Security updates for Monday

Post Syndicated from original https://lwn.net/Articles/845426/rss

Security updates have been issued by Debian (chromium, gdisk, intel-microcode, privoxy, and wireshark), Fedora (mingw-binutils, mingw-jasper, mingw-SDL2, php, python-pygments, python3.10, wireshark, wpa_supplicant, and zeromq), Mageia (gdisk and tomcat), openSUSE (chromium, cups, kernel, nextcloud, openvswitch, RT kernel, and rubygem-nokogiri), SUSE (nutch-core), and Ubuntu (openldap, php-pear, and qemu).

Operating Lambda: Application design – Scaling and concurrency: Part 2

Post Syndicated from James Beswick original https://aws.amazon.com/blogs/compute/operating-lambda-application-design-scaling-and-concurrency-part-2/

In the Operating Lambda series, I cover important topics for developers, architects, and systems administrators who are managing AWS Lambda-based applications. This three-part series discusses application design for Lambda-based applications.

Part 1 shows how to work with Service Quotas, when to request increases, and how to architect with quotas in mind. This post covers scaling and concurrency and the different behaviors of on-demand and Provisioned Concurrency.

Scaling and concurrency in Lambda

Lambda is engineered to provide managed scaling in a way that does not rely upon threading or any custom engineering in your code. As traffic increases, Lambda increases the number of concurrent executions of your functions.

When a function is first invoked, the Lambda service creates an instance of the function and runs the handler method to process the event. After completion, the function remains available for a period of time to process subsequent events. If other events arrive while the function is busy, Lambda creates more instances of the function to handle these requests concurrently.

For an initial burst of traffic, your cumulative concurrency in a Region can reach between 500 and 3000 per minute, depending upon the Region. After this initial burst, functions can scale by an additional 500 instances per minute. If requests arrive faster than a function can scale, or if a function reaches maximum capacity, additional requests fail with a throttling error (status code 429).

All AWS accounts start with a default concurrency limit of 1000 per Region. This is a soft limit that you can increase by submitting a request in the AWS Support Center.

On-demand scaling example

In this example, a Lambda function receives 10,000 synchronous requests from Amazon API Gateway. The concurrency limit for the account is 10,000. The following shows four scenarios:

In each case, all of the requests arrive at the same time in the minute they are scheduled:

- All requests arrive immediately: 3000 requests are handled by new execution environments; 7000 are throttled.

- Requests arrive over 2 minutes: 3000 requests are handled by new execution environments in the first minute; the remaining 2000 are throttled. In minute 2, another 500 environments are created and the 3000 original environments are reused; 1500 are throttled.

- Requests arrive over 3 minutes: 3000 requests are handled by new execution environments in the first minute; the remaining 333 are throttled. In minute 2, another 500 environments are created and the 3000 original environments are reused; all requests are served. In minute 3, the remaining 3334 requests are served by warm environments.

- Requests arrive over 4 minutes: In minute 1, 2500 requests are handled by new execution environments; the same environments are reused in subsequent minutes to serve all requests.
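The arithmetic behind these four scenarios can be reproduced with a small model. This is a simplified sketch, not how the Lambda service is implemented: it assumes a burst limit of 3000 in the first minute, 500 additional environments per later minute, requests spread evenly across minutes (with any remainder in the last minute), and each request occupying one environment for its minute. The function name `simulate_on_demand` is hypothetical.

```python
def simulate_on_demand(total_requests, minutes, burst=3000, step=500,
                       account_limit=10_000):
    """Return a list of (served, throttled) tuples, one per minute."""
    base, remainder = divmod(total_requests, minutes)
    results = []
    for minute in range(minutes):
        # Spread requests evenly; the last minute takes any remainder.
        arrivals = base + (remainder if minute == minutes - 1 else 0)
        # Concurrency available: initial burst plus `step` per later minute,
        # capped by the account concurrency limit.
        capacity = min(account_limit, burst + step * minute)
        served = min(arrivals, capacity)
        results.append((served, arrivals - served))
    return results


for minutes in (1, 2, 3, 4):
    print(minutes, simulate_on_demand(10_000, minutes))
```

For the two-minute scenario, the model returns `[(3000, 2000), (3500, 1500)]`, matching the second bullet above.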

Provisioned Concurrency scaling example

The majority of Lambda workloads are asynchronous so the default scaling behavior provides a reasonable trade-off between throughput and configuration management overhead. However, for synchronous invocations from interactive workloads, such as web or mobile applications, there are times when you need more control over how many concurrent function instances are ready to receive traffic.

Provisioned Concurrency is a Lambda feature that prepares concurrent execution environments in advance of invocations. It can be used to address two issues. First, if expected traffic arrives more quickly than the default burst capacity, Provisioned Concurrency can ensure that your function is available to meet the demand. Second, if you have latency-sensitive workloads that require predictable double-digit millisecond latency, Provisioned Concurrency solves the typical cold start issues associated with default scaling.

Provisioned Concurrency is a configuration available for a specific published version or alias of a Lambda function. It does not rely on any custom code or changes to a function’s logic, and it’s compatible with features such as VPC configuration and Lambda layers. For more information on how Provisioned Concurrency optimizes performance for Lambda-based applications, watch this Tech Talk video.

Using the same scenarios with 10,000 requests, the function is configured with a Provisioned Concurrency of 7,000:

- In case #1, 7,000 requests are handled by the provisioned environments with no cold start. The remaining 3,000 requests are handled by new, on-demand execution environments.

- In cases #2-4, all requests are handled by provisioned environments in the minute when they arrive.
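The split between provisioned and on-demand environments in these cases comes down to simple arithmetic. The sketch below assumes, as case #1 implies, that on-demand burst capacity of 3000 is available on top of the 7,000 provisioned environments. The function name `split_provisioned` is hypothetical.

```python
def split_provisioned(arrivals, provisioned=7000, on_demand_burst=3000):
    """Split one minute's arrivals into (provisioned, on_demand, throttled)."""
    # Provisioned environments absorb traffic first, with no cold start.
    from_provisioned = min(arrivals, provisioned)
    overflow = arrivals - from_provisioned
    # Anything beyond that falls back to new on-demand environments.
    from_on_demand = min(overflow, on_demand_burst)
    return from_provisioned, from_on_demand, overflow - from_on_demand


print(split_provisioned(10_000))  # case #1: all requests in one minute
print(split_provisioned(5_000))   # case #2: 5,000 requests per minute
```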

Using service integrations and asynchronous processing

Synchronous requests from services like API Gateway require immediate responses. In many cases, these workloads can be rearchitected as asynchronous workloads. In this example, API Gateway uses a service integration to persist messages in an Amazon SQS queue durably. A Lambda function consumes these messages from the queue, and updates the status in an Amazon DynamoDB table. Another API endpoint provides the status of the request by querying the DynamoDB table.

API Gateway has a default throttle limit of 10,000 requests per second, which can be raised upon request. SQS standard queues support a virtually unlimited throughput of API actions such as SendMessage.

The Lambda function consuming the messages from SQS can control the speed of processing through a combination of two factors. The first is BatchSize, which is the number of messages received by each invocation of the function, and the second is concurrency. Provided there is still concurrency available in your account, the Lambda function is not throttled while processing messages from an SQS queue.

In asynchronous workflows, it’s not possible to pass the result of the function back through the invocation path. The original API Gateway call receives an acknowledgment that the message has been stored in SQS, which is returned to the caller. There are multiple mechanisms for returning the result to the caller. One uses a DynamoDB table, as shown, to store a transaction ID and status, which is then polled by the caller via another API Gateway endpoint until the work is finished. Alternatively, you can use webhooks via Amazon SNS or WebSockets via AWS IoT Core to return the result.
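The polling mechanism can be illustrated without any AWS services. In this sketch, an in-memory queue stands in for the SQS queue and a dictionary stands in for the DynamoDB table. The function names and the `PENDING`/`DONE` status values are assumptions made for illustration, and the "work" is just upper-casing a string.

```python
import queue
import uuid

message_queue = queue.Queue()   # stand-in for the SQS queue
status_table = {}               # stand-in for the DynamoDB table


def submit(payload):
    """First endpoint: persist the message and acknowledge immediately."""
    transaction_id = str(uuid.uuid4())
    status_table[transaction_id] = {"status": "PENDING", "result": None}
    message_queue.put((transaction_id, payload))
    return transaction_id


def worker_drain():
    """Consumer: process queued messages and record each outcome."""
    while not message_queue.empty():
        transaction_id, payload = message_queue.get()
        status_table[transaction_id] = {
            "status": "DONE",
            "result": payload.upper(),
        }


def get_status(transaction_id):
    """Second endpoint: the caller polls this until the work is finished."""
    return status_table[transaction_id]
```

The caller receives only the transaction ID from `submit` and learns the result later through `get_status`, which mirrors the two API endpoints described above.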

Using this asynchronous approach can make it much easier to handle unpredictable traffic with significant volumes. While it is not suitable for every use case, it can simplify scalability operations.

Reserved concurrency

Lambda functions in a single AWS account in one Region share the concurrency limit. If one function exceeds the concurrency limit, this prevents other functions from being invoked by the Lambda service. You can set reserved capacity for Lambda functions to ensure that they can be invoked even if the overall capacity has been exhausted. Reserved capacity has two effects on a Lambda function:

- The reserved capacity is deducted from the overall capacity for the AWS account in a given Region. The Lambda function always has the reserved capacity available exclusively for its own invocations.

- The reserved capacity restricts the maximum number of concurrent invocations for that function. Synchronous requests arriving in excess of the reserved capacity limit fail with a throttling error.

You can also use reserved capacity to throttle the rate of requests processed by your workload. For Lambda functions that are invoked asynchronously or using an internal poller, such as for S3, SQS, or DynamoDB integrations, reserved capacity limits how many requests are processed simultaneously. In this case, events are stored durably in internal queues until the Lambda function is available. This can help create a smoothing effect for handling spiky levels of traffic.

For example, a Lambda function receives messages from an SQS queue and writes to a DynamoDB table. It has a reserved concurrency of 10 with a batch size of 10 items. The SQS queue rapidly receives 1,000 messages. The Lambda function scales up to 10 concurrent instances, each processing 10 messages from the queue. While it takes longer to process the entire queue, this results in a consistent rate of write capacity units (WCUs) consumed by the DynamoDB table.
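The numbers in this example work out as follows. The sketch assumes every invocation receives a full batch and all invocations take the same time, so the queue drains in equal "waves". The function name `processing_waves` is hypothetical.

```python
import math


def processing_waves(messages, batch_size, reserved_concurrency):
    """Return (messages_per_wave, waves) needed to drain the queue."""
    # Each concurrent instance handles one batch per wave.
    per_wave = batch_size * reserved_concurrency
    return per_wave, math.ceil(messages / per_wave)


per_wave, waves = processing_waves(1000, batch_size=10, reserved_concurrency=10)
print(per_wave, waves)
```

With the values from the example, each wave handles 100 messages, so the 1,000 messages are processed in 10 waves rather than all at once, which is what keeps the write rate to DynamoDB steady.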

To learn more, read “Managing AWS Lambda Function Concurrency” and “Managing concurrency for a Lambda function”.

Conclusion

This post explains scaling and concurrency in Lambda and the different behaviors of on-demand and Provisioned Concurrency. It also shows how to use service integrations and asynchronous patterns in Lambda-based applications. Finally, I discuss how reserved concurrency works and how to use it in your application design.

Part 3 will discuss choosing and managing runtimes, networking and VPC configurations, and different invocation modes.

For more serverless learning resources, visit Serverless Land.

What is Zigbee and Z-Wave? Smart Home 2021

Post Syndicated from digiblurDIY original https://www.youtube.com/watch?v=HVq4tT6g41U

Congratulations to Cloudflare’s 2020 Partner Award Winners

Post Syndicated from Matthew Harrell original https://blog.cloudflare.com/2020-partner-awards/

We are privileged to share Cloudflare’s inaugural set of Partner Awards. These Awards recognize our partner companies and representatives worldwide who stood out this past year for their investments in acquiring technical expertise in our offerings, for delivering innovative applications and services built on Cloudflare, and for their commitment to customer success.

The unprecedented challenges in 2020 have reinforced how critical it is to have a secure, performant, and reliable Internet. Throughout these turbulent times, our partners have been busy innovating and helping organizations of all sizes and in various industries. By protecting and accelerating websites, applications, and teams with Cloudflare, our partners have helped these organizations adjust, seize new opportunities, and thrive.

Congratulations to each of our award winners. Cloudflare’s mission of helping build a better Internet is more important than ever. And our partners are more critical than ever to achieving our mission. Testifying to Cloudflare’s global reach, our honorees represent companies headquartered in 16 countries.

Cloudflare Partner of the Year Honorees, 2020

Worldwide MSP Partner of the Year: Rackspace Technology

Honors the top performing managed services provider (MSP) partner across Cloudflare’s three sales geographies: Americas, APAC, and EMEA.

Cloudflare Americas Partner Awards

Partner of the Year: Optiv Security

Honors the top performing partner that has demonstrated phenomenal sales achievement in 2020.

Technology Partner of the Year: Sumo Logic

Honors the technology alliance that has delivered stellar business outcomes and demonstrated continued commitment to our joint customers.

New Partner of the Year: GuidePoint Security

Honors the partner who, although new to the Cloudflare Partner Network in 2020, has already made substantial investments to grow our shared business.

Partner Systems Engineers (SEs) of the Year:

Honors the partner SEs who have demonstrated depth of knowledge and expertise in Cloudflare solutions through earned certifications and also outstanding delivery of customer service in the practical application of Cloudflare technology solutions to customers’ technical and business challenges.

- Kati Graf, Adapture

- Bill Franklin, AVANT

- Kyle Rohan, GuidePoint Security

- Manooch Hosseini, Optiv Security

- Stephen Semmelroth, Stratacore

Most Valuable Players (MVPs) of the Year:

Honors top achievers who not only provided stellar service to our joint customers, but also built new business value by tapping into the power of network, relationships, and ecosystems.

- Kati Graf, Adapture

- Bill Mione, GuidePoint Security

- Manooch Hosseini and Isaac Liu, Optiv

- Leo Perez, RKON

Cloudflare APAC Partner Awards

Distributor of the Year: Softdebut Co., Ltd

Honors the top performing distributor that has best represented Cloudflare and positioned partners to secure customer sales and growth revenue streams.

Technology Partner of the Year: Pacific Tech Pte Ltd

Honors the technology alliance that has delivered stellar business outcomes and demonstrated continued commitment to our joint customers.

Partner Systems Engineers (SEs) of the Year:

- Shim Chin Leong, Excer Global Sdn Bhd

- Alex Yang, Omni Intelligent Services, Inc.

- Eric Tung, Primary Guard Sdn Bhd

Honors the first three individuals who have achieved four key certifications and have demonstrated depth of knowledge and expertise in those fields.

Most Valuable Players (MVPs) of the Year:

- Eric Cheng, NC Services Limited

- Shripad Dhole, Wipro Limited

Honors top achievers who not only provided stellar service to our joint customers, but also built new business value by tapping into the power of network, relationships, and ecosystems.

Cloudflare EMEA Partner Awards

Partner of the Year: Safenames

Honors the top performing partner that has demonstrated phenomenal sales achievement in 2020.

Distributor of the Year: V-Valley

Honors the top performing distributor that has best represented Cloudflare and positioned partners to secure customer sales and growth revenue streams.

New Partner of the Year: Synopsis

Honors a new partner to the Cloudflare Partner Network this year that has already made substantial investments to grow our shared business.

Cloudflare Certification Champions: KUWAITNET, Origo, WideOps

Honors partner companies whose teams earned the highest total number of Cloudflare certifications.

Partner Systems Engineers (SEs) of the Year:

- Mika Peltonen, Ambientia

- Illia Melnikov, BAKOTECH

- Jobin Joseph and Varghese George, KUWAITNET

- Guðlaugur Garðar Eyþórsson, Origo

- Vahid Madi, Synopsis

- Roberto López Sánchez, V-Valley

Honors the partner SEs who have demonstrated depth of knowledge and expertise in Cloudflare solutions through earned certifications and also outstanding delivery of customer service in the practical application of Cloudflare technology solutions to customers’ technical and business challenges.

Are you a services or solutions provider interested in joining the Cloudflare Partner Network? Check out the short video below on our program and visit our partner portal for more information.

Zabbix 5 IT Infrastructure Monitoring Cookbook: Interview with the Co-Author

Post Syndicated from Jekaterina Petruhina original https://blog.zabbix.com/zabbix-5-it-infrastructure-monitoring-cookbook-interview-with-the-co-author/13439/

Active Zabbix community members Nathan Liefting and Brian van Baekel wrote a new book on Zabbix, sharing their years of monitoring experience. Nathan Liefting kindly agreed to share with us how the idea for Monitoring Cookbook was born and revealed the main topics covered.

Hi Nathan, congratulations on writing the book. Welcome to the Zabbix contributor community! First, introduce yourself. What is your experience in Zabbix?

Thank you, I’m very proud to have the opportunity to work with Brian van Baekel on this book and very grateful for the work our publisher Packt has put into it as well. My name is Nathan Liefting. I work for the company Opensource ICT Solutions, where I’m a full-time IT consultant and certified Zabbix Trainer.

My first introduction to Zabbix was back in 2016. We were in the process of upgrading a Zabbix 2 instance to the newly released Zabbix 3.0. As a Network Consultant, I was immediately very intrigued by the monitoring system and its capabilities in terms of customization compared to other monitoring solutions.

Since then, I’ve always worked with Zabbix, and when I was working for Managed Service Provider True in Amsterdam, I set up a new Zabbix setup to migrate from the old monitoring solution.

How did you have the idea for the book?

That’s a good question. You might know Patrik Uytterhoeven from Openfuture BV. He wrote the Zabbix 3 cookbook for Packt. Patrik was kind enough to recommend Brian and me to write the new Zabbix 5 cookbook, and from there on out, we started work on it.

Who is your reader? Who would benefit from the book primarily?

The book is about 350 pages, and of course, we can’t explain everything Zabbix has to offer in that page count. So we focus the cookbook on Zabbix beginners that would like to get on that intermediate level. We detail basic topics like data collection with different methods and more advanced topics like the Zabbix API and database partitioning.

I am convinced that the book contains a recipe for everyone that works with Zabbix, and it’s basically a foundation of knowledge that anyone could fall back on. See it as your starter field guide into professionally working with Zabbix.

Do readers need any prior skills in monitoring with Zabbix to master the new knowledge as efficiently as possible?

Definitely not. We detail everything from installation to how to monitor and more advanced topics. Start at chapter 1 with no knowledge about Zabbix whatsoever, and you can read the book. If you think a recipe is about something you already know, simply skip it and read the next one. We wrote the recipes to be as independent of each other as possible, so beginners and more advanced users can get used to the book.

Could you tell us about the content of the book? What information does it provide?

I would like to say everything is covered, but that would be a dream scenario. The book scratches the surface of almost everything you need to know to work professionally with Zabbix. If you know Zabbix, you know it’s possible to write 100 pages about triggers alone. Of course, this would be counterproductive for starters. We kept the recipes graspable for beginners, with valuable information for more advanced users.

Some topics we go over are:

- Zabbix setup and how to use it

- Setting up the different Zabbix monitoring item types

- Working with triggers and alerts

- Building structured templates according to best practice

- Visualizing data with graphs, dashboards, etc.

- Zabbix host discovery and low-level discovery

- Zabbix proxies

- Zabbix integrations with media like Microsoft Teams and Slack

- Zabbix API and custom integrations

- Upgrading components, database partitioning, and performance management

- Zabbix in the cloud

There are now many online resources about Zabbix – forums, blogs, YouTube channels. Why did you decide to opt for the print format?

I’m a big fan of sharing resources online, don’t get me wrong. But to me, there is nothing better than an old-fashioned book to have at the ready when I need it. I can read it, and at one point, I’m thinking, “Wait! I read something that could solve our problem”.

Printed media is not dead if you ask me. In my eyes, a book is still the best way to prepare yourself for any subject in IT. But even if you don’t like printed media, we offer an amazing collection of information in the old paper format as well as an eBook.

What was your Zabbix learning path? Which resources did you find the best to gather Zabbix knowledge?

Definitely Zabbix official courses. I loved those so much I couldn’t wait to get my trainer certification myself. Now that I have it, I’m providing the official training myself and sharing the Zabbix knowledge I’ve acquired over the years with others like in the book.

Of course, the amazing Zabbix community offers great ways to share knowledge, for example, the Zabbix blog. I used those as well, and even took templates found on Zabbix Share to reverse engineer them and see how people worked.

Where will the book be available to purchase?

You can find the book on Amazon here.

When you purchase the book, please leave a review, as this really helps us spread the word about the book. I will personally read every review, so I’d love to hear any feedback on the book to improve later revisions and new releases.

If you’re reading this after purchasing, thank you very much for the support. I hope you enjoy the work.

Do you have any further plans for new books?

I’ve just finished writing this book, and it has had a significant impact on my personal time. I will definitely consider writing another book, but for now, I’ll focus on sharing content for Opensource ICT Solutions and on my personal website. Besides IT engineering, I also like to create other content, like my photography work, which I share on this website.

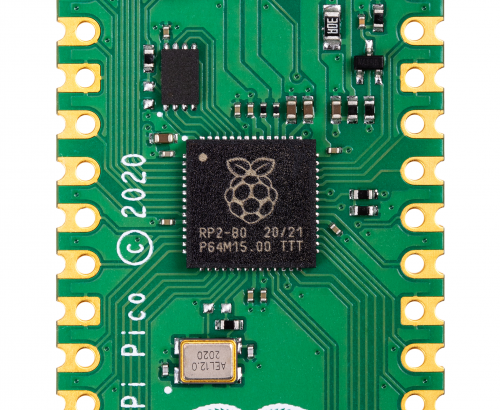

The journey to Raspberry Silicon

Post Syndicated from Liam Fraser original https://www.raspberrypi.org/blog/the-journey-to-raspberry-silicon/

When I first joined Raspberry Pi as a software engineer four and a half years ago, I didn’t know anything about chip design. I thought it was magic. This blog post looks at the journey to Raspberry Silicon and the design process of RP2040.

RP2040 has been in development since summer 2017. Chips are extremely complicated to design. In particular, the first chip you design requires you to design several fundamental components, which you can then reuse on future chips. The engineering effort was also diverted at some points in the project (for example to focus on the Raspberry Pi 4 launch).

Once the chip architecture is specified, the next stage of the project is the design and implementation, where hardware is described using a hardware description language such as Verilog. Verilog has been around since 1984 and, along with VHDL, has been used to design most chips in existence today. So what does Verilog look like, and how does it compare to writing software?

Suppose we have a C program that implements two wrapping counters:

    #include <stdint.h>

    void count_forever(void) {
        uint8_t i = 0;
        uint8_t j = 0;
        while (1) {
            i += 1;
            j += 1;
        }
    }

This C program will execute sequentially line by line, and the processor won’t be able to do anything else (unless it is interrupted) while running this code. Let’s compare this with a Verilog implementation of the same counter:

    module counter (
        input wire clk,
        input wire rst_n,
        output reg [7:0] i,
        output reg [7:0] j
    );

    always @ (posedge clk or negedge rst_n) begin
        if (~rst_n) begin
            // Counter is in reset so hold counter at 0
            i <= 8'd0;
            j <= 8'd0;
        end else begin
            i <= i + 8'd1;
            j <= j + 8'd1;
        end
    end

    endmodule

Verilog statements are executed in parallel on every clock cycle, so both i and j are updated at exactly the same time, whereas the C program increments i first, followed by j. Expanding on this idea, you can think of a chip as thousands of small Verilog modules like this, all executing in parallel.
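One way to picture "updated at exactly the same time" is as a two-phase step: every new value is computed from the old state, and then all of them are committed together. The Python sketch below is a simplified model of that behaviour, not a Verilog simulator. The function names and the dictionary-based state are assumptions for illustration, and `swap_edge` models a hypothetical `a <= b; b <= a;` pair that is not part of the counter module.

```python
def clock_edge(state, rst_n=True):
    """Model one rising clock edge of the counter module."""
    if not rst_n:
        # Active-low reset holds both counters at 0.
        return {"i": 0, "j": 0}
    # Every right-hand side reads the *old* state; the new values are
    # committed together, which is what `<=` expresses in Verilog.
    return {
        "i": (state["i"] + 1) & 0xFF,  # 8-bit register wraps after 255
        "j": (state["j"] + 1) & 0xFF,
    }


def swap_edge(state):
    """Model `a <= b; b <= a;`: both reads see the old values, so they swap."""
    return {"a": state["b"], "b": state["a"]}


state = clock_edge({"i": 0, "j": 0}, rst_n=False)
for _ in range(256):
    state = clock_edge(state)
print(state)  # both 8-bit counters have wrapped around after 256 edges
```

The swap case is where the difference from sequential code is most visible: written as two ordinary C assignments, `a = b; b = a;` would leave both variables holding the same value.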

A chip designer has several tools available to them to test the design. Testing/verification is the most important part of a chip design project: if a feature hasn’t been tested, then it probably doesn’t work. Two methods of testing used on RP2040 are simulators and FPGAs.

A simulator lets you simulate the entire chip design, and also some additional components. In RP2040’s case, we simulated RP2040 and an external flash chip, allowing us to run code from SPI flash in the simulator. That is the beauty of hardware design: you can design some hardware, then write some C code to test it, and then watch it all run cycle by cycle in the simulator.

The downside to simulators is that they are very slow. It can take several hours to simulate just one second of a chip. Simulation time can be reduced by testing blocks of hardware in isolation from the rest of the chip, but even then it is still slow. This is where FPGAs come in…

FPGAs (Field Programmable Gate Arrays) are chips that have reconfigurable logic, and can emulate the digital parts of a chip, allowing most of the logic in the chip to be tested.

FPGAs can’t emulate the analogue parts of a design, such as the resistors that are built into RP2040’s USB PHY. However, this can be approximated by using external hardware to provide analogue functionality. FPGAs often can’t run a design at full speed. In RP2040’s case, the FPGA was able to run at 48MHz (compared to 133MHz for the fully fledged chip). This is still fast enough to test everything we wanted and also develop software on.

FPGAs also have debug logic built into them. This allows the hardware designer to probe signals in the FPGA, and view them in a waveform viewer similar to the simulator above, although visibility is limited compared to the simulator.

The RP2040 bootrom was developed on FPGA, allowing us to test the USB boot mode, as well as executing code from SPI flash. In the image above, the SD card slot on the FPGA is wired up to SPI flash using an SD card-shaped flash board designed by Luke Wren.

In parallel to Verilog development, the implementation team is busy making sure that the Verilog we write can actually be made into a real chip. Synthesis takes a Verilog description of the chip and converts the logic described into logic cells defined by your library choice. RP2040 is manufactured by TSMC, and we used their standard cell library.

Chip manufacturing isn’t perfect, so design for test (DFT) logic is inserted, allowing the logic in RP2040 to be tested during production to make sure there are no manufacturing defects (short or open circuit connections, for example). Chips that fail this production test are thrown away (this is a tiny percentage: the yield for RP2040 is particularly high due to the small die size).
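As a toy illustration of the idea behind production test, the sketch below models a defect as a "stuck-at" fault, a common abstraction in which a shorted or open node is treated as permanently 0 or 1. The three-input circuit and the function names are invented for this example; it is not a description of RP2040's actual DFT logic.

```python
from itertools import product


def circuit(a: int, b: int, c: int, stuck=None) -> int:
    """Compute y = (a AND b) OR c.

    `stuck` models a manufacturing defect on the internal AND node:
    None means fault-free, 0 or 1 forces the node to that value.
    """
    node = a & b
    if stuck is not None:
        node = stuck
    return node | c


def detecting_patterns(stuck: int) -> list:
    """Input patterns whose output differs between a good and a faulty chip."""
    return [
        pattern
        for pattern in product((0, 1), repeat=3)
        if circuit(*pattern) != circuit(*pattern, stuck=stuck)
    ]


if __name__ == "__main__":
    print("stuck-at-0 detected by:", detecting_patterns(0))
    print("stuck-at-1 detected by:", detecting_patterns(1))
```

A tester only needs one detecting pattern per fault: it applies the pattern, compares the output with the expected value, and rejects the chip on a mismatch.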

After synthesis, the resulting netlist goes through a layout phase where the standard cells are physically placed and interconnect wires are routed. This is a synchronous design so clock trees are inserted, and timing is checked and fixed to make sure the design meets the clock speeds that we want. Once several design rules are checked, the layout can be exported to GDSII format, suitable for export to TSMC for manufacture.
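The timing check mentioned above can be sketched very roughly as a setup-slack calculation: a path passes if its delay fits inside one clock period. Only the 133MHz target comes from this post; the path names, delays, and setup time below are hypothetical, and real timing analysis also accounts for clock skew, hold checks, and process variation.

```python
# Simplified setup-slack check for a synchronous design.
# All path delays and the setup time are hypothetical illustration values.

TARGET_HZ = 133_000_000  # RP2040 clock speed quoted in the post


def clock_period_ns(freq_hz: float) -> float:
    """Clock period in nanoseconds for a given frequency in hertz."""
    return 1e9 / freq_hz


def setup_slack_ns(path_delay_ns: float, setup_ns: float,
                   freq_hz: float = TARGET_HZ) -> float:
    """Positive slack means the path meets timing; negative means it fails."""
    return clock_period_ns(freq_hz) - (path_delay_ns + setup_ns)


HYPOTHETICAL_PATHS_NS = {"alu_to_reg": 6.9, "bus_decode": 7.6}
HYPOTHETICAL_SETUP_NS = 0.2

if __name__ == "__main__":
    print(f"clock period: {clock_period_ns(TARGET_HZ):.3f} ns")
    for name, delay in HYPOTHETICAL_PATHS_NS.items():
        slack = setup_slack_ns(delay, HYPOTHETICAL_SETUP_NS)
        verdict = "meets timing" if slack >= 0 else "needs fixing"
        print(f"{name}: slack {slack:+.3f} ns ({verdict})")
```

At 133MHz the period is roughly 7.5ns, so in this made-up example the first path passes with a small margin and the second would have to be shortened or restructured.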

(In reality, the process of synthesis, layout, and DFT insertion is extremely complicated and takes several months to get right, so the description here is just a highly abbreviated overview of the entire process.)

Once silicon wafers are manufactured at TSMC they need to be put into a package. After that, the first chips are sent to Pi Towers for bring-up!

A bring-up board typically has a socket (in the centre) so you can test several chips with a single board. It also separates each power supply on the chip, so you can limit the current on first power-up to check there are no shorts. You don’t want the magic smoke to escape!

Once the initial bring-up was done, RP2040 was put through its paces in the lab: characterising its behaviour and seeing how it performs at temperature and voltage extremes.

Once the initial batch of RP2040s is signed off, we give the signal for mass production, ready for them to be put onto the Pico boards that you have in your hands today.

A chip is useless without detailed documentation. While RP2040 was making its way to mass production, we spent several months writing the SDK and excellent documentation you have available to you today.

The post The journey to Raspberry Silicon appeared first on Raspberry Pi.

Human Zoos and Ota Benga

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=j9a4U-F1qGE

NoxPlayer Android Emulator Supply-Chain Attack

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2021/02/noxplayer-android-emulator-supply-chain-attack.html

It seems to be the season of sophisticated supply-chain attacks.

This one is in the NoxPlayer Android emulator:

ESET says that based on evidence its researchers gathered, a threat actor compromised one of the company’s official API (api.bignox.com) and file-hosting servers (res06.bignox.com).

Using this access, hackers tampered with the download URL of NoxPlayer updates in the API server to deliver malware to NoxPlayer users.

[…]

Despite evidence implying that attackers had access to BigNox servers since at least September 2020, ESET said the threat actor didn’t target all of the company’s users but instead focused on specific machines, suggesting this was a highly-targeted attack looking to infect only a certain class of users.

Until today, and based on its own telemetry, ESET said it spotted malware-laced NoxPlayer updates being delivered to only five victims, located in Taiwan, Hong Kong, and Sri Lanka.

I don’t know if there are actually more supply-chain attacks occurring right now. More likely is that they’ve been happening for a while, and we have recently become more diligent about looking for them.

Assets worth over a billion leva are linked to Bulgaria: OpenLux reveals Luxembourg’s financial secrets

Post Syndicated from Bivol Team original https://bivol.bg/openlux-start.html

What is in the safe of Luxembourg, the richest country in the EU per capita, located in the heart of the European Union and considered one of the biggest tax havens in…

Comic for 2021.02.08

Post Syndicated from Explosm.net original http://explosm.net/comics/5788/

New Cyanide and Happiness Comic

Kernel prepatch 5.11-rc7

Post Syndicated from original https://lwn.net/Articles/845339/rss

The 5.11-rc7 kernel prepatch is out for testing. “Anyway, this is hopefully the last rc for this release, unless some surprise comes along and makes a travesty of our carefully laid plans. It happens. Nothing hugely scary stands out, with the biggest single part of the patch being some new self-tests. In fact, about a quarter of the patch is documentation and selftests.”