Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=W8KToZRdvK4

FireEye Hacked

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2020/12/fireeye-hacked.html

FireEye was hacked by — they believe — “a nation with top-tier offensive capabilities”:

During our investigation to date, we have found that the attacker targeted and accessed certain Red Team assessment tools that we use to test our customers’ security. These tools mimic the behavior of many cyber threat actors and enable FireEye to provide essential diagnostic security services to our customers. None of the tools contain zero-day exploits. Consistent with our goal to protect the community, we are proactively releasing methods and means to detect the use of our stolen Red Team tools.

We are not sure if the attacker intends to use our Red Team tools or to publicly disclose them. Nevertheless, out of an abundance of caution, we have developed more than 300 countermeasures for our customers, and the community at large, to use in order to minimize the potential impact of the theft of these tools.

We have seen no evidence to date that any attacker has used the stolen Red Team tools. We, as well as others in the security community, will continue to monitor for any such activity. At this time, we want to ensure that the entire security community is both aware and protected against the attempted use of these Red Team tools. Specifically, here is what we are doing:

- We have prepared countermeasures that can detect or block the use of our stolen Red Team tools.

- We have implemented countermeasures into our security products.

- We are sharing these countermeasures with our colleagues in the security community so that they can update their security tools.

- We are making the countermeasures publicly available on our GitHub.

- We will continue to share and refine any additional mitigations for the Red Team tools as they become available, both publicly and directly with our security partners.

Consistent with a nation-state cyber-espionage effort, the attacker primarily sought information related to certain government customers. While the attacker was able to access some of our internal systems, at this point in our investigation, we have seen no evidence that the attacker exfiltrated data from our primary systems that store customer information from our incident response or consulting engagements, or the metadata collected by our products in our dynamic threat intelligence systems. If we discover that customer information was taken, we will contact them directly.

From the New York Times:

The hack was the biggest known theft of cybersecurity tools since those of the National Security Agency were purloined in 2016 by a still-unidentified group that calls itself the ShadowBrokers. That group dumped the N.S.A.’s hacking tools online over several months, handing nation-states and hackers the “keys to the digital kingdom,” as one former N.S.A. operator put it. North Korea and Russia ultimately used the N.S.A.’s stolen weaponry in destructive attacks on government agencies, hospitals and the world’s biggest conglomerates - at a cost of more than $10 billion.

The N.S.A.’s tools were most likely more useful than FireEye’s since the U.S. government builds purpose-made digital weapons. FireEye’s Red Team tools are essentially built from malware that the company has seen used in a wide range of attacks.

Russia is presumed to be the attacker.

Reuters article. Boing Boing post. Slashdot thread. Wired article.

Home Assistant How To – get Minimum and Maximum sensor values

Post Syndicated from BeardedTinker original https://www.youtube.com/watch?v=DX_PERJAA5c

Deprecating the __cfduid cookie

Post Syndicated from Sergi Isasi original https://blog.cloudflare.com/deprecating-cfduid-cookie/

Cloudflare is deprecating the __cfduid cookie. Starting on 10 May 2021, we will stop adding a “Set-Cookie” header on all HTTP responses. The last __cfduid cookies will expire 30 days after that.

We never used the __cfduid cookie for any purpose other than providing critical performance and security services on behalf of our customers. Although, we must admit, calling it something with “uid” in it really made it sound like it was some sort of user ID. It wasn’t. Cloudflare never tracks end users across sites or sells their personal data. However, we didn’t want there to be any questions about our cookie use, and we don’t want any customer to think they need a cookie banner because of what we do.

So why did we use the __cfduid cookie before, and why can we remove it now?

The primary use of the cookie is for detecting bots on the web. Malicious bots may disrupt a service that has been explicitly requested by an end user (through DDoS attacks) or compromise the security of a user’s account (e.g. through brute force password cracking or credential stuffing, among others). We use many signals to build machine learning models that can detect automated bot traffic. The presence and age of the cfduid cookie was just one signal in our models. So for our customers who benefit from our bot management products, the cfduid cookie is a tool that allows them to provide a service explicitly requested by the end user.

The value of the cfduid cookie is derived from a one-way MD5 hash of the end user’s IP address, date/time, user agent, hostname, and referring website, which means we can’t tie a cookie to a specific person. Still, as a privacy-first company, we thought: Can we find a better way to detect bots that doesn’t rely on collecting end user IP addresses?
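As an illustration of the one-way derivation described above, here is a minimal Python sketch. The field order, the `|` separator, the function name `cookie_token`, and the sample values are all assumptions made for this example; the post does not say how Cloudflare actually encodes or combines the fields.

```python
import hashlib


def cookie_token(ip, timestamp, user_agent, hostname, referrer):
    """Return a 32-character hex MD5 digest of the request attributes."""
    # Hypothetical encoding: the real field order and separator are not
    # given in the post, so "|" joined strings are an assumption.
    material = "|".join([ip, timestamp, user_agent, hostname, referrer])
    return hashlib.md5(material.encode("utf-8")).hexdigest()


# Illustrative values only (documentation IP range, made-up user agent).
token = cookie_token(
    "203.0.113.7",
    "2020-12-09T12:00:00Z",
    "ExampleBrowser/1.0",
    "example.com",
    "https://example.org/",
)
print(token)
```

The digest has a fixed length and changes completely when any input changes, so the token alone does not reveal the IP address or the other fields that went into it.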

For the past few weeks, we’ve been experimenting to see if it’s possible to run our bot detection algorithms without using this cookie. We’ve learned that it will be possible for us to transition away from using this cookie to detect bots. We’re giving notice of deprecation now to give our customers time to transition, while our bot management team works to ensure there’s no decline in quality of our bot detection algorithms after removing this cookie. (Note that some Bot Management customers will still require the use of a different cookie after April 1.)

While this is a small change, we’re excited about any opportunity to make the web simpler, faster, and more private.

Comic for 2020.12.09

Post Syndicated from Explosm.net original http://explosm.net/comics/5738/

New Cyanide and Happiness Comic

PennyLane on Braket + Progress Toward Fault-Tolerant Quantum Computing + Tensor Network Simulator

Post Syndicated from Jeff Barr original https://aws.amazon.com/blogs/aws/pennylane-on-braket-progress-toward-fault-tolerant-quantum-computing-tensor-network-simulator/

I first wrote about Amazon Braket last year and invited you to Get Started with Quantum Computing! Since that launch we have continued to push forward, and have added several important & powerful new features to Amazon Braket:

August 2020 – General Availability of Amazon Braket with access to quantum computing hardware from D-Wave, IonQ, and Rigetti.

September 2020 – Access to D-Wave’s Advantage Quantum Processing Unit (QPU), which includes more than 5,000 qubits and 15-way connectivity.

November 2020 – Support for resource tagging, AWS PrivateLink, and manual qubit allocation. The first two features make it easy for you to connect your existing AWS applications to the new ones that you build with Amazon Braket, and should help you to envision what a production-class cloud-based quantum computing application will look like in the future. The last feature is particularly interesting to researchers; from what I understand, certain qubits within a given piece of quantum computing hardware can have individual physical and connectivity properties that might make them perform somewhat better when used as part of a quantum circuit. You can read about Allocating Qubits on QPU Devices to learn more (this is somewhat similar to the way that a compiler allocates CPU registers to frequently used variables).

In my initial blog post I also announced the formation of the AWS Center for Quantum Computing adjacent to Caltech.

As I write this, we are in the Noisy Intermediate Scale Quantum (NISQ) era. This description captures the state of the art in quantum computers: each gate in a quantum computing circuit introduces a certain amount of accuracy-destroying noise, and the cumulative effect of this noise imposes some practical limits on the scale of the problems.

Update Time

We are working to address this challenge, as are many others in the quantum computing field. Today I would like to give you an update on what we are doing at the practical and the theoretical level.

Similar to the way that CPUs and GPUs work hand-in-hand to address large scale classical computing problems, the emerging field of hybrid quantum algorithms joins CPUs and QPUs to speed up specific calculations within a classical algorithm. This allows for shorter quantum executions that are less susceptible to the cumulative effects of noise and that run well on today’s devices.

Variational quantum algorithms are an important type of hybrid quantum algorithm. The classical code (in the CPU) iteratively adjusts the parameters of a parameterized quantum circuit, in a manner reminiscent of the way that a neural network is built by repeatedly processing batches of training data and adjusting the parameters based on the results of an objective function. The output of the objective function provides the classical code with guidance that helps to steer the process of tuning the parameters in the desired direction. Mathematically (I’m way past the edge of my comfort zone here), this is called differentiable quantum computing.
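To make the classical tuning loop concrete, here is a minimal sketch in plain Python that needs no quantum library. It simulates a one-qubit circuit (a single RY rotation applied to |0>), treats the expectation value of Z as the objective function, and estimates the gradient with the parameter-shift rule, which is a standard technique for gates of this kind but is my choice for the example and is not named in the post. The function names, starting angle, learning rate, and step count are all assumptions.

```python
import math


def expectation_z(theta):
    """Simulate RY(theta) applied to |0> and return the expectation value of Z."""
    amp0 = math.cos(theta / 2)  # amplitude of |0>
    amp1 = math.sin(theta / 2)  # amplitude of |1>
    return amp0 ** 2 - amp1 ** 2


def parameter_shift_gradient(theta):
    """Estimate d<Z>/d(theta) from two extra circuit evaluations."""
    shift = math.pi / 2
    return (expectation_z(theta + shift) - expectation_z(theta - shift)) / 2


def train(theta=0.1, learning_rate=0.4, steps=100):
    """Classical loop: repeatedly nudge the circuit parameter downhill."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta


theta_opt = train()
print(theta_opt, expectation_z(theta_opt))
```

On real hardware each call to `expectation_z` would be a batch of circuit executions on the QPU, while the loop in `train` would stay on the CPU.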

So, with this rather lengthy introduction, what are we doing?

First, we are making the PennyLane library available so that you can build hybrid quantum-classical algorithms and run them on Amazon Braket. This library lets you “follow the gradient” and write code to address problems in computational chemistry (by way of the included Q-Chem library), machine learning, and optimization. My AWS colleagues have been working with the PennyLane team to create an integrated experience when PennyLane is used together with Amazon Braket.

PennyLane is pre-installed in Braket notebooks and you can also install the Braket-PennyLane plugin in your IDE. Once you do this, you can train quantum circuits as you would train neural networks, while also making use of familiar machine learning libraries such as PyTorch and TensorFlow. When you use PennyLane on the managed simulators that are included in Amazon Braket, you can train your circuits up to 10 times faster by using parallel circuit execution.

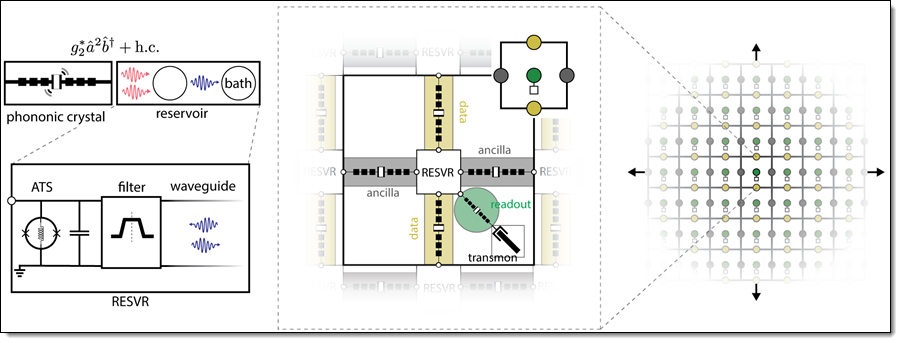

Second, the AWS Center for Quantum Computing is working to address the noise issue in two different ways: we are investigating ways to make the gates themselves more accurate, while also working on the development of more efficient ways to encode information redundantly across multiple qubits. Our new paper, Building a Fault-Tolerant Quantum Computer Using Concatenated Cat Codes, speaks to both of these efforts. While not light reading, the 100+ page paper proposes the construction of a 2-D grid of micron-scale electro-acoustic qubits that are coupled via superconducting circuits.

Interestingly, this proposed qubit design was used to model a Toffoli gate, and then tested via simulations that ran for 170 hours on c5.18xlarge instances. In a very real sense, the classical computers are being used to design and then simulate their future quantum companions.

The proposed hybrid electro-acoustic qubits are far smaller than what is available today, and also offer a > 10x reduction in overhead (measured in the number of physical qubits required per error-corrected qubit and the associated control lines). In addition to working on the experimental development of this architecture based around hybrid electro-acoustic qubits, the AWS CQC team will also continue to explore other promising alternatives for fault-tolerant quantum computing to bring new, more powerful computing resources to the world.

And third, we are expanding the choice of managed simulators that are available on Amazon Braket. In addition to the state vector simulator (which can simulate up to 34 qubits), you can use the new tensor network simulator that can simulate up to 50 qubits for certain circuits. This simulator builds a graph representation of the quantum circuit and uses the graph to find an optimized way to process it.
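A quick calculation shows why full state vector simulation runs out of room in the mid-30s of qubits. An n-qubit state vector holds 2^n complex amplitudes. The sketch below assumes 16 bytes per amplitude (two 64-bit floats); the post does not say what precision or memory layout the Braket simulator uses, so treat the numbers as an order-of-magnitude guide.

```python
GIB = 2 ** 30


def state_vector_bytes(num_qubits, bytes_per_amplitude=16):
    """Memory needed to hold every amplitude of an n-qubit state vector."""
    # Assumption: 16 bytes per amplitude (complex number, two 64-bit floats).
    return (2 ** num_qubits) * bytes_per_amplitude


for n in (30, 34, 50):
    print(n, "qubits:", state_vector_bytes(n) // GIB, "GiB")
```

Under that assumption each additional qubit doubles the requirement, which is why a simulator that exploits circuit structure instead of storing the full vector can reach more qubits for certain circuits.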

Help Wanted

If you are ready to help us to push the state of the art in quantum computing, take a look at our open positions. We are looking for Quantum Research Scientists, Software Developers, Hardware Developers, and Solutions Architects.

Time to Learn

It is still Day One (as we often say at Amazon) when it comes to quantum computing, and now is the time to learn more and to get some experience with it. Be sure to check out the Braket Tutorials repository and let me know what you think.

— Jeff;

PS – If you are ready to start exploring ways that you can put quantum computing to work in your organization, be sure to take a look at the Amazon Quantum Solutions Lab.

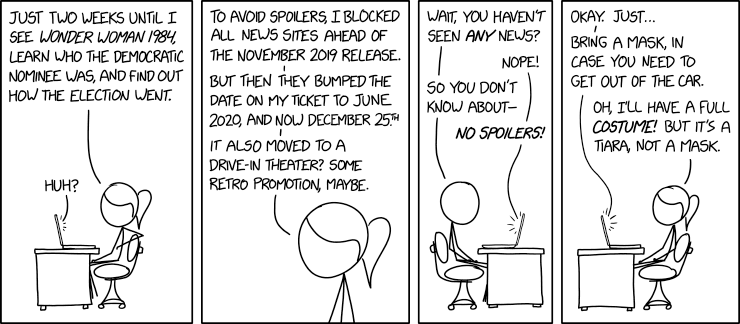

Wonder Woman 1984

Post Syndicated from original https://xkcd.com/2396/

New for Amazon CodeGuru – Python Support, Security Detectors, and Memory Profiling

Post Syndicated from Danilo Poccia original https://aws.amazon.com/blogs/aws/new-for-amazon-codeguru-python-support-security-detectors-and-memory-profiling/

Amazon CodeGuru is a developer tool that helps you improve your code quality and has two main components:

- CodeGuru Reviewer uses program analysis and machine learning to detect potential defects that are difficult to find in your code and offers suggestions for improvement.

- CodeGuru Profiler collects runtime performance data from your live applications, and provides visualizations and recommendations to help you fine-tune your application performance.

Today, I am happy to announce three new features:

- Python Support for CodeGuru Reviewer and Profiler (Preview) – You can now use CodeGuru to improve applications written in Python. Before this release, CodeGuru Reviewer could analyze Java code, and CodeGuru Profiler supported applications running on a Java virtual machine (JVM).

- Security Detectors for CodeGuru Reviewer – A new set of detectors for CodeGuru Reviewer to identify security vulnerabilities and check for security best practices in your Java code.

- Memory Profiling for CodeGuru Profiler – A new visualization of memory retention per object type over time. This makes it easier to find memory leaks and optimize how your application is using memory.

Let’s see these functionalities in more detail.

Python Support for CodeGuru Reviewer and Profiler (Preview)

Python Support for CodeGuru Reviewer is available in Preview and offers recommendations on how to improve the Python code of your applications in multiple categories such as concurrency, data structures and control flow, scientific/math operations, error handling, using the standard library, and of course AWS best practices.

You can now also use CodeGuru Profiler to collect runtime performance data from your Python applications and get visualizations to help you identify how code is running on the CPU and where time is consumed. In this way, you can detect the most expensive lines of code of your application. Focusing your tuning activities on those parts helps you reduce infrastructure cost and improve application performance.

Let’s see the CodeGuru Reviewer in action with some Python code. When I joined AWS eight years ago, one of the first projects I created was a Filesystem in Userspace (FUSE) interface to Amazon Simple Storage Service (S3) called yas3fs (Yet Another S3-backed File System). It was inspired by the more popular s3fs-fuse project but rewritten from scratch to implement a distributed cache synchronized by Amazon Simple Notification Service (SNS) notifications (now, thanks to the many contributors, it’s using S3 event notifications). It was also a good excuse for me to learn more about Python programming and S3. It’s a personal project that at the time I made available as open source. Today, if you need a shared file system, you can use Amazon Elastic File System (EFS).

In the CodeGuru console, I associate the yas3fs repository. You can associate repositories from GitHub, including GitHub Enterprise Cloud and GitHub Enterprise Server, Bitbucket, or AWS CodeCommit.

After that, I can get a code review from CodeGuru in two ways:

- Automatically, when I create a pull request. This is a great way to use it as you and your team are working on a code base.

- Manually, creating a repository analysis to get a code review for all the code in one branch. This is useful to start using CodeGuru with an existing code base.

Since I just associated the whole repository, I go for a full analysis and write down the branch name to review (apologies, I was still using master at the time, now I use main for new projects).

After a few minutes, the code review is completed, and there are 14 recommendations. Not bad, but I can definitely improve the code. Here are a few of the recommendations I get. I was using exceptions and global variables too much at the time.

Security Detectors for CodeGuru Reviewer

The new CodeGuru Reviewer Security Detector uses automated reasoning to analyze all code paths and find potential security issues deep in your Java code, even ones that span multiple methods and files and that may involve multiple sequences of operations. To build this detector, we used learning and best practices from Amazon’s 20+ years of experience.

The Security Detector is also identifying security vulnerabilities in the top 10 Open Web Application Security Project (OWASP) categories, such as weak hash encryption.

If the security detector discovers an issue, it offers a suggested remediation along with an explanation. In this way, it’s much easier to follow security best practices for AWS APIs, such as those for AWS Key Management Service (KMS) and Amazon Elastic Compute Cloud (EC2), and for common Java cryptography and TLS/SSL libraries.

With help from the security detector, security engineers can focus on architectural and application-specific security best-practices, and code reviewers can focus their attention on other improvements.

Memory Profiling for CodeGuru Profiler

For applications running on a JVM, CodeGuru Profiler can now show the Heap Summary, a consolidated view of memory retention during a time frame, tracking both overall sizes and number of objects per object type (such as String, int, char[], and custom types). These metrics are presented in a timeline graph, so that you can easily spot trends and peaks of memory utilization per object type.

Here are a couple of scenarios where this can help:

Memory Leaks – A constantly growing memory utilization curve for one or more object types may indicate a leak (intended here as unnecessary retention of memory objects by the application), possibly leading to out-of-memory errors and application crashes.

Memory Optimizations – Having a breakdown of memory utilization per object type is a step beyond traditional memory utilization monitoring, based solely on JVM-level metrics like total heap usage. By knowing that an unexpectedly high amount of memory has been associated with a specific object type, you can focus your analysis and optimization efforts on the parts of your application that are responsible for allocating and referencing objects of that type.

For example, here is a graph showing how memory is used by a Java application over an interval of time. Apart from the total capacity available and the used space, I can see how memory is being used by some specific object types, such as byte[], java.lang.UUID, and the entries of a java.util.LinkedHashMap. The continuous growth over time of the memory retained by these object types is suspicious. There is probably a memory leak I have to investigate.
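The kind of trend described above can be approximated with a simple check on the per-type timeline. The sketch below is not how CodeGuru Profiler analyzes data; it is a hypothetical helper that fits a least-squares line to evenly spaced samples and flags object types whose retained memory grows by at least 5% of its mean per sampling interval. The function names, the threshold, and the sample numbers are all made up for illustration.

```python
def growth_slope(samples):
    """Least-squares slope of evenly spaced samples (units per interval)."""
    n = len(samples)
    if n < 2:
        return 0.0
    mean_x = (n - 1) / 2
    mean_y = sum(samples) / n
    cov = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(samples))
    var = sum((i - mean_x) ** 2 for i in range(n))
    return cov / var


def suspected_leaks(retained_by_type, min_relative_growth=0.05):
    """Return object types whose retained memory grows steadily over the window."""
    suspects = []
    for type_name, samples in retained_by_type.items():
        if not samples:
            continue
        mean = sum(samples) / len(samples)
        if mean > 0 and growth_slope(samples) / mean >= min_relative_growth:
            suspects.append(type_name)
    return suspects


# Hypothetical retained sizes (MB) sampled at regular intervals.
heap_summary = {
    "byte[]": [100, 140, 185, 230, 270, 320],
    "java.lang.UUID": [10, 14, 19, 22, 27, 31],
    "java.lang.String": [50, 52, 49, 51, 50, 50],
}
suspects = suspected_leaks(heap_summary)
print(suspects)
```

A flagged type is only a starting point: steady growth can also come from a cache that is still warming up, so the allocation sites still need to be inspected.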

In the table just below, I have a longer list of object types allocating memory on the heap. The first three are selected and for that reason are shown in the graph above. Here, I can inspect other object types and select them to see their memory usage over time. It looks like the three I already selected are the ones with more risk of being affected by a memory leak.

Available Now

These new features are available today in all regions where Amazon CodeGuru is offered. For more information, please see the AWS Regional Services table.

There are no pricing changes for Python support, security detectors, and memory profiling. You pay for what you use without upfront fees or commitments.

Learn more about Amazon CodeGuru and start using these new features today to improve the code quality of your applications.

— Danilo

GNU Autoconf 2.70 released

Post Syndicated from original https://lwn.net/Articles/839395/rss

GNU Autoconf 2.70 is out. “Noteworthy changes include support for the 2011 revisions of the C and C++ standards, support for reproducible builds, improved support for cross-compilation, improved compatibility with current compilers and shell utilities, more efficient generated shell code, and many bug fixes.” See this article for more information on what has been happening with Autoconf.


[$] Fedora and its editions

Post Syndicated from original https://lwn.net/Articles/839214/rss

Fedora has long had Workstation and Server editions and, back in August, added an edition for Internet of Things (IoT) devices. Those editions target different use cases for the distribution, as does the CoreOS “spin” (or “emerging edition”), which targets cloud and Kubernetes deployments. A proposal to elevate Fedora CoreOS to a full edition as part of Fedora 34 was recently discussed on the Fedora devel mailing list. As part of that, what it means for a distribution to be part of Fedora was discussed as well.

Oblivious DNS-over-HTTPS

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2020/12/oblivious-dns-over-https.html

This new protocol, called Oblivious DNS-over-HTTPS (ODoH), hides the websites you visit from your ISP.

Here’s how it works: ODoH wraps a layer of encryption around the DNS query and passes it through a proxy server, which acts as a go-between the internet user and the website they want to visit. Because the DNS query is encrypted, the proxy can’t see what’s inside, but acts as a shield to prevent the DNS resolver from seeing who sent the query to begin with.
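The split of knowledge between proxy and resolver can be modeled in a few lines of Python. This is a toy sketch under loud assumptions: real ODoH encrypts the query to the resolver's public key using HPKE, whereas this example substitutes a pre-shared key and a SHA-256 based XOR keystream that is not secure and exists only to make the message flow runnable with the standard library. The class names, IP addresses, and record table are hypothetical.

```python
import hashlib
import secrets

RECORDS = {"example.com": "192.0.2.10"}  # hypothetical zone data


def toy_cipher(key, nonce, data):
    """Toy XOR keystream (NOT secure); stands in for ODoH's HPKE encryption."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream.extend(hashlib.sha256(key + nonce + counter.to_bytes(4, "big")).digest())
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


class Target:
    """The DNS resolver: can decrypt queries but only ever talks to the proxy."""

    def __init__(self, key):
        self.key = key
        self.log = []  # (sender IP, query) pairs the resolver observes

    def resolve(self, sender_ip, nonce, sealed_query):
        query = toy_cipher(self.key, nonce, sealed_query).decode()
        self.log.append((sender_ip, query))
        answer = RECORDS.get(query, "NXDOMAIN")
        return toy_cipher(self.key, nonce + b"resp", answer.encode())


class Proxy:
    """The go-between: sees the client's IP but only opaque bytes."""

    def __init__(self, ip, target):
        self.ip = ip
        self.target = target
        self.log = []  # (client IP, ciphertext) pairs the proxy observes

    def forward(self, client_ip, nonce, sealed_query):
        self.log.append((client_ip, sealed_query))
        return self.target.resolve(self.ip, nonce, sealed_query)


def oblivious_lookup(name, client_ip, proxy, key):
    nonce = secrets.token_bytes(16)
    sealed = toy_cipher(key, nonce, name.encode())
    sealed_answer = proxy.forward(client_ip, nonce, sealed)
    return toy_cipher(key, nonce + b"resp", sealed_answer).decode()


key = secrets.token_bytes(32)  # assumption: pre-shared key instead of HPKE
target = Target(key)
proxy = Proxy("198.51.100.1", target)
answer = oblivious_lookup("example.com", "203.0.113.7", proxy, key)
print(answer, proxy.log, target.log)
```

After the lookup, the proxy's log holds the client address next to unreadable bytes, and the resolver's log holds the query next to the proxy's address. Neither party alone can link who asked to what was asked, which holds only as long as the proxy and resolver do not collude.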

IETF memo.

The paper:

Abstract: The Domain Name System (DNS) is the foundation of a human-usable Internet, responding to client queries for host-names with corresponding IP addresses and records. Traditional DNS is also unencrypted, and leaks user information to network operators. Recent efforts to secure DNS using DNS over TLS (DoT) and DNS over HTTPS (DoH) have been gaining traction, ostensibly protecting traffic and hiding content from on-lookers. However, one of the criticisms of DoT and DoH is brought to bear by the small number of large-scale deployments (e.g., Comcast, Google, Cloudflare): DNS resolvers can associate query contents with client identities in the form of IP addresses. Oblivious DNS over HTTPS (ODoH) safeguards against this problem. In this paper we ask what it would take to make ODoH practical? We describe ODoH, a practical DNS protocol aimed at resolving this issue by both protecting the client’s content and identity. We implement and deploy the protocol, and perform measurements to show that ODoH has comparable performance to protocols like DoH and DoT which are gaining widespread adoption, while improving client privacy, making ODoH a practical privacy enhancing replacement for the usage of DNS.

Slashdot thread.

Get up to 3x better price performance with Amazon Redshift than other cloud data warehouses

Post Syndicated from Eugene Kawamoto original https://aws.amazon.com/blogs/big-data/get-up-to-3x-better-price-performance-with-amazon-redshift-than-other-cloud-data-warehouses/

Since we announced Amazon Redshift in 2012, tens of thousands of customers have trusted us to deliver the performance and scale they need to gain business insights from their data. Amazon Redshift customers span all industries and sizes, from startups to Fortune 500 companies, and we work to deliver the best price performance for any use case. Earlier in 2020, we published a blog post about improved speed and scalability in Amazon Redshift. This includes optimizations such as dynamically adding cluster capacity when you need it with concurrency scaling, making sure you use cluster resources efficiently with automatic workload management (WLM), and automatically adjusting data layout, distribution keys, and query plans to provide optimal performance for a given workload. We also described how customers, including Codecademy, OpenVault, Yelp, and Nielsen, have taken advantage of Amazon Redshift RA3 nodes with managed storage to scale their cloud data warehouses and reduce costs.

In addition to improving performance and scale, we are constantly looking at how to also improve the price performance that Amazon Redshift provides. One of the ways we ensure that we provide the best value for customers is to measure the performance of Amazon Redshift and other cloud data warehouses regularly using queries derived from industry-standard benchmarks such as TPC-DS. We completed our most recent tests based on the TPC-DS benchmark in November using the latest version of the products available across the vendors tested at that time. For Amazon Redshift, this includes more than 15 new capabilities released this year prior to November, but not new capabilities announced during AWS re:Invent 2020.

Best Out-of-the-Box and Tuned Price Performance

The test completed in November showed that Amazon Redshift delivers up to three times better price performance out-of-the-box than other cloud data warehouses. The following chart illustrates these findings.

For this test, we ran all 99 queries from the TPC-DS benchmark against a 3 TB data set. We calculated price performance by multiplying the time required to run all queries in hours by the price per hour for each cloud data warehouse. We used clusters with comparable hardware characteristics for each data warehouse. We also used default settings for each cloud data warehouse, except we enabled encryption for all four services because it’s on for two by default, and we disabled result caching where applicable. The default settings allowed us to determine the price performance delivered with no manual tuning effort. We selected the best result out of three runs for each query in order to take advantage of optimizations provided by each service. Finally, to ensure an apples-to-apples comparison, we used public pricing, and compared price performance rather than performance alone. For Amazon Redshift specifically, we used on-demand pricing; Amazon Redshift Reserved Instance pricing provides up to a 60% discount vs. on-demand pricing.

These results show that Amazon Redshift provides the best price performance out-of-the-box, even for a comparatively small 3 TB dataset. This means that you can benefit from Amazon Redshift’s leading price performance from the start without manual tuning.

You can also take advantage of performance tuning techniques for Amazon Redshift to achieve even better results for your workloads. We repeated the benchmark test using tuning best practices provided by each cloud data warehouse vendor. After all cloud data warehouses are tuned, Amazon Redshift has 1.5 times better price performance than the nearest cloud data warehouse competitor, as shown in the following chart.

As with all benchmarks, transparency and reproducibility are crucial. For this reason, we have made the data and queries available on GitHub for anyone to use. See the README in GitHub for detailed instructions on re-running these benchmarks.

Tuned price performance improves as your data warehouse grows

One critical aspect of a data warehouse is how it scales as your data grows. Will you be paying more per TB as you add more data, or will your costs remain consistent and predictable? We work to make sure that Amazon Redshift delivers not only strong performance as your data grows, but also consistent price performance. We tested Amazon Redshift price performance using TPC-DS with 3 TB, 30 TB, and 100 TB datasets on three different cluster sizes. As shown in the following graph, Amazon Redshift tuned price performance improved (from $2.80 to $2.41 per TB per run) as the datasets grew. Tuning reduces the amount of network and disk I/O required for a given workload, and has varying impact depending on the combination of workload and cluster size.

In addition, as shown in the following table, Amazon Redshift out-of-the-box price performance is nearly the same ($4.80 to $5.01 per TB per run) for all three dataset sizes. This linear scaling of price performance across data size and cluster size, both out-of-the-box and tuned, makes sure that Amazon Redshift will scale predictably as your data and workloads grow.

Amazon Redshift TPC-DS benchmark results, November 2020

| Data set (TB) | Cluster | Out-of-box runtime (sec) | Out-of-box price per TB per run | Tuned runtime (sec) | Tuned price per TB per run |
| --- | --- | --- | --- | --- | --- |
| 3 | 10 node ra3.4xlarge | 1591 | $4.80 | 926 | $2.80 |
| 30 | 5 node ra3.16xlarge | 8291 | $5.01 | 4198 | $2.53 |
| 100 | 10 node ra3.16xlarge | 13343 | $4.83 | 6644 | $2.41 |
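The price-per-TB figures in the table above follow from the calculation described earlier: runtime in hours multiplied by the cluster's hourly price, then divided by the dataset size. The sketch below works through that arithmetic. The hourly node prices are an assumption on my part (on-demand rates of $3.26 for ra3.4xlarge and $13.04 for ra3.16xlarge); the post only says that public on-demand pricing was used and does not list the rates.

```python
# Assumed on-demand hourly node prices in USD (not stated in the post).
RA3_4XLARGE = 3.26
RA3_16XLARGE = 13.04


def price_per_tb_per_run(nodes, node_price_per_hour, runtime_sec, dataset_tb):
    """Cost of one full benchmark run, normalized by dataset size."""
    cluster_price_per_hour = nodes * node_price_per_hour
    run_cost = cluster_price_per_hour * runtime_sec / 3600
    return run_cost / dataset_tb


# (dataset TB, nodes, node price, out-of-box runtime sec, tuned runtime sec)
runs = [
    (3, 10, RA3_4XLARGE, 1591, 926),
    (30, 5, RA3_16XLARGE, 8291, 4198),
    (100, 10, RA3_16XLARGE, 13343, 6644),
]

for tb, nodes, price, out_of_box_sec, tuned_sec in runs:
    out_of_box = price_per_tb_per_run(nodes, price, out_of_box_sec, tb)
    tuned = price_per_tb_per_run(nodes, price, tuned_sec, tb)
    print(f"{tb} TB: out-of-box ${out_of_box:.2f}, tuned ${tuned:.2f}")
```

With these assumed rates the results round to the values in the table, which is a useful sanity check when re-running the benchmark on a different cluster size or pricing model.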

You can learn more about Amazon Redshift’s performance on large datasets in How Amazon Redshift powers large-scale analytics for Amazon.com. This AWS re:Invent 2020 session shows how Amazon.com is using Amazon Redshift to keep up with exploding data growth, and how you can upgrade your existing data warehouse workloads to RA3 nodes to get scale and performance at great value.

Up to 10x better query performance with AQUA

The new capabilities announced during AWS re:Invent 2020 include AQUA (Advanced Query Accelerator) for Amazon Redshift. AQUA is a new distributed and hardware-accelerated cache for Amazon Redshift that delivers up to 10x better query performance than other cloud data warehouses. AQUA takes a new approach to cloud data warehousing: it brings the compute to storage by doing a substantial share of data processing in-place on the innovative cache. In addition, it uses AWS-designed processors and a scale-out architecture to accelerate data processing beyond anything traditional CPUs can do today. AQUA’s preview is now open to all customers, and AQUA will be generally available in January 2021. You can learn more about AQUA and other new Amazon Redshift capabilities by joining What’s new with Amazon Redshift at AWS re:Invent 2020.

Price performance continues to improve

We’re investing to make sure Amazon Redshift continues to improve as your data warehouse needs grow. As noted earlier, these benchmark results reflect the latest version of Amazon Redshift as of November, 2020. This version includes more than 15 new features released earlier this year, such as distributed bloom filters, vectorized queries, and automatic WLM, but doesn’t include the benefits from new capabilities announced during AWS re:Invent 2020. You can join What’s new with Amazon Redshift at AWS re:Invent 2020 to learn more about the new capabilities.

Find the best price performance for your workloads

You can reproduce the results above using the data and queries available on GitHub.

Each workload has unique characteristics, so if you’re just getting started, a proof of concept is the best way to understand how Amazon Redshift performs for your requirements. When running your own proof of concept, it’s important that you focus on proper cluster sizing and the right metrics—query throughput (number of queries per hour) and price performance. You can make a data-driven decision by requesting assistance with a proof of concept or working with a system integration and consulting partner.

If you’re an existing Amazon Redshift customer, connect with us for a free optimization session and briefing on the new features announced at AWS re:Invent 2020.

To stay up-to-date with the latest developments in Amazon Redshift, subscribe to the What’s New in Amazon Redshift RSS feed.

About the Authors

Eugene Kawamoto is a director of product management for Amazon Redshift. Eugene leads the product management and database engineering teams at AWS. He has been with AWS for ~8 years supporting analytics and database services both in Seattle and in Tokyo. In his spare time, he likes running trails in Seattle, loves finding new temples and shrines in Kyoto, and enjoys exploring his travel bucket list.

Stefan Gromoll is a Senior Performance Engineer with Amazon Redshift where he is responsible for measuring and improving Redshift performance. In his spare time, he enjoys cooking, playing with his three boys, and chopping firewood.

How to protect a self-managed DNS service against DDoS attacks using AWS Global Accelerator and AWS Shield Advanced

Post Syndicated from Chido Chemambo original https://aws.amazon.com/blogs/security/how-to-protect-a-self-managed-dns-service-against-ddos-attacks-using-aws-global-accelerator-and-aws-shield-advanced/

In this blog post, I show you how to improve the distributed denial of service (DDoS) resilience of your self-managed Domain Name System (DNS) service by using AWS Global Accelerator and AWS Shield Advanced. You can use those services to incorporate some of the techniques used by Amazon Route 53 to protect against DDoS attacks.

DNS routes users to your application by quickly translating a human-readable domain name to a machine-readable IP address. When protecting the availability of your application against DDoS attacks, it’s important to consider every part of the stack, including domain name resolution. The recommended best practice is to create hosted zones on Route 53, a scalable, highly available DNS service that’s protected against large DDoS attacks and query floods. Route 53 uses anycast routing to serve DNS queries from more than 150 edge locations around the globe. With anycast routing, DNS queries are served from locations that are closer to your users and the globally distributed DDoS mitigation capacity of Amazon Web Services (AWS) reduces the impact of attacks.

Optionally, you can also build your own DNS service on Amazon Elastic Compute Cloud (Amazon EC2). For example, you can run your own proprietary DNS server to take advantage of custom features that you wrote to integrate with an existing DNS service that isn’t running on AWS. When you register a domain name, you’re usually required to provide at least two name servers that can respond to queries from your users. It’s possible to build a DNS service on only two instances, but that provides limited DDoS resilience.

Solution overview

To protect your self-managed DNS service using this solution, you need a strong understanding of DNS and how to operate a distributed, self-managed DNS service on Amazon EC2. This solution improves upon an existing self-managed DNS service by significantly enhancing its ability to withstand DDoS attacks. There are two components that you add to your application:

- You use Global Accelerator to provide your application with two static IP addresses that act as a fixed entry point to Amazon EC2 instances in multiple AWS Regions. Global Accelerator uses anycast to route your traffic to a point of entry close to the source of the traffic. In addition to providing availability and performance benefits, this gives you access to global DDoS mitigation capacity through AWS.

- You use Shield Advanced to monitor the availability of your application and automatically engage the AWS Shield Response Team (SRT) if its availability is affected by a DDoS attack. When you associate a Route 53 health check to your protected resources, Shield Advanced uses the health of the application as an input for detection and as a signal to SRT to contact your operations center when needed. You can also engage with SRT to write custom mitigations for your application. For your self-managed DNS service use case, this can include mitigations like DNS packet validation and suspicion scoring that gives a higher priority to queries that are more likely to be legitimate traffic for your application.

As part of this solution, you will build a DNS canary that uses Amazon CloudWatch to update the status of a Route 53 health check if your self-managed DNS service stops responding to queries. An example architecture using Amazon EC2 based DNS behind Global Accelerator and Shield is shown in figure 1.

Figure 1: Amazon EC2 based DNS behind Global Accelerator and Shield

Create and configure an accelerator

To begin, create an accelerator and add your existing DNS servers as endpoints. The newly created accelerator will receive queries and forward them to your DNS service.

To create and configure an accelerator

Step 1: Create an accelerator

- Navigate to the AWS Global Accelerator dashboard.

- Choose Create accelerator.

- Enter a name for your accelerator.

- Choose Next.

Step 2: Add listeners

Since DNS uses both TCP and UDP protocols, you must create separate listeners to handle requests for each protocol.

At the Add Listeners step, enter the following:

- Ports: 53

- Protocol: TCP

- Client affinity: None

Choose Add listener again to add the UDP listener. Enter the following:

- Ports: 53

- Protocol: UDP

- Client affinity: None

- Choose Next

To learn more about the different options available in this step, see To create a listener in Getting started with AWS Global Accelerator.
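For readers who script this step instead of using the console, here is a minimal sketch of the two listener definitions. It only builds the request dictionaries; the field names follow the Global Accelerator `CreateListener` API, while the function name and the accelerator ARN in the usage note are hypothetical.

```python
import uuid


def build_listener_requests(accelerator_arn):
    """Return one CreateListener request per DNS transport protocol."""
    requests = []
    for protocol in ("TCP", "UDP"):
        requests.append({
            "AcceleratorArn": accelerator_arn,
            # DNS uses port 53 for both TCP and UDP.
            "PortRanges": [{"FromPort": 53, "ToPort": 53}],
            "Protocol": protocol,
            "ClientAffinity": "NONE",
            # A unique token makes a retried request safe to repeat.
            "IdempotencyToken": str(uuid.uuid4()),
        })
    return requests
```

Each dictionary could then be passed as keyword arguments to the boto3 Global Accelerator client, for example `client.create_listener(**request)`. Note that the Global Accelerator API is served from the us-west-2 Region, so the client is typically created with `region_name="us-west-2"` regardless of where your DNS instances run.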

Step 3: Add endpoint groups

Starting with the TCP listener, enter the following settings:

- Region: Choose a Region that your DNS instances are located in, for example, us-east-1.

- Traffic dial: 100

- If you have additional DNS instances in another AWS Region, choose Add endpoint group and enter the Region and Traffic dial settings again for that Region.

- Repeat the preceding steps to add endpoint groups for the UDP listener, and then choose Next.

To learn more about the different options available in this step, for example, Traffic dial, see Add endpoint groups in Getting started with AWS Global Accelerator.

Step 4: Add endpoints

Starting with the TCP listener, enter the following in the form boxes for each Region specified in the previous step:

- Endpoint type: Select EC2 instance from the drop-down list.

- Endpoint: Select a DNS instance from the drop-down list.

- Weight: 128

If you have additional DNS instances in the Region, choose Add endpoint and repeat the preceding steps, but select a DNS instance that hasn’t been added as an endpoint.

Repeat all of the preceding steps for the UDP listener, then choose Create accelerator.

To learn more about the different options available in this step, see Add endpoints in Getting started with AWS Global Accelerator.
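Steps 3 and 4 combine into one endpoint group per listener and Region. The sketch below builds those definitions as plain dictionaries, using the traffic dial of 100 and the weight of 128 from the steps above. Field names follow the `CreateEndpointGroup` API; the helper name, listener ARNs, and instance IDs are hypothetical.

```python
import uuid


def build_endpoint_group_requests(listener_arns, instances_by_region,
                                  weight=128, traffic_dial=100):
    """Return one CreateEndpointGroup request per (listener, Region) pair."""
    requests = []
    for listener_arn in listener_arns:
        for region, instance_ids in instances_by_region.items():
            requests.append({
                "ListenerArn": listener_arn,
                "EndpointGroupRegion": region,
                "TrafficDialPercentage": traffic_dial,
                # Every DNS instance in the Region gets the same weight.
                "EndpointConfigurations": [
                    {"EndpointId": instance_id, "Weight": weight}
                    for instance_id in instance_ids
                ],
                "IdempotencyToken": str(uuid.uuid4()),
            })
    return requests
```

With a TCP listener, a UDP listener, and DNS instances in two Regions, this yields four requests, each of which could be passed to `client.create_endpoint_group(**request)` on a boto3 Global Accelerator client.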

Step 5: Verification

When you choose the Create accelerator button, you’re redirected to a Global Accelerator console page that lists all the accelerators in your account. On this page, you can view the global IPs and DNS name allocated to your newly created accelerator, in addition to the current status.

Wait until the status of the accelerator changes to Deployed before proceeding with any tests.

Configure Shield Advanced and Shield Advanced proactive engagement

Protect your accelerator with Shield Advanced, monitor the health of your application, and configure proactive engagement. When you turn on proactive engagement, the SRT will directly contact you if an Amazon Route 53 health check associated with your protected resource becomes unhealthy during an event that’s detected by Shield Advanced.

To configure proactive engagement

Step 1: Create a Route 53 health check

If you already have a Route 53 health check that monitors the health of your DNS service, you can proceed to step 2 of this section. If you don’t yet have a health check, you can use this AWS CloudFormation template to create one. The template will:

- Create a Lambda function that queries your DNS server through the accelerator global IPs. This function posts metrics to CloudWatch to indicate whether the query was successful or not.

- Create a CloudWatch alarm that will detect when DNS queries fail.

- Create a Route 53 health check that tracks the CloudWatch alarm and changes status to unhealthy when the alarm changes to the Alarm state.
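To make the canary idea concrete, here is a minimal sketch of the kind of probe such a Lambda function could run. It is not the code from the CloudFormation template, which this post does not show. It builds a DNS query by hand with the standard library, sends it over UDP to one of the accelerator IP addresses, and returns 1 or 0, a value that could then be published as a CloudWatch metric. The function names and the fixed query ID are illustrative choices.

```python
import socket
import struct


def build_query(name, query_id=0x1234, qtype=1):
    """Build a DNS query packet for `name` (qtype 1 is an A record)."""
    # Header: ID, flags (recursion desired), QDCOUNT=1, then three zero counts.
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label is prefixed with its length, ending with a zero byte.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    return header + qname + struct.pack("!HH", qtype, 1)  # QCLASS 1 = IN


def is_successful_response(response, query_id=0x1234):
    """True if the packet is a response to our query with RCODE 0."""
    if len(response) < 12:
        return False
    resp_id, flags = struct.unpack("!HH", response[:4])
    is_response = bool(flags & 0x8000)  # QR bit
    rcode = flags & 0x000F
    return resp_id == query_id and is_response and rcode == 0


def probe(server_ip, name, timeout=2.0):
    """Return 1 if the DNS server answers the query, otherwise 0."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        try:
            sock.sendto(build_query(name), (server_ip, 53))
            response, _ = sock.recvfrom(512)
        except OSError:  # includes timeouts
            return 0
    return 1 if is_successful_response(response) else 0
```

A real canary would probably probe both accelerator IP addresses and both protocols, and would alarm only after several consecutive failures to avoid reacting to a single dropped packet.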

Step 2: Subscribe to Shield Advanced

Note that with AWS Shield Advanced, you pay a fee of $3,000 per month per organization. In addition, you pay AWS Shield Advanced Data Transfer usage fees for AWS resources enabled for advanced protection.

- Navigate to the AWS Shield console.

- In the AWS Shield navigation bar, choose Getting started, and then choose Subscribe to Shield Advanced.

- On the Subscribe to Shield Advanced page, read the terms of agreement, and then select all of the check boxes to indicate that you accept the terms.

- Choose Subscribe to Shield Advanced.

Step 3: Add resources to protect

- Do one of the following, depending on whether you were already subscribed to Shield Advanced.

- If you just subscribed to Shield Advanced by completing Step 2 above, choose Add resources to protect.

- If you were already subscribed to Shield Advanced, open the Shield console and choose Protected Resources, and then choose Add resources to protect.

- In the Choose resources to protect with Shield Advanced page, select the Regions and resource types that you want to protect, then choose Load resources.

- Select the resources that you want to protect, and then choose Protect with Shield Advanced.

- In the Configure health check based DDoS detection page, under the Protected resources section, select a Route 53 health check to add—either one that you created previously, or a health check created by the AWS CloudFormation template—as the Associated Health Check.

- Choose Next until you reach the Review and configure DDoS mitigation and visibility page, and then review the settings and choose Finish configuration.

Step 4: Add contacts

- Navigate to the Overview tab of the AWS Shield console.

- In the Proactive engagements and contacts section, choose Edit under the Contacts heading.

- In the Add contact form, add the contact’s Email, Phone number, and Notes.

- Choose Save.

Step 5: Request proactive engagement

- Choose Edit proactive engagement feature.

- Select Enable.

- Choose Save.

Step 6: Configuration review with the SRT

After you enable proactive engagement, the state will be Proactive engagement requested and pending.

SRT will contact you to schedule a configuration review. The review will include a review of your Route 53 health check configuration and a consultation about custom mitigations that can be configured to support your DNS use case. Following this review, SRT will complete your request to enable proactive engagement.
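The console steps for protecting the accelerator, associating the health check, and registering contacts can also be expressed as API calls. The sketch below assumes a boto3 Shield client is passed in and that the account is already subscribed to Shield Advanced. The function name and the protection name are hypothetical, and the sketch does not replace the configuration review with SRT described above.

```python
def protect_accelerator(shield, accelerator_arn, health_check_id, contact):
    """Protect an accelerator, attach a health check, and register a contact.

    `shield` is expected to behave like boto3.client("shield").
    `contact` is a dict with EmailAddress, PhoneNumber, and ContactNotes keys.
    """
    protection = shield.create_protection(
        Name="self-managed-dns-accelerator",  # hypothetical name
        ResourceArn=accelerator_arn,
    )
    protection_id = protection["ProtectionId"]

    # Shield Advanced uses the health check as an input for detection.
    shield.associate_health_check(
        ProtectionId=protection_id,
        HealthCheckArn=f"arn:aws:route53:::healthcheck/{health_check_id}",
    )

    # Registers the contacts and initiates the proactive engagement request.
    shield.associate_proactive_engagement_details(
        EmergencyContactList=[contact],
    )
    return protection_id
```

Passing the client in as a parameter keeps the function easy to exercise with a stub before running it against a real account.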

Summary

DNS is a foundational part of the user experience for any application that is accessed via a human readable domain name. Your DNS service should be highly available, DDoS resilient, and accessible to your users with minimal latency. If you run your own DNS service on Amazon EC2, you can improve the DDoS resiliency using Global Accelerator and Shield Advanced. This solution provides your users with a low latency path to your DNS service and provides you with some of the DDoS mitigation that protects Route 53. To learn more about DDoS best practices, see AWS Best Practices for DDoS Resiliency.

If you have feedback about this post, submit comments in the Comments section below. If you have questions about this post, start a new thread on the AWS Shield forum or contact AWS Support.

Want more AWS Security how-to content, news, and feature announcements? Follow us on Twitter.

AWS Audit Manager Simplifies Audit Preparation

Post Syndicated from Steve Roberts original https://aws.amazon.com/blogs/aws/aws-audit-manager-simplifies-audit-preparation/

Gathering evidence in a timely manner to support an audit can be a significant challenge due to manual, error-prone, and sometimes, distributed processes. If your business is subject to compliance requirements, preparing for an audit can cause significant lost productivity and disruption as a result. You might also have trouble applying traditional audit practices, which were originally designed for legacy on-premises systems, to your cloud infrastructure.

To satisfy complex and evolving sets of regulation and compliance standards, including the General Data Protection Regulation (GDPR), Health Insurance Portability and Accountability Act (HIPAA), and Payment Card Industry Data Security Standard (PCI DSS), you’ll need to gather, verify, and synthesize evidence.

You’ll also need to constantly reevaluate how your AWS usage maps to those evolving compliance control requirements. To satisfy requirements, you may need to provide proof that data encryption was active, log files showing server configuration changes, diagrams showing application high availability, transcripts showing required training was completed, spreadsheets showing that software usage did not exceed licensed amounts, and more. This effort, sometimes involving dozens of staff and consultants, can last several weeks.

Available today, AWS Audit Manager is a fully managed service that provides prebuilt frameworks for common industry standards and regulations, and automates the continual collection of evidence to help you in preparing for an audit. Continuous and automated gathering of evidence related to your AWS resource usage helps simplify risk assessment and compliance with regulations and industry standards and helps you maintain a continuous, audit-ready posture to provide a faster, less disruptive preparation process.

Built-in and customizable frameworks map usage of your cloud resources to controls for different compliance standards, translating evidence into an audit-ready, immutable assessment report using auditor-friendly terminology. You can also search, filter, and upload additional evidence to include in the final assessment, such as details of on-premises infrastructure, or procedures such as business continuity plans, training transcripts, and policy documents.

Given that audit preparation typically involves multiple teams, a delegation workflow feature lets you assign controls to subject-matter experts for review. For example, you might delegate reviewing evidence of network security to a network security engineer.

The finalized assessment report includes summary statistics and a folder containing all the evidence files, organized in accordance with the exact structure of the associated compliance framework. With the evidence collected and organized into a single location, it’s ready for immediate review, making it easier for audit teams to verify the evidence, answer questions, and add remediation plans.

Getting started with Audit Manager

Let’s get started by creating and configuring a new assessment. From Audit Manager’s console home page, clicking Launch AWS Audit Manager takes me to my Assessments list (I can also reach here from the navigation toolbar to the left of the console home). There, I click Create assessment to start a wizard that walks me through the settings for the new assessment. First, I give my assessment a name, optional description, and then specify an Amazon Simple Storage Service (S3) bucket where the reports associated with the assessment will be stored.

Next, I choose the framework for my assessment. I can select from a variety of prebuilt frameworks, or a custom framework I have created myself. Custom frameworks can be created from scratch or based on an existing framework. Here, I’m going to use the prebuilt PCI DSS framework.

After clicking Next, I can select the AWS accounts to be included in my assessment (Audit Manager is also integrated with AWS Organizations). Since I have a single account, I select it and click Next, moving on to select the AWS services that I want to be included in evidence gathering. I’m going to include all the suggested services (the default) and click Next to continue.

Next I need to select the owners of the assessment, who have full permission to manage it (owners can be AWS Identity and Access Management (IAM) users or roles). You must select at least one owner, so I select my account and click Next to move to the final Review and create page. Finally, clicking Create assessment starts the gathering of evidence for my new assessment. This can take a while to complete, so I’m going to switch to another assessment to examine what kinds of evidence I can view and choose to include in my assessment report.
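The wizard settings map onto a single request if you prefer to create the assessment programmatically. The sketch below only assembles that request as a dictionary; the field names follow the Audit Manager `CreateAssessment` API as I understand it, and the helper name, bucket, framework ID, account ID, and role ARN are all hypothetical placeholders.

```python
def build_assessment_request(name, bucket, framework_id, account_id,
                             owner_role_arn, service_names, description=""):
    """Assemble the settings collected by the Create assessment wizard."""
    return {
        "name": name,
        "description": description,
        # Where the assessment reports are stored.
        "assessmentReportsDestination": {
            "destinationType": "S3",
            "destination": f"s3://{bucket}",
        },
        # Accounts and services included in evidence gathering.
        "scope": {
            "awsAccounts": [{"id": account_id}],
            "awsServices": [{"serviceName": s} for s in service_names],
        },
        # At least one owner is required.
        "roles": [{"roleType": "PROCESS_OWNER", "roleArn": owner_role_arn}],
        "frameworkId": framework_id,
    }
```

The result could be passed to `boto3.client("auditmanager").create_assessment(**request)`. The framework ID for a prebuilt framework such as PCI DSS would need to be looked up first, for example with `list_assessment_frameworks(frameworkType="Standard")`.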

Back in the Assessments list view, clicking on the assessment name takes me to details of the assessment, a summary of the controls for which evidence is being collected, and a list of the control sets into which the controls are grouped. Total evidence tells me the number of events and supporting documents that are included in the assessment. The additional tabs can be used to give me insight into the evidence I select for the final report, which accounts and services are included in the assessment, who owns it, and more. I can also navigate to the S3 bucket in which the evidence is being collected.

Expanding a control set shows me the related controls, with links to dive deeper on a given control, together with the status (Under review, Reviewed, and Inactive), whom the control has been delegated to for review, the amount of evidence gathered for that control, and whether the control and evidence have been added to the final report. If I change a control to be Inactive, meaning automated evidence gathering will cease for that control, this is logged.

Let’s take a closer look at a control to show how the automated evidence gathering can help identify compliance issues before I start compiling the audit report. Expanding Default control set, I click control 8.1.2 For a sample of privileged user IDs… which takes me to a view giving more detailed information on the control and how it is tested. Scrolling down, there is a set of evidence folders listed and here I notice that there are some issues. Clicking the issue link in the Compliance check column summarizes where the data came from. Here, I can also select the evidence that I want included in my final report.

Going further, I can click on the evidence folder to note that there was a failure, and in turn clicking on the time of the failure takes me to a detailed summary of the issues for this control, and how to remediate.

With the evidence gathered, it’s a simple task to select sufficient controls and appropriate evidence to include in my assessment report that can then be passed to my auditors. For the purposes of this post I’ve gone ahead and selected evidence for a handful of controls for my report. Then, I select the Assessment report selection tab, where I review my evidence selections, and click Generate assessment report. In the dialog that appears I give my report a name, and then click Generate assessment report. When the dialog closes I am taken to the Assessment reports view and, when my report is ready, I can select it and download a zip file containing the report and the selected evidence. Alternatively, I can open the S3 bucket associated with the assessment (from the assessment’s details page) and view the report details and evidence there, as shown in the screenshot below. The overall report is listed (as a PDF file) and if I drill into the evidence folders, I can also view PDF files related to the specific items of evidence I selected.

And to close, below is a screenshot of the beginning of the assessment report PDF file showing the number of selected controls and evidence, and services that I selected to be in scope when I created the assessment. Further pages go into more details.

Audit Manager is available today in 10 AWS Regions: US East (Northern Virginia, Ohio), US West (Northern California, Oregon), Asia Pacific (Singapore, Sydney, Tokyo), and Europe (Frankfurt, Ireland, London).

Get all the details about AWS Audit Manager and get started today.

Amazon SageMaker JumpStart Simplifies Access to Pre-built Models and Machine Learning Solutions

Post Syndicated from Julien Simon original https://aws.amazon.com/blogs/aws/amazon-sagemaker-jumpstart-simplifies-access-to-prebuilt-models-and-machine-learning-models/

Today, I’m extremely happy to announce the availability of Amazon SageMaker JumpStart, a capability of Amazon SageMaker that accelerates your machine learning workflows with one-click access to popular model collections (also known as “model zoos”), and to end-to-end solutions that solve common use cases.

In recent years, machine learning (ML) has proven to be a valuable technique in improving and automating business processes. Indeed, models trained on historical data can accurately predict outcomes across a wide range of industry segments: financial services, retail, manufacturing, telecom, life sciences, and so on. Yet, working with these models requires skills and experience that only a subset of scientists and developers have: preparing a dataset, selecting an algorithm, training a model, optimizing its accuracy, deploying it in production, and monitoring its performance over time.

In order to simplify the model building process, the ML community has created model zoos, that is to say, collections of models built with popular open source libraries, and often pretrained on reference datasets. For example, the TensorFlow Hub and the PyTorch Hub provide developers with a long list of models ready to be downloaded, and integrated in applications for computer vision, natural language processing, and more.

Still, downloading a model is just part of the answer. Developers then need to deploy it for evaluation and testing, using either a variety of tools, such as the TensorFlow Serving and TorchServe model servers, or their own bespoke code. Once the model is running, developers need to figure out the correct format that incoming data should have, a long-lasting pain point. I’m sure I’m not the only one regularly pulling my hair out here!

Of course, a full ML application usually has a lot of moving parts. Data needs to be preprocessed, enriched with additional data fetched from a backend, and funneled into the model. Predictions are often postprocessed, and stored for further analysis and visualization. As useful as they are, model zoos only help with the modeling part. Developers still have lots of extra work to deliver a complete ML solution.

Because of all this, ML experts are flooded with a long backlog of projects waiting to start. Meanwhile, less experienced practitioners struggle to get started. These barriers are incredibly frustrating, and our customers asked us to remove them.

Introducing Amazon SageMaker JumpStart

Amazon SageMaker JumpStart is integrated in Amazon SageMaker Studio, our fully integrated development environment (IDE) for ML, making it intuitive to discover models, solutions, and more. At launch, SageMaker JumpStart includes:

- 15+ end-to-end solutions for common ML use cases such as fraud detection, predictive maintenance, and so on.

- 150+ models from the TensorFlow Hub and the PyTorch Hub, for computer vision (image classification, object detection), and natural language processing (sentence classification, question answering).

- Sample notebooks for the built-in algorithms available in Amazon SageMaker.

SageMaker JumpStart also provides notebooks, blogs, and video tutorials designed to help you learn and remove roadblocks. Content is easily accessible within Amazon SageMaker Studio, enabling you to get started with ML faster.

It only takes a single click to deploy solutions and models. All infrastructure is fully managed, so all you have to do is enjoy a nice cup of tea or coffee while deployment takes place. After a few minutes, you can start testing, thanks to notebooks and sample prediction code that are readily available in Amazon SageMaker Studio. Of course, you can easily modify them to use your own data.

SageMaker JumpStart makes it extremely easy for experienced practitioners and beginners alike to quickly deploy and evaluate models and solutions, saving days or even weeks of work. By drastically shortening the path from experimentation to production, SageMaker JumpStart accelerates ML-powered innovation, particularly for organizations and teams that are early on their ML journey, and haven’t yet accumulated a lot of skills and experience.

Now, let me show you how SageMaker JumpStart works.

Deploying a Solution with Amazon SageMaker JumpStart

Opening SageMaker Studio, I select the “JumpStart” icon on the left. This opens a new tab showing me all available content (solutions, models, and so on).

Let’s say that I’m interested in using computer vision to detect defects in manufactured products. Could ML be the answer?

Browsing the list of available solutions, I see one for product defect detection.

Opening it, I can learn more about the type of problems that it solves, the sample dataset used in the demo, the AWS services involved, and more.

A single click is all it takes to deploy this solution. Under the hood, AWS CloudFormation uses a built-in template to provision all appropriate AWS resources.

A few minutes later, the solution is deployed, and I can open its notebook.

The notebook opens immediately in SageMaker Studio. I run the demo, and understand how ML can help me detect product defects. This is also a nice starting point for my own project, making it easy to experiment with my own dataset (feel free to click on the image below to zoom in).

Once I’m done with this solution, I can delete all its resources in one click, letting AWS CloudFormation clean up without having to worry about leaving idle AWS resources behind.

Now, let’s look at models.

Deploying a Model with Amazon SageMaker JumpStart

SageMaker JumpStart includes a large collection of models available in the TensorFlow Hub and the PyTorch Hub. These models are pre-trained on reference datasets, and you can use them directly to handle a wide range of computer vision and natural language processing tasks. You can also fine-tune them on your own datasets for greater accuracy, a technique called transfer learning.

Here, I pick a version of the BERT model trained on question answering. I can either deploy it as is, or fine-tune it. For the sake of brevity, I go with the former here, and I just click on the “Deploy” button.

A few minutes later, the model has been deployed to a real-time endpoint powered by fully managed infrastructure.

Time to test it! Clicking on “Open Notebook” launches a sample notebook that I run right away to test the model, without having to change a line of code. Here, I’m asking two questions (“What is Southern California often abbreviated as?” and “Who directed Spectre?”), passing some context containing the answer. In both cases, the BERT model gives the correct answer, respectively “socal” and “Sam Mendes”.
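For readers who would rather query such an endpoint from their own code, here is a minimal sketch of what a call could look like. The `application/list-text` content type, the `answer` field in the response, and the endpoint name are assumptions for illustration, not details confirmed by this post; the sample notebook is the reference for the exact format. The client is passed in as a parameter, so the helpers can be tried without AWS credentials.

```python
import json


def build_payload(question, context):
    """Encode a question and its context as a JSON list of two strings."""
    return json.dumps([question, context]).encode("utf-8")


def parse_answer(raw_body):
    """Extract the answer from the JSON response body (assumed field name)."""
    return json.loads(raw_body)["answer"]


def ask(runtime_client, endpoint_name, question, context):
    """Send one question to the endpoint and return the predicted answer."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/list-text",  # assumed content type
        Body=build_payload(question, context),
    )
    return parse_answer(response["Body"].read())


# Usage (requires boto3, AWS credentials, and a deployed endpoint):
#   import boto3
#   client = boto3.client("sagemaker-runtime")
#   context = "Spectre is a 2015 spy film directed by Sam Mendes."
#   print(ask(client, "my-endpoint-name", "Who directed Spectre?", context))
```

Keeping payload construction and response parsing in separate functions makes it easy to adapt the sketch if the deployed model expects a different request or response format.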

When I’m done testing, I can delete the endpoint in one click, and stop paying for it.

Getting Started

As you can see, it’s extremely easy to deploy models and solutions with SageMaker JumpStart in minutes, even if you have little or no ML skills.

You can start using this capability today in all regions where SageMaker Studio is available, at no additional cost.

Give it a try and let us know what you think.

As always, we’re looking forward to your feedback, either through your usual AWS support contacts, or on the AWS Forum for SageMaker.

Special thanks to my colleague Jared Heywood for his precious help during early testing.

New – Amazon SageMaker Pipelines Brings DevOps Capabilities to your Machine Learning Projects

Post Syndicated from Julien Simon original https://aws.amazon.com/blogs/aws/amazon-sagemaker-pipelines-brings-devops-to-machine-learning-projects/

Today, I’m extremely happy to announce Amazon SageMaker Pipelines, a new capability of Amazon SageMaker that makes it easy for data scientists and engineers to build, automate, and scale end-to-end machine learning pipelines.

Machine learning (ML) is intrinsically experimental and unpredictable in nature. You spend days or weeks exploring and processing data in many different ways, trying to crack the geode open to reveal its precious gemstones. Then, you experiment with different algorithms and parameters, training and optimizing lots of models in search of the highest accuracy. This process typically involves lots of different steps with dependencies between them, and managing it manually can become quite complex. In particular, tracking model lineage can be difficult, hampering auditability and governance. Finally, you deploy your top models, and you evaluate them against your reference test sets. Finally? Not quite, as you’ll certainly iterate again and again, either to try out new ideas, or simply to periodically retrain your models on new data.

No matter how exciting ML is, it does unfortunately involve a lot of repetitive work. Even small projects will require hundreds of steps before they get the green light for production. Over time, not only does this work detract from the fun and excitement of your projects, it also creates ample room for oversight and human error.

To alleviate manual work and improve traceability, many ML teams have adopted the DevOps philosophy and implemented tools and processes for Continuous Integration and Continuous Delivery (CI/CD). Although this is certainly a step in the right direction, writing your own tools often leads to complex projects that require more software engineering and infrastructure work than you initially anticipated. Valuable time and resources are diverted from the actual ML project, and innovation slows down. Sadly, some teams decide to revert to manual work, for model management, approval, and deployment.

Introducing Amazon SageMaker Pipelines

Simply put, Amazon SageMaker Pipelines brings best-in-class DevOps practices to your ML projects. This new capability makes it easy for data scientists and ML developers to create automated and reliable end-to-end ML pipelines. As usual with SageMaker, all infrastructure is fully managed, and doesn’t require any work on your side.

Care.com is the world’s leading platform for finding and managing high-quality family care. Here’s what Clemens Tummeltshammer, Data Science Manager, Care.com, told us: “A strong care industry where supply matches demand is essential for economic growth from the individual family up to the nation’s GDP. We’re excited about Amazon SageMaker Feature Store and Amazon SageMaker Pipelines, as we believe they will help us scale better across our data science and development teams, by using a consistent set of curated data that we can use to build scalable end-to-end machine learning (ML) model pipelines from data preparation to deployment. With the newly announced capabilities of Amazon SageMaker, we can accelerate development and deployment of our ML models for different applications, helping our customers make better informed decisions through faster real-time recommendations.”

Let me tell you more about the main components in Amazon SageMaker Pipelines: pipelines, model registry, and MLOps templates.

Pipelines – Model building pipelines are defined with a simple Python SDK. They can include any operation available in Amazon SageMaker, such as data preparation with Amazon SageMaker Processing or Amazon SageMaker Data Wrangler, model training, model deployment to a real-time endpoint, or batch transform. You can also add Amazon SageMaker Clarify to your pipelines, in order to detect bias prior to training, or once the model has been deployed. Likewise, you can add Amazon SageMaker Model Monitor to detect data and prediction quality issues.

Once launched, model building pipelines are executed as CI/CD pipelines. Every step is recorded, and detailed logging information is available for traceability and debugging purposes. Of course, you can also visualize pipelines in Amazon SageMaker Studio, and track their different executions in real time.

Model Registry – The model registry lets you track and catalog your models. In SageMaker Studio, you can easily view model history, list and compare versions, and track metadata such as model evaluation metrics. You can also define which versions may or may not be deployed in production. In fact, you can even build pipelines that automatically trigger model deployment once approval has been given. You’ll find that the model registry is very useful in tracing model lineage, improving model governance, and strengthening your compliance posture.

MLOps Templates – SageMaker Pipelines includes a collection of built-in CI/CD templates for popular pipelines (build/train/deploy, deploy only, and so on). You can also add and publish your own templates, so that your teams can easily discover them and deploy them. Not only do templates save lots of time, they also make it easy for ML teams to collaborate from experimentation to deployment, using standard processes and without having to manage any infrastructure. Templates also let Ops teams customize steps as needed, and give them full visibility for troubleshooting.

Now, let’s do a quick demo!

Building an End-to-end Pipeline with Amazon SageMaker Pipelines

Opening SageMaker Studio, I select the “Components” tab and the “Projects” view. This displays a list of built-in project templates. I pick one to build, train, and deploy a model.

Then, I simply give my project a name, and create it.

A few seconds later, the project is ready. I can see that it includes two Git repositories hosted in AWS CodeCommit, one for model training, and one for model deployment.

The first repository provides scaffolding code to create a multi-step model building pipeline: data processing, model training, model evaluation, and conditional model registration based on accuracy. As you’ll see in the pipeline.py file, this pipeline trains a regression model using the XGBoost algorithm on the well-known Abalone dataset. This repository also includes a build specification file, used by AWS CodePipeline and AWS CodeBuild to execute the pipeline automatically.

Likewise, the second repository contains code and configuration files for model deployment, as well as test scripts required to pass the quality gate. This operation is also based on AWS CodePipeline and AWS CodeBuild, which run an AWS CloudFormation template to create model endpoints for staging and production.

Clicking on the two blue links, I clone the repositories locally. This triggers the first execution of the pipeline.

A few minutes later, the pipeline has run successfully. Switching to the “Pipelines” view, I can visualize its steps.

Clicking on the training step, I can see the Root Mean Square Error (RMSE) metrics for my model.

As the RMSE is lower than the threshold defined in the conditional step, my model is added to the model registry.
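The logic behind that conditional step is simple: compute an error metric on the evaluation set, then register the model only if the metric beats a threshold. The sketch below illustrates the idea in plain Python. It is not the code from pipeline.py, and the function names and threshold value are hypothetical.

```python
import math


def rmse(y_true, y_pred):
    """Root Mean Square Error between two equal-length sequences."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def should_register(metric, threshold):
    """Register the model only if its error is lower than the threshold."""
    return metric < threshold


# Hypothetical evaluation data and threshold, for illustration only.
actual = [10, 8, 12]
predicted = [9, 8, 14]
error = rmse(actual, predicted)
print(f"RMSE = {error:.3f}, register = {should_register(error, 6.0)}")
```

In a real pipeline, the metric would typically be read from the evaluation step's output rather than computed inline, but the comparison is the same.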

For simplicity, the registration step sets the model status to “Approved”, which automatically triggers its deployment to a real-time endpoint in the same account. Within seconds, I see that the model is being deployed.

Alternatively, you could register your model with a “Pending manual approval” status. This will block deployment until the model has been reviewed and approved manually. As the model registry supports cross-account deployment, you could also easily deploy in a different account, without having to copy anything across accounts.
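Manual approval can also be scripted. The sketch below wraps the SageMaker `update_model_package` API call in a small helper; the helper name, the ARN placeholder, and the note text are hypothetical. The client is passed in as a parameter, so the validation logic can be tried without AWS credentials.

```python
VALID_STATUSES = {"Approved", "Rejected", "PendingManualApproval"}


def set_approval_status(sm_client, model_package_arn, status, note=""):
    """Update the approval status of one model version in the registry."""
    if status not in VALID_STATUSES:
        raise ValueError(f"unknown approval status: {status!r}")
    request = {
        "ModelPackageArn": model_package_arn,
        "ModelApprovalStatus": status,
    }
    if note:
        # Optional free-text explanation stored with the status change.
        request["ApprovalDescription"] = note
    return sm_client.update_model_package(**request)


# Usage (requires boto3 and AWS credentials; the ARN is a placeholder):
#   import boto3
#   sm = boto3.client("sagemaker")
#   set_approval_status(sm, "<model-package-arn>", "Approved", "Reviewed by MLOps")
```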

A few minutes later, the endpoint is up, and I could use it to test my model.

Once I’ve made sure that this model works as expected, I could ping the MLOps team, and ask them to deploy the model in production.

Putting my MLOps hat on, I open the AWS CodePipeline console, and I see that my deployment is indeed waiting for approval.

I then approve the model for deployment, which triggers the final stage of the pipeline.

Reverting to my Data Scientist hat, I see in SageMaker Studio that my model is being deployed. Job done!

Getting Started

As you can see, Amazon SageMaker Pipelines makes it really easy for Data Science and MLOps teams to collaborate using familiar tools. They can create and execute robust, automated ML pipelines that deliver high-quality models to production faster than before.

You can start using SageMaker Pipelines in all commercial regions where SageMaker is available. The MLOps capabilities are available in the regions where CodePipeline is also available.

Sample notebooks are available to get you started. Give them a try, and let us know what you think. We’re always looking forward to your feedback, either through your usual AWS support contacts, or on the AWS Forum for SageMaker.

Special thanks to my colleague Urvashi Chowdhary for her precious assistance during early testing.

Introducing Amazon SageMaker Data Wrangler, a Visual Interface to Prepare Data for Machine Learning

Post Syndicated from Julien Simon original https://aws.amazon.com/blogs/aws/introducing-amazon-sagemaker-data-wrangler-a-visual-interface-to-prepare-data-for-machine-learning/

Today, I’m extremely happy to announce Amazon SageMaker Data Wrangler, a new capability of Amazon SageMaker that makes it faster for data scientists and engineers to prepare data for machine learning (ML) applications by using a visual interface.

Whenever I ask a group of data scientists and ML engineers how much time they actually spend studying ML problems, I often hear a collective sigh, followed by something along the lines of, “20%, if we’re lucky.” When I ask them why, the answer is invariably the same, “data preparation consistently takes up to 80% of our time!”

Indeed, preparing data for training is a crucial step of the ML process, and no one would think about botching it up. Typical tasks include:

- Locating data: finding where raw data is stored, and getting access to it

- Data visualization: examining statistical properties for each column in the dataset, building histograms, studying outliers

- Data cleaning: removing duplicates, dropping or filling entries with missing values, removing outliers

- Data enrichment and feature engineering: processing columns to build more expressive features, selecting a subset of features for training
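To make the cleaning tasks above concrete, here is a small sketch that removes duplicates, fills missing values, and drops outliers on a list of records. It uses only the Python standard library so that it runs anywhere; in practice, the same steps would more likely be written with pandas or PySpark. The median fill and the z-score cutoff are illustrative choices, not recommendations from this post.

```python
import statistics


def clean(rows, column, z_max=3.0):
    """Deduplicate rows, fill missing values with the median, drop outliers."""
    # 1. Remove exact duplicates while preserving order.
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(row))  # copy so the input is not modified

    # 2. Fill missing values with the median of the observed values.
    observed = [r[column] for r in unique if r[column] is not None]
    median = statistics.median(observed)
    for r in unique:
        if r[column] is None:
            r[column] = median

    # 3. Drop rows more than z_max standard deviations from the mean.
    values = [r[column] for r in unique]
    mean, stdev = statistics.mean(values), statistics.pstdev(values)
    if stdev == 0:
        return unique
    return [r for r in unique if abs(r[column] - mean) / stdev <= z_max]


# Hypothetical records: one duplicate and one missing value.
records = [
    {"id": 1, "x": 1.0},
    {"id": 1, "x": 1.0},
    {"id": 2, "x": None},
    {"id": 3, "x": 3.0},
]
print(clean(records, "x"))
```

Each numbered step corresponds to one decision a data scientist makes interactively, which is why reproducing the exact same sequence later in production is easy to get wrong.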

In the early stage of a new ML project, this is a highly manual process, where intuition and experience play a large part. Using a mix of bespoke tools and open source tools such as pandas or PySpark, data scientists often experiment with different combinations of data transformations, and use them to process datasets before training models. Then, they analyze prediction results and iterate. As important as this is, looping through this process again and again can be time-consuming, tedious, and error-prone.

At some point, you will hit the right level of accuracy (or whatever other metric you’ve picked), and you’ll then want to train on the full dataset in your production environment. However, you’ll first have to reproduce and automate the exact data preparation steps that you experimented with in your sandbox. Unfortunately, there’s always room for error given the interactive nature of this work, even if you carefully document it.

Last but not least, you’ll have to manage and scale your data processing infrastructure before you get to the finish line. Now that I think of it, 80% of your time may not be enough to do all of this!

Introducing Amazon SageMaker Data Wrangler