Post Syndicated from Home Assistant original https://www.youtube.com/watch?v=eh7BO_qro7c

It’s time to warm up the slaps

Post Syndicated from Yovko Lambrev original https://yovko.net/vreme-za-shamari/

2021. The year begins with at least three new editions (and two new translations) in Bulgarian of Orwell’s “1984” and “Animal Farm”. So far. I know that two more editions of “1984” are in preparation before the end of the year. Strange, isn’t it? When I told a friend that this seems absurd given the laughably small book market here, he reminded me that last year marked 70 years since the author’s death and the rights to the titles are now free. “Unseemly cashing in” was his comment.

On the other hand, 2021 may well be the year in which we overcome the uncontrolled spread of the virus that causes COVID-19, but it certainly must also mark some more tangible resistance against the big technology corporations. In that vein, reading dystopias helps.

The Internet is becoming excessively centralized and deformed. The content generated by people (the fucking corporations call us users) has been sucked up by a handful of so-called platforms. But they collect it not to make it easier to find or more useful to others, but solely for their own selfish and greedy ends. For their part, nearly all platforms, services, and tools are sheltered in the embrace of the clouds of Amazon, Microsoft, or Google. Even if you have rented a virtual server or something else from a smaller provider, it is quite likely that it still relies on the infrastructure of the big three, merely repackaging it and perhaps adding some services or support of its own.

Thus the supposedly decentralized Internet is in the hands of a few greedy bastards who are long overdue to start getting slapped. Because of them, fundamental pillars of our societies and democracies are cracking. The platforms refuse to take responsibility for the content they distribute and amplify, while at the same time they smother, and make dependent on themselves, the media that still try to fulfil their public duty. The new business models, without exception, benefit the platforms, with no commitment whatsoever to compensate those who create the content. The social networks’ attempts to label disinformation, political manipulation, and fake news are only recent, and their implementation is ludicrous; it is obvious that it was done under pressure and without the necessary awareness of the problem and of the responsibility.

I sincerely hope that we will soon see decisive and categorical measures against the techno-oligopolies, including breaking the giants up into smaller companies. But I am not naive. It is not very likely, nor will it be easy. Politicians lately are a marketing product with no ideological foundation. They adjust to the direction of the tailwind and ride the populist waves of the masses, instead of leading people in one direction or another with reasoned conviction.

That is why it is important that we mere mortals resist in every way we can.

- Avoid the platforms and mass-market providers, paying particular attention to those in Five Eyes jurisdictions. Use the services of smaller and, where possible, local or European hosting companies and micro-clouds.

- Run and maintain alternative solutions for storing files and data.

- Encrypt our communication and traffic (and watch that the bureaucrats do not make this illegal).

- Do not pile up content on assorted third-party platforms; have our own small sites, blogs, and servers instead.

- Use tools that are beyond the control of information-curating algorithms, for example RSS or Mastodon.

- Do not use technologies that hijack traffic or turn into a gravitational center, such as AMP.

- Decentralize everything we can on the Internet.

- Boycott the bastards.

I am considering starting a series of posts suggesting good (in my opinion) ideas and solutions for protecting personal privacy on the Internet, for better security of our devices and computers, and for safeguarding our business and personal data with tools and services that are alternatives to the well-known ones. I will try, as far as possible, to write clearly and accessibly, although for some of the more technical topics that is quite difficult.

Starting this year I will also begin offering to deploy some of the solutions I write about. As a service. Given my limited personal time and physical capacity, at least initially my services will be aimed mainly at small and medium-sized businesses or organizations with which the sympathy is mutual. I have no desire whatsoever to help the big ones anyway 🙂

Cover photo: @ev


Latest on the SVR’s SolarWinds Hack

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2021/01/latest-on-the-svrs-solarwinds-hack.html

The New York Times has an in-depth article on the latest information about the SolarWinds hack (not a great name, since it’s much more far-reaching than that).

Interviews with key players investigating what intelligence agencies believe to be an operation by Russia’s S.V.R. intelligence service revealed these points:

- The breach is far broader than first believed. Initial estimates were that Russia sent its probes only into a few dozen of the 18,000 government and private networks they gained access to when they inserted code into network management software made by a Texas company named SolarWinds. But as businesses like Amazon and Microsoft that provide cloud services dig deeper for evidence, it now appears Russia exploited multiple layers of the supply chain to gain access to as many as 250 networks.

- The hackers managed their intrusion from servers inside the United States, exploiting legal prohibitions on the National Security Agency from engaging in domestic surveillance and eluding cyberdefenses deployed by the Department of Homeland Security.

- “Early warning” sensors placed by Cyber Command and the National Security Agency deep inside foreign networks to detect brewing attacks clearly failed. There is also no indication yet that any human intelligence alerted the United States to the hacking.

- The government’s emphasis on election defense, while critical in 2020, may have diverted resources and attention from long-brewing problems like protecting the “supply chain” of software. In the private sector, too, companies that were focused on election security, like FireEye and Microsoft, are now revealing that they were breached as part of the larger supply chain attack.

- SolarWinds, the company that the hackers used as a conduit for their attacks, had a history of lackluster security for its products, making it an easy target, according to current and former employees and government investigators. Its chief executive, Kevin B. Thompson, who is leaving his job after 11 years, has sidestepped the question of whether his company should have detected the intrusion.

- Some of the compromised SolarWinds software was engineered in Eastern Europe, and American investigators are now examining whether the incursion originated there, where Russian intelligence operatives are deeply rooted.

Separately, it seems that the SVR conducted a dry run of the attack five months before the actual attack:

The hackers distributed malicious files from the SolarWinds network in October 2019, five months before previously reported files were sent to victims through the company’s software update servers. The October files, distributed to customers on Oct. 10, did not have a backdoor embedded in them, however, in the way that subsequent malicious files that victims downloaded in the spring of 2020 did, and these files went undetected until this month.

[…]

“This tells us the actor had access to SolarWinds’ environment much earlier than this year. We know at minimum they had access Oct. 10, 2019. But they would certainly have had to have access longer than that,” says the source. “So that intrusion [into SolarWinds] has to originate probably at least a couple of months before that - probably at least mid-2019 [if not earlier].”

The files distributed to victims in October 2019 were signed with a legitimate SolarWinds certificate to make them appear to be authentic code for the company’s Orion Platform software, a tool used by system administrators to monitor and configure servers and other computer hardware on their network.

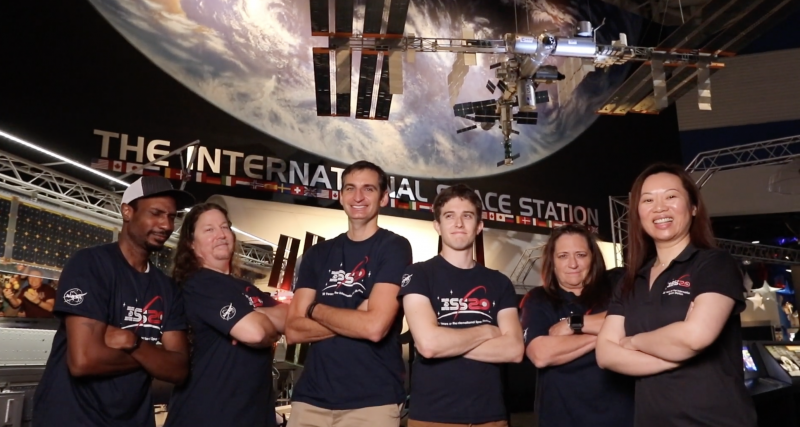

Meet team behind the mini Raspberry Pi–powered ISS

Post Syndicated from Ashley Whittaker original https://www.raspberrypi.org/blog/meet-team-behind-the-mini-raspberry-pi-powered-iss/

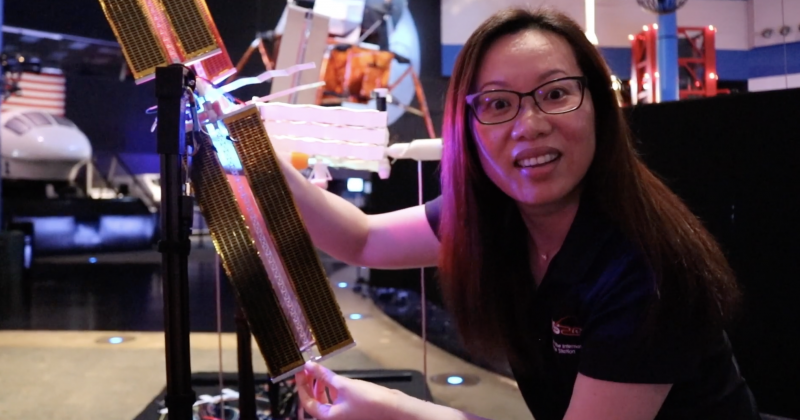

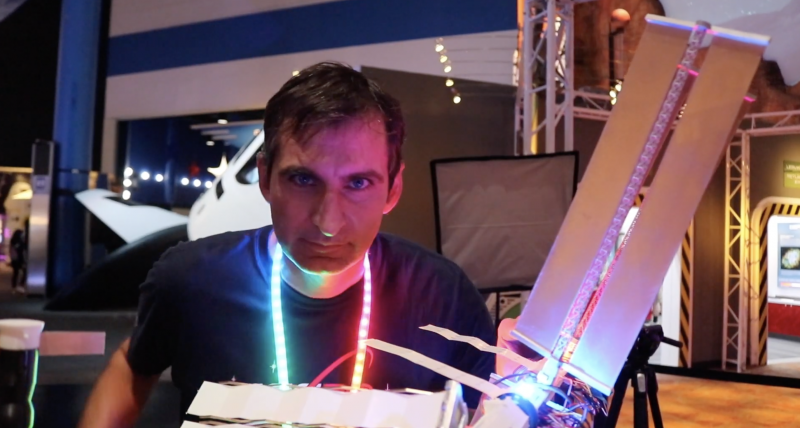

Quite possibly the coolest thing we saw Raspberry Pi powering this year was ISS Mimic, a mini version of the International Space Station (ISS). We wanted to learn more about the brains that dreamt up ISS Mimic, which uses data from the ISS to mirror exactly what the real thing is doing in orbit.

The ISS Mimic team’s a diverse, fun-looking bunch of people and they all made their way to NASA via different paths. Maybe you could see yourself there in the future too?

Dallas Kidd

Dallas Kidd currently works at the startup Skylark Wireless, helping to advance the technology to provide affordable high speed internet to rural areas.

Previously, she worked on traffic controllers and sensors, in finance on a live trading platform, on RAID controllers for enterprise storage, and at a startup tackling the problem of alarm fatigue in hospitals.

Before getting her Master’s in computer science with a thesis on automatically classifying stars, she taught English as a second language, Algebra I, geometry, special education, reading, and more.

Her hobbies are scuba diving, learning about astronomy, creative writing, art, and gaming.

Tristan Moody

Tristan Moody currently works as a spacecraft survivability engineer at Boeing, helping to keep the ISS and other satellites safe from the threat posed by meteoroids and orbital debris.

He has a PhD in mechanical engineering and currently spends much of his free time as playground equipment for his two young kids.

Estefannie

Estefannie is a software engineer, designer, and punk rocker who likes to over-engineer things and document her findings on her YouTube and Instagram channels as Estefannie Explains It All.

Estefannie spends her time inventing things before thinking, soldering for fun, writing, filming and producing content for her YouTube channel, and public speaking at universities, conferences, and hackathons.

She lives in Houston, Texas and likes tacos.

Douglas Kimble

Douglas Kimble currently works as an electrical/mechanical design engineer at Boeing. He has designed countless wire harness and installation drawings for the ISS.

He assumes the mentor role and interacts well with diverse personalities. He is also the world’s biggest Lakers fan living in Texas.

His favorite pastimes include hanging out with his two dogs, Boomer and Teddy.

Craig Stanton

Craig’s father worked for the Space Shuttle program, designing the ascent flight trajectory profiles for the early missions. He remembers being on site at Johnson Space Center one evening, in a freezing cold computer terminal room, punching cards for a program his dad wrote in the early 1980s.

Craig grew up with LEGO and majored in Architecture and Space Design at the University of Houston’s Sasakawa International Center for Space Architecture (SICSA).

His day job involves measuring ISS major assemblies on the ground to ensure they’ll fit together on-orbit. Traveling to many countries to measure pieces of hardware that will never meet until they are on-orbit is really the coolest part of the job.

Sam Treadgold

Sam Treadgold is an aerospace engineer who also works on the Meteoroid and Orbital Debris team, helping to protect the ISS and Space Launch System from hypervelocity impacts. Occasionally they take spaceflight hardware out to the desert and shoot it with a giant gun to see what happens.

In a non-pandemic world he enjoys rock climbing, music festivals, and making sound-reactive LED sunglasses.

Chen Deng

Chen Deng is a Systems Engineer working at Boeing with the International Space Station (ISS) program. Her job is to ensure readiness of Payloads, or science experiments, to launch in various spacecraft and operations to conduct research aboard the ISS.

The ISS provides a very unique science laboratory environment, something we can’t get much of on earth: microgravity! The term microgravity means a state of little or very weak gravity. The virtual absence of gravity allows scientists to conduct experiments that are impossible to perform on earth, where gravity affects everything that we do.

In her free time, Chen enjoys hiking, board games, and creative projects alike.

Bryan Murphy

Bryan Murphy is a dynamics and motion control engineer at Boeing, where he gets to create digital physics models of robotic space mechanisms to predict their performance.

His favorite projects include the ISS treadmill vibration isolation system and the shiny new docking system. He grew up on a small farm where his hands-on time with mechanical devices fueled his interest in engineering.

When not at work, he loves to brainstorm and create with his artist/engineer wife and their nerdy kids, or go on long family road trips, especially to hike and kayak or eat ice cream. He’s also vice president of a local makerspace, where he leads STEM outreach and includes excess LEDs in all his builds.

Susan

Susan is a mechanical engineer and a 30+-year veteran of manned spaceflight operations. She has worked the Space Shuttle Program for Payloads (middeck experiments and payloads deployed with the shuttle arm) starting with STS-30 and was on the team that deployed the Hubble Space Telescope.

She then transitioned into life sciences experiments, which led to the NASA Mir Program where she was on continuous rotation for three years to Russian Mission Control, supporting the NASA astronaut and science experiments onboard the space station as a predecessor to the ISS.

She currently works on the ISS Program (for over 20 years now), where she used to write procedures for on-orbit assembly of the Space Station and now writes installation procedures for on-orbit modifications like the docking adapter. She is also an artist and makes crosses out of found objects, and even used to play professional women’s football.

Keep in touch

You can keep up with Team ISS Mimic on Facebook, Instagram, and Twitter. For more info or to join the team, check out their GitHub page and Discord.

Kids, run your code on the ISS!

Did you know that there are Raspberry Pi computers aboard the real ISS that young people can run their own Python programs on? How cool is that?!

Find out how to participate at astro-pi.org.

The post Meet team behind the mini Raspberry Pi–powered ISS appeared first on Raspberry Pi.

Germs as serial killers

Post Syndicated from original https://yurukov.net/blog/2021/seriini-ubiici/

Virologists: We’ve found the serial killer. We want to protect the public.

Attenuated vaccines: Here, we’re showing you the serial killer, but he is weakened and tied up. We know there is at least minimal security here, so the chance of him escaping is negligible, and even then he wouldn’t get far.

Inactivated vaccines: Here, we’re showing you his severed head so that you can learn to recognize him. It is securely locked away and clearly cannot escape, but some people may still be startled by the sight.

Molecular vaccines: We won’t show you anything, but we’ll send among you people who know him and can warn you. Most likely you’ll get along with them.

mRNA vaccines: We won’t show you any part of the killer. We will, however, give you a detailed blueprint of the lockpick he uses to break into his victims’ homes. That way you can make one yourselves and study it. If you see someone with such a lockpick, you know what to do. Risks include a paper cut from the blueprint.

Anti-vaxxers: Oh, no. We don’t need any of that. We believe everyone needs to encounter serial killers like this. They’ve been around forever and we’re doing fine, and besides, it’s important for a child’s development to run into killers and learn naturally how to defend against them. Not to mention that most children aren’t the type of victim this one prefers, so what’s all the fuss about? What’s more, we think the claims about how cruel he was are greatly exaggerated; he was just playing with them. This is all one big conspiracy: some people just want to make money from printing, showing, and scaring children. Can you imagine how the ugly sight of a photograph of a criminal would affect a child’s mind? It’s far better for the child to meet him in person!

The post Germs as serial killers first appeared on Блогът на Юруков.

Comic for 2021.01.05

Post Syndicated from Explosm.net original http://explosm.net/comics/5760/

New Cyanide and Happiness Comic

How FactSet automates thousands of AWS accounts at scale

Post Syndicated from Amit Borulkar original https://aws.amazon.com/blogs/devops/factset-automation-at-scale/

This post is by FactSet’s Cloud Infrastructure team, Gaurav Jain, Nathan Goodman, Geoff Wang, Daniel Cordes, Sunu Joseph, and AWS Solution Architects Amit Borulkar and Tarik Makota. In their own words, “FactSet creates flexible, open data and software solutions for tens of thousands of investment professionals around the world, which provides instant access to financial data and analytics that investors use to make crucial decisions. At FactSet, we are always working to improve the value that our products provide.”

At FactSet, our operational goal in using the AWS Cloud is to have high developer velocity alongside enterprise governance. Assigning AWS accounts per project enables the agility and isolation boundary needed by each of the project teams to innovate faster. As existing workloads were migrated and new workloads were developed in the cloud, we realized that we were operating close to thousands of AWS accounts. To have a consistent and repeatable experience for diverse project teams, we automated the AWS account creation process, various service control policies (SCP) and AWS Identity and Access Management (IAM) policies and roles associated with the accounts, and enforced policies for ongoing configuration across the accounts. This post covers our automation workflows to enable governance for thousands of AWS accounts.

AWS account creation workflow

To empower our project teams to operate in the AWS Cloud in an agile manner, we developed a platform that enables AWS account creation with the default configuration customized to meet FactSet’s governance policies. These AWS accounts are provisioned with defaults such as a virtual private cloud (VPC), subnets, routing tables, IAM roles, SCP policies, add-ons for monitoring and load-balancing, and FactSet-specific governance. Developers and project team members can request a micro account for their product via this platform’s website, or do so programmatically using an API or wrap-around custom Terraform modules. The following screenshot shows a portion of the web interface that allows developers to request an AWS account.

Continue reading How FactSet automates thousands of AWS accounts at scale

The best new features for data analysts in Amazon Redshift in 2020

Post Syndicated from Helen Anderson original https://aws.amazon.com/blogs/big-data/the-best-new-features-for-data-analysts-in-amazon-redshift-in-2020/

This is a guest post by Helen Anderson, data analyst and AWS Data Hero

Every year, the Amazon Redshift team launches new and exciting features, and 2020 was no exception. New features to improve the data warehouse service and add interoperability with other AWS services were rolling out all year.

I am part of a team that for the past 3 years has used Amazon Redshift to store source tables from systems around the organization and usage data from our software as a service (SaaS) product. Amazon Redshift is our one source of truth. We use it to prepare operational reports that support the business and for ad hoc queries when numbers are needed quickly.

When AWS re:Invent comes around, I look forward to the new features, enhancements, and functionality that make things easier for analysts. If you haven’t tried Amazon Redshift in a while, or even if you’re a longtime user, these new capabilities are designed with analysts in mind to make it easier to analyze data at scale.

Amazon Redshift ML

The newly launched preview of Amazon Redshift ML lets data analysts use Amazon SageMaker over datasets in Amazon Redshift to solve business problems without the need for a data scientist to create custom models.

As a data analyst myself, I find this one of the most interesting announcements to come out of re:Invent 2020. Analysts generally use SQL to query data and present insights, but they don’t often do data science too. Now there is no need to wait for a data scientist or learn a new language to create predictive models.

For information about what you need to get started with Amazon Redshift ML, see Create, train, and deploy machine learning models in Amazon Redshift using SQL with Amazon Redshift ML.

Federated queries

As analysts, we often have to join datasets that aren’t in the same format and sometimes aren’t ready for use in the same place. By using federated queries to access data in other databases or Amazon Simple Storage Service (Amazon S3), you don’t need to wait for a data engineer or ETL process to move data around.

re:Invent 2019 featured some interesting talks from Amazon Redshift customers who were tackling this problem. Now that federated queries over operational databases like Amazon RDS for PostgreSQL and Amazon Aurora PostgreSQL are generally available and querying Amazon RDS for MySQL and Amazon Aurora MySQL is in preview, I’m excited to hear more.

For a step-by-step example to help you get started, see Build a Simplified ETL and Live Data Query Solution Using Redshift Federated Query.

SUPER data type

Another problem we face as analysts is that the data we need isn’t always in rows and columns. The new SUPER data type makes JSON data easy to use natively in Amazon Redshift with PartiQL.

PartiQL is an extension that helps analysts get up and running quickly with structured and semistructured data so you can unnest and query using JOINs and aggregates. This is really exciting for those who deal with data coming from applications that store data in JSON or unstructured formats.

For use cases and a quickstart, see Ingesting and querying semistructured data in Amazon Redshift (preview).

Partner console integration

The preview of the native console integration with partners announced at AWS re:Invent 2020 will also make data analysis quicker and easier. Although analysts might not be doing the ETL work themselves, this new release makes it easier to move data from platforms like Salesforce, Google Analytics, and Facebook Ads into Amazon Redshift.

Matillion, Sisense, Segment, Etleap, and Fivetran are launch partners, with other partners coming soon. If you’re an Amazon Redshift partner and would like to integrate into the console, contact [email protected].

RA3 nodes with managed storage

Previously, when you added Amazon Redshift nodes to a cluster, both storage and compute were scaled up. This all changed with the 2019 announcement of RA3 nodes, which let you scale storage and compute independently.

In 2020, the Amazon Redshift team introduced RA3.xlplus nodes, which offer even more compute sizing options to address a broader set of workload requirements.

AQUA for Amazon Redshift

As analysts, we want our queries to run quickly so we can spend more time empowering the users of our insights and less time watching data slowly return. AQUA, the Advanced Query Accelerator for Amazon Redshift, tackles this problem at an infrastructure level by bringing the stored data closer to the compute power.

This hardware-accelerated cache enables Amazon Redshift to run up to 10 times faster as it scales out and processes data in parallel across many nodes. Each node accelerates compression, encryption, and data processing tasks like scans, aggregates, and filtering. Analysts should still try their best to write efficient code, but the power of AQUA will speed up the return of results considerably.

AQUA is available on Amazon Redshift RA3 instances at no additional cost. To get started with AQUA, sign up for the preview.

The following diagram shows Amazon Redshift architecture with an AQUA layer.

Figure 1: Amazon Redshift architecture with AQUA layer

Automated performance tuning

For analysts who haven’t used sort and distribution keys, the learning curve can be steep. A table created with the wrong keys can mean results take much longer to return.

Automatic table optimization tackles this problem by using machine learning to select the best keys and tune the physical design of tables. Letting Amazon Redshift determine how to improve cluster performance reduces manual effort.

Summary

These are just some of the Amazon Redshift announcements made in 2020 to help analysts get query results faster. Some of these features help you get access to the data you need, whether it’s in Amazon Redshift or somewhere else. Others are under-the-hood enhancements that make things run smoothly with less manual effort.

For more information about these announcements and a complete list of new features, see What’s New in Amazon Redshift.

About the Author

Helen Anderson is a Data Analyst based in Wellington, New Zealand. She is well known in the data community for writing beginner-friendly blog posts, teaching, and mentoring those who are new to tech. As a woman in tech and a career switcher, Helen is particularly interested in inspiring those who are underrepresented in the industry.

Building a real-time notification system with Amazon Kinesis Data Streams for Amazon DynamoDB and Amazon Kinesis Data Analytics for Apache Flink

Post Syndicated from Saurabh Shrivastava original https://aws.amazon.com/blogs/big-data/building-a-real-time-notification-system-with-amazon-kinesis-data-streams-for-amazon-dynamodb-and-amazon-kinesis-data-analytics-for-apache-flink/

Amazon DynamoDB helps you capture high-velocity data such as clickstream data to form customized user profiles and Internet of Things (IoT) data so that you can develop insights on sensor activity across various industries, including smart spaces, connected factories, smart packing, fitness monitoring, and more. It’s important to store these data points in a centralized data lake in real time, where they can be transformed, analyzed, and combined with diverse organizational datasets to derive meaningful insights and make predictions.

A popular use case in the wind energy sector is to protect wind turbines from excessive wind speed. As per National Wind Watch, every wind turbine has a range of wind speeds, typically 30–55 mph, in which it produces maximum capacity. When wind speed is greater than 70 mph, it’s important to start a shutdown to protect the turbine from a high wind storm. Customers often store high-velocity IoT data in DynamoDB and use Amazon Kinesis streaming to extract data and store it in a centralized data lake built on Amazon Simple Storage Service (Amazon S3). To facilitate this ingestion pipeline, you can deploy AWS Lambda functions or write custom code to build a bridge between DynamoDB Streams and Kinesis streaming.

Amazon Kinesis Data Streams for DynamoDB helps you publish item-level changes in any DynamoDB table to a Kinesis data stream of your choice. Additionally, you can take advantage of this feature for use cases that require longer data retention on the stream and fan out to multiple concurrent stream readers. You also can integrate with Amazon Kinesis Data Analytics or Amazon Kinesis Data Firehose to publish data to downstream destinations such as Amazon Elasticsearch Service, Amazon Redshift, or Amazon S3.

In this post, you use Kinesis Data Analytics for Apache Flink (Data Analytics for Flink) and Amazon Simple Notification Service (Amazon SNS) to send a real-time notification when wind speed is greater than 60 mph so that the operator can take action to protect the turbine. You use Kinesis Data Streams for DynamoDB and take advantage of managed streaming delivery of DynamoDB data to other AWS services without having to use Lambda or write and maintain complex code. To process DynamoDB events from Kinesis, you have multiple options: Amazon Kinesis Client Library (KCL) applications, Lambda, and Data Analytics for Flink. In this post, we showcase Data Analytics for Flink, but this is just one of many available options.

Architecture

The following architecture diagram illustrates the wind turbine protection system.

In this architecture, high-velocity wind speed data comes from the wind turbine and is stored in DynamoDB. To send an instant notification, you need to query the data in real time and send a notification when the wind speed is greater than the established maximum. To achieve this goal, you enable Kinesis Data Streams for DynamoDB, and then use Data Analytics for Flink to query real-time data in a 60-second tumbling window. This aggregated data is stored in another data stream, which triggers an email notification via Amazon SNS using Lambda when the wind speed is greater than 60 mph. You will build this entire data pipeline in a serverless manner.
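The Lambda step in this architecture can be pictured roughly as follows. This is a minimal Python sketch, not the function that the CloudFormation template deploys: the record field names (`turbineId`, `averageSpeed`), the `build_alerts` helper, and the injected `publish` callable are assumptions made for illustration. The one detail taken as given is that Lambda receives Kinesis record payloads base64-encoded under `Records[].kinesis.data`.

```python
import base64
import json

THRESHOLD_MPH = 60  # alert threshold used in this post


def build_alerts(event, threshold=THRESHOLD_MPH):
    """Decode Kinesis records from a Lambda event and return alert messages."""
    alerts = []
    for record in event.get("Records", []):
        # Kinesis payloads arrive base64-encoded inside the Lambda event.
        payload = base64.b64decode(record["kinesis"]["data"])
        reading = json.loads(payload)
        # Field names are hypothetical; match them to your output stream.
        if reading["averageSpeed"] > threshold:
            alerts.append(
                "Turbine {} average wind speed {:.1f} mph exceeds {} mph".format(
                    reading["turbineId"], reading["averageSpeed"], threshold
                )
            )
    return alerts


def handler(event, context, publish=print):
    """Lambda-style entry point; `publish` stands in for an SNS publish call."""
    alerts = build_alerts(event)
    for message in alerts:
        publish(message)
    return {"alertsSent": len(alerts)}
```

In a real deployment, `publish` would likely wrap an Amazon SNS publish call for the topic created by the stack; it is injected here so the logic can be exercised without AWS credentials.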

Deploying the wind turbine data simulator

To replicate a real-life scenario, you need a wind turbine data simulator. We use AWS Amplify in this post to deploy a user-friendly web application that can generate the required data and store it in DynamoDB. You need a GitHub account so that you can fork the Amplify app code and deploy it in your AWS account automatically.

Complete the following steps to deploy the data simulator web application:

- Choose the following AWS Amplify link to launch the wind turbine data simulator web app.

- Choose Connect to GitHub and provide credentials, if required.

- In the Deploy App section, under Select service role, choose Create new role.

- Follow the instructions to create the role amplifyconsole-backend-role.

- When the role is created, choose it from the drop-down menu.

- Choose Save and deploy.

On the next page, the dynamodb-streaming app is ready to deploy.

- Choose Continue.

On the next page, you can see the app build and deployment progress, which might take as many as 10 minutes to complete.

- When the process is complete, choose the URL on the left to access the data generator user interface (UI).

- Make sure to save this URL because you will use it in later steps.

You also get an email during the build process related to your SSH key. This email indicates that the build process created an SSH key on your behalf to connect to the Amplify application with GitHub.

- On the sign-in page, choose Create account.

- Provide a user name, password, and valid email to which the app can send you a one-time passcode to access the UI.

- After you sign in, choose Generate data to generate wind speed data.

- Choose the Refresh icon to show the data in the graph.

You can generate a variety of data by changing the range of minimum and maximum speeds and the number of values.

To see the data in DynamoDB, choose the DynamoDB icon, note the table name that starts with windspeed-, and navigate to the table in the DynamoDB console.

Now that the wind speed data simulator is ready, let’s deploy the rest of the data pipeline.

Deploying the automated data pipeline by using AWS CloudFormation

You use AWS CloudFormation templates to create all the necessary resources for the data pipeline. This removes opportunities for manual error, increases efficiency, and ensures consistent configurations over time. You can view the template and code in the GitHub repository.

- Choose Launch with CloudFormation Console:

- Choose the US West (Oregon) Region (us-west-2).

- For pEmail, enter a valid email to which the analytics pipeline can send notifications.

- Choose Next.

- Acknowledge that the template may create AWS Identity and Access Management (IAM) resources.

- Choose Create stack.

This CloudFormation template creates the following resources in your AWS account:

- An IAM role to provide a trust relationship between Kinesis and DynamoDB to replicate data from DynamoDB to the data stream

- Two data streams:

- An input stream to replicate data from DynamoDB

- An output stream to store aggregated data from the Data Analytics for Flink app

- A Lambda function

- An SNS topic to send email notifications about high wind speeds

- When the stack is ready, on the Outputs tab, note the values of both data streams.

Check your email and confirm your subscription to receive notifications. Make sure to check your junk folder if you don’t see the email in your inbox.

Now you can use Kinesis Data Streams for DynamoDB, which allows you to have your data in both DynamoDB and Kinesis without having to use Lambda or write custom code.

Enabling Kinesis streaming for DynamoDB

AWS recently launched Kinesis Data Streams for DynamoDB so that you can send data from DynamoDB to Kinesis Data Streams. You can use the AWS Command Line Interface (AWS CLI) or the AWS Management Console to enable this feature.

To enable this feature from the console, complete the following steps:

- In the DynamoDB console, choose the table that you created earlier (it begins with the prefix windspeed-).

- On the Overview tab, choose Manage streaming to Kinesis.

- Choose your input stream.

- Choose Enable.

- Choose Close.

Make sure that Stream enabled is set to Yes.
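As an alternative to the console steps, the same setting can be applied programmatically. The following is a minimal Python sketch that assumes a boto3-style DynamoDB client; `enable_kinesis_streaming` is a hypothetical helper name, and the table name and stream ARN you pass in are your own values from the earlier steps.

```python
def enable_kinesis_streaming(dynamodb_client, table_name, stream_arn):
    """Ask DynamoDB to replicate item-level changes to a Kinesis data stream.

    `dynamodb_client` is expected to behave like boto3.client("dynamodb").
    """
    response = dynamodb_client.enable_kinesis_streaming_destination(
        TableName=table_name,
        StreamArn=stream_arn,
    )
    # The reported status is usually "ENABLING" right after the call.
    return response.get("DestinationStatus")
```

With boto3 installed and credentials configured, you would pass `boto3.client("dynamodb", region_name="us-west-2")` as the client, together with the `windspeed-` table name and the ARN of the input stream noted on the stack's Outputs tab.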

Building the Data Analytics for Flink app for real-time data queries

As part of the CloudFormation stack, the new Data Analytics for Flink application is deployed in the configured AWS Region. When the stack is up and running, the application appears in the Kinesis Data Analytics console for that Region. Choose Run to start the app.

When your app is running, you should see the following application graph.

Review the Properties section of the app, which shows you the input and output streams that the app is using.

The next section walks through the important code snippets of the Flink Java application, which show how it reads data from a data stream, aggregates the data, and outputs it to another data stream.

Diving deep into the Flink Java application code

In the following code, createSourceFromStaticConfig provides all the wind turbine speed readings from the input stream in string format, which we pass to the WindTurbineInputMap map function. This function parses the string into the Tuple3 data type (for example, Tuple3<>(turbineID, speed, 1)). All Tuple3 messages are grouped by turbineID so that a one-minute tumbling window can be applied. The AverageReducer reduce function provides two things: the sum of all the speeds for the specific turbineId in the one-minute window, and a count of the messages for the specific turbineId in the one-minute window. The AverageMap map function takes the output of the AverageReducer reduce function and transforms it into Tuple2 (for example, Tuple2<>(turbineId, averageSpeed)). Then all turbineIds with an average speed greater than 60 are filtered and mapped to a JSON-formatted message, which we send to the output stream by using the createSinkFromStaticConfig sink function.
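The steps described above can be mirrored in plain Python as a rough sketch. This is not the Flink Java code from the repository and it does not use the Flink API: the function names only echo the ones in the text, the JSON layout of the input messages (`turbineId`, `speed`) is an assumption, and `process_window` is a hypothetical helper that receives the messages of a single one-minute window that has already been collected.

```python
import json
from functools import reduce
from itertools import groupby


def wind_turbine_input_map(message):
    """Parse a raw string into (turbine_id, speed, 1); the JSON layout is assumed."""
    data = json.loads(message)
    return (data["turbineId"], float(data["speed"]), 1)


def average_reducer(a, b):
    """Combine two tuples for the same turbine: sum the speeds and the counts."""
    return (a[0], a[1] + b[1], a[2] + b[2])


def average_map(t):
    """Turn (turbine_id, speed_sum, count) into (turbine_id, average_speed)."""
    return (t[0], t[1] / t[2])


def process_window(messages, threshold=60.0):
    """Process the messages of one tumbling window and return JSON alerts."""
    # Sort by turbine ID so that groupby sees each turbine as one contiguous run.
    parsed = sorted((wind_turbine_input_map(m) for m in messages), key=lambda t: t[0])
    alerts = []
    for _, group in groupby(parsed, key=lambda t: t[0]):
        turbine_id, average_speed = average_map(reduce(average_reducer, group))
        if average_speed > threshold:
            alerts.append(
                json.dumps({"turbineId": turbine_id, "averageSpeed": average_speed})
            )
    return alerts
```

Carrying the count of 1 in every parsed tuple is what lets a simple pairwise reduce produce both the sum and the number of readings, so the average can be computed in a single final map step.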

The following code demonstrates how the createSourceFromStaticConfig and createSinkFromStaticConfig functions read the input and output stream names from the properties of the Data Analytics for Flink application and establish the source and sink of the streams.

In the following code, the WindTurbineInputMap map function parses Tuple3 out of the string message. Additionally, the AverageMap map and AverageReducer reduce functions process messages to accumulate and transform data.

Receiving email notifications of high wind speed

The following screenshot shows an example of the notification email you will receive about high wind speeds.

To test the feature, in this section you generate high wind speed data from the simulator, which is stored in DynamoDB, and get an email notification when the average wind speed is greater than 60 mph for a one-minute period. You’ll observe wind data flowing through the data stream and Data Analytics for Flink.

To test this feature:

- Generate wind speed data in the simulator and confirm that it’s stored in DynamoDB.

- In the Kinesis Data Streams console, choose the input data stream, kds-ddb-blog-InputKinesisStream.

- On the Monitoring tab of the stream, you can observe the Get records – sum (Count) metrics, which show multiple records captured by the data stream automatically.

- In the Kinesis Data Analytics console, choose the Data Analytics for Flink application, kds-ddb-blog-windTurbineAggregator.

- On the Monitoring tab, you can see the Last Checkpoint metrics, which show multiple records captured by the Data Analytics for Flink app automatically.

- In the Kinesis Data Streams console, choose the output stream, kds-ddb-blog-OutputKinesisStream.

- On the Monitoring tab, you can see the Get records – sum (Count) metrics, which show multiple records output by the app.

- Finally, check your email for a notification.

If you don’t see a notification, change the data simulator value range to a minimum of 50 mph and a maximum of 90 mph and wait a few minutes.

Conclusion

As you have learned in this post, you can build an end-to-end serverless analytics pipeline to get real-time insights from DynamoDB by using Kinesis Data Streams—all without writing any complex code. This allows your team to focus on solving business problems by getting useful insights immediately. IoT and application development have a variety of use cases for moving data quickly through an analytics pipeline, and you can make this happen by enabling Kinesis Data Streams for DynamoDB.

If this blog post helps you or inspires you to solve a problem, we would love to hear about it! The code for this solution is available in the GitHub repository for you to use and extend. Contributions are always welcome!

About the Authors

Saurabh Shrivastava is a solutions architect leader and analytics/machine learning specialist working with global systems integrators. He works with AWS partners and customers to provide them with architectural guidance for building scalable architecture in hybrid and AWS environments. He enjoys spending time with his family outdoors and traveling to new destinations to discover new cultures.

Sameer Goel is a solutions architect in Seattle who drives customers’ success by building prototypes on cutting-edge initiatives. Prior to joining AWS, Sameer graduated with a Master’s degree with a Data Science concentration from NEU Boston. He enjoys building and experimenting with creative projects and applications.

Pratik Patel is a senior technical account manager and streaming analytics specialist. He works with AWS customers and provides ongoing support and technical guidance to help plan and build solutions by using best practices, and proactively helps keep customers’ AWS environments operationally healthy.

[$] LibreSSL languishes on Linux

Post Syndicated from original https://lwn.net/Articles/841664/rss

The LibreSSL project has been developing a fork of the OpenSSL package since 2014; it is supported as part of OpenBSD. Adoption of LibreSSL on the Linux side has been slow from the start, though, and it would appear that the situation is about to get worse. LibreSSL is starting to look like an idea whose time may never come in the Linux world.

Military Cryptanalytics, Part III

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2021/01/military-cryptanalytics-part-iii.html

The NSA has just declassified and released a redacted version of Military Cryptanalytics, Part III, by Lambros D. Callimahos, October 1977.

Parts I and II, by Lambros D. Callimahos and William F. Friedman, were released decades ago (I believe repeatedly, in increasingly unredacted form) and published by the late Wayne Griswold Barker’s Aegean Park Press. I own them in hardcover.

Like Parts I and II, Part III is primarily concerned with pre-computer ciphers. At this point, the document only has historical interest. If there is any lesson for today, it’s that modern cryptanalysis is possible primarily because people make mistakes.

The monograph took a while to become public. The cover page says that the initial FOIA request was made in July 2012: eight and a half years ago.

And there’s more books to come. Page 1 starts off:

This text constitutes the third of six basic texts on the science of cryptanalytics. The first two texts together have covered most of the necessary fundamentals of cryptanalytics; this and the remaining three texts will be devoted to more specialized and more advanced aspects of the science.

Presumably, volumes IV, V, and VI are still hidden inside the classified libraries of the NSA.

And from page ii:

Chapters IV-XI are revisions of seven of my monographs in the NSA Technical Literature Series, viz: Monograph No. 19, “The Cryptanalysis of Ciphertext and Plaintext Autokey Systems”; Monograph No. 20, “The Analysis of Systems Employing Long or Continuous Keys”; Monograph No. 21, “The Analysis of Cylindrical Cipher Devices and Strip Cipher Systems”; Monograph No. 22, “The Analysis of Systems Employing Geared Disk Cryptomechanisms”; Monograph No. 23, “Fundamentals of Key Analysis”; Monograph No. 15, “An Introduction to Teleprinter Key Analysis”; and Monograph No. 18, “Ars Conjectandi: The Fundamentals of Cryptodiagnosis.”

This points to a whole series of still-classified monographs whose titles we do not even know.

EDITED TO ADD: I have been informed by a reliable source that Parts 4 through 6 were never completed. There may be fragments and notes, but no finished works.

Accessing and visualizing data from multiple data sources with Amazon Athena and Amazon QuickSight

Post Syndicated from Saurabh Bhutyani original https://aws.amazon.com/blogs/big-data/accessing-and-visualizing-data-from-multiple-data-sources-with-amazon-athena-and-amazon-quicksight/

Amazon Athena now supports federated query, a feature that allows you to query data in sources other than Amazon Simple Storage Service (Amazon S3). You can use federated queries in Athena to query the data in place or build pipelines that extract data from multiple data sources and store them in Amazon S3. With Athena Federated Query, you can run SQL queries across data stored in relational, non-relational, object, and custom data sources. Athena queries, including federated queries, can be run from the Athena console, a JDBC or ODBC connection, the Athena API, the Athena CLI, the AWS SDK, or AWS Tools for Windows PowerShell.

The goal for this post is to discuss how we can use different connectors to run federated queries with complex joins across different data sources with Athena and visualize the data with Amazon QuickSight.

Athena Federated Query

Athena uses data source connectors that run on AWS Lambda to run federated queries. A data source connector is a piece of code that translates between your target data source and Athena. You can think of a connector as an extension of the Athena query engine. Prebuilt Athena data source connectors exist for data sources like Amazon CloudWatch Logs, Amazon DynamoDB, Amazon DocumentDB (with MongoDB compatibility), Amazon Elasticsearch Service (Amazon ES), Amazon ElastiCache for Redis, and JDBC-compliant relational data sources such as MySQL, PostgreSQL, and Amazon Redshift under the Apache 2.0 license. You can also use the Athena Query Federation SDK to write custom connectors. After you deploy a data source connector, it is associated with a catalog name that you can specify in your SQL queries. You can combine SQL statements from multiple catalogs and span multiple data sources with a single query.

When a query is submitted against a data source, Athena invokes the corresponding connector to identify parts of the tables that need to be read, manages parallelism, and pushes down filter predicates. Based on the user submitting the query, connectors can provide or restrict access to specific data elements. Connectors use Apache Arrow as the format for returning data requested in a query, which enables connectors to be implemented in languages such as C, C++, Java, Python, and Rust. Because connectors run in Lambda, you can use them to access data from any data source in the cloud or on premises that is accessible from Lambda.

Prerequisites

Before creating your development environment, you must have the following prerequisites:

- An AWS account that provides access to AWS services.

- An AWS Identity and Access Management (IAM) user with an access key and secret key to configure the AWS Command Line Interface (AWS CLI).

- The IAM user also needs permissions to create an IAM role and policies, and create stacks in AWS CloudFormation.

- An environment set up. For this post, we use the CloudFormation template from the post Extracting and joining data from multiple data sources with Athena Federated Query.

- A QuickSight subscription. If you don’t have a QuickSight subscription configured, see Signing Up for an Amazon QuickSight Subscription. You can access the QuickSight free trial as part of the AWS free tier option.

Configuring your data source connectors

After you deploy your CloudFormation stack, follow the instructions in the post Extracting and joining data from multiple data sources with Athena Federated Query to configure various Athena data source connectors for HBase on Amazon EMR, DynamoDB, ElastiCache for Redis, and Amazon Aurora MySQL.

You can run Athena federated queries in the AmazonAthenaPreviewFunctionality workgroup created as part of the CloudFormation stack, or in the primary workgroup or any other workgroup, as long as it runs Athena engine version 2. As of this writing, Athena Federated Query is generally available in the Asia Pacific (Mumbai), Asia Pacific (Tokyo), Europe (Ireland), US East (N. Virginia), US East (Ohio), US West (N. California), and US West (Oregon) Regions. If you’re running in other Regions, use the AmazonAthenaPreviewFunctionality workgroup.

For information about changing your workgroup to Athena engine version 2, see Changing Athena Engine Versions.

Configuring QuickSight

The next step is to configure QuickSight to use these connectors to query and visualize data.

- On the AWS Management Console, navigate to QuickSight.

- If you’re not signed up for QuickSight, you’re prompted with the option to sign up. Follow the steps to sign up to use QuickSight.

- After you log in to QuickSight, choose Manage QuickSight under your account.

- In the navigation pane, choose Security & permissions.

- Under QuickSight access to AWS services, choose Add or remove.

A page appears for enabling QuickSight access to AWS services.

- Choose Athena.

- In the pop-up window, choose Next.

- On the S3 tab, select the necessary S3 buckets. For this post, I select the athena-federation-workshop-<account_id> bucket and another one that stores my Athena query results.

- For each bucket, also select Write permission for Athena Workgroup.

- On the Lambda tab, select the Lambda functions corresponding to the Athena federated connectors that Athena federated queries use. If you followed the post Extracting and joining data from multiple data sources with Athena Federated Query when configuring your Athena federated connectors, you can select dynamo, hbase, mysql, and redis.

For information about registering a data source in Athena, see the appendix in this post.

- Choose Finish.

- Choose Update.

- On the QuickSight console, choose New analysis.

- Choose New dataset.

- For Datasets, choose Athena.

- For Data source name, enter Athena-federation.

- For Athena workgroup, choose primary.

- Choose Create data source.

As stated earlier, you can use the AmazonAthenaPreviewFunctionality workgroup or another workgroup as long as you’re running Athena engine version 2 in a supported Region.

- For Catalog, choose the catalog that you created for your Athena federated connector.

For information about creating and registering a data source in Athena, see the appendix in this post.

- For this post, I choose the dynamo catalog, which federates queries through the Athena DynamoDB connector.

I can now see the database and tables listed in QuickSight.

- Choose Edit/Preview data to see the data.

- Choose Save & Visualize to start using this data for creating visualizations in QuickSight.

- To do a join with another Athena data source, choose Add data and select the catalog and table.

- Choose the join link between the two datasets and choose the appropriate join configuration.

- Choose Apply.

You should be able to see the joined data.

Running a query in QuickSight

Now we use the custom SQL option in QuickSight to run a complex query with multiple Athena federated data sources.

- On the QuickSight console, choose New analysis.

- Choose New dataset.

- For Datasets, choose Athena.

- For Data source name, enter Athena-federation.

- For the workgroup, choose primary.

- Choose Create data source.

- Choose Use custom SQL.

- Enter the query for ProfitBySupplierNation.

- Choose Edit/Preview data.

Under Query mode, you can run your query in either SPICE or direct query mode. SPICE is the QuickSight Super-fast, Parallel, In-memory Calculation Engine. It’s engineered to rapidly perform advanced calculations and serve data. Using SPICE can save time and money because your analytical queries process faster, you don’t need to wait for a direct query to process, and you can reuse data stored in SPICE multiple times without incurring additional costs. You can also refresh data in SPICE on a recurring basis or on demand. For more information about refresh options, see Refreshing Data.

With direct query, QuickSight doesn’t use SPICE data and sends the query every time to Athena to get the data.
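If you prefer to create the dataset programmatically, the same choice between SPICE and direct query appears as the ImportMode field of the QuickSight CreateDataSet API. The following minimal sketch only builds the request parameters. The account ID, data source ARN, dataset ID, column list, and SQL text are hypothetical placeholders, and the SQL is a stand-in rather than the ProfitBySupplierNation query used in this post.

```python
from typing import Dict, List, Tuple


def build_custom_sql_dataset(
    account_id: str,
    data_source_arn: str,
    sql: str,
    columns: List[Tuple[str, str]],
    use_spice: bool = True,
) -> Dict:
    """Build keyword arguments for the QuickSight create_data_set call."""
    return {
        "AwsAccountId": account_id,
        "DataSetId": "profit-by-supplier-nation",  # hypothetical dataset ID
        "Name": "ProfitBySupplierNation",
        "PhysicalTableMap": {
            "custom-sql": {
                "CustomSql": {
                    "DataSourceArn": data_source_arn,
                    "Name": "ProfitBySupplierNation",
                    "SqlQuery": sql,
                    "Columns": [{"Name": n, "Type": t} for n, t in columns],
                }
            }
        },
        # SPICE imports the query result; DIRECT_QUERY sends the query to Athena each time.
        "ImportMode": "SPICE" if use_spice else "DIRECT_QUERY",
    }


# All values below are placeholders for illustration only.
request = build_custom_sql_dataset(
    account_id="123456789012",
    data_source_arn="arn:aws:quicksight:us-east-1:123456789012:datasource/athena-federation",
    sql="SELECT nation, sum_profit FROM placeholder_table",
    columns=[("nation", "STRING"), ("sum_profit", "DECIMAL")],
)
print(request["ImportMode"])
```

Assuming boto3 is installed and credentials are configured, the dictionary could be passed as boto3.client("quicksight").create_data_set(**request).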

- Select SPICE.

- Choose Apply.

- Choose Save & visualize.

- On the Visualize page, under Fields list, choose nation and sum_profit.

QuickSight automatically chooses the best visualization type based on the selected fields. You can change the visual type to suit your requirements. The following screenshot shows a pie chart for Sum_profit grouped by Nation.

You can add more datasets using Athena federated queries and create dashboards. The following screenshot is an example of a visual analysis over various datasets that were added as part of this post.

When your analysis is ready, you can choose Share to create a dashboard and share it within your organization.

Summary

QuickSight is a powerful visualization tool, and with Athena federated queries, you can run analysis and build dashboards on various data sources like DynamoDB, HBase on Amazon EMR, and many more. You can also easily join relational, non-relational, and custom object stores in Athena queries and use them with QuickSight to create visualizations and dashboards.

For more information about Athena Federated Query, see Using Amazon Athena Federated Query and Query any data source with Amazon Athena’s new federated query.

Appendix

To register a data source in Athena, complete the following steps:

- On the Athena console, choose Data sources.

- Choose Connect data source.

- Select Query a data source.

- For Choose a data source, select a data source (for this post, I select Redis).

- Choose Next.

- For Lambda function, choose your function.

For this post, I use the redis Lambda function, which I configured as part of configuring the Athena federated connector in the post Extracting and joining data from multiple data sources with Athena Federated Query.

- For Catalog name, enter a name (for example, redis).

The catalog name you specify here is the one that is displayed in QuickSight when selecting Lambda functions for access.

- Choose Connect.

When the data source is registered, it’s available in the Data source drop-down list on the Athena console.
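The console steps above can also be approximated with the Athena CreateDataCatalog API. The following sketch builds the request for a Lambda-backed catalog and keeps the boto3 call in a separate function. The function ARN and Region are hypothetical placeholders, and the sketch assumes a single Lambda function that handles both metadata and record requests.

```python
from typing import Dict, Optional


def build_lambda_catalog_request(
    catalog_name: str, function_arn: str, description: Optional[str] = None
) -> Dict:
    """Build keyword arguments for the Athena create_data_catalog call."""
    request = {
        "Name": catalog_name,
        "Type": "LAMBDA",
        # A single function that serves both metadata and record requests.
        "Parameters": {"function": function_arn},
    }
    if description:
        # Only include the description when one is provided.
        request["Description"] = description
    return request


def register_catalog(request: Dict, region: str = "us-east-1") -> Dict:
    """Send the request to Athena. Requires boto3 and AWS credentials."""
    import boto3  # imported lazily so building the request needs no AWS SDK

    return boto3.client("athena", region_name=region).create_data_catalog(**request)


# Hypothetical ARN: replace it with the ARN of your connector's Lambda function.
request = build_lambda_catalog_request(
    "redis", "arn:aws:lambda:us-east-1:123456789012:function:redis"
)
print(request)
```

Calling register_catalog(request) would then register the catalog under the name redis.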

About the Author

Saurabh Bhutyani is a Senior Big Data Specialist Solutions Architect at Amazon Web Services. He is an early adopter of open-source big data technologies. At AWS, he works with customers to provide architectural guidance for running analytics solutions on Amazon EMR, Amazon Athena, AWS Glue, and AWS Lake Formation. In his free time, he likes to watch movies and spend time with his family.


Multi-tenant processing pipelines with AWS DMS, AWS Step Functions, and Apache Hudi on Amazon EMR

Post Syndicated from Francisco Oliveira original https://aws.amazon.com/blogs/big-data/multi-tenant-processing-pipelines-with-aws-dms-aws-step-functions-and-apache-hudi-on-amazon-emr/

Large enterprises often provide software offerings to multiple customers by providing each customer a dedicated and isolated environment (a software offering composed of multiple single-tenant environments). Because the data is in various independent systems, large enterprises are looking for ways to simplify data processing pipelines. To address this, you can create data lakes to bring your data to a single place.

Typically, a replication tool such as AWS Database Migration Service (AWS DMS) can replicate the data from your source systems to Amazon Simple Storage Service (Amazon S3). When the data is in Amazon S3, you process it based on your requirements. A typical requirement is to sync the data in Amazon S3 with the updates on the source systems. Although it’s easy to apply updates on a relational database management system (RDBMS) that backs an online source application, it’s tough to apply this change data capture (CDC) process on your data lakes. Apache Hudi is a good way to solve this problem. You can use Hudi on Amazon EMR to create Hudi tables (for more information, see Hudi in the Amazon EMR Release Guide).

This post introduces a pipeline that loads data and its ongoing changes (change data capture) from multiple single-tenant tables from different databases to a single multi-tenant table in an Amazon S3-backed data lake, simplifying data processing activities by creating multi-tenant datasets.

Architecture overview

At a high level, this architecture consolidates multiple single-tenant environments into a single multi-tenant dataset so data processing pipelines can be centralized. For example, suppose that your software offering has two tenants, each with their dedicated and isolated environment, and you want to maintain a single multi-tenant table that includes data of both tenants. Moreover, you want any ongoing replication (CDC) in the sources for tenant 1 and tenant 2 to be synchronized (compacted or reconciled) when an insert, delete, or update occurs in the source systems of the respective tenant.

In the past, to support record-level updates or inserts (called upserts) and deletes on an Amazon S3-backed data lake, you relied on either an Amazon Redshift cluster or an Apache Spark job that reconciled the updates, deletes, and inserts with existing historical data.

The architecture for our solution uses Hudi to simplify incremental data processing and data pipeline development by providing record-level insert, update, upsert, and delete capabilities. For more information, see Apache Hudi on Amazon EMR.
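To illustrate what record-level reconciliation means for a multi-tenant table, here is a small plain-Python sketch. It is not Hudi code. It only mimics the upsert and delete semantics on an in-memory dictionary keyed by tenant and record ID. The field names (tenant_id, id, op, row) are assumptions made for this example, and the I, U, and D operation codes follow the convention AWS DMS uses in its CDC output.

```python
from typing import Dict, Iterable, Tuple

Key = Tuple[str, int]  # (tenant_id, record id)


def apply_cdc(table: Dict[Key, dict], changes: Iterable[dict]) -> Dict[Key, dict]:
    """Apply insert (I), update (U), and delete (D) changes in order."""
    for change in changes:
        key = (change["tenant_id"], change["id"])
        op = change["op"]
        if op == "D":
            # Deleting a missing record is treated as a no-op.
            table.pop(key, None)
        elif op in ("I", "U"):
            # Inserts and updates both overwrite the record (an upsert).
            table[key] = change["row"]
        else:
            raise ValueError(f"Unknown operation: {op!r}")
    return table


# State after a hypothetical full load of two single-tenant sources.
multi_tenant = {
    ("tenant1", 1): {"name": "a"},
    ("tenant2", 1): {"name": "b"},
}

# Ongoing changes captured from each tenant's source system.
changes = [
    {"tenant_id": "tenant1", "id": 1, "op": "U", "row": {"name": "a2"}},
    {"tenant_id": "tenant2", "id": 2, "op": "I", "row": {"name": "c"}},
    {"tenant_id": "tenant2", "id": 1, "op": "D", "row": None},
]

print(apply_cdc(multi_tenant, changes))
```

Including the tenant identifier in the key keeps records with the same ID from different tenants from overwriting each other.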

Moreover, the architecture for our solution uses the following AWS services:

- AWS DMS – AWS DMS is a cloud service that makes it easy to migrate relational databases, data warehouses, NoSQL databases, and other types of data stores. For more information, see What is AWS Database Migration Service?

- AWS Step Functions – AWS Step Functions is a web service that enables you to coordinate the components of distributed applications and microservices using visual workflows. For more information, see What Is AWS Step Functions?

- Amazon EMR – Amazon EMR is a managed cluster platform that simplifies running big data frameworks, such as Apache Hadoop and Apache Spark, on AWS to process and analyze vast amounts of data. For more information, see Overview of Amazon EMR Architecture and Overview of Amazon EMR.

- Amazon S3 – Data is stored in Amazon S3, an object storage service with scalable performance, ease-of-use features, and native encryption and access control capabilities. For more details on Amazon S3, see Amazon S3 as the Data Lake Storage Platform.

Architecture deep dive

The following diagram illustrates our architecture.

This architecture relies on AWS Database Migration Service (AWS DMS) to transfer data from specific tables into an Amazon S3 location organized by tenant-id.