Post Syndicated from Bryant Bost original https://aws.amazon.com/blogs/architecture/micro-frontend-architectures-on-aws/

A microservice architecture is characterized by independent services that are focused on a specific business function and maintained by small, self-contained teams. Microservice architectures are used frequently for web applications developed on AWS, and for good reason. They offer many well-known benefits such as development agility, technological freedom, targeted deployments, and more. Despite the popularity of microservices, many frontend applications are still built in a monolithic style: a single large codebase that interacts with all backend microservices and is maintained by a large team of developers.

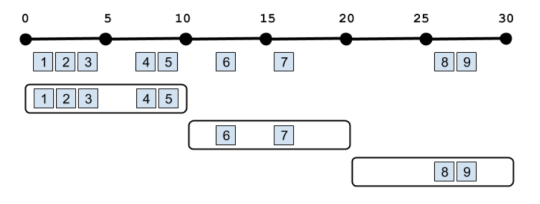

Figure 1. Microservice backend with monolith frontend

What is a Micro-frontend?

The micro-frontend architecture introduces microservice development principles to frontend applications. In a micro-frontend architecture, development teams independently build and deploy “child” frontend applications. These applications are combined by a “parent” frontend application that acts as a container to retrieve, display, and integrate the various child applications. In this parent/child model, the user interacts with what appears to be a single application. In reality, they are interacting with several independent applications, published by different teams.

Figure 2. Microservice backend with micro-frontend

Micro-frontend Benefits

Compared to a monolith frontend, a micro-frontend offers the following benefits:

- Independent artifacts: A core tenet of microservice development is that artifacts can be deployed independently, and this remains true for micro-frontends. In a micro-frontend architecture, teams should be able to independently deploy their frontend applications with minimal impact to other services. Those changes will be reflected by the parent application.

- Autonomous teams: Each team is the expert in its own domain. For example, the billing service team has specialized knowledge of the data models, business requirements, API calls, and user interactions associated with the billing service. This knowledge allows the team to develop the billing frontend faster than a larger, less specialized team.

- Flexible technology choices: Autonomy allows each team to make technology choices that are independent from other teams. For instance, the billing service team could develop their micro-frontend using Vue.js and the profile service team could develop their frontend using Angular.

- Scalable development: Micro-frontend development teams are smaller and are able to operate without disrupting other teams. This allows us to quickly scale development by spinning up new teams to deliver additional frontend functionality via child applications.

- Easier maintenance: Keeping frontend repositories small and specialized allows them to be more easily understood, and this simplifies long-term maintenance and testing. For instance, if you want to change an interaction on a monolith frontend, you must isolate the location and dependencies of the feature within the context of a large codebase. This type of operation is greatly simplified when dealing with the smaller codebases associated with micro-frontends.

Micro-frontend Challenges

Conversely, a micro-frontend presents the following challenges:

- Parent/child integration: A micro-frontend introduces the task of ensuring the parent application displays the child application with the same consistency and performance expected from a monolith application. This point is discussed further in the next section.

- Operational overhead: Instead of managing a single frontend application, a micro-frontend application involves creating and managing separate infrastructure for each team.

- Consistent user experience: In order to maintain a consistent user experience, the child applications must use the same UI components, CSS libraries, interactions, error handling, and more. Maintaining consistency in the user experience can be difficult for child applications that are at different stages in the development lifecycle.

Building Micro-frontends

The most difficult challenge with the micro-frontend architecture pattern is integrating child applications with the parent application. Prioritizing the user experience is critical for any frontend application. In the context of micro-frontends, this means ensuring a user can seamlessly navigate from one child application to another inside the parent application. We want to avoid disruptive behavior such as page refreshes or multiple logins. At its most basic definition, parent/child integration involves the parent application dynamically retrieving and rendering child applications when the parent app is loaded. Rendering the child application depends on how the child application was built, and this can be done in a number of ways. Two of the most popular methods of parent/child integration are:

- Building each child application as a web component.

- Importing each child application as an independent module. Each module either declares a function to render itself or is dynamically imported by the parent application (such as with module federation).
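As a rough illustration of the second approach, the sketch below assumes that each child bundle registers a render function with a small registry owned by the parent. The names `registerChildApp`, `mountChildApp`, and the `userName` prop are hypothetical and are not part of any AWS service or framework. This is a simplified stand-in for the idea, not module federation itself.

```javascript
// Hypothetical registry: each child bundle adds its render function here
// when its script is loaded by the parent page.
const childApps = new Map();

// Called by a child bundle, for example at the end of billing-service.js.
function registerChildApp(name, render) {
  if (typeof render !== "function") {
    throw new TypeError(`Child app "${name}" must provide a render function`);
  }
  childApps.set(name, render);
}

// Called by the parent to draw one child inside a container element.
// A missing or failing child is replaced by a fallback message so that
// one team's bug does not break the whole page.
function mountChildApp(name, container, props = {}) {
  const render = childApps.get(name);
  if (!render) {
    container.textContent = `${name} is unavailable.`;
    return false;
  }
  try {
    render(container, props);
    return true;
  } catch (err) {
    container.textContent = `${name} failed to load.`;
    return false;
  }
}

// Example child (assumed): what the billing team might ship in its bundle.
registerChildApp("billing-service", (container, props) => {
  container.textContent = `Billing for ${props.userName}`;
});
```

In a browser, the parent could then call `mountChildApp("billing-service", document.getElementById("billing"), { userName: "Ana" })` once the child script has loaded. Passing the container in, rather than letting the child search the page for it, keeps the child unaware of the parent's layout.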

Registering child apps as web components:

<html>
  <head>
    <script src="https://shipping.example.com/shipping-service.js"></script>
    <script src="https://profile.example.com/profile-service.js"></script>
    <script src="https://billing.example.com/billing-service.js"></script>
    <title>Parent Application</title>
  </head>
  <body>
    <!-- Custom elements cannot be self-closing, so each one needs an explicit end tag. -->
    <shipping-service></shipping-service>
    <profile-service></profile-service>
    <billing-service></billing-service>
  </body>
</html>

Registering child apps as modules:

<html>
  <head>
    <script src="https://shipping.example.com/shipping-service.js"></script>
    <script src="https://profile.example.com/profile-service.js"></script>
    <script src="https://billing.example.com/billing-service.js"></script>
    <title>Parent Application</title>
  </head>
  <body>
    <script>
      // Load and render the child applications from their JS bundles.
    </script>
  </body>
</html>

The following diagram shows an example micro-frontend architecture built on AWS.

Figure 3. Micro-frontend architecture on AWS

In this example, each service team is running a separate, identical stack to build their application. They use the AWS Developer Tools and deploy the application to Amazon Simple Storage Service (S3) with Amazon CloudFront. The CI/CD pipelines use shared components such as CSS libraries, API wrappers, or custom modules stored in AWS CodeArtifact. This helps drive consistency across parent and child applications.

When you retrieve the parent application, it should prompt you to log in to an identity provider and retrieve JSON Web Tokens (JWTs). In this example, the identity provider is an Amazon Cognito user pool. After a successful login, the parent application retrieves the child applications from CloudFront and renders them inside the parent application. Alternatively, the parent application can elect to render the child applications on demand, when you navigate to a specific route. The child applications should not require you to log in again to the Amazon Cognito user pool. They should be configured to use the JWT obtained by the parent application or to silently retrieve a new JWT from Amazon Cognito.
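One way to picture the token sharing described above is a small session object that the parent creates after login and hands to each child. The sketch below is an assumption-laden illustration: `createSession`, `getToken`, and the `refresh` callback are hypothetical names, and the callback stands in for whatever silent refresh the identity provider supports. It reads only the standard `exp` claim and does not verify the token signature, which remains the job of the backend APIs.

```javascript
// Decode the payload (second segment) of a JWT without verifying it.
// Enough for reading the expiry in the browser; non-ASCII claims are not handled.
function decodeJwtPayload(token) {
  const segment = token.split(".")[1];
  if (!segment) throw new Error("Not a JWT");
  // Convert base64url to base64, then restore the stripped padding.
  const base64 = segment.replace(/-/g, "+").replace(/_/g, "/");
  const padded = base64 + "=".repeat((4 - (base64.length % 4)) % 4);
  return JSON.parse(atob(padded));
}

// A token counts as expired slightly early (skewSeconds) so that a child
// does not send a token that expires while the request is in flight.
function isExpired(token, nowSeconds = Date.now() / 1000, skewSeconds = 30) {
  const { exp } = decodeJwtPayload(token);
  return typeof exp !== "number" || exp - skewSeconds <= nowSeconds;
}

// Hypothetical shared session: created once by the parent after login.
// `refresh` is an assumed async callback that silently obtains a new JWT.
function createSession(initialToken, refresh) {
  let token = initialToken;
  return {
    async getToken() {
      if (isExpired(token)) {
        token = await refresh();
      }
      return token;
    },
  };
}
```

Each child application would call `await session.getToken()` before an API request instead of starting its own login flow, so the user signs in only once.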

Conclusion

Micro-frontend architectures introduce many of the familiar benefits of microservice development to frontend applications. A micro-frontend architecture also simplifies the process of building complex frontend applications by allowing you to manage small, independent components.