Post Syndicated from Michael Tran original https://aws.amazon.com/blogs/devops/getting-started-with-projen-and-aws-cdk/

In the modern world of cloud computing, Infrastructure as Code (IaC) has become a vital practice for deploying and managing cloud resources. AWS Cloud Development Kit (AWS CDK) is a popular open-source framework that allows developers to define cloud resources using familiar programming languages. A related open source tool called Projen is a powerful project generator that simplifies the management of complex software configurations. In this post, we’ll explore how to get started with Projen and AWS CDK, and discuss the pros and cons of using Projen.

What is Projen?

Building modern and high quality software requires a large number of tools and configuration files to handle tasks like linting, testing, and automating releases. Each tool has its own configuration interface, such as JSON or YAML, and a unique syntax, increasing maintenance complexity.

When starting a new project, you rarely start from scratch, but more often use a scaffolding tool (for instance, create-react-app) to generate a new project structure. A large amount of configuration is created on your behalf, and you take ownership of those files. Moreover, there are many project generation tools, with new ones created almost every day.

Projen is a project generator that helps developers to efficiently manage project configuration files and build high quality software. It allows you to define your project structure and configuration in code, making it easier to maintain and share across different environments and projects.

Out of the box, Projen supports multiple project types like AWS CDK construct libraries, React applications, Java projects, and Python projects. New project types can be added by contributors, and projects can be developed in multiple languages. Projen uses the jsii library, which allows us to write APIs once and generate libraries in several languages. Moreover, Projen provides a single interface, the projenrc file, to manage the configuration of your entire project!

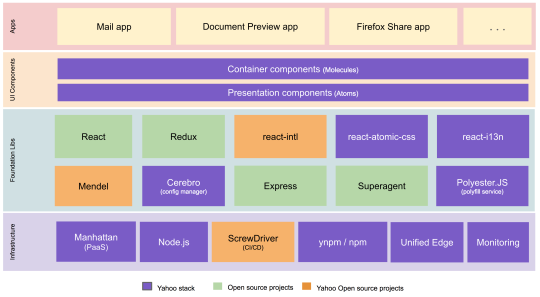

The diagram below provides an overview of the deployment process of AWS cloud resources using Projen:

- In this example, Projen can be used to generate a new project, for instance, a new CDK TypeScript application.

- Developers define their infrastructure and application code using AWS CDK resources. To modify the project configuration, developers use the projenrc file instead of directly editing files like package.json.

- The project is synthesized to produce an AWS CloudFormation template.

- The CloudFormation template is deployed in an AWS account, and provisions AWS cloud resources.

Diagram 1 – Projen packaged features: Projen helps get your project started and allows you to focus on coding instead of worrying about the other project variables. It comes out of the box with linting, unit tests and code coverage, and a number of GitHub Actions workflows for release, versioning, and dependency management.

Pros and Cons of using Projen

Pros

- Consistency: Projen ensures consistency across different projects by allowing you to define standard project templates. You don’t need to use different project generators, only Projen.

- Version Control: Since project configuration is defined in code, it can be version-controlled, making it easier to track changes and collaborate with others.

- Extensibility: Projen supports various plugins and extensions, allowing you to customize the project configuration to fit your specific needs.

- Integration with AWS CDK: Projen provides seamless integration with AWS CDK, simplifying the process of defining and deploying cloud resources.

- Polyglot CDK construct library: Build once, run in multiple runtimes. Projen can convert and publish a CDK construct developed in TypeScript to Java (Maven) and Python (PyPI) with jsii support.

- API documentation: Projen can generate API documentation from code comments if you are building a CDK construct.

Cons

- Microsoft Windows support. There are a number of open issues about Projen not completely working with the Windows environment (https://github.com/projen/projen/issues/2427 and https://github.com/projen/projen/issues/498).

- The framework, Projen, is very opinionated with a lot of assumptions on architecture, best practices and conventions.

- Projen is still not GA, with the version at the time of this writing at v0.77.5.

Walkthrough

Step 1: Set up prerequisites

- An AWS account

- Download and install Node

- Install yarn

- AWS CLI: configure your credentials

- A bootstrapped AWS environment: deploying stacks with the AWS CDK requires dedicated Amazon S3 buckets and other containers to be available to AWS CloudFormation during deployment. Step 4 covers bootstrapping.

Note: Projen doesn’t need to be installed globally. You will be using npx to run Projen, which takes care of all required setup steps. npx is a tool for running npm packages that:

- live inside of a local node_modules folder

- are not installed globally.

npx comes bundled with npm version 5.2+

Step 2: Create a New Projen Project

You can create a new Projen project using the following command:

mkdir test_project && cd test_project
npx projen new awscdk-app-ts

This command creates a new TypeScript project with AWS CDK support. The exhaustive list of supported project types is available through the official documentation: Projen.io, or by running the npx projen new command without a project type. It also supports npx projen new awscdk-construct to create a reusable construct which can then be published to other package managers.

The created project structure should be as follows:

test_project
| .github/
| .projen/
| src/
| test/
| .eslintrc
| .gitattributes
| .gitignore
| .mergify.yml
| .npmignore
| .projenrc.js
| cdk.json
| LICENSE
| package.json
| README.md
| tsconfig.dev.json
| yarn.lock

Projen generated a new project including:

- Initialization of an empty git repository, with the associated GitHub workflow files to build and upgrade the project. The release workflow can be customized with projen tasks.

- .projenrc.js, the Projen configuration file and the main configuration file for the project.

- tasks.json file for integration with Visual Studio Code.

- src folder containing an empty CDK stack.

- License and README files.

- package.json, which contains functional metadata about the project like name, versions and dependencies.

- .gitignore and .gitattributes files to manage your files with git.

- .eslintrc for identifying and reporting patterns in JavaScript.

- .npmignore to keep files out of the package manager.

- .mergify.yml for managing pull requests.

- tsconfig.json to configure the compiler options.

Most of the generated files include a disclaimer:

# ~~ Generated by projen. To modify, edit .projenrc.js and run "npx projen".

Projen’s power lies in its single configuration file, .projenrc.js. By editing this file, you can manage your project’s lint rules, dependencies, .gitignore, and more. Projen will propagate your changes across all generated files, simplifying and unifying dependency management across your projects.

Projen generated files are considered implementation details and are not meant to be edited manually. If you do make manual changes, they will be overwritten the next time you run npx projen.

To edit your project configuration, simply edit .projenrc.js and then run npx projen to synthesize again. For more information on the Projen API, please see the documentation: http://projen.io/api/API.html.

Projen uses the configuration in the .projenrc.js file to instantiate a new AwsCdkTypeScriptApp with some basic metadata: the project name, CDK version and the default release branch. Additional APIs are available for this project type to customize it (for instance, to add runtime dependencies).
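As a rough sketch, a configuration of this kind typically looks like the following. It is written in TypeScript syntax, as it would appear in a .projenrc.ts file; the .projenrc.js generated in this walkthrough uses require instead of import. The cdkVersion value and the uuid dependency are placeholders chosen for illustration, not values taken from this walkthrough.

```typescript
import { awscdk } from 'projen';

const project = new awscdk.AwsCdkTypeScriptApp({
  name: 'test_project',
  cdkVersion: '2.1.0',          // placeholder: use the CDK version you target
  defaultReleaseBranch: 'main',
  deps: ['uuid'],               // placeholder runtime dependency (assumption)
});

// Regenerates package.json, .gitignore, and the other managed files.
project.synth();
```

After editing the file, running npx projen regenerates the managed files from this definition.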

Let’s try to modify a property and see how Projen reacts. As an example, let’s update the project name in .projenrc.js:

name: 'test_project_2',

and then run the npx projen command:

npx projen

Once done, you can see that the project name was updated in the package.json file.

Step 3: Define AWS CDK Resources

Inside your Projen project, you can define AWS CDK resources using familiar programming languages like TypeScript. Here’s an example of defining an Amazon Simple Storage Service (Amazon S3) bucket:

1. Navigate to your main.ts file in the src/ directory

2. Modify the imports at the top of the file as follows:

import { App, CfnOutput, Stack, StackProps } from 'aws-cdk-lib';
import * as s3 from 'aws-cdk-lib/aws-s3';
import { Construct } from 'constructs';

3. Replace line 9 “// define resources here…” with the code below:

const bucket = new s3.Bucket(this, 'MyBucket', {
  versioned: true,
});

new CfnOutput(this, 'TestBucket', { value: bucket.bucketArn });

Step 4: Synthesize and Deploy

Next we will bootstrap our application. Run the following in a terminal:

$ npx cdk bootstrap

Once you’ve defined your resources, you can synthesize a cloud assembly, which includes a CloudFormation template (or many depending on the application) using:

$ npx projen build

npx projen build will perform several actions:

- Build the application

- Synthesize the CloudFormation template

- Run tests and linter

The synth() method of Projen performs the actual synthesizing (and updating) of all configuration files managed by Projen. This is achieved by deleting all Projen-managed files (if there are any), and then re-synthesizing them based on the latest configuration specified by the user.
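The delete-then-regenerate behavior described above can be illustrated with a toy, in-memory model. This is a sketch of the idea only, not Projen's implementation: the names FakeDisk, ToyConfig, renderManagedFiles, and toySynth are hypothetical, and a plain object stands in for the file system.

```typescript
// Toy, in-memory model of "delete managed files, then regenerate".
// NOT Projen's implementation: every name below is hypothetical.

const GENERATED_MARKER = '~~ Generated by projen';

// A fake disk: file path -> file content.
type FakeDisk = { [path: string]: string };

interface ToyConfig {
  projectName: string;
  ignored: string[];
}

// Render every managed file purely from the configuration.
function renderManagedFiles(config: ToyConfig): FakeDisk {
  return {
    'package.json': JSON.stringify({ '//': GENERATED_MARKER, 'name': config.projectName }),
    '.gitignore': ['# ' + GENERATED_MARKER].concat(config.ignored).join('\n'),
  };
}

// Drop every file that carries the marker, then write the fresh renders.
// Files without the marker (hand-written source code) are left alone.
function toySynth(disk: FakeDisk, config: ToyConfig): void {
  for (const path of Object.keys(disk)) {
    if (disk[path].indexOf(GENERATED_MARKER) !== -1) {
      delete disk[path];
    }
  }
  const rendered = renderManagedFiles(config);
  for (const path of Object.keys(rendered)) {
    disk[path] = rendered[path];
  }
}

// Usage: a manual edit to a managed file is lost on the next synthesis.
const fakeDisk: FakeDisk = { 'src/main.ts': '// hand-written stack code' };
toySynth(fakeDisk, { projectName: 'test_project', ignored: ['node_modules/'] });
fakeDisk['package.json'] = fakeDisk['package.json'].replace('test_project', 'edited_by_hand');
toySynth(fakeDisk, { projectName: 'test_project_2', ignored: ['node_modules/'] });
console.log(JSON.parse(fakeDisk['package.json']).name); // prints "test_project_2"
```

The manual edit to package.json disappears because the file is rebuilt from the configuration alone, while src/main.ts survives because it carries no generated marker.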

You can find an exhaustive list of the available npx projen commands in .projen/tasks.json. You can also use the Projen API project.addTask to add a new task that performs any custom action you need. Tasks are a project-level feature to define a project command system backed by shell scripts.
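A minimal sketch of a custom task follows, written in TypeScript syntax as it would appear in a .projenrc.ts file. The task name hello, its description, and its shell command are hypothetical, and the cdkVersion value is a placeholder; only the addTask call with its description and exec options reflects the Projen API.

```typescript
import { awscdk } from 'projen';

const project = new awscdk.AwsCdkTypeScriptApp({
  name: 'test_project',
  cdkVersion: '2.1.0',          // placeholder: use the CDK version you target
  defaultReleaseBranch: 'main',
});

// Hypothetical task: the name and the command are illustrative only.
project.addTask('hello', {
  description: 'Print a greeting',
  exec: 'echo "Hello from a Projen task"',
});

project.synth();
```

After running npx projen to synthesize, the task should be listed in .projen/tasks.json and runnable with npx projen hello.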

Deploy the CDK application:

$ npx projen deploy

Projen will use the cdk deploy command to deploy the CloudFormation stack in the configured AWS account by creating and executing a change set based on the template generated by CDK synthesis. The output of the step above should look as follows:

deploy | cdk deploy

✨ Synthesis time: 3.28s

toto-dev: start: Building 387a3a724050aec67aa083b74c69485b08a876f038078ec7ea1018c7131f4605:263905523351-us-east-1
toto-dev: success: Built 387a3a724050aec67aa083b74c69485b08a876f038078ec7ea1018c7131f4605:263905523351-us-east-1
toto-dev: start: Publishing 387a3a724050aec67aa083b74c69485b08a876f038078ec7ea1018c7131f4605:263905523351-us-east-1
toto-dev: success: Published 387a3a724050aec67aa083b74c69485b08a876f038078ec7ea1018c7131f4605:263905523351-us-east-1
toto-dev: deploying... [1/1]
toto-dev: creating CloudFormation changeset...

✅ testproject-dev

✨ Deployment time: 33.48s

Outputs:
testproject-dev.TestBucket = arn:aws:s3:::testproject-dev-mybucketf68f3ff0-1xy2f0vk0ve4r
Stack ARN:
arn:aws:cloudformation:us-east-1:263905523351:stack/testproject-dev/007e7b20-48df-11ee-b38d-0aa3a92c162d

✨ Total time: 36.76s

The application was successfully deployed in the configured AWS account! Also, the Amazon Resource Name (ARN) of the S3 bucket created is available through the CloudFormation stack Outputs tab, and displayed in your terminal under the ‘Outputs’ section.

Clean up

Delete CloudFormation Stack

To clean up the resources created in this walkthrough, navigate to the CloudFormation console and delete the stack. You can also perform the same task from the command line:

$ npx projen destroy

Which should produce the following output:

destroy | cdk destroy
Are you sure you want to delete: testproject-dev (y/n)? y
testproject-dev: destroying... [1/1]

testproject-dev: destroyed


Delete S3 Buckets

The S3 bucket will not be deleted, because a bucket defined with the AWS CDK uses the RETAIN removal policy by default and the example does not override it. Navigate to the S3 console and delete the created bucket. If you added files to that bucket, you will need to empty it before deletion. See the Deleting a bucket documentation for more information.

Conclusion

Projen and AWS CDK together provide a powerful combination for managing cloud resources and project configuration. By leveraging Projen, you can ensure consistency, version control, and extensibility across your projects. The integration with AWS CDK allows you to define and deploy cloud resources using familiar programming languages, making the entire process more developer-friendly.

Whether you’re a seasoned cloud developer or just getting started, Projen and AWS CDK offer a streamlined approach to cloud resource management. Give it a try and experience the benefits of Infrastructure as Code with the flexibility and power of modern development tools.
