Post Syndicated from Oglaf! -- Comics. Often dirty. original https://www.oglaf.com/everyday-cleanser/

Under the Stars

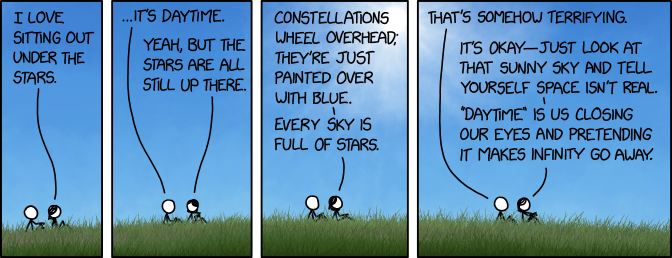

Post Syndicated from xkcd.com original https://xkcd.com/2849/

NVIDIA L40S is the NVIDIA H100 AI Alternative with a Big Benefit

Post Syndicated from Patrick Kennedy original https://www.servethehome.com/nvidia-l40s-is-the-nvidia-h100-ai-alternative-with-a-big-benefit-supermicro/

We delve into the NVIDIA L40S versus the NVIDIA H100 PCIe and discuss the differences and why the L40S should be the go-to GPU for many today.

The post NVIDIA L40S is the NVIDIA H100 AI Alternative with a Big Benefit appeared first on ServeTheHome.

Announcing Amazon EC2 Capacity Blocks for ML to reserve GPU capacity for your machine learning workloads

Post Syndicated from Channy Yun original https://aws.amazon.com/blogs/aws/announcing-amazon-ec2-capacity-blocks-for-ml-to-reserve-gpu-capacity-for-your-machine-learning-workloads/

Recent advancements in machine learning (ML) have unlocked opportunities for customers across organizations of all sizes and industries to invent new products and transform their businesses. However, the growth in demand for GPU capacity to train, fine-tune, experiment with, and run inference on these ML models has outpaced industry-wide supply, making GPUs a scarce resource. Access to GPU capacity is an obstacle for customers whose capacity needs fluctuate depending on the research and development phase they’re in.

Today, we are announcing Amazon Elastic Compute Cloud (Amazon EC2) Capacity Blocks for ML, a new Amazon EC2 usage model that further democratizes ML by making it easy to access GPU instances to train and deploy ML and generative AI models. With EC2 Capacity Blocks, you can reserve hundreds of GPUs colocated in EC2 UltraClusters designed for high-performance ML workloads, using Elastic Fabric Adapter (EFA) networking in a petabit-scale non-blocking network, to deliver the best network performance available in Amazon EC2.

This is an innovative new way to schedule GPU instances where you can reserve the number of instances you need for a future date for just the amount of time you require. EC2 Capacity Blocks are currently available for Amazon EC2 P5 instances powered by NVIDIA H100 Tensor Core GPUs in the AWS US East (Ohio) Region. With EC2 Capacity Blocks, you can reserve GPU instances in just a few clicks and plan your ML development with confidence. EC2 Capacity Blocks make it easy for anyone to predictably access EC2 P5 instances that offer the highest performance in EC2 for ML training.

EC2 Capacity Block reservations work similarly to hotel room reservations. With a hotel reservation, you specify the date and duration you want your room for and the size of beds you’d like (a queen bed or king bed, for example). Likewise, with EC2 Capacity Block reservations, you select the date and duration you require GPU instances and the size of the reservation (the number of instances). On your reservation start date, you’ll be able to access your reserved EC2 Capacity Block and launch your P5 instances. At the end of the EC2 Capacity Block duration, any instances still running will be terminated.

You can use EC2 Capacity Blocks when you need capacity assurance to train or fine-tune ML models, run experiments, or plan for future surges in demand for ML applications. Alternatively, you can continue using On-Demand Capacity Reservations for all other workload types that require compute capacity assurance, such as business-critical applications, regulatory requirements, or disaster recovery.

Getting started with Amazon EC2 Capacity Blocks for ML

To reserve your Capacity Blocks, choose Capacity Reservations on the Amazon EC2 console in the US East (Ohio) Region. You can see two capacity reservation options. Select Purchase Capacity Blocks for ML and then Get started to start looking for an EC2 Capacity Block.

Choose your total capacity and specify how long you need the EC2 Capacity Block. You can reserve an EC2 Capacity Block in the following sizes: 1, 2, 4, 8, 16, 32, or 64 p5.48xlarge instances. You can reserve an EC2 Capacity Block for 1–14 days in 1-day increments. EC2 Capacity Blocks can be purchased up to 8 weeks in advance.
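The limits above are easy to check before you search for an offering. The following is a minimal sketch in Python, not part of any AWS SDK: the function name `check_request` and its parameters are hypothetical, and the only rules it encodes are the ones stated in the preceding paragraph (allowed sizes, 1 to 14 days, at most 8 weeks ahead).

```python
from datetime import date, timedelta

# Limits taken from the text above; the names are illustrative only.
ALLOWED_INSTANCE_COUNTS = {1, 2, 4, 8, 16, 32, 64}
MIN_DAYS, MAX_DAYS = 1, 14
MAX_ADVANCE = timedelta(weeks=8)


def check_request(instance_count, duration_days, start, today):
    """Return a list of problems; an empty list means the request fits the limits."""
    problems = []
    if instance_count not in ALLOWED_INSTANCE_COUNTS:
        problems.append(f"instance count {instance_count} is not an allowed size")
    if not MIN_DAYS <= duration_days <= MAX_DAYS:
        problems.append(f"duration of {duration_days} days is outside 1-14 days")
    if start < today:
        problems.append("start date is in the past")
    elif start - today > MAX_ADVANCE:
        problems.append("start date is more than 8 weeks ahead")
    return problems


# Example: 4 instances for 2 days, starting about four weeks out.
print(check_request(4, 2, date(2023, 10, 30), date(2023, 10, 1)))
```

A check like this only mirrors the published limits; whether capacity is actually available for a given window is decided by the offering search itself.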

EC2 Capacity Block prices are dynamic and depend on total available supply and demand at the time you purchase the EC2 Capacity Block. You can adjust the size, duration, or date range in your specifications to search for other EC2 Capacity Block options. When you select Find Capacity Blocks, AWS returns the lowest-priced offering available that meets your specifications in the date range you have specified. At this point, you will be shown the price for the EC2 Capacity Block.

After reviewing EC2 Capacity Blocks details, tags, and total price information, choose Purchase. The total price of an EC2 Capacity Block is charged up front, and the price does not change after purchase. The payment will be billed to your account within 12 hours after you purchase the EC2 Capacity Blocks.

All EC2 Capacity Blocks reservations start at 11:30 AM Coordinated Universal Time (UTC). EC2 Capacity Blocks can’t be modified or canceled after purchase.

You can also use AWS Command Line Interface (AWS CLI) and AWS SDKs to purchase EC2 Capacity Blocks. Use the describe-capacity-block-offerings API to provide your cluster requirements and discover an available EC2 Capacity Block for purchase.

    $ aws ec2 describe-capacity-block-offerings \
          --instance-type p5.48xlarge \
          --instance-count 4 \
          --start-date-range 2023-10-30T00:00:00Z \
          --end-date-range 2023-11-01T00:00:00Z \
          --capacity-duration-hours 48

After you find an available EC2 Capacity Block with the CapacityBlockOfferingId and capacity information from the preceding command, you can use the purchase-capacity-block-reservation API to purchase it.

    $ aws ec2 purchase-capacity-block-reservation \
          --capacity-block-offering-id cbr-0123456789abcdefg \
          --instance-platform Linux/UNIX

For more information about new EC2 Capacity Blocks APIs, see the Amazon EC2 API documentation.

Your EC2 Capacity Block has now been scheduled successfully. On the scheduled start date, your EC2 Capacity Block will become active. To use an active EC2 Capacity Block on your starting date, choose the capacity reservation ID for your EC2 Capacity Block. You can see a breakdown of the reserved instance capacity, which shows how the capacity is currently being utilized in the Capacity details section.

To launch instances into your active EC2 Capacity Block, choose Launch instances and follow the normal process of launching EC2 instances and running your ML workloads.

In the Advanced details section, choose Capacity Blocks as the purchase option and select the capacity reservation ID of the EC2 Capacity Block you’re trying to target.

As your EC2 Capacity Block end time approaches, Amazon EC2 will emit an event through Amazon EventBridge, letting you know your reservation is ending soon so you can checkpoint your workload. Any instances running in the EC2 Capacity Block go into a shutting-down state 30 minutes before your reservation ends. The amount you were charged for your EC2 Capacity Block does not include this time period. When your EC2 Capacity Block expires, any instances still running will be terminated.
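Because instances begin shutting down 30 minutes before the reservation ends, it helps to work backward from the end time when planning checkpoints. The sketch below is plain date arithmetic in Python; the end time and the 15-minute safety margin are assumed values for illustration, and the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# From the text above: instances enter shutting-down 30 minutes before the end.
SHUTDOWN_LEAD = timedelta(minutes=30)


def shutdown_starts_at(reservation_end):
    """Time at which running instances move to the shutting-down state."""
    return reservation_end - SHUTDOWN_LEAD


def checkpoint_deadline(reservation_end, safety_margin):
    """Latest time to finish a checkpoint, leaving a margin before shutdown."""
    return shutdown_starts_at(reservation_end) - safety_margin


# Hypothetical reservation end time, used only for illustration.
end = datetime(2023, 11, 1, 11, 30, tzinfo=timezone.utc)
print(shutdown_starts_at(end).isoformat())
print(checkpoint_deadline(end, timedelta(minutes=15)).isoformat())
```

In practice you would take the end time from your reservation details and size the margin to how long your workload needs to write a checkpoint.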

Now available

Amazon EC2 Capacity Blocks are now available for p5.48xlarge instances in the AWS US East (Ohio) Region. You can view the price of an EC2 Capacity Block before you reserve it, and the total price of an EC2 Capacity Block is charged up-front at the time of purchase. For more information, see the EC2 Capacity Blocks pricing page.

To learn more, see the EC2 Capacity Blocks documentation and send feedback to AWS re:Post for EC2 or through your usual AWS Support contacts.

— Channy

How Sonar is revealing the secrets of the sea, lakes and rivers.

Post Syndicated from Curious Droid original https://www.youtube.com/watch?v=yXm4P9GvUA0

Rebuttal of claims that I exerted pressure

Post Syndicated from Bozho original https://blog.bozho.net/blog/4162

Regarding the claims that, together with Kiril Petkov, I pressured or threatened the Minister of e-Government over procurement contracts, I want to say several things:

1. These are absolute lies. No such conversation ever took place: we did not discuss procurement contracts, and there were no threats of any kind.

2. I will cooperate fully with the competent authorities to establish the truth.

3. I have no idea what the reason for such claims is. I hope all of this is an absurd misunderstanding and not part of a political scenario.

4. 640 million is a lot of money, which cannot possibly be spent within the government’s short horizon. The ministry, and before it the state agency, have spent a total of about 135 million over their entire existence since 2016 (according to SEBRA data).

5. The ministry cannot avoid applying the Public Procurement Act, except in limited cases in which it awards work to Information Services (Информационно обслужване), which is state-owned. (The retelling of the conversation includes an accusation that the pressure was for the money to be spent without public procurement.)

I have accepted that I will be the target of absurd political attacks. They are to be expected in a pre-election setting and are part of the muddy terrain we find ourselves on while we try to change Bulgaria.

The post Rebuttal of claims that I exerted pressure was first published on БЛОГодаря.

Home Assistant 2023.11 Release Party

Post Syndicated from Home Assistant original https://www.youtube.com/watch?v=QlpC0LFM8l8

Transforming transactions: Streamlining PCI compliance using AWS serverless architecture

Post Syndicated from Abdul Javid original https://aws.amazon.com/blogs/security/transforming-transactions-streamlining-pci-compliance-using-aws-serverless-architecture/

Compliance with the Payment Card Industry Data Security Standard (PCI DSS) is critical for organizations that handle cardholder data. Achieving and maintaining PCI DSS compliance can be a complex and challenging endeavor. Serverless technology has transformed application development, offering agility, performance, cost, and security.

In this blog post, we examine the benefits of using AWS serverless services and highlight how you can use them to help align with your PCI DSS compliance responsibilities. You can remove additional undifferentiated compliance heavy lifting by building modern applications with abstracted AWS services. We review an example payment application and workflow that uses AWS serverless services and showcases the potential reduction in effort and responsibility that a serverless architecture could provide to help align with your compliance requirements. We present the review through the lens of a merchant that has an ecommerce website and include key topics such as access control, data encryption, monitoring, and auditing—all within the context of the example payment application. We don’t discuss additional service provider requirements from the PCI DSS in this post.

This example will help you navigate the intricate landscape of PCI DSS compliance. This can help you focus on building robust and secure payment solutions without getting lost in the complexities of compliance. This can also help reduce your compliance burden and empower you to develop your own secure, scalable applications. Join us in this journey as we explore how AWS serverless services can help you meet your PCI DSS compliance objectives.

Disclaimer: This document is provided for the purposes of information only; it is not legal advice, and should not be relied on as legal advice. Customers are responsible for making their own independent assessment of the information in this document. This document: (a) is for informational purposes only, (b) represents current AWS product offerings and practices, which are subject to change without notice, and (c) does not create any commitments or assurances from AWS and its affiliates, suppliers or licensors. AWS products or services are provided “as is” without warranties, representations, or conditions of any kind, whether express or implied. The responsibilities and liabilities of AWS to its customers are controlled by AWS agreements, and this document is not part of, nor does it modify, any agreement between AWS and its customers. AWS encourages its customers to obtain appropriate advice on their implementation of privacy and data protection environments, and more generally, applicable laws and other obligations relevant to their business.

PCI DSS v4.0 and serverless

In April 2022, the Payment Card Industry Security Standards Council (PCI SSC) updated the security payment standard to “address emerging threats and technologies and enable innovative methods to combat new threats.” Two of the high-level goals of these updates are enhancing validation methods and procedures and promoting security as a continuous process. Adopting serverless architectures can help meet some of the new and updated requirements in version 4.0, such as enhanced software and encryption inventories. If a customer has access to change a configuration, it’s the customer’s responsibility to verify that the configuration meets PCI DSS requirements. There are more than 20 PCI DSS requirements applicable to Amazon Elastic Compute Cloud (Amazon EC2). To fulfill these requirements, customer organizations must implement controls such as file integrity monitoring, operating system level access management, system logging, and asset inventories. Using AWS abstracted services in this scenario can remove undifferentiated heavy lifting from your environment. With abstracted AWS services, because there is no operating system to manage, AWS becomes responsible for maintaining consistent time settings for an abstracted service to meet Requirement 10.6. This will also shift your compliance focus more towards your application code and data.

This makes more of your PCI DSS responsibility addressable through the AWS PCI DSS Attestation of Compliance (AOC) and Responsibility Summary. This attestation package is available to AWS customers through AWS Artifact.

Reduction in compliance burden

You can use three common architectural patterns within AWS to design payment applications and meet PCI DSS requirements: infrastructure, containerized, and abstracted. We look into EC2 instance-based architecture (infrastructure or containerized patterns) and modernized architectures using serverless services (abstracted patterns). While both approaches can help align with PCI DSS requirements, there are notable differences in how they handle certain elements. EC2 instances provide more control and flexibility over the underlying infrastructure and operating system, assisting you in customizing security measures based on your organization’s operational and security requirements. However, this also means that you bear more responsibility for configuring and maintaining security controls applicable to the operating systems, such as network security controls, patching, file integrity monitoring, and vulnerability scanning.

On the other hand, serverless architectures similar to the example that follows can remove much of the infrastructure management burden. This can relieve you, the application owner or cloud service consumer, of the burden of configuring and securing those underlying virtual servers. This can streamline meeting certain PCI requirements, such as file integrity monitoring, patch management, and vulnerability management, because AWS handles these responsibilities.

Using serverless architecture on AWS can significantly reduce the PCI compliance burden. Approximately 43 percent of the overall PCI compliance requirements, encompassing both technical and non-technical tests, are addressed by the AWS PCI DSS Attestation of Compliance.

| Customer responsible | AWS responsible | N/A |
| --- | --- | --- |
| 52% | 43% | 5% |

The following table provides an analysis of each PCI DSS requirement against the serverless architecture in Figure 1, which shows a sample payment application workflow. You must evaluate your own use and secure configuration of AWS workload and architectures for a successful audit.

| PCI DSS 4.0 requirements | Test cases | Customer responsible | AWS responsible | N/A |
| --- | --- | --- | --- | --- |
| Requirement 1: Install and maintain network security controls | 35 | 13 | 22 | 0 |
| Requirement 2: Apply secure configurations to all system components | 27 | 16 | 11 | 0 |
| Requirement 3: Protect stored account data | 55 | 24 | 29 | 2 |
| Requirement 4: Protect cardholder data with strong cryptography during transmission over open, public networks | 12 | 7 | 5 | 0 |
| Requirement 5: Protect all systems and networks from malicious software | 25 | 4 | 21 | 0 |
| Requirement 6: Develop and maintain secure systems and software | 35 | 31 | 4 | 0 |
| Requirement 7: Restrict access to system components and cardholder data by business need-to-know | 22 | 19 | 3 | 0 |
| Requirement 8: Identify users and authenticate access to system components | 52 | 43 | 6 | 3 |
| Requirement 9: Restrict physical access to cardholder data | 56 | 3 | 53 | 0 |
| Requirement 10: Log and monitor all access to system components and cardholder data | 38 | 17 | 19 | 2 |
| Requirement 11: Test security of systems and networks regularly | 51 | 22 | 23 | 6 |
| Requirement 12: Support information security with organizational policies | 56 | 44 | 2 | 10 |
| Total | 464 | 243 | 198 | 23 |
| Percentage | | 52% | 43% | 5% |

Note: The preceding table is based on the example reference architecture that follows. The actual extent of PCI DSS requirements reduction can vary significantly depending on your cardholder data environment (CDE) scope, implementation, and configurations.
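The percentage row follows directly from the column totals. As a quick check, the short Python snippet below recomputes it using only the per-requirement counts from the table; the variable names are illustrative.

```python
# (customer responsible, AWS responsible, N/A) test cases per requirement,
# copied from the table above.
rows = {
    1: (13, 22, 0), 2: (16, 11, 0), 3: (24, 29, 2), 4: (7, 5, 0),
    5: (4, 21, 0), 6: (31, 4, 0), 7: (19, 3, 0), 8: (43, 6, 3),
    9: (3, 53, 0), 10: (17, 19, 2), 11: (22, 23, 6), 12: (44, 2, 10),
}

# Sum each column across the twelve requirements.
totals = [sum(column) for column in zip(*rows.values())]
grand_total = sum(totals)

# Share of all test cases in each column, rounded to whole percent.
shares = [round(100 * total / grand_total) for total in totals]

print(totals, grand_total)  # [243, 198, 23] 464
print(shares)               # [52, 43, 5]
```

The same calculation can be rerun against your own scoping results, since the split depends on your architecture and CDE scope.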

Sample payment application and workflow

This example serverless payment application and workflow in Figure 1 consists of several interconnected steps, each using different AWS services. The steps are listed in the following text and include brief descriptions. They cover two use cases within this example application — consumers making a payment and a business analyst generating a report.

The example outlines a basic serverless payment application workflow using AWS serverless services. However, it’s important to note that the actual implementation and behavior of the workflow may vary based on specific configurations, dependencies, and external factors. The example serves as a general guide and may require adjustments to suit the unique requirements of your application or infrastructure.

Several factors, including but not limited to, AWS service configurations, network settings, security policies, and third-party integrations, can influence the behavior of the system. Before deploying a similar solution in a production environment, we recommend thoroughly reviewing and adapting the example to align with your specific use case and requirements.

Keep in mind that AWS services and features may evolve over time, and new updates or changes may impact the behavior of the components described in this example. Regularly consult the AWS documentation and ensure that your configurations adhere to best practices and compliance standards.

This example is intended to provide a starting point and should be considered as a reference rather than an exhaustive solution. Always conduct thorough testing and validation in your specific environment to ensure the desired functionality and security.

Figure 1: Serverless payment architecture and workflow

- Use case 1: Consumers make a payment
  1. Consumers visit the e-commerce payment page to make a payment.
  2. The request is routed to the payment application’s domain using Amazon Route 53, which acts as a DNS service.
  3. The payment page is protected by AWS WAF to inspect the initial incoming request for any malicious patterns, web-based attacks (such as cross-site scripting (XSS) attacks), and unwanted bots.
  4. An HTTPS GET request (over TLS) is sent to the public target IP. Amazon CloudFront, a content delivery network (CDN), acts as a front-end proxy and caches and fetches static content from an Amazon Simple Storage Service (Amazon S3) bucket.
  5. AWS WAF inspects the incoming request for any malicious patterns. If the request is blocked, it doesn’t return static content from the S3 bucket.
  6. User authentication and authorization are handled by Amazon Cognito, which provides a secure login and a scalable customer identity and access management (CIAM) system.
  7. AWS WAF processes the request to protect against web exploits, then Amazon API Gateway forwards it to the payment application API endpoint.
  8. API Gateway launches AWS Lambda functions to handle payment requests. An AWS Step Functions state machine oversees the entire process, directing the running of multiple Lambda functions to communicate with the payment processor, initiate the payment transaction, and process the response.
  9. The cardholder data (CHD) is temporarily cached in Amazon DynamoDB for troubleshooting and retry attempts in the event of transaction failures.
  10. A Lambda function validates the transaction details and performs necessary checks against the data stored in DynamoDB. A web notification is sent to the consumer for any invalid data.
  11. A Lambda function calculates the transaction fees.
  12. A Lambda function authenticates the transaction and initiates the payment transaction with the third-party payment provider.
  13. A Lambda function is initiated when a payment transaction with the third-party payment provider is completed. It receives the transaction status from the provider and performs multiple actions.
  14. Consumers receive real-time notifications, such as order confirmations or payment receipts, through a web browser and email. The notifications are initiated by a step function and can be integrated with external payment processors through an Amazon Simple Notification Service (Amazon SNS) or Amazon Simple Email Service (Amazon SES) webhook.
  15. A separate Lambda function clears the DynamoDB cache.
  16. The Lambda function makes entries into the Amazon Simple Queue Service (Amazon SQS) dead-letter queue for failed transactions to retry at a later time.
- Use case 2: An admin or analyst generates the report for non-PCI data
  17. An admin accesses the web-based reporting dashboard using their browser to generate a report.
  18. The request is routed to AWS WAF to verify the source that initiated the request.
  19. An HTTPS GET request (over TLS) is sent to the public target IP. CloudFront fetches static content from an S3 bucket.
  20. AWS WAF inspects incoming requests for any malicious patterns. If the request is blocked, it doesn’t return static content from the S3 bucket. The validated traffic is sent to Amazon S3 to retrieve the reporting page.
  21. The backend requests of the reporting page pass through AWS WAF again to provide protection against common web exploits before being forwarded to the reporting API endpoint through API Gateway.
  22. API Gateway launches a Lambda function for report generation. The Lambda function retrieves data from DynamoDB storage for the reporting mechanism.
  23. The AWS Security Token Service (AWS STS) issues temporary credentials to the Lambda service in the non-PCI serverless account, allowing it to launch the Lambda function in the PCI serverless account. The Lambda function retrieves non-PCI data and writes it into DynamoDB.
  24. The Lambda function fetches the non-PCI data based on the report criteria from the DynamoDB table from the same account.

Additional AWS security and governance services that would be implemented throughout the architecture are shown in Figure 1, Label-25. For example, Amazon CloudWatch monitors and alerts on all the Lambda functions within the environment.

Label-26 demonstrates frameworks that can be used to build the serverless applications.
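The control flow of the payment use case (cache, validate, charge, then either clear the cache or queue a retry) can be sketched without any AWS dependencies. The Python below is a simplified illustration under stated assumptions, not the architecture’s actual code: the in-memory `PaymentStores` stands in for the DynamoDB cache and the SQS dead-letter queue, `charge` stands in for the third-party provider call, all names and status strings are hypothetical, and fee calculation and notifications are omitted. It also assumes that the cached entry is kept on a failed charge so that a retry can use it, which the workflow description does not spell out.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PaymentStores:
    """In-memory stand-ins for the DynamoDB cache and the SQS dead-letter queue."""
    cache: dict = field(default_factory=dict)
    dead_letters: list = field(default_factory=list)


def validate(amount_cents: int) -> None:
    # Stand-in for the validation Lambda function.
    if amount_cents <= 0:
        raise ValueError("amount must be positive")


def run_payment(stores: PaymentStores, transaction_id: str, amount_cents: int,
                charge: Callable[[str, int], bool]) -> str:
    # Cache the request so a failed attempt can be inspected and retried.
    stores.cache[transaction_id] = amount_cents
    try:
        validate(amount_cents)
    except ValueError:
        # Invalid data: nothing to retry, so drop the cached entry.
        stores.cache.pop(transaction_id, None)
        return "REJECTED"
    if charge(transaction_id, amount_cents):
        # Success path: clear the cached entry.
        stores.cache.pop(transaction_id, None)
        return "COMPLETED"
    # Failure path: queue the transaction for a later retry (assumed behavior).
    stores.dead_letters.append(transaction_id)
    return "QUEUED_FOR_RETRY"


stores = PaymentStores()
print(run_payment(stores, "t1", 500, lambda tx, amount: True))
print(run_payment(stores, "t2", 500, lambda tx, amount: False))
```

In the architecture described above, each branch would be a state in the Step Functions state machine, and each helper would be a separate Lambda function.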

Scoping and requirements

Now that we’ve established the reference architecture and workflow, let’s delve into how it aligns with PCI DSS scope and requirements.

PCI scoping

Serverless services are inherently segmented by AWS, but they can be used within the context of an AWS account hierarchy to provide various levels of isolation as described in the reference architecture example.

Segregating PCI data and non-PCI data into separate AWS accounts can help in de-scoping non-PCI environments and reducing the complexity and audit requirements for components that don’t handle cardholder data.

PCI serverless production account

- This AWS account is dedicated to handling PCI data and applications that directly process, transmit, or store cardholder data.

- Services such as Amazon Cognito, DynamoDB, API Gateway, CloudFront, Amazon SNS, Amazon SES, Amazon SQS, and Step Functions are provisioned in this account to support the PCI data workflow.

- Security controls, logging, monitoring, and access controls in this account are specifically designed to meet PCI DSS requirements.

Non-PCI serverless production account

- This separate AWS account is used to host applications that don’t handle PCI data.

- Since this account doesn’t handle cardholder data, the scope of PCI DSS compliance is reduced, simplifying the compliance process.

Note: You can use AWS Organizations to centrally manage multiple AWS accounts.

AWS IAM Identity Center (successor to AWS Single Sign-On) is used to manage user access to each account and is integrated with your existing identity provider. This helps to ensure you’re meeting PCI requirements on identity, access control of cardholder data, and environment.

Now, let’s look at the PCI DSS requirements that this architectural pattern can help address.

Requirement 1: Install and maintain network security controls

- Network security controls are limited to AWS Identity and Access Management (IAM) and application permissions because there is no customer-controlled or customer-defined network. VPC-centric requirements aren’t applicable because there is no VPC. The configuration settings for serverless services can be covered under Requirement 6 for secure configuration standards. This supports compliance with Requirements 1.2 and 1.3.

Requirement 2: Apply secure configurations to all system components

- AWS services are single function by default and exist with only the necessary functionality enabled for the functioning of that service. This supports compliance with much of Requirement 2.2.

- Access to AWS services is considered non-console and only accessible through HTTPS through the service API. This supports compliance with Requirement 2.2.7.

- The wireless requirements under Requirement 2.3 are not applicable, because wireless environments don’t exist in AWS environments.

Requirement 3: Protect stored account data

- AWS is responsible for destruction of account data configured for deletion based on DynamoDB Time to Live (TTL) values. This supports compliance with Requirement 3.2.

- DynamoDB and Amazon S3 offer secure storage of account data, encryption by default in transit and at rest, and integration with AWS Key Management Service (AWS KMS). This supports compliance with Requirements 3.5 and 4.2.

- AWS is responsible for the generation, distribution, storage, rotation, destruction, and overall protection of encryption keys within AWS KMS. This supports compliance with Requirements 3.6 and 3.7.

- Because manual cleartext cryptographic keys aren’t available in this solution, Requirement 3.7.6 is not applicable.

Requirement 4: Protect cardholder data with strong cryptography during transmission over open, public networks

- AWS Certificate Manager (ACM) integrates with API Gateway and enables the use of trusted certificates and HTTPS (TLS) for secure communication between clients and the API. This supports compliance with Requirement 4.2.

- Requirement 4.2.1.2 is not applicable because there are no wireless technologies in use in this solution. Customers are responsible for ensuring strong cryptography exists for authentication and transmission over other wireless networks they manage outside of AWS.

- Requirement 4.2.2 is not applicable because no end-user technologies exist in this solution. Customers are responsible for ensuring the use of strong cryptography if primary account numbers (PAN) are sent through end-user messaging technologies in other environments.

Requirement 5: Protect all systems and networks from malicious software

- Because there are no customer-managed compute resources in this example payment environment, Requirements 5.2 and 5.3 are the responsibility of AWS.

Requirement 6: Develop and maintain secure systems and software

- Amazon Inspector now supports Lambda functions, adding continual, automated vulnerability assessments for serverless compute. This supports compliance with Requirement 6.2.

- Amazon Inspector helps identify vulnerabilities and security weaknesses in the payment application’s code, dependencies, and configuration. This supports compliance with Requirement 6.3.

- AWS WAF is designed to protect applications from common attacks, such as SQL injections, cross-site scripting, and other web exploits. AWS WAF can filter and block malicious traffic before it reaches the application. This supports compliance with Requirement 6.4.2.

Requirement 7: Restrict access to system components and cardholder data by business need to know

- IAM and Amazon Cognito allow for fine-grained role- and job-based permissions and access control. Customers can use these capabilities to configure access following the principles of least privilege and need-to-know. IAM and Cognito support the use of strong identification, authentication, authorization, and multi-factor authentication (MFA). This supports compliance with much of Requirement 7.
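As an illustration of least privilege, the Python snippet below builds an IAM identity policy that allows a single function to read, write, and delete items in one DynamoDB table and nothing else. It is a sketch under assumptions: the account ID, Region, and table name in the ARN are placeholders, and the set of actions is an example rather than a recommendation for your workload.

```python
import json

# Placeholder ARN; substitute your own account, Region, and table name.
TABLE_ARN = "arn:aws:dynamodb:us-east-2:111122223333:table/payment-cache"

# Identity policy granting only the item-level actions the function needs.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "PaymentCacheItemAccess",
            "Effect": "Allow",
            "Action": [
                "dynamodb:GetItem",
                "dynamodb:PutItem",
                "dynamodb:DeleteItem",
            ],
            "Resource": TABLE_ARN,
        }
    ],
}

print(json.dumps(policy, indent=2))
```

Scoping the resource to one table and omitting broad actions such as scans keeps the function’s access aligned with its business need to know.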

Requirement 8: Identify users and authenticate access to system components

- IAM and Amazon Cognito also support compliance with much of Requirement 8.

- Some of the controls in this requirement are usually met by the identity provider for internal access to the cardholder data environment (CDE).

Requirement 9: Restrict physical access to cardholder data

- AWS is responsible for the destruction of data in DynamoDB based on the customer configuration of content TTL values for Requirement 9.4.7. Customers are responsible for ensuring their database is configured for appropriate removal of data by enabling TTL on DynamoDB attributes.

- Requirement 9 is otherwise not applicable for this serverless example environment because there are no physical media, electronic media not already addressed under Requirement 3.2, or hard-copy materials with cardholder data. AWS is responsible for the physical infrastructure under the Shared Responsibility Model.
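DynamoDB TTL works from a Number attribute that holds an expiry time in Unix epoch seconds. The Python sketch below shows how an item might carry such an attribute; the attribute name `expires_at`, the helper name, and the 15-minute retention window are assumptions for illustration, and the payload is a placeholder token rather than real cardholder data.

```python
import time


def cache_item(transaction_id, payload, ttl_seconds, now=None):
    """Build an item whose expires_at attribute holds a Unix epoch expiry time."""
    now = int(time.time()) if now is None else int(now)
    return {
        "transaction_id": transaction_id,
        "payload": payload,
        # TTL must be enabled on this attribute in the table settings.
        "expires_at": now + ttl_seconds,
    }


# Keep the entry for 15 minutes (assumed retention window).
item = cache_item("txn-0001", "placeholder-token", ttl_seconds=15 * 60, now=1_700_000_000)
print(item["expires_at"])  # 1700000900
```

TTL deletion happens in the background some time after the expiry time rather than at that exact second, so code that reads the table should still ignore items whose expiry has passed.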

Requirement 10: Log and monitor all access to system components and cardholder data

- AWS CloudTrail provides detailed logs of API activity for auditing and monitoring purposes. This supports compliance with Requirement 10.2 and contains all of the events and data elements listed.

- CloudWatch can be used for monitoring and alerting on system events and performance metrics. This supports compliance with Requirement 10.4.

- AWS Security Hub provides a comprehensive view of security alerts and compliance status, consolidating findings from various security services, which helps in ongoing security monitoring and testing. Customers must enable the PCI DSS security standard in Security Hub, which supports compliance with Requirement 10.4.2.

- AWS is responsible for maintaining accurate system time for AWS services. In this example, there are no compute resources for which customers can configure time. Requirement 10.6 is addressable through the AWS Attestation of Compliance and Responsibility Summary available in AWS Artifact.

Requirement 11: Test security of systems and networks regularly

- Testing for rogue wireless activity within the AWS-based CDE is the responsibility of AWS. AWS is responsible for the management of the physical infrastructure under Requirement 11.2. Customers are still responsible for wireless testing for their environments outside of AWS, such as where administrative workstations exist.

- AWS is responsible for internal vulnerability testing of AWS services, and supports compliance with Requirement 11.3.1.

- Amazon GuardDuty is a threat detection service that continuously monitors for malicious activity and unauthorized access. This supports the IDS requirements under Requirement 11.5.1, and covers the entire AWS-based CDE.

- AWS Config allows customers to catalog, monitor and manage configuration changes for their AWS resources. This supports compliance with Requirement 11.5.2.

- Customers can use AWS Config to monitor the configuration of the S3 bucket hosting the static website. This supports compliance with Requirement 11.6.1.

Requirement 12: Support information security with organizational policies and programs

- Customers can download the AWS AOC and Responsibility Summary package from Artifact to support Requirement 12.8.5 and the identification of which PCI DSS requirements are managed by the third-party service provider (TPSP) and which by the customer.

Conclusion

Using AWS serverless services when developing your payment application can significantly help reduce the number of PCI DSS requirements you need to meet by yourself. By offloading infrastructure management to AWS and using serverless services such as Lambda, API Gateway, DynamoDB, Amazon S3, and others, you can benefit from built-in security features and help align with your PCI DSS compliance requirements.

Contact us to help design an architecture that works for your organization. AWS Security Assurance Services is a Payment Card Industry-Qualified Security Assessor company (PCI-QSAC) and HITRUST External Assessor firm. We are a team of industry-certified assessors who help you to achieve, maintain, and automate compliance in the cloud by tying together applicable audit standards to AWS service-specific features and functionality. We help you build on frameworks such as PCI DSS, HITRUST CSF, NIST, SOC 2, HIPAA, ISO 27001, GDPR, and CCPA.

More information on how to build applications using AWS serverless technologies can be found at Serverless on AWS.

Want more AWS Security news? Follow us on Twitter.

If you have feedback about this post, submit comments in the Comments section below. If you have questions about this post, start a new thread on the Serverless re:Post, Security, Identity, & Compliance re:Post or contact AWS Support.

[$] Rust code review and netdev

Post Syndicated from jake original https://lwn.net/Articles/949270/

A fast-moving patch set, seemingly the norm for Linux networking development, seeks to add some Rust abstractions for physical layer (PHY) drivers. Lots of review has been done, and the patch set has been reworked frequently in response to those comments. Unfortunately, the Rust-for-Linux developers are having trouble keeping up with that pace. There is, it would appear, something of a disconnect between the two communities’ development practices.

Jabil Buys the Intel Pluggable Silicon Photonics Business

Post Syndicated from Rohit Kumar original https://www.servethehome.com/jabil-buys-the-intel-pluggable-silicon-photonics-business/

As a follow-up to our story last week, Jabil says that it has purchased Intel’s pluggable silicon photonics module business.

The post Jabil Buys the Intel Pluggable Silicon Photonics Business appeared first on ServeTheHome.

Feature packed Novembers release of Home Assistant

Post Syndicated from BeardedTinker original https://www.youtube.com/watch?v=z97Ly_I3MW0

Happy Halloween!

Post Syndicated from Home Assistant original https://www.youtube.com/watch?v=Eyoqvw8qLLc

Things That Used to Be Science Fiction (and Aren’t Anymore)

Post Syndicated from Yev original https://www.backblaze.com/blog/things-that-used-to-be-science-fiction-and-arent-anymore/

The year is 2023, and the human race has spread across the globe. Nuclear powered flying cars are everywhere, and the first colonies have landed on Mars! [Radio crackles.]

Okay, so that isn’t exactly how it’s gone down, but in honor of Halloween, the day that celebrates the whimsy of all things being possible, let’s talk about things that used to be science fiction and aren’t anymore.

Artificial Intelligence (AI)

Have we gotten reader fatigue from this topic yet? (As technology people by nature, we’re deep in it.) The rise of generative AI over the past year or so has certainly brought this subject into the spotlight, so in some ways it seems “early” to judge all the ways that AI will change things. On the other hand, there are lots of tools and functions we’ve been using for a while that have been powered by AI algorithms, including AI assistants.

At the risk of not doing this topic justice in this list, I’ll say that there’s plenty of reporting on—and plenty of potential for—AI now and in the future.

Aliens

This year, the U.S. House Oversight Committee was conducting an investigation on unidentified flying objects (UFOs). While many UFOs turn out to be things like weather balloons and drones designed for home use, well, some apparently aren’t. Three military veterans, including a former intelligence officer, went on record saying that the government has a secret facility where it’s been reverse engineering highly advanced vehicles, and that the U.S. has recovered “non-human biologics” from these crash sites. (Whatever that means—but we all know what that means, right…)

Here’s the video, if you want to see for yourself.

Weirdly, the public response was… not much of one. (The last couple of years have been “a year”.) But, chalk this one up as confirmed.

Space Stations

The list of sci-fi shows and books set on space stations is definitely too long to list item by item. Depending on your age (and we won’t ask you to tell us), you may think of Isaac Asimov’s Foundation series (the books), Star Wars, Zenon: Girl of the 21st Century (or maybe the Zequel?), Babylon 5, or The Expanse.

Back in the real world, the International Space Station (ISS) has been in orbit since 1998 and runs all manner of scientific experiments. Notably, these experiments include the Alpha Magnetic Spectrometer (AMS-02), which is designed to detect things like dark matter and antimatter and solve the fundamental mysteries of the universe. (No big deal.)

For those of us stuck on Earth (for now), you can keep up with the ISS in lots of ways. Check out this map that lets you track whether you can see it from your current location. (Wave the next time it floats over!) And, of course, there are some fun YouTube channels streaming the ISS. Here’s just one:

Universal Translators

Okay, universal translators is the cool sci-fi name, but if you want the actual, machine learning (ML) name, folks call that interlingual machine translation. Translation may seem straightforward at first glance, but, as this legendary Star Trek episode demonstrates, things are not always so simple.

And sure, it’s easy to say that this is an unreasonable standard given that most human languages are known—but are they? Native language reclamation projects like those from the Cherokee and Oneida tribes demonstrate how easy it is to lose the nuance of a language without those who natively speak it. Advanced degrees in poetry translation, like this Masters of Fine Arts from the University of Iowa (go Hawks!), help specialists grapple with and capture the nuance between smell, scent, odor, and stench across different languages. And, add to those challenges that translators also have to contend with the wide array of accents in each language.

With that in mind, it’s pretty amazing that we now have translation devices that can be as small as earbuds. Most still require an internet connection, and some are more effective than others, but it’s safe to say we live in the future, folks. Case in point: I had a wine tasting in Tuscany a few months ago where we used Google Translate exclusively to speak with the winemaker and proprietor.

iPads

“What?” you say. “iPads are so normal!” Sure, now you’re used to touch screens. But, let me present you with this image from a show that is definitely considered science fiction:

Yes, folks, that’s Captain Jean-Luc Picard from Star Trek: The Next Generation. And here’s a later one, from Star Trek: Deep Space Nine.

Star Trek wikis describe the details of a Personal Access Display Device, or PADD, including a breakdown of how they changed over time in the series. Uhura even had a “digital clipboard” in the original Star Trek series:

And, just for the record, we’ll always have a soft spot in our heart for Chief O’Brien’s love of backups.

Robot Domestic Laborer

If you were ever a fan of this lovely lady—

—then you’ll be happy to know that your robot caretaker(s) have arrived. Just as Rosie was often seen using a separate vacuum cleaner, they’re not all integrated into one charming package—yet. If you’re looking for the full suite of domestic help, you’ll have to get a few different products.

First up, the increasingly popular (and, as time goes on, increasingly affordable) robot vacuum. There are tons of models, from the simple vacuum to the vacuum/mop. While they’re reportedly prone to some pretty hilarious (horrific?) accidents, having one or several of these disk-shaped appliances saves lots of folks lots of time. Bonus: just add cat, and you have adorable internet content in the comfort of your own home.

Next up, the Snoo, marketed as a smart bassinet, will track everything baby, then use that data to help said baby sleep. Parents who can afford to buy or rent this item sing its praises, noting that you can swaddle the baby for safety and review the data collected to better care for your child.

And, don’t forget to round out your household with this charming toilet cleaning robot.

Robot Bartenders

In this iconic scene from The Fifth Element, Luc Besson’s 1997 masterpiece, a drunken priest waxes poetic about a perfect being (spoiler: she’s a woman) to a robot bartender. “Do you know what I mean?” the priest asks. The robot shakes its head. “Do you want some more?”

These days, you can actually visit robot bartenders in Las Vegas or on Royal Caribbean cruise ships. Or, if you’re looking for a robot bartender that does more than serve up a great Sazerac, you can turn to Brillo, a robot bartender powered by AI who can also engage in complex dialogue.

And, if leaving your house sounds terrible, don’t worry: you can also get a specialized appliance for your home.

It’s a Good Time to Be Cloud Storage

One thing that all these current (and future) tech developments have in common: you never see them carting something trailing wires. That means (you guessed it!) that they’re definitely using a central data source delivered via wireless network, a.k.a. the cloud.

After you’ve done all the work to, say, study an alien life form or design and program the perfect cocktail, you definitely don’t want to do that work twice. And, do you see folks slowing down to schedule a backup? Definitely not. Easy, online, always updating backups are the way to go.

So, we’re not going to say Backblaze Computer Backup makes the list as a sci-fi idea that we’ve made real; we’re just saying that it’s probably one of those things that people leave off-stage, like characters brushing their teeth on a regular basis. And, past or future, we’re here to remind you that you should always back up your data.

Things We Still Want (Get On It, Scientists!)

Everything we just listed is really cool and all, but let’s not forget that we are still waiting for some very important things. Help us out, scientists; we really need these:

- Flying cars

- Faster than light space travel

- Teleportation

- Matter replicators (3D printing isn’t quite there)

We feel compelled to add that, despite our jocular tone, the line between science and science fiction has always been something of a thin one. Studies have shown the influence of science fiction on real-world invention, and inventors like Motorola’s Martin Cooper have gone on record pointing to their inspiration in the imaginative works of science fiction.

So, that leaves us standing by for new developments! Let’s see what 2024 brings. Let us know in the comments section what cool tech in your life fits this brief.

The post Things That Used to Be Science Fiction (and Aren’t Anymore) appeared first on Backblaze Blog | Cloud Storage & Cloud Backup.

Broadway’s & Juliet | Cast and Creatives | Talks at Google

Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=QsYtZaTk2Os

Use Snowflake with Amazon MWAA to orchestrate data pipelines

Post Syndicated from Payal Singh original https://aws.amazon.com/blogs/big-data/use-snowflake-with-amazon-mwaa-to-orchestrate-data-pipelines/

This blog post is co-written with James Sun from Snowflake.

Customers rely on data from different sources such as mobile applications, clickstream events from websites, historical data, and more to deduce meaningful patterns to optimize their products, services, and processes. With a data pipeline, which is a set of tasks used to automate the movement and transformation of data between different systems, you can reduce the time and effort needed to gain insights from the data. Apache Airflow and Snowflake have emerged as powerful technologies for data management and analysis.

Amazon Managed Workflows for Apache Airflow (Amazon MWAA) is a managed workflow orchestration service for Apache Airflow that you can use to set up and operate end-to-end data pipelines in the cloud at scale. The Snowflake Data Cloud provides a single source of truth for all your data needs and allows your organization to store, analyze, and share large amounts of data. The Apache Airflow open-source community provides over 1,000 pre-built operators (plugins that simplify connections to services) for Apache Airflow to build data pipelines.

In this post, we provide an overview of orchestrating your data pipeline using Snowflake operators in your Amazon MWAA environment. We define the steps needed to set up the integration between Amazon MWAA and Snowflake. The solution provides an end-to-end automated workflow that includes data ingestion, transformation, analytics, and consumption.

Overview of solution

The following diagram illustrates our solution architecture.

The data used for transformation and analysis is based on the publicly available New York Citi Bike dataset. The data (zipped files), which includes rider demographics and trip data, is copied from the public Citi Bike Amazon Simple Storage Service (Amazon S3) bucket into your AWS account. Data is decompressed and stored in a different S3 bucket (transformed data can be stored in the same S3 bucket where data was ingested, but for simplicity, we’re using two separate S3 buckets). The transformed data is then made accessible to Snowflake for data analysis. The output of the queried data is published to Amazon Simple Notification Service (Amazon SNS) for consumption.

Amazon MWAA uses a directed acyclic graph (DAG) to run the workflows. In this post, we run three DAGs:

- DAG1 sets up the Amazon MWAA connection to authenticate to Snowflake. The Snowflake connection string is stored in AWS Secrets Manager, which is referenced in the DAG file.

- DAG2 creates the required Snowflake objects (database, table, storage integration, and stage).

- DAG3 runs the data pipeline.

The following diagram illustrates this workflow.

See the GitHub repo for the DAGs and other files related to the post.

Note that in this post, we’re using a DAG to create a Snowflake connection, but you can also create the Snowflake connection using the Airflow UI or CLI.
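To make the directed acyclic graph idea concrete, the run order of the three workflows can be modeled as a small dependency graph. This is only an illustrative sketch using Python's standard graphlib module, not Airflow code; the workflow labels are hypothetical names standing in for DAG1, DAG2, and DAG3.

```python
from graphlib import CycleError, TopologicalSorter

# Each key maps to the set of workflows that must finish before it can start.
# The labels are hypothetical stand-ins for DAG1, DAG2, and DAG3.
DEPENDENCIES = {
    "create_snowflake_connection": set(),
    "create_snowflake_objects": {"create_snowflake_connection"},
    "run_data_pipeline": {"create_snowflake_objects"},
}


def run_order(dependencies: dict) -> list:
    """Return one valid execution order for the given dependency graph."""
    return list(TopologicalSorter(dependencies).static_order())


def has_cycle(dependencies: dict) -> bool:
    """A graph with a cycle has no valid order, which is why workflows must be acyclic."""
    try:
        run_order(dependencies)
        return False
    except CycleError:
        return True


if __name__ == "__main__":
    print(run_order(DEPENDENCIES))
```

Because every step depends only on steps before it, a scheduler can always find a valid order, and a circular dependency is rejected up front.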

Prerequisites

To deploy the solution, you should have a basic understanding of Snowflake and Amazon MWAA with the following prerequisites:

- An AWS account in an AWS Region where Amazon MWAA is supported.

- A Snowflake account with admin credentials. If you don’t have an account, sign up for a 30-day free trial. Select the Snowflake enterprise edition for the AWS Cloud platform.

- Access to Amazon MWAA, Secrets Manager, and Amazon SNS.

- In this post, we’re using two S3 buckets, called airflow-blog-bucket-ACCOUNT_ID and citibike-tripdata-destination-ACCOUNT_ID. Amazon S3 supports global buckets, which means that each bucket name must be unique across all AWS accounts in all the Regions within a partition. If the S3 bucket name is already taken, choose a different S3 bucket name. Create the S3 buckets in your AWS account. We upload content to the S3 bucket later in the post. Replace ACCOUNT_ID with your own AWS account ID or any other unique identifier. The bucket details are as follows:

- airflow-blog-bucket-ACCOUNT_ID – The top-level bucket for Amazon MWAA-related files.

- airflow-blog-bucket-ACCOUNT_ID/requirements – The bucket used for storing the requirements.txt file needed to deploy Amazon MWAA.

- airflow-blog-bucket-ACCOUNT_ID/dags – The bucket used for storing the DAG files to run workflows in Amazon MWAA.

- airflow-blog-bucket-ACCOUNT_ID/dags/mwaa_snowflake_queries – The bucket used for storing the Snowflake SQL queries.

- citibike-tripdata-destination-ACCOUNT_ID – The bucket used for storing the transformed dataset.

When implementing the solution in this post, replace references to airflow-blog-bucket-ACCOUNT_ID and citibike-tripdata-destination-ACCOUNT_ID with the names of your own S3 buckets.
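As a small sketch of the naming step, the two bucket names can be derived from a unique suffix and checked before you try to create them. The regular expression below covers only a simplified subset of the S3 general-purpose bucket naming rules (3 to 63 characters; lowercase letters, digits, hyphens, and periods; a letter or digit at each end), and the function name is hypothetical.

```python
import re

# Simplified subset of the S3 bucket naming rules; it does not check
# every restriction (for example, adjacent periods or IP-address-like names).
_BUCKET_NAME = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")


def bucket_names(suffix: str) -> dict:
    """Build the two bucket names used in the post from a unique suffix."""
    names = {
        "airflow": f"airflow-blog-bucket-{suffix}",
        "destination": f"citibike-tripdata-destination-{suffix}",
    }
    for name in names.values():
        if not _BUCKET_NAME.match(name):
            raise ValueError(f"invalid S3 bucket name: {name!r}")
    return names


if __name__ == "__main__":
    # 123456789012 is a placeholder account ID.
    print(bucket_names("123456789012"))
```

A name that passes this check can still be rejected by Amazon S3 if another account already owns it, so treat the check as a first filter only.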

Set up the Amazon MWAA environment

First, you create an Amazon MWAA environment. Before deploying the environment, upload the requirements file to the airflow-blog-bucket-ACCOUNT_ID/requirements S3 bucket. The requirements file is based on Amazon MWAA version 2.6.3. If you’re testing on a different Amazon MWAA version, update the requirements file accordingly.

Complete the following steps to set up the environment:

- On the Amazon MWAA console, choose Create environment.

- Provide a name of your choice for the environment.

- Choose Airflow version 2.6.3.

- For the S3 bucket, enter the path of your bucket (s3://airflow-blog-bucket-ACCOUNT_ID).

- For the DAGs folder, enter the DAGs folder path (s3://airflow-blog-bucket-ACCOUNT_ID/dags).

- For the requirements file, enter the requirements file path (s3://airflow-blog-bucket-ACCOUNT_ID/requirements/requirements.txt).

- Choose Next.

- Under Networking, choose your existing VPC or choose Create MWAA VPC.

- Under Web server access, choose Public network.

- Under Security groups, leave Create new security group selected.

- For the Environment class, Encryption, and Monitoring sections, leave all values as default.

- In the Airflow configuration options section, choose Add custom configuration value and configure two values:

- Set Configuration option to secrets.backend and Custom value to airflow.providers.amazon.aws.secrets.secrets_manager.SecretsManagerBackend.

- Set Configuration option to secrets.backend_kwargs and Custom value to {"connections_prefix" : "airflow/connections", "variables_prefix" : "airflow/variables"}.

- In the Permissions section, leave the default settings and choose Create a new role.

- Choose Next.

- When the Amazon MWAA environment is available, assign S3 bucket permissions to the AWS Identity and Access Management (IAM) execution role (created during the Amazon MWAA install).

This will direct you to the created execution role on the IAM console.

For testing purposes, you can choose Add permissions and add the managed AmazonS3FullAccess policy to the role instead of providing restricted access. For this post, we provide only the required access to the S3 buckets.

- On the drop-down menu, choose Create inline policy.

- For Select Service, choose S3.

- Under Access level, specify the following:

- Expand List level and select ListBucket.

- Expand Read level and select GetObject.

- Expand Write level and select PutObject.

- Under Resources, choose Add ARN.

- On the Text tab, provide the following ARNs for S3 bucket access:

- arn:aws:s3:::airflow-blog-bucket-ACCOUNT_ID (use your own bucket).

- arn:aws:s3:::citibike-tripdata-destination-ACCOUNT_ID (use your own bucket).

- arn:aws:s3:::tripdata (this is the public S3 bucket where the Citi Bike dataset is stored; use the ARN as specified here).

- Under Resources, choose Add ARN.

- On the Text tab, provide the following ARNs for S3 bucket access:

- arn:aws:s3:::airflow-blog-bucket-ACCOUNT_ID/* (make sure to include the asterisk).

- arn:aws:s3:::citibike-tripdata-destination-ACCOUNT_ID/*.

- arn:aws:s3:::tripdata/* (this is the public S3 bucket where the Citi Bike dataset is stored; use the ARN as specified here).

- Choose Next.

- For Policy name, enter S3ReadWrite.

- Choose Create policy.

- Lastly, provide Amazon MWAA with permission to access Secrets Manager secret keys.

This step provides the Amazon MWAA execution role for your Amazon MWAA environment read access to the secret key in Secrets Manager.

The execution role should have the policies MWAA-Execution-Policy*, S3ReadWrite, and SecretsManagerReadWrite attached to it.
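The S3ReadWrite inline policy assembled through the console steps above corresponds roughly to the following policy document. This is a sketch built as a Python dictionary, assuming the bucket-level action (ListBucket) and the object-level actions (GetObject, PutObject) are split into two statements; the console may generate a differently shaped but equivalent document, and the function name and Sid values are hypothetical.

```python
import json


def s3_read_write_policy(own_buckets, public_buckets=("tripdata",)) -> dict:
    """Build an IAM policy document granting list, read, and write access."""
    buckets = list(own_buckets) + list(public_buckets)
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # ListBucket applies to the bucket itself, so no "/*" suffix.
                "Sid": "ListBuckets",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{b}" for b in buckets],
            },
            {
                # Object actions apply to keys inside the bucket, hence "/*".
                "Sid": "ReadWriteObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{b}/*" for b in buckets],
            },
        ],
    }


if __name__ == "__main__":
    policy = s3_read_write_policy(
        ["airflow-blog-bucket-ACCOUNT_ID", "citibike-tripdata-destination-ACCOUNT_ID"]
    )
    print(json.dumps(policy, indent=2))
```

The split mirrors why the console steps ask for two sets of ARNs: one set without the asterisk for bucket-level access and one with it for object-level access.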

When the Amazon MWAA environment is available, you can sign in to the Airflow UI from the Amazon MWAA console using the Open Airflow UI link.

Set up an SNS topic and subscription

Next, you create an SNS topic and add a subscription to the topic. Complete the following steps:

- On the Amazon SNS console, choose Topics from the navigation pane.

- Choose Create topic.

- For Topic type, choose Standard.

- For Name, enter mwaa_snowflake.

- Leave the rest as default.

- After you create the topic, navigate to the Subscriptions tab and choose Create subscription.

- For Topic ARN, choose mwaa_snowflake.

- Set the protocol to Email.

- For Endpoint, enter your email ID (you will get a notification in your email to accept the subscription).

By default, only the topic owner can publish and subscribe to the topic, so you need to modify the Amazon MWAA execution role access policy to allow Amazon SNS access.

- On the IAM console, navigate to the execution role you created earlier.

- On the drop-down menu, choose Create inline policy.

- For Select service, choose SNS.

- Under Actions, expand Write access level and select Publish.

- Under Resources, choose Add ARN.

- On the Text tab, specify the ARN arn:aws:sns:<<region>>:<<your_account_ID>>:mwaa_snowflake.

- Choose Next.

- For Policy name, enter SNSPublishOnly.

- Choose Create policy.

Configure a Secrets Manager secret

Next, we set up Secrets Manager, which Apache Airflow supports as an alternative secrets backend for storing Snowflake connection information and credentials.

To create the connection string, the Snowflake host and account name are required. Log in to your Snowflake account, and under the Worksheets menu, choose the plus sign and select SQL worksheet. Using the worksheet, run the following SQL commands to find the host and account name.

Run the following query for the host name:

Run the following query for the account name:

Next, we configure the secret in Secrets Manager.

- On the Secrets Manager console, choose Store a new secret.

- For Secret type, choose Other type of secret.

- Under Key/Value pairs, choose the Plaintext tab.

- In the text field, enter the following code and modify the string to reflect your Snowflake account information:

{"host": "<<snowflake_host_name>>", "account":"<<snowflake_account_name>>","user":"<<snowflake_username>>","password":"<<snowflake_password>>","schema":"mwaa_schema","database":"mwaa_db","role":"accountadmin","warehouse":"dev_wh"}

For example:

{"host": "xxxxxx.snowflakecomputing.com", "account":"xxxxxx" ,"user":"xxxxx","password":"*****","schema":"mwaa_schema","database":"mwaa_db", "role":"accountadmin","warehouse":"dev_wh"}

The values for the database name, schema name, and role should be as mentioned earlier. The account, host, user, password, and warehouse can differ based on your setup.

- Choose Next.

- For Secret name, enter airflow/connections/snowflake_accountadmin.

- Leave all other values as default and choose Next.

- Choose Store.

Take note of the Region in which the secret was created under Secret ARN. We later define it as a variable in the Airflow UI.
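The secret name and the secret value fit together as sketched below. The sketch assumes the Secrets Manager backend looks up a connection by joining the connections_prefix configured earlier and the connection ID with a "/" separator, which is how the name airflow/connections/snowflake_accountadmin is formed; the function names and the example credentials are hypothetical.

```python
import json

# Same value as the secrets.backend_kwargs configuration option set earlier.
BACKEND_KWARGS = (
    '{"connections_prefix" : "airflow/connections", '
    '"variables_prefix" : "airflow/variables"}'
)


def secret_name(conn_id: str, backend_kwargs: str = BACKEND_KWARGS, sep: str = "/") -> str:
    """Derive the secret name for a connection ID (separator is an assumption)."""
    prefix = json.loads(backend_kwargs)["connections_prefix"]
    return f"{prefix}{sep}{conn_id}"


def snowflake_secret(host: str, account: str, user: str, password: str,
                     warehouse: str = "dev_wh") -> str:
    """Build the plaintext secret value in the shape shown in the steps above."""
    return json.dumps({
        "host": host,
        "account": account,
        "user": user,
        "password": password,
        "schema": "mwaa_schema",
        "database": "mwaa_db",
        "role": "accountadmin",
        "warehouse": warehouse,
    })


if __name__ == "__main__":
    print(secret_name("snowflake_accountadmin"))
    # Placeholder values only; never hard-code real credentials.
    print(snowflake_secret("xxxxxx.snowflakecomputing.com", "xxxxxx", "xxxxx", "*****"))
```

Generating the value with json.dumps avoids the quoting mistakes that are easy to make when editing the JSON string by hand.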

Configure Snowflake access permissions and IAM role

Next, log in to your Snowflake account. Ensure the account you are using has account administrator access. Create a SQL worksheet. Under the worksheet, create a warehouse named dev_wh.

The following is an example SQL command:

For Snowflake to read data from and write data to an S3 bucket referenced in an external (S3 bucket) stage, a storage integration is required. Follow the steps defined in Option 1: Configuring a Snowflake Storage Integration to Access Amazon S3 (only perform Steps 1 and 2, as described in this section).

Configure access permissions for the S3 bucket

While creating the IAM policy, a sample policy document code is needed (see the following code), which provides Snowflake with the required permissions to load or unload data using a single bucket and folder path. The bucket name used in this post is citibike-tripdata-destination-ACCOUNT_ID. You should modify it to reflect your bucket name.

Create the IAM role

Next, you create the IAM role to grant privileges on the S3 bucket containing your data files. After creation, record the Role ARN value located on the role summary page.

Configure variables

Lastly, configure the variables that will be accessed by the DAGs in Airflow. Log in to the Airflow UI and on the Admin menu, choose Variables and the plus sign.

Add four variables with the following key/value pairs:

- Key aws_role_arn with value <<snowflake_aws_role_arn>> (the ARN for role mysnowflakerole noted earlier)

- Key destination_bucket with value <<bucket_name>> (for this post, the bucket used is citibike-tripdata-destination-ACCOUNT_ID)

- Key target_sns_arn with value <<sns_Arn>> (the SNS topic in your account)

- Key sec_key_region with value <<region_of_secret_deployment>> (the Region where the secret in Secrets Manager was created)

The following screenshot illustrates where to find the SNS topic ARN.

The Airflow UI will now have the variables defined, which will be referred to by the DAGs.
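If you prefer to prepare the four variables in one place before entering them, the following sketch validates them and renders them as a JSON object of key/value pairs. It assumes your Airflow version offers a JSON import option on the Variables page; the function name and the example values are hypothetical placeholders.

```python
import json

REQUIRED_KEYS = (
    "aws_role_arn",
    "destination_bucket",
    "target_sns_arn",
    "sec_key_region",
)


def variables_json(values: dict) -> str:
    """Check that all four variables are present and render them as JSON."""
    missing = [key for key in REQUIRED_KEYS if not values.get(key)]
    if missing:
        raise ValueError(f"missing variables: {', '.join(missing)}")
    return json.dumps({key: values[key] for key in REQUIRED_KEYS}, indent=2)


if __name__ == "__main__":
    # Placeholder values; substitute the ARNs, bucket, and Region from your setup.
    print(variables_json({
        "aws_role_arn": "arn:aws:iam::111122223333:role/mysnowflakerole",
        "destination_bucket": "citibike-tripdata-destination-111122223333",
        "target_sns_arn": "arn:aws:sns:us-east-1:111122223333:mwaa_snowflake",
        "sec_key_region": "us-east-1",
    }))
```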

Congratulations, you have completed all the configuration steps.

Run the DAG

Let’s look at how to run the DAGs. To recap:

- DAG1 (create_snowflake_connection_blog.py) – Creates the Snowflake connection in Apache Airflow. This connection will be used to authenticate with Snowflake. The Snowflake connection string is stored in Secrets Manager, which is referenced in the DAG file.

- DAG2 (create-snowflake_initial-setup_blog.py) – Creates the database, schema, storage integration, and stage in Snowflake.

- DAG3 (run_mwaa_datapipeline_blog.py) – Runs the data pipeline, which will unzip files from the source public S3 bucket and copy them to the destination S3 bucket. The next task will create a table in Snowflake to store the data. Then the data from the destination S3 bucket will be copied into the table using a Snowflake stage. After the data is successfully copied, a view will be created in Snowflake, on top of which the SQL queries will be run.
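The unzip step at the start of DAG3 can be sketched with the standard library alone. This is not the code from the DAG file: here an in-memory byte string stands in for the object downloaded from the source bucket, the returned dictionary stands in for the objects written to the destination bucket, and both function names are hypothetical.

```python
import io
import zipfile


def unzip_members(archive_bytes: bytes) -> dict:
    """Return {member name: file contents} for every file in a zip archive."""
    extracted = {}
    with zipfile.ZipFile(io.BytesIO(archive_bytes)) as archive:
        for info in archive.infolist():
            if info.is_dir():
                continue  # Skip directory entries; only files are copied.
            extracted[info.filename] = archive.read(info)
    return extracted


def make_zip(files: dict) -> bytes:
    """Helper for the demo: build a zip archive in memory."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for name, data in files.items():
            archive.writestr(name, data)
    return buffer.getvalue()


if __name__ == "__main__":
    sample = make_zip({"trips.csv": b"start_station,end_station\nA,B\n"})
    for name, data in unzip_members(sample).items():
        print(name, len(data), "bytes")
```

Working on byte buffers rather than local files keeps the step independent of the worker's disk, which suits a task that only moves data between two buckets.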

To run the DAGs, complete the following steps:

- Upload the DAGs to the S3 bucket airflow-blog-bucket-ACCOUNT_ID/dags.

- Upload the SQL query files to the S3 bucket airflow-blog-bucket-ACCOUNT_ID/dags/mwaa_snowflake_queries.

- Log in to the Apache Airflow UI.

- Locate DAG1 (create_snowflake_connection_blog), un-pause it, and choose the play icon to run it.

You can view the run state of the DAG using the Grid or Graph view in the Airflow UI.

After DAG1 runs, the Snowflake connection snowflake_conn_accountadmin is created on the Admin, Connections menu.

- Locate and run DAG2 (create-snowflake_initial-setup_blog).

After DAG2 runs, the following objects are created in Snowflake:

- The database mwaa_db

- The schema mwaa_schema

- The storage integration mwaa_citibike_storage_int

- The stage mwaa_citibike_stg

Before running the final DAG, the trust relationship for the IAM user needs to be updated.

- Log in to your Snowflake account using your admin account credentials.

- Open your SQL worksheet created earlier and run the following command:

mwaa_citibike_storage_int is the name of the integration created by DAG2 in the previous step.

From the output, record the property value of the following two properties:

- STORAGE_AWS_IAM_USER_ARN – The IAM user created for your Snowflake account.

- STORAGE_AWS_EXTERNAL_ID – The external ID that is needed to establish a trust relationship.

Now we grant the Snowflake IAM user permissions to access bucket objects.

- On the IAM console, choose Roles in the navigation pane.

- Choose the role mysnowflakerole.

- On the Trust relationships tab, choose Edit trust relationship.

- Modify the policy document with the DESC STORAGE INTEGRATION output values you recorded. For example:

The AWS role ARN and ExternalId will be different for your environment based on the output of the DESC STORAGE INTEGRATION query.
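As a sketch of how the two recorded values fit into the trust relationship, the policy document can be built as follows. It assumes the usual cross-account pattern in which the Snowflake IAM user is the principal allowed to assume the role, and the external ID is enforced as a condition; the function name and the example values are placeholders, not output from a real account.

```python
import json


def snowflake_trust_policy(storage_aws_iam_user_arn: str,
                           storage_aws_external_id: str) -> dict:
    """Build a trust policy from the DESC STORAGE INTEGRATION output values."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # STORAGE_AWS_IAM_USER_ARN: who may assume the role.
                "Principal": {"AWS": storage_aws_iam_user_arn},
                "Action": "sts:AssumeRole",
                # STORAGE_AWS_EXTERNAL_ID: must match on every assume-role call.
                "Condition": {
                    "StringEquals": {"sts:ExternalId": storage_aws_external_id}
                },
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder values for illustration only.
    policy = snowflake_trust_policy(
        "arn:aws:iam::111122223333:user/example-snowflake-user",
        "EXAMPLE_EXTERNAL_ID",
    )
    print(json.dumps(policy, indent=2))
```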

- Locate and run the final DAG (run_mwaa_datapipeline_blog).

At the end of the DAG run, the data is ready for querying. In this example, the query (finding the top start and destination stations) is run as part of the DAG and the output can be viewed from the Airflow XCOMs UI.

In the DAG run, the output is also published to Amazon SNS and based on the subscription, an email notification is sent out with the query output.
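To illustrate what the "top start and destination stations" result contains, the same aggregation can be sketched in plain Python. In the post this work is done by SQL running in Snowflake; the record field names start_station and end_station and both function names are assumptions made for the example.

```python
from collections import Counter


def top_stations(trips, n: int = 3) -> dict:
    """Count trips per start and end station and keep the n busiest of each."""
    starts = Counter(trip["start_station"] for trip in trips)
    ends = Counter(trip["end_station"] for trip in trips)
    return {
        "top_start": starts.most_common(n),
        "top_destination": ends.most_common(n),
    }


def format_message(result: dict) -> str:
    """Render the result as plain text, suitable for an email notification body."""
    lines = ["Top start stations:"]
    lines += [f"  {name}: {count}" for name, count in result["top_start"]]
    lines.append("Top destination stations:")
    lines += [f"  {name}: {count}" for name, count in result["top_destination"]]
    return "\n".join(lines)


if __name__ == "__main__":
    sample_trips = [
        {"start_station": "A", "end_station": "B"},
        {"start_station": "A", "end_station": "C"},
        {"start_station": "B", "end_station": "C"},
    ]
    print(format_message(top_stations(sample_trips, n=2)))
```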

Another method to visualize the results is directly from the Snowflake console using the Snowflake worksheet. The following is an example query:

There are different ways to visualize the output based on your use case.

As we observed, DAG1 and DAG2 need to be run only one time to set up the Amazon MWAA connection and Snowflake objects. DAG3 can be scheduled to run every week or month. With this solution, the user examining the data doesn’t have to log in to either Amazon MWAA or Snowflake. You can have an automated workflow triggered on a schedule that will ingest the latest data from the Citi Bike dataset and provide the top start and destination stations for the given dataset.

Clean up

To avoid incurring future charges, delete the AWS resources (IAM users and roles, Secrets Manager secrets, Amazon MWAA environment, SNS topics and subscription, S3 buckets) and Snowflake resources (database, stage, storage integration, view, tables) created as part of this post.

Conclusion

In this post, we demonstrated how to set up an Amazon MWAA connection for authenticating to Snowflake as well as to AWS using AWS user credentials. We used a DAG to automate creating the Snowflake objects such as database, tables, and stage using SQL queries. We also orchestrated the data pipeline using Amazon MWAA, which ran tasks related to data transformation as well as Snowflake queries. We used Secrets Manager to store Snowflake connection information and credentials and Amazon SNS to publish the data output for end consumption.

With this solution, you have an automated end-to-end orchestration of your data pipeline encompassing ingesting, transformation, analysis, and data consumption.

To learn more, refer to the following resources:

- Getting started with snowflake – Zero to Snowflake

- Amazon MWAA for Analytics Workshop

- Snowflake Airflow operators

About the authors

Payal Singh is a Partner Solutions Architect at Amazon Web Services, focused on the Serverless platform. She is responsible for helping partners and customers modernize and migrate their applications to AWS.

James Sun is a Senior Partner Solutions Architect at Snowflake. He actively collaborates with strategic cloud partners like AWS, supporting product and service integrations, as well as the development of joint solutions. He has held senior technical positions at tech companies such as EMC, AWS, and MapR Technologies. With over 20 years of experience in storage and data analytics, he also holds a PhD from Stanford University.

Bosco Albuquerque is a Sr. Partner Solutions Architect at AWS and has over 20 years of experience working with database and analytics products from enterprise database vendors and cloud providers. He has helped technology companies design and implement data analytics solutions and products.

Manuj Arora is a Sr. Solutions Architect for Strategic Accounts in AWS. He focuses on Migration and Modernization capabilities and offerings in AWS. Manuj has worked as a Partner Success Solutions Architect in AWS over the last 3 years and worked with partners like Snowflake to build solution blueprints that are leveraged by the customers. Outside of work, he enjoys traveling, playing tennis and exploring new places with family and friends.

Comic for 2023.10.31 – Does This Hurt

Post Syndicated from Explosm.net original https://explosm.net/comics/does-this-hurt

New Cyanide and Happiness Comic

Prepare your AWS workloads for the “Operational risks and resilience – banks” FINMA Circular

Post Syndicated from Margo Cronin original https://aws.amazon.com/blogs/security/prepare-your-aws-workloads-for-the-operational-risks-and-resilience-banks-finma-circular/

In December 2022, FINMA, the Swiss Financial Market Supervisory Authority, announced a fully revised circular called Operational risks and resilience – banks that will take effect on January 1, 2024. The circular will replace the Swiss Bankers Association’s Recommendations for Business Continuity Management (BCM), which is currently recognized as a minimum standard. The new circular also adopts the revised principles for managing operational risks, and the new principles on operational resilience, that the Basel Committee on Banking Supervision published in March 2021.

In this blog post, we share key considerations for AWS customers and regulated financial institutions to help them prepare for, and align to, the new circular.

AWS previously announced the publication of the AWS User Guide to Financial Services Regulations and Guidelines in Switzerland. The guide refers to certain rules applicable to financial institutions in Switzerland, including banks, insurance companies, stock exchanges, securities dealers, portfolio managers, trustees, and other financial entities that FINMA oversees (directly or indirectly).

FINMA has previously issued the following circulars to help regulated financial institutions understand approaches to due diligence, third party management, and key technical and organizational controls to be implemented in cloud outsourcing arrangements, particularly for material workloads:

- 2018/03 FINMA Circular Outsourcing – banks and insurers (31.10.2019)

- 2008/21 FINMA Circular Operational Risks – Banks (31.10.2019) – Principle 4 Technology Infrastructure

- 2008/21 FINMA Circular Operational Risks – Banks (31.10.2019) – Appendix 3 Handling of electronic Client Identifying Data

- 2013/03 Auditing (04.11.2020) – Information Technology (21.04.2020)

- BCM minimum standards proposed by the Swiss Insurance Association (01.06.2015) and Swiss Bankers Association (29.08.2013)

Operational risk management: Critical data

The circular defines critical data as follows:

“Critical data are data that, in view of the institution’s size, complexity, structure, risk profile and business model, are of such crucial significance that they require increased security measures. These are data that are crucial for the successful and sustainable provision of the institution’s services or for regulatory purposes. When assessing and determining the criticality of data, the confidentiality as well as the integrity and availability must be taken into account. Each of these three aspects can determine whether data is classified as critical.”

This definition is consistent with the AWS approach to privacy and security. We believe that for AWS to realize its full potential, customers must have control over their data. This includes the following commitments:

- Control over the location of your data

- Verifiable control over data access

- Ability to encrypt everything everywhere

- Resilience of AWS

These commitments further demonstrate our dedication to securing your data: it’s our highest priority. We implement rigorous contractual, technical, and organizational measures to help protect the confidentiality, integrity, and availability of your content regardless of which AWS Region you select. You have complete control over your content through powerful AWS services and tools that you can use to determine where to store your data, how to secure it, and who can access it.

You also have control over the location of your content on AWS. For example, in Europe, at the time of publication of this blog post, customers can deploy their data into any of eight Regions (for an up-to-date list of Regions, see AWS Global Infrastructure). One of these Regions is the Europe (Zurich) Region, also known by its API name ‘eu-central-2’, which customers can use to store data in Switzerland. Additionally, Swiss customers can rely on the terms of the AWS Swiss Addendum to the AWS Data Processing Addendum (DPA), which applies automatically when Swiss customers use AWS services to process personal data under the new Federal Act on Data Protection (nFADP).
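As a minimal sketch of how a data-location policy like this could be checked, the following Python example flags inventory entries stored outside an approved set of Regions. The `Resource` record, the inventory contents, and the choice of `eu-central-2` as the only approved Region are assumptions made for illustration; none of them are AWS API types or recommendations from the circular.

```python
from dataclasses import dataclass

# Assumption: the only Region approved for this workload is Europe (Zurich).
ALLOWED_REGIONS = frozenset({"eu-central-2"})


@dataclass(frozen=True)
class Resource:
    """A hypothetical inventory record; not an AWS API type."""
    name: str
    region: str


def find_out_of_region(resources, allowed=ALLOWED_REGIONS):
    """Return the resources whose Region is not in the allowed set."""
    return [r for r in resources if r.region not in allowed]


# Illustrative inventory; in practice this would come from your own tooling.
inventory = [
    Resource("customer-records", "eu-central-2"),
    Resource("analytics-scratch", "eu-west-1"),
]

violations = find_out_of_region(inventory)
for r in violations:
    print(f"{r.name} is stored in {r.region}, outside the approved Regions")
```

A check of this kind only reports on an inventory you already have; it does not replace preventive controls that restrict where resources can be created.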

AWS continually monitors the evolving privacy, regulatory, and legislative landscape to help identify changes and determine what tools our customers might need to meet their compliance requirements. Maintaining customer trust is an ongoing commitment. We strive to inform you of the privacy and security policies, practices, and technologies that we’ve put in place. Our commitments, as described in the Data Privacy FAQ, include the following:

- Access – As a customer, you maintain full control of your content that you upload to the AWS services under your AWS account, and responsibility for configuring access to AWS services and resources. We provide an advanced set of access, encryption, and logging features to help you do this effectively (for example, AWS Identity and Access Management, AWS Organizations, and AWS CloudTrail). We provide APIs that you can use to configure access control permissions for the services that you develop or deploy in an AWS environment. We never use your content or derive information from it for marketing or advertising purposes.

- Storage – You choose the AWS Regions in which your content is stored. You can replicate and back up your content in more than one Region. We will not move or replicate your content outside of your chosen AWS Regions except as agreed with you.

- Security – You choose how your content is secured. We offer you industry-leading encryption features to protect your content in transit and at rest, and we provide you with the option to manage your own encryption keys. These data protection features include:

  - Data encryption capabilities available in over 100 AWS services.

  - Flexible key management options using AWS Key Management Service (AWS KMS), so you can choose whether to have AWS manage your encryption keys or to keep complete control over your keys.

- Disclosure of customer content – We will not disclose customer content unless we’re required to do so to comply with the law or a binding order of a government body. If a governmental body sends AWS a demand for your customer content, we will attempt to redirect the governmental body to request that data directly from you. If compelled to disclose your customer content to a governmental body, we will give you reasonable notice of the demand to allow you to seek a protective order or other appropriate remedy, unless AWS is legally prohibited from doing so.

- Security assurance – We have developed a security assurance program that uses current recommendations for global privacy and data protection to help you operate securely on AWS, and to make the best use of our security control environment. These security protections and control processes are validated by multiple independent third-party assessments, including the FINMA International Standard on Assurance Engagements (ISAE) 3000 Type II attestation report.

Additionally, FINMA guidelines lay out requirements for the written agreement between a Swiss financial institution and its service provider, including access and audit rights. For Swiss financial institutions that run regulated workloads on AWS, we offer the Swiss Financial Services Addendum to address the contractual and audit requirements of the FINMA guidelines. We also provide these institutions the ability to comply with the audit requirements in the FINMA guidelines through the AWS Security & Audit Series, including participation in an Audit Symposium, to facilitate customer audits. To help align with regulatory requirements and expectations, our FINMA addendum and audit program incorporate feedback that we’ve received from a variety of financial supervisory authorities across EU member states. To learn more about the Swiss Financial Services addendum or about the audit engagements offered by AWS, reach out to your AWS account team.

Resilience

Customers need control over their workloads and high availability to help prepare for events such as supply chain disruptions, network interruptions, and natural disasters. Each AWS Region is composed of multiple Availability Zones (AZs). An Availability Zone is one or more discrete data centers with redundant power, networking, and connectivity in an AWS Region. To better isolate issues and achieve high availability, you can partition applications across multiple AZs in the same Region. If you are running workloads on premises or in intermittently connected or remote use cases, you can use our services that provide specific capabilities for offline data and remote compute and storage. We will continue to enhance our range of sovereign and resilient options, to help you sustain operations through disruption or disconnection.
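To illustrate the idea of partitioning an application across Availability Zones, here is a minimal Python sketch that places instances round-robin across zones and then shows which instances remain if one zone becomes unavailable. The zone names, the instance names, and both helper functions are assumptions for illustration only; this is a planning model, not a call into any AWS API.

```python
from collections import Counter
from itertools import cycle

# Assumption: three Availability Zones in the Europe (Zurich) Region.
AVAILABILITY_ZONES = ["eu-central-2a", "eu-central-2b", "eu-central-2c"]


def spread_across_azs(instance_names, zones=AVAILABILITY_ZONES):
    """Assign each instance to a zone in round-robin order."""
    return dict(zip(instance_names, cycle(zones)))


def survivors(placement, failed_zone):
    """Return the instances that remain if one zone becomes unavailable."""
    return [name for name, zone in placement.items() if zone != failed_zone]


# Six hypothetical application instances, two per zone.
placement = spread_across_azs([f"app-{i}" for i in range(6)])
print(Counter(placement.values()))
print(survivors(placement, "eu-central-2a"))
```

With an even spread over three zones, losing any single zone leaves two thirds of the instances running, which is the kind of isolation the multi-AZ design aims for.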

FINMA incorporates the principles of operational resilience in the newest circular 2023/01. In line with the efforts of the European Commission’s proposal for the Digital Operational Resilience Act (DORA), FINMA outlines requirements for regulated institutions to identify critical functions and their tolerance for disruption. Continuity of service, especially for critical economic functions, is a key prerequisite for financial stability. AWS recognizes that financial institutions need to comply with sector-specific regulatory obligations and requirements regarding operational resilience. AWS has published the whitepaper Amazon Web Services’ Approach to Operational Resilience in the Financial Sector and Beyond, in which we discuss how AWS and customers build for resiliency on the AWS Cloud. AWS provides resilient infrastructure and services, which financial institution customers can rely on as they design their applications to align with FINMA regulatory and compliance obligations.

AWS previously announced the third issuance of the FINMA ISAE 3000 Type II attestation report. Customers can access the entire report in AWS Artifact. To learn more about the list of certified services and Regions, see the FINMA ISAE 3000 Type 2 Report and AWS Services in Scope for FINMA.

AWS is committed to adding new services into our future FINMA program scope based on your architectural and regulatory needs. If you have questions about the FINMA report, or how your workloads on AWS align to the FINMA obligations, contact your AWS account team. We will also help support customers as they look for new ways to experiment, remain competitive, meet consumer expectations, and develop new products and services on AWS that align with the new regulatory framework.

To learn more about our compliance and security programs, and about common privacy and data protection considerations, see AWS Compliance Programs and the dedicated AWS Compliance Center for Switzerland. As always, we value your feedback and questions; reach out to the AWS Compliance team through the Contact Us page.