How we think about browsers

Post Syndicated from Keith Cirkel original https://github.blog/2022-06-10-how-we-think-about-browsers/

At GitHub, we believe it’s not fully shipped until it’s fast. JavaScript makes a big impact on how pages perform. One way we work to improve JavaScript performance is to make changes to the native syntax and polyfills we ship. For example, in January of this year, we updated our compiler to output native ES2019 code, shipping native syntax for optional catch binding.
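As a small illustration of the ES2019 feature mentioned above, the sketch below contrasts a `catch` clause with an unused binding against the optional catch binding form. The function names are hypothetical and are not taken from GitHub's codebase.

```javascript
// Before ES2019: the catch clause had to declare a binding, even if unused.
function parseJsonLegacy(text) {
  try {
    return JSON.parse(text);
  } catch (unusedError) {
    return null;
  }
}

// ES2019 optional catch binding: the `(unusedError)` part can be omitted,
// which saves a few bytes at every call site that ignores the error.
function parseJson(text) {
  try {
    return JSON.parse(text);
  } catch {
    return null;
  }
}
```

Both functions behave the same; only the shipped syntax differs.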

When a very old browser encounters native syntax for a newer language feature, it fails to parse the script, so none of that JavaScript runs. However, because GitHub is built on the principle of progressive enhancement, users of older browsers can still use GitHub’s basic features, while users with more capable browsers get a faster experience.
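To make the parsing behavior concrete, here is a minimal sketch of syntax feature detection. It is an illustration only, not a description of how GitHub detects browser capabilities; `canParse` and `supportsES2020` are hypothetical names, and the approach assumes the page's Content Security Policy allows the `Function` constructor.

```javascript
// Returns true when the engine can parse `source`. The code is never run:
// the Function constructor only parses the body when it is constructed.
function canParse(source) {
  try {
    new Function(source);
    return true;
  } catch (error) {
    // A SyntaxError here means the engine does not understand the syntax.
    // Other errors (for example a CSP violation) are also treated as "no".
    return false;
  }
}

// Older engines cannot parse optional chaining or nullish coalescing.
const supportsES2020 = canParse("var a = {}; a?.b ?? 0;");
```

A page built with progressive enhancement keeps working when a check like this is false, because the core functionality does not depend on the script.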

GitHub will soon be serving JavaScript using syntax features found in the ECMAScript 2020 standard, which includes the optional chaining and nullish coalescing operators. This change will lead to a 10kb reduction in JavaScript across the site.
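The following sketch shows why native ES2020 syntax tends to be smaller than its down-leveled equivalent. The data shape and function names are assumptions made for illustration, and the legacy version is hand-written rather than actual compiler output.

```javascript
// ES2020: optional chaining (?.) and nullish coalescing (??).
function ownerLogin(repo) {
  return repo?.owner?.login ?? "unknown";
}

// A hand-written equivalent that avoids ES2020 syntax, similar in spirit
// to what a compiler has to emit for older targets.
function ownerLoginLegacy(repo) {
  var owner = repo === null || repo === undefined ? undefined : repo.owner;
  var login = owner === null || owner === undefined ? undefined : owner.login;
  return login !== null && login !== undefined ? login : "unknown";
}

// `??` only falls back on null or undefined, unlike `||`,
// which also falls back on 0, "" and false.
const pageSizeNullish = 0 ?? 30; // 0
const pageSizeOr = 0 || 30; // 30
```

Repeated across a large codebase, the extra checks in the legacy form add up, which is consistent with the size reduction described above.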

We want to take this opportunity to go into detail about how we think about browser support. We will share data about our customers’ browser usage patterns and introduce you to some of the tools we use to make sure our customers are getting the best experience, including our recently open-sourced browser support library.

What browsers do our customers use?

To monitor the performance of pages, we collect some usage information. We parse User-Agent headers as part of our analytics, which lets us make informed decisions based on the browsers our users are running. These decisions include which browsers we run automated tests on, how we configure our static analysis tools, and even which features we ship. Around 95% of requests to our web services come from browsers with an identifying user agent string. Another 1% of requests have no User-Agent header, and the remaining 4% come from scripts, like Python (2%), or programs like cURL (0.5%).
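As a rough sketch of what User-Agent classification can look like, the example below maps a header value to a browser name and major version. It is not GitHub's analytics code: the pattern list, the `classifyUserAgent` name, and the returned object shape are all assumptions, and real-world parsing needs many more cases.

```javascript
// Order matters: Edge, Opera and Samsung Internet also contain "Chrome/",
// and Chrome also contains "Safari/", so the specific tokens come first.
const BROWSER_PATTERNS = [
  ["Edge", /Edg\/(\d+)/],
  ["Opera", /OPR\/(\d+)/],
  ["Samsung Internet", /SamsungBrowser\/(\d+)/],
  ["Chrome", /Chrome\/(\d+)/],
  ["Firefox", /Firefox\/(\d+)/],
  ["Safari", /Version\/(\d+).*Safari\//],
  ["Internet Explorer", /Trident\/.*rv:(\d+)/],
];

function classifyUserAgent(userAgent) {
  // Requests without a User-Agent header are counted separately.
  if (!userAgent) return { kind: "missing" };
  for (const [name, pattern] of BROWSER_PATTERNS) {
    const match = pattern.exec(userAgent);
    if (match) return { kind: "browser", name, major: Number(match[1]) };
  }
  // Scripts and tools such as curl or Python clients end up here.
  return { kind: "other" };
}
```

For example, `classifyUserAgent("curl/7.79.1")` returns `{ kind: "other" }`, while a typical Edge header is reported as Edge rather than Chrome because the `Edg/` pattern is checked first.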

We encourage users to use the latest versions of Chrome, Edge, Firefox, or Safari, and our data shows that a majority of users do so. Here’s what the browser market share looked like for visits to github.com between May 9-13, 2022:

| Browser | Beta | Latest | -1 | -2 | -3 | -4 | -5 | -6 | -7 | -8 | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Chrome | 0.2950% | 53.0551% | 12.7103% | 1.8120% | 0.8076% | 0.4737% | 0.5504% | 0.1728% | 0.1677% | 0.1029% | 70.1478% |
| Edge | 0.0000% | 6.3404% | 0.5328% | 0.0978% | 0.0432% | 0.0202% | 0.0143% | 0.0063% | 0.0058% | 0.0046% | 7.0657% |
| Firefox | 0.6525% | 7.7374% | 2.9717% | 0.2243% | 0.1041% | 0.1018% | 0.0541% | 0.0396% | 0.0219% | 0.0172% | 11.9249% |
| Safari | 0.0000% | 2.8802% | 0.7049% | 0.2110% | 0.0000% | 0.3288% | 0.0000% | 0.0696% | 0.0000% | 0.0094% | 4.2038% |
| Opera | 0.0030% | 0.2650% | 1.1173% | 0.0112% | 0.0044% | 0.0043% | 0.0016% | 0.0017% | 0.0015% | 0.0011% | 1.4112% |
| Internet Explorer | 0.0000% | 0.0658% | 0.0001% | 0.0001% | 0.0000% | 0.0000% | 0.0001% | 0.0000% | 0.0000% | 0.0000% | 0.0662% |
| Samsung Internet | 0.0000% | 0.0276% | 0.0007% | 0.0012% | 0.0008% | 0.0000% | 0.0000% | 0.0000% | 0.0000% | 0.0000% | 0.0302% |
| Total | 0.9507% | 70.3716% | 18.0379% | 2.3576% | 0.9602% | 0.9289% | 0.6207% | 0.2901% | 0.1968% | 0.1352% | 94.8498% |

The table above shows two dimensions: market share across browser vendors, and market share across versions. Looking at traffic with a branded user-agent string shows that roughly 95% of requests come from one of seven browsers. It also shows us that, perhaps unsurprisingly, the majority of requests come from Google Chrome (more than 70%), followed by Firefox (12%), Edge (7%), Safari (4.2%), and Opera (1.4%). Every other browser vendor represents significantly less than 1% of traffic.
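The Total row of the table can also be read cumulatively, to estimate how much traffic is covered by supporting the latest N releases of each browser. The sketch below does that arithmetic with the figures from the Total row (beta traffic excluded); the variable and function names are hypothetical.

```javascript
// Share of all requests by release age, copied from the Total row above.
const totalShareByVersion = [
  ["Latest", 70.3716],
  ["-1", 18.0379],
  ["-2", 2.3576],
  ["-3", 0.9602],
  ["-4", 0.9289],
  ["-5", 0.6207],
  ["-6", 0.2901],
  ["-7", 0.1968],
  ["-8", 0.1352],
];

// Sums the share of the newest `count` releases (in percent of requests).
function cumulativeShare(rows, count) {
  return rows
    .slice(0, count)
    .reduce((sum, [, share]) => sum + share, 0);
}

// Print the running total for each support window.
for (let count = 1; count <= totalShareByVersion.length; count++) {
  const label = totalShareByVersion[count - 1][0];
  console.log(`through ${label}: ${cumulativeShare(totalShareByVersion, count).toFixed(4)}%`);
}
```

Supporting only the latest and previous release of each browser already accounts for about 88.4% of all requests, and each additional older release adds progressively less.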

The fall-off for outdated versions of browsers is very steep. While over 70% of requests come from the latest release of a browser, 18% come from the previous release. Requests coming from three versions behind the latest fall to less than 1%. These numbers tell us that we can make the most impact by concentrating on Chrome, Firefox, Edge, and Safari, in that order. That’s not the whole story, though. Another dimension to look at is how version usage changes over time:

| Date | 15.4 | 15.3 | 15.2 | 15.1 | 15.0 | 14.1 | 14.0 | 13.1 | 13.0 | 12.1 | 12.0 | <12 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2022-01-01 | 0.2130% | 1.9933% | 31.9792% | 25.3472% | 6.1477% | 11.5005% | 3.9045% | 2.3446% | 0.5210% | 0.9379% | 0.0336% | 15.0773% |

| 2022-01-02 | 0.2548% | 2.0334% | 32.8731% | 25.9360% | 6.4715% | 12.0837% | 3.7401% | 2.4652% | 0.5292% | 0.8598% | 0.0297% | 12.7235% |

| 2022-01-03 | 0.3078% | 1.6285% | 34.9256% | 27.0666% | 7.3738% | 13.7125% | 3.7582% | 2.1204% | 0.4217% | 0.6152% | 0.0238% | 8.0459% |

| 2022-01-04 | 0.3637% | 1.4860% | 35.6528% | 26.9236% | 7.6980% | 14.2046% | 3.6510% | 2.0938% | 0.3804% | 0.5613% | 0.0305% | 6.9543% |

| 2022-01-05 | 0.3519% | 1.4723% | 35.8533% | 26.3673% | 7.6227% | 14.5006% | 3.7131% | 2.1403% | 0.3793% | 0.5682% | 0.0254% | 7.0055% |

| 2022-01-06 | 0.3575% | 1.5431% | 36.6670% | 25.8058% | 7.5075% | 14.2149% | 3.7570% | 2.1580% | 0.3888% | 0.6040% | 0.0242% | 6.9722% |

| 2022-01-07 | 0.4123% | 1.6277% | 37.4426% | 25.2663% | 7.3924% | 13.8618% | 3.6753% | 2.0874% | 0.4024% | 0.5904% | 0.0270% | 7.2144% |

| 2022-01-08 | 0.3237% | 1.9625% | 35.9640% | 24.3500% | 6.2977% | 12.0691% | 3.7139% | 2.3841% | 0.5170% | 0.8028% | 0.0266% | 11.5885% |

| 2022-01-09 | 0.2964% | 1.9599% | 36.0700% | 24.2496% | 6.3270% | 12.0979% | 3.7857% | 2.3146% | 0.4816% | 0.8567% | 0.0242% | 11.5363% |

| 2022-01-10 | 0.3488% | 1.5101% | 39.0599% | 24.5018% | 7.2861% | 14.0757% | 3.6064% | 2.0192% | 0.3818% | 0.5383% | 0.0285% | 6.6433% |

| 2022-01-11 | 0.4108% | 1.5541% | 39.4265% | 24.3465% | 7.3778% | 14.1840% | 3.5905% | 1.9870% | 0.3366% | 0.5555% | 0.0253% | 6.2052% |

| 2022-01-12 | 0.3743% | 1.5182% | 40.0054% | 23.9508% | 7.3054% | 14.1456% | 3.5695% | 2.0163% | 0.3643% | 0.5105% | 0.0308% | 6.2090% |

| 2022-01-13 | 0.3380% | 1.5659% | 40.3951% | 23.5803% | 7.2104% | 14.1495% | 3.6099% | 1.9705% | 0.3716% | 0.5117% | 0.0229% | 6.2743% |

| 2022-01-14 | 0.3709% | 1.6172% | 40.8321% | 23.4113% | 6.9690% | 13.5323% | 3.5354% | 1.9806% | 0.3559% | 0.5424% | 0.0251% | 6.8279% |

| 2022-01-15 | 0.2870% | 2.0547% | 39.7351% | 22.0067% | 5.9847% | 11.7234% | 3.6011% | 2.2909% | 0.4668% | 0.7720% | 0.0287% | 11.0489% |

| 2022-01-16 | 0.2964% | 2.0923% | 40.8441% | 20.6853% | 5.9118% | 11.8049% | 3.6625% | 2.3851% | 0.4599% | 0.8312% | 0.0294% | 10.9970% |

| 2022-01-17 | 0.3043% | 1.6554% | 43.8724% | 20.6116% | 6.6334% | 13.7081% | 3.5519% | 2.0195% | 0.3721% | 0.5356% | 0.0287% | 6.7071% |

| 2022-01-18 | 0.3448% | 1.5978% | 45.3308% | 19.6763% | 6.7137% | 13.6977% | 3.5498% | 1.9990% | 0.3478% | 0.5166% | 0.0289% | 6.1968% |

| 2022-01-19 | 0.3490% | 1.6179% | 46.3810% | 19.0037% | 6.5909% | 13.7031% | 3.4676% | 1.9497% | 0.3358% | 0.4847% | 0.0264% | 6.0901% |

| 2022-01-20 | 0.3410% | 1.6362% | 47.2639% | 18.3797% | 6.4656% | 13.3978% | 3.3907% | 1.9803% | 0.3393% | 0.5244% | 0.0245% | 6.2566% |

| 2022-01-21 | 0.3553% | 1.7170% | 48.0184% | 17.4454% | 6.3012% | 13.1411% | 3.3914% | 2.0109% | 0.3696% | 0.4934% | 0.0230% | 6.7332% |

| 2022-01-22 | 0.2929% | 2.3538% | 46.1479% | 16.4726% | 5.4806% | 11.2732% | 3.4515% | 2.1378% | 0.4547% | 0.7435% | 0.0291% | 11.1624% |

| 2022-01-23 | 0.2595% | 2.3385% | 47.0822% | 15.5800% | 5.4940% | 11.0466% | 3.5465% | 2.2365% | 0.4565% | 0.7749% | 0.0233% | 11.1614% |

| 2022-01-24 | 0.3607% | 1.7504% | 50.7307% | 15.3784% | 6.1093% | 12.9047% | 3.4166% | 2.0184% | 0.3442% | 0.4872% | 0.0225% | 6.4769% |

| 2022-01-25 | 0.3654% | 1.7706% | 51.9246% | 14.6195% | 6.0739% | 12.9834% | 3.3441% | 1.9226% | 0.3283% | 0.4742% | 0.0197% | 6.1736% |

| 2022-01-26 | 0.3465% | 2.1688% | 52.4595% | 14.0287% | 5.9250% | 12.4463% | 3.3065% | 1.9205% | 0.3530% | 0.5013% | 0.0244% | 6.5195% |

| 2022-01-27 | 0.3628% | 7.7522% | 47.3489% | 13.5902% | 5.8790% | 12.5425% | 3.2687% | 1.9584% | 0.3513% | 0.4820% | 0.0285% | 6.4356% |

| 2022-01-28 | 0.8512% | 12.2593% | 43.2173% | 12.7719% | 5.7684% | 12.2779% | 3.2807% | 1.8948% | 0.3661% | 0.4896% | 0.0249% | 6.7981% |

| 2022-01-29 | 1.5324% | 15.5746% | 37.9759% | 11.4900% | 5.0904% | 10.8157% | 3.2414% | 2.2146% | 0.4751% | 0.7246% | 0.0226% | 10.8425% |

| 2022-01-30 | 1.8095% | 17.1024% | 36.5444% | 11.5112% | 5.0038% | 10.5058% | 3.3404% | 2.2842% | 0.4604% | 0.7569% | 0.0187% | 10.6623% |

| 2022-01-31 | 1.5814% | 17.6461% | 38.7703% | 12.5933% | 5.6880% | 12.0274% | 3.1897% | 1.8416% | 0.3408% | 0.4923% | 0.0240% | 5.8050% |

| 2022-02-01 | 1.7441% | 19.2814% | 37.2947% | 12.3450% | 5.5508% | 11.9390% | 3.1856% | 1.8109% | 0.3369% | 0.4689% | 0.0228% | 6.0199% |

| 2022-02-02 | 1.8425% | 20.6234% | 36.1439% | 12.2229% | 5.5517% | 11.8100% | 3.0868% | 1.7966% | 0.3369% | 0.4872% | 0.0285% | 6.0697% |

| 2022-02-03 | 1.8914% | 21.5787% | 34.9534% | 12.0932% | 5.4199% | 11.7927% | 3.1686% | 1.8609% | 0.3504% | 0.4656% | 0.0240% | 6.4013% |

| 2022-02-04 | 1.9648% | 22.7768% | 34.0393% | 11.7468% | 5.2886% | 11.4763% | 3.0458% | 1.8618% | 0.3508% | 0.5207% | 0.0221% | 6.9061% |

| 2022-02-05 | 2.3963% | 23.4144% | 30.8252% | 10.7756% | 4.6826% | 10.0675% | 3.2277% | 2.1561% | 0.4480% | 0.7145% | 0.0214% | 11.2706% |

| 2022-02-06 | 2.3912% | 24.0953% | 30.5678% | 10.4257% | 4.7046% | 10.0236% | 3.3234% | 2.1215% | 0.4327% | 0.7056% | 0.0193% | 11.1893% |

| 2022-02-07 | 2.0336% | 24.6938% | 32.2185% | 11.5380% | 5.1985% | 11.8112% | 3.1986% | 1.9324% | 0.3535% | 0.4776% | 0.0249% | 6.5194% |

| 2022-02-08 | 2.0578% | 25.5825% | 31.5513% | 11.4319% | 5.1997% | 11.8368% | 3.1809% | 1.8839% | 0.3255% | 0.4600% | 0.0220% | 6.4678% |

| 2022-02-09 | 2.1357% | 26.4126% | 31.2722% | 11.3999% | 5.2737% | 11.9741% | 3.1823% | 1.9032% | 0.2298% | 0.2883% | 0.0204% | 5.9077% |

| 2022-02-10 | 2.1586% | 27.2403% | 30.8552% | 11.3862% | 5.2045% | 11.8815% | 3.1880% | 1.5341% | 0.2342% | 0.2931% | 0.0234% | 6.0009% |

| 2022-02-11 | 2.3263% | 28.7838% | 30.1344% | 11.3683% | 5.1761% | 11.6652% | 3.1655% | 0.8880% | 0.1781% | 0.2133% | 0.0214% | 6.0796% |

| 2022-02-12 | 2.7622% | 28.4764% | 26.9469% | 9.7973% | 4.4372% | 9.9020% | 3.1473% | 2.1256% | 0.4193% | 0.7154% | 0.0241% | 11.2464% |

| 2022-02-13 | 2.6300% | 28.9074% | 26.5648% | 9.9005% | 4.4070% | 9.9237% | 3.1472% | 2.2069% | 0.4375% | 0.7176% | 0.0234% | 11.1339% |

| 2022-02-14 | 2.2108% | 30.1253% | 28.0680% | 10.8367% | 5.0225% | 11.6307% | 3.1060% | 1.7727% | 0.3190% | 0.4699% | 0.0230% | 6.4155% |

| 2022-02-15 | 2.2626% | 31.0756% | 27.6637% | 10.8023% | 4.9224% | 11.5579% | 3.0697% | 1.7311% | 0.3060% | 0.4568% | 0.0263% | 6.1257% |

| 2022-02-16 | 2.3030% | 31.5893% | 27.2155% | 10.7267% | 4.8788% | 11.4932% | 2.9605% | 1.7814% | 0.3026% | 0.4668% | 0.0264% | 6.2558% |

| 2022-02-17 | 2.3139% | 32.1564% | 26.7888% | 10.6523% | 4.7749% | 11.4135% | 3.0497% | 1.8037% | 0.3309% | 0.4543% | 0.0254% | 6.2362% |

| 2022-02-18 | 2.3419% | 33.8505% | 25.1471% | 10.3232% | 4.6872% | 11.2973% | 2.9507% | 1.8080% | 0.3524% | 0.4658% | 0.0234% | 6.7524% |

| 2022-02-19 | 2.8255% | 35.4968% | 21.2425% | 9.0705% | 4.3585% | 9.6129% | 3.0839% | 2.1391% | 0.4321% | 0.7214% | 0.0240% | 10.9927% |

| 2022-02-20 | 2.7597% | 37.2786% | 19.6970% | 9.0995% | 4.3411% | 9.6230% | 3.0634% | 2.1577% | 0.4466% | 0.6930% | 0.0223% | 10.8180% |

| 2022-02-21 | 2.2972% | 39.5270% | 20.2617% | 9.8308% | 4.5785% | 11.0922% | 3.0427% | 1.8432% | 0.3359% | 0.4971% | 0.0203% | 6.6735% |

| 2022-02-22 | 2.3285% | 41.6072% | 18.8362% | 9.5382% | 4.5626% | 11.1426% | 3.0037% | 1.8037% | 0.3116% | 0.4570% | 0.0206% | 6.3882% |

| 2022-02-23 | 2.3564% | 42.9442% | 17.7843% | 9.3653% | 4.5609% | 11.0347% | 2.8992% | 1.7738% | 0.3189% | 0.4421% | 0.0198% | 6.5005% |

| 2022-02-24 | 2.3331% | 43.8819% | 16.9144% | 9.1178% | 4.5580% | 11.1665% | 2.9641% | 1.7680% | 0.3063% | 0.4369% | 0.0224% | 6.5306% |

| 2022-02-25 | 2.3644% | 45.0140% | 15.8175% | 8.8273% | 4.4317% | 10.9711% | 2.9355% | 1.7624% | 0.3324% | 0.4556% | 0.0238% | 7.0641% |

| 2022-02-26 | 2.9596% | 44.4252% | 13.1945% | 7.6195% | 3.9776% | 9.4234% | 3.1355% | 2.0300% | 0.5206% | 0.7039% | 0.0223% | 11.9878% |

| 2022-02-27 | 2.8501% | 45.0292% | 12.5025% | 7.7242% | 4.0507% | 9.4992% | 3.0954% | 2.1008% | 0.4980% | 0.6989% | 0.0251% | 11.9258% |

| 2022-02-28 | 2.3807% | 47.0753% | 14.2634% | 8.4807% | 4.2220% | 10.9576% | 3.0524% | 1.8148% | 0.3434% | 0.4632% | 0.0242% | 6.9222% |

| 2022-03-01 | 2.3748% | 48.2034% | 13.7801% | 8.3157% | 4.2605% | 10.7307% | 2.9418% | 1.8095% | 0.3259% | 0.4437% | 0.0224% | 6.7913% |

| 2022-03-02 | 2.3629% | 48.7995% | 13.5339% | 8.1894% | 4.2284% | 10.7893% | 2.9092% | 1.7475% | 0.3293% | 0.4560% | 0.0220% | 6.6327% |

| 2022-03-03 | 2.4486% | 49.7154% | 12.6928% | 8.1700% | 4.1801% | 10.6387% | 2.8873% | 1.7340% | 0.3109% | 0.4497% | 0.0246% | 6.7479% |

| 2022-03-04 | 2.5373% | 50.2026% | 12.4981% | 7.7686% | 4.1271% | 10.5053% | 2.8372% | 1.7236% | 0.3080% | 0.4493% | 0.0255% | 7.0174% |

| 2022-03-05 | 3.0231% | 49.3317% | 10.5426% | 6.5147% | 3.7707% | 9.0290% | 3.0784% | 2.0464% | 0.4182% | 0.6288% | 0.0208% | 11.5956% |

| 2022-03-06 | 3.0723% | 49.6284% | 10.0200% | 6.7391% | 3.7704% | 9.0282% | 3.0924% | 2.1063% | 0.4288% | 0.6881% | 0.0213% | 11.4046% |

| 2022-03-07 | 2.4667% | 51.7157% | 11.7176% | 7.6974% | 4.1000% | 10.2989% | 2.8448% | 1.7253% | 0.3185% | 0.4412% | 0.0236% | 6.6503% |

| 2022-03-08 | 2.4190% | 52.4292% | 11.3917% | 7.3946% | 4.1027% | 10.3559% | 2.8335% | 1.7378% | 0.3259% | 0.4500% | 0.0230% | 6.5368% |

| 2022-03-09 | 2.4744% | 52.9758% | 11.0708% | 7.4840% | 4.0474% | 10.3307% | 2.8409% | 1.6682% | 0.3015% | 0.4183% | 0.0210% | 6.3667% |

| 2022-03-10 | 2.5404% | 53.2418% | 10.8388% | 7.3800% | 3.9569% | 10.2019% | 2.8462% | 1.7425% | 0.3027% | 0.4212% | 0.0254% | 6.5022% |

| 2022-03-11 | 2.6346% | 53.8851% | 10.3123% | 7.1008% | 3.8969% | 9.8746% | 2.7686% | 1.6714% | 0.2988% | 0.4224% | 0.0252% | 7.1092% |

| 2022-03-12 | 3.2418% | 52.4146% | 8.4171% | 6.0948% | 3.5575% | 8.6494% | 3.0048% | 2.0180% | 0.3927% | 0.7005% | 0.0251% | 11.4838% |

| 2022-03-13 | 3.2069% | 52.3926% | 8.5002% | 6.1721% | 3.5239% | 8.4507% | 2.9757% | 2.1148% | 0.3755% | 0.7167% | 0.0254% | 11.5456% |

| 2022-03-14 | 3.2185% | 54.3662% | 9.8584% | 7.0464% | 3.8492% | 9.8257% | 2.7413% | 1.6965% | 0.2680% | 0.4273% | 0.0270% | 6.6756% |

| 2022-03-15 | 11.1192% | 47.4059% | 9.5438% | 6.8719% | 3.7611% | 9.7005% | 2.7719% | 1.6308% | 0.2637% | 0.4211% | 0.0250% | 6.4849% |

| 2022-03-16 | 17.9069% | 41.3967% | 9.1987% | 6.6590% | 3.7184% | 9.5772% | 2.6822% | 1.6273% | 0.2877% | 0.4349% | 0.0197% | 6.4914% |

| 2022-03-17 | 21.7348% | 38.1323% | 8.8607% | 6.5819% | 3.6503% | 9.4056% | 2.6279% | 1.6295% | 0.2814% | 0.4449% | 0.0250% | 6.6258% |

| 2022-03-18 | 24.4165% | 35.7041% | 8.5482% | 6.3399% | 3.5433% | 9.0851% | 2.5852% | 1.6362% | 0.2685% | 0.4610% | 0.0258% | 7.3863% |

| 2022-03-19 | 26.2368% | 31.9489% | 6.8779% | 5.5836% | 3.3195% | 7.9382% | 2.7793% | 1.9717% | 0.3594% | 0.6485% | 0.0219% | 12.3144% |

| 2022-03-20 | 27.3687% | 30.7753% | 7.0252% | 5.5489% | 3.3195% | 7.9491% | 2.8717% | 1.9881% | 0.3587% | 0.6629% | 0.0231% | 12.1088% |

| 2022-03-21 | 28.2620% | 32.3271% | 8.3673% | 6.3448% | 3.4783% | 9.2887% | 2.6006% | 1.6620% | 0.2865% | 0.4242% | 0.0238% | 6.9347% |

| 2022-03-22 | 29.5670% | 31.4166% | 8.2768% | 6.3061% | 3.4969% | 9.2905% | 2.5700% | 1.5872% | 0.2591% | 0.4296% | 0.0227% | 6.7774% |

| 2022-03-23 | 30.6539% | 30.6544% | 8.0608% | 6.2326% | 3.4798% | 9.1248% | 2.5844% | 1.6372% | 0.2534% | 0.4191% | 0.0235% | 6.8761% |

| 2022-03-24 | 32.0481% | 29.9540% | 8.0759% | 6.0714% | 3.4595% | 9.0259% | 2.5544% | 1.5634% | 0.2705% | 0.4064% | 0.0225% | 6.5478% |

| 2022-03-25 | 32.7566% | 28.9962% | 7.7413% | 5.9692% | 3.3709% | 8.7142% | 2.5446% | 1.5842% | 0.2667% | 0.4180% | 0.0235% | 7.6147% |

| 2022-03-26 | 32.5970% | 26.7095% | 6.2462% | 5.0950% | 3.2226% | 7.6540% | 2.7770% | 1.8228% | 0.3847% | 0.6553% | 0.0210% | 12.8149% |

| 2022-03-27 | 33.0257% | 26.3003% | 6.3665% | 5.3195% | 3.2245% | 7.8100% | 2.8208% | 1.8248% | 0.3827% | 0.6404% | 0.0235% | 12.2614% |

| 2022-03-28 | 34.6919% | 27.5399% | 7.6902% | 5.9742% | 3.3578% | 8.8958% | 2.5193% | 1.6230% | 0.2658% | 0.3997% | 0.0232% | 7.0192% |

| 2022-03-29 | 35.6580% | 27.0895% | 7.6128% | 5.9695% | 3.3326% | 8.7237% | 2.4484% | 1.5631% | 0.2741% | 0.3802% | 0.0223% | 6.9258% |

| 2022-03-30 | 36.0933% | 26.8011% | 7.4668% | 5.9183% | 3.3682% | 8.6179% | 2.4670% | 1.5698% | 0.2556% | 0.4017% | 0.0195% | 7.0208% |

| 2022-03-31 | 36.7627% | 26.3586% | 7.4152% | 5.8338% | 3.2992% | 8.6070% | 2.5025% | 1.5928% | 0.2742% | 0.4120% | 0.0184% | 6.9235% |

| 2022-04-01 | 37.7935% | 25.6391% | 7.0944% | 5.6951% | 3.3207% | 8.4395% | 2.3936% | 1.5895% | 0.2895% | 0.4032% | 0.0177% | 7.3242% |

| 2022-04-02 | 36.7583% | 23.8424% | 6.0495% | 4.9378% | 3.1161% | 7.6109% | 2.7326% | 1.8943% | 0.3616% | 0.6358% | 0.0209% | 12.0398% |

| 2022-04-03 | 38.0555% | 23.4329% | 5.9867% | 5.0717% | 3.1091% | 7.4917% | 2.6295% | 1.8512% | 0.3456% | 0.6151% | 0.0185% | 11.3926% |

| 2022-04-04 | 39.5734% | 24.4753% | 7.2343% | 5.6909% | 3.1580% | 8.4157% | 2.4270% | 1.5334% | 0.2433% | 0.4101% | 0.0237% | 6.8149% |

| 2022-04-05 | 40.1999% | 24.3237% | 7.1533% | 5.5920% | 3.2204% | 8.3465% | 2.3998% | 1.4640% | 0.2627% | 0.3814% | 0.0251% | 6.6309% |

| 2022-04-06 | 40.3972% | 23.9005% | 7.0750% | 5.5978% | 3.4175% | 8.4546% | 2.3345% | 1.5084% | 0.2411% | 0.4040% | 0.0226% | 6.6467% |

| 2022-04-07 | 40.6483% | 23.5724% | 6.9376% | 5.5906% | 3.5983% | 8.4082% | 2.3696% | 1.5432% | 0.2714% | 0.3932% | 0.0221% | 6.6452% |

| 2022-04-08 | 41.0291% | 23.0447% | 6.7669% | 5.3345% | 3.7167% | 8.1355% | 2.3707% | 1.5204% | 0.2979% | 0.4360% | 0.0227% | 7.3250% |

| 2022-04-09 | 39.0096% | 21.5707% | 5.5139% | 4.6910% | 3.8459% | 7.1101% | 2.6170% | 1.8408% | 0.3681% | 0.6555% | 0.0163% | 12.7611% |

| 2022-04-10 | 38.9654% | 21.5612% | 5.7157% | 4.7531% | 3.9948% | 7.0060% | 2.6146% | 1.9593% | 0.3643% | 0.6320% | 0.0189% | 12.4146% |

| 2022-04-11 | 41.7134% | 22.3259% | 6.8796% | 5.4925% | 3.8380% | 8.2313% | 2.3455% | 1.5951% | 0.2604% | 0.3967% | 0.0202% | 6.9013% |

| 2022-04-12 | 42.9776% | 21.4756% | 6.6304% | 5.3460% | 3.9136% | 8.1430% | 2.3224% | 1.4970% | 0.2704% | 0.4073% | 0.0232% | 6.9935% |

| 2022-04-13 | 44.8529% | 19.5508% | 6.5201% | 5.2781% | 3.9081% | 8.1321% | 2.3117% | 1.4651% | 0.2597% | 0.3798% | 0.0203% | 7.3213% |

| 2022-04-14 | 47.2604% | 18.5562% | 6.3329% | 5.0969% | 3.9568% | 7.8447% | 2.2326% | 1.4409% | 0.2660% | 0.3694% | 0.0195% | 6.6238% |

| 2022-04-15 | 46.1738% | 17.2112% | 5.7005% | 4.8802% | 4.1301% | 7.6028% | 2.4450% | 1.5947% | 0.3140% | 0.4888% | 0.0221% | 9.4369% |

| 2022-04-16 | 45.0816% | 15.9900% | 4.9427% | 4.2670% | 4.3097% | 6.9089% | 2.5115% | 1.7646% | 0.3776% | 0.6567% | 0.0196% | 13.1700% |

| 2022-04-17 | 45.9303% | 15.1178% | 4.8665% | 4.3033% | 4.3275% | 6.9231% | 2.6406% | 1.7807% | 0.3541% | 0.6456% | 0.0216% | 13.0890% |

| 2022-04-18 | 48.5945% | 15.2044% | 5.8556% | 4.8611% | 4.2658% | 7.9850% | 2.4404% | 1.6419% | 0.2887% | 0.4655% | 0.0209% | 8.3763% |

| 2022-04-19 | 50.8857% | 14.9250% | 5.8652% | 5.0294% | 3.9808% | 7.8429% | 2.2999% | 1.4581% | 0.2670% | 0.3945% | 0.0178% | 7.0337% |

| 2022-04-20 | 51.9700% | 14.2590% | 5.8156% | 4.8890% | 4.0109% | 7.6977% | 2.2176% | 1.4981% | 0.2546% | 0.4005% | 0.0209% | 6.9662% |

| 2022-04-21 | 52.5838% | 13.5549% | 5.7156% | 4.8685% | 3.9548% | 7.6767% | 2.3164% | 1.4839% | 0.2606% | 0.4122% | 0.0211% | 7.1515% |

| 2022-04-22 | 53.0145% | 12.8874% | 5.4692% | 4.7749% | 4.0399% | 7.5126% | 2.2910% | 1.4816% | 0.2738% | 0.3989% | 0.0203% | 7.8361% |

| 2022-04-23 | 51.2057% | 11.0804% | 4.4180% | 4.1472% | 4.4620% | 6.6022% | 2.4416% | 1.8031% | 0.3768% | 0.5984% | 0.0245% | 12.8400% |

| 2022-04-24 | 51.2867% | 10.9186% | 4.6488% | 4.1452% | 4.2797% | 6.9482% | 2.6263% | 1.8961% | 0.3964% | 0.6026% | 0.0243% | 12.2271% |

| 2022-04-25 | 54.1650% | 12.3169% | 5.5367% | 4.7520% | 4.0855% | 7.5898% | 2.2639% | 1.4719% | 0.2677% | 0.4090% | 0.0216% | 7.1201% |

| 2022-04-26 | 55.1848% | 11.9726% | 5.3847% | 4.6786% | 4.0406% | 7.4071% | 2.2462% | 1.4609% | 0.2643% | 0.3727% | 0.0237% | 6.9636% |

| 2022-04-27 | 55.9856% | 11.5124% | 5.3171% | 4.5683% | 3.9992% | 7.3195% | 2.2536% | 1.4565% | 0.2617% | 0.3671% | 0.0254% | 6.9336% |

| 2022-04-28 | 56.1709% | 11.2112% | 5.3278% | 4.5597% | 4.0453% | 7.2469% | 2.1800% | 1.4478% | 0.2588% | 0.3927% | 0.0223% | 7.1366% |

| 2022-04-29 | 56.4630% | 10.8456% | 5.0356% | 4.3545% | 4.1464% | 7.2195% | 2.1173% | 1.4117% | 0.2479% | 0.4089% | 0.0203% | 7.7295% |

| 2022-04-30 | 53.9976% | 8.9824% | 4.1147% | 3.7867% | 4.3880% | 6.3945% | 2.5134% | 1.7417% | 0.3505% | 0.6254% | 0.0265% | 13.0786% |

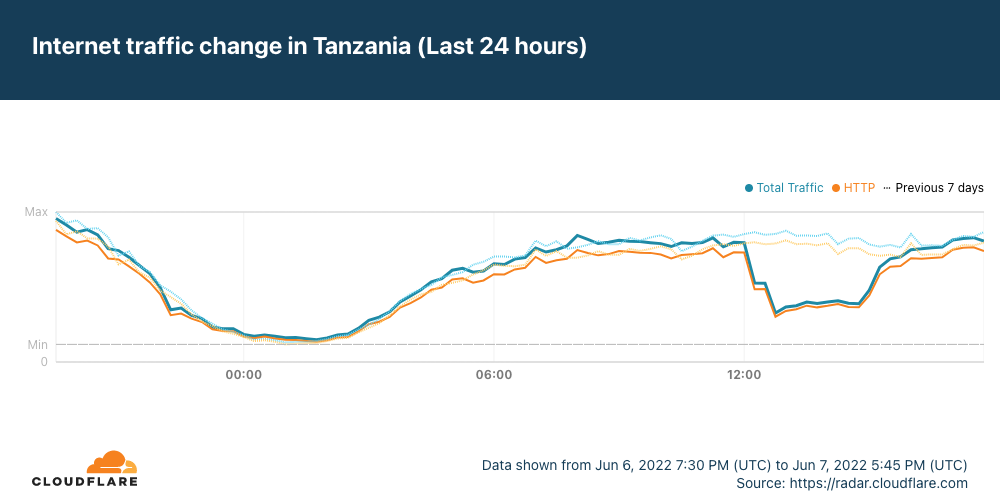

Safari releases a major version each year alongside macOS and iOS. The data above shows the release cadence from January to April for Safari traffic on GitHub.com. While we see older versions used quite heavily, we also see a regular upgrade cadence from Safari users, especially for 15.x releases, with peak-to-peak usage approximately every eight weeks.

| Date | 101 | 100 | 99 | 98 | 97 | 96 | <90 | 95 | 94 | 93 | 92 | 91 | 90 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2022-01-01 | 0.0000% | 0.3491% | 0.1468% | 0.2108% | 0.3386% | 86.6783% | 5.1358% | 2.2244% | 1.7838% | 0.5934% | 1.5257% | 0.5474% | 0.4658% |

| 2022-01-02 | 0.0000% | 0.3455% | 0.1363% | 0.1842% | 0.3181% | 88.7497% | 3.2558% | 2.2186% | 1.8004% | 0.5739% | 1.4336% | 0.5302% | 0.4538% |

| 2022-01-03 | 0.0000% | 0.2731% | 0.0937% | 0.1450% | 0.2434% | 90.4934% | 2.1269% | 2.5113% | 1.5836% | 0.5793% | 1.0352% | 0.5289% | 0.3861% |

| 2022-01-04 | 0.0000% | 0.2561% | 0.0908% | 0.1459% | 0.2757% | 90.3926% | 2.2204% | 2.5350% | 1.5445% | 0.5799% | 1.0151% | 0.5280% | 0.4160% |

| 2022-01-05 | 0.0000% | 0.2556% | 0.0899% | 0.1520% | 4.1497% | 86.6672% | 2.2260% | 2.4636% | 1.5101% | 0.5757% | 0.9840% | 0.5220% | 0.4042% |

| 2022-01-06 | 0.0000% | 0.2508% | 0.0815% | 0.1412% | 10.7723% | 80.2589% | 2.1902% | 2.3842% | 1.4689% | 0.5581% | 0.9913% | 0.5089% | 0.3937% |

| 2022-01-07 | 0.0000% | 0.1932% | 0.0962% | 0.1629% | 21.5610% | 69.5394% | 2.2636% | 2.2909% | 1.4494% | 0.5384% | 1.0015% | 0.5018% | 0.4017% |

| 2022-01-08 | 0.0000% | 0.1744% | 0.1480% | 0.2586% | 33.5510% | 56.3914% | 2.9587% | 2.0026% | 1.7096% | 0.5449% | 1.3334% | 0.5045% | 0.4230% |

| 2022-01-09 | 0.0000% | 0.1518% | 0.1521% | 0.2564% | 37.8032% | 52.5723% | 2.8295% | 1.8732% | 1.6458% | 0.5064% | 1.3001% | 0.4884% | 0.4208% |

| 2022-01-10 | 0.0000% | 0.1330% | 0.1059% | 0.1782% | 36.7369% | 54.4084% | 2.4167% | 2.1394% | 1.4727% | 0.5493% | 0.9584% | 0.5085% | 0.3927% |

| 2022-01-11 | 0.0000% | 0.1219% | 0.1051% | 0.2332% | 45.4664% | 46.0626% | 2.2293% | 2.0457% | 1.4169% | 0.5245% | 0.9212% | 0.4878% | 0.3853% |

| 2022-01-12 | 0.0000% | 0.1134% | 0.1468% | 0.2459% | 57.1392% | 34.5425% | 2.1934% | 1.9506% | 1.3772% | 0.5162% | 0.9154% | 0.4807% | 0.3786% |

| 2022-01-13 | 0.0000% | 0.1046% | 0.1624% | 0.2693% | 65.4838% | 26.4436% | 2.0266% | 1.8866% | 1.3646% | 0.4987% | 0.9004% | 0.4861% | 0.3733% |

| 2022-01-14 | 0.0000% | 0.1031% | 0.1829% | 0.3063% | 70.0467% | 21.6414% | 2.2699% | 1.8110% | 1.3665% | 0.4882% | 0.9326% | 0.4758% | 0.3755% |

| 2022-01-15 | 0.0000% | 0.1537% | 0.2627% | 0.4051% | 74.7938% | 15.8317% | 2.8385% | 1.6009% | 1.5320% | 0.4885% | 1.2465% | 0.4664% | 0.3802% |

| 2022-01-16 | 0.0000% | 0.1602% | 0.2558% | 0.3909% | 76.5597% | 14.3898% | 2.7115% | 1.5114% | 1.5108% | 0.4653% | 1.2131% | 0.4474% | 0.3840% |

| 2022-01-17 | 0.0000% | 0.1317% | 0.1893% | 0.2867% | 76.2164% | 15.8049% | 2.0853% | 1.6876% | 1.3236% | 0.4815% | 0.9184% | 0.4969% | 0.3777% |

| 2022-01-18 | 0.0000% | 0.1289% | 0.1898% | 0.2709% | 77.6409% | 14.7878% | 1.9411% | 1.6397% | 1.2295% | 0.4692% | 0.8660% | 0.4755% | 0.3606% |

| 2022-01-19 | 0.0000% | 0.1319% | 0.1909% | 0.2707% | 79.0723% | 13.6180% | 1.9163% | 1.5781% | 1.0862% | 0.4649% | 0.8503% | 0.4634% | 0.3568% |

| 2022-01-20 | 0.0000% | 0.1221% | 0.1687% | 0.2831% | 80.3988% | 12.5592% | 1.8927% | 1.4921% | 1.0036% | 0.4499% | 0.8351% | 0.4446% | 0.3500% |

| 2022-01-21 | 0.0000% | 0.1234% | 0.1599% | 0.2858% | 81.4219% | 11.6477% | 1.9316% | 1.3819% | 0.9494% | 0.4453% | 0.8615% | 0.4436% | 0.3480% |

| 2022-01-22 | 0.0000% | 0.1797% | 0.2379% | 0.3808% | 82.0847% | 9.9598% | 2.6312% | 1.2211% | 0.9332% | 0.4429% | 1.1404% | 0.4392% | 0.3493% |

| 2022-01-23 | 0.0000% | 0.1942% | 0.2317% | 0.3695% | 82.6698% | 9.5777% | 2.5169% | 1.1724% | 0.9056% | 0.4432% | 1.1244% | 0.4327% | 0.3617% |

| 2022-01-24 | 0.0000% | 0.1529% | 0.1677% | 0.2628% | 83.1674% | 10.2728% | 1.8032% | 1.2686% | 0.8822% | 0.4320% | 0.8178% | 0.4349% | 0.3377% |

| 2022-01-25 | 0.0000% | 0.1588% | 0.1748% | 0.2607% | 83.9039% | 9.7041% | 1.7497% | 1.2257% | 0.8594% | 0.4230% | 0.7947% | 0.4221% | 0.3230% |

| 2022-01-26 | 0.0000% | 0.1649% | 0.1919% | 0.2684% | 84.5595% | 9.1948% | 1.7411% | 1.1326% | 0.8188% | 0.4088% | 0.7871% | 0.4108% | 0.3213% |

| 2022-01-27 | 0.0000% | 0.1706% | 0.1723% | 0.2653% | 85.0102% | 8.7534% | 1.7195% | 1.1492% | 0.8218% | 0.4177% | 0.7826% | 0.4201% | 0.3175% |

| 2022-01-28 | 0.0000% | 0.1863% | 0.1721% | 0.2998% | 85.4656% | 8.3345% | 1.7354% | 1.1011% | 0.7879% | 0.4023% | 0.7755% | 0.4212% | 0.3184% |

| 2022-01-29 | 0.0000% | 0.2432% | 0.2524% | 0.4486% | 85.0456% | 7.6439% | 2.4780% | 0.9756% | 0.7196% | 0.4008% | 1.0169% | 0.4348% | 0.3406% |

| 2022-01-30 | 0.0000% | 0.2498% | 0.2378% | 0.4736% | 85.6022% | 7.4084% | 2.3117% | 0.9470% | 0.6814% | 0.3705% | 0.9848% | 0.4109% | 0.3219% |

| 2022-01-31 | 0.0000% | 0.1921% | 0.1645% | 0.3336% | 86.8529% | 7.5929% | 1.4485% | 0.9938% | 0.7046% | 0.3647% | 0.6753% | 0.4006% | 0.2765% |

| 2022-02-01 | 0.0000% | 0.1961% | 0.1595% | 0.4035% | 87.0774% | 7.3390% | 1.4790% | 0.9652% | 0.6843% | 0.3597% | 0.6694% | 0.3948% | 0.2720% |

| 2022-02-02 | 0.0000% | 0.1956% | 0.1629% | 4.0875% | 83.8040% | 7.0391% | 1.4431% | 0.9144% | 0.6703% | 0.3552% | 0.6702% | 0.3885% | 0.2692% |

| 2022-02-03 | 0.0000% | 0.1961% | 0.1534% | 10.7877% | 77.4577% | 6.7343% | 1.4876% | 0.8727% | 0.6411% | 0.3440% | 0.6779% | 0.3804% | 0.2672% |

| 2022-02-04 | 0.0000% | 0.2091% | 0.2088% | 19.5037% | 68.9628% | 6.4236% | 1.5359% | 0.8393% | 0.6403% | 0.3368% | 0.6862% | 0.3818% | 0.2718% |

| 2022-02-05 | 0.0000% | 0.3378% | 0.2795% | 27.7997% | 59.7330% | 6.1880% | 2.2839% | 0.7829% | 0.5944% | 0.3307% | 0.9933% | 0.3800% | 0.2968% |

| 2022-02-06 | 0.0000% | 0.3490% | 0.2758% | 30.7760% | 56.9533% | 6.0346% | 2.2841% | 0.7652% | 0.5892% | 0.3248% | 0.9688% | 0.3856% | 0.2937% |

| 2022-02-07 | 0.0000% | 0.2698% | 0.2073% | 31.2145% | 57.4092% | 6.0665% | 1.6698% | 0.8326% | 0.6459% | 0.3355% | 0.6748% | 0.3836% | 0.2905% |

| 2022-02-08 | 0.0000% | 0.2835% | 0.2034% | 39.6256% | 49.2262% | 5.8524% | 1.7011% | 0.8005% | 0.6267% | 0.3329% | 0.6700% | 0.3867% | 0.2913% |

| 2022-02-09 | 0.0000% | 0.2854% | 0.2040% | 51.7434% | 37.4438% | 5.5967% | 1.6149% | 0.7902% | 0.6179% | 0.3346% | 0.6811% | 0.3886% | 0.2994% |

| 2022-02-10 | 0.0000% | 0.3035% | 0.2056% | 64.1751% | 25.4399% | 5.1914% | 1.6026% | 0.7773% | 0.6148% | 0.3323% | 0.6678% | 0.3856% | 0.3042% |

| 2022-02-11 | 0.0000% | 0.3200% | 0.2123% | 70.9156% | 18.9909% | 4.9838% | 1.4181% | 0.7774% | 0.6214% | 0.3416% | 0.7113% | 0.3908% | 0.3168% |

| 2022-02-12 | 0.0001% | 0.4216% | 0.2739% | 75.8758% | 12.7559% | 4.8394% | 2.5110% | 0.7159% | 0.6017% | 0.3329% | 0.9718% | 0.3929% | 0.3072% |

| 2022-02-13 | 0.0000% | 0.4237% | 0.2763% | 77.6752% | 11.3726% | 4.6607% | 2.3649% | 0.6861% | 0.5655% | 0.3140% | 0.9684% | 0.3837% | 0.3091% |

| 2022-02-14 | 0.0000% | 0.3152% | 0.2083% | 76.9611% | 13.1654% | 4.6464% | 1.7167% | 0.7342% | 0.5899% | 0.3201% | 0.6560% | 0.3933% | 0.2934% |

| 2022-02-15 | 0.0000% | 0.3124% | 0.2021% | 79.1282% | 11.3529% | 4.4636% | 1.6466% | 0.7071% | 0.5653% | 0.3201% | 0.6274% | 0.3832% | 0.2912% |

| 2022-02-16 | 0.0000% | 0.3243% | 0.2038% | 80.6639% | 9.9946% | 4.2701% | 1.6595% | 0.7158% | 0.5658% | 0.3122% | 0.6302% | 0.3760% | 0.2838% |

| 2022-02-17 | 0.0000% | 0.3285% | 0.2009% | 81.8338% | 8.9708% | 4.1527% | 1.6762% | 0.6951% | 0.5514% | 0.3076% | 0.6269% | 0.3747% | 0.2814% |

| 2022-02-18 | 0.0008% | 0.3497% | 0.2078% | 82.6826% | 8.0582% | 4.0522% | 1.7689% | 0.6878% | 0.5474% | 0.3209% | 0.6655% | 0.3684% | 0.2897% |

| 2022-02-19 | 0.0334% | 0.3962% | 0.3184% | 83.4303% | 6.2674% | 4.0039% | 2.4328% | 0.6835% | 0.5080% | 0.3128% | 0.9336% | 0.3811% | 0.2987% |

| 2022-02-20 | 0.0797% | 0.3311% | 0.3194% | 84.0234% | 5.9904% | 3.8715% | 2.3334% | 0.6594% | 0.5073% | 0.3056% | 0.9356% | 0.3620% | 0.2810% |

| 2022-02-21 | 0.0587% | 0.2598% | 0.2468% | 84.1764% | 6.8024% | 3.8159% | 1.8127% | 0.6649% | 0.5265% | 0.3067% | 0.6597% | 0.3832% | 0.2862% |

| 2022-02-22 | 0.0632% | 0.2399% | 0.2450% | 84.9382% | 6.4595% | 3.6142% | 1.7137% | 0.6378% | 0.5159% | 0.3049% | 0.6299% | 0.3638% | 0.2741% |

| 2022-02-23 | 0.0752% | 0.2359% | 0.2761% | 85.3787% | 6.0287% | 3.5009% | 1.7839% | 0.6317% | 0.5087% | 0.3049% | 0.6374% | 0.3672% | 0.2707% |

| 2022-02-24 | 0.0705% | 0.2362% | 0.2634% | 85.9954% | 5.6871% | 3.4246% | 1.7009% | 0.5988% | 0.4996% | 0.2919% | 0.6033% | 0.3600% | 0.2683% |

| 2022-02-25 | 0.0768% | 0.2443% | 0.2955% | 86.3641% | 5.2829% | 3.3680% | 1.7568% | 0.5657% | 0.4905% | 0.2913% | 0.6322% | 0.3625% | 0.2695% |

| 2022-02-26 | 0.0986% | 0.3235% | 0.4231% | 85.9440% | 4.4333% | 3.3909% | 2.5171% | 0.5412% | 0.4798% | 0.2909% | 0.9168% | 0.3703% | 0.2705% |

| 2022-02-27 | 0.1076% | 0.2852% | 0.4442% | 86.3178% | 4.2554% | 3.3355% | 2.4455% | 0.5217% | 0.4657% | 0.2732% | 0.8975% | 0.3688% | 0.2820% |

| 2022-02-28 | 0.0805% | 0.2264% | 0.3290% | 87.0944% | 4.7665% | 3.2002% | 1.7667% | 0.5552% | 0.4824% | 0.2741% | 0.6065% | 0.3504% | 0.2676% |

| 2022-03-01 | 0.0823% | 0.2243% | 0.4175% | 87.4211% | 4.5314% | 3.0594% | 1.7720% | 0.5578% | 0.4607% | 0.2697% | 0.5930% | 0.3512% | 0.2596% |

| 2022-03-02 | 0.0813% | 0.2251% | 3.9680% | 84.2254% | 4.3094% | 3.0111% | 1.6974% | 0.5485% | 0.4639% | 0.2678% | 0.6025% | 0.3420% | 0.2575% |

| 2022-03-03 | 0.0860% | 0.2245% | 11.9552% | 76.5380% | 4.1113% | 2.9298% | 1.6869% | 0.5471% | 0.4651% | 0.2670% | 0.5852% | 0.3437% | 0.2603% |

| 2022-03-04 | 0.1481% | 0.2362% | 22.5161% | 66.1485% | 3.8667% | 2.8832% | 1.7433% | 0.5229% | 0.4527% | 0.2609% | 0.6226% | 0.3430% | 0.2558% |

| 2022-03-05 | 0.2483% | 0.3164% | 33.6288% | 54.2056% | 3.4509% | 3.0747% | 2.3631% | 0.4963% | 0.4430% | 0.2635% | 0.8942% | 0.3452% | 0.2699% |

| 2022-03-06 | 0.2651% | 0.3227% | 37.2905% | 50.9078% | 3.3400% | 2.9203% | 2.2912% | 0.4958% | 0.4426% | 0.2506% | 0.8726% | 0.3363% | 0.2644% |

| 2022-03-07 | 0.1837% | 0.2660% | 36.9753% | 52.3521% | 3.5951% | 2.6073% | 1.6510% | 0.4961% | 0.4436% | 0.2591% | 0.5845% | 0.3299% | 0.2562% |

| 2022-03-08 | 0.1921% | 0.2728% | 46.1578% | 43.5044% | 3.4375% | 2.5065% | 1.5931% | 0.4913% | 0.4273% | 0.2568% | 0.5841% | 0.3277% | 0.2486% |

| 2022-03-09 | 0.1910% | 0.2750% | 58.6384% | 31.1182% | 3.3291% | 2.5030% | 1.5923% | 0.4941% | 0.4443% | 0.2631% | 0.5759% | 0.3217% | 0.2541% |

| 2022-03-10 | 0.1983% | 0.2759% | 66.6023% | 23.3853% | 3.1888% | 2.4334% | 1.6060% | 0.4924% | 0.4216% | 0.2532% | 0.5752% | 0.3215% | 0.2462% |

| 2022-03-11 | 0.2169% | 0.2953% | 71.2820% | 18.7575% | 3.0712% | 2.4137% | 1.6315% | 0.4822% | 0.4248% | 0.2533% | 0.6013% | 0.3233% | 0.2471% |

| 2022-03-12 | 0.3178% | 0.3579% | 75.0959% | 13.7713% | 2.8331% | 2.6181% | 2.3502% | 0.5067% | 0.4230% | 0.2547% | 0.8853% | 0.3329% | 0.2531% |

| 2022-03-13 | 0.2925% | 0.3544% | 76.5015% | 12.4441% | 2.7972% | 2.6467% | 2.3365% | 0.5097% | 0.4227% | 0.2400% | 0.8676% | 0.3224% | 0.2645% |

| 2022-03-14 | 0.2183% | 0.2980% | 76.5209% | 13.9213% | 2.8796% | 2.3086% | 1.5790% | 0.4721% | 0.4184% | 0.2446% | 0.5742% | 0.3196% | 0.2454% |

| 2022-03-15 | 0.2178% | 0.2821% | 78.3268% | 12.3836% | 2.7799% | 2.2183% | 1.5574% | 0.4742% | 0.4004% | 0.2423% | 0.5740% | 0.3035% | 0.2396% |

| 2022-03-16 | 0.2279% | 0.2746% | 79.9200% | 11.0179% | 2.6655% | 2.1855% | 1.5131% | 0.4623% | 0.3887% | 0.2386% | 0.5568% | 0.3099% | 0.2394% |

| 2022-03-17 | 0.2330% | 0.2813% | 81.1027% | 9.9678% | 2.5655% | 2.1601% | 1.5065% | 0.4504% | 0.3991% | 0.2343% | 0.5593% | 0.3033% | 0.2366% |

| 2022-03-18 | 0.2561% | 0.2912% | 82.1716% | 9.0172% | 2.4236% | 2.0762% | 1.5907% | 0.4408% | 0.3820% | 0.2322% | 0.5850% | 0.3003% | 0.2331% |

| 2022-03-19 | 0.3151% | 0.3508% | 82.1984% | 7.4544% | 2.3516% | 2.4225% | 2.3421% | 0.4546% | 0.4032% | 0.2363% | 0.8967% | 0.3170% | 0.2574% |

| 2022-03-20 | 0.2713% | 0.3462% | 82.7299% | 7.2246% | 2.2897% | 2.3687% | 2.3127% | 0.4359% | 0.4056% | 0.2296% | 0.8447% | 0.2944% | 0.2466% |

| 2022-03-21 | 0.1971% | 0.2725% | 83.3152% | 8.0553% | 2.3554% | 2.0378% | 1.6154% | 0.4333% | 0.3793% | 0.2315% | 0.5662% | 0.3034% | 0.2376% |

| 2022-03-22 | 0.1822% | 0.2902% | 84.1726% | 7.4951% | 2.2640% | 1.9560% | 1.5519% | 0.4160% | 0.3707% | 0.2215% | 0.5526% | 0.3038% | 0.2235% |

| 2022-03-23 | 0.1787% | 0.2905% | 84.8110% | 7.0357% | 2.1387% | 1.9110% | 1.5677% | 0.3992% | 0.3727% | 0.2213% | 0.5465% | 0.2992% | 0.2279% |

| 2022-03-24 | 0.1816% | 0.2881% | 85.3078% | 6.6819% | 2.0346% | 1.8871% | 1.5665% | 0.4017% | 0.3653% | 0.2162% | 0.5431% | 0.2961% | 0.2300% |

| 2022-03-25 | 0.1850% | 0.3259% | 85.5512% | 6.3257% | 1.9862% | 1.8161% | 1.6912% | 0.3979% | 0.3712% | 0.2314% | 0.5782% | 0.3023% | 0.2376% |

| 2022-03-26 | 0.2513% | 0.4347% | 84.9980% | 5.7174% | 1.8887% | 1.8396% | 2.4636% | 0.3976% | 0.3775% | 0.2233% | 0.8562% | 0.3074% | 0.2447% |

| 2022-03-27 | 0.2380% | 0.4364% | 85.5432% | 5.6370% | 1.7971% | 1.7317% | 2.3124% | 0.3832% | 0.3692% | 0.2104% | 0.8099% | 0.2877% | 0.2441% |

| 2022-03-28 | 0.1666% | 0.3208% | 86.8461% | 5.7222% | 1.8063% | 1.5579% | 1.5932% | 0.3879% | 0.3505% | 0.2161% | 0.5225% | 0.2842% | 0.2257% |

| 2022-03-29 | 0.1513% | 0.5652% | 87.1876% | 5.3979% | 1.7323% | 1.4451% | 1.5669% | 0.3681% | 0.3506% | 0.2009% | 0.5336% | 0.2811% | 0.2194% |

| 2022-03-30 | 0.1576% | 5.5490% | 82.6303% | 5.1355% | 1.6597% | 1.3612% | 1.5718% | 0.3627% | 0.3415% | 0.2026% | 0.5277% | 0.2815% | 0.2187% |

| 2022-03-31 | 0.1559% | 14.2487% | 74.3161% | 4.9095% | 1.5865% | 1.3213% | 1.5609% | 0.3582% | 0.3308% | 0.1997% | 0.5151% | 0.2800% | 0.2173% |

| 2022-04-01 | 0.1813% | 25.5917% | 63.0197% | 4.6924% | 1.5348% | 1.3091% | 1.7270% | 0.3518% | 0.3366% | 0.2027% | 0.5498% | 0.2824% | 0.2208% |

| 2022-04-02 | 0.2617% | 36.0589% | 50.8073% | 4.7315% | 1.5903% | 1.4032% | 2.7742% | 0.3796% | 0.3699% | 0.2150% | 0.8255% | 0.3100% | 0.2731% |

| 2022-04-03 | 0.2696% | 40.1393% | 47.6733% | 4.5903% | 1.4216% | 1.2821% | 2.3981% | 0.3682% | 0.3410% | 0.1911% | 0.8276% | 0.2667% | 0.2313% |

| 2022-04-04 | 0.2050% | 40.4830% | 49.2044% | 4.2831% | 1.3593% | 1.1840% | 1.5060% | 0.3320% | 0.3082% | 0.1899% | 0.5062% | 0.2527% | 0.1862% |

| 2022-04-05 | 0.2067% | 43.9099% | 46.1357% | 4.0916% | 1.3287% | 1.1513% | 1.4396% | 0.3215% | 0.3041% | 0.1849% | 0.4934% | 0.2481% | 0.1847% |

| 2022-04-06 | 0.2187% | 45.9304% | 43.8938% | 3.9022% | 1.3749% | 1.1920% | 1.6659% | 0.3339% | 0.3118% | 0.1924% | 0.5080% | 0.2672% | 0.2089% |

| 2022-04-07 | 0.2248% | 55.3760% | 34.7892% | 3.6035% | 1.3432% | 1.1667% | 1.6971% | 0.3292% | 0.3116% | 0.1860% | 0.5093% | 0.2567% | 0.2067% |

| 2022-04-08 | 0.2437% | 65.3241% | 24.9055% | 3.4441% | 1.3174% | 1.1591% | 1.7565% | 0.3383% | 0.3080% | 0.1865% | 0.5392% | 0.2649% | 0.2129% |

| 2022-04-09 | 0.3552% | 72.6629% | 16.1023% | 3.4380% | 1.3360% | 1.2065% | 2.6180% | 0.3881% | 0.3343% | 0.2036% | 0.8520% | 0.2712% | 0.2320% |

| 2022-04-10 | 0.3588% | 75.0152% | 14.1712% | 3.3374% | 1.2959% | 1.1573% | 2.4802% | 0.3769% | 0.3273% | 0.1896% | 0.8174% | 0.2547% | 0.2182% |

| 2022-04-11 | 0.2514% | 75.1833% | 15.7071% | 3.0419% | 1.2542% | 1.1076% | 1.6913% | 0.3187% | 0.3001% | 0.1846% | 0.5052% | 0.2561% | 0.1983% |

| 2022-04-12 | 0.2525% | 77.6105% | 13.5018% | 2.8775% | 1.2313% | 1.0921% | 1.6838% | 0.3182% | 0.2940% | 0.1846% | 0.4997% | 0.2535% | 0.2004% |

| 2022-04-13 | 0.2515% | 79.3135% | 11.8381% | 2.7657% | 1.1999% | 1.1073% | 1.7596% | 0.3188% | 0.2942% | 0.1894% | 0.4959% | 0.2597% | 0.2064% |

| 2022-04-14 | 0.2638% | 81.6428% | 10.2230% | 2.6689% | 1.0457% | 1.0041% | 1.5028% | 0.2964% | 0.2720% | 0.1751% | 0.4884% | 0.2337% | 0.1832% |

| 2022-04-15 | 0.3107% | 82.1871% | 8.7132% | 2.6720% | 1.0914% | 1.0746% | 2.0693% | 0.3225% | 0.2960% | 0.1897% | 0.6075% | 0.2495% | 0.2165% |

| 2022-04-16 | 0.4049% | 82.9440% | 6.8725% | 2.7691% | 1.0718% | 1.0948% | 2.6616% | 0.3438% | 0.3227% | 0.1963% | 0.8405% | 0.2559% | 0.2221% |

| 2022-04-17 | 0.4047% | 83.4272% | 6.5785% | 2.7740% | 1.0408% | 1.0796% | 2.5529% | 0.3526% | 0.3187% | 0.1759% | 0.8376% | 0.2427% | 0.2149% |

| 2022-04-18 | 0.2857% | 83.8299% | 7.5944% | 2.5342% | 1.0325% | 1.0743% | 1.8479% | 0.3261% | 0.2894% | 0.1820% | 0.5436% | 0.2572% | 0.2028% |

| 2022-04-19 | 0.2766% | 84.8091% | 7.2881% | 2.3503% | 0.9702% | 0.9922% | 1.6394% | 0.3148% | 0.2756% | 0.1719% | 0.4804% | 0.2454% | 0.1860% |

| 2022-04-20 | 0.2799% | 85.4701% | 6.7614% | 2.2479% | 0.9362% | 0.9852% | 1.6643% | 0.3012% | 0.2773% | 0.1684% | 0.4834% | 0.2422% | 0.1825% |

| 2022-04-21 | 0.2973% | 86.0018% | 6.2328% | 2.2261% | 0.9132% | 0.9700% | 1.7047% | 0.2971% | 0.2696% | 0.1666% | 0.4986% | 0.2399% | 0.1823% |

| 2022-04-22 | 0.3213% | 86.3868% | 5.7889% | 2.1593% | 0.9105% | 0.9627% | 1.7757% | 0.2977% | 0.2819% | 0.1722% | 0.5237% | 0.2371% | 0.1822% |

| 2022-04-23 | 0.4968% | 85.8966% | 4.7296% | 2.4019% | 0.8974% | 0.9904% | 2.5055% | 0.3403% | 0.3033% | 0.1721% | 0.8126% | 0.2451% | 0.2084% |

| 2022-04-24 | 0.4777% | 85.4802% | 4.7939% | 2.3608% | 0.9737% | 1.0529% | 2.7088% | 0.3398% | 0.3156% | 0.1795% | 0.7897% | 0.2788% | 0.2486% |

| 2022-04-25 | 0.3257% | 87.3284% | 5.1208% | 2.0780% | 0.8546% | 0.9471% | 1.6892% | 0.2974% | 0.2703% | 0.1654% | 0.5006% | 0.2404% | 0.1822% |

| 2022-04-26 | 0.4103% | 87.7223% | 4.8262% | 2.0045% | 0.8395% | 0.9266% | 1.6524% | 0.2915% | 0.2682% | 0.1640% | 0.4741% | 0.2361% | 0.1842% |

| 2022-04-27 | 4.8221% | 83.6717% | 4.5406% | 1.9638% | 0.8197% | 0.9201% | 1.6594% | 0.2805% | 0.2673% | 0.1625% | 0.4782% | 0.2347% | 0.1792% |

| 2022-04-28 | 10.2662% | 78.5895% | 4.2556% | 1.9180% | 0.8044% | 0.9025% | 1.6505% | 0.2883% | 0.2675% | 0.1639% | 0.4822% | 0.2347% | 0.1769% |

| 2022-04-29 | 14.2843% | 74.6277% | 3.9706% | 1.9208% | 0.8075% | 0.9010% | 1.8151% | 0.2902% | 0.2755% | 0.1664% | 0.5181% | 0.2346% | 0.1882% |

| 2022-04-30 | 18.8788% | 69.1757% | 3.2994% | 2.1357% | 0.8096% | 0.9441% | 2.6950% | 0.3253% | 0.2932% | 0.1724% | 0.8318% | 0.2364% | 0.2026% |

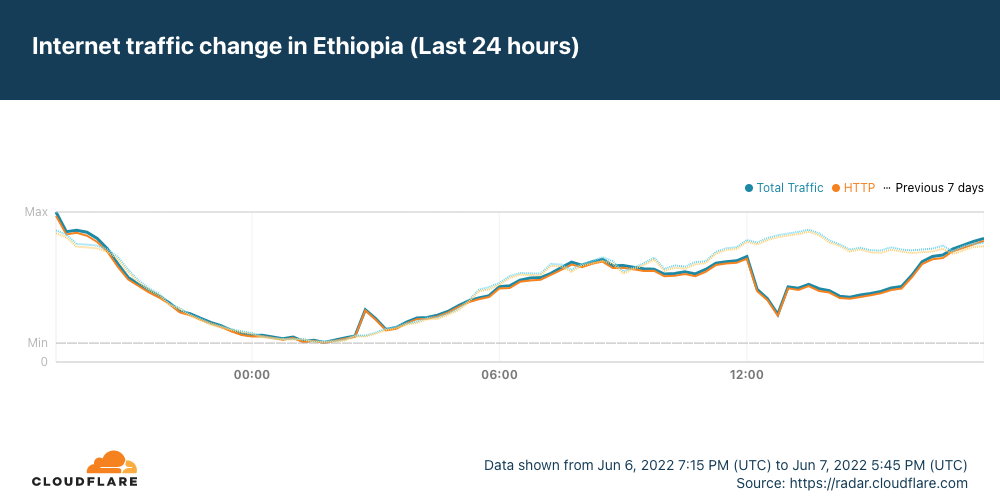

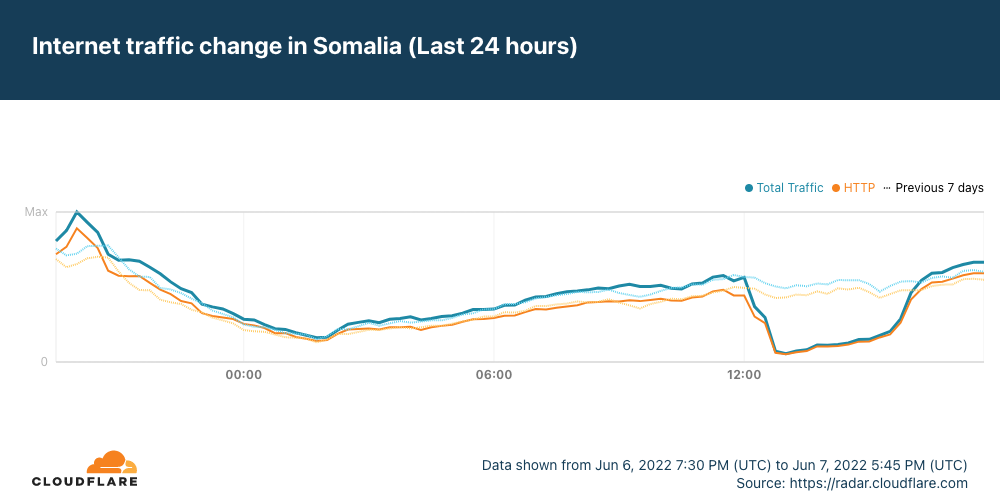

Chrome, Edge, and Firefox all have similar release cycles, with releases every four weeks. Graphing Chrome traffic by version from January through April shows how quickly older versions of these evergreen browsers fall off. We see peak-to-peak traffic around every four weeks, with a two-week period where a single version represents more than 80% of all traffic for that browser.
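The 80% plateau can be checked directly against the table. The sketch below counts the longest run of consecutive days on which one version held more than 80% of Chrome traffic, using the values in the third percentage column for 2022-03-15 through 2022-03-31. The function name `longestRunAbove` is ours and is not part of any GitHub tooling.

```typescript
// Daily share (%) of one Chrome version, copied from the third percentage
// column of the table for 2022-03-15 through 2022-03-31.
const dailyShare: number[] = [
  78.3268, 79.92, 81.1027, 82.1716, 82.1984, 82.7299, 83.3152, 84.1726, 84.811,
  85.3078, 85.5512, 84.998, 85.5432, 86.8461, 87.1876, 82.6303, 74.3161,
]

// Length of the longest streak of consecutive values strictly above threshold.
function longestRunAbove(series: number[], threshold: number): number {
  let longest = 0
  let current = 0
  for (const value of series) {
    current = value > threshold ? current + 1 : 0
    longest = Math.max(longest, current)
  }
  return longest
}

const daysDominant = longestRunAbove(dailyShare, 80)
console.log(daysDominant) // 14
```

For this window the run is 14 days (2022-03-17 through 2022-03-30), which lines up with the plateau described above.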

This shows us that the promise of evergreen browsers is here today. The days of targeting one specific version of one browser are long gone. In fact, trying to do so today would be untenable. The Web Systems Team at GitHub removed the last traces of conditionals based on the user agent header in January 2020, and recorded an internal ADR explicitly disallowing this pattern due to how hard it is to maintain code that relies on user agent header parsing.

With that said, we still need to ensure some compatibility for user agents that do not fall into the neat box of evergreen browsers. Universal access is important, and 1% of 73 million users is still 730,000 users.

Older browsers

When looking at the remaining 4% of browser traffic, we not only see very old versions of the most popular browsers, but also a diverse array of other branded user agents. Alongside older versions of Chrome (80-89 make up 1%, and 70-79 make up 0.2%), there are also Chromium forks, like QQ Browser (0.085%), Naver Whale (0.065%), and Silk (0.003%). Alongside older versions of Firefox (80-89 make up 0.12%, and 70-79 make up 0.09%), there are Firefox forks, like IceWeasel (0.0002%) and SeaMonkey (0.0004%). The data also contains lots of esoteric user agents, such as those from TVs, e-readers, and even refrigerators. In total, we’ve seen close to 20 million unique user agent strings visiting GitHub in 2022 alone.

Another vector we look at is logged-in vs. logged-out usage. As a whole, around 20% of the visits to GitHub come from browsers with logged-out sessions, but when looking at older browsers, the proportion of logged-out visits is much higher. For example, requests coming from Amazon Silk make up around 0.003% of all visits, but 80% of those visits are with logged-out sessions. This means the share of logged-in visits on Silk is closer to 0.0006%. Users making requests with forked browsers also tend to make requests from evergreen browsers. For example, users making requests with SeaMonkey do so for 37% of their usage, while the other 63% comes from Chrome or Firefox user agents.
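The Silk figure is simple arithmetic: multiply the browser's overall share by the fraction of its visits that are logged in. A minimal sketch, with a helper name (`loggedInShare`) that is purely illustrative:

```typescript
// Share of all visits that are logged-in visits from a given browser.
// sharePct: the browser's share of all visits, in percent
// loggedOutFraction: fraction of that browser's visits that are logged out (0..1)
function loggedInShare(sharePct: number, loggedOutFraction: number): number {
  return sharePct * (1 - loggedOutFraction)
}

// Amazon Silk: 0.003% of all visits, 80% of them logged out.
const silkLoggedIn = loggedInShare(0.003, 0.8)
console.log(silkLoggedIn.toFixed(4)) // 0.0006
```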

We consider logged-in vs. logged-out, and main vs. secondary browser, to be important distinctions, because the usage patterns are quite different. Actions that a logged-out user takes (reading issues and pull requests, cloning repositories, and browsing files) are quite different to the actions a logged-in user takes (replying to issues and reviewing pull requests, starring repositories, editing files, and looking at their dashboard). Logged-out activities tend to be more “read only” actions, which means they hit very few paths that require JavaScript to run, whereas logged-in users tend to perform the kind of rich interactions that require JavaScript.

With JavaScript disabled, you’re still able to log in, comment on issues and pull requests (although our rich markdown toolbar won’t work), browse source code (with syntax highlighting), search for repositories, and even star, watch, or fork them. Popover menus even work, thanks to the clever use of the HTML <details> element.

How we engineer for older browsers

With such a multitude of browsers, with various levels of standards compliance, we cannot expect our engineers to know the intricacies of each. We also don’t have the resources to test on the hundreds of browsers across thousands of operating system and version combinations we see. While 0.0002% of you are using your Tesla to merge pull requests, a Model 3 won’t fit into our testing budget!

Instead, we use a few industry standard practices, like linting and polyfills, to make sure we’re delivering a good baseline experience:

Static code analysis (linting) to catch browser compatibility issues:

We love ESLint. It’s great at catching classes of bugs, as well as enforcing style, for which we have extensive configurations, but it can also be useful for catching cross-browser bugs. We use amilajack/eslint-plugin-compat to guard against use of features that aren’t well supported and that we’re not prepared to polyfill (for example, ResizeObserver). We also use keithamus/eslint-plugin-escompat to catch use of syntax that some browsers do not support and that we don’t polyfill or transpile. These plugins are incredibly useful for catching quirks. For example, older versions of Edge supported destructuring, but in some instances it caused a SyntaxError. By linting for this corner case, we were able to ship native destructuring syntax to all browsers with a lint check to prevent engineers from hitting SyntaxErrors. Shipping native destructuring syntax allowed us to remove multiple kilobytes of transpiled code and helper functions, while linting kept code stable for older versions of Edge.
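As a rough sketch of what such a lint setup can look like, the configuration object below enables both plugins through their recommended presets. The preset names follow each plugin's README. The polyfill entry and the browserslist targets are illustrative assumptions, not GitHub's actual configuration.

```typescript
// Hypothetical ESLint configuration, written as a typed object so it can be
// exported from a config file or serialized to .eslintrc.json.
interface LintConfig {
  extends: string[]
  settings: {polyfills: string[]}
}

const eslintConfig: LintConfig = {
  extends: [
    'plugin:compat/recommended', // flags browser APIs missing in target browsers
    'plugin:escompat/recommended', // flags syntax unsupported in target browsers
  ],
  settings: {
    // APIs listed here are treated as polyfilled, so eslint-plugin-compat
    // does not report them. (Illustrative entry.)
    polyfills: ['Promise'],
  },
}

// Both plugins read their target browsers from a browserslist query, usually
// kept in package.json. These targets are assumptions for illustration.
const browserslist: string[] = ['chrome >= 80', 'firefox >= 74', 'safari >= 13.1']

console.log(JSON.stringify({eslintConfig, browserslist}, null, 2))
```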

Polyfills to patch browsers with modern features

Past iterations of our codebase made liberal use of polyfills, such as mdn-polyfills, es6-promise, template-polyfill, and custom-event-polyfill, to name a few. Managing polyfills was burdensome and in some cases hurt performance. We were restricted in certain ways. For example, we postponed adoption of ShadowDOM due to the poor performance of polyfills available at the time.

More recently, our strategy has been to maintain a small list of polyfills for features that are easy enough to polyfill with low impact. These polyfills are open sourced in our browser-support repository. In this repository, we also maintain a function that checks if a browser has a base set of functionality necessary to run GitHub’s JavaScript. This check expects globals such as Blob, globalThis, and MutationObserver to exist. If a browser doesn’t pass this check, JavaScript still executes, but any uncaught exceptions will not be reported to our error reporting library, which we call failbot. By not reporting errors from browsers that don’t meet our minimum requirements, we reduce the amount of noise in our error reporting systems, which increases the value of error reporting software dramatically. Here’s some relevant code from failbot.ts:

```typescript
import {isSupported} from '@github/browser-support'

const extensions = /(chrome|moz|safari)-extension:\/\//

// Does this stack trace contain frames from browser extensions?
function isExtensionError(stack: PlatformStackframe[]): boolean {
  return stack.some(frame => extensions.test(frame.filename) || extensions.test(frame.function))
}

let errorsReported = 0

function reportable() {
  return errorsReported < 10 && isSupported()
}

export async function report(context: PlatformReportBrowserErrorInput) {
  if (!reportable()) return
  if (isExtensionError()) return
  errorsReported++
  // ...
}
```
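The support check itself is plain feature detection. The following is a minimal sketch of the idea, not the code in `@github/browser-support`: the list of globals is taken from the three examples named above, and the function name and injectable `scope` parameter are assumptions made so the example can run outside a browser.

```typescript
// Globals the sketch treats as the minimum baseline. The real isSupported()
// may check a different and longer list.
const requiredGlobals = ['Blob', 'globalThis', 'MutationObserver']

// Returns true when every required global is defined on the given scope.
function hasBaselineGlobals(scope: Record<string, unknown>): boolean {
  return requiredGlobals.every(name => typeof scope[name] !== 'undefined')
}

// In a browser you would pass the global object itself:
//   hasBaselineGlobals(globalThis as unknown as Record<string, unknown>)
const modernScope = {Blob: class {}, globalThis: {}, MutationObserver: class {}}
const legacyScope = {Blob: class {}}

console.log(hasBaselineGlobals(modernScope)) // true
console.log(hasBaselineGlobals(legacyScope)) // false
```

Passing the scope in as a parameter keeps the check testable; a production version would more likely read the globals directly.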

In order to help us quickly determine which browsers meet our minimum requirements, and which browsers require which polyfills, our browser-support repository even has its own publicly-visible compatibility table!

Shipping changes and validating data

When it comes to making a change, like shipping native optional chaining syntax, one tool we reach for is an internal CLI that lets us quickly generate Markdown tables that can be added to pull requests introducing new native syntax or features that require polyfilling. This internal CLI tool uses mdn/browser-compat-data and combines it with the data we have to generate a Can I Use-style feature table, but tailored to our usage data and the requested feature. For example:

browser-support-cli $ ./browsers.js optional chaining

#### [javascript operators optional_chaining](https://developer.mozilla.org/docs/Web/JavaScript/Reference/Operators/Optional_chaining)

| Browser | Supported Since | Latest Version | % Supported | % Unsupported |
| :---------------------- | --------------: | -------------: | ----------: | ------------: |
| chrome | 80 | 101 | 73.482 | 0.090 |
| edge | 80 | 100 | 6.691 | 0.001 |
| firefox | 74 | 100 | 12.655 | 0.014 |
| firefox_android | 79 | 100 | 0.127 | 0.001 |
| ie | Not Supported | 11 | 0.000 | 0.078 |
| opera | 67 | 86 | 1.267 | 0.000 |
| safari | 13.1 | 15.4 | 4.630 | 0.013 |
| safari_ios | 13.4 | 15.4 | 0.505 | 0.006 |
| samsunginternet_android | 13.0 | 16.0 | 0.020 | 0.000 |
| webview_android | 80 | 101 | 0.001 | 0.008 |
| **Total:** | | | **99.378** | **0.211** |
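GitHub's CLI is internal, so its implementation is not shown here. As a hedged sketch of the final step only, the code below renders a Markdown table like the one above from already-computed rows and derives the totals line. The `SupportRow` shape and the `renderSupportTable` name are hypothetical; the figures are copied from the table above.

```typescript
interface SupportRow {
  browser: string
  supportedSince: string
  latestVersion: string
  supported: number // % of all traffic on versions that have the feature
  unsupported: number // % of all traffic on versions that lack it
}

const optionalChainingRows: SupportRow[] = [
  {browser: 'chrome', supportedSince: '80', latestVersion: '101', supported: 73.482, unsupported: 0.09},
  {browser: 'edge', supportedSince: '80', latestVersion: '100', supported: 6.691, unsupported: 0.001},
  {browser: 'firefox', supportedSince: '74', latestVersion: '100', supported: 12.655, unsupported: 0.014},
  {browser: 'firefox_android', supportedSince: '79', latestVersion: '100', supported: 0.127, unsupported: 0.001},
  {browser: 'ie', supportedSince: 'Not Supported', latestVersion: '11', supported: 0, unsupported: 0.078},
  {browser: 'opera', supportedSince: '67', latestVersion: '86', supported: 1.267, unsupported: 0},
  {browser: 'safari', supportedSince: '13.1', latestVersion: '15.4', supported: 4.63, unsupported: 0.013},
  {browser: 'safari_ios', supportedSince: '13.4', latestVersion: '15.4', supported: 0.505, unsupported: 0.006},
  {browser: 'samsunginternet_android', supportedSince: '13.0', latestVersion: '16.0', supported: 0.02, unsupported: 0},
  {browser: 'webview_android', supportedSince: '80', latestVersion: '101', supported: 0.001, unsupported: 0.008},
]

// Render the rows as a Markdown table and append a totals line.
function renderSupportTable(rows: SupportRow[]): string {
  const pct = (n: number): string => n.toFixed(3)
  const sum = (pick: (r: SupportRow) => number): number =>
    rows.reduce((total, r) => total + pick(r), 0)
  const lines = [
    '| Browser | Supported Since | Latest Version | % Supported | % Unsupported |',
    '| :-- | --: | --: | --: | --: |',
    ...rows.map(
      r => `| ${r.browser} | ${r.supportedSince} | ${r.latestVersion} | ${pct(r.supported)} | ${pct(r.unsupported)} |`,
    ),
    `| **Total:** | | | **${pct(sum(r => r.supported))}** | **${pct(sum(r => r.unsupported))}** |`,
  ]
  return lines.join('\n')
}

console.log(renderSupportTable(optionalChainingRows))
```

The totals are computed rather than hard-coded, so the last line doubles as a consistency check on the per-browser figures.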

We can then take this table and paste it into a pull request description to put the data at the fingertips of whoever is reviewing the pull request, and to ensure that we’re making decisions that are in line with our principles.

This CLI tool has a few more features. It actually generated all the tables in this post, from which we could then easily generate graphs. For quick glances at feature tables, it also allows for exporting our analytics table into a JSON format that we can import into Can I Use.

browser-support-cli $ ./browsers.js

Usage:

node browsers.js <query>

Examples:

node browsers.js --stats [--csv] # Show usage stats by browser+version

node browsers.js --last-ten [--csv] # Show usage stats of the last 10 major versions, by vendor

node browsers.js --cadence [--csv] # Show release cadence stats

node browsers.js --caniuse # Output a `simple.json` for import into caniuse.com

node browsers.js --html <query> # Output html for github.github.io/browser-support

Wrap-up

This is how GitHub thinks about our users and the browsers they use. We back up our principles with tooling and data to make sure we’re delivering a fast and reliable service to as many users as possible.

Concepts like progressive enhancement allow us to deliver the best experience possible to the majority of customers, while delivering a useful experience to those using older browsers.

Metasploit Weekly Wrap-Up

Post Syndicated from Brendan Watters original https://blog.rapid7.com/2022/06/10/metasploit-weekly-wrap-up-161/

A Confluence of High-Profile Modules

This release features modules covering the Confluence remote code execution bug CVE-2022-26134 and the hotly-debated CVE-2022-30190, a file format vulnerability in the Windows operating system accessible through malicious documents. Both have been all over the news, and we’re very happy to bring them to you so that you can verify mitigations and patches in your infrastructure. If you’d like to read more about these vulnerabilities, Rapid7 has AttackerKB analyses and blogs covering both Confluence CVE-2022-26134 (AttackerKB, Rapid7 Blog) and Windows CVE-2022-30190 (AttackerKB, Rapid7 Blog).

Metasploit 6.2

While we release new content weekly (or in real time if you are using GitHub), we track milestones as well. This week, we released Metasploit 6.2, and it has a whole host of new functionality, exploits, and fixes.

New module content (2)

- Atlassian Confluence Namespace OGNL Injection by Spencer McIntyre, Unknown, bturner-r7, and jbaines-r7, which exploits CVE-2022-26134 – This module exploits an OGNL injection in Atlassian Confluence servers (CVE-2022-26134). A specially crafted URI can be used to evaluate an OGNL expression resulting in OS command execution.

- Microsoft Office Word MSDTJS by mekhalleh (RAMELLA Sébastien) and nao sec, which exploits CVE-2022-30190 – This PR adds a module supporting CVE-2022-30190 (AKA Follina), a Windows file format vulnerability.

Enhancements and features (2)

- #16651 from red0xff – The test_vulnerable methods in the various SQL injection libraries have been updated so that they now use the specified encoder if one is specified, ensuring that characters are appropriately encoded as needed.

- #16661 from dismantl – The impersonate_ssl module has been enhanced to allow it to add Subject Alternative Names (SAN) fields to the generated SSL certificate.

Bugs fixed (4)

- #16615 from NikitaKovaljov – A bug has been fixed in the IPv6 library when creating solicited-multicast addresses by finding leading zeros in the last 16 bits of the link-local address and removing them.

- #16630 from zeroSteiner – The auxiliary/server/capture/smb module no longer stores duplicate Net-NTLM hashes in the database.

- #16643 from ojasookert – The exploits/multi/http/php_fpm_rce module has been updated to be compatible with Ruby 3.0 changes.

- #16653 from adfoster-r7 – This PR fixes an issue where named pipe pivots failed to establish the named pipes in intermediate connections.
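For context on the IPv6 fix in the list above: a solicited-node multicast address is formed by appending the low 24 bits of a unicast address to the prefix ff02::1:ff00:0/104, and in compressed text form each group is written without leading zeros. The TypeScript sketch below illustrates that derivation. It is not Metasploit's Ruby implementation, the helper names are ours, and zone identifiers such as `%eth0` are not handled.

```typescript
// Expand an IPv6 address (with or without "::") into eight 16-bit groups.
function expandIPv6(address: string): number[] {
  const [head, tail] = address.split('::')
  const headGroups = head ? head.split(':') : []
  const tailGroups = tail ? tail.split(':') : []
  const missing = address.includes('::') ? 8 - headGroups.length - tailGroups.length : 0
  const groups = [...headGroups, ...new Array<string>(Math.max(missing, 0)).fill('0'), ...tailGroups]
  if (groups.length !== 8) throw new Error(`invalid IPv6 address: ${address}`)
  return groups.map(group => parseInt(group, 16))
}

// Build the solicited-node multicast address for a unicast address.
function solicitedNodeMulticast(address: string): string {
  const groups = expandIPv6(address)
  const highByte = groups[6] & 0xff // top 8 of the low 24 bits
  const lowGroup = groups[7] // bottom 16 of the low 24 bits
  // toString(16) never emits leading zeros, which is what the compressed
  // text form expects for the final group.
  return `ff02::1:ff${highByte.toString(16).padStart(2, '0')}:${lowGroup.toString(16)}`
}

console.log(solicitedNodeMulticast('fe80::1:2:3:0042')) // ff02::1:ff03:42
```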

Get it

As always, you can update to the latest Metasploit Framework with msfupdate, and you can get more details on the changes since the last blog post from GitHub.

If you are a git user, you can clone the Metasploit Framework repo (master branch) for the latest.

To install fresh without using git, you can use the open-source-only Nightly Installers or the binary installers (which also include the commercial edition).

Use an AD FS user and Tableau to securely query data in AWS Lake Formation

Post Syndicated from Jason Nicholls original https://aws.amazon.com/blogs/big-data/use-an-ad-fs-user-and-tableau-to-securely-query-data-in-aws-lake-formation/

Security-conscious customers often adopt a Zero Trust security architecture. Zero Trust is a security model centered on the idea that access to data shouldn’t be solely based on network location, but rather require users and systems to prove their identities and trustworthiness and enforce fine-grained identity-based authorization rules before granting access to applications, data, and other systems.

Some customers rely on third-party identity providers (IdPs) like Active Directory Federation Services (AD FS) as a system to manage credentials and prove identities and trustworthiness. Users can use their AD FS credentials to authenticate to various related yet independent systems, including the AWS Management Console (for more information, see Enabling SAML 2.0 federated users to access the AWS Management Console).

In the context of analytics, some customers extend Zero Trust to data stored in data lakes, which includes the various business intelligence (BI) tools used to access that data. A common data lake pattern is to store data in Amazon Simple Storage Service (Amazon S3) and query the data using Amazon Athena.

AWS Lake Formation allows you to define and enforce access policies at the database, table, and column level when using Athena queries to read data stored in Amazon S3. Lake Formation supports Active Directory and Security Assertion Markup Language (SAML) identity providers such as Okta and Auth0. Furthermore, Lake Formation securely integrates with the AWS BI service Amazon QuickSight. QuickSight allows you to effortlessly create and publish interactive BI dashboards, and supports authentication via Active Directory. However, if you use alternative BI tools like Tableau, you may want to use your Active Directory credentials to access data stored in Lake Formation.

In this post, we show you how you can use AD FS credentials with Tableau to implement a Zero Trust architecture and securely query data in Amazon S3 and Lake Formation.

Solution overview

In this architecture, user credentials are managed by Active Directory, and not AWS Identity and Access Management (IAM). Although Tableau provides a connector to connect Tableau to Athena, the connector requires an AWS access key ID and an AWS secret access key normally used for programmatic access. Creating an IAM user with programmatic access for use by Tableau is a potential solution; however, some customers have made an architectural decision that access to AWS accounts is done via a federated process using Active Directory, and not an IAM user.

In this post, we show you how you can use the Athena ODBC driver in conjunction with AD FS credentials to query sample data in a newly created data lake. We simulate the environment by enabling federation to AWS using AD FS 3.0 and SAML 2.0. Then we guide you through setting up a data lake using Lake Formation. Finally, we show you how you can configure an ODBC driver for Tableau to securely query the data in your data lake using your AD FS credentials.

Prerequisites

The following prerequisites are required to complete this walkthrough:

- An understanding of IAM roles and concepts

- A basic understanding of Lake Formation and Athena

- A copy of Tableau with a 14-day trial or fully licensed software

- An understanding of the concepts of Active Directory, and how to join a computer to an Active Directory domain

- An understanding of configuring ODBC components on a Windows machine

Create your environment

To simulate the production environment, we created a standard VPC in Amazon Virtual Private Cloud (Amazon VPC) with one private subnet and one public subnet. You can do the same using the VPC wizard. Our Amazon Elastic Compute Cloud (Amazon EC2) instance running the Tableau client is located in a private subnet and accessible via an EC2 bastion host. For simplicity, connecting out to Amazon S3, AWS Glue, and Athena is done via the NAT gateway and internet gateway set up by the VPC wizard. Optionally, you can replace the NAT gateway with AWS PrivateLink endpoints (AWS Security Token Service (AWS STS), Amazon S3, Athena, and AWS Glue endpoints are required) to make sure traffic remains within the AWS network.

The following diagram illustrates our environment architecture.

After you create your VPC with its private and public subnets, you can continue to build out the other requirements, such as Active Directory and Lake Formation. Let’s begin with Active Directory.

Enable federation to AWS using AD FS 3.0 and SAML 2.0

AD FS 3.0, a component of Windows Server, supports SAML 2.0 and is integrated with IAM. This integration allows Active Directory users to federate to AWS using corporate directory credentials, such as a user name and password from Active Directory. Before you can complete this section, AD FS must be configured and running.

To set up AD FS, follow the instructions in Setting up trust between AD FS and AWS and using Active Directory credentials to connect to Amazon Athena with ODBC driver. The first section of the post explains in detail how to set up AD FS and establish the trust between AD FS and Active Directory. The post ends with setting up an ODBC driver for Athena, which you can skip. The post creates a group called ArunADFSTest. This group relates to a role in your AWS account, which you use later.

When you have successfully verified that you can log in using your IdP, you’re ready to configure your Windows environment ODBC driver to connect to Athena.

Set up a data lake using Lake Formation

Lake Formation is a fully managed service that makes it easy for you to build, secure, and manage data lakes. Lake Formation provides its own permissions model that augments the IAM permissions model. This centrally defined permissions model enables fine-grained access to data stored in data lakes through a simple grant/revoke mechanism. We use this permissions model to grant access to the AD FS role we created earlier.

- On the Lake Formation console, you’re prompted with a welcome box the first time you access Lake Formation. The box asks you to select the initial administrative user and roles.

- Choose Add myself and choose Get Started.

We use the sample database provided by Lake Formation, but you’re welcome to use your own dataset. For instructions on loading your own dataset, see Getting Started with Lake Formation. With Lake Formation configured, we must grant read access to the AD FS role (ArunADFSTest) we created in the previous step.

- In the navigation pane, choose Databases.

- Select the database sampledb.

- On the Actions menu, choose Grant.

We grant the ArunADFSTest role access to sampledb.

- For Principals, select IAM users and roles.

- For IAM users and roles, choose the role ArunADFSTest.

- Select Named data catalog resources.

- For Databases, choose the database sampledb.

- For Tables, choose All tables.

- Set the table permissions to Select and Describe.

- For Data permissions, select All data access.

- Choose Grant.

Our AD FS user assumes the role ArunADFSTest, which has been granted access to sampledb by Lake Formation. However, the ArunADFSTest role requires access to Lake Formation, Athena, AWS Glue, and Amazon S3. Following the practice of least privilege, AWS defines policies for specific Lake Formation personas. Our user fits the Data Analyst persona, which requires enough permissions to run queries.

- Add the AmazonAthenaFullAccess managed policy (for instructions, see Adding and removing IAM identity permissions) and the following inline policy to the ArunADFSTest role:

Each time Athena runs a query, it stores the results in an S3 bucket, which is configured as the query result location in Athena.

- Create an S3 bucket, and in this new bucket create a new folder called athena_results.

- Update the settings on the Athena console to use your newly created folder.

Tableau uses Athena to run the query and read the results from Amazon S3, which means that the ArunADFSTest role requires access to your newly created S3 folder.

- Attach the following inline policy to the ArunADFSTest role:

Our AD FS user can now assume a role that has enough privileges to query the sample database. The next step is to configure the ODBC driver on the client.
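The Lake Formation grant configured on the console earlier in this section can also be expressed as a `GrantPermissions` API request. The sketch below only builds the request payload (principal, resource, and permissions) from the values used in this walkthrough. It does not call AWS, the helper name is hypothetical, and the account ID is a placeholder.

```typescript
// Subset of the Lake Formation GrantPermissions request used in this sketch.
interface GrantPermissionsRequest {
  Principal: {DataLakePrincipalIdentifier: string}
  Resource: {Table: {DatabaseName: string; TableWildcard: Record<string, never>}}
  Permissions: string[]
}

// Build a request granting Select and Describe on all tables of a database
// to an IAM role. (Hypothetical helper; nothing is sent to AWS.)
function buildGrantRequest(accountId: string, roleName: string, databaseName: string): GrantPermissionsRequest {
  return {
    Principal: {DataLakePrincipalIdentifier: `arn:aws:iam::${accountId}:role/${roleName}`},
    // An empty TableWildcard object means "all tables" in the database.
    Resource: {Table: {DatabaseName: databaseName, TableWildcard: {}}},
    Permissions: ['SELECT', 'DESCRIBE'],
  }
}

// 123456789012 is a placeholder account ID.
const grantRequest = buildGrantRequest('123456789012', 'ArunADFSTest', 'sampledb')
console.log(JSON.stringify(grantRequest, null, 2))
```

An object of this shape could be passed to an AWS SDK Lake Formation client, or saved to a file and supplied to `aws lakeformation grant-permissions --cli-input-json`.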

Configure an Athena ODBC driver

Athena is a managed serverless and interactive query service that allows you to analyze your data in Amazon S3 using standard Structured Query Language (SQL). You can use Athena to directly query data that is located in Amazon S3 or data that is registered with Lake Formation. Athena provides you with ODBC and JDBC drivers to effortlessly integrate with your data analytics tools (such as Microsoft Power BI, Tableau, or SQL Workbench) to seamlessly gain insights about your data in minutes.

To connect to our Lake Formation environment, we first need to install and configure the Athena ODBC driver on our Windows environment.

- Download the Athena ODBC driver relevant to your Windows environment.

- Install the driver by choosing the driver file you downloaded (in our case, Simba+Athena+1.1+64-bit.msi).

- Choose Next on the welcome page.

- Read the End-User License Agreement, and if you agree to it, select I Accept the terms in the License Agreement and choose Next.

- Leave the default installation location for the ODBC driver and choose Next.

- Choose Install to begin the installation.

- If the User Account Control pop-up appears, choose Yes to allow the driver installation to continue.

- When the driver installation is complete, choose Finish to close the installer.

- Open the Windows ODBC configuration application by selecting the Start bar and searching for ODBC.

- Open the version corresponding to the Athena ODBC version you installed, in our case 64 bit.

- On the User DSN tab, choose Add.

- Choose the Simba Athena ODBC driver and choose Finish.

Configure the ODBC driver for AD FS authentication

We now need to configure the driver.

- Choose the driver on the Driver configuration page.

- For Data Source Name, enter sampledb.

- For Description, enter Lake Formation Sample Database.

- For AWS Region, enter eu-west-1 or the Region you used when configuring Lake Formation.

- For Metadata Retrieval Method, choose Auto.

- For S3 Output Location, enter s3://[BUCKET_NAME]/athena_results/.

- For Encryption Options, choose NOT_SET.

- Clear the rest of the options.

- Choose Authentication Options.

- For Authentication Type, choose ADFS.

- For User, enter [DOMAIN]\[USERNAME].

- For Password, enter your domain user password.

- For Preferred Role, enter arn:aws:iam::[ACCOUNT NUMBER]:role/ArunADFSTest.

The preferred role is the same role configured in the previous section (ArunADFSTest).

- For IdP Host, enter the AD federation URL you configured during AD FS setup.

- For IdP Port, enter 443.

- Select SSL Insecure.

- Choose OK.

- Choose Test on the initial configuration page to test the connection.

- When you see a success confirmation, choose OK.
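The same settings can be written as a DSN-less ODBC connection string, which is convenient for scripted setups. The sketch below assembles one from the values entered above. The key names and the driver name are assumptions based on our reading of the Simba Athena ODBC driver guide, so verify them against the documentation for the driver version you installed. The helper name and all example values are hypothetical.

```typescript
interface AdfsConnectionOptions {
  region: string
  bucket: string
  domain: string
  username: string
  password: string
  accountId: string
  roleName: string
  idpHost: string
}

// Assemble a semicolon-separated ODBC connection string.
// Key names are assumed, not confirmed by the walkthrough above.
function buildConnectionString(options: AdfsConnectionOptions): string {
  const pairs: Array<[string, string]> = [
    ['Driver', '{Simba Athena ODBC Driver}'],
    ['AwsRegion', options.region],
    ['S3OutputLocation', `s3://${options.bucket}/athena_results/`],
    ['AuthenticationType', 'ADFS'],
    ['UID', `${options.domain}\\${options.username}`],
    ['PWD', options.password],
    ['IdP_Host', options.idpHost],
    ['IdP_Port', '443'],
    ['Preferred_Role', `arn:aws:iam::${options.accountId}:role/${options.roleName}`],
    ['SSL_Insecure', 'true'],
  ]
  return pairs.map(([key, value]) => `${key}=${value}`).join(';')
}

// Placeholder values only; never hard-code a real password.
const connectionString = buildConnectionString({
  region: 'eu-west-1',
  bucket: 'example-bucket',
  domain: 'EXAMPLE',
  username: 'analyst',
  password: 'placeholder-password',
  accountId: '123456789012',
  roleName: 'ArunADFSTest',
  idpHost: 'adfs.example.com',
})
console.log(connectionString)
```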

We can now connect to our Lake Formation sample database from our desktop environment using the Athena ODBC driver. The next step is to use Tableau to query our data using the ODBC connection.

Connect to your data using Tableau

To connect to your data, complete the following steps:

- Open your Tableau Desktop edition.

- Under To a Server, choose More.

- On the list of available Tableau installed connectors, choose Other Databases (ODBC).

- Choose the ODBC database you created earlier.

- Choose Connect.

- Choose Sign In.

When the Tableau workbook opens, select the database, schema, and table that you want to query.

- For Database, choose the database as listed in the ODBC setup (for this post, AwsDataCatalog).

- For Schema, choose your schema (sampledb).

- For Table, search for and choose your table (elb_logs).

- Drag the table to the work area to start your query and further report development.

Clean up

AWS Lake Formation provides database-, table-, column-, and tag-based access controls, and cross-account sharing at no charge. Lake Formation charges a fee for transaction requests and for metadata storage. In addition to providing a consistent view of data and enforcing row-level and cell-level security, the Lake Formation Storage API scans data in Amazon S3 and applies row and cell filters before returning results to applications. There is a fee for this filtering. To make sure that you’re not charged for any of the services that you no longer need, stop any EC2 instances that you created. Remove any objects in Amazon S3 you no longer require, because you pay for objects stored in S3 buckets.

Lastly, delete any Active Directory instances you may have created.

Conclusion

Lake Formation makes it simple to set up a secure data lake and then use the data lake with your choice of analytics and machine learning services, including Tableau. In this post, we showed you how you can connect to your data lake using AD FS credentials in a simple and secure way by using the Athena ODBC driver. Your AD FS user is configured within the ODBC driver, which then assumes a role in AWS. This role is granted access to only the data you require via Lake Formation.

To learn more about Lake Formation, see the Lake Formation Developer Guide or follow the Lake Formation workshop.

About the Authors

Jason Nicholls is an Enterprise Solutions Architect at AWS. He’s passionate about building scalable web and mobile applications on AWS. He started coding on a Commodore VIC 20, which led to a career in software development. Jason holds an MSc in Computer Science with specialization in coevolved genetic programming. He is based in Johannesburg, South Africa.

Francois van Rensburg is a Partner Management Solutions Architect at AWS. He has spent the last decade helping enterprise organizations successfully migrate to the cloud. He is passionate about networking and all things cloud. He started as a Cobol programmer and has built everything from software to data centers. He is based in Denver, Colorado.

Introducing a new AWS whitepaper: Does data localization cause more problems than it solves?

Post Syndicated from Jana Kay original https://aws.amazon.com/blogs/security/introducing-a-new-aws-whitepaper-does-data-localization-cause-more-problems-than-it-solves/

Amazon Web Services (AWS) recently released a new whitepaper, Does data localization cause more problems than it solves?, as part of the AWS Innovating Securely briefing series. The whitepaper draws on research from Emily Wu’s paper Sovereignty and Data Localization, published by Harvard University’s Belfer Center, and describes how countries can realize similar data localization objectives through AWS services without incurring the unintended effects highlighted by Wu.

Wu’s research analyzes the intent of data localization policies, and compares that to the reality of the policies’ effects, concluding that data localization policies are often counterproductive to their intended goals of data security, economic competitiveness, and protecting national values.

The new whitepaper explains how you can use the security capabilities of AWS to take advantage of up-to-date technology and help meet your data localization requirements while maintaining full control over the physical location of where your data is stored.

AWS offers robust privacy and security services and features that let you implement your own controls. AWS uses lessons learned around the globe and applies them at the local level for improved cybersecurity against security events. As an AWS customer, after you pick a geographic location to store your data, the cloud infrastructure provides you greater resiliency and availability than you can achieve by using on-prem infrastructure. When you choose an AWS Region, you maintain full control to determine the physical location of where your data is stored. AWS also provides you with resources through the AWS compliance program, to help you understand the robust controls in place at AWS to maintain security and compliance in the cloud.

An important finding of Wu’s research is that localization constraints can deter innovation and hurt local economies because they limit which services are available, or increase costs because there are a smaller number of service providers to choose from. Wu concludes that data localization can “raise the barriers [to entrepreneurs] for market entry, which suppresses entrepreneurial activity and reduces the ability for an economy to compete globally.” Data localization policies are especially challenging for companies that trade across national borders. International trade used to be the remit of only big corporations. Current data-driven efficiencies in shipping and logistics mean that international trade is open to companies of all sizes. There has been particular growth for small and medium enterprises involved in services trade (of which cross-border data flows are a key element). In a 2016 worldwide survey conducted by McKinsey, 86 percent of tech-based startups had at least one cross-border activity. The same report showed that cross-border data flows added some US$2.8 trillion to world GDP in 2014.

However, the availability of cloud services supports secure and efficient cross-border data flows, which in turn can contribute to national economic competitiveness. Deloitte Consulting’s report, The cloud imperative: Asia Pacific’s unmissable opportunity, estimates that by 2024, the cloud will contribute $260 billion to GDP across eight regional markets, with more benefit possible in the future. The World Trade Organization’s World Trade Report 2018 estimates that digital technologies, which include advanced cloud services, will account for a 34 percent increase in global trade by 2030.

Wu also cites a link between national data governance policies and a government’s concerns that movement of data outside national borders can diminish its control. However, the technology, storage capacity, and compute power provided by hyperscale cloud service providers like AWS can empower local entrepreneurs.

AWS continually updates practices to meet the evolving needs and expectations of both customers and regulators. This allows AWS customers to use effective tools for processing data, which can help them meet stringent local standards to protect national values and citizens’ rights.

Wu’s research concludes that “data localization is proving ineffective” for meeting intended national goals, and offers practical alternatives for policymakers to consider. Wu has several recommendations, such as continuing to invest in cybersecurity, supporting industry-led initiatives to develop shared standards and protocols, and promoting international cooperation around privacy and innovation. Despite the continued existence of data localization policies, countries can currently realize similar objectives through cloud services. AWS implements rigorous contractual, technical, and organizational measures to protect the confidentiality, integrity, and availability of customer data, regardless of which AWS Region you select to store your data. As an AWS customer, this means you can take advantage of the economic benefits and the support for innovation provided by cloud computing, while improving your ability to meet your core security and compliance requirements.

For more information, see the whitepaper Does data localization cause more problems than it solves?, or contact AWS.

If you have feedback about this post, submit comments in the Comments section below.

sex robot 2

Post Syndicated from turnoff.us - geek comic site original http://turnoff.us/geek/sex-robot-2

readme

Post Syndicated from turnoff.us - geek comic site original http://turnoff.us/geek/readme

Identification of replication bottlenecks when using AWS Application Migration Service

Post Syndicated from Tobias Reekers original https://aws.amazon.com/blogs/architecture/identification-of-replication-bottlenecks-when-using-aws-application-migration-service/

Enterprises frequently begin their journey by re-hosting (lift-and-shift) their on-premises workloads into AWS and running Amazon Elastic Compute Cloud (Amazon EC2) instances. A simpler way to re-host is by using AWS Application Migration Service (Application Migration Service), a cloud-native migration service.

To streamline and expedite migrations, automate reusable migration patterns that work for a wide range of applications. Application Migration Service is the recommended migration service to lift-and-shift your applications to AWS.

In this blog post, we explore key variables that contribute to server replication speed when using Application Migration Service. We will also look at tests you can run to identify these bottlenecks and, where appropriate, include remediation steps.

Overview of migration using Application Migration Service

Figure 1 depicts the end-to-end data replication flow from source servers to a target machine hosted on AWS. The diagram is designed to help visualize potential bottlenecks within the data flow, which are denoted by a black diamond.

Figure 1. Data flow when using AWS Application Migration Service (black diamonds denote potential points of contention)

Baseline testing

To determine a baseline replication speed, we recommend performing a control test between your target AWS Region and the nearest Region to your source workloads. For example, if your source workloads are in a data center in Rome and your target Region is Paris, run a test between eu-south-1 (Milan) and eu-west-3 (Paris). This will give a theoretical upper bandwidth limit, as replication will occur over the AWS backbone. If the target Region is already the closest Region to your source workloads, run the test from within the same Region.

Network connectivity

There are several ways to establish connectivity between your on-premises location and AWS Region:

- Public internet

- VPN

- AWS Direct Connect

This section pertains to the first two options, public internet and VPN. If you face replication speed issues, the first place to look is network bandwidth. From a source machine within your internal network, run a speed test to calculate your bandwidth out to the internet; common test providers include Cloudflare, Ookla, and Google. This measures your bandwidth to the internet, not to AWS.

Next, to confirm the data flow from within your data center, run tracert (Windows) or traceroute (Linux). Identify any network hops that are unusual or potentially throttling bandwidth (due to hardware limitations or configuration).
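The route check can be scripted so that the same helper works on both operating systems. This is a minimal sketch with hypothetical function names: it assumes the tracert utility is on the path on Windows and traceroute on Linux, and the host you pass in is whichever endpoint you want to trace.

```python
import platform
import subprocess


def trace_command(host, system=None):
    """Return the route-tracing command for the given operating system.

    Windows ships tracert; Linux and other Unix-like systems use traceroute.
    """
    system = system or platform.system()
    if system == "Windows":
        return ["tracert", host]
    return ["traceroute", host]


def run_trace(host):
    """Run the trace and return its text output for manual inspection of the hops."""
    result = subprocess.run(
        trace_command(host), capture_output=True, text=True, check=False
    )
    return result.stdout
```

The output still needs to be read by a person: look for hops with unusually high latency or hops that pass through equipment known to limit throughput.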

To measure the maximum bandwidth between your data center and the AWS subnet that is being used for data replication, while accounting for Secure Sockets Layer (SSL) encapsulation, use the CloudEndure SSL bandwidth tool (refer to Figure 1).
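Once you have a measured bandwidth figure, it can be turned into a rough estimate of how long the initial sync might take. The sketch below is simple arithmetic and not an Application Migration Service feature; the function name and the default efficiency factor of 0.8 (an allowance for protocol overhead and competing traffic) are assumptions chosen for illustration.

```python
def estimated_sync_hours(data_gib, bandwidth_mbps, efficiency=0.8):
    """Estimate hours needed to transfer data_gib GiB over a bandwidth_mbps link.

    efficiency is an assumed fraction of the measured bandwidth that
    replication can actually use; adjust it to match your own observations.
    """
    if data_gib < 0 or bandwidth_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid size, bandwidth, or efficiency")
    total_bits = data_gib * 1024**3 * 8                        # GiB -> bits
    usable_bits_per_second = bandwidth_mbps * 1_000_000 * efficiency
    return total_bits / usable_bits_per_second / 3600          # seconds -> hours


# Example: 500 GiB of source disks over a measured 100 Mbps link
print(round(estimated_sync_hours(500, 100), 1))  # 14.9
```

If the observed replication time is far longer than this kind of estimate, the bottleneck is probably not raw network bandwidth, and the storage and replication server checks in the following sections are worth running.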

Source storage I/O

The next area to look for replication bottlenecks is source storage. The underlying storage for servers can be a point of contention: if the storage is already reading at its maximum speed or its I/O is heavily utilized, block replication by Application Migration Service slows down. To measure storage speeds, you can use the following tools:

- Windows: WinSat (or other third-party tooling, like AS SSD Benchmark)

- Linux: hdparm

We suggest reducing read/write operations on your source storage when starting your migration using Application Migration Service.

Application Migration Service EC2 replication instance size

The size of the EC2 replication server instance can also have an impact on the replication speed. Although it is recommended to keep the default instance size (t3.small), it can be increased if there are business requirements, such as speeding up the initial data sync. Note: using a larger instance can lead to increased compute costs.

Common replication instance changes include:

- Servers with <26 disks: change the instance type to m5.large. Increase the instance type to m5.xlarge or higher, as needed.

- Servers with 26 or more disks (or servers in AWS Regions that do not support m5 instance types): change the instance type to m4.large. Increase to m4.xlarge or higher, as needed.
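The sizing guidance above can be expressed as a small lookup. This is a sketch with a hypothetical function name, and it assumes the second case is meant for servers with 26 or more disks or for Regions without m5 instance types. It returns only the starting instance type; moving up to an xlarge or larger size remains a judgment call.

```python
def suggested_replication_instance(disk_count, m5_available=True):
    """Return a starting replication server instance type for a source server.

    disk_count is the number of disks on the source server; m5_available
    says whether the target Region offers m5 instance types.
    """
    if disk_count < 0:
        raise ValueError("disk_count must not be negative")
    if disk_count < 26 and m5_available:
        return "m5.large"
    # 26 or more disks, or a Region without m5 support (assumed reading of the guidance)
    return "m4.large"
```

For example, a server with 4 disks maps to m5.large, while a server with 30 disks, or any server in a Region without m5 support, maps to m4.large.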