Post Syndicated from Tanushree Sharma original https://blog.cloudflare.com/logs-r2/

Hot on the heels of the R2 open beta announcement, we’re excited that Cloudflare Enterprise customers can now use Logpush to store logs on R2!

Our customers use raw logs from our products to debug performance issues, investigate security incidents, keep up security standards for compliance and much more. You shouldn’t have to make tradeoffs between keeping the logs that you need and managing tight budgets. With R2’s low costs, we’re making this decision easier for our customers!

Getting into the numbers

Cloudflare helps customers at different levels of scale — from a few requests per day, up to a million requests per second. Because of this, the cost of log storage also varies widely. For customers with higher-traffic websites, log storage costs can grow large, quickly.

As an example, imagine a website that gets 100,000 requests per second. This site would generate about 9.2 TB of HTTP request logs per day, or 850 GB/day after gzip compression. Over a month, you’ll be storing about 26 TB (compressed) of HTTP logs.

For a typical use case, imagine that you write and read the data exactly once – for example, you might write the data to object storage before ingesting it into an alerting system. Compare the costs of R2 and S3 (note that this excludes costs per operation to read/write data).

| Provider | Storage price | Data transfer price | Total cost assuming data is read once |
|---|---|---|---|
| R2 | $0.015/GB | $0 | $390/month |
| S3 (Standard, US East) | $0.023/GB | $0.09/GB for first 10 TB; then $0.085/GB | $2,858/month |

In this example, R2 leads to 86% savings! It’s worth noting that querying logs is where another hefty price tag comes in, because Amazon Athena charges based on the amount of data scanned. If your team is looking back through historical data, each query can cost hundreds of dollars.
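The totals in the table can be reproduced with a few lines of Python. This is a minimal sketch that uses only the prices from the table and the 26 TB monthly volume from the example, assuming 1 TB = 1,000 GB. The function names are ours, per-operation costs are excluded as noted above, and the $0.085/GB rate is applied to everything past the first 10 TB, which is all this example needs.

```python
# Monthly volume from the example: ~26 TB of compressed HTTP logs (1 TB = 1,000 GB assumed).
STORED_GB = 26_000


def r2_monthly_cost(stored_gb: float) -> float:
    """R2: $0.015/GB for storage, $0 for data transfer."""
    return stored_gb * 0.015


def s3_monthly_cost(stored_gb: float) -> float:
    """S3 Standard: $0.023/GB storage plus tiered transfer when the data is read once."""
    storage = stored_gb * 0.023
    first_tier_gb = min(stored_gb, 10_000)       # first 10 TB at $0.09/GB
    remaining_gb = max(stored_gb - 10_000, 0)    # the rest at $0.085/GB
    transfer = first_tier_gb * 0.09 + remaining_gb * 0.085
    return storage + transfer


r2 = r2_monthly_cost(STORED_GB)
s3 = s3_monthly_cost(STORED_GB)
savings = 1 - r2 / s3

print(f"R2: ${r2:,.0f}/month")   # R2: $390/month
print(f"S3: ${s3:,.0f}/month")   # S3: $2,858/month
print(f"Savings: {savings:.0%}")  # Savings: 86%
```

Changing `STORED_GB` gives a rough estimate for other traffic levels under the same assumptions.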

Many of our customers have tens to hundreds of domains behind Cloudflare and the majority of our Enterprise customers also use multiple Cloudflare products. Imagine how costs will scale if you need to store HTTP, WAF and Spectrum logs for all of your Internet properties behind Cloudflare.

For SaaS customers that are building the next big thing on Cloudflare, logs are important to get visibility into customer usage and performance. Your customers’ developers may also want access to raw logs to understand errors during development and to troubleshoot production issues. Costs for storing logs multiply and add up quickly!

The flip side: log retrieval

When designing products, one of Cloudflare’s core principles is ease of use. We take on the complexity, so you don’t have to. Storing logs is only half the battle. You also need to be able to access relevant logs when you need them – in the heat of an incident or when doing an in-depth analysis.

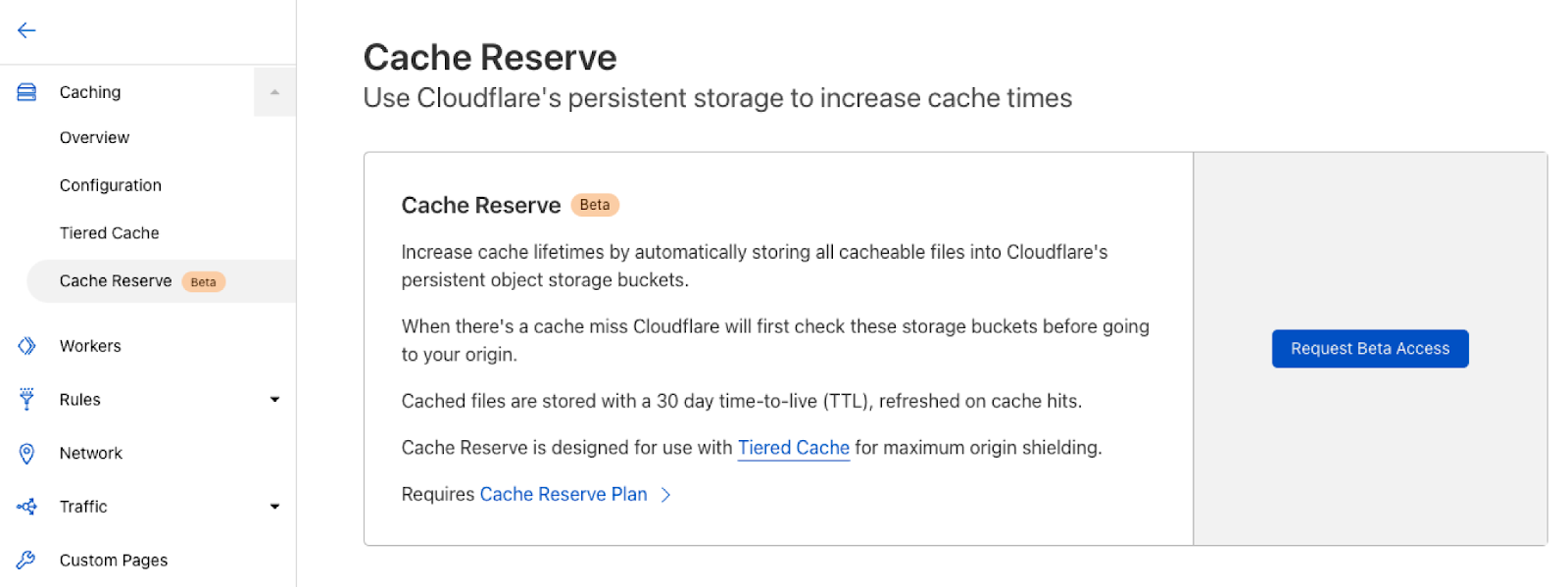

Our product, Logpull, offers seven days of log retention and an easy-to-use API to access them. Our customers love that Logpull doesn’t need any setup on third parties since it’s completely managed by Cloudflare. However, Logpull is limited in the retention of logs, the type of logs that we store (only HTTP request logs) and the amount of data that can be queried at one time.

We’re building tools for log retrieval that make it super easy to get your data out of R2 from any of our datasets. Similar to Logpull, we’ll start by supporting lookups by time period and rayId. From there, we’ll tackle more complex functions, like returning logs between times X and Y that have 500 errors or where WAF action = block.
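To make that kind of lookup concrete, here is a minimal Python sketch that applies such a filter locally to newline-delimited JSON log lines, for example after downloading them from a bucket. It is not the Log Retrieval API, which is still in development. It assumes the HTTP request log fields `EdgeStartTimestamp` (as an RFC 3339 string), `EdgeResponseStatus`, `WAFAction` and `RayID` are present, and the sample records are made up for illustration.

```python
import json
from datetime import datetime, timezone
from typing import Iterable, Iterator, Optional


def parse_ts(value: str) -> datetime:
    # Assumes RFC 3339 timestamps in UTC with a trailing "Z", e.g. 2022-05-11T10:00:00Z.
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def filter_logs(
    lines: Iterable[str],
    start: datetime,
    end: datetime,
    status: Optional[int] = None,
    waf_action: Optional[str] = None,
) -> Iterator[dict]:
    """Yield records with start <= EdgeStartTimestamp < end that match the optional filters."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        if not (start <= parse_ts(record["EdgeStartTimestamp"]) < end):
            continue
        if status is not None and record.get("EdgeResponseStatus") != status:
            continue
        if waf_action is not None and record.get("WAFAction") != waf_action:
            continue
        yield record


# Hypothetical sample records, not real log data.
SAMPLE = [
    '{"RayID": "a1", "EdgeStartTimestamp": "2022-05-11T10:00:00Z", "EdgeResponseStatus": 500, "WAFAction": "unknown"}',
    '{"RayID": "b2", "EdgeStartTimestamp": "2022-05-11T10:05:00Z", "EdgeResponseStatus": 403, "WAFAction": "block"}',
    '{"RayID": "c3", "EdgeStartTimestamp": "2022-05-11T12:00:00Z", "EdgeResponseStatus": 500, "WAFAction": "unknown"}',
]

window_start = datetime(2022, 5, 11, 10, 0, tzinfo=timezone.utc)
window_end = datetime(2022, 5, 11, 11, 0, tzinfo=timezone.utc)

errors = [r["RayID"] for r in filter_logs(SAMPLE, window_start, window_end, status=500)]
blocked = [r["RayID"] for r in filter_logs(SAMPLE, window_start, window_end, waf_action="block")]
print(errors, blocked)
```

When both `status` and `waf_action` are given, a record has to match both. Calling the function once per condition, as above, gives the "500 errors or WAF action = block" behavior.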

We’re looking for customers to join a closed beta for our Log Retrieval API. If you’re interested in testing it out, giving feedback and ultimately helping us shape the product, sign up here.

Logs on R2: How to get started

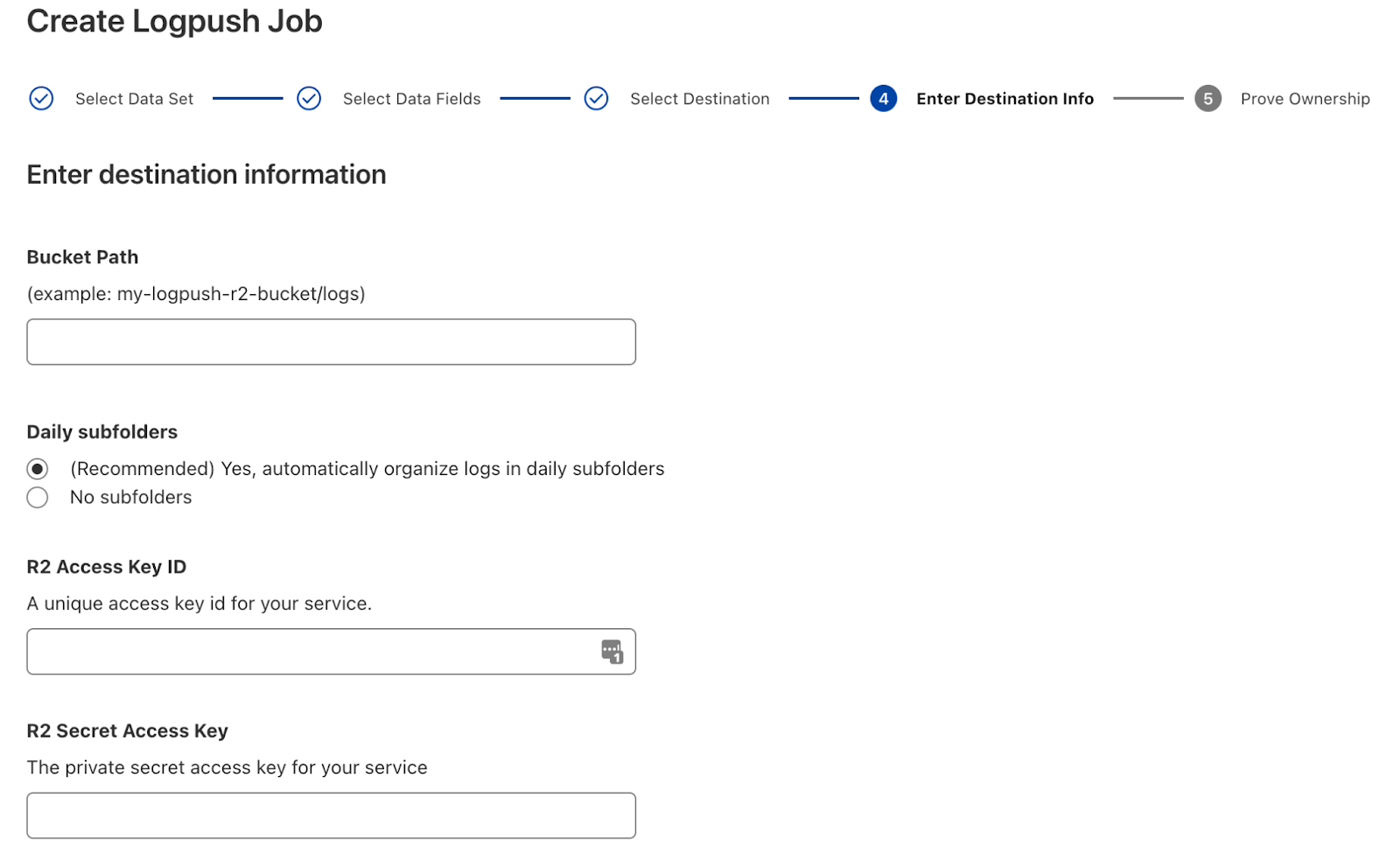

Enterprise customers first need to get R2 added to their contract. Reach out to your account team if this is something you’re interested in! Once enabled, create an R2 bucket for your logs and follow the Logpush setup flow to create your job.

It’s that simple! If you have questions, our Logpush to R2 developer docs go into more detail.
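If you prefer to script the setup, a Logpush job can also be created through the API. The sketch below only builds the request body in Python and does not send it. It assumes the `r2://` destination format and the job fields (`name`, `dataset`, `destination_conf`, `enabled`) as described in the Logpush developer docs, so check the docs before relying on it. The bucket path, account ID and keys are placeholders.

```python
import json
from urllib.parse import urlencode


def r2_destination_conf(bucket_path: str, account_id: str,
                        access_key_id: str, secret_access_key: str) -> str:
    """Build an r2:// destination string (format assumed from the Logpush docs)."""
    query = urlencode({
        "account-id": account_id,
        "access-key-id": access_key_id,
        "secret-access-key": secret_access_key,
    })
    # {DATE} is kept as a literal token so logs can be organized into daily folders.
    return f"r2://{bucket_path}/{{DATE}}?{query}"


def logpush_job_payload(name: str, destination_conf: str,
                        dataset: str = "http_requests") -> dict:
    """Request body for creating a Logpush job (field names assumed from the docs)."""
    return {
        "name": name,
        "dataset": dataset,
        "destination_conf": destination_conf,
        "enabled": True,
    }


# Placeholder values; substitute your own bucket, account ID and R2 API credentials.
dest = r2_destination_conf("my-log-bucket/http", "ACCOUNT_ID", "R2_KEY_ID", "R2_SECRET")
payload = logpush_job_payload("http-logs-to-r2", dest)

# This body would be sent as an authenticated POST to the zone's Logpush jobs endpoint,
# assumed here to be https://api.cloudflare.com/client/v4/zones/<ZONE_ID>/logpush/jobs.
print(json.dumps(payload, indent=2))
```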

More to come

We’re continuing to build out more advanced Logpush features with a focus on customization. Here’s a preview of what’s next on the roadmap:

- New datasets: Network Analytics Logs, Workers Invocation Logs

- Log filtering

- Custom log formatting

We also have exciting plans to build out log analysis and forensics capabilities on top of R2. We want to make log storage tightly coupled to the Cloudflare dash so you can see high-level analytics and drill down into individual log lines, all in one view. Stay tuned to the blog for more!