Post Syndicated from Christopher Wood original https://blog.cloudflare.com/handshake-encryption-endgame-an-ech-update/

Privacy and security are fundamental to Cloudflare, and we believe in and champion the use of cryptography to help provide these fundamentals for customers, end-users, and the Internet at large. In the past, we helped specify, implement, and ship TLS 1.3, the latest version of the transport security protocol underlying the web, to all of our users. TLS 1.3 vastly improved upon prior versions of the protocol with respect to security, privacy, and performance: simpler cryptographic algorithms, more handshake encryption, and fewer round trips are just a few of the many great features of this protocol.

TLS 1.3 was a tremendous improvement over TLS 1.2, but there is still room for improvement. Sensitive metadata relating to application or user intent is still visible in plaintext on the wire. In particular, all client parameters, including the name of the target server the client is connecting to, are visible in plaintext. For obvious reasons, this is problematic from a privacy perspective: Even if your application traffic to crypto.cloudflare.com is encrypted, the fact you’re visiting crypto.cloudflare.com can be quite revealing.

And so, in collaboration with other participants in the standardization community and members of industry, we set out to find a solution for encrypting all sensitive TLS metadata in transit. The result: TLS Encrypted ClientHello (ECH), an extension to protect this sensitive metadata during connection establishment.

Last year, we described the current status of this standard and its relation to the TLS 1.3 standardization effort, as well as ECH’s predecessor, Encrypted SNI (ESNI). The protocol has come a long way since then, but how will we know when it’s ready? There are many ways to measure a protocol. Is it implementable? Is it easy to enable? Does it integrate seamlessly with existing protocols or applications? In order to assess these questions and see if the Internet is ready for ECH, the community needs deployment experience. Hence, for the past year, we’ve been focused on making the protocol stable, interoperable, and, ultimately, deployable. And today, we’re pleased to announce that we’ve begun our initial deployment of TLS ECH.

What does ECH mean for connection security and privacy on the network? How does it relate to similar technologies and concepts such as domain fronting? In this post, we’ll dig into ECH details and describe what this protocol does to move the needle to help build a better Internet.

Connection privacy

For most Internet users, connections are made to perform some type of task, such as loading a web page, sending a message to a friend, purchasing some items online, or accessing bank account information. Each of these connections reveals some limited information about user behavior. For example, a connection to a messaging platform reveals that one might be trying to send or receive a message. Similarly, a connection to a bank or financial institution reveals when the user typically makes financial transactions. Individually, this metadata might seem harmless. But consider what happens when it accumulates: does the set of websites you visit on a regular basis uniquely identify you as a user? The safe answer is: yes.

This type of metadata is privacy-sensitive, and ultimately something that should only be known by two entities: the user who initiates the connection, and the service which accepts the connection. However, the reality today is that this metadata is known to more than those two entities.

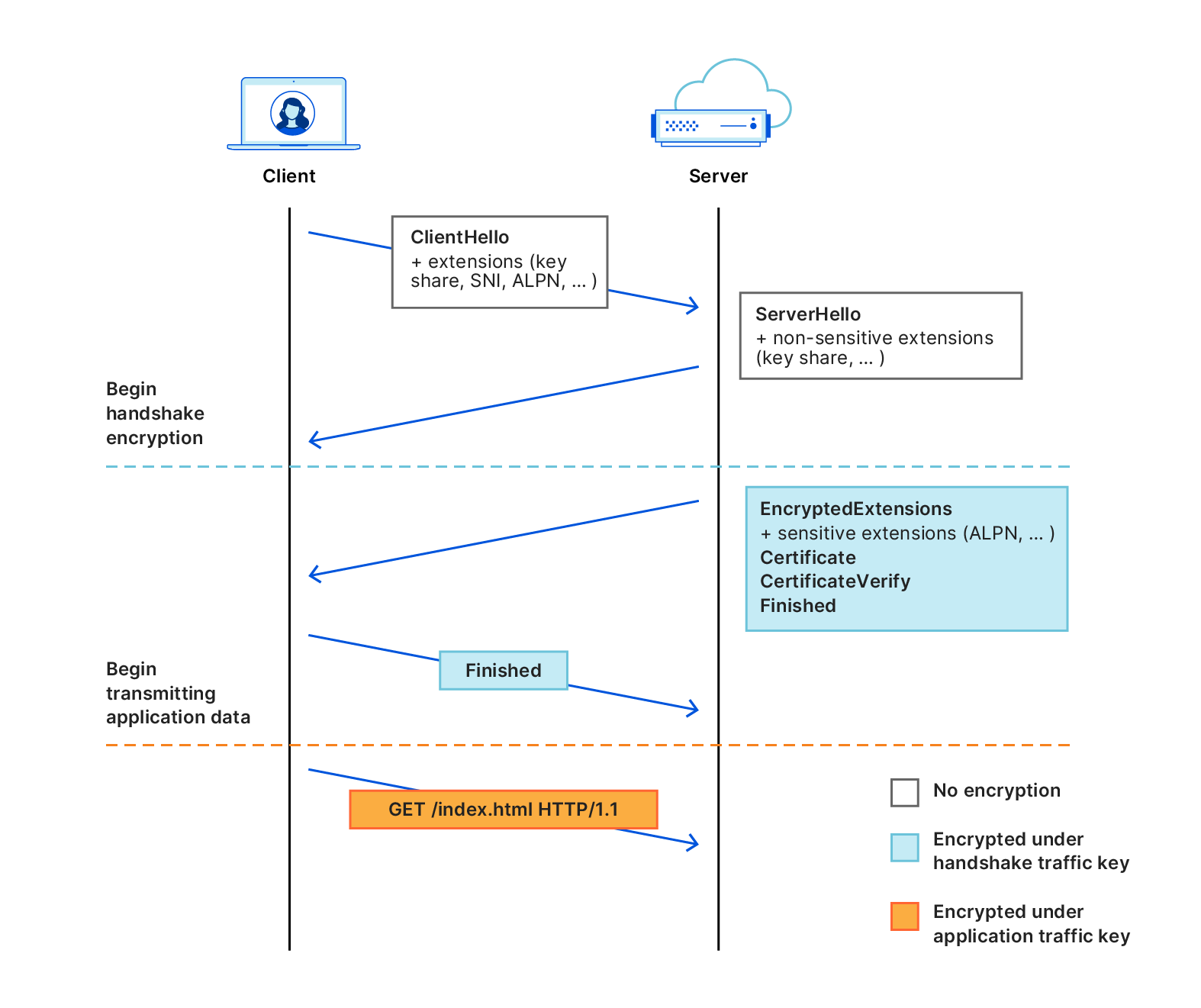

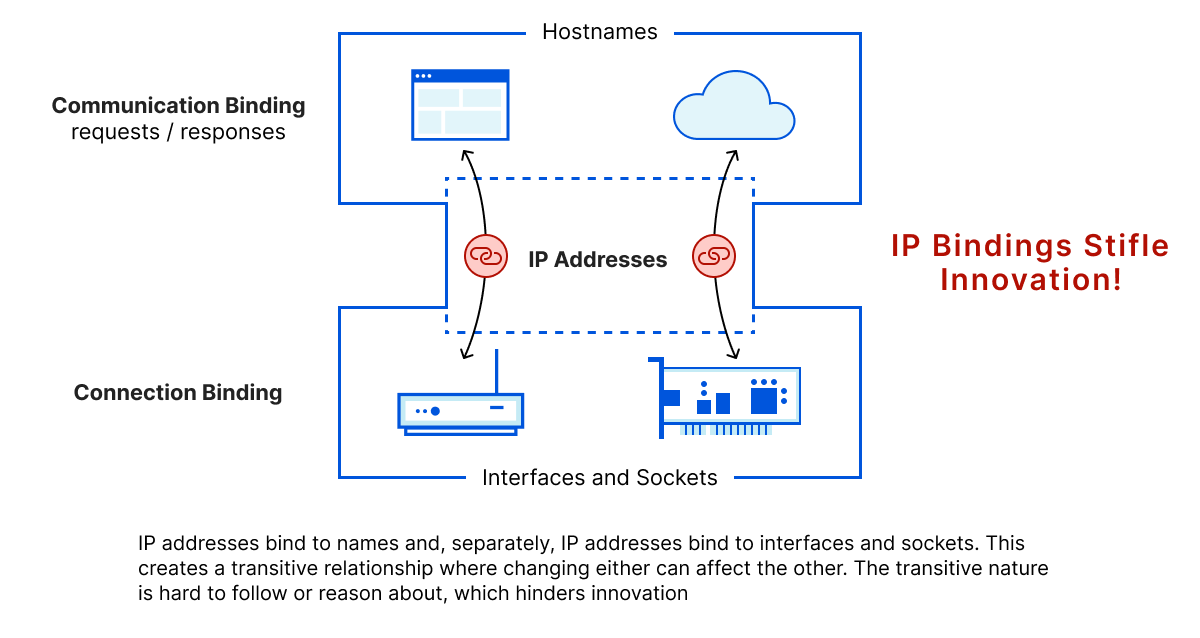

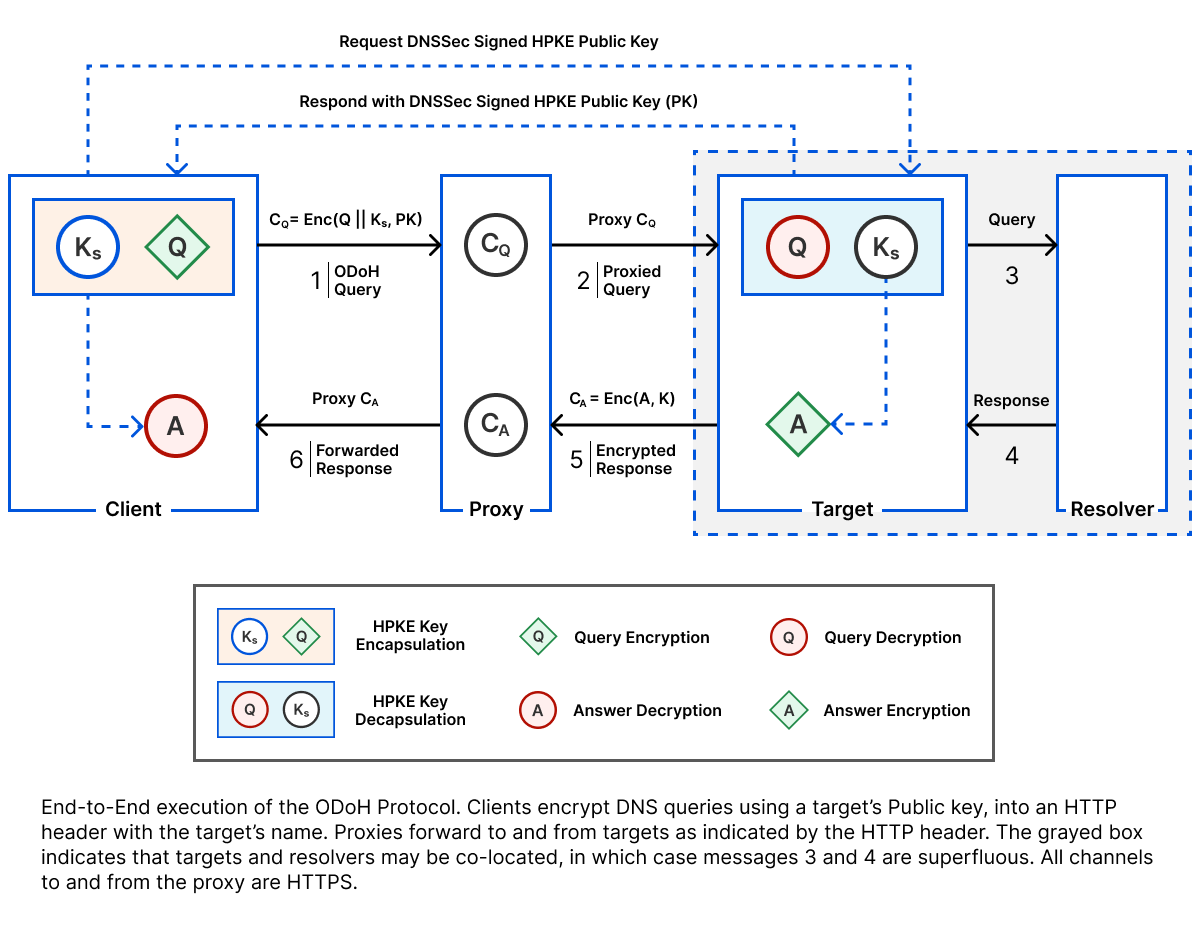

Making this information private is no easy feat. The nature or intent of a connection, i.e., the name of the service such as crypto.cloudflare.com, is revealed in multiple places during the course of connection establishment: during DNS resolution, wherein clients map service names to IP addresses; and during connection establishment, wherein clients indicate the service name to the target server. (Note: there are other small leaks, though DNS and TLS are the primary problems on the Internet today.)

As has become common in recent years, the solution to this problem is encryption. DNS-over-HTTPS (DoH) is a protocol for encrypting DNS queries and responses to hide this information from on-path observers. Encrypted Client Hello (ECH) is the complementary protocol for TLS.

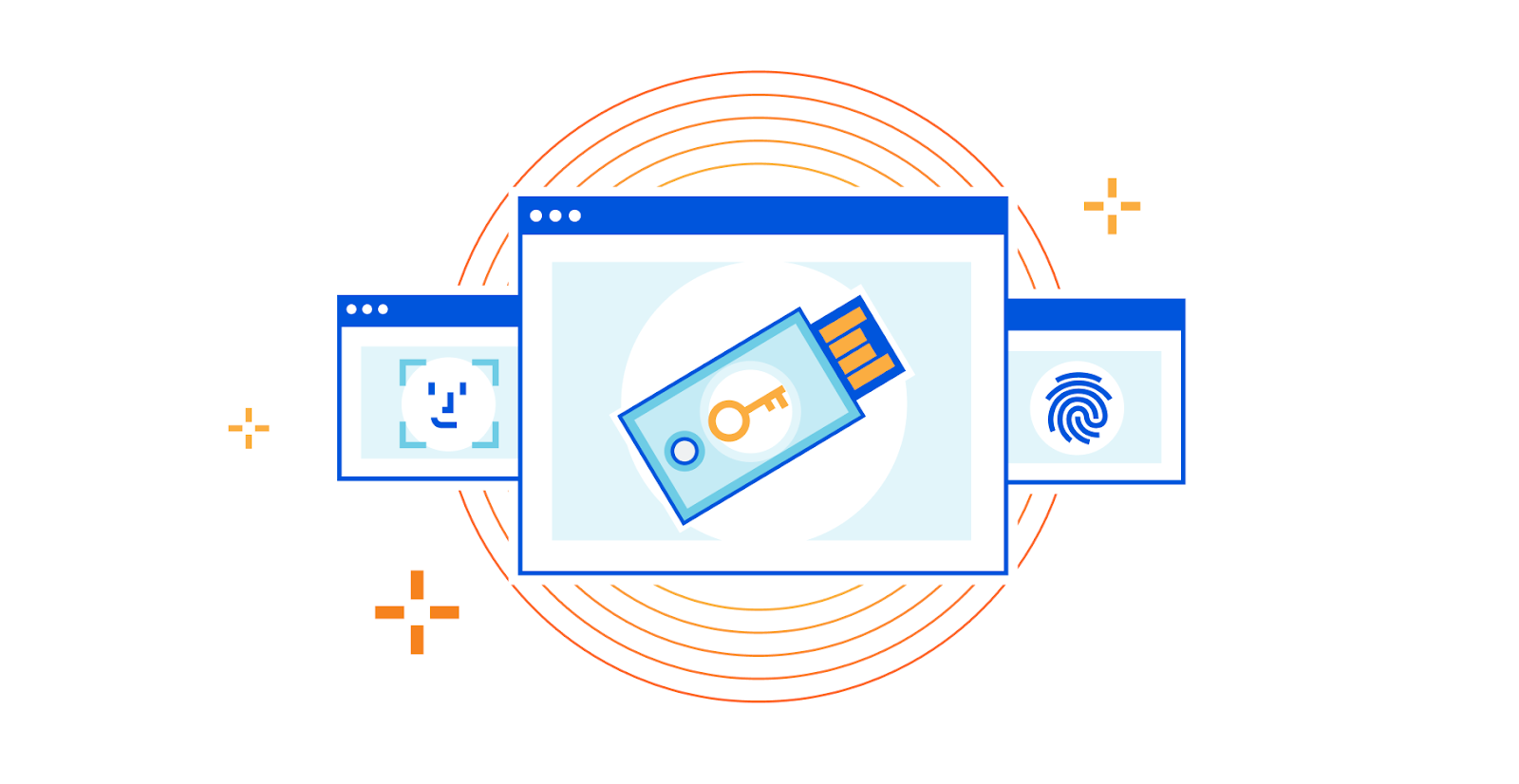

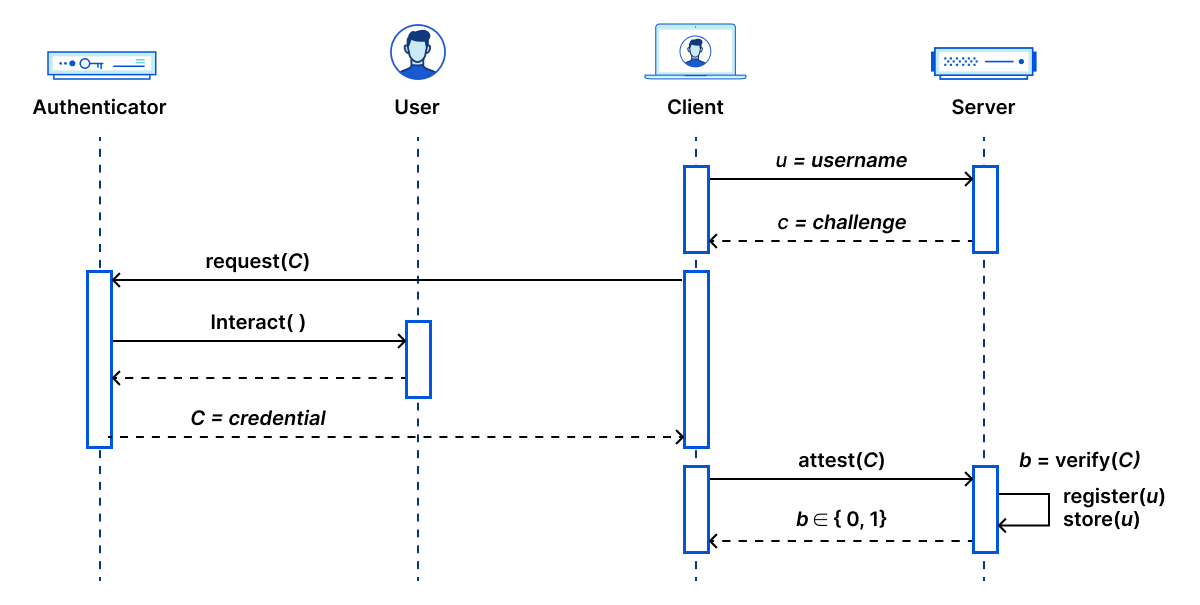

The TLS handshake begins when the client sends a ClientHello message to the server over a TCP connection (or, in the context of QUIC, over UDP) with relevant parameters, including those that are sensitive. The server responds with a ServerHello, encrypted parameters, and all that’s needed to finish the handshake.

The goal of ECH is as simple as its name suggests: to encrypt the ClientHello so that privacy-sensitive parameters, such as the service name, are unintelligible to anyone listening on the network. The client encrypts this message using a public key it learns by making a DNS query for a special record known as the HTTPS resource record. This record advertises the server’s various TLS and HTTPS capabilities, including ECH support. The server decrypts the encrypted ClientHello using the corresponding secret key.

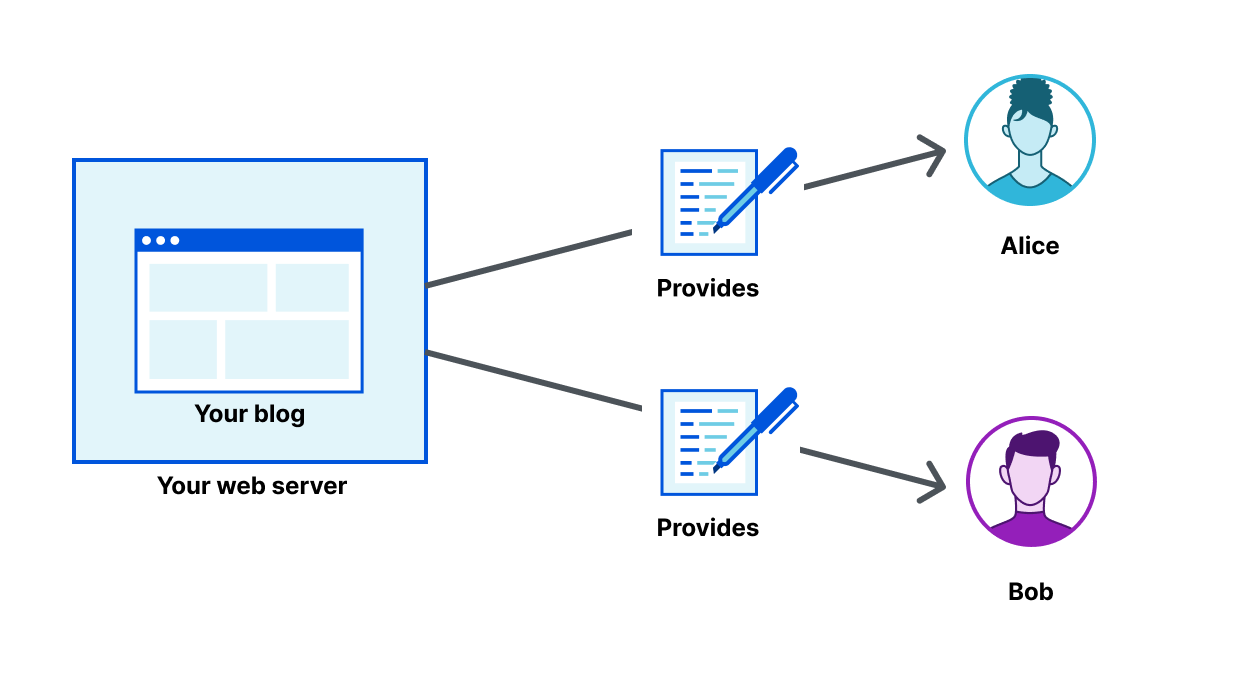

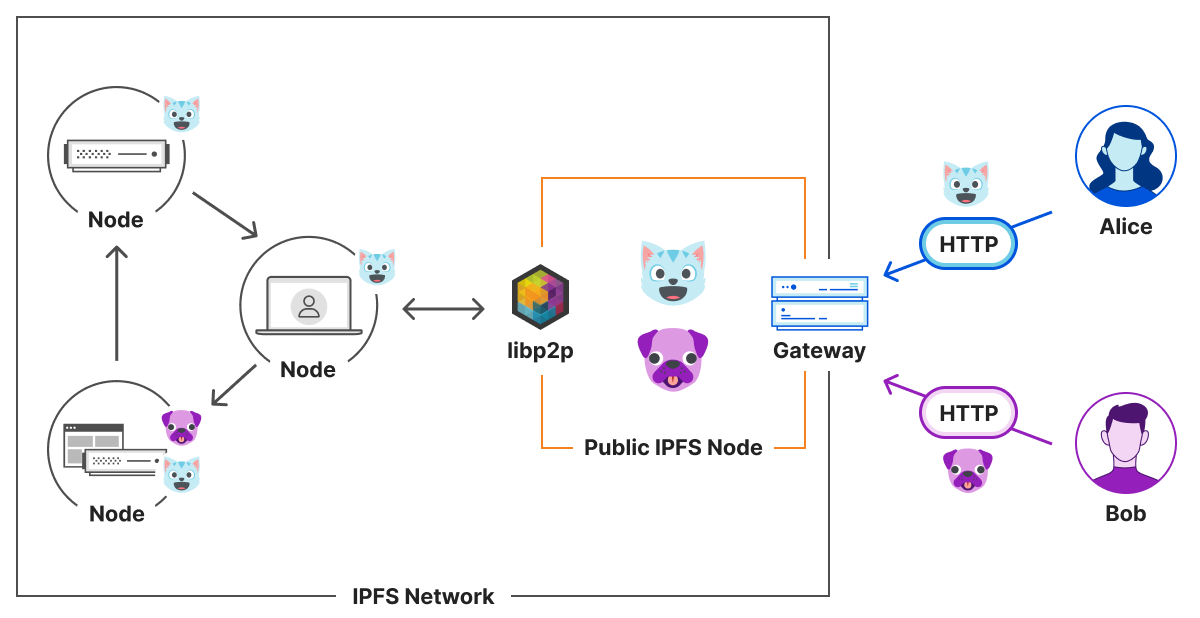

Conceptually, DoH and ECH are somewhat similar. With DoH, clients establish an encrypted connection (HTTPS) to a DNS recursive resolver such as 1.1.1.1 and, within that connection, perform DNS transactions.

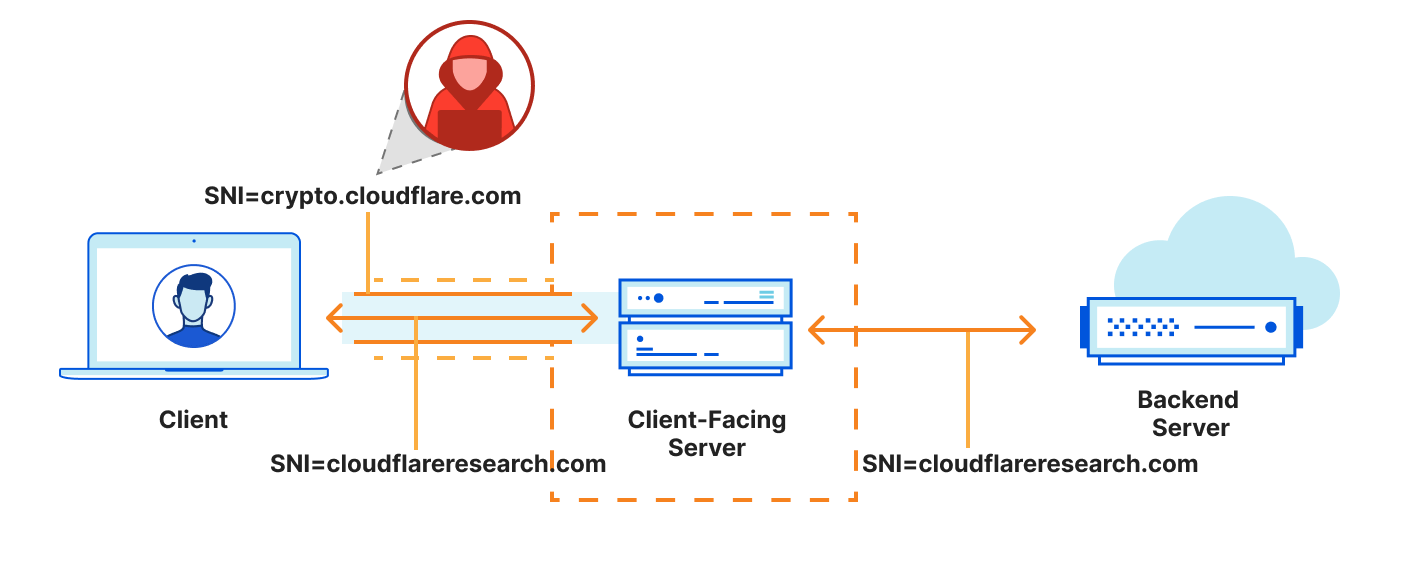
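To make the DoH side concrete, here is a minimal sketch of how a client might encode a DNS question for an HTTPS (GET) request, following the DoH convention of carrying the wire-format query in a base64url `dns` parameter. The endpoint URL and the helper names `build_dns_query` and `doh_get_url` are assumptions for illustration and do not come from this post. The sketch only builds the URL and does not send anything.

```python
import base64
import struct

# Assumption: Cloudflare's public DoH endpoint; any DoH resolver URL would do.
DOH_ENDPOINT = "https://cloudflare-dns.com/dns-query"


def build_dns_query(name: str, qtype: int) -> bytes:
    """Build a wire-format DNS query with a single question (class IN)."""
    # Header: ID 0, flags 0x0100 (recursion desired), one question, no other records.
    header = struct.pack("!HHHHHH", 0, 0x0100, 1, 0, 0, 0)
    # QNAME: each label is prefixed by its length, terminated by a zero byte.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    return header + qname + struct.pack("!HH", qtype, 1)


def doh_get_url(name: str, qtype: int) -> str:
    """Return a DoH GET URL carrying the query as unpadded base64url."""
    wire = build_dns_query(name, qtype)
    encoded = base64.urlsafe_b64encode(wire).rstrip(b"=").decode("ascii")
    return f"{DOH_ENDPOINT}?dns={encoded}"


# Type 65 is the HTTPS resource record, the record that advertises ECH support.
url = doh_get_url("crypto.cloudflare.com", 65)
```

Because the question travels inside an HTTPS request, an on-path observer sees only a TLS connection to the resolver, not the name being resolved.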

With ECH, clients establish an encrypted connection to a TLS-terminating server such as crypto.cloudflare.com, and within that connection, request resources for an authorized domain such as cloudflareresearch.com.

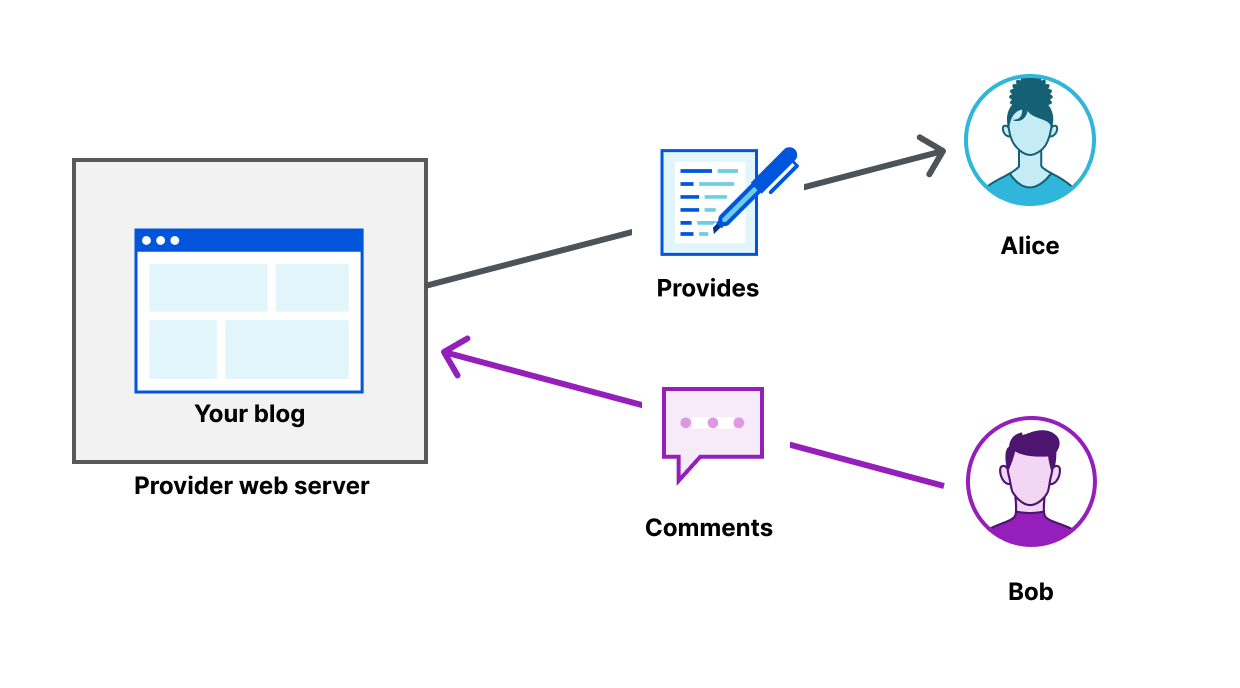

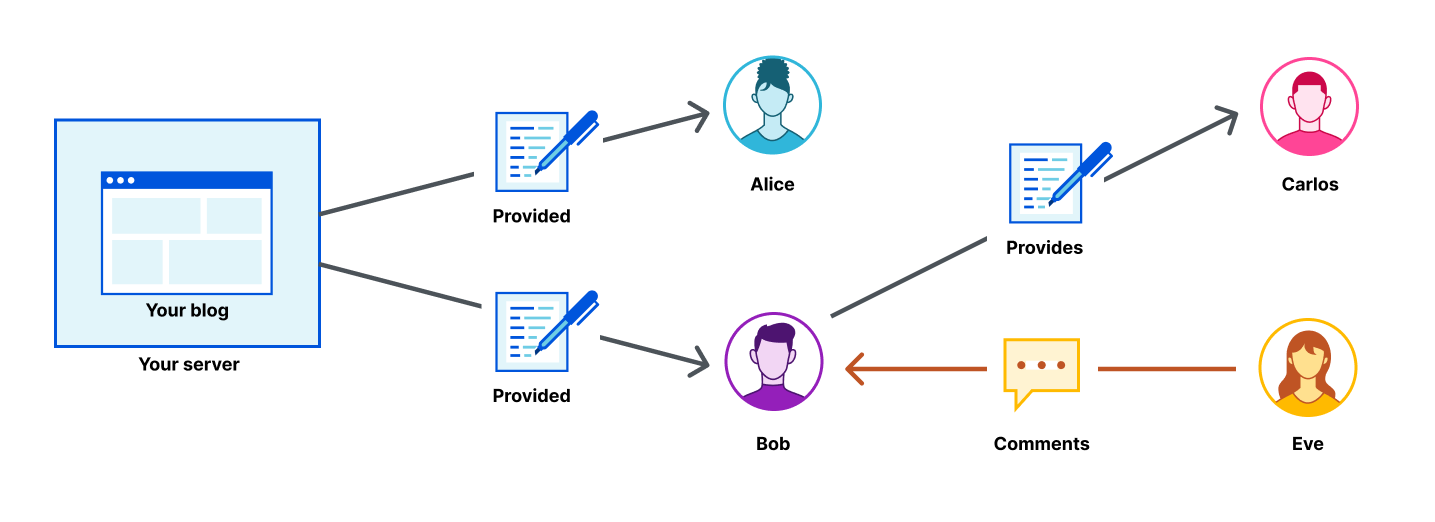

There is one very important difference between DoH and ECH that is worth highlighting. Whereas a DoH recursive resolver is specifically designed to allow queries for any domain, a TLS server is configured to allow connections for a select set of authorized domains. Typically, the set of authorized domains for a TLS server are those which appear on its certificate, as these constitute the set of names for which the server is authorized to terminate a connection.

Basically, this means the DNS resolver is open, whereas the ECH client-facing server is closed. And this closed set of authorized domains is informally referred to as the anonymity set. (This will become important later on in this post.) Moreover, the anonymity set is assumed to be public information. Anyone can query DNS to discover what domains map to the same client-facing server.

Why is this distinction important? It means that one cannot use ECH for the purposes of connecting to an authorized domain and then interacting with a different domain, a practice commonly referred to as domain fronting. When a client connects to a server using an authorized domain but then tries to interact with a different domain within that connection, e.g., by sending HTTP requests for an origin that does not match the domain of the connection, the request will fail.
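The check described above can be sketched roughly as follows. This is one hypothetical way a server might enforce it, not a description of Cloudflare's implementation: the function name `request_allowed`, the set `AUTHORIZED`, and the domain `example.net` are all made up for illustration.

```python
# Assumption: the anonymity set, i.e. the names this server may terminate TLS for.
AUTHORIZED = {"crypto.cloudflare.com", "cloudflareresearch.com"}


def normalize(name: str) -> str:
    """Lowercase a host name and drop any trailing dot."""
    return name.lower().rstrip(".")


def request_allowed(connection_name: str, request_host: str) -> bool:
    """Accept a request only if it targets the name the connection was made for.

    connection_name: the (decrypted) server name from the TLS handshake.
    request_host:    the host named in the HTTP request on that connection.
    """
    conn = normalize(connection_name)
    host = normalize(request_host)
    # The connection itself must be for an authorized domain...
    if conn not in AUTHORIZED:
        return False
    # ...and the request must not switch to a different domain (fronting).
    return host == conn


ok = request_allowed("cloudflareresearch.com", "cloudflareresearch.com")
fronted = request_allowed("cloudflareresearch.com", "example.net")
```

Here `ok` is accepted, while `fronted` is rejected because the HTTP request names a domain other than the one the connection was established for.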

From a high level, encrypting names in DNS and TLS may seem like a simple feat. However, as we’ll show, ECH demands a different look at security and an updated threat model.

A changing threat model and design confidence

The typical threat model for TLS is known as the Dolev-Yao model, in which an active network attacker can read, write, and delete packets from the network. This attacker’s goal is to derive the shared session key. There has been a tremendous amount of research analyzing the security of TLS to gain confidence that the protocol achieves this goal.

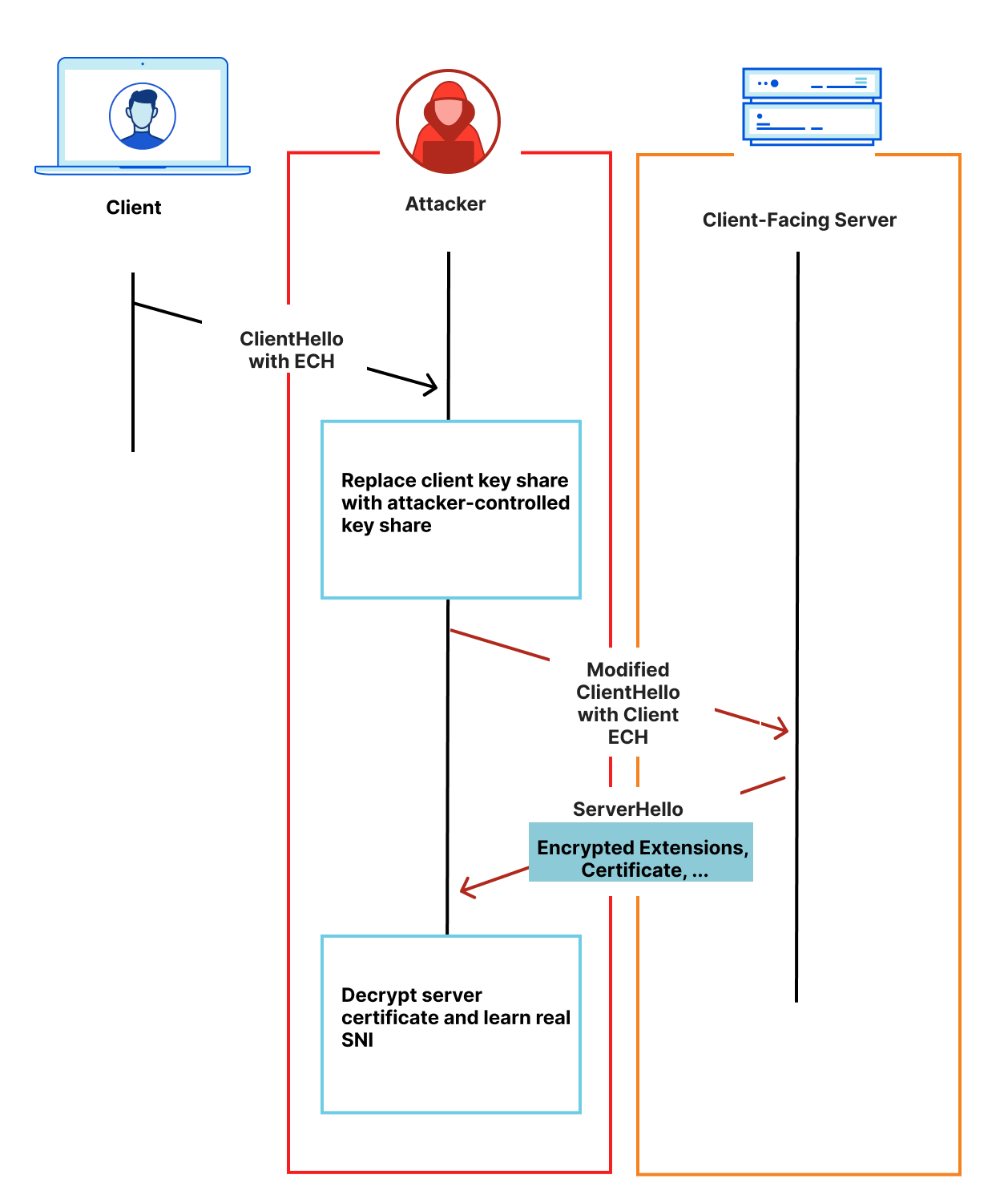

The threat model for ECH is somewhat stronger than that considered in previous work. Not only should it be hard to derive the session key, it should also be hard for the attacker to determine the identity of the server from a known anonymity set. That is, ideally, the attacker should have no more advantage in identifying the server than if it simply guessed from the set of servers in the anonymity set. And recall that the attacker is free to read, write, and modify any packet as part of the TLS connection. This means, for example, that an attacker can replay a ClientHello and observe the server’s response. It can also extract pieces of the ClientHello (including the ECH extension) and use them in its own modified ClientHello.

The design of ECH makes this sort of attack virtually impossible by ensuring that the server certificate can only be decrypted by the client or the client-facing server.

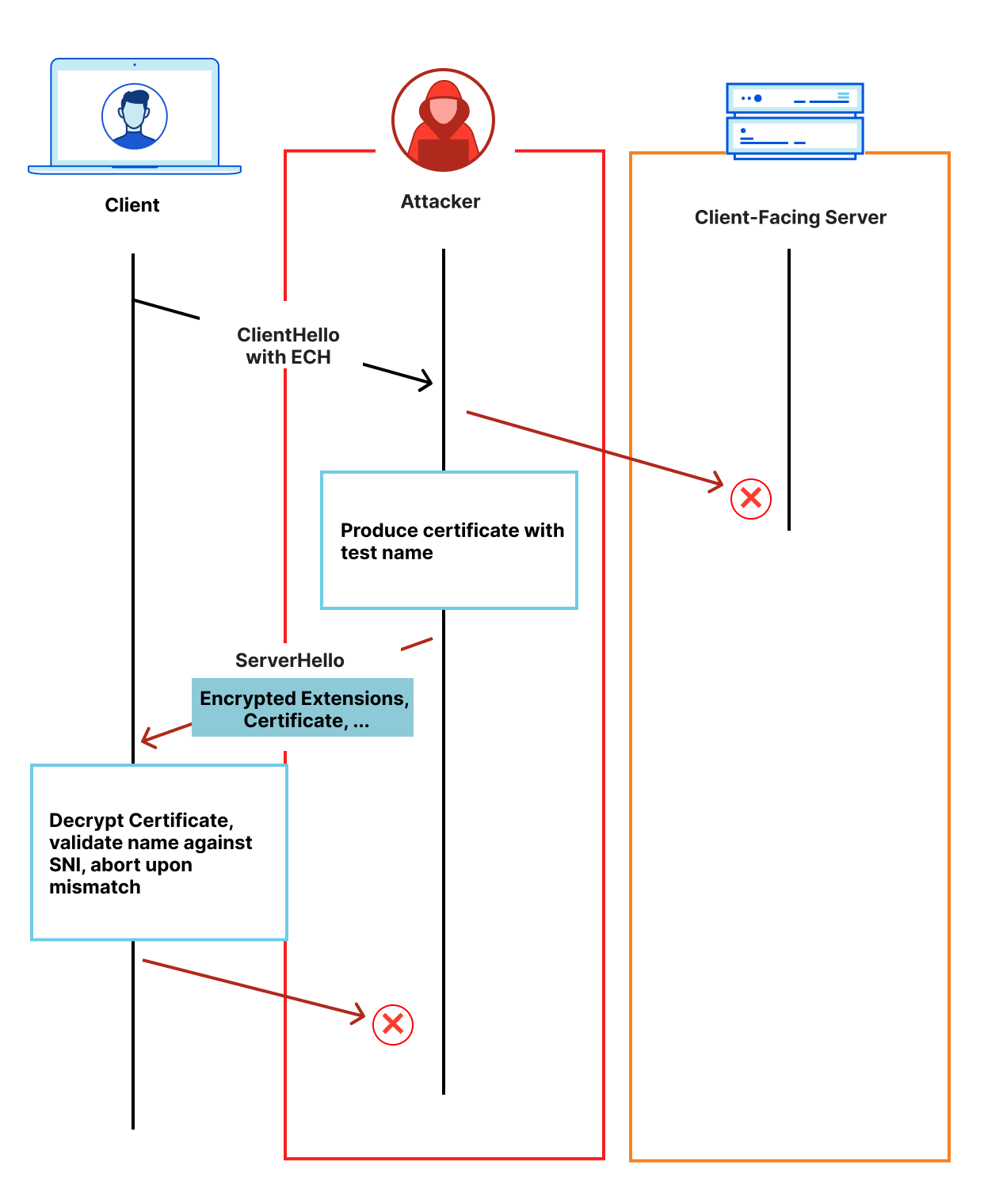

Something else an attacker might try is to masquerade as the server and actively interfere with the client to observe its behavior. If the client reacted differently based on whether the server-provided certificate was correct, this would allow the attacker to test whether a given connection using ECH was for a particular name.

ECH also defends against this attack by ensuring that an attacker without access to the private ECH key material cannot actively inject anything into the connection.

The attacker can also be entirely passive and try to infer encrypted information from other visible metadata, such as packet sizes and timing. (Indeed, traffic analysis is an open problem for ECH and in general for TLS and related protocols.) Passive attackers simply sit and listen to TLS connections, and use what they see and, importantly, what they know to make determinations about the connection contents. For example, if a passive attacker knows that the name of the client-facing server is crypto.cloudflare.com, and it sees a ClientHello with ECH to crypto.cloudflare.com, it can conclude, with reasonable certainty, that the connection is to some domain in the anonymity set of crypto.cloudflare.com.

The number of potential attack vectors is astonishing, and something that the TLS working group has tripped over in prior iterations of the ECH design. Before any sort of real-world deployment and experimentation, we needed confidence in the design of this protocol. To that end, we are working closely with external researchers on a formal analysis of the ECH design which captures the following security goals:

- Use of ECH does not weaken the security properties of TLS without ECH.

- TLS connection establishment to a host in the client-facing server’s anonymity set is indistinguishable from a connection to any other host in that anonymity set.

We’ll write more about the model and analysis when they’re ready. Stay tuned!

There are plenty of other subtle security properties we desire for ECH, and some of these drill right into the most important question for a privacy-enhancing technology: Is this deployable?

Focusing on deployability

With confidence in the security and privacy properties of the protocol, we then turned our attention towards deployability. In the past, significant protocol changes to fundamental Internet protocols such as TCP or TLS have been complicated by some form of benign interference. Network software, like any software, is prone to bugs, and sometimes these bugs manifest in ways that we only detect when there’s a change elsewhere in the protocol. For example, TLS 1.3 unveiled middlebox ossification bugs that ultimately led to the middlebox compatibility mode for TLS 1.3.

While ECH is itself just an extension, the risk of it exposing (or introducing!) similar bugs is real. To combat this problem, ECH supports a variant of GREASE whose goal is to ensure that all ECH-capable clients produce syntactically equivalent ClientHello messages. In particular, if a client supports ECH but does not have the corresponding ECH configuration, it uses GREASE. Otherwise, it produces a ClientHello with real ECH support. In both cases, the syntax of the ClientHello messages is equivalent.
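As a rough illustration of "syntactically equivalent", the sketch below serializes an outer ECH extension body in two ways: once with random filler (GREASE) and once with stand-in bytes where real HPKE output would go. The field layout (type, KDF and AEAD identifiers, config ID, `enc`, `payload`) reflects our reading of the ECH draft, and the lengths, helper names, and placeholder values are assumptions chosen for the example. No actual encryption is performed.

```python
import os
import struct

ECH_TYPE_OUTER = 0  # marks the extension as belonging to the outer ClientHello


def encode_ech_outer(kdf_id: int, aead_id: int, config_id: int,
                     enc: bytes, payload: bytes) -> bytes:
    """Serialize the outer ECH extension body with length-prefixed fields."""
    return (
        struct.pack("!BHHB", ECH_TYPE_OUTER, kdf_id, aead_id, config_id)
        + struct.pack("!H", len(enc)) + enc
        + struct.pack("!H", len(payload)) + payload
    )


def grease_ech(enc_len: int = 32, payload_len: int = 208) -> bytes:
    """No ECH configuration available: fill the fields with random bytes."""
    return encode_ech_outer(
        0x0001, 0x0001,             # plausible KDF / AEAD identifiers
        os.urandom(1)[0],           # random config ID
        os.urandom(enc_len),        # random bytes shaped like an HPKE encapsulated key
        os.urandom(payload_len),    # random bytes shaped like a ciphertext
    )


def field_lengths(ext: bytes) -> tuple:
    """Parse an extension body and return (type, enc length, payload length)."""
    ext_type, _kdf, _aead, _config_id, enc_len = struct.unpack_from("!BHHBH", ext, 0)
    (payload_len,) = struct.unpack_from("!H", ext, 8 + enc_len)
    return ext_type, enc_len, payload_len


# Placeholder bytes stand in for real HPKE output; a real client would encrypt
# the inner ClientHello under the public key learned from DNS.
real = encode_ech_outer(0x0001, 0x0001, 0xD5, b"\x00" * 32, b"\x00" * 208)
fake = grease_ech()
```

Both values parse to the same shape under `field_lengths`, which is the property a middlebox that only inspects syntax would observe. Only the contents differ.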

GREASE should help avoid network bugs that would otherwise trigger on real or fake ECH. Or, in other words, it helps ensure that all ECH-capable client connections are treated similarly in the presence of benign network bugs or otherwise passive attackers. Interestingly, active attackers can easily distinguish, with some probability, between real and fake ECH. Using GREASE, the ClientHello carries an ECH extension, though its contents are effectively randomized, whereas a real ClientHello using ECH has information that will match what is contained in DNS. This means an active attacker can simply compare the ClientHello against what’s in the DNS. Indeed, anyone can query DNS and use it to determine if a ClientHello is real or fake:

$ dig +short crypto.cloudflare.com TYPE65

\# 134 0001000001000302683200040008A29F874FA29F884F000500480046 FE0D0042D500200020E3541EC94A36DCBF823454BA591D815C240815 77FD00CAC9DC16C884DF80565F0004000100010013636C6F7564666C 6172652D65736E692E636F6D00000006002026064700000700000000 0000A29F874F260647000007000000000000A29F884F

Despite this obvious distinguisher, the end result isn’t that interesting. If a server is capable of ECH and a client is capable of ECH, then the connection most likely used ECH, and whether clients and servers are capable of ECH is assumed public information. Thus, GREASE is primarily intended to ease deployment against benign network bugs and otherwise passive attackers.
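To see what the DNS record actually exposes, the RDATA returned by the dig query above can be decoded by hand. The sketch below is a minimal parser for that one record. The parameter key numbers and the ECH configuration layout follow our understanding of the HTTPS record and ECH (version 0xfe0d) specifications rather than anything stated in this post, and the function names are hypothetical.

```python
import ipaddress
import struct

# RDATA copied from the dig output above (fromhex ignores whitespace).
RDATA_HEX = """
0001000001000302683200040008A29F874FA29F884F000500480046
FE0D0042D500200020E3541EC94A36DCBF823454BA591D815C240815
77FD00CAC9DC16C884DF80565F0004000100010013636C6F7564666C
6172652D65736E692E636F6D00000006002026064700000700000000
0000A29F874F260647000007000000000000A29F884F
"""

SVC_PARAM_NAMES = {1: "alpn", 4: "ipv4hint", 5: "ech", 6: "ipv6hint"}


def parse_https_rdata(rdata: bytes) -> dict:
    """Split HTTPS record RDATA into priority, target name, and parameters."""
    (priority,) = struct.unpack_from("!H", rdata, 0)
    pos, labels = 2, []
    while rdata[pos] != 0:  # target name: length-prefixed labels
        length = rdata[pos]
        labels.append(rdata[pos + 1:pos + 1 + length].decode("ascii"))
        pos += 1 + length
    pos += 1  # skip the terminating zero-length label
    params = {}
    while pos < len(rdata):  # each parameter: 2-byte key, 2-byte length, value
        key, length = struct.unpack_from("!HH", rdata, pos)
        params[SVC_PARAM_NAMES.get(key, f"key{key}")] = rdata[pos + 4:pos + 4 + length]
        pos += 4 + length
    return {"priority": priority, "target": ".".join(labels) or ".", "params": params}


def parse_ech_config_list(blob: bytes) -> list:
    """Decode the ECH configurations carried in the `ech` parameter."""
    (total,) = struct.unpack_from("!H", blob, 0)
    pos, end, configs = 2, 2 + total, []
    while pos < end:
        version, length = struct.unpack_from("!HH", blob, pos)
        body = blob[pos + 4:pos + 4 + length]
        pos += 4 + length
        if version != 0xFE0D:
            continue  # skip versions this sketch does not understand
        kem_id, key_len = struct.unpack_from("!HH", body, 1)
        off = 5 + key_len
        (suites_len,) = struct.unpack_from("!H", body, off)
        off += 2 + suites_len
        name_len = body[off + 1]  # body[off] is the maximum name length
        configs.append({
            "config_id": body[0],
            "kem_id": kem_id,
            "public_key": body[5:5 + key_len],
            "public_name": body[off + 2:off + 2 + name_len].decode("ascii"),
        })
    return configs


record = parse_https_rdata(bytes.fromhex(RDATA_HEX))
ech = parse_ech_config_list(record["params"]["ech"])[0]
v4 = record["params"]["ipv4hint"]
hints = [str(ipaddress.IPv4Address(v4[i:i + 4])) for i in (0, 4)]
```

For this record, `ech["public_name"]` decodes to `cloudflare-esni.com` and `ech["config_id"]` to `0xD5`. Values like these are public, which is why anyone holding the record can check whether the ECH extension in a given ClientHello is consistent with it.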

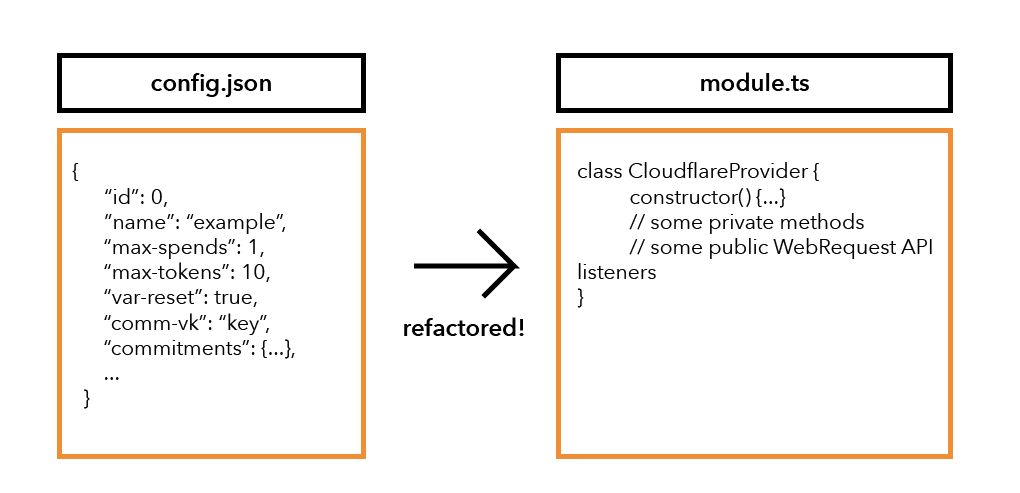

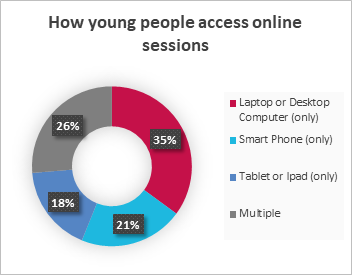

Note, importantly, that GREASE (or fake) ECH ClientHello messages are semantically different from real ECH ClientHello messages. This presents a real problem for networks, such as enterprise or school environments, that otherwise use plaintext TLS information to implement features like filtering or parental controls. (Encrypted DNS protocols like DoH encountered similar obstacles in their deployment.) Fundamentally, this problem reduces to the following: How can networks securely disable features like DoH and ECH? Fortunately, there are a number of approaches that might work, with the most promising one centered around DNS discovery. In particular, if clients could securely discover encrypted recursive resolvers that can perform filtering in lieu of it being done at the TLS layer, then TLS-layer filtering might be wholly unnecessary. (Other approaches, such as the use of canary domains to give networks an opportunity to signal that certain features are not permitted, may work, though it’s not clear if these could or would be abused to disable ECH.)

We are eager to collaborate with browser vendors, network operators, and other stakeholders to find a feasible deployment model that works well for users without ultimately stifling connection privacy for everyone else.

Next steps

ECH is rolling out for some FREE zones on our network in select geographic regions. We will continue to expand the set of zones and regions that support ECH slowly, monitoring for failures in the process. Ultimately, the goal is to work with the rest of the TLS working group and IETF towards updating the specification based on this experiment in hopes of making it safe, secure, usable, and, ultimately, deployable for the Internet.

ECH is one part of the connection privacy story. As with a leaky boat, it’s important to look for and plug all the gaps before taking on lots of passengers! Cloudflare Research is committed to these narrow technical problems and their long-term solutions. Stay tuned for more updates on this and related protocols.
