Post Syndicated from original https://lwn.net/Articles/898925/

Security updates have been issued by Fedora (ntfs-3g and ntfs-3g-system-compression), SUSE (389-ds, chafa, containerd, mariadb, php74, python3, salt, and xen), and Ubuntu (apache2).

Post Syndicated from Corey Mahan original https://blog.cloudflare.com/announcing-gateway-and-casb/

Shadow IT and managing access to sanctioned or unsanctioned SaaS applications remain among the biggest pain points for IT administrators in the era of the cloud.

We’re excited to announce that starting today, Cloudflare’s Secure Web Gateway and our new API-driven Cloud Access Security Broker (CASB) work seamlessly together to help IT and security teams go from finding Shadow IT to fixing it in minutes.

Cloudflare’s API-driven CASB starts by providing comprehensive visibility into SaaS applications, so you can easily prevent data leaks and compliance violations. Setup takes just a few clicks to integrate with your organization’s SaaS services, like Google Workspace and Microsoft 365. From there, IT and security teams can see what applications and services their users are logging into and how company data is being shared.

So you’ve found the issues. But what happens next?

Customer feedback from the API-driven CASB beta has followed a similar theme: it was super easy to set up and detect all my security issues, but how do I fix this stuff?

Almost immediately after investigating the most critical issues, it makes sense to want to start taking action. Whether it be detecting an unknown application being used for Shadow IT or wanting to limit functionality, access, or behaviors for a known but unapproved application, remediation is front of mind.

This left customers feeling like they had a bunch of useful data in front of them, but no clear action to take to start fixing the issues it revealed.

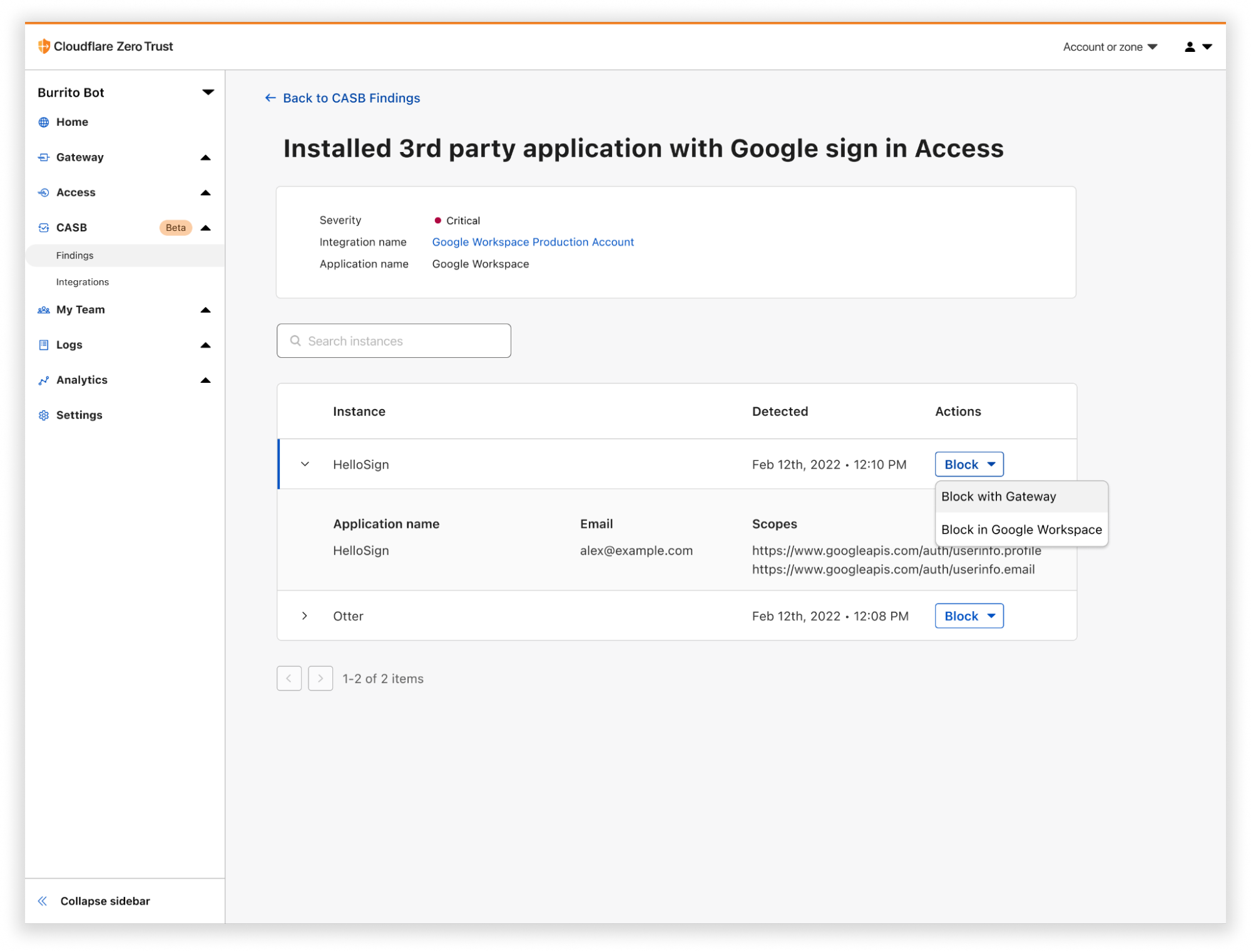

To solve this problem, we’re allowing you to easily create Gateway policies from CASB security findings. Security findings are issues detected within SaaS applications that involve users, data at rest, and settings; each finding is assigned a Low, Medium, High, or Critical severity per integration.

Using the security findings from CASB allows for fine-grained Gateway policies which prevent future unwanted behavior while still allowing usage that aligns to company security policy. This means going from viewing a CASB security issue, like the use of an unapproved SaaS application, to preventing or controlling access in minutes. This seamless cross-product experience all happens from a single, unified platform.

For example, take the CASB Google Workspace security finding around third-party apps which detects sign-ins or other permission sharing from a user’s account. In just a few clicks, you can create a Gateway policy to block some or all of the activity, like uploads or downloads, to the detected SaaS application. This policy can be applied to some or all users, based on what access has been granted to the user’s account.

By surfacing the exact behavior with CASB, you can take swift and targeted action to better protect your organization with Gateway.

This post highlights one of the many ways the Cloudflare One suite of solutions works seamlessly together as a unified platform to find and fix security issues across SaaS applications.

Get started now with Cloudflare’s Secure Web Gateway by signing up here. Cloudflare’s API-driven CASB is in closed beta with new customers being onboarded each week. You can request access here to try out this exciting new cross-product feature.

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=x_tT8li3fgw

Post Syndicated from Janne Pikkarainen original https://blog.zabbix.com/whats-up-home-welcome-to-my-zabbixverse/21353/

By day, I am a monitoring technical lead in a global cyber security company. By night, I monitor my home with Zabbix and Grafana in very creative ways. But what has Zabbix to do with Blender 3D software or virtual reality? Read on.

Full-stack monitoring is an old concept — in the IT world, it means your service is monitored all the way from physical level (data center environmental status like temperature or smoke detection, power, network connectivity, hardware status…) to operating system status, to your application status, enriched with all kinds of data such as application logs or end-to-end testing performance. Zabbix has very mature support for that, but how about… full house monitoring in 3D and, possibly, in virtual reality?

The catacombs of my heart do have a place for 3D modeling. I am not a talented 3D artist, not by a long shot, but I have flirted with 3D apps since the Amiga 500 and its Real 3D 1.4, then later, on an Amiga 1200, with a legally purchased Tornado 3D and a not so legally downloaded Lightwave. On Linux, so after 1999 for me, I used POV-Ray about 20 years ago, and since Blender went open source a long time ago, I have tried it out every now and then.

So, in theory, I can do 3D. In practice, it’s the “Hmm, I wonder what happens if I press this button” approach I use.

Okay. There are several reasons why I am doing this whole home monitoring thing.

For traditional IT monitoring, a 2D interface and 2D alerts are OK, maybe apart from physical rack location visualization, where it definitely helps if a sysadmin can locate a malfunctioning server easily from a picture.

For Real World monitoring, it is a different story. I’m sure an electrician would appreciate it if the alert contained pictures or animations visualizing the exact location of whatever was broken. The same goes for plumbers, guards, or whoever needs to fix something in a huge building, fast.

Now that you know my motivation, let’s finally get started!

In my case, leaping Zabbix from 2D to 3D meant just a bunch of easy steps:

Sweet Home 3D is a relatively easy-to-use home modeling application. It’s free, and already contains a generous bunch of furniture, and with a small sum, you’ll get access to many, many more items.

After a few moments, I had my home modeled in Sweet Home 3D.

Next, I exported the file to .obj format, recognized by Blender.

In Blender, I created a new scene, removed the meme-worthy default cube, and imported the Sweet Home 3D model to Blender.

Oh wow, it worked! Next, I needed to label the interesting items, such as our living room TV, to match the names in Zabbix.

Yes, it does. Here is my first “let’s throw in some random objects into a Blender scene and try to manipulate it from Zabbix” attempt before any Sweet Home 3D business.

Fancy? No. Meaningful? Yes. There’s a lot going on in here.

My Zabbix is now consulting Blender for every trigger of severity Average or higher, and I can also run the rendering manually any time I want.
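How Zabbix hands its alerts over to Blender is not shown in this post, so the following is only a minimal sketch of the filtering step, under stated assumptions: the shape of the problem records and the host-to-object mapping are hypothetical, and only the numeric severity scale (3 is Average) comes from Zabbix itself.

```python
# Zabbix encodes trigger severity as a number; 3 means "Average".
AVERAGE = 3

# Hypothetical mapping from Zabbix host names to Blender object names.
HOST_TO_OBJECT = {
    "livingroom-tv": "LivingRoomTV",
    "kitchen-fridge": "Fridge",
}


def objects_to_highlight(problems, host_to_object=HOST_TO_OBJECT):
    """Return the Blender object names for problems at Average or above."""
    names = []
    for problem in problems:
        # Severities often arrive as strings, so normalize to int first.
        if int(problem["severity"]) < AVERAGE:
            continue
        name = host_to_object.get(problem["host"])
        if name is not None and name not in names:
            names.append(name)
    return names


# Hypothetical problem records; real ones would come from Zabbix.
problems = [
    {"host": "livingroom-tv", "severity": "4"},
    {"host": "kitchen-fridge", "severity": "2"},
]
print(objects_to_highlight(problems))  # ['LivingRoomTV']
```

Inside Blender, a script could then look up each returned name in bpy.data.objects and change that object's material before rendering. That part is left out here because it only runs inside Blender.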

First, here’s the manual refresh.

Next, here is the trigger:

Here is a static PNG image rendered by Blender’s Eevee rendering engine. Like gaming engines, Eevee cuts some corners when it comes to accuracy, but with a powerful GPU it can do wonders in real-time or at least in near-real-time.

The “I am not a 3D artist” part will now hit you hard. Cover your eyes, this will hurt. Here’s the Eevee rendering result.

That green color? No, our home is not like that. I just tried to make this thing look more futuristic, perhaps Matrix-like… but now it looks like… well… like I would have used a 3D program. The red Rudolph the Rednose Reindeer nose-like thing? I imagined it would be a neatly glowing red sphere along with the TV glowing, indicating an alert with our TV. Fail for the visual part, but at least the alert logic works! And don’t ask why the TV looks so strange.

But you get the point. Imagine if a warehouse, factory, or any other monitoring center saw something like this in its alerts. No more cryptic “Power socket S1F1A255DU not working” alerts; instead, the alert would pinpoint the problem visually.

Mark Zuckerberg, be very afraid with your Metaverse, as Zabbixverse will rule the world. Among many other formats, Blender can export its scenes to X3D format. It’s one of the virtual world formats our web browsers support, and dead simple to embed inside Zabbix/Grafana. Blender would support WebGL, too, but getting X3D to run only needed the <x3d> tag, so for my experiment, it was super easy.
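The exact markup used in this experiment is not shown, so here is a minimal sketch of what such an embed could look like. It assumes the X3DOM JavaScript library is what makes the browser understand the <x3d> tag, and the file name home.x3d and the helper name x3d_embed are hypothetical.

```python
from html import escape


def x3d_embed(scene_url, width="800px", height="600px"):
    """Build an HTML fragment that shows an exported X3D scene via X3DOM."""
    url = escape(scene_url, quote=True)
    return (
        # X3DOM (assumed here) provides the <x3d> element in the browser.
        '<script src="https://www.x3dom.org/download/x3dom.js"></script>\n'
        '<link rel="stylesheet" '
        'href="https://www.x3dom.org/download/x3dom.css">\n'
        f'<x3d width="{width}" height="{height}">\n'
        # <inline> loads the scene file exported from Blender.
        f'  <scene><inline url="{url}"></inline></scene>\n'
        '</x3d>'
    )


print(x3d_embed("home.x3d"))
```

The resulting fragment could be pasted into any widget or panel that accepts raw HTML.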

The video looks crappy because I have not done any texture/light work yet, but the concept works! In the video, it is me controlling the movement.

In my understanding, X3D/WebGL supports VR headsets, too, so in theory you could be observing the status of whatever physical facility you monitor through your VR headset.

Of course, this works in Grafana, too.

It’s free! I mean, Zabbix is free, Python is free, and Blender is free and open source. If you have some 3D blueprints of your facility in a format Blender can support (it supports plenty), you’re all set! Have an engineer or two or ten do the 3D scene labeling work, and pretty soon you will be doing your monitoring in a 3D world.

The new/resolved alerts are not updated to the scene in real-time. For PNG files that does not matter much, as those are static and Zabbix can update those as often as needed, but for the interactive X3D files it’s a shame that for now the scene will only be updated whenever you refresh the page, or Zabbix does it for you. I need to learn if I can update X3D properties in real-time instead of a forced page load.

Next week I will show you how I monitor a Philips OneBlade shaver for its estimated runtime left. The device does not have any IoT functionality, so how do I monitor it? Tune in to this blog next week at the same Zabbix time.

I have been working at Forcepoint since 2014 and never get bored of inventing new ways to visualize data.

The post What’s Up, Home? Welcome to my Zabbixverse appeared first on Zabbix Blog.

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/06/on-the-dangers-of-cryptocurrencies-and-the-uselessness-of-blockchain.html

Earlier this month, I and others wrote a letter to Congress, basically saying that cryptocurrencies are a complete and total disaster, and urging them to regulate the space. Nothing in that letter is out of the ordinary, and is in line with what I wrote about blockchain in 2019. In response, Matthew Green has written, not really a rebuttal, but “a general response to some of the more common spurious objections…people make to public blockchain systems.” In it, he makes several broad points:

There’s nothing on that list that I disagree with. (We can argue about whether proof-of-stake is actually an improvement. I am skeptical of systems that enshrine a “they who have the gold make the rules” system of governance. And to the extent any of those scaling solutions work, they undo the decentralization blockchain claims to have.) But I also think that these defenses largely miss the point. To me, the problem isn’t that blockchain systems can be made slightly less awful than they are today. The problem is that they don’t do anything their proponents claim they do. In some very important ways, they’re not secure. They don’t replace trust with code; in fact, in many ways they are far less trustworthy than non-blockchain systems. They’re not decentralized, and their inevitable centralization is harmful because it’s largely emergent and ill-defined. They still have trusted intermediaries, often with more power and less oversight than non-blockchain systems. They still require governance. They still require regulation. (These things are what I wrote about here.) The problem with blockchain is that it’s not an improvement to any system—and often makes things worse.

In our letter, we write: “By its very design, blockchain technology is poorly suited for just about every purpose currently touted as a present or potential source of public benefit. From its inception, this technology has been a solution in search of a problem and has now latched onto concepts such as financial inclusion and data transparency to justify its existence, despite far better solutions to these issues already in use. Despite more than thirteen years of development, it has severe limitations and design flaws that preclude almost all applications that deal with public customer data and regulated financial transactions and are not an improvement on existing non-blockchain solutions.”

Green responds: “‘Public blockchain’ technology enables many stupid things: today’s cryptocurrency schemes can be venal, corrupt, overpromised. But the core technology is absolutely not useless. In fact, I think there are some pretty exciting things happening in the field, even if most of them are further away from reality than their boosters would admit.” I have yet to see one. More specifically, I can’t find a blockchain application whose value has anything to do with the blockchain part, that wouldn’t be made safer, more secure, more reliable, and just plain better by removing the blockchain part. I postulate that no one has ever said “Here is a problem that I have. Oh look, blockchain is a good solution.” In every case, the order has been: “I have a blockchain. Oh look, there is a problem I can apply it to.” And in no cases does it actually help.

Someone, please show me an application where blockchain is essential. That is, a problem that could not have been solved without blockchain that can now be solved with it. (And “ransomware couldn’t exist because criminals are blocked from using the conventional financial networks, and cash payments aren’t feasible” does not count.)

For example, Green complains that “credit card merchant fees are similar, or have actually risen in the United States since the 1990s.” This is true, but has little to do with technological inefficiencies or existing trust relationships in the industry. It’s because pretty much everyone who can and is paying attention gets 1% back on their purchases: in cash, frequent flier miles, or other affinity points. Green is right about how unfair this is. It’s a regressive subsidy, “since these fees are baked into the cost of most retail goods and thus fall heavily on the working poor (who pay them even if they use cash).” But that has nothing to do with the lack of blockchain, and solving it isn’t helped by adding a blockchain. It’s a regulatory problem; with a few exceptions, credit card companies have successfully pressured merchants into charging the same prices, whether someone pays in cash or with a credit card. Peer-to-peer payment systems like PayPal, Venmo, MPesa, and AliPay all get around those high transaction fees, and none of them use blockchain.

This is my basic argument: blockchain does nothing to solve any existing problem with financial (or other) systems. Those problems are inherently economic and political, and have nothing to do with technology. And, more importantly, technology can’t solve economic and political problems. Which is good, because adding blockchain causes a whole slew of new problems and makes all of these systems much, much worse.

Green writes: “I have no problem with the idea of legislators (intelligently) passing laws to regulate cryptocurrency. Indeed, given the level of insanity and the number of outright scams that are happening in this area, it’s pretty obvious that our current regulatory framework is not up to the task.” But when you remove the insanity and the scams, what’s left?

EDITED TO ADD: Nicholas Weaver is also adamant about this. David Rosenthal is good, too.

EDITED TO ADD (7/8/2022): This post has been translated into German.

Post Syndicated from original https://xkcd.com/2637/

Post Syndicated from BeardedTinker original https://www.youtube.com/watch?v=ge7wrFkt6eY

Post Syndicated from Jake Baines original https://blog.rapid7.com/2022/06/23/cve-2022-31749-watchguard-authenticated-arbitrary-file-read-write-fixed/

A remote and low-privileged WatchGuard Firebox or XTM user can read arbitrary system files when using the SSH interface due to an argument injection vulnerability affecting the diagnose command. Additionally, a remote and highly privileged user can write arbitrary system files when using the SSH interface due to an argument injection affecting the import pac command. Rapid7 reported these issues to WatchGuard, and the vulnerabilities were assigned CVE-2022-31749. On June 23, WatchGuard published an advisory and released patches in Fireware OS 12.8.1, 12.5.10, and 12.1.4.

WatchGuard Firebox and XTM appliances are firewall and VPN solutions that range in form factor from tabletop and rack-mounted to virtualized and “rugged” ICS designs. The appliances share a common underlying operating system named Fireware OS.

At the time of writing, there are more than 25,000 WatchGuard appliances with their HTTP interface discoverable on Shodan. There are more than 9,000 WatchGuard appliances exposing their SSH interface.

In February 2022, GreyNoise and CISA published details of WatchGuard appliances vulnerable to CVE-2022-26318 being exploited in the wild. Rapid7 discovered CVE-2022-31749 while analyzing the WatchGuard XTM appliance for the writeup of CVE-2022-26318 on AttackerKB.

This issue was discovered by Jake Baines of Rapid7, and it is being disclosed in accordance with Rapid7’s vulnerability disclosure policy.

CVE-2022-31749 is an argument injection into the ftpput and ftpget commands. The arguments are injected when the SSH CLI prompts the attacker for a username and password when using the diagnose or import pac commands. For example:

    WG>diagnose to ftp://test/test
    Name: username
    Password:

The “Name” and “Password” values are not sanitized before they are combined into the “ftpput” and “ftpget” commands and executed via librmisvc.so. Execution occurs using execle, so command injection isn’t possible, but argument injection is. Using this injection, an attacker can upload and download arbitrary files.
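To make the distinction concrete, here is a minimal, generic sketch of argument injection when no shell is involved. How Fireware actually assembles the command is not described here, so the string-splitting step below is an assumption for illustration only. The -u and -p flags follow BusyBox’s ftpput, and the function names, host, and paths are hypothetical.

```python
def naive_argv(host, remote, local, user, password):
    """Vulnerable pattern: format one string, then split it into arguments."""
    command = f"ftpput -u {user} -p {password} {host} {remote} {local}"
    # Whitespace inside `user` or `password` creates extra arguments here.
    return command.split()


def safe_argv(host, remote, local, user, password):
    """Safer pattern: each untrusted value stays exactly one argument."""
    return ["ftpput", "-u", user, "-p", password, host, remote, local]


# A "username" that smuggles in an extra flag (a harmless -v in this sketch).
user = "status -v"
args = ("192.0.2.10", "upload.bin", "/tmp/example", user, "secret")

print(naive_argv(*args))
# ['ftpput', '-u', 'status', '-v', '-p', 'secret', '192.0.2.10', 'upload.bin', '/tmp/example']
print(safe_argv(*args))
# ['ftpput', '-u', 'status -v', '-p', 'secret', '192.0.2.10', 'upload.bin', '/tmp/example']
```

Because an exec-style call passes this list straight to the program, shell metacharacters such as ; or | are never interpreted, which is why command injection is ruled out. An extra flag in the list, however, is honored by the program like any other argument.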

File writing turns out to be less useful than an attacker would hope. The problem, from an attacker’s point of view, is that WatchGuard has locked down much of the file system, and the user can only modify a few directories: /tmp/, /pending/, and /var/run. At the time of writing, we don’t see a way to escalate the file write into code execution, though we wouldn’t rule it out as a possibility.

The low-privileged user file read is interesting because WatchGuard has a built-in low-privileged user named status. This user is intended to have “read-only” access to the system. In fact, historically speaking, the default password for this user was “readonly”. Using CVE-2022-31749, this low-privileged user can exfiltrate the configd-hash.xml file, which contains user password hashes when Firebox-DB is in use. Example:

    <?xml version="1.0"?>
    <users>
      <version>3</version>
      <user name="admin">
        <enabled>1</enabled>
        <hash>628427e87df42adc7e75d2dd5c14b170</hash>
        <domain>Firebox-DB</domain>
        <role>Device Administrator</role>
      </user>
      <user name="status">
        <enabled>1</enabled>
        <hash>dddbcb37e837fea2d4c321ca8105ec48</hash>
        <domain>Firebox-DB</domain>
        <role>Device Monitor</role>
      </user>
      <user name="wg-support">
        <enabled>0</enabled>
        <hash>dddbcb37e837fea2d4c321ca8105ec48</hash>
        <domain>Firebox-DB</domain>
        <role>Device Monitor</role>
      </user>
    </users>

The hashes are just unsalted MD4 hashes. @funoverip wrote about cracking these weak hashes using Hashcat all the way back in 2013.

Rapid7 has published a proof of concept that exfiltrates the configd-hash.xml file via the diagnose command. The following video demonstrates its use against WatchGuard XTMv 12.1.3 Update 8.

Apply the WatchGuard Fireware updates. If possible, remove internet access to the appliance’s SSH interface. Out of an abundance of caution, changing passwords after updating is a good idea.

WatchGuard thanks Rapid7 for their quick vulnerability report and willingness to work through a responsible disclosure process to protect our customers. We always appreciate working with external researchers to identify and resolve vulnerabilities in our products and we take all reports seriously. We have issued a resolution for this vulnerability, as well as several internally discovered issues, and advise our customers to upgrade their Firebox and XTM products as quickly as possible. Additionally, we recommend all administrators follow our published best practices for secure remote management access to their Firebox and XTM devices.

March 2022: Discovered by Jake Baines of Rapid7

Mar 29, 2022: Reported to WatchGuard via support phone, issue assigned case number 01676704.

Mar 29, 2022: WatchGuard acknowledged follow-up email.

April 20, 2022: Rapid7 followed up, asking for progress.

April 21, 2022: WatchGuard acknowledged again they were researching the issue.

May 26, 2022: Rapid7 checked in on status of the issue.

May 26, 2022: WatchGuard indicates patches should be released in June, and asks about CVE assignment.

May 26, 2022: Rapid7 assigns CVE-2022-31749

June 23, 2022: This disclosure

Post Syndicated from James Beswick original https://aws.amazon.com/blogs/compute/sending-amazon-eventbridge-events-to-private-endpoints-in-a-vpc/

This post is written by Emily Shea, Senior GTM Specialist, Event-Driven Architectures.

Building with events can help you accelerate feature velocity and build scalable, fault tolerant applications. You can achieve loose coupling in your application using asynchronous communication via events. Loose coupling allows each development team to build and deploy independently and each component to scale and fail without impacting the others. This approach is referred to as event-driven architecture.

Amazon EventBridge helps you build event-driven architectures. You can publish events to the EventBridge event bus and EventBridge routes those events to targets. You can write rules to filter events and only send them to the interested targets. For example, an order fulfillment service may only be interested in events of type ‘new order created.’
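As a rough illustration of rule filtering, the sketch below pairs an event pattern with a deliberately simplified matcher. The source name orders.service and the function name matches are hypothetical, and real EventBridge patterns support many more operators than the exact-value matching shown here.

```python
# An event pattern lists the accepted values for each field of interest.
ORDER_PATTERN = {
    "source": ["orders.service"],          # hypothetical producer name
    "detail-type": ["new order created"],
}


def matches(pattern, event):
    """Simplified matcher: exact values only, nested dicts are recursed."""
    for key, allowed in pattern.items():
        value = event.get(key)
        if isinstance(allowed, dict):
            # Nested pattern, for example under "detail".
            if not isinstance(value, dict) or not matches(allowed, value):
                return False
        elif value not in allowed:
            return False
    return True


new_order = {"source": "orders.service", "detail-type": "new order created"}
cancelled = {"source": "orders.service", "detail-type": "order cancelled"}
print(matches(ORDER_PATTERN, new_order))   # True
print(matches(ORDER_PATTERN, cancelled))   # False
```

An order fulfillment target attached to a rule with this pattern would only receive the first event.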

EventBridge is serverless, so there is no infrastructure to manage and the service scales automatically. EventBridge has native integrations with over 100 AWS services and over 40 SaaS providers.

Amazon EventBridge has a native integration with AWS Lambda, and many AWS customers use events to trigger Lambda functions to process events. You may also want to send events to workloads running on Amazon EC2 or containerized workloads deployed with Amazon ECS or Amazon EKS. These services are deployed into an Amazon Virtual Private Cloud, or VPC.

For some use cases, you may be able to expose public endpoints for your VPC. You can use EventBridge API destinations to send events to any public HTTP endpoint. API destinations include features like OAuth support and rate limiting to control the number of events you are sending per second.

However, some customers are not able to expose public endpoints for security or compliance purposes. This tutorial shows you how to send EventBridge events to a private endpoint in a VPC using a Lambda function to relay events. This solution deploys the Lambda function connected to the VPC and uses IAM permissions to enable EventBridge to invoke the Lambda function. Learn more about Lambda VPC connectivity here.

In this blog post, you learn how to send EventBridge events to a private endpoint in a VPC. You set up an example application with an EventBridge event bus, a Lambda function to relay events, a Flask application running in an EKS cluster to receive events behind an Application Load Balancer (ALB), and a secret stored in Secrets Manager for authenticating requests. This application uses EKS and Secrets Manager to demonstrate sending and authenticating requests to a containerized workload, but the same pattern applies for other container orchestration services like ECS and your preferred secret management solution.
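The relay function itself lives in the sample repository and is not reproduced in this post. As a minimal sketch of the pattern, assuming the ALB URL and the secret name arrive through hypothetical environment variables named URL and SECRET_ID and that the secret is sent in an Authorization header, the function could look roughly like this:

```python
import json
import os
import urllib.request


def build_request(event, url, secret):
    """Wrap the EventBridge event in an authenticated HTTP POST request."""
    body = json.dumps(event).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "Authorization": secret},
    )


def get_secret(secret_id):
    """Read the shared secret from Secrets Manager (needs a VPC endpoint)."""
    import boto3  # imported lazily; provided by the Lambda Python runtime

    client = boto3.client("secretsmanager")
    return client.get_secret_value(SecretId=secret_id)["SecretString"]


def lambda_handler(event, context):
    """Relay one event to the private endpoint behind the ALB."""
    request = build_request(
        event,
        os.environ["URL"],                     # hypothetical variable name
        get_secret(os.environ["SECRET_ID"]),   # hypothetical variable name
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return {"statusCode": response.status}
```

Keeping the request construction in its own function makes it possible to check the payload and headers without any network access.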

Continue reading for the full example application and walkthrough. If you have an existing application in a VPC, you can deploy just the event relay portion and input your VPC details as parameters.

To run the application, you must install the AWS CLI, Docker CLI, eksctl, kubectl, and AWS SAM CLI.

To clone the repository, run:

git clone https://github.com/aws-samples/eventbridge-events-to-vpc.git

cd example-vpc-application

eksctl create cluster --config-file eksctl_config.yaml

This takes a few minutes. This step creates an EKS cluster with one node group in us-east-1. The EKS cluster has a service account with IAM permissions to access the Secrets Manager secret you create later.

aws ecr create-repository --repository-name events-flask-app

Use the repository URI in the following commands to build, tag, and push the container image to ECR.

docker build --tag events-flask-app .

docker tag events-flask-app:latest {repository-uri}:1

docker push {repository-uri}:1

In the deployment manifest (/example-vpc-application/manifests/deployment.yaml), fill in your repository URI and container image version (for example, 123456789.dkr.ecr.us-east-1.amazonaws.com/events-flask-app:1).

kubectl apply --kustomize manifests/

kubectl get pod --namespace vpc-example-app

kubectl logs --namespace vpc-example-app {pod-name} --follow

You should see the Flask application outputting ‘Hello from my container!’ in response to GET request health checks.

Next, you retrieve the security group ID, private subnet IDs, and ALB DNS Name to deploy the Lambda function connected to the same VPC and private subnet and send events to the ALB.

You need a VPC endpoint for your application to call Secrets Manager.

Deploy the event relay application using the AWS Serverless Application Model (AWS SAM) CLI:

cd event-relay

sam build

sam deploy --guided

The guided deployment process prompts for input parameters. Enter ‘event-relay-app’ as the stack name and accept the default Region. For other parameters, submit the ALB and VPC details you copied: Url (ALB DNS name), security group ID, and private subnet IDs. For the Secret parameter, pass any value.

The AWS SAM template saves this value as a Secrets Manager secret to authenticate calls to the container application. This is an example of how to pass secrets in the event relay HTTP call. Replace this with your own authentication method in production environments.

Both the Flask application in a VPC and the event relay application are now deployed. To test the event relay application, keep the Kubernetes pod logs from a previous step open to monitor requests coming into the Flask application.

aws events put-events \
--entries '[{"EventBusName": "event-relay-bus" ,"Source": "eventProducerApp", "DetailType": "inbound-event-sent", "Detail": "{ \"event-id\": \"123\", \"return-response-event\": true }"}]'

When the event is relayed to the Flask application, a POST request in the Kubernetes pod logs confirms that the application processed the event.
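If you prefer to send the test event from code, the same entry can be built in Python, which avoids hand-escaping the JSON in the Detail field. This is a sketch rather than part of the sample application: the helper names build_entry and send_test_event are hypothetical, while the bus name, source, and detail type mirror the CLI command.

```python
import json


def build_entry(detail, bus="event-relay-bus", source="eventProducerApp",
                detail_type="inbound-event-sent"):
    """Build one PutEvents entry; Detail must be a JSON-encoded string."""
    return {
        "EventBusName": bus,
        "Source": source,
        "DetailType": detail_type,
        "Detail": json.dumps(detail),
    }


def send_test_event(detail):
    """Publish the entry with boto3 (requires AWS credentials and Region)."""
    import boto3  # imported lazily so build_entry works without it

    return boto3.client("events").put_events(Entries=[build_entry(detail)])


entry = build_entry({"event-id": "123", "return-response-event": True})
print(entry["Detail"])  # {"event-id": "123", "return-response-event": true}
```

Calling send_test_event with the same dictionary should produce the same POST request in the pod logs as the CLI command.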

In the VPC dashboard in the AWS Management Console, find the Endpoints tab and delete the Secrets Manager VPC endpoint. Next, run the following commands to delete the rest of the example application. Be sure to run the commands in this order as some of the resources have dependencies on one another.

sam delete --stack-name event-relay-app

kubectl --namespace vpc-example-app delete ingress vpc-example-app-ingress

From the example-vpc-application directory, run this command.

eksctl delete cluster --config-file eksctl_config.yaml

Event-driven architectures and EventBridge can help you accelerate feature velocity and build scalable, fault tolerant applications. This post demonstrates how to send EventBridge events to a private endpoint in a VPC using a Lambda function to relay events and emit response events.

To learn more, read Getting started with event-driven architectures and visit EventBridge tutorials on Serverless Land.

Post Syndicated from Sukhomoy Basak original https://aws.amazon.com/blogs/big-data/stream-change-data-to-amazon-kinesis-data-streams-with-aws-dms/

In this post, we discuss how to use AWS Database Migration Service (AWS DMS) native change data capture (CDC) capabilities to stream changes into Amazon Kinesis Data Streams.

AWS DMS is a cloud service that makes it easy to migrate relational databases, data warehouses, NoSQL databases, and other types of data stores. You can use AWS DMS to migrate your data into the AWS Cloud or between combinations of cloud and on-premises setups. AWS DMS also helps you replicate ongoing changes to keep sources and targets in sync.

CDC refers to the process of identifying and capturing changes made to data in a database and then delivering those changes in real time to a downstream system. Capturing every change from transactions in a source database and moving them to the target in real time keeps the systems synchronized, and helps with real-time analytics use cases and zero-downtime database migrations.

Kinesis Data Streams is a fully managed streaming data service. You can continuously add various types of data such as clickstreams, application logs, and social media to a Kinesis stream from hundreds of thousands of sources. Within seconds, the data will be available for your Kinesis applications to read and process from the stream.

AWS DMS can do both replication and migration. Kinesis Data Streams is most valuable in the replication use case because it lets you react to replicated data changes in other integrated AWS systems.

This post is an update to the post Use the AWS Database Migration Service to Stream Change Data to Amazon Kinesis Data Streams. This new post includes steps required to configure AWS DMS and Kinesis Data Streams for a CDC use case. With Kinesis Data Streams as a target for AWS DMS, we make it easier for you to stream, analyze, and store CDC data. AWS DMS uses best practices to automatically collect changes from a data store and stream them to Kinesis Data Streams.

With the addition of Kinesis Data Streams as a target, we’re helping you build data lakes and perform real-time processing on change data from your data stores. You can use AWS DMS in your data integration pipelines to replicate data in near-real time directly into Kinesis Data Streams. With this approach, you can build a decoupled and eventually consistent view of your database without having to build applications on top of a database, which is expensive. You can refer to the AWS whitepaper AWS Cloud Data Ingestion Patterns and Practices for more details on data ingestion patterns.

The following diagram illustrates that AWS DMS can use many of the most popular database engines as a source for data replication to a Kinesis Data Streams target. The database source can be a self-managed engine running on an Amazon Elastic Compute Cloud (Amazon EC2) instance or an on-premises database, or it can be on Amazon Relational Database Service (Amazon RDS), Amazon Aurora, or Amazon DocumentDB (with MongoDB compatibility).

Kinesis Data Streams can collect, process, and store data streams at any scale in real time and write to AWS Glue, which is a serverless data integration service that makes it easy to discover, prepare, and combine data for analytics, machine learning, and application development. You can use Amazon EMR for big data processing, Amazon Kinesis Data Analytics to process and analyze streaming data, Amazon Kinesis Data Firehose to run ETL (extract, transform, and load) jobs on streaming data, and AWS Lambda as a serverless compute for further processing, transformation, and delivery of data for consumption.

You can store the data in a data warehouse like Amazon Redshift, which is a cloud-scale data warehouse, and in an Amazon Simple Storage Service (Amazon S3) data lake for consumption. You can use Kinesis Data Firehose to capture the data streams and load the data into S3 buckets for further analytics.

Once the data is available in Kinesis Data Streams targets (as shown in the following diagram), you can visualize it using Amazon QuickSight; run ad hoc queries using Amazon Athena; access, process, and analyze it using an Amazon SageMaker notebook instance; and efficiently query and retrieve structured and semi-structured data from files in Amazon S3 without having to load the data into Amazon Redshift tables using Amazon Redshift Spectrum.

In this post, we describe how to use AWS DMS to load data from a database to Kinesis Data Streams in real time. We use a SQL Server database as an example, but other databases like Oracle, Microsoft Azure SQL, PostgreSQL, MySQL, SAP ASE, MongoDB, Amazon DocumentDB, and IBM DB2 also support this configuration.

You can use AWS DMS to capture data changes on the database and then send this data to Kinesis Data Streams. After the streams are ingested in Kinesis Data Streams, they can be consumed by different services like Lambda, Kinesis Data Analytics, Kinesis Data Firehose, and custom consumers using the Kinesis Client Library (KCL) or the AWS SDK.

The following are some use cases that can use AWS DMS and Kinesis Data Streams:

To facilitate the understanding of the integration between AWS DMS, Kinesis Data Streams, and Kinesis Data Firehose, we have defined a business case that you can solve. In this use case, you are the data engineer of an energy company. This company uses Amazon Relational Database Service (Amazon RDS) to store their end customer information, billing information, and also electric meter and gas usage data. Amazon RDS is their core transaction data store.

You run a batch job weekly to collect all the transactional data and send it to the data lake for reporting, forecasting, and even sending billing information to customers. You also have a trigger-based system to send emails and SMS periodically to the customer about their electricity usage and monthly billing information.

Because the company has millions of customers, processing massive amounts of data every day and sending emails or SMS was slowing down the core transactional system. Additionally, the weekly batch jobs for analytics weren’t giving accurate, up-to-date results for the forecasting you want to do on customer gas and electricity usage. Initially, your team considered rebuilding the entire platform to avoid these issues. However, the core application is complex in design and has been running in production for many years, so a rebuild would take years and cost millions.

So, you took a new approach. Instead of running batch jobs on the core transactional database, you started capturing data changes with AWS DMS and sending that data to Kinesis Data Streams. Then you use Lambda to listen to a particular data stream and generate emails or SMS using Amazon SNS to send to the customer (for example, sending monthly billing information or notifying when their electricity or gas usage is higher than normal). You also use Kinesis Data Firehose to send all transaction data to the data lake, so your company can run forecasting immediately and accurately.

The following diagram illustrates the architecture.

In the following steps, you configure your database to replicate changes to Kinesis Data Streams, using AWS DMS. Additionally, you configure Kinesis Data Firehose to load data from Kinesis Data Streams to Amazon S3.

It’s simple to set up Kinesis Data Streams as a change data target in AWS DMS and start streaming data. For more information, see Using Amazon Kinesis Data Streams as a target for AWS Database Migration Service.

To get started, you first create a Kinesis data stream in Kinesis Data Streams, then an AWS Identity and Access Management (IAM) role with minimal access as described in Prerequisites for using a Kinesis data stream as a target for AWS Database Migration Service. After you define your IAM policy and role, you set up your source and target endpoints and replication instance in AWS DMS. Your source is the database that you want to move data from, and the target is the database that you’re moving data to. In our case, the source database is a SQL Server database on Amazon RDS, and the target is the Kinesis data stream. The replication instance processes the migration tasks and requires access to the source and target endpoints inside your VPC.

A Kinesis delivery stream (created in Kinesis Data Firehose) is used to load the records from the database to the data lake hosted on Amazon S3. Kinesis Data Firehose can also load data to Amazon Redshift, Amazon OpenSearch Service, an HTTP endpoint, Datadog, Dynatrace, LogicMonitor, MongoDB Cloud, New Relic, Splunk, and Sumo Logic.

For testing purposes, we use the database democustomer, which is hosted on a SQL Server on Amazon RDS. Use the following command and script to create the database and table, and insert 10 records:

To capture the new records added to the table, enable MS-CDC (Microsoft Change Data Capture) using the following commands at the database level (replace SchemaName and TableName). This is required if ongoing replication is configured on the task migration in AWS DMS.

You can use ongoing replication (CDC) for a self-managed SQL Server database on premises or on Amazon Elastic Compute Cloud (Amazon EC2), or a cloud database such as Amazon RDS or an Azure SQL managed instance. SQL Server must be configured for full backups, and you must perform a backup before beginning to replicate data.

For more information, see Using a Microsoft SQL Server database as a source for AWS DMS.

Next, we configure our Kinesis data stream. For full instructions, see Creating a Stream via the AWS Management Console. Complete the following steps:

Next, you configure your IAM policy and role.
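As an illustration of what a minimal-access setup might look like, the sketch below builds the two JSON documents involved: a permissions policy that allows writing to a single stream, and a trust policy that lets the AWS DMS service assume the role. The function names and the example ARN are hypothetical placeholders; confirm the exact actions against the AWS DMS prerequisites for Kinesis targets before using them.

```python
import json


def build_dms_kinesis_policy(stream_arn: str) -> dict:
    """Permissions policy: allow writes to one specific Kinesis data stream."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "kinesis:DescribeStream",
                    "kinesis:PutRecord",
                    "kinesis:PutRecords",
                ],
                # Scope the policy to a single stream rather than "*".
                "Resource": stream_arn,
            }
        ],
    }


def build_dms_trust_policy() -> dict:
    """Trust policy: let the AWS DMS service principal assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "dms.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical account ID and stream name, for illustration only.
    example_arn = "arn:aws:kinesis:us-east-1:111122223333:stream/example-stream"
    print(json.dumps(build_dms_kinesis_policy(example_arn), indent=2))
    print(json.dumps(build_dms_trust_policy(), indent=2))
```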

We use a Kinesis delivery stream to load the information from the Kinesis data stream to Amazon S3. To configure the delivery stream, complete the following steps:

We use an AWS DMS instance to connect to the SQL Server database and then replicate the table and future transactions to a Kinesis data stream. In this section, we create a replication instance, source endpoint, target endpoint, and migration task. For more information about endpoints, refer to Creating source and target endpoints.

The schema name is dbo and the table name is invoices.
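For reference, a migration task selects tables through a table-mapping JSON document. The sketch below generates a single selection rule for the dbo schema and the invoices table used in this walkthrough. The helper name `build_table_mappings` is hypothetical, and the rule ID and rule name are arbitrary values chosen for illustration.

```python
import json


def build_table_mappings(schema: str, table: str) -> str:
    """Return a DMS table-mapping document that includes exactly one table."""
    mappings = {
        "rules": [
            {
                "rule-type": "selection",
                "rule-id": "1",      # arbitrary, must be unique per rule
                "rule-name": "1",    # arbitrary, must be unique per rule
                "object-locator": {
                    "schema-name": schema,
                    "table-name": table,
                },
                "rule-action": "include",
            }
        ]
    }
    return json.dumps(mappings, indent=2)


if __name__ == "__main__":
    print(build_table_mappings("dbo", "invoices"))
```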

When the task is ready, the migration starts.

After the data has been loaded, the table statistics are updated and you can see the 10 records created initially.

As the Kinesis delivery stream reads the data from Kinesis Data Streams and loads it in Amazon S3, the records are available in the bucket you defined previously.

To check that AWS DMS ongoing replication and CDC are working, use this script to add 1,000 records to the table.

You can see 1,000 inserts on the Table statistics tab for the database migration task.

After about 1 minute, you can see the records in the S3 bucket.

At this point the replication has been activated, and a Lambda function can start consuming the data streams to send emails or SMS to the customers through Amazon SNS. For more information, refer to Using AWS Lambda with Amazon Kinesis.
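A consumer along those lines could look roughly like the following sketch. It assumes the default AWS DMS JSON message format, in which each record carries a `data` object with the row and a `metadata` object with fields such as `record-type`, `operation`, and `table-name`. The helper `parse_dms_records` is a hypothetical name, and the SNS call is left as a comment so the example stays self-contained.

```python
import base64
import json


def parse_dms_records(event: dict) -> list:
    """Decode Kinesis records from a Lambda event into simple change dicts."""
    changes = []
    for record in event.get("Records", []):
        # Lambda delivers the Kinesis payload base64-encoded.
        payload = base64.b64decode(record["kinesis"]["data"])
        message = json.loads(payload)
        metadata = message.get("metadata", {})
        # Skip control records (for example, table creation notices).
        if metadata.get("record-type") != "data":
            continue
        changes.append(
            {
                "operation": metadata.get("operation"),
                "table": metadata.get("table-name"),
                "row": message.get("data", {}),
            }
        )
    return changes


def lambda_handler(event, context):
    """Entry point: count changes; a real function would notify customers here."""
    changes = parse_dms_records(event)
    inserts = [change for change in changes if change["operation"] == "insert"]
    # Publishing to Amazon SNS (for example, with boto3) would go here.
    return {"processed": len(changes), "inserts": len(inserts)}
```

Keeping the decoding logic in its own function makes it possible to test the parsing locally with a hand-built event before deploying the function.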

With Kinesis Data Streams as an AWS DMS target, you now have a powerful way to stream change data from a database directly into a Kinesis data stream. You can use this method to stream change data from any sources supported by AWS DMS to perform real-time data processing. Happy streaming!

If you have any questions or suggestions, please leave a comment.

Luis Eduardo Torres is a Solutions Architect at AWS based in Bogotá, Colombia. He helps companies to build their business using the AWS cloud platform. He has a great interest in Analytics and has been leading the Analytics track of AWS Podcast in Spanish.

Sukhomoy Basak is a Solutions Architect at Amazon Web Services, with a passion for Data and Analytics solutions. Sukhomoy works with enterprise customers to help them architect, build, and scale applications to achieve their business outcomes.

Post Syndicated from Antje Barth original https://aws.amazon.com/blogs/aws/new-amazon-sagemaker-ground-truth-now-supports-synthetic-data-generation/

Today, I am happy to announce that you can now use Amazon SageMaker Ground Truth to generate labeled synthetic image data.

Building machine learning (ML) models is an iterative process that, at a high level, starts with data collection and preparation, followed by model training and model deployment. And especially the first step, collecting large, diverse, and accurately labeled datasets for your model training, is often challenging and time-consuming.

Let’s take computer vision (CV) applications as an example. CV applications have come to play a key role in the industrial landscape. They help improve manufacturing quality or automate warehouses. Yet, collecting the data to train these CV models often takes a long time or can be impossible.

As a data scientist, you might spend months collecting hundreds of thousands of images from the production environments to make sure you capture all variations in data the model will come across. In some cases, finding all data variations might even be impossible (for example, sourcing images of rare product defects) or expensive (if you have to intentionally damage your products to get those images).

And once all data is collected, you need to accurately label the images, which is often a struggle in itself. Manually labeling images is slow and open to human error, and building custom labeling tools and setting up scaled labeling operations can be time-consuming and expensive. One way to mitigate this data challenge is by adding synthetic data to the mix.

Advantages of Combining Real-World Data with Synthetic Data

Combining your real-world data with synthetic data helps to create more complete training datasets for training your ML models.

Synthetic data itself is created by simple rules, statistical models, computer simulations, or other techniques. This allows synthetic data to be created in enormous quantities and with highly accurate labels for annotations across thousands of images. Labels can be applied at a very fine granularity, such as on a sub-object or pixel level, and across modalities. Modalities include bounding boxes, polygons, depth, and segments. Synthetic data can also be generated for a fraction of the cost, especially when compared to remote sensing imagery that otherwise relies on satellite, aerial, or drone image collection.

If you combine your real-world data with synthetic data, you can create more complete and balanced data sets, adding data variety that real-world data might lack. With synthetic data, you have the freedom to create any imagery environment, including edge cases that might be difficult to find and replicate in real-world data. You can customize objects and environments with variations, for example, to reflect different lighting, colors, texture, pose, or background. In other words, you can “order” the exact use case you are training your ML model for.

Now, let me show you how you can start sourcing labeled synthetic images using SageMaker Ground Truth.

Get Started on Your Synthetic Data Project with Amazon SageMaker Ground Truth

To request a new synthetic data project, navigate to the Amazon SageMaker Ground Truth console and select Synthetic data.

Then, select Open project portal. In the project portal, you can request new projects, monitor projects that are in progress, and view batches of generated images once they become available for review. To initiate a new project, select Request project.

Describe your synthetic data needs and provide contact information.

After you submit the request form, you can check your project status in the project dashboard.

In the next step, an AWS expert will reach out to discuss your project requirements in more detail. Upon review, the team will share a custom quote and project timeline.

If you want to continue, AWS digital artists will start by creating a small test batch of labeled synthetic images as a pilot production for you to review.

They collect your project inputs, such as reference photos and available 2D and 3D assets. The team then customizes those assets, adds the specified inclusions, such as scratches, dents, and textures, and creates the configuration that describes all the variations that need to be generated.

They can also create and add new objects based on your requirements, configure distributions and locations of objects in a scene, as well as modify object size, shape, color, and surface texture.

Once the objects are prepared, they are rendered using a photorealistic physics engine, capturing an image of the scene from a sensor that is placed in the virtual world. Images are also automatically labeled. Labels include 2D bounding boxes, instance segmentation, and contours.

You can monitor the progress of the data generation jobs on the project detail page. Once the pilot production test batch becomes available for review, you can spot-check the images and provide feedback for any rework that might be required.

Select the batch you want to review and choose View details.

In addition to the images, you will also receive output image labels, metadata such as object positions, and image quality metrics as Amazon SageMaker compatible JSON files.

Synthetic Image Fidelity and Diversity Report

With each available batch of images, you also receive a synthetic image fidelity and diversity report. This report provides image and object level statistics and plots that help you make sense of the generated synthetic images.

The statistics are used to describe the diversity and the fidelity of the synthetic images and compare them with real images. Examples of the statistics and plots provided are the distributions of object classes, object sizes, image brightness, and image contrast, as well as the plots evaluating the indistinguishability between synthetic and real images.

Once you approve the pilot production test batch, the team will move to the production phase and start generating larger batches of labeled synthetic images with your desired label types, such as 2D bounding boxes, instance segmentation, and contours. Similar to the test batch, each production batch of images will be made available for you together with the image fidelity and diversity report to spot-check, accept, or reject.

All images and artifacts will be available for you to download from your S3 bucket once final production is complete.

Availability

Amazon SageMaker Ground Truth synthetic data is available in US East (N. Virginia). Synthetic data is priced on a per-label basis. You can request a custom quote that is tailored to your specific use case and requirements by filling out the project requirement form.

Learn more about SageMaker Ground Truth synthetic data on our Amazon SageMaker Data Labeling page.

Request your synthetic data project through the Amazon SageMaker Ground Truth console today!

— Antje

Post Syndicated from original https://lwn.net/Articles/898772/

Drew DeVault takes issue with GitHub’s “Copilot” offering and the licensing issues that it raises:

GitHub’s Copilot is trained on software governed by these terms, and it fails to uphold them, and enables customers to accidentally fail to uphold these terms themselves. Some argue about the risks of a “copyleft surprise”, wherein someone incorporates a GPL licensed work into their product and is surprised to find that they are obligated to release their product under the terms of the GPL as well. Copilot institutionalizes this risk and any user who wishes to use it to develop non-free software would be well-advised not to do so, else they may find themselves legally liable to uphold these terms, perhaps ultimately being required to release their works under the terms of a license which is undesirable for their goals.

Chances are that many people will disagree with DeVault’s reasoning, but this is an issue that merits some discussion still.

Post Syndicated from Jeff Barr original https://aws.amazon.com/blogs/aws/now-in-preview-amazon-codewhisperer-ml-powered-coding-companion/

As I was getting ready to write this post I spent some time thinking about some of the coding tools that I have used over the course of my career. This includes the line-oriented editor that was an intrinsic part of the BASIC interpreter that I used in junior high school, the IBM keypunch that I used when I started college, various flavors of Emacs, and Visual Studio. The earliest editors were quite utilitarian, and grew in sophistication as CPU power became more plentiful. At first this increasing sophistication took the form of lexical assistance, such as dynamic completion of partially-entered variable and function names. Later editors were able to parse source code, and to offer assistance based on syntax and data types (Visual Studio’s IntelliSense, for example). Each of these features broke new ground at the time, and each one had the same basic goal: to help developers to write better code while reducing routine and repetitive work.

Announcing CodeWhisperer

Today I would like to tell you about Amazon CodeWhisperer. Trained on billions of lines of code and powered by machine learning, CodeWhisperer has the same goal. Whether you are a student, a new developer, or an experienced professional, CodeWhisperer will help you to be more productive.

We are launching in preview form with support for multiple IDEs and languages. To get started, you simply install the proper AWS IDE Toolkit, enable the CodeWhisperer feature, enter your preview access code, and start typing:

CodeWhisperer will continually examine your code and your comments, and present you with syntactically correct recommendations. The recommendations are synthesized based on your coding style and variable names, and are not simply snippets.

CodeWhisperer uses multiple contextual clues to drive recommendations including the cursor location in the source code, code that precedes the cursor, comments, and code in other files in the same project. You can use the recommendations as-is, or you can enhance and customize them as needed. As I mentioned earlier, we trained (and continue to train) CodeWhisperer on billions of lines of code drawn from open source repositories, internal Amazon repositories, API documentation, and forums.

CodeWhisperer in Action

I installed the CodeWhisperer preview in PyCharm and put it through its paces. Here are a few examples to show you what it can do. I want to build a list of prime numbers. I type # See if a number is pr. CodeWhisperer offers to complete this, and I press TAB (the actual key is specific to each IDE) to accept the recommendation:

On the next line, I press Alt-C (again, IDE-specific), and I can choose between a pair of function definitions. I accept the first one, and CodeWhisperer recommends the function body, and here’s what I have:

I write a for statement, and CodeWhisperer recommends the entire body of the loop:
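The screenshots of the recommendations are not reproduced here. To give a sense of the kind of code being described, here is a hand-written sketch of a prime check followed by a loop that builds a list of primes. It is not CodeWhisperer's actual output, and the names `is_prime` and `primes` are chosen for illustration.

```python
# See if a number is prime
def is_prime(number: int) -> bool:
    """Return True if number is prime, using trial division."""
    if number < 2:
        return False
    divisor = 2
    # Only divisors up to the square root need to be checked.
    while divisor * divisor <= number:
        if number % divisor == 0:
            return False
        divisor += 1
    return True


# Build a list of the prime numbers below 30
primes = []
for candidate in range(2, 30):
    if is_prime(candidate):
        primes.append(candidate)

print(primes)  # prints [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
```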

CodeWhisperer can also help me to write code that accesses various AWS services. I start with # create S3 bucket and TAB-complete the rest:

I could show you many more cool examples, but you will learn more by simply joining the preview and taking CodeWhisperer for a spin.

Join the Preview

The preview supports code written in Python, Java, and JavaScript, using VS Code, IntelliJ IDEA, PyCharm, WebStorm, and AWS Cloud9. Support for the AWS Lambda Console is in the works and should be ready very soon.

Join the CodeWhisperer preview and let me know what you think!

— Jeff;

Post Syndicated from Kari Rivas original https://www.backblaze.com/blog/server-backup-101-on-premises-vs-cloud-only-vs-hybrid-backup-strategies/

As an IT leader or business owner, establishing a solid, working backup strategy is one of the most important tasks on your plate. Server backups are an essential part of a good security and disaster recovery stance. One decision you’re faced with as part of setting up that strategy is where and how you’ll store server backups: on-premises, in the cloud, or in some mix of the two.

As the cloud has become more secure, affordable, and accessible, more organizations are using a hybrid cloud strategy for their cloud computing needs, and server backups are particularly well suited to this strategy. It allows you to maintain existing on-premises infrastructure while taking advantage of the scalability, affordability, and geographic separation offered by the cloud.

If you’re confused about how to set up a hybrid cloud strategy for backups, you’re not alone. There are as many ways to approach it as there are companies backing up to the cloud. Today, we’re discussing different server backup approaches to help you architect a hybrid server backup strategy that fits your business.

Learning about different backup destinations can help administrators craft better backup policies and procedures to ensure the safety of your data for the long term. When structuring your server backup strategy, you essentially have three choices for where to store data: on-premises, in the cloud, or in a hybrid environment that uses both. First, though, let’s explain what a hybrid environment truly is.

Hybrid cloud refers to a cloud environment made up of both private cloud resources (typically on-premises, although they don’t have to be) and public cloud resources with some kind of orchestration between them. Let’s define private and public clouds:

Hybrid clouds are defined by a combined management approach, which means they have some type of orchestration between the public and private cloud that allows data to move between them as demands, needs, and costs change, giving businesses greater flexibility and more options for data deployment and use.

Here are some examples of different server backup destinations according to where your data is located:

On-premises backup, also known as a local backup, is the process of backing up your system, applications, and other data to a local device. Tape and network-attached storage (NAS) are examples of common local backup solutions.

Cloud-only backup strategies are becoming more commonplace as startups take a cloud-native approach and existing companies undergo digital transformations. A cloud-only backup strategy involves eliminating local, on-premises backups and sending files and databases to the cloud vendor for storage. It’s still a great idea to keep a local copy of your backup so you comply with a 3-2-1 backup strategy (more on that below). You could also utilize multiple cloud vendors or multiple regions with the same vendor to ensure redundancy. In the event of an outage, your data is stored safely in a separate cloud or a different cloud region and can easily be restored.

With services like Cloud Replication, companies can easily achieve a solid cloud-only server backup solution within the same cloud vendor’s infrastructure. It’s also possible to orchestrate redundancy between two different cloud vendors in a multi-cloud strategy.

When you hear the term “hybrid” when it comes to servers, you might initially think about a combination of on-premises and cloud data. That’s typically what people think of when they imagine a hybrid cloud, but as we mentioned earlier, a hybrid cloud is a combination of a public cloud and a private cloud. Today, private clouds can live off-premises, but for our purposes, we’ll consider private clouds as being on-premises. A hybrid server backup strategy is an easy way to accomplish a 3-2-1 backup strategy, generally considered the gold standard when it comes to backups.

The 3-2-1 backup strategy is a tried and tested way to keep your data accessible, yet safe. It includes:

A hybrid server backup strategy can be helpful for fulfilling this sage backup advice as it provides two backup locations, one in the private cloud and one in the public cloud.
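The 3-2-1 rule is commonly stated as keeping at least three copies of your data, on at least two different types of media, with at least one copy off-site. As a small sketch, the check below encodes that rule for a list of backup copies. The `BackupCopy` type, its fields, and the example inventory are hypothetical and only serve to make the rule concrete.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class BackupCopy:
    name: str      # human-readable label for the copy
    media: str     # e.g. "disk", "nas", "tape", "cloud"
    offsite: bool  # True if stored away from the primary location


def satisfies_3_2_1(copies: List[BackupCopy]) -> bool:
    """Check for three copies, two media types, and one off-site copy."""
    enough_copies = len(copies) >= 3
    enough_media = len({copy.media for copy in copies}) >= 2
    has_offsite = any(copy.offsite for copy in copies)
    return enough_copies and enough_media and has_offsite


if __name__ == "__main__":
    # Hypothetical hybrid setup: production data, a local NAS, and a public cloud.
    hybrid = [
        BackupCopy("production server", "disk", offsite=False),
        BackupCopy("local NAS backup", "nas", offsite=False),
        BackupCopy("public cloud backup", "cloud", offsite=True),
    ]
    print(satisfies_3_2_1(hybrid))  # prints True
```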

Choosing a backup strategy that is right for you involves carefully evaluating your existing systems and your future goals. Can you get there with your current backup strategy? What if a ransomware or distributed denial of service (DDoS) attack affected your organization tomorrow? Decide what gaps need to be filled and take into consideration a few more crucial points:

Once you’ve decided a hybrid server backup strategy is right for you, there are many ways you can structure it. Here are just a few examples:

As you’re structuring your server backup strategy, consider any GDPR, HIPAA, or cybersecurity requirements. Does it call for off-site, air-gapped backups? If so, you may want to move that data (like customer or patient records) to the cloud and keep other, non-regulated data local. Some industries, particularly government and heavily regulated industries, may require you to keep some data in a private cloud.

Ready to get started? Back up your server using our joint solution with MSP360 or get started with Veeam or any one of our many other integrations.

The post Server Backup 101: On-premises vs. Cloud-only vs. Hybrid Backup Strategies appeared first on Backblaze Blog | Cloud Storage & Cloud Backup.

Post Syndicated from Crosstalk Solutions original https://www.youtube.com/watch?v=6RGwkWf_XFQ

Post Syndicated from Alex Krivit original https://blog.cloudflare.com/early-hints-performance/

A few months ago, we wrote a post focused on a product we were building that could vastly improve page load performance. That product, known as Early Hints, has seen wide adoption since that original post. In early benchmarking experiments with Early Hints, we saw performance improvements that were as high as 30%.

Now, with over 100,000 customers using Early Hints on Cloudflare, we are excited to talk about how much Early Hints have improved page loads for our customers in production and how customers can get the most out of Early Hints, and to provide an update on the next iteration of Early Hints we’re building.

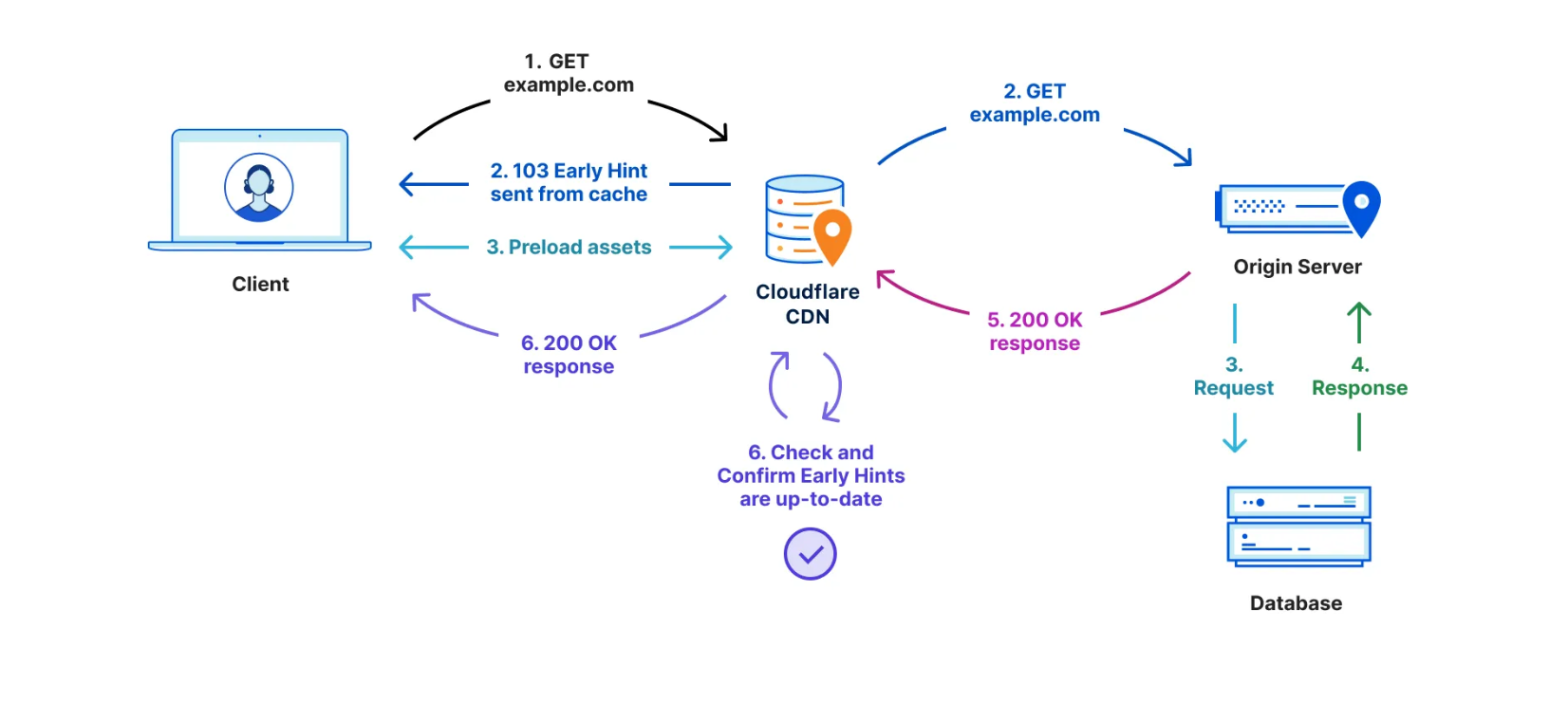

As a reminder, the browser you’re using right now to read this page needed instructions for what to render and what resources (like images, fonts, and scripts) need to be fetched from somewhere else in order to complete the loading of this (or any given) web page. When you decide you want to see a page, your browser sends a request to a server and the instructions for what to load come from the server’s response. These responses are generally composed of a multitude of resources that tell the browser what content to load and how to display it to the user. The servers sending these instructions to your browser often need time to gather up all of the resources in order to compile the whole webpage. This period is known as “server think time.” Traditionally, during the “server think time” the browser would sit waiting until the server has finished gathering all the required resources and is able to return the full response.

Early Hints was designed to take advantage of this “server think time” to send instructions to the browser to begin loading readily-available resources while the server finishes compiling the full response. Concretely, the server sends two responses: the first instructs the browser on what it can begin loading right away, and the second is the full response with the remaining information. By sending these hints to a browser before the full response is prepared, the browser can figure out what it needs to do to load the webpage faster for the end user.

Early Hints uses the HTTP status code 103 as the first response to the client. The “hints” are HTTP headers attached to the 103 response that are likely to appear in the final response, indicating (with the Link header) resources the browser should begin loading while the server prepares the final response. Sending hints on which assets to expect before the entire response is compiled allows the browser to use this “think time” (when it would otherwise have been sitting idle) to fetch needed assets, prepare parts of the displayed page, and otherwise get ready for the full response to be returned.
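To make the two-response flow concrete, the sketch below builds the raw bytes of an HTTP/1.1 103 response carrying Link headers, followed by the final 200 response. It only illustrates the wire format; it is not how Cloudflare implements Early Hints, and the function names and example URLs are hypothetical.

```python
from typing import List, Optional, Tuple

# Each hint is (url, rel, optional "as" type), e.g. ("/main.css", "preload", "style").
Hint = Tuple[str, str, Optional[str]]


def link_header(hint: Hint) -> str:
    """Format one hint as a Link header line."""
    url, rel, as_type = hint
    value = f"<{url}>; rel={rel}"
    if as_type:
        value += f"; as={as_type}"
    return f"Link: {value}"


def build_early_hints(hints: List[Hint]) -> bytes:
    """Build the interim 103 response: status line plus Link headers, no body."""
    lines = ["HTTP/1.1 103 Early Hints"] + [link_header(hint) for hint in hints]
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")


def build_final_response(body: bytes, hints: List[Hint]) -> bytes:
    """Build the final 200 response, repeating the same Link headers."""
    lines = ["HTTP/1.1 200 OK", "Content-Type: text/html"]
    lines += [link_header(hint) for hint in hints]
    lines.append(f"Content-Length: {len(body)}")
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii") + body


if __name__ == "__main__":
    hints = [
        ("/main.css", "preload", "style"),
        ("https://cdn.example.com", "preconnect", None),
    ]
    # The 103 goes out first, during the server think time.
    print(build_early_hints(hints).decode("ascii"))
    # The full response follows once the origin has finished.
    print(build_final_response(b"<html></html>", hints).decode("ascii"))
```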

Early Hints on Cloudflare accomplishes performance improvements in three ways:

Early Hints is like multitasking across the Internet – at the same time the origin is compiling resources for the final response and making calls to databases or other servers, the browser is already beginning to load assets for the end user.

While developing Early Hints, we’ve been fortunate to work with Google and Shopify to collect data on the performance impact. Chrome provided web developers with experimental access to both preload and preconnect support for Link headers in Early Hints. Shopify worked with us to guide the development by providing test frameworks which were invaluable to getting real performance data.

Today is a big day for Early Hints. Google announced that Early Hints is available in Chrome version 103 with support for preload and preconnect to start. Previously, Early Hints was available via an origin trial so that Chrome could measure the full performance benefit (A/B test). Now that the data has been collected and analyzed, and we’ve been able to prove a substantial improvement to page load, we’re excited that Chrome’s full support of Early Hints will mean that many more requests will see the performance benefits.

That’s not the only big news coming out about Early Hints. Shopify battle-tested Cloudflare’s implementation of Early Hints during Black Friday/Cyber Monday 2021 and is sharing the performance benefits they saw during the busiest shopping time of the year:

Today, HTTP 103 Early Hints ships with Chrome 103!

Why is this important for #webperf? How did @Shopify help make all merchant sites faster? (LCP over 500ms faster at p50!) 🧵

Hint: A little collaboration w/ @cloudflare & @googlechrome pic.twitter.com/Dz7BD4Jplp

— Colin Bendell (@colinbendell) June 21, 2022

While talking to the audience at Cloudflare Connect London last week, Colin Bendell, Director, Performance Engineering at Shopify summarized it best: “when a buyer visits a website, if that first page that (they) experience is just 10% faster, on average there is a 7% increase in conversion”. The beauty of Early Hints is you can get that sort of speedup easily, and with Smart Early Hints that can be one click away.

You can see his entire talk here:

The headline here is that during a time of vast uncertainty due to the global pandemic, a time when everyone was more online than ever before, when people needed their Internet to be reliably fast — Cloudflare, Google, and Shopify all came together to build and test Early Hints so that the whole Internet would be a faster, better, and more efficient place.

So how much did Early Hints improve performance of customers’ websites?

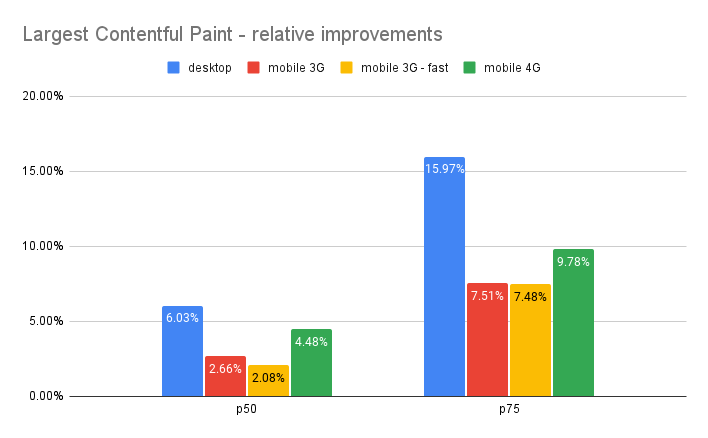

In our simple tests back in September, we were able to accelerate the Largest Contentful Paint (LCP) by 20-30%. Granted, this result was on an artificial page with mostly large images where Early Hints impact could be maximized. As for Shopify, we also knew their storefronts were particularly good candidates for Early Hints. Each mom-and-pop.shop page depends on many assets served from cdn.shopify.com – speeding up a preconnect to that host should meaningfully accelerate loading those assets.

But what about other zones? We expected most origins already using Link preload and preconnect headers to see at least modest improvements if they turned on Early Hints. We wanted to assess performance impact for other uses of Early Hints beyond Shopify’s.

However, getting good data on web page performance impact can be tricky. Not every 103 response from Cloudflare will result in a subsequent request through our network. Some hints tell the browser to preload assets on important third-party origins, for example. And not every Cloudflare zone may have Browser Insights enabled to gather Real User Monitoring data.

Ultimately, we decided to do some lab testing with WebPageTest of a sample of the most popular websites (top 1,000 by request volume) using Early Hints on their URLs with preload and preconnect Link headers. WebPageTest (which we’ve written about in the past) is an excellent tool to visualize and collect metrics on web page performance across a variety of device and connectivity settings.

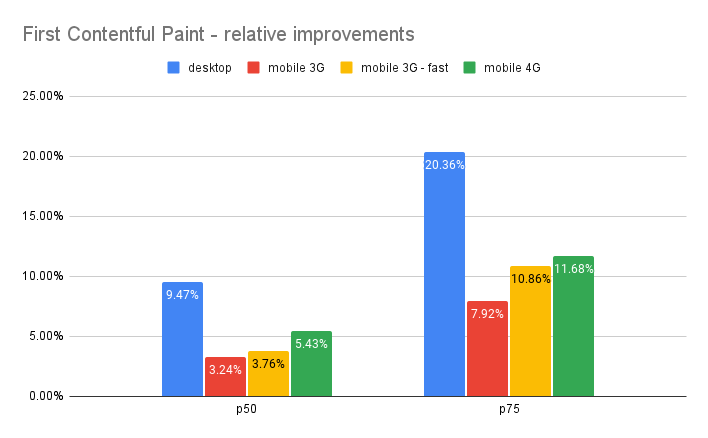

In our earlier blog post, we were mainly focused on Largest Contentful Paint (LCP), which is the time at which the browser renders the largest visible image or text block, relative to the start of the page load. Here we’ll focus on improvements not only to LCP, but also FCP (First Contentful Paint), which is the time at which the browser first renders visible content relative to the start of the page load.

We compared test runs with Early Hints support off and on (in Chrome), across four different simulated environments: desktop with a cable connection (5Mbps download / 28ms RTT), mobile with 3G (1.6Mbps / 300ms RTT), mobile with low-latency 3G (1.6Mbps / 150ms RTT) and mobile with 4G (9Mbps / 170ms RTT). After running the tests, we cleaned the data to remove URLs with no visual completeness metrics or fewer than five DOM elements. (These usually indicated document fragments vs. a page a user might actually navigate to.) This gave us a final sample population of a little more than 750 URLs, each from distinct zones.

In the box plots below, we’re comparing FCP and LCP percentiles between the timing data control runs (no Early Hints) and the runs with Early Hints enabled. Our sample population represents a variety of zones, some of which load relatively quickly and some far slower, thus the long whiskers and string of outlier points climbing the y-axis. The y-axis is constrained to the max p99 of the dataset, to ensure 99% of the data are reflected in the graph while still letting us focus on the p25 / p50 / p75 differences.

The relative shift in the box plot quantiles suggests we should expect modest benefits from Early Hints for the majority of web pages. By comparing FCP / LCP percentage improvement of the web pages from their respective baselines, we can quantify what those median and p75 improvements would look like:

A couple observations:

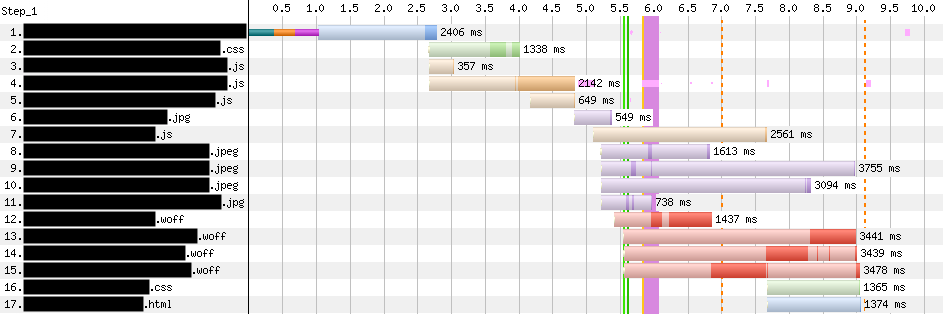

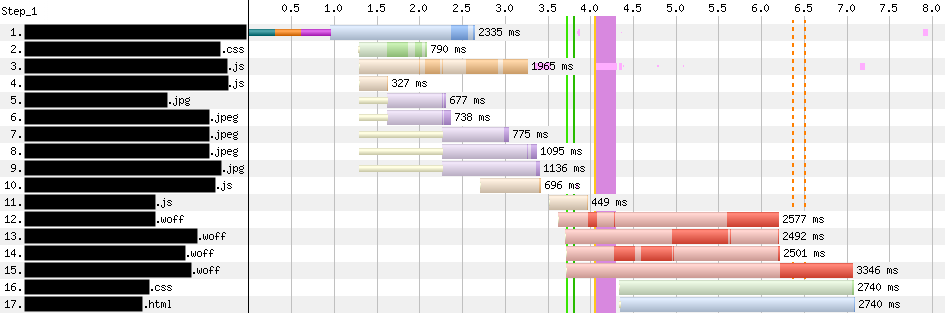
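As a rough illustration of the per-page comparison described above, here is a minimal Python sketch of how a percentage improvement over each page's own baseline could be computed and then summarized at the median and p75. The function names and the timings in `sample` are invented for this example; they are not measurements from our test runs, and the choice of the "inclusive" quantile method is an assumption.

```python
from statistics import quantiles

def pct_improvement(baseline_ms, early_hints_ms):
    """Percentage reduction relative to the page's own baseline (positive = faster)."""
    return (baseline_ms - early_hints_ms) * 100.0 / baseline_ms

def summarize(pairs):
    """Return (median, p75) of the per-page improvements.

    `pairs` is an iterable of (baseline_ms, early_hints_ms) tuples, one per URL.
    """
    improvements = [pct_improvement(b, e) for b, e in pairs]
    _p25, median, p75 = quantiles(improvements, n=4, method="inclusive")
    return median, p75

# Made-up timings purely for illustration; not data from the study.
sample = [(2000, 1900), (1500, 1500), (3000, 2400), (1000, 950), (4000, 3600)]
median, p75 = summarize(sample)
print(f"median improvement: {median:.1f}%, p75 improvement: {p75:.1f}%")
```

Normalizing each page against its own control run keeps slow and fast zones comparable, which is why the summary is taken over percentages and not raw milliseconds.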

The relative distributions between control and Early Hints runs, as well as the per-site baseline improvements, show us Early Hints can be broadly beneficial for use cases beyond Shopify’s. As suggested by the p75+ values, we also still find plenty of case studies showing a more substantial potential impact on LCP (and FCP) like the one we observed from our artificial test case, as indicated by these WebPageTest waterfall diagrams:

These diagrams show the network and rendering activity on the same web page (which, bucking the trend, had some of its best results over mobile – 3G settings, shown here) for its first ten resources. Compare the WebPageTest waterfall view above (with Early Hints disabled) with the waterfall below (Early Hints enabled). The first green vertical line in each indicates First Contentful Paint. The page configures Link preload headers for a few JS / CSS assets, as well as a handful of key images. When Early Hints is on, those assets (numbered 2 through 9 below) get a significant head start from the preload hints. In this case, FCP and LCP improved by 33%!

The effect of Early Hints can vary widely on a case-by-case basis. We noticed particularly successful zones had one or more of the following:

It’s quite possible these strategies are already familiar to you if you work on web performance! Essentially the best practices that apply to using Link headers or <link> elements in the HTML <head> also apply to Early Hints. That is to say: if your web page is already using preload or preconnect Link headers, using Early Hints should amplify those benefits.

A cautionary note here: while it may be safer to aggressively send assets in Early Hints versus Server Push (as the hints won’t arbitrarily send browser-cached content the way Server Push might), it is still possible to over-hint non-critical assets and saturate network bandwidth in a similar manner to overpushing. For example, one page in our sample listed well over 50 images in its 103 response (but not one of its render-blocking JS scripts). It saw improvements over cable, but was consistently worse off in the higher latency, lower bandwidth mobile connection settings.
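One way to catch this kind of over-hinting before it ships is a small audit of the `as` values on a page's preload hints. The sketch below is only an illustration: the `audit_hints` helper, the budget of 10 hints, and the set of "render-critical" types are all assumptions made for this example, not thresholds we measured or recommend.

```python
from collections import Counter

# Asset types that commonly block rendering; an assumption for this sketch.
RENDER_CRITICAL = {"style", "script", "font"}

def audit_hints(preload_as_values, max_hints=10):
    """Return a list of warnings for the `as` values of a page's preload hints.

    `max_hints` is an arbitrary illustrative budget, not a recommended limit.
    """
    counts = Counter(preload_as_values)
    warnings = []
    if len(preload_as_values) > max_hints:
        warnings.append(
            f"{len(preload_as_values)} preload hints exceeds budget of {max_hints}"
        )
    if preload_as_values and not (set(counts) & RENDER_CRITICAL):
        warnings.append("no render-critical assets (style/script/font) are hinted")
    return warnings

# A page resembling the example above: dozens of images, no scripts.
for warning in audit_hints(["image"] * 55):
    print(warning)
```

A page that hints a few stylesheets and scripts alongside its key images would pass this check with no warnings.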

Google has great guidelines for configuring Link headers at your origin in their blog post. As for emitting these Links as Early Hints, Cloudflare can take care of that for you!

Enabling Early Hints means that we will harvest the preload and preconnect Link headers from your origin responses, cache them, and send them as 103 Early Hints for subsequent requests so that future visitors will be able to gain an even greater performance benefit.
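To make the harvesting step more concrete, here is a minimal Python sketch of the idea: pick the preload and preconnect entries out of an origin's `Link` header, then format them as a 103 interim response. This is not Cloudflare's implementation. The helper names, the example paths, and `cdn.example.com` are hypothetical, and the parser is deliberately simplified (it does not handle commas or semicolons inside quoted parameter values).

```python
import re
from typing import List

# Matches one link-value: <target> followed by zero or more ";param" segments.
_LINK_VALUE = re.compile(r'<([^>]*)>((?:\s*;\s*[^;,]+)*)')
HINTABLE_RELS = {"preload", "preconnect"}

def harvest_hints(link_header: str) -> List[str]:
    """Return the link-values whose rel includes preload or preconnect."""
    hints = []
    for target, raw_params in _LINK_VALUE.findall(link_header):
        params = {}
        for segment in raw_params.split(";"):
            if not segment.strip():
                continue
            name, _, value = segment.partition("=")
            params[name.strip().lower()] = value.strip().strip('"')
        rels = set(params.get("rel", "").lower().split())
        if rels & HINTABLE_RELS:
            hints.append(f"<{target}>{raw_params.rstrip()}")
    return hints

def early_hints_response(hints: List[str]) -> str:
    """Format a 103 interim response carrying the harvested hints."""
    lines = ["HTTP/1.1 103 Early Hints"]
    lines += [f"Link: {hint}" for hint in hints]
    return "\r\n".join(lines) + "\r\n\r\n"

# Hypothetical origin header: two hintable links and one that is skipped.
origin_link = (
    "</main.css>; rel=preload; as=style, "
    "<https://cdn.example.com>; rel=preconnect, "
    "</next-page>; rel=prefetch"
)
cached = harvest_hints(origin_link)
print(early_hints_response(cached))
```

In this sketch the `prefetch` entry is dropped because only preload and preconnect are harvested, matching the behavior described above; a real cache would also key the stored hints by URL.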

For more information about our Early Hints feature, please refer to our announcement post or our documentation.

In our original blog post, we also mentioned our intention to ship a product improvement to Early Hints that would generate the 103 on your behalf.

Smart Early Hints will generate Early Hints even when there isn’t a Link header present in the origin response from which we can harvest a 103. The goal is to be a no-code/configuration experience with massive improvements to page load. Smart Early Hints will infer what assets can be preloaded or prioritized in different ways by analyzing responses coming from our customers’ origins. It will be your one-button web performance guru completely dedicated to making sure your site is loading as fast as possible.

This work is still under development, but we look forward to getting it built before the end of the year.

The promise Early Hints holds has only started to be explored, and we’re excited to continue to build products and features that make web performance reliably fast.

We’ll continue to update you along our journey as we develop Early Hints and look forward to your feedback (special thanks to the Cloudflare Community members who have already been invaluable) as we move to bring Early Hints to everyone.

Post Syndicated from Celeste Bishop original https://aws.amazon.com/blogs/security/aws-reinforce-2022-threat-detection-and-incident-response-track-preview/

Register now with discount code SALXTDVaB7y to get $150 off your full conference pass to AWS re:Inforce. For a limited time only and while supplies last.

Today we’re going to highlight just some of the sessions focused on threat detection and incident response that are planned for AWS re:Inforce 2022. AWS re:Inforce is a learning conference focused on security, compliance, identity, and privacy. The event features access to hundreds of technical and business sessions, an AWS Partner expo hall, a keynote featuring AWS Security leadership, and more. AWS re:Inforce 2022 will take place in-person in Boston, MA on July 26-27.

AWS re:Inforce organizes content across multiple themed tracks: identity and access management; threat detection and incident response; governance, risk, and compliance; networking and infrastructure security; and data protection and privacy. This post highlights some of the breakout sessions, chalk talks, builders’ sessions, and workshops planned for the threat detection and incident response track. For additional sessions and descriptions, see the re:Inforce 2022 catalog preview. For other highlights, see our sneak peek at the identity and access management sessions and sneak peek at the data protection and privacy sessions.

These are lecture-style presentations that cover topics at all levels and are delivered by AWS experts, builders, customers, and partners. Breakout sessions typically include 10–15 minutes of Q&A at the end.

TDR201: Running effective security incident response simulations

Security incidents provide learning opportunities for improving your security posture and incident response processes. Ideally you want to learn these lessons before having a security incident. In this session, walk through the process of running and moderating effective incident response simulations with your organization’s playbooks. Learn how to create realistic real-world scenarios, methods for collecting valuable learnings and feeding them back into implementation, and documenting correction-of-error proceedings to improve processes. This session provides knowledge that can help you begin checking your organization’s incident response process, procedures, communication paths, and documentation.

TDR202: What’s new with AWS threat detection services

AWS threat detection teams continue to innovate and improve the foundational security services for proactive and early detection of security events and posture management. Keeping up with the latest capabilities can improve your security posture, raise your security operations efficiency, and reduce your mean time to remediation (MTTR). In this session, learn about recent launches that can be used independently or integrated together for different use cases. Services covered in this session include Amazon GuardDuty, Amazon Detective, Amazon Inspector, Amazon Macie, and centralized cloud security posture assessment with AWS Security Hub.

TDR301: A proactive approach to zero-days: Lessons learned from Log4j

In the run-up to the 2021 holiday season, many companies were hit by security vulnerabilities in the widespread Java logging framework, Apache Log4j. Organizations were in a reactionary position, trying to answer questions like: How do we figure out if this is in our environment? How do we remediate across our environment? How do we protect our environment? In this session, learn about proactive measures that you should implement now to better prepare for future zero-day vulnerabilities.

TDR303: Zoom’s journey to hyperscale threat detection and incident response

Zoom, a leader in modern enterprise video communications, experienced hyperscale growth during the pandemic. Their customer base expanded by 30x and their daily security logs went from being measured in gigabytes to terabytes. In this session, Zoom shares how their security team supported this breakneck growth by evolving to a centralized infrastructure, updating their governance process, and consolidating to a single pane of glass for a more rapid response to security concerns. Solutions used to accomplish their goals include Splunk, AWS Security Hub, Amazon GuardDuty, Amazon CloudWatch, Amazon S3, and others.

These are small-group sessions led by an AWS expert who guides you as you build the service or product on your own laptop.

TDR351: Using Kubernetes audit logs for incident response automation

In this hands-on builders’ session, learn how to use Amazon CloudWatch and Amazon GuardDuty to effectively monitor Kubernetes audit logs—part of the Amazon EKS control plane logs—to alert on suspicious events, such as an increase in 403 Forbidden or 401 Unauthorized Error logs. Also learn how to automate example incident responses for streamlining workflow and remediation.

TDR352: How to mitigate the risk of ransomware in your AWS environment

Join this hands-on builders’ session to learn how to mitigate the risk from ransomware in your AWS environment using the NIST Cybersecurity Framework (CSF). Choose your own path to learn how to protect, detect, respond, and recover from a ransomware event using key AWS security and management services. Use Amazon Inspector to detect vulnerabilities, Amazon GuardDuty to detect anomalous activity, and AWS Backup to automate recovery. This session is beneficial for security engineers, security architects, and anyone responsible for implementing security controls in their AWS environment.

Highly interactive sessions with a small audience. Experts lead you through problems and solutions on a digital whiteboard as the discussion unfolds.

TDR231: Automated vulnerability management and remediation for Amazon EC2

In this chalk talk, learn about vulnerability management strategies for Amazon EC2 instances on AWS at scale. Discover the role of services like Amazon Inspector, AWS Systems Manager, and AWS Security Hub in vulnerability management and mechanisms to perform proactive and reactive remediations of findings that Amazon Inspector generates. Also learn considerations for managing vulnerabilities across multiple AWS accounts and Regions in an AWS Organizations environment.

TDR332: Response preparation with ransomware tabletop exercises

Many organizations do not validate their critical processes prior to an event such as a ransomware attack. Through a security tabletop exercise, customers can use simulations to provide a realistic training experience for organizations to test their security resilience and mitigate risk. In this chalk talk, learn about AWS Managed Services (AMS) best practices through a live, interactive tabletop exercise to demonstrate how to execute a simulation of a ransomware scenario. Attendees will leave with a deeper understanding of incident response preparation and how to use AWS security tools to better respond to ransomware events.

These are interactive learning sessions where you work in small teams to solve problems using AWS Cloud security services. Come prepared with your laptop and a willingness to learn!

TDR271: Detecting and remediating security threats with Amazon GuardDuty