Post Syndicated from original https://lwn.net/Articles/877186/rss

Security updates have been issued by Debian (samba), Fedora (kernel), openSUSE (netcdf and tor), SUSE (netcdf and python-Pygments), and Ubuntu (imagemagick).

Post Syndicated from The Atlantic original https://www.youtube.com/watch?v=7PLlnzKv8Yc

Post Syndicated from Sue Sentance original https://www.raspberrypi.org/blog/ai-education-schools-panel-uk-policy/

AI is a broad and rapidly developing field of technology. Our goal is to make sure all young people have the skills, knowledge, and confidence to use and create AI systems. So what should AI education in schools look like?

To hear a range of insights into this, we organised a panel discussion as part of our seminar series on AI and data science education, which we co-host with The Alan Turing Institute. Here our panel chair Tabitha Goldstaub, Co-founder of CogX and Chair of the UK government’s AI Council, summarises the event. You can also watch the recording below.

As part of the Raspberry Pi Foundation’s monthly AI education seminar series, I was delighted to chair a special panel session to broaden the range of perspectives on the subject. The members of the panel were:

The session explored the UK government’s commitment in the recently published UK National AI Strategy stating that “the [UK] government will continue to ensure programmes that engage children with AI concepts are accessible and reach the widest demographic.” We discussed what it will take to make this a reality, and how we will ensure young people have a seat at the table.

It was clear that the Minister felt it is very important for young people to understand AI. He said, “The government takes the view that AI is going to be one of the foundation stones of our future prosperity and our future growth. It’s an enabling technology that’s going to have almost universal applicability across our entire economy, and that is why it’s so important that the United Kingdom leads the world in this area. Young people are the country’s future, so nothing is complete without them being at the heart of it.”

Our panelist Caitlin Glover, an A level student at Sandon School, reiterated this from her perspective as a young person. She told us that her passion for AI started initially because she wanted to help neurodiverse young people like herself. Her idea was to start a company that would build AI-powered products to help neurodiverse students.

A theme of the Foundation’s seminar series so far has been how learning about AI early may impact young people’s career choices. Our panelist Alice Ashby, who studies Computer Science and AI at Brighton University, told us about her own process of deciding on her course of study. She pointed to the fact that terms such as machine learning, natural language processing, self-driving cars, chatbots, and many others are currently all under the umbrella of artificial intelligence, but they’re all very different. Alice thinks it’s hard for young people to know whether it’s the right decision to study something that’s still so ambiguous.

When I asked Alice what gave her the courage to take a leap of faith with her university course, she said, “I didn’t know it was the right move for me, honestly. I took a gamble, I knew I wanted to be in computer science, but I wanted to spice it up.” The AI ecosystem is very lucky that people like Alice choose to enter the field even without being taught what precisely it comprises.

We also heard from Danielle Belgrave, a Research Scientist at DeepMind with a remarkable career in AI for healthcare. Danielle explained that she was lucky to have had a Mathematics teacher who encouraged her to work in statistics for healthcare. She said she wanted to ensure she could use her technical skills and her love for math to make an impact on society, and to really help make the world a better place. Danielle works with biologists, mathematicians, philosophers, and ethicists as well as with data scientists and AI researchers at DeepMind. One possibility she suggested for improving young people’s understanding of what roles are available was industry mentorship. Linking people who work in the field of AI with school students was an idea that Caitlin was eager to confirm as very useful for young people her age.

The AI Council’s Roadmap stresses how important it is to not only teach the skills needed to foster a pool of people who are able to research and build AI, but also to ensure that every child leaves school with the necessary AI and data literacy to be able to become engaged, informed, and empowered users of the technology. During the panel, the Minister, Chris Philp, spoke about the fact that people don’t have to be technical experts to come up with brilliant ideas, and that we need more people to be able to think creatively and have the confidence to adopt AI, and that this starts in schools.

Caitlin is a perfect example of a young person who has been inspired about AI while in school. But sadly, among young people and especially girls, she’s in the minority by choosing to take computer science, which meant she had the chance to hear about AI in the classroom. But even for young people who choose computer science in school, at the moment AI isn’t in the national Computing curriculum or part of GCSE computer science, so much of their learning currently takes place outside of the classroom. Caitlin added that she had had to go out of her way to find information about AI; the majority of her peers are not even aware of opportunities that may be out there. She suggested that we ensure AI is taught across all subjects, so that every learner sees how it can make their favourite subject even more magical and thinks “AI’s cool!”.

Philip Colligan, the CEO here at the Foundation, also described how AI could be integrated into existing subjects including maths, geography, biology, and citizenship classes. Danielle thoroughly agreed and made the very good point that teaching this way across the school would help prepare young people for the world of work in AI, where cross-disciplinary science is so important. She reminded us that AI is not one single discipline. Instead, many different skill sets are needed, including engineering new AI systems, integrating AI systems into products, researching problems to be addressed through AI, or investigating AI’s societal impacts and how humans interact with AI systems.

On hearing about this multitude of different skills, our discussion turned to the teachers who are responsible for imparting this knowledge, and to the challenges they face.

When we shifted the focus of the discussion to teachers, Philip said: “If we really want to equip every young person with the knowledge and skills to thrive in a world that is shaped by these technologies, then we have to find ways to evolve the curriculum and support teachers to develop the skills and confidence to teach that curriculum.”

I asked the Minister what he thought needed to happen to ensure we achieved data and AI literacy for all young people. He said, “We need to work across government, but also across business and society more widely as well.” He went on to explain how important it was that the Department for Education (DfE) gets the support to make the changes needed, and that he and the Office for AI were ready to help.

Philip explained that the Raspberry Pi Foundation is one of the organisations in the consortium running the National Centre for Computing Education (NCCE), which is funded by the DfE in England. Through the NCCE, the Foundation has already supported thousands of teachers to develop their subject knowledge and pedagogy around computer science.

A recent study recognises that the investment made by the DfE in England is the most comprehensive effort globally to implement the computing curriculum, so we are starting from a good base. But Philip made it clear that now we need to expand this investment to cover AI.

Philip described how brilliant it is to witness young people who choose to get creative with new technologies. As an example, he shared that the Foundation is seeing more and more young people employ machine learning in the European Astro Pi Challenge, where participants run experiments using Raspberry Pi computers on board the International Space Station.

Philip also explained that, in the Foundation’s non-formal CoderDojo club network and its Coolest Projects tech showcase events, young people build their dream AI products supported by volunteers and mentors. Among these have been autonomous recycling robots and AI anti-collision alarms for bicycles. Like Caitlin with her company idea, this shows that young people are ready and eager to engage and create with AI.

We closed out the panel by going back to a point raised by Mhairi Aitken, who presented at the Foundation’s research seminar in September. Mhairi, an Alan Turing Institute ethics fellow, argues that children don’t just need to learn about AI, but that they should actually shape the direction of AI. All our panelists agreed on this point, and we discussed what it would take for young people to have a seat at the table.

Alice advised that we start by looking at our existing systems for engaging young people, such as Youth Parliament, student unions, and school groups. She also suggested adding young people to the AI Council, which I’m going to look into right away! Caitlin agreed and added that it would be great to make these forums virtual, so that young people from all over the country could participate.

The panel session was full of insight and felt very positive. Although the challenge of ensuring we have a data- and AI-literate generation of young people is tough, it’s clear that if we include them in finding the solution, we are in for a bright future.

In the coming months, our goal at the Foundation is to increase our understanding of the concepts underlying AI education and how to teach them in an age-appropriate way. To that end, we will start to conduct a series of small AI education research projects, which will involve gathering the perspectives of a variety of stakeholders, including young people. We’ll make more information available on our research pages soon.

In the meantime, you can sign up for our upcoming research seminars on AI and data science education, and peruse the collection of related resources we’ve put together.

The post How do we develop AI education in schools? A panel discussion appeared first on Raspberry Pi.

Post Syndicated from Bozho original https://techblog.bozho.net/simple-things-that-are-actually-hard-user-authentication/

You build a system. User authentication is the component that is always there, regardless of the functionality of the system. And by now it should be simple to implement it – just “drag” some ready-to-use authentication module, or configure it with some basic options (e.g. Spring Security), and you’re done.

Well, no. It’s the most obvious thing and yet it’s extremely complicated to get right. It’s not just login form -> check username/password -> set cookie. It has a lot of other things to think about:

And that’s for the most obvious feature that every application has. No wonder it has been implemented incorrectly many, many times. The IT world is complex and nothing is simple. Sending email isn’t simple, authentication isn’t simple, logging isn’t simple. Working with strings and dates isn’t simple, sanitizing input and output isn’t simple.

We have done a poor job in building the frameworks and tools to help us with all those things. We can’t really ignore them, we have to think about them actively and take conscious, informed decisions.

The post Simple Things That Are Actually Hard: User Authentication appeared first on Bozho's tech blog.

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2021/11/intel-is-maintaining-legacy-technology-for-security-research.html

Intel’s issue reflects a wider concern: Legacy technology can introduce cybersecurity weaknesses. Tech makers constantly improve their products to take advantage of speed and power increases, but customers don’t always upgrade at the same pace. This creates a long tail of old products that remain in widespread use, vulnerable to attacks.

Intel’s answer to this conundrum was to create a warehouse and laboratory in Costa Rica, where the company already had a research-and-development lab, to store the breadth of its technology and make the devices available for remote testing. After planning began in mid-2018, the Long-Term Retention Lab was up and running in the second half of 2019.

The warehouse stores around 3,000 pieces of hardware and software, going back about a decade. Intel plans to expand next year, nearly doubling the space to 27,000 square feet from 14,000, allowing the facility to house 6,000 pieces of computer equipment.

Intel engineers can request a specific machine in a configuration of their choice. It is then assembled by a technician and accessible through cloud services. The lab runs 24 hours a day, seven days a week, typically with about 25 engineers working any given shift.

Slashdot thread.

Post Syndicated from Explosm.net original http://explosm.net/comics/6042/

New Cyanide and Happiness Comic

Post Syndicated from Jeff Barr original https://aws.amazon.com/blogs/aws/new-use-amazon-s3-event-notifications-with-amazon-eventbridge/

We launched Amazon EventBridge in mid-2019 to make it easy for you to build powerful, event-driven applications at any scale. Since that launch, we have added several important features including a Schema Registry, the power to Archive and Replay Events, support for Cross-Region Event Bus Targets, and API Destinations to allow you to send events to any HTTP API. With support for a very long list of destinations and the ability to do pattern matching, filtering, and routing of events, EventBridge is an incredibly powerful and flexible architectural component.

S3 Event Notifications

Today we are making it even easier for you to use EventBridge to build applications that react quickly and efficiently to changes in your S3 objects. This is a new, “directly wired” model that is faster, more reliable, and more developer-friendly than ever. You no longer need to make additional copies of your objects or write specialized, single-purpose code to process events.

At this point you might be thinking that you already had the ability to react to changes in your S3 objects, and wondering what’s going on here. Back in 2014 we launched S3 Event Notifications to SNS Topics, SQS Queues, and Lambda functions. This was (and still is) a very powerful feature, but using it at enterprise-scale can require coordination between otherwise-independent teams and applications that share an interest in the same objects and events. Also, EventBridge can already extract S3 API calls from CloudTrail logs and use them to do pattern matching & filtering. Again, very powerful and great for many kinds of apps (with a focus on auditing & logging), but we always want to do even better.

Net-net, you can now configure S3 Event Notifications to directly deliver to EventBridge! This new model gives you several benefits including:

Advanced Filtering – You can filter on many additional metadata fields, including object size, key name, and time range. This is more efficient than using Lambda functions that need to make calls back to S3 to get additional metadata in order to make decisions on the proper course of action. S3 only publishes events that match a rule, so you save money by only paying for events that are of interest to you.

Multiple Destinations – You can route the same event notification to your choice of 18 AWS services including Step Functions, Kinesis Firehose, Kinesis Data Streams, and HTTP targets via API Destinations. This is a lot easier than creating your own fan-out mechanism, and will also help you to deal with those enterprise-scale situations where independent teams want to do their own event processing.

Fast, Reliable Invocation – Patterns are matched (and targets are invoked) quickly and directly. Because S3 provides at-least-once delivery of events to EventBridge, your applications will be more reliable.

You can also take advantage of other EventBridge features, including the ability to archive and then replay events. This allows you to reprocess events in case of an error or if you add a new target to an event bus.

Getting Started

I can get started in minutes. I start by enabling EventBridge notifications on one of my S3 buckets (jbarr-public in this case). I open the S3 Console, find my bucket, open the Properties tab, scroll down to Event notifications, and click Edit:

I select On, click Save changes, and I’m ready to roll:

Now I use the EventBridge Console to create a rule. I start, as usual, by entering a name and a description:

Then I define a pattern that matches the bucket and the events of interest:
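As a rough sketch of what such a pattern can look like, the snippet below builds one as a Python dictionary and prints the JSON form that an EventBridge rule accepts. The bucket name comes from the walkthrough; the helper name `s3_event_pattern` is hypothetical, and the optional `detail-type` filter is an illustrative addition, since the initial pattern in this post matched only the bucket name.

```python
import json


def s3_event_pattern(bucket, detail_types=None):
    """Build an EventBridge event pattern for S3 events from one bucket.

    s3_event_pattern is a hypothetical helper for illustration only.
    """
    pattern = {
        "source": ["aws.s3"],
        "detail": {"bucket": {"name": [bucket]}},
    }
    if detail_types:
        # Optionally narrow the match to specific event names,
        # for example "Object Created".
        pattern["detail-type"] = list(detail_types)
    return pattern


# Matches every supported event for the bucket used in this walkthrough.
bucket_only = s3_event_pattern("jbarr-public")
print(json.dumps(bucket_only, indent=2))
```

Passing `detail_types=["Object Created"]` would restrict the same pattern to object creation events.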

One pattern can match one or more buckets and one or more events; the following events are supported:

Object Created
Object Deleted
Object Restore Initiated
Object Restore Completed
Object Restore Expired
Object Tags Added
Object Tags Deleted
Object ACL Updated
Object Storage Class Changed
Object Access Tier Changed

Then I choose the default event bus, and set the target to an SNS topic (BucketAction) which publishes the messages to my Amazon email address:

I click Create, and I am all set. To test it out, I simply upload some files to my bucket and await the messages:

The message contains all of the interesting and relevant information about the event, and (after some unquoting and formatting), looks like this:

My initial event pattern was very simple, and matched only the bucket name. I can use content-based filtering to write more complex and more interesting patterns. For example, I could use numeric matching to set up a pattern that matches events for objects that are smaller than 1 megabyte:
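A minimal sketch of such a numeric-matching pattern follows, written as a Python dictionary and printed as JSON. It assumes the event carries the object size in bytes under `detail.object.size` and takes 1 megabyte to mean 1,048,576 bytes; the variable names are illustrative.

```python
import json

ONE_MEGABYTE = 1024 * 1024  # 1,048,576 bytes

# Numeric matching: only events whose object size is below the threshold
# match. The bucket name comes from the walkthrough; the "size" field
# location is an assumption about the event structure.
small_object_pattern = {
    "source": ["aws.s3"],
    "detail": {
        "bucket": {"name": ["jbarr-public"]},
        "object": {"size": [{"numeric": ["<", ONE_MEGABYTE]}]},
    },
}

print(json.dumps(small_object_pattern, indent=2))
```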

Or, I could use prefix matching to set up a pattern that looks for objects uploaded to a “subfolder” (which doesn’t really exist) of a bucket:

    "object": {
        "key": [{"prefix": "uploads/"}]
    }

You can use all of this in conjunction with all of the existing EventBridge features, including Archive/Replay. You can also access the CloudWatch metrics for each of your rules:

Available Now

This feature is available now and you can start using it today in all commercial AWS Regions. You pay $1 for every 1 million events that match a rule; check out the EventBridge Pricing page for more information.

— Jeff;

Post Syndicated from Sébastien Stormacq original https://aws.amazon.com/blogs/aws/new-amazon-ebs-snapshots-archive/

I am pleased to announce the availability of Amazon EBS Snapshots Archive, a new storage tier for the long-term retention of Amazon Elastic Block Store (EBS) snapshots of your EBS volumes.

In a nutshell, EBS is an easy-to-use high-performance block storage service for your Amazon Elastic Compute Cloud (Amazon EC2) instances. An EBS volume mounted to your EC2 instances lets you boot an operating system and store data for your most performance-demanding workloads. You may use EBS snapshots to create point-in-time copies of your volume data. The first snapshot of a volume contains all of the data written into that volume. Subsequent snapshots are incremental. Snapshots are stored on Amazon Simple Storage Service (Amazon S3), and they may be shared between AWS accounts and AWS Regions.

The ability to take frequent snapshots and easily restore volumes makes EBS snapshots an obvious choice for your data management strategy, alongside other backup options. The incremental nature of snapshots makes them cost-effective for daily and weekly backups that need immediate restores. However, you were telling us that business compliance and regulatory needs have meant that you needed to retain EBS snapshots for longer periods of time (months or years). For example, snapshots taken at the end of a project, or snapshots for test and development preserved for future project releases. The vast majority of these snapshots are taken and never read. For these snapshots, you are looking to lower your storage costs. Today, to benefit from lower storage costs, you may have written complex scripts involving temporary EC2 instances to restore snapshots, mount the corresponding volumes, and transfer the data to lower-cost storage tiers, such as Amazon Glacier.

EBS Snapshots Archive provides a low-cost storage tier to archive full, point-in-time copies of EBS Snapshots that you must retain for 90 days or more for regulatory and compliance reasons, or for future project releases. Now, you can easily archive and manage EBS Snapshots, thereby eliminating the need for custom scripts and third-party tools to manage these snapshots. This lets you move your rarely accessed snapshots to EBS Snapshots Archive to achieve up to 75% lower storage costs, and avoid licensing costs for third-party tools. Furthermore, you can retrieve an archived snapshot within 24-72 hours, and, once restored, use the snapshot to recover an EBS volume.

As per usual, let me show you how it works.

How to Get Started

I have a snapshot available in the US East (N. Virginia) Region, and I want to archive this snapshot for compliance reasons. I open the AWS Management Console, navigate to EC2, then to Snapshots. I select the snapshot I want to archive, and select the Actions menu. I select the Archive snapshot menu option.

I carefully read the confirmation message :-), and I select Archive snapshot.

I may monitor the progress of the archive operation with the new Storage Tier tab at the bottom of the screen. After some time, depending on the size of the snapshot, the Tiering status becomes Archival completed.

Archived snapshots stay visible in the console. The new Storage tier column indicates the tier used for storage (Standard or Archive).

How do I Restore a Volume?

Restoring a volume from EBS Snapshots Archive is a two-step process. First, I retrieve the snapshot from EBS Snapshots Archive to its original snapshot ID, using the RestoreSnapshotTier API call or the management console. It takes between 24 and 72 hours to retrieve the snapshot from the archive, depending on the snapshot size. Once retrieved, the snapshot appears as a regular snapshot on my account. At this stage, I hydrate the retrieved snapshot into an EBS volume using the default snapshot restore or Fast Snapshot Restore (FSR) for expedited restores, just like usual.

A CloudWatch event is generated when the snapshot is restored. You may listen to this event to avoid polling the status with the API.

A CreateVolume API call on an archived snapshot will fail. You must restore a snapshot from archive before you use it to create a volume.

Using the AWS Management Console, I select the snapshot that I want to restore, I select the Actions menu, and then I select the Restore snapshot from archive menu option.

I have the choice to restore the snapshot permanently, or just temporarily. At the end of the temporary duration, the standard tier snapshot is deleted, and only the archive is preserved.
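The same choice can be made programmatically. The sketch below wraps the RestoreSnapshotTier call in a small helper; it assumes `ec2_client` is a boto3 EC2 client (for example `boto3.client("ec2")`), and the helper name and snapshot IDs are hypothetical.

```python
def restore_archived_snapshot(ec2_client, snapshot_id, temporary_days=None):
    """Request retrieval of an archived snapshot to the standard tier.

    ec2_client is assumed to be a boto3 EC2 client. With temporary_days
    set, the restore is temporary; otherwise it is permanent.
    """
    params = {"SnapshotId": snapshot_id}
    if temporary_days is None:
        # Permanent restore: the snapshot stays in the standard tier.
        params["PermanentRestore"] = True
    else:
        # Temporary restore: the standard tier copy expires after N days.
        params["TemporaryRestoreDays"] = temporary_days
    return ec2_client.restore_snapshot_tier(**params)


# Example usage (requires boto3 and AWS credentials, so it is left commented):
# import boto3
# ec2 = boto3.client("ec2", region_name="us-east-1")
# restore_archived_snapshot(ec2, "snap-0123456789abcdef0", temporary_days=7)
```

Retrieval still takes time after the call returns, so a volume can only be created once the snapshot is back in the standard tier.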

After a while, depending on the snapshot size, the archive is restored to standard storage and may be used to recreate a volume, just like usual. I may monitor the progress of the retrieval and the lifetime for temporarily restored archives in the new Storage tier tab in the bottom half of the screen. Temporarily restored snapshots may be kept for up to 180 days.

Pricing and Availability

EBS Snapshots Archive is available for you today in 17 AWS Regions. At the time of launch, it is not available in the two Regions in China, Asia Pacific (Seoul), Asia Pacific (Osaka), Canada (Central), and South America (São Paulo).

As per usual, you pay as-you-go, with no minimum or fixed fees. There are two metrics that influence EBS Snapshots Archive billing: data storage and data retrieval. We charge you $0.0125 per GB-month of stored data and $0.03 per GB retrieved. You are charged for a 90-day period at minimum. This means that if you delete a snapshot archive or permanently restore it less than 90 days after creation, then we charge for the full 90-day period. The EBS pricing page has the details.
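To make the 90-day minimum concrete, here is a small worked calculation using the two prices quoted above. It is only an estimate: it assumes a 30-day month for converting days to GB-months, and the function name is illustrative.

```python
STORAGE_PRICE_PER_GB_MONTH = 0.0125  # USD, from the pricing above
RETRIEVAL_PRICE_PER_GB = 0.03        # USD, from the pricing above
MINIMUM_BILLED_DAYS = 90
DAYS_PER_MONTH = 30                  # simplifying assumption for this sketch


def estimate_archive_cost(size_gb, days_stored, retrieved_gb=0.0):
    """Estimate the archive tier cost in USD for one snapshot."""
    # Storage is billed for at least 90 days, even if deleted earlier.
    billed_days = max(days_stored, MINIMUM_BILLED_DAYS)
    storage = size_gb * STORAGE_PRICE_PER_GB_MONTH * billed_days / DAYS_PER_MONTH
    retrieval = retrieved_gb * RETRIEVAL_PRICE_PER_GB
    return storage + retrieval


# A 100 GB archive deleted after 30 days and retrieved once in full:
# storage is billed for 90 days (3.75) plus retrieval (3.00).
print(f"{estimate_archive_cost(100, 30, retrieved_gb=100):.2f}")  # prints 6.75
```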

Go ahead and start to configure your long-term storage for EBS snapshots today.

Post Syndicated from Danilo Poccia original https://aws.amazon.com/blogs/aws/new-aws-control-tower-account-factory-for-terraform/

AWS Control Tower makes it easier to set up and manage a secure, multi-account AWS environment. AWS Control Tower uses AWS Organizations to create what is called a landing zone, bringing ongoing account management and governance based on our experience working with thousands of customers.

If you use AWS CloudFormation to manage your infrastructure as code, you can customize your AWS Control Tower landing zone using Customizations for AWS Control Tower, a solution that helps you deploy custom templates and policies to individual accounts and organizational units (OUs) within your organization.

But what if you use Terraform to manage your AWS infrastructure?

Today, I am happy to share the availability of AWS Control Tower Account Factory for Terraform (AFT), a new Terraform module maintained by the AWS Control Tower team that allows you to provision and customize AWS accounts through Terraform using a deployment pipeline. The source code for the development pipeline can be stored in AWS CodeCommit, GitHub, GitHub Enterprise, or BitBucket. With AFT, you can automate the creation of fully functional accounts that have access to all the resources they need to be productive. The module works with Terraform open source, Terraform Enterprise, and Terraform Cloud.

Let’s see how this works in practice.

Using AWS Control Tower Account Factory for Terraform

First, I create a main.tf file that uses the AWS Control Tower Account Factory for Terraform (AFT) module:
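The original file is not reproduced here. As a stand-in, the sketch below generates an equivalent module block in Terraform's JSON syntax (which could be saved as main.tf.json) from a Python dictionary. The parameter names are the ones described in this post; the module source address, the account IDs, the Regions, and the default values shown are all assumptions for illustration and should be checked against the AFT documentation.

```python
import json

# Every value below is a placeholder or an assumption, not the post's
# actual configuration.
aft_module = {
    "module": {
        "aft": {
            # Assumed module location; confirm before use.
            "source": "github.com/aws-ia/terraform-aws-control_tower_account_factory",
            # Required: four account IDs (hypothetical 12-digit values).
            "ct_management_account_id": "111111111111",
            "log_archive_account_id": "222222222222",
            "audit_account_id": "333333333333",
            "aft_management_account_id": "444444444444",
            # Required: two Regions (hypothetical choices).
            "ct_home_region": "us-east-1",
            "tf_backend_secondary_region": "us-west-2",
            # Optional: assumed default values.
            "terraform_distribution": "oss",
            "vcs_provider": "codecommit",
            # Optional feature flags, disabled by default.
            "aft_feature_delete_default_vpcs_enabled": False,
            "aft_feature_cloudtrail_data_events": False,
            "aft_feature_enterprise_support": False,
        }
    }
}

print(json.dumps(aft_module, indent=2))
```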

The first six parameters are required. As a prerequisite, I need to pass the ID of four AWS accounts in my AWS organization:

ct_management_account_id – AWS Control Tower management account
log_archive_account_id – Log Archive account
audit_account_id – Audit account
aft_management_account_id – AFT management account

Then, I have to pass two AWS Regions:

ct_home_region – The Region from which this module will be executed. This must be the same Region where AWS Control Tower is deployed.
tf_backend_secondary_region – The backend primary Region is the same as the AFT Region. This parameter defines the secondary Region to replicate to. AFT creates a backend for state tracking for its own state. It is also used for Terraform when using the open-source version.

The other parameters are optional and are set to their default value in the previous main.tf file:

terraform_distribution – To select between Terraform open source (default), Enterprise, or Cloud.
vcs_provider – To choose the version control system to use between AWS CodeCommit (default), GitHub, GitHub Enterprise, or BitBucket.

These feature flags are disabled by default and can be omitted unless you want to enable them:

aft_feature_delete_default_vpcs_enabled – To automatically delete the default VPC for new accounts.
aft_feature_cloudtrail_data_events – To enable AWS CloudTrail data events for new accounts. Be aware that this option, usually required for compliance in highly regulated environments, can have an impact on your costs.
aft_feature_enterprise_support – To automatically enroll new accounts with Enterprise Support (if you have an Enterprise Support Plan).

First, I initialize the project and download the plugins:

Then, I use AWS Single Sign-On to log in with the AWS Control Tower management account and start the deployment:

I confirm with a yes and, after some time, the deployment is complete.

Now, I use AWS SSO again to log in with the AFT management account. In the AWS CodeCommit console, I find four repositories that I can use to customize the accounts created with AFT.

These repositories are used by pipelines managed by AWS CodePipeline to automate the account creation:

aft-account-request – This is where I place requests for accounts provisioned and managed by AFT.
aft-global-customizations – I can use this repository to customize all provisioned accounts with customer-defined resources. The resources can be created through Terraform or through Python.
aft-account-customizations – Here, I can customize provisioned accounts depending on the value of the account_customizations_name parameter in the aft-account-request repository. In this way, I can create different sets of customizations depending on the role the account will be used for.
aft-account-provisioning-customizations – This repository uses AWS Step Functions to customize the provisioning process for new accounts and simplify the integration with additional environments. State machines can use AWS Lambda functions, Amazon Elastic Container Service (Amazon ECS) or AWS Fargate tasks, custom activities hosted either on AWS or on-premises, or Amazon Simple Notification Service (SNS) and Amazon Simple Queue Service (SQS) to communicate with external applications.

Currently, these four repositories are all empty. To start, I use the code in the sources/aft-customizations-repos folder in the GitHub repo of the AFT Terraform module.

Using the example in the aft-account-request repository, I prepare a template to create a couple of AWS accounts. One of the two accounts is for a software developer.

To help software developers be productive quickly, I create a specific account customization. In the template, I set the parameter account_customizations_name equal to developer-customization.

Then, in the aft-account-customizations repository, I create a developer-customization folder where I put a Terraform template to automatically create an AWS Cloud9 EC2-based development environment for new accounts of that type. Optionally, I can extend that with my Python code, for example, to invoke internal or external APIs. Using this approach, all new accounts for software developers will have their development environment ready as they go through the delivery pipeline.

I push the changes to the main branch (first for the aft-account-customizations repository, then for the aft-account-request). This triggers the execution of the pipeline. After a few minutes, the two new accounts are ready to be used.

You can customize accounts created by AFT based on your unique requirements. For example, you can provide each account with its own specific security setup (such as IAM roles or security groups) and storage (for example, pre-configured Amazon Simple Storage Service (Amazon S3) buckets).

Availability and Pricing

AWS Control Tower Account Factory for Terraform (AFT) works in any Region where AWS Control Tower is available. There are no additional costs when using AFT. You pay for the services used by the solution. For example, when you set up AWS Control Tower, you will begin to incur costs for AWS services configured to set up your landing zone and mandatory guardrails.

When building this solution, we worked together with HashiCorp. Armon Dadgar, HashiCorp Co-Founder and CTO, told us: “Managing cloud environments with hundreds or thousands of users can be a complex and time-consuming process. Using a software delivery pipeline integrating Terraform and AWS Control Tower makes it easier to achieve consistent governance and compliance requirements across all accounts.”

The pipeline provides an account creation process that monitors when account provisioning is complete and then triggers additional Terraform modules to enhance the account with further customizations. You can configure the pipeline to use your own custom Terraform modules or pick from pre-published Terraform modules for common products and configurations.

Simplify and standardize AWS account creation using AWS Control Tower Account Factory for Terraform.

— Danilo

Post Syndicated from Jeff Barr original https://aws.amazon.com/blogs/aws/new-recycle-bin-for-ebs-snapshots/

It is easy to create EBS Snapshots, and just as easy to either delete them manually or to use the Data Lifecycle Manager to delete them automatically in accord with your organization’s retention model. Sometimes, as it turns out, it is a bit too easy to delete snapshots, and a well-intended cleanup effort or a wayward script can sometimes go a bit overboard!

New Recycle Bin

In order to give you more control over the deletion process, we are launching a Recycle Bin for EBS Snapshots. As you will see in a moment, you can now set up rules to retain deleted snapshots so that you can recover them after an accidental deletion. You can think of this as a two-level model, where individual AWS users are responsible for the initial deletion, and then a designated “Recycle Bin Administrator” (as specified by an IAM role) manages retention and recovery.

Rules can apply to all snapshots, or to snapshots that include a specified set of tag/value pairs. Each rule specifies a retention period (between one day and one year), after which the snapshot is permanently deleted.

Let’s Recycle!

I open the Recycle Bin Console, select the region of interest, and click Create retention rule to begin:

I call my first rule KeepAll, and set it to retain all deleted EBS snapshots for 4 days:

I add a tag (User) to the rule, and click Create retention rule:

Because Apply to all resources is checked, this is a general rule that applies when there are no applicable rules that specify one or more tags.

Then I create a second rule (KeepDev) that retains snapshots tagged with a Mode of Dev for just one day:

If two different tag-based rules match the same resource, then the one with the longer retention period applies.
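The precedence described above can be sketched in a few lines of Python. This is a minimal model for illustration only, not the service's implementation: the RetentionRule class and effective_rule function are hypothetical names, and I assume a tag-based rule matches when the snapshot carries at least one of the rule's tag/value pairs.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class RetentionRule:
    """Hypothetical in-memory model of a Recycle Bin retention rule."""
    name: str
    retention_days: int
    # An empty dict models "Apply to all resources" (a general rule).
    tags: Dict[str, str] = field(default_factory=dict)


def effective_rule(snapshot_tags: Dict[str, str],
                   rules: List[RetentionRule]) -> Optional[RetentionRule]:
    """Return the rule that would govern a deleted snapshot, or None."""
    # Assumption: a tag-based rule matches on any one of its tag/value pairs.
    tag_matches = [
        rule for rule in rules
        if rule.tags and any(snapshot_tags.get(k) == v for k, v in rule.tags.items())
    ]
    if tag_matches:
        # Several tag-based rules match: the longest retention period applies.
        return max(tag_matches, key=lambda rule: rule.retention_days)
    # No tag-based rule applies, so fall back to a general rule if one exists.
    general = [rule for rule in rules if not rule.tags]
    return max(general, key=lambda rule: rule.retention_days) if general else None


rules = [
    RetentionRule("KeepAll", 4),
    RetentionRule("KeepDev", 1, {"Mode": "Dev"}),
]
print(effective_rule({"Mode": "Dev"}, rules).name)
print(effective_rule({"Mode": "Prod"}, rules).name)
```

With the two rules from this walkthrough, a snapshot tagged with a Mode of Dev falls under KeepDev, and any other snapshot falls under KeepAll.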

Here are my retention rules:

Here are my EBS snapshots. As you can see, the first three are tagged with a Mode of Dev:

In an effort to save several cents per month, I impulsively delete them all:

And they are gone:

Later in the day, a member of my developer team messages me in a panic and lets me know that they desperately need the latest snapshot of the development server’s code. I open the Recycle Bin and I locate the snapshot (DevServer_2021_10_6):

I select the snapshot and click Recover:

Then I confirm my intent:

And the snapshot is available once again:

As has always been the case, Fast Snapshot Restore is disabled when a snapshot is deleted. With this launch, it will remain disabled when a snapshot is restored.

All of this functionality (creating rules, listing resources in the Recycle Bin, and restoring them) is also available from the CLI and via the Recycle Bin APIs.
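As a rough sketch of the API route, the Python below builds the parameters for a retention rule and shows where the calls would go. It assumes the boto3 rbin client's create_rule call and the EC2 client's restore_snapshot_from_recycle_bin call as I understand them; the helper names are my own, and it is worth checking the API reference before relying on the exact parameter names.

```python
from typing import Any, Dict, Optional


def retention_rule_request(days: int, description: str,
                           resource_tags: Optional[Dict[str, str]] = None) -> Dict[str, Any]:
    """Build keyword arguments for a Recycle Bin CreateRule request."""
    # The post states that retention periods run from one day to one year.
    if not 1 <= days <= 365:
        raise ValueError("retention period must be between 1 and 365 days")
    request: Dict[str, Any] = {
        "ResourceType": "EBS_SNAPSHOT",
        "Description": description,
        "RetentionPeriod": {
            "RetentionPeriodValue": days,
            "RetentionPeriodUnit": "DAYS",
        },
    }
    if resource_tags:
        # Omitting ResourceTags is assumed to make this a general rule.
        request["ResourceTags"] = [
            {"ResourceTagKey": key, "ResourceTagValue": value}
            for key, value in resource_tags.items()
        ]
    return request


def create_rule_and_recover(snapshot_id: str) -> None:
    """Not called here: needs boto3, credentials, and suitable IAM permissions."""
    import boto3  # imported lazily so the helper above runs without boto3

    rbin = boto3.client("rbin")
    rbin.create_rule(**retention_rule_request(1, "KeepDev", {"Mode": "Dev"}))

    ec2 = boto3.client("ec2")
    ec2.restore_snapshot_from_recycle_bin(SnapshotId=snapshot_id)


print(retention_rule_request(4, "KeepAll"))
```

Keeping the request construction separate from the client calls makes the parameters easy to inspect or unit test without touching an AWS account.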

Things to Know

Here are a couple of things to know about the new Recycle Bin:

IAM Support – As I mentioned earlier, you can use AWS Identity and Access Management (IAM) to grant access to this feature, and should consider creating an empowered user known as the Recycle Bin Administrator.

Rule Changes – You can make changes to your retention rules at any time, but be aware that the rules are evaluated (and the retention period is set) when you delete a snapshot. Changing a rule after an item has been deleted will not alter the retention period for the item.

Pricing – Resources that are in the Recycle Bin are charged the usual price, but be aware that creating rules with long retention periods could increase your AWS bill. On a related note, be sure that keeping deleted snapshots around does not violate your organization’s data retention policies. There is no charge for deleting or recovering a resource.

In the Bin – Resources in the Recycle Bin are immutable. If a resource is recovered, all of its existing metadata (tags and so forth) is also recovered intact.

Recycling – We will do our best to recycle all of the zeroes and all of the ones once a resource in your Recycle Bin reaches the end of its retention period!

— Jeff;

Post Syndicated from Channy Yun original https://aws.amazon.com/blogs/aws/introducing-karpenter-an-open-source-high-performance-kubernetes-cluster-autoscaler/

Today we are announcing that Karpenter is ready for production. Karpenter is an open-source, flexible, high-performance Kubernetes cluster autoscaler built with AWS. It helps improve your application availability and cluster efficiency by rapidly launching right-sized compute resources in response to changing application load. Karpenter also provides just-in-time compute resources to meet your application’s needs and will soon automatically optimize a cluster’s compute resource footprint to reduce costs and improve performance.

Before Karpenter, Kubernetes users needed to dynamically adjust the compute capacity of their clusters to support applications using Amazon EC2 Auto Scaling groups and the Kubernetes Cluster Autoscaler. Nearly half of Kubernetes customers on AWS report that configuring cluster auto scaling using the Kubernetes Cluster Autoscaler is challenging and restrictive.

When Karpenter is installed in your cluster, Karpenter observes the aggregate resource requests of unscheduled pods and makes decisions to launch new nodes and terminate them to reduce scheduling latencies and infrastructure costs. Karpenter does this by observing events within the Kubernetes cluster and then sending commands to the underlying cloud provider’s compute service, such as Amazon EC2.
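To make the idea of "aggregate resource requests" concrete, here is a deliberately simplified Python sketch. It is not Karpenter's algorithm: it ignores scheduling constraints, system overhead, and packing across multiple nodes, and the function names and pod dictionaries are hypothetical. The only facts it relies on are the published sizes of the two instance types used later in this post.

```python
from typing import Dict, List, Optional, Tuple

# (vCPU in millicores, memory in MiB) for the instance types used in this post.
INSTANCE_TYPES: Dict[str, Tuple[int, int]] = {
    "m5.large": (2000, 8192),
    "m5.2xlarge": (8000, 32768),
}


def aggregate_requests(pods: List[Dict[str, int]]) -> Tuple[int, int]:
    """Sum the CPU and memory requests of the unscheduled pods."""
    cpu = sum(pod["cpu_millis"] for pod in pods)
    memory = sum(pod["memory_mib"] for pod in pods)
    return cpu, memory


def smallest_fitting_type(pods: List[Dict[str, int]]) -> Optional[str]:
    """Pick the smallest single instance type that fits all pending pods."""
    cpu, memory = aggregate_requests(pods)
    candidates = [
        (spec, name)
        for name, spec in INSTANCE_TYPES.items()
        if spec[0] >= cpu and spec[1] >= memory
    ]
    # Tuples sort by CPU first, then memory, so min() yields the smallest fit.
    return min(candidates)[1] if candidates else None


pending = [{"cpu_millis": 100, "memory_mib": 256}] * 10
print(aggregate_requests(pending), smallest_fitting_type(pending))
```

A real autoscaler would split the pods across several nodes when no single type fits; this sketch simply returns None in that case.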

Karpenter is an open-source project licensed under the Apache License 2.0. It is designed to work with any Kubernetes cluster running in any environment, including all major cloud providers and on-premises environments. We welcome contributions to build additional cloud providers or to improve core project functionality. If you find a bug, have a suggestion, or have something to contribute, please engage with us on GitHub.

Getting Started with Karpenter on AWS

To get started with Karpenter in any Kubernetes cluster, ensure there is some compute capacity available, and install it using the Helm charts provided in the public repository. Karpenter also requires permissions to provision compute resources on the provider of your choice.

Once installed in your cluster, the default Karpenter provisioner will observe incoming Kubernetes pods that cannot be scheduled due to insufficient compute resources in the cluster, and automatically launch new resources to meet their scheduling and resource requirements.

I want to show a quick start using Karpenter in an Amazon EKS cluster based on Getting Started with Karpenter on AWS. It requires the installation of AWS Command Line Interface (AWS CLI), kubectl, eksctl, and Helm (the package manager for Kubernetes). After setting up these tools, create a cluster with eksctl. This example configuration file specifies a basic cluster with one initial node.

cat <<EOF > cluster.yaml
---
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: eks-karpenter-demo
  region: us-east-1
  version: "1.20"
managedNodeGroups:
  - instanceType: m5.large
    amiFamily: AmazonLinux2
    name: eks-kapenter-demo-ng
    desiredCapacity: 1
    minSize: 1
    maxSize: 5
EOF
$ eksctl create cluster -f cluster.yaml

Karpenter itself can run anywhere, including on self-managed node groups, managed node groups, or AWS Fargate. Karpenter will provision EC2 instances in your account.

Next, you need to create the necessary AWS Identity and Access Management (IAM) resources, using the AWS CloudFormation template and IAM Roles for Service Accounts (IRSA) as described in the documentation, so that the Karpenter controller gets permissions such as launching instances. You also need to install the Helm chart to deploy Karpenter to your cluster.

$ helm repo add karpenter https://charts.karpenter.sh
$ helm repo update
$ helm upgrade --install --skip-crds karpenter karpenter/karpenter --namespace karpenter \
  --create-namespace --set serviceAccount.create=false --version 0.5.0 \
  --set controller.clusterName=eks-karpenter-demo \
  --set controller.clusterEndpoint=$(aws eks describe-cluster --name eks-karpenter-demo --query "cluster.endpoint" --output json) \
  --wait # for the defaulting webhook to install before creating a Provisioner

Karpenter provisioners are a Kubernetes resource that enables you to configure the behavior of Karpenter in your cluster. When you create a default provisioner, without further customization besides what is needed for Karpenter to provision compute resources in your cluster, Karpenter automatically discovers node properties such as instance types, zones, architectures, operating systems, and purchase types of instances. You don’t need to define these spec:requirements if there is no explicit business requirement.

cat <<EOF | kubectl apply -f -
apiVersion: karpenter.sh/v1alpha5
kind: Provisioner
metadata:
  name: default
spec:
  #Requirements that constrain the parameters of provisioned nodes.
  #Operators { In, NotIn } are supported to enable including or excluding values
  requirements:
    - key: node.k8s.aws/instance-type #If not included, all instance types are considered
      operator: In
      values: ["m5.large", "m5.2xlarge"]
    - key: "topology.kubernetes.io/zone" #If not included, all zones are considered
      operator: In
      values: ["us-east-1a", "us-east-1b"]
    - key: "kubernetes.io/arch" #If not included, all architectures are considered
      operator: In
      values: ["arm64", "amd64"]
    - key: "karpenter.sh/capacity-type" #If not included, the webhook for the AWS cloud provider will default to on-demand
      operator: In
      values: ["spot", "on-demand"]
  provider:
    instanceProfile: KarpenterNodeInstanceProfile-eks-karpenter-demo
  ttlSecondsAfterEmpty: 30
EOF

The ttlSecondsAfterEmpty value configures Karpenter to terminate empty nodes. If this value is disabled, nodes will never scale down due to low utilization. To learn more, see Provisioner custom resource definitions (CRDs) on the Karpenter site.
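The In and NotIn operators in the requirements list behave like simple set membership tests. The following Python sketch shows that logic against a plain dictionary of node labels. It is an illustration under my own assumptions, not Karpenter code: the satisfies function is hypothetical, and treating a missing label as failing In but passing NotIn is my choice for the sketch.

```python
from typing import Dict, List


def satisfies(requirements: List[dict], labels: Dict[str, str]) -> bool:
    """Return True when a node's labels meet every requirement."""
    for requirement in requirements:
        value = labels.get(requirement["key"])
        operator = requirement["operator"]
        if operator == "In":
            # The label must be present and take one of the listed values.
            if value not in requirement["values"]:
                return False
        elif operator == "NotIn":
            # The label must not take any of the listed values.
            if value in requirement["values"]:
                return False
        else:
            raise ValueError(f"unsupported operator: {operator}")
    return True


requirements = [
    {"key": "topology.kubernetes.io/zone", "operator": "In",
     "values": ["us-east-1a", "us-east-1b"]},
    {"key": "karpenter.sh/capacity-type", "operator": "In",
     "values": ["spot", "on-demand"]},
]
node = {
    "topology.kubernetes.io/zone": "us-east-1a",
    "karpenter.sh/capacity-type": "spot",
}
print(satisfies(requirements, node))
```

A node in us-east-1c would fail the zone requirement, so a provisioner configured as above would not launch capacity there.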

Karpenter is now active and ready to begin provisioning nodes in your cluster. Create some pods using a deployment, and watch Karpenter provision nodes in response.

$ kubectl create deployment inflate \
  --image=public.ecr.aws/eks-distro/kubernetes/pause:3.2

Let’s scale the deployment and check out the logs of the Karpenter controller.

$ kubectl scale deployment inflate --replicas 10
$ kubectl logs -f -n karpenter $(kubectl get pods -n karpenter -l karpenter=controller -o name)
2021-11-23T04:46:11.280Z INFO controller.allocation.provisioner/default Starting provisioning loop {"commit": "abc12345"}
2021-11-23T04:46:11.280Z INFO controller.allocation.provisioner/default Waiting to batch additional pods {"commit": "abc123456"}
2021-11-23T04:46:12.452Z INFO controller.allocation.provisioner/default Found 9 provisionable pods {"commit": "abc12345"}
2021-11-23T04:46:13.689Z INFO controller.allocation.provisioner/default Computed packing for 10 pod(s) with instance type option(s) [m5.large] {"commit": " abc123456"}
2021-11-23T04:46:16.228Z INFO controller.allocation.provisioner/default Launched instance: i-01234abcdef, type: m5.large, zone: us-east-1a, hostname: ip-192-168-0-0.ec2.internal {"commit": "abc12345"}
2021-11-23T04:46:16.265Z INFO controller.allocation.provisioner/default Bound 9 pod(s) to node ip-192-168-0-0.ec2.internal {"commit": "abc12345"}
2021-11-23T04:46:16.265Z INFO controller.allocation.provisioner/default Watching for pod events {"commit": "abc12345"}

The provisioner’s controller listens for Pod changes. In this run, it launched a new instance and bound the provisionable Pods to the new node.

Now, delete the deployment. After 30 seconds (ttlSecondsAfterEmpty = 30), Karpenter should terminate the empty nodes.

$ kubectl delete deployment inflate
$ kubectl logs -f -n karpenter $(kubectl get pods -n karpenter -l karpenter=controller -o name)
2021-11-23T04:46:18.953Z INFO controller.allocation.provisioner/default Watching for pod events {"commit": "abc12345"}
2021-11-23T04:49:05.805Z INFO controller.Node Added TTL to empty node ip-192-168-0-0.ec2.internal {"commit": "abc12345"}
2021-11-23T04:49:35.823Z INFO controller.Node Triggering termination after 30s for empty node ip-192-168-0-0.ec2.internal {"commit": "abc12345"}
2021-11-23T04:49:35.849Z INFO controller.Termination Cordoned node ip-192-168-116-109.ec2.internal {"commit": "abc12345"}
2021-11-23T04:49:36.521Z INFO controller.Termination Deleted node ip-192-168-0-0.ec2.internal {"commit": "abc12345"}

If you delete a node with kubectl, Karpenter will gracefully cordon, drain, and shut down the corresponding instance. Under the hood, Karpenter adds a finalizer to the node object, which blocks deletion until all pods are drained and the instance is terminated.
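The empty-node timing visible in the log above can be expressed as a small check. This Python sketch is only an illustration of the ttlSecondsAfterEmpty idea, not Karpenter's controller code; the should_terminate function is a hypothetical name, and the timestamps are taken from the sample log lines.

```python
from datetime import datetime, timedelta
from typing import Optional


def should_terminate(empty_since: Optional[datetime], now: datetime,
                     ttl_seconds: Optional[int]) -> bool:
    """Decide whether an empty node has outlived its TTL."""
    # With no TTL configured, empty nodes are never scaled down.
    if ttl_seconds is None:
        return False
    # A node that still runs pods has no "empty since" timestamp.
    if empty_since is None:
        return False
    return now - empty_since >= timedelta(seconds=ttl_seconds)


# "Added TTL to empty node" and "Triggering termination" from the log above.
ttl_added = datetime(2021, 11, 23, 4, 49, 5, 805000)
triggered = datetime(2021, 11, 23, 4, 49, 35, 823000)

print(should_terminate(ttl_added, triggered, 30))
print(should_terminate(ttl_added, ttl_added + timedelta(seconds=10), 30))
```

The gap between the two log entries is just over 30 seconds, which matches the ttlSecondsAfterEmpty value set in the provisioner.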

Things to Know

Here are a couple of things to keep in mind about Karpenter features:

Accelerated Computing: Karpenter works with all kinds of Kubernetes applications, but it performs particularly well for use cases that require rapid provisioning and deprovisioning of large numbers of diverse compute resources. For example, this includes batch jobs to train machine learning models, run simulations, or perform complex financial calculations. You can leverage custom resources of nvidia.com/gpu, amd.com/gpu, and aws.amazon.com/neuron for use cases that require accelerated EC2 instances.

Provisioners Compatibility: Karpenter provisioners are designed to work alongside static capacity management solutions like Amazon EKS managed node groups and EC2 Auto Scaling groups. You may choose to manage the entirety of your capacity using provisioners, a mixed model with both dynamic and statically managed capacity, or a fully static approach. We recommend not using Kubernetes Cluster Autoscaler at the same time as Karpenter because both systems scale up nodes in response to unschedulable pods. If configured together, both systems will race to launch or terminate instances for these pods.

Join our Karpenter Community

Karpenter’s community is open to everyone. Give it a try, and join our working group meeting, or follow our roadmap for future releases that interest you. As I said, we welcome any contributions such as bug reports, new features, corrections, or additional documentation.

To learn more about Karpenter, see the documentation and demo video from AWS Container Day.

– Channy

Post Syndicated from Channy Yun original https://aws.amazon.com/blogs/aws/new-aws-marketplace-for-containers-anywhere-to-deploy-your-kubernetes-cluster-in-any-environment/

More than 300,000 customers use AWS Marketplace today to find, subscribe to, and deploy third-party software packaged as Amazon Machine Images (AMIs), software-as-a-service (SaaS), and containers. Customers can find and subscribe to containerized third-party applications from AWS Marketplace and deploy them in Amazon Elastic Container Service (Amazon ECS) and Amazon Elastic Kubernetes Service (Amazon EKS).

Many customers that run Kubernetes applications on AWS want to deploy them on-premises due to constraints, such as latency and data governance requirements. Also, once they have deployed the Kubernetes application, they need additional tools to govern the application through license tracking, billing, and upgrades.

Today, we announce AWS Marketplace for Containers Anywhere, a set of capabilities that allows AWS customers to find, subscribe to, and deploy third-party Kubernetes applications from AWS Marketplace on any Kubernetes cluster in any environment. This capability makes the AWS Marketplace more useful for customers who run containerized workloads.

With this launch, you can deploy third-party Kubernetes applications to on-premises environments using Amazon EKS Anywhere, or to any self-managed Kubernetes cluster on premises or in Amazon Elastic Compute Cloud (Amazon EC2). This lets you use a single catalog to find container images regardless of where you eventually plan to deploy.

With AWS Marketplace for Containers Anywhere, you get the same benefits as with any other product in AWS Marketplace, including consolidated billing, flexible payment options, and lower pricing for long-term contracts. You can find vetted, security-scanned, third-party Kubernetes applications, manage upgrades with a few clicks, and track all licenses and bills. You can migrate applications between any environment without purchasing duplicate licenses. After you have subscribed to an application using this feature, you can migrate your Kubernetes applications to AWS by deploying the independent software vendor (ISV) provided Helm charts onto your Kubernetes clusters on AWS without changing your licenses.

Getting Started with AWS Marketplace for Containers Anywhere

You can get started by visiting AWS Marketplace. Search all products by Delivery methods, then filter by Helm Chart in the catalog to find Kubernetes-based applications that you can deploy on AWS and on premises.

If you choose to subscribe to your favorite product, select Continue to Subscribe.

Once you accept the seller’s end user license agreement (EULA), select Create Contract and Continue to Configuration.

You can configure the software deployment using the dropdowns. Once Fulfillment option and Software Version are selected, choose Continue to Launch.

To deploy on Amazon EKS, you have the option to deploy the application on a new EKS cluster or copy and paste commands into existing clusters. You can also deploy into self-managed Kubernetes in EC2 by clicking on the self-managed Kubernetes option in the supported services.

To deploy on-premises or in EC2, you can select EKS Anywhere and then take an additional step to request a license token on the AWS Marketplace launch page. You will then use commands provided by AWS Marketplace to download the container images and Helm charts from the AWS Marketplace Elastic Container Registry (ECR), create the service account, and apply the token so that you can use IAM Roles for Service Accounts on your EKS cluster.

To upgrade or renew your existing software licenses, you can go to the AWS Marketplace website for a self-service upgrade or renewal experience. You can also negotiate a private offer directly with ISVs to upgrade and renew the application. After you subscribe to the new offer, the license is automatically updated in AWS License Manager. You can view all the licenses you have purchased from AWS Marketplace using AWS License Manager, including the application capabilities you’re entitled to and the expiration date.

Launch Partners of AWS Marketplace for Containers Anywhere

Here is the list of our launch partners to support an on-premises deployment option. Try them out today!

If you are interested in offering your Kubernetes application on AWS Marketplace, register and modify your product to integrate with AWS License Manager APIs using the provided AWS SDK. Integrating with AWS License Manager will allow the application to check licenses procured through AWS Marketplace.

Next, you would create a new container product on AWS Marketplace with a contract offer by submitting details of the listing, including the product information, license options, and pricing. The details would be reviewed, approved, and published by AWS Marketplace Technical Account Managers. You would then submit the new container image to AWS Marketplace ECR and add it to a newly created container product through the self-service Marketplace Management Portal. All container images are scanned for Common Vulnerabilities and Exposures (CVEs).

Finally, the product listing and container images would be published and accessible by customers on AWS Marketplace’s customer website. To learn more details about creating container products on AWS Marketplace, visit Getting started as a seller and Container-based products in the AWS documentation.

Available Now

The feature of AWS Marketplace for Containers Anywhere is available now in all Regions that support AWS Marketplace. You can start using the feature directly from the product of launch partners.

Give it a try, and please send us feedback either in the AWS forum for AWS Marketplace or through your usual AWS support contacts.

– Channy

Post Syndicated from Alex Casalboni original https://aws.amazon.com/blogs/aws/data-exchange-for-apis-find-subscribe-use-third-party-apis-consistent-authentication/

Data is at the center of many processes and products, whether it’s a large-scale dataset used to train machine learning models, a relational database, or an API-based integration. AWS Data Exchange lets you discover, subscribe to, and use hundreds of file-based datasets via Amazon Simple Storage Service (Amazon S3) offered by third parties such as Reuters, Foursquare, Change Healthcare, Vortexa, IMDb, and many more. Additionally, AWS Data Exchange for Amazon Redshift makes it even easier to ingest third-party data in your Amazon Redshift data warehouse, without any manual processing or transformation.

However, in many cases your data projects require more than static datasets because you need frequent and synchronous retrieval of small amounts of information – for example, you might need to fetch a stock price every hour. Data APIs let you answer specific questions quickly and without having to build ad-hoc data pipelines to ingest, process, and analyze bulk datasets. But each API provider has its own ease of use, SDK, documentation, and authentication mechanisms, which makes this harder than it needs to be.

Today, I’m happy to announce the general availability of AWS Data Exchange for APIs, a new capability that lets you find, subscribe to, and use third-party APIs with a consistent access using AWS SDKs, as well as consistent AWS-native authentication and governance. This simplifies the lives of developers and IT administrators who have to integrate and secure the access to multiple third-party APIs.

Now you can make RESTful or GraphQL API calls directly to AWS Data Exchange and receive synchronous responses that contain the information you need, using the AWS SDK in the programming language of your choice. We take care of integrating with the API provider, implementing proper authentication, managing the API subscription, and ensuring charges appear on your AWS bill. You can manage API access centrally with AWS Identity and Access Management (IAM).

As a data provider, you make your API discoverable by millions of AWS customers by listing it in the AWS Data Exchange catalog using an OpenAPI specification and fronting it with an Amazon API Gateway endpoint.

AWS Data Exchange for APIs in Action

First, I look for an API product in the AWS Data Exchange catalog, review its subscription terms, support information, and auto-renewal. Each API product might include multiple public or private subscription offers and periods.

I select Subscribe and a couple of minutes later I’m successfully subscribed.

Within the API product, I select an entitled data set and its latest revision.

Each API revision contains one or more API assets that correspond to a specific API endpoint and a unique Asset ARN.

AWS Data Exchange takes care of invoking API endpoints with the correct authentication.

All I need to do is check the Integration notes, which include instructions and code snippets based on the AWS Command Line Interface (CLI).

Of course, I could implement the very same API call with my favorite programming language using one of the AWS SDKs.

For example, here’s how I’d implement a simple wrapper function in Python:

import json

import boto3

# Credentials and Region come from the standard AWS configuration chain.
adx = boto3.client('dataexchange')

def get_api_response(path, method="GET", querystring=None, headers=None, body=None):
    """Send a request to the subscribed API asset through AWS Data Exchange."""
    return adx.send_api_asset(
        DataSetId="4b3fbabc31171662851531b8576a3411",
        RevisionId="e8e78e921af12c76499edc40f92e3082",
        AssetId="557d858c317efdfb5b6c9a2860ec4a03",
        Method=method,
        Path=path,
        # boto3 expects plain dicts of strings here, not URL-encoded strings.
        QueryStringParameters=querystring or {},
        RequestHeaders=headers or {},
        Body=json.dumps(body or {}),
    )

Please note that there are no hard-coded credentials in the code above because all the authorization happens via AWS Identity and Access Management (IAM).

And that’s how you make your first API call via AWS Data Exchange for APIs.
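Once the wrapper returns, the provider's payload still has to be decoded. The sketch below assumes the response is a dictionary with a string Body and a ResponseHeaders map, as I understand the boto3 send_api_asset call, and that the provider returns JSON. The parse_api_response helper and the sample payload are hypothetical and stand in for a real response.

```python
import json
from typing import Any, Dict, Optional


def parse_api_response(response: Dict[str, Any]) -> Optional[Any]:
    """Decode the JSON payload of a Data Exchange API response, if any."""
    body = response.get("Body") or ""
    if not body:
        # Some endpoints may answer with headers only.
        return None
    return json.loads(body)


# Hypothetical response, shaped like the dictionary the SDK call returns.
fake_response = {
    "Body": '{"symbol": "EXAMPLE", "price": 12.5}',
    "ResponseHeaders": {"Content-Type": "application/json"},
}
print(parse_api_response(fake_response))
```

In real use I would pass the return value of get_api_response straight into this helper and handle non-JSON bodies according to the provider's documentation.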

Available Today

AWS Data Exchange for APIs is generally available in all AWS Regions where AWS Data Exchange is available. We’re looking forward to helping you simplify and centralize the management and governance of third-party APIs while we take care of the undifferentiated heavy lifting for you.

Today you can start integrating third-party APIs such as Infutor, Variety Business Intelligence, IMDb, PeopleDataLabs, Neustar, Experian, Foursquare, PredictHQ, WeatherTrends International, and many more.

If you’re a developer, check out the new AWS Data Exchange for APIs documentation to learn more about subscribing and using APIs. If you’re an API provider, check out the new publishing documentation to learn more about publishing new APIs on the AWS Data Exchange catalog.

— Alex

Post Syndicated from original https://lwn.net/Articles/877062/rss

One of the key features of the extended BPF virtual machine is the verifier built into the kernel that ensures that all BPF programs are safe to run. BPF developers often see the verifier as a bit of a mixed blessing, though; while it can catch a lot of problems before they happen, it can also be hard to please. Comparisons with a well-meaning but rule-bound and picky bureaucracy would not be entirely misplaced. The bpf_loop() proposal from Joanne Koong is an attempt to make pleasing the BPF bureaucrats a bit easier for one type of loop construct.

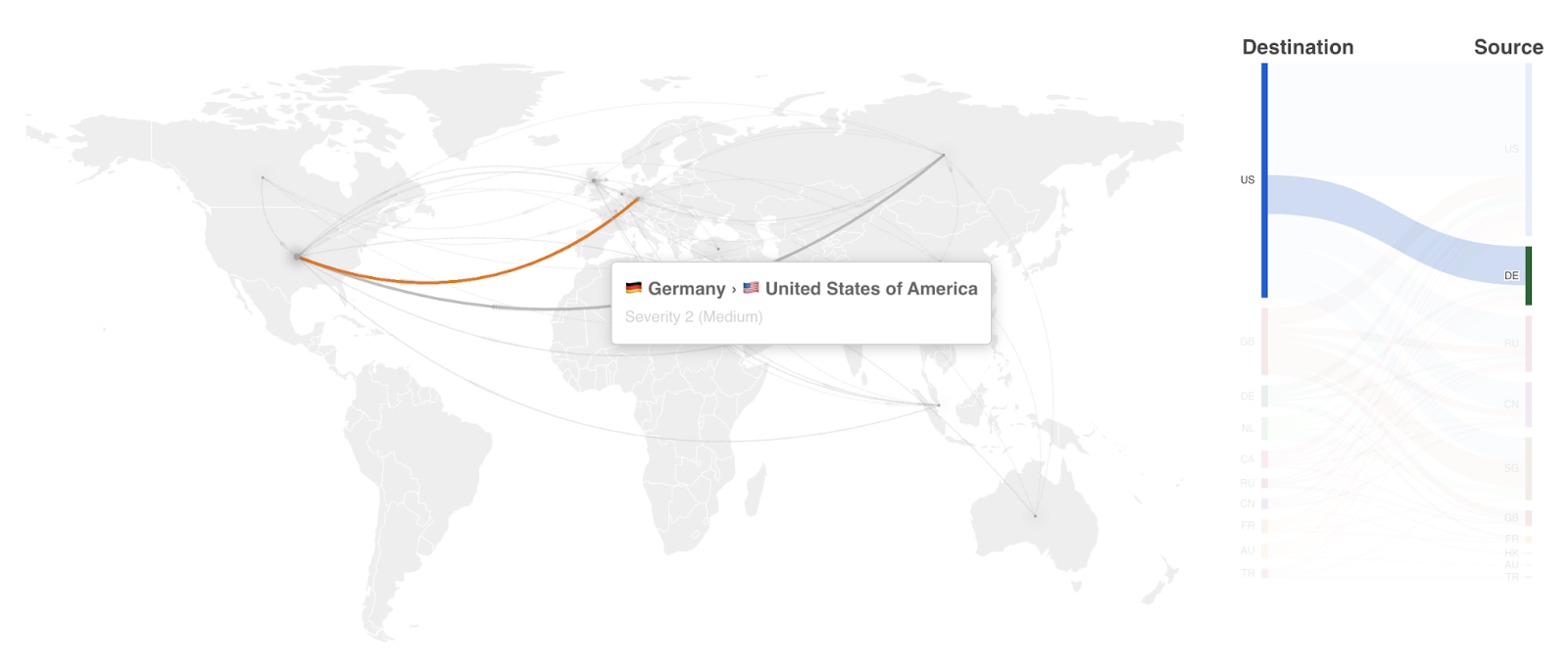

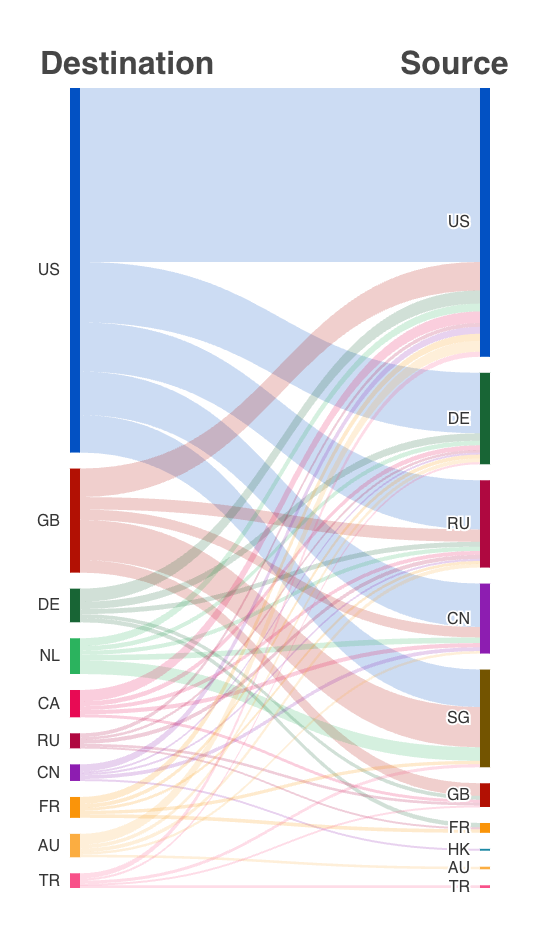

Post Syndicated from Joao Sousa Botto original https://blog.cloudflare.com/attack-maps-now-available-on-radar/

Cloudflare Radar launched as part of last year’s Birthday Week. We described it as a “newspaper for the Internet”, that gives “any digital citizen the chance to see what’s happening online [which] is part of our pursuit to help build a better, more informed, Internet”.

Since then, we have made considerable strides, including adding dedicated pages to cover how key events such as the UEFA Euro 2020 Championship and the Tokyo Olympics shaped Internet usage in participating countries, and added a Radar section for interactive deep-dive reports on topics such as DDoS.

Today, Radar has four main sections:

Cloudflare’s global network spans more than 250 cities in over 100 countries. Because of this, we have the unique ability to see both macro and micro trends happening online, including insights on how traffic is flowing around the world or what type of attacks are prevalent in a certain country.

Radar Maps will make this information even richer and easier to consume.

Starting today, Radar has two new data visualizations to help us share more insights from our data and represent what’s happening on the Internet.

Note: The identified location of the devices involved in the attack may not be the actual location of the people performing the attack.

Cyber threats are more common than ever. In the third quarter of 2021 Cloudflare blocked an average of 76 billion cyber threats each day and had visibility over many more. Helping build a better Internet also means giving people more visibility over our data. That’s why we’ve made a near real-time view of the types of attacks, protocol distribution, and attack volume over time available on Radar from day one.

Now we’re adding a geographical representation of origin and target of such attacks using two new visualizations.

First, we have a global map drawing near real-time directional lines of the attacks, also known as a “pew pew” map — thank you, 1983 and WarGames.

Second, we have Sankey diagrams that are great for representing how strongly the attacks are flowing from one country to the other.

We hope you like what we’ve built with our new Radar Maps. Radar, unlike any other insights platform out there, is totally built on Cloudflare components and our edge computing platform — Workers and Workers KV. This gives us new and unique ways of representing data at scale. So do keep checking back at radar.cloudflare.com to see the Internet evolving in (near) real-time.

Post Syndicated from Maddie Bacon original https://aws.amazon.com/blogs/security/aws-security-profiles-jenny-brinkley-director-aws-security/

In the week leading up to AWS re:Invent 2021, we’ll share conversations we’ve had with people at AWS who will be presenting, and get a sneak peek at their work.

I’ve been at AWS for 5½ years. I get to focus on the future of security and compliance. It gives me a lot of space to experiment and try new things, which is how I like to operate.

I joined AWS through a startup acquisition, and I actually didn’t think I was going to go with the acquisition. I thought AWS would be way too big and move way too slow. I love being in environments where I get to move fast and be entrepreneurial. I started on the product side. I was able to learn what it takes to build and ship products at the scale of AWS – which is on another level and mind-blowing.

Then, like others at AWS, I was able to reinvent myself, find different passions, and experiment with new things. One of those areas for me was compliance. I started to get perspective on how that space was being defined by regulatory activity for the cloud, and it started opening my mind in different ways.

I started thinking, how do you make compliance easier for customers? How do you work with regulated entities to understand how to audit, and to understand the function of how the cloud operates? From there, my career has been about changing how to think about product, about how to make security easier. Layering in this compliance aspect, too, means I get to play in all these different worlds, work with internal and external customers, and work to simplify security, while also understanding where and how compliance fits in, without slowing down innovation.

I explain my work as removing the fear around security. You go see images of people in hoodies, with darkened faces, and binary code running behind them, and my job is to break that perception and walk in the light – yes, that’s my nod to Olivia Pope in Scandal. I love the idea of that gladiator mentality. You’re going in and solving the big problems, but you’re also creating more visibility and transparency around how security operates. And you’re doing this without making anyone afraid that they’re being watched or monitored, and without holding back innovation. My job is to provide that transparency and clarity, and give people prescriptive guidance on how to operate securely on AWS.

So much! That’s what I really love about my job: I get to play in a lot of spaces, and the context switching is something that really fuels me. One of the top projects I’m working on is something we just released in response to an ask from the White House, which I feel really privileged to work on. We released a new Cybersecurity Awareness training which is now available to everyone in the world. You can access this training right now, and you can share it with your grandparents or implement it in your corporation or small business. We were able to take a training product we built for all Amazon employees and then externalize it. The size and scope are something I’m really excited about. Making security easier for everybody is a big mission for us.

Another big area is up-skilling. You hear a lot about security jobs being the future, so we’re building everything from apprenticeships to new learning paths for anyone interested in security. We’re thinking about how we can build quick learning modules for people to listen to on the go. That’s something I get really excited about in this job – creating opportunities for people to understand that security jobs and opportunities are vast. If you’re curious and want to learn new things, AWS is endless.

I am partnering with Eric Brandwine, AWS VP/Distinguished Engineer, for a session called Introverts and extroverts collide: Build an inclusive workforce (SEC204). Eric and I are night and day in terms of how we work. In our talk we’ll touch on some of the challenges we had when we first started working together, and on how we found value in our different approaches.

We’ll be discussing how he solves problems with technology and how I solve problems regarding people, and thinking about how that empathetic layer resonates between the two perspectives. Not every problem needs technology, and not every problem needs a people-focused solution. But humans are behind all of those aspects of impact.

We’ll give prescriptive guidance to customers on how they should think about their security culture as it relates to people and as it relates to technology. We’ll talk about how those two worlds can blend together in a way that empowers an entire organization to prioritize security rather than be afraid of it. We want to help bridge the gaps between the technologists and the empathetic individuals who think about how the technology lands in use cases across a business.

Listening. Sitting back, getting the feedback, being vulnerable, asking the questions. So much of what we need to do now is practice that listening skill, really understand the motivations of our teams, and then try to create these safe working environments where people feel comfortable sharing their perspectives. It’s not that you’re going to act on everything everyone’s talking about, but at least you get diverse perspectives and points of view to help create an inclusive work environment that makes everyone want to show up, support each other, and do the best work possible.

I have two. One is Learn and Be Curious because that is how I like to operate. I think, “what if…” or “why can’t we…”. Then Think Big pairs with “why can’t we…” The culture within AWS really supports that. On a daily basis, we can flip the script on how we think about our jobs and how we position the business.

If you’re entrepreneurial and like to create, this place is like a magic playground. Some people look at my job and they’re so confused by all the different things I get to do, but it goes back to that context switching. I believe that Learn and Be Curious and Think Big fit in that realm for me. I feel like I can be anything, I can do anything. I also had parents who told me as a kid that I could do anything and be anything, so I think that’s just who I am. Those two leadership principles help me to produce and do my best work.

That’s hard. It’s a couple of things. I’ve had a lot of incredible opportunities, one of which was being involved in a startup. We raised the money quickly, we worked with incredible customers, we solved really challenging business issues. The fact that I was able to bring that here to AWS, in a way that now hundreds of thousands of people get to see the kind of work we’re able to produce, is pretty cool.

But honestly, it’s working with some of our new hires who are just getting into the workforce, especially our diverse candidates. I’m at a place in my career where I want to create opportunities for others. I’m working to create safe spaces for people to operate and do their best work and really break down barriers for people who might not otherwise get those opportunities. That’s what I’m most excited about for the future, and also most proud of: giving people opportunities to work in careers they never thought were available to them. I love that, and I get to do it daily.

Sports agent. I think I’d be so good at it. I would love to go work with young athletes, especially with the new NCAA ruling that college athletes can get paid for the use of their likeness. I would love to help them develop really interesting business plans.

Post Syndicated from Albert Capdevila original https://aws.amazon.com/blogs/architecture/volotea-mro-modernization-in-aws/

Volotea is one of the fastest growing independent airlines in Europe, and has increased its fleet, routes, and number of available seats year over year. Volotea has already transported more than 30 million passengers across Europe since 2012, and has bases in 16 European capitals.

The maintenance, repair, and overhaul (MRO) application is a critical system for every airline. It’s used to manage the maintenance, repair, service, and inspection of aircraft. The main goal of an MRO application is to ensure the safety and airworthiness of the aircraft. Traditionally, those systems have been based on monolithic, packaged applications. However, these are difficult to scale and do not offer the benefit of elasticity to adapt to changing demand. Volotea migrated to Amazon Web Services (AWS) to modernize their MRO without refactoring the code. In this blog post, we’ll show you an architecture solution that can be applied to modernize an MRO (or similarly packaged monolithic application) without refactoring, and discuss some considerations.

Volotea’s MRO software previously ran in an on-premises data center. The system was based on Windows, an outdated database engine, and a virtual desktop system based on Citrix. Costs were fixed, yet MRO usage is typically seasonal. All the interfaces with other systems were based on an outdated communications protocol. This presented security concerns, especially considering that ransomware attacks are an increasing threat.

The main challenge for Volotea was adapting the MRO system to changing business requirements. Seasonal workloads and high impact projects, like changing fleets from Boeing to Airbus, require flexibility. The company also needed to adapt to the changing protocols necessitated by the COVID-19 pandemic, as airlines are one of the most impacted industries in Europe.

Volotea needed to modernize the operating system (OS) and database, simplify the end user application access, and increase the overall platform security, including integration with other applications.

Following Volotea’s cloud strategy, the MRO system was migrated in 2 months to AWS to reduce technology costs and gain higher operational performance, availability, security, and flexibility. The migration was not simply based on a lift-and-shift approach, but used an existing AWS reference architecture for the MRO system. This reference architecture incorporates AWS managed services to modernize the application without incurring refactoring costs.

Figure 1. Volotea MRO deployment in a multi-account architecture

As shown in the high-level architecture in Figure 1:

The application was deployed in Volotea’s AWS Landing Zone, which included the following services:

To make the systems management homogeneous, AWS Systems Manager and AWS Backup offered a single management point for the backup policies, system inventory, and patching.

Once this initial modernization is finished, Volotea will use the AWS reference architecture for high availability (HA) to increase resiliency. They’ll configure Amazon EC2 Auto Scaling with application failover to another Availability Zone, together with the database’s native replication mechanisms. This will use Elastic IP addresses to remap the endpoints in a failover scenario. This architecture can be easily implemented in AWS to add HA to applications that do not natively support horizontal scaling.
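
The Elastic IP remapping step can be sketched roughly as follows. This is a minimal illustration, not Volotea’s implementation: the function name, the injected client, and all identifiers are hypothetical. It assumes an object that behaves like a boto3 EC2 client, whose `associate_address` call accepts `AllocationId`, `InstanceId`, and `AllowReassociation`.

```python
def remap_elastic_ip(ec2_client, allocation_id, standby_instance_id):
    """Point an Elastic IP at the standby instance during a failover.

    ec2_client is expected to behave like boto3.client("ec2"); it is
    injected so the function can be exercised without AWS credentials.
    All names here are hypothetical and for illustration only.
    """
    response = ec2_client.associate_address(
        AllocationId=allocation_id,
        InstanceId=standby_instance_id,
        # Allow the address to move even though it is still associated
        # with the failed primary instance.
        AllowReassociation=True,
    )
    return response["AssociationId"]


class FakeEc2Client:
    """Stand-in for the real client, used only for a dry run."""

    def __init__(self):
        self.calls = []

    def associate_address(self, **kwargs):
        # Record the request and return a made-up association ID.
        self.calls.append(kwargs)
        return {"AssociationId": "eipassoc-example"}
```

Because clients keep connecting to the same public address, nothing on the client side has to change when the standby instance takes over. In a real deployment the call would be triggered by a health check or an Auto Scaling lifecycle event, which is outside the scope of this sketch.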

Volotea successfully modernized its MRO software, which has given them greater flexibility, elasticity, and the increased security of AWS services. They intend to continue with their digital transformation journey. Volotea is increasing its capacity to innovate faster to deliver new digital services more efficiently and with reduced IT costs. The AWS services and strategies discussed in this blog post can be applied to other similarly packaged applications to implement a first level of modernization with little effort and low migration risk.

Post Syndicated from Crosstalk Solutions original https://www.youtube.com/watch?v=FKeydDMz_AI

Post Syndicated from Steve Roberts original https://aws.amazon.com/blogs/aws/improved-automated-vulnerability-management-for-cloud-workloads-with-a-new-amazon-inspector/

Amazon Inspector is a service used by organizations of all sizes to automate security assessment and management at scale. Amazon Inspector helps organizations meet security and compliance requirements for workloads deployed to AWS, scanning for unintended network exposure, software vulnerabilities, and deviations from application security best practices.

Since the original launch of Amazon Inspector in 2015, vulnerability management for cloud customers has changed considerably. Over the last six years, the team delivered several new customer-requested features, including assessment reporting, support for proxy environments, and integration with Amazon CloudWatch Metrics. However, the team also recognized that there were new requirements to meet – enabling frictionless deployment at scale, support for an expanded set of resource types needing assessment, and a critical need to detect and remediate at speed. Today I’m happy to announce a new Amazon Inspector, able to meet these requirements with the following features:

Automatically Assessing your Workloads with Amazon Inspector

Tens of thousands of vulnerabilities exist, with new ones being discovered and made public on a regular basis. With this continually growing threat, manual assessment can lead to customers being unaware of an exposure and thus potentially vulnerable between assessments. Additionally, customers with manual processes for managing their inventories of application resources, the deployment of stand-alone security agents on those resources, and the scheduling of periodic assessments may find the whole process to be a costly and time-consuming exercise. That’s before they have to then sift through the mass of assessment findings to determine the most critical issues to address.

With the new Amazon Inspector, all you need to do is enable the service. It will auto-discover and start continual assessment of your EC2 and your Amazon Elastic Container Registry-based container workloads to evaluate your security posture, even as the underlying resources change.

EC2 instances are discovered and assessed for unintended exposure to external networks and software vulnerabilities using the Systems Manager agent, already included by default in images provided by AWS for instance management, automated patching, and more. Container-based workloads are assessed as the images are pushed to Amazon Elastic Container Registry. Without needing additional software or agents, container images and EC2 instances are assessed in near real time when an event occurs.

Automated assessment is driven by changes in workload configuration and newly published vulnerabilities to ensure resources are only assessed when needed. The new Amazon Inspector collects events from over 50 vulnerability intelligence sources, including CVE, the National Vulnerability Database (NVD), and MITRE. Images that may be affected by a newly identified entry, for example, a new CVE notification, will be automatically rescanned. Rescanning is enabled for each image for 30 days from the date it is pushed to the registry. You can also enable an option to only scan on image push and not subsequently perform rescans.
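
To make the rescanning rule concrete, here is a small sketch of the eligibility check it implies. It is not Amazon Inspector code: the function and parameter names are hypothetical, and treating the 30-day boundary as inclusive is an assumption, since the post does not say how the boundary is handled.

```python
from datetime import datetime, timedelta, timezone

# Window length as stated in the post.
RESCAN_WINDOW = timedelta(days=30)


def is_rescan_eligible(pushed_at, now, scan_on_push_only=False):
    """Return True if an image would still be rescanned on new CVEs.

    pushed_at and now are timezone-aware datetimes. The inclusive
    30-day boundary is an assumption made for this illustration.
    """
    if scan_on_push_only:
        # With the scan-on-push option, no later rescans happen.
        return False
    age = now - pushed_at
    return timedelta(0) <= age <= RESCAN_WINDOW


pushed = datetime(2021, 11, 1, tzinfo=timezone.utc)
print(is_rescan_eligible(pushed, datetime(2021, 11, 20, tzinfo=timezone.utc)))
print(is_rescan_eligible(pushed, datetime(2021, 12, 15, tzinfo=timezone.utc)))
```

The first call falls 19 days after the push and is inside the window; the second falls 44 days after the push and is outside it.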

Selecting either Accounts, Instances, or Repositories from your Dashboard page takes you to a detail summary for the selected resource. Below, I’m viewing summary data for EC2 instances across a couple of accounts.

If vulnerabilities are found, you receive actionable assessment findings in a report. Starting today, these findings are summarized with enhanced risk scoring and improved resource detail to help you prioritize the most at-risk resources needing to be addressed. Also new today, the Amazon Inspector console has been redesigned to surface all findings and recommendations for remediation.

Vulnerabilities in container images are also sent to Amazon Elastic Container Registry to be summarized for the owner. And, as I noted earlier, new integrations with AWS Security Hub and Amazon EventBridge allow findings to be sent downstream for additional visibility and remediation by automated workflows. For example, automation can be created to isolate instances, trigger system patching, software image rebuilds, and more. The availability of multiple integration points makes it easier for security and application teams to collaborate to manage remediation. Below, I’m viewing findings from Amazon Inspector in the AWS Security Hub console.
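
As a rough illustration of the automated workflows mentioned above, the sketch below pairs an Amazon EventBridge event pattern with a small routing function that picks a remediation step for each finding. The event fields (`source`, `detail-type`, `detail.severity`, `detail.resources[].type`) and their values are assumptions about the finding event format and should be checked against the Amazon Inspector documentation. The action labels are hypothetical placeholders for whatever automation a team builds.

```python
# Event pattern for an EventBridge rule. Field names and values are
# assumptions for illustration; verify them before use.
HIGH_SEVERITY_PATTERN = {
    "source": ["aws.inspector2"],
    "detail-type": ["Inspector2 Finding"],
    "detail": {"severity": ["HIGH", "CRITICAL"]},
}


def choose_action(event):
    """Map a finding event to a hypothetical remediation action label."""
    detail = event.get("detail", {})
    severity = detail.get("severity", "UNTRIAGED")
    resource_types = {r.get("type") for r in detail.get("resources", [])}

    if severity not in ("HIGH", "CRITICAL"):
        # Lower-severity findings are only recorded.
        return "log_only"
    if "AWS_EC2_INSTANCE" in resource_types:
        return "patch_instance"
    if "AWS_ECR_CONTAINER_IMAGE" in resource_types:
        return "rebuild_image"
    return "notify_security_team"


example_event = {
    "source": "aws.inspector2",
    "detail-type": "Inspector2 Finding",
    "detail": {
        "severity": "HIGH",
        "resources": [{"type": "AWS_EC2_INSTANCE", "id": "i-example"}],
    },
}
print(choose_action(example_event))
```

Keeping the routing decision in a pure function makes it easy to test before wiring it to a rule target such as an AWS Lambda function or a Systems Manager automation.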