Post Syndicated from Shekar Tippur original https://aws.amazon.com/blogs/big-data/create-a-secure-data-lake-by-masking-encrypting-data-and-enabling-fine-grained-access-with-aws-lake-formation/

You can build data lakes with millions of objects on Amazon Simple Storage Service (Amazon S3) and use AWS native analytics and machine learning (ML) services to process, analyze, and extract business insights. You can use a combination of our purpose-built databases and analytics services like Amazon EMR, Amazon Elasticsearch Service (Amazon ES), and Amazon Redshift as the right tool for your specific job and benefit from optimal performance, scale, and cost.

In this post, you learn how to create a secure data lake using AWS Lake Formation for processing sensitive data. The data (simulated patient metrics) is ingested through a serverless pipeline to identify, mask, and encrypt sensitive data before storing it securely in Amazon S3. After the data has been processed and stored, you use Lake Formation to define and enforce fine-grained access permissions to provide secure access for data analysts and data scientists.

Target personas

The proposed solution focuses on the following personas, with each one having different level of access:

- Cloud engineer – As the cloud infrastructure engineer, you implement the architecture but may not have access to the data itself or to define access permissions

- secure-lf-admin – As a data lake administrator, you configure the data lake setting and assign data stewards

- secure-lf-business-analyst – As a business analyst, you shouldn’t be able to access sensitive information

- secure-lf-data-scientist – As a data scientist, you shouldn’t be able to access sensitive information

Solution overview

We use the following AWS services for ingesting, processing, and analyzing the data:

- Amazon Athena is an interactive query service that can query data in Amazon S3 using standard SQL queries using tables in an AWS Glue Data Catalog. The data can be accessed via JDBC for further processing such as displaying in business intelligence (BI) dashboards.

- Amazon CloudWatch is a monitoring and observability service that provides you with data and actionable insights to monitor your applications, respond to system-wide performance changes, and more. The logs from AWS Glue jobs and AWS Lambda functions are saved in CloudWatch logs.

- Amazon Comprehend is a natural language processing (NLP) service that uses ML to uncover information in unstructured data.

- Amazon DynamoDB is a NoSQL database that delivers single-digit millisecond performance at any scale and is used to avoid processing duplicates files.

- AWS Glue is a serverless data preparation service that makes it easy to extract, transform, and load (ETL) data. An AWS Glue job encapsulates a script that reads, processes, and writes data to a new schema. This solution uses Python3.6 AWS Glue jobs for ETL processing.

- AWS IoT provides the cloud services that connect your internet of things (IoT) devices to other devices and AWS Cloud services.

- Amazon Kinesis Data Firehose is the easiest way to reliably load streaming data into data lakes, data stores, and analytics services.

- AWS Lake Formation makes it easy to set up, secure, and manage your data lake. With Lake Formation, you can discover, cleanse, transform, and ingest data into your data lake from various sources; define fine-grained permissions at the database, table, or column level; and share controlled access across analytic, ML, and ETL services.

- Amazon S3 is a scalable object storage service that hosts the raw data files and processed files in the data lake for millisecond access.

You can enhance the security of your sensitive data with the following methods:

- Implement encryption at rest using AWS Key Management Service (AWS KMS) and customer managed encryption keys

- Instrument AWS CloudTrail and audit logging

- Restrict access to AWS resources based on the least privilege principle

Architecture overview

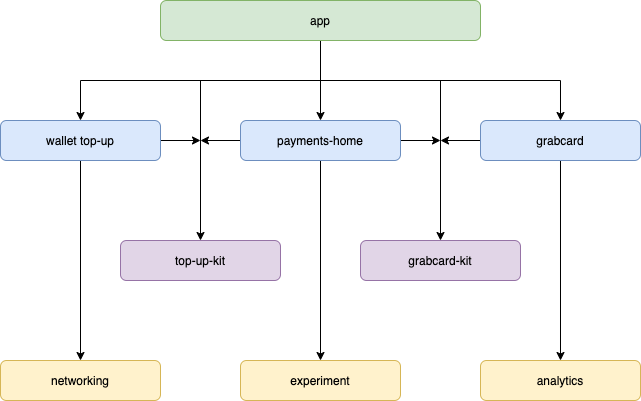

The solution emulates diagnostic devices sending Message Queuing Telemetry Transport (MQTT) messages onto an AWS IoT Core topic. We use Kinesis Data Firehose to preprocess and stage the raw data in Amazon S3. We then use AWS Glue for ETL to further process the data by calling Amazon Comprehend to identify any sensitive information. Finally, we use Lake Formation to define fine-grained permissions that restrict access to business analysts and data scientists who use Athena to query the data.

The following diagram illustrates the architecture for our solution.

Prerequisites

To follow the deployment walkthrough, you need an AWS account. Use us-east-1 or us-west-2 as your Region.

For this post, make sure you don’t have Lake Formation enabled in your AWS account.

Stage the data

Download the zipped archive file to use for this solution and unzip the files locally. patient.csv file is dummy data created to help demonstrate masking, encryption, and granting fine-grained access. The send-messages.sh script randomly generates simulated diagnostic data to represent body vitals. AWS Glue job uses glue-script.py script to perform ETL that detects sensitive information, masks/encrypt data, and populates curated table in AWS Glue catalog.

Create an S3 bucket called secure-datalake-scripts-<ACCOUNT_ID> via the Amazon S3 console. Upload the scripts and CSV files to this location.

Deploy your resources

For this post, we use AWS CloudFormation to create our data lake infrastructure.

- Choose Launch Stack:

- Select I acknowledge that AWS CloudFormation might create IAM resources with custom names before deploying.

The stack takes approximately 5 minutes to complete.

The following screenshot shows the key-values the stack created. We use the TestUserPassword parameter for the Lake Formation personas to sign in to the AWS Management Console.

Load the simulation data

Sign in to the AWS CloudShell console and wait for the terminal to start.

Stage the send-messages.sh script by running the Amazon S3 copy command:

aws s3 cp s3://secure-datalake-scripts-<ACCOUNT_ID>/send-messages.sh

Run your script by using the following command:

The script runs for a few minutes and emits 300 messages. This sends MQTT messages to the secure_iot_device_analytics topic, filtered using IoT rules, processed using Kinesis Data Firehose, and converted to Parquet format. After a minute, data starts showing up in the raw bucket.

Run the AWS Glue ETL pipeline

Run AWS Glue workflow (secureGlueWorkflow) from the AWS Glue console; you can also schedule to run this using CloudWatch. It takes approximately 10 minutes to complete.

The AWS Glue job that is triggered as part of the workflow (ProcessSecureData) joins the patient metadata and patient metrics data. See the following code:

# Join Patient metadata and patient metrics dataframe

combined_df=Join.apply(patient_metadata, patient_metrics, 'PatientId', 'pid', transformation_ctx = "combined_df")

The ensuing dataframe contains sensitive information like FirstName, LastName, DOB, Address1, Address2, and AboutYourself. AboutYourself is freeform text entered by the patient during registration. In the following code snippet, the detect_sensitive_info function calls the Amazon Comprehend API to identify personally identifiable information (PII):

# Apply groupBy to get unique AboutYourself records

group=combined_df.toDF().groupBy("pid","DOB", "FirstName", "LastName", "Address1", "Address2", "AboutYourself").count()

# Apply detect_sensitive_info to get the redacted string after masking PII data

df_with_about_yourself = Map.apply(frame = group_df, f = detect_sensitive_info)

# Apply encryption to the identified fields

df_with_about_yourself_encrypted = Map.apply(frame = group_df, f = encrypt_rows)

Amazon Comprehend returns an object that has information about the entity name and entity type. Based on your needs, you can filter the entity types that need to be masked.

These fields are masked, encrypted, and written to their respective S3 buckets where fine-grained access controls are applied via Lake Formation:

- Masked data –

s3://secure-data-lake-masked-<ACCOUNT_ID>

secure-dl-masked-data/

- Encrypted data –

s3://secure-data-lake-masked-<ACCOUNT_ID>

secure-dl-encrypted-data/

- Curated data –

s3://secure-data-lake-<ACCOUNT_ID>

secure-dl-curated-data/

Now that the tables have been defined, we review permissions using Lake Formation.

Enable Lake Formation fine-grained access

To enable fine-grained access, we first add a Lake Formation admin user.

- On the Lake Formation console, select Add other AWS users or roles.

- On the drop-down menu, choose secure-lf-admin.

- Choose Get started.

- In the navigation pane, choose Settings.

- On the Data Catalog Settings page, deselect Use only IAM access control for new databases and Use only IAM access control for new tables in new databases.

- Choose Save.

Grant access to different personas

Before we grant permissions to different user personas, let’s register the S3 locations in Lake Formation so these personas can access S3 data without granting access through AWS Identity and Access Management (IAM).

- On the Lake Formation console, choose Register and ingest in the navigation pane.

- Choose Data lake locations.

- Choose Register location.

- Find and select each of the following S3 buckets and choose Register location:

s3://secure-raw-bucket-<ACCOUNT_ID>/temp-raw-tables3://secure-data-lake-masked-<ACCOUNT_ID>/secure-dl-encrypted-datas3://secure-data-lake-<ACCOUNT_ID>/secure-dl-curated-datas3://secure-data-lake-masked-<ACCOUNT_ID>/secure-dl-masked-data

We’re now ready to grant access to our different users.

Grant read-only access to all the tables to secure-lf-admin

First, we grant read-only access to all the tables for the user secure-lf-admin.

- Sign in to the console with

secure-lf-admin (use the password value for TestUserPassword from the CloudFormation stack) and make sure you’re in the same Region.

- Navigate to AWS Lake Formation console

- Under Data Catalog, choose Databases.

- Select the database

secure-db.

- On the Actions drop-down menu, choose Grant.

- Select IAM users and roles.

- Choose the role

secure-lf-admin.

- Under Policy tags or catalog resources, select Named data catalog resources.

- For Database, choose the database

secure-db.

- For Tables, choose All tables.

- Under Permissions, select Table permissions.

- For Table permissions, select Super.

- Choose Grant.

- Choosesecure_dl_curated_data table.

- On the Actions drop-down menu, chose View permissions.

- Check IAMAllowedPrincipals and select Revoke and click on Revoke button.

You can confirm your user permissions on the Data Permissions page.

Grant read-only access to secure-lf-business-analyst

Now we grant read-only access to certain encrypted columns to the user secure-lf-business-analyst.

- On the Lake Formation console, under Data Catalog, choose Databases.

- Select the database

secure-db and choose View tables.

- Select the table

secure_dl_encrypted_data.

- On the Actions drop-down menu, choose Grant.

- Select IAM users and roles.

- Choose the role

secure-lf-business-analyst.

- Under Permissions, select Column-based permissions.

- Choose the following columns:

countaddress1_encryptedfirstname_encryptedaddress2_encrypteddob_encryptedlastname_encrypted

- For Grantable permissions, select Select.

- Choose Grant.

- Chose secure_dl_encrypted_data table.

- On the Actions drop-down menu, chose View permissions.

- Check IAMAllowedPrincipals and select Revoke and click on Revoke button.

You can confirm your user permissions on the Data Permissions page.

Grant read-only access to secure-lf-data-scientist

Lastly, we grant read-only access to masked data to the user secure-lf-data-scientist.

- On the Lake Formation console, under Data Catalog, choose Databases.

- Select the database

secure-db and choose View tables.

- Select the table

secure_dl_masked_data.

- On the Actions drop-down menu, choose Grant.

- Select IAM users and roles.

- Choose the role

secure-lf-data-scientist.

- Under Permissions, select Table permissions.

- For Table permissions, select Select.

- Choose Grant.

- Under Data Catalog, chose Tables.

- Chose secure_dl_masked_data table.

- On the Actions drop-down menu, chose View permissions.

- Check IAMAllowedPrincipals and select Revoke and click on Revoke button.

You can confirm your user permissions on the Data Permissions page.

Query the data lake using Athena from different personas

To validate the permissions of different personas, we use Athena to query against the S3 data lake.

Make sure you set the query result location to the location created as part of the CloudFormation stack (secure-athena-query-<ACCOUNT_ID>). The following screenshot shows the location information in the Settings section on the Athena console.

You can see all the tables listed under secure-db.

- Sign in to the console with

secure-lf-admin (use the password value for TestUserPassword from the CloudFormation stack) and make sure you’re in the same Region.

- Navigate to Athena Console.

- Run a SELECT query against the

secure_dl_curated_data

The user secure-lf-admin should see all the columns with encryption or masking.

Now let’s validate the permissions of secure-lf-business-analyst user.

- Sign in to the console with

secure-lf-business-analyst.

- Navigate to Athena console.

- Run a SELECT query against the

secure_dl_encrypted_data table.

The secure-lf-business-analyst user can only view the selected encrypted columns.

Lastly, let’s validate the permissions of secure-lf-data-scientist.

- Sign in to the console with

secure-lf-data-scientist.

- Run a SELECT query against the

secure_dl_masked_data table.

The secure-lf-data-scientist user can only view the selected masked columns.

If you try to run a query on different tables, such as secure_dl_curated_data, you get an error message for insufficient permissions.

Clean up

To avoid unexpected future charges, delete the CloudFormation stack.

Conclusion

In this post, we presented a potential solution for processing and storing sensitive data workloads in an S3 data lake. We demonstrated how to build a data lake on AWS to ingest, transform, aggregate, and analyze data from IoT devices in near-real time. This solution also demonstrates how you can mask and encrypt sensitive data, and use fine-grained column-level security controls with Lake Formation, which benefits those with a higher level of security needs.

Lake Formation recently announced the preview for row-level access; and you can sign up for the preview now!

About the Authors

Shekar Tippur is an AWS Partner Solutions Architect. He specializes in machine learning and analytics workloads. He has been helping partners and customers adopt best practices and discover insights from data.

Shekar Tippur is an AWS Partner Solutions Architect. He specializes in machine learning and analytics workloads. He has been helping partners and customers adopt best practices and discover insights from data.

Ramakant Jos hi is an AWS Solution Architect, specializing in the analytics and serverless domain. He has over 20 years of software development and architecture experience, and is passionate about helping customers in their cloud journey.

hi is an AWS Solution Architect, specializing in the analytics and serverless domain. He has over 20 years of software development and architecture experience, and is passionate about helping customers in their cloud journey.

Navnit Shukla is AWS Specialist Solution Architect, Analytics, and is passionate about helping customers uncover insights from their data. He has been building solutions to help organizations make data-driven decisions.

Navnit Shukla is AWS Specialist Solution Architect, Analytics, and is passionate about helping customers uncover insights from their data. He has been building solutions to help organizations make data-driven decisions.

comments