Post Syndicated from Explosm.net original https://explosm.net/comics/fashion

New Cyanide and Happiness Comic

Post Syndicated from Sheila Busser original https://aws.amazon.com/blogs/compute/optimizing-gpu-utilization-for-ai-ml-workloads-on-amazon-ec2/

This blog post is written by Ben Minahan, DevOps Consultant, and Amir Sotoodeh, Machine Learning Engineer.

Machine learning workloads can be costly, and artificial intelligence/machine learning (AI/ML) teams can have a difficult time tracking and maintaining efficient resource utilization. ML workloads often utilize GPUs extensively, so typical application performance metrics such as CPU, memory, and disk usage don’t paint the full picture when it comes to system performance. Additionally, data scientists conduct long-running experiments and model training activities on existing compute instances that fit their unique specifications. Forcing these experiments to be run on newly provisioned infrastructure with proper monitoring systems installed might not be a viable option.

In this post, we describe how to track GPU utilization across all of your AI/ML workloads and enable accurate capacity planning without needing teams to use a custom Amazon Machine Image (AMI) or to re-deploy their existing infrastructure. You can use Amazon CloudWatch to track GPU utilization, and leverage AWS Systems Manager Run Command to install and configure the agent across your existing fleet of GPU-enabled instances.

First, make sure that your existing Amazon Elastic Compute Cloud (Amazon EC2) instances have the Systems Manager Agent installed, and also have the appropriate level of AWS Identity and Access Management (IAM) permissions to run the Amazon CloudWatch Agent. Next, specify the configuration for the CloudWatch Agent in Systems Manager Parameter Store, and then deploy the CloudWatch Agent to your GPU-enabled EC2 instances. Finally, create a CloudWatch Dashboard to analyze GPU utilization.

This post assumes you already have GPU-enabled EC2 workloads running in your AWS account. If the EC2 instance doesn’t have any GPUs, then the custom configuration won’t be applied to the CloudWatch Agent. Instead, the default configuration is used. For those instances, leveraging the CloudWatch Agent’s default configuration is better suited for tracking resource utilization.

For the CloudWatch Agent to collect your instance’s GPU metrics, the proper NVIDIA drivers must be installed on your instance. Several AWS official AMIs including the Deep Learning AMI already have these drivers installed. To see a list of AMIs with the NVIDIA drivers pre-installed, and for full installation instructions for Linux-based instances, see Install NVIDIA drivers on Linux instances.

Additionally, deploying and managing the CloudWatch Agent requires the instances to be running. If your instances are currently stopped, then you must start them to follow the instructions outlined in this post.

You use Systems Manager to deploy the CloudWatch Agent, so make sure that your EC2 instances have the Systems Manager Agent installed. Many AWS-provided AMIs already have the Systems Manager Agent installed. For a full list of the AMIs that have the Systems Manager Agent pre-installed, see Amazon Machine Images (AMIs) with SSM Agent preinstalled. If your AMI doesn’t have the Systems Manager Agent installed, see Working with SSM Agent for instructions on installing it for your operating system (OS).

Once installed, the CloudWatch Agent needs certain permissions to accept commands from Systems Manager, read Systems Manager Parameter Store entries, and publish metrics to CloudWatch. These permissions are bundled into the managed IAM policies AmazonEC2RoleforSSM, AmazonSSMReadOnlyAccess, and CloudWatchAgentServerPolicy. To create a new IAM role and associated IAM instance profile with these policies attached, you can run the following AWS Command Line Interface (AWS CLI) commands, replacing <REGION_NAME> with your AWS region, and <INSTANCE_ID> with the EC2 Instance ID that you want to associate with the instance profile:

aws iam create-role --role-name CloudWatch-Agent-Role --assume-role-policy-document '{"Statement":{"Effect":"Allow","Principal":{"Service":"ec2.amazonaws.com"},"Action":"sts:AssumeRole"}}'

aws iam attach-role-policy --role-name CloudWatch-Agent-Role --policy-arn arn:aws:iam::aws:policy/service-role/AmazonEC2RoleforSSM

aws iam attach-role-policy --role-name CloudWatch-Agent-Role --policy-arn arn:aws:iam::aws:policy/AmazonSSMReadOnlyAccess

aws iam attach-role-policy --role-name CloudWatch-Agent-Role --policy-arn arn:aws:iam::aws:policy/CloudWatchAgentServerPolicy

aws iam create-instance-profile --instance-profile-name CloudWatch-Agent-Instance-Profile

aws iam add-role-to-instance-profile --instance-profile-name CloudWatch-Agent-Instance-Profile --role-name CloudWatch-Agent-Role

aws ec2 associate-iam-instance-profile --region <REGION_NAME> --instance-id <INSTANCE_ID> --iam-instance-profile Name=CloudWatch-Agent-Instance-Profile

Alternatively, you can attach the IAM policies to your existing IAM role associated with an existing IAM instance profile.

aws iam attach-role-policy --role-name <ROLE_NAME> --policy-arn arn:aws:iam::aws:policy/service-role/AmazonEC2RoleforSSM

aws iam attach-role-policy --role-name <ROLE_NAME> --policy-arn arn:aws:iam::aws:policy/AmazonSSMReadOnlyAccess

aws iam attach-role-policy --role-name <ROLE_NAME> --policy-arn arn:aws:iam::aws:policy/CloudWatchAgentServerPolicy

aws ec2 associate-iam-instance-profile --region <REGION_NAME> --instance-id <INSTANCE_ID> --iam-instance-profile Name=<INSTANCE_PROFILE>

Once complete, you should see that your EC2 instance is associated with the appropriate IAM role.

This role should have the AmazonEC2RoleforSSM, AmazonSSMReadOnlyAccess and CloudWatchAgentServerPolicy IAM policies attached.
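If you manage several roles, hand-quoting the trust policy and repeating the attach commands is easy to get wrong. The following is a minimal sketch, not part of the original walkthrough, that builds the trust policy with `json.dumps` and prints the three `attach-role-policy` commands for a given role. The function names are hypothetical, and the `Version` element is an addition that the inline policy document above omits.

```python
import json
import shlex

# Managed policy ARNs copied from the commands in this post.
MANAGED_POLICY_ARNS = [
    "arn:aws:iam::aws:policy/service-role/AmazonEC2RoleforSSM",
    "arn:aws:iam::aws:policy/AmazonSSMReadOnlyAccess",
    "arn:aws:iam::aws:policy/CloudWatchAgentServerPolicy",
]


def ec2_trust_policy() -> str:
    """Return the assume-role policy for EC2 as a JSON string (hypothetical helper)."""
    policy = {
        "Version": "2012-10-17",  # added in this sketch; not in the inline document above
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ec2.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }
    return json.dumps(policy)


def attach_policy_commands(role_name: str) -> list:
    """Build one attach-role-policy command line per managed policy."""
    quoted = shlex.quote(role_name)  # guards against spaces or shell metacharacters
    return [
        f"aws iam attach-role-policy --role-name {quoted} --policy-arn {arn}"
        for arn in MANAGED_POLICY_ARNS
    ]


if __name__ == "__main__":
    print(ec2_trust_policy())
    for command in attach_policy_commands("CloudWatch-Agent-Role"):
        print(command)
```

The sketch only prints commands; review them before running anything against your account.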

Before deploying the CloudWatch Agent onto your EC2 instances, make sure that the agent is properly configured to collect GPU metrics. To do this, you must create a CloudWatch Agent configuration and store it in Systems Manager Parameter Store.

Copy the following into a file cloudwatch-agent-config.json:

{

"agent": {

"metrics_collection_interval": 60,

"run_as_user": "cwagent"

},

"metrics": {

"aggregation_dimensions": [

[

"InstanceId"

]

],

"append_dimensions": {

"AutoScalingGroupName": "${aws:AutoScalingGroupName}",

"ImageId": "${aws:ImageId}",

"InstanceId": "${aws:InstanceId}",

"InstanceType": "${aws:InstanceType}"

},

"metrics_collected": {

"cpu": {

"measurement": [

"cpu_usage_idle",

"cpu_usage_iowait",

"cpu_usage_user",

"cpu_usage_system"

],

"metrics_collection_interval": 60,

"resources": [

"*"

],

"totalcpu": false

},

"disk": {

"measurement": [

"used_percent",

"inodes_free"

],

"metrics_collection_interval": 60,

"resources": [

"*"

]

},

"diskio": {

"measurement": [

"io_time"

],

"metrics_collection_interval": 60,

"resources": [

"*"

]

},

"mem": {

"measurement": [

"mem_used_percent"

],

"metrics_collection_interval": 60

},

"swap": {

"measurement": [

"swap_used_percent"

],

"metrics_collection_interval": 60

},

"nvidia_gpu": {

"measurement": [

"utilization_gpu",

"temperature_gpu",

"utilization_memory",

"fan_speed",

"memory_total",

"memory_used",

"memory_free",

"pcie_link_gen_current",

"pcie_link_width_current",

"encoder_stats_session_count",

"encoder_stats_average_fps",

"encoder_stats_average_latency",

"clocks_current_graphics",

"clocks_current_sm",

"clocks_current_memory",

"clocks_current_video"

],

"metrics_collection_interval": 60

}

}

}

}

Run the following AWS CLI command to deploy a Systems Manager Parameter CloudWatch-Agent-Config, which contains a minimal agent configuration for GPU metrics collection. Replace <REGION_NAME> with your AWS Region.

aws ssm put-parameter \

--region <REGION_NAME> \

--name CloudWatch-Agent-Config \

--type String \

--value file://cloudwatch-agent-config.json

Now you can see a CloudWatch-Agent-Config parameter in Systems Manager Parameter Store, containing your CloudWatch Agent’s JSON configuration.
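A malformed or incomplete configuration file only shows up later as missing metrics, so it can help to sanity-check the file locally before uploading it. The following is a minimal sketch under stated assumptions: the helper names are hypothetical, and the three "required" measurements are simply the ones the sample dashboard later in this post queries (as `nvidia_smi_utilization_gpu`, `nvidia_smi_memory_used`, and `nvidia_smi_memory_total`).

```python
import json

# Measurements the sample dashboard in this post relies on (an assumption of
# this sketch, not a requirement of the CloudWatch Agent itself).
REQUIRED_GPU_MEASUREMENTS = {"utilization_gpu", "memory_used", "memory_total"}


def find_gpu_config_problems(config: dict) -> list:
    """Return human-readable problems found in an agent configuration dict."""
    collected = config.get("metrics", {}).get("metrics_collected", {})
    gpu = collected.get("nvidia_gpu")
    if gpu is None:
        return ["metrics.metrics_collected.nvidia_gpu is missing"]
    measurements = set(gpu.get("measurement", []))
    return [
        f"nvidia_gpu.measurement lacks {name}"
        for name in sorted(REQUIRED_GPU_MEASUREMENTS - measurements)
    ]


def load_and_check(path: str) -> list:
    """Parse the JSON file (raises ValueError if malformed) and check it."""
    with open(path, encoding="utf-8") as handle:
        return find_gpu_config_problems(json.load(handle))


if __name__ == "__main__":
    for problem in load_and_check("cloudwatch-agent-config.json"):
        print(problem)
```

An empty result means the file parsed as JSON and contains the GPU measurements the dashboard expects; it does not validate the configuration against the agent's full schema.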

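Next, install the CloudWatch Agent on your EC2 instances. To do this, you can leverage Systems Manager Run Command, specifically the AWS-ConfigureAWSPackage document, which automates the CloudWatch Agent installation.

1. Run the following AWS CLI command, replacing <REGION_NAME> with your AWS Region and <INSTANCE_ID> with your EC2 instance ID: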

aws ssm send-command \

--query 'Command.CommandId' \

--region <REGION_NAME> \

--instance-ids <INSTANCE_ID> \

--document-name AWS-ConfigureAWSPackage \

--parameters '{"action":["Install"],"installationType":["In-place update"],"version":["latest"],"name":["AmazonCloudWatchAgent"]}'

2. To monitor the status of your command, use the get-command-invocation AWS CLI command. Replace <COMMAND_ID> with the command ID output from the previous step, <REGION_NAME> with your AWS Region, and <INSTANCE_ID> with your EC2 instance ID.

aws ssm get-command-invocation --query Status --region <REGION_NAME> --command-id <COMMAND_ID> --instance-id <INSTANCE_ID>

3. Wait for the command to show the status Success before proceeding.
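Waiting for Success by hand gets tedious across a fleet, so the wait can be scripted as a polling loop. The following is a minimal sketch, not the post's method: `get_status` is a hypothetical callable that you would implement to run the `get-command-invocation` command above and return its status string, and the set of terminal statuses is an assumption to adjust against the output you actually see.

```python
import time

# Statuses treated as final in this sketch (an assumption; other values such
# as "Pending" or "InProgress" are treated as still running).
TERMINAL_STATUSES = {"Success", "Failed", "Cancelled", "TimedOut"}


def wait_for_command(get_status, attempts=30, delay_seconds=5.0, sleep=time.sleep):
    """Poll get_status() until it returns a terminal status.

    get_status: hypothetical zero-argument callable returning the status string.
    Raises TimeoutError if no terminal status is seen within `attempts` checks.
    """
    status = None
    for attempt in range(attempts):
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        if attempt < attempts - 1:
            sleep(delay_seconds)  # pause between checks, not after the last one
    raise TimeoutError(f"command still {status!r} after {attempts} checks")
```

Passing `sleep` as a parameter keeps the loop easy to exercise without real delays; callers should still treat any returned status other than Success as a failure.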

$ aws ssm send-command \

--query 'Command.CommandId' \

--region us-east-2 \

--instance-ids i-0123456789abcdef \

--document-name AWS-ConfigureAWSPackage \

--parameters '{"action":["Install"],"installationType":["Uninstall and reinstall"],"version":["latest"],"additionalArguments":["{}"],"name":["AmazonCloudWatchAgent"]}'

"5d8419db-9c48-434c-8460-0519640046cf"

$ aws ssm get-command-invocation --query Status --region us-east-2 --command-id 5d8419db-9c48-434c-8460-0519640046cf --instance-id i-0123456789abcdef

"Success"

Repeat this process for all EC2 instances on which you want to install the CloudWatch Agent.

Next, configure the CloudWatch Agent installation. For this, once again leverage Systems Manager Run Command. This time, use the AmazonCloudWatch-ManageAgent document, which applies the custom agent configuration stored in Systems Manager Parameter Store to your deployed agents.

1. Run the following AWS CLI command, replacing <REGION_NAME> with your AWS Region and <INSTANCE_ID> with your EC2 instance ID:

aws ssm send-command \

--query 'Command.CommandId' \

--region <REGION_NAME> \

--instance-ids <INSTANCE_ID> \

--document-name AmazonCloudWatch-ManageAgent \

--parameters '{"action":["configure"],"mode":["ec2"],"optionalConfigurationSource":["ssm"],"optionalConfigurationLocation":["/CloudWatch-Agent-Config"],"optionalRestart":["yes"]}'

2. To monitor the status of your command, use the get-command-invocation AWS CLI command. Replace <COMMAND_ID> with the command ID output from the previous step, <REGION_NAME> with your AWS Region, and <INSTANCE_ID> with your EC2 instance ID.

aws ssm get-command-invocation --query Status --region <REGION_NAME> --command-id <COMMAND_ID> --instance-id <INSTANCE_ID>

3. Wait for the command to show the status Success before proceeding.

$ aws ssm send-command \

--query 'Command.CommandId' \

--region us-east-2 \

--instance-ids i-0123456789abcdef \

--document-name AmazonCloudWatch-ManageAgent \

--parameters '{"action":["configure"],"mode":["ec2"],"optionalConfigurationSource":["ssm"],"optionalConfigurationLocation":["/CloudWatch-Agent-Config"],"optionalRestart":["yes"]}'

"9a4a5c43-0795-4fd3-afed-490873eaca63"

$ aws ssm get-command-invocation --query Status --region us-east-2 --command-id 9a4a5c43-0795-4fd3-afed-490873eaca63 --instance-id i-0123456789abcdef

"Success"

Repeat this process for all EC2 instances on which you want to install the CloudWatch Agent. Once finished, the CloudWatch Agent installation and configuration is complete, and your EC2 instances now report GPU metrics to CloudWatch.

Now that your GPU-enabled EC2 Instances are publishing their utilization metrics to CloudWatch, you can visualize and analyze these metrics to better understand your resource utilization patterns.

The GPU metrics collected by the CloudWatch Agent are within the CWAgent namespace. Explore your GPU metrics using the CloudWatch Metrics Explorer, or deploy our provided sample dashboard.

1. Copy the following into a file cloudwatch-dashboard.json:

{

"widgets": [

{

"height": 10,

"width": 24,

"y": 16,

"x": 0,

"type": "metric",

"properties": {

"metrics": [

[{"expression": "SELECT AVG(nvidia_smi_utilization_gpu) FROM SCHEMA(\"CWAgent\", InstanceId) GROUP BY InstanceId","id": "q1"}]

],

"view": "timeSeries",

"stacked": false,

"region": "<REGION_NAME>",

"stat": "Average",

"period": 300,

"title": "GPU Core Utilization",

"yAxis": {

"left": {"label": "Percent","max": 100,"min": 0,"showUnits": false}

}

}

},

{

"height": 7,

"width": 8,

"y": 0,

"x": 0,

"type": "metric",

"properties": {

"metrics": [

[{"expression": "SELECT AVG(nvidia_smi_utilization_gpu) FROM SCHEMA(\"CWAgent\", InstanceId)", "label": "Utilization","id": "q1"}]

],

"view": "gauge",

"stacked": false,

"region": "<REGION_NAME>",

"stat": "Average",

"period": 300,

"title": "Average GPU Core Utilization",

"yAxis": {"left": {"max": 100, "min": 0}

},

"liveData": false

}

},

{

"height": 9,

"width": 24,

"y": 7,

"x": 0,

"type": "metric",

"properties": {

"metrics": [

[{ "expression": "SEARCH(' MetricName=\"nvidia_smi_memory_used\" {\"CWAgent\", InstanceId} ', 'Average')", "id": "m1", "visible": false }],

[{ "expression": "SEARCH(' MetricName=\"nvidia_smi_memory_total\" {\"CWAgent\", InstanceId} ', 'Average')", "id": "m2", "visible": false }],

[{ "expression": "SEARCH(' MetricName=\"mem_used_percent\" {CWAgent, InstanceId} ', 'Average')", "id": "m3", "visible": false }],

[{ "expression": "100*AVG(m1)/AVG(m2)", "label": "GPU", "id": "e2", "color": "#17becf" }],

[{ "expression": "AVG(m3)", "label": "RAM", "id": "e3" }]

],

"view": "timeSeries",

"stacked": false,

"region": "<REGION_NAME>",

"stat": "Average",

"period": 300,

"yAxis": {

"left": {"min": 0,"max": 100,"label": "Percent","showUnits": false}

},

"title": "Average Memory Utilization"

}

},

{

"height": 7,

"width": 8,

"y": 0,

"x": 8,

"type": "metric",

"properties": {

"metrics": [

[ { "expression": "SEARCH(' MetricName=\"nvidia_smi_memory_used\" {\"CWAgent\", InstanceId} ', 'Average')", "id": "m1", "visible": false } ],

[ { "expression": "SEARCH(' MetricName=\"nvidia_smi_memory_total\" {\"CWAgent\", InstanceId} ', 'Average')", "id": "m2", "visible": false } ],

[ { "expression": "100*AVG(m1)/AVG(m2)", "label": "Utilization", "id": "e2" } ]

],

"sparkline": true,

"view": "gauge",

"region": "<REGION_NAME>",

"stat": "Average",

"period": 300,

"yAxis": {

"left": {"min": 0,"max": 100}

},

"liveData": false,

"title": "GPU Memory Utilization"

}

}

]

}
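The two memory widgets above compute `100*AVG(m1)/AVG(m2)`. As we read that expression, it averages used memory and total memory across the matched series first and then divides, which is a ratio of averages rather than an average of per-GPU ratios. The following is a minimal sketch of that arithmetic with made-up numbers; the function name is hypothetical and the values stand in for `nvidia_smi_memory_used` and `nvidia_smi_memory_total` samples.

```python
def gpu_memory_utilization(used, total):
    """Percent utilization as 100 * average(used) / average(total).

    used, total: equal-length lists with one sample per GPU, in the same unit.
    """
    if len(used) != len(total) or not used:
        raise ValueError("need one used/total pair per GPU")
    average_used = sum(used) / len(used)
    average_total = sum(total) / len(total)
    return 100 * average_used / average_total


if __name__ == "__main__":
    # Two hypothetical GPUs with 16000 units of memory each:
    # one lightly loaded, one heavily loaded.
    print(gpu_memory_utilization([4000, 12000], [16000, 16000]))  # 50.0
```

When every GPU has the same total memory, both ways of averaging agree; on a mixed fleet the ratio of averages weights larger GPUs more heavily, which is worth keeping in mind when reading the gauge.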

2. Run the following AWS CLI command, replacing <REGION_NAME> with the name of your Region:

aws cloudwatch put-dashboard \

--region <REGION_NAME> \

--dashboard-name My-GPU-Usage \

--dashboard-body file://cloudwatch-dashboard.json

3. View the My-GPU-Usage CloudWatch dashboard in the CloudWatch console for your AWS Region.
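Note that the dashboard JSON itself also contains a <REGION_NAME> placeholder in every widget, not only the CLI command. The following is a minimal sketch, with a hypothetical function name, that fills those placeholders and confirms the result still parses as JSON before you pass the file to `put-dashboard`.

```python
import json


def render_dashboard(template_text: str, region: str) -> str:
    """Replace <REGION_NAME> in the dashboard template and validate the result."""
    body = template_text.replace("<REGION_NAME>", region)
    dashboard = json.loads(body)  # raises ValueError if the JSON is malformed
    mismatched = [
        widget["properties"].get("title", "untitled")
        for widget in dashboard["widgets"]
        if widget["properties"].get("region") != region
    ]
    if mismatched:
        raise ValueError(f"widgets not set to {region}: {mismatched}")
    return json.dumps(dashboard, indent=2)


if __name__ == "__main__":
    with open("cloudwatch-dashboard.json", encoding="utf-8") as handle:
        rendered = render_dashboard(handle.read(), "us-east-2")  # example Region
    with open("cloudwatch-dashboard.json", "w", encoding="utf-8") as handle:
        handle.write(rendered)
```

The Region `us-east-2` is only an example taken from the sample commands in this post; substitute the Region where your instances report metrics.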

To avoid incurring future costs for resources created by following along in this post, delete the following:

By following along with this post, you deployed and configured the CloudWatch Agent across your GPU-enabled EC2 instances to track GPU utilization without pausing in-progress experiments and model training. Then, you visualized the GPU utilization of your workloads with a CloudWatch Dashboard to better understand your workload’s GPU usage and make more informed scaling and cost decisions. For other ways that Amazon CloudWatch can improve your organization’s operational insights, see the Amazon CloudWatch documentation.

Post Syndicated from Preet Jassi original https://aws.amazon.com/blogs/big-data/alexa-smart-properties-creates-value-for-hospitality-senior-living-and-healthcare-properties-with-amazon-quicksight-embedded/

This is a guest post by Preet Jassi from Alexa Smart Properties.

Alexa Smart Properties (ASP) is powered by a set of technologies that property owners, property managers, and third-party solution providers can use to deploy and manage Alexa-enabled devices at scale. Alexa can simplify tasks like playing music, controlling lights, or communicating with on-site staff. Our team got its start by building products for hospitality and residential properties, but we have since expanded our products to serve senior living and healthcare properties.

With Alexa now available in hotels, hospitals, senior living homes, and other facilities, we hear stories from our customers every day about how much they love Alexa. These range from helping veterans with visual impairments gain access to information to enabling a senior living home resident who had fallen and sustained an injury to immediately alert staff. It’s a great feeling when you can say, “The product I work on every day makes a difference in people’s lives!”

Our team builds the software that leading hospitality, healthcare, and senior living facilities use to manage Alexa devices in their properties. We partner directly with organizations that manage their own properties as well as third-party solution providers to provide comprehensive strategy and deployment support for Alexa devices and skills, making sure that they are ready for end-user customers. Our primary goal is to create value for properties through improved customer satisfaction, cost savings, and incremental revenue. We wanted a way to measure that impact in a fast, efficient, easily accessible way from a return on investment (ROI) perspective.

After we had established what capabilities we needed to close our analytics gap, we got in touch with the Amazon QuickSight team to help. In this post, we discuss our requirements and why Amazon QuickSight Embedded was the right fit for what we needed.

As a business-to-business-to-consumer product, our team serves the needs of two customers: the end-users who enjoy Alexa-enabled devices at the properties, and the property managers or solution providers that manage the Alexa deployment. We needed to prove to the latter group of customers that deploying Alexa would not only help them delight their customers, but save money as well.

We had the data necessary to tell that ROI story, but we needed an analytics solution that would allow us to provide insights that can be communicated to leadership.

These were our requirements:

With QuickSight, we can now show detailed device usage information, including quantity and frequency, with insights that connect the dots between that engagement and cost savings. For example, property managers can look at total dialog counts to determine that their guests are using Alexa often, which validates their investment.

The following screenshots show an example of the dashboard our solution providers can access, which they can use to send reports to staff at the properties they serve.

The following screenshots show an example of the Communications tab, which shows how properties use communications features to save costs (both in terms of time and equipment). Customers save time and money on protective equipment by using Alexa’s remote communication features, which enable caretakers to virtually check in on patients instead of visiting a property in person. These metrics help our customers calculate the cost savings from using Alexa.

In the last year, the Analytics page in our management console has had over 20,000 page views from customers who are accessing the data and insights there to understand the impact Alexa has had on their businesses.

With QuickSight embedded dashboards, our direct-property customers and solution providers now have an easy-to-understand visual representation of how Alexa is making a difference for the guests and patients at each property. Embedded dashboards simplify the viewing, analyzing, and insight gathering for key usage metrics that help both enterprise property owners and solution providers connect the dots between Alexa’s use and money saved. Because we use Amazon Redshift to house our data, QuickSight’s seamless integration made it a fantastic choice.

Going forward, we plan to expand and improve upon the analytics foundation we’ve built with QuickSight by providing programmatic access to data—for example, a CSV file that can be sent to a customer’s Amazon Simple Storage Service (Amazon S3) bucket—as well as adding more data to our dashboards, thereby creating new opportunities for deeper insights.

To learn more about how you can embed customized data visuals, interactive dashboards, and natural language querying into any application, visit Amazon QuickSight Embedded.

Preet Jassi is a Principal Product Manager Technical with Alexa Smart Properties. Preet fell in love with technology in Grade 5 where he built his first website for his elementary school. Prior to completing his MBA at Cornell, Preet was a UI Team Lead with over 6 years of experience as a software engineer post BSc. Preet’s passion is combining his love of technology (specifically analytics and artificial intelligence), with design, and business strategy to build products that customers love, spending time with family, and keeping active. He currently manages the Developer Experience for Alexa Smart Properties focusing on making it quick and easy to deploy Alexa devices in properties and he loves hearing quotes from end customers on how Alexa has changed their lives.

Post Syndicated from Kari Rivas original https://www.backblaze.com/blog/how-to-do-bare-metal-backup-and-recovery/

When you’re creating or refining your backup strategy, it’s important to think ahead to recovery. Hopefully you never have to deal with data loss, but any seasoned IT professional can tell you—whether it’s the result of a natural disaster or human error—data loss will happen.

With the ever-present threat of cybercrime and the use of ransomware, it is crucial to develop an effective backup strategy that also considers how quickly data can be recovered. Doing so is a key pillar of increasing your business’ cyber resilience: the ability to withstand and protect from cyber threats, but also bounce back quickly after an incident occurs. The key to that effective recovery may lie with bare metal recoveries.

In this guide, we will discuss what bare metal recovery is, its importance, the challenges of its implementation, and how it differs from other methods.

Your backup plan should be part of a broader disaster recovery (DR) plan that aims to help you minimize downtime and disruption after a disaster event.

A good backup plan starts with, at bare minimum, following the 3-2-1 rule. This involves having at least three copies of your data, two local copies (on-site) and at least one copy off-site. But it doesn’t end there. The 3-2-1 rule is evolving, and there are additional considerations around where and how you back up your data.
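To make the rule concrete, here is a minimal sketch that checks a list of copies against the 3-2-1 rule exactly as stated above: at least three copies, at least two on-site, and at least one off-site. The class and function names are hypothetical. The rule is also often phrased in terms of two different media types; that variant is not modeled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    """One copy of the data (hypothetical model for this sketch)."""
    label: str
    off_site: bool


def meets_3_2_1(copies) -> bool:
    """True if there are >= 3 copies, >= 2 on-site, and >= 1 off-site."""
    on_site = sum(1 for copy in copies if not copy.off_site)
    off_site = sum(1 for copy in copies if copy.off_site)
    return len(copies) >= 3 and on_site >= 2 and off_site >= 1


if __name__ == "__main__":
    plan = [
        BackupCopy("production server", off_site=False),
        BackupCopy("local NAS", off_site=False),
        BackupCopy("cloud bucket", off_site=True),
    ]
    print(meets_3_2_1(plan))
```

A plan with three on-site copies and nothing off-site fails the check, which is the gap the rule is meant to catch.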

As part of an overall disaster recovery plan, you should also consider whether to use file and/or image-based backups. This decision will absolutely inform your DR strategy. And it leads to another consideration—understanding how to use bare metal recovery. If you plan to use bare metal recovery (and we’ll explain the reasons why you might want to), you’ll need to plan for image-based backups.

The term “bare metal” means a machine without an operating system (OS) installed on it. Fundamentally, that machine is “just metal”—the parts and pieces that make up a computer or server. A “bare metal backup” is designed so that you can take a machine with nothing else on it and restore it to its normal working state. That means that the backup data has to contain the OS, user data, system settings, software, drivers, and applications, as well as all of the files. The terms image-based backups and bare metal backups are often used interchangeably to mean the process of creating backups of entire system data.

Bare metal backup is favored by many businesses because it ensures that absolutely everything is backed up. This allows the entire system to be restored should a disaster result in total system failure. File-based backup strategies are, of course, very effective when just backing up folders and large media files, but when you’re talking about getting people back to work, a lot of man hours go into properly setting up a workstation to interact with internal networks, security protocols, proprietary or specialized software, etc. Since file-based backups do not back up the operating system and its settings, they are almost obsolete in modern IT environments, and operating on a file-based backup strategy can put businesses at significant risk or add downtime in the event of business interruption.

Bare metal backups allow data to be moved from one physical machine to another, to a virtual server, from a virtual server back to a physical machine, or from a virtual machine to a virtual server—offering a lot of flexibility.

This is the recommended method for backing up preferred system configurations so they can be transferred to other machines. The operating system and its settings can be quickly copied from a machine that is experiencing IT issues or has failing hardware, for example. Additionally, with a bare metal backup, virtual servers can also be set up very quickly instead of configuring the system from scratch.

As the name suggests, bare metal recovery is the process of recovering the bare metal (image-based) backup. By launching a bare metal recovery, a bare metal machine will retrieve its previous operating system, all files, folders, programs, and settings, ensuring the organization can resume operations as quickly as possible.

A bare metal recovery (or restore) works by recovering the image of a system that was created during the bare metal backup. The backup software can then reinstate the operating system, settings, and files on a bare metal machine so it is fully functional again.

This type of recovery is typically used in a disaster situation when a full server recovery is required, or when hardware has failed.

The importance of bare metal recovery (BMR) depends on an organization’s recovery time objective (RTO), the metric for measuring how quickly IT infrastructure can return online following a data disaster. The need for high-speed recovery, which in most cases is a necessity, means many businesses use bare metal recovery as part of their backup recovery plan.

If an OS becomes corrupted or damaged and you do not have a sufficient recovery plan in place, then the time needed to reinstall it, update it, and apply patches can result in significant downtime. BMR allows a server to be completely restored on a bare metal machine, with its exact settings and configuration, simply and quickly.
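A quick way to see whether a restore can fit inside your RTO is to estimate the transfer time for the system image. The following is a minimal sketch with hypothetical function names and purely illustrative numbers; it assumes decimal units (1 GB = 1000 MB) and ignores boot, driver, and verification time, so treat the result as a lower bound.

```python
def restore_hours(image_gb: float, throughput_mb_per_s: float) -> float:
    """Estimated hours to transfer an image of image_gb at a sustained throughput."""
    if throughput_mb_per_s <= 0:
        raise ValueError("throughput must be positive")
    seconds = (image_gb * 1000) / throughput_mb_per_s  # 1 GB = 1000 MB assumed
    return seconds / 3600


def fits_rto(image_gb: float, throughput_mb_per_s: float, rto_hours: float) -> bool:
    """True if the estimated transfer time alone is within the RTO."""
    return restore_hours(image_gb, throughput_mb_per_s) <= rto_hours


if __name__ == "__main__":
    # Illustrative values: a 900 GB image restored at a sustained 125 MB/s.
    print(restore_hours(900, 125))        # 2.0
    print(fits_rto(900, 125, rto_hours=4))
```

If the estimate is already close to the RTO, the real restore will likely miss it once setup and validation are included.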

Another key factor for choosing BMR is to protect against cybercrime. If your IT team can pinpoint the time when a system was infected with malware or ransomware, then a restore can be executed to wipe the machine clean of any threats and remove the source of infection, effectively rolling the system back to a time when everything was running smoothly.

BMR’s flexibility also means that it can be used to restore a physical or virtual machine, or simply as a method of cloning machines for easier deployment in the future.

The key advantages of bare metal recovery (BMR) are:

Like any backup and recovery method, some IT environments may be more suitable for BMR than others, and there are some caveats that an organization should be aware of before implementing such a strategy.

First, bare metal recovery can experience issues if the restore is being executed on a machine with dissimilar hardware. The reason for this is that the original operating system copy needs to load the correct drivers to match the machine’s hardware. Therefore, if there is no match, then the system will not boot.

Fortunately, Backblaze Partner integrations, like MSP360, have features that allow you to restore to dissimilar hardware with no issues. This is a key feature to look for when considering BMR solutions. Otherwise, you have to seek out a new machine that has the same hardware as the corrupted machine.

Second, there may be a reason for not wanting to run BMR, such as a minor data accident when a simple file/folder restore is more practical, taking less time to achieve the desired results. A bare metal recovery strategy is recommended when a full machine needs to be restored, so it is advised to include several different options in your backup recovery plan to cover all scenarios.

An on-premises disaster disrupts business operations and can have catastrophic implications for your bottom line. And, if you’re unable to run your preferred backup software, performing a bare metal recovery may not even be an option. Backblaze has created a solution that draws data from Veeam Backup & Replication backups stored in Backblaze B2 Cloud Storage to quickly bring up an orchestrated combination of on-demand servers, firewalls, networking, storage, and other infrastructure in phoenixNAP’s bare metal cloud servers. This Instant Business Recovery (IBR) solution includes fully-managed, affordable 24/7 disaster recovery support from Backblaze’s managed service provider partner specializing in disaster recovery as a service (DRaaS).

IBR allows your business to spin up your entire environment, including the data from your Backblaze B2 backups, in the cloud. With this active DR site in the cloud, you can keep business operations running while restoring your on-premises systems. Recovery is initiated via a simple web form or phone call. Instant Business Recovery protects your business in the case of on-premises disaster for a fraction of the cost of typical managed DRaaS solutions. As you build out your business continuity plan, you should absolutely consider how to sustain your business in the case of damage to your local infrastructure; Instant Business Recovery allows you to begin recovering your servers in minutes to ensure you meet your RTO.

Bare metal backup and recovery should be a key part of any DR strategy. From moving operating systems and files from one physical machine to another, to transferring image-based backups from a virtual machine to a virtual server, it’s a tool that makes sense as part of any IT admin’s toolbox.

Your next question is where to store your bare metal backups, and cloud storage makes good sense. Even if you’re already keeping your backups off-site, it’s important for them to be geographically distanced in case your entire area experiences a natural disaster or outage. That takes more than just backing up to the cloud: it’s important to know where your cloud storage provider stores its data, for compliance standards, for speed of content delivery (if that’s a concern), and to ensure that you’re not unintentionally storing your off-site backup close to home.

Remember that these are critical backups you’ll need in a disaster scenario, so consider recovery time and expense when choosing a cloud storage provider. While it may seem more economical to use cold storage, it comes with long recovery times and high fees to recover quickly. Using always-hot cloud storage is imperative, both for speed and to avoid an additional expense in the form of a bill for egress fees after you’ve recovered from a cyberattack.

Backblaze B2 Cloud Storage provides S3 compatible, Object Lock-capable hot storage for one-fifth the cost of AWS and other public clouds—with no trade-off in performance.

Get started today, and contact us to support a customized proof of concept (PoC) for datasets of more than 50TB.

The post How To Do Bare Metal Backup and Recovery appeared first on Backblaze Blog | Cloud Storage & Cloud Backup.

Post Syndicated from original https://lwn.net/Articles/929453/

Version 5.13 of the LXD virtual-machine manager has been released. New features include fast live migration, support for AMD’s secure enclaves, and more. See this announcement for details.

Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=lDsFsyWa7r8

Post Syndicated from Chad Woolf original https://aws.amazon.com/blogs/security/scaling-security-and-compliance/

At Amazon Web Services (AWS), we move fast and continually iterate to meet the evolving needs of our customers. We design services that can help our customers meet even the most stringent security and compliance requirements. Additionally, our service teams work closely with our AWS Security Guardians program to coordinate security efforts and to maintain a high quality bar. We also have internal compliance teams that continually monitor security control requirements from all over the world and engage with external auditors to achieve third-party validation of our services against these requirements.

In this post, I’ll cover some key strategies and best practices that we use to scale security and compliance while maintaining a culture of innovation.

At AWS, security is our top priority. Although compliance might be challenging, treating security as an integral part of everything we do at AWS makes it possible for us to adhere to a broad range of compliance programs, to document our compliance, and to successfully demonstrate our compliance status to our auditors and customers.

Over time, as the auditors get deeper into what we’re doing, we can also help improve and refine their approach. This increases the depth and quality of the reports that we provide directly to our customers.

Many customers struggle with balancing security, compliance, and production. These customers have applications that they want to quickly make available to their own customer base. They might need to audit these applications. The traditional process can include writing the application, putting it into production, and then having the audit team take a look to make sure it meets compliance standards. This approach can cause issues, because retroactively adding compliance requirements can result in rework and churn for the development team.

Enforcing compliance requirements in this way doesn’t scale and eventually causes more complexity and friction between teams. So how do you scale quickly and securely?

The first way to earn trust with development teams is to speak their language. It’s critical to use terms and references that developers use, and to know what tools they are using to develop, deploy, and secure code. It’s not efficient or realistic to ask the engineering teams to do the translation of diverse (and often vague) compliance requirements into engineering specs. The compliance teams must do the hard work of translating what is required into what specifically must be done, using language that engineers are familiar with.

Another strategy to scale is to embed compliance requirements into the way developers do their daily work. It’s important that compliance teams enable developers to do their work just as they normally do, without compliance needing to intervene. If you’re successful at that strategy—and the compliant path becomes the simplest and most natural path—then that approach can lead to a very scalable compliance program that fosters understanding between teams and increased collaboration. This approach has helped break down the barriers between the developer and audit/compliance organizations.

I believe that you should treat auditors and regulators as true business partners. An independent auditor or regulator understands how a wide range of customers will use the security assurance artifacts that you are producing, and therefore will have valuable insights into how your reports can best be used. I think people can fall into the trap of treating regulators as adversaries. The best approach is to communicate openly with regulators, helping them understand your business and the value you bring to your customers, and getting them ramped up on your technology and processes.

At AWS, we help auditors and regulators get ramped up in various ways. For example, we have the Digital Audit Symposium, which contains presentations on how we control and operate particular services in terms of security and compliance. We also offer the Cloud Audit Academy, a learning path that provides both cloud-agnostic and AWS-specific training to help existing and prospective auditing, risk, and compliance professionals understand how to audit regulated cloud workloads. We’ve learned that being a partner with auditors and regulators is key in scaling compliance.

Having security as a foundation is essential to driving and scaling compliance efforts. Speaking the language of developers helps them continue to work without disruption, and makes the simple path the compliant path. Although some barriers still exist, especially for organizations in highly regulated industries such as financial services and healthcare, treating auditors like partners is a positive strategic shift in perspective. The more proactive you are in helping them accomplish what they need, the faster you will realize the value they bring to your business.

If you have feedback about this post, submit comments in the Comments section below. If you have questions about this post, contact AWS Support.

Want more AWS Security news? Follow us on Twitter.

Post Syndicated from Utkarsh Agarwal original https://aws.amazon.com/blogs/big-data/configure-saml-federation-for-amazon-opensearch-serverless-with-aws-iam-identity-center/

Amazon OpenSearch Serverless is a serverless option of Amazon OpenSearch Service that makes it easy for you to run large-scale search and analytics workloads without having to configure, manage, or scale OpenSearch clusters. It automatically provisions and scales the underlying resources to deliver fast data ingestion and query responses for even the most demanding and unpredictable workloads. With OpenSearch Serverless, you can configure SAML to enable users to access data through OpenSearch Dashboards using an external SAML identity provider (IdP).

AWS IAM Identity Center (Successor to AWS Single Sign-On) helps you securely create or connect your workforce identities and manage their access centrally across AWS accounts and applications, OpenSearch Dashboards being one of them.

In this post, we show you how to configure SAML authentication for OpenSearch Dashboards using IAM Identity Center as its IdP.

The following diagram illustrates how the solution allows users or groups to authenticate into OpenSearch Dashboards using single sign-on (SSO) with IAM Identity Center using its built-in directory as the identity source.

The workflow steps are as follows:

OpenSearch Serverless validates the SAML assertion, and the user logs in to OpenSearch Dashboards.

To get started, you must have an active OpenSearch Serverless collection. Refer to Creating and managing Amazon OpenSearch Serverless collections to learn more about creating a collection. Furthermore, you must have the correct AWS Identity and Access Management (IAM) permissions for configuring SAML authentication along with relevant IAM permissions for configuring the data access policy.

IAM Identity Center should be enabled, and you should have the relevant IAM permissions to create an application in IAM Identity Center and create and manage users and groups.

To set up your application in IAM Identity Center, complete the following steps:

We use this metadata file to create a SAML provider under OpenSearch Serverless. It contains the public certificate used to verify the signature of the IAM Identity Center SAML assertions.

Note that the session duration you configure in this step takes precedence over the OpenSearch Dashboards timeout setting specified in the configuration of the SAML provider details on the OpenSearch Serverless end.

https://collection.<REGION>.aoss.amazonaws.com/_saml/acs. For example, we enter https://collection.us-east-1.aoss.amazonaws.com/_saml/acs for this post.

aws:opensearch:<aws account id>.

Now you modify the attribute settings. The attribute mappings you configure here become part of the SAML assertion that is sent to the application.

${user:email}, with the format unspecified. Using ${user:email} here ensures that the email address for the user in IAM Identity Center is passed in the <NameId> tag of the SAML response.

Now we assign a user to the application.

Alternatively, you can use an existing user.

You have now created a custom SAML application. Next, you will configure the SAML provider in OpenSearch Serverless.
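The ACS URL and SAML audience values mentioned above follow a fixed pattern, so they can be derived from the Region and account ID. The following is a minimal sketch of that string construction only; the function names are hypothetical, and the account ID is a placeholder.

```python
def saml_acs_url(region: str) -> str:
    """Build the application ACS URL for OpenSearch Serverless in a Region."""
    return f"https://collection.{region}.aoss.amazonaws.com/_saml/acs"


def saml_audience(account_id: str) -> str:
    """Build the application SAML audience for an AWS account."""
    return f"aws:opensearch:{account_id}"


# Placeholder values for illustration; substitute your own Region and account ID.
acs = saml_acs_url("us-east-1")
audience = saml_audience("123456789012")
print(acs)
print(audience)
```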

The SAML provider you create in this step can be assigned to any collection in the same Region. Complete the following steps:

You have now configured a SAML provider for OpenSearch Serverless. Next, we walk you through configuring the data access policy for accessing collections.

In this section, you set up data access policies for OpenSearch Serverless and allow access to the users. Complete the following steps:

user/<email> (for example, user/[email protected]). The value of the email address should match the email address in IAM Identity Center.

You can configure what access you want to provide for the specific user at the collection level and specific indexes at the index pattern level.

You should select the access the user needs based on the least privilege model. Refer to Supported policy permissions and Supported OpenSearch API operations and permissions to set up more granular access for your users.

You can now review and edit your configuration if needed.

Now you have the data access policy that will allow the users to perform the allowed actions on OpenSearch Dashboards.

To sign in to OpenSearch Dashboards, complete the following steps:

<collection-endpoint>/_dashboards).

Groups can help you organize your users and permissions in a coherent way. With groups, you can add multiple users from the IdP, and then use groupid as the identifier in the data access policy. For more information, refer to Add groups and Add users to groups.

To configure group access to OpenSearch Dashboards, complete the following steps:

${user:groups}, with the format unspecified.

group/<GroupId>.

You can fetch the value for the group ID by navigating to the Group section on the IAM Identity Center console.
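The user/<email> and group/<GroupId> identifiers end up as principals in the data access policy document. The sketch below assembles such a document as JSON. It assumes that the full principal form is saml/<account id>/<provider name>/user|group/<identifier> and that the listed aoss permissions fit your use case; the function names, collection name, provider name, and email address are hypothetical, so verify the details against the OpenSearch Serverless documentation before use.

```python
import json


def saml_principal(account_id: str, provider: str, kind: str, identifier: str) -> str:
    """Build a SAML principal string (assumed format, see lead-in)."""
    if kind not in ("user", "group"):
        raise ValueError("kind must be 'user' or 'group'")
    return f"saml/{account_id}/{provider}/{kind}/{identifier}"


def data_access_policy(collection: str, index_pattern: str, principals: list) -> list:
    """Assemble a read-only data access policy for one collection."""
    return [
        {
            "Rules": [
                {
                    "ResourceType": "collection",
                    "Resource": [f"collection/{collection}"],
                    "Permission": ["aoss:DescribeCollectionItems"],
                },
                {
                    "ResourceType": "index",
                    "Resource": [f"index/{collection}/{index_pattern}"],
                    "Permission": ["aoss:DescribeIndex", "aoss:ReadDocument"],
                },
            ],
            "Principal": principals,
        }
    ]


# Placeholder account, provider, and user for illustration only.
principal = saml_principal("123456789012", "my-idp", "user", "jane@example.com")
policy = data_access_policy("my-collection", "*", [principal])
policy_json = json.dumps(policy, indent=2)
print(policy_json)
```

Granting only describe and read permissions here follows the least privilege model; add write permissions only for principals that need them.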

If you don’t want to continue using the solution, be sure to delete the resources you created:

In this post, you learned how to set up IAM Identity Center as an IdP to access OpenSearch Dashboards using SAML as SSO. You also learned how to set up users and groups within IAM Identity Center and control the access of users and groups for OpenSearch Dashboards. For more details, refer to SAML authentication for Amazon OpenSearch Serverless.

Stay tuned for a series of posts focusing on the various options available for you to build effective log analytics and search solutions using OpenSearch Serverless. You can also refer to the Getting started with Amazon OpenSearch Serverless workshop to learn more about OpenSearch Serverless.

If you have feedback about this post, submit it in the comments section. If you have questions about this post, start a new thread on the OpenSearch Service forum or contact AWS Support.

Utkarsh Agarwal is a Cloud Support Engineer in the Support Engineering team at Amazon Web Services. He specializes in Amazon OpenSearch Service. He provides guidance and technical assistance to customers, enabling them to build scalable, highly available, and secure solutions in the AWS Cloud. In his free time, he enjoys watching movies, TV series, and of course cricket! Lately, he is also attempting to master the art of cooking in his free time. The taste buds are excited, but the kitchen might disagree.

Ravi Bhatane is a software engineer with Amazon OpenSearch Serverless Service. He is passionate about security, distributed systems, and building scalable services. When he’s not coding, Ravi enjoys photography and exploring new hiking trails with his friends.

Prashant Agrawal is a Sr. Search Specialist Solutions Architect with Amazon OpenSearch Service. He works closely with customers to help them migrate their workloads to the cloud and helps existing customers fine-tune their clusters to achieve better performance and save on cost. Before joining AWS, he helped various customers use OpenSearch and Elasticsearch for their search and log analytics use cases. When not working, you can find him traveling and exploring new places. In short, he likes doing Eat → Travel → Repeat.

Post Syndicated from original https://lwn.net/Articles/929391/

The Fedora 38 release is available. Fedora has mostly moved past its old pattern of late releases, but it’s still a bit surprising that this release came out one week ahead of the scheduled date. Some of the changes in this release, including reduced shutdown timeouts and frame pointers, have been covered here in the past; see the announcement for details on the rest.

If you want to use Fedora Linux on your mobile device, F38 introduces a Phosh image. Phosh is a Wayland shell for mobile devices based on Gnome. This is an early effort from our Mobility SIG. If your device isn’t supported yet, we welcome your contributions!

Post Syndicated from original https://lwn.net/Articles/929389/

Security updates have been issued by Debian (protobuf), Fedora (libpcap, libxml2, openssh, and tcpdump), Mageia (kernel and kernel-linus), Oracle (firefox, kernel, kernel-container, and thunderbird), Red Hat (thunderbird), Scientific Linux (thunderbird), SUSE (gradle, kernel, nodejs10, nodejs12, nodejs14, openssl-3, pgadmin4, rubygem-rack, and wayland), and Ubuntu (firefox).

Post Syndicated from original https://lwn.net/Articles/929343/

Matthew Garrett points out that many Linux systems using encrypted disks were installed with a relatively weak key derivation function that could make it relatively easy for a well-resourced attacker to break the encryption:

So, in these days of attackers with access to a pile of GPUs, a purely computationally expensive KDF is just not a good choice. And, unfortunately, the subject of this story was almost certainly using one of those. Ubuntu 18.04 used the LUKS1 header format, and the only KDF supported in this format is PBKDF2. This is not a memory expensive KDF, and so is vulnerable to GPU-based attacks. But even so, systems using the LUKS2 header format used to default to argon2i, again not a memory expensive KDF. New versions default to argon2id, which is. You want to be using argon2id.

The article includes instructions on how to (carefully) switch an installed system to a more secure setup.
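The difference between a purely computational KDF and a memory-expensive one can be seen in the parameters each accepts. The sketch below is only an illustration with Python's standard library: argon2id is not available there, so scrypt stands in as the memory-hard example, and the password, iteration count, and scrypt parameters are arbitrary assumptions rather than recommended settings. It also assumes a Python build whose OpenSSL provides scrypt.

```python
import hashlib
import os

password = b"correct horse battery staple"  # placeholder passphrase
salt = os.urandom(16)

# PBKDF2: the only cost knob is the iteration count, so each guess needs
# CPU time but almost no memory, which suits massively parallel GPUs.
pbkdf2_key = hashlib.pbkdf2_hmac("sha256", password, salt, 100_000, dklen=32)

# scrypt (stand-in for a memory-hard KDF such as argon2id): n and r force
# each guess to hold roughly 128 * n * r bytes of memory.
n, r, p = 2**12, 8, 1
scrypt_key = hashlib.scrypt(password, salt=salt, n=n, r=r, p=p, dklen=32)
scrypt_memory_bytes = 128 * n * r

print(f"PBKDF2 key: {pbkdf2_key.hex()}")
print(f"scrypt key: {scrypt_key.hex()}")
print(f"scrypt memory per guess: {scrypt_memory_bytes // 1024} KiB")
```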

Post Syndicated from James Beswick original https://aws.amazon.com/blogs/compute/python-3-10-runtime-now-available-in-aws-lambda/

This post is written by Suresh Poopandi, Senior Solutions Architect, Global Life Sciences.

AWS Lambda now supports Python 3.10 as both a managed runtime and container base image. With this release, Python developers can now take advantage of new features and improvements introduced in Python 3.10 when creating serverless applications on Lambda.

Enhancements in Python 3.10 include structural pattern matching, improved error messages, and performance enhancements. This post outlines some of the benefits of Python 3.10 and how to use this version in your Lambda functions.

AWS has also published a preview Lambda container base image for Python 3.11. Customers can use this image to get an early look at Python 3.11 support in Lambda. This image is subject to change and should not be used for production workloads. To provide feedback on this image, and for future updates on Python 3.11 support, see https://github.com/aws/aws-lambda-base-images/issues/62.

Thanks to its simplicity, readability, and extensive community support, Python is a popular language for building serverless applications. The Python 3.10 release includes several new features, such as:

The faster PEP 590 vectorcall calling convention allows for quicker and more efficient Python function calls, particularly those that take multiple arguments. The built-in functions that benefit from this optimization include map(), filter(), reversed(), bool(), and float(). According to the Python 3.10 release notes, using the vectorcall calling convention improved the performance of these built-in functions by a factor of 1.26x.

When a function is defined with annotations, these are stored in a dictionary that maps the parameter names to their respective annotations. In previous versions of Python, this dictionary was created immediately when the function was defined. In Python 3.10, the dictionary is created only when the annotations are first accessed, for example through the function’s __annotations__ attribute. By delaying the creation of the annotation dictionary until it is needed, Python can avoid the overhead of creating and initializing the dictionary during function definition. This can result in a significant reduction in CPU time, as the dictionary creation can be a time-consuming operation, particularly for functions with many parameters or complex annotations.
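To make the annotation dictionary concrete, here is a small sketch with a hypothetical function. Calling the function works without touching the annotations; the dictionary is what you get when you read the __annotations__ attribute.

```python
def scale(value: float, factor: float = 2.0) -> float:
    """Multiply a value by a factor."""
    return value * factor


# Calling the function does not require the annotation dictionary.
result = scale(3.0)

# Reading __annotations__ returns the mapping of names to annotations.
annotations = scale.__annotations__
print(result, annotations)
```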

In Python 3.10, the LOAD_ATTR instruction, which is responsible for loading attributes from objects in the code, has been improved with a new mechanism called the “per opcode cache”. This mechanism works by storing frequently accessed attributes in a cache specific to each LOAD_ATTR instruction, which reduces the need for repeated attribute lookups. According to the Python 3.10 release notes, the LOAD_ATTR instruction is now approximately 36% faster when accessing regular attributes and 44% faster when accessing attributes defined using the __slots__ mechanism.
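The two kinds of attribute access mentioned here can be sketched as follows. The classes are hypothetical examples; the dis module is used only to show that a plain attribute read compiles to a LOAD_ATTR instruction. No timing is measured, since results vary by machine and Python version.

```python
import dis


class Point:
    """Regular class: attributes live in the instance __dict__."""

    def __init__(self, x, y):
        self.x = x
        self.y = y


class SlottedPoint:
    """Class using __slots__: attributes live in fixed slots, no __dict__."""

    __slots__ = ("x", "y")

    def __init__(self, x, y):
        self.x = x
        self.y = y


def read_x(point):
    # This attribute read is compiled to a LOAD_ATTR instruction.
    return point.x


opnames = [instruction.opname for instruction in dis.get_instructions(read_x)]
print(opnames)
print(read_x(Point(1, 2)), read_x(SlottedPoint(3, 4)))
```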

In Python, the str(), bytes(), and bytearray() constructors are used to create new instances of these types from existing data or values. Based on the result of the performance tests conducted as part of BPO-41334, constructors str(), bytes(), and bytearray() are around 30–40% faster for small objects.

Lambda functions developed with Python that read and process gzip-compressed files can gain a performance improvement. The new _BlocksOutputBuffer used by the bz2, lzma, and zlib modules eliminates the overhead of resizing bz2/lzma buffers and prevents an excessive memory footprint for the zlib buffer. According to the Python 3.10 release notes, bz2 decompression is now 1.09x faster, lzma decompression 1.20x faster, and GzipFile read 1.11x faster.
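The kind of code that benefits is an ordinary read through GzipFile. The following sketch uses an in-memory payload as a stand-in for a compressed object that a function might fetch; read_gzip_bytes is a hypothetical helper name.

```python
import gzip
import io


def read_gzip_bytes(compressed: bytes) -> bytes:
    """Decompress a gzip payload held in memory and return its contents."""
    with gzip.GzipFile(fileobj=io.BytesIO(compressed)) as gz:
        return gz.read()


# Illustrative payload; in a real function this might come from object storage.
payload = b"timestamp,level,message\n" * 1000
compressed = gzip.compress(payload)
restored = read_gzip_bytes(compressed)
print(len(compressed), len(restored))
```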

To use the Python 3.10 runtime to develop your Lambda functions, specify the runtime parameter value python3.10 when creating or updating a function. Python 3.10 is now available in the Runtime dropdown on the Create function page.
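The same runtime value can be set programmatically. The sketch below wraps the Lambda UpdateFunctionConfiguration call as exposed by a boto3 Lambda client; the function name is a placeholder, and FakeLambdaClient is a hypothetical stand-in so the example runs without AWS credentials. In practice you would pass boto3.client("lambda") instead.

```python
def set_python_310_runtime(lambda_client, function_name: str) -> dict:
    """Switch an existing function to the python3.10 managed runtime."""
    return lambda_client.update_function_configuration(
        FunctionName=function_name,
        Runtime="python3.10",
    )


class FakeLambdaClient:
    """Hypothetical stand-in for boto3.client("lambda"); echoes its arguments."""

    def update_function_configuration(self, **kwargs):
        return dict(kwargs)


response = set_python_310_runtime(FakeLambdaClient(), "my-function")
print(response["Runtime"])
```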

To update an existing Lambda function to Python 3.10, navigate to the function in the Lambda console, then choose Edit in the Runtime settings panel. The new version of Python is available in the Runtime dropdown.

In AWS SAM, set the Runtime attribute to python3.10 to use this version.

    AWSTemplateFormatVersion: '2010-09-09'
    Transform: AWS::Serverless-2016-10-31
    Description: Simple Lambda Function
    Resources:
      MyFunction:
        Type: AWS::Serverless::Function
        Properties:
          Description: My Python Lambda Function
          CodeUri: my_function/
          Handler: lambda_function.lambda_handler
          Runtime: python3.10

AWS SAM supports the generation of this template with Python 3.10 out of the box for new serverless applications using the sam init command. Refer to the AWS SAM documentation here.

In the AWS CDK, set the runtime attribute to Runtime.PYTHON_3_10 to use this version. In Python:

    from constructs import Construct
    from aws_cdk import (
        App, Stack,
        aws_lambda as _lambda
    )


    class SampleLambdaStack(Stack):

        def __init__(self, scope: Construct, id: str, **kwargs) -> None:
            super().__init__(scope, id, **kwargs)

            # Lambda function that uses the Python 3.10 managed runtime
            base_lambda = _lambda.Function(self, 'SampleLambda',
                                           handler='lambda_handler.handler',
                                           runtime=_lambda.Runtime.PYTHON_3_10,
                                           code=_lambda.Code.from_asset('lambda'))

In TypeScript:

    import * as cdk from 'aws-cdk-lib';
    import * as lambda from 'aws-cdk-lib/aws-lambda';
    import * as path from 'path';
    import { Construct } from 'constructs';

    export class CdkStack extends cdk.Stack {
      constructor(scope: Construct, id: string, props?: cdk.StackProps) {
        super(scope, id, props);

        // The code that defines your stack goes here

        // The python3.10 enabled Lambda Function
        const lambdaFunction = new lambda.Function(this, 'python310LambdaFunction', {
          runtime: lambda.Runtime.PYTHON_3_10,
          memorySize: 512,
          code: lambda.Code.fromAsset(path.join(__dirname, '/../lambda')),
          handler: 'lambda_handler.handler'
        });
      }
    }

Change the Python base image version by modifying the FROM statement in the Dockerfile:

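    FROM public.ecr.aws/lambda/python:3.10

    # Copy function code
    COPY lambda_handler.py ${LAMBDA_TASK_ROOT}

    # Set the handler (assumes a function named handler in lambda_handler.py,
    # matching the CDK examples above)
    CMD [ "lambda_handler.handler" ]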

To learn more, refer to the usage tab on building functions as container images.
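The Dockerfile above copies a file named lambda_handler.py into the image. A minimal sketch of what that file might contain follows. It assumes the handler function is named handler, as in the CDK examples, and the response shape is only an illustration.

```python
import json
import sys


def handler(event, context):
    """Return the running Python version and the keys of the incoming event."""
    version = f"{sys.version_info.major}.{sys.version_info.minor}"
    body = {
        "python_version": version,
        "received_keys": sorted(event),
    }
    return {"statusCode": 200, "body": json.dumps(body)}


# Local smoke test with a placeholder event; Lambda supplies event and context.
sample_response = handler({"b": 1, "a": 2}, None)
print(sample_response)
```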

You can build and deploy functions with Python 3.10 using the AWS Management Console, AWS CLI, AWS SDK, AWS SAM, AWS CDK, or your choice of Infrastructure as Code (IaC). You can also use the Python 3.10 container base image if you prefer to build and deploy your functions using container images.

We are excited to bring Python 3.10 runtime support to Lambda and empower developers to build more efficient, powerful, and scalable serverless applications. Try the Python 3.10 runtime in Lambda today to take advantage of the updated language version and its improved performance.

For more serverless learning resources, visit Serverless Land.

Post Syndicated from Светла Енчева original https://www.toest.bg/strastite-hristovi-na-bulgarskata-politika/

What kind of ambiguous title is this? Is it about the Passion of Christ or about Bulgarian politics? If an extraterrestrial being had landed on Earth at the beginning of last week and tried to learn about the customs of this planet from the Bulgarian media, it would probably have been left with the impression that the Son of God was resurrected in the name of Bulgarian institutions. And especially in the name of the president, the parliament, the caretaker government, and the prosecutor’s office.

President Rumen Radev convened the newly elected parliament during the so-called Holy Week, that is, the week before Easter. The chairman of GERB and former prime minister Boyko Borisov described Holy Week as an inappropriate start for the work of the National Assembly. In his reasoning he placed religion above the state: “On Good Friday, whatever happens, we will not go to work. We will not take part in parliament, because we introduced Good Friday as a non-working day so that all Christians can go, pass under the table, and suffer as the Lord suffered.”

It is not clear how passing under the table can cause suffering like that which Christ experienced while dying on the cross. But at least it is reassuring that Borisov is not calling on us to flagellate ourselves so that we hurt as Jesus hurt.

In his speech at the opening of the new parliament, Rumen Radev justified his decision to convene it precisely on Holy Wednesday with the symbolic meaning of the week in Christianity:

I convened the 49th National Assembly in Holy Week with the expectations of the Bulgarian people for a different beginning of this National Assembly, with the hope for humility, dialogue, and reason.

Radev also explained the direction in which he envisions humility, dialogue, and reason, starting with the adoption of a budget. And since, despite his appeals to reason, religious humility makes reasoning superfluous, his call for the National Assembly, “unlike the previous ones, to support the European course set by the caretaker government,” went without a response.

In fact, for all its problems and shortcomings, it was precisely parliament that tried to hold a European course, while the president and his caretaker government did everything possible to turn that course toward Russia. Case in point: Radev’s objections to the deputies’ decision to send military aid to Ukraine, and his cabinet’s reluctance to carry out that decision.

Vezhdi Rashidov, who opened parliament in his capacity as its oldest member, also did not fail to mention Holy Week in his opening speech. He said:

We are in the Holy Week of betrayal and forgiveness, of crucifixion and self-sacrifice, of hope and faith in the Resurrection. And I ask myself: can we not, twenty centuries later, build our common path, rather than stand aside or quietly wait for someone to sacrifice himself for us in the hope that we will survive and celebrate the Resurrection? I think we can.

The pathos-laden first sentence of this quote distracts from the next two. And they are important, because Vezhdi Rashidov, who incidentally is a Muslim (according to Islam, Christ is only a prophet, not God), is saying that what matters is our actions in this world, not faith in someone who will sacrifice himself for us. But Rashidov is no newcomer to Bulgarian public life and knows that when a person first says what is expected of him, the rest of his words may slip by unnoticed.

In the corridors of parliament, caretaker Prime Minister Galab Donev also did not miss the chance to invoke Holy Wednesday, the day of Holy Week on which the National Assembly was convened. According to him, the coincidence is “a sign not only that the members of parliament must work like all other Bulgarians, but also an expression of the need for humility, self-assessment, an ethical one, and readiness to serve the people.”

On entering parliament, Prosecutor General Ivan Geshev was also seized by religious pathos. He posed a question to Bulgarian politicians and answered it himself even while asking it:

Today is Holy Wednesday. On this day of Holy Week we, Orthodox Christians, must ask ourselves whether our deeds correspond to the commandments of the Savior. I hope Bulgarian politicians will also ask themselves this question. And that they will ask themselves whether personal interests are important and whether they should (and they should) work in the interest of the people, rather than the people working for the politicians.

The next day, a statement by Ivan Geshev was published on the prosecutor’s office’s Facebook page. It made clear that, after Holy Wednesday, the prosecutor general considers it necessary to mark Maundy Thursday as well, on which “Orthodox Christians remember the Last Supper of the Son of God with the apostles and his prophecy that he would be betrayed.” In his statement, Geshev compares the prosecutor’s office, whose structure and work are the subject of much criticism, to the betrayed Christ:

Despite the unprecedented attempts at destabilization and discrediting, at capture and political control in violation of the principle of separation of powers, despite the threats and insults, the prosecutors, investigators, and employees of the PRB [the Prosecutor’s Office of the Republic of Bulgaria] work and will continue to work for every single Bulgarian. Not one of them betrayed their colleagues for thirty pieces of silver.

Here one may ask whether defending the prosecutor’s office’s own interests means working “for every single Bulgarian,” and whether the impunity of this institution is comparable to the divine status of Christ. But let us not forget that Geshev had declared himself “an instrument in the hands of the Lord” in an interview for Epicenter. There he stated that God himself acts through him:

The only one who dispenses justice is the Lord. I do not dispense justice. I am an instrument with which He does the things He considers right.

On Good Friday, the Instrument of the Lord surprisingly did not convey God’s will to Bulgarian citizens. He was silent on Holy Saturday too. He was verbally resurrected precisely on Easter, with photos demonstrating his piety and with the appeal: “May the light and hope of Christ’s Resurrection overcome division and hatred, to make way for reason and unity.”

This year Easter coincided with the Day of the Constitution and of Jurists, which is marked on April 16. This gave Ivan Geshev occasion to issue a second address as well, marking the lawyers’ professional holiday and declaring himself in favor of upholding the rule of law. Yet it is precisely in the name of the rule of law that the so far unsuccessful attempts to reform the prosecutor’s office have been made.

On Sunday, BSP chairwoman Korneliya Ninova also posted twice on Facebook. First, a 20-second video appeared on her page in which she stands before a table with Easter eggs and kozunak, holding and stroking a real little bunny. Against the backdrop of these endearing images it is hard for the viewer to concentrate on her words, but she begins with “Christ is risen” and then wishes all good things, with peace, of course, coming first. Ninova calls on everyone to go forward with faith.

In the second post, Ninova, pictured in a serious photo in a suit against the backdrop of a library, offers greetings for Constitution Day and recalls that she is a lawyer by training. This post, incidentally, prompted mockery on social media that while others mark Easter, she focuses only on the lawyers’ holiday. The problem, however, is not just that those mocking her missed the video with the bunny. Since Ninova chairs a socialist party, it would be logical for her to place the secular state above religion. The problem is that she does not do this; instead, in her fight against “gender,” she increasingly allies herself with the most obscurantist religious circles.

Religious pathos is unusual for European socialists, but it is entirely par for the course in Russian propaganda, in whose spirit Korneliya Ninova’s policy is also conducted. Although religion was persecuted under socialism, today’s nostalgia for socialism and religious fundamentalism go hand in hand.

The grafting of socialist nostalgia onto religion also showed itself in BNT’s Easter concert, in which the duo of Kristina Dimitrova and Orlin Goranov, known from the socialist era, sang against the backdrop of a ballet of… little pioneers. Let us leave aside that the pioneers in question wore skirts of a length more reminiscent of adult films than of socialism, under which girls and women in short skirts had stamps put on them.

Но като стана дума за Деня на Конституцията, нека припомним какво казва Основният закон за религията. Най-важното по темата е събрано в чл. 13:

(1) Religious denominations are free.

(2) Religious institutions are separate from the state.

(3) The traditional religion in the Republic of Bulgaria is Eastern Orthodox Christianity.

(4) Religious communities and institutions, as well as religious beliefs, may not be used for political ends.

To this we may add Art. 58, para. 2, which reads: “Religious and other convictions are not grounds for refusing to fulfil the obligations established by the Constitution and the laws.”

Freedom of religion has a limit, set out in Art. 37, para. 2: “Freedom of conscience and of religion may not be directed against national security, public order, public health and morals, or against the rights and freedoms of other citizens.”

Let us also not forget that Art. 11, para. 4 prohibits parties formed on an ethnic or religious basis, whatever the faith.

In short, under its constitution Bulgaria is a secular state. Although Orthodoxy is the country’s traditional denomination, it is not an official state religion. Religious institutions have no right to interfere in those of the state, and using religion for political ends is impermissible. Denominations are free so long as they do no harm and do not violate the rights and freedoms of others.

Regardless of what the Constitution says, the role of religion in Bulgaria is slowly and gradually becoming official. This process began back in 2001 with the government of Simeon Sakskoburggotski. It was the first to take its oath not only on the Constitution but also on the Bible, and in the presence of the patriarch. The following year Georgi Parvanov did the same when he took office as president. After that it came to seem increasingly natural for prime ministers and presidents to swear on the Bible, and the patriarch acquired a kind of “reserved seat” in the National Assembly.

Journalist Tatyana Vaksberg (today editor-in-chief of Svobodna Evropa) has followed this trend for years. She notes that the practice quietly introduced by Simeon Sakskoburggotski was first broken only in 2021, under the government of Kiril Petkov, which took its oath on the Constitution alone and without the patriarch present. The two caretaker governments appointed by Rumen Radev that same year were also exceptions (Radev himself followed the established practice at his own inauguration as president), but because their ceremonies were less formal they slipped by unnoticed.

Despite the momentary political thaw, however, legally Bulgaria is increasingly losing its secular character. The campaign against the Istanbul Convention plays a particular role in this. When the Constitutional Court declared the Convention unconstitutional in 2018, its reasoning did not mention religion, and traditions were mentioned 5 times. In 2021, when the Constitutional Court ruled that sex has a solely biological meaning, its decision mentions religion a full 23 times and “the traditional” 30 times, even though the Constitution itself mentions religion only 7 times, denominations 5 times, and morals twice.

In the decision of the Supreme Court of Cassation (VKS) revoking the right of transgender people to change their legal sex, religion is mentioned only 5 times and morals 4 times. Yet religion and morals are precisely the main argument with which the court strips this group of civil rights.

The exploitation of religion for political ends, including in the guise of old and not-so-old folk traditions, provokes no public outrage, even though it contradicts the Constitution. Invoking religion is even used as immunity against criticism from political opponents. The latter do not even permit themselves criticism based on other religious arguments. They could, for example, answer calls for humility by pointing out that it was Christ who drove the merchants out of the temple, and that this is precisely the point of the fight against corruption and for judicial reform.

If deep religious and traditional meaning is sought at the opening of every National Assembly, we can only guess what might happen if the opening of some future parliament coincided not with Holy Week but, say, with Enyovden. Let us recall how, in “An Optimistic Theory of Our People”, Ivan Hadzhiyski describes the rituals of this ancient Bulgarian feast, which also marks the birth of St. John the Baptist: “On Enyovden the sorceresses, the brodnitsi, would go to some field of fine wheat, strip naked, stick a spoon up their behind, shoulder a loom beam, walk across the field crosswise, gather the tallest ears, the ‘kings’, take out the spoon, lick the end that had been inserted so that their spell could not be undone, and then, by throwing these ‘kings’ into their own field, transfer to it the fertility of the ‘cheated’ field.”

Variants of this ritual could be used to win over recent political opponents in order to secure a parliamentary majority, with the fields of fine wheat symbolizing their parliamentary groups.

If such prospects strike us as funny, then we have not yet been fully “boiled”. But if Bulgaria’s secular character becomes fully submerged in religion and tradition, it will no longer matter whether we find it funny, sad, or do not even notice.

Post Syndicated from Kuba Orlik original https://blog.cloudflare.com/consent-manager/

Depending on where you live you may be asked to agree to the use of cookies when visiting a website for the first time. And if you’ve ever clicked something other than Approve you’ll have noticed that the list of choices about which services should or should not be allowed to use cookies can be very, very long. That’s because websites typically incorporate numerous third party tools for tracking, A/B testing, retargeting, etc. – and your consent is needed for each one of them.

For website owners it’s really hard to keep track of which third party tools are used and whether they’ve asked end users about all of them. There are tools that help you load third-party scripts on your website, and there are tools that help you manage and gather consent. Making the former respect the choices made in the latter is often cumbersome, to say the least.

This changes with Cloudflare Zaraz, a solution that makes third-party tools secure and fast, and that now can also help you with gathering and managing consent. Using the Zaraz Consent Manager, you can easily collect users’ consent preferences on your website, using a consent modal, and apply your consent policy on third-party tools you load via Cloudflare Zaraz. The consent modal treats all the tools it handles as opt-in and lets users accept or reject all of those tools with one click.

The privacy landscape around analytics cookies, retargeting cookies, and similar tracking technologies is changing rapidly. Last year in Europe, for example, the French data regulator fined Google and Facebook millions of euros for making it too difficult for users to reject all cookies. Meanwhile, in California, there have been enforcement actions on retargeting cookies, and new laws on retargeting come into effect in 2023 in California and a handful of other states. As a result, more and more companies are growing wary of potential liability related to data processing activities performed by third party scripts that use additional cookies on their websites.

While the legal requirements vary by jurisdiction, creating a compliance headache for companies trying to promote their goods and services, one thing is clear about the increasing spate of regulation around trackers and cookies – end users need to be given notice and have the opportunity to consent to these trackers.

In Europe, such consent needs to occur before third-party scripts are loaded and executed. Unfortunately, we’ve noticed that this doesn’t always happen. Sometimes it’s because the platform used to generate the consent banner makes it hard to set up in a way that would block those scripts until consent is given. This is a pain point on the road to compliance for many small website owners.

Some consent modals are designed in a deceptive manner, using dark patterns that make the process to refuse consent much more difficult and time-consuming than giving consent. This is not only frustrating to the end users, but also something that regulators are taking enforcement actions to stop.

Cloudflare Zaraz is a tool that lets you offload most of the work done by third-party scripts to Cloudflare Workers, significantly increasing performance and decreasing the time it takes for your site to become fully interactive. To achieve this, users configure third-party scripts in the dashboard. This means Cloudflare Zaraz already has information on what scripts to load and the power to not execute scripts under certain conditions. This is why the team developed a consent modal that integrates with tools already set up in the dashboard and is dead-simple to configure.

To start working with the consent functionality, you just have to provide basic information about the administrator of the website (name, street address, email address), and assign a purpose to each of the tools that you want to be handled by the consent modal. The consent modal will then automatically appear to all the users of your website. You can easily customize the CSS styles of the modal to make it match your brand identity and other style guidelines.

In line with Europe’s ePrivacy Directive and General Data Protection Regulation (GDPR), we’ve made all consent opt-in: that is, trackers or cookies that are not strictly necessary are disabled by default and will only execute after being enabled. With our modal, users can refuse consent to all purposes with one click, and can accept all purposes just as easily, or they can pick and choose to consent to only certain purposes.

The natural consequence of the opt-in nature of consent is the fact that first-time users will not immediately be tracked with tools handled by the consent modal. Using traditional consent management platforms, this could lead to loss of important pageview events. Since Cloudflare Zaraz is tightly integrated with the loading and data handling of all third-party tools on your website, it prevents this data loss automatically. Once a first-time user gives consent to a purpose tied to a third-party script, Zaraz will re-emit the pageview event just for that script.
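The opt-in and re-emit behavior described above can be pictured with a small model. This is only an illustrative sketch, not Zaraz's actual API or implementation: the `Tool` and `ConsentManager` classes, their fields, and the purpose names are all hypothetical. It assumes that every pageview is remembered, that no purpose is granted by default, and that granting a purpose replays the remembered pageviews only to the tools tied to that purpose.

```python
from dataclasses import dataclass, field


@dataclass
class Tool:
    """A third-party tool and the consent purpose it is assigned to (hypothetical model)."""
    name: str
    purpose: str


@dataclass
class ConsentManager:
    """Toy model of opt-in consent with pageview re-emission; not the Zaraz API."""
    tools: list[Tool]
    granted: set[str] = field(default_factory=set)  # opt-in: nothing is granted at first
    seen_pageviews: list[str] = field(default_factory=list)
    delivered: list[tuple[str, str]] = field(default_factory=list)

    def pageview(self, url: str) -> None:
        # Remember the pageview so it can be replayed if consent arrives later.
        self.seen_pageviews.append(url)
        for tool in self.tools:
            if tool.purpose in self.granted:
                self.delivered.append((tool.name, url))

    def grant(self, purpose: str) -> None:
        if purpose in self.granted:
            return
        self.granted.add(purpose)
        # Re-emit earlier pageviews only for the tools this purpose just enabled.
        for tool in self.tools:
            if tool.purpose == purpose:
                for url in self.seen_pageviews:
                    self.delivered.append((tool.name, url))


manager = ConsentManager([Tool("analytics", "measurement"), Tool("ads", "marketing")])
manager.pageview("/home")      # first visit: no consent yet, nothing is delivered
manager.grant("measurement")   # consent arrives: the pageview is replayed for analytics only
print(manager.delivered)       # [('analytics', '/home')]
```

In this model the `ads` tool never sees the `/home` pageview, because its purpose was not granted.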

There are still more features coming to the consent functionality in the future, including the option to make some purposes opt-out, internationalization, and analytics on how people interact with the modal.

Try Zaraz Consent to see for yourself that consent management can be easy to set up: block scripts that don’t have the user’s consent and respect the end-users’ right to choose what happens to their data.

Post Syndicated from Mike Conlow original https://blog.cloudflare.com/making-home-internet-faster/

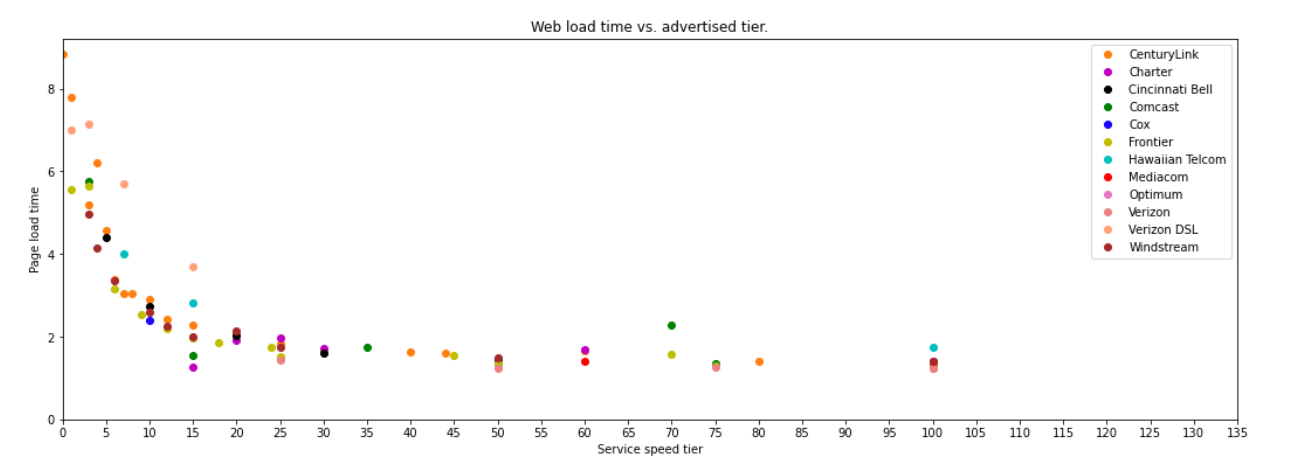

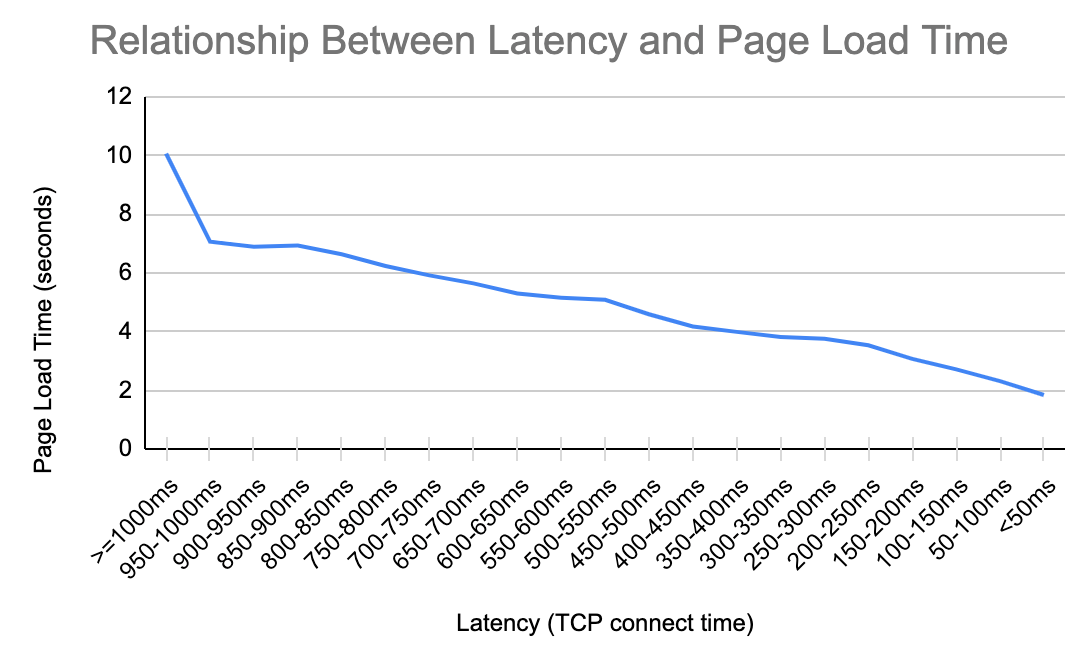

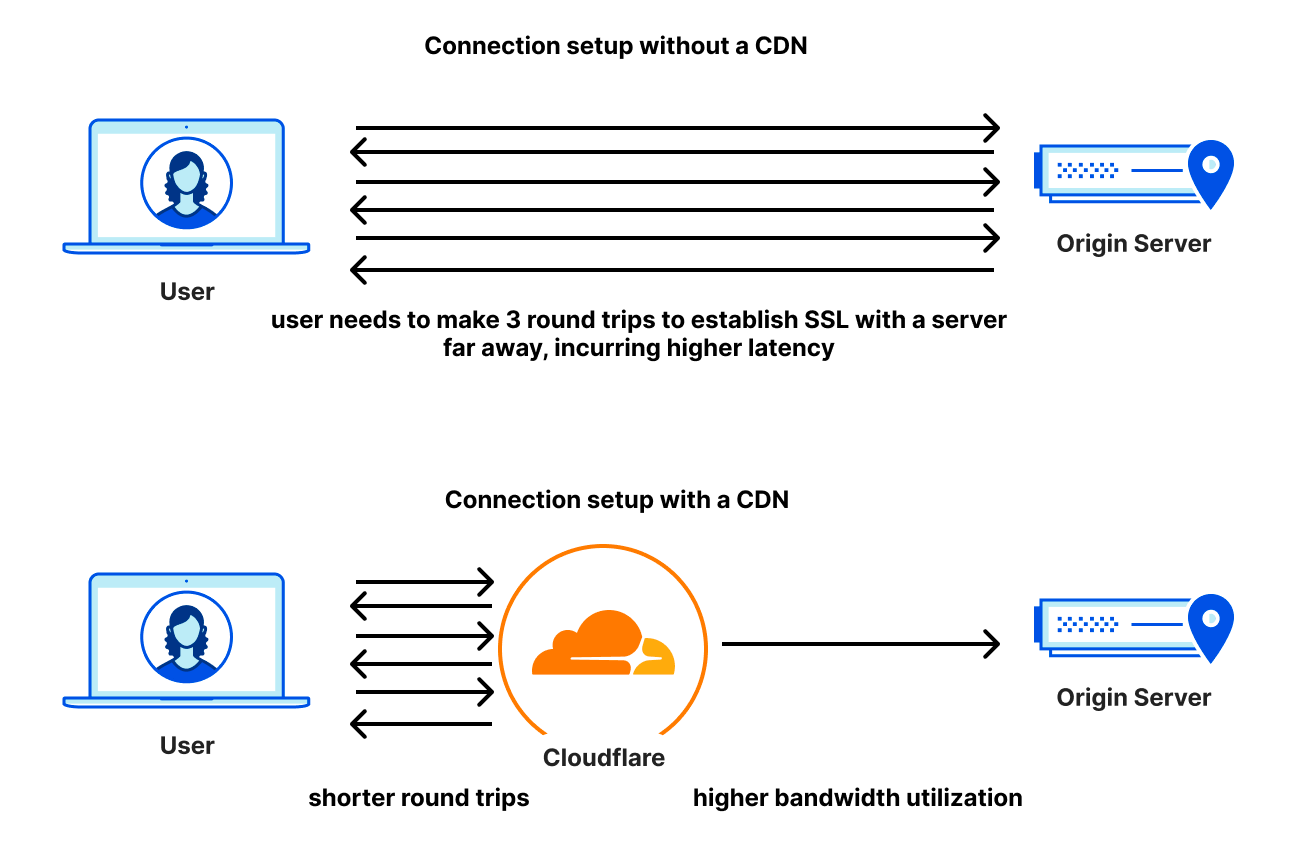

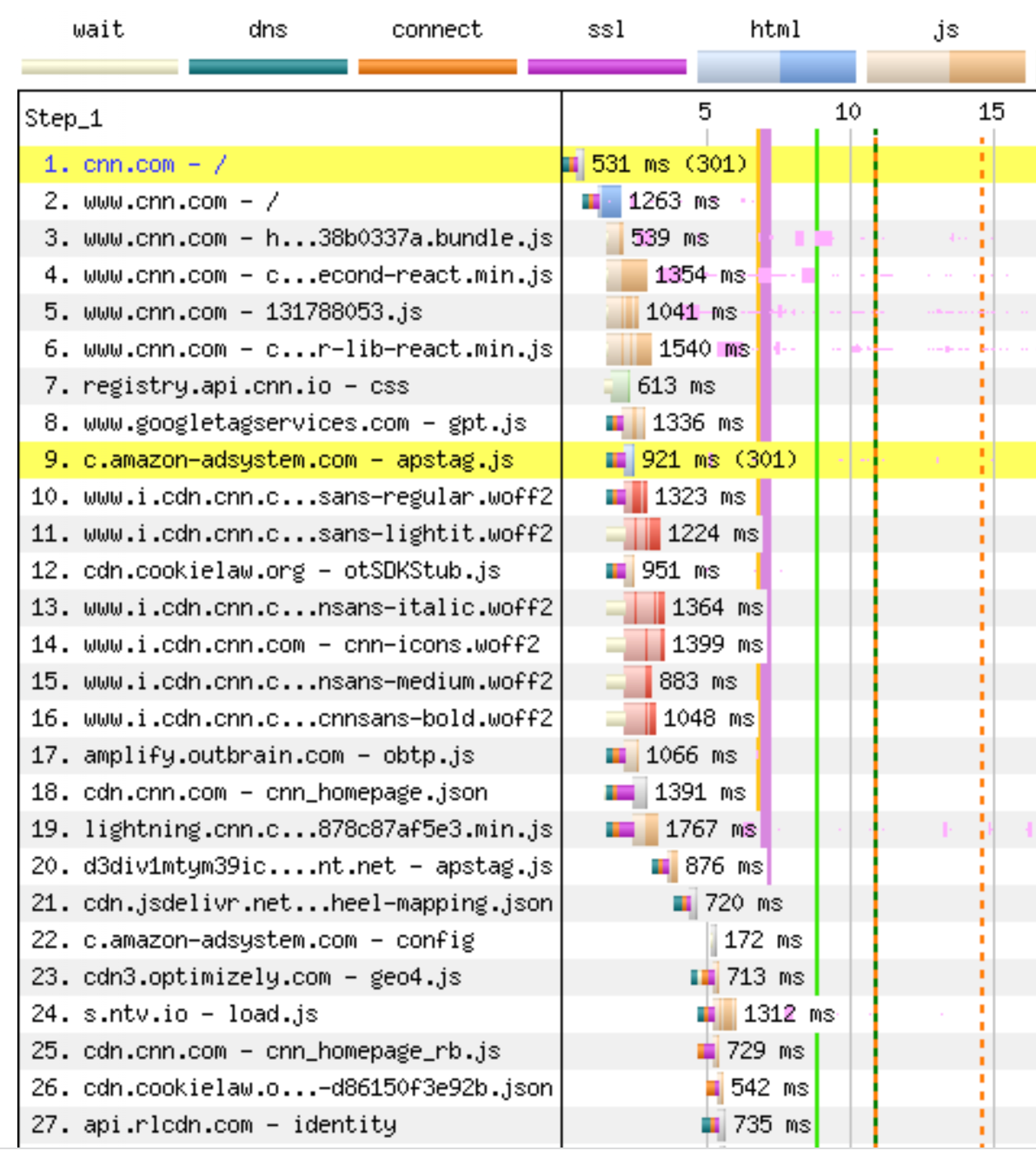

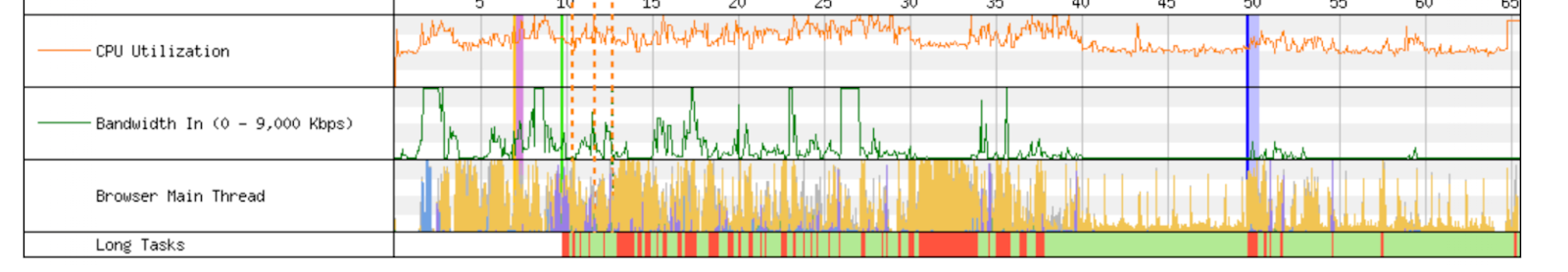

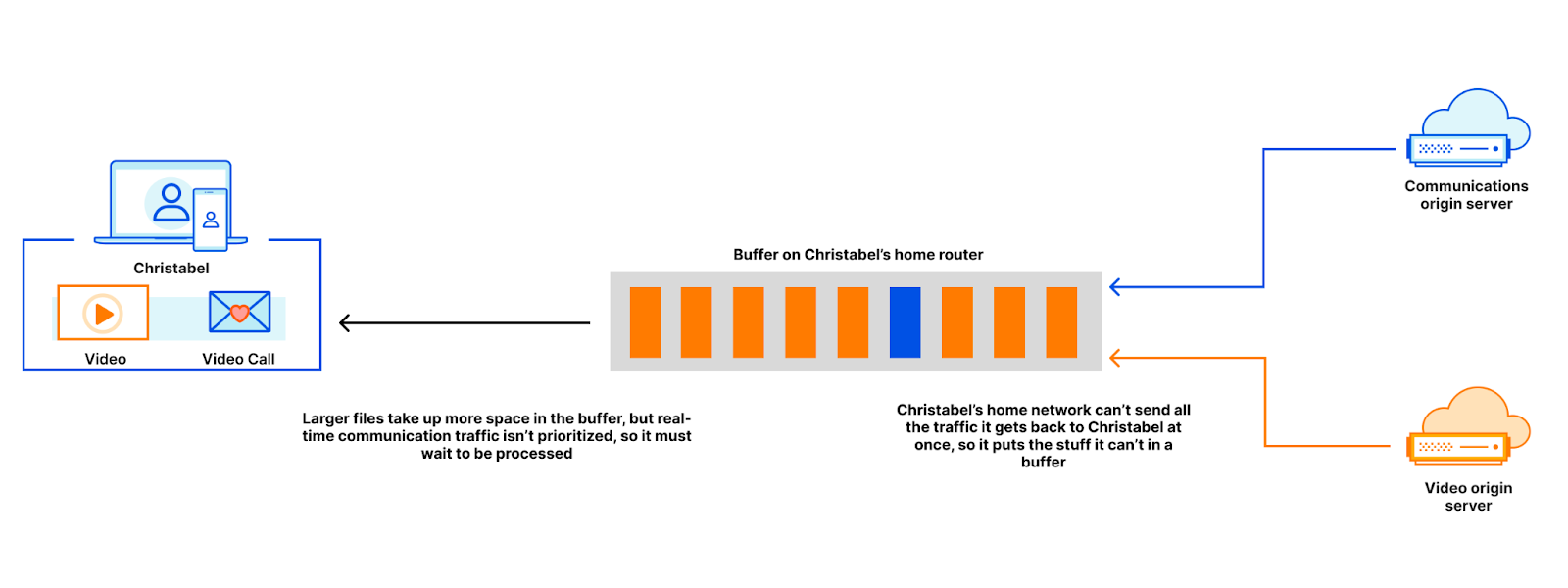

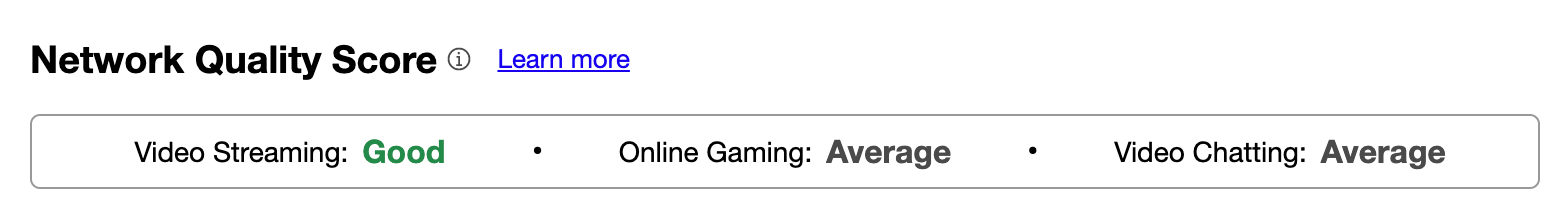

More than ten years ago, researchers at Google published a paper with the seemingly heretical title “More Bandwidth Doesn’t Matter (much)”. We published our own blog post showing it is faster to fly 1TB of data from San Francisco to London than it is to upload it on a 100 Mbps connection. Unfortunately, things haven’t changed much. When you make purchasing decisions about home Internet plans, you probably consider the bandwidth of the connection when evaluating Internet performance. More bandwidth is faster speed, or so the marketing goes. In this post, we’ll use real-world data to show that both bandwidth and – spoiler alert! – latency impact the speed of an Internet connection. By the end, we think you’ll understand why Cloudflare is so laser focused on reducing latency everywhere we can find it.
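The 1TB comparison is easy to check with back-of-the-envelope arithmetic. The sketch below assumes a decimal terabyte (10^12 bytes) and a link that sustains its full rated 100 Mbps with no protocol overhead; both are simplifying assumptions, and the function name is ours.

```python
def transfer_hours(size_bytes: float, link_bits_per_second: float) -> float:
    """Hours needed to push size_bytes through a link running at its full rated bandwidth."""
    return size_bytes * 8 / link_bits_per_second / 3600


ONE_TERABYTE = 1e12    # decimal terabyte (assumption)
LINK_100_MBPS = 100e6  # 100 Mbps, ignoring overhead (assumption)

upload_hours = transfer_hours(ONE_TERABYTE, LINK_100_MBPS)
print(f"{upload_hours:.1f} hours")  # 22.2 hours
```

Even under these best-case assumptions the upload takes most of a day, which is roughly twice as long as a nonstop flight between the two cities.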

First, we should quickly define bandwidth and latency. Bandwidth is the amount of data that can be transmitted at any single time. It’s the maximum throughput, or capacity, of the communications link between two servers that want to exchange data. Usually, the bottleneck – the place in the network where the connection is constrained by the amount of bandwidth available – is in the “last mile”, either the wire that connects a home, or the modem or router in the home itself.

If the Internet is an information superhighway, bandwidth is the number of lanes on the road. The wider the road, the more traffic can fit on the highway at any time. Bandwidth is useful for downloading large files like operating system updates and big game updates. While bandwidth, throughput and capacity are synonyms, confusingly “speed” has come to mean bandwidth when talking about Internet plans. More on this later.

We use bandwidth when streaming video, though probably less than you think. Netflix recommends 15 Mbps of bandwidth to watch a stream in 4K/Ultra HD. A 1 Gbps connection could stream more than 60 Netflix shows in 4K at the same time!
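The “more than 60” figure follows directly from dividing the link capacity by the per-stream recommendation. A minimal sketch, assuming every stream uses exactly the recommended 15 Mbps and nothing else shares the link (the function name is ours):

```python
def concurrent_streams(link_mbps: float, per_stream_mbps: float) -> int:
    """Number of whole streams that fit in the link at the given per-stream rate."""
    return int(link_mbps // per_stream_mbps)


streams = concurrent_streams(1000, 15)  # 1 Gbps link, 15 Mbps per 4K stream
print(streams)  # 66
```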