Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=xCILNrxoF90

Filmmaker Joe Berlinger Discusses “Shadowland” and Conspiracy Theories | The Atlantic Festival 2022

Post Syndicated from The Atlantic original https://www.youtube.com/watch?v=y6kXOr3WV_0

One Year After IntSights Acquisition, Threat Intel’s Value Is Clear

Post Syndicated from Stacy Moran original https://blog.rapid7.com/2022/09/22/one-year-after-intsights-acquisition-threat-intels-value-is-clear/

Rapid7 Strengthens Market Position With 360-Degree XDR and Best-in-Class Threat Intelligence Offerings

Time flies… and provides opportunities to establish proof points. After recently passing the one-year milestone of Rapid7’s acquisition of IntSights, the added value threat intelligence brings to our product portfolio is unmistakable.

Cross-platform SIEM, SOAR, and VM integrations expand capabilities and deliver supercharged XDR

Integrations with Rapid7 InsightIDR (SIEM) and InsightConnect (SOAR) strengthen our product offerings. Infusing these tools with threat intelligence elevates customer security outcomes and delivers greater visibility across applications, while speeding response times. The combination of expertly vetted detections, contextual intelligence, and automated workflows within the security operations center (SOC) helps teams gain immediate visibility into the external attack surface from within their SIEM environments.

The threat intelligence integration with InsightIDR is unique to Rapid7: InsightIDR is the only XDR solution on the market to infuse both generic threat intelligence IOCs and customized digital risk protection coverage. Users receive contextual, tailored alerts based on their digital assets, enabling them to detect potential threats before they hit endpoints and become incident response cases.

Capabilities

- Expand and accelerate threat detection with native integration of Threat Command alerts and TIP Threat Library IOCs with InsightIDR.

- Proactively thwart attack plans with alerts that identify active threats across the attack surface.

Benefits

- 360-degree visibility and protection across your internal and external attack surface

- Faster automated discovery and elimination of threats via correlation of Threat Command alerts with InsightIDR investigative capabilities

Learn more: 360-Degree XDR and Attack Surface Coverage, XDR Solution Brief

The Threat Command Vulnerability Risk Analyzer (VRA) + InsightVM integration delivers complete visibility into digital assets and vulnerabilities across your attack surface, including attacker perspective, trends, and active discussions and exploits. Joint customers can import data from InsightVM into their VRA environment, where CVEs are enriched with valuable context and prioritized by vulnerability criticality and risk, eliminating the guesswork of manual patch management. VRA is a bridge connecting objective critical data with contextualized threat intelligence derived from tactical observations and deep research. In addition to VRA, customers can use Threat Command’s Browser Extension to obtain additional context on CVEs, and the TIP module to see related IOCs and block actively exploited vulnerabilities.

Integration benefits

- Visibility: Continuously monitor assets and associated vulnerabilities.

- Speed: Instantly assess risk from emerging vulnerabilities and improve patching cadence.

- Assessment: Eliminate blind spots with enhanced vulnerability coverage.

- Productivity: Reduce time security analysts spend searching for threats by 75% or more.

- Prioritization: Focus on the vulnerabilities that matter most.

- Automation: Integrate CVEs enriched with threat intelligence into existing security stack.

- Simplification: Rely on intuitive dashboards for centralized vulnerability management.

Learn how to leverage this integration to effectively prioritize and accelerate vulnerability remediation in this short demo and the Integration Solution Brief.

In addition to these game-changing integrations that infuse Rapid7 Insight Platform solutions with external threat intelligence, Threat Command also introduced numerous feature and platform enhancements during the past several months.

Expanded detections and reduced noise

Of all mainstream social media platforms, Twitter has the fewest restrictions and regulations; coupled with maximum anonymity, this makes the service a breeding ground for hostile discourse.

Twitter by the numbers (in 2021)

Threat Command Twitter Chatter coverage continually monitors Twitter discourse and alerts customers regarding mentions of company domains. Expanded Twitter coverage later this year will include company and brand names.

Threat Command’s Information Stealers feature expands the platform’s botnet credentials coverage. We now detect and alert on information-stealing malware that has gathered leaked credentials and private data from infected devices. Customers are alerted when employees or users have been compromised (via corporate email, website, or mobile app). Our unique ability to obtain compromised data, through exclusive access to threat actors, extends protection against this prevalent and growing malware threat.

Accelerated time to value

The recently enhanced Threat Command Asset Management dashboard provides visibility into the risk associated with specific assets, displays asset targeting trends, and enables drill-down for alert investigation. Users can now categorize assets using tags and comments, generate bulk actions for multiple assets, and see a historical perspective of all activity related to specific assets.

Better visibility for faster decisions

Strategic Intelligence is now available to existing Threat Command customers for a limited time in Open Preview mode. The Strategic Intelligence dashboard, aligned to the MITRE ATT&CK framework, enables CISOs and other security executives to track risk over time and assess, plan, and budget for future security investments.

Capabilities

- View potential vulnerabilities attackers may use to execute an attack – aligned to the MITRE ATT&CK framework (tactics & techniques).

- See trends in your external attack surface and track progress over time in exposed areas.

- Benchmark your exposure relative to other Threat Command customers in your sector/vertical.

- Easily communicate gaps and trends to management via dashboard and/or reports.

Benefits

- Rapid7 is the first vendor in the TI space to provide a comprehensive strategic view of an organization’s external threat landscape.

- Achieve your security goals with complete, forward-looking, and actionable intelligence context about your external assets.

- Bridge the communication and reporting gap between your CTI analysts dealing with everyday threats and the CISO, focused on the bigger picture.

Stay tuned!

There are many more exciting feature enhancements and new releases planned by year end.

Learn more about how Threat Command simplifies threat intelligence, delivering instant value for organizations of any size or maturity, while reducing risk exposure.


Rust 1.64.0 released

Post Syndicated from original https://lwn.net/Articles/909085/

Version 1.64.0 of the Rust language has been released. Changes include the stabilization of the IntoFuture trait, easier access to C-compatible types, the availability of rust-analyzer via rustup, and more.

Logpush: now lower cost and with more visibility

Post Syndicated from Duc Nguyen original https://blog.cloudflare.com/logpush-filters-alerts/

Logs are a critical part of every successful application. Cloudflare products and services around the world generate massive amounts of logs upon which customers of all sizes depend. Structured logging from our products is used by customers for purposes including analytics, debugging performance issues, monitoring application health, maintaining security standards for compliance reasons, and much more.

Logpush is Cloudflare’s product for pushing these critical logs to customer systems for consumption and analysis. Whenever our products generate logs as a result of traffic or data passing through our systems from anywhere in the world, we buffer these logs and push them directly to customer-defined destinations like Cloudflare R2, Splunk, AWS S3, and many more.

Today we are announcing three new key features related to Cloudflare’s Logpush product. First, the ability to send only logs that match certain criteria. Second, the ability to get alerted when logs are failing to be pushed, whether because a customer destination is having issues or because of network issues between Cloudflare and the destination. Third, the ability to query analytics about the health of Logpush jobs, such as how many bytes and records were pushed and the number of successful and failed pushes.

Filtering logs before they are pushed

Because logs are both critical and generated in high volume, many customers have to maintain complex infrastructure just to ingest and store logs, as well as deal with ever-increasing related costs. On a typical day, one real customer receives about 21 billion records, or 2.1 terabytes of gzip-compressed logs (about 24.9 TB uncompressed). Over the course of a month, that could easily be hundreds of billions of events and hundreds of terabytes of data.
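As a rough check of those figures, the arithmetic below scales the example customer's daily volume to a month. It is a back-of-envelope sketch that assumes a 30-day month and constant daily volume; both are simplifications for illustration.

```python
# Daily figures for the example customer quoted above.
DAILY_RECORDS = 21e9            # records per day
DAILY_COMPRESSED_TB = 2.1       # gzip-compressed terabytes per day
DAILY_UNCOMPRESSED_TB = 24.9    # uncompressed terabytes per day

DAYS_PER_MONTH = 30             # assumption: a 30-day month


def monthly_volume(days: int = DAYS_PER_MONTH) -> dict:
    """Scale the daily figures linearly to `days` days."""
    return {
        "records": DAILY_RECORDS * days,
        "compressed_tb": DAILY_COMPRESSED_TB * days,
        "uncompressed_tb": DAILY_UNCOMPRESSED_TB * days,
    }


def compression_ratio() -> float:
    """Uncompressed size divided by gzip-compressed size."""
    return DAILY_UNCOMPRESSED_TB / DAILY_COMPRESSED_TB


def avg_record_bytes() -> float:
    """Average uncompressed size of one record, using 1 TB = 10**12 bytes."""
    return DAILY_UNCOMPRESSED_TB * 1e12 / DAILY_RECORDS


if __name__ == "__main__":
    month = monthly_volume()
    print(f"{month['records']:.3g} records/month")
    print(f"{month['compressed_tb']:.0f} TB compressed, {month['uncompressed_tb']:.0f} TB uncompressed")
    print(f"compression ratio ~{compression_ratio():.1f}x, ~{avg_record_bytes():.0f} bytes/record")
```

At these rates a month comes to about 630 billion records and roughly 63 TB compressed (about 747 TB uncompressed), with gzip shrinking the data by a factor of almost 12.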

It is often unnecessary to store and analyze all of this data, and customers could get by with specific subsets of the data matching certain criteria. For example, a customer might want just the set of HTTP data that had status code >= 400, or the set of firewall data where the action taken was to block the user.

We can now achieve this in our Logpush jobs by setting specific filters on the fields of the log messages themselves. You can use either our API or the Cloudflare dashboard to set up filters.
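The sketch below illustrates a push-time filter for the "status code >= 400" example. The filter structure (a where clause combining key/operator/value conditions) mirrors the JSON shape we understand Cloudflare's Logpush filter documentation to use, and EdgeResponseStatus is a field of the HTTP requests dataset; treat both, and especially the operator names, as assumptions to verify against the current docs. The matches evaluator is a hypothetical local stand-in that shows which records would pass, not Cloudflare's implementation.

```python
import json
import operator

# Assumed Logpush-style filter: keep only HTTP requests whose edge response
# status is >= 400. Verify the key and operator names against Cloudflare's docs.
ERROR_FILTER = {
    "where": {
        "and": [
            {"key": "EdgeResponseStatus", "operator": "geq", "value": 400},
        ]
    }
}

# Hypothetical local mapping from operator names to comparisons.
OPERATORS = {
    "eq": operator.eq,
    "!eq": operator.ne,
    "lt": operator.lt,
    "leq": operator.le,
    "gt": operator.gt,
    "geq": operator.ge,
    "contains": lambda field, value: value in field,
}


def matches(record: dict, condition: dict) -> bool:
    """Evaluate a filter node (an 'and'/'or' group or a single condition) locally."""
    if "and" in condition:
        return all(matches(record, c) for c in condition["and"])
    if "or" in condition:
        return any(matches(record, c) for c in condition["or"])
    field = record.get(condition["key"])
    if field is None:
        return False  # a record without the field cannot satisfy the condition
    return OPERATORS[condition["operator"]](field, condition["value"])


def filter_records(records, log_filter):
    """Return only the records that would pass the filter's 'where' clause."""
    return [r for r in records if matches(r, log_filter["where"])]


if __name__ == "__main__":
    sample = [
        {"ClientRequestPath": "/", "EdgeResponseStatus": 200},
        {"ClientRequestPath": "/missing", "EdgeResponseStatus": 404},
        {"ClientRequestPath": "/api", "EdgeResponseStatus": 502},
    ]
    print(json.dumps(ERROR_FILTER))  # serialized form, as a job's filter setting might take it
    print(filter_records(sample, ERROR_FILTER))
```

Only the 404 and 502 records survive, which is the point of filtering at the source: the 200 response never leaves Cloudflare, so it costs nothing to ingest or store.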

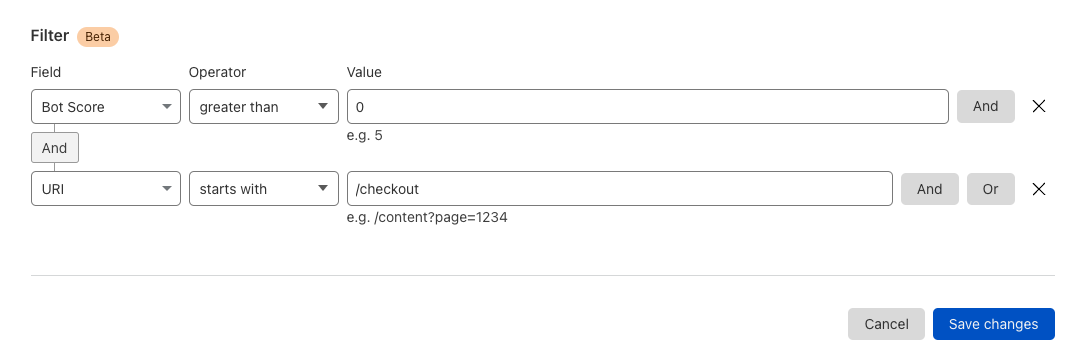

To do this in the dashboard, either create a new Logpush job or modify an existing job. You will see the option to set certain filters. For example, an ecommerce customer might want to receive logs only for the checkout page where the bot score was non-zero:

Logpush job alerting

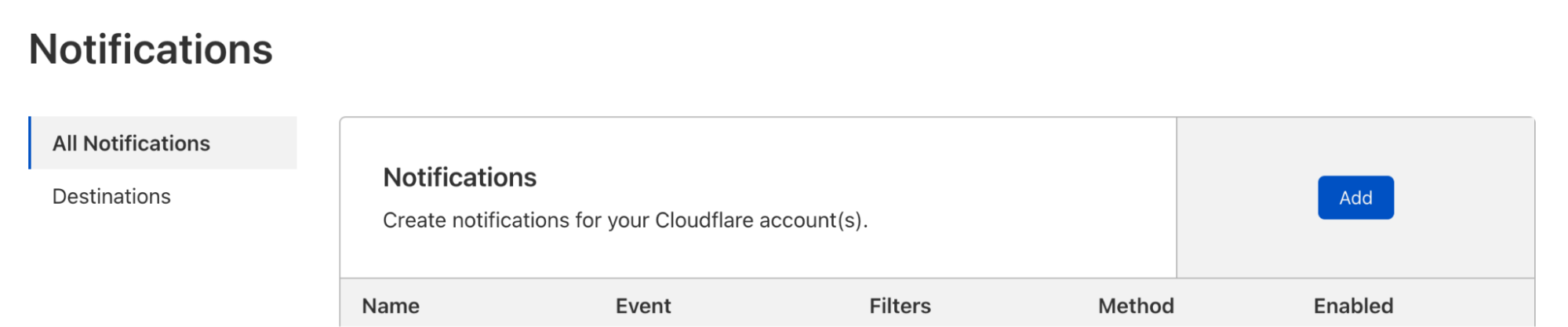

When logs are a critical part of your infrastructure, you want peace of mind that logging infrastructure is healthy. With that in mind, we are announcing the ability to get notified when your Logpush jobs have been retrying to push and failing for 24 hours.

To set up alerts in the Cloudflare dashboard:

1. First, navigate to “Notifications” in the left panel of the account view.

2. Next, click the “add” button.

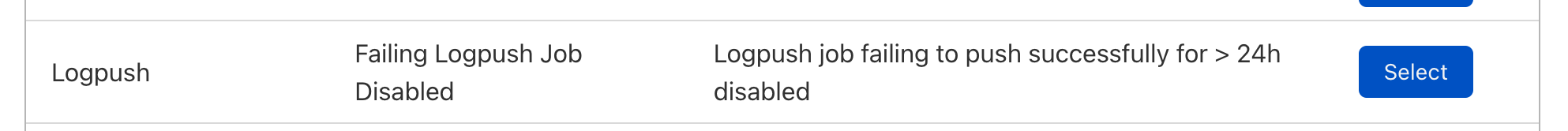

3. Select the alert “Failing Logpush Job Disabled”

4. Configure the alert and click Save.

That’s it — you will receive an email alert if your Logpush job is disabled.

Logpush Job Health API

We have also added Logpush job health statistics to our GraphQL API. Customers can now query for things like the number of bytes pushed, the number of compressed bytes pushed, the number of records pushed, the status of each push, and much more. Using these stats, customers gain greater visibility into a core part of their infrastructure. The GraphQL API is self-documenting, so full details about the new logpushHealthAdaptiveGroups node can be found using any GraphQL client; see the GraphQL docs for more information.

Below are a couple of example queries showing how you can use the GraphQL API to find stats related to your Logpush jobs.

Query for number of pushes to S3 that resulted in status code != 200

```graphql
query ($zoneTag: string) {
  viewer {
    zones(filter: { zoneTag: $zoneTag }) {
      logpushHealthAdaptiveGroups(
        filter: {
          datetime_gt: "2022-08-15T00:00:00Z",
          destinationType: "s3",
          status_neq: 200
        },
        limit: 10
      ) {
        count,
        dimensions {
          jobId,
          status,
          destinationType
        }
      }
    }
  }
}
```
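To run a query like this one programmatically, it is sent as JSON in a POST to Cloudflare's GraphQL endpoint with the zone tag passed as a variable. The sketch below uses only the Python standard library and embeds the query above with $zoneTag declared, as GraphQL requires for variables. The endpoint URL and Bearer-token header follow Cloudflare's API conventions as we understand them; the exact token permissions needed for this node are an assumption to check in the docs.

```python
import json
import urllib.request

# Cloudflare's GraphQL Analytics API endpoint.
GRAPHQL_URL = "https://api.cloudflare.com/client/v4/graphql"

# The failed-pushes query, with $zoneTag declared as a variable.
FAILED_PUSHES_QUERY = """
query ($zoneTag: string) {
  viewer {
    zones(filter: { zoneTag: $zoneTag }) {
      logpushHealthAdaptiveGroups(
        filter: {
          datetime_gt: "2022-08-15T00:00:00Z",
          destinationType: "s3",
          status_neq: 200
        },
        limit: 10
      ) {
        count,
        dimensions { jobId, status, destinationType }
      }
    }
  }
}
"""


def build_request(zone_tag: str, api_token: str,
                  query: str = FAILED_PUSHES_QUERY) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying the query and its variables."""
    body = json.dumps({"query": query, "variables": {"zoneTag": zone_tag}}).encode("utf-8")
    return urllib.request.Request(
        GRAPHQL_URL,
        data=body,
        headers={
            # API token; which permissions it needs is an assumption to verify.
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def run_query(zone_tag: str, api_token: str) -> dict:
    """Send the request and decode the JSON response."""
    with urllib.request.urlopen(build_request(zone_tag, api_token)) as resp:
        return json.load(resp)
```

Usage would look like run_query("<your zone tag>", "<your API token>"), with both placeholders replaced by real values. Keeping request construction separate from sending makes the payload easy to inspect before any network call.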

Getting the number of bytes, compressed bytes and records that were pushed

```graphql
query ($zoneTag: string) {
  viewer {
    zones(filter: { zoneTag: $zoneTag }) {
      logpushHealthAdaptiveGroups(
        filter: {
          datetime_gt: "2022-08-15T00:00:00Z",
          destinationType: "s3",
          status: 200
        },
        limit: 10
      ) {
        sum {
          bytes,
          bytesCompressed,
          records
        }
      }
    }
  }
}
```
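Once the byte and record sums come back, a few lines of code can turn them into totals and a compression ratio. This sketch assumes the usual GraphQL response envelope ({"data": ..., "errors": ...}) with results nested under viewer, zones, and logpushHealthAdaptiveGroups as in the query; the sample numbers in the demo are made up purely to exercise the function.

```python
def summarize_push_totals(response: dict) -> dict:
    """Add up the `sum` blocks across all zones and groups in a GraphQL response."""
    if response.get("errors"):
        raise RuntimeError(f"GraphQL errors: {response['errors']}")
    totals = {"bytes": 0, "bytesCompressed": 0, "records": 0}
    for zone in response["data"]["viewer"]["zones"]:
        for group in zone["logpushHealthAdaptiveGroups"]:
            for key in ("bytes", "bytesCompressed", "records"):
                totals[key] += group["sum"][key]
    # Compression ratio: uncompressed bytes per compressed byte (None if nothing was pushed).
    totals["ratio"] = (
        totals["bytes"] / totals["bytesCompressed"] if totals["bytesCompressed"] else None
    )
    return totals


if __name__ == "__main__":
    # Illustrative response shaped like the query's result; the numbers are invented.
    example = {
        "data": {"viewer": {"zones": [{"logpushHealthAdaptiveGroups": [
            {"sum": {"bytes": 1200, "bytesCompressed": 100, "records": 3}},
            {"sum": {"bytes": 800, "bytesCompressed": 100, "records": 2}},
        ]}]}},
        "errors": None,
    }
    print(summarize_push_totals(example))
```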

Summary

Logpush is a robust and flexible platform for customers who need to integrate their own logging and monitoring systems with Cloudflare. Different Logpush jobs can be deployed to support multiple destinations or, with filtering, multiple subsets of logs.

Customers who haven’t created Logpush jobs are encouraged to do so. Try pushing your logs to R2 for safekeeping! For customers who don’t currently have access to this powerful tool, consider upgrading your plan.

Security updates for Thursday

Post Syndicated from original https://lwn.net/Articles/909051/

Security updates have been issued by Debian (e17, fish, mako, and tinygltf), Fedora (mingw-poppler), Mageia (firefox, google-gson, libxslt, open-vm-tools, redis, and sofia-sip), Oracle (dbus-broker, kernel, kernel-container, mysql, and nodejs and nodejs-nodemon), Slackware (bind), SUSE (cdi-apiserver-container, cdi-cloner-container, cdi-controller-container, cdi-importer-container, cdi-operator-container, cdi-uploadproxy-container, cdi-uploadserver-container, containerized-data-importer, go1.18, go1.19, kubevirt, virt-api-container, virt-controller-container, virt-handler-container, virt-launcher-container, virt-libguestfs-tools-container, virt-operator-container, libconfuse0, and oniguruma), and Ubuntu (bind9 and pcre2).

Cloudflare Zaraz supports Managed Components and DLP to make third-party tools private

Post Syndicated from Yo'av Moshe original https://blog.cloudflare.com/zaraz-uses-managed-components-and-dlp-to-make-tools-private/

When it comes to privacy, much is in your control as a website owner. You decide what information to collect, how to transmit it, how to process it, and where to store it. If you care about the privacy of your users, you’re probably taking action to ensure that these steps are handled sensitively and carefully. If your website includes no third-party tools at all (no analytics, no conversion pixels, no widgets, nothing at all), then that’s probably enough! But if your website is among the other 94% of the Internet, you have some third-party code running on it. Unfortunately, you probably can’t tell exactly what this code is doing.

Third-party tools are great. Your product team, marketing team, BI team – they’re all right when they say that these tools make a better website. Third-party tools can help you understand your users better, embed content such as maps and chat widgets, or measure and attribute conversions more accurately. The problem doesn’t lie with the tools themselves, but with the way they are implemented: third-party scripts.

Third-party scripts are pieces of JavaScript that your website loads, often from a remote web server. Those scripts are then parsed by the browser, and they can generally do everything that your website can do. They can change the page completely, write cookies, read form inputs and URLs, track visitors, and more. It is a largely unrestricted system. Scripts were built this way because it used to be the only way to create a third-party tool.

Over the years, companies have suffered a lot from third-party scripts. Those scripts were sometimes hacked and started hijacking information from visitors to the websites using them. More often, third-party scripts simply collect information that could be sensitive, exposing website visitors in ways the website owner never intended.

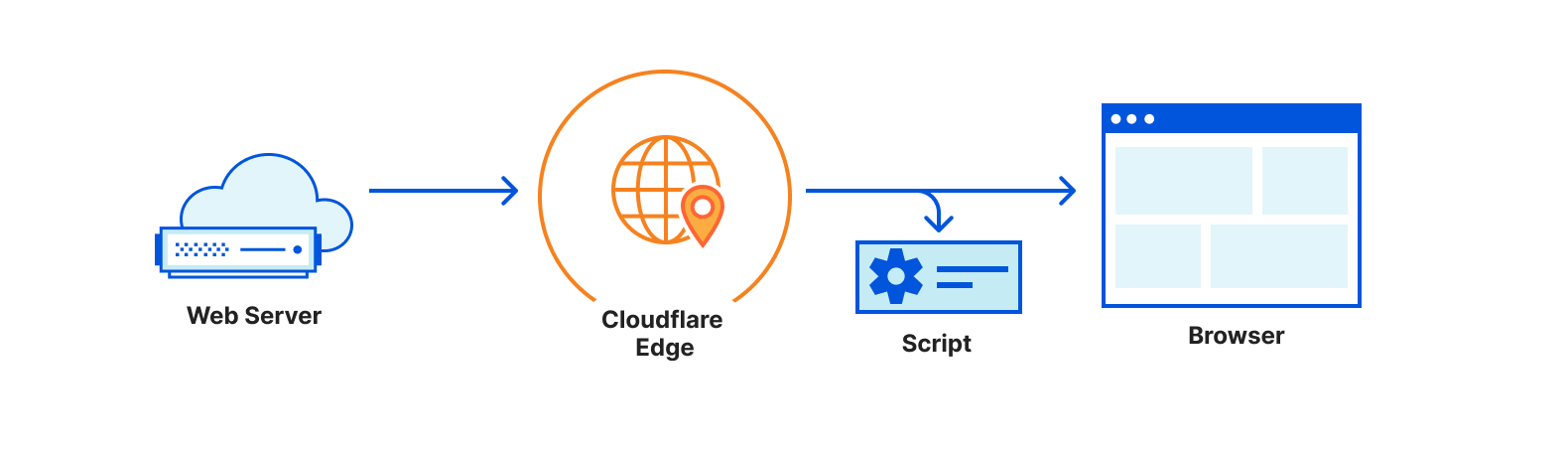

Recently we announced that we’re open sourcing Managed Components. Managed Components are a new API to load third-party tools in a secure and privacy-aware way. They change the way third-party tools load: by default, no third-party scripts are involved at all. Instead, there are components, which are controlled by a Component Manager like Cloudflare Zaraz.

In this blog post we will discuss how to use Cloudflare Zaraz to grant and revoke permissions for components, and to control what information flows into them. Even more exciting, we’re also announcing upcoming DLP features for Cloudflare Zaraz that can report, mask, and remove PII shared with third parties by mistake.

How are Managed Components better

Because Managed Components run isolated inside a Component Manager, they are more private by design. Unlike a script that gets unlimited access to everything on your website, a Managed Component is transparent about what kind of access it needs, and operates under a Component Manager that grants and revokes permissions.

When you add a Managed Component to your website, the Component Manager will list all the permissions required for this component. Such permissions could be “setting cookies”, “making client-side network requests”, “installing a widget” and more. Depending on the tool, you’ll be able to remove permissions that are optional, if your website maintains a more restrictive approach to privacy.

Aside from permissions, the Component Manager also lets you choose what information is exposed to each Managed Component. Perhaps you don’t want to send IP addresses to Facebook? Or would you rather not send user agent strings to Mixpanel? Managed Components put you in control by telling you exactly what information is consumed by each tool, and letting you filter, mask, or hide it according to your needs.
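To illustrate field-level control, here is a hypothetical sketch of a per-component policy that hides or masks event fields before a component receives them. The policy format, component names, field names, and the IP masking rule are all invented for the example; this is not Zaraz's configuration format or the Managed Components API.

```python
from typing import Dict

# Hypothetical per-component policy: each listed field is "hide" or "mask";
# unlisted fields are allowed. Names are illustrative, not Zaraz identifiers.
POLICIES: Dict[str, Dict[str, str]] = {
    "facebook": {"ip": "hide"},
    "mixpanel": {"userAgent": "hide", "ip": "mask"},
}


def mask_ip(ip: str) -> str:
    """Zero the last octet of an IPv4 address (one simple masking choice)."""
    parts = ip.split(".")
    return ".".join(parts[:3] + ["0"]) if len(parts) == 4 else "masked"


def event_for_component(event: Dict[str, str], component: str) -> Dict[str, str]:
    """Return the copy of `event` that the given component is allowed to see."""
    policy = POLICIES.get(component, {})
    result: Dict[str, str] = {}
    for field, value in event.items():
        action = policy.get(field, "allow")
        if action == "hide":
            continue  # the component never receives this field
        if action == "mask":
            value = mask_ip(value) if field == "ip" else "masked"
        result[field] = value
    return result
```

Because the policy is applied before the event is handed over, the same page view can reach different tools with different levels of detail.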

Data Loss Prevention with Cloudflare Zaraz

Another area we’re working on is developing DLP features that let you decide what information to forward to different Managed Components not only by the field type, e.g. “user agent header” or “IP address”, but by the actual content. DLP filters can scan all information flowing into a Managed Component and detect names, email addresses, Social Security numbers (SSNs), and more, regardless of which field they might be hiding under.

Our DLP Filters will be highly flexible. You can decide to enable them only for users from specific geographies, for users on specific pages, or for users with a certain cookie, and you can even mix and match different rules. After configuring your DLP filter, you can choose which Managed Components it applies to, letting you filter information differently according to the receiving target.

For each DLP filter you can choose your action type. For example, you might want to not send any information in which the system detected an SSN, but only report a warning if a first name was detected. Masking will allow you to replace an email address with a masked version of it, making sure events containing email addresses are still sent, but without exposing the address itself.
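Content-based rules like these boil down to "detect, then drop, report, or mask". The following is a simplified, hypothetical illustration of that flow, not Zaraz's DLP engine: the regular expressions are deliberately basic, only email and US-format SSN detectors are included (no name detection), and the action names and the masking format (first character plus domain) are assumptions chosen for the example.

```python
import re
from typing import Dict, List, Optional, Tuple

# Simplified detectors; real DLP engines use far more robust patterns.
DETECTORS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # US SSN in ddd-dd-dddd form
}

# Hypothetical rule set: what to do when each kind of data is detected.
# Kinds without a rule fall back to "report".
ACTIONS = {"ssn": "drop", "email": "mask"}


def mask_email(match: "re.Match") -> str:
    """Keep the first character of the local part and the domain, hide the rest."""
    local, domain = match.group(0).split("@", 1)
    return f"{local[0]}***@{domain}"


def apply_dlp(event: Dict[str, str]) -> Tuple[Optional[Dict[str, str]], List[str]]:
    """Scan every field value, regardless of field name.

    Returns (event to send, or None if it must be dropped; list of warnings).
    """
    warnings: List[str] = []
    cleaned: Dict[str, str] = {}
    for field, value in event.items():
        for kind, pattern in DETECTORS.items():
            if not pattern.search(value):
                continue
            action = ACTIONS.get(kind, "report")
            if action == "drop":
                return None, warnings + [f"{kind} found in {field}; event dropped"]
            if action == "mask" and kind == "email":
                value = pattern.sub(mask_email, value)
            else:
                warnings.append(f"{kind} found in {field}")
        cleaned[field] = value
    return cleaned, warnings
```

Scanning values rather than field names is what lets such a filter catch an email address typed into an unexpected form field.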

While there are many DLP tools available on the market, we believe that the integration between Cloudflare Zaraz’s DLP features and Managed Components is the safest approach, because the DLP rules fence off the information not only before it is sent, but before the component even accesses it.

Getting started with Managed Components and DLP

Cloudflare Zaraz is the most advanced Component Manager, and you can start using it today. We recently also announced an integrated Consent Management Platform. If a third-party tool you use doesn’t have a Managed Component yet, you can always write one of your own, as the technology is completely open source.

While we’re working on bringing advanced permissions handling, data masking and DLP Filters to all users, you can sign up for the closed beta, and we’ll contact you shortly.

API Endpoint Management and Metrics are now GA

Post Syndicated from Jin-Hee Lee original https://blog.cloudflare.com/api-management-metrics/

The Internet is an endless flow of conversations between computers. These conversations, the constant exchange of information from one computer to another, are what allow us to interact with the Internet as we know it. Application Programming Interfaces (APIs) are the vital channels that carry these conversations, and their usage is quickly growing: in fact, more than half of the traffic handled by Cloudflare is for APIs, and this is increasing twice as fast as traditional web traffic.

In March, we announced that we’re expanding our API Shield into a full API Gateway to make it easy for our customers to protect and manage those conversations. We already offer several features that allow you to secure your endpoints, but there’s more to endpoints than their security. It can be difficult to keep track of many endpoints over time and understand how they’re performing. Customers deserve to see what’s going on with their API-driven domains and have the ability to manage their endpoints.

Today, we’re excited to announce that the ability to save, update, and monitor the performance of all your API endpoints is now generally available to API Shield customers. This includes key performance metrics like latency, error rate, and response size that give you insights into the overall health of your API endpoints.

A Refresher on APIs

The bar for what we expect an application to do for us has risen tremendously over the past few years. When we open a browser, app, or IoT device, we expect to be able to connect to data instantly, compare dozens of flights within seconds, choose a menu item from a food delivery app, or see the weather for ten locations at once.

How are applications able to provide this kind of dynamic engagement for their users? They rely on APIs, which provide access to data and services—either from the application developer or from another company. APIs are fundamental in how computers (or services) talk to each other and exchange information.

You can think of an API as a waiter: say a customer orders a delicious bowl of Mac n Cheese. The waiter accepts this order from the customer, communicates the request to the chef in a format the chef can understand, and then delivers the Mac n Cheese back to the customer (assuming the chef has the ingredients in stock). The waiter is the crucial channel of communication, which is exactly what the API does.

Managing API Endpoints

The first step in managing APIs is to get a complete list of all the endpoints exposed to the internet. API Discovery automatically does this for any traffic flowing through Cloudflare. Undiscovered APIs can’t be monitored by security teams (since they don’t know about them) and they’re thus less likely to have proper security policies and best practices applied. However, customers have told us they also want the ability to manually add and manage APIs that are not yet deployed, or they want to ignore certain endpoints (for example those in the process of deprecation). Now, API Shield customers can choose to save endpoints found by Discovery or manually add endpoints to API Shield.

But security vulnerabilities aren’t the only risk or area of concern with APIs – they can be painfully slow or connections can be unsuccessful. We heard questions from our customers such as: what are my most popular endpoints? Is this endpoint significantly slower than it was yesterday? Are any endpoints returning errors that may indicate a problem with the application?

That’s why we built Performance Metrics into API Shield, which allows our customers to quickly answer these questions themselves with real-time data.

Prioritizing Performance

Once you’ve discovered, saved, or removed endpoints, you want to know what’s going well and what’s not. To end-users, a huge part of what defines the experience as “going well” is good performance. Poor performance can lead to a frustrating experience: when you’re shopping online and press a button to check out, you don’t want to wait around for minutes for the page to load. And you certainly never want to see a dreaded error symbol telling you that you can’t get what you came for.

Exposing performance metrics of API endpoints puts concrete numerical data into your developers’ hands to tell you how things are going. When things are going poorly, these dashboard metrics will point out exactly which aspect of performance is causing concern: maybe you expected to see a spike in requests, but find out that request count is normal and latency is just higher than usual.

Empowering our customers to make data-driven decisions to better manage their APIs ends up being a win for our customers and our customers’ customers, who expect to seamlessly engage with the domain’s APIs and get exactly what they came for.

Management and Performance Metrics in the Dashboard

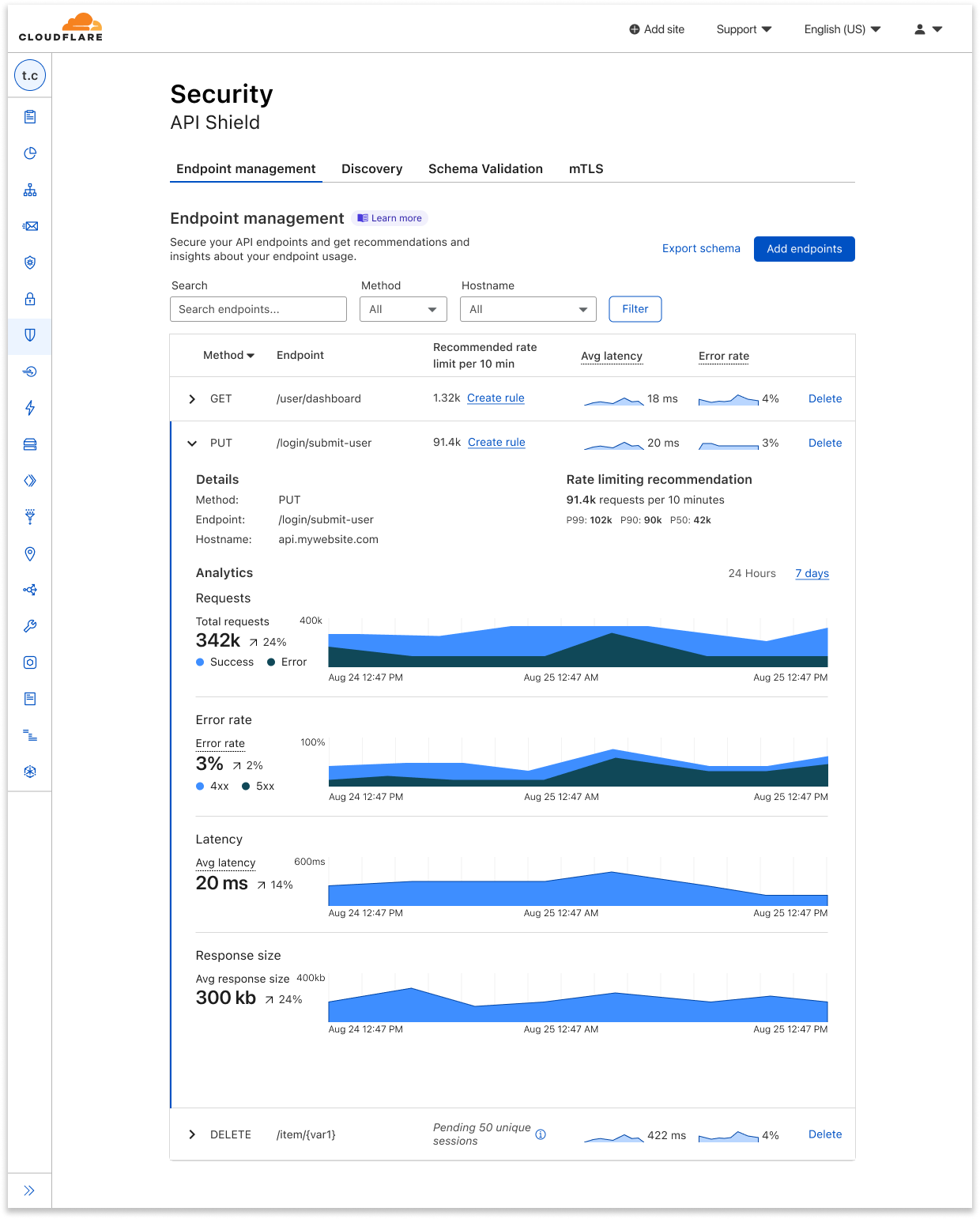

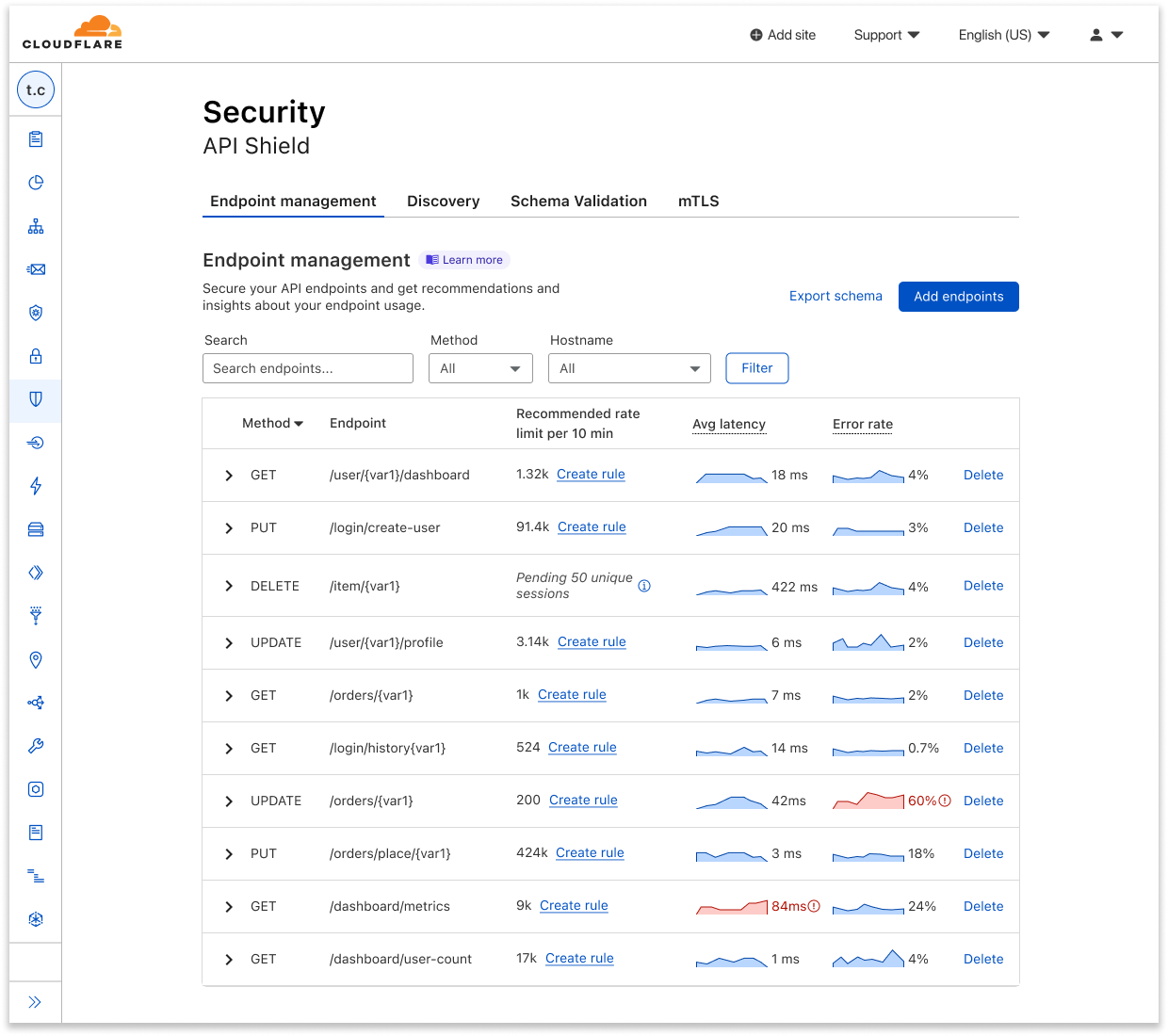

So, what’s available today? Log onto your Cloudflare dashboard, go to the domain-level Security tab, and open up the API Shield page. Here, you’ll see the Endpoint Management tab, which shows you all the API endpoints that you’ve saved, alongside placeholders for metrics that will soon be gathered.

Here you can easily delete endpoints you no longer want to track, or click to manually add additional endpoints. You can also export schemas for each host to share internally or externally.

Once you’ve saved the endpoints that you want to keep tabs on, Cloudflare will start collecting data on their performance and make it available to you as soon as possible.

In Endpoint Management, you can see a few summary metrics in the collapsed view of each endpoint, including recommended rate limits, average latency, and error rate. It can be difficult to tell whether things are going well or not just from seeing a value alone, so we added sparklines that show relative performance, comparing an endpoint’s current metrics with its usual or previous data.

If you want to view further details about a given endpoint, you can expand it for additional metrics such as response size and errors separated by 4xx and 5xx. The expanded view also allows you to view all metrics at a single timestamp by hovering over the charts.

For each saved endpoint, customers can see the following metrics:

- Request count: total number of requests to the endpoint over time.

- Rate limiting recommendation: a suggested limit per 10 minutes, guided by the request count.

- Latency: average origin response time, in milliseconds (ms). How long does it take from the moment a visitor makes a request to the moment the visitor gets a response back from the origin?

- Error rate vs. overall traffic: grouped by 4xx, 5xx, and their sum.

- Response size: average size of the response (in bytes) returned to the request.
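To make the list concrete, here is a minimal sketch that computes these metrics for one endpoint over one time window from raw request records. The Request record shape is hypothetical, and the rate limiting recommendation is left out because the text does not describe how Cloudflare derives it.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Request:
    """One observed API request (hypothetical shape for illustration)."""
    status: int
    latency_ms: float      # origin response time
    response_bytes: int


def endpoint_metrics(requests: List[Request]) -> Dict[str, float]:
    """Compute request count, average latency, error rates, and average response size."""
    count = len(requests)
    if count == 0:
        return {"requests": 0}
    err_4xx = sum(1 for r in requests if 400 <= r.status < 500)
    err_5xx = sum(1 for r in requests if 500 <= r.status < 600)
    return {
        "requests": count,
        "avg_latency_ms": sum(r.latency_ms for r in requests) / count,
        "error_rate_4xx": err_4xx / count,
        "error_rate_5xx": err_5xx / count,
        "error_rate_total": (err_4xx + err_5xx) / count,
        "avg_response_bytes": sum(r.response_bytes for r in requests) / count,
    }
```

Expressing errors as a share of overall traffic, rather than as raw counts, keeps the error rate comparable between quiet and busy periods.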

You can toggle between viewing these metrics on a 24-hour period or a 7-day period, depending on the scale on which you’d like to view your data. And in the expanded view, we provide a percentage difference between the averages of the current vs. the previous period. For example, say I’m viewing my metrics on a 24-hour timeline. My average latency yesterday was 10 ms, and my average latency today is 30 ms, so the dashboard shows a 200% increase. We also use anomaly detection to bring attention to endpoints that have concerning performance changes.
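The percentage in the latency example is the change relative to the previous period's average: (current - previous) / previous × 100. A minimal sketch of that calculation:

```python
def percent_change(previous_avg: float, current_avg: float) -> float:
    """Percentage difference of the current period's average relative to the previous one."""
    if previous_avg == 0:
        raise ValueError("previous average is zero; percentage change is undefined")
    return (current_avg - previous_avg) / previous_avg * 100


# The latency example from the text: 10 ms yesterday, 30 ms today.
print(f"{percent_change(10, 30):+.0f}%")  # +200%
```

A tripling of latency therefore shows as a 200% increase, not 300%; a drop from 20 ms to 10 ms would show as -50%.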

Additional improvements to Discovery and Schema Validation

As part of making endpoint management GA, we’re also adding two additional enhancements to API Shield.

First, API Discovery now accepts cookies — in addition to authorization headers — to discover endpoints and suggest rate limiting thresholds. Previously, you could only identify an API session with HTTP headers, which didn’t allow customers to protect endpoints that use cookies as session identifiers. Now these endpoints can be protected as well. Simply go to the API Shield tab in the dashboard, choose edit session identifiers, and either change the type, or click Add additional identifier.
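A session identifier is whatever value on a request ties it to one client session. The sketch below illustrates looking one up from either a header or a cookie, using Python's standard http.cookies module to parse the Cookie header. The configuration list, the cookie name session_id, and the returned key format are hypothetical; this is not how API Discovery is implemented.

```python
from http.cookies import SimpleCookie
from typing import Dict, List, Optional, Tuple

# Hypothetical configuration: an ordered list of session identifiers,
# each either ("header", name) or ("cookie", name).
SESSION_IDENTIFIERS: List[Tuple[str, str]] = [
    ("header", "authorization"),
    ("cookie", "session_id"),
]


def session_key(headers: Dict[str, str]) -> Optional[str]:
    """Return the first configured identifier present on the request, if any."""
    lowered = {k.lower(): v for k, v in headers.items()}  # header names are case-insensitive
    for kind, name in SESSION_IDENTIFIERS:
        if kind == "header" and name in lowered:
            return f"header:{name}={lowered[name]}"
        if kind == "cookie" and "cookie" in lowered:
            jar = SimpleCookie()
            jar.load(lowered["cookie"])
            if name in jar:
                return f"cookie:{name}={jar[name].value}"
    return None
```

Without the cookie entry, a request carrying only a session cookie would yield no key at all, which is the gap the cookie support closes.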

Second, we added the ability to validate the body of requests via Schema Validation for all customers. Schema Validation allows you to provide an OpenAPI schema (a template for your API traffic) and have Cloudflare block non-conformant requests as they arrive at our edge. Previously, you provided specific headers, cookies, and other features to validate. Now that we can validate the body of requests, you can use Schema Validation to confirm every element of a request matches what is expected. If a request contains strange information in the payload, we’ll notice. Note: customers who have already uploaded schemas will need to re-upload to take advantage of body validation.
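Body validation means checking the parsed request payload against the schema and rejecting the request if anything does not conform. The sketch below is a deliberately tiny validator covering a small subset of JSON Schema keywords (type, required, properties, items), with a made-up order schema for illustration. Cloudflare's Schema Validation works from full OpenAPI schemas and is not shown here.

```python
from typing import Any, Dict, List

# Map a small subset of JSON Schema / OpenAPI type names to Python types.
TYPES = {
    "object": dict,
    "array": list,
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
}


def validate(value: Any, schema: Dict[str, Any], path: str = "$") -> List[str]:
    """Return a list of problems; an empty list means the value conforms."""
    expected = schema.get("type")
    if expected:
        # bool is a subclass of int in Python, so reject it explicitly for numeric types.
        if isinstance(value, bool) and expected in ("integer", "number"):
            return [f"{path}: expected {expected}, got boolean"]
        if not isinstance(value, TYPES[expected]):
            return [f"{path}: expected {expected}, got {type(value).__name__}"]
    problems: List[str] = []
    if expected == "object":
        for name in schema.get("required", []):
            if name not in value:
                problems.append(f"{path}.{name}: missing required property")
        for name, sub in schema.get("properties", {}).items():
            if name in value:
                problems.extend(validate(value[name], sub, f"{path}.{name}"))
    elif expected == "array" and "items" in schema:
        for i, item in enumerate(value):
            problems.extend(validate(item, schema["items"], f"{path}[{i}]"))
    return problems


# Illustrative request-body schema, as it might appear under an OpenAPI requestBody.
ORDER_SCHEMA = {
    "type": "object",
    "required": ["item", "quantity"],
    "properties": {"item": {"type": "string"}, "quantity": {"type": "integer"}},
}
```

A gateway applying this idea would block any request whose body produces a non-empty problem list, so a payload like {"item": "x", "quantity": "two"} never reaches the origin.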

Take a look at our developer documentation for more details on both of these features.

Get started

Endpoint Management, performance metrics, schema exporting, discovery via cookies, and schema body validation are all available now for all API Shield customers. To use them, log into the Cloudflare dashboard, click on Security in the navigation bar, and choose API Shield. Once API Shield is enabled, you’ll be able to start discovering endpoints immediately. You can also use all features through our API.

If you aren’t yet protecting a website with Cloudflare, it only takes a few minutes to sign up.

Astro Pi Mission Zero 2022/23 is open for young people

Post Syndicated from Sam Duffy original https://www.raspberrypi.org/blog/astro-pi-mission-zero-2022-23-is-open/

Inspire young people about coding and space science with Astro Pi Mission Zero. Mission Zero offers young people the chance to write code that will run in space! It opens for participants today.

What is Mission Zero?

In Mission Zero, young people write a simple computer program to run on an Astro Pi computer on board the International Space Station (ISS).

Following step-by-step instructions, they write code to take a reading from an Astro Pi sensor and display a colourful image for the ISS astronauts to see as they go about their daily tasks. This is a great, one-hour activity for beginners to programming.

Participation is free and open for young people up to age 19 in ESA Member States (eligibility details). Everything can be done in a web browser, on any computer with internet access. No special hardware or prior coding skills are needed.

Participants will receive a piece of space science history to keep: a personalised certificate they can download, which shows their Mission Zero program’s exact start and end time, and the position of the ISS when their program ran.

All young people’s entries that meet the eligibility criteria and follow the official Mission Zero guidelines will have their program run in space for up to 30 seconds.

Mission Zero 2022/23 is open until 17 March 2023.

New this year for Mission Zero participants

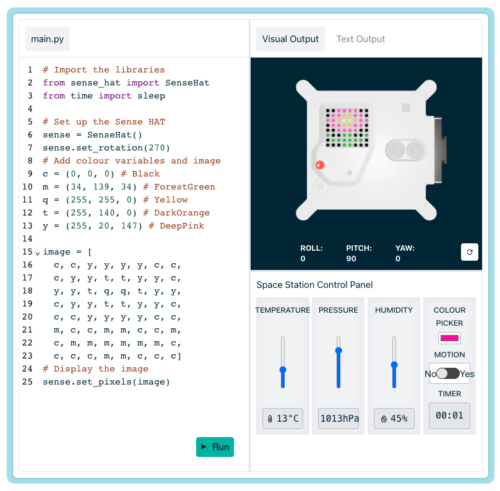

If you’ve been involved in Mission Zero before, you will notice lots of things have changed. This year’s Mission Zero participants will be the first to use our brand-new online code editor, a tool that makes it super easy to write their program using the Python language.

Thanks to the new Astro Pi computers that we sent to the ISS in 2021, there’s a brand-new colour and luminosity sensor, which has never been available to Mission Zero programmers before.

Finally, this year we’re challenging coders to create a colourful image to show on the Astro Pi’s LED display, and to use the data from the colour sensor to determine the image’s background colour.
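The challenge described above can be sketched in Python, the language participants use. This is an illustrative sketch, not the official Mission Zero starter code: the flower design and colours are invented for the example, and the sense_hat calls (sense.color.gain, sense.color.integration_cycles, sense.color.red/green/blue, set_pixels) reflect our understanding of the Sense HAT library's colour sensor API, so check them against the Mission Zero guide. Only build_image runs without Astro Pi hardware.

```python
# Colours for the drawing (illustrative choices).
YELLOW = (255, 220, 0)   # petals
ORANGE = (255, 120, 0)   # flower centre
GREEN = (0, 150, 0)      # stem and leaf

# An 8x8 flower, one string per LED row; "." marks background pixels.
FLOWER = [
    "........",
    "..y.y...",
    "...o....",
    "..y.y...",
    "...g....",
    "...g.g..",
    "...gg...",
    "...g....",
]


def build_image(background):
    """Return the 64 (r, g, b) pixels of FLOWER drawn over `background`.

    Background components are clamped to 0-255, the range the LED matrix accepts.
    """
    bg = tuple(max(0, min(255, int(c))) for c in background)
    palette = {".": bg, "y": YELLOW, "o": ORANGE, "g": GREEN}
    return [palette[ch] for row in FLOWER for ch in row]


def run_on_astro_pi():
    """Read the colour sensor and show the flower (needs the sense_hat library)."""
    from sense_hat import SenseHat  # only importable where the Sense HAT library is installed

    sense = SenseHat()
    sense.color.gain = 60                # sensor gain
    sense.color.integration_cycles = 64  # longer integration time per reading
    rgb = sense.color
    sense.set_pixels(build_image((rgb.red, rgb.green, rgb.blue)))
```

Drawing the picture as rows of characters keeps the design easy to edit by eye, and the sensor reading only ever changes the "." pixels.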

The theme to inspire images for Mission Zero 2022/23 is ‘flora and fauna’. The images participants design can represent any aspect of this theme, such as flowers, trees, animals, or insects. Young people could even choose to program a series of images to show a short animation during the 30 seconds their program will run.

Here are some examples of images created by last year’s Mission Zero participants. What will you create?

Sign up for Astro Pi news

The European Astro Pi Challenge is an ESA Education project run in collaboration with us here at the Raspberry Pi Foundation. Young people can also take part in Astro Pi Mission Space Lab, where they will work to design a real scientific experiment to run on the Astro Pi computers.

You can keep updated with all of the latest Astro Pi news by following the Astro Pi Twitter account or signing up to the newsletter at astro-pi.org.

The post Astro Pi Mission Zero 2022/23 is open for young people appeared first on Raspberry Pi.

Handy Tips #38: Automating SNMP item creation with low-level discovery

Post Syndicated from Arturs Lontons original https://blog.zabbix.com/handy-tips-38-automating-snmp-item-creation-with-low-level-discovery/23521/

The post Handy Tips #38: Automating SNMP item creation with low-level discovery appeared first on Zabbix Blog.

Protests spur Internet disruptions in Iran

Post Syndicated from David Belson original https://blog.cloudflare.com/protests-internet-disruption-ir/

Over the past several days, protests and demonstrations have erupted across Iran in response to the death of Mahsa Amini. Amini was a 22-year-old woman from the Kurdistan Province of Iran, and was arrested on September 13, 2022, in Tehran by Iran’s “morality police”, a unit that enforces strict dress codes for women. She died on September 16 while in police custody.

Published reports indicate that the growing protests have resulted in at least eight deaths. Iran has a history of restricting Internet connectivity in response to protests, taking such steps in May 2022, February 2021, and November 2019. The government has taken a similar approach to the current protests, including disrupting Internet connectivity, blocking social media platforms, and blocking DNS. The impact of these actions, as seen through Cloudflare's data, is reviewed below.

Impact to Internet traffic

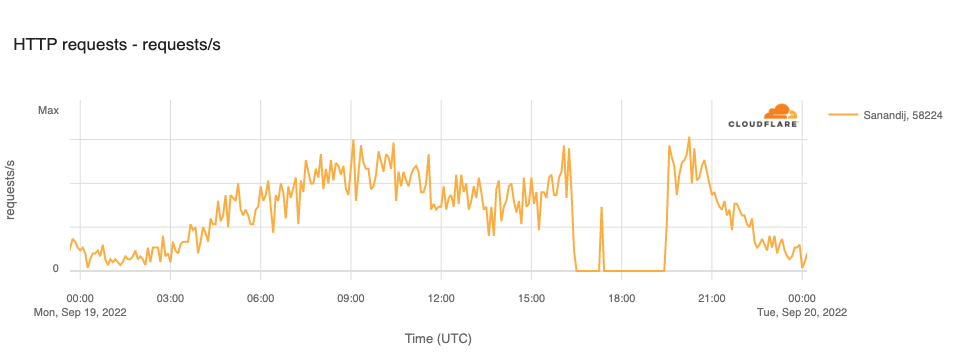

In the city of Sanandaj in the Kurdistan Province, several days of anti-government protests took place after the death of Mahsa Amini. In response, the government reportedly disrupted Internet connectivity there on September 19. This disruption is clearly visible in the graph below, with traffic on TCI (AS58224), Iran's fixed-line incumbent operator, in Sanandaj dropping to zero between 1630 and 1925 UTC, except for a brief spike between 1715 and 1725 UTC.

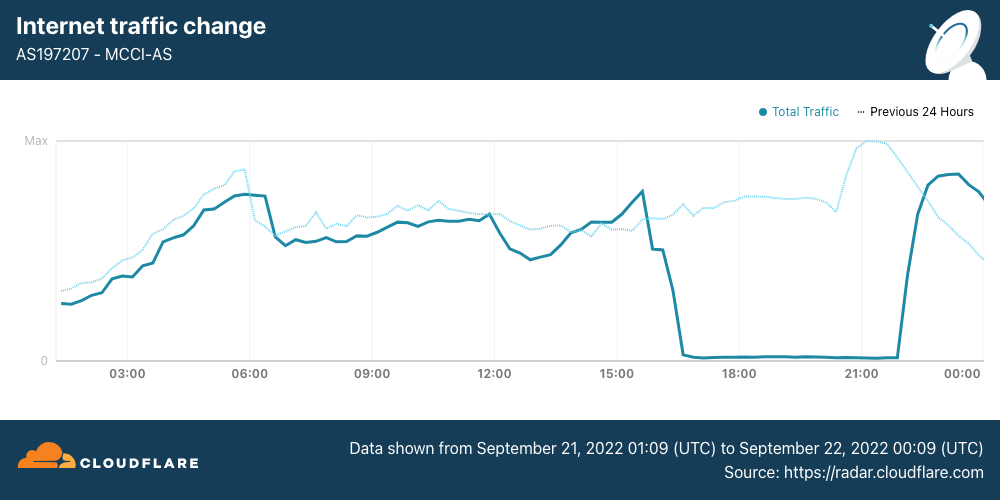

On September 21, Internet disruptions started to become more widespread, with mobile networks effectively shut down nationwide. (Iran is a heavily mobile-centric country, with Cloudflare Radar reporting that 85% of requests are made from mobile devices.) Internet traffic from Iran Mobile Communications Company (AS197207) started to decline around 1530 UTC, and remained near zero until it started to recover at 2200 UTC, returning to “normal” levels by the end of the day.

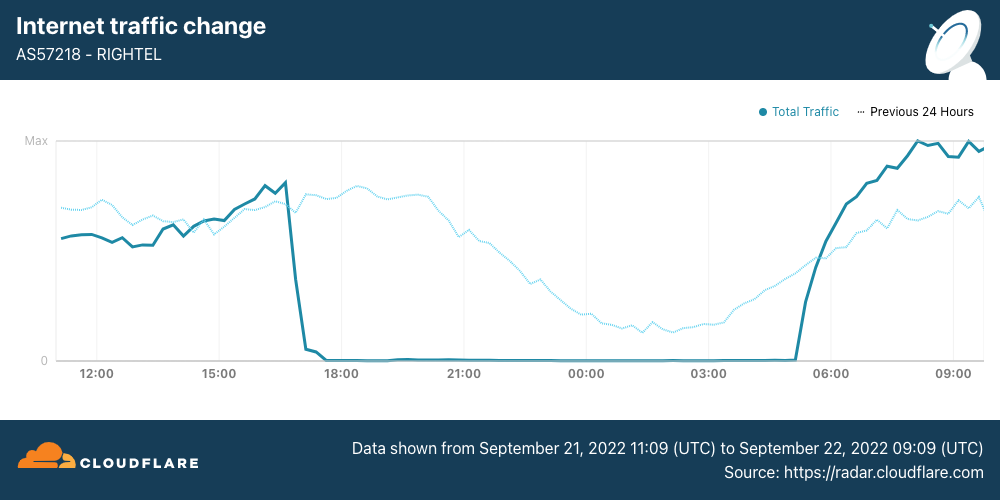

Internet traffic from RighTel (AS57218) began to decline around 1630 UTC. After an outage lasting more than 12 hours, traffic returned at 0510 UTC.

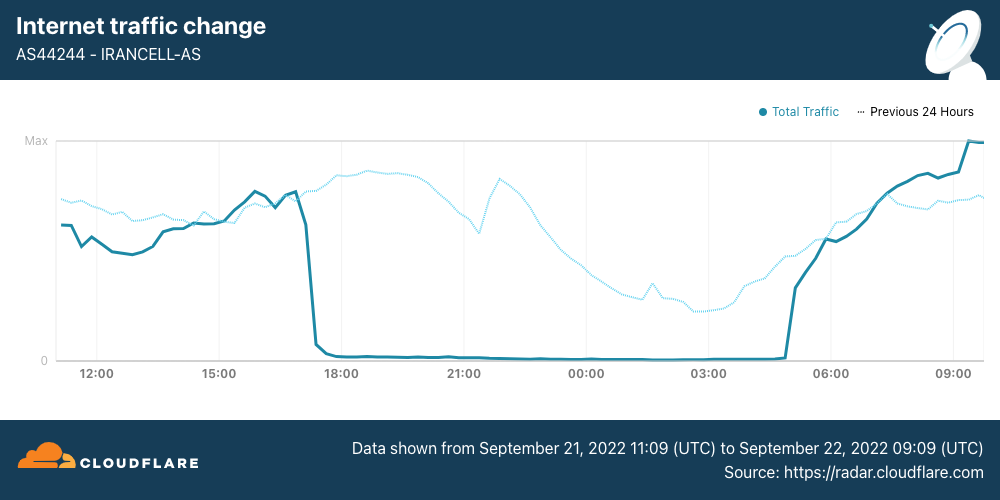

Internet traffic from MTN Irancell (AS44244) began to drop just before 1700 UTC. After a 12-hour outage, traffic began recovering at 0450 UTC.

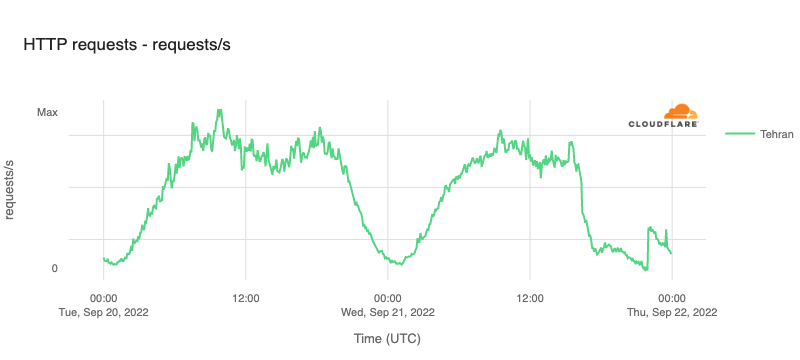

The impact of these disruptions is also visible when looking at traffic at both a regional and national level. In Tehran Province, HTTP request volume declined by approximately 70% around 1600 UTC, and continued to drop for the next several hours before seeing a slight recovery at 2200 UTC, likely related to the recovery also seen at that time on AS197207.

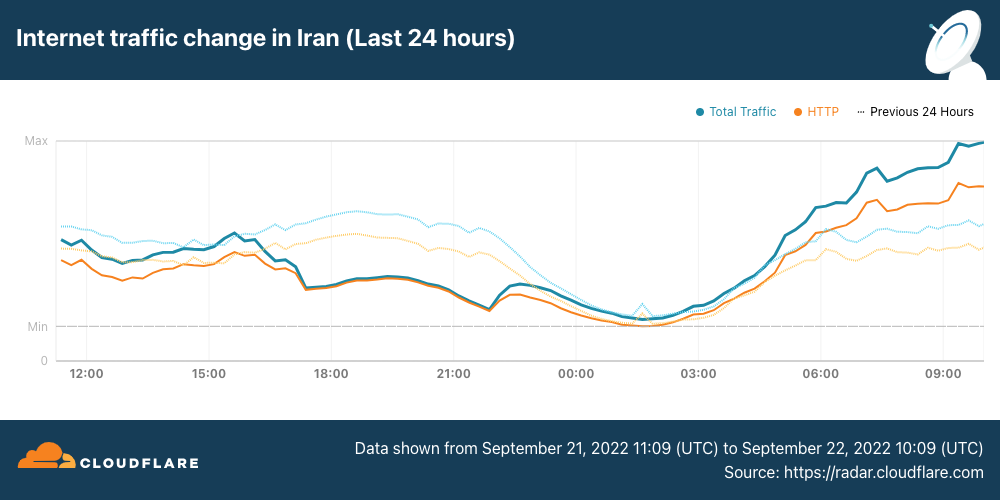

Similarly, Internet traffic volumes across the whole country began to decline just after 1600 UTC, falling approximately 40%. Nominal recovery at 2200 UTC is visible in this view as well, again likely from the increase in traffic from AS197207. More aggressive traffic growth is visible starting around 0500 UTC, after the remaining two mobile network providers came back online.
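Outage windows and percentage declines like the ones described above can be derived mechanically from a request-count time series. The Python sketch below uses synthetic five-minute samples, not Cloudflare's data. Their shape follows the TCI (AS58224) disruption described above: zero traffic with a brief spike in the middle. The threshold of 10% of a baseline is a hypothetical choice.

```python
from datetime import datetime, timedelta


def find_outages(series, baseline, threshold=0.1):
    """Return [(start, end)] windows where traffic fell below threshold * baseline.

    `series` is a time-sorted list of (timestamp, request_count) pairs. `end` is
    the first sample back above the threshold, or None if traffic never recovered.
    """
    windows, start = [], None
    for ts, count in series:
        low = count < threshold * baseline
        if low and start is None:
            start = ts
        elif not low and start is not None:
            windows.append((start, ts))
            start = None
    if start is not None:
        windows.append((start, None))
    return windows


def percent_decline(baseline, observed):
    """Percentage drop of `observed` relative to `baseline`."""
    return 100.0 * (baseline - observed) / baseline


def at(hour, minute):
    return datetime(2022, 9, 19, hour, minute)


# Synthetic samples from 16:00 to 19:55 UTC: traffic is zero from 16:30 to
# 19:25, except for a brief spike between 17:15 and 17:25.
series = []
for i in range(48):
    ts = at(16, 0) + timedelta(minutes=5 * i)
    down = at(16, 30) <= ts < at(19, 25)
    spike = at(17, 15) <= ts < at(17, 25)
    series.append((ts, 0 if down and not spike else 1000))

for start, end in find_outages(series, baseline=1000):
    print(start.strftime("%H:%M"), "->", end.strftime("%H:%M") if end else "ongoing")
# 16:30 -> 17:15
# 17:25 -> 19:25
```

The same `percent_decline` arithmetic applies to figures like the roughly 70% drop in Tehran Province. For example, a fall from 1,000 to 300 requests per interval is a 70% decline.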

DNS blocking

In addition to shutting down mobile Internet providers within the country, Iran's government also reportedly blocked access to the social media platform Instagram, as well as access to DNS-over-HTTPS from open DNS resolver services including Quad9, Google's 8.8.8.8, and Cloudflare's 1.1.1.1. Analysis of requests originating in Iran to 1.1.1.1 illustrates the impacts of these blocking attempts.

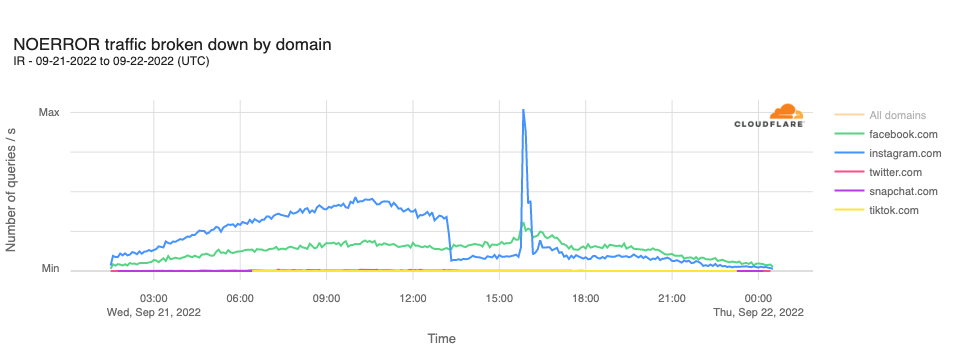

In analyzing DNS requests to Cloudflare’s resolver for domains associated with leading social media platforms, we observe that requests for instagram.com hostnames drop sharply at 1310 UTC, remaining lower for the rest of the day, except for a significant unexplained spike in requests between 1540 and 1610 UTC. Request volumes for hostnames associated with other leading social media platforms did not appear to be similarly affected.

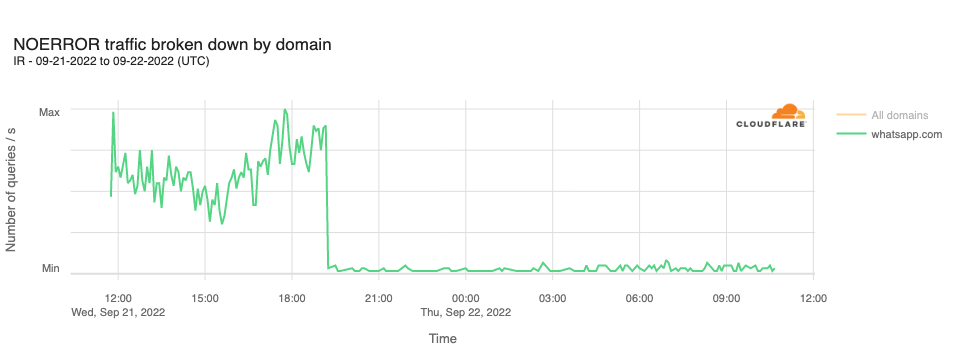

In addition, it was reported that access to WhatsApp had also been blocked in Iran. This can be seen in resolution requests to Cloudflare’s resolver for whatsapp.com hostnames. The graph below shows a sharp decline in query traffic at 1910 UTC, dropping to near zero.

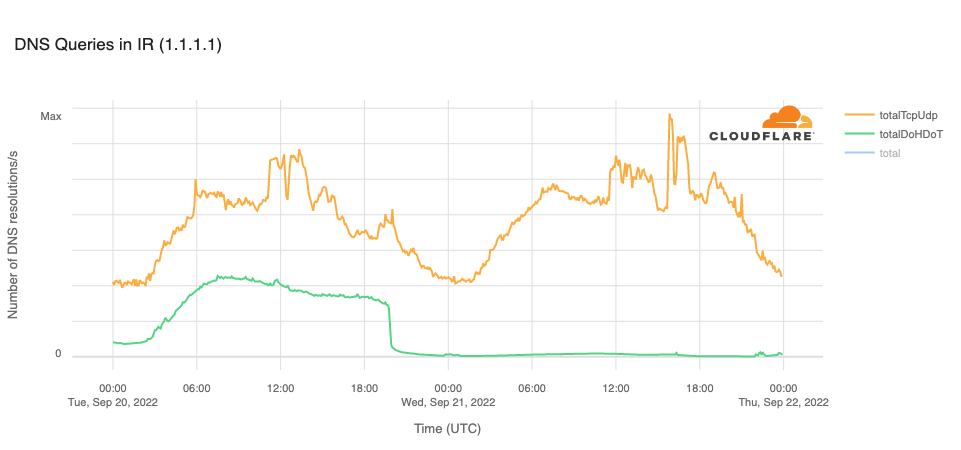

The Open Observatory for Network Interference (OONI), an organization that measures Internet censorship, reported in a Tweet that the cloudflare-dns.com domain name, used for DNS-over-HTTPS (DoH) and DNS-over-TLS (DoT) connections to Cloudflare’s DNS resolver, was blocked in Iran on September 20. This is clearly evident in the graph below, with resolution volume over DoH and DoT dropping to zero at 1940 UTC. The OONI tweet also noted that the 1.1.1.1 IP address “remains blocked on most networks.” The trend line for resolution over TCP or UDP (on port 53) in the graph below suggests that the IP address is not universally blocked, as there are still resolution requests reaching Cloudflare.

Interested parties can use Cloudflare Radar to monitor the impact of such government-directed Internet disruptions, and can follow @CloudflareRadar on Twitter for updates on Internet disruptions as they occur.

Prompt Injection/Extraction Attacks against AI Systems

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2022/09/prompt-injection-extraction-attacks-against-ai-systems.html

This is an interesting attack I had not previously considered.

The variants are interesting, and I think we’re just starting to understand their implications.

10 bureaucratic obstacles whose removal we have begun

Post Syndicated from Bozho original https://blog.bozho.net/blog/3947

During a previous campaign, I published a list of bureaucratic obstacles that I would work to eliminate. Here is a revised version, with the progress we made in seven months:

1. Certificates: a large part of going from one service counter to another is collecting certificates and documents for data the administration already has about you. The administration is obliged to collect this data itself, electronically. It excuses itself for not doing so with "our law is special" and "we don't have the technical capability". We drafted amendments to the Electronic Governance Act, and the Council of Ministers adopted them. They put official data checks on an equal footing with a submitted certificate, eliminating the "our special law" excuse. This bill will be submitted on the National Assembly's first day.

2. Stamps: we had a draft law ready, which I handed to a working group I set up. We concluded that an entirely new law is needed for the reform to be lasting and systemic, one that governs the handling of documents and stamps. Work on that law is already under way. No one will have the right to turn you away because a stamp is missing from something somewhere.

3. "No information here": the attitude that citizens must know all bureaucratic procedures on their own has to stop. A law banning "No information here" would be comical. However, there can be clear rules on "service design", in both the digital and the physical environment, that make the hated "No information here" sign unnecessary. At the end of our term, we prepared a draft amendment to an ordinance that bans signs phrased as negatives, including "No information here". The next cabinet will have time to put it through public consultation and adopt it.

4. "This can't be done electronically, come in person": by law, everything must be possible electronically. While I was minister, I received a number of reports of such refusals. I referred them to the bodies responsible for oversight so that they could issue violation notices to the offending administrations.

5. Printing payment slips: even services that have already been digitised require a payment slip to be attached, although the regulations, drafted with my involvement years ago, prohibit this. During our term, we connected many new institutions to the electronic payments system, which should eliminate these problems. Together with the Ministry of Finance, we also instructed administrations to accept foreign cards and innovative payment services, so that online payments become ever more accessible.

6. Scanned PDFs: all documents are created electronically. Then, because of analogue thinking in the administration, they are printed, signed, stamped and scanned. This makes them (almost) impossible to search and index. Abolishing stamps will solve this problem too. At the Ministry of e-Government, there were no scanned documents.

7. The employment record book: the regular government before last proposed a redesign of the employment record book. It is high time this document was abolished. I created a working group that fully analysed the data in the employment record book and the legislation governing it, and proposed full digitisation.

8. The medical file: a single (electronic) health record, accessible to any doctor when needed, must replace the scraps of paper we carry from doctor to doctor (or lost long ago). The Ministry of Health, in cooperation with the Ministry of e-Government and Information Services, introduced fully electronic prescriptions. This is an important step toward a complete health record. I also required the National Health Insurance Fund to comply with the law by providing its data to the Ministry of Health.

9. Signing with an electronic signature: Java is (almost) no longer a problem, but electronic signatures are inconvenient. That is why we made the electronic identification project a priority. It will be ready at the beginning of next year and will allow services to be requested without an electronic signature, once the amendments to the Electronic Governance Act that we prepared are also passed.

10. Paper meal vouchers: these vouchers are useful, but administering them is terribly bureaucratic; larger retail chains, for example, build separate warehouses for paper vouchers. We prepared an ordinance to digitise them. We will submit the necessary amendments to the Corporate Income Tax Act on the National Assembly's first day.

Bureaucracy hinders citizens, businesses and the administration itself. Eliminating it is difficult, because "we've always done it this way", but it is essential. We will continue what we have started on all of these topics.

#25

The article 10 bureaucratic obstacles whose removal we have begun was first published on БЛОГодаря.

[$] LWN.net Weekly Edition for September 22, 2022

Post Syndicated from original https://lwn.net/Articles/908080/

The LWN.net Weekly Edition for September 22, 2022 is available.

Regional Services comes to India, Japan and Australia

Post Syndicated from Achiel van der Mandele original https://blog.cloudflare.com/regional-services-comes-to-apac/


We announced the Data Localization Suite in 2020, when requirements for data localization were already important in the European Union. Since then, we've witnessed a growing trend toward localization globally. We are thrilled to expand our coverage to India, Japan and Australia in Asia Pacific. This lets more customers use Cloudflare by giving them precise control over which parts of the Cloudflare network can perform advanced functions, such as WAF or Bot Management, that require inspecting traffic.

Regional Services, a recap

In 2020, we introduced Regional Services, a new way for customers to use Cloudflare. With Regional Services, customers can limit which data centers actually decrypt and inspect traffic. This helps because certain customers are affected by regulations on where they are allowed to service traffic. Others have agreements with their customers as part of contracts specifying exactly where traffic is allowed to be decrypted and inspected.

As one German bank told us: “We can look at the rules and regulations and debate them all we want. As long as you promise me that no machine outside the European Union will see a decrypted bank account number belonging to one of my customers, we’re happy to use Cloudflare in any capacity”.

Under normal operation, Cloudflare uses its entire network to perform all functions. This is what most customers want: leverage all of Cloudflare's data centers so that you always service traffic to eyeballs as quickly as possible. Increasingly, we are seeing customers that wish to strictly limit which data centers service their traffic. With Regional Services, customers can use Cloudflare's network but limit which data centers perform the actual decryption. Products that require decryption, such as WAF, Bot Management and Workers, will only be applied within those data centers.

How does Regional Services work?

You might be asking yourself: how does that even work? Doesn't Cloudflare operate an anycast network? Cloudflare was built from the bottom up to leverage anycast, a routing method in which the same IP addresses are announced from many locations. All of Cloudflare's data centers advertise the same IP addresses through the Border Gateway Protocol (BGP). Whichever data center is closest to you from a network point of view is the one that you'll hit.

This is great for two reasons. The first is that the closer the data center to you, the faster the reply. The second great benefit is that this comes in very handy when dealing with large DDoS attacks. Volumetric DDoS attacks throw a lot of bogus traffic at you, which overwhelms network capacity. Cloudflare’s anycast network is great at taking on these attacks because they get distributed across the entire network.

Anycast doesn't respect regional borders; it doesn't even know about them. This is why, out of the box, Cloudflare can't guarantee that traffic inside a country will also be serviced there. Although typically you'll hit a data center inside your country, it's very possible that your Internet Service Provider will send traffic to a network that might route it to a different country.
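Why anycast ignores borders can be illustrated with a toy route-selection model. This Python sketch assumes that the ISP's router picks among announcements of the same prefix purely by AS path length. Real BGP best-path selection first considers other attributes, such as local preference. The data centers and path lengths are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Announcement:
    data_center: str      # where the anycast prefix is announced from
    country: str          # not consulted by route selection at all
    as_path_length: int   # simplified: the shortest AS path wins


def select_route(announcements: list[Announcement]) -> Announcement:
    """Pick the announcement with the shortest AS path (ties: first seen)."""
    return min(announcements, key=lambda a: a.as_path_length)


# The same anycast prefix as seen by an ISP in Kerala, India (hypothetical paths).
seen_from_kerala = [
    Announcement("Mumbai", "India", 3),
    Announcement("Colombo", "Sri Lanka", 2),
    Announcement("Chennai", "India", 3),
]
best = select_route(seen_from_kerala)
print(best.data_center, best.country)  # Colombo Sri Lanka
```

Nothing in the selection looks at `country`, which is the property Regional Services has to work around.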

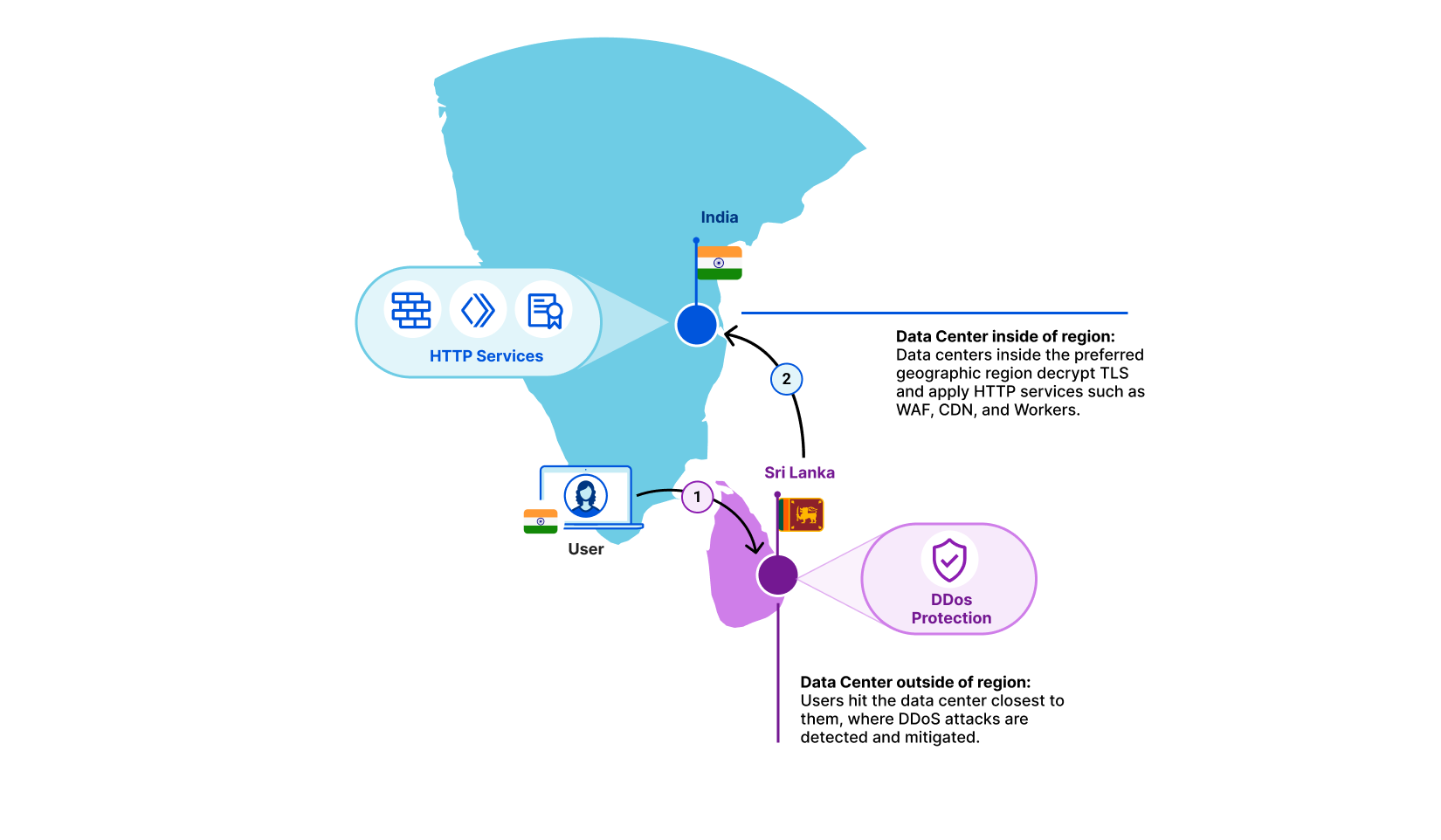

Regional Services solves that: when turned on, each data center becomes aware of which region it is operating in. If a user from a country hits a data center that doesn’t match the region that the customer has selected, we simply forward the raw TCP stream in encrypted form. Once it reaches a data center inside the right region, we decrypt and apply all Layer 7 products. This covers products such as CDN, WAF, Bot Management and Workers.

Let’s take an example. A user is in Kerala, India and their Internet Service Provider has determined that the fastest path to one of our data centers is to Colombo, Sri Lanka. In this example, a customer may have selected India as the sole region within which traffic should be serviced. The Colombo data center sees that this traffic is meant for the India region. It does not decrypt, but instead forwards it to the closest data center inside India. There, we decrypt and products such as WAF and Workers are applied as if the traffic had hit the data center directly.
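The per-data-center decision in this example can be sketched as a small function: decrypt locally if the data center is in the customer's region, otherwise forward the still-encrypted stream to the nearest in-region data center. This is an illustrative model, not Cloudflare's implementation. The region labels, data center list and network distances are hypothetical.

```python
def route_request(current_dc: str, allowed_region: str,
                  dc_region: dict[str, str],
                  distance_from_current: dict[str, int]) -> tuple[str, str]:
    """Decide what a data center does with traffic for a regional customer.

    Returns ("decrypt", dc) if TLS may be terminated at the current data center,
    or ("forward", dc) naming the nearest in-region data center to which the
    raw, still-encrypted TCP stream is passed.
    """
    if dc_region[current_dc] == allowed_region:
        # Inside the customer's region: decrypt and apply Layer 7 products here.
        return ("decrypt", current_dc)
    in_region = [dc for dc, region in dc_region.items() if region == allowed_region]
    if not in_region:
        raise ValueError(f"no data center serves region {allowed_region!r}")
    nearest = min(in_region, key=lambda dc: distance_from_current[dc])
    # Outside the region: do not decrypt, just forward the encrypted stream.
    return ("forward", nearest)


DC_REGION = {"Colombo": "Sri Lanka", "Chennai": "India", "Mumbai": "India", "Tokyo": "Japan"}
# Hypothetical relative network distances as seen from Colombo.
DISTANCE_FROM_COLOMBO = {"Colombo": 0, "Chennai": 1, "Mumbai": 2, "Tokyo": 9}

print(route_request("Colombo", "India", DC_REGION, DISTANCE_FROM_COLOMBO))
# ('forward', 'Chennai')
```

Once the stream arrives in Chennai, the same function returns `("decrypt", "Chennai")`, because that data center is inside the India region.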

Bringing Regional Services to Asia

Historically, we've seen most interest in Regional Services in geographic regions such as the European Union and the Americas. Over the past few years, however, we have seen a lot of interest from Asia Pacific. Based on customer feedback and analysis of regulations, we quickly concluded there were three key regions we needed to support: India, Japan and Australia. We're proud to say that all three are generally available today.

But we’re not done yet! We realize there are many more customers that require localization to their particular region. We’re looking to add many more in the near future and are working hard to make it easier to support more of them. If you have a region in mind, we’d love to hear it!

India, Japan and Australia are all live today! If you’re interested in using the Data Localization Suite, contact your account team!

Fighting Climate Change for a Sustainable Future | The Atlantic Festival 2022

Post Syndicated from The Atlantic original https://www.youtube.com/watch?v=K9W2v53KEMk

[$] Two visions for the future of sourceware.org

Post Syndicated from original https://lwn.net/Articles/908638/

Public hosting systems for free software have come and gone over the years, but one of them, Sourceware, has been supporting the development of most of the GNU toolchain for nearly 25 years. Recently, an application was made to bring Sourceware under the umbrella of the Software Freedom Conservancy (SFC), at least for fundraising purposes. It turns out that there is a separate initiative, developed in secret until now, with a different vision for the future of Sourceware. The 2022 GNU Tools Cauldron was the site of an intense discussion on how this important community resource should be managed in the coming years.

Announcing an update to IAM role trust policy behavior

Post Syndicated from Mark Ryland original https://aws.amazon.com/blogs/security/announcing-an-update-to-iam-role-trust-policy-behavior/

AWS Identity and Access Management (IAM) is changing an aspect of how role trust policy evaluation behaves when a role assumes itself. Previously, roles implicitly trusted themselves from a role trust policy perspective if they had identity-based permissions to assume themselves. After receiving and considering feedback from customers on this topic, AWS is changing role assumption behavior to always require self-referential role trust policy grants. This change improves consistency and visibility with regard to role behavior and privileges. This change allows customers to create and understand role assumption permissions in a single place (the role trust policy) rather than two places (the role trust policy and the role identity policy). It increases the simplicity of role trust permission management: “What you see [in the trust policy] is what you get.”

Therefore, beginning today, for any role that has not used the identity-based behavior since June 30, 2022, a role trust policy must explicitly grant permission to all principals, including the role itself, that need to assume it under the specified conditions. Removal of the role’s implicit self-trust improves consistency and increases visibility into role assumption behavior.

Most AWS customers will not be impacted by the change at all. Only a tiny percentage (approximately 0.0001%) of all roles are involved. Customers whose roles have recently used the previous implicit trust behavior are being notified, beginning today, about those roles, and may continue to use this behavior with those roles until February 15, 2023, to allow time for making the necessary updates to code or configuration. Or, if these customers are confident that the change will not impact them, they can opt out immediately by substituting in new roles, as discussed later in this post.

The first part of this post briefly explains the change in behavior. The middle sections answer practical questions like: "why is this happening?," "how might this change impact me?," "which usage scenarios are likely to be impacted?," and "what should I do next?" The usage scenario section is important because it shows that, based on our analysis, the self-assuming role behavior exhibited by code or human users is very likely to be unnecessary and counterproductive. Finally, the last section is for security professionals who want to better understand the reasons for the old behavior, the rationale for the change, and its possible implications. It reviews a number of core IAM concepts and digs into additional details.

What is changing?

Until today, an IAM role implicitly trusted itself. Consider the following role trust policy attached to the role named RoleA in AWS account 123456789012.
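A trust policy with the effect described here, letting RoleB assume RoleA, might look like the document produced by this Python sketch. It uses the standard IAM policy elements (`Version`, `Statement`, `Effect`, `Principal`, `Action`). The exact JSON of the original example is an assumption.

```python
import json

ACCOUNT_ID = "123456789012"


def role_arn(name: str, account_id: str = ACCOUNT_ID) -> str:
    """Build an IAM role ARN (no role path) for the given role name."""
    return f"arn:aws:iam::{account_id}:role/{name}"


def trust_policy(*trusted_roles: str) -> dict:
    """Trust policy that lets each listed role call sts:AssumeRole on this role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": [role_arn(r) for r in trusted_roles]},
                "Action": "sts:AssumeRole",
            }
        ],
    }


# Trust policy attached to RoleA: only RoleB is named.
print(json.dumps(trust_policy("RoleB"), indent=2))
```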

This role trust policy grants role assumption access to the role named RoleB in the same account. However, if the corresponding identity-based policy for RoleA grants the sts:AssumeRole action with respect to itself, then RoleA could also assume itself. Therefore, there were actually two roles that could assume RoleA: the explicitly permissioned RoleB, and RoleA, which implicitly trusted itself as a byproduct of the IAM ownership model (explained in detail in the final section). Note that the identity-based permission that RoleA must have to assume itself is not required in the case of RoleB, and indeed an identity-based policy associated with RoleB that references other roles is not sufficient to allow RoleB to assume them. The resource-based permission granted by RoleA’s trust policy is both necessary and sufficient to allow RoleB to assume RoleA.

Although earlier we summarized this behavior as "implicit self-trust," the key point here is that the ability of RoleA to assume itself is not actually implicit behavior. The role's self-referential permission had to be explicit in one place or the other (or both). It was either in the role's identity-based policy (perhaps based on broad wildcard permissions) or in its trust policy. But unlike the case with other principals and role trust, an IAM administrator would have to look in two different policies to determine whether a role could assume itself.
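The difference between the old and new behavior can be summarized in a deliberately simplified Python model. It is not IAM's actual evaluation logic. It ignores explicit denies, conditions, service control policies, permission boundaries and root-principal delegation. It also assumes that all roles live in one account and represents policies as plain sets of role ARNs.

```python
def can_assume(caller: str, target: str,
               trust_principals: set[str],
               caller_assume_targets: set[str],
               legacy_self_trust: bool) -> bool:
    """Simplified check: may `caller` call sts:AssumeRole on `target`?

    trust_principals: role ARNs named in the target's trust policy.
    caller_assume_targets: role ARNs (or "*") that the caller's identity-based
        policy allows it to assume.
    legacy_self_trust: True models the old behavior, False the new one.
    """
    if caller in trust_principals:
        # A same-account grant naming the caller's role ARN in the target's
        # trust policy is both necessary and sufficient (as for RoleB).
        return True
    if legacy_self_trust and caller == target:
        # Old behavior only: a role could also assume itself when its own
        # identity-based policy allowed sts:AssumeRole on itself.
        return target in caller_assume_targets or "*" in caller_assume_targets
    return False


ROLE_A = "arn:aws:iam::123456789012:role/RoleA"
ROLE_B = "arn:aws:iam::123456789012:role/RoleB"
trust_a = {ROLE_B}   # RoleA's trust policy names only RoleB
identity_a = {"*"}   # broad wildcard grant in RoleA's identity-based policy

print(can_assume(ROLE_A, ROLE_A, trust_a, identity_a, legacy_self_trust=True))   # True
print(can_assume(ROLE_A, ROLE_A, trust_a, identity_a, legacy_self_trust=False))  # False
print(can_assume(ROLE_B, ROLE_A, trust_a, set(), legacy_self_trust=False))       # True
```

Under the new behavior, the only way for RoleA to pass the self-check in this model is to add `ROLE_A` to `trust_principals`. That means looking in one place instead of two.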

As of today, for any new role, or any role that has not recently assumed itself while relying on the old behavior, IAM administrators must modify the previously shown role trust policy as follows to allow RoleA to assume itself, regardless of the privileges granted by its identity-based policy:
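A trust policy for RoleA that names both RoleB and RoleA itself could be produced by a minimal Python sketch like this one. The account ID and role names follow the example above, and the exact JSON layout is an assumption.

```python
import json

ACCOUNT = "123456789012"
ROLE_A = f"arn:aws:iam::{ACCOUNT}:role/RoleA"
ROLE_B = f"arn:aws:iam::{ACCOUNT}:role/RoleB"

# RoleA's updated trust policy: RoleA must now list itself explicitly to be
# able to assume itself, whatever its identity-based policy grants.
updated_trust_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"AWS": [ROLE_B, ROLE_A]},
            "Action": "sts:AssumeRole",
        }
    ],
}

print(json.dumps(updated_trust_policy, indent=2))
```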

This change makes role trust behavior clearer and more consistent to understand and manage, whether directly by humans or as embodied in code.

How might this change impact me?

As previously noted, most customers will not be impacted by the change at all. For those customers who do use the prior implicit trust grant behavior, AWS will work with you to eliminate your usage prior to February 15, 2023. Here are more details for the two cases of customers who have not used the behavior, and those who have.

If you haven’t used the implicit trust behavior since June 30, 2022

Beginning today, if you have not used the old behavior for a given role at any time since June 30, 2022, you will now experience the new behavior. Those existing roles, as well as any new roles, will need an explicit reference in their own trust policy in order to assume themselves. If you have roles that are used only very occasionally, such as once per quarter for a seldom-run batch process, you should identify those roles and if necessary either remove the dependency on the old behavior or update their role trust policies to include the role itself prior to their next usage (see the second sample policy above for an example).

If you have used the implicit trust behavior since June 30, 2022

If you have a role that has used the implicit trust behavior since June 30, 2022, then you will continue to be able to do so with that role until February 15, 2023. AWS will provide you with notice referencing those roles beginning today through your AWS Health Dashboard and will also send an email with the relevant information to the account owner and security contact. We are allowing time for you to make any necessary changes to your existing processes, code, or configurations to prepare for removal of the implicit trust behavior. If you can’t change your processes or code, you can continue to use the behavior by making a configuration change—namely, by updating the relevant role trust policies to reference the role itself. On the other hand, you can opt out of the old behavior at any time by creating a new role with a different Amazon Resource Name (ARN) with the desired identity-based and trust-policy-based permissions and substituting it for any older role that was identified as using the implicit trust behavior. (The new role will not be allow-listed, because the allow list is based on role ARNs.) You can also modify an existing allow-listed role’s trust policy to explicitly deny access to itself. See the “What should I do next?” section for more information.
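The option of modifying an allow-listed role's trust policy to explicitly deny access to itself can be sketched in Python. The sketch appends a `Deny` statement naming the role's own ARN. The helper name and the `Sid` value are hypothetical, and the input policy is the RoleB example used earlier in this post.

```python
import copy
import json

ROLE_A = "arn:aws:iam::123456789012:role/RoleA"


def add_self_deny(trust_policy: dict, role_arn: str) -> dict:
    """Return a copy of `trust_policy` that explicitly denies self-assumption."""
    updated = copy.deepcopy(trust_policy)  # leave the caller's policy untouched
    statements = updated.get("Statement", [])
    if isinstance(statements, dict):       # a single statement may be a bare object
        statements = [statements]
    statements.append({
        "Sid": "DenySelfAssumption",
        "Effect": "Deny",
        "Principal": {"AWS": role_arn},
        "Action": "sts:AssumeRole",
    })
    updated["Statement"] = statements
    return updated


original = {
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": {"AWS": "arn:aws:iam::123456789012:role/RoleB"},
        "Action": "sts:AssumeRole",
    }],
}
print(json.dumps(add_self_deny(original, ROLE_A), indent=2))
```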

Notifications and retirement

As we previously noted, starting today, accounts with existing roles that use the implicit self-assume role assumption behavior will be notified of this change by email and through their AWS Health Dashboard. Those roles have been allow-listed, and so for now their behavior will continue as before. After February 15, 2023, the old behavior will be retired for all roles and all accounts. The IAM documentation has been updated to describe the new behavior.

After the old behavior is retired from the allow-listed roles and accounts, role sessions that make self-referential role assumption calls will fail with an Access Denied error unless the role’s trust policy explicitly grants the permission directly through a role ARN. Another option is to grant permission indirectly through an ARN to the root principal in the trust policy that acts as a delegation of privilege management, after which permission grants in identity-based policies determine access, similar to the typical cross-account case.
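The two options that remain after retirement are a direct grant through the role's ARN, or delegation through the account's root principal. A trust policy can be checked for either with a rough Python sketch. The simplifications are deliberate. It only recognizes the literal action `sts:AssumeRole` and ignores wildcards, conditions, `"*"` principals and `NotPrincipal`. It treats a bare account ID as equivalent to the root ARN, and it lets an explicit `Deny` naming the role win.

```python
def _as_list(value):
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def _aws_principals(stmt: dict) -> list:
    principal = stmt.get("Principal", {})
    return _as_list(principal.get("AWS")) if isinstance(principal, dict) else []


def self_assume_basis(trust_policy: dict, role_arn: str):
    """Return "direct", "root-delegation" (identity policy then decides), or None."""
    account = role_arn.split(":")[4]
    root_forms = {f"arn:aws:iam::{account}:root", account}
    statements = [s for s in _as_list(trust_policy.get("Statement"))
                  if "sts:AssumeRole" in _as_list(s.get("Action"))]
    # An explicit Deny naming the role overrides any Allow.
    if any(s.get("Effect") == "Deny" and role_arn in _aws_principals(s) for s in statements):
        return None
    allows = [_aws_principals(s) for s in statements if s.get("Effect") == "Allow"]
    if any(role_arn in p for p in allows):
        return "direct"
    if any(root_forms & set(p) for p in allows):
        return "root-delegation"
    return None


ROLE_A = "arn:aws:iam::123456789012:role/RoleA"


def policy(*statements):
    return {"Version": "2012-10-17", "Statement": list(statements)}


direct = policy({"Effect": "Allow", "Principal": {"AWS": [ROLE_A]}, "Action": "sts:AssumeRole"})
via_root = policy({"Effect": "Allow", "Principal": {"AWS": "arn:aws:iam::123456789012:root"},
                   "Action": "sts:AssumeRole"})
neither = policy({"Effect": "Allow", "Principal": {"AWS": "arn:aws:iam::123456789012:role/RoleB"},
                  "Action": "sts:AssumeRole"})

for name, p in [("direct", direct), ("via_root", via_root), ("neither", neither)]:
    print(name, self_assume_basis(p, ROLE_A))
```

A role whose policy yields `None` here would get an Access Denied error on self-assumption after February 15, 2023, within the limits of this simplified check.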

Which usage scenarios are likely to be impacted?

Users often attach an IAM role to an Amazon Elastic Compute Cloud (Amazon EC2) instance, an Amazon Elastic Container Service (Amazon ECS) task, or an AWS Lambda function. Attaching a role to one of these runtime environments enables workloads to use short-term session credentials based on that role. For example, when an EC2 instance is launched, AWS automatically creates a role session and assigns it to the instance. An AWS best practice is for the workload to use these credentials to issue AWS API calls without explicitly requesting short-term credentials through sts:AssumeRole calls.

However, examples and code snippets commonly available on internet forums and community knowledge sharing sites might incorrectly suggest that workloads need to call sts:AssumeRole to establish short-term session credentials for operation within those environments.

We analyzed AWS Security Token Service (AWS STS) service metadata about role self-assumption in order to understand the use cases and possible impact of the change. The data shows that in almost all cases, this behavior occurs because code unnecessarily reassumes the role it already has. This happens in Amazon EC2, Amazon ECS, Amazon Elastic Kubernetes Service (Amazon EKS), or Lambda runtime environments, where the environment already provides that role. There are two exceptions, discussed at the end of this section under the headings "Self-assumption with a scoped-down policy" and "Assuming an expected target compute role during development."

There are many variations on this theme, but overall, most role self-assumption occurs in scenarios where the person or code is unnecessarily reassuming the role that the code was already running as. Although this practice and code style can still work with a configuration change (by adding an explicit self-reference to the role trust policy), the better practice will almost always be to remove this unnecessary behavior or code from your AWS environment going forward. By removing this unnecessary behavior, you save CPU, memory, and network resources.
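One way to remove the unnecessary call is to check whether the code is already running as the target role before calling sts:AssumeRole. The Python sketch below only compares ARNs and makes no AWS calls. In practice, the caller's ARN could come from STS `GetCallerIdentity`, for example boto3's `get_caller_identity()`, which returns an assumed-role ARN. The helper names are hypothetical.

```python
def account_and_role_name(arn: str) -> tuple[str, str]:
    """Extract (account_id, role_name) from an IAM role ARN or an STS assumed-role ARN."""
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError(f"not an ARN: {arn!r}")
    account, resource = parts[4], parts[5]
    if resource.startswith("assumed-role/"):
        # arn:aws:sts::ACCOUNT:assumed-role/ROLE_NAME/SESSION_NAME (no role path)
        return account, resource.split("/")[1]
    if resource.startswith("role/"):
        # arn:aws:iam::ACCOUNT:role/optional/path/ROLE_NAME
        return account, resource.split("/")[-1]
    raise ValueError(f"not a role or assumed-role ARN: {arn!r}")


def should_assume(caller_arn: str, target_role_arn: str) -> bool:
    """False when the caller is already running as the target role."""
    return account_and_role_name(caller_arn) != account_and_role_name(target_role_arn)


caller = "arn:aws:sts::123456789012:assumed-role/RoleA/i-0123456789abcdef0"
print(should_assume(caller, "arn:aws:iam::123456789012:role/RoleA"))  # False
print(should_assume(caller, "arn:aws:iam::123456789012:role/RoleB"))  # True
```

Comparing by account and role name rather than by full ARN matters because assumed-role ARNs omit the role's path.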

Common mistakes when using Amazon EKS

Some users of the Amazon EKS service (or possibly their shell scripts) use the command line interface (CLI) command aws eks get-token to obtain an authentication token for use in managing a Kubernetes cluster. The command takes a role ARN as an optional parameter. That parameter allows a user to assume a role other than the one they are currently using before they call get-token. However, the CLI cannot call that API without already having an IAM identity. Some users might believe that they need to specify the role ARN of the role they are already using. We have updated the Amazon EKS documentation to make clear that this is not necessary.

Common mistakes when using AWS Lambda

Another example is the use of an sts:AssumeRole API call from a Lambda function. The function is already running in a preassigned role provided by user configuration within the Lambda service, or else it couldn’t successfully call any authenticated API action, including sts:AssumeRole. However, some Lambda functions call sts:AssumeRole with the target role being the very same role that the Lambda function has already been provided as part of its configuration. This call is unnecessary.

AWS Software Development Kits (SDKs) all have support for running in AWS Lambda environments and automatically using the credentials provided in that environment. We have updated the Lambda documentation to make clear that such STS calls are unnecessary.

Common mistakes when using Amazon ECS

Customers can associate an IAM role with an Amazon ECS task to give the task AWS credentials to interact with other AWS resources.

We detected ECS tasks that call sts:AssumeRole on the same role that was provided to the ECS task. Amazon ECS makes the role's credentials available inside the compute resources of the ECS task, whether on Amazon EC2 or AWS Fargate. These credentials can be used to access AWS services or resources as the IAM role associated with the ECS task, without calling sts:AssumeRole. AWS handles renewing the credentials available on ECS tasks before the credentials expire. AWS STS role assumption calls are unnecessary, because they simply create a new set of the same temporary role session credentials.

AWS SDKs all have support for running in Amazon ECS environments and automatically using the credentials provided in that ECS environment. We have updated the Amazon ECS documentation to make clear that calling sts:AssumeRole for an ECS task is unnecessary.

Common mistakes when using Amazon EC2

Users can configure an Amazon EC2 instance to contain an instance profile. This instance profile defines the IAM role that Amazon EC2 assigns the compute instance when it is launched and begins to run. The role attached to the EC2 instance enables your code to send signed requests to AWS services. Without this attached role, your code would not be able to access your AWS resources (nor would it be able to call sts:AssumeRole). The Amazon EC2 service handles renewing these temporary role session credentials that are assigned to the instance before they expire.

We have observed that workloads running on EC2 instances call sts:AssumeRole to assume the same role that is already associated with the EC2 instance and use the resulting role-session for communication with AWS services. These role assumption calls are unnecessary, because they simply create a new set of the same temporary role session credentials.

AWS SDKs all have support for running in Amazon EC2 environments and automatically using the credentials provided in that EC2 environment. We have updated the Amazon EC2 documentation to make clear that calling sts:AssumeRole for an EC2 instance with a role assigned is unnecessary.
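Code that sometimes runs inside these environments and sometimes outside them can make a best-effort check for environment-provided credentials before reaching for sts:AssumeRole. This Python sketch relies on two environment variables: `AWS_LAMBDA_FUNCTION_NAME`, set in Lambda, and `AWS_CONTAINER_CREDENTIALS_RELATIVE_URI` or `..._FULL_URI`, used for container credentials such as ECS task roles. It cannot detect an EC2 instance profile, whose credentials come from the instance metadata service, so a `None` result does not rule out EC2. The function name is hypothetical.

```python
import os
from typing import Mapping, Optional


def provided_credentials_source(env: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Best-effort guess at whether the runtime already supplies role credentials.

    Returns "lambda", "container", or None. None does NOT rule out EC2, because
    instance profile credentials come from the instance metadata service, which
    this sketch does not query.
    """
    env = os.environ if env is None else env
    if "AWS_LAMBDA_FUNCTION_NAME" in env:
        return "lambda"
    if ("AWS_CONTAINER_CREDENTIALS_RELATIVE_URI" in env
            or "AWS_CONTAINER_CREDENTIALS_FULL_URI" in env):
        return "container"
    return None


source = provided_credentials_source()
if source is not None:
    print(f"Running in {source}: use the provided credentials; no sts:AssumeRole needed")
```

In most cases even this check is unnecessary. Creating an SDK client with no explicit credentials lets the SDK's default credential chain pick up whatever the environment provides.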

For information on creating an IAM role, attaching that role to an EC2 instance, and launching an instance with an attached role, see “IAM roles for Amazon EC2” in the Amazon EC2 User Guide.

Other common mistakes

If your use case does not use any of these AWS execution environments, you might still experience an impact from this change. We recommend that you examine the roles in your account and identify scenarios where your code (or human use through the AWS CLI) results in a role assuming itself. We provide Amazon Athena and AWS CloudTrail Lake queries later in this post to help you locate instances where a role assumed itself. For each instance, you can evaluate whether a role assuming itself is the right operation for your needs.
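As a lightweight complement to the Athena and CloudTrail Lake queries mentioned here, CloudTrail log records can also be scanned directly for self-assumption. The Python sketch below is not those queries. It is a minimal filter over the standard CloudTrail record fields (`eventSource`, `eventName`, `userIdentity.sessionContext.sessionIssuer.arn`, `requestParameters.roleArn`). CloudTrail log files store events under a top-level `Records` array. The sample log is synthetic.

```python
import json


def find_self_assumptions(records: list[dict]) -> list[tuple[str, str]]:
    """Return (eventTime, roleArn) for AssumeRole calls in which a role assumed itself."""
    hits = []
    for rec in records:
        if rec.get("eventSource") != "sts.amazonaws.com" or rec.get("eventName") != "AssumeRole":
            continue
        identity = rec.get("userIdentity") or {}
        if identity.get("type") != "AssumedRole":
            continue
        # The role behind the calling session (IAM role ARN, including any path).
        issuer = ((identity.get("sessionContext") or {}).get("sessionIssuer") or {}).get("arn")
        # requestParameters may be null, so fall back to an empty dict.
        target = (rec.get("requestParameters") or {}).get("roleArn")
        if issuer and issuer == target:
            hits.append((rec.get("eventTime"), target))
    return hits


SAMPLE_LOG = """
{"Records": [
  {"eventSource": "sts.amazonaws.com", "eventName": "AssumeRole",
   "eventTime": "2022-09-20T10:00:00Z",
   "userIdentity": {"type": "AssumedRole",
     "sessionContext": {"sessionIssuer": {"arn": "arn:aws:iam::123456789012:role/RoleA"}}},
   "requestParameters": {"roleArn": "arn:aws:iam::123456789012:role/RoleA"}},
  {"eventSource": "sts.amazonaws.com", "eventName": "AssumeRole",
   "eventTime": "2022-09-20T10:05:00Z",
   "userIdentity": {"type": "AssumedRole",
     "sessionContext": {"sessionIssuer": {"arn": "arn:aws:iam::123456789012:role/RoleB"}}},
   "requestParameters": {"roleArn": "arn:aws:iam::123456789012:role/RoleA"}}
]}
"""

hits = find_self_assumptions(json.loads(SAMPLE_LOG)["Records"])
print(hits)
```

Only the first synthetic event is reported. In the second, RoleB assumes RoleA, which is ordinary cross-role use.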

Self-assumption with a scoped-down policy

The first pattern we have observed that is not a mistake is the use of self-assumption combined with a scoped-down policy. Some systems use this approach to provide different privileges for different use cases, all using the same underlying role. Customers who choose to continue with this approach can do so by adding the role to its own trust policy. While scoped-down policies and the associated least-privilege approach to permissions are a good idea, we recommend that customers switch to using a second, generic role and assume that role along with the scoped-down policy rather than using role self-assumption. This approach provides more clarity in CloudTrail about what is happening, and limits the possible iterations of role assumption to one round, since the second role should not be able to assume the first. In some cases, another possible approach is to limit subsequent assumptions by using an IAM condition in the role trust policy that is no longer satisfied after the first role assumption. For example, for Lambda functions, this would be done with a condition that checks for the presence of the lambda:SourceFunctionArn condition key; for EC2, with a condition that checks for the presence of ec2:SourceInstanceARN.
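To illustrate the condition-based approach, here is a Python sketch of a self-trust statement for a hypothetical role RoleA that uses the IAM Null condition operator to require the presence of lambda:SourceFunctionArn, along with a deliberately simplified evaluator (not the real IAM engine) that shows why a second self-assumption would fail. It assumes, as described above, that the key is present on requests signed with the credentials Lambda provides to the function, and absent on requests signed with the session returned by the first sts:AssumeRole call.

```python
import json

ROLE_ARN = "arn:aws:iam::123456789012:role/RoleA"  # hypothetical role

# Allow RoleA to assume itself only when the request carries lambda:SourceFunctionArn.
# "Null": {"key": "false"} means "the key must be present in the request context".
self_trust_statement = {
    "Effect": "Allow",
    "Principal": {"AWS": ROLE_ARN},
    "Action": "sts:AssumeRole",
    "Condition": {"Null": {"lambda:SourceFunctionArn": "false"}},
}


def null_condition_met(statement: dict, request_context: dict) -> bool:
    """Simplified evaluation of the IAM Null operator for one statement."""
    for key, must_be_null in statement["Condition"]["Null"].items():
        present = key in request_context
        if must_be_null == "false" and not present:
            return False  # key required but missing
        if must_be_null == "true" and present:
            return False  # key forbidden but present
    return True


print(json.dumps({"Version": "2012-10-17", "Statement": [self_trust_statement]}, indent=2))
```

For EC2, the same shape would use ec2:SourceInstanceARN in place of lambda:SourceFunctionArn.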

Assuming an expected target compute role during development

Another possible reason for role self-assumption is a development practice in which developers try to make their code run under the same role in two kinds of scenarios: those where the environment does not automatically provide role credentials, and those where it does. For example, imagine a developer is working on code that she expects to run as a Lambda function, but during development is using her laptop to do some initial testing of the code. In order to provide the same execution role as is expected later in production, the developer might configure the role trust policy to allow assumption by a principal readily available on the laptop (an IAM Identity Center role, for example), and then have the code assume the expected Lambda function execution role when it initializes. The same approach could be used on a build and test server. Later, when the code is deployed to Lambda, the actual role is already available and in use, but the code need not be modified to reproduce the post-role-assumption behavior that exists outside of Lambda: the unmodified code automatically assumes what is, in this case, the same role, and proceeds. While this approach is not illogical, as with the scoped-down policy case, we recommend that customers configure distinct roles for assumption in development and test environments and in production environments. Again, this approach provides more clarity in CloudTrail about what is happening, and limits the possible iterations of role assumption to one round, since the second role should not be able to assume the first.
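A minimal Python sketch of the distinct-role recommendation, using hypothetical role names AppRole-Dev and AppRole-Prod and a hypothetical DeveloperRole that is available on the laptop or build server: the development role trusts the developer principal, the production role trusts the Lambda service, and neither trust policy names its own role, so at most one round of assumption is possible.

```python
ACCOUNT = "123456789012"  # hypothetical account ID
DEV_ROLE = f"arn:aws:iam::{ACCOUNT}:role/AppRole-Dev"
PROD_ROLE = f"arn:aws:iam::{ACCOUNT}:role/AppRole-Prod"
DEVELOPER = f"arn:aws:iam::{ACCOUNT}:role/DeveloperRole"  # principal available on the laptop


def trust_policy(principal: dict) -> dict:
    """Build a minimal trust policy allowing `principal` to call sts:AssumeRole."""
    return {
        "Version": "2012-10-17",
        "Statement": [{"Effect": "Allow", "Principal": principal, "Action": "sts:AssumeRole"}],
    }


TRUST_POLICIES = {
    DEV_ROLE: trust_policy({"AWS": DEVELOPER}),                     # development and test
    PROD_ROLE: trust_policy({"Service": "lambda.amazonaws.com"}),  # Lambda execution role
}


def trusts_itself(role_arn: str, policy: dict) -> bool:
    """True if an Allow statement names the role's own ARN as an AWS principal."""
    for stmt in policy["Statement"]:
        principal = stmt.get("Principal", {})
        aws = principal.get("AWS", []) if isinstance(principal, dict) else []
        aws = [aws] if isinstance(aws, str) else aws
        if stmt.get("Effect") == "Allow" and role_arn in aws:
            return True
    return False


for arn, policy in TRUST_POLICIES.items():
    print(arn, "trusts itself:", trusts_itself(arn, policy))
```

With this split, code running on the laptop assumes AppRole-Dev once, while the deployed function simply uses AppRole-Prod from the Lambda environment without calling sts:AssumeRole.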

What should I do next?

If you receive an email or AWS Health Dashboard notification for an account, we recommend that you review your existing role trust policies and corresponding code. For those roles, you should remove the dependency on the old behavior, or if you can’t, update those role trust policies with an explicit self-referential permission grant. After the grace period expires on February 15, 2023, you will no longer be able to use the implicit self-referential permission grant behavior.

If you currently use the old behavior and need to continue to do so for a short period of time in the context of existing infrastructure as code or other automated processes that create new roles, you can do so by adding the role’s ARN to its own trust policy. We strongly encourage you to treat this as a temporary stop-gap measure, because in almost all cases it should not be necessary for a role to be able to assume itself, and the correct solution is to change the code that results in the unnecessary self-assumption. If for some reason that self-service solution is not sufficient, you can reach out to AWS Support to seek an accommodation of your use case for new roles or accounts.
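If you take the stop-gap route, the change amounts to appending a statement that names the role's own ARN. This hypothetical Python helper, add_self_trust, sketches that edit on a trust policy document. It assumes the policy's Statement element is a list (IAM also accepts a single statement object) and treats any existing Allow statement that names the role and sts:AssumeRole as sufficient, whatever its conditions.

```python
import copy


def add_self_trust(trust_policy: dict, role_arn: str) -> dict:
    """Return a copy of trust_policy with an explicit Allow for role_arn to assume itself.

    Stop-gap only: the durable fix is to remove the self-assumption from the code.
    """
    policy = copy.deepcopy(trust_policy)  # never mutate the caller's document
    for stmt in policy["Statement"]:
        principal = stmt.get("Principal", {})
        aws = principal.get("AWS", []) if isinstance(principal, dict) else []
        aws = [aws] if isinstance(aws, str) else aws
        actions = stmt.get("Action", [])
        actions = [actions] if isinstance(actions, str) else actions
        if stmt.get("Effect") == "Allow" and role_arn in aws and "sts:AssumeRole" in actions:
            return policy  # already explicitly self-referential
    policy["Statement"].append(
        {"Effect": "Allow", "Principal": {"AWS": role_arn}, "Action": "sts:AssumeRole"}
    )
    return policy
```

After writing the result to a file with json.dump, it could be applied with the AWS CLI, for example aws iam update-assume-role-policy --role-name RoleA --policy-document file://trust.json.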

If you make any necessary code or configuration changes and want to remove roles that are currently allow-listed, you can also ask AWS Support to remove those roles from the allow list so that their behavior follows the new model. Or, as previously noted, you can opt out of the old behavior at any time by creating a new role with a different ARN that has the desired identity-based and trust-policy–based permissions and substituting it for the allow-listed role. Another stop-gap option is to add to the role's trust policy an explicit deny that references the role itself.

If you would like to better understand the history of role self-assumption in a given account or organization, you can follow these instructions on querying CloudTrail data with Athena and then run the following Athena query against your account or organization CloudTrail data, as stored in Amazon Simple Storage Service (Amazon S3). The results of the query can help you understand the scenarios, conditions, and code involved. Depending on the size of your CloudTrail logs, you may need to follow the partitioning instructions to query subsets of your CloudTrail logs sequentially. If this query yields no results, the role self-assumption scenario described in this blog post has never occurred within the analyzed CloudTrail dataset.

SELECT eventid,
       eventtime,
       userIdentity.sessioncontext.sessionissuer.arn AS RoleARN,
       split_part(userIdentity.principalId, ':', 2) AS RoleSessionName
FROM cloudtrail_logs t
CROSS JOIN UNNEST(t.resources) unnested (resources_entry)
WHERE eventSource = 'sts.amazonaws.com'
  AND eventName = 'AssumeRole'
  AND userIdentity.type = 'AssumedRole'
  AND errorcode IS NULL
  AND substr(userIdentity.sessioncontext.sessionissuer.arn, 12) = substr(unnested.resources_entry.ARN, 12)

As another option, you can follow these instructions to set up CloudTrail Lake to perform a similar analysis. CloudTrail Lake allows richer, faster queries without the need to partition the data. As of September 20, 2022, CloudTrail Lake supports importing CloudTrail logs from Amazon S3, so you can perform a historical analysis even if you haven't previously enabled CloudTrail Lake. If this query yields no results, the scenario described in this blog post has never occurred within the analyzed CloudTrail dataset.

SELECT eventid,
       eventtime,
       userIdentity.sessioncontext.sessionissuer.arn AS RoleARN,
       userIdentity.principalId AS RoleIdColonRoleSessionName
FROM $EDS_ID
WHERE eventSource = 'sts.amazonaws.com'
  AND eventName = 'AssumeRole'
  AND userIdentity.type = 'AssumedRole'
  AND errorcode IS NULL
  AND userIdentity.sessioncontext.sessionissuer.arn = element_at(resources, 1).arn
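If you have only a small volume of CloudTrail log files copied locally from Amazon S3, the same filter can also be applied in a short script. The following Python sketch assumes the standard CloudTrail log file layout (gzip-compressed JSON objects with a Records array) and, like the CloudTrail Lake query, compares the full session issuer ARN with the ARN of the assumed role. The function names are hypothetical.

```python
import gzip
import json
from pathlib import Path
from typing import Iterable, Iterator


def self_assume_events(records: Iterable[dict]) -> Iterator[dict]:
    """Yield successful AssumeRole events in which a role session assumed its own role."""
    for r in records:
        if r.get("eventSource") != "sts.amazonaws.com" or r.get("eventName") != "AssumeRole":
            continue
        identity = r.get("userIdentity") or {}
        if identity.get("type") != "AssumedRole" or r.get("errorCode") is not None:
            continue  # only successful calls made by role sessions
        issuer = ((identity.get("sessionContext") or {}).get("sessionIssuer") or {}).get("arn")
        targets = {res.get("ARN") for res in (r.get("resources") or [])}
        if issuer and issuer in targets:
            yield {
                "eventID": r.get("eventID"),
                "eventTime": r.get("eventTime"),
                "RoleARN": issuer,
                # principalId has the form "ROLEID:RoleSessionName"
                "RoleSessionName": identity.get("principalId", "").partition(":")[2],
            }


def scan_log_dir(directory: str) -> Iterator[dict]:
    """Scan every *.json.gz CloudTrail log file under a local directory."""
    for path in Path(directory).rglob("*.json.gz"):
        with gzip.open(path, "rt", encoding="utf-8") as f:
            yield from self_assume_events(json.load(f).get("Records", []))
```

For large datasets, Athena or CloudTrail Lake remains the better fit; a local scan like this is mainly useful for spot checks.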

Understanding the change: more details

To better understand the background of this change, we need to review the IAM basics of identity-based policies and resource-based policies, and then explain some subtleties and exceptions. You can find additional overview material in the IAM documentation.

The structure of each IAM policy follows the same basic model: one or more statements with an effect (allow or deny), along with principals, actions, resources, and conditions. Although the identity-based and resource-based policies share the same basic syntax and semantics, the former is associated with a principal, the latter with a resource. The main difference between the two is that identity-based policies do not specify the principal, because that information is supplied implicitly by associating the policy with a given principal. On the other hand, resource policies do not specify an arbitrary resource, because at least the primary identifier of the resource (for example, the bucket identifier of an S3 bucket) is supplied implicitly by associating the policy with that resource. Note that an IAM role is the only kind of AWS object that is both a principal and a resource.

In most cases, access to a resource within the same AWS account can be granted by either an identity-based policy or a resource-based policy. Consider an Amazon S3 example. An identity-based policy attached to an IAM principal that allows the s3:GetObject action does not require an equivalent grant in the S3 bucket resource policy. Conversely, an s3:GetObject permission grant in a bucket’s resource policy is all that is needed to allow a principal in the same account to call the API with respect to that bucket; an equivalent identity-based permission is not required. Either the identity-based policy or the resource-based policy can grant the necessary permission. For more information, see IAM policy types: How and when to use them.

However, in order to more tightly govern access to certain security-sensitive resources, such as AWS Key Management Service (AWS KMS) keys and IAM roles, those resource policies need to grant access to the IAM principal explicitly, even within the same AWS account. A role trust policy is the resource policy associated with a role that specifies which IAM principals can assume the role by using one of the sts:AssumeRole* API calls. For example, in order for RoleB to assume RoleA in the same account, whether or not RoleB’s identity-based policy explicitly allows it to assume RoleA, RoleA’s role trust policy must grant access to RoleB. Within the same account, an identity-based permission by itself is not sufficient to allow assumption of a role. On the other hand, a resource-based permission—a grant of access in the role trust policy—is sufficient. (Note that it’s possible to construct a kind of hybrid permission to a role by using both its resource policy and other identity-based policies. In that case, the role trust policy grants permission to the root principal ARN; after that, the identity-based policy of a principal in that account would need to explicitly grant permission to assume that role. This is analogous to the typical cross-account role trust scenario.)
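The difference between these two rules can be captured in a few lines. This Python sketch is a deliberately simplified model for principals and resources in the same account: it ignores explicit denies, service control policies, permissions boundaries, session policies, and conditions, and its names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grants:
    identity_allows: bool              # caller's identity-based policy allows the action
    resource_names_caller: bool        # resource policy allows this specific principal
    resource_names_root: bool = False  # resource policy allows the account root principal


def s3_same_account_allowed(g: Grants) -> bool:
    # Ordinary same-account resource (for example, s3:GetObject on a bucket):
    # either the identity-based grant or the resource-based grant is sufficient.
    return g.identity_allows or g.resource_names_caller


def assume_role_same_account_allowed(g: Grants) -> bool:
    # Role trust policy: naming the caller is sufficient on its own.
    if g.resource_names_caller:
        return True
    # Otherwise the trust policy must delegate to the account root, and the
    # caller's identity-based policy must then grant sts:AssumeRole explicitly.
    return g.resource_names_root and g.identity_allows
```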

Until now, there has been a nonintuitive exception to these rules for situations where a role assumes itself. Because a role is both a principal (potentially with an identity-based policy) and a resource (with a resource-based policy), it is in the unique position of being both a subject and an object within the IAM system, as well as being an object owned by itself rather than by its containing account. Due to this ownership model, a role that had identity-based permission to assume itself implicitly trusted itself as a resource, without an explicit self-referential Allow in the role trust policy. Conversely, a grant of permission in the role trust policy was sufficient regardless of whether there was a grant in the same role's identity-based policy. Thus, in the self-assumption case, roles behaved like most other resources in the same account: a single permission, on either the identity side or the resource side of their dual-sided nature, was enough to allow role self-assumption. Because of a role's implicit trust of itself as a resource, the role's trust policy, which might otherwise limit assumption of the role with properties such as actions and conditions, was not applied unless it contained an explicit deny of itself.

The following example is a role trust policy attached to the role named RoleA in account 123456789012. It grants explicit access only to the role named RoleB.
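A trust policy consistent with this description, written as a Python dict and serialized to JSON, might look like the following. The StringEquals operator on the aws:PrincipalTag/project key is an assumption inferred from the project:BlueSkyProject tag condition explained in the next paragraph.

```python
import json

# Sketch of the RoleA trust policy described here (account 123456789012):
# only RoleB is named, and only when it carries the principal tag project=BlueSkyProject.
role_a_trust_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"AWS": "arn:aws:iam::123456789012:role/RoleB"},
            "Action": ["sts:AssumeRole", "sts:TagSession"],
            "Condition": {"StringEquals": {"aws:PrincipalTag/project": "BlueSkyProject"}},
        }
    ],
}

print(json.dumps(role_a_trust_policy, indent=2))
```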

Assuming that the corresponding identity-based policy for RoleA granted the sts:AssumeRole action with regard to RoleA, two roles could assume RoleA under this role trust policy: RoleB (explicitly referenced in the trust policy) and RoleA (explicitly referenced in its own identity-based policy). Because of the trust policy condition, RoleB could assume RoleA only if it had the principal tag project:BlueSkyProject. (The sts:TagSession permission is needed here in case the caller needs to add tags as part of the role assumption call.) RoleA, on the other hand, did not need to meet that condition because it relied on a different explicit permission: the one granted in its identity-based policy. RoleA would have needed the principal tag project:BlueSkyProject to meet the trust policy condition if and only if it was relying on the trust policy to gain access through the sts:AssumeRole action; that is, in the case where its identity-based policy did not provide the needed privilege.

As we previously noted, after considering feedback from customers on this topic, AWS has decided that requiring self-referential role trust policy grants even in the case where the identity-based policy also grants access is the better approach to delivering consistency and visibility with regard to role behavior and privileges. Therefore, as of today, role assumption behavior requires an explicit self-referential permission in the role trust policy, and the actions and conditions within that policy must also be satisfied, regardless of the permissions expressed in the role’s identity-based policy. (If permissions in the identity-based policy are present, they must also be satisfied.)
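The old and new evaluation of the RoleA example can be contrasted in a small Python model. It is a simplification under stated assumptions: the trust policy is reduced to (principal, required principal tags) pairs, explicit denies and the identity-based side of the new rule are not modeled, and the function names are hypothetical.

```python
from typing import Dict, Sequence

ROLE_A = "arn:aws:iam::123456789012:role/RoleA"
ROLE_B = "arn:aws:iam::123456789012:role/RoleB"

# Simplified RoleA trust policy: allow RoleB when it carries project=BlueSkyProject.
TRUST: Sequence[dict] = (
    {"principal": ROLE_B, "required_tags": {"project": "BlueSkyProject"}},
)


def trust_allows(caller: str, tags: Dict[str, str], trust: Sequence[dict]) -> bool:
    """True if a trust statement names the caller and all its tag conditions hold."""
    return any(
        stmt["principal"] == caller
        and all(tags.get(k) == v for k, v in stmt["required_tags"].items())
        for stmt in trust
    )


def can_assume_old(caller: str, tags: Dict[str, str], identity_grants_self: bool,
                   target: str = ROLE_A, trust: Sequence[dict] = TRUST) -> bool:
    # Old behavior: a role assuming itself needed only its identity-based grant,
    # and the trust policy's conditions were not applied on that path.
    if caller == target and identity_grants_self:
        return True
    return trust_allows(caller, tags, trust)


def can_assume_new(caller: str, tags: Dict[str, str],
                   trust: Sequence[dict] = TRUST) -> bool:
    # New behavior: every caller, including the role itself, needs an explicit
    # trust policy grant whose conditions are satisfied.
    return trust_allows(caller, tags, trust)


print(can_assume_old(ROLE_A, {}, identity_grants_self=True))  # True
print(can_assume_new(ROLE_A, {}))                             # False
```

Adding an entry that names ROLE_A to the trust sequence is what makes can_assume_new succeed for RoleA, which corresponds to the explicit self-referential trust policy grant the new behavior requires.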

Requiring self-reference in the trust policy makes role trust policy evaluation consistent regardless of which role is seeking to assume the role. Improved consistency makes role permissions easier to understand and manage, whether through human inspection or security tooling. This change also eliminates the possibility of continuing the lifetime of an otherwise temporary credential without explicit, trackable grants of permission in trust policies. It also means that trust policy constraints and conditions are enforced consistently, regardless of which principal is assuming the role. Finally, as previously noted, this change allows customers to create and understand role assumption permissions in a single place (the role trust policy) rather than two places (the role trust policy and the role identity policy). It increases the simplicity of role trust permission management: “what you see [in the trust policy] is what you get.”

Continuing with the preceding example, if you need to allow a role to assume itself, you now must update the role trust policy to explicitly allow both RoleB and RoleA. The RoleA trust policy now looks like the following: