Post Syndicated from DAMODAR SHENVI WAGLE original https://aws.amazon.com/blogs/devops/continuous-compliance-workflow-for-infrastructure-as-code-part-2/

In the first post of this series, we introduced a continuous compliance workflow in which an enterprise security and compliance team can release guardrails in a continuous integration, continuous deployment (CI/CD) fashion in your organization.

In this post, we focus on the technical implementation of the continuous compliance workflow. We demonstrate how to use AWS Developer Tools to create a CI/CD pipeline that releases guardrails for Terraform application workloads.

We use the Terraform-Compliance framework to define the guardrails. Terraform-Compliance is a lightweight, security and compliance-focused test framework for Terraform to enable the negative testing capability for your infrastructure as code (IaC).

With this compliance framework, we can ensure that the implemented Terraform code follows security standards and your own custom standards. Currently, HashiCorp provides Sentinel (a policy as code framework) for enterprise products. AWS has CloudFormation Guard an open-source policy-as-code evaluation tool for AWS CloudFormation templates. Terraform-Compliance allows us to build a similar functionality for Terraform, and is open source.

This post is from the perspective of a security and compliance engineer, and assumes that the engineer is familiar with the practices of IaC, CI/CD, behavior-driven development (BDD), and negative testing.

Solution overview

You start by building the necessary resources as listed in the workload (application development team) account:

- An AWS CodeCommit repository for the Terraform workload

- A CI/CD pipeline built using AWS CodePipeline to deploy the workload

- A cross-account AWS Identity and Access Management (IAM) role that gives the security and compliance account the permissions to pull the Terraform workload from the workload account repository for testing their guardrails in observation mode

Next, we build the resources in the security and compliance account:

- A CodeCommit repository to hold the security and compliance standards (guardrails)

- A CI/CD pipeline built using CodePipeline to release new guardrails

- A cross-account role that gives the workload account the permissions to pull the activated guardrails from the

mainbranch of the security and compliance account repository.

The following diagram shows our solution architecture.

The architecture has two workflows: security and compliance (Steps 1–4) and application delivery (Steps 5–7).

- When a new security and compliance guardrail is introduced into the

developbranch of the compliance repository, it triggers the security and compliance pipeline. - The pipeline pulls the Terraform workload.

- The pipeline tests this compliance check guardrail against the Terraform workload in the workload account repository.

- If the workload is compliant, the guardrail is automatically merged into the

mainbranch. This activates the guardrail by making it available for all Terraform application workload pipelines to consume. By doing this, we make sure that we don’t break the Terraform application deployment pipeline by introducing new guardrails. It also provides the security and compliance team visibility into the resources in the application workload that are noncompliant. The security and compliance team can then reach out to the application delivery team and suggest appropriate remediation before the new standards are activated. If the compliance check fails, the automatic merge to themainbranch is stopped. The security and compliance team has an option to force merge the guardrail into themainbranch if it’s deemed critical and they need to activate it immediately. - The Terraform deployment pipeline in the workload account always pulls the latest security and compliance checks from the

mainbranch of the compliance repository. - Checks are run against the Terraform workload to ensure that it meets the organization’s security and compliance standards.

- Only secure and compliant workloads are deployed by the pipeline. If the workload is noncompliant, the security and compliance checks fail and break the pipeline, forcing the application delivery team to remediate the issue and recheck-in the code.

Prerequisites

Before proceeding any further, you need to identify and designate two AWS accounts required for the solution to work:

- Security and Compliance – In which you create a CodeCommit repository to hold compliance standards that are written based on Terraform-Compliance framework. You also create a CI/CD pipeline to release new compliance guardrails.

- Workload – In which the Terraform workload resides. The pipeline to deploy the Terraform workload enforces the compliance guardrails prior to the deployment.

You also need to create two AWS account profiles in ~/.aws/credentials for the tools and target accounts, if you don’t already have them. These profiles need to have sufficient permissions to run an AWS Cloud Development Kit (AWS CDK) stack. They should be your private profiles and only be used during the course of this use case. Therefore, it should be fine if you want to use admin privileges. Don’t share the profile details, especially if it has admin privileges. I recommend removing the profile when you’re finished with this walkthrough. For more information about creating an AWS account profile, see Configuring the AWS CLI.

In addition, you need to generate a cucumber-sandwich.jar file by following the steps in the cucumber-sandwich GitHub repo. The JAR file is needed to generate pretty HTML compliance reports. The security and compliance team can use these reports to make sure that the standards are met.

To implement our solution, we complete the following high-level steps:

- Create the security and compliance account stack.

- Create the workload account stack.

- Test the compliance workflow.

Create the security and compliance account stack

We create the following resources in the security and compliance account:

- A CodeCommit repo to hold the security and compliance guardrails

- A CI/CD pipeline to roll out the Terraform compliance guardrails

- An IAM role that trusts the application workload account and allows it to pull compliance guardrails from its CodeCommit repo

In this section, we set up the properties for the pipeline and cross-account role stacks, and run the deployment scripts.

Set up properties for the pipeline stack

Clone the GitHub repo aws-continuous-compliance-for-terraform and navigate to the folder security-and-compliance-account/stacks. This contains the folder pipeline_stack/, which holds the code and properties for creating the pipeline stack.

The folder has a JSON file cdk-stack-param.json, which has the parameter TERRAFORM_APPLICATION_WORKLOADS, which represents the list of application workloads that the security and compliance pipeline pulls and runs tests against to make sure that the workloads are compliant. In the workload list, you have the following parameters:

- GIT_REPO_URL – The HTTPS URL of the CodeCommit repository in the workload account against which the security and compliance check pipeline runs compliance guardrails.

- CROSS_ACCOUNT_ROLE_ARN – The ARN for the cross-account role we create in the next section. This role gives the security and compliance account permissions to pull Terraform code from the workload account.

For CROSS_ACCOUNT_ROLE_ARN, replace <workload-account-id> with the account ID for your designated AWS workload account. For GIT_REPO_URL, replace <region> with AWS Region where the repository resides.

Set up properties for the cross-account role stack

In the cloned GitHub repo aws-continuous-compliance-for-terraform from the previous step, navigate to the folder security-and-compliance-account/stacks. This contains the folder cross_account_role_stack/, which holds the code and properties for creating the cross-account role.

The folder has a JSON file cdk-stack-param.json, which has the parameter TERRAFORM_APPLICATION_WORKLOAD_ACCOUNTS, which represents the list of Terraform workload accounts that intend to integrate with the security and compliance account for running compliance checks. All these accounts are trusted by the security and compliance account and given permissions to pull compliance guardrails. Replace <workload-account-id> with the account ID for your designated AWS workload account.

Run the deployment script

Run deploy.sh by passing the name of the AWS security and compliance account profile you created earlier. The script uses the AWS CDK CLI to bootstrap and deploy the two stacks we discussed. See the following code:

cd aws-continuous-compliance-for-terraform/security-and-compliance-account/

./deploy.sh "<AWS-COMPLIANCE-ACCOUNT-PROFILE-NAME>"You should now see three stacks in the tools account:

- CDKToolkit – AWS CDK creates the CDKToolkit stack when we bootstrap the AWS CDK app. This creates an Amazon Simple Storage Service (Amazon S3) bucket needed to hold deployment assets such as an AWS CloudFormation template and AWS Lambda code package.

- cf-CrossAccountRoles – This stack creates the cross-account IAM role.

- cf-SecurityAndCompliancePipeline – This stack creates the pipeline. On the Outputs tab of the stack, you can find the CodeCommit source repo URL from the key

OutSourceRepoHttpUrl. Record the URL to use later.

Create a workload account stack

We create the following resources in the workload account:

- A CodeCommit repo to hold the Terraform workload to be deployed

- A CI/CD pipeline to deploy the Terraform workload

- An IAM role that trusts the security and compliance account and allows it to pull Terraform code from its CodeCommit repo for testing

We follow similar steps as in the previous section to set up the properties for the pipeline stack and cross-account role stack, and then run the deployment script.

Set up properties for the pipeline stack

In the already cloned repo, navigate to the folder workload-account/stacks. This contains the folder pipeline_stack/, which holds the code and properties for creating the pipeline stack.

The folder has a JSON file cdk-stack-param.json, which has the parameter COMPLIANCE_CODE, which provides details on where to pull the compliance guardrails from. The pipeline pulls and runs compliance checks prior to deployment, to make sure that application workload is compliant. You have the following parameters:

- GIT_REPO_URL – The HTTPS URL of the CodeCommit repositoryCode in the security and compliance account, which contains compliance guardrails that the pipeline in the workload account pulls to carry out compliance checks.

- CROSS_ACCOUNT_ROLE_ARN – The ARN for the cross-account role we created in the previous step in the security and compliance account. This role gives the workload account permissions to pull the Terraform compliance code from its respective security and compliance account.

For CROSS_ACCOUNT_ROLE_ARN, replace <compliance-account-id> with the account ID for your designated AWS security and compliance account. For GIT_REPO_URL, replace <region> with Region where the repository resides.

Set up the properties for cross-account role stack

In the already cloned repo, navigate to folder workload-account/stacks. This contains the folder cross_account_role_stack/, which holds the code and properties for creating the cross-account role stack.

The folder has a JSON file cdk-stack-param.json, which has the parameter COMPLIANCE_ACCOUNT, which represents the security and compliance account that intends to integrate with the workload account for running compliance checks. This account is trusted by the workload account and given permissions to pull compliance guardrails. Replace <compliance-account-id> with the account ID for your designated AWS security and compliance account.

Run the deployment script

Run deploy.sh by passing the name of the AWS workload account profile you created earlier. The script uses the AWS CDK CLI to bootstrap and deploy the two stacks we discussed. See the following code:

cd aws-continuous-compliance-for-terraform/workload-account/

./deploy.sh "<AWS-WORKLOAD-ACCOUNT-PROFILE-NAME>"You should now see three stacks in the tools account:

- CDKToolkit –AWS CDK creates the

CDKToolkitstack when we bootstrap the AWS CDK app. This creates an S3 bucket needed to hold deployment assets such as a CloudFormation template and Lambda code package. - cf-CrossAccountRoles – This stack creates the cross-account IAM role.

- cf-TerraformWorkloadPipeline – This stack creates the pipeline. On the Outputs tab of the stack, you can find the CodeCommit source repo URL from the key

OutSourceRepoHttpUrl. Record the URL to use later.

Test the compliance workflow

In this section, we walk through the following steps to test our workflow:

- Push the application workload code into its repo.

- Push the security and compliance code into its repo and run its pipeline to release the compliance guardrails.

- Run the application workload pipeline to exercise the compliance guardrails.

- Review the generated reports.

Push the application workload code into its repo

Clone the empty CodeCommit repo from workload account. You can find the URL from the variable OutSourceRepoHttpUrl on the Outputs tab of the cf-TerraformWorkloadPipeline stack we deployed in the previous section.

- Create a new branch main and copy the workload code into it.

- Copy the cucumber-sandwich.jar file you generated in the prerequisites section into a new folder

/lib. - Create a directory called

reportswith an empty filedummy. Thereportsdirectory is where Terraform-Compliance framework create compliance reports. - Push the code to the remote origin.

See the following sample script

git checkout -b main

# Copy the code from git repo location

# Create reports directory and a dummy file.

mkdir reports

touch reports/dummy

git add .

git commit -m “Initial commit”

git push origin main

The folder structure of workload code repo should match the structure shown in the following screenshot.

The first commit triggers the pipeline-workload-main pipeline, which fails in the stage RunComplianceCheck due to the security and compliance repo not being present (which we add in the next section).

Push the security and compliance code into its repo and run its pipeline

Clone the empty CodeCommit repo from the security and compliance account. You can find the URL from the variable OutSourceRepoHttpUrl on the Outputs tab of the cf-SecurityAndCompliancePipeline stack we deployed in the previous section.

- Create a new local branch

mainand check in the empty branch into the remote origin so that themainbranch is created in the remote origin. Skipping this step leads to failure in the code merge step of the pipeline due to the absence of themainbranch. - Create a new branch

developand copy the security and compliance code into it. This is required because the security and compliance pipeline is configured to be triggered from thedevelopbranch for the purposes of this post. - Copy the cucumber-sandwich.jar file you generated in the prerequisites section into a new folder

/lib.

See the following sample script:

cd security-and-compliance-code

git checkout -b main

git add .

git commit --allow-empty -m “initial commit”

git push origin main

git checkout -b develop main

# Here copy the code from git repo location

# You also copy cucumber-sandwich.jar into a new folder /lib

git add .

git commit -m “Initial commit”

git push origin develop

The folder structure of security and compliance code repo should match the structure shown in the following screenshot.

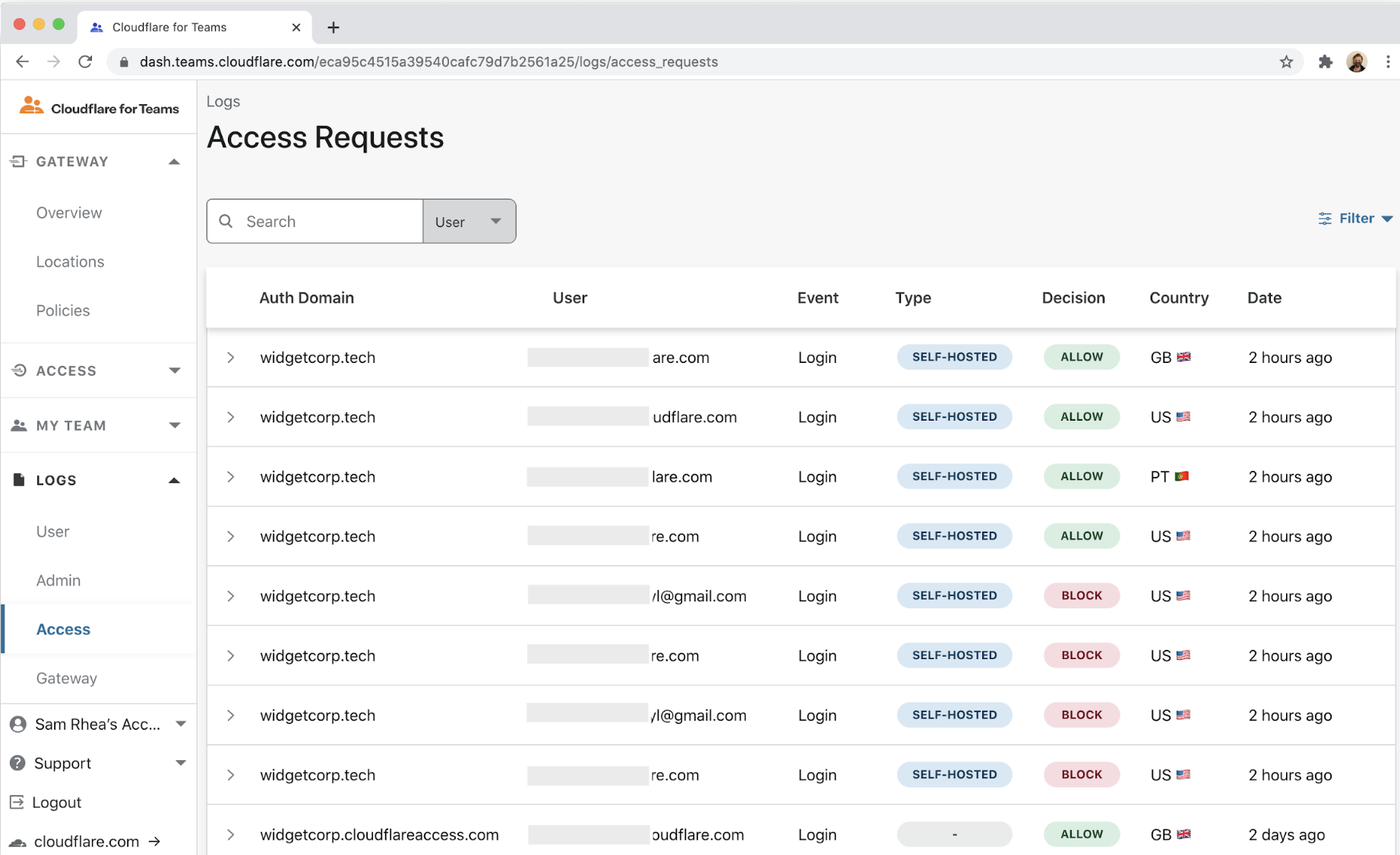

The code push to the develop branch of the security-and-compliance-code repo triggers the security and compliance pipeline. The pipeline pulls the code from the workload account repo, then runs the compliance guardrails against the Terraform workload to make sure that the workload is compliant. If the workload is compliant, the pipeline merges the compliance guardrails into the main branch. If the workload fails the compliance test, the pipeline fails. The following screenshot shows a sample run of the pipeline.

Run the application workload pipeline to exercise the compliance guardrails

After we set up the security and compliance repo and the pipeline runs successfully, the workload pipeline is ready to proceed (see the following screenshot of its progress).

The service delivery teams are now being subjected to the security and compliance guardrails being implemented (RunComplianceCheck stage), and their pipeline breaks if any resource is noncompliant.

Review the generated reports

CodeBuild supports viewing reports generated in cucumber JSON format. In our workflow, we generate reports in cucumber JSON and BDD XML formats, and we use this capability of CodeBuild to generate and view HTML reports. Our implementation also generates report directly in HTML using the cucumber-sandwich library.

The following screenshot is snippet of the script compliance-check.sh, which implements report generation.

The bug noted in the screenshot is in the radish-bdd library that Terraform-Compliance uses for the cucumber JSON format report generation. For more information, you can review the defect logged against radish-bdd for this issue.

After the script generates the reports, CodeBuild needs to be configured to access them to generate HTML reports. The following screenshot shows a snippet from buildspec-compliance-check.yml, which shows how the reports section is set up for report generation:

For more details on how to set up buildspec file for CodeBuild to generate reports, see Create a test report.

CodeBuild displays the compliance run reports as shown in the following screenshot.

We can also view a trending graph for multiple runs.

The other report generated by the workflow is the pretty HTML report generated by the cucumber-sandwich library.

The reports are available for download from the S3 bucket <OutPipelineBucketName>/pipeline-security-an/report_App/<zip file>.

The cucumber-sandwich generated report marks scenarios with skipped tests as failed scenarios. This is the only noticeable difference between the CodeBuild generated HTML and cucumber-sandwich generated HTML reports.

Clean up

To remove all the resources from the workload account, complete the following steps in order:

- Go to the folder where you cloned the workload code and edit

buildspec-workload-deploy.yml:- Comment line 44 (-

./workload-deploy.sh). - Uncomment line 45 (-

./workload-deploy.sh --destroy). - Commit and push the code change to the remote repo. The workload pipeline is triggered, which cleans up the workload.

- Comment line 44 (-

- Delete the CloudFormation stack

cf-CrossAccountRoles. This step removes the cross-account role from the workload account, which gives permission to the security and compliance account to pull the Terraform workload. - Go to the CloudFormation stack

cf-TerraformWorkloadPipelineand note theOutPipelineBucketNameandOutStateFileBucketNameon the Outputs tab. Empty the two buckets and then delete the stack. This removes pipeline resources from workload account. - Go to the

CDKToolkitstack and note theBucketNameon the Outputs tab. Empty that bucket and then delete the stack.

To remove all the resources from the security and compliance account, complete the following steps in order:

- Delete the CloudFormation stack

cf-CrossAccountRoles. This step removes the cross-account role from the security and compliance account, which gives permission to the workload account to pull the compliance code. - Go to CloudFormation stack

cf-SecurityAndCompliancePipelineand note theOutPipelineBucketNameon the Outputs tab. Empty that bucket and then delete the stack. This removes pipeline resources from the security and compliance account. - Go to the

CDKToolkitstack and note theBucketNameon the Outputs tab. Empty that bucket and then delete the stack.

Security considerations

Cross-account IAM roles are very powerful and need to be handled carefully. For this post, we strictly limited the cross-account IAM role to specific CodeCommit permissions. This makes sure that the cross-account role can only do those things.

Conclusion

In this post in our two-part series, we implemented a continuous compliance workflow using CodePipeline and the open-source Terraform-Compliance framework. The Terraform-Compliance framework allows you to build guardrails for securing Terraform applications deployed on AWS.

We also showed how you can use AWS developer tools to seamlessly integrate security and compliance guardrails into an application release cycle and catch noncompliant AWS resources before getting deployed into AWS.

Try implementing the solution in your enterprise as shown in this post, and leave your thoughts and questions in the comments.

About the authors

Sumit Mishra is Senior DevOps Architect at AWS Professional Services. His area of expertise include IaC, Security in pipeline, CI/CD and automation.

Damodar Shenvi Wagle is a Cloud Application Architect at AWS Professional Services. His areas of expertise include architecting serverless solutions, CI/CD and automation.

Srinivas Manepalli is a DevSecOps Solutions Architect in the U.S. Fed SI SA team at Amazon Web Services (AWS). He is passionate about helping customers, building and architecting DevSecOps and highly available software systems. Outside of work, he enjoys spending time with family, nature and good food.

Srinivas Manepalli is a DevSecOps Solutions Architect in the U.S. Fed SI SA team at Amazon Web Services (AWS). He is passionate about helping customers, building and architecting DevSecOps and highly available software systems. Outside of work, he enjoys spending time with family, nature and good food.