Post Syndicated from Ben Solomon original https://blog.cloudflare.com/api-gateway/

Over the past decade, the Internet has experienced a tectonic shift. It used to be composed of static websites with text, images, and the occasional embedded movie. But the Internet has grown enormously. We now rely on API-driven applications to help with almost every aspect of life. Rather than just download files, we are able to engage with apps by exchanging rich data. We track workouts and send the results to the cloud. We use smart locks and all kinds of IoT devices. And we interact with our friends online.

This is all wonderful, but it comes with an explosion of complexity on the back end. Why? Developers need to manage APIs in order to support this functionality. They need to monitor and authenticate every single request. And because these tasks are so difficult, they’re usually outsourced to an API gateway provider.

Unfortunately, today’s gateways leave a lot to be desired. First: they’re not cheap. Then there’s the performance impact. And finally, there’s a data and privacy risk, since more than 50% of traffic reaches APIs (and is presumably sent through a third-party gateway). What a mess.

Today we’re announcing the Cloudflare API Gateway. We’re going to completely replace your existing gateway at a fraction of the cost. And our solution uses the technology behind Workers, Bot Management, Access, and Transform Rules to provide the most advanced API toolset on the market.

What is API Gateway?

In short, it’s a package of features that will do everything for your APIs. We break it down into three categories:

Security

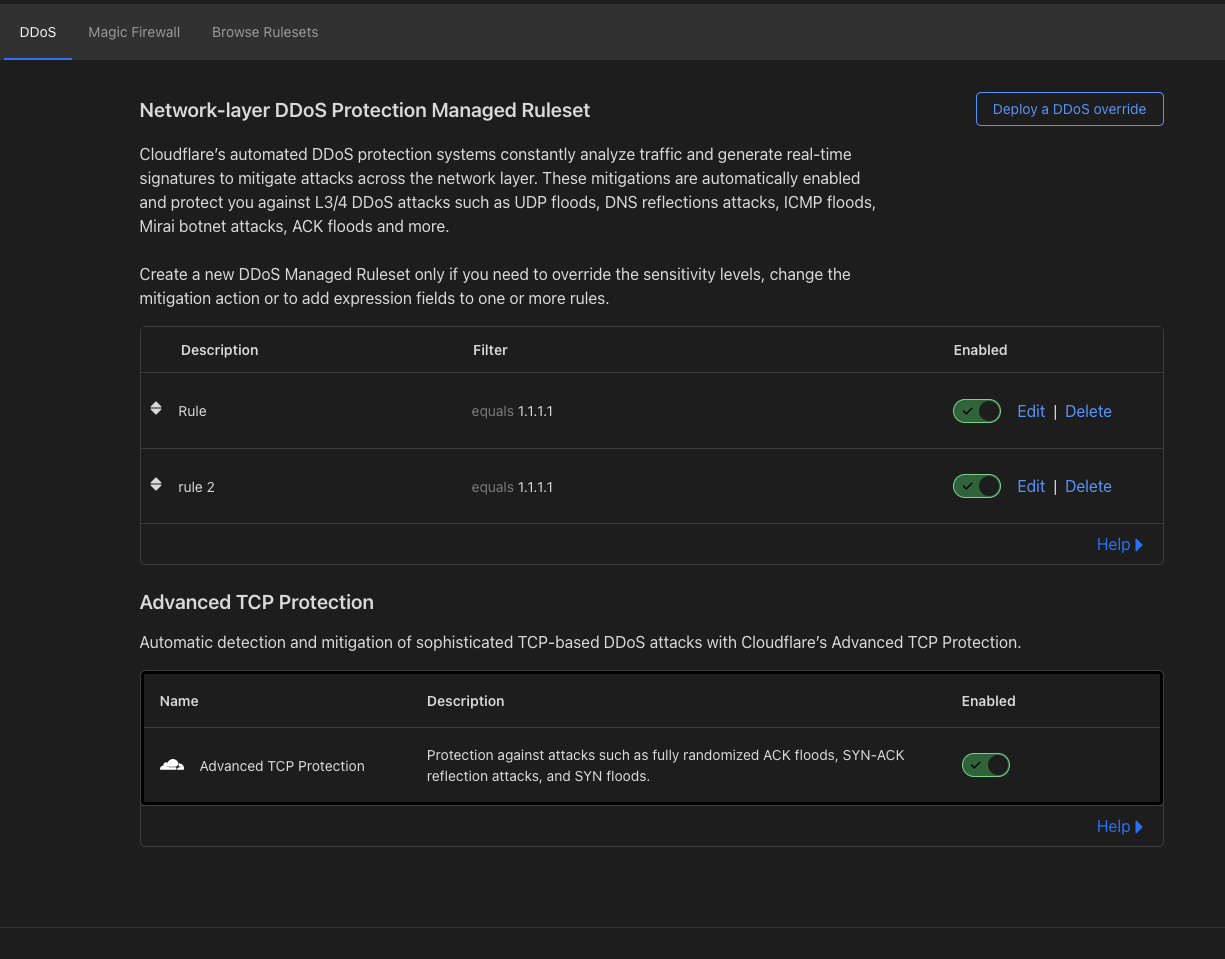

These are the products we have already blogged about. Tools like Discovery, Schema Validation, Abuse Detection, and more. We’ve spent a lot of time applying our security expertise to the world of APIs.

Management & Monitoring

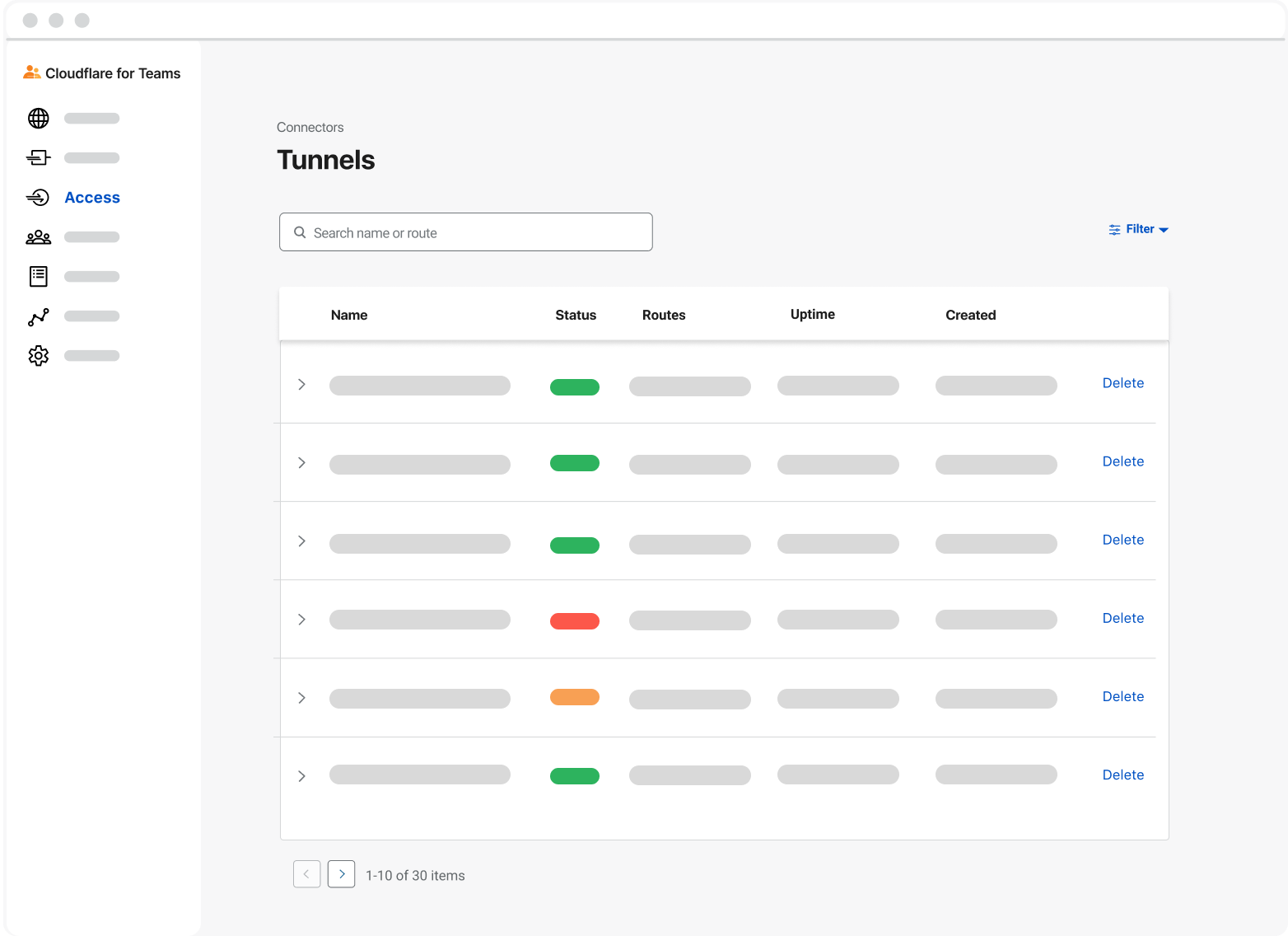

These are the foundational tools that keep your APIs in order. Some examples: analytics, routing, and authentication. We are already able to do these things with existing products like Cloudflare Access, and more features are on the way.

Everything Else

These are the small (but crucial) items that keep everything running. Cloudflare already offers SSL/TLS termination, load balancing, and proxy services that can run by default.

Today’s blog post describes each feature in detail. We’re excited to announce that all the security features are now generally available, so let’s start by discussing those.

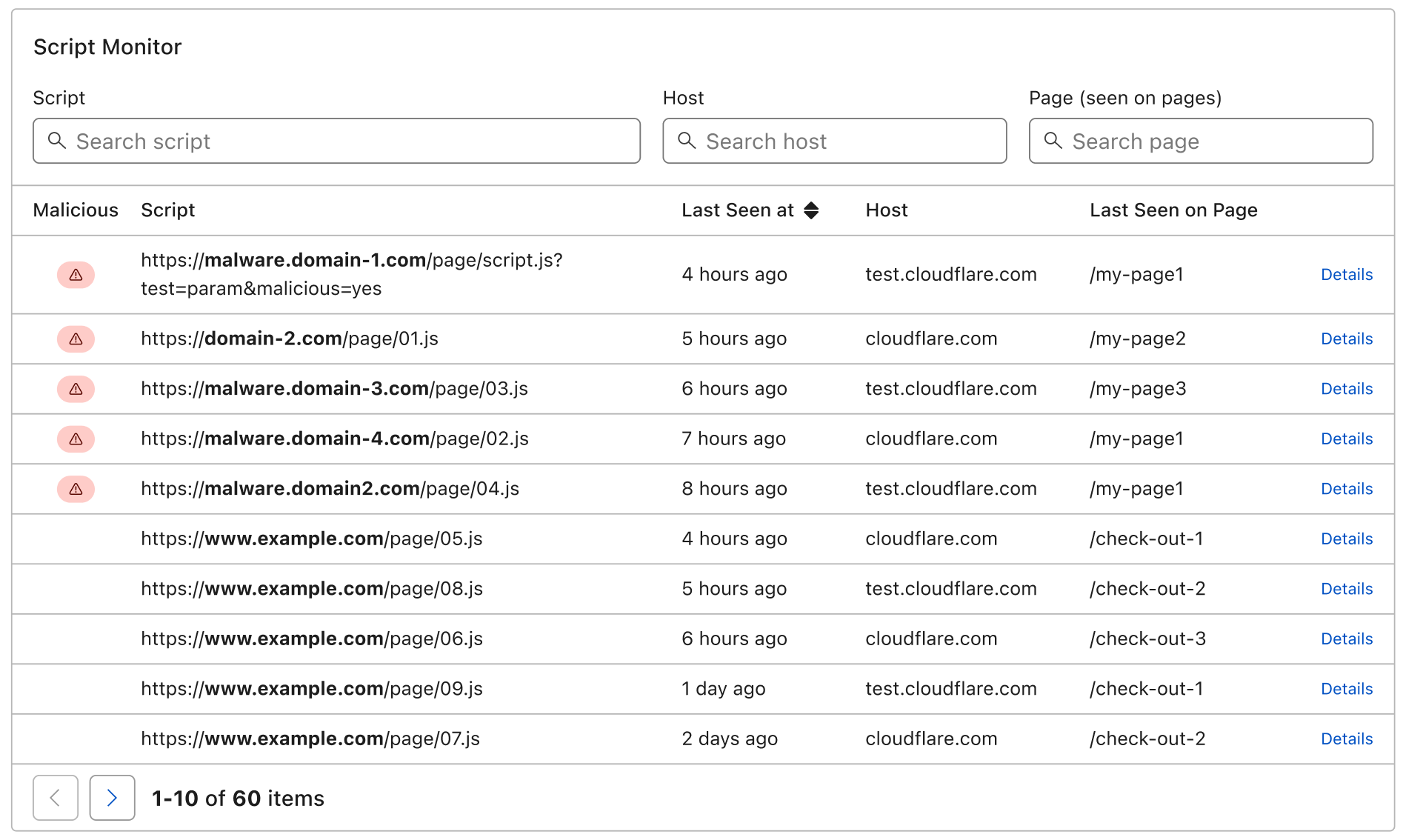

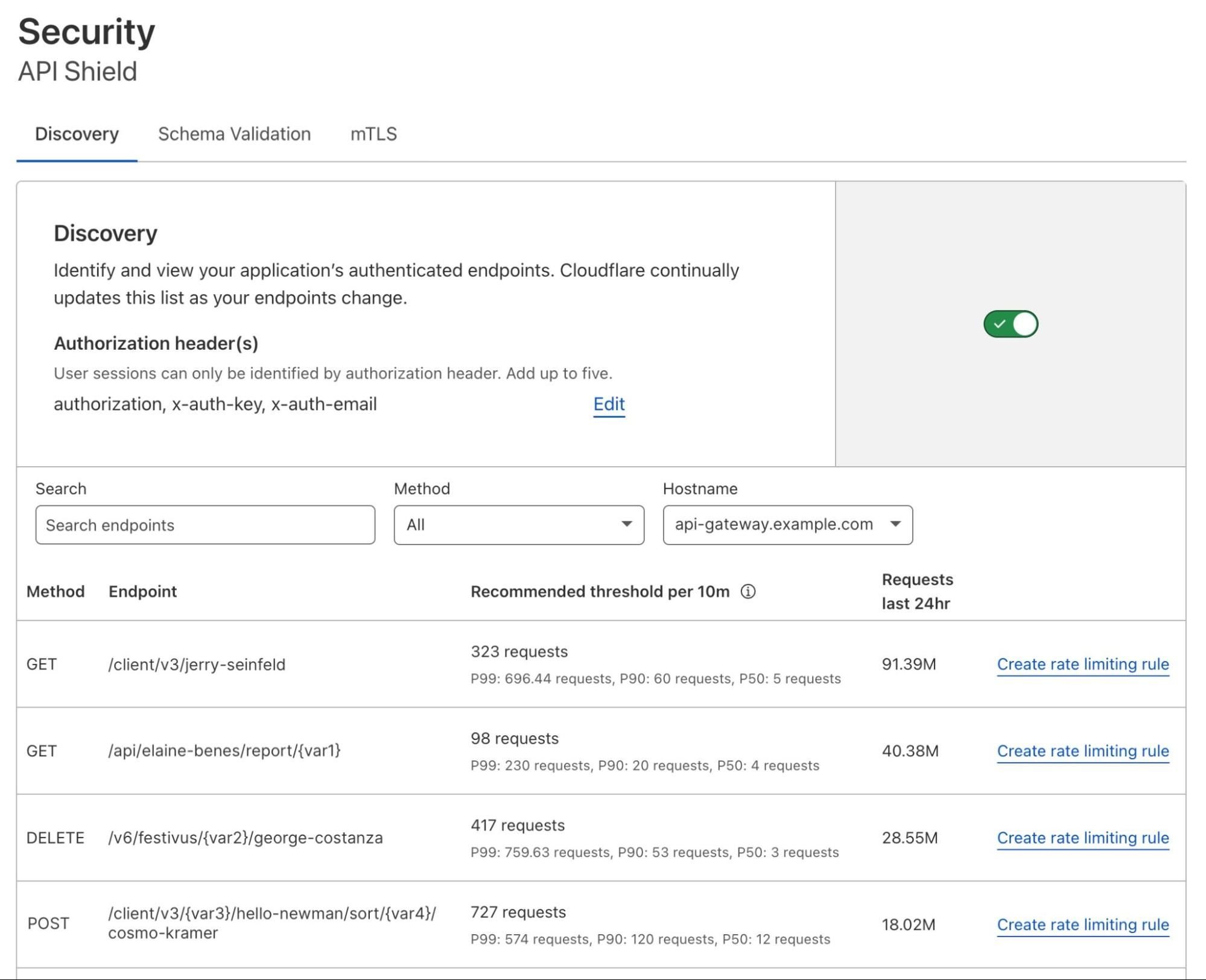

Discovery

Our customers are eager to protect their APIs. Unfortunately, they don’t always have these endpoints documented—or worse, they think everything is documented, but have unknowingly lost or modified endpoints. These hidden endpoints are sometimes called shadow APIs. We need to begin our journey with an exhaustive (and accurate) picture of API surface area.

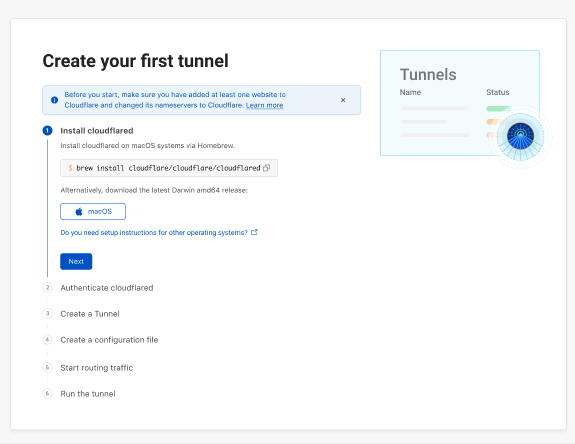

That’s where Discovery comes in. Head to the Cloudflare dashboard, select the Security tab, then choose “API Shield.” Activate the feature and tell us how you want to identify your API traffic. Most users provide a header (available today), but we can also use the request body or cookie (available soon).

We provide an exhaustive list of your API endpoints. Cloudflare lists each method, path, and additional metadata to help you understand your surface area. We even collapse endpoints that include variables (e.g., /account/217) to become generally applicable (e.g., /account/{var1}).
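To make the collapsing step concrete, here is a minimal sketch of how variable path segments could be folded into placeholders. It is not Cloudflare's actual Discovery logic: the heuristic (numeric or UUID-like segments count as variables) and the names `collapse_path` and `discover` are assumptions made for illustration.

```python
import re
from collections import Counter

# Assumed heuristic: a segment is a "variable" if it is purely numeric
# or looks like a UUID. Real discovery logic is likely far more nuanced.
_VARIABLE_SEGMENT = re.compile(
    r"^(\d+|[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}"
    r"-[0-9a-fA-F]{4}-[0-9a-fA-F]{12})$"
)


def collapse_path(path):
    """Replace variable-looking segments with {var1}, {var2}, ..."""
    collapsed = []
    var_index = 0
    for segment in path.split("/"):
        if _VARIABLE_SEGMENT.match(segment):
            var_index += 1
            collapsed.append("{var%d}" % var_index)
        else:
            collapsed.append(segment)
    return "/".join(collapsed)


def discover(requests):
    """Count requests per (method, collapsed path) pair."""
    counts = Counter()
    for method, path in requests:
        counts[(method.upper(), collapse_path(path))] += 1
    return counts
```

Feeding it `("GET", "/account/217")` and `("GET", "/account/9")` yields a single endpoint, `GET /account/{var1}`, with a count of two.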

Discovery is a powerful countermeasure to entropy. Our customers often expect to find 30 endpoints, but are surprised to learn they have over 100 active endpoints.

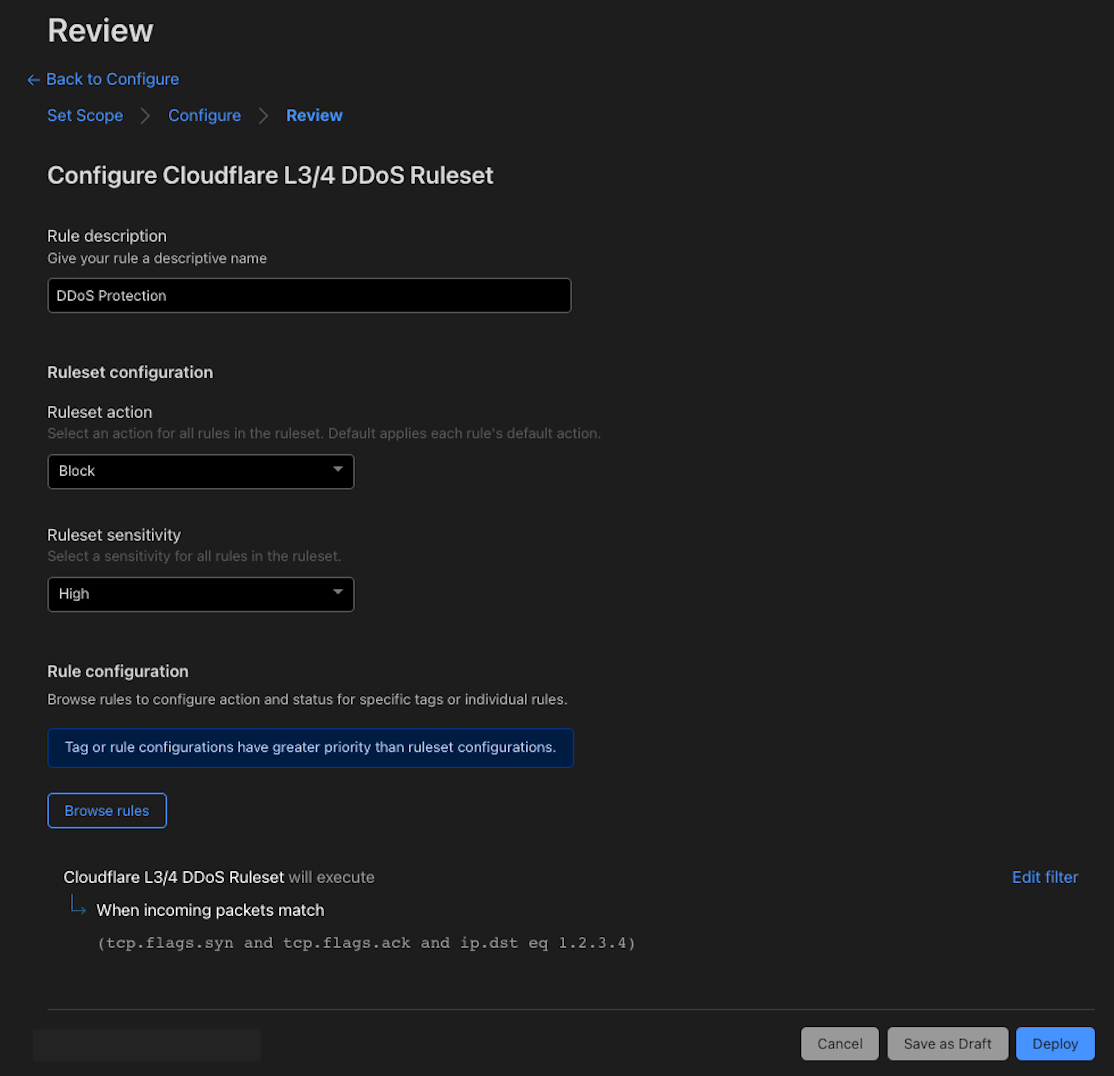

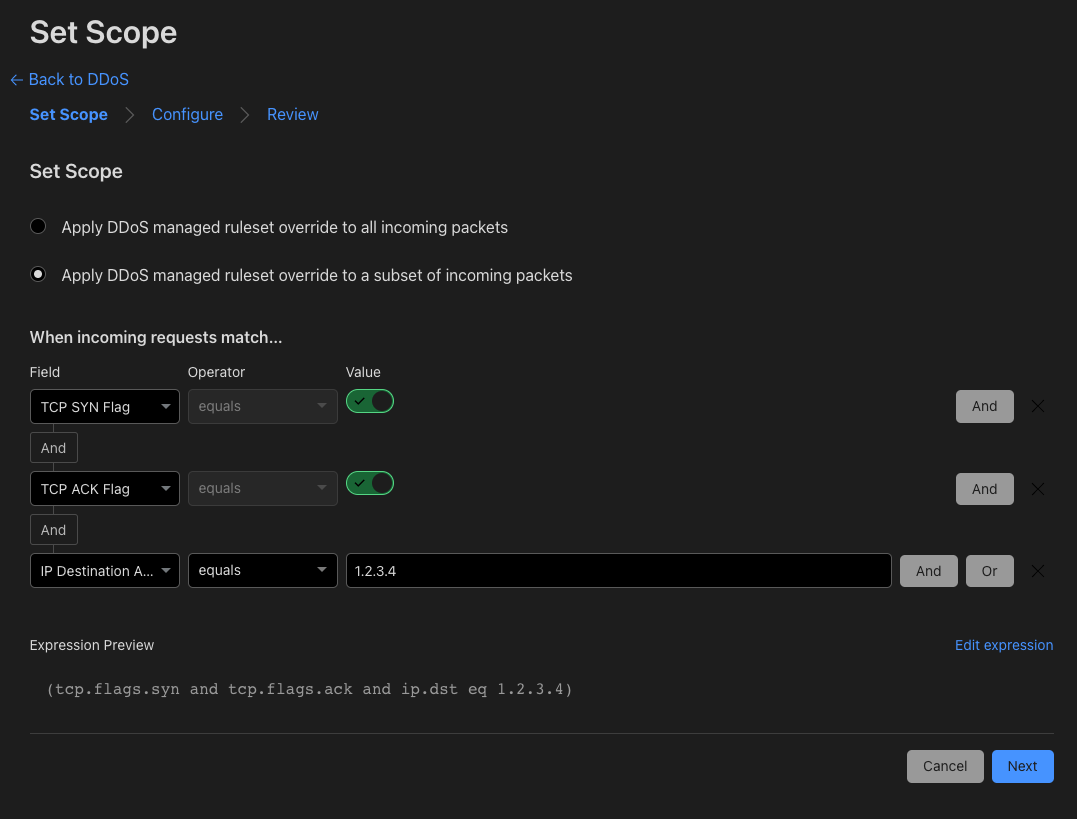

Schema Validation

Perhaps you already have a schema for your API endpoints. A schema is like a template: it provides the paths, methods, and additional data you expect API requests to include. Many developers follow the OpenAPI standard to generate (and maintain) a schema.

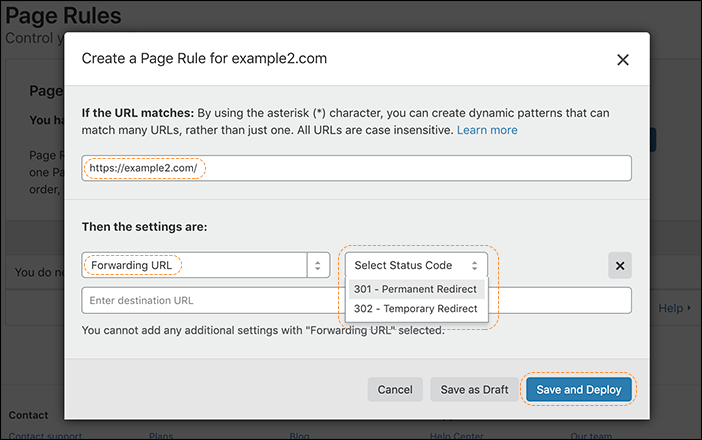

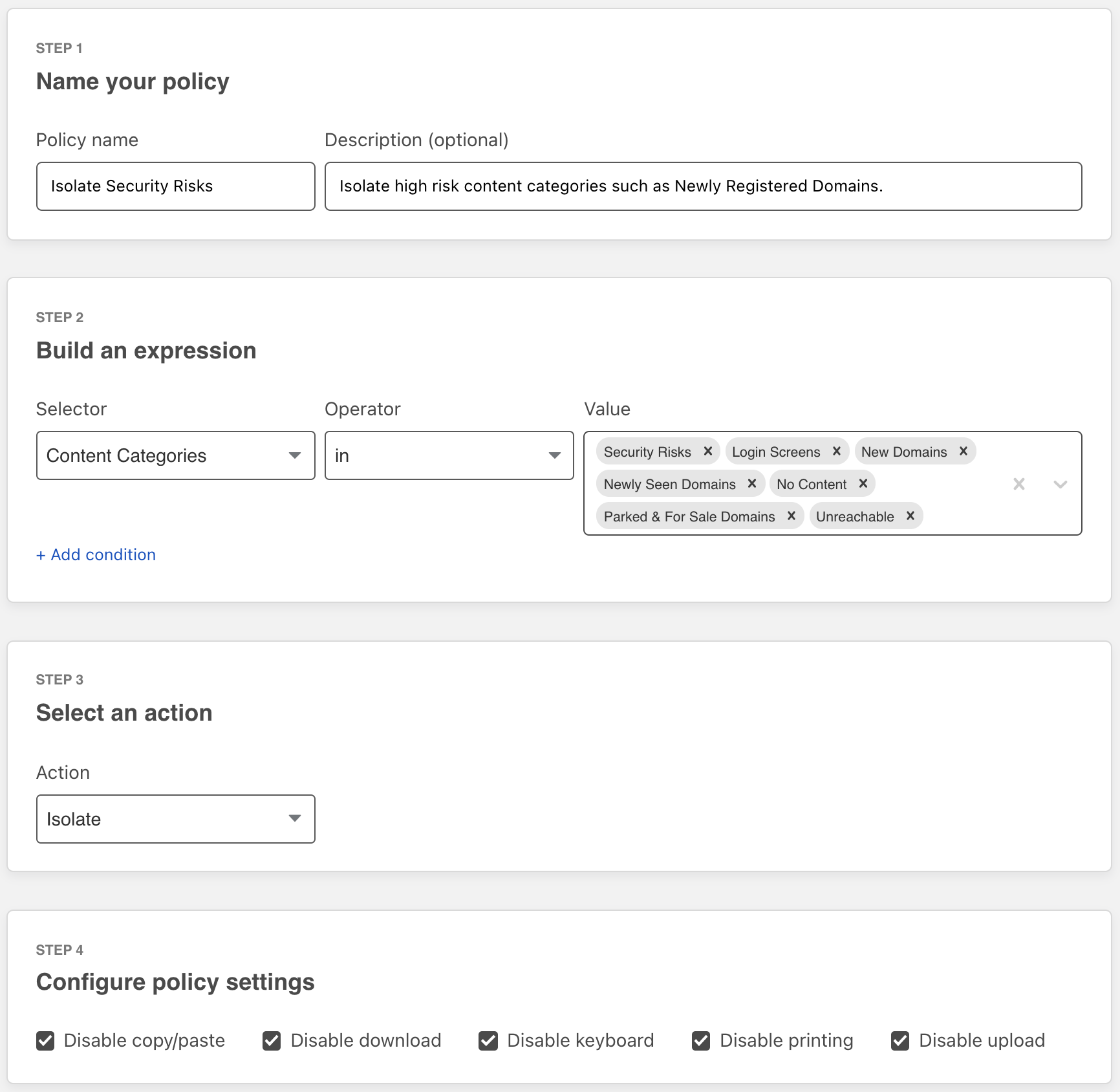

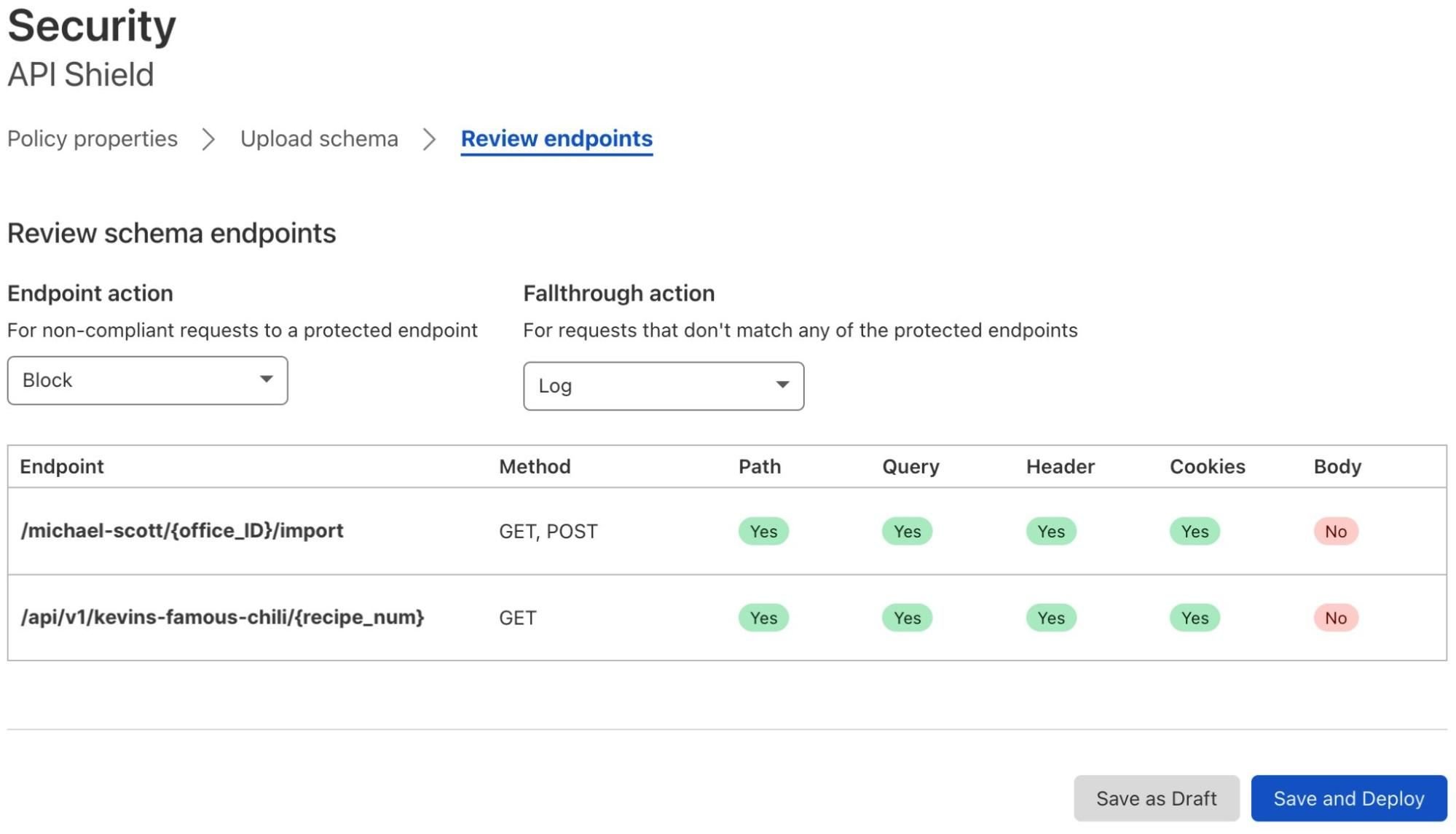

To harden your security, we can validate incoming traffic against this schema. This is a great way to stop basic attacks. Cloudflare will turn away nonconforming requests, discarding nonsense traffic that ignored the dress code. Simply upload your schema to the dashboard, select the actions you want to take, and deploy.

Schema Validation has already vetted traffic for some of the world’s largest crypto sites, delivery services, and payment platforms. It’s available now, and we’ll add body validation soon.
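As a rough illustration of the idea, the sketch below checks a request's method and path against a tiny hand-written schema. The `SCHEMA` dictionary is a simplified stand-in for an OpenAPI document, and the `"allow"`/`"block"` actions and the `validate` function are hypothetical names, not the product's interface.

```python
import re

# Simplified stand-in for an OpenAPI schema: path template -> allowed methods.
SCHEMA = {
    "/account/{id}": {"GET", "DELETE"},
    "/weather/snow": {"GET"},
}


def _template_to_regex(template):
    """Turn '/account/{id}' into a regex matching exactly one segment per placeholder."""
    pieces = re.split(r"(\{[^/{}]+\})", template)
    pattern = "".join(
        "[^/]+" if piece.startswith("{") and piece.endswith("}") else re.escape(piece)
        for piece in pieces
    )
    return re.compile("^" + pattern + "$")


_COMPILED = [(_template_to_regex(t), methods) for t, methods in SCHEMA.items()]


def validate(method, path):
    """Return 'allow' if the method and path conform to the schema, else 'block'."""
    for regex, methods in _COMPILED:
        if regex.match(path) and method.upper() in methods:
            return "allow"
    return "block"
```

Here `validate("GET", "/account/217")` is allowed, while a `POST` to the same path, or any request to an undocumented path such as `/admin`, is blocked.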

Abuse Detection

A robust security approach will use Schema Validation and Discovery in tandem, ensuring traffic matches the expected format. But what about abusive traffic that makes it through?

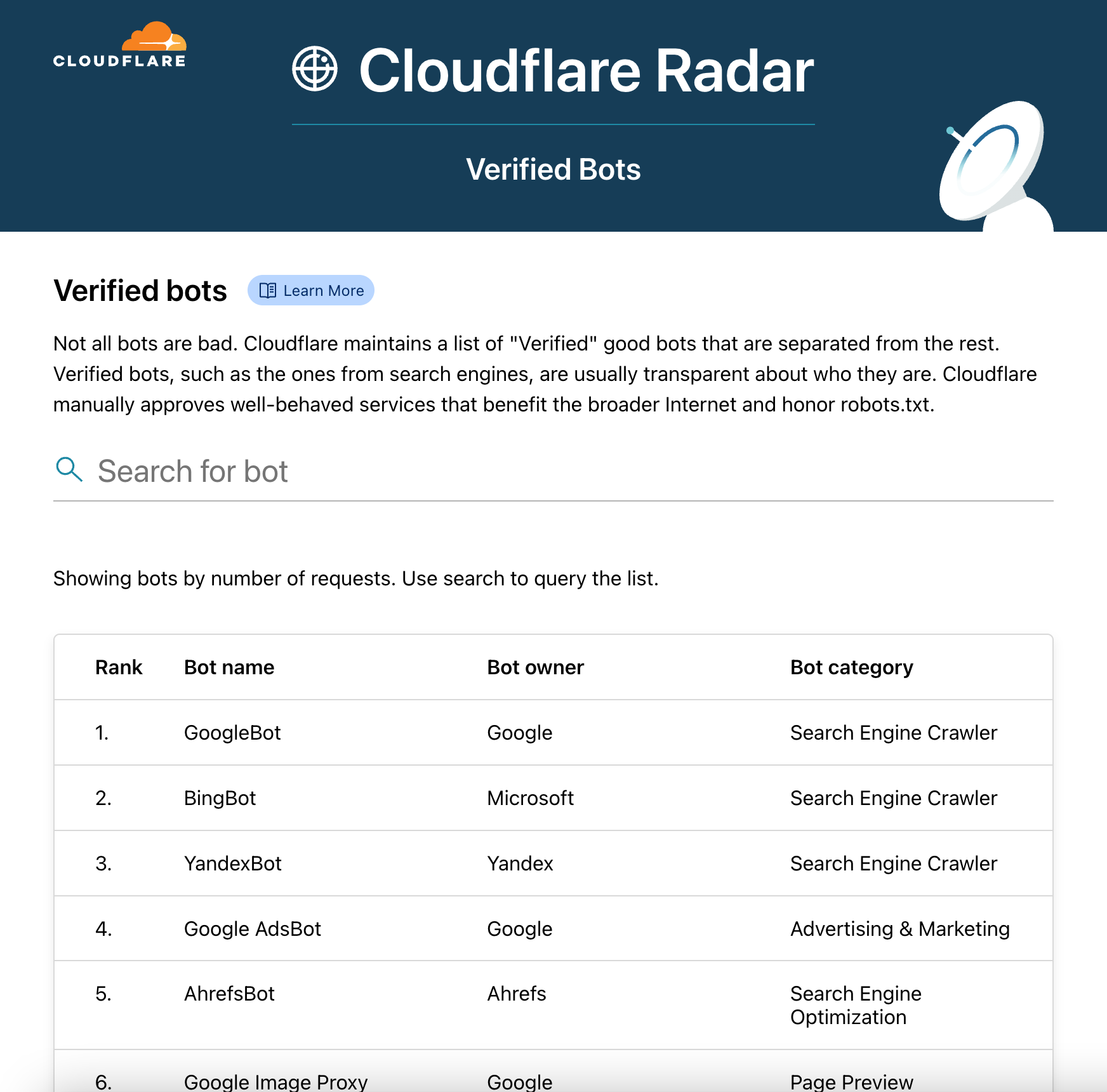

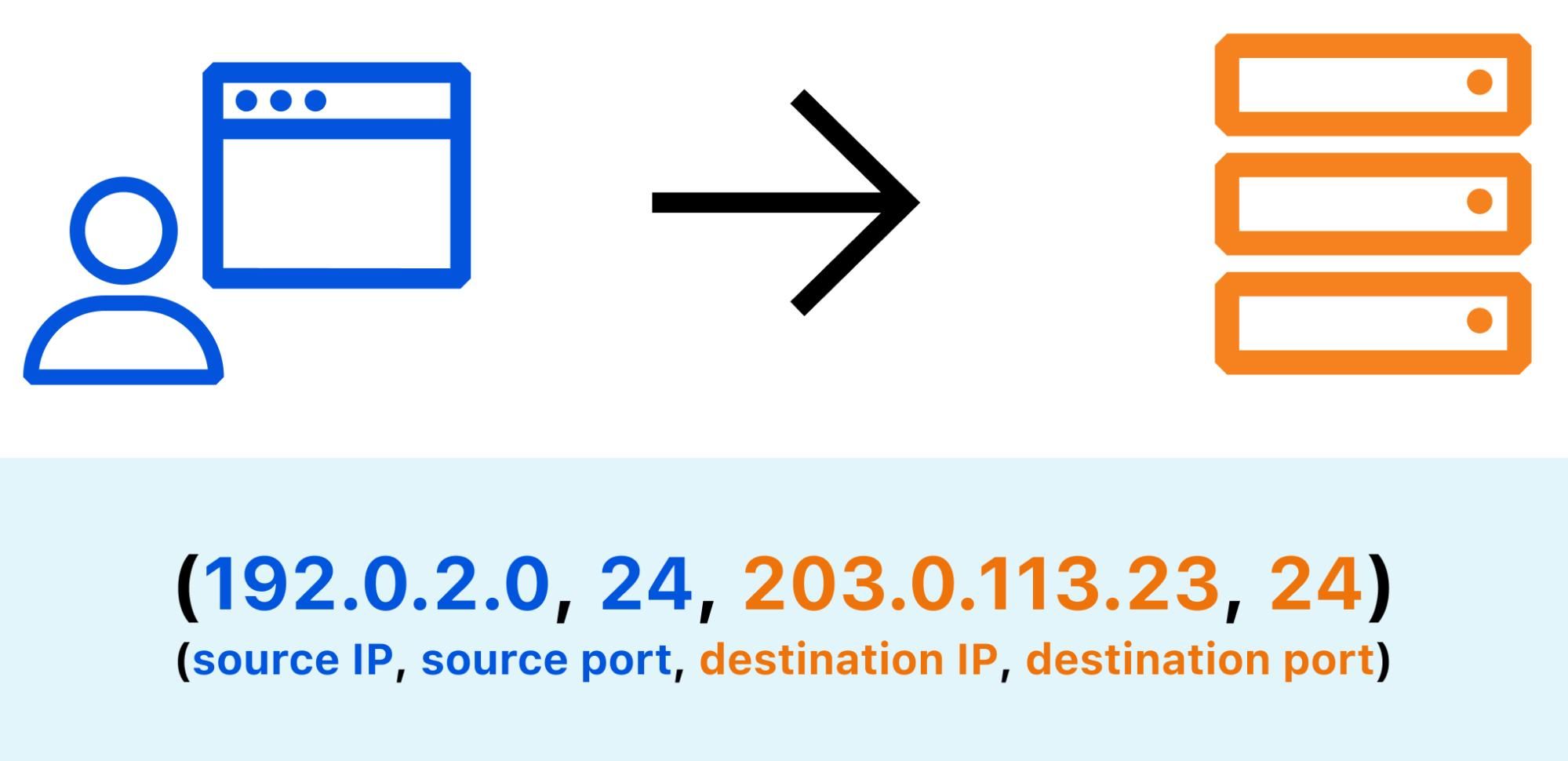

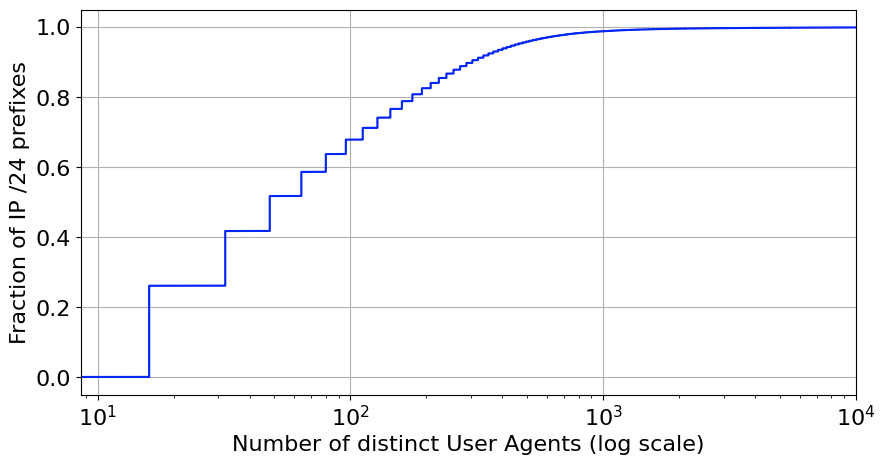

As Cloudflare discovers new API endpoints, we actually suggest rate limits for each one. That’s the role of Abuse Detection, and it opens the door to a more sophisticated kind of security.

Consider an API endpoint that returns weather updates. Specifically, the endpoint will return “yes” if it is likely to snow in the next hour, and “no” otherwise. Our algorithm might detect that the average user requests this data once every 10 minutes. A small group of scrapers, however, makes 37 requests per 10 minutes. Cloudflare automatically recommends a threshold in between, weighted to provide normal users with some breathing room. This would prevent abusive scraping services from fetching the weather too often.
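The arithmetic behind a threshold "in between" can be sketched as a weighted interpolation. The actual recommendation algorithm is not described here, so the formula, the `weight` parameter, and its default of 0.25 are all assumptions chosen purely for illustration.

```python
import math


def suggest_threshold(typical_rate, abusive_rate, weight=0.25):
    """Suggest a per-window request limit between typical and abusive usage.

    weight is a hypothetical tuning knob: 0 would sit right at typical usage,
    1 would sit right at the abusive rate. Values in between leave normal
    users headroom while still cutting off heavy scrapers.
    """
    if not 0 < weight < 1:
        raise ValueError("weight must be strictly between 0 and 1")
    if abusive_rate <= typical_rate:
        raise ValueError("abusive_rate must exceed typical_rate")
    return math.ceil(typical_rate + weight * (abusive_rate - typical_rate))
```

With the numbers from the weather example (1 request versus 37 requests per 10 minutes), this sketch suggests a limit of 10 requests per 10 minutes: ten times what a typical user sends, yet well below the scrapers' rate.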

We provide the option to create a rule using our new Advanced Rate Limiting engine. You can use cookies, headers, and more to tune thresholds. We’ve been using Abuse Detection to protect api.cloudflare.com for months now.

Our favorite part of this feature: it relies on the machine learning approach we use for Bot Management. Just another way our products can feed into (and benefit from) each other.

Abuse Detection is available now. If you’re interested in Sequential Abuse Detection, which we use to flag anomalous request flows, check out our previous blog post. The sequential piece is in early access, and we’re continuing to tune it before an official launch.

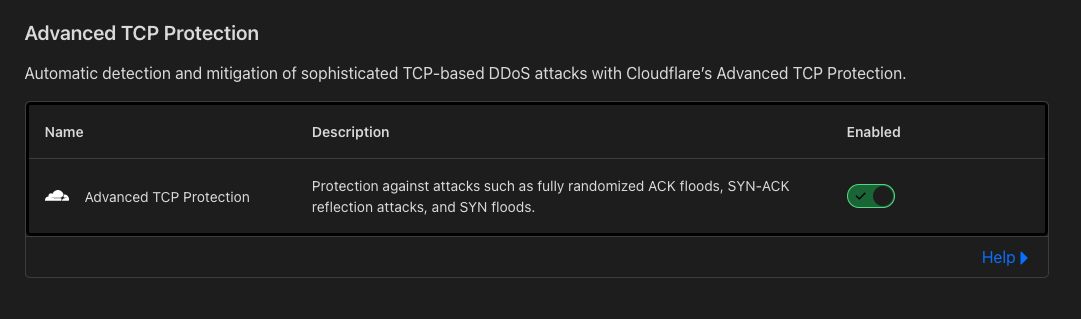

mTLS

Mutual TLS takes security to a new level. You can use certificates to validate incoming traffic as it reaches your APIs—which is especially useful for mobile and IoT devices. Moreover, this is an excellent positive security model that can (and should) be adopted for most device ecosystems.

As an example, let’s return to our weather API. Perhaps this service includes a second endpoint that receives the current temperature from a thermometer. But there’s a problem: anyone can make fake requests, providing inaccurate readings to the endpoint. To prevent this, use mTLS to install a client certificate on the legitimate thermometer, then let Cloudflare validate that certificate. Any other requests will be turned away. Problem solved!
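For readers who have not worked with mutual TLS, the sketch below shows what "validate that certificate" means on the receiving side, using Python's standard `ssl` module. It illustrates the general mechanism, not how Cloudflare configures mTLS; the function name and the file path parameters are hypothetical.

```python
import ssl


def make_mtls_server_context(ca_file=None, cert_file=None, key_file=None):
    """Build a server-side TLS context that rejects clients without a valid certificate.

    ca_file:   CA bundle that signed the legitimate client certificates (hypothetical path).
    cert_file: the server's own certificate chain (hypothetical path).
    key_file:  the server's private key (hypothetical path).
    """
    context = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    # The key step for mTLS: the handshake fails unless the client presents
    # a certificate that chains to a trusted CA.
    context.verify_mode = ssl.CERT_REQUIRED
    if ca_file:
        context.load_verify_locations(cafile=ca_file)
    if cert_file:
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context
```

In the thermometer scenario, only devices holding a certificate signed by the configured CA would complete the handshake; every other client is turned away before a request is ever read.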

We already offer a set of free certificates to every Cloudflare customer. That will continue. But starting today, API Gateway customers get unlimited certificates by default.

Authentication

Many modern APIs require authentication. In fact, authentication unlocks all sorts of capabilities—it allows sessions (with login), personal data exchange, and infrastructure efficiency. And of course, Cloudflare protects authenticated traffic as it passes through our network.

But with API Gateway, Cloudflare plays a more active role in authenticating traffic, helping to issue and validate the following:

- API keys

- JSON web tokens (JWT)

- OAuth 2.0 tokens
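To show what issuing and validating a token involves, here is a minimal sketch of an HS256-signed JSON web token using only the Python standard library. It is a teaching aid rather than the gateway's implementation: the function names are hypothetical, only the `exp` claim is checked, and a production system would normally use a maintained JWT library.

```python
import base64
import hashlib
import hmac
import json
import time


def _b64url_encode(raw):
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def _b64url_decode(segment):
    # JWT segments drop base64 padding, so restore it before decoding.
    return base64.urlsafe_b64decode(segment + "=" * (-len(segment) % 4))


def _sign(signing_input, secret):
    return hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()


def issue_token(claims, secret):
    """Create a compact HS256 JWT from a dict of claims."""
    header = {"alg": "HS256", "typ": "JWT"}
    signing_input = ".".join(
        _b64url_encode(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, claims)
    )
    return signing_input + "." + _b64url_encode(_sign(signing_input, secret))


def validate_token(token, secret, now=None):
    """Return the claims if the token is well formed, correctly signed, and unexpired."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
        header = json.loads(_b64url_decode(header_b64))
        claims = json.loads(_b64url_decode(payload_b64))
        signature = _b64url_decode(signature_b64)
    except (ValueError, TypeError):
        return None
    if not isinstance(header, dict) or not isinstance(claims, dict):
        return None
    # Only accept the algorithm we expect; never trust the header blindly.
    if header.get("alg") != "HS256":
        return None
    expected = _sign(header_b64 + "." + payload_b64, secret)
    if not hmac.compare_digest(signature, expected):
        return None
    exp = claims.get("exp")
    current = time.time() if now is None else now
    if isinstance(exp, (int, float)) and current >= exp:
        return None
    return claims
```

A token issued with one secret validates only against that same secret and only until its `exp` time; anything tampered with, expired, or malformed returns `None`.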

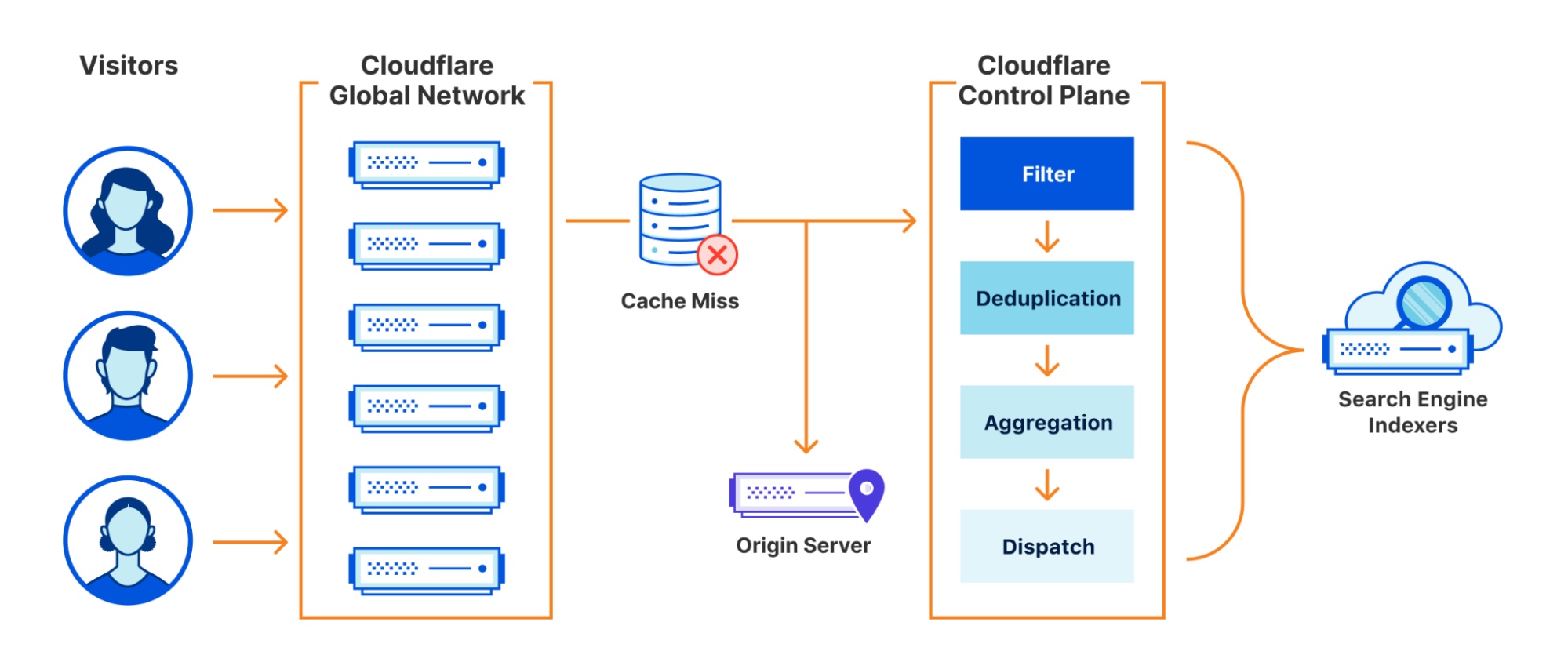

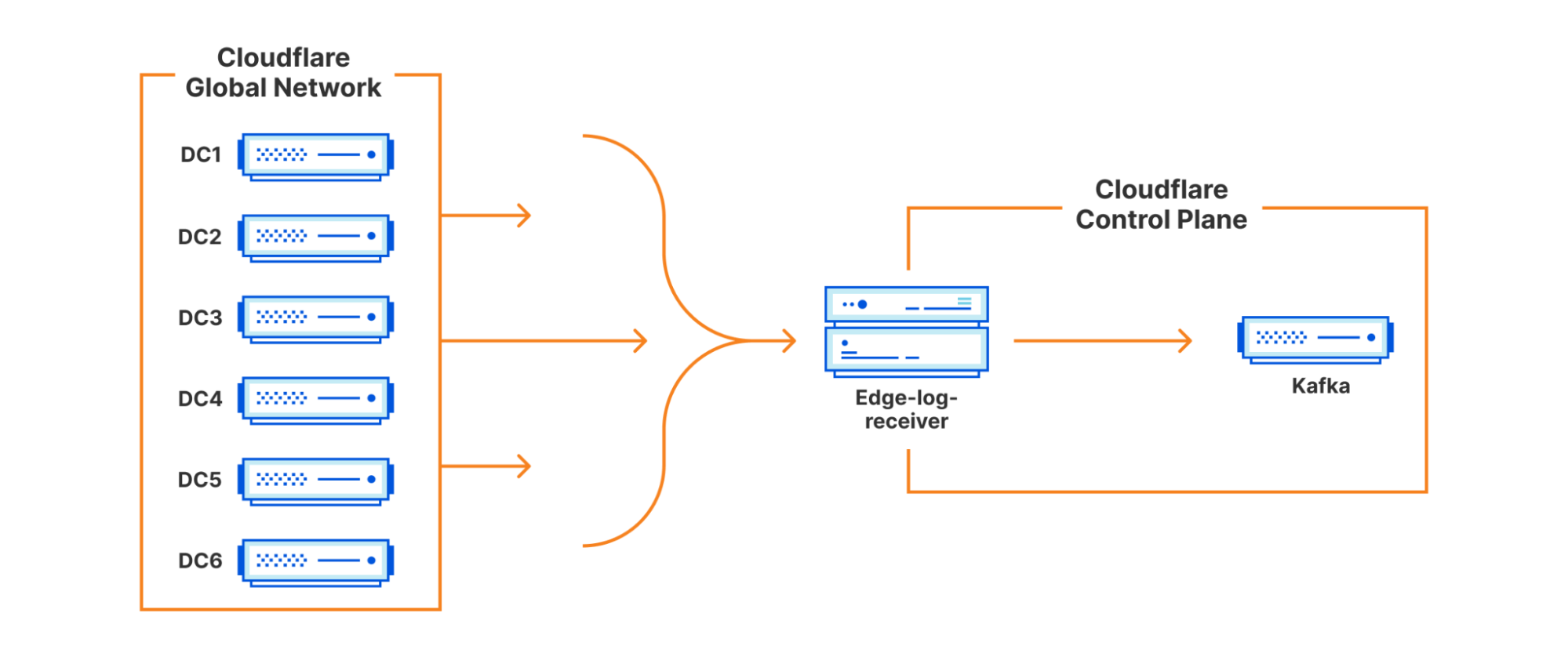

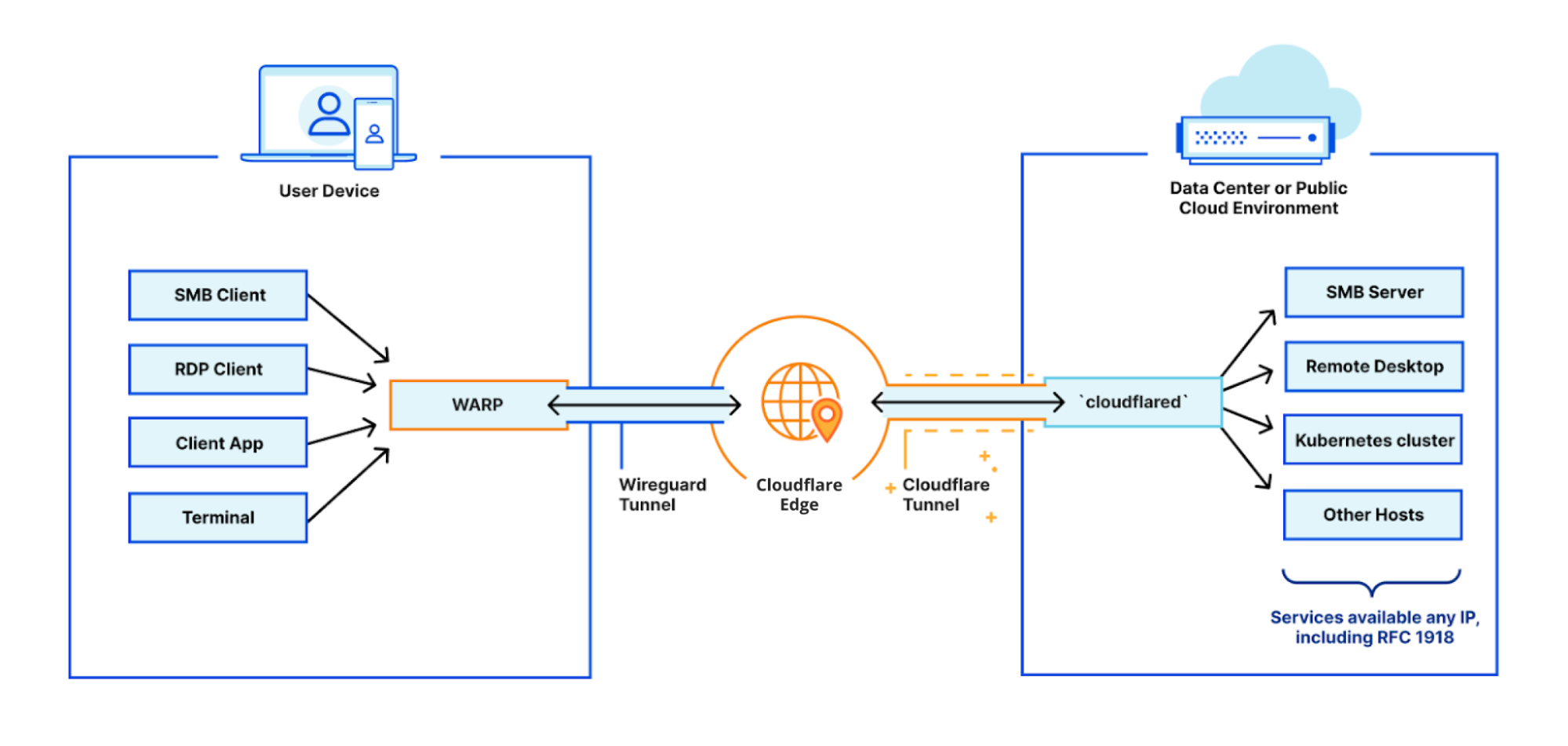

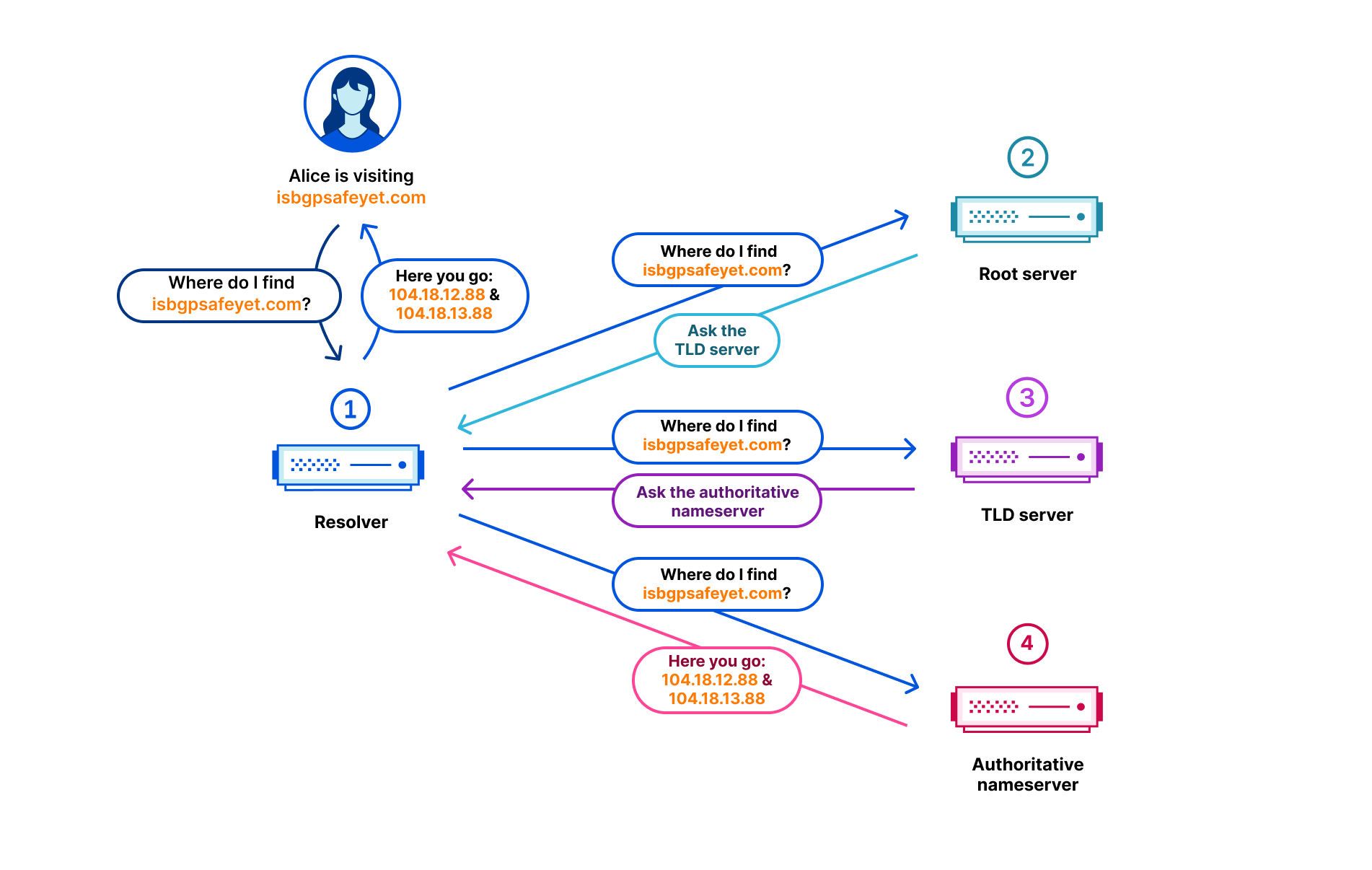

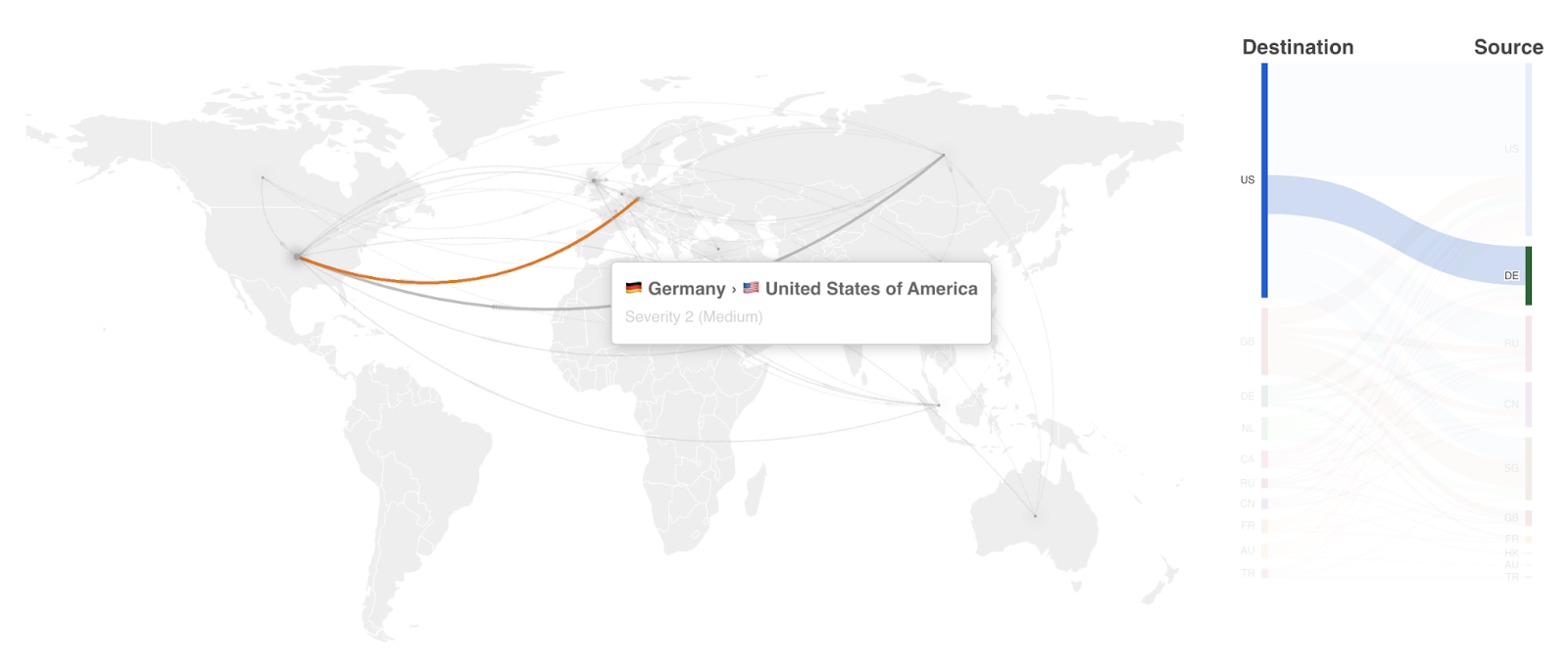

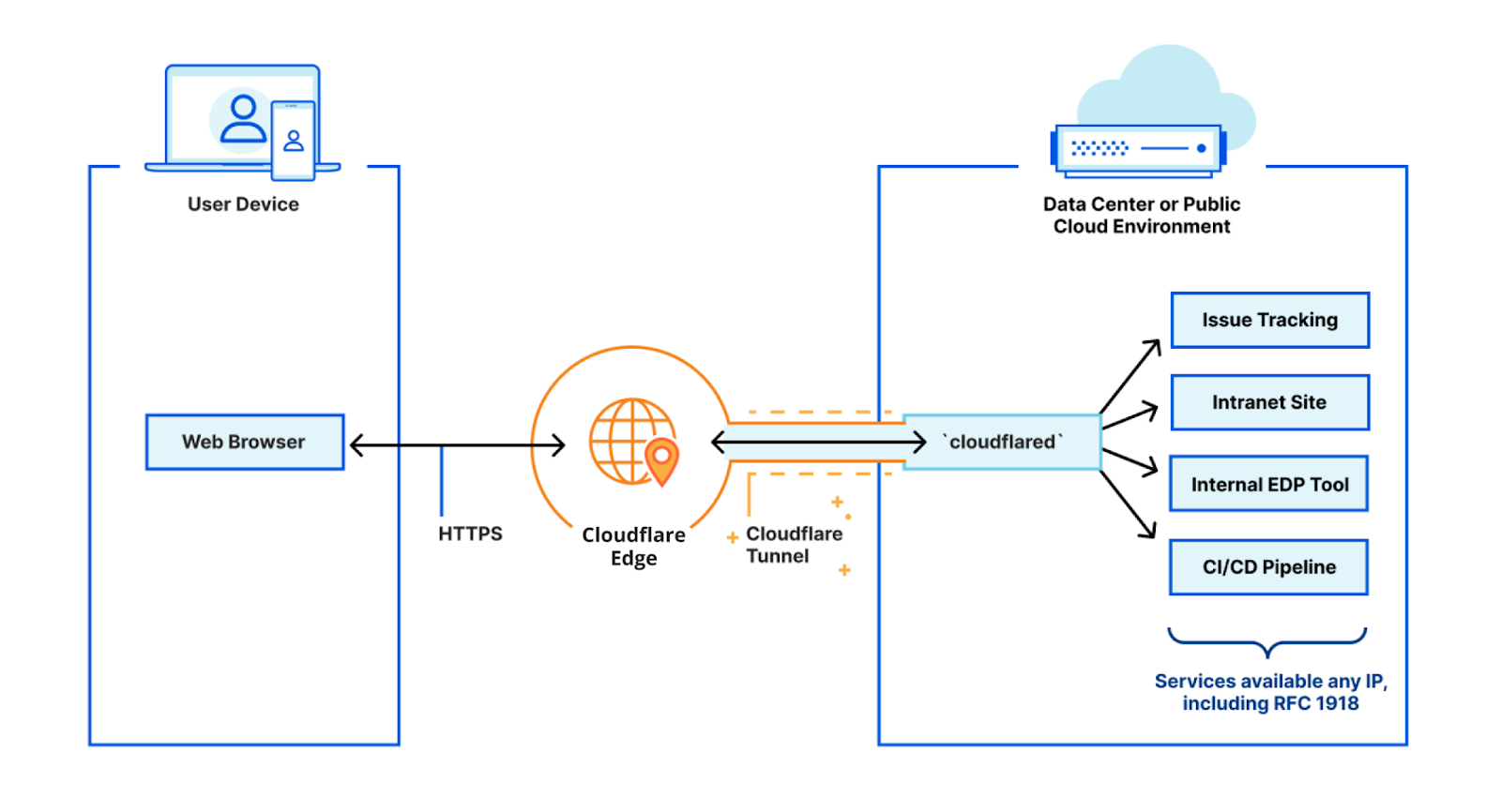

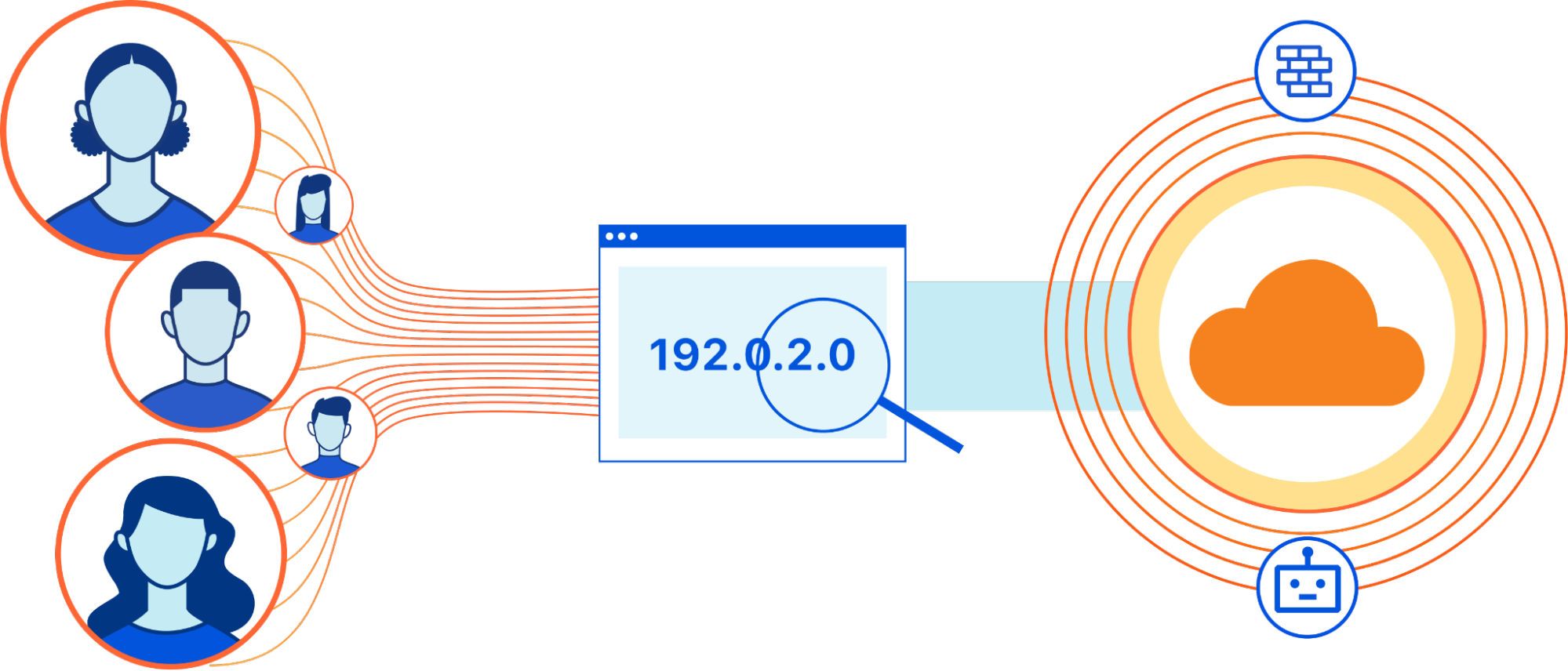

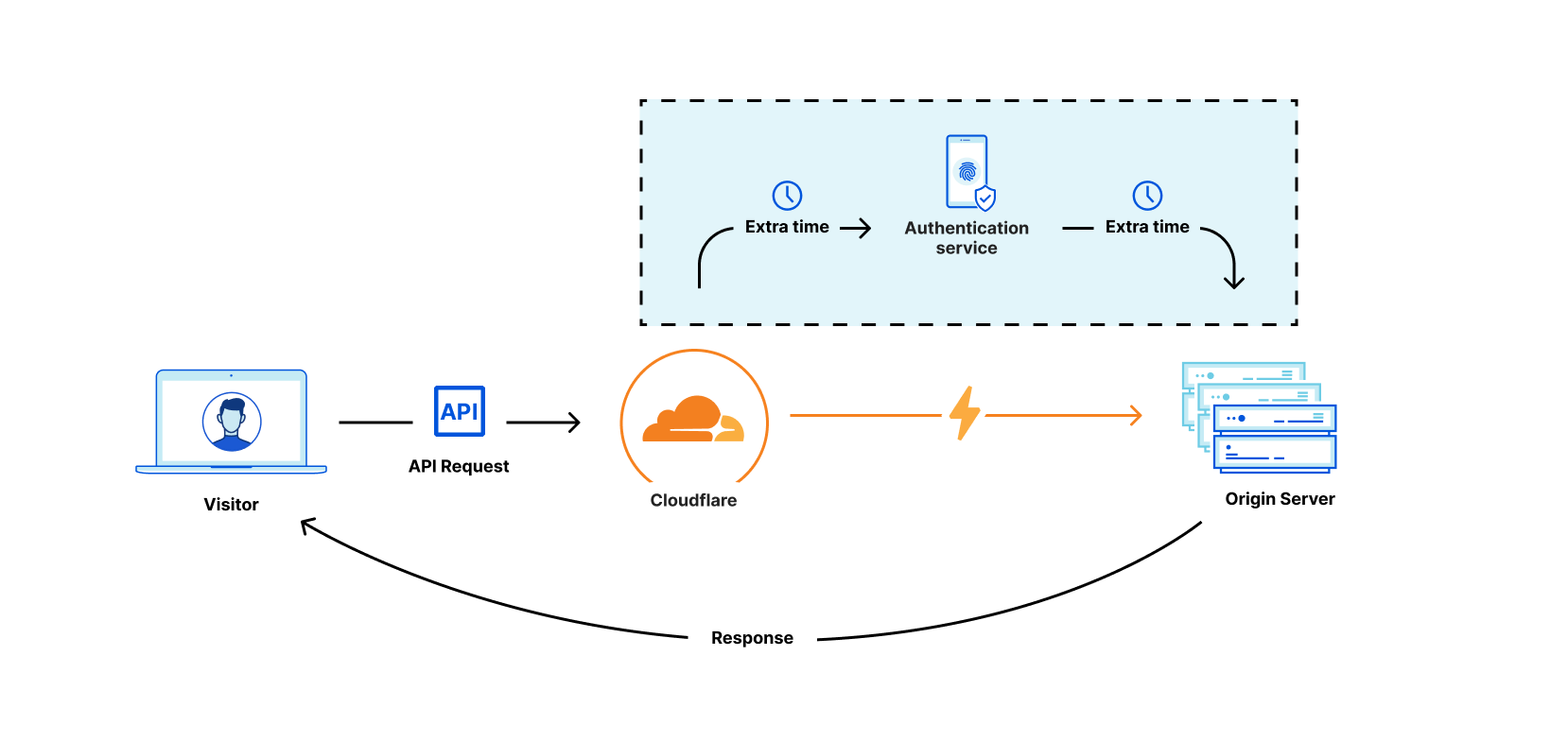

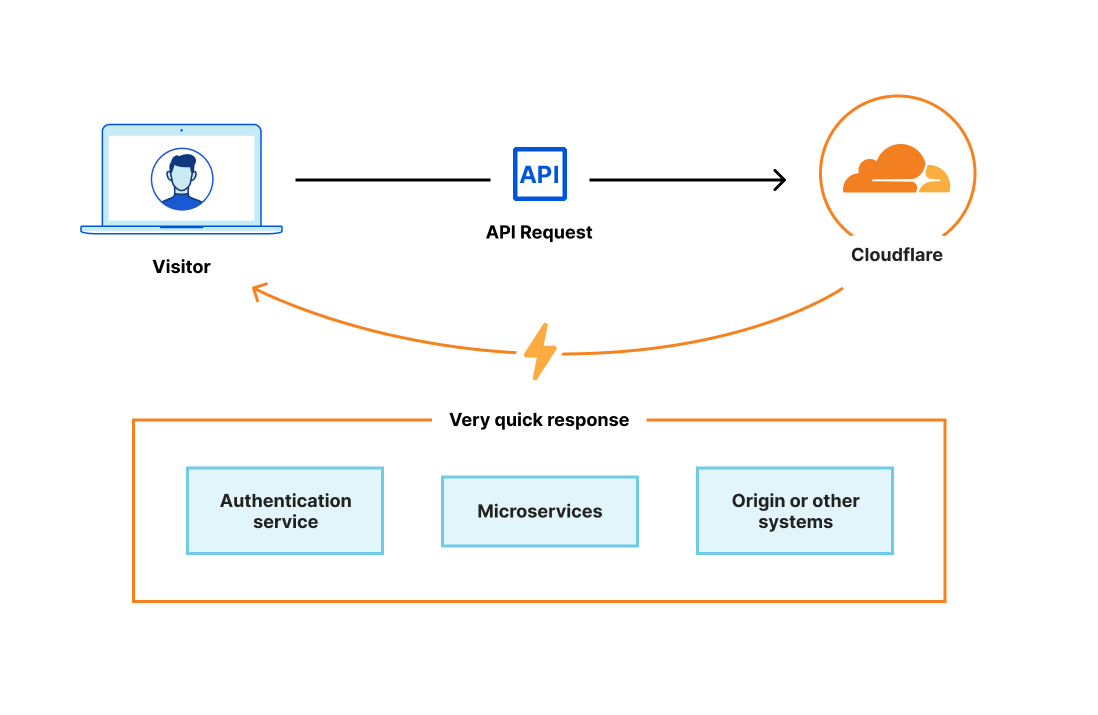

Using access control lists, we help you manage different user groups with varying permissions. And this matters—because your current provider is introducing tons of latency and unnecessary data exchange. If a request has to go somewhere outside the Cloudflare ecosystem, it’s traveling farther than it needs to:

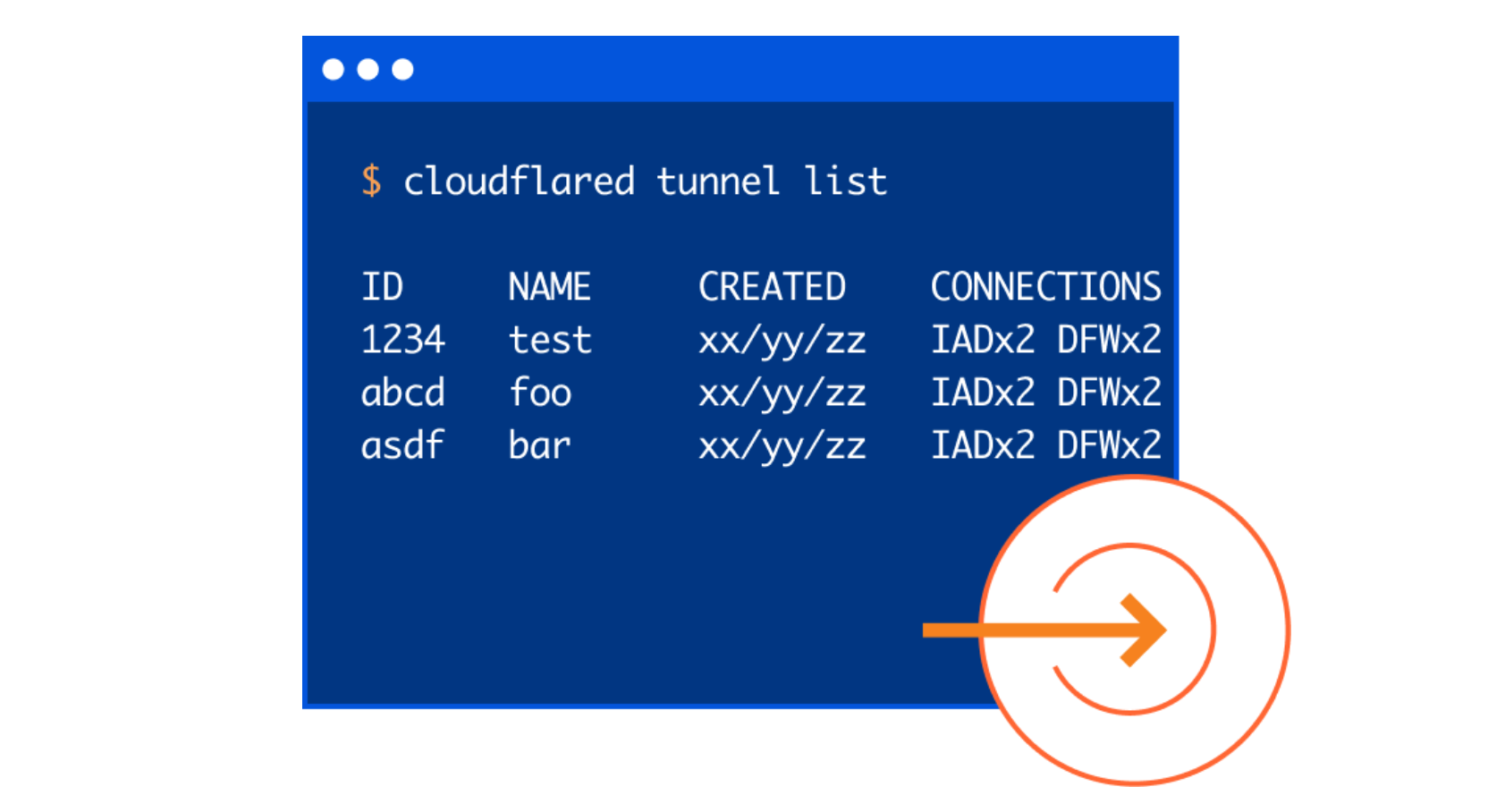

Cloudflare can authenticate on our global network and handle requests in a fraction of the time. This kind of technology is difficult to implement, but we felt it was too important to ignore. How did we build it so quickly? Cloudflare Access. We took our experience working with identity providers and, once again, ported it over to the world of APIs. Our gateway includes unlimited authentication and token exchange. These features will be available soon.

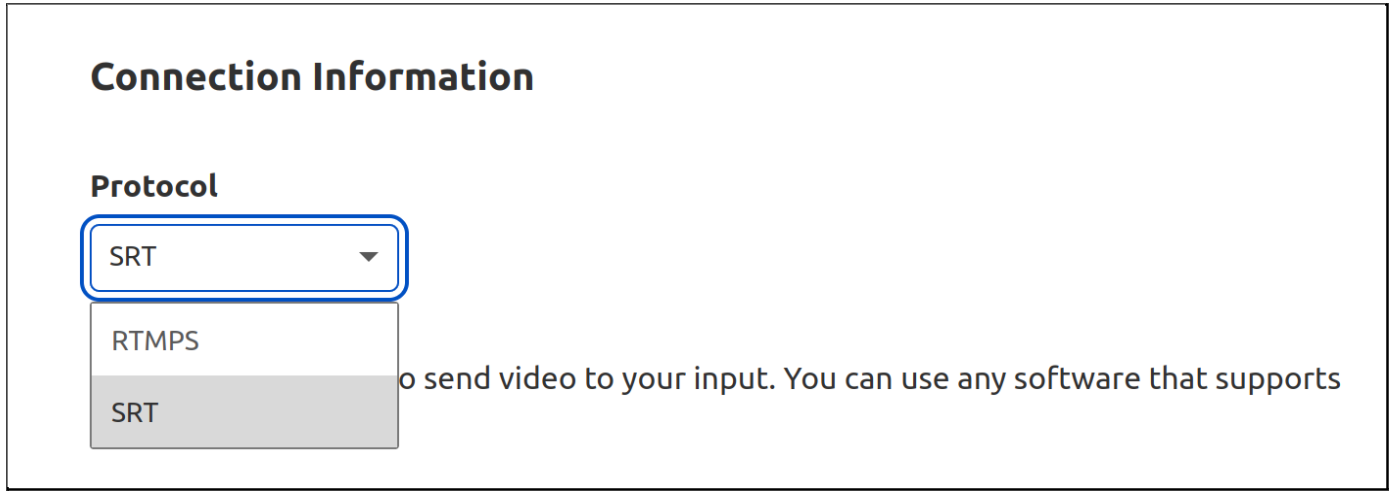

Routing & Management

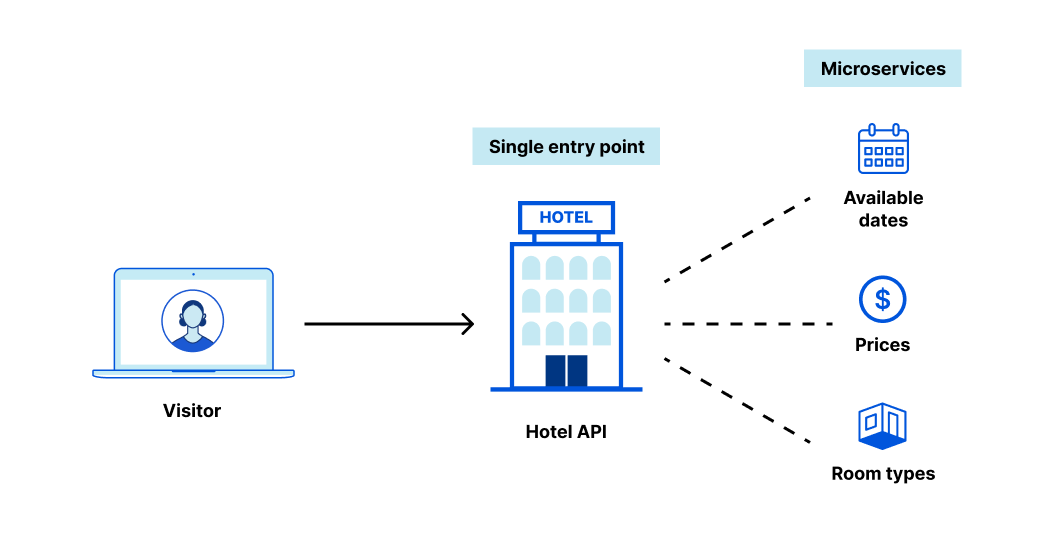

Let’s talk briefly about microservices. Modern applications are behemoths, so developers break them up into smaller chunks called “microservices.”

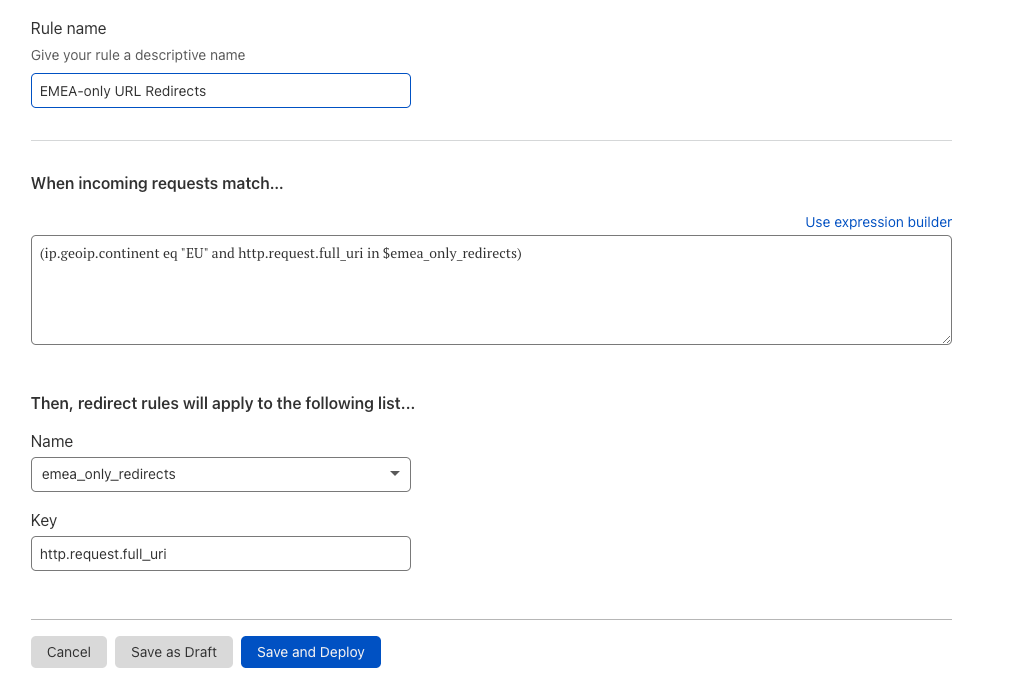

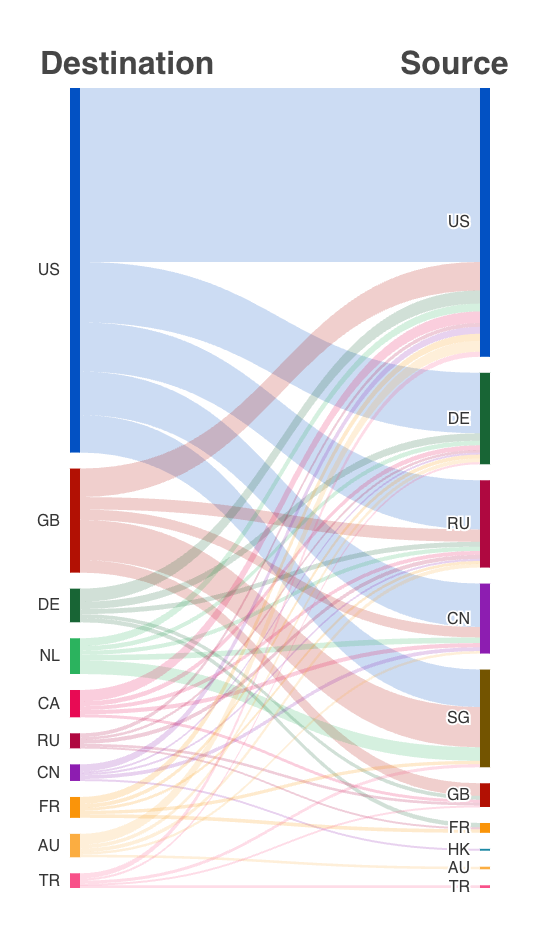

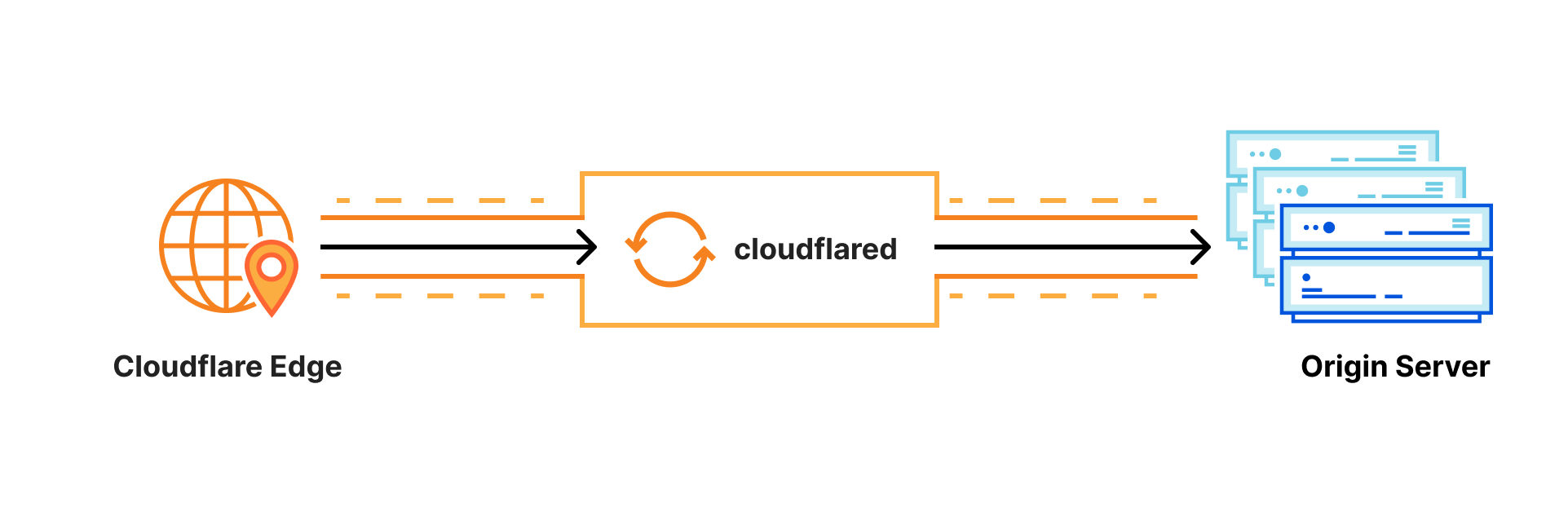

Consider an application that helps you book a hotel room. It might use a microservice to fetch available dates, another to fetch prices, and still another to fetch room types. Perhaps a different team manages each microservice, but they all need to be available from a single public entry point.

That single entry point—traditionally managed by an API gateway—is responsible for routing each request to the right microservice. Many of our customers have been paying standalone services to do this for years. That’s no longer necessary. We’ve built on our Transform Rules product to dynamically re-write and re-route at our edge. It’s easy to configure, fast to deploy, and natively built into API Gateway. Cloudflare can now be your API’s single point of entry.
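The routing job of a single entry point can be sketched as a prefix table. This is a conceptual illustration only, not Transform Rules syntax: the `ROUTES` table, the upstream hostnames, and the `route` function are invented for the hotel example.

```python
# Hypothetical routing table for the hotel-booking example:
# public path prefix -> internal microservice base URL.
ROUTES = {
    "/dates": "https://dates.internal.example",
    "/prices": "https://prices.internal.example",
    "/rooms": "https://rooms.internal.example",
}


def route(path):
    """Rewrite a public path to its upstream URL using longest-prefix matching."""
    best = None
    for prefix, upstream in ROUTES.items():
        # Match whole segments only, so '/pricesx' does not hit '/prices'.
        if path == prefix or path.startswith(prefix + "/"):
            if best is None or len(prefix) > len(best[0]):
                best = (prefix, upstream)
    if best is None:
        return None  # No microservice owns this path.
    prefix, upstream = best
    return upstream + path[len(prefix):]
```

A request for `/prices/2022-03-01` would be rewritten to `https://prices.internal.example/2022-03-01`, while an unknown path returns `None` and can be rejected at the edge.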

That’s just the tip of the iceberg. API Gateway can actually replace your microservices through an integration with our Workers product. How? Consider writing a Worker that performs some action, perhaps returning hotel prices, which are stored with Durable Objects on our network. With API Gateway, requests arrive at our network, are routed to the correct microservice with Transform Rules, and then are fully served with Workers (still on our network!). These Workers may contact your origin for additional information, where necessary.

Workers are faster, cheaper, and simpler than microservice alternatives. This integration will be available soon.

API Analytics

Customers tell us that seeing API traffic is sometimes more important than even acting on it. In fact, this trend isn’t specific to APIs. We published another blog post today that explores how one customer uses our bot intelligence to passively log information about threats.

With API Analytics, we’ve drawn on our other products to show useful data in real time. You can view popular endpoints, filter by ML-driven insights, see histograms of abuse thresholds, and capture trends.

API Analytics will be available soon. When this happens, you’ll also be able to export custom reports and share insights within your organization.

Logging, Quota Management, and More

All of our established features, like caching, load balancing, and log integrations, work natively with API Gateway. These shouldn’t be overlooked as primitive gateway features; they’re essential. And because Cloudflare performs all of these functions in the same place, you get the latency benefits without having to do a thing.

We are also expanding our Enterprise Logs functionality to perform real-time logging. If you choose to authenticate on Cloudflare’s network, you can view detailed logs of each user who has accessed an API. Similarly, we keep track of each request’s lifespan as it is received, validated, routed, and responded to. Everything is logged.

Finally, we are building Quota Management, a feature that counts API requests over a longer period of time (like a month) and allows you to manage thresholds for your users. We’ve also launched Advanced Rate Limiting to help with more sophisticated cases (including body inspection for GraphQL).
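The difference between a quota and a rate limit is mostly the length of the counting window. Here is a minimal in-memory sketch of a monthly quota, assuming calendar-month windows in UTC; the `MonthlyQuota` class and its interface are hypothetical, since the feature's real design is not described in this post.

```python
from collections import defaultdict
from datetime import datetime, timezone


class MonthlyQuota:
    """Count requests per user per calendar month and enforce a fixed limit."""

    def __init__(self, limit):
        self.limit = limit
        self._counts = defaultdict(int)

    def allow(self, user, when=None):
        """Record one request and return True, or return False if the quota is spent."""
        when = when or datetime.now(timezone.utc)
        key = (user, when.year, when.month)  # A new month starts a fresh counter.
        if self._counts[key] >= self.limit:
            return False
        self._counts[key] += 1
        return True

    def remaining(self, user, when=None):
        """Return how many requests the user has left in the current month."""
        when = when or datetime.now(timezone.utc)
        return self.limit - self._counts[(user, when.year, when.month)]
```

A real deployment would need shared, durable counters rather than a Python dictionary, but the bookkeeping is the same: one counter per user per month, compared against that user's threshold.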

Conclusion

Our API security features—Discovery, Schema Validation, Abuse Detection, and mTLS—are available now! We call these features API Shield because they form the shield that protects the remaining gateway functions. Enterprise customers can ask their account teams for access today.

Many of the other portions of API Gateway are now in early access. According to Gartner®, “by 2025, less than 50% of enterprise APIs will be managed, as explosive growth in APIs surpasses the capabilities of API management tools.” Our goal is to offer an affordable gateway that will fight this trend. If you have a specific feature you want to test, let your account team know, so we can onboard you as soon as possible.

Source: Gartner, “Predicts 2022: APIs Demand Improved Security and Management”, Shameen Pillai, Jeremy D’Hoinne, John Santoro, Mark O’Neill, Sham Gill, 6 December 2021. GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.