Post Syndicated from Ian McQuoid original https://blog.cloudflare.com/research-directions-in-password-security/

As Internet users, we all deal with passwords every day. With so many different services, each with their own login systems, we have to somehow keep track of the credentials we use with each of these services. This situation leads some users to delegate credential storage to password managers like LastPass or a browser-based password manager, but this is far from universal. Instead, many people still rely on old-fashioned human memory, which has its limitations — leading to reused passwords and to security problems. This blog post discusses how Cloudflare Research is exploring how to minimize password exposure and thwart password attacks.

The Problem of Password Reuse

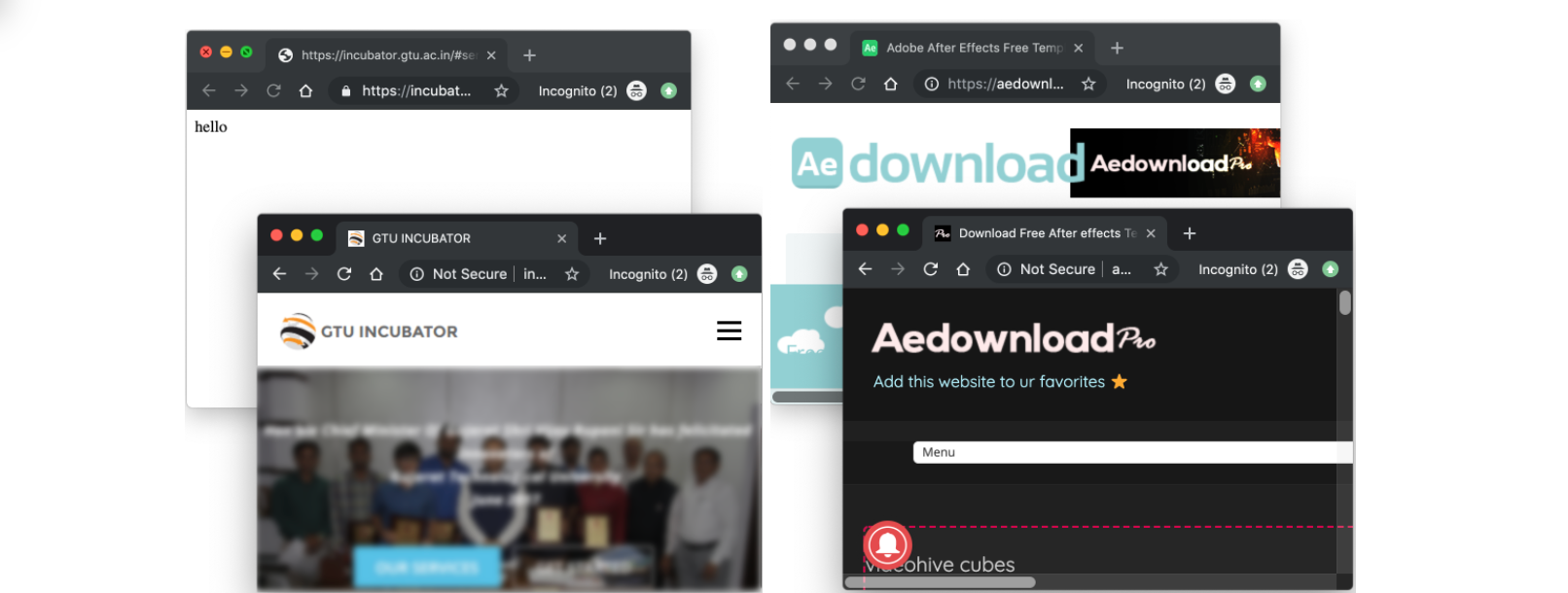

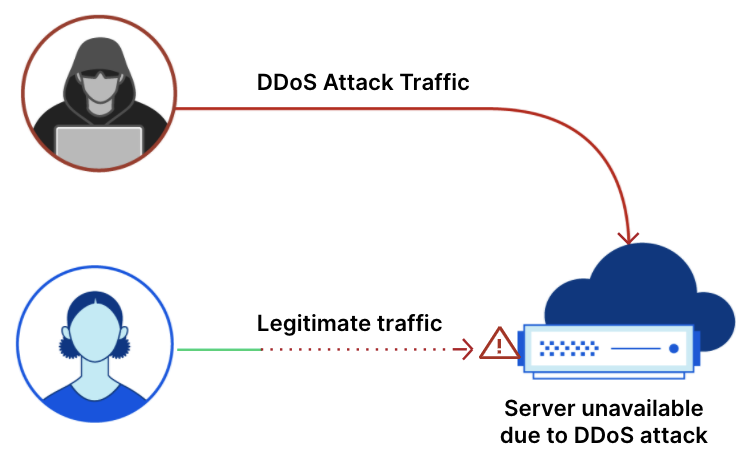

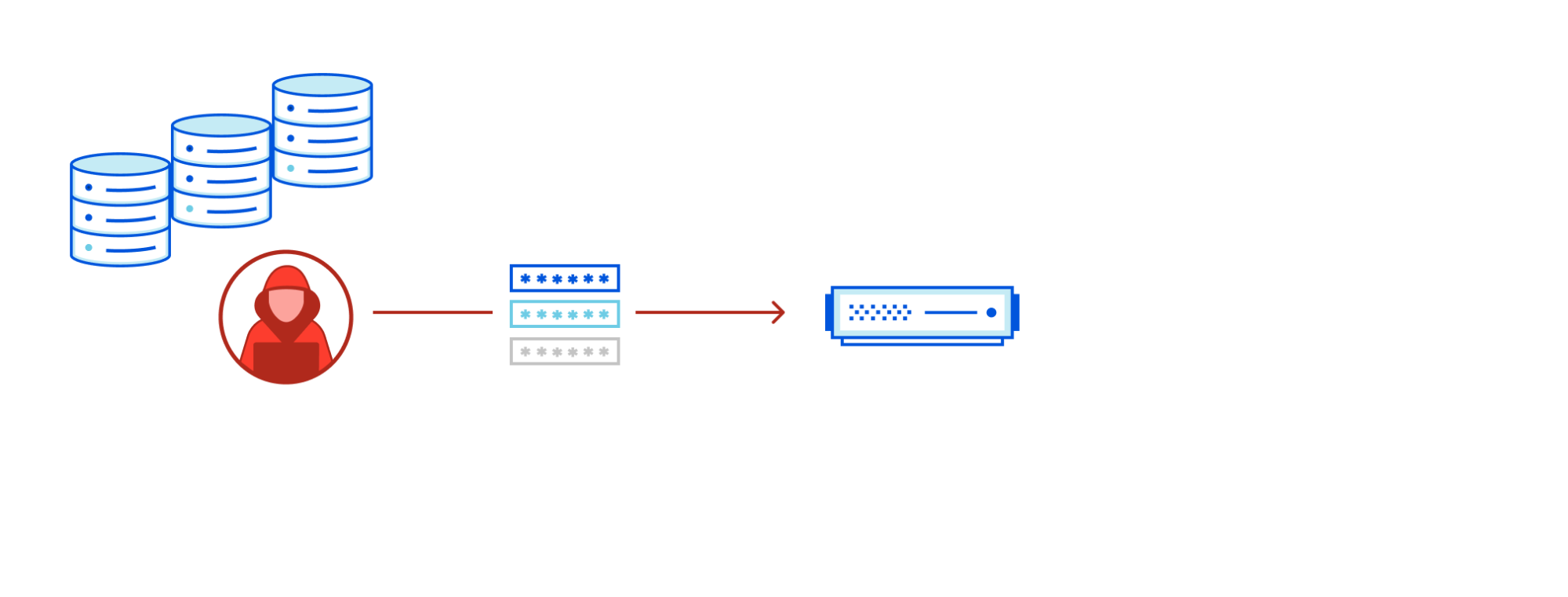

Because it’s too difficult to remember many distinct passwords, people often reuse them across different online services. When breached password datasets are leaked online, attackers can take advantage of these to conduct “credential stuffing attacks”. In a credential stuffing attack, an attacker tests breached credentials against multiple online login systems in an attempt to hijack user accounts. These attacks are highly effective because users tend to reuse the same credentials across different websites, and they have quickly become one of the most prevalent types of online guessing attacks. Automated attacks can be run at a large scale, testing out exposed passwords across multiple systems, under the assumption that some of these passwords will unlock accounts somewhere else (if they have been reused). When a data breach is detected, users of that service will likely receive a security notification and will reset that account password. However, if this password was reused elsewhere, they may easily forget that it needs to be changed for those accounts as well.

How can we protect against credential stuffing attacks? There are a number of methods that have been deployed — with varying degrees of success. Password managers address the problem of remembering a strong, unique password for every account, but many users have yet to adopt them. Multi-factor authentication is another potential solution — that is, using another form of authentication in addition to the username/password pair. This can work well, but has limits: for example, such solutions may rely on specialized hardware that not all clients have. Consumer systems are often reluctant to mandate multi-factor authentication, given concerns that people may find it too complicated to use; companies do not want to deploy something that risks impeding the growth of their user base.

Since there is no perfect solution, security researchers continue to try to find improvements. Two different approaches we will discuss in this blog post are hardening password systems using cryptographically secure keys, and detecting the reuse of compromised credentials, so they don’t leave an account open to guessing attacks.

Improved Authentication with PAKEs

Securely authenticating a user with only what they can remember has long been an important problem in secure communication. To this end, the subarea of cryptography known as Password Authenticated Key Exchange (PAKE) came about. PAKEs are protocols for establishing cryptographically secure keys where the only source of authentication is a human-memorizable (low-entropy, attacker-guessable) password, that is, the “what you know” side of authentication.

Before diving into the details, we’ll provide a high-level overview of the basic problem. Although passwords are typically protected in transit by being sent over HTTPS, servers handle them in plaintext to verify them once they arrive. Handling plaintext passwords increases security risk — for instance, they might get inadvertently logged and exposed. Ideally, the user’s password never gets sent to the server in the first place. This is where PAKEs come in — a means of verifying that the user and server share a password, ideally without revealing information about the password that could help attackers to discover or crack it.

A few words on PAKEs

PAKE protocols let two parties turn a password into a shared key. Each party only gets one guess at the password the other holds. If a user tries to log in to the wrong server with a PAKE, that server will not be able to turn around and impersonate the user. As such, PAKEs guarantee that communication with one of the parties is the only way for an attacker to test their (single) password guess. This may seem like an unneeded level of complexity when we could use already available tools like a key distribution mechanism along with password-over-TLS, but that approach puts a lot of trust in the service. You may trust a service with learning your password on that service, but what if you accidentally use a password for a different service when trying to log in? Note the particular risks of a reused password: it is no longer just a secret shared between a user and a single service, but is now a secret shared between a user and multiple services. This increases the password’s privacy sensitivity: a service should not know users’ account login information for other services.

PAKE protocols are built on the assumption that the server isn’t always working in the best interest of the client and, further, that the client cannot rely on any kind of public-key infrastructure during login (although it doesn’t hurt to have both!). This precludes the user from sending their plaintext password (or any information from which it could feasibly be computed) to the server during login.

PAKE protocols have expanded into new territory since the seminal EKE paper of Bellovin and Merritt, where the client and server both remembered a plaintext version of the password. As mentioned above, when the server stores the plaintext password, the client risks having the password logged or leaked. To address this, new protocols were developed, referred to as augmented, verifier-based, or asymmetric PAKEs (aPAKEs), where the server stored a modified version (similar to a hash) of the password instead of the plaintext password. This mirrors the way many of us were taught to store passwords in a database, specifically as a hash of the password with accompanying salt and pepper. However, in these cases, attackers can still use traditional methods of attack such as targeted rainbow tables. To avoid these kinds of attacks, a new kind of PAKE was born, the strong asymmetric PAKE (saPAKE).
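To make the precomputation risk concrete, here is a minimal Python sketch of the salted-verifier idea. It is not any particular aPAKE: PBKDF2, the iteration count, and all function names are assumptions chosen for illustration. The point it tries to show is that an attacker who learns a user's salt ahead of time (for example, during a login attempt) can build a lookup table for that one user before ever breaching the server.

```python
import hashlib
import hmac
import os

ITERATIONS = 10_000  # illustrative cost parameter, not a recommendation


def make_verifier(password: str, salt: bytes) -> bytes:
    """Derive the value the server stores instead of the plaintext password."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)


def register(password: str):
    """Pick a fresh per-user salt and return (salt, verifier)."""
    salt = os.urandom(16)
    return salt, make_verifier(password, salt)


def check(password: str, salt: bytes, verifier: bytes) -> bool:
    """Server-side check of a login attempt against the stored verifier."""
    return hmac.compare_digest(make_verifier(password, salt), verifier)


def precompute_table(salt: bytes, guesses):
    """Attacker's per-user table, built as soon as the salt is known."""
    return {make_verifier(guess, salt): guess for guess in guesses}


if __name__ == "__main__":
    salt, verifier = register("sunshine")
    # The salt is assumed to be known before the breach; the verifier is not.
    table = precompute_table(salt, ["123456", "password", "sunshine"])
    # After the breach, recovering the password is a single dictionary lookup.
    print(table.get(verifier))
```

The table is only useful against the one user whose salt it was built for, which matches the "independent precomputation for each client" threat model of an aPAKE.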

OPAQUE was the first saPAKE and it guarantees defense against precomputation by hiding the password dictionary itself! It does this by replacing the noninteractive hash function with an interactive protocol referred to as an Oblivious Pseudorandom Function (OPRF) where one party inputs their “salt”, another inputs their “password”, and only the password-providing party learns the output of the function. The fact that the password-providing party learns nothing (computationally) about the salt prevents offline precomputation by disallowing an attacker from evaluating the function in their head.
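The blinding idea behind an OPRF can be sketched in a few lines of Python. This is a toy, Diffie-Hellman-style construction over a deliberately tiny group (the prime 2039), so it is insecure and is not the OPRF that OPAQUE specifies; the function names and parameters are assumptions made for illustration. The server's secret key plays the role of the "salt".

```python
import hashlib
import secrets

P = 2039  # toy safe prime, P = 2 * Q + 1 (far too small for real use)
Q = 1019  # prime order of the subgroup of squares modulo P


def hash_to_group(password: bytes) -> int:
    """Map a password to a non-identity element of the order-Q subgroup."""
    digest = int.from_bytes(hashlib.sha256(password).digest(), "big")
    base = digest % (P - 3) + 2  # in [2, P - 2], so never 0, 1, or P - 1
    return pow(base, 2, P)       # squaring lands in the subgroup of squares


def finalize(password: bytes, element: int) -> bytes:
    """Hash the password together with the unblinded group element."""
    return hashlib.sha256(password + element.to_bytes(2, "big")).digest()


def client_blind(password: bytes):
    """Client: hide the password behind a random exponent r."""
    r = secrets.randbelow(Q - 1) + 1  # uniform in [1, Q - 1]
    return r, pow(hash_to_group(password), r, P)


def server_evaluate(key: int, blinded: int) -> int:
    """Server: apply its secret key without seeing the password."""
    return pow(blinded, key, P)


def client_unblind(password: bytes, r: int, evaluated: int) -> bytes:
    """Client: strip the blinding exponent and derive the final output."""
    r_inv = pow(r, -1, Q)  # modular inverse (Python 3.8+)
    return finalize(password, pow(evaluated, r_inv, P))


def direct_evaluate(key: int, password: bytes) -> bytes:
    """What someone holding both the key and the password would compute."""
    return finalize(password, pow(hash_to_group(password), key, P))


if __name__ == "__main__":
    server_key = secrets.randbelow(Q - 1) + 1
    r, blinded = client_blind(b"sunshine")
    output = client_unblind(b"sunshine", r, server_evaluate(server_key, blinded))
    print(output == direct_evaluate(server_key, b"sunshine"))
```

In this sketch the server only ever sees a blinded group element, and the client never sees the key, so nobody can evaluate the function over a whole dictionary without interacting with the server once per guess.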

Another way to think about the three PAKE paradigms has to do with how each of them treats the password dictionary:

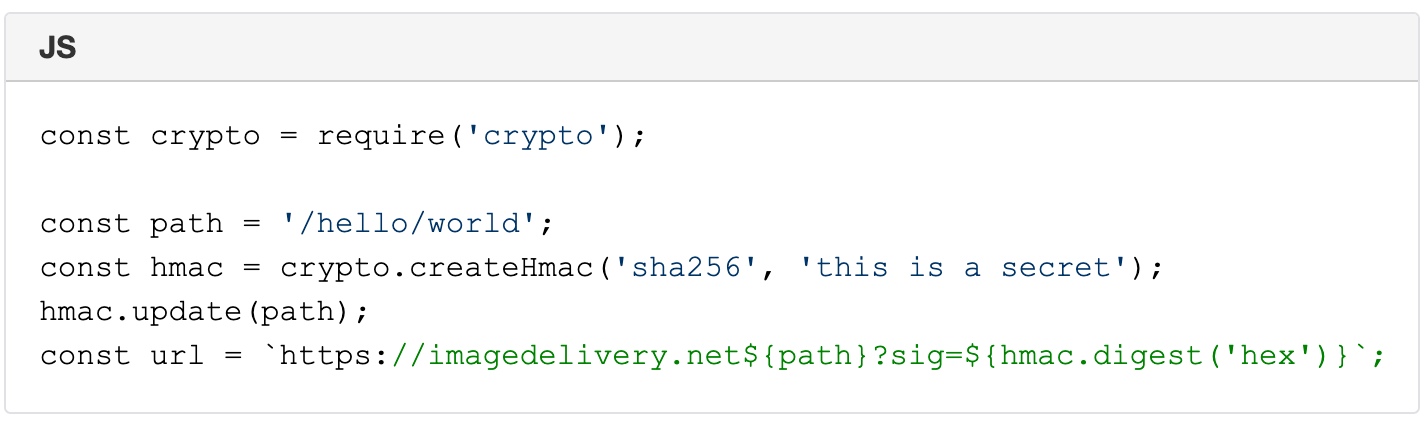

| PAKE type | Password Dictionary | Threat Model |
|---|---|---|
| PAKE | The password dictionary is public and common to every user. | Without any guessing, the attacker learns the user’s password upon compromise of the server. |
| aPAKE | Each user gets their own password dictionary; a description of the dictionary (e.g., the “salt”) is leaked to the client when they attempt to log in. | The attacker must perform an independent precomputation for each client they want to attack. |
| saPAKE (e.g., OPAQUE) | Each user gets their own password dictionary; the server only provides an online interface (the OPRF) to the dictionary. | The adversary must wait until after they compromise the server to run an offline attack on the user’s password¹. |

OPAQUE also goes one step further and allows the user to perform the password transformation on their own device so that the server doesn’t see the plaintext password during registration either. Cloudflare Research has been involved with OPAQUE for a while now — for instance, you can read about our previous implementation work and demo if you want to learn more.

But OPAQUE is not a panacea: in the event of server compromise, the attacker can learn the salt that the server uses to evaluate the OPRF and can still run the same offline attack that was available in the aPAKE world. Because no precomputation is possible, this attack is considerably more time-consuming, and it can be made more expensive still through the use of memory-hard hash functions like scrypt. This means that despite our best efforts, when a server is breached, the attacker can eventually come out with a list of plaintext passwords. Indeed, this attack is unavoidable: the attacker can always run the (sa)PAKE protocol locally, acting as both parties, to test each password guess. We therefore still need ways to defend against and mitigate automated password attacks such as credential stuffing.
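The post-compromise attack can be sketched as a plain dictionary loop. The Python sketch below is a simplified model rather than OPAQUE itself: the OPRF is replaced by an HMAC under the stolen key, the stored record is just the hardened output, and the scrypt parameters and names are assumptions. It is meant to show two things: each guess costs one memory-hard scrypt evaluation, and the loop cannot start until the key has been stolen.

```python
import hashlib
import hmac


def harden(password: str, oprf_key: bytes) -> bytes:
    """Stand-in for 'OPRF output, then memory-hard hash' (an assumption)."""
    # HMAC under the server's key models the OPRF once the key is known.
    prf_output = hmac.new(oprf_key, password.encode(), hashlib.sha256).digest()
    # scrypt makes every single guess cost noticeable time and memory.
    return hashlib.scrypt(prf_output, salt=b"toy-context", n=2**12, r=8, p=1, dklen=32)


def offline_attack(stolen_key: bytes, stolen_record: bytes, dictionary):
    """Try each guess locally; only possible after the key has been stolen."""
    for guess in dictionary:
        if hmac.compare_digest(harden(guess, stolen_key), stolen_record):
            return guess
    return None


if __name__ == "__main__":
    key = b"k" * 32                   # per-user secret held by the server
    record = harden("sunshine", key)  # what the server stores for the user
    print(offline_attack(key, record, ["123456", "password", "sunshine"]))
```

Raising `n` increases the memory and time needed per guess, which slows the attacker's dictionary run at the price of a slower legitimate login.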

Are You Overexposed?

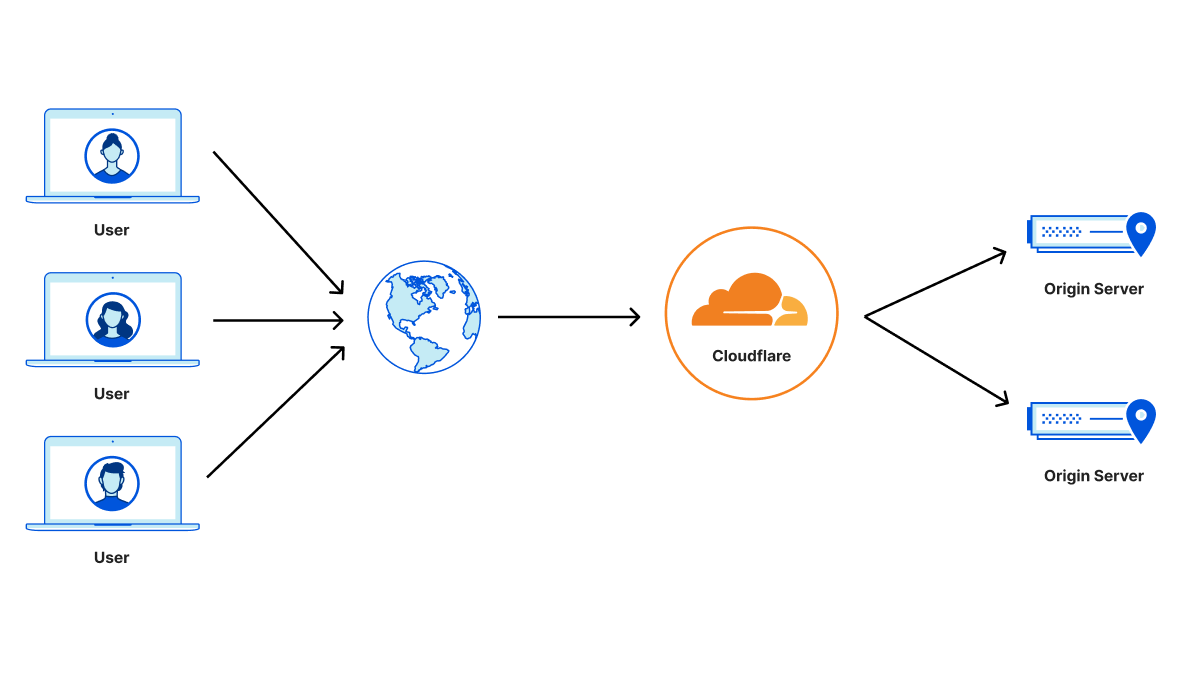

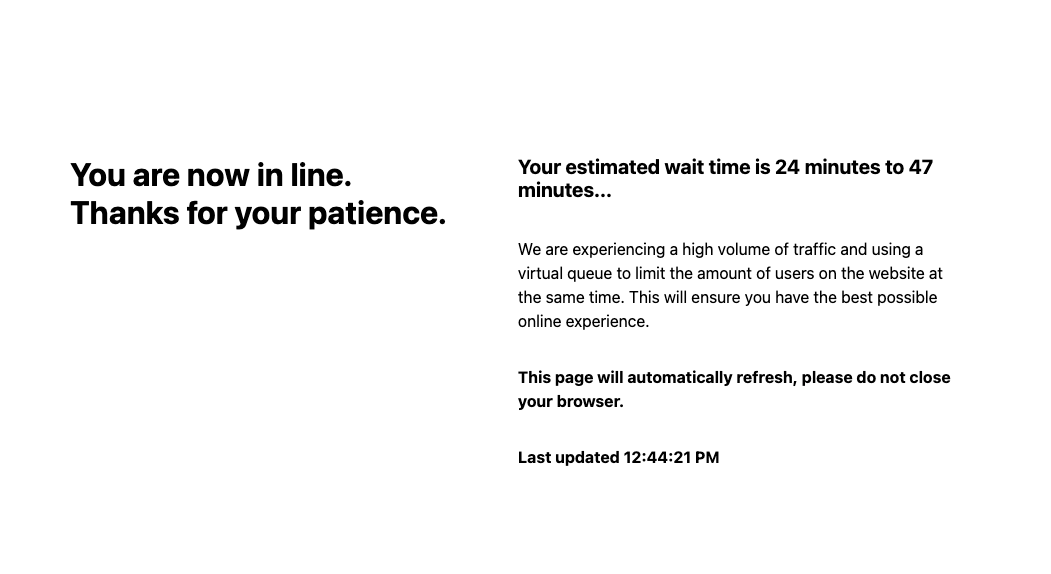

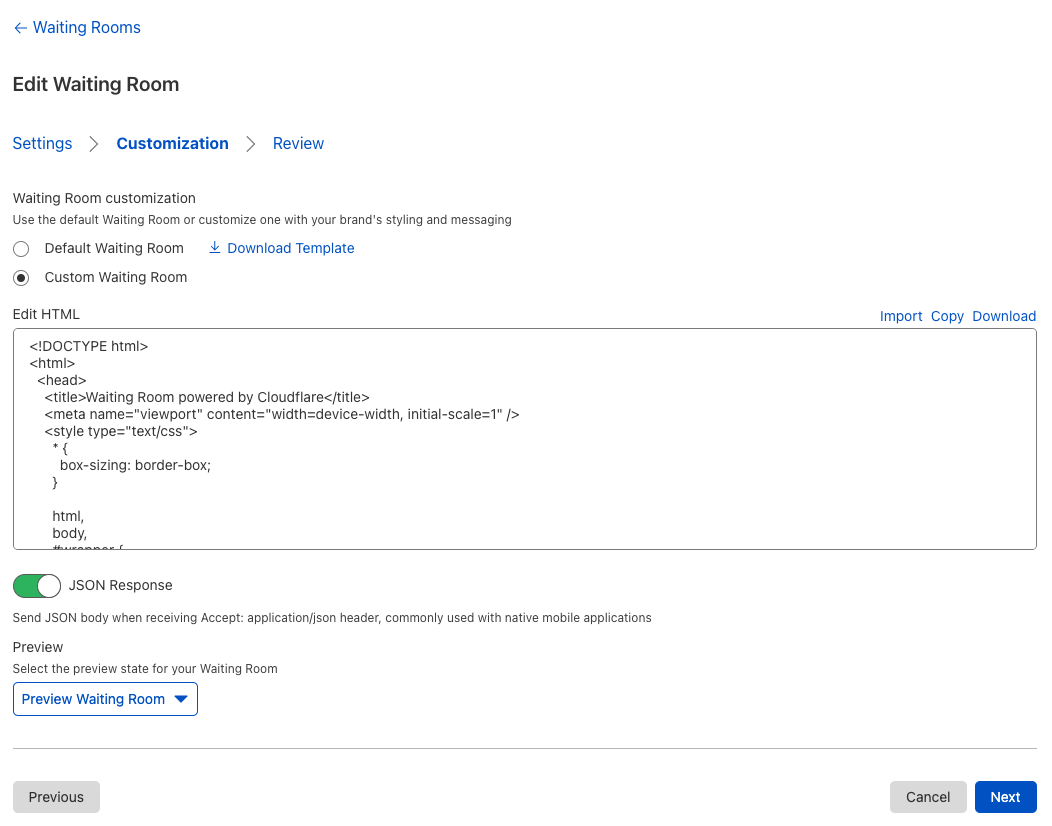

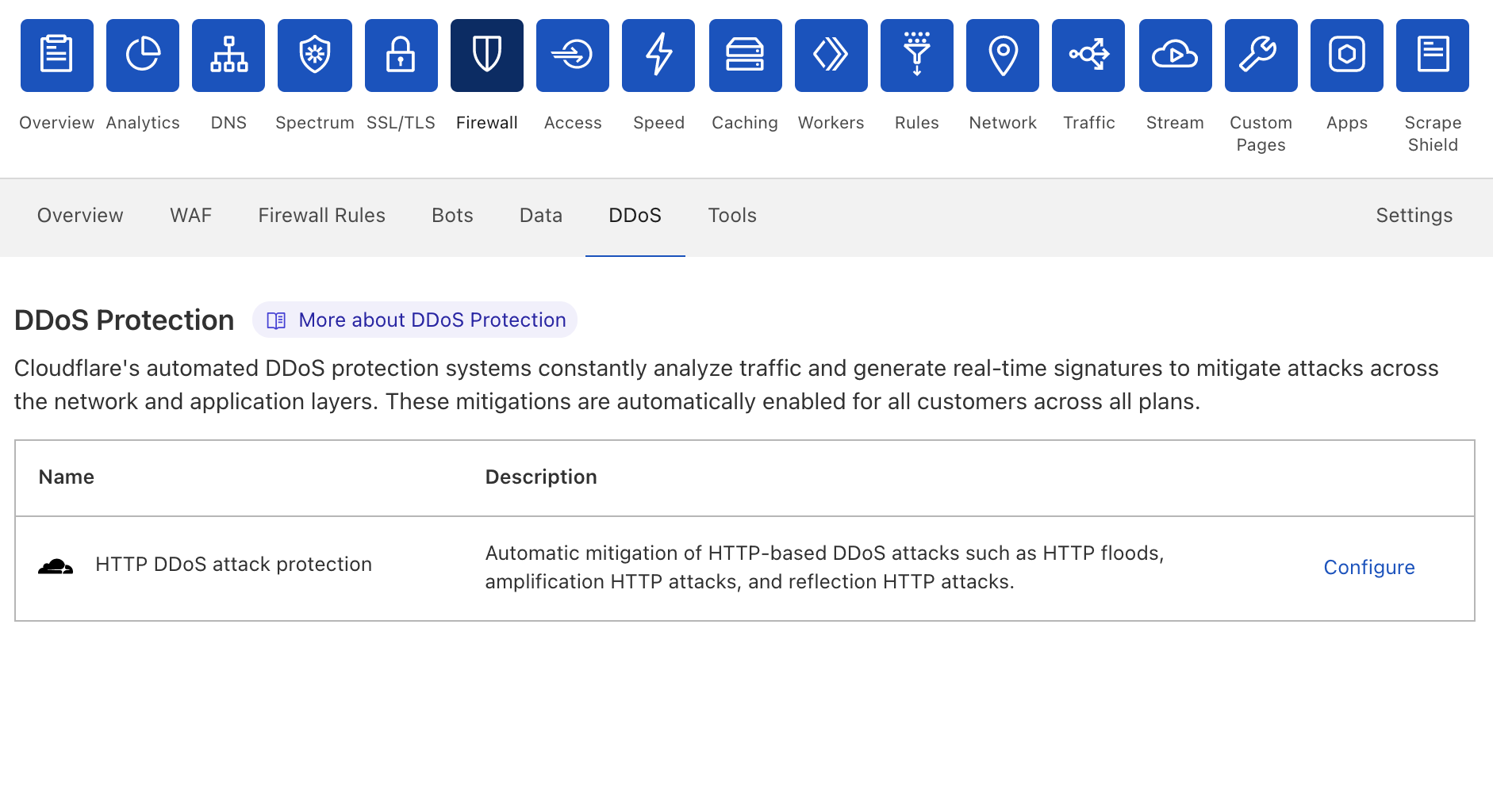

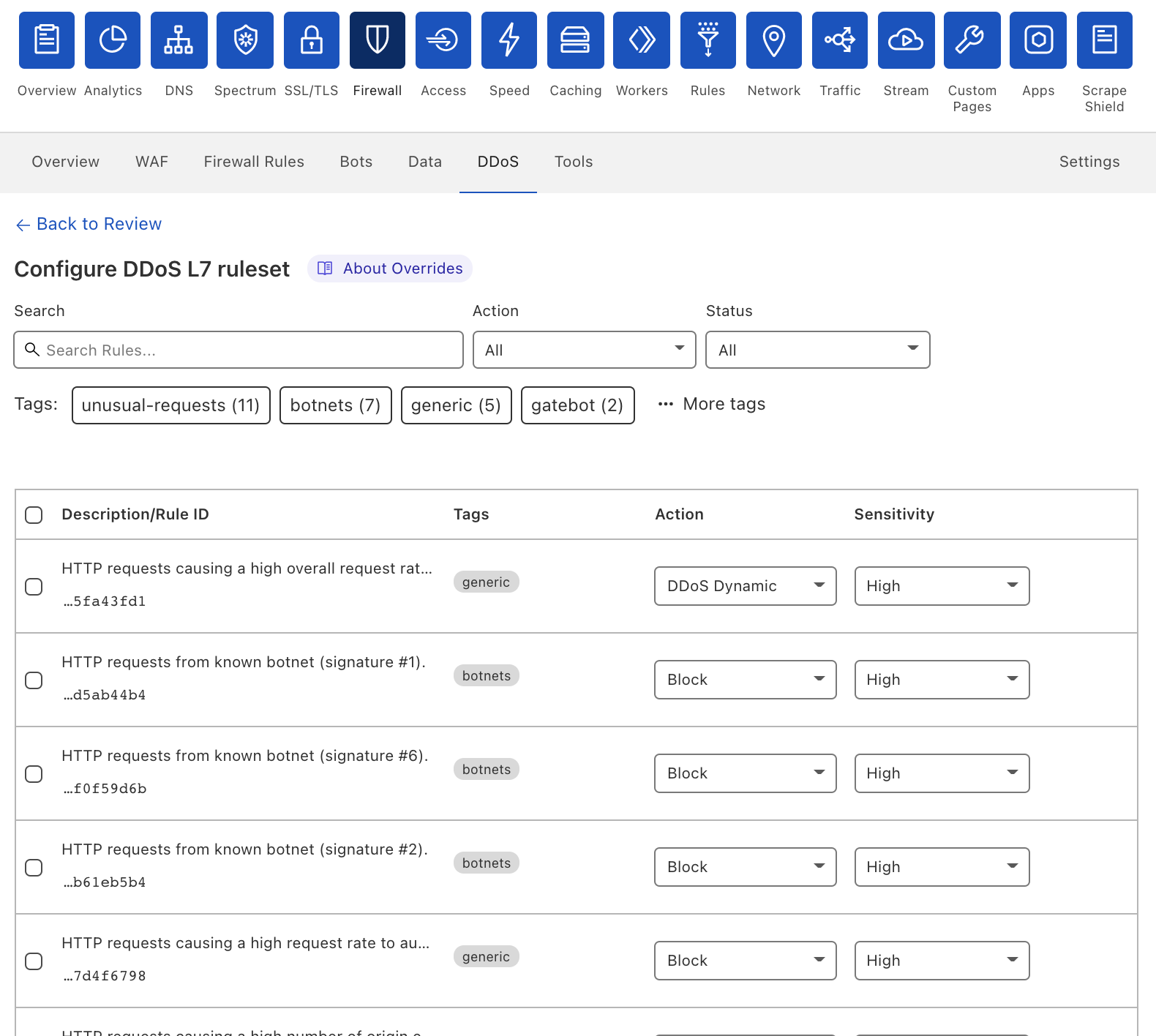

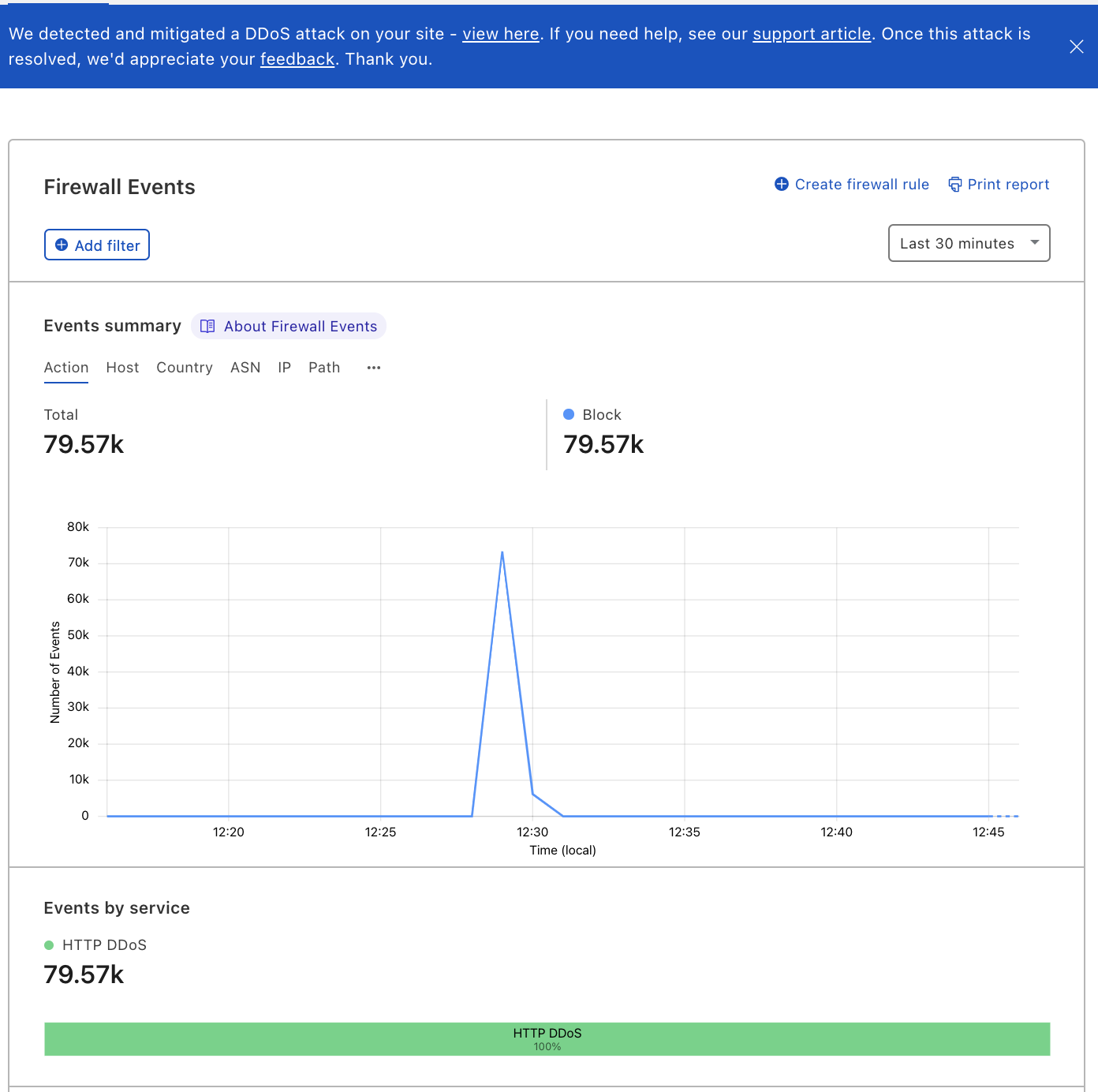

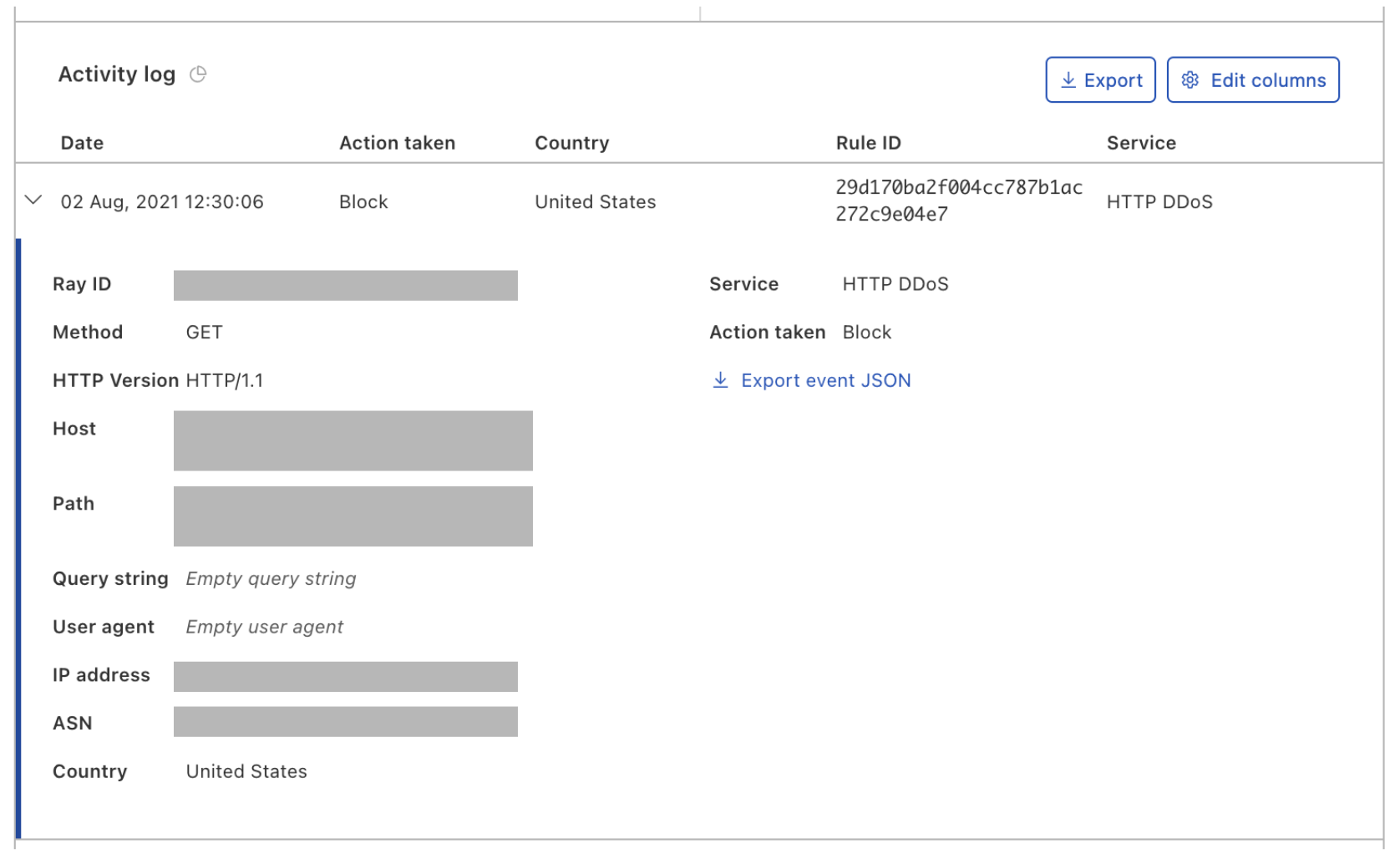

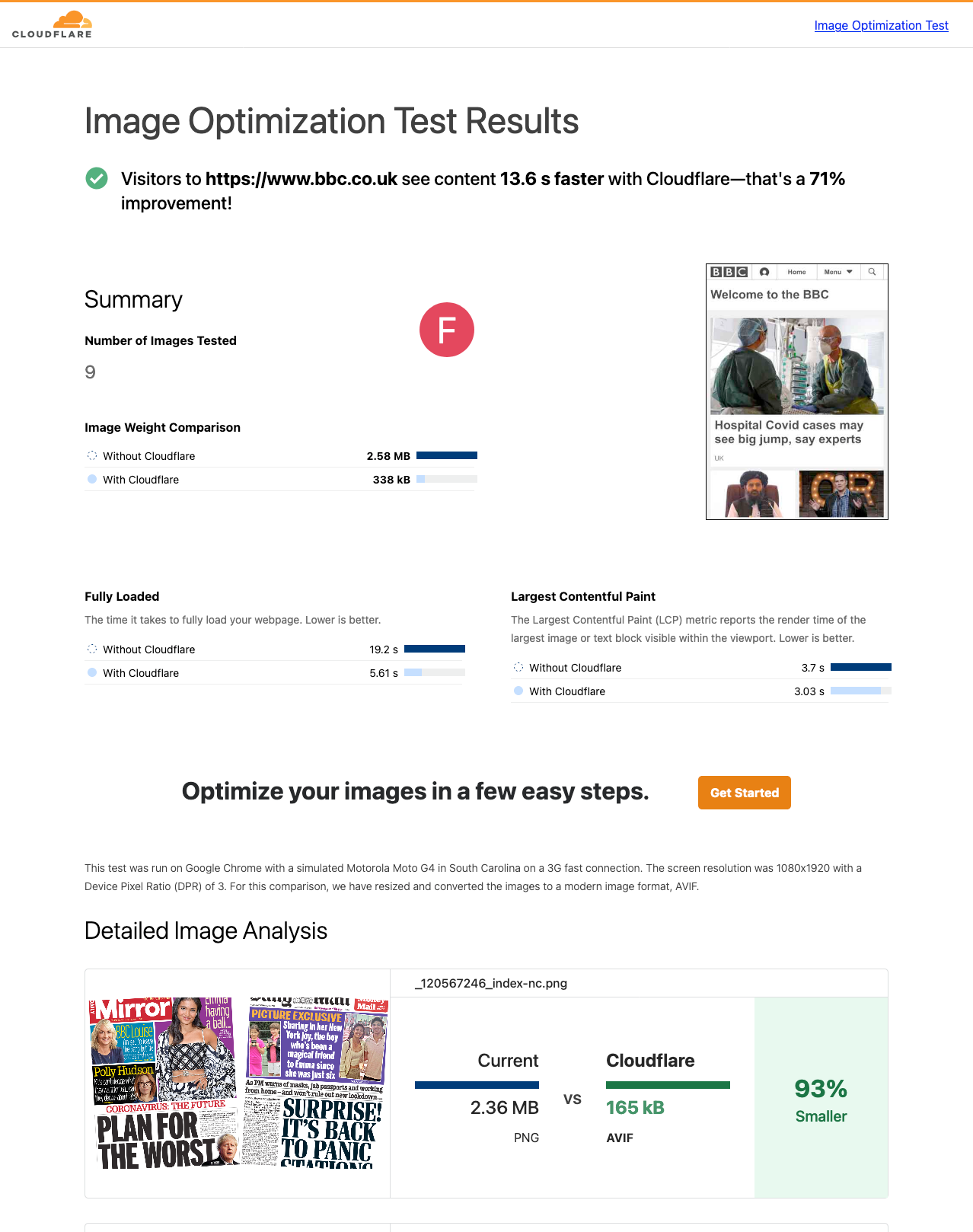

To help detect and respond to credential stuffing, Cloudflare recently rolled out the Exposed Credential Checks feature on the Web Application Firewall (WAF), which can alert the origin if a user’s login credentials have appeared in a recent breach. Historically, compromised credential checking services have allowed users to be proactive against credential stuffing attacks when their username and password appear together in a breach. However, they do not account for recently proposed credential tweaking attacks, in which an attacker tries variants of a breached password, under the assumption that users often use slight modifications of the same password for different accounts, such as “sunshineFB”, “sunshineIG”, and so on. Therefore, compromised credential check services should incorporate methods of checking for credential tweaks.

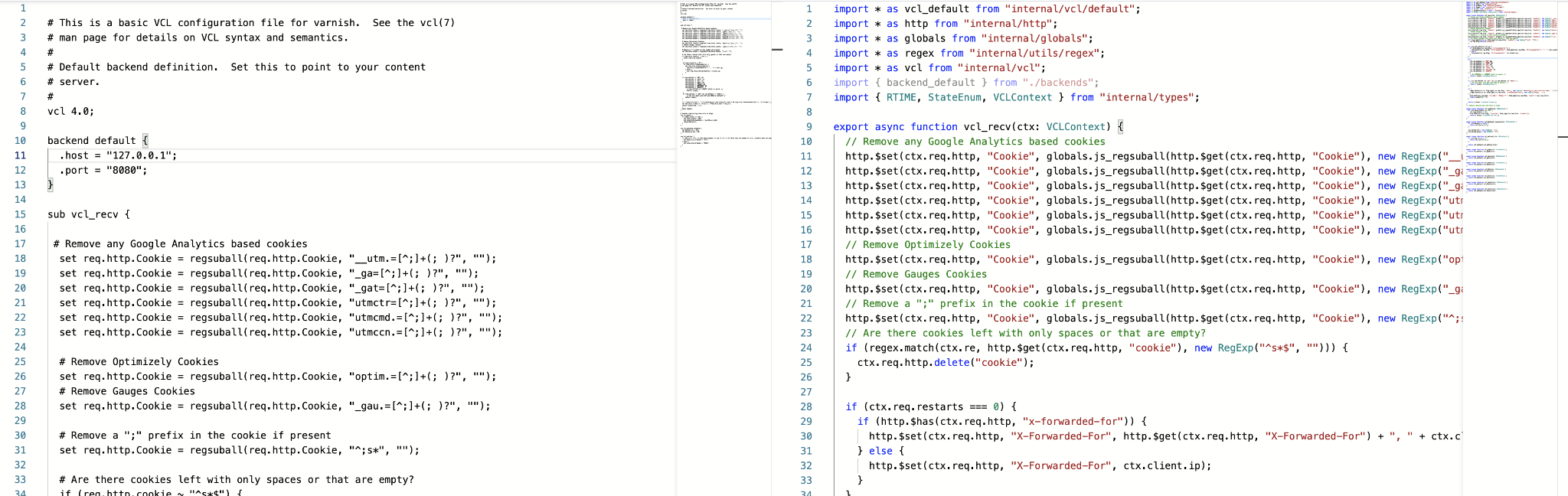

Under the hood, Cloudflare’s Exposed Credential Checks feature relies on an underlying protocol called Might I Get Pwned (MIGP). MIGP uses the bucketization method proposed by Li et al. to avoid sending the plaintext username or password to the server while handling a large breach dataset. After receiving a user’s credentials, MIGP hashes the username and sends a portion of that hash as a “bucket identifier” to the server. The client and server can then perform a private membership test protocol to verify whether the user’s username/password pair appeared in that bucket, without ever having to send plaintext credentials to the server.
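The bucketization step can be illustrated with a short Python sketch. The 16-bit prefix length, the use of SHA-256, and all function names are assumptions, and the private membership test is replaced by a plain hash comparison purely as a stand-in, so this does not provide the privacy properties of the real protocol.

```python
import hashlib

BUCKET_BITS = 16  # assumed prefix length; deployments may choose differently


def bucket_id(username: str) -> int:
    """Return the first BUCKET_BITS bits of the username hash."""
    digest = hashlib.sha256(username.encode()).digest()
    return int.from_bytes(digest[:4], "big") >> (32 - BUCKET_BITS)


def entry_tag(username: str, password: str) -> bytes:
    """Stand-in tag for a credential pair (the real protocol uses an OPRF)."""
    return hashlib.sha256(f"{username}\x00{password}".encode()).digest()


def build_buckets(breach):
    """Server side: group breached credential tags by username bucket."""
    buckets = {}
    for username, password in breach:
        buckets.setdefault(bucket_id(username), set()).add(entry_tag(username, password))
    return buckets


def server_lookup(buckets, bucket: int):
    """Server side: only the bucket identifier is revealed by the query."""
    return buckets.get(bucket, set())


def client_check(username: str, password: str, buckets) -> bool:
    """Client side: fetch one bucket and test membership locally."""
    candidates = server_lookup(buckets, bucket_id(username))
    return entry_tag(username, password) in candidates


if __name__ == "__main__":
    breach = [("alice@example.com", "sunshine"), ("bob@example.com", "hunter2")]
    buckets = build_buckets(breach)
    print(client_check("alice@example.com", "sunshine", buckets))
    print(client_check("alice@example.com", "moonlight", buckets))
```

Because many usernames share each prefix, the server learns only which bucket was queried. Handing the client a bucket of plain hashes, as this stand-in does, would let the client brute-force other entries offline, which is one reason a private membership test is used instead.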

Unlike previous compromised credential check services, MIGP also enables credential tweaking checks by augmenting the original breach dataset with a set of password “variants”. For each leaked password, it generates a list of password variants, which are labeled as such to differentiate them from the original leaked password and added to the original dataset. For more information, you can check out the Cloudflare Research blog post detailing our open-source implementation and deployment of the MIGP protocol.
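As a rough illustration of the augmentation step, the Python sketch below expands each leaked password into a few variants and labels every entry. The tweak rules, the labels, and the function names are hypothetical; they are not the variant-generation rules used by MIGP.

```python
def variants(password: str) -> set:
    """Generate a few simple tweaks of a password (hypothetical rules)."""
    candidates = {
        password.capitalize(),
        password + "1",
        password + "!",
        password + "123",
    }
    if password[-1:].isdigit():
        candidates.add(password[:-1])  # drop a trailing digit
    candidates.discard(password)       # a variant must differ from the original
    return candidates


def augment(breach) -> dict:
    """Label each leaked pair 'exact' and each generated tweak 'similar'."""
    labeled = {}
    for username, password in breach:
        for variant in variants(password):
            labeled.setdefault((username, variant), "similar")
    # Written last so that a genuinely leaked pair always wins over a variant.
    for username, password in breach:
        labeled[(username, password)] = "exact"
    return labeled


def check(labeled: dict, username: str, password: str) -> str:
    """Look up a credential pair in the augmented dataset."""
    return labeled.get((username, password), "not found")


if __name__ == "__main__":
    dataset = augment([("alice", "sunshine")])
    print(check(dataset, "alice", "sunshine"))
    print(check(dataset, "alice", "sunshine1"))
    print(check(dataset, "alice", "moonlight"))
```

Keeping the label alongside each entry lets the checking service distinguish "this exact password was leaked" from "a password close to this one was leaked", at the cost of a dataset several times larger than the original breach.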

Measuring Credential Compromises

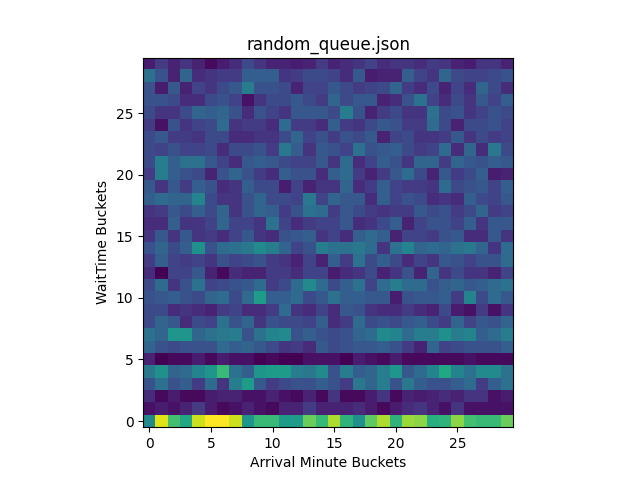

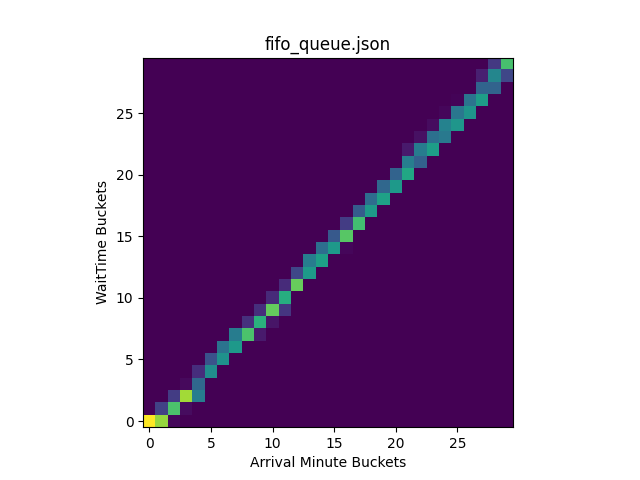

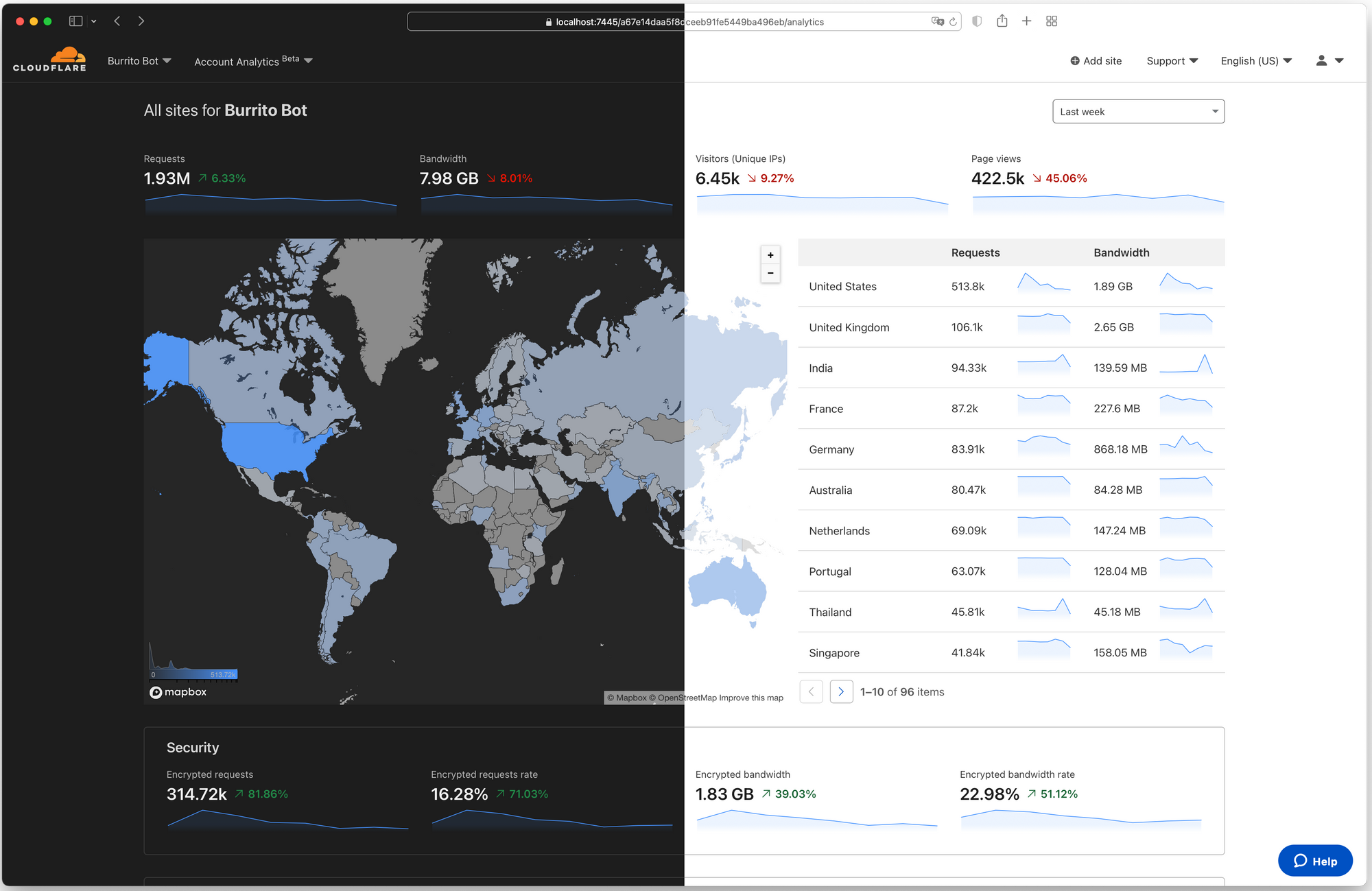

The question remains, just how important are these exposed credential checks for detecting and preventing credential stuffing attacks in practice? To answer this question, the Research Team has initiated a study investigating login requests to our own Cloudflare dashboard. For this study, we are collecting the data logged by Cloudflare’s Exposed Credential Check feature (described above), designed to be privacy-preserving: this check does not reveal a password, but provides a “yes/no” response on whether the submitted credentials appear in our breach dataset. Along with this signal, we are looking at other fields that may be indicative of malicious behavior such as bot score and IP reputation. As this project develops, we plan to cluster the data to find patterns of different types of credential stuffing attacks that we can generalize to form attack fingerprints. We can then feed these fingerprints into the alert logs for the Cloudflare Detection & Response team to see if they provide useful information for the security analysts.

Additionally, we hope to investigate potential post-compromise behavior as it relates to these compromise check fields. After an attacker successfully hijacks an account, they may take a number of actions such as changing the password, revoking all valid access tokens, or setting up a malicious script. By analyzing compromised credential checks along with these signals, we may be able to better differentiate benign from malicious behavior.

Future directions: OPAQUE and MIGP combined

This post has discussed how we’re approaching the problem of preventing credential stuffing attacks from two different angles. Through the deployment and analysis of compromised credential checks, we aim to prevent server compromise by detecting and preventing credential stuffing attacks before they happen. In addition, in the case that a server does get compromised, the wider use of OPAQUE would help address the problem of leaking passwords to an attacker by avoiding the reception and storage of plaintext passwords on the server as well as preventing precomputation attacks.

However, there are still remaining research challenges to address. Notably, the current method for interfacing with MIGP still requires the server to either pass along a plaintext version of the client’s password, or trust the client to honestly communicate with the MIGP service on behalf of the server. If we want to leverage the security guarantees of OPAQUE (or generally an saPAKE) with the analytics and alert system provided by MIGP in a privacy-preserving way, we need additional mechanisms.

At first glance, the privacy-preserving goals of both protocols seem to be perfect matches for each other. Both OPAQUE and MIGP are built upon the idea of replacing the traditional salted password hashes with an OPRF as a way of keeping the client’s plaintext passwords from ever leaving their device. However, the interfaces of both protocols rely on user-provided inputs that aren’t cryptographically tied to each other. This allows an attacker to provide a false password to MIGP while providing their actual password to the OPAQUE server. Further, the security analyses of both protocols assume that their idealized building blocks are separated in an important way. This isn’t to say that the two protocols are incompatible; indeed, much of both protocols may be salvaged.

The next stage for password privacy will be an integration of these two protocols, so that a server can be made aware of credential stuffing attacks and of patterns of compromised account usage while providing the same privacy guarantees that OPAQUE does. That awareness helps protect a server against the compromise of other servers. Our goal is to allow you to protect yourself from other compromised servers while protecting your clients from compromise of your server. Stay tuned for updates!

We’re always keen to collaborate with others to build more secure systems, and would love to hear from those interested in password research. You can reach us with questions, comments, and research ideas at [email protected]. For those interested in joining our team, please visit our Careers Page.

…

¹ There are other ways of constructing saPAKE protocols. The curious reader can see this CRYPTO 2019 paper for details.