Post Syndicated from Crosstalk Solutions original https://www.youtube.com/watch?v=oyp-m4VzxwQ

Shellye Archambeau | Unapologetically Ambitious | Talks at Google

Post Syndicated from Talks at Google original https://www.youtube.com/watch?v=E0PDepwHsh8

Devuan 5.0.0 released

Post Syndicated from corbet original https://lwn.net/Articles/941672/

Version 5.0 (“Daedalus”) of the Debian-based Devuan distribution has been released. “This is the result of many months of painstaking work by the Team and detailed testing by the wider Devuan community.” The announcement lists a couple of new features but mostly defers to the Debian 12 (“bookworm”) release notes.

Use Amazon Athena to query data stored in Google Cloud Platform

Post Syndicated from Jonathan Wong original https://aws.amazon.com/blogs/big-data/use-amazon-athena-to-query-data-stored-in-google-cloud-platform/

As customers accelerate their migrations to the cloud and transform their businesses, some find themselves in situations where they have to manage data analytics in a multi-cloud environment, such as acquiring a company that runs on a different cloud provider. Customers who use multi-cloud environments often face challenges in data access and compatibility that can create roadblocks and slow down productivity.

When managing multi-cloud environments, customers must look for services that address these gaps through features providing interoperability across clouds. Athena can query relational, non-relational, object, and custom data sources, and with the release of the Amazon Athena data source connector for Google Cloud Storage (GCS), you can run queries from within AWS against data stored in Google Cloud Storage in Parquet or comma-separated values (CSV) format. Athena provides the connectivity and query interface and can be plugged into other AWS services for downstream use cases such as interactive analysis and visualization. Examples include AWS data analytics services such as AWS Glue for data integration and Amazon QuickSight for business intelligence (BI), as well as third-party software and services from AWS Marketplace.

This post demonstrates how to use Athena to run queries on Parquet or CSV files in a GCS bucket.

Solution overview

The following diagram illustrates the solution architecture.

The Athena Google Cloud Storage connector uses both AWS and Google Cloud Platform (GCP), so the architecture diagram references both cloud providers.

We use the following AWS services in this solution:

- Amazon Athena – A serverless interactive analytics service. We use Athena to run queries on data stored on Google Cloud Storage.

- AWS Lambda – A serverless compute service that is event driven and manages the underlying resources for you. We deploy a Lambda function data source connector to connect AWS with Google Cloud Platform.

- AWS Secrets Manager – A secrets management service that helps protect access to your applications and services. We reference the secret in Secrets Manager in the Lambda function so that queries run on AWS can access the data stored on Google Cloud Platform.

- AWS Glue – A serverless data analytics service for data discovery, preparation, and integration. We create an AWS Glue database and table to point to the correct bucket and files within Google Cloud Storage.

- Amazon Simple Storage Service (Amazon S3) – An object storage service that stores data as objects within buckets. We create an S3 bucket to store data that exceeds the Lambda function’s response size limits.

The Google Cloud Platform portion of the architecture contains a few services as well:

- Google Cloud Storage – A managed service for storing unstructured data. We use Google Cloud Storage to store data within a bucket that will be used in a query from Athena, and we upload a CSV file directly to the GCS bucket.

- Google Cloud Identity and Access Management (IAM) – The central source to control and manage visibility for cloud resources. We use Google Cloud IAM to create a service account and generate a key for it. The key, which we upload to Secrets Manager, allows AWS to access GCP.

Prerequisites

For this post, we create a VPC and security group that will be used in conjunction with the GCP connector. For complete steps, refer to Creating a VPC for a data source connector. The first step is to create the VPC using Amazon Virtual Private Cloud (Amazon VPC), as shown in the following screenshot.

Then we create a security group for the VPC, as shown in the following screenshot.

For more information about the prerequisites, refer to Amazon Athena Google Cloud Storage connector. That page also includes tables of the data types supported for CSV and Parquet files, as well as the permissions required to run the solution.

Google Cloud Platform configuration

To begin, you must have either CSV or Parquet files stored within a GCS bucket. To create the bucket, refer to Create buckets. Make sure to note the bucket name—it will be referenced in a later step. After you create the bucket, upload your objects to the bucket. For instructions, refer to Upload objects from a filesystem.

The CSV data used in this example came from Mockaroo, which generated random test data as shown in the following screenshot. In this example, we use a CSV file, but you can also use Parquet files.

Additionally, you must create a service account to generate a key pair within Google Cloud IAM, which will be uploaded to Secrets Manager. For full instructions, refer to Create service accounts.

After you create the service account, you can create a key. For instructions, refer to Create and delete service account keys.

AWS configuration

Now that you have a GCS bucket with a CSV file and a generated JSON key file from Google Cloud Platform, you can proceed with the rest of the steps on AWS.

- On the Secrets Manager console, choose Secrets in the navigation pane.

- Choose Store a new secret and specify Other type of secret.

- Provide the GCP generated key file content.
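As a minimal sketch of the secret step above, the following Python checks that a file looks like a Google Cloud service account key before its content is stored. It assumes the standard fields of a service account JSON key (type, project_id, private_key, client_email); the secret name in the usage note is hypothetical. It only builds the arguments for boto3's Secrets Manager create_secret call and does not contact AWS.

```python
import json

# Fields found in a Google Cloud service account JSON key file.
REQUIRED_KEY_FIELDS = ("type", "project_id", "private_key", "client_email")


def build_secret_request(secret_name: str, key_file_text: str) -> dict:
    """Validate a GCP service account key and return create_secret kwargs.

    The returned dict matches the Name/SecretString keyword arguments of
    boto3's Secrets Manager create_secret call. No AWS call is made here.
    """
    key = json.loads(key_file_text)  # raises ValueError on malformed JSON
    if not isinstance(key, dict):
        raise ValueError("key file must contain a JSON object")
    missing = [field for field in REQUIRED_KEY_FIELDS if field not in key]
    if missing:
        raise ValueError(f"key file is missing fields: {missing}")
    if key["type"] != "service_account":
        raise ValueError("expected a service account key")
    # Store the key file content unchanged, as in the console steps.
    return {"Name": secret_name, "SecretString": key_file_text}
```

With boto3 installed and credentials configured, you could pass the result as boto3.client("secretsmanager").create_secret(**build_secret_request("gcs-connector-key", text)). Validating locally first catches a wrong file (for example, a user credential instead of a service account key) before the connector fails at query time.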

The next step is to deploy the Athena Google Cloud Storage connector. For more information, refer to Using the Athena console.

- On the Athena console, add a new data source.

- Select Google Cloud Storage.

- For Data source name, enter a name.

- For Lambda function, choose Create Lambda function to be redirected to the Lambda console.

- In the Application settings section, enter the information for Application name, SpillBucket, GCSSecretName, and LambdaFunctionName.

- You also have to create an S3 bucket for the SpillBucket parameter; the connector uses it to store data that exceeds the Lambda function’s response size limits. For more information, refer to Create your first S3 bucket.

After you provide the Lambda function’s application settings, you’re redirected to the Review and create page.

- Confirm that these are the correct fields and choose Create data source.

Now that the data source connector has been created, you can connect Athena to the data source.

- On the Athena console, navigate to the data source.

- Under Data source details, choose the link for the Lambda function.

You can reference the Lambda function to connect to the data source. As an optional step and for validation, the variables that were put into the Lambda function can be found within the Lambda function’s environment variables on the Configuration tab.

- Because the built-in GCS connector schema inference capability is limited, it’s recommended to create an AWS Glue database and table for your metadata. For instructions, refer to Setting up databases and tables in AWS Glue.

The following screenshot shows our database details.

The following screenshot shows our table details.

Query the data

Now you can run queries on Athena that will access the data stored on Google Cloud Storage.

- On the Athena console, choose the correct data source, database, and table within the query editor.

- Run SELECT * FROM [AWS Glue Database name].[AWS Glue Table name] in the query editor.
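The query above can also be built programmatically. The following Python sketch quotes the AWS Glue database and table names with double quotes, as Athena's SQL dialect expects for identifiers in SELECT statements; the database and table names in the usage note are hypothetical.

```python
from typing import Optional


def quote_identifier(name: str) -> str:
    """Double-quote an identifier, doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def select_all(database: str, table: str, limit: Optional[int] = None) -> str:
    """Build a SELECT * query against an AWS Glue database and table."""
    sql = f"SELECT * FROM {quote_identifier(database)}.{quote_identifier(table)}"
    if limit is not None:
        sql += f" LIMIT {int(limit)}"  # int() guards against injected text
    return sql
```

For example, select_all("gcs_demo_db", "mock_customers", limit=10) returns SELECT * FROM "gcs_demo_db"."mock_customers" LIMIT 10, which you could paste into the query editor or submit through the Athena StartQueryExecution API with the GCS data source selected as the catalog.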

As shown in the following screenshot, the results will be from the bucket on Google Cloud Storage.

The data that is stored on Google Cloud Platform can be accessed through AWS and used for many use cases, such as performing business intelligence, machine learning, or data science. Doing so can help unblock developers and data scientists so they can efficiently provide results and save time.

Clean up

Complete the following steps to clean up your resources:

- Delete the provisioned bucket in Google Cloud Storage.

- Delete the service account under IAM & Admin.

- Delete the secret containing the GCP credentials in Secrets Manager.

- Delete the S3 spill bucket.

- Delete the Athena connector Lambda function.

- Delete the AWS Glue database and table.

Troubleshooting

If you receive a ROLLBACK_COMPLETE state and a “can not be updated” error when creating the data source in Lambda, go to AWS CloudFormation, delete the CloudFormation stack, and try recreating it.

If the AWS Glue table doesn’t appear in the Athena query editor, verify that the data source and database values are correctly selected in the Data pane on the Athena query editor console.

Conclusion

In this post, we saw how you can minimize the time and effort required to access data on Google Cloud Platform and use it efficiently on AWS. Using the data connector helps organizations become multi-cloud agnostic and helps accelerate business growth. Additionally, you can build out BI applications with the discoveries, relationships, and insights found when analyzing the data, which can further your organization’s data analysis process.

About the Author

Jonathan Wong is a Solutions Architect at AWS assisting with initiatives within Strategic Accounts. He is passionate about solving customer challenges and has been exploring emerging technologies to accelerate innovation.


Welcome Chris Opat, Senior Vice President of Cloud Operations

Post Syndicated from Patrick Thomas original https://www.backblaze.com/blog/welcome-chris-opat-senior-vice-president-of-cloud-operations/

Backblaze is happy to announce that Chris Opat has joined our team as senior vice president of cloud operations. Chris will oversee the strategy and operations of the Backblaze global cloud storage platform.

What Chris Brings to Backblaze

Chris expands the company’s leadership by bringing his impressive cloud and infrastructure knowledge with more than 25 years of industry experience.

Previously, Chris served as senior vice president leading platform engineering and operations at StackPath, a specialized provider in edge technology and content delivery. He also held leadership roles at CyrusOne, CompuCom, Cloudreach, and Bear Stearns/JPMorgan. Chris earned his Bachelor of Science degree in television and digital media production from Ithaca College.

Backblaze CEO, Gleb Budman, shared that Chris is a forward-thinking cloud leader with a proven track record of leading teams that are clever and bold in solving problems and creating best-in-class experiences for customers. His expertise and approach will be pivotal as more customers move to an open cloud ecosystem and will help advance Backblaze’s cloud strategy as we continue to grow.

Chris’ Role as SVP of Cloud Operations

As SVP of Cloud Operations, Chris oversees cloud strategy, platform engineering, and technology infrastructure, enabling Backblaze to further scale capacity and improve performance to meet larger-sized customers’ needs, as we continue to see success in moving up-market.

Chris says of his new role at Backblaze:

Backblaze’s vision and mission resonate with me. I’m proud to be joining a company that is supporting customers and advocating for an open cloud ecosystem. I’m looking forward to working with the amazing team at Backblaze as we continue to scale with our customers and accelerate growth.

The post Welcome Chris Opat, Senior Vice President of Cloud Operations appeared first on Backblaze Blog | Cloud Storage & Cloud Backup.

Integrating IBM MQ with Amazon SQS and Amazon SNS using Apache Camel

Post Syndicated from Pascal Vogel original https://aws.amazon.com/blogs/compute/integrating-ibm-mq-with-amazon-sqs-and-amazon-sns-using-apache-camel/

This post is written by Joaquin Rinaudo, Principal Security Consultant and Gezim Musliaj, DevOps Consultant.

IBM MQ is a message-oriented middleware (MOM) product used by many enterprise organizations, including global banks, airlines, and healthcare and insurance companies.

Customers often ask us for guidance on how they can integrate their existing on-premises MOM systems with new applications running in the cloud. They’re looking for a cost-effective, scalable and low-effort solution that enables them to send and receive messages from their cloud applications to these messaging systems.

This blog post shows how to set up a bi-directional bridge from on-premises IBM MQ to Amazon MQ, Amazon Simple Queue Service (Amazon SQS), and Amazon Simple Notification Service (Amazon SNS).

This allows your producer and consumer applications to integrate using fully managed AWS messaging services and Apache Camel. Learn how to deploy such a solution and how to test the running integration using SNS, SQS, and a demo IBM MQ cluster environment running on Amazon Elastic Container Service (ECS) with AWS Fargate.

This solution can also be used as part of a step-by-step migration using the approach described in the blog post Migrating from IBM MQ to Amazon MQ using a phased approach.

Solution overview

The integration consists of an Apache Camel broker cluster that bi-directionally integrates an IBM MQ system with target systems, such as Amazon MQ running ActiveMQ, SNS topics, or SQS queues.

In the following example, AWS services, in this case AWS Lambda and SQS, receive messages published to IBM MQ via an SNS topic:

- The cloud message consumers (Lambda and SQS) subscribe to the solution’s target SNS topic.

- The Apache Camel broker connects to IBM MQ using secrets stored in AWS Secrets Manager and reads new messages from the queue using IBM MQ’s Java library. Only IBM MQ messages are supported as a source.

- The Apache Camel broker publishes these new messages to the target SNS topic. It uses the Amazon SNS Extended Client Library for Java to store any messages larger than 256 KB in an Amazon Simple Storage Service (Amazon S3) bucket.

- Apache Camel stores any message that cannot be delivered to SNS after two retries in an S3 dead letter queue bucket.
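The extended client libraries implement what is often called the claim-check pattern: small payloads travel inline, while large ones are stored in S3 and only a reference is published. The following Python sketch illustrates that decision under simplifying assumptions; it is not the library's API, a dict stands in for the S3 bucket, and the object key layout is hypothetical.

```python
import uuid
from dataclasses import dataclass
from typing import Dict, Optional

# SNS and SQS accept payloads of up to 256 KB (262,144 bytes).
# This sketch ignores message attributes, which also count toward the limit.
MAX_INLINE_BYTES = 256 * 1024


@dataclass
class OutboundMessage:
    body: Optional[bytes]   # payload sent inline, or None if stored
    s3_key: Optional[str]   # key of the stored payload, or None if inline


def route_payload(payload: bytes, bucket: Dict[str, bytes]) -> OutboundMessage:
    """Send small payloads inline; store large ones and send a reference."""
    if len(payload) <= MAX_INLINE_BYTES:
        return OutboundMessage(body=payload, s3_key=None)
    key = f"large-messages/{uuid.uuid4()}"  # hypothetical key layout
    bucket[key] = payload  # stands in for an S3 put_object call
    return OutboundMessage(body=None, s3_key=key)
```

The consumer side reverses the decision: if a message carries a reference instead of a body, it fetches the object from the bucket before processing.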

The next diagram demonstrates how the solution sends messages back from an SQS queue to IBM MQ:

- A sample message producer using Lambda sends messages to an SQS queue. It uses the Amazon SQS Extended Client Library for Java to send messages larger than 256 KB.

- The Apache Camel broker receives the messages published to SQS, using the SQS Extended Client Library if needed.

- The Apache Camel broker sends the message to the IBM MQ target queue.

- As before, the broker stores messages that cannot be delivered to IBM MQ in the S3 dead letter queue bucket.
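The retry-then-dead-letter behavior described above can be sketched as follows. This is an illustration in Python, not Apache Camel's error handler configuration; it assumes one initial attempt plus two retries, and a list stands in for the S3 dead letter queue bucket.

```python
from typing import Callable, List


def deliver_with_dead_letter(message: str,
                             send: Callable[[str], None],
                             dead_letters: List[str],
                             retries: int = 2) -> bool:
    """Attempt delivery once plus `retries` retries; dead-letter on failure.

    Returns True if `send` succeeded, or False after appending the message
    to `dead_letters` (a stand-in for the S3 dead letter queue bucket).
    """
    for _ in range(1 + retries):
        try:
            send(message)
            return True
        except Exception:
            # A production broker would log the error and back off here.
            continue
    dead_letters.append(message)
    return False
```

In a real deployment, retries would typically include a backoff delay, and the dead-lettered object would carry enough metadata (for example, the source queue) to replay it later.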

A phased live migration consists of two steps:

- Deploy the broker service to allow reading messages from and writing to existing IBM MQ queues.

- Once the consumer or producer is migrated, migrate its counterpart to the newly selected service (SNS or SQS).

Next, you will learn how to set up the solution using the AWS Cloud Development Kit (AWS CDK).

Deploying the solution

Prerequisites

- AWS CDK

- TypeScript

- Java

- Docker

- Git

- Yarn

Step 1: Cloning the repository

Clone the repository using git:

git clone https://github.com/aws-samples/aws-ibm-mq-adapter

Step 2: Setting up test IBM MQ credentials

This demo uses IBM MQ’s mutual TLS authentication. To do this, you must generate X.509 certificates and store them in AWS Secrets Manager by running the following commands in the app folder:

- Generate X.509 certificates:

./deploy.sh generate_secrets

- Set up the secrets required for the Apache Camel broker (replace <integration-name> with, for example, dev):

./deploy.sh create_secrets broker <integration-name>

- Set up secrets for the mock IBM MQ system:

./deploy.sh create_secrets mock

- Update the cdk.json file with the secrets ARN output from the previous commands:

IBM_MOCK_PUBLIC_CERT_ARN
IBM_MOCK_PRIVATE_CERT_ARN
IBM_MOCK_CLIENT_PUBLIC_CERT_ARN
IBMMQ_TRUSTSTORE_ARN
IBMMQ_TRUSTSTORE_PASSWORD_ARN
IBMMQ_KEYSTORE_ARN
IBMMQ_KEYSTORE_PASSWORD_ARN

If you are using your own IBM MQ system and already have X.509 certificates available, you can use the script to upload those certificates to AWS Secrets Manager.

Step 3: Configuring the broker

The solution deploys two brokers, one to read messages from the test IBM MQ system and one to send messages back. A separate Apache Camel cluster is used per integration to make better use of Auto Scaling functionality and to avoid issues across different integration operations (reading messages from IBM MQ and sending messages back).

Update the cdk.json file with the following values:

- accountId: AWS account ID to deploy the solution to.

- region: name of the AWS Region to deploy the solution to.

- defaultVPCId: specify a VPC ID for an existing VPC in the AWS account where the broker and mock are deployed.

- allowedPrincipals: add your account ARN (e.g., arn:aws:iam::123456789012:root) to allow this AWS account to send messages to and receive messages from the broker. You can use this parameter to set up cross-account relationships for both SQS and SNS integrations and support multiple consumers and producers.
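Before deploying, it can help to sanity-check these values. The following Python sketch validates a dict of the four settings; it assumes allowedPrincipals is a list of IAM ARNs (account root, role, or user), which may not match exactly how the repository reads cdk.json, and loading the dict from the file is left to you.

```python
import re
from typing import Dict, List

ACCOUNT_ID = re.compile(r"\d{12}")
REGION = re.compile(r"[a-z]{2}(-[a-z]+)+-\d+")         # e.g. eu-west-1
VPC_ID = re.compile(r"vpc-([0-9a-f]{8}|[0-9a-f]{17})")  # short or long IDs
# Account root, role, or user ARNs, e.g. arn:aws:iam::123456789012:root.
PRINCIPAL = re.compile(r"arn:aws:iam::\d{12}:(root|role/.+|user/.+)")


def validate_config(cfg: Dict[str, object]) -> List[str]:
    """Return a list of problems with the broker settings (empty if none)."""
    errors = []
    if not ACCOUNT_ID.fullmatch(str(cfg.get("accountId", ""))):
        errors.append("accountId must be a 12-digit AWS account ID")
    if not REGION.fullmatch(str(cfg.get("region", ""))):
        errors.append("region must look like eu-west-1")
    if not VPC_ID.fullmatch(str(cfg.get("defaultVPCId", ""))):
        errors.append("defaultVPCId must look like vpc-0a1b2c3d")
    principals = cfg.get("allowedPrincipals")
    if not isinstance(principals, list) or not principals:
        errors.append("allowedPrincipals must be a non-empty list")
    else:
        for principal in principals:
            if not PRINCIPAL.fullmatch(str(principal)):
                errors.append(f"unrecognized principal: {principal}")
    return errors
```

Returning all problems at once, rather than stopping at the first, makes it easier to fix a freshly edited cdk.json in a single pass.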

Step 4: Bootstrapping and deploying the solution

- Make sure you have the correct AWS_PROFILE and AWS_REGION environment variables set for your development account.

- Run yarn install to install CDK dependencies.

- Run yarn cdk bootstrap --qualifier mq aws://<account-id>/<region> to bootstrap CDK.

- Finally, execute yarn cdk deploy '*-dev' --qualifier mq --require-approval never to deploy the solution to the dev environment.

Step 5: Testing the integrations

Use AWS Systems Manager Session Manager and port forwarding to establish tunnels to the test IBM MQ instance to access the web console and send messages manually. For more information on port forwarding, see Amazon EC2 instance port forwarding with AWS Systems Manager.

- In a command line terminal, make sure you have the correct AWS_PROFILE and AWS_REGION environment variables set for your development account.

- In addition, set the following environment variables:

  - IBM_ENDPOINT: endpoint for IBM MQ. Example: network load balancer for the IBM MQ mock, mqmoc-mqada-1234567890.elb.eu-west-1.amazonaws.com.

  - BASTION_ID: instance ID for the bastion host. You can retrieve this value from the outputs listed after the mqBastionStack deployment in Step 4: Bootstrapping and deploying the solution.

Use the following command to set the environment variables:

export IBM_ENDPOINT=mqmoc-mqada-1234567890.elb.eu-west-1.amazonaws.com
export BASTION_ID=i-0a1b2c3d4e5f67890

- Run the script test/connect.sh.

- Log in to the IBM web console via https://127.0.0.1:9443/admin using the default IBM user (admin) and the password stored in AWS Secrets Manager as mqAdapterIbmMockAdminPassword.

Sending data from IBM MQ and receiving it in SNS:

- In the IBM MQ console, access the local queue manager QM1 and DEV.QUEUE.1.

- Send a message with the content Hello AWS. This message will be processed by AWS Fargate and published to SNS.

- Access the SQS console and choose the queue with the snsIntegrationStack-dev-2 prefix. This is an SQS queue subscribed to the SNS topic for testing.

- Select Send and receive messages.

- Select Poll for messages to see the Hello AWS message previously sent to IBM MQ.

Sending data back from Amazon SQS to IBM MQ:

- Access the SQS console and choose the queue with the prefix sqsPublishIntegrationStack-dev-3-dev.

- Select Send and receive messages.

- For Message Body, add Hello from AWS.

- Choose Send message.

- In the IBM MQ console, access the local queue manager QM1 and DEV.QUEUE.2 to find your message listed under this queue.

Step 6: Cleaning up

Run cdk destroy '*-dev' to destroy the resources deployed as part of this walkthrough.

Conclusion

In this blog post, you learned how you can exchange messages between IBM MQ and your cloud applications using Amazon SQS and Amazon SNS.

If you’re interested in getting started with your own integration, follow the README file in the GitHub repository. If you’re migrating existing applications using industry-standard APIs and protocols such as JMS, NMS, or AMQP 1.0, consider integrating with Amazon MQ using the steps provided in the repository.

If you’re interested in running Apache Camel in Kubernetes, you can also adapt the architecture to use Apache Camel K instead.

For more serverless learning resources, visit Serverless Land.

Maintainers Summit call for topics

Post Syndicated from corbet original https://lwn.net/Articles/941660/

The 2023 Maintainers Summit will be held on November 16 in Richmond, VA, immediately after the Linux Plumbers Conference.

As in previous years, the Maintainers Summit is invite-only, where the primary focus will be process issues around Linux Kernel Development. It will be limited to 30 invitees and a handful of sponsored attendees.

The call for topics has just gone out, with the first invitations to be sent within a couple of weeks or so.

Security updates for Tuesday

Post Syndicated from corbet original https://lwn.net/Articles/941658/

Security updates have been issued by Debian (samba), Red Hat (.NET 6.0, .NET 7.0, rh-dotnet60-dotnet, rust, rust-toolset-1.66-rust, and rust-toolset:rhel8), and SUSE (kernel and opensuse-welcome).

THG Podcast: Age of Airships

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=JRq4KnsfO7I

Digital making with Raspberry Pis in primary schools in Sarawak, Malaysia

Post Syndicated from Jenni Fletcher-McGrady original https://www.raspberrypi.org/blog/computing-education-primary-schools-sarawak-malaysia/

Dr Sue Sentance, Director of our Raspberry Pi Computing Education Research Centre at the University of Cambridge, shares what she learned on a recent visit to Malaysia to understand more about the approach taken to computing education in the state of Sarawak.

Computing education is a challenge around the world, and it is fascinating to see how different countries and education systems approach it. I recently had the opportunity to attend an event organised by the government of Sarawak, Malaysia, to see first-hand what learners and teachers are achieving thanks to the state’s recent policies.

Raspberry Pis and training for Sarawak’s primary schools

In Sarawak, the largest state of Malaysia, the local Ministry of Education, Innovation and Talent Development is funding an ambitious project through which all of Sarawak’s primary schools are receiving sets of Raspberry Pis. Learners use these as desktop computers and to develop computer science skills and knowledge, including the skills to create digital making projects.

Crucially, the ministry is combining this hardware distribution initiative with a three-year programme of professional development for primary school teachers. They receive training known as the Raspberry Pi Training Programme, which starts with Scratch programming and incorporates elements of physical computing with the Raspberry Pis and sensors.

To date the project has provided 9436 kits (including Raspberry Pi computer, case, monitor, mouse, and keyboard) to schools, and training for over 1200 teachers.

The STEM Trailblazers event

In order to showcase what has been achieved through the project so far, students and teachers were invited to use their schools’ Raspberry Pis to create projects to prototype solutions to real problems faced by their communities, and to showcase these projects at a special STEM Trailblazers event.

Geographically, Sarawak is Malaysia’s largest state, but it has a much smaller population than the west of the country. This means that towns and villages are very spread out and teachers and students had large distances to travel to attend the STEM Trailblazers event. To partially address this, the event was held in two locations simultaneously, Kuching and Miri, and talks were live-streamed between both venues.

STEM Trailblazers featured a host of talks from people involved in the initiative. I was very honoured to be invited as a guest speaker, representing both the University of Cambridge and the Raspberry Pi Foundation as the Director of the Raspberry Pi Computing Education Research Centre.

Solving real-world problems

The Raspberry Pi projects at STEM Trailblazers were entered into a competition, with prizes for students and teachers. Most projects had been created using Scratch to control the Raspberry Pi as well as a range of sensors.

The children and teachers who participated came from both rural and urban areas, and it was clear that the issues they had chosen to address were genuine problems in their communities.

Many of the projects I saw related to issues that schools faced around heat and hydration: a Smart Bottle project reminded children to drink regularly, a shade creator project created shade when the temperature got too high, a teachers’ project told students that they could no longer play outside when the temperature exceeded 35 degrees, and a water cooling system project set off sprinklers when the temperature rose. Other themes of the projects were keeping toilets clean, reminding children to eat healthily, and helping children to learn the alphabet. One project that especially intrigued me was an alert system for large and troublesome birds that were a problem for rural schools.

The creativity and quality of the projects on show were impressive, given that all the students (and many of their teachers) had learned to program only recently and had also needed to be quite innovative where they hadn’t been able to access all the hardware they needed to build their creations.

What we can learn from this initiative

Everyone involved in this project in Sarawak — including teachers, government representatives, university academics, and industry partners — is really committed to giving children the best opportunities to grow up with an understanding of digital technology. They know this is essential for their professional futures, and also fosters their creativity, independence, and problem-solving skills.

Over the last ten years, I’ve been fortunate enough to travel widely in my capacity as a computing education researcher, and I’ve seen first-hand a number of the approaches countries are taking to help their young people gain the skills and understanding of computing technologies that they need for their futures.

It’s good for us to look beyond our own context to understand how countries across the world are preparing their young people to engage with digital technology. No matter how many similarities there are between two places, we can all learn from each other’s initiatives and ideas. In 2021 the Brookings Institution published a global review of how countries are progressing with this endeavour. Organisations such as UNESCO and WEF regularly publish reports that emphasise the importance of countries developing their citizens’ digital skills, as well as advanced technological skills.

The Sarawak government’s initiative is grounded in the use of Raspberry Pis as desktop computers for schools, which run offline where schools have no access to the internet. That teachers are also trained to use the Raspberry Pis to support learners to develop hands-on digital making skills is a really important aspect of the project.

Our commercial subsidiary Raspberry Pi Limited works with a network of Approved Resellers around the globe; in this case, the Malaysian reseller Cytron has been an enormous support in supplying Sarawak’s primary schools with Raspberry Pis and other hardware.

Schools anywhere in the world can also access the Raspberry Pi Foundation’s free learning and teaching resources, such as curriculum materials, online training courses for teachers, and our magazine for educators, Hello World. We are very proud to support the work being done in Sarawak.

As for what the future holds for Sarawak’s computing education, at the opening ceremony of the STEM Trailblazers event, the Deputy Minister announced that the event will be an annual occasion. That means every year more students and teachers will be able to come together, share their learning, and get excited about using digital making to solve the problems that matter to them.

The post Digital making with Raspberry Pis in primary schools in Sarawak, Malaysia appeared first on Raspberry Pi Foundation.

Zoom Can Spy on Your Calls and Use the Conversation to Train AI, But Says That It Won’t

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2023/08/zoom-can-spy-on-your-calls-and-use-the-conversation-to-train-ai-but-says-that-it-wont.html

This is why we need regulation:

Zoom updated its Terms of Service in March, spelling out that the company reserves the right to train AI on user data with no mention of a way to opt out. On Monday, the company said in a blog post that there’s no need to worry about that. Zoom execs swear the company won’t actually train its AI on your video calls without permission, even though the Terms of Service still say it can.

Of course, these are Terms of Service. They can change at any time. Zoom can renege on its promise at any time. There are no rules, only the whims of the company as it tries to maximize its profits.

It’s a stupid way to run a technological revolution. We should not have to rely on the benevolence of for-profit corporations to protect our rights. It’s not their job, and it shouldn’t be.

Comic for 2023.08.15 – It’s A Boy

Post Syndicated from Explosm.net original https://explosm.net/comics/its-a-boy

New Cyanide and Happiness Comic

Streamlining Grab’s Segmentation Platform with faster creation and lower latency

Post Syndicated from Grab Tech original https://engineering.grab.com/streamlining-grabs-segmentation-platform

Launched in 2019, Segmentation Platform has been Grab’s one-stop platform for user segmentation and audience creation across all business verticals. User segmentation is the process of dividing passengers, driver-partners, or merchant-partners (users) into sub-groups (segments) based on certain attributes. Segmentation Platform empowers Grab’s teams to create segments using attributes available within our data ecosystem and provides APIs for downstream teams to retrieve them.

Checking whether a user belongs to a segment (Membership Check) influences many critical flows on the Grab app:

- When a passenger launches the Grab app, our in-house experimentation platform will tailor the app experience based on the segments the passenger belongs to.

- When a driver-partner goes online on the Grab app, the Drivers service calls Segmentation Platform to ensure that the driver-partner is not blacklisted.

- When launching marketing campaigns, Grab’s communications platform relies on Segmentation Platform to determine which passengers, driver-partners, or merchant-partners to send communication to.

This article peeks into the current design of Segmentation Platform and how the team optimised the way segments are stored to reduce read latency, thus unlocking new segmentation use cases.

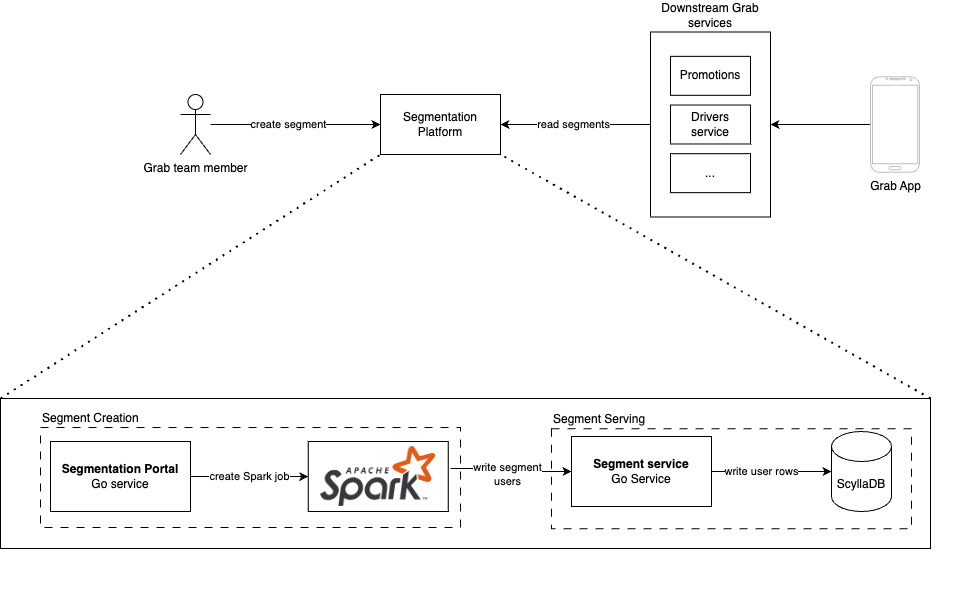

Architecture

Segmentation Platform comprises two major subsystems:

- Segment creation

- Segment serving

Segment creation

Segment creation is powered by Spark jobs. When a Grab team creates a segment, a Spark job is started to retrieve data from our data lake. After the data is retrieved, cleaned, and validated, the Spark job calls the serving subsystem to populate the segment with users.

Segment serving

Segment serving is powered by a set of Go services. For persistence and serving, we use ScyllaDB as our primary storage layer. We chose to use ScyllaDB as our NoSQL store due to its ability to scale horizontally and meet our <80ms p99 SLA. Users in a segment are stored as rows indexed by the user ID. The table is partitioned by the user ID, ensuring that segment data is evenly distributed across the ScyllaDB clusters.

| User ID | Segment Name | Other metadata columns |
|---|---|---|
| 1221 | Segment_A | … |
| 3421 | Segment_A | … |
| 5632 | Segment_B | … |
| 7889 | Segment_B | … |

With this design, Segmentation Platform handles up to 12K read and 36K write QPS, with a p99 latency of 40ms.

Problems

The existing system has supported Grab, empowering internal teams to create rich and personalised experiences. However, with the increased adoption and use, certain challenges began to emerge:

- As more and larger segments were being created, the write QPS became a bottleneck, leading to longer wait times for segment creation.

- Grab services requested even lower latency for membership checks.

Long segment creation times

As more segments were created by different teams within Grab, the write QPS was no longer able to keep up with the teams’ demands. Teams would have to wait for hours for their segments to be created, reducing their operational velocity.

Read latency

Further, while the platform already offered sub-40ms p99 read latency, this was still too slow for certain services and their use cases. For example, Grab’s communications platform needed to check whether a user belongs to a set of segments before sending out a communication, and adding latency to every communication request was not acceptable. Another use case was Experimentation Platform, where checks must have low latency so as not to impact the user experience.

Thus, the team explored alternative ways of storing the segment data with the goals of:

- Reducing segment creation time

- Reducing segment read latency

- Maintaining or reducing cost

Solution

Segments as bitmaps

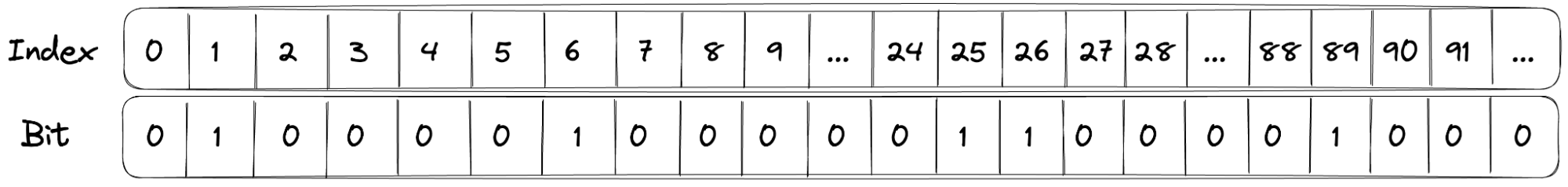

One of the main engineering challenges was scaling the write throughput of the system to keep pace with the number of segments being created. As a segment is stored across multiple rows in ScyllaDB, creating a large segment incurs a huge number of writes to the database. What we needed was a better way to store a large set of user IDs. Since user IDs are represented as integers in our system, a natural solution to storing a set of integers was a bitmap.

For example, a segment containing the following user IDs: 1, 6, 25, 26, 89 could be represented with a bitmap as follows:

To perform a membership check, a bitwise operation can be used to check if the bit at the user ID’s index is 0 or 1. As a bitmap, the segment can also be stored as a single Blob in object storage instead of inside ScyllaDB.

However, as the number of user IDs in the system is large, a small and sparse segment would lead to prohibitively large bitmaps. For example, if a segment contains 2 user IDs 100 and 200,000,000, it will require a bitmap containing 200 million bits (25MB) where all but 2 of the bits are just 0. Thus, the team needed an encoding to handle sparse segments more efficiently.
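To make the plain-bitmap idea concrete, here is a minimal TypeScript sketch of an uncompressed bitmap over user IDs. It is not Grab's implementation (their serving services are written in Go), and the class name PlainBitmap is hypothetical. It uses the example IDs 1, 6, 25, 26, and 89, and also computes how many bytes a plain bitmap needs, which is what makes the sparse segment above so expensive.

```typescript
// Hypothetical uncompressed bitmap: bit i is 1 if user ID i is in the segment.
class PlainBitmap {
  private readonly bytes: Uint8Array;

  constructor(maxUserId: number) {
    this.bytes = new Uint8Array(PlainBitmap.bytesNeeded(maxUserId));
  }

  // One bit per possible ID in [0, maxUserId], rounded up to whole bytes.
  static bytesNeeded(maxUserId: number): number {
    return Math.ceil((maxUserId + 1) / 8);
  }

  add(userId: number): void {
    // Byte index is userId / 8; bit position inside that byte is userId % 8.
    this.bytes[userId >>> 3] |= 1 << (userId & 7);
  }

  // Membership check: test the single bit at the user ID's index.
  has(userId: number): boolean {
    const byteIndex = userId >>> 3;
    if (byteIndex >= this.bytes.length) return false;
    return (this.bytes[byteIndex] & (1 << (userId & 7))) !== 0;
  }

  get sizeInBytes(): number {
    return this.bytes.length;
  }
}

const segment = new PlainBitmap(89);
for (const id of [1, 6, 25, 26, 89]) segment.add(id);
console.log(segment.has(25), segment.has(27)); // true false
console.log(segment.sizeInBytes); // 12

// Sparse case: a bitmap holding ID 200,000,000 must span every index up to it.
console.log(PlainBitmap.bytesNeeded(200_000_000)); // 25000001 (about 25 MB)
```

Because the bitmap must cover every index up to the largest ID, its size depends on the largest member rather than on how many members the segment has.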

Roaring Bitmaps

After some research, we landed on Roaring Bitmaps, which are compressed uint32 bitmaps. With roaring bitmaps, we are able to store a segment with 1 million members in a Blob smaller than 1 megabyte, compared to 4 megabytes required by a naive encoding.
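Assuming the naive encoding stores each member as a plain 32-bit (4-byte) integer, which is our reading since the post does not spell it out, the 4-megabyte figure works out as:

```latex
10^{6}~\text{members} \times 4~\text{bytes/member} = 4 \times 10^{6}~\text{bytes} \approx 4~\text{MB}
```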

Roaring Bitmaps achieve good compression ratios by splitting the set into fixed-size (2^16) integer chunks and using three different data structures (containers) based on the data distribution within the chunk. The most significant 16 bits of the integer are used as the index of the chunk, and the least significant 16 bits are stored in the containers.
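The key/low split can be sketched in a few lines of TypeScript. This illustrates the partitioning scheme only, not a full Roaring implementation; splitId and groupByChunk are hypothetical helpers.

```typescript
// Split a 32-bit value into its chunk key (high 16 bits) and the value
// stored inside that chunk's container (low 16 bits).
function splitId(userId: number): { key: number; low: number } {
  return { key: userId >>> 16, low: userId & 0xffff };
}

// Group user IDs by chunk, i.e. by runs of 2^16 consecutive integers.
function groupByChunk(userIds: Iterable<number>): Map<number, number[]> {
  const chunks = new Map<number, number[]>();
  for (const id of userIds) {
    const { key, low } = splitId(id);
    const lows = chunks.get(key) ?? [];
    lows.push(low);
    chunks.set(key, lows);
  }
  // Containers keep their low values sorted.
  for (const lows of chunks.values()) lows.sort((a, b) => a - b);
  return chunks;
}

const chunks = groupByChunk([100, 65_536, 65_537, 200_000_000]);
// chunk 0: [100], chunk 1: [0, 1], chunk 3051: [49664]
console.log([...chunks.entries()]);
```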

Array containers

Array containers are used when data is sparse (<= 4096 values). An array container is a sorted array of 16-bit integers. It is memory-efficient for sparse data and provides logarithmic-time access.
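A minimal sketch of an array container, assuming the low 16 bits of each member are kept in a sorted Uint16Array. ArrayContainer is a hypothetical name, and production Roaring libraries differ in detail; the binary search is what provides the logarithmic-time access described above.

```typescript
// Hypothetical array container: a sorted array of 16-bit values (at most 4096).
class ArrayContainer {
  private readonly values: Uint16Array;

  constructor(sortedLows: number[]) {
    this.values = Uint16Array.from(sortedLows);
  }

  // Binary search: O(log n) membership check over the sorted values.
  has(low: number): boolean {
    let lo = 0;
    let hi = this.values.length - 1;
    while (lo <= hi) {
      const mid = (lo + hi) >>> 1;
      const v = this.values[mid];
      if (v === low) return true;
      if (v < low) lo = mid + 1;
      else hi = mid - 1;
    }
    return false;
  }

  // Two bytes per stored value.
  get sizeInBytes(): number {
    return this.values.byteLength;
  }
}

const container = new ArrayContainer([1, 6, 25, 26, 89]);
console.log(container.has(26), container.has(27)); // true false
```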

Bitmap containers

Bitmap containers are used when data is dense. A bitmap container is a 2^16-bit container where each bit represents the presence or absence of a value. It is memory-efficient for dense data and provides constant-time access.
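Similarly, a bitmap container can be sketched as a fixed array of 2^16 bits. This hypothetical BitmapContainer stores the bits in 2,048 32-bit words (8,192 bytes) because JavaScript bitwise operators work on 32-bit integers; a membership check is one word lookup plus one bit test.

```typescript
// Hypothetical bitmap container: 65,536 bits held in 2,048 32-bit words.
class BitmapContainer {
  readonly words = new Uint32Array(2048);

  add(low: number): void {
    // Word index is low / 32; bit position inside the word is low % 32.
    this.words[low >>> 5] |= 1 << (low & 31);
  }

  // Constant-time membership check.
  has(low: number): boolean {
    return (this.words[low >>> 5] & (1 << (low & 31))) !== 0;
  }

  // Always 8,192 bytes, regardless of how many values are set.
  get sizeInBytes(): number {
    return this.words.byteLength;
  }
}

const bc = new BitmapContainer();
for (const v of [0, 31, 32, 65_535]) bc.add(v);
console.log(bc.has(65_535), bc.has(33)); // true false
```

At the 4,096-value cutoff, an array container occupies 4,096 × 2 = 8,192 bytes, exactly the size of a bitmap container, which is why Roaring switches representations at that point.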

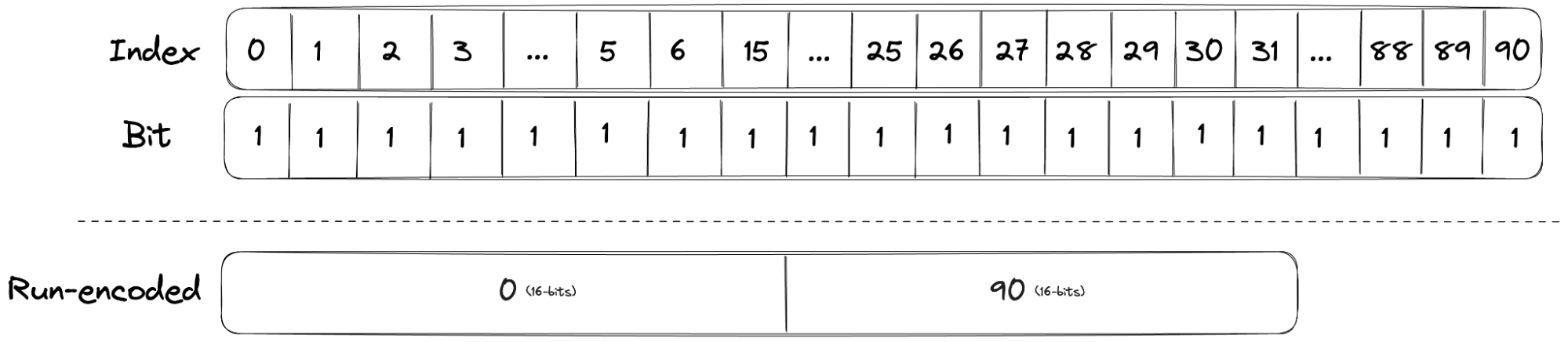

Run containers

Finally, run containers are used when a chunk has long runs of consecutive values. Run containers use run-length encoding (RLE) to reduce the storage required for dense bitmaps: each run is stored as a pair of values representing its start and its length. This provides good memory efficiency and fast lookups.

The diagram below shows how a dense bitmap container that would have required 91 bits can be compressed into a run container by storing only the start (0) and the length (90); the length counts the values after the start, so 91 consecutive values give a length of 90. Note that run containers are used only if they reduce the number of bytes required compared to a bitmap.
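The run encoding can be sketched as follows. The hypothetical toRuns helper follows the convention in the diagram, where the 91 consecutive values 0 to 90 become start 0 and length 90. The byte count assumes 2 bytes for the start and 2 for the length of each run and ignores any container header, so treat it as an approximation.

```typescript
// Hypothetical run: [start, length], where length counts the values after start.
type Run = [start: number, length: number];

// Encode sorted, de-duplicated 16-bit values as runs.
function toRuns(sortedLows: number[]): Run[] {
  const runs: Run[] = [];
  for (const v of sortedLows) {
    const last = runs[runs.length - 1];
    if (last && v === last[0] + last[1] + 1) {
      last[1] += 1; // v extends the current run
    } else {
      runs.push([v, 0]); // v starts a new run
    }
  }
  return runs;
}

// Find the last run whose start is <= low, then check that low falls inside it.
function runsHas(runs: Run[], low: number): boolean {
  let lo = 0;
  let hi = runs.length - 1;
  while (lo <= hi) {
    const mid = (lo + hi) >>> 1;
    if (runs[mid][0] <= low) lo = mid + 1;
    else hi = mid - 1;
  }
  if (hi < 0) return false;
  const [start, length] = runs[hi];
  return low <= start + length;
}

// Approximate size: 2 bytes for the start plus 2 bytes for the length per run.
const runBytes = (runs: Run[]): number => runs.length * 4;
const BITMAP_CONTAINER_BYTES = 8192;

const dense = Array.from({ length: 91 }, (_, i) => i); // values 0..90
const runs = toRuns(dense);
// runs is [[0, 90]]: 4 bytes, so the run container wins over the 8,192-byte bitmap
console.log(runs, runBytes(runs) < BITMAP_CONTAINER_BYTES);
```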

By using different containers, Roaring Bitmaps achieve good compression across various data distributions while maintaining excellent lookup performance. Additionally, because segments are represented as Roaring Bitmaps, service teams can perform set operations (union, intersection, difference, etc.) on segments on the fly, which previously required re-materialising the combined segment into the database.
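To illustrate why set operations are cheap on bitmaps, here is a sketch of intersection, union, and difference over two bitmap-style containers using word-wise AND, OR, and AND NOT. It is a simplification: real Roaring libraries also combine array and run containers and work chunk by chunk, and all helper names here are hypothetical.

```typescript
// Hypothetical bitmap-style containers: 65,536 bits in 2,048 32-bit words.
const WORDS = 2048;

function fromValues(values: number[]): Uint32Array {
  const words = new Uint32Array(WORDS);
  for (const v of values) words[v >>> 5] |= 1 << (v & 31);
  return words;
}

function intersect(a: Uint32Array, b: Uint32Array): Uint32Array {
  const out = new Uint32Array(WORDS);
  for (let i = 0; i < WORDS; i++) out[i] = a[i] & b[i]; // in both segments
  return out;
}

function union(a: Uint32Array, b: Uint32Array): Uint32Array {
  const out = new Uint32Array(WORDS);
  for (let i = 0; i < WORDS; i++) out[i] = a[i] | b[i]; // in either segment
  return out;
}

function difference(a: Uint32Array, b: Uint32Array): Uint32Array {
  const out = new Uint32Array(WORDS);
  for (let i = 0; i < WORDS; i++) out[i] = a[i] & ~b[i]; // in a but not in b
  return out;
}

// Decode the set bits back into sorted values.
function toValues(words: Uint32Array): number[] {
  const values: number[] = [];
  for (let i = 0; i < WORDS; i++) {
    for (let bit = 0; bit < 32; bit++) {
      if (words[i] & (1 << bit)) values.push(i * 32 + bit);
    }
  }
  return values;
}

const segA = fromValues([1, 6, 25, 26, 89]);
const segB = fromValues([6, 26, 100]);
console.log(toValues(intersect(segA, segB))); // [ 6, 26 ]
```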

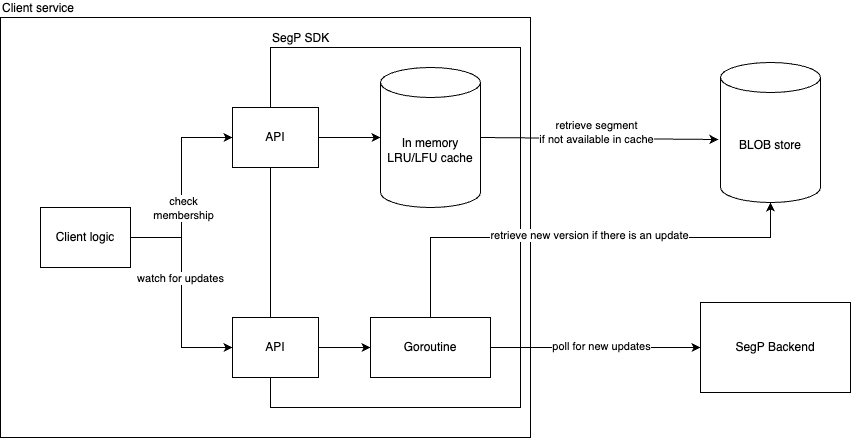

Caching with an SDK

Even though the segments are now compressed, retrieving a segment from the Blob store for each membership check would incur an unacceptable latency penalty. To mitigate the overhead of retrieving a segment, we developed an SDK that handles the retrieval and caching of segments.

The SDK takes care of the retrieval, decoding, caching, and watching of segments. Users of the SDK only need to specify the maximum size of the cache, which prevents it from exhausting the service’s memory. The cache uses a least-recently-used eviction policy so that hot segments are kept in memory. Users can also watch a segment for updates, and the SDK automatically refreshes the cached segment when it is updated.
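The SDK's internals are not described beyond this, so the following is only a sketch of an LRU cache with a size budget, written in TypeScript rather than Go. The class name, the byte-based budget, and the caller-supplied entry size are all assumptions. It relies on a JavaScript Map iterating in insertion order, so re-inserting an entry on access marks it as most recently used.

```typescript
// Hypothetical LRU cache keyed by segment name, bounded by a byte budget.
class LruSegmentCache<V> {
  private readonly entries = new Map<string, { value: V; bytes: number }>();
  private usedBytes = 0;

  constructor(private readonly maxBytes: number) {}

  get(name: string): V | undefined {
    const entry = this.entries.get(name);
    if (!entry) return undefined;
    // Move to the end of the iteration order: most recently used.
    this.entries.delete(name);
    this.entries.set(name, entry);
    return entry.value;
  }

  // Insert or replace a segment (for example after a watched segment changes).
  set(name: string, value: V, bytes: number): void {
    const existing = this.entries.get(name);
    if (existing) {
      this.usedBytes -= existing.bytes;
      this.entries.delete(name);
    }
    this.entries.set(name, { value, bytes });
    this.usedBytes += bytes;
    // Evict from the front (least recently used) until back within budget.
    for (const [key, entry] of this.entries) {
      if (this.usedBytes <= this.maxBytes) break;
      if (key === name) continue; // keep the entry that was just written
      this.entries.delete(key);
      this.usedBytes -= entry.bytes;
    }
  }

  has(name: string): boolean {
    return this.entries.has(name);
  }
}
```

A watched segment could be refreshed by calling set again with the new bitmap, which replaces the old entry and its size accounting.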

Hero teams

Communications Platform

Communications Platform has adopted the SDK to implement a new feature to control the communication frequency based on which segments a user belongs to. Using the SDK, the team is able to perform membership checks on multiple multi-million member segments, achieving a peak of 15K QPS with a p99 latency of <1ms. With the new feature, they have been able to increase communication engagement and reduce the communication unsubscribe rate.

Experimentation Platform

Experimentation Platform powers experimentation across all Grab services. Segments are used heavily in experiments to determine a user’s experience. Prior to using the SDK, Experimentation Platform limited the maximum size of the segments that could be used to prevent exhausting a service’s memory.

After migrating to the new SDK, they were able to lift this restriction due to the compression efficiency of Roaring Bitmaps. Users are now able to use any segment as part of their experiments without worrying that it would require too much memory.

Closing

This blog post discussed the challenges that Segmentation Platform faced when scaling and how the team explored alternative storage and encoding techniques to improve segment creation time, while also achieving low latency reads. The SDK allows our teams to easily make use of segments without having to handle the details of caching, eviction, and updating of segments.

Moving forward, there are still existing use cases that are not able to use the Roaring Bitmap segments and thus continue to rely on segments from ScyllaDB. Therefore, the team is also taking steps to optimise and improve the scalability of our service and database.

Special thanks to Axel, the wider Segmentation Platform team, and Data Technology team for reviewing the post.

Join us

Grab is the leading superapp platform in Southeast Asia, providing everyday services that matter to consumers. More than just a ride-hailing and food delivery app, Grab offers a wide range of on-demand services in the region, including mobility, food, package and grocery delivery services, mobile payments, and financial services across 428 cities in eight countries.

Powered by technology and driven by heart, our mission is to drive Southeast Asia forward by creating economic empowerment for everyone. If this mission speaks to you, join our team today!

Why Can’t We Quit Weddings?

Post Syndicated from The Atlantic original https://www.youtube.com/watch?v=7hTz9q9ai3c

Implementing automatic drift detection in CDK Pipelines using Amazon EventBridge

Post Syndicated from DAMODAR SHENVI WAGLE original https://aws.amazon.com/blogs/devops/implementing-automatic-drift-detection-in-cdk-pipelines-using-amazon-eventbridge/

The AWS Cloud Development Kit (AWS CDK) is a popular open source toolkit that allows developers to create their cloud infrastructure using high-level programming languages. AWS CDK comes bundled with a construct called CDK Pipelines that makes it easy to set up continuous integration, delivery, and deployment with AWS CodePipeline. The CDK Pipelines construct does all the heavy lifting, such as setting up appropriate AWS IAM roles for deployment across regions and accounts, Amazon Simple Storage Service (Amazon S3) buckets to store build artifacts, and an AWS CodeBuild project to build, test, and deploy the app. The pipeline deploys a given CDK application as one or more AWS CloudFormation stacks.

With CloudFormation stacks, there is the possibility that someone can manually change the configuration of stack resources outside the purview of CloudFormation and the pipeline that deploys the stack. This causes the deployed resources to be inconsistent with the intent in the application, which is referred to as “drift”, a situation that can make the application’s behavior unpredictable. For example, when troubleshooting an application, if the application has drifted in production, it is difficult to reproduce the same behavior in a development environment. In other cases, it may introduce security vulnerabilities in the application. For example, an Amazon EC2 security group that was originally deployed to allow ingress traffic from a specific IP address could be opened up to allow traffic from all IP addresses.

CloudFormation offers a drift detection feature for stacks and stack resources to detect configuration changes that are made outside of CloudFormation. A stack or resource is considered drifted if its configuration does not match the expected configuration defined in the CloudFormation template and, by extension, the CDK code that synthesized it.

In this blog post you will see how CloudFormation drift detection can be integrated as a pre-deployment validation step in CDK Pipelines using an event driven approach.

Services and frameworks used in the post include CloudFormation, CodeBuild, Amazon EventBridge, AWS Lambda, Amazon DynamoDB, S3, and AWS CDK.

Solution overview

Amazon EventBridge is a serverless AWS service that offers an agile mechanism for developers to spin up loosely coupled, event-driven applications at scale. EventBridge supports routing of events between services via an event bus. Out of the box, EventBridge provides a default event bus in each account that receives events from AWS services. Last year, CloudFormation added a new feature that enables event notifications for changes made to CloudFormation-based stacks and resources. These notifications are accessible through Amazon EventBridge, allowing users to monitor and react to changes in their CloudFormation infrastructure using event-driven workflows. Our solution leverages the drift detection events that are now supported by EventBridge. The following architecture diagram depicts the flow of events involved in successfully performing drift detection in CDK Pipelines.

Architecture diagram

The user starts the pipeline by checking code into an AWS CodeCommit repo, which acts as the pipeline source. We have configured drift detection in the pipeline as a custom step backed by a lambda function. When the drift detection step invokes the provider lambda function, it first starts the drift detection on the CloudFormation stack Demo Stack and then saves the drift_detection_id along with pipeline_job_id in a DynamoDB table. In the meantime, the pipeline waits for a response on the status of drift detection.

The EventBridge rules are set up to capture the drift detection state change events for Demo Stack that are received by the default event bus. The callback Lambda function is registered as the target of the rules. When drift detection completes, it triggers the EventBridge rule, which in turn invokes the callback Lambda function with the stack status as either DRIFTED or IN_SYNC. The callback Lambda function pulls the pipeline_job_id from DynamoDB and sends the appropriate status back to the pipeline, thus moving the pipeline out of the wait state. If the stack is IN_SYNC, the callback Lambda function sends a success status and the pipeline continues with the deployment. If the stack is DRIFTED, the callback Lambda function sends a failure status back to the pipeline and the pipeline run fails.
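The callback's decision logic can be sketched as a pure function. The event shape below is a simplified, hypothetical view of the drift detection status change event (the CloudFormation EventBridge event reference has the real field names), and an in-memory Map keyed by drift detection ID stands in for the DynamoDB table. Treating any status other than IN_SYNC as a failure is a design choice of this sketch. A real callback would then report the outcome with CodePipeline's PutJobSuccessResult or PutJobFailureResult API actions.

```typescript
// Simplified, hypothetical view of the drift detection status change event.
interface DriftStatusEvent {
  stackId: string;
  driftDetectionId: string;
  stackDriftStatus: "DRIFTED" | "IN_SYNC" | "UNKNOWN" | "NOT_CHECKED";
}

type PipelineResult =
  | { kind: "success"; jobId: string }
  | { kind: "failure"; jobId: string; message: string };

// jobsByDetectionId stands in for the DynamoDB table (drift_detection_id -> pipeline_job_id).
function decide(
  event: DriftStatusEvent,
  jobsByDetectionId: Map<string, string>
): PipelineResult | undefined {
  const jobId = jobsByDetectionId.get(event.driftDetectionId);
  if (jobId === undefined) return undefined; // detection not started by the pipeline
  if (event.stackDriftStatus === "IN_SYNC") {
    return { kind: "success", jobId }; // pipeline continues with the deployment
  }
  // DRIFTED (and, in this sketch, any other status) fails the pipeline job.
  return {
    kind: "failure",
    jobId,
    message: `Stack ${event.stackId} drift status is ${event.stackDriftStatus}`,
  };
}
```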

Solution Deep Dive

The solution deploys two stacks, as shown in the above architecture diagram:

- CDK Pipelines stack

- Pre-requisite stack

The CDK Pipelines stack defines a pipeline with a CodeCommit source and a drift detection step integrated into it. The pre-requisite stack deploys the following resources that are required by the CDK Pipelines stack.

- A Lambda function that implements the drift detection step

- A DynamoDB table that holds drift_detection_id and pipeline_job_id

- An EventBridge rule to capture the “CloudFormation Drift Detection Status Change” event

- A callback Lambda function that evaluates the status of drift detection and sends the status back to the pipeline by looking up the data captured in DynamoDB.

The pre-requisites stack is deployed first, followed by the CDK Pipelines stack.

Defining drift detection step

CDK Pipelines offers a mechanism to define your own step that requires a custom implementation. A step corresponds to a custom action in CodePipeline, such as invoking a Lambda function. It can exist as a pre- or post-deployment action in a given stage of the pipeline. For example, your organization’s policies may require its CI/CD pipelines to run a security vulnerability scan as a prerequisite before deployment. You can build this as a custom step in your CDK Pipelines. In this post, you will use the same mechanism for adding the drift detection step in the pipeline.

You start by defining a class called DriftDetectionStep that extends Step and implements ICodePipelineActionFactory, as shown in the following code snippet. The constructor accepts three parameters as inputs: stackName, account, and region. When the pipeline runs the step, it invokes the drift detection Lambda function with these parameters wrapped inside the userParameters variable. The produceAction() function adds the action that invokes the drift detection Lambda function to the pipeline stage.

Please note that the solution uses an SSM parameter to inject the Lambda function ARN into the pipeline stack. So, we deploy the provider Lambda function as part of the pre-requisites stack, before the pipeline stack, and publish its ARN to the SSM parameter. The CDK code to deploy the pre-requisites stack can be found here.

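    import {
      CodePipelineActionFactoryResult,
      ICodePipelineActionFactory,
      ProduceActionOptions,
      Step,
    } from 'aws-cdk-lib/pipelines';
    import * as codepipeline from 'aws-cdk-lib/aws-codepipeline';
    import * as cpactions from 'aws-cdk-lib/aws-codepipeline-actions';
    import * as lambda from 'aws-cdk-lib/aws-lambda';
    import * as ssm from 'aws-cdk-lib/aws-ssm';

    // SSM_PARAM_DRIFT_DETECT_LAMBDA_ARN (the name of the SSM parameter that holds the
    // provider Lambda ARN) is defined elsewhere in the solution's repository.

    export class DriftDetectionStep
      extends Step
      implements ICodePipelineActionFactory
    {
      constructor(
        private readonly stackName: string,
        private readonly account: string,
        private readonly region: string
      ) {
        super(`DriftDetectionStep-${stackName}`);
      }

      public produceAction(
        stage: codepipeline.IStage,
        options: ProduceActionOptions
      ): CodePipelineActionFactoryResult {
        // Define the configuration for the action that is added to the pipeline.
        stage.addAction(
          new cpactions.LambdaInvokeAction({
            actionName: options.actionName,
            runOrder: options.runOrder,
            // Look up the provider Lambda by the ARN the pre-requisites stack published to SSM.
            lambda: lambda.Function.fromFunctionArn(
              options.scope,
              `InitiateDriftDetectLambda-${this.stackName}`,
              ssm.StringParameter.valueForStringParameter(
                options.scope,
                SSM_PARAM_DRIFT_DETECT_LAMBDA_ARN
              )
            ),
            // These are the parameters passed to the drift detection step implementation provider Lambda.
            userParameters: {
              stackName: this.stackName,
              account: this.account,
              region: this.region,
            },
          })
        );

        // This step occupies a single run order slot in the stage.
        return {
          runOrdersConsumed: 1,
        };
      }
    }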
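On the receiving side, a Lambda invoke action delivers a job event whose CodePipeline.job.data.actionConfiguration.configuration.UserParameters field carries the userParameters object as a JSON string. The following sketch, with a hypothetical parseJob helper and a hand-written subset of the event type, shows how the provider Lambda might recover the job ID and the stackName, account, and region values before calling CloudFormation's DetectStackDrift and storing the returned drift detection ID alongside the job ID.

```typescript
// Minimal, hand-written subset of the CodePipeline job event a Lambda invoke action receives.
interface CodePipelineJobEvent {
  "CodePipeline.job": {
    id: string;
    data: { actionConfiguration: { configuration: { UserParameters?: string } } };
  };
}

interface DriftDetectionParams {
  stackName: string;
  account: string;
  region: string;
}

// Hypothetical helper: extract the job ID and the parameters set by DriftDetectionStep.
function parseJob(event: CodePipelineJobEvent): { jobId: string; params: DriftDetectionParams } {
  const job = event["CodePipeline.job"];
  const raw = job.data.actionConfiguration.configuration.UserParameters;
  if (!raw) throw new Error(`Job ${job.id} has no UserParameters`);

  // userParameters arrives as a JSON string; validate the fields we rely on.
  const { stackName, account, region } = JSON.parse(raw) as Partial<DriftDetectionParams>;
  if (!stackName || !account || !region) {
    throw new Error(`Job ${job.id} is missing stackName, account, or region`);
  }
  return { jobId: job.id, params: { stackName, account, region } };
}
```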

Configuring drift detection step in CDK Pipelines

Here you will see how to integrate the previously defined drift detection step into CDK Pipelines. The pipeline has a stage called DemoStage as shown in the following code snippet. During the construction of DemoStage, we declare drift detection as the pre-deployment step. This makes sure that the pipeline always does the drift detection check prior to deployment.

Please note that for every stack defined in the stage, we add a dedicated step to perform drift detection by instantiating the DriftDetectionStep class detailed in the prior section. Thus, this solution scales with the number of stacks defined per stage.

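    import { StackProps } from 'aws-cdk-lib';
    import * as codecommit from 'aws-cdk-lib/aws-codecommit';
    import {
      CodePipeline,
      CodePipelineSource,
      ShellStep,
      Step,
    } from 'aws-cdk-lib/pipelines';
    import { Construct } from 'constructs';
    // BaseStack, DemoStage, and DriftDetectionStep are defined in the solution's own source files.

    export class PipelineStack extends BaseStack {
      constructor(scope: Construct, id: string, props?: StackProps) {
        super(scope, id, props);

        const repo = new codecommit.Repository(this, 'DemoRepo', {
          repositoryName: `${this.node.tryGetContext('appName')}-repo`,
        });

        const pipeline = new CodePipeline(this, 'DemoPipeline', {
          synth: new ShellStep('synth', {
            input: CodePipelineSource.codeCommit(repo, 'main'),
            commands: ['./script-synth.sh'],
          }),
          crossAccountKeys: true,
          enableKeyRotation: true,
        });

        const demoStage = new DemoStage(this, 'DemoStage', {
          env: {
            account: this.account,
            region: this.region,
          },
        });

        // One drift detection step per stack in the stage.
        const driftDetectionSteps: Step[] = [];
        for (const stackName of demoStage.stackNameList) {
          const step = new DriftDetectionStep(stackName, this.account, this.region);
          driftDetectionSteps.push(step);
        }

        // Run the drift detection steps before the stage deploys.
        pipeline.addStage(demoStage, {
          pre: driftDetectionSteps,
        });
      }
    }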

Demo

Here you will go through the deployment steps for the solution and see drift detection in action.

Deploy the pre-requisites stack

Clone the repo from the GitHub location here. Navigate to the cloned folder and run the script script-deploy.sh. You can find detailed instructions in README.md.

Deploy the CDK Pipelines stack

Clone the repo from the GitHub location here. Navigate to the cloned folder and run the script script-deploy.sh. This deploys a pipeline with an empty CodeCommit repo as the source. The pipeline run ends up in failure, as shown below, because of the empty CodeCommit repo.

Next, check in the code from the cloned repo into the CodeCommit source repo. You can find detailed instructions in README.md. This triggers the pipeline, and the pipeline finishes successfully, as shown below.

The pipeline deploys two stacks, DemoStackA and DemoStackB. Each of these stacks creates an S3 bucket.

Demonstrate drift detection

Locate the S3 bucket created by DemoStackA under resources, navigate to the S3 bucket, and modify the tag aws-cdk:auto-delete-objects from true to false, as shown below.

Now, go to the pipeline and trigger a new execution by clicking on Release Change.

The pipeline run will now end in failure at the pre-deployment drift detection step.

Cleanup

Please follow the steps below to clean up all the stacks.

- Navigate to S3 console and empty the buckets created by stacks DemoStackA and DemoStackB.

- Navigate to the CloudFormation console and delete stacks DemoStackA and DemoStackB, since deleting CDK Pipelines stack does not delete the application stacks that the pipeline deploys.

- Delete the CDK Pipelines stack cdk-drift-detect-demo-pipeline.

- Delete the pre-requisites stack cdk-drift-detect-demo-drift-detection-prereq.

Conclusion

In this post, I showed how to add a custom implementation step in CDK Pipelines. I also used that mechanism to integrate a drift detection check as a pre-deployment step. This allows us to validate the integrity of a CloudFormation Stack before its deployment. Since the validation is integrated into the pipeline, it is easier to manage the solution in one place as part of the overarching pipeline. Give the solution a try, and then see if you can incorporate it into your organization’s delivery pipelines.

About the author:

Damodar Shenvi Wagle is a Senior Cloud Application Architect at AWS Professional Services. His areas of expertise include architecting serverless solutions, CI/CD, and automation.

Load test your applications in a CI/CD pipeline using CDK pipelines and AWS Distributed Load Testing Solution

Post Syndicated from Krishnakumar Rengarajan original https://aws.amazon.com/blogs/devops/load-test-applications-in-cicd-pipeline/

Load testing is a foundational pillar of building resilient applications. Today, load testing practices across many organizations are often based on desktop tools, where someone must manually run the performance tests and validate the results before a software release can be promoted to production. This leads to increased time to market for new features and products. Load testing applications in automated CI/CD pipelines provides the following benefits:

- Early and automated feedback on performance thresholds based on clearly defined benchmarks.

- Consistent and reliable load testing process for every feature release.

- Reduced overall time to market due to eliminated manual load testing effort.

- Improved overall resiliency of the production environment.

- The ability to rapidly identify and document bottlenecks and scaling limits of the production environment.

In this blog post, we demonstrate how to automatically load test your applications in an automated CI/CD pipeline using AWS Distributed Load Testing solution and AWS CDK Pipelines.

The AWS Cloud Development Kit (AWS CDK) is an open-source software development framework to define cloud infrastructure in code and provision it through AWS CloudFormation. AWS CDK Pipelines is a construct library module for continuous delivery of AWS CDK applications, powered by AWS CodePipeline. AWS CDK Pipelines can automatically build, test, and deploy the new version of your CDK app whenever the new source code is checked in.

Distributed Load Testing is an AWS Solution that automates the testing of software applications at scale to help you identify potential performance issues before their release. It creates and simulates thousands of users generating transactional records at a constant pace without the need to provision servers or instances.

Prerequisites

To deploy and test this solution, you will need:

- AWS Command Line Interface (AWS CLI): This tutorial assumes that you have configured the AWS CLI on your workstation. Alternatively, you can also use AWS CloudShell.

- AWS CDK V2: This tutorial assumes that you have installed AWS CDK V2 on your workstation or in the CloudShell environment.

Solution Overview

In this solution, we create a CI/CD pipeline using AWS CDK Pipelines and use it to deploy a sample RESTful CDK application in two environments: development and production. We load test the application using AWS Distributed Load Testing Solution in the development environment. Based on the load test result, we either fail the pipeline or proceed to production deployment. You may consider running the load test in a dedicated testing environment that mimics the production environment.

For demonstration purposes, we use the following metrics to validate the load test results.

- Average Response Time – the average response time, in seconds, for all the requests generated by the test. In this blog post, we set the threshold for average response time to 1 second.

- Error Count – the total number of errors. In this blog post, we set the threshold for the total number of errors to 1.

For your application, you may consider using additional metrics from the Distributed Load Testing solution documentation to validate your load test.
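The pass/fail check can be sketched as a small TypeScript function. The result and threshold shapes are hypothetical, and this sketch assumes a metric fails only when it exceeds its threshold; the blog's own validation code may compare differently. The average response time threshold plays the role of the AVG_RT_THRESHOLD value in loadTestEnvVariables.json.

```typescript
// Hypothetical summary of a finished load test; real fields come from the solution's API.
interface LoadTestSummary {
  avgResponseTimeSec: number;
  errorCount: number;
}

interface Thresholds {
  avgResponseTimeSec: number; // e.g. AVG_RT_THRESHOLD
  errorCount: number;
}

// Returns one message per violated threshold; an empty array means the test passed.
function validateLoadTest(summary: LoadTestSummary, t: Thresholds): string[] {
  const failures: string[] = [];
  if (summary.avgResponseTimeSec > t.avgResponseTimeSec) {
    failures.push(`average response time ${summary.avgResponseTimeSec}s exceeds ${t.avgResponseTimeSec}s`);
  }
  if (summary.errorCount > t.errorCount) {
    failures.push(`error count ${summary.errorCount} exceeds ${t.errorCount}`);
  }
  return failures;
}
```

With the sample Lambda function's 500 millisecond sleep, an average response time of about 0.5 seconds passes a 1 second threshold and fails a 0.2 second one. In a CodeBuild step, exiting with a non-zero status when the returned list is non-empty is one way to fail the pipeline.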

Architecture diagram

Solution Components

- AWS CDK code for the CI/CD pipeline, including AWS Identity and Access Management (IAM) roles and policies. The pipeline has the following stages:

  - Source: fetches the source code for the sample application from the AWS CodeCommit repository.

  - Build: compiles the code and executes cdk synth to generate the CloudFormation template for the sample application.

  - UpdatePipeline: updates the pipeline if there are any changes to our code or the pipeline configuration.

  - Assets: prepares and publishes all file assets to Amazon S3 (S3).

  - Development Deployment: deploys the application to the development environment and runs a load test.

  - Production Deployment: deploys the application to the production environment.

- AWS CDK code for a sample serverless RESTful application.

  - The AWS Lambda (Lambda) function in the architecture contains a 500 millisecond sleep statement to add latency to the API response.

- TypeScript code for starting the load test and validating the test results. This code is executed in the ‘Load Test’ step of the ‘Development Deployment’ stage. It starts a load test against the sample RESTful application endpoint and waits for the test to finish. For demonstration purposes, the load test is started with the following parameters:

  - Concurrency: 1

  - Task Count: 1

  - Ramp up time: 0 seconds

  - Hold for: 30 seconds

  - Endpoint to test: endpoint for the sample RESTful application.

  - HTTP method: GET

- Load Testing service deployed via the AWS Distributed Load Testing Solution. For costs related to the AWS Distributed Load Testing Solution, see the solution documentation.

Implementation Details

For the purposes of this blog, we deploy the CI/CD pipeline, the RESTful application and the AWS Distributed Load Testing solution into the same AWS account. In your environment, you may consider deploying these stacks into separate AWS accounts based on your security and governance requirements.

To deploy the solution components

- Follow the instructions in the AWS Distributed Load Testing solution Automated Deployment guide to deploy the solution. Note down the value of the CloudFormation output parameter ‘DLTApiEndpoint’. We will need this in the next steps. Proceed to the next step once you are able to log in to the user interface of the solution.

- Clone the blog Git repository.

- Update the Distributed Load Testing Solution endpoint URL in loadTestEnvVariables.json.

- Deploy the CloudFormation stack for the CI/CD pipeline. This step will also commit the AWS CDK code for the sample RESTful application stack and start the application deployment:

  cd pipeline && cdk bootstrap && cdk deploy --require-approval never

- Follow the steps below to view the load test results:

  - Open the AWS CodePipeline console.

  - Click on the pipeline named “blog-pipeline”.

  - Observe that one of the stages (named ‘LoadTest’) in the CI/CD pipeline (that was provisioned by the CloudFormation stack in the previous step) executes a load test against the application Development environment.

  - Click on the details of the ‘LoadTest’ step to view the test results. Notice that the load test succeeded.

Change the response time threshold

In this step, we will modify the response time threshold from 1 second to 200 milliseconds in order to introduce a load test failure. Remember from the steps earlier that the Lambda function code has a 500 millisecond sleep statement to add latency to the API response time.

- From the AWS Console, go to CodeCommit. The source for the pipeline is a CodeCommit repository named “blog-repo”.

- Click on the “blog-repo” repository, and then browse to the “pipeline” folder. Click on file ‘loadTestEnvVariables.json’ and then ‘Edit’.

- Set the response time threshold to 200 milliseconds by changing the value of the attribute ‘AVG_RT_THRESHOLD’ to ‘.2’. Click on the commit button. This will start the CI/CD pipeline.

- Go to CodePipeline from the AWS console and click on the ‘blog-pipeline’.

- Observe the ‘LoadTest’ step in ‘Development-Deploy’ stage will fail in about five minutes, and the pipeline will not proceed to the ‘Production-Deploy’ stage.

- Click on the details of the ‘LoadTest’ step to view the test results. Notice that the load test failed.

- Log into the Distributed Load Testing Service console. You will see two tests named ‘sampleScenario’. Click on each of them to see the test result details.

Cleanup

- Delete the CloudFormation stack that deployed the sample application.

  - From the AWS Console, go to CloudFormation and delete the stacks ‘Production-Deploy-Application’ and ‘Development-Deploy-Application’.

- Delete the CI/CD pipeline.

  cd pipeline && cdk destroy

- Delete the Distributed Load Testing Service CloudFormation stack.

  - From CloudFormation console, delete the stack for Distributed Load Testing service that you created earlier.

Conclusion

In the post above, we demonstrated how to automatically load test your applications in a CI/CD pipeline using AWS CDK Pipelines and AWS Distributed Load Testing solution. We defined the performance benchmarks for our application as configuration. We then used these benchmarks to automatically validate the application performance prior to production deployment. Based on the load test results, we either proceeded to production deployment or failed the pipeline.

About the Authors

Cost considerations and common options for AWS Network Firewall log management

Post Syndicated from Sharon Li original https://aws.amazon.com/blogs/security/cost-considerations-and-common-options-for-aws-network-firewall-log-management/

When you’re designing a security strategy for your organization, firewalls provide the first line of defense against threats. Amazon Web Services (AWS) offers AWS Network Firewall, a stateful, managed network firewall that includes intrusion detection and prevention (IDP) for your Amazon Virtual Private Cloud (VPC).

Logging plays a vital role in any firewall policy, as emphasized by the National Institute of Standards and Technology (NIST) Guidelines on Firewalls and Firewall Policy. Logging enables organizations to take proactive measures to help prevent and recover from failures, maintain proper firewall security configurations, and gather insights for effectively responding to security incidents.

The optimal logging approach for your organization should be determined on a case-by-case basis. It involves striking a balance between your security and compliance requirements and the costs associated with implementing solutions to meet those requirements.

This blog post walks you through logging configuration best practices, discusses three common architectural patterns for Network Firewall logging, and provides guidelines for optimizing the cost of your logging solution. This information will help you make a more informed choice for your organization’s use case.

Stateless and stateful rules engines logging

When discussing Network Firewall best practices, it’s essential to understand the distinction between stateful and stateless rules. Note that stateless rules don’t support firewall logging, which can make them difficult to work with in use cases that depend on logs.

To make sure that traffic is forwarded to the stateful inspection engine that generates logs, you can add a custom-defined stateless rule group that covers the traffic you need to monitor, or you can set a default action for stateless traffic to be forwarded to stateful rule groups in the firewall policy, as shown in the following figure.

Figure 1: Set up stateless default actions to forward to stateful rule groups
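As a rough illustration of the second option, the following Python sketch builds a firewall policy document whose stateless default actions forward all packets and fragments to the stateful engine. The field names follow the Network Firewall `FirewallPolicy` structure; the rule group ARN is a hypothetical placeholder, and the sketch only builds the document rather than calling AWS.

```python
import json

# Hypothetical placeholder ARN; substitute the ARN of your own stateful rule group.
EXAMPLE_RULE_GROUP_ARN = (
    "arn:aws:network-firewall:us-east-1:111122223333:stateful-rulegroup/example"
)


def build_firewall_policy(stateful_rule_group_arns):
    """Return a FirewallPolicy document that sends all stateless traffic to the
    stateful engine, where it can be logged."""
    return {
        # "aws:forward_to_sfe" hands packets to the stateful rule engine
        # instead of passing or dropping them in the stateless engine.
        "StatelessDefaultActions": ["aws:forward_to_sfe"],
        "StatelessFragmentDefaultActions": ["aws:forward_to_sfe"],
        "StatefulRuleGroupReferences": [
            {"ResourceArn": arn} for arn in stateful_rule_group_arns
        ],
    }


print(json.dumps(build_firewall_policy([EXAMPLE_RULE_GROUP_ARN]), indent=2))
```

With boto3, a document like this could be passed as the `FirewallPolicy` argument of the `network-firewall` client's `create_firewall_policy` call, together with a `FirewallPolicyName`.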

Alert logs and flow logs

Network Firewall provides two types of logs:

- Alert: Sends logs for traffic that matches a stateful rule whose action is set to Alert, Drop, or Reject.

- Flow: Sends logs for network traffic that the stateless engine forwards to the stateful rules engine.

To understand the use cases for alert and flow logs, let’s start with what a flow is from the firewall’s point of view. For Network Firewall, a network flow is a one-way series of packets that share essential IP header information, such as the source and destination IP addresses, ports, and protocol. Note that a Network Firewall flow log differs from a VPC flow log: it captures the network flow from the firewall’s perspective and summarizes it in JSON format.
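To make the one-way definition concrete, here is a small Python sketch that groups packets by source address, source port, destination address, destination port, and protocol. The packet records are made-up sample data, and the grouping is a simplified model of flow tracking, not Network Firewall’s implementation. It shows why a single HTTP exchange yields two flows, one per direction.

```python
from collections import defaultdict
from typing import NamedTuple


class Packet(NamedTuple):
    src_ip: str
    src_port: int
    dest_ip: str
    dest_port: int
    proto: str
    size: int  # bytes


def group_into_flows(packets):
    """Group packets into one-way flows keyed by the 5-tuple.

    Packets travelling in opposite directions of the same connection have
    swapped source and destination fields, so they land in different flows.
    """
    flows = defaultdict(lambda: {"pkts": 0, "bytes": 0})
    for p in packets:
        key = (p.src_ip, p.src_port, p.dest_ip, p.dest_port, p.proto)
        flows[key]["pkts"] += 1
        flows[key]["bytes"] += p.size
    return dict(flows)


# Hypothetical HTTP exchange between a client and a web server.
packets = [
    Packet("10.0.0.5", 49152, "203.0.113.10", 80, "TCP", 74),   # SYN
    Packet("203.0.113.10", 80, "10.0.0.5", 49152, "TCP", 74),   # SYN-ACK
    Packet("10.0.0.5", 49152, "203.0.113.10", 80, "TCP", 66),   # ACK
    Packet("10.0.0.5", 49152, "203.0.113.10", 80, "TCP", 180),  # HTTP GET
]

for key, stats in group_into_flows(packets).items():
    print(key, stats)
```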

For example, the following sequence shows how an HTTP request passes through the Network Firewall.

Figure 2: HTTP request passes through Network Firewall

When you use a stateful rule to block egress HTTP traffic, the TCP connection is established first, because the firewall must see the HTTP request before an HTTP-layer rule can match it. When the HTTP request arrives, the stateful rule evaluates it. Depending on the rule’s action, the firewall either sends a TCP reset to the sender (Reject action) or drops the packets (Drop action). In the case of a Drop action, shown in Figure 3, Network Firewall doesn’t forward the packets at the HTTP layer, and the connection is closed by the TCP timers on the client and server sides.

Figure 3: HTTP request blocked by Network Firewall

In this example, Network Firewall generates a flow log that records IP addresses, port numbers, protocols, timestamps, and the number of packets and bytes in the traffic. However, the flow log doesn’t include stateful inspection details, such as whether the traffic was blocked or allowed.

Figure 4 shows the inbound flow log.

Figure 4: Inbound flow log

Figure 5 shows the outbound flow log.

Figure 5: Outbound flow log

The alert log entry complements the flow log by containing stateful inspection details. The entry includes information about whether the traffic was allowed or blocked and also provides the hostname associated with the traffic. This additional information enhances the understanding of network activities and security events, as shown in Figure 6.

Figure 6: Alert log

In summary, flow logs record traffic metadata without stateful inspection verdicts and are valuable for identifying trends, such as monitoring which IP addresses in your network transmit the most data over time. Alert logs, on the other hand, contain stateful inspection details, which makes them helpful for troubleshooting and threat hunting.
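The two use cases can be sketched in Python: sum bytes per source IP from flow records to find top talkers, and collect blocked traffic from alert records. The sample records are illustrative and trimmed to a few fields modeled on the Suricata EVE JSON layout (`event.event_type` of `netflow` or `alert`, `event.netflow.bytes`, `event.alert.action`); compare them against your own log entries before relying on these field names.

```python
import json
from collections import Counter

# Illustrative, trimmed-down records; real log entries contain more fields.
SAMPLE_RECORDS = [
    {"event": {"event_type": "netflow", "src_ip": "10.0.0.5",
               "dest_ip": "203.0.113.10", "netflow": {"pkts": 3, "bytes": 320}}},
    {"event": {"event_type": "netflow", "src_ip": "10.0.0.7",
               "dest_ip": "198.51.100.20", "netflow": {"pkts": 9, "bytes": 1500}}},
    {"event": {"event_type": "netflow", "src_ip": "10.0.0.5",
               "dest_ip": "198.51.100.20", "netflow": {"pkts": 6, "bytes": 900}}},
    {"event": {"event_type": "alert", "src_ip": "10.0.0.5",
               "dest_ip": "203.0.113.10",
               "alert": {"action": "blocked", "signature": "Block egress HTTP"}}},
]
SAMPLE_LOG_LINES = [json.dumps(record) for record in SAMPLE_RECORDS]


def summarize(lines):
    """Return (top talkers by bytes from flow logs, blocked traffic from alert logs)."""
    bytes_by_src = Counter()
    blocked = []
    for line in lines:
        event = json.loads(line).get("event", {})
        event_type = event.get("event_type")
        if event_type == "netflow":
            # Flow log: volume metadata only, no allow/block verdict.
            bytes_by_src[event["src_ip"]] += event.get("netflow", {}).get("bytes", 0)
        elif event_type == "alert":
            # Alert log: carries the stateful inspection verdict.
            alert = event.get("alert", {})
            if alert.get("action") == "blocked":
                blocked.append(
                    (event.get("src_ip"), event.get("dest_ip"), alert.get("signature"))
                )
    return bytes_by_src.most_common(), blocked


top_talkers, blocked_traffic = summarize(SAMPLE_LOG_LINES)
print(top_talkers)      # [('10.0.0.7', 1500), ('10.0.0.5', 1220)]
print(blocked_traffic)  # [('10.0.0.5', '203.0.113.10', 'Block egress HTTP')]
```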

Keep in mind that flow log volume can grow large. When you forward traffic to the stateful inspection engine, flow logs capture every network flow that crosses your Network Firewall endpoints. Because log volume affects overall costs, choose the log types that suit your use case and security needs. If you don’t need flow logs for traffic trends, consider enabling only alert logs to help reduce expenses.
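As a minimal sketch of an alert-only setup, the following Python function builds a Network Firewall `LoggingConfiguration` document with one entry per requested log type. The bucket name and prefix are hypothetical, and the function only builds the document; with boto3 it could be passed as `LoggingConfiguration` to the `network-firewall` client’s `update_logging_configuration` call along with a `FirewallName`.

```python
VALID_LOG_TYPES = {"ALERT", "FLOW"}
VALID_DESTINATION_TYPES = {"S3", "CloudWatchLogs", "KinesisDataFirehose"}


def build_logging_configuration(log_types, destination_type, destination):
    """Build a Network Firewall LoggingConfiguration with one entry per log type.

    destination is the LogDestination map, for example {"bucketName": ..., "prefix": ...}
    for S3, {"logGroup": ...} for CloudWatch Logs, or {"deliveryStream": ...}
    for Kinesis Data Firehose.
    """
    unknown = set(log_types) - VALID_LOG_TYPES
    if unknown:
        raise ValueError(f"unsupported log types: {sorted(unknown)}")
    if destination_type not in VALID_DESTINATION_TYPES:
        raise ValueError(f"unsupported destination type: {destination_type}")
    return {
        "LogDestinationConfigs": [
            {
                "LogType": log_type,
                "LogDestinationType": destination_type,
                "LogDestination": dict(destination),
            }
            for log_type in log_types
        ]
    }


# Alert logs only, no flow logs; bucket name and prefix are hypothetical.
alert_only = build_logging_configuration(
    ["ALERT"], "S3", {"bucketName": "example-firewall-logs", "prefix": "nfw"}
)
print(alert_only)
```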

Effective logging with alert rules

When you write stateful rules using the Suricata format, set the alert rule to be evaluated before the pass rule to log allowed traffic. Be aware that:

- You must enable strict rule evaluation order so that the alert rule is evaluated before the pass rule. Otherwise, the default action order applies: pass rules first, then drop, then reject, then alert. The engine stops processing rules when a pass, drop, or reject rule matches, so under the default order a matching pass rule prevents the alert rule from ever logging the traffic. An alert match, by contrast, logs the traffic and lets processing continue.

- When you use pass rules, we recommend adding a message that reminds anyone reading the policy that these rules don’t generate alerts. This helps when you develop and troubleshoot your rules.
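The effect of evaluation order can be illustrated with a simplified Python model. It is an assumption-laden sketch, not the Suricata engine: it evaluates a single packet, treats pass, drop, and reject as terminal, and lets alert log and continue. Under the default action order the pass rule wins and nothing is logged; under strict order the alert fires first.

```python
# Default "action order": rules are evaluated by action, in this precedence.
DEFAULT_ACTION_ORDER = ["pass", "drop", "reject", "alert"]
# Actions that stop rule processing when they match; "alert" only logs.
TERMINAL_ACTIONS = {"pass", "drop", "reject"}


def evaluate(rules, matching, strict_order):
    """Return the (action, rule name) pairs applied to one matching packet.

    rules: (action, name) pairs in the order they are written.
    matching: names of the rules whose conditions match the packet.
    """
    if strict_order:
        ordered = list(rules)
    else:
        # sorted() is stable, so rules sharing an action keep their written order.
        ordered = sorted(rules, key=lambda rule: DEFAULT_ACTION_ORDER.index(rule[0]))
    applied = []
    for action, name in ordered:
        if name not in matching:
            continue
        applied.append((action, name))
        if action in TERMINAL_ACTIONS:
            break
    return applied


rules = [("alert", "log-allowed-sni"), ("pass", "allow-sni")]
both_match = {"log-allowed-sni", "allow-sni"}
print(evaluate(rules, both_match, strict_order=False))  # [('pass', 'allow-sni')]
print(evaluate(rules, both_match, strict_order=True))
# [('alert', 'log-allowed-sni'), ('pass', 'allow-sni')]
```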

For example, you can pair a rule that allows traffic to a target with a specific Server Name Indication (SNI) with a rule that logs the allowed traffic. The pass rule includes a message reminding the firewall policy maker that pass rules don’t alert. The alert rule, evaluated before the pass rule, logs a message that tells the log viewer which rule allowed the traffic.
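A sketch of such a rule pair follows, generated in Python so the ordering is explicit. The domain, SIDs, and message text are hypothetical; the rules use Suricata’s `tls.sni` sticky buffer with `startswith` and `endswith` to match the full hostname, and assume the rule group uses strict rule order.

```python
def sni_allow_rules(domain, alert_sid, pass_sid):
    """Return [alert rule, pass rule] for one TLS SNI, alert rule first."""
    # Match the SNI exactly (startswith + endswith), case-insensitively.
    sni_match = f'tls.sni; content:"{domain}"; startswith; nocase; endswith;'
    header = "tls $HOME_NET any -> $EXTERNAL_NET any"
    alert_rule = (
        f'alert {header} ({sni_match} msg:"Allowed TLS domain {domain}"; '
        f"flow:to_server, established; sid:{alert_sid}; rev:1;)"
    )
    pass_rule = (
        f'pass {header} ({sni_match} msg:"Pass rules do not alert"; '
        f"flow:to_server, established; sid:{pass_sid}; rev:1;)"
    )
    return [alert_rule, pass_rule]


# example.com and the SIDs are hypothetical values.
rules_string = "\n".join(sni_allow_rules("example.com", alert_sid=1, pass_sid=2))
print(rules_string)
```

The resulting string could serve as the `RulesString` of a stateful rule group whose `StatefulRuleOptions` sets `RuleOrder` to `STRICT_ORDER`.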

This way you can see allowed domains in the alert logs.

Figure 7: Allowed domain in the alert log

Log destination considerations

Network Firewall supports the following log destinations:

- Amazon S3

- Amazon CloudWatch Logs

- Amazon Kinesis Data Firehose

You can select the destination that best fits your organization’s processes. In the next sections, we review the most common pattern for each log destination and walk you through the cost considerations, assuming a scenario in which you generate 15 TB of Network Firewall logs per month in the us-east-1 Region.

Amazon S3

Network Firewall is configured to inspect traffic and send logs to an S3 bucket in JSON format using Amazon CloudWatch vended logs, which are logs published by AWS services on behalf of the customer. Optionally, logs in the S3 bucket can then be queried using Amazon Athena for monitoring and analysis purposes. You can also create Amazon QuickSight dashboards with an Athena-based dataset to provide additional insight into traffic patterns and trends, as shown in Figure 8.

Figure 8: Architecture diagram showing AWS Network Firewall logs going to S3

Cost considerations

Note that Network Firewall logging charges for the pattern above are the combined charges for CloudWatch Logs vended log delivery to the S3 buckets and for using Amazon S3.

CloudWatch vended log pricing can significantly influence overall costs in this pattern, depending on the volume of logs that Network Firewall generates, so we recommend that your team review the charges described on the Amazon CloudWatch Pricing page. On that page, navigate to Paid Tier, choose the Logs tab, select your Region, and then under Vended Logs, see the information for Delivery to S3.
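Because vended log delivery is priced in volume tiers, a small calculator helps compare scenarios. The following Python sketch charges each GB at the rate of the tier it falls into; the tier sizes and rates are placeholders for illustration only, not AWS prices, so substitute the values listed for your Region.

```python
# Placeholder tiers for illustration only; these are NOT current AWS prices.
# Each tier is (GB covered by the tier, or None for "all remaining GB", USD per GB).
EXAMPLE_TIERS = [
    (10_000, 0.50),
    (20_000, 0.25),
    (None, 0.125),
]


def tiered_cost(total_gb, tiers):
    """Charge each GB at the rate of the tier it falls into."""
    cost = 0.0
    remaining = total_gb
    for tier_size, price_per_gb in tiers:
        if remaining <= 0:
            break
        billed = remaining if tier_size is None else min(remaining, tier_size)
        cost += billed * price_per_gb
        remaining -= billed
    if remaining > 0:
        raise ValueError("tiers do not cover the full volume; end with a None tier")
    return cost


print(tiered_cost(15_000, EXAMPLE_TIERS))  # 6250.0 with the placeholder tiers
```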

For Amazon S3, go to the Amazon S3 Pricing page, choose the Storage & requests tab, and view the information for your Region in the Requests & data retrievals section. Costs depend on storage tiers, usage patterns, and the number of PUT requests to S3.

In our example, 15 TB is converted and compressed to approximately 380 GB in the S3 bucket. The total monthly cost in the us-east-1 Region is approximately $3800.

Long-term storage

There are additional features in Amazon S3 to help you save on storage costs:

- If, after implementing Network Firewall logging, you find that your log files are larger than 128 KB, you can take advantage of S3 Intelligent-Tiering, which provides automatic cost savings for objects with unknown access patterns (objects smaller than 128 KB aren’t eligible for automatic tiering). Depending on your usage patterns, the optional Archive Access and Deep Archive Access tiers of S3 Intelligent-Tiering offer additional savings.

- S3 Lifecycle rules can automatically move log files into lower-cost infrequent access storage classes a user-defined number of days after creation.

- If logs need to be kept for compliance but are too small to take advantage of S3 Intelligent-Tiering, consider aggregating and placing them in the S3 Glacier Flexible Retrieval or S3 Glacier Deep Archive tiers as a way to save on storage costs. Metadata charges might apply and can influence costs depending on the number of objects being stored.
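A lifecycle rule of the kind described above can be sketched as a Python dictionary in the shape accepted by S3’s `PutBucketLifecycleConfiguration` (for example, boto3’s `put_bucket_lifecycle_configuration(Bucket=..., LifecycleConfiguration=...)`). The prefix, day counts, and expiration are hypothetical; align them with your own retention and compliance requirements, and keep the per-object metadata charges for Glacier tiers in mind when many small files are involved.

```python
def build_log_lifecycle(prefix, ia_days=30, glacier_days=90,
                        deep_archive_days=180, expire_days=None):
    """Return a LifecycleConfiguration that tiers down objects under prefix."""
    # S3 requires objects to be at least 30 days old before moving to STANDARD_IA.
    if not 30 <= ia_days < glacier_days < deep_archive_days:
        raise ValueError("transition days must be >= 30 and strictly increasing")
    if expire_days is not None and expire_days <= deep_archive_days:
        raise ValueError("expiration must come after the last transition")
    rule = {
        "ID": "network-firewall-log-tiering",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        "Transitions": [
            {"Days": ia_days, "StorageClass": "STANDARD_IA"},
            # GLACIER is the API name for S3 Glacier Flexible Retrieval.
            {"Days": glacier_days, "StorageClass": "GLACIER"},
            {"Days": deep_archive_days, "StorageClass": "DEEP_ARCHIVE"},
        ],
    }
    if expire_days is not None:
        rule["Expiration"] = {"Days": expire_days}
    return {"Rules": [rule]}


# Prefix and retention periods are hypothetical.
config = build_log_lifecycle("AWSLogs/", expire_days=2555)
print(config)
```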

Analytics and reporting

Athena and QuickSight can be used for analytics and reporting:

- Athena can perform SQL queries directly against data in the S3 bucket where Network Firewall logs are stored. In the Athena query editor, a single query can be run to set up the table that points to the Network Firewall logging bucket.

- After data is available in Athena, you can use Athena as a data source for QuickSight dashboards. You can use QuickSight to visualize data from your Network Firewall logs, taking advantage of AWS serverless services.

- Note that using Athena to scan firewall data in S3 might increase costs, as can the number of authors, users, reports, and alerts, and the amount of SPICE capacity used in QuickSight.

Amazon CloudWatch Logs

In this pattern, shown in Figure 9, Network Firewall is configured to send logs to Amazon CloudWatch Logs. Once the logs are available in CloudWatch, you can use CloudWatch Logs Insights to search, analyze, and visualize them, and to generate alerts, notifications, and alarms based on specific log query patterns.

Figure 9: Architecture diagram using CloudWatch for Network Firewall Logs

Cost considerations

Configuring Network Firewall to send logs to CloudWatch incurs charges based on the number of metrics configured, metrics collection frequency, the number of API requests, and the log size. See Amazon CloudWatch Pricing for additional details.

In our example of 15 TB of logs per month, this pattern costs approximately $6900 per month in the us-east-1 Region.

CloudWatch dashboards offer a way to create customized views of the metrics and alarms for your Network Firewall logs. Each dashboard incurs an additional charge of $3 per month.

Contributor Insights and CloudWatch alarms are additional ways to monitor logs for a predefined query pattern and take corrective action when needed. Contributor Insights is charged per Contributor Insights rule; to learn more, go to the Amazon CloudWatch Pricing page, and under Paid Tier, choose the Contributor Insights tab. CloudWatch alarms are charged based on the number of metric alarms configured and the number of Metrics Insights queries analyzed; to learn more, see the Metrics Insights tab on the same pricing page.

Long-term storage

CloudWatch lets you retain logs for anywhere from 1 day to 10 years. By default, logs never expire, so consider your use case and costs before deciding on the optimal retention period. For cost optimization, we recommend moving logs that must be preserved long-term or for compliance from CloudWatch to Amazon S3, where S3 storage tiering can reduce costs further. To learn more, see Managing your storage lifecycle in the S3 User Guide.
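CloudWatch Logs accepts only specific retention values, so a requirement such as "keep logs for 100 days" has to be rounded up to an allowed setting. The following Python sketch does that with a binary search. The list of values is taken from the `PutRetentionPolicy` API reference as we understand it at the time of writing; verify it before use.

```python
import bisect

# retentionInDays values accepted by the CloudWatch Logs PutRetentionPolicy API,
# as listed in its API reference at the time of writing; verify before use.
VALID_RETENTION_DAYS = [
    1, 3, 5, 7, 14, 30, 60, 90, 120, 150, 180, 365, 400, 545,
    731, 1096, 1827, 2192, 2557, 2922, 3288, 3653,
]


def retention_for(required_days):
    """Return the smallest allowed retention value covering required_days."""
    if required_days < 1:
        raise ValueError("required_days must be at least 1")
    i = bisect.bisect_left(VALID_RETENTION_DAYS, required_days)
    if i == len(VALID_RETENTION_DAYS):
        # Beyond roughly 10 years, export the logs to Amazon S3 instead.
        raise ValueError("requirement exceeds the maximum CloudWatch Logs retention")
    return VALID_RETENTION_DAYS[i]


print(retention_for(100), retention_for(366), retention_for(3653))  # 120 400 3653
```

The result could then be applied with boto3’s `logs` client, for example `put_retention_policy(logGroupName=..., retentionInDays=retention_for(100))`.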

You can use AWS Lambda with Amazon EventBridge to create an export task that sends logs from CloudWatch to Amazon S3, triggered by an event rule, an event pattern, or a schedule, for long-term storage and other use cases.

Figure 10 shows how EventBridge is configured to trigger the Lambda function periodically.

Figure 10: EventBridge scheduler for daily export of CloudWatch logs
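A minimal sketch of such a Lambda function in Python follows, assuming an EventBridge schedule (for example, `rate(1 day)`) that invokes it once a day. It exports the previous UTC day of a log group with the CloudWatch Logs `create_export_task` call. The environment variable names, task name, and S3 prefix are hypothetical, and the destination bucket needs a bucket policy that allows CloudWatch Logs to write to it.

```python
import os
from datetime import datetime, timedelta, timezone


def previous_day_window(now):
    """Return (from_ms, to_ms) for the previous UTC calendar day."""
    end = now.astimezone(timezone.utc).replace(hour=0, minute=0, second=0, microsecond=0)
    start = end - timedelta(days=1)
    return int(start.timestamp() * 1000), int(end.timestamp() * 1000)


def build_export_request(log_group, bucket, now):
    """Build keyword arguments for the CloudWatch Logs CreateExportTask call."""
    from_ms, to_ms = previous_day_window(now)
    day = datetime.fromtimestamp(from_ms / 1000, tz=timezone.utc)
    return {
        "taskName": f"nfw-export-{day:%Y-%m-%d}",
        "logGroupName": log_group,
        "fromTime": from_ms,  # milliseconds since the epoch
        "to": to_ms,          # milliseconds since the epoch
        "destination": bucket,
        "destinationPrefix": f"network-firewall/{day:%Y/%m/%d}",
    }


def lambda_handler(event, context):
    # Imported here so the helpers above can be exercised without the AWS SDK.
    import boto3

    request = build_export_request(
        os.environ["LOG_GROUP_NAME"],  # hypothetical environment variable
        os.environ["EXPORT_BUCKET"],   # hypothetical environment variable
        datetime.now(timezone.utc),
    )
    response = boto3.client("logs").create_export_task(**request)
    return {"taskId": response["taskId"]}
```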

Analytics and reporting

CloudWatch Logs Insights offers a rich query language that you can use to perform complex searches and aggregations on the Network Firewall log data stored in your log groups, as shown in Figure 11.

You can add the query results to a CloudWatch dashboard for visualization and operational decision-making. This helps you quickly identify patterns, anomalies, and trends in the log data and create alarms for proactive monitoring and corrective action.

Figure 11: Network Firewall logs ingested into CloudWatch and analyzed through CloudWatch Logs Insights
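As an illustration, the following Python sketch runs a Logs Insights query that counts the most frequent alert signatures, using boto3’s `start_query` and `get_query_results` calls. Logs Insights discovers fields in JSON log events, so nested fields can be referenced with dot notation; the names `event.event_type` and `event.alert.signature` are assumptions based on the alert log layout, so check them against your own log group.

```python
import time

# Top 10 alert signatures; dotted field names assume the alert log JSON layout.
TOP_ALERTS_QUERY = """
filter event.event_type = "alert"
| stats count(*) as hits by event.alert.signature
| sort hits desc
| limit 10
"""

FINAL_STATUSES = {"Complete", "Failed", "Cancelled", "Timeout"}


def rows_to_dicts(results):
    """Convert GetQueryResults rows (lists of {"field", "value"}) to dicts."""
    return [{cell["field"]: cell["value"] for cell in row} for row in results]


def run_query(log_group, start_s, end_s, poll_seconds=1.0):
    """Run TOP_ALERTS_QUERY over [start_s, end_s] (epoch seconds) and return rows."""
    import boto3  # imported lazily so rows_to_dicts can be used without the SDK

    logs = boto3.client("logs")
    query_id = logs.start_query(
        logGroupName=log_group,
        startTime=start_s,
        endTime=end_s,
        queryString=TOP_ALERTS_QUERY,
    )["queryId"]
    while True:
        response = logs.get_query_results(queryId=query_id)
        if response["status"] in FINAL_STATUSES:
            break
        time.sleep(poll_seconds)
    return rows_to_dicts(response["results"])
```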

Amazon Kinesis Data Firehose

For this destination option, Network Firewall sends logs to Amazon Kinesis Data Firehose. From there, you can choose the destination for your logs, including Amazon S3, Amazon Redshift, Amazon OpenSearch Service, and an HTTP endpoint that’s owned by you or your third-party service providers. The most common approach for this option is to deliver logs to OpenSearch, where you can index log data, visualize, and analyze using dashboards as shown in Figure 12.

The blog post How to analyze AWS Network Firewall logs using Amazon OpenSearch Service explains in detail how to build network analytics and visualizations with OpenSearch. Here, we discuss only some cost considerations of this pattern.

Figure 12: Architecture diagram showing AWS Network Firewall logs going to OpenSearch

Cost considerations

When you use Kinesis Data Firehose as a log destination, you’re charged for CloudWatch Logs vended log delivery and for Kinesis Data Firehose ingestion. Ingestion pricing is tiered and billed per GB ingested in 5 KB increments; see Amazon Kinesis Data Firehose Pricing, under Vended Logs as source. There are no additional Kinesis Data Firehose charges for delivery unless you use optional features.
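Billing in 5 KB increments means that small records count as more data than they contain. The following Python sketch rounds each record up to the next increment before summing; it assumes one increment is 5,120 bytes, and the record sizes are hypothetical.

```python
INCREMENT_BYTES = 5 * 1024  # assumed size of one 5 KB billing increment


def billable_bytes(record_sizes):
    """Round each record up to a whole number of 5 KB increments and sum."""
    return sum(
        -(-size // INCREMENT_BYTES) * INCREMENT_BYTES  # ceiling division
        for size in record_sizes
    )


# Hypothetical record sizes in bytes.
sizes = [1_000, 5_120, 6_000]
print(sum(sizes), billable_bytes(sizes))  # 12120 20480
```

Here 12,120 bytes of actual data are billed as 20,480 bytes.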

For 15 TB of log data, the cost of CloudWatch delivery and Kinesis Data Firehose ingestion is approximately $5400 monthly in the us-east-1 Region.

The cost for Amazon OpenSearch Service is based on three dimensions:

- Instance hours, which are the number of hours that an instance is available to you for use

- The amount of storage you request

- The amount of data transferred in and out of OpenSearch Service
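The three dimensions add up to a monthly estimate, as the following Python sketch shows. All rates here are placeholders for illustration only, not AWS prices; look up the instance type, storage, and data transfer rates for your Region on the OpenSearch Service pricing page.

```python
from dataclasses import dataclass


@dataclass
class OpenSearchUsage:
    instance_count: int
    hours_per_instance: float  # hours each instance is available in the month
    storage_gb: float          # storage requested for the domain
    transfer_gb: float         # billable data transferred in and out


@dataclass
class OpenSearchRates:
    # Placeholder rates for illustration only; NOT actual AWS prices.
    per_instance_hour: float
    per_storage_gb_month: float
    per_transfer_gb: float


def monthly_opensearch_cost(usage, rates):
    """Sum the three cost dimensions: instance hours, storage, and data transfer."""
    instance_cost = usage.instance_count * usage.hours_per_instance * rates.per_instance_hour
    storage_cost = usage.storage_gb * rates.per_storage_gb_month
    transfer_cost = usage.transfer_gb * rates.per_transfer_gb
    return instance_cost + storage_cost + transfer_cost


usage = OpenSearchUsage(instance_count=3, hours_per_instance=720, storage_gb=1_000, transfer_gb=0)
rates = OpenSearchRates(per_instance_hour=0.5, per_storage_gb_month=0.125, per_transfer_gb=0.0)
print(monthly_opensearch_cost(usage, rates))  # 1205.0 with these placeholder values
```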