Post Syndicated from Igor Alekseev original https://aws.amazon.com/blogs/big-data/build-a-serverless-streaming-pipeline-with-amazon-msk-serverless-amazon-msk-connect-and-mongodb-atlas/

This post was cowritten with Babu Srinivasan and Robert Walters from MongoDB.

Amazon Managed Streaming for Apache Kafka (Amazon MSK) is a fully managed, highly available Apache Kafka service. Amazon MSK makes it easy to ingest and process streaming data in real time and to use that data within the AWS ecosystem. With Amazon MSK Serverless, you can automatically provision and manage required resources to provide on-demand streaming capacity and storage for your applications.

Amazon MSK also supports integration of data sources such as MongoDB Atlas via Amazon MSK Connect. MSK Connect allows serverless integration of MongoDB data with Amazon MSK using the MongoDB Connector for Apache Kafka.

MongoDB Atlas Serverless provides database services that dynamically scale up and down with data size and throughput, and the cost scales accordingly. It’s best suited for applications with variable demand that need to be managed with minimal configuration. It provides high performance and reliability with automated upgrade, encryption, security, metrics, and backup features built in with the MongoDB Atlas infrastructure.

MSK Serverless is a type of cluster for Amazon MSK. Just like MongoDB Atlas Serverless, MSK Serverless automatically provisions and scales compute and storage resources. You can now create end-to-end serverless workflows. You can build a serverless streaming pipeline with serverless ingestion using MSK Serverless and serverless storage using MongoDB Atlas. In addition, MSK Connect now supports private DNS hostnames. This allows Serverless MSK instances to connect to Serverless MongoDB clusters via AWS PrivateLink, providing you with secure connectivity between platforms.

If you’re interested in using a non-serverless cluster, refer to Integrating MongoDB with Amazon Managed Streaming for Apache Kafka (MSK).

This post demonstrates how to implement a serverless streaming pipeline with MSK Serverless, MSK Connect, and MongoDB Atlas.

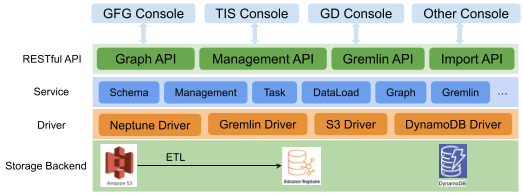

Solution overview

The following diagram illustrates our solution architecture.

The data flow starts with an Amazon Elastic Compute Cloud (Amazon EC2) client instance that writes records to an MSK topic. As data arrives, an instance of the MongoDB Connector for Apache Kafka writes the data to a collection in the MongoDB Atlas Serverless cluster. For secure connectivity between the two platforms, an AWS PrivateLink connection is created between the MongoDB Atlas cluster and the VPC containing the MSK instance.

This post walks you through the following steps:

- Create the serverless MSK cluster.

- Create the MongoDB Atlas Serverless cluster.

- Configure the MSK plugin.

- Create the EC2 client.

- Configure an MSK topic.

- Configure the MongoDB Connector for Apache Kafka as a sink.

Configure the serverless MSK cluster

To create a serverless MSK cluster, complete the following steps:

- On the Amazon MSK console, choose Clusters in the navigation pane.

- Choose Create cluster.

- For Creation method, select Custom create.

- For Cluster name, enter MongoDBMSKCluster.

- For Cluster type, select Serverless.

- Choose Next.

- On the Networking page, specify your VPC, Availability Zones, and corresponding subnets.

- Note the Availability Zones and subnets to use later.

- Choose Next.

- Choose Create cluster.

When the cluster is available, its status becomes Active.
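If you prefer the command line to the console, the same cluster can be sketched with the AWS CLI. This is a minimal sketch, not the procedure used in this post: the subnet and security group IDs are placeholders, the file name `serverless-cluster.json` and the `CREATE_CLUSTER` guard variable are assumptions made for illustration, and the AWS CLI must already be configured with suitable credentials.

```shell
# Describe the serverless cluster: at least two subnets in different
# Availability Zones, with IAM client authentication enabled.
# All IDs below are placeholders (assumptions); replace them with your own.
cat > serverless-cluster.json <<'EOF'
{
  "VpcConfigs": [
    {
      "SubnetIds": ["subnet-EXAMPLE1", "subnet-EXAMPLE2"],
      "SecurityGroupIds": ["sg-EXAMPLE"]
    }
  ],
  "ClientAuthentication": {
    "Sasl": { "Iam": { "Enabled": true } }
  }
}
EOF

# Only call AWS when explicitly asked to, so the sketch is safe to paste.
if [ "${CREATE_CLUSTER:-no}" = "yes" ]; then
  aws kafka create-cluster-v2 \
    --cluster-name MongoDBMSKCluster \
    --serverless file://serverless-cluster.json
else
  echo "Set CREATE_CLUSTER=yes to create the cluster"
fi
```

Whichever route you take, keep a note of the subnets, because the MongoDB Atlas private endpoint must use the same VPC and subnets.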

Create the MongoDB Atlas Serverless cluster

To create a MongoDB Atlas cluster, follow the Getting Started with Atlas tutorial. Note that for the purposes of this post, you need to create a serverless instance.

After the cluster is created, configure an AWS private endpoint with the following steps:

- On the Security menu, choose Network Access.

- On the Private Endpoint tab, choose Serverless Instance.

- Choose Create new endpoint.

- For Serverless Instance, choose the instance you just created.

- Choose Confirm.

- Provide your VPC endpoint configuration and choose Next.

- When creating the AWS PrivateLink resource, make sure you specify the exact same VPC and subnets that you used earlier when creating the networking configuration for the serverless MSK instance.

- Choose Next.

- Follow the instructions on the Finalize page, then choose Confirm after your VPC endpoint is created.

Upon success, the new private endpoint will show up in the list, as shown in the following screenshot.

Configure the MSK plugin

Next, we create a custom plugin in Amazon MSK using the MongoDB Connector for Apache Kafka. The connector needs to be uploaded to an Amazon Simple Storage Service (Amazon S3) bucket before you can create the plugin. To download the MongoDB Connector for Apache Kafka, refer to Download a Connector JAR File.

- On the Amazon MSK console, choose Customized plugins in the navigation pane.

- Choose Create custom plugin.

- For S3 URI, enter the S3 location of the downloaded connector.

- Choose Create custom plugin.

Configure an EC2 client

Next, let’s configure an EC2 instance. We use this instance to create the topic and insert data into the topic. For instructions, refer to the section Configure an EC2 client in the post Integrating MongoDB with Amazon Managed Streaming for Apache Kafka (MSK).

Create a topic on the MSK cluster

To create a Kafka topic, we need to install the Kafka CLI first.

- On the client EC2 instance, first install Java:

sudo yum install java-1.8.0

- Next, run the following command to download Apache Kafka:

wget https://archive.apache.org/dist/kafka/2.6.2/kafka_2.12-2.6.2.tgz

- Unpack the tar file using the following command:

tar -xzf kafka_2.12-2.6.2.tgz

The distribution of Kafka includes a bin folder with tools that can be used to manage topics.

- Go to the kafka_2.12-2.6.2 directory and issue the following command to create a Kafka topic on the serverless MSK cluster:

bin/kafka-topics.sh --create --topic sandbox_sync2 --bootstrap-server <BOOTSTRAP SERVER> --command-config=bin/client.properties --partitions 2

You can copy the bootstrap server endpoint on the View Client Information page for your serverless MSK cluster.

You can configure IAM authentication by following these instructions.
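As a rough sketch of what the `bin/client.properties` file referenced in the topic command might contain for IAM authentication, the following writes the four client settings documented for Amazon MSK IAM access control. The `KAFKA_DIR` variable is an assumption introduced here; point it at the unpacked Kafka directory. The sketch also assumes the Amazon MSK IAM authentication library JAR has already been placed on the Kafka client classpath (for example, in the `libs` directory), which these settings depend on.

```shell
# KAFKA_DIR is a hypothetical variable; it defaults to the current directory.
KAFKA_DIR="${KAFKA_DIR:-$PWD}"
mkdir -p "$KAFKA_DIR/bin"

# Client settings for IAM authentication against Amazon MSK.
cat > "$KAFKA_DIR/bin/client.properties" <<'EOF'
security.protocol=SASL_SSL
sasl.mechanism=AWS_MSK_IAM
sasl.jaas.config=software.amazon.msk.auth.iam.IAMLoginModule required;
sasl.client.callback.handler.class=software.amazon.msk.auth.iam.IAMClientCallbackHandler
EOF

echo "Wrote $KAFKA_DIR/bin/client.properties"
```

The EC2 instance also needs an IAM role that permits connecting to the cluster and creating and writing to topics; that policy is not shown here.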

Configure the sink connector

Now, let’s configure a sink connector to send the data to the MongoDB Atlas Serverless instance.

- On the Amazon MSK console, choose Connectors in the navigation pane.

- Choose Create connector.

- Select the plugin you created earlier.

- Choose Next.

- Select the serverless MSK instance that you created earlier.

- Enter your connection configuration as the following code:

Make sure that the connection to the MongoDB Atlas Serverless instance is through AWS PrivateLink. For more information, refer to Connecting Applications Securely to a MongoDB Atlas Data Plane with AWS PrivateLink.

- In the Access Permissions section, create an AWS Identity and Access Management (IAM) role with the required trust policy.

- Choose Next.

- Specify Amazon CloudWatch Logs as your log delivery option.

- Complete your connector.

When the connector status changes to Active, the pipeline is ready.
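For the connection configuration and trust policy steps above, a minimal sketch might look like the following. It is illustrative rather than the exact configuration used in this post: the values in angle brackets are placeholders you must supply, the file names are assumptions, and `tasks.max` and the converter choices are reasonable defaults for JSON messages, not requirements. The topic name matches the `sandbox_sync2` topic created earlier.

```shell
# Connector configuration to paste into the MSK Connect console.
# Angle-bracket values are placeholders (assumptions), not real settings.
cat > mongodb-sink.properties <<'EOF'
connector.class=com.mongodb.kafka.connect.MongoSinkConnector
tasks.max=1
topics=sandbox_sync2
connection.uri=<MongoDB Atlas connection string>
database=<database name>
collection=<collection name>
key.converter=org.apache.kafka.connect.storage.StringConverter
value.converter=org.apache.kafka.connect.json.JsonConverter
value.converter.schemas.enable=false
EOF

# Trust policy that lets MSK Connect assume the connector's IAM role.
cat > msk-connect-trust-policy.json <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "kafkaconnect.amazonaws.com" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF

echo "Wrote mongodb-sink.properties and msk-connect-trust-policy.json"
```

For the AWS PrivateLink requirement, use the private endpoint connection string from the Atlas UI as the `connection.uri` value rather than the standard one.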

Insert data into the MSK topic

On your EC2 client, insert data into the MSK topic using the kafka-console-producer as follows:
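A minimal sketch of such a producer run, assuming it is executed from the Kafka directory, that `bin/client.properties` holds the IAM client settings, and that the bootstrap endpoint is exported in a `BOOTSTRAP_SERVER` environment variable (a name chosen here for illustration). The sample records and their field names are made up for the example.

```shell
# Create a few sample JSON records, one per line (illustrative data only).
cat > sample_records.json <<'EOF'
{"device":"sensor-1","reading":21.5}
{"device":"sensor-2","reading":19.8}
{"device":"sensor-3","reading":22.1}
EOF

# Send the records only when a bootstrap server has been provided.
if [ -n "${BOOTSTRAP_SERVER:-}" ]; then
  bin/kafka-console-producer.sh \
    --bootstrap-server "$BOOTSTRAP_SERVER" \
    --producer.config bin/client.properties \
    --topic sandbox_sync2 < sample_records.json
else
  echo "BOOTSTRAP_SERVER is not set; skipping send"
fi
```

Each line of the file becomes one Kafka message, which the sink connector then writes as one document to the target collection.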

To verify that data successfully flows from the Kafka topic to the serverless MongoDB cluster, we use the MongoDB Atlas UI.

If you run into any issues, be sure to check the log files. In this example, we used CloudWatch to read the events that were generated from Amazon MSK and the MongoDB Connector for Apache Kafka.

Clean up

To avoid incurring future charges, clean up the resources you created. First, delete the MSK cluster, connector, and EC2 instance:

- On the Amazon MSK console, choose Clusters in the navigation pane.

- Select your cluster and on the Actions menu, choose Delete.

- Choose Connectors in the navigation pane.

- Select your connector and choose Delete.

- Choose Customized plugins in the navigation pane.

- Select your plugin and choose Delete.

- On the Amazon EC2 console, choose Instances in the navigation pane.

- Choose the instance you created.

- Choose Instance state, then choose Terminate instance.

- On the Amazon VPC console, choose Endpoints in the navigation pane.

- Select the endpoint you created and on the Actions menu, choose Delete VPC endpoints.

Now you can delete the Atlas cluster and AWS PrivateLink:

- Log in to the Atlas cluster console.

- Navigate to the serverless cluster to be deleted.

- On the options drop-down menu, choose Terminate.

- Navigate to the Network Access section.

- Choose the private endpoint.

- Select the serverless instance.

- On the options drop-down menu, choose Terminate.

Summary

In this post, we showed you how to build a serverless streaming ingestion pipeline using MSK Serverless and MongoDB Atlas Serverless. With MSK Serverless, you can automatically provision and manage required resources on an as-needed basis. We used the MongoDB Connector for Apache Kafka deployed on MSK Connect to integrate the two services, and used an EC2 client to send sample data to the MSK topic. MSK Connect now supports private DNS hostnames, enabling you to use private domain names between the services. In this post, the connector used the default DNS servers of the VPC to resolve the Availability Zone-specific private DNS name. This AWS PrivateLink configuration allowed secure and private connectivity between the MSK Serverless instance and the MongoDB Atlas Serverless instance.

To continue your learning, check out the following resources:

- MongoDB Kafka Connector

- Amazon MSK Serverless

- Getting started using Amazon MSK

- Getting started using MSK Connect

About the Authors

Igor Alekseev is a Senior Partner Solution Architect at AWS in the Data and Analytics domain. In his role, Igor works with strategic partners, helping them build complex, AWS-optimized architectures. Prior to joining AWS, as a Data/Solution Architect, he implemented many projects in the Big Data domain, including several data lakes in the Hadoop ecosystem. As a Data Engineer, he was involved in applying AI/ML to fraud detection and office automation.

Kiran Matty is a Principal Product Manager with Amazon Web Services (AWS) and works with the Amazon Managed Streaming for Apache Kafka (Amazon MSK) team based out of Palo Alto, California. He is passionate about building performant streaming and analytical services that help enterprises realize their critical use cases.

Babu Srinivasan is a Senior Partner Solutions Architect at MongoDB. In his current role, he is working with AWS to build the technical integrations and reference architectures for the AWS and MongoDB solutions. He has more than two decades of experience in Database and Cloud technologies. He is passionate about providing technical solutions to customers working with multiple Global System Integrators (GSIs) across multiple geographies.

Robert Walters is currently a Senior Product Manager at MongoDB. Previous to MongoDB, Rob spent 17 years at Microsoft working in various roles, including program management on the SQL Server team, consulting, and technical pre-sales. Rob has co-authored three patents for technologies used within SQL Server and was the lead author of several technical books on SQL Server. Rob is currently an active blogger on MongoDB Blogs.

Jeremy Ber has been working in the telemetry data space for the past 7 years as a Software Engineer, Machine Learning Engineer, and most recently a Data Engineer. In the past, Jeremy has supported and built systems that stream in terabytes of data per day, and process complex machine learning algorithms in real time. At AWS, he is a Senior Streaming Specialist Solutions Architect supporting both Amazon MSK and Amazon Kinesis.

Pratik Patel is a Sr. Technical Account Manager and streaming analytics specialist. He works with AWS customers and provides ongoing support and technical guidance to help plan and build solutions using best practices, and proactively helps keep customers’ AWS environments operationally healthy.

Rajarshi Sarkar is a Software Development Engineer at Amazon EMR. He works on cutting-edge features of Amazon EMR and is also involved in open-source projects such as Apache Iceberg and Trino. In his spare time, he likes to travel, watch movies and hang out with friends.

Veena Vasudevan is a Senior Partner Solutions Architect and an Amazon EMR specialist at AWS focusing on Big Data and Analytics. She helps customers and partners build highly optimized, scalable, and secure solutions; modernize their architectures; and migrate their Big Data workloads to AWS.

Aish Gunasekar is a Specialist Solutions architect with a focus on Amazon OpenSearch Service. Her passion at AWS is to help customers design highly scalable architectures and help them in their cloud adoption journey. Outside of work, she enjoys hiking and baking.

Pavani Baddepudi is a senior product manager working in search services at AWS. Her interests include distributed systems, networking, and security.

Gonzalo Herreros is a Senior Big Data Architect on the AWS Glue team.

Michael Benattar is a Senior Software Engineer on the AWS Glue Studio team. He has led the design and implementation of the custom visual transform feature.

David Adamson is the head of WWSO Insights. He is leading the team on the journey to a data-driven organization that delivers insightful, actionable data products to WWSO, shared in partnership with the broader AWS organization. In his spare time, he likes to travel across the world and can be found in his back garden, weather permitting, exploring and photographing the night sky.

Yash Agrawal is an Analytics Lead at Amazon Web Services. Yash’s role is to define the analytics roadmap, develop standardized global dashboards, and deliver insightful analytics solutions for stakeholders across AWS.

Addis Crooks-Jones is a Sr. Manager of Business Intelligence Engineering at Amazon Web Services Finance, Analytics and Science Team (FAST). She is responsible for partnering with business leaders in the World Wide Specialist Organization to build a culture of data to support strategic initiatives. The technology solutions developed are used globally to drive decision making across AWS. When not thinking about new plans involving data, she likes to be on adventures big and small involving food, art, and all the fun people in her life.

Graham Gilvar is an Analytics Lead at Amazon Web Services. He builds and maintains centralized QuickSight dashboards, which enable stakeholders across all WWSO services to interlock and make data-driven decisions. In his free time, he enjoys walking his dog, golfing, bowling, and playing hockey.

Shilpa Koogegowda is the Sales Ops Analyst at Amazon Web Services and has been part of the WWSO Insights team for the last two years. Her role involves building standardized metrics, dashboards, and data products to provide data and insights to the customers.

Kinnar Kumar Sen is a Sr. Solutions Architect at Amazon Web Services (AWS) focusing on Flexible Compute. As a part of the EC2 Flexible Compute team, he works with customers to guide them to the most elastic and efficient compute options that are suitable for their workload running on AWS. Kinnar has more than 15 years of industry experience working in research, consultancy, engineering, and architecture.

Snigdha Sahi is a Senior Product Manager (Technical) with Amazon Web Services (AWS) Marketplace. She has diverse work experience across product management, product development and management consulting. A technology enthusiast, she loves brainstorming and building products that are intuitive and easy to use. In her spare time, she enjoys hiking, solving Sudoku and listening to music.

Vincent Larchet is a Principal Software Development Engineer on the AWS Marketplace team based in Vancouver, BC. Outside of work, he is a passionate wood worker and DIYer.