Post Syndicated from Michael Keane http://blog.cloudflare.com/author/michael-keane/ original https://blog.cloudflare.com/single-vendor-sase-announcement-2024

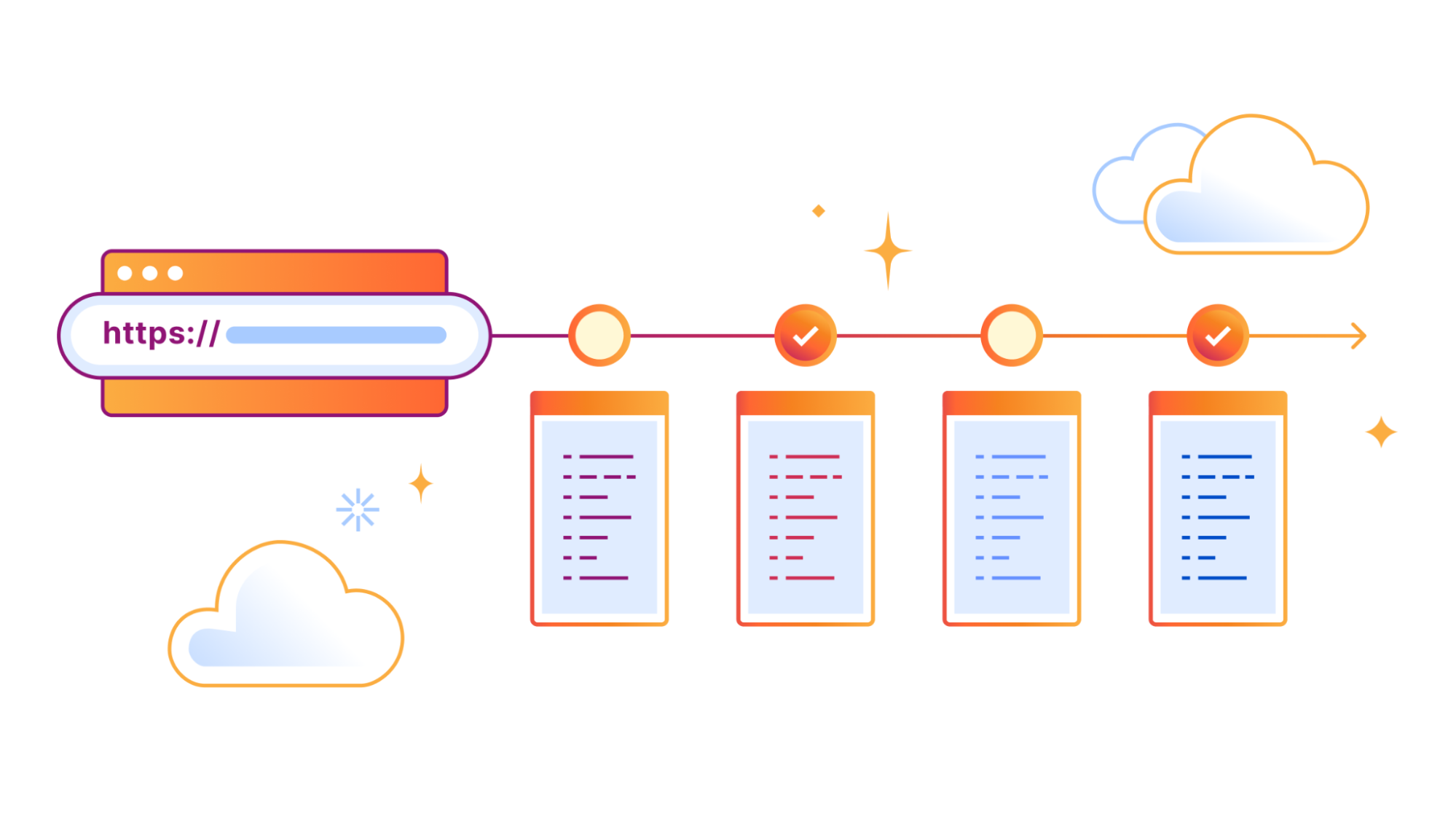

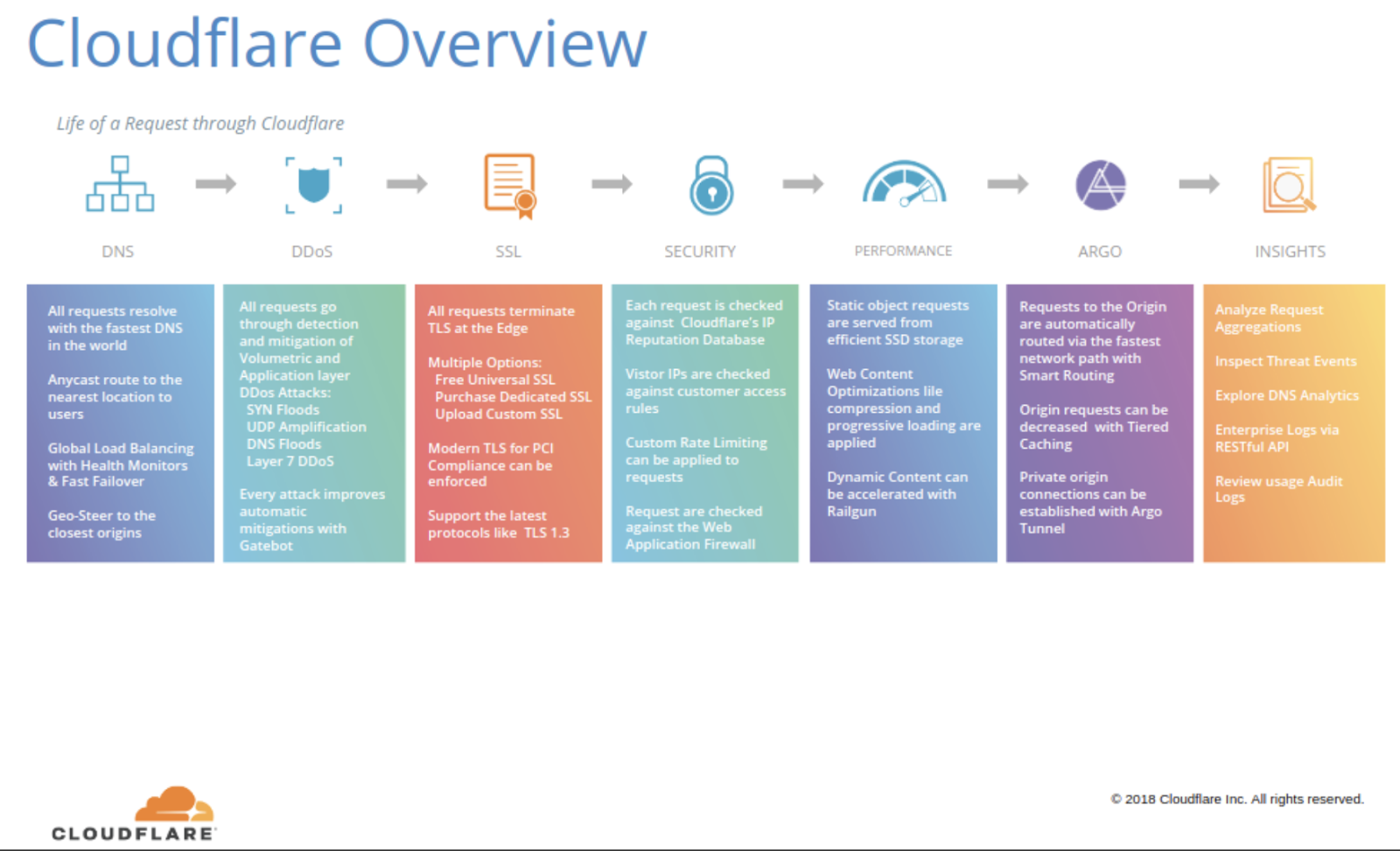

As more organizations progress toward adopting a SASE architecture, it has become clear that the traditional SASE market definition (SSE + SD-WAN) is not enough. It forces some teams to work with multiple vendors to address their specific needs, introducing performance and security tradeoffs. More worrisome, it draws attention to a checklist of services rather than to a vendor’s underlying architecture. Even the most advanced individual security services or traffic on-ramps don’t matter if organizations ultimately send their traffic through a fragmented, flawed network.

Single-vendor SASE is a critical trend to converge disparate security and networking technologies, yet enterprise “any-to-any connectivity” needs true network modernization for SASE to work for all teams. Over the past few years, Cloudflare has launched capabilities to help organizations modernize their networks as they navigate their short- and long-term roadmaps of SASE use cases. We’ve helped simplify SASE implementation, regardless of the team leading the initiative.

Announcing (even more!) flexible on-ramps for single-vendor SASE

Today, we are announcing a series of updates to our SASE platform, Cloudflare One, that further the promise of a single-vendor SASE architecture. Through these new capabilities, Cloudflare makes SASE networking more flexible and accessible for security teams, more efficient for traditional networking teams, and uniquely extends its reach to an underserved technical team in the larger SASE connectivity conversation: DevOps.

These platform updates include:

- Flexible on-ramps for site-to-site connectivity that enable both agent/proxy-based and appliance/routing-based implementations, simplifying SASE networking for both security and networking teams.

- New WAN-as-a-service (WANaaS) capabilities like high availability, application awareness, a virtual machine deployment option, and enhanced visibility and analytics that boost operational efficiency while reducing network costs through a “light branch, heavy cloud” approach.

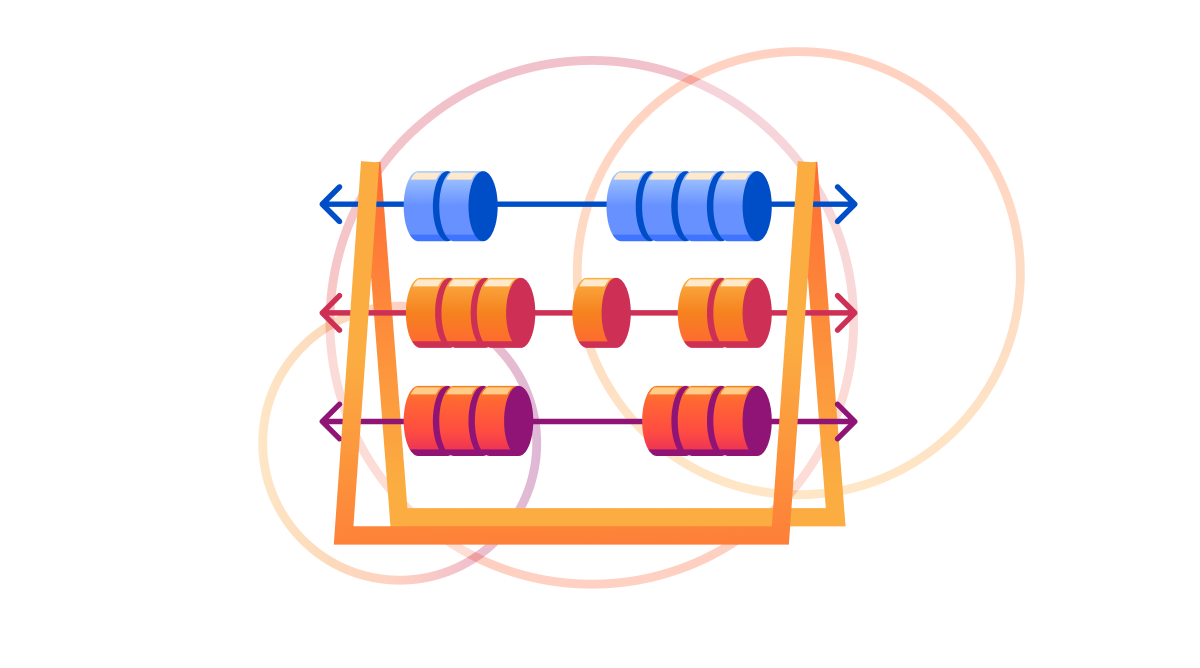

- Zero Trust connectivity for DevOps: mesh and peer-to-peer (P2P) secure networking capabilities that extend ZTNA to support service-to-service workflows and bidirectional traffic.

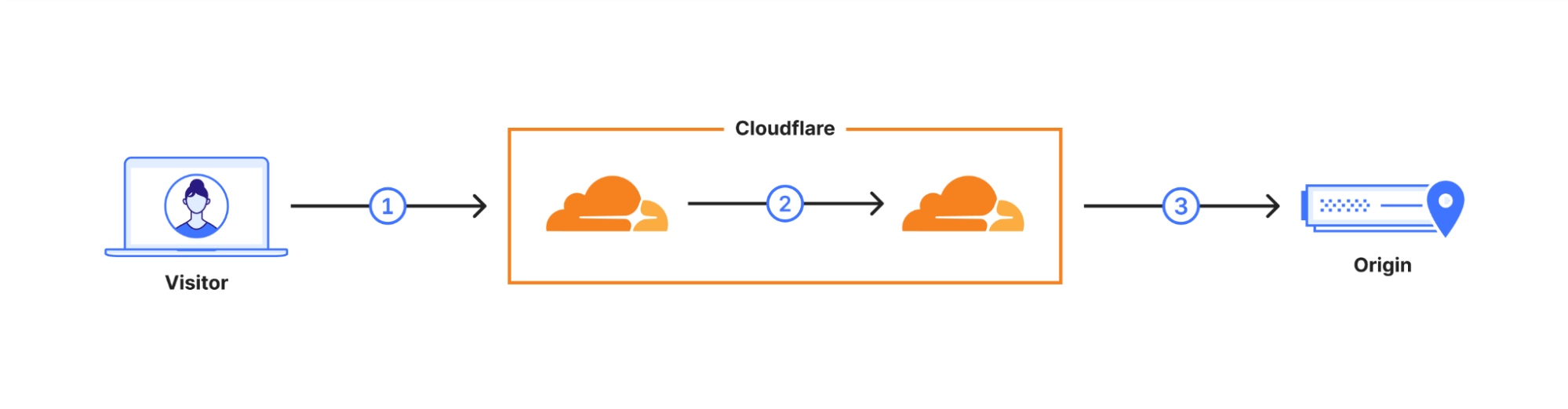

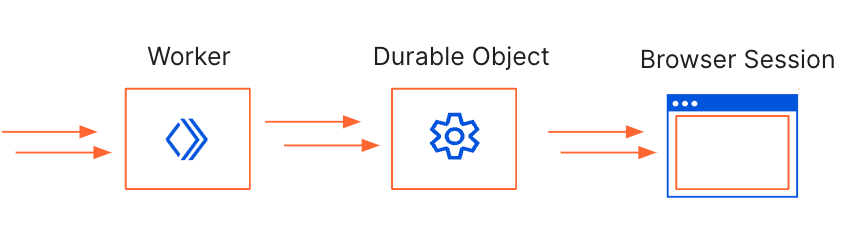

Cloudflare offers a wide range of SASE on- and off-ramps — including connectors for your WAN, applications, services, systems, devices, or any other internal network resources — to more easily route traffic to and from Cloudflare services. This helps organizations align with their best fit connectivity paradigm, based on existing environment, technical familiarity, and job role.

We recently dove into the Magic WAN Connector in a separate blog post and have explained how all our on-ramps fit together in our SASE reference architecture, including our new WARP Connector. This blog focuses on the main impact those technologies have for customers approaching SASE networking from different angles.

More flexible and accessible for security teams

The process of implementing a SASE architecture can challenge an organization’s status quo for internal responsibilities and collaboration across IT, security, and networking. Different teams own various security or networking technologies whose replacement cycles are not necessarily aligned, which can reduce the organization’s willingness to support particular projects.

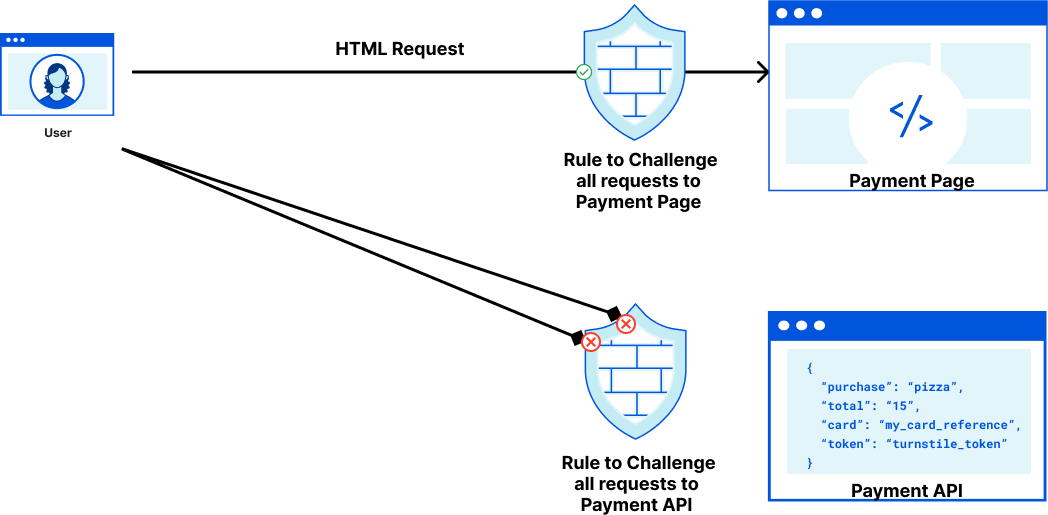

Security or IT practitioners need to be able to protect resources no matter where they reside. Sometimes a small connectivity change would help them more efficiently protect a given resource, but the task is outside their domain of control. Security teams don’t want to feel reliant on their networking teams in order to do their jobs, and yet they also don’t want to cause downstream trouble with existing network infrastructure. They need an easier way to connect subnets, for instance, without feeling held back by bureaucracy.

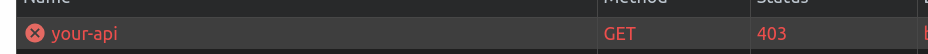

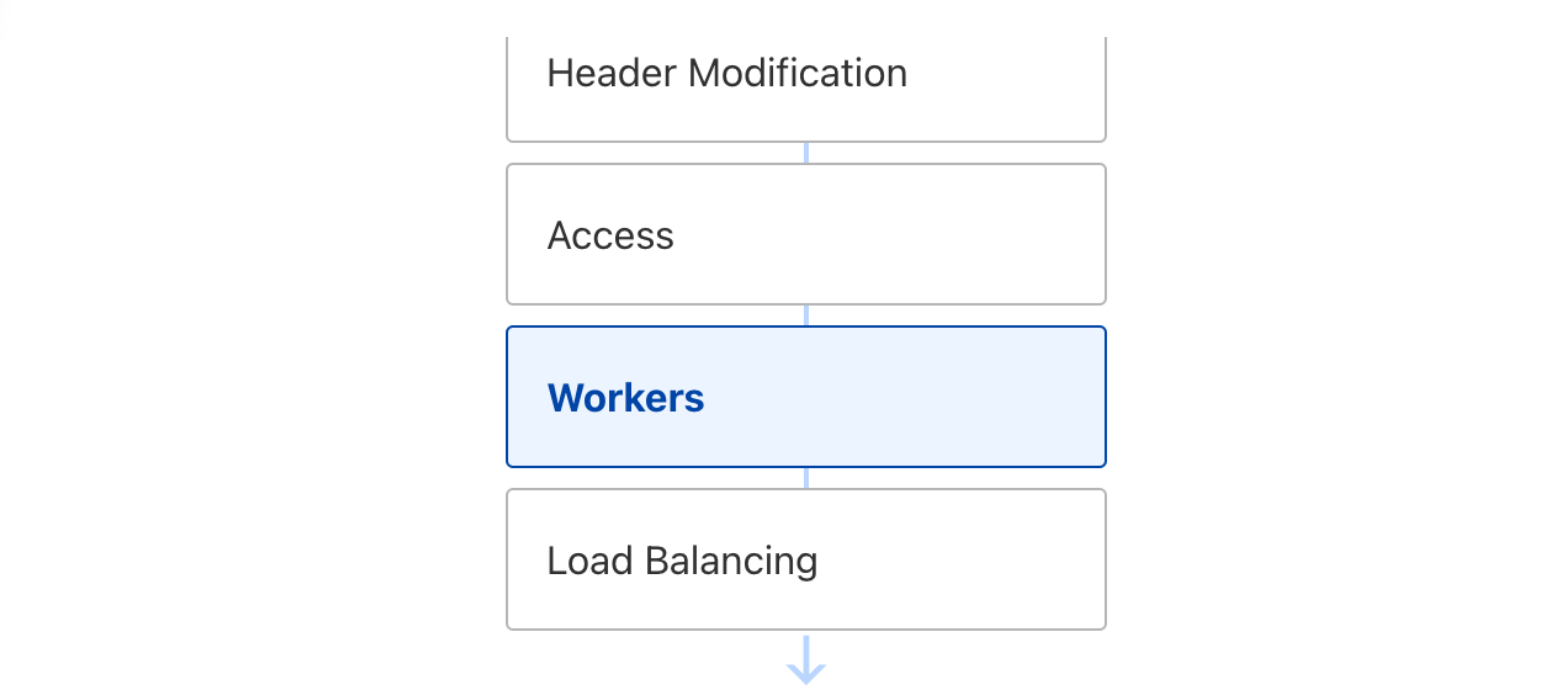

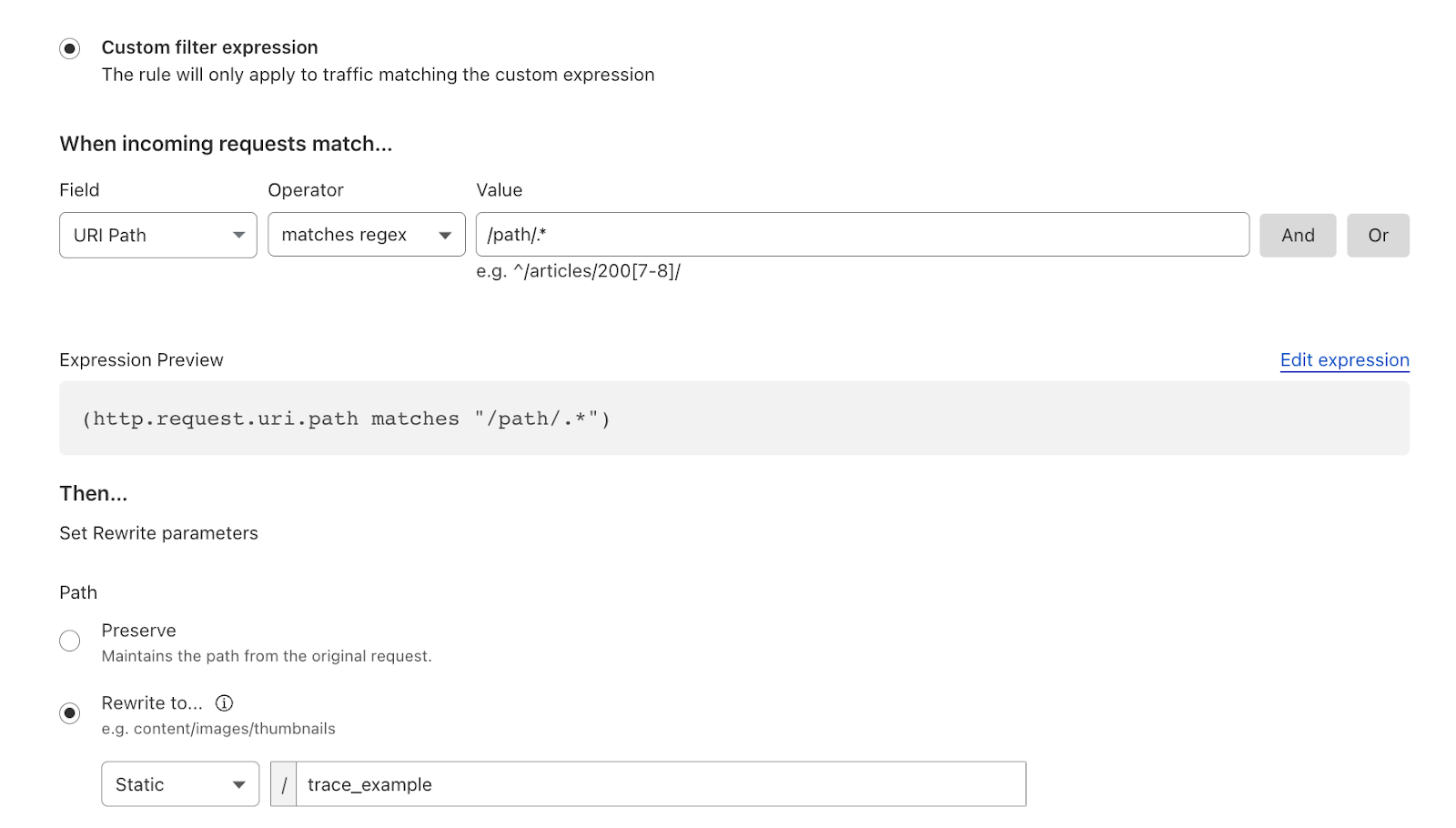

Agent/proxy-based site-to-site connectivity

To help push these security-led projects past the challenges associated with traditional siloes, Cloudflare offers both agent/proxy-based and appliance/routing-based implementations for site-to-site or subnet-to-subnet connectivity. This way, networking teams can pursue the traditional networking concepts with which they are familiar through our appliance/routing-based WANaaS — a modern architecture vs. legacy SD-WAN overlays. Simultaneously, security/IT teams can achieve connectivity through agent/proxy-based software connectors (like the WARP Connector) that may be more approachable to implement. This agent-based approach blurs the lines between industry norms for branch connectors and app connectors, bringing WAN and ZTNA technology closer together to help achieve least-privileged access everywhere.

Agent/proxy-based connectivity may be a complementary fit for a subset of an organization’s total network connectivity. These software-driven site-to-site use cases could include microsites with no router or firewall, or cases in which teams are unable to configure IPsec or GRE tunnels, such as tightly regulated managed networks or cloud environments like Kubernetes. Organizations can mix and match traffic on-ramps to fit their needs; all options can be used composably and concurrently.

Our agent/proxy-based approach to site-to-site connectivity uses the same underlying technology that helps security teams fully replace VPNs, supporting ZTNA for apps with server-initiated or bidirectional traffic. These include services such as Voice over Internet Protocol (VoIP) and Session Initiation Protocol (SIP) traffic, Microsoft’s System Center Configuration Manager (SCCM), Active Directory (AD) domain replication, and, as detailed later in this blog, DevOps workflows.

This new Cloudflare on-ramp enables site-to-site, bidirectional, and mesh networking connectivity without requiring changes to underlying network routing infrastructure, acting as a router for the subnet within the private network to on-ramp and off-ramp traffic through Cloudflare.

More efficient for networking teams

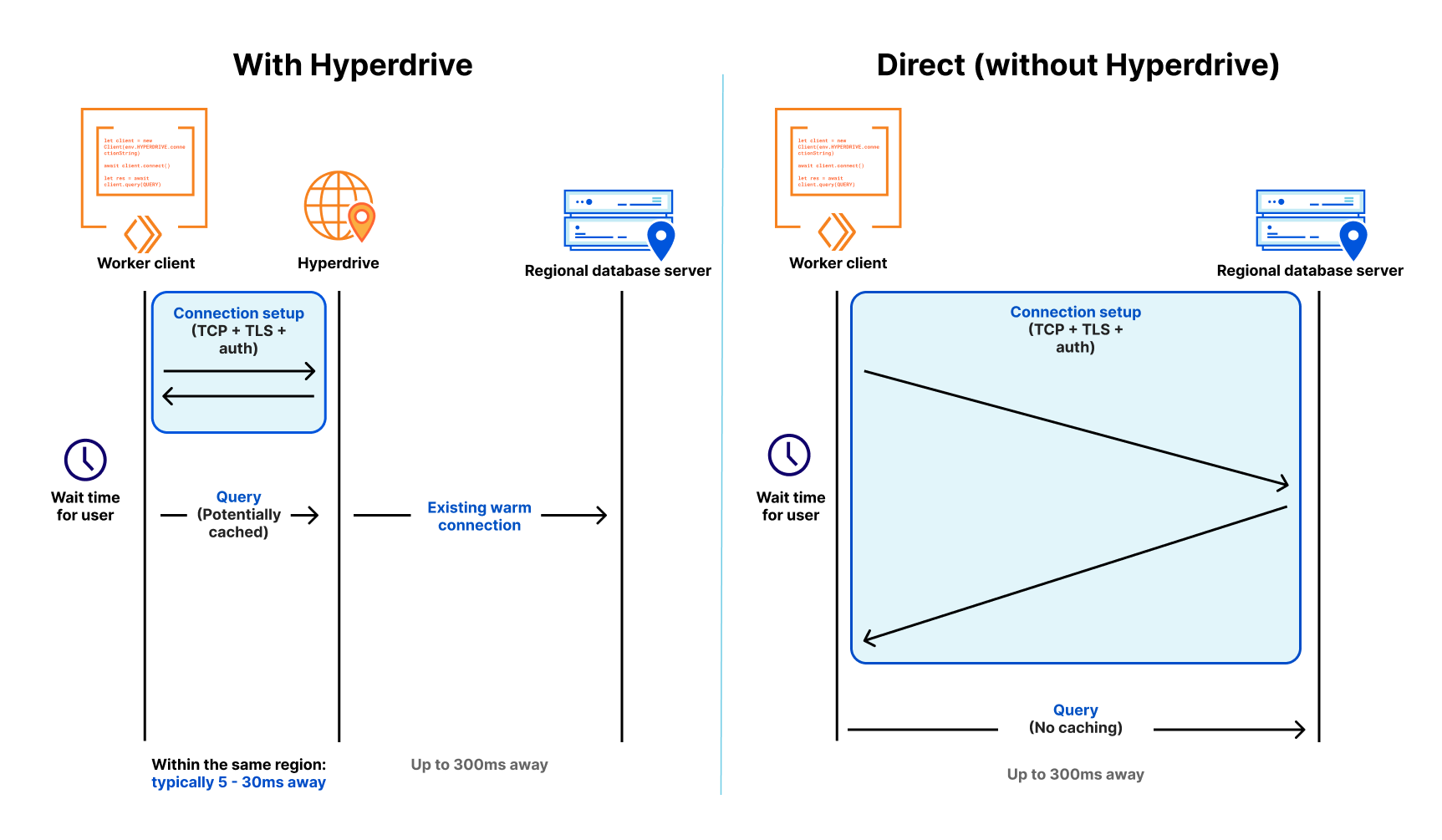

Meanwhile, for networking teams who prefer a network-layer appliance/routing-based implementation for site-to-site connectivity, the industry norms still force too many tradeoffs between security, performance, cost, and reliability. Many (if not most) large enterprises still rely on legacy forms of private connectivity such as MPLS. MPLS is generally considered expensive and inflexible, but it is highly reliable and has features such as quality of service (QoS) that are used for bandwidth management.

Commodity Internet connectivity is widely available in most parts of the inhabited world, but has a number of challenges that make it an imperfect replacement for MPLS. In many countries, high-speed Internet is fast and cheap, but this is not universally true. Speed and costs depend on the local infrastructure and the market for regional service providers. In general, broadband Internet is also not as reliable as MPLS. Outages and slowdowns are not unusual, and customers vary in how much disruption they can tolerate in frequency and duration. For businesses, outages and slowdowns are not tolerable. Disruptions to network service mean lost business, unhappy customers, lower productivity, and frustrated employees. Thus, despite the fact that a significant share of corporate traffic has shifted to the Internet anyway, many organizations face difficulty migrating away from MPLS.

SD-WAN introduced an alternative to MPLS that is transport neutral and improves networking stability over conventional broadband alone. However, it introduces new topology and security challenges. For example, many SD-WAN implementations can increase risk if they bypass inspection between branches. SD-WAN also has implementation-specific challenges, such as how to address scaling and the use and control (or, more precisely, the lack) of a middle mile. Thus, the promise of making a full cutover to Internet connectivity and eliminating MPLS remains unfulfilled for many organizations. These issues are often not apparent to customers at the time of purchase and require continuing market education.

Evolution of the enterprise WAN

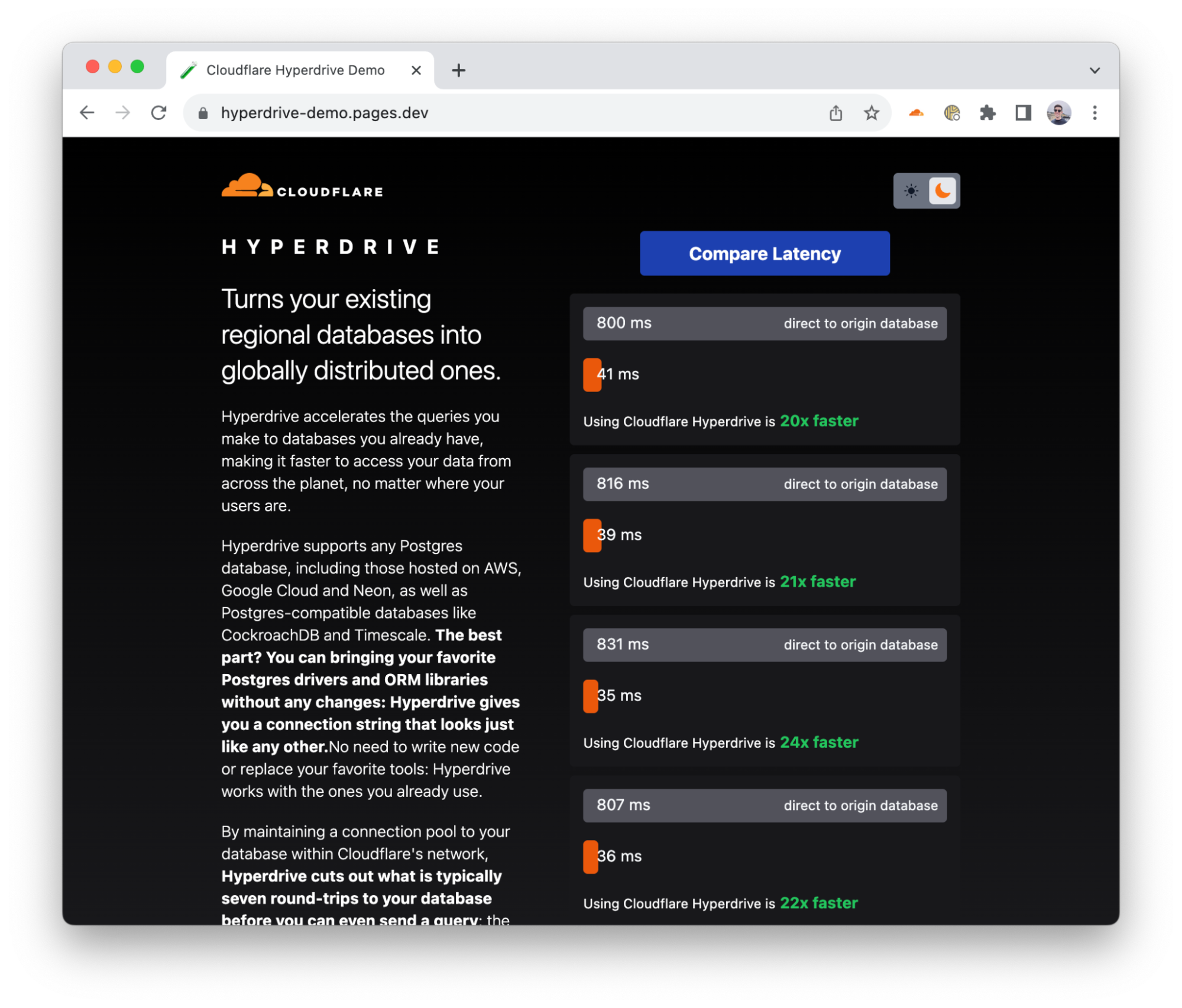

Cloudflare Magic WAN follows a different paradigm built from the ground up in Cloudflare’s connectivity cloud; it takes a “light branch, heavy cloud” approach to augment and eventually replace existing network architectures including MPLS circuits and SD-WAN overlays. While Magic WAN has similar cloud-native routing and configuration controls to what customers would expect from traditional SD-WAN, it is easier to deploy, manage, and consume. It scales with changing business requirements, with security built in. Customers like Solocal agree that the benefits of this architecture ultimately improve their total cost of ownership:

“Cloudflare’s Magic WAN Connector offers a centralized and automated management of network and security infrastructure, in an intuitive approach. As part of Cloudflare’s SASE platform, it provides a consistent and homogeneous single-vendor architecture, founded on market standards and best practices. Control over all data flows is ensured, and risks of breaches or security gaps are reduced. It is obvious to Solocal that it should provide us with significant savings, by reducing all costs related to acquiring, installing, maintaining, and upgrading our branch network appliances by up to 40%. A high-potential connectivity solution for our IT to modernize our network.”

– Maxime Lacour, Network Operations Manager, Solocal

This is quite different from the approaches of other single-vendor SASE providers, which have been trying to reconcile acquisitions that were built around fundamentally different design philosophies. These “stitched together” solutions lead to a non-converged experience due to their fragmented architectures, similar to what organizations might see if they were managing multiple separate vendors anyway. Consolidating the components of SASE with a vendor that has built a unified, integrated solution, versus piecing together different solutions for networking and security, significantly simplifies deployment and management by reducing complexity, bypassed security, and potential integration or connectivity challenges.

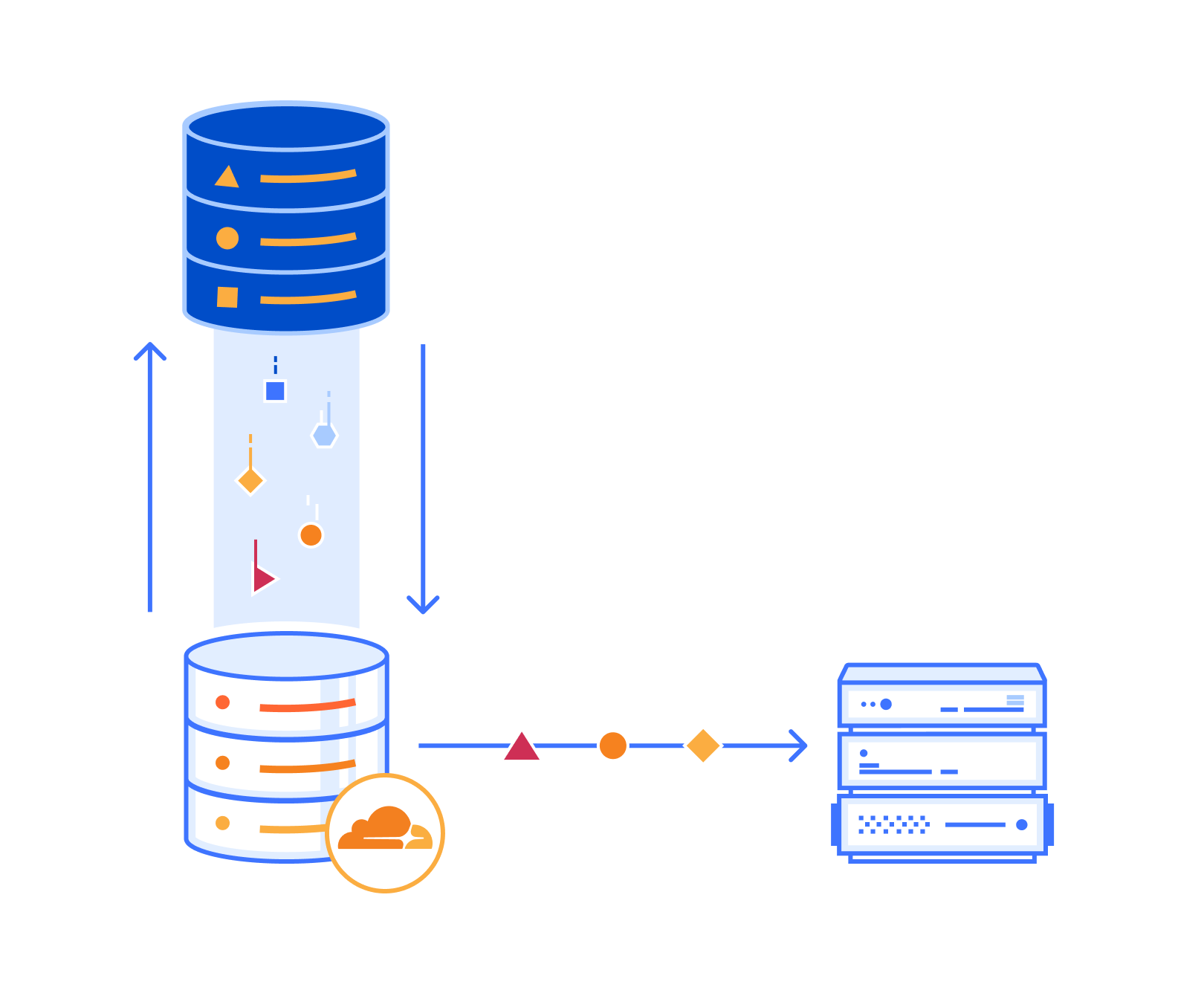

Magic WAN can connect to Cloudflare in three ways: automatically, through IPsec tunnels established by our Connector device; manually, through Anycast IPsec or GRE tunnels initiated on a customer’s edge router or firewall; or through Cloudflare Network Interconnect (CNI) at private peering locations or public cloud instances. It pushes beyond “integration” claims with SSE to truly converge security and networking functionality and help organizations more efficiently modernize their networks.

New Magic WAN Connector capabilities

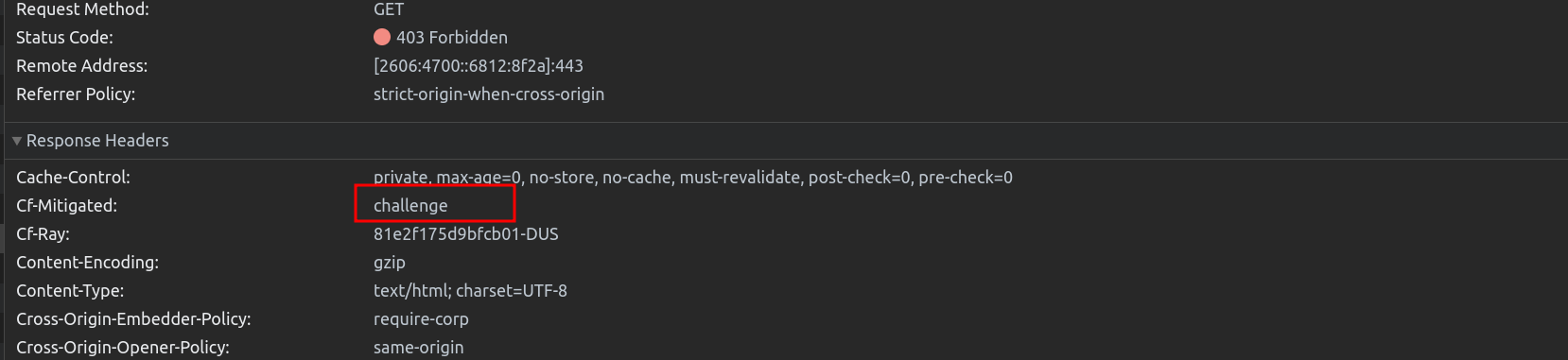

In October 2023, we announced the general availability of the Magic WAN Connector, a lightweight device that customers can drop into existing network environments for zero-touch connectivity to Cloudflare One, and ultimately use to replace other networking hardware such as legacy SD-WAN devices, routers, and firewalls. Today, we’re excited to announce new capabilities of the Magic WAN Connector including:

- High Availability (HA) configurations for critical environments: In enterprise deployments, organizations generally desire support for high availability to mitigate the risk of hardware failure. High availability uses a pair of Magic WAN Connectors (running as a VM or on a supported hardware device) that work in conjunction with one another to seamlessly resume operation if one device fails. Customers can manage HA configuration, like all other aspects of the Magic WAN Connector, from the unified Cloudflare One dashboard.

- Application awareness: One of the central differentiating features of SD-WAN vs. more traditional networking devices has been the ability to create traffic policies based on well-known applications, in addition to network-layer attributes like IP and port ranges. Application-aware policies provide easier management and more granularity over traffic flows. Cloudflare’s implementation of application awareness leverages the intelligence of our global network, using the same categorization/classification already shared across security tools like our Secure Web Gateway, so IT and security teams can expect consistent behavior across routing and inspection decisions – a capability not available in dual-vendor or stitched-together SASE solutions.

- Virtual machine deployment option: The Magic WAN Connector is now available as a virtual appliance software image that can be downloaded for immediate deployment on any supported virtualization platform or hypervisor. The virtual Magic WAN Connector has the same ultra-low-touch deployment model and centralized fleet management experience as the hardware appliance, and is offered to all Magic WAN customers at no additional cost.

- Enhanced visibility and analytics: The Magic WAN Connector features enhanced visibility into key metrics such as connectivity status, CPU utilization, memory consumption, and device temperature. These analytics are available via dashboard and API so operations teams can integrate the data into their NOCs.
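To make the application-awareness idea above more concrete, here is a minimal sketch of how a policy engine could match traffic on an application label in addition to network-layer attributes like IP and port ranges. It is an illustration only, not Cloudflare's implementation: the `Flow` and `Policy` shapes, the policy names, the actions, and the first-match-wins evaluation order are all assumptions made for the example.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional, Tuple


@dataclass(frozen=True)
class Flow:
    """A traffic flow as a policy engine might see it (hypothetical shape)."""
    dst_ip: str
    dst_port: int
    app: Optional[str] = None  # label from a traffic classifier, if any


@dataclass(frozen=True)
class Policy:
    """One rule; any field left as None acts as a wildcard."""
    name: str
    action: str
    app: Optional[str] = None
    dst_cidr: Optional[str] = None
    ports: Optional[Tuple[int, int]] = None  # inclusive port range

    def matches(self, flow: Flow) -> bool:
        if self.app is not None and flow.app != self.app:
            return False
        if self.dst_cidr is not None and (
            ip_address(flow.dst_ip) not in ip_network(self.dst_cidr)
        ):
            return False
        if self.ports is not None and not (
            self.ports[0] <= flow.dst_port <= self.ports[1]
        ):
            return False
        return True


def evaluate(policies, flow, default="allow"):
    """Return the action of the first matching policy (first match wins)."""
    for policy in policies:
        if policy.matches(flow):
            return policy.action
    return default


# Hypothetical policy set: one application-aware rule, two network-layer rules.
POLICIES = [
    Policy(name="prioritize-voip", action="prioritize", app="voip"),
    Policy(name="block-telnet", action="block", ports=(23, 23)),
    Policy(name="inspect-datacenter", action="inspect", dst_cidr="10.20.0.0/16"),
]
```

With these rules, `evaluate(POLICIES, Flow("203.0.113.5", 5060, app="voip"))` selects the application-aware rule regardless of destination address, while a flow with no application label falls through to the IP and port rules.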

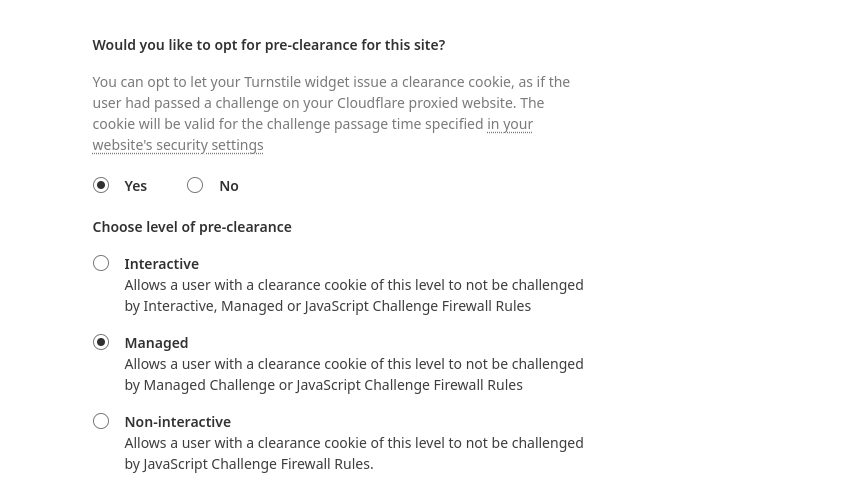

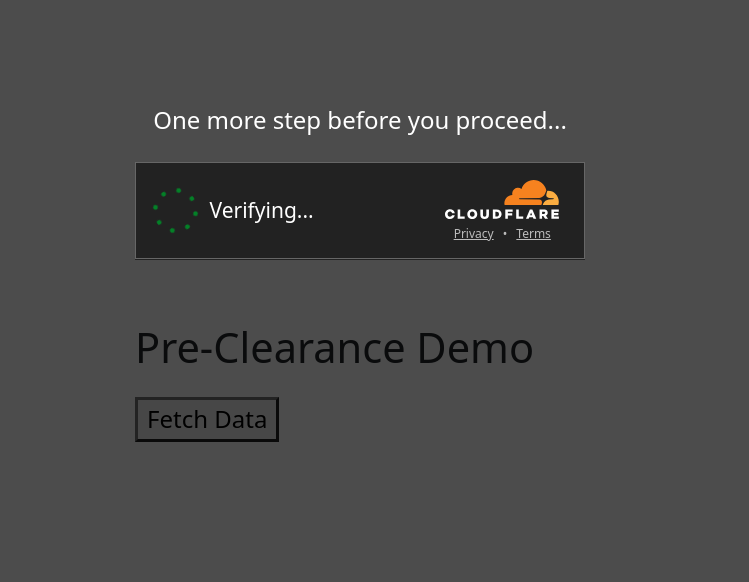

Extending SASE’s reach to DevOps

Complex continuous integration and continuous delivery (CI/CD) pipeline interaction is famous for being agile, so the connectivity and security supporting these workflows should match. DevOps teams too often rely on traditional VPNs for remote access to various development and operational tools. VPNs are cumbersome to manage, susceptible to exploitation through known or zero-day vulnerabilities, and use a legacy hub-and-spoke connectivity model that is too slow for modern workflows.

Of any employee group, developers are particularly capable of finding creative workarounds that decrease friction in their daily workflows, so all corporate security measures need to “just work,” without getting in their way. Ideally, all users and servers across build, staging, and production environments should be orchestrated through centralized, Zero Trust access controls, no matter what components and tools are used and no matter where they are located. Ad hoc policy changes should be accommodated, as well as temporary Zero Trust access for contractors or even emergency responders during a production server incident.

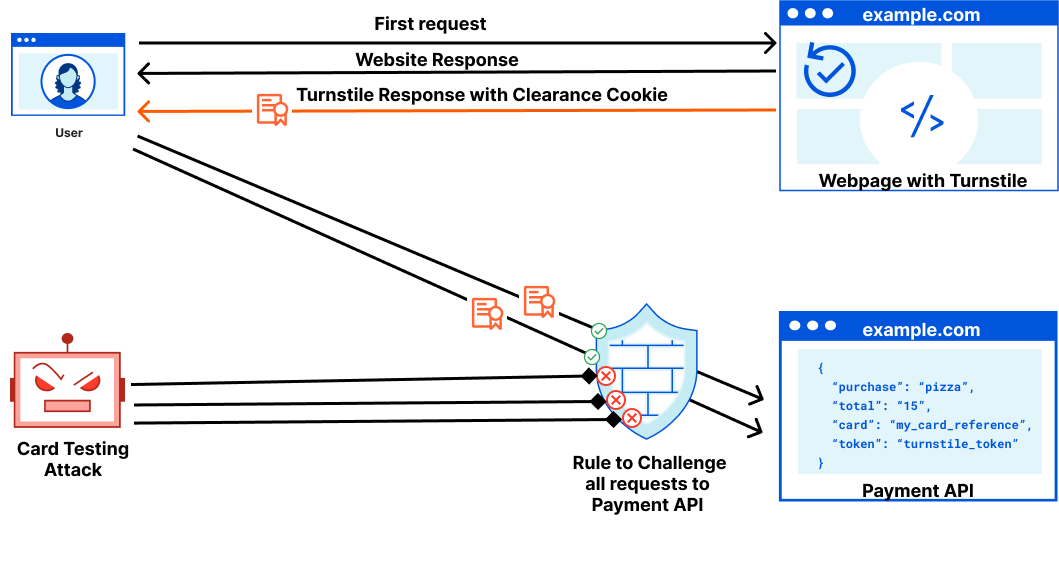

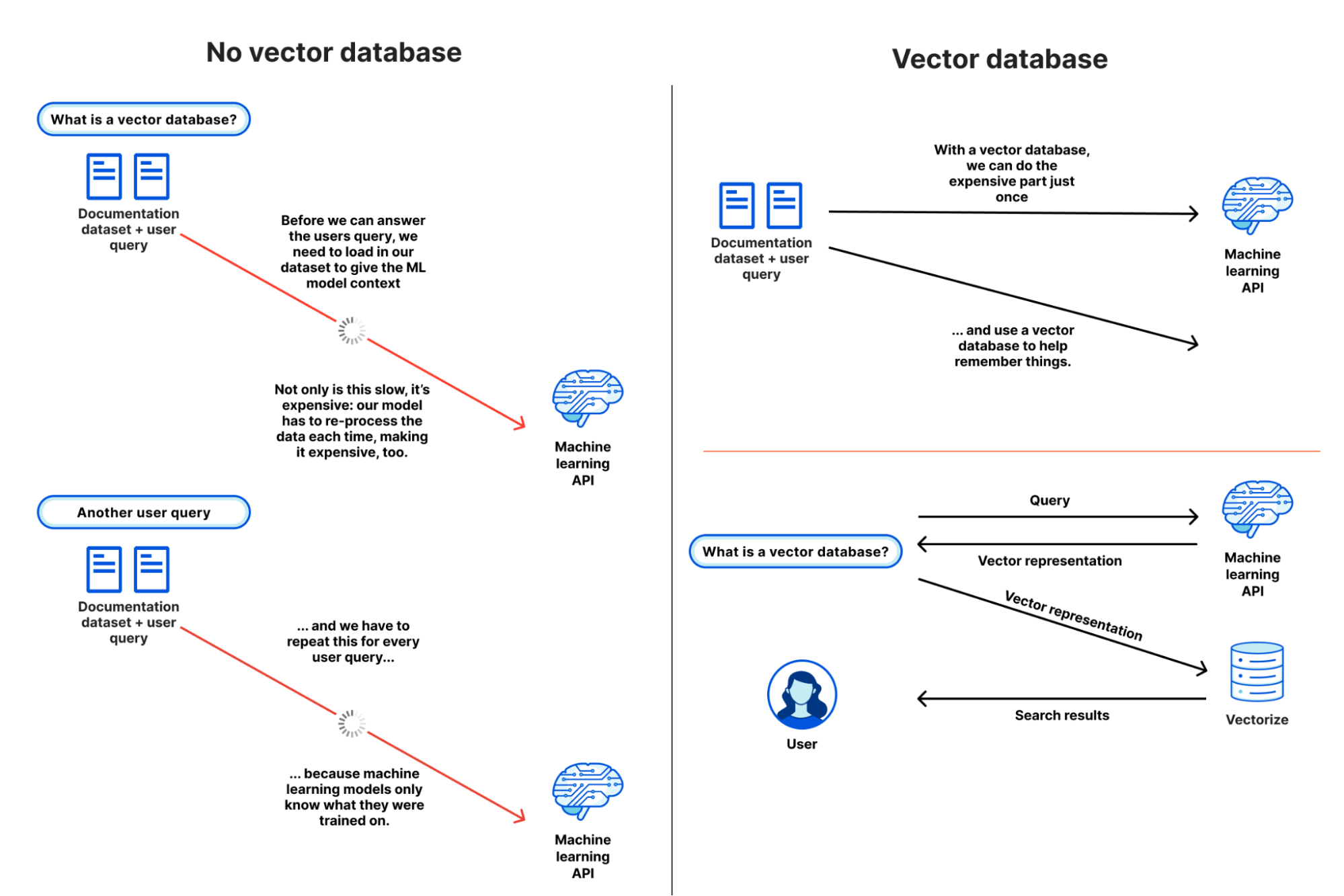

Zero Trust connectivity for DevOps

ZTNA works well as an industry paradigm for secure, least-privileged user-to-app access, but it should extend further to secure networking use cases that involve server-initiated or bidirectional traffic. This follows an emerging trend that imagines an overlay mesh connectivity model across clouds, VPCs, or network segments without a reliance on routers. For true any-to-any connectivity, customers need flexibility to cover all of their network connectivity and application access use cases. Not every SASE vendor’s network on-ramps can extend beyond client-initiated traffic without requiring network routing changes or making security tradeoffs, so generic “any-to-any connectivity” claims may not be what they initially seem.

Cloudflare extends the reach of ZTNA to ensure all user-to-app use cases are covered, plus mesh and P2P secure networking to make connectivity options as broad and flexible as possible. DevOps service-to-service workflows can run efficiently on the same platform that accomplishes ZTNA, VPN replacement, or enterprise-class SASE. Cloudflare acts as the connectivity “glue” across all DevOps users and resources, regardless of the flow of traffic at each step. This same technology, WARP Connector, enables admins to manage different private networks with overlapping IP ranges (such as VPC and RFC 1918 address space), support connectivity for server-initiated traffic and P2P apps (e.g., SCCM, AD, VoIP, and SIP traffic) over existing private networks, build P2P private networks (e.g., for CI/CD resource flows), and deterministically route traffic. Organizations can also automate management of their SASE platform with Cloudflare’s Terraform provider.
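Overlapping IP ranges are easy to run into when several private networks each draw from the same RFC 1918 space. As a rough illustration of the problem (not of how WARP Connector resolves it), the sketch below uses Python's standard `ipaddress` module to flag which networks in an inventory overlap. The site names and CIDRs are hypothetical.

```python
from ipaddress import ip_network
from itertools import combinations

# The three private IPv4 blocks reserved by RFC 1918.
RFC1918_BLOCKS = [
    ip_network(cidr)
    for cidr in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def is_rfc1918(cidr: str) -> bool:
    """True if the IPv4 network lies entirely inside an RFC 1918 block."""
    net = ip_network(cidr)
    return any(net.subnet_of(block) for block in RFC1918_BLOCKS)


def find_overlaps(sites):
    """Return pairs of site names (sorted by name) whose CIDRs overlap."""
    pairs = []
    for (name_a, cidr_a), (name_b, cidr_b) in combinations(sorted(sites.items()), 2):
        if ip_network(cidr_a).overlaps(ip_network(cidr_b)):
            pairs.append((name_a, name_b))
    return pairs


# Hypothetical inventory of private networks to be connected.
SITES = {
    "aws-vpc": "10.0.0.0/16",
    "branch-office": "10.0.1.0/24",
    "gcp-vpc": "172.16.0.0/20",
    "lab": "192.168.1.0/24",
}
```

Here `find_overlaps(SITES)` reports the `aws-vpc` and `branch-office` pair, since `10.0.1.0/24` falls inside `10.0.0.0/16`. A check like this is useful before connecting sites, because overlapping ranges cannot be told apart by destination address alone.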

The Cloudflare difference

Cloudflare’s single-vendor SASE platform, Cloudflare One, is built on our connectivity cloud — the next evolution of the public cloud, providing a unified, intelligent platform of programmable, composable services that enable connectivity between all networks (enterprise and Internet), clouds, apps, and users. Our connectivity cloud is flexible enough to make “any-to-any connectivity” a more approachable reality for organizations implementing a SASE architecture, accommodating deployment preferences alongside prescriptive guidance. Cloudflare is built to offer the breadth and depth needed to help organizations regain IT control through single-vendor SASE and beyond, while simplifying workflows for every team that contributes along the way.

Other SASE vendors designed their data centers for egress traffic to the Internet. They weren’t designed to handle or secure East-West traffic, providing neither middle mile nor security services for traffic passing from branch to HQ or branch to branch. Cloudflare’s middle mile global backbone supports security and networking for any-to-any connectivity, whether users are on-prem or remote, and whether apps are in the data center or in the cloud.

To learn more, read our reference architecture, “Evolving to a SASE architecture with Cloudflare,” or talk to a Cloudflare One expert.