Post Syndicated from Kristian Freeman original https://blog.cloudflare.com/building-black-friday-e-commerce-experiences-with-jamstack-and-cloudflare-workers/

The idea of serverless is to let developers focus on writing code rather than on operations, the hardest of which is scaling applications. Every year, Black Friday predictably sends a great deal of traffic through Cloudflare's network. As John wrote at the end of last year, Black Friday is the Internet's biggest online shopping day. In a past case study, we talked about how Cordial, a marketing automation platform, used Cloudflare Workers to reduce their API server latency and handle the busiest shopping day of the year without breaking a sweat.

The ability to handle immense scale is well-trodden territory for us on the Cloudflare blog, but scale is not always the first thing developers think about when building an application; developer experience is likely to come first. Developer experience is something Workers does just as well: through Wrangler and APIs like Workers KV, Workers is an awesome place to hack on new projects.

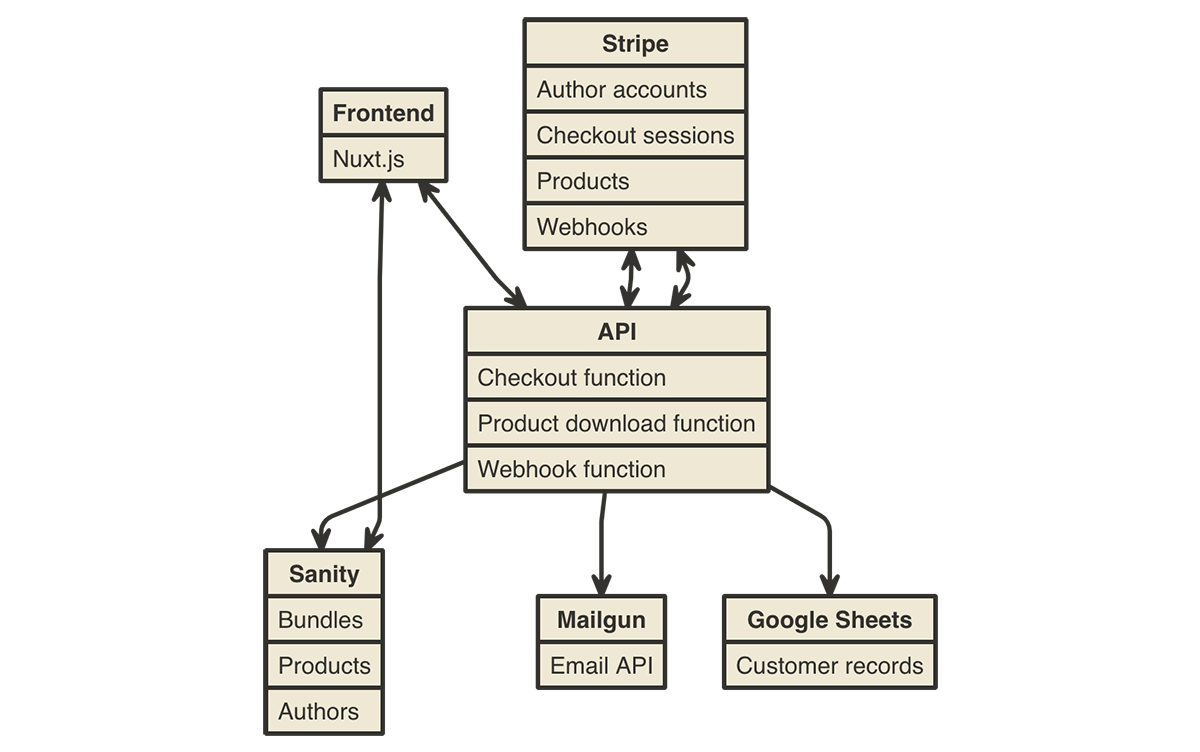

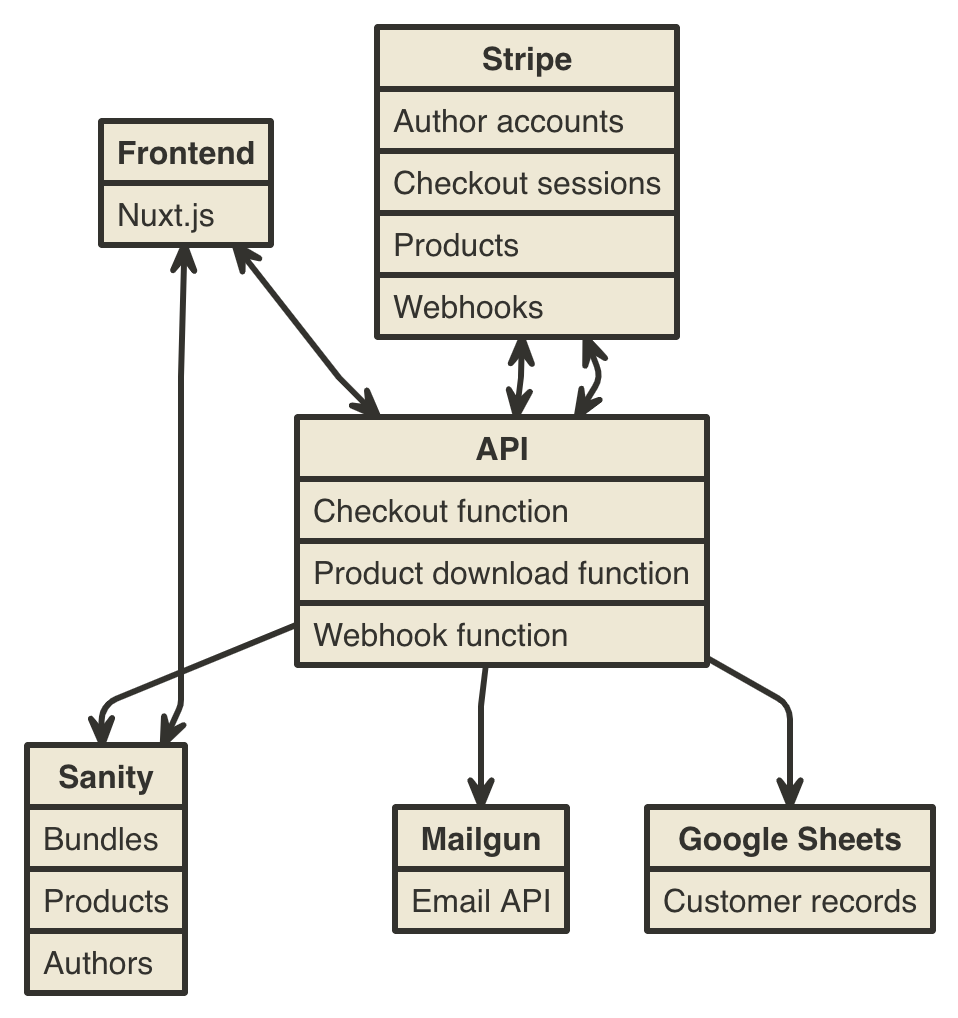

Over the past few weeks, I’ve been working on a sample open-source e-commerce app for selling software, educational products, and bundles. Inspired by Humble Bundle, it’s built entirely on Workers, and it integrates with first-class modern tooling: Stripe, an API for accepting payments (both from customers and to authors, as we’ll see later), and Sanity.io, a headless CMS for data management.

This kind of project is perfectly suited for Workers. We can lean into Workers as a static site hosting platform (via Workers Sites), API server, and webhook consumer, all within a single codebase, and deployed instantly around the world on Cloudflare’s network.

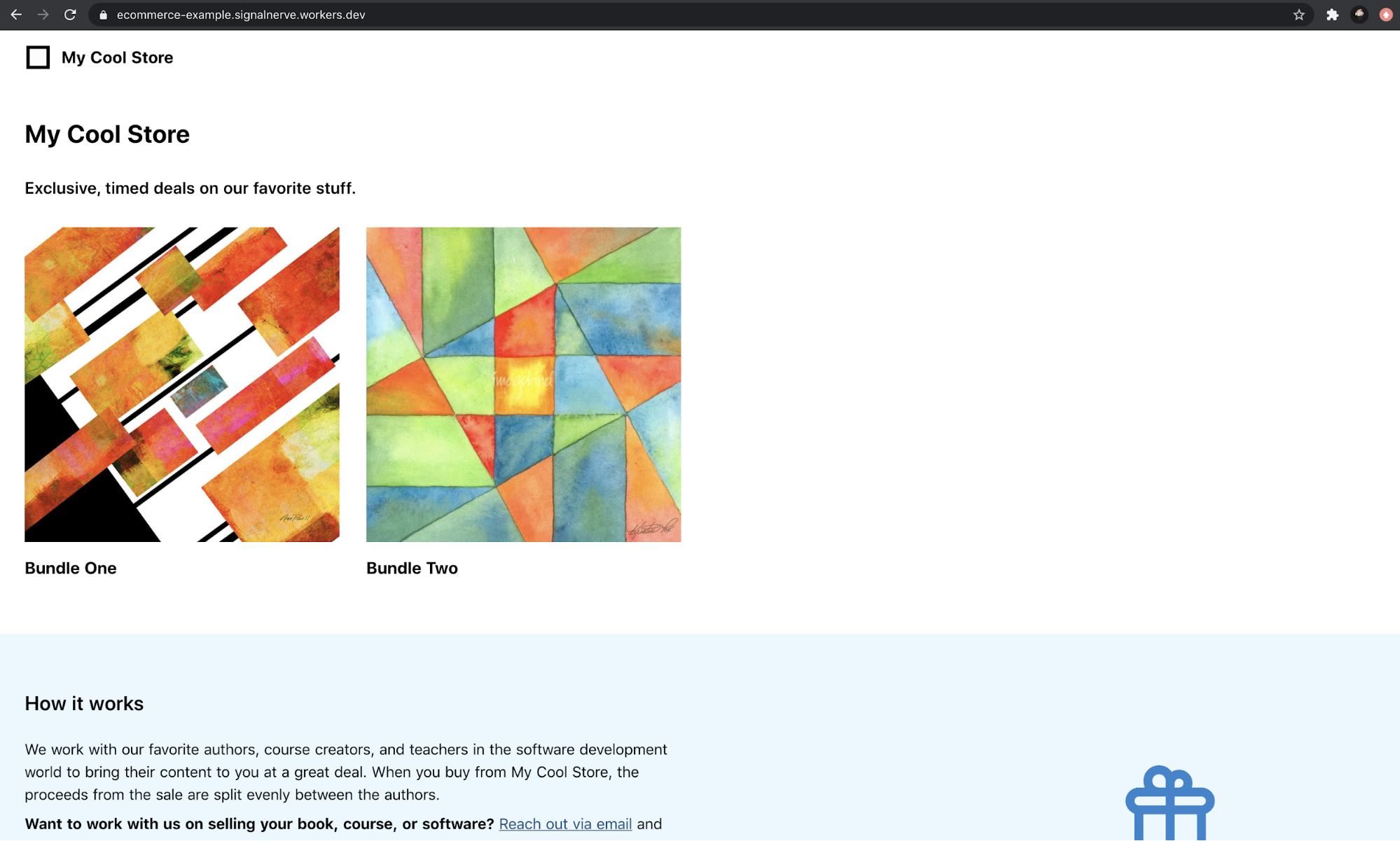

If you want to see a deployed version of this template, check out ecommerce-example.signalnerve.workers.dev.

In this blog post, I’ll dive deeper into the implementation details of the site, covering how Workers continues to excel as a JAMstack deployment platform. I’ll also cover some new territory in integrating Workers with Stripe. The project is open-source on GitHub, and I’m actively working on improving the documentation, so that you can take the codebase and build on it for your own e-commerce sites and use cases.

The frontend

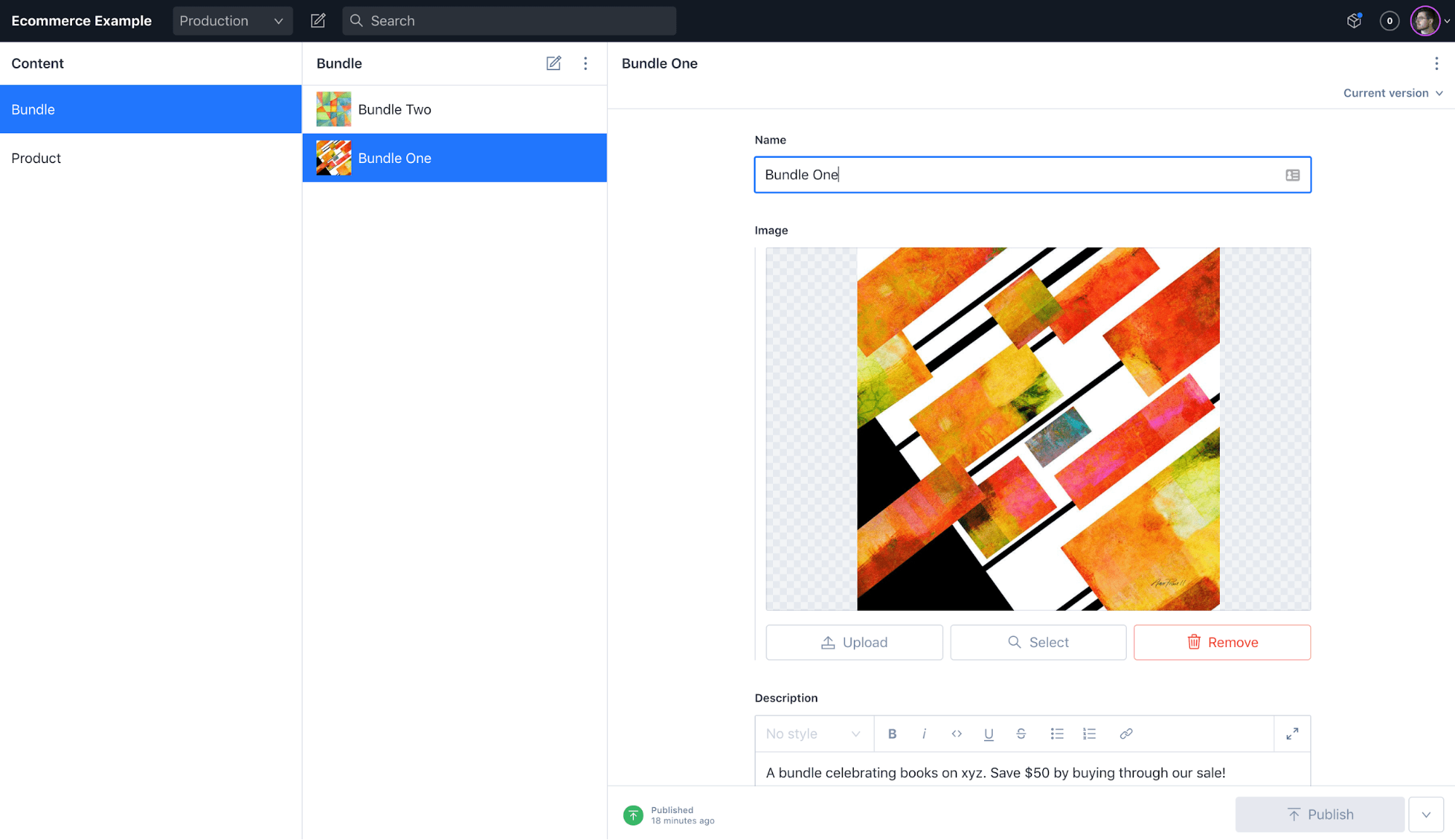

As I wrote last year, Workers continues to be an amazing platform for JAMstack apps. When I started building this template, I wanted to use some things I already knew — Sanity.io for managing data, and of course, Workers Sites for deploying — but some new tools as well.

Workers Sites is incredibly simple to use: just point it at a directory of static assets, and you’re good to go. With this project, I decided to try out Nuxt.js, a Vue-based static site generator, to power the frontend for the application.

The data representing the bundles (and the products inside of those bundles) is managed in Sanity.io, stored on Sanity.io’s own CDN, and retrieved client-side by the Nuxt.js application.
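As a minimal sketch of that client-side retrieval, the code below queries Sanity.io's HTTP query endpoint on its API CDN host (`apicdn.sanity.io`) with a GROQ query. The project ID, dataset, API version date, and schema names (`bundle`, `slug`, `products`) are all placeholders or assumptions. The actual Nuxt.js app may use Sanity's official client library instead.

```javascript
// Hypothetical project settings: replace with your own Sanity project ID and dataset
const SANITY_PROJECT_ID = 'your-project-id'
const SANITY_DATASET = 'production'
const SANITY_API_VERSION = 'v2021-10-21'

// Builds a URL for Sanity's HTTP query endpoint on the API CDN
function sanityQueryUrl(query, params = {}) {
  const url = new URL(
    `https://${SANITY_PROJECT_ID}.apicdn.sanity.io/${SANITY_API_VERSION}/data/query/${SANITY_DATASET}`
  )
  url.searchParams.set('query', query)
  // GROQ parameters are passed as $name=<JSON-encoded value>
  for (const [name, value] of Object.entries(params)) {
    url.searchParams.set(`$${name}`, JSON.stringify(value))
  }
  return url
}

// Fetches one bundle and its referenced products; the field names are assumed
async function fetchBundle(slug) {
  const query = `*[_type == "bundle" && slug.current == $slug][0]{
    title, "products": products[]->{ title }
  }`
  const response = await fetch(sanityQueryUrl(query, { slug }))
  // The query endpoint responds with { ms, query, result }
  const { result } = await response.json()
  return result
}
```

Passing the slug as a GROQ parameter rather than interpolating it into the query string keeps user-controlled values out of the query text.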

When a potential customer visits a bundle, they’ll see a list of products from Sanity.io, and a checkout button provided by Stripe.

Responding to new checkout sessions and purchases

Making API requests with Stripe’s Node SDK isn’t currently supported in Workers (check out the GitHub issue where we’re discussing a fix), but because the Stripe API is just REST underneath, we can easily make REST requests to it without the library.
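The checkout handler imports a `stripe` helper from `'../helpers'`, but the post does not show its source. The code below is a minimal sketch of what such a helper could look like. It assumes the Stripe secret key is exposed to the Worker as a global secret named `STRIPE_SECRET_KEY`; that name and the `toStripeForm` function are hypothetical. The helper form-encodes nested parameters into the bracket notation Stripe's REST API expects (for example `line_items[0][price]`) and sends them with `fetch`.

```javascript
// Hypothetical helper: flattens nested params into Stripe's bracket notation,
// e.g. { line_items: [{ price: 'price_123' }] } -> line_items[0][price]=price_123
function toStripeForm(params, prefix = '', out = new URLSearchParams()) {
  for (const [key, value] of Object.entries(params)) {
    if (value === undefined || value === null) continue
    const name = prefix ? `${prefix}[${key}]` : key
    if (typeof value === 'object') {
      // Arrays and objects both recurse; Object.entries on an array yields index keys
      toStripeForm(value, name, out)
    } else {
      out.append(name, String(value))
    }
  }
  return out
}

// Sketch of a stripe(path, params, method) helper matching the call in the checkout handler.
// Assumes a Worker secret bound as the global STRIPE_SECRET_KEY (hypothetical name).
async function stripe(path, params = {}, method = 'GET') {
  const url = new URL(`https://api.stripe.com/v1${path}`)
  const init = {
    method,
    headers: { Authorization: `Bearer ${globalThis.STRIPE_SECRET_KEY}` },
  }
  const form = toStripeForm(params)
  if (method === 'GET') {
    url.search = form.toString()
  } else {
    init.headers['Content-Type'] = 'application/x-www-form-urlencoded'
    init.body = form.toString()
  }
  const response = await fetch(url, init)
  const data = await response.json()
  if (!response.ok) {
    throw new Error(data.error?.message ?? `Stripe request failed: ${response.status}`)
  }
  return data
}
```

The request body is plain `application/x-www-form-urlencoded` text sent with `fetch`, so nothing in the sketch depends on Node-specific modules.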

When a user clicks the checkout button on a bundle page, the frontend makes a request to the API running on Cloudflare Workers, which securely creates a new Stripe Checkout session for the user to check out with.

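```javascript
// `stripe` wraps Stripe's REST API and `json` builds a JSON Response;
// both come from the project's helpers module
import { json, stripe } from '../helpers'

export default async (request) => {
  // The bundle page sends the Stripe Price ID for the selected bundle
  const body = await request.json()
  const { price_id } = body

  // Create a Checkout Session for a single unit of that price
  const session = await stripe('/checkout/sessions', {
    payment_method_types: ['card'],
    line_items: [{
      price: price_id,
      quantity: 1,
    }],
    mode: 'payment'
  }, 'POST')

  // Return the session ID so the frontend can send the customer to Stripe Checkout
  return json({ session_id: session.id })
}
```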

This is where Workers excels as a JAMstack platform. Yes, it can do static site hosting, but with just a few extra lines of routing code, I can deploy a highly scalable API right alongside my Nuxt.js application.
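The routing code is not shown in the post. The sketch below assumes service-worker syntax and a simple method-plus-path lookup table. The route paths, the placeholder handlers, and `serveStatic` are hypothetical. In a real Workers Sites project, the static fallback would typically delegate to `getAssetFromKV` from `@cloudflare/kv-asset-handler`.

```javascript
// Hypothetical route table; real handlers would be modules like the checkout handler above
const routes = {
  'POST /api/checkout': async (request) => new Response('checkout'),
  'POST /api/webhook': async (request) => new Response('webhook'),
}

// Placeholder for static asset serving (in Workers Sites, typically getAssetFromKV)
async function serveStatic(request) {
  return new Response('static asset', { status: 200 })
}

async function handleRequest(request) {
  const { pathname } = new URL(request.url)
  const handler = routes[`${request.method} ${pathname}`]
  if (handler) return handler(request)
  // Unknown API paths get a 404 instead of falling through to the static site
  if (pathname.startsWith('/api/')) return new Response('Not found', { status: 404 })
  return serveStatic(request)
}

// In a service-worker-syntax Worker, this would be wired up with:
// addEventListener('fetch', (event) => event.respondWith(handleRequest(event.request)))
```

Keeping the API under a distinct prefix like `/api/` lets the same Worker serve the Nuxt.js build for every other path without the two colliding.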

Webhooks and working with external services

This idea extends throughout the rest of the checkout process. When a customer is successfully charged for their purchase, Stripe sends a webhook back to Cloudflare Workers. In order to complete the transaction on our end, the Workers application:

- Validates the incoming data from Stripe to ensure that it’s legitimate. This means that every incoming webhook request is explicitly validated using your Stripe account details, and can be confirmed to be valid before the function acts on it.

- Distributes payments to the authors using Stripe Connect. When a customer buys a bundle for $20, that $20 (minus Stripe fees) is distributed evenly among the authors in that bundle; all of this calculation and the associated transfer requests happen inside the Worker.

- Sends a unique download link to the customer. Using Workers KV, the Worker creates a unique token tied to the customer’s email, which can be used to retrieve the content the customer purchased. This integration uses Mailgun to construct an email and send it entirely over REST APIs.

By the time the purchase is complete, the Workers serverless API will have interfaced with four distinct APIs, persisting records, sending emails, and handling and distributing payments to everyone involved in the e-commerce transaction. With Workers, this all happens in a single codebase, with low latency and a superb developer experience. The entire API is type-checked and validated before it ever gets shipped to production, thanks to our TypeScript template.
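The even split from the Stripe Connect step has one subtlety. Stripe amounts are integers in the smallest currency unit (cents for USD), so a net amount will not always divide evenly. The sketch below hands out any leftover cents one at a time, so the transfers always add up to the total.

It rests on several assumptions:

- The net amount after Stripe fees is already known, for example from the `net` field of the charge's balance transaction.
- Each author record carries a connected account ID in a field named `stripeAccountId`.
- The function names are hypothetical.

```javascript
// Splits an amount in the smallest currency unit as evenly as possible;
// the first `remainder` recipients get one extra unit so the parts sum to the total
function splitEvenly(totalCents, count) {
  if (!Number.isInteger(totalCents) || totalCents < 0) throw new RangeError('totalCents must be a non-negative integer')
  if (!Number.isInteger(count) || count <= 0) throw new RangeError('count must be a positive integer')
  const base = Math.floor(totalCents / count)
  const remainder = totalCents % count
  return Array.from({ length: count }, (_, i) => base + (i < remainder ? 1 : 0))
}

// Builds one parameter object per author for Stripe's Transfers API (POST /v1/transfers).
// `authors` is a hypothetical list of { stripeAccountId } objects.
function buildTransfers(netCents, currency, authors, transferGroup) {
  return splitEvenly(netCents, authors.length).map((amount, i) => ({
    amount,
    currency,
    destination: authors[i].stripeAccountId,
    transfer_group: transferGroup,
  }))
}
```

Each resulting object could then be sent with the `stripe` helper imported in the checkout handler, for example `stripe('/transfers', params, 'POST')`.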

Each of these tasks involves a pretty serious level of complexity, but by using Workers, we can abstract each of them into smaller pieces of functionality, and compose powerful, on-demand, and highly scalable webhooks directly on the serverless edge.

Conclusion

I’m really excited about the launch of this template and, of course, it wouldn’t have been possible to ship something like this in just a few weeks without using Cloudflare Workers. If you’re interested in digging into how any of the above stuff works, check out the project on GitHub!

With the recent announcement of our Workers KV free tier, this project is a great starting point: fork it and build your own e-commerce products with it. Let me know what you build and say hi on Twitter!
