Post Syndicated from James Beswick original https://aws.amazon.com/blogs/compute/discovering-sensitive-data-in-aws-codecommit-with-aws-lambda-2/

This post is courtesy of Markus Ziller, Solutions Architect.

Today, git is a de facto standard for version control in modern software engineering. The workflows enabled by git's branching capabilities are a major reason for this. However, because git is distributed, it can be difficult to reliably remove committed changes from every copy of the repository. This is problematic when secrets such as API keys have been accidentally committed into version control. The longer it takes to identify and remove secrets from git, the more likely it is that another user has already checked out the secret.

This post shows a solution that automatically identifies credentials pushed to AWS CodeCommit in near-real-time. I also show three remediation measures that you can use to reduce the impact of secrets pushed into CodeCommit:

- Notify users about the leaked credentials.

- Lock the repository for non-admins.

- Hard reset the CodeCommit repository to a healthy state.

I use the AWS Cloud Development Kit (CDK). This is an open source software development framework to model and provision cloud application resources. Using the CDK can reduce the complexity and amount of code needed to automate the deployment of resources.

Overview of solution

The services in this solution are AWS Lambda, AWS CodeCommit, Amazon EventBridge, and Amazon SNS. These services are part of the AWS serverless platform. They help reduce undifferentiated work around managing servers, infrastructure, and the parts of the application that add less value to your customers. With serverless, the solution scales automatically, has built-in high availability, and you only pay for the resources you use.

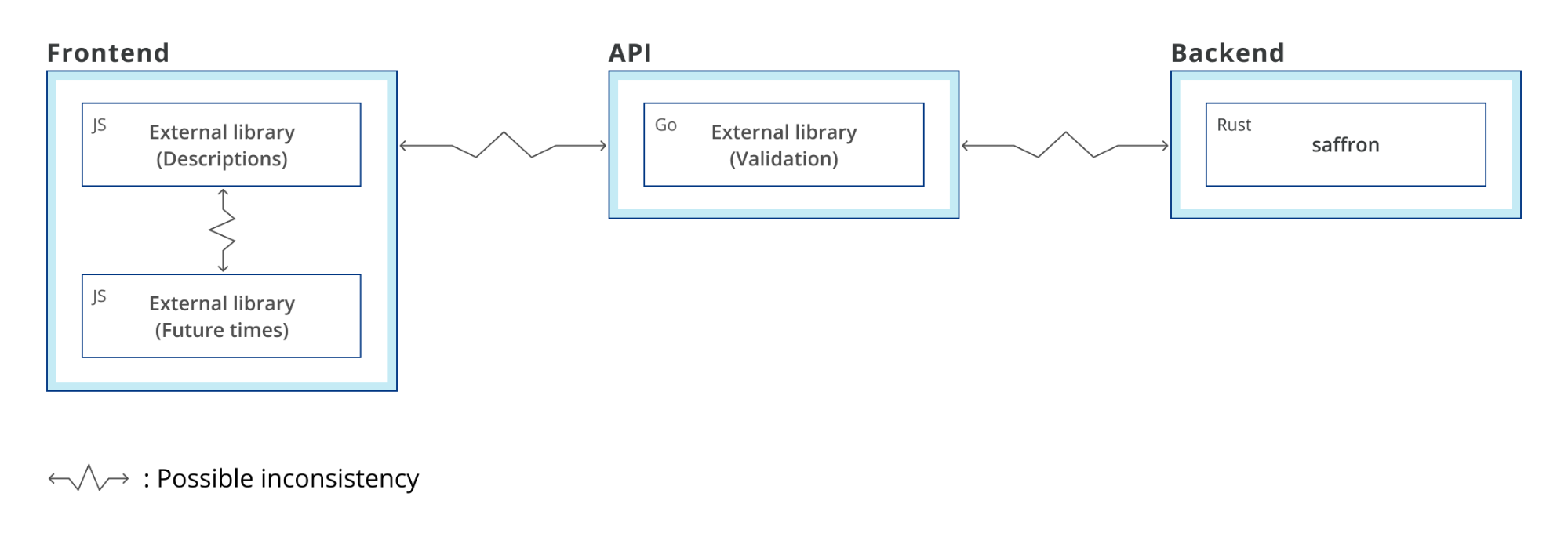

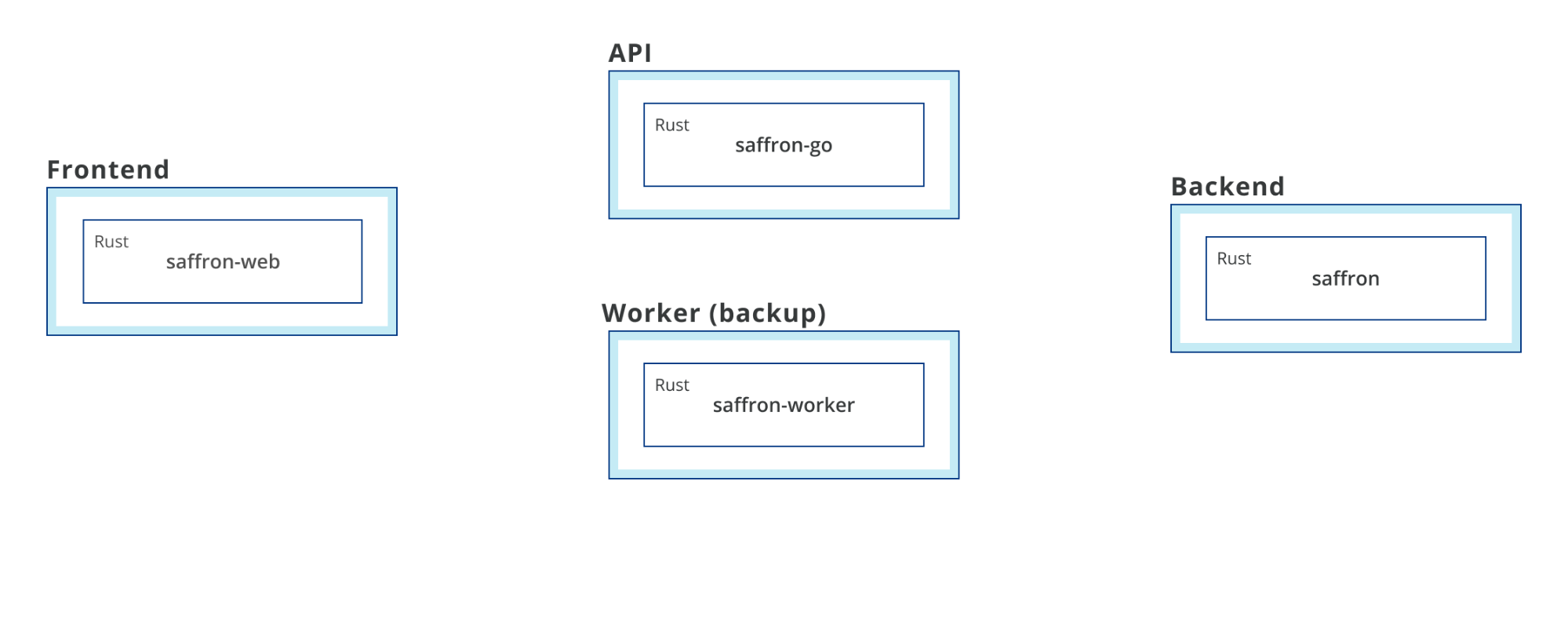

This diagram outlines the workflow implemented in this post:

- After a developer pushes changes to CodeCommit, CodeCommit emits an event to an event bus.

- A rule defined on the event bus routes this event to a Lambda function.

- The Lambda function uses the AWS SDK for JavaScript to get the changes introduced by commits pushed to the repository.

- It analyzes the changes for secrets. If secrets are found, it publishes another event to the event bus.

- Rules associated with this event type then trigger invocations of three Lambda functions A, B, and C with information about the problematic changes.

- Each of the Lambda functions runs a remediation measure:

  - Function A sends out a notification to an SNS topic that informs users about the situation (A1).

  - Function B locks the repository by setting a tag with the AWS SDK (B1). It sends out a notification about this action (B2).

  - Function C runs git commands that remove the problematic commit from the CodeCommit repository (C2). It also sends out a notification (C1).

Walkthrough

The following walkthrough explains the required components, their interactions, and how to automate their provisioning with the CDK.

For this walkthrough, you need:

- An AWS account

- Installed and authenticated AWS CLI (authenticate with an IAM user or an AWS STS security token)

- Installed Node.js and TypeScript

- Installed git

- Installed AWS CDK (npm install aws-cdk -g)

Check out and deploy the sample stack:

- After completing the prerequisites, clone the associated GitHub repository by running the following command in a local directory:

```bash
git clone git@github.com:aws-samples/discover-sensitive-data-in-aws-codecommit-with-aws-lambda.git
```

- Open the repository in a local editor and review the contents of cdk/lib/resources.ts, src/handlers/commits.ts, and src/handlers/remediations.ts.

- Follow the instructions in the README.md to deploy the stack.

The CDK will deploy resources for the following services in your account.

Using CodeCommit to manage your git repositories

The CDK creates a new empty repository called TestRepository and adds a tag RepoState with an initial value of ok. You later use this tag in the LockRepo remediation strategy to restrict access.

It also creates two IAM groups with one user in each. Members of the CodeCommitSuperUsers group are always able to access the repository, while members of the CodeCommitUsers group can only access the repository when the value of the tag RepoState is not locked.

I also import the CodeCommitSystemUser into the CDK app. This user requires git credentials that are downloaded as a CSV file, so the CDK cannot create it. Instead, create it manually as described in the README file.

The following CDK code sets up all the described resources:

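```typescript
// Imports (CDK v1 module layout assumed)
import { Tags } from "@aws-cdk/core";
import { Effect, Group, PolicyStatement, User } from "@aws-cdk/aws-iam";
import { Repository } from "@aws-cdk/aws-codecommit";
import { Secret } from "@aws-cdk/aws-secretsmanager";

// Inside the stack constructor:
const TAG_NAME = "RepoState";

// Members of this group can always access the repository
const superUsers = new Group(this, "CodeCommitSuperUsers", { groupName: "CodeCommitSuperUsers" });
superUsers.addUser(new User(this, "CodeCommitSuperUserA", {
  password: new Secret(this, "CodeCommitSuperUserPassword").secretValue,
  userName: "CodeCommitSuperUserA"
}));

// Members of this group lose access while the repository is locked
const users = new Group(this, "CodeCommitUsers", { groupName: "CodeCommitUsers" });
users.addUser(new User(this, "User", {
  password: new Secret(this, "CodeCommitUserPassword").secretValue,
  userName: "CodeCommitUserA"
}));

// Created manually (see README) and imported by name
const systemUser = User.fromUserName(this, "CodeCommitSystemUser", props.codeCommitSystemUserName);

const repo = new Repository(this, "Repository", {
  repositoryName: "TestRepository",
  description: "The repository to test this project out",
});
Tags.of(repo).add(TAG_NAME, "ok");

// Allow all actions on the repository unless its RepoState tag equals "locked"
users.addToPolicy(new PolicyStatement({
  effect: Effect.ALLOW,
  actions: ["*"],
  resources: [repo.repositoryArn],
  conditions: {
    StringNotEquals: {
      [`aws:ResourceTag/${TAG_NAME}`]: "locked"
    }
  }
}));

// Unconditional access for super users
superUsers.addToPolicy(new PolicyStatement({
  effect: Effect.ALLOW,
  actions: ["*"],
  resources: [repo.repositoryArn]
}));
```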
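The following TypeScript function models the access decision that the two policy statements encode. It is a sketch, not IAM's evaluation engine. The names canAccessRepository and RepoGroup are hypothetical. The sketch treats a missing RepoState tag as unlocked, on the assumption that IAM lets a negated operator such as StringNotEquals pass when the condition key is absent.

```typescript
// Hypothetical model of the two IAM statements above; not a real IAM evaluator.
type RepoGroup = "CodeCommitSuperUsers" | "CodeCommitUsers";

const TAG_VALUE_LOCKED = "locked";

// repoState is the current value of the RepoState tag, or undefined if the tag is missing.
function canAccessRepository(group: RepoGroup, repoState: string | undefined): boolean {
  if (group === "CodeCommitSuperUsers") {
    // Unconditional Allow on the repository ARN.
    return true;
  }
  // StringNotEquals is an exact, case-sensitive comparison.
  // Assumption: an absent tag satisfies the negated condition, so access is allowed.
  return repoState !== TAG_VALUE_LOCKED;
}
```

Because the comparison is case-sensitive, the locking function has to write exactly "locked". A value such as "Locked" would leave CodeCommitUsers with full access.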

Using EventBridge to pass events between components

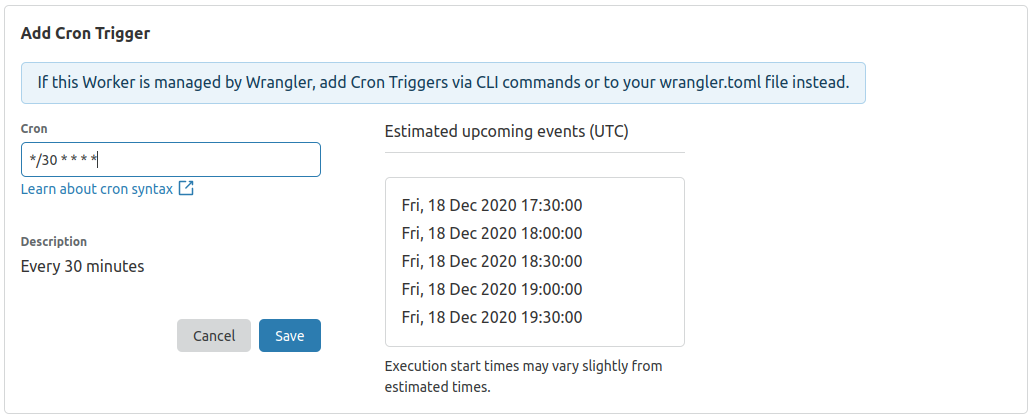

I use EventBridge, a serverless event bus, to connect the Lambda functions. Many AWS services, such as CodeCommit, are natively integrated with EventBridge and publish events about changes in their environment.

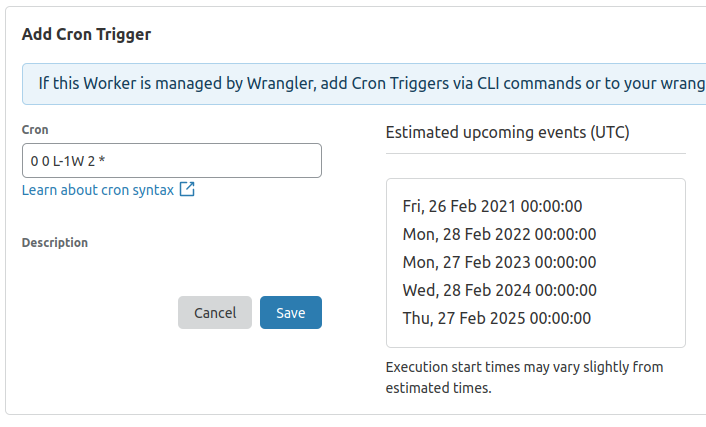

repo.onCommit is a convenience method on the CDK Repository construct. It creates the EventBridge rule required to invoke a Lambda function for every commit to a given repository. The rule matches the CodeCommit Repository State Change events that CodeCommit emits for that repository.

Note that this event rule only matches commit events in TestRepository. To send commits of all repositories in that account to the inspecting Lambda function, remove the resources filter in the event pattern.
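The sketch below approximates the rule that repo.onCommit generates. It is based on my reading of the CDK construct library, so compare it with the output of cdk synth for your CDK version. The pattern is written as a TypeScript object, and the ARN uses a placeholder account ID. The small matcher is hypothetical and only illustrates exact-value matching. EventBridge also supports prefix, numeric, anything-but, and exists filters, which this sketch ignores.

```typescript
// Hypothetical example ARN (123456789012 is a placeholder account ID).
const REPO_ARN = "arn:aws:codecommit:us-east-1:123456789012:TestRepository";

// Nested event pattern: leaf values are lists of accepted strings.
type Pattern = { [key: string]: string[] | Pattern };

// Approximation of the pattern created by repo.onCommit; verify with `cdk synth`.
const commitRulePattern: Pattern = {
  source: ["aws.codecommit"],
  "detail-type": ["CodeCommit Repository State Change"],
  resources: [REPO_ARN], // drop this key to match commits in every repository
  detail: { event: ["referenceCreated", "referenceUpdated"] },
};

// Exact-value matching only; fields absent from the pattern are ignored.
function matches(pattern: Pattern, event: Record<string, unknown>): boolean {
  return Object.keys(pattern).every((key) => {
    const expected = pattern[key];
    const actual = event[key];
    if (Array.isArray(expected)) {
      const accepted: string[] = expected;
      // A field matches if its value (or, for array fields such as resources,
      // any of its elements) equals one of the accepted values.
      const values: unknown[] = Array.isArray(actual) ? actual : [actual];
      return values.some((v) => typeof v === "string" && accepted.indexOf(v) !== -1);
    }
    // Nested pattern: recurse into the corresponding object in the event.
    return typeof actual === "object" && actual !== null && !Array.isArray(actual) &&
      matches(expected, actual as Record<string, unknown>);
  });
}
```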

CodeCommit Repository State Change is a built-in event type that CodeCommit publishes when changes are made to a repository. In addition, I define CodeCommit Security Event, a custom event type, which the commitInspectLambda function publishes to the custom CodeCommitSecurityEvents event bus if it discovers secrets in the inspected code.

The sample below shows how you can set up Lambda functions as targets for both types of events.

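```typescript
// Imports (CDK v1 module layout assumed)
import { EventBus, Rule } from "@aws-cdk/aws-events";
import * as targets from "@aws-cdk/aws-events-targets";

// Inside the stack constructor:
const DETAIL_TYPE = "CodeCommit Security Event";

// Custom bus that carries CodeCommit Security Events
const eventBus = new EventBus(this, "CodeCommitEventBus", {
  eventBusName: "CodeCommitSecurityEvents"
});

// Rule on the default bus: invoke commitInspectLambda for commits to repo
repo.onCommit("AnyCommitEvent", {
  ruleName: "CallLambdaOnAnyCodeCommitEvent",
  target: new targets.LambdaFunction(commitInspectLambda)
});

// Rule on the custom bus: fan out security events to the three remediation functions
new Rule(this, "CodeCommitSecurityEvent", {
  eventBus,
  enabled: true,
  ruleName: "CodeCommitSecurityEventRule",
  eventPattern: {
    detailType: [DETAIL_TYPE]
  },
  targets: [
    new targets.LambdaFunction(lockRepositoryLambda),
    new targets.LambdaFunction(raiseAlertLambda),
    new targets.LambdaFunction(forcefulRevertLambda)
  ]
});
```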

Using Lambda functions to run remediation measures

AWS Lambda functions allow you to run code in response to events. The example defines four Lambda functions.

The commitInspectLambda function analyzes whether a commit introduces secrets by comparing the commit with its predecessor. With the CDK, you can create a Lambda function as follows:

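```typescript
// Imports (CDK v1 module layout assumed)
import * as path from "path";
import { Code, Function, Runtime } from "@aws-cdk/aws-lambda";

// Inside the stack constructor:
const myLambdaInCDK = new Function(this, "UniqueIdentifierRequiredByCDK", {
  runtime: Runtime.NODEJS_12_X,
  handler: "<handlerfile>.<function name>",
  code: Code.fromAsset(path.join(__dirname, "..", "..", "src", "handlers")),
  // See git repository for complete code
});
```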

The code for this Lambda function uses the AWS SDK for JavaScript to fetch the details of the commit, the differences introduced, and the new content.
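The fetch step might look roughly like the sketch below. It assumes that the commit ID and the previous commit ID come from the commitId and oldCommitId fields of the CodeCommit Repository State Change event detail. The CodeCommitReader interface is hypothetical. Its method and parameter names follow the CodeCommit GetDifferences and GetBlob operations, but the responses are simplified: pagination (NextToken) is ignored, and blob content is returned as an already-decoded string. A real handler would adapt the SDK client to this shape and decode the raw bytes that GetBlob returns.

```typescript
// Simplified shape of one entry in a GetDifferences response.
interface Difference {
  changeType?: "A" | "M" | "D"; // added, modified, deleted
  afterBlob?: { blobId?: string; path?: string };
}

// Hypothetical client interface loosely modeled on the CodeCommit API.
interface CodeCommitReader {
  getDifferences(params: {
    repositoryName: string;
    beforeCommitSpecifier?: string;
    afterCommitSpecifier: string;
  }): Promise<{ differences?: Difference[] }>;
  getBlob(params: { repositoryName: string; blobId: string }): Promise<{ content: string }>;
}

interface ChangedFile {
  path: string;
  content: string;
}

// Returns the new content of every file added or modified between oldCommitId and
// commitId. Deleted files are skipped because they cannot introduce a secret.
// oldCommitId may be undefined, for example for a newly created branch.
async function collectChangedFiles(
  client: CodeCommitReader,
  repositoryName: string,
  commitId: string,
  oldCommitId?: string,
): Promise<ChangedFile[]> {
  const { differences = [] } = await client.getDifferences({
    repositoryName,
    beforeCommitSpecifier: oldCommitId,
    afterCommitSpecifier: commitId,
  });

  const files: ChangedFile[] = [];
  for (const diff of differences) {
    const blobId = diff.afterBlob?.blobId;
    const path = diff.afterBlob?.path;
    if (diff.changeType === "D" || !blobId || !path) continue;
    const { content } = await client.getBlob({ repositoryName, blobId });
    files.push({ path, content });
  }
  return files;
}
```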

The code checks each modified file line by line against regular expressions that match typical secret formats. In src/handlers/regex.json, I provide a few regular expressions that match common secrets. You can extend this file with your own patterns.
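A line-by-line scan can be sketched as follows. The two patterns are hypothetical stand-ins for the contents of src/handlers/regex.json. The sketch assumes the configured patterns have already been compiled into RegExp objects. The first pattern matches the shape of an AWS access key ID, and the second matches a generic secret=value assignment.

```typescript
// Hypothetical patterns; the project keeps its own list in src/handlers/regex.json.
const SECRET_PATTERNS: RegExp[] = [
  /AKIA[0-9A-Z]{16}/,                          // shape of an AWS access key ID
  /(secret|password|token)\s*[=:]\s*\S{16,}/i, // generic key=value secret
];

interface Finding {
  path: string;
  line: number; // 1-based line number
  pattern: string;
}

// Checks every line of a file and records at most one finding per line.
function scanFile(path: string, content: string, patterns: RegExp[] = SECRET_PATTERNS): Finding[] {
  const findings: Finding[] = [];
  content.split(/\r?\n/).forEach((text, index) => {
    for (const pattern of patterns) {
      // No "g" flag, so test() keeps no state between calls.
      if (pattern.test(text)) {
        findings.push({ path, line: index + 1, pattern: pattern.source });
        break;
      }
    }
  });
  return findings;
}
```

Recording the line number lets the notification point users at the exact location of the leak.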

If a secret is discovered, a CodeCommit Security Event is published to the event bus. EventBridge then invokes all Lambda functions that are registered as targets with this event. This demo triggers three remediation measures.
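Publishing the custom event comes down to building one entry for the EventBridge PutEvents call. The sketch below builds that entry. The detail fields and the Source value are hypothetical, except repositoryArn, which the lockRepositoryLambda code reads from event.detail. The DetailType must equal the string that the CodeCommitSecurityEventRule filters on, and Detail must be a JSON string. With the AWS SDK for JavaScript v2, the entry could then be sent with new EventBridge().putEvents({ Entries: [entry] }).promise().

```typescript
// Hypothetical payload of a CodeCommit Security Event.
interface SecurityEventDetail {
  repositoryName: string;
  repositoryArn: string; // read by lockRepositoryLambda as event.detail.repositoryArn
  branch: string;
  commitId: string;
  previousCommitId: string;
  findings: { path: string; line: number }[];
}

// Subset of the fields of a PutEvents request entry.
interface PutEventsEntry {
  EventBusName: string;
  Source: string;
  DetailType: string;
  Detail: string;
}

function buildSecurityEvent(detail: SecurityEventDetail): PutEventsEntry {
  return {
    EventBusName: "CodeCommitSecurityEvents", // custom bus defined in the CDK stack
    Source: "custom.codecommit.security",     // hypothetical source name
    DetailType: "CodeCommit Security Event",  // must match the rule's detailType filter
    Detail: JSON.stringify(detail),           // EventBridge expects a JSON string
  };
}
```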

The raiseAlertLambda function uses the AWS SDK for JavaScript to send out a notification to all subscribers (that is, CodeCommit administrators) on an SNS topic. It takes no further action.

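```typescript
import { SNS } from "aws-sdk"; // AWS SDK for JavaScript v2

const sns = new SNS();

// Inside the async Lambda handler:
await sns.publish({
  TopicArn: "<TOPIC_ARN>",
  Subject: "[ACTION REQUIRED] Secrets discovered in <repo>",
  Message: "<Your message>",
}).promise();
```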
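The subject and message could be assembled by a small helper like the hypothetical formatAlert below. Its input fields are assumptions, not the project's actual event payload. The helper truncates the subject because SNS requires a Subject shorter than 100 characters, and a CodeCommit repository name alone can be up to 100 characters long.

```typescript
// Hypothetical input fields; the real event payload may differ.
interface AlertInput {
  repositoryName: string;
  branch: string;
  commitId: string;
  findings: { path: string; line: number }[];
}

// SNS rejects subjects of 100 characters or more.
const MAX_SUBJECT_LENGTH = 99;

function formatAlert(input: AlertInput): { Subject: string; Message: string } {
  const subject = `[ACTION REQUIRED] Secrets discovered in ${input.repositoryName}`;
  const findingLines = input.findings.map((f) => `- ${f.path}, line ${f.line}`);
  return {
    Subject: subject.slice(0, MAX_SUBJECT_LENGTH),
    Message: [
      `Commit ${input.commitId} on branch ${input.branch} of ${input.repositoryName} appears to contain secrets:`,
      ...findingLines,
    ].join("\n"),
  };
}
```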

The lockRepositoryLambda function uses the AWS SDK for JavaScript to change the RepoState tag from ok to locked. This restricts access to members of the CodeCommitSuperUsers IAM group.

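```typescript
import { CodeCommit } from "aws-sdk"; // AWS SDK for JavaScript v2

const codecommit = new CodeCommit();

// Inside the async Lambda handler; event.detail is the CodeCommit Security Event payload
await codecommit.tagResource({
  resourceArn: event.detail.repositoryArn,
  tags: {
    RepoState: "locked",
  },
}).promise();
```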

In addition, the Lambda function uses SNS to send out a notification.

The forcefulRevertLambda function runs the following git commands:

```bash
git clone <repository>
cd <repository>
git checkout <branch>
git reset --hard <previousCommitId>
git push origin <branch> --force
```

These commands reset the repository to the last accepted commit by force-pushing a history from which the problematic commit has been removed. I advise you to handle this with care and only activate it on a real project if you fully understand the consequences of rewriting git history.
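Inside the Lambda function, one option is to build each git invocation as an argument vector and run it with child_process.execFileSync("git", args). This avoids passing branch names through a shell. The helper buildResetCommands below is hypothetical. It uses git's -C option instead of changing directories, and it clones into /tmp because that is the writable directory in the Lambda environment. How the function authenticates is outside this sketch, for example with the CodeCommitSystemUser's HTTPS git credentials.

```typescript
// Hypothetical helper: returns the argument vectors for the reset sequence.
// Each entry is meant to be passed as execFileSync("git", args).
function buildResetCommands(
  cloneUrl: string,
  branch: string,
  previousCommitId: string,
  workDir = "/tmp/repo", // /tmp is writable inside Lambda
): string[][] {
  return [
    ["clone", cloneUrl, workDir],
    ["-C", workDir, "checkout", branch],
    ["-C", workDir, "reset", "--hard", previousCommitId],
    ["-C", workDir, "push", "origin", branch, "--force"],
  ];
}
```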

The Node.js v12 runtime for Lambda does not include git by default. You can add it with the git-lambda2 Lambda layer, which allows you to run git commands from within the Lambda function.

Finally, this Lambda function also sends out a notification. The complete code is available in the GitHub repo.

Using SNS to notify users

To notify users about secrets discovered and actions taken, you create an SNS topic and subscribe to it via email.

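```typescript
// Imports (CDK v1 module layout assumed)
import { Topic } from "@aws-cdk/aws-sns";
import * as subs from "@aws-cdk/aws-sns-subscriptions";

// Inside the stack constructor:
const topic = new Topic(this, "CodeCommitSecurityEventNotification", {
  displayName: "CodeCommitSecurityEventNotification",
});

topic.addSubscription(new subs.EmailSubscription("<your email address>"));
```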

Testing the solution

You can test the deployed solution by running these two sets of commands. First, add a file with no credentials:

```bash
echo "Clean file - no credentials here" > clean_file.txt
git add clean_file.txt
git commit clean_file.txt -m "Adds clean_file.txt"
git push
```

Then add a file containing credentials:

```bash
SECRET_LIKE_STRING=$(cat /dev/urandom | env LC_CTYPE=C tr -dc 'a-zA-Z0-9' | fold -w 32 | head -n 1)
echo "secret=$SECRET_LIKE_STRING" > problematic_file.txt
git add problematic_file.txt
git commit problematic_file.txt -m "Adds secret-like string to problematic_file.txt"
git push
```

The first set of commands creates, commits, and pushes the harmless file clean_file.txt, which passes the checks of commitInspectLambda. The second set creates, commits, and pushes problematic_file.txt, whose content matches the regular expressions and triggers the remediation measures.

Shortly afterwards, you receive email notifications about the actions taken by the Lambda functions, provided you have confirmed the SNS subscription email sent to your address.

Cleaning up

To avoid incurring charges, delete the resources by running cdk destroy and confirming the deletion.

Conclusion

This post demonstrates how you can implement a solution to discover secrets in commits to AWS CodeCommit repositories. It also shows three strategies to remediate such leaks.

The CDK code to set up all components is minimal and can be extended with additional remediation measures. The template is portable between Regions and uses serverless technologies to minimize cost and complexity.

For more serverless learning resources, visit Serverless Land.