Post Syndicated from Dave Bailey original https://aws.amazon.com/blogs/security/best-practices-securing-your-amazon-location-service-resources/

Location data is subjected to heavy scrutiny by security experts. Knowing the current position of a person, vehicle, or asset can provide industries with many benefits, whether to understand where a current delivery is, how many people are inside a venue, or to optimize routing for a fleet of vehicles. This blog post explains how Amazon Web Services (AWS) helps keep location data secured in transit and at rest, and how you can leverage additional security features to help keep information safe and compliant.

The General Data Protection Regulation (GDPR) defines personal data as “any information relating to an identified or identifiable natural person (…) such as a name, an identification number, location data, an online identifier or to one or more factors specific to the physical, physiological, genetic, mental, economic, cultural or social identity of that natural person.” Also, many companies wish to improve transparency to users, making it explicit when a particular application wants to not only track their position and data, but also to share that information with other apps and websites. Your organization needs to adapt to these changes quickly to maintain a secure stance in a competitive environment.

On June 1, 2021, AWS made Amazon Location Service generally available to customers. With Amazon Location, you can build applications that provide maps and points of interest, convert street addresses into geographic coordinates, calculate routes, track resources, and invoke actions based on location. The service enables you to access location data with developer tools and to move your applications to production faster with monitoring and management capabilities.

In this blog post, we will show you the features that Amazon Location provides out of the box to keep your data safe, along with best practices that you can follow to reach the level of security that your organization strives to accomplish.

Data control and data rights

Amazon Location relies on the trusted global providers Esri and HERE Technologies to provide high-quality location data to customers. Features like maps, places, and routes are provided by these AWS Partners so that solutions can use data that is not only accurate but also constantly updated.

AWS anonymizes and encrypts location data at rest and during its transmission to partner systems. In addition, under the AWS service terms, third parties cannot sell your data or use it for advertising purposes. This helps you shield sensitive information, protect user privacy, and reduce organizational compliance risks. To learn more, see the Amazon Location Data Security and Control documentation.

Integrations

Operationalizing location-based solutions can be daunting. You need not only to build the solution, but also to integrate it with the rest of your applications that are built in AWS. Amazon Location simplifies this process from a security perspective by integrating with AWS services that both speed up development and strengthen the security of the solution.

Encryption

Amazon Location uses AWS owned keys by default to automatically encrypt personally identifiable data. AWS owned keys are a collection of AWS Key Management Service (AWS KMS) keys that an AWS service owns and manages for use in multiple AWS accounts. Although AWS owned keys are not in your AWS account, Amazon Location can use the associated AWS owned keys to protect the resources in your account.

If you choose to use your own keys, you can use AWS KMS to store them and to add a second layer of encryption to geofencing and tracking data.

Authentication and authorization

Amazon Location also integrates with AWS Identity and Access Management (IAM), so that you can use its identity-based policies to specify allowed or denied actions and resources, as well as the conditions under which actions are allowed or denied on Amazon Location. Also, for actions that require unauthenticated access, you can use unauthenticated IAM roles.

As an extension to IAM, Amazon Cognito can be an option if you need to integrate your solution with a front-end client that authenticates users with its own process. In this case, you can use Cognito to handle the authentication, authorization, and user management for you. You can use Cognito unauthenticated identity pools with Amazon Location as a way for applications to retrieve temporary, scoped-down AWS credentials. To learn more about setting up Cognito with Amazon Location, see the blog post Add a map to your webpage with Amazon Location Service.

Limit the scope of your unauthenticated roles to a domain

When you are building an application that allows users to perform actions such as retrieving map tiles, searching for points of interest, updating device positions, and calculating routes without needing them to be authenticated, you can make use of unauthenticated roles.

When using unauthenticated roles to access Amazon Location resources, you can add an extra condition to limit resource access to an HTTP referer that you specify in the policy. The aws:referer request context value is provided by the caller in an HTTP header, and it is included in a web browser request.

The following is an example of a policy that allows access to a Map resource by using the aws:referer condition, but only if the request comes from the domain example.com.

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "MapsReadOnly",
                "Effect": "Allow",
                "Action": [
                    "geo:GetMapStyleDescriptor",
                    "geo:GetMapGlyphs",
                    "geo:GetMapSprites",
                    "geo:GetMapTile"
                ],
                "Resource": "arn:aws:geo:us-west-2:111122223333:map/MyMap",
                "Condition": {
                    "StringLike": {
                        "aws:Referer": "https://www.example.com/*"
                    }
                }
            }
        ]
    }

To learn more about aws:referer and other global conditions, see AWS global condition context keys.
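
If you manage several maps or domains, it can help to generate this policy rather than edit it by hand. The following is a minimal Python sketch that rebuilds the example policy from two inputs. The function name build_map_read_policy is hypothetical and is not part of any AWS SDK; the actions, ARN, and referer pattern are the ones from the example above.

```python
import json


def build_map_read_policy(map_arn: str, referer_pattern: str) -> dict:
    """Return an identity-based policy that allows read-only map calls
    only when the request's Referer header matches referer_pattern."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "MapsReadOnly",
                "Effect": "Allow",
                "Action": [
                    "geo:GetMapStyleDescriptor",
                    "geo:GetMapGlyphs",
                    "geo:GetMapSprites",
                    "geo:GetMapTile",
                ],
                "Resource": map_arn,
                # StringLike lets the trailing * match any path on the site.
                "Condition": {"StringLike": {"aws:Referer": referer_pattern}},
            }
        ],
    }


policy = build_map_read_policy(
    "arn:aws:geo:us-west-2:111122223333:map/MyMap",
    "https://www.example.com/*",
)
# JSON text that can be attached to the unauthenticated role.
policy_json = json.dumps(policy, indent=2)
```

Because the Referer header is supplied by the caller, treat this condition as a way to narrow the scope of an unauthenticated role, not as a replacement for authentication.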

Encrypt tracker and geofence information using customer managed keys with AWS KMS

When you create your tracker and geofence collection resources, you have the option to use a symmetric customer managed key to add a second layer of encryption to geofencing and tracking data. Because you have full control of this key, you can establish and maintain your own IAM policies, manage key rotation, and schedule keys for deletion.

After you create your resources with customer managed keys, the geometry of your geofences and all positions associated with a tracked device will have two layers of encryption. In the next sections, you will see how to create a key and use it to encrypt your own data.

Create an AWS KMS symmetric key

First, you need to create a key policy that limits access to the AWS KMS key to principals that are authorized to use Amazon Location and to principals that are authorized to manage the key. For more information about specifying permissions in a policy, see the AWS KMS Developer Guide.

To create the key policy

Create a JSON policy file by using the following policy as a reference. This key policy allows Amazon Location to grant access to your KMS key only when it is called from your AWS account. This works by combining the kms:ViaService and kms:CallerAccount conditions. In the following policy, replace us-west-2 with your AWS Region of choice, and the kms:CallerAccount value with your AWS account ID. Adjust the KMS Key Administrators statement to reflect your actual key administrators’ principals, including yourself. For details on how to use the Principal element, see the AWS JSON policy elements documentation.

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "Amazon Location",
                "Effect": "Allow",
                "Principal": {
                    "AWS": "*"
                },
                "Action": [
                    "kms:DescribeKey",
                    "kms:CreateGrant"
                ],
                "Resource": "*",
                "Condition": {
                    "StringEquals": {
                        "kms:ViaService": "geo.us-west-2.amazonaws.com",
                        "kms:CallerAccount": "111122223333"
                    }
                }
            },
            {
                "Sid": "Allow access for Key Administrators",
                "Effect": "Allow",
                "Principal": {
                    "AWS": "arn:aws:iam::111122223333:user/KMSKeyAdmin"
                },
                "Action": [
                    "kms:Create*",
                    "kms:Describe*",
                    "kms:Enable*",
                    "kms:List*",
                    "kms:Put*",
                    "kms:Update*",
                    "kms:Revoke*",
                    "kms:Disable*",
                    "kms:Get*",
                    "kms:Delete*",
                    "kms:TagResource",
                    "kms:UntagResource",
                    "kms:ScheduleKeyDeletion",
                    "kms:CancelKeyDeletion"
                ],
                "Resource": "*"
            }
        ]
    }
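
The three values that you need to replace in this policy (Region, account ID, and key administrator) can also be filled in programmatically. The following Python sketch reproduces the policy above from those inputs. The helper name build_key_policy is an assumption made for illustration; the statements and actions are copied from the policy above.

```python
import json

# Administrative actions copied from the key policy above.
ADMIN_ACTIONS = [
    "kms:Create*", "kms:Describe*", "kms:Enable*", "kms:List*",
    "kms:Put*", "kms:Update*", "kms:Revoke*", "kms:Disable*",
    "kms:Get*", "kms:Delete*", "kms:TagResource", "kms:UntagResource",
    "kms:ScheduleKeyDeletion", "kms:CancelKeyDeletion",
]


def build_key_policy(region: str, account_id: str, admin_arn: str) -> dict:
    """Return a key policy that lets Amazon Location use the key only
    when it is called from the given account in the given Region."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "Amazon Location",
                "Effect": "Allow",
                "Principal": {"AWS": "*"},
                "Action": ["kms:DescribeKey", "kms:CreateGrant"],
                "Resource": "*",
                # Both conditions must hold: the call arrives through
                # Amazon Location and originates from this account.
                "Condition": {
                    "StringEquals": {
                        "kms:ViaService": f"geo.{region}.amazonaws.com",
                        "kms:CallerAccount": account_id,
                    }
                },
            },
            {
                "Sid": "Allow access for Key Administrators",
                "Effect": "Allow",
                "Principal": {"AWS": admin_arn},
                "Action": ADMIN_ACTIONS,
                "Resource": "*",
            },
        ],
    }


key_policy = build_key_policy(
    "us-west-2",
    "111122223333",
    "arn:aws:iam::111122223333:user/KMSKeyAdmin",
)
key_policy_json = json.dumps(key_policy, indent=2)
```

You can save key_policy_json to a file and pass that file to the AWS CLI in the next step.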

For the next steps, you will use the AWS Command Line Interface (AWS CLI). Make sure to have the latest version installed by following the AWS CLI documentation.

Tip: The AWS CLI uses the Region that you set as the default during configuration, but you can override it by adding --region <your region> at the end of each of the following commands. Also, make sure that your user has the appropriate permissions to perform these actions.

To create the symmetric key

Now, create a symmetric key on AWS KMS by running the create-key command and passing the policy file that you created in the previous step.

    aws kms create-key --policy file://<your JSON policy file>

Alternatively, you can create the symmetric key using the AWS KMS console with the preceding key policy.

After running the command, you should see output similar to the following. Take note of the KeyId value.

    {
        "KeyMetadata": {
            "Origin": "AWS_KMS",
            "KeyId": "1234abcd-12ab-34cd-56ef-1234567890ab",
            "Description": "",
            "KeyManager": "CUSTOMER",
            "Enabled": true,
            "CustomerMasterKeySpec": "SYMMETRIC_DEFAULT",
            "KeyUsage": "ENCRYPT_DECRYPT",
            "KeyState": "Enabled",
            "CreationDate": 1502910355.475,
            "Arn": "arn:aws:kms:us-west-2:111122223333:key/1234abcd-12ab-34cd-56ef-1234567890ab",
            "AWSAccountId": "111122223333",
            "MultiRegion": false,
            "EncryptionAlgorithms": [
                "SYMMETRIC_DEFAULT"
            ]
        }
    }
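
If you prefer an SDK to the AWS CLI, the same step might look like the following Python sketch. It assumes a boto3 KMS client, which this post does not otherwise use, and the wrapper name create_symmetric_key is hypothetical. The client is passed in as a parameter so that the function can be exercised without AWS credentials.

```python
import json


def create_symmetric_key(kms_client, key_policy: dict) -> str:
    """Create a symmetric encryption key with the given key policy
    and return the KeyId from the response."""
    response = kms_client.create_key(
        Policy=json.dumps(key_policy),
        KeySpec="SYMMETRIC_DEFAULT",
        KeyUsage="ENCRYPT_DECRYPT",
    )
    return response["KeyMetadata"]["KeyId"]


# Usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   key_id = create_symmetric_key(boto3.client("kms"), key_policy)
```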

Create Amazon Location tracker and geofence collection resources

To create an Amazon Location tracker resource that uses AWS KMS for a second layer of encryption, run the following command, passing the key ID from the previous step.

    aws location \
        create-tracker \
        --tracker-name "MySecureTracker" \
        --kms-key-id "1234abcd-12ab-34cd-56ef-1234567890ab"

Here is the output from this command.

    {
        "CreateTime": "2021-07-15T04:54:12.913000+00:00",
        "TrackerArn": "arn:aws:geo:us-west-2:111122223333:tracker/MySecureTracker",
        "TrackerName": "MySecureTracker"
    }

Similarly, to create a geofence collection that uses your own KMS symmetric key, run the following command, replacing the key ID with your own.

    aws location \
        create-geofence-collection \
        --collection-name "MySecureGeofenceCollection" \
        --kms-key-id "1234abcd-12ab-34cd-56ef-1234567890ab"

Here is the output from this command.

    {
        "CreateTime": "2021-07-15T04:54:12.913000+00:00",
        "CollectionArn": "arn:aws:geo:us-west-2:111122223333:geofence-collection/MySecureGeofenceCollection",
        "CollectionName": "MySecureGeofenceCollection"
    }

By following these steps, you have added a second layer of encryption to your geofence collection and tracker.
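
The two CLI commands above can also be issued from code. The following Python sketch assumes a boto3 Amazon Location client (service name "location"), passed in as a parameter; the function name create_encrypted_resources is hypothetical.

```python
def create_encrypted_resources(location_client, key_id: str,
                               tracker_name: str, collection_name: str):
    """Create a tracker and a geofence collection that both use the
    given customer managed key, and return their ARNs."""
    tracker = location_client.create_tracker(
        TrackerName=tracker_name,
        KmsKeyId=key_id,
    )
    collection = location_client.create_geofence_collection(
        CollectionName=collection_name,
        KmsKeyId=key_id,
    )
    return tracker["TrackerArn"], collection["CollectionArn"]


# Usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   arns = create_encrypted_resources(
#       boto3.client("location"),
#       "1234abcd-12ab-34cd-56ef-1234567890ab",
#       "MySecureTracker",
#       "MySecureGeofenceCollection",
#   )
```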

Data retention best practices

Trackers and geofence collections are stored in your AWS account and never leave it without your permission, but they have different lifecycles on Amazon Location.

Trackers store the positions of devices and assets that are tracked in a longitude/latitude format. These positions are stored for 30 days by the service before being automatically deleted. If needed for historical purposes, you can transfer this data to another data storage layer and apply the proper security measures based on the shared responsibility model.
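
One way to copy positions out before the 30-day window closes is to page through a device's history and hand the results to your own storage layer. The following Python sketch assumes a boto3 Amazon Location client and its get_device_position_history operation; the function name fetch_position_history is hypothetical, and the storage step itself is left out.

```python
from datetime import datetime, timezone


def fetch_position_history(location_client, tracker_name: str,
                           device_id: str, start: datetime, end: datetime):
    """Collect every stored position for one device between start
    (inclusive) and end (exclusive), following pagination tokens."""
    positions = []
    kwargs = {
        "TrackerName": tracker_name,
        "DeviceId": device_id,
        "StartTimeInclusive": start,
        "EndTimeExclusive": end,
    }
    while True:
        page = location_client.get_device_position_history(**kwargs)
        positions.extend(page.get("DevicePositions", []))
        token = page.get("NextToken")
        if not token:
            return positions
        kwargs["NextToken"] = token


# Usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   rows = fetch_position_history(
#       boto3.client("location"), "MySecureTracker", "device-1",
#       datetime(2021, 7, 1, tzinfo=timezone.utc),
#       datetime(2021, 7, 15, tzinfo=timezone.utc),
#   )
```

Whatever store receives this data needs its own encryption, access control, and retention settings, because those are your responsibility once the data leaves the tracker.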

Geofence collections store the geometries you provide until you explicitly choose to delete them, so you can use encryption with AWS owned keys or your own keys to keep them for as long as needed.

Asset tracking and location storage best practices

After a tracker is created, you can start sending location updates by using the Amazon Location front-end SDKs or by calling the BatchUpdateDevicePosition API. In both cases, at a minimum, you need to provide the latitude and longitude, the time when the device was in that position, and a device-unique identifier that represents the asset being tracked.
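
As a rough illustration of the minimum payload, the following Python sketch builds one update and sends it through a boto3 Amazon Location client. The helper names build_position_update and send_updates are hypothetical. Note that the Position field takes longitude first, then latitude.

```python
from datetime import datetime, timezone


def build_position_update(device_id: str, longitude: float,
                          latitude: float, sample_time: datetime = None) -> dict:
    """Build one entry for the Updates list of BatchUpdateDevicePosition."""
    if not -180 <= longitude <= 180:
        raise ValueError("longitude must be between -180 and 180")
    if not -90 <= latitude <= 90:
        raise ValueError("latitude must be between -90 and 90")
    return {
        "DeviceId": device_id,
        "Position": [longitude, latitude],  # longitude first
        "SampleTime": sample_time or datetime.now(timezone.utc),
    }


def send_updates(location_client, tracker_name: str, updates: list) -> list:
    """Send the updates and return any per-device errors reported."""
    response = location_client.batch_update_device_position(
        TrackerName=tracker_name,
        Updates=updates,
    )
    return response.get("Errors", [])


# Usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   update = build_position_update("device-1", -122.335167, 47.608013)
#   errors = send_updates(boto3.client("location"), "MySecureTracker", [update])
```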

Protecting device IDs

The device ID can be any string of your choice, so you should take measures to prevent IDs that contain identifying information from being used. Some examples of what to avoid include:

- First and last names

- Facility names

- Documents, such as driver’s licenses or social security numbers

- Emails

- Addresses

- Telephone numbers
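
One possible way to avoid such values is to derive the device ID from your internal identifier with a keyed hash, so that the tracker only ever sees an opaque string. The following Python sketch uses HMAC-SHA256 from the standard library. This is an illustrative technique rather than something the post prescribes, and the function name pseudonymize_device_id and the secret value shown are hypothetical.

```python
import hashlib
import hmac


def pseudonymize_device_id(raw_id: str, secret: bytes) -> str:
    """Return a stable, opaque 64-character hex ID for raw_id.

    The same raw_id and secret always give the same result, so you can
    still correlate updates, but the original value cannot be read back
    from the ID without the secret."""
    return hmac.new(secret, raw_id.encode("utf-8"), hashlib.sha256).hexdigest()


# The secret below is a placeholder; keep the real one outside the code.
device_id = pseudonymize_device_id("jane.doe@example.com", b"replace-with-a-secret")
```

Keep the mapping from internal identifiers to device IDs, or the secret used to derive them, in a system that has stricter access controls than the tracking data itself.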

Latitude and longitude precision

Latitude and longitude coordinates convey precision in degrees, presented as decimals, with each decimal place representing a different measure of distance (when measured at the equator).

Amazon Location supports up to six decimal places of precision (0.000001), which is equal to approximately 11 cm or 4.4 inches at the equator. You can limit the number of decimal places in the latitude and longitude pair that is sent to the tracker based on the precision required, increasing the location range and providing extra privacy to users.

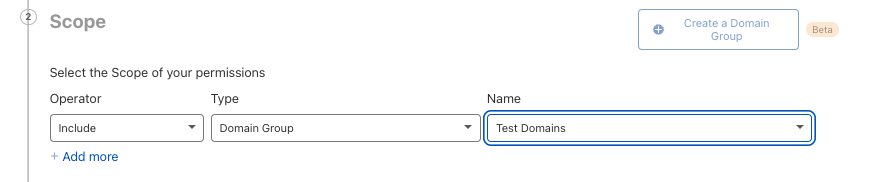

Figure 1 shows a latitude and longitude pair, with the level of detail associated with each decimal place.

Figure 1: Geolocation decimal precision details
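
To make the trade-off concrete, the following Python sketch rounds a coordinate pair to a chosen number of decimal places and estimates the resulting resolution at the equator. It assumes roughly 111.32 km per degree at the equator, which is consistent with the 11 cm figure above for six decimal places. The function names are hypothetical.

```python
# Approximate length of one degree at the equator, in meters.
METERS_PER_DEGREE_AT_EQUATOR = 111_320


def round_position(longitude: float, latitude: float, decimals: int):
    """Round a coordinate pair to the given number of decimal places."""
    if not 0 <= decimals <= 6:
        raise ValueError("decimals must be between 0 and 6")
    return round(longitude, decimals), round(latitude, decimals)


def approximate_resolution_m(decimals: int) -> float:
    """Approximate size, in meters at the equator, of one unit in the
    last decimal place kept."""
    return METERS_PER_DEGREE_AT_EQUATOR / 10 ** decimals


coarse = round_position(-122.335167, 47.608013, 3)
```

With three decimal places, each step corresponds to roughly 111 meters at the equator, which may be enough for city-level use cases while revealing less about a user's exact position.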

Position filtering

Position filtering is a tracker option that reduces cost and reduces jitter from inaccurate device location updates.

- DistanceBased filtering ignores location updates in which devices have moved less than 30 meters (98.4 ft).

- TimeBased filtering evaluates every location update against linked geofence collections, but not every location update is stored. If you send updates more frequently than every 30 seconds, then only one update per 30 seconds is stored for each unique device ID.

- AccuracyBased filtering ignores location updates if the distance moved was less than the measured accuracy provided by the device.

By using filtering options, you can reduce the number of location updates that are sent and stored, thus reducing the level of location detail provided and increasing the level of privacy.
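
To get a feel for how much detail DistanceBased filtering removes, you can approximate it locally. The following Python sketch keeps a position only if it is at least 30 meters from the last kept position, using the haversine formula. It is an illustration of the idea only, not the service's implementation; the function names and the comparison against the last kept position are assumptions.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius, in meters
MIN_DISTANCE_M = 30         # threshold described for DistanceBased filtering


def haversine_m(lon1: float, lat1: float, lon2: float, lat2: float) -> float:
    """Great-circle distance in meters between two lon/lat points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def filter_by_distance(positions, min_distance_m: float = MIN_DISTANCE_M):
    """Keep the first position, then only positions that are at least
    min_distance_m away from the last position that was kept."""
    kept = []
    for lon, lat in positions:
        if not kept or haversine_m(kept[-1][0], kept[-1][1], lon, lat) >= min_distance_m:
            kept.append((lon, lat))
    return kept


# The second point is about 11 m from the first, the third about 111 m.
track = [(0.0, 0.0), (0.0001, 0.0), (0.001, 0.0)]
stored = filter_by_distance(track)
```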

Logging and monitoring

Amazon Location integrates with AWS services that provide the observability needed to help you comply with your organization’s security standards.

To record all actions that were taken by users, roles, or AWS services that access Amazon Location, consider using AWS CloudTrail. CloudTrail provides information on who is accessing your resources, detailing the account ID, principal ID, source IP address, timestamp, and more. Moreover, Amazon CloudWatch helps you collect and analyze metrics related to your Amazon Location resources. CloudWatch also allows you to create alarms based on pre-defined thresholds of call counts. These alarms can create notifications through Amazon Simple Notification Service (Amazon SNS) to automatically alert teams responsible for investigating abnormalities.
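
As an example of a call-count alarm, the following Python sketch builds the parameters for a CloudWatch alarm that notifies an SNS topic. It assumes that Amazon Location publishes a CallCount metric in the AWS/Location namespace with an OperationName dimension; verify these names against the metrics that appear in your account. The function name, alarm name, threshold, and topic ARN are hypothetical.

```python
def build_call_count_alarm(alarm_name: str, operation_name: str,
                           threshold: float, sns_topic_arn: str) -> dict:
    """Return keyword arguments for CloudWatch put_metric_alarm that
    fire when an operation is called more than threshold times in
    five minutes."""
    return {
        "AlarmName": alarm_name,
        "Namespace": "AWS/Location",       # assumed namespace
        "MetricName": "CallCount",         # assumed metric name
        "Dimensions": [{"Name": "OperationName", "Value": operation_name}],
        "Statistic": "Sum",
        "Period": 300,
        "EvaluationPeriods": 1,
        "Threshold": float(threshold),
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
    }


alarm = build_call_count_alarm(
    "LocationPositionUpdateSpike",
    "BatchUpdateDevicePosition",
    10000,
    "arn:aws:sns:us-west-2:111122223333:SecurityAlerts",
)

# Usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   boto3.client("cloudwatch").put_metric_alarm(**alarm)
```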

Conclusion

At AWS, security is our top priority. Security and compliance are a shared responsibility between AWS and the customer: AWS is responsible for protecting the infrastructure that runs all of the services offered in the AWS Cloud, and the customer is responsible for performing all of the necessary security configurations for the solutions they build on top of that infrastructure.

In this blog post, you've learned about the controls and guardrails that Amazon Location provides out of the box to help provide data privacy and data protection to our customers. You also learned about the other mechanisms you can use to enhance your security posture.

Start building your own secure geolocation solutions by following the Amazon Location Developer Guide and learn more about how the service handles security by reading the security topics in the guide.
