Post Syndicated from Simona Badoiu original https://blog.cloudflare.com/cloudflare-zaraz-supports-csp/

Cloudflare Zaraz can be used to manage and load third-party tools on the cloud, achieving significant speed, privacy and security improvements. Content Security Policy (CSP) configuration prevents malicious content from being run on your website.

If you have Cloudflare Zaraz enabled on your website, you don’t have to ask yourself twice if you should enable CSP because there’s no harmful collision between CSP & Cloudflare Zaraz.

Why would Cloudflare Zaraz collide with CSP?

Cloudflare Zaraz, at its core, injects a <script> block on every page where it runs. If the website enforces CSP rules, the injected script can be automatically blocked if inline scripts are not allowed. To prevent this, at the moment of script injection, Cloudflare Zaraz adds a nonce to the script-src policy in order for everything to work smoothly.

Cloudflare Zaraz supports CSP enabled by using both Content-Security-Policy headers or Content-Security-Policy <meta> blocks.

What is CSP?

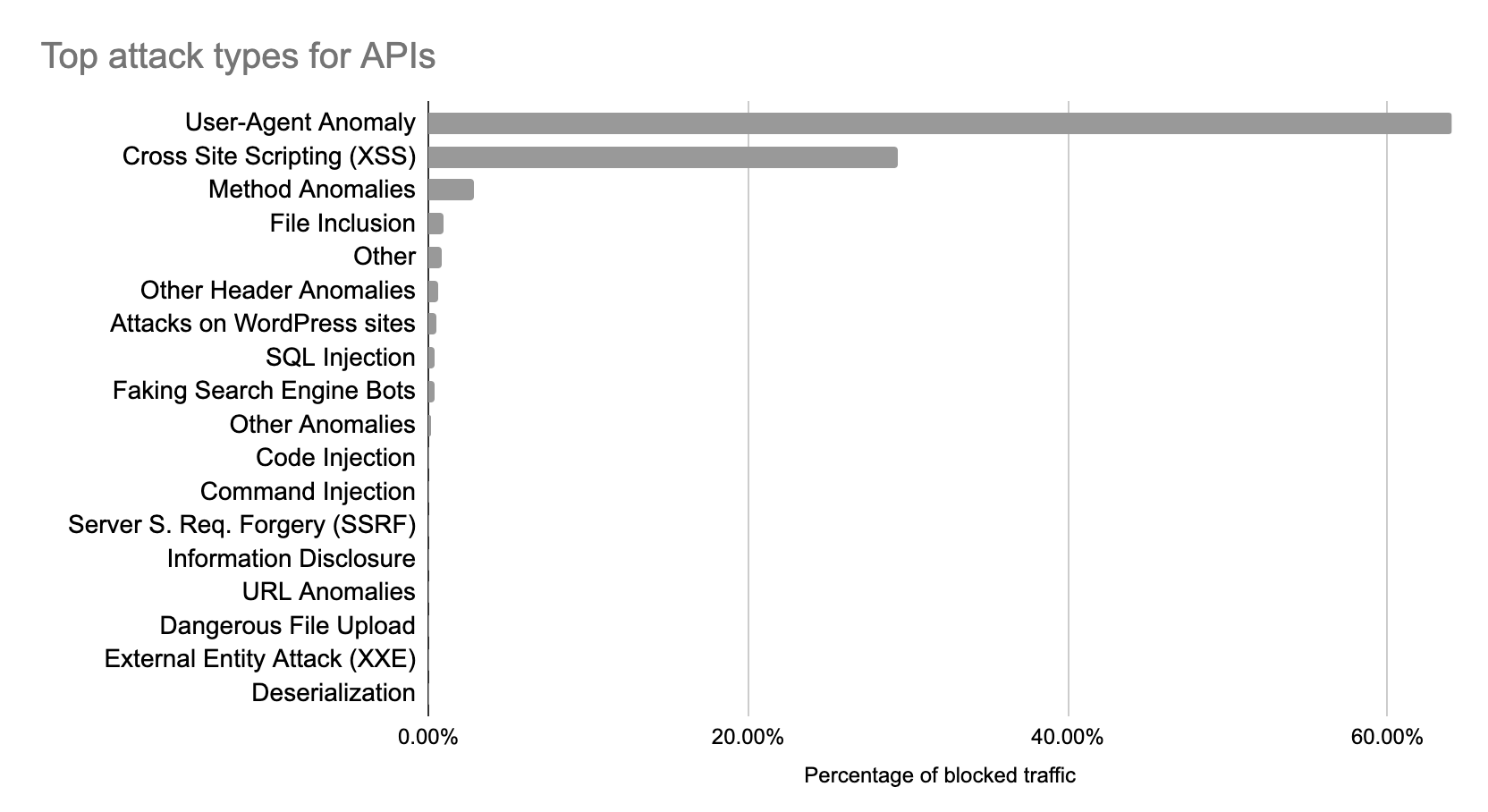

Content Security Policy (CSP) is a security standard meant to protect websites from Cross-site scripting (XSS) or Clickjacking by providing the means to list approved origins for scripts, styles, images or other web resources.

Although CSP is a reasonably mature technology with most modern browsers already implementing the standard, less than 10% of websites use this extra layer of security. A Google study says that more than 90% of the websites using CSP are still leaving some doors open for attackers.

Why this low adoption and poor configuration? An early study on ‘why CSP adoption is failing’ says that the initial setup for a website is tedious and can generate errors, risking doing more harm for the business than an occasional XSS attack.

We’re writing this to confirm that Cloudflare Zaraz will comply with your CSP settings without any other additional configuration from your side and to share some interesting findings from our research regarding how CSP works.

What is Cloudflare Zaraz?

Cloudflare Zaraz aims to make the web faster by moving third-party script bloat away from the browser.

There are tens of third-parties loaded by almost every website on the Internet (analytics, tracking, chatbots, banners, embeds, widgets etc.). Most of the time, the third parties have a slightly small role on a particular website compared to the high-volume and complex code they provide (for a good reason, because it’s meant to deal with all the issues that particular tool can tackle). This code, loaded a huge number of times on every website is simply inefficient.

Cloudflare Zaraz reduces the amount of code executed on the client side and the amount of time consumed by the client with anything that is not directly serving the user and their experience on the web.

At the same time, Cloudflare Zaraz does the integration between website owners and third-parties by providing a common management interface with an easy ‘one-click’ method.

Cloudflare Zaraz & CSP

When auto-inject is enabled, Cloudflare Zaraz ‘bootstraps’ on your website by running a small inline script that collects basic information from the browser (Screen Resolution, User-Agent, Referrer, page URL) and also provides the main `track` function. The `track` function allows you to track the actions your users are taking on your website, and other events that might happen in real time. Common user actions a website will probably be interested in tracking are successful sign-ups, calls-to-action clicks, and purchases.

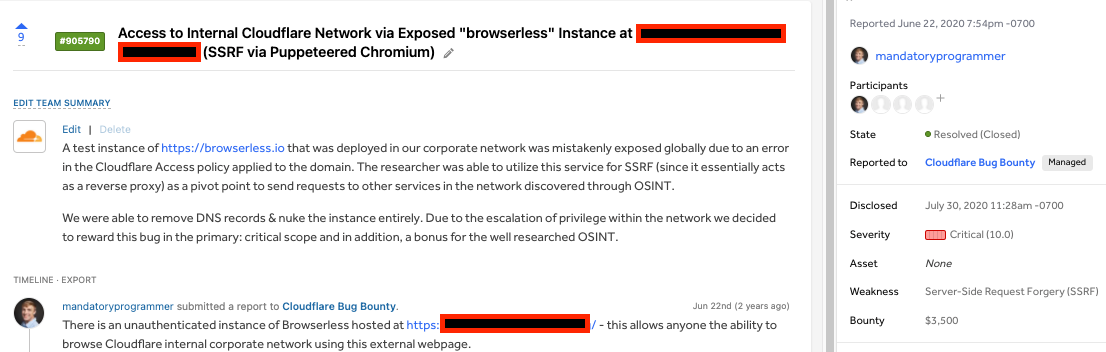

Before adding CSP support, Cloudflare Zaraz would’ve been blocked on any website that implemented CSP and didn’t include ‘unsafe-inline’ in the script-src policy or default-src policy. Some of our users have signaled collisions between CSP and Cloudflare Zaraz so we decided to make peace with CSP once and forever.

To solve the issue, when Cloudflare Zaraz is automatically injected on a website implementing CSP, it enhances the response header by appending a nonce value in the script-src policy. This way we make sure we’re not harming any security that was already enforced by the website owners, and we are still able to perform our duties – making the website faster and more secure by asynchronously running third parties on the server side instead of clogging the browser with irrelevant computation from the user’s point of view.

CSP – Edge Cases

In the following paragraphs we’re describing some interesting CSP details we had to tackle to bring Cloudflare Zaraz to a trustworthy state. The following paragraphs assume that you already know what CSP is, and you know how a simple CSP configuration looks like.

Dealing with multiple CSP headers/<meta> elements

The CSP standard allows multiple CSP headers but, on a first look, it’s slightly unclear how the multiple headers will be handled.

You would think that the CSP rules will be somehow merged and the final CSP rule will be a combination of all of them but in reality the rule is much more simple – the most restrictive policy among all the headers will be applied. Relevant examples can be found in the w3c’s standard description and in this multiple CSP headers example.

The rule of thumb is that “A connection will be allowed only if all the CSP headers will allow it”.

In order to make sure that the Cloudflare Zaraz script is always running, we’re adding the nonce value to all the existing CSP headers and/or <meta http-equiv=”Content-Security-Policy”> blocks.

Adding a nonce to a CSP header that already allows unsafe-inline

Just like when sending multiple CSP headers, when configuring one policy with multiple values, the most restrictive value has priority.

An illustrative example for a given CSP header:

Content-Security-Policy: default-src ‘self’; script-src ‘unsafe-inline’ ‘nonce-12345678’

If ‘unsafe-inline’ is set in the script-src policy, adding nonce-123456789 will disable the effect of unsafe-inline and will allow only the inline script that mentions that nonce value.

This is a mistake we already made while trying to make Cloudflare Zaraz compliant with CSP. However, we solved it by adding the nonce only in the following situations:

- if ‘unsafe-inline’ is not already present in the header

- If ‘unsafe-inline’ is present in the header but next to it, a ‘nonce-…’ or ‘sha…’ value is already set (because in this situation ‘unsafe-inline’ is practically disabled)

Adding the script-src policy if it doesn’t exist already

Another interesting case we had to tackle was handling a CSP header that didn’t include the script-src policy, a policy that was relying only on the default-src values. In this case, we’re copying all the default-src values to a new script-src policy, and we’re appending the nonce associated with the Cloudflare Zaraz script to it(keeping in mind the previous point of course)

Notes

Cloudflare Zaraz is still not 100% compliant with CSP because some tools still need to use eval() – usually for setting cookies, but we’re already working on a different approach so, stay tuned!

The Content-Security-Policy-Report-Only header is not modified by Cloudflare Zaraz yet – you’ll be seeing error reports regarding the Cloudflare Zaraz <script> element if Cloudflare Zaraz is enabled on your website.

Content-Security-Policy Report-Only can not be set using a <meta> element

Conclusion

Cloudflare Zaraz supports the evolution of a more secure web by seamlessly complying with CSP.

If you encounter any issue or potential edge-case that we didn’t cover in our approach, don’t hesitate to write on Cloudflare Zaraz’s Discord Channel, we’re always there fixing issues, listening to feedback and announcing exciting product updates.

For more details on how Cloudflare Zaraz works and how to use it, check out the official documentation.

Resources:

[1] https://wiki.mozilla.org/index.php?title=Security/CSP/Spec&oldid=133465

[2] https://storage.googleapis.com/pub-tools-public-publication-data/pdf/45542.pdf

[3] https://en.wikipedia.org/wiki/Content_Security_Policy

[4] https://www.w3.org/TR/CSP2/#enforcing-multiple-policies

[5] https://chrisguitarguy.com/2019/07/05/working-with-multiple-content-security-policy-headers/

[6]https://www.bitsight.com/blog/content-security-policy-limits-dangerous-activity-so-why-isnt-everyone-doing-it

[7]https://wkr.io/publication/raid-2014-content_security_policy.pdf