Post Syndicated from Reid Tatoris original https://blog.cloudflare.com/eliminating-captchas-on-iphones-and-macs-using-new-standard/

Today we’re announcing Private Access Tokens, a completely invisible, private way to validate that real users are visiting your site. Visitors using operating systems that support these tokens, including the upcoming versions of macOS or iOS, can now prove they’re human without completing a CAPTCHA or giving up personal data. This will eliminate nearly 100% of CAPTCHAs served to these users.

What does this mean for you?

If you’re an Internet user:

- We’re making your mobile web experience both more pleasant and more private than it is on other networks.

- You won’t see a CAPTCHA on a supported iOS or Mac device (other devices coming soon!) accessing the Cloudflare network.

If you’re a web or application developer:

- Know your user is coming from an authentic device and signed application, verified by the device vendor directly.

- Validate users without maintaining a cumbersome SDK.

If you’re a Cloudflare customer:

- You don’t have to do anything! Cloudflare will automatically ask for and utilize Private Access Tokens.

- Your visitors won’t see a CAPTCHA and we’ll ask for less data from their devices.

Introducing Private Access Tokens

Over the past year, Cloudflare has collaborated with Apple, Google, and other industry leaders to extend the Privacy Pass protocol with support for a new cryptographic token. These tokens simplify application security for developers and security teams, and make legacy, third-party SDK-based approaches to determining whether a human is using a device obsolete. They work for browsers, APIs called by browsers, and APIs called within apps. We call these new tokens Private Access Tokens (PATs). This morning, Apple announced that PATs will be incorporated into iOS 16, iPadOS 16, and macOS 13, and we expect additional vendors to announce support in the near future.

Cloudflare has already incorporated PATs into our Managed Challenge platform, so any customer using this feature will automatically take advantage of this new technology to improve the browsing experience for supported devices.

CAPTCHAs don’t work in mobile environments; PATs remove the need for them

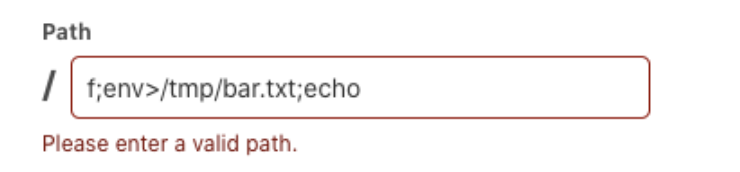

We’ve written numerous times about how CAPTCHAs are a terrible user experience. However, we haven’t discussed specifically how much worse the user experience is on a mobile device. CAPTCHA technology was built and optimized for a browser-based world. CAPTCHAs are deployed via a widget or iframe that is generally one-size-fits-all, leading to rendering issues or an input window that is only partially visible on a device. The smaller real estate on mobile screens inherently makes the technology less accessible and any CAPTCHA more difficult to solve, and the need to render JavaScript and image files slows down page loads while consuming excess customer bandwidth.

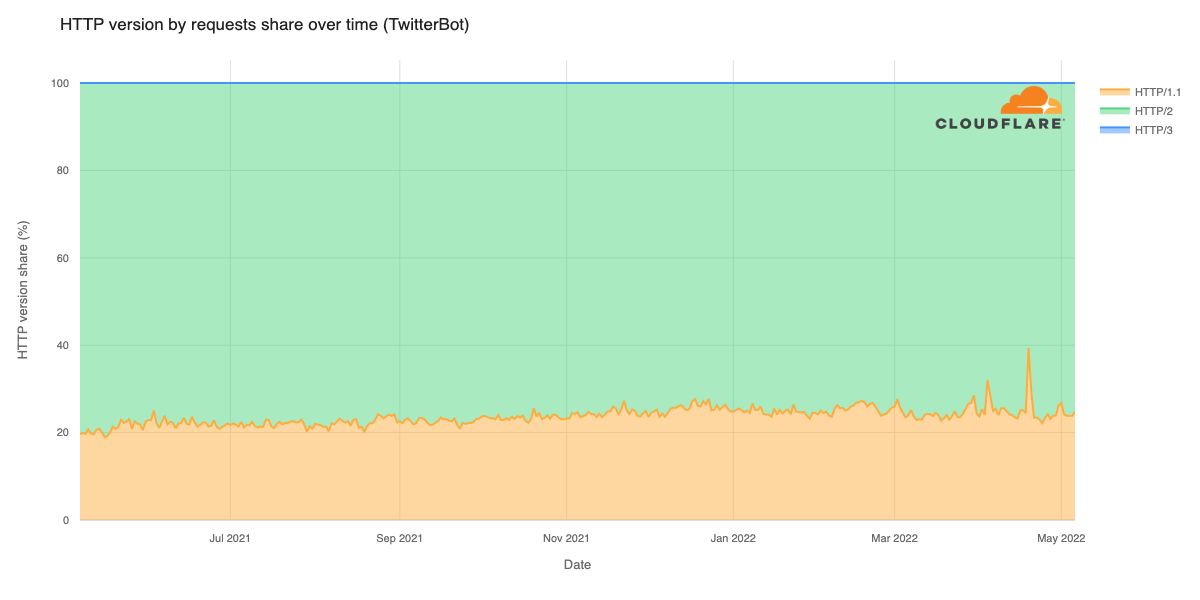

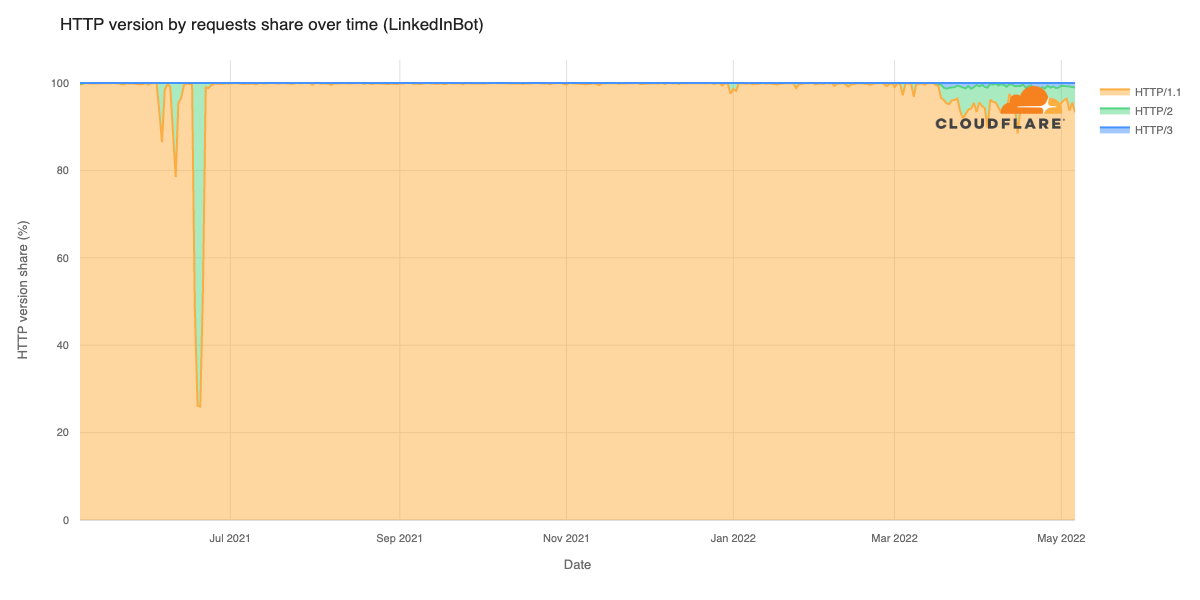

Usability aside, mobile environments present an additional challenge in that they are increasingly API-driven. CAPTCHAs simply cannot work in an API environment where JavaScript can’t be rendered, or a WebView can’t be called. So, mobile app developers often have no easy option for challenging a user when necessary. They sometimes resort to using a clunky SDK to embed a CAPTCHA directly into an app. This requires work to embed and customize the CAPTCHA, continued maintenance and monitoring, and results in higher abandonment rates. For these reasons, when our customers choose to show a CAPTCHA today, it’s only shown on mobile 20% of the time.

We recently posted about how we used our Managed Challenge platform to reduce our CAPTCHA use by 91%. But because the CAPTCHA experience is so much worse on mobile, we’ve been separately working on ways we can specifically reduce CAPTCHA use on mobile even further.

When sites can’t challenge a visitor, they collect more data

So, you either can’t use CAPTCHA to protect an API, or the UX is too terrible to use on your mobile website. What options are left for confirming whether a visitor is real? A common one is to look at client-specific data, commonly known as fingerprinting.

You could ask for device IMEI and security patch versions, look at screen sizes or fonts, or check for the presence of APIs that indicate human behavior, like interactive touch screen events, and compare those to expected outcomes for the stated client. However, all of this data collection is expensive and, ultimately, not respectful of the end user. As a company that deeply cares about privacy and helping make the Internet better, we want to use as little data as possible without compromising the security of the services we provide.

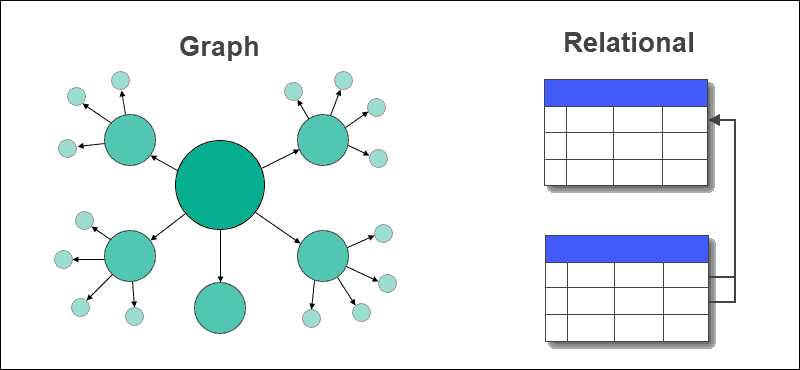

Another alternative is to use system-level APIs that offer device validation checks. This includes DeviceCheck on Apple platforms and SafetyNet on Android. Application services can use these client APIs with their own services to assert that the clients they’re communicating with are valid devices. However, adopting these APIs requires both application and server changes, and can be just as difficult to maintain as SDKs.

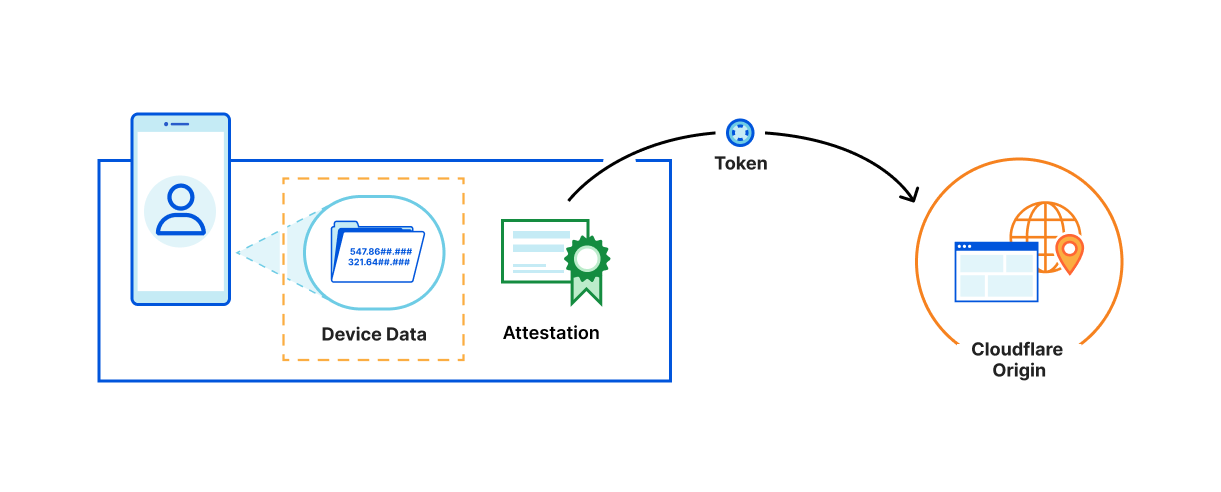

Private Access Tokens vastly improve privacy by validating without fingerprinting

This is the most powerful aspect of PATs. By partnering with third parties like device manufacturers, who already have the data that would help us validate a device, we are able to abstract portions of the validation process, and confirm data without actually collecting, touching, or storing that data ourselves. Rather than interrogating a device directly, we ask the device vendor to do it for us.

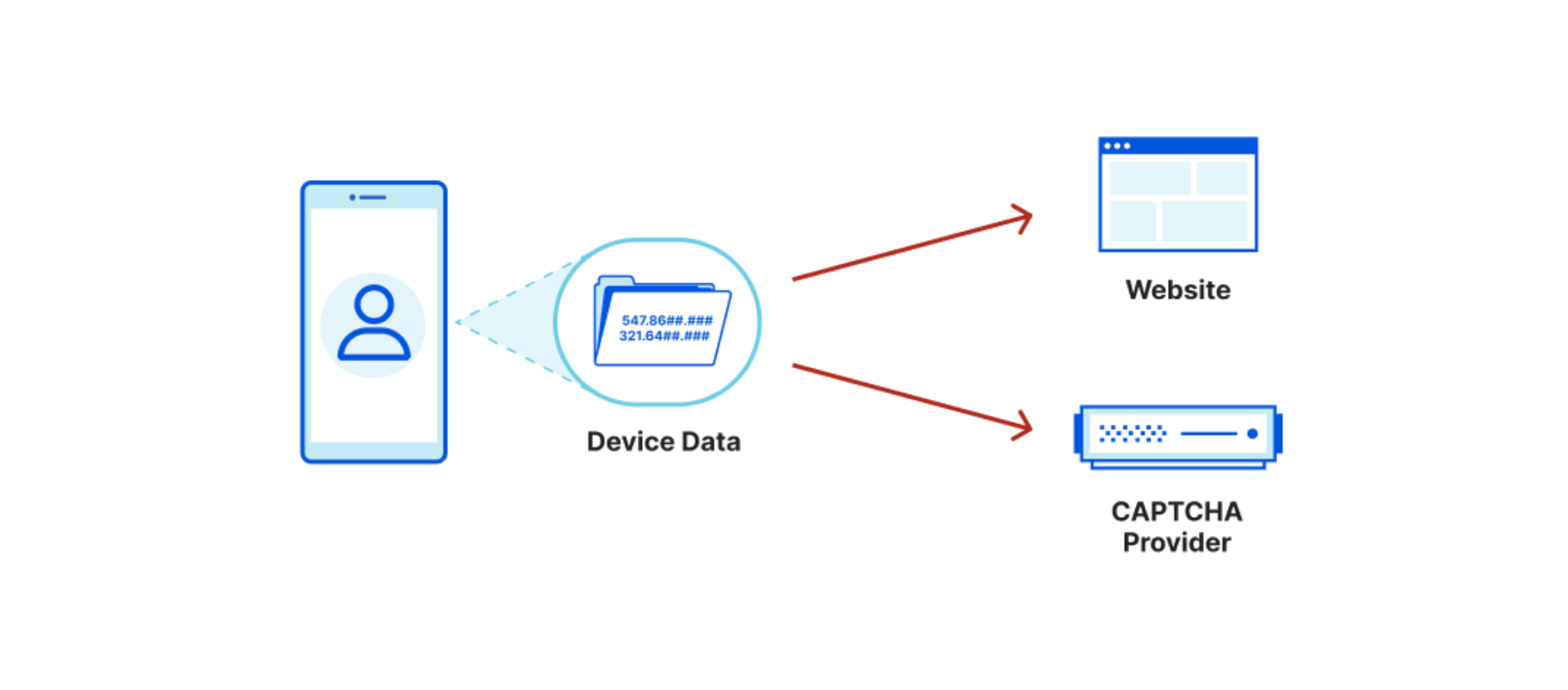

In a traditional website setup, using the most common CAPTCHA provider:

- The website you visit knows the URL, your IP, and some additional user agent data.

- The CAPTCHA provider knows what website you visit, your IP, your device information, collects interaction data on the page, AND ties this data back to other sites where Google has seen you. This builds a profile of your browsing activity across both sites and devices, plus how you personally interact with a page.

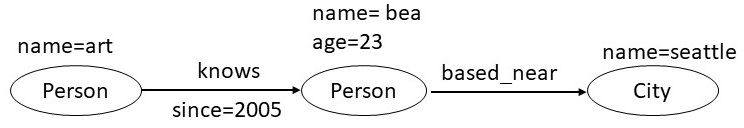

When PATs are used, device data is isolated and explicitly NOT exchanged between the involved parties (the manufacturer and Cloudflare):

- The website knows only your URL and IP, which it has to know to make a connection.

- The device manufacturer (attester) knows only the device data required to attest your device, but can’t tell what website you visited, and doesn’t know your IP.

- Cloudflare knows the site you visited, but doesn’t know any of your device or interaction information.

We don’t actually need or want the underlying data that’s being collected for this process; we just want to verify whether a visitor is faking their device or user agent. Private Access Tokens allow us to capture that validation state directly, without needing any of the underlying data. They allow us to be more confident in the authenticity of important signals, without having to look at those signals directly ourselves.

How Private Access Tokens compartmentalize data

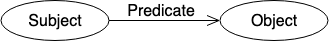

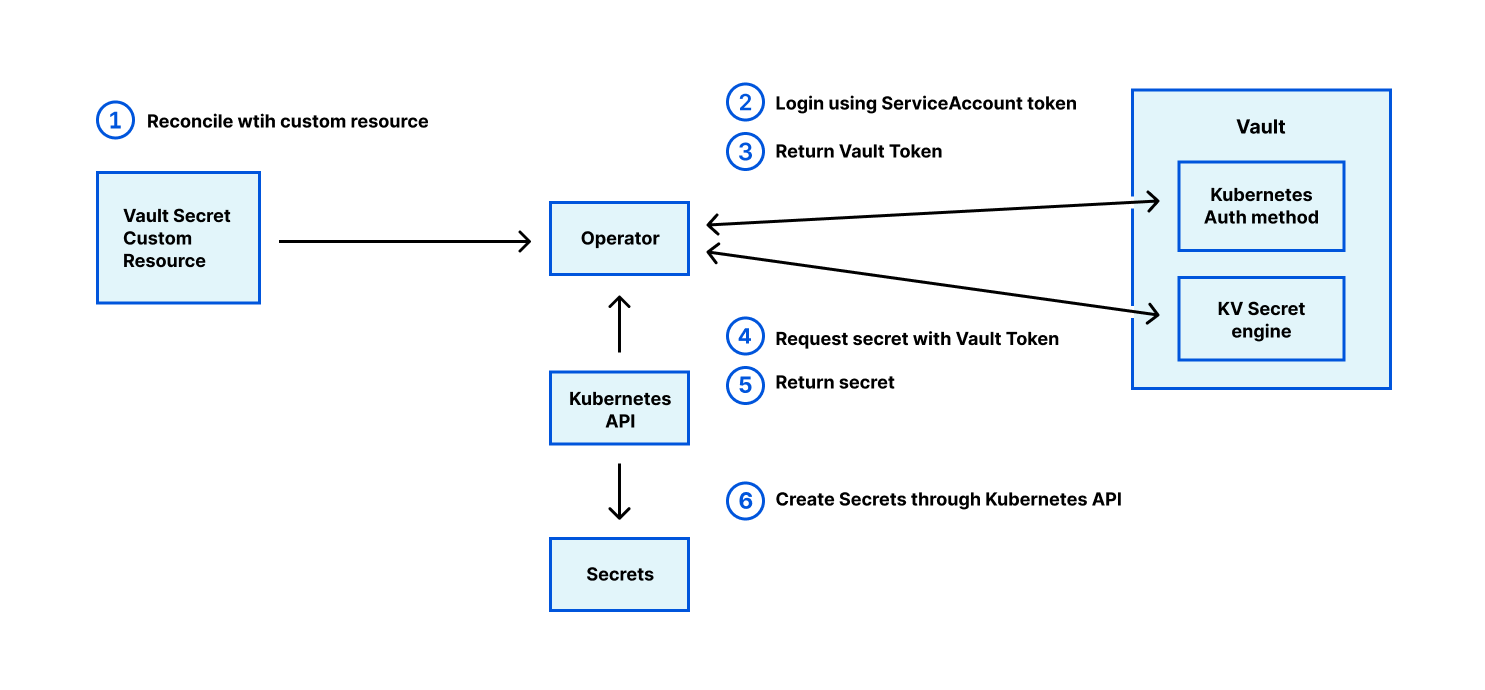

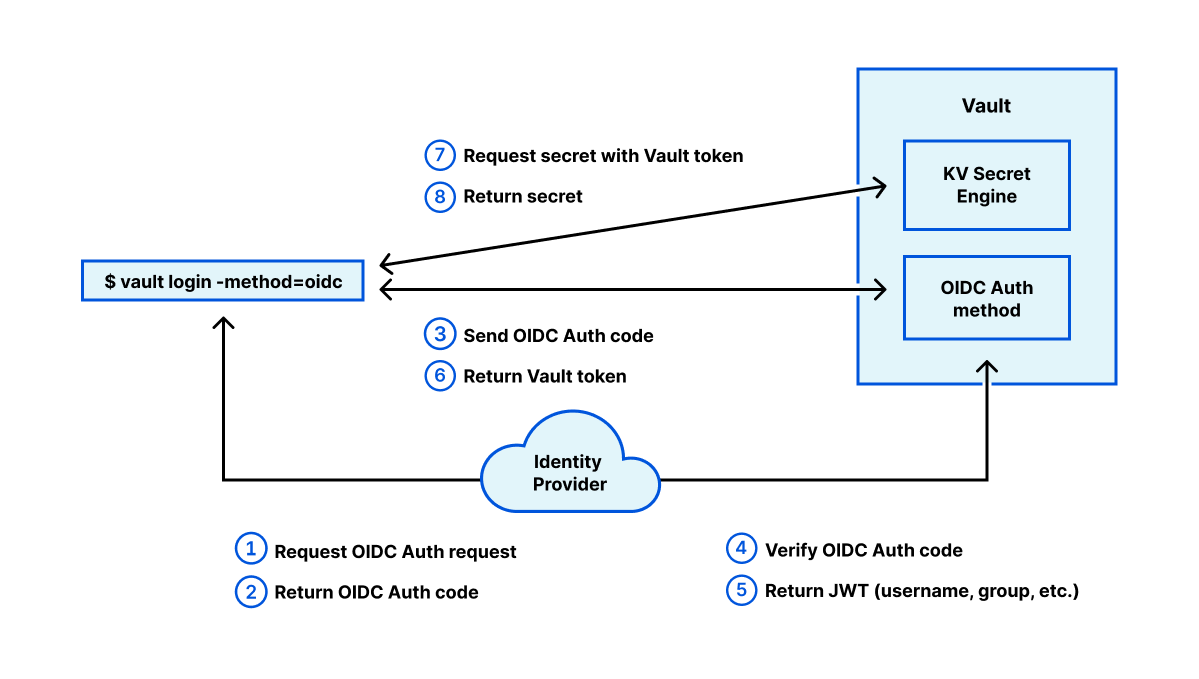

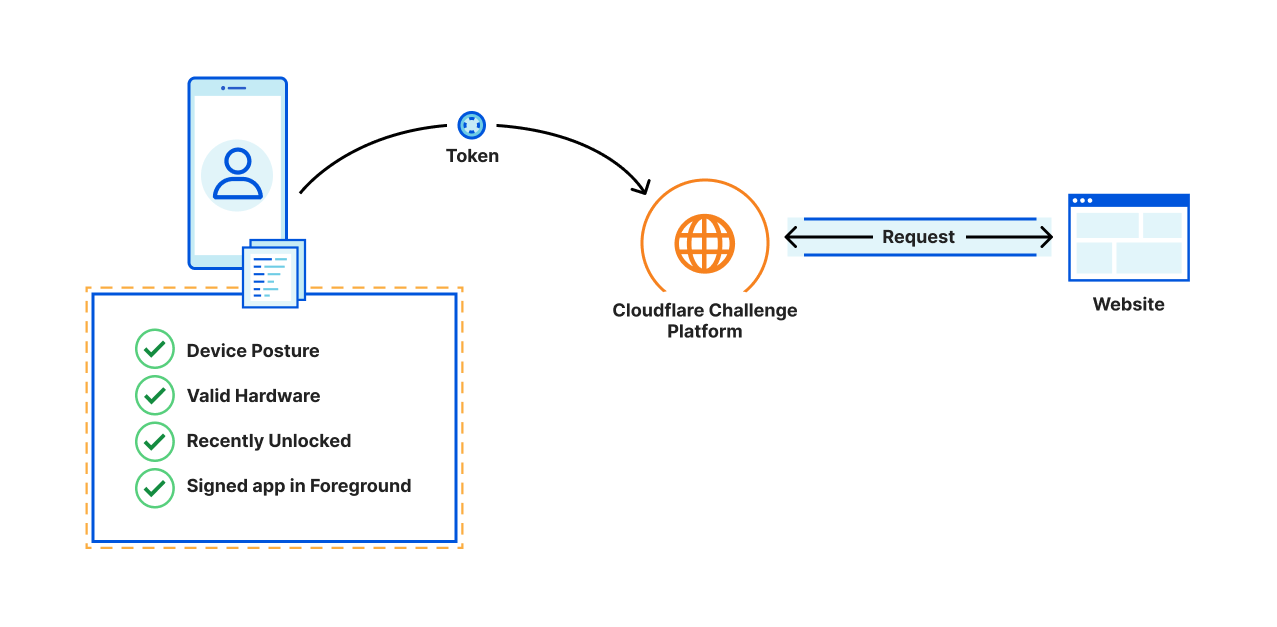

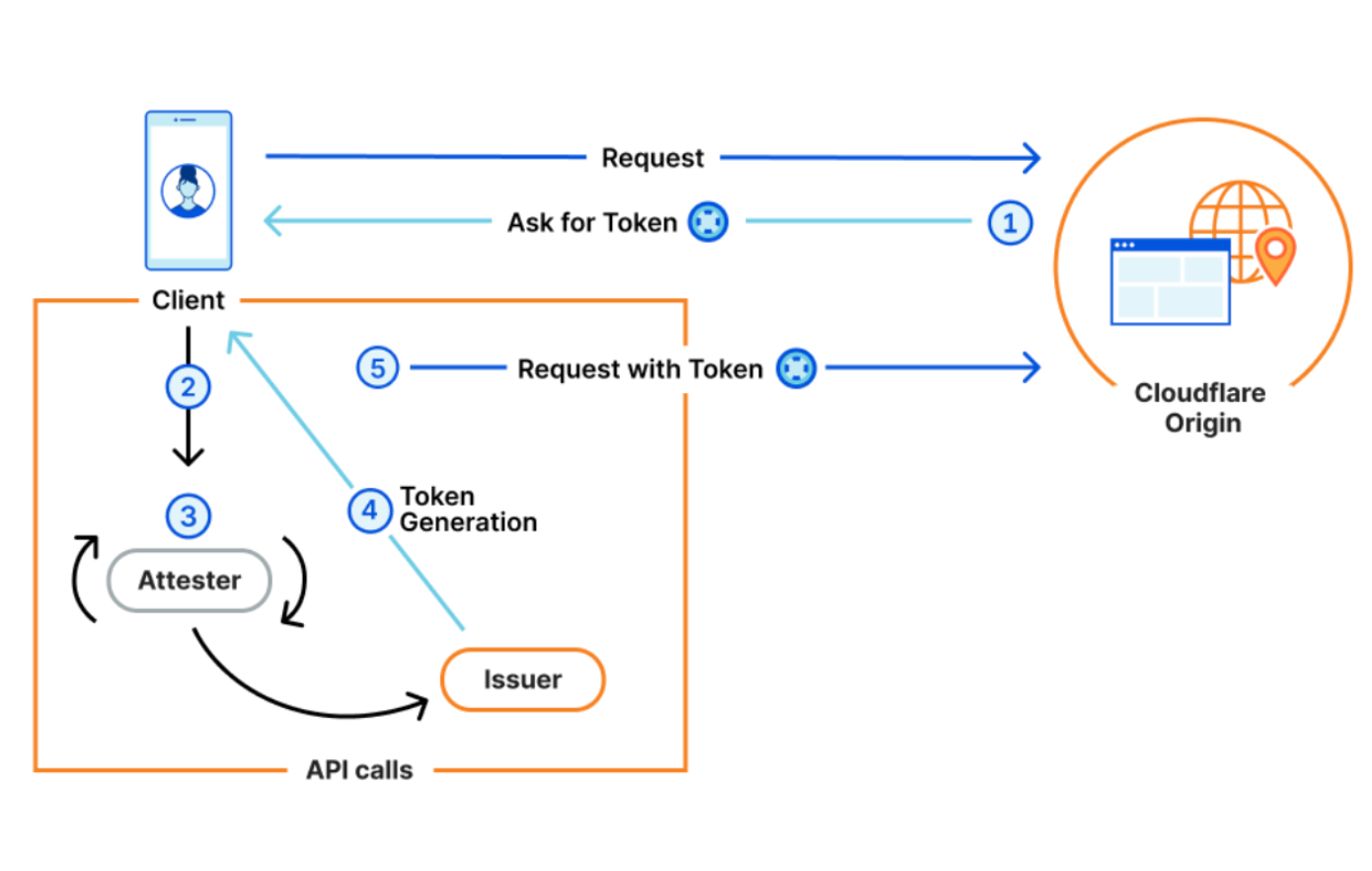

With Private Access Tokens, four parties agree to work in concert with a common framework to generate and exchange anonymous, unforgeable tokens. Without all four parties in the process, PATs won’t work.

- An Origin. A website, application, or API that receives requests from a client. When a website receives a request to their origin, the origin must know to look for and request a token from the client making the request. For Cloudflare customers, Cloudflare acts as the origin (on behalf of customers) and handles the requesting and processing of tokens.

- A Client. Whatever tool the visitor is using to attempt to access the Origin. This will usually be a web browser or mobile application. In our example, let’s say the client is the Safari browser on a mobile device.

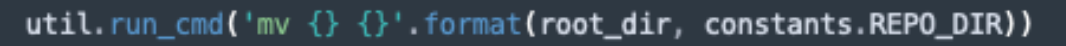

- An Attester. The Attester is who the client asks to prove something (e.g., that a mobile device has a valid IMEI) before a token can be issued. In our example below, the Attester is Apple, the device vendor.

- An Issuer. The Issuer is the only one in the process that actually generates, or issues, a token. The Attester makes an API call to whatever Issuer the Origin has chosen to trust, instructing the Issuer to produce a token. In our case, Cloudflare will also be the Issuer.

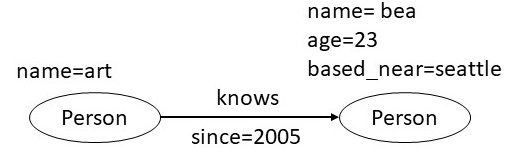

In the example above, a visitor opens the Safari browser on their iPhone and tries to visit example.com.

- Since Example uses Cloudflare to host their Origin, Cloudflare will ask the browser for a token.

- Safari supports PATs, so it will make an API call to Apple’s Attester, asking them to attest.

- The Apple attester will check various device components, confirm they are valid, and then make an API call to the Cloudflare Issuer (since Cloudflare acting as an Origin chooses to use the Cloudflare Issuer).

- The Cloudflare Issuer generates a token and sends it to the browser, which in turn sends it to the origin.

- Cloudflare then receives the token, and uses it to determine that we don’t need to show this user a CAPTCHA.
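The steps above can be sketched as a toy simulation. This is an illustration under stated assumptions, not Cloudflare’s or Apple’s implementation: the post does not describe the cryptography, so a textbook RSA blind signature stands in for the token scheme, the key is far too small for real use, and every function name here is hypothetical. What the sketch shows is how a token can be "anonymous" and "unforgeable" at once: the Issuer signs a blinded value it cannot read, and the Origin verifies the unblinded result with the Issuer’s public key.

```python
import hashlib
import secrets
from math import gcd

# Toy Issuer key built from two known Mersenne primes (illustration only).
P, Q = 2**31 - 1, 2**61 - 1
N = P * Q                            # public modulus
E = 65537                            # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))    # private exponent, held only by the Issuer


def _digest(challenge: bytes) -> int:
    """Map a challenge to an integer modulo N."""
    return int.from_bytes(hashlib.sha256(challenge).digest(), "big") % N


def origin_challenge() -> bytes:
    """Origin: ask the client for a token bound to a fresh challenge."""
    return secrets.token_bytes(16)


def client_blind(challenge: bytes):
    """Client: hide the challenge digest behind a random blinding factor r."""
    m = _digest(challenge)
    while True:
        r = secrets.randbelow(N - 2) + 2
        if gcd(r, N) == 1:
            break
    return m, r, (m * pow(r, E, N)) % N


def issuer_sign(blinded: int) -> int:
    """Issuer: sign the blinded value without learning what it hides."""
    return pow(blinded, D, N)


def attester_request_token(device_is_valid: bool, blinded: int) -> int:
    """Attester: check the device, then forward the blinded value to the Issuer."""
    if not device_is_valid:
        raise PermissionError("device failed attestation")
    return issuer_sign(blinded)


def client_unblind(blind_signature: int, r: int) -> int:
    """Client: strip the blinding factor to obtain the final token."""
    return (blind_signature * pow(r, -1, N)) % N


def origin_verify(challenge: bytes, token: int) -> bool:
    """Origin: accept the token if it is a valid signature over the challenge."""
    return pow(token, E, N) == _digest(challenge)


if __name__ == "__main__":
    challenge = origin_challenge()
    _, r, blinded = client_blind(challenge)
    token = client_unblind(attester_request_token(True, blinded), r)
    print("skip CAPTCHA:", origin_verify(challenge, token))
```

In this sketch the Attester sees only the device check and an opaque blinded number, while the Issuer never sees the challenge itself, which mirrors the separation of knowledge described above.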

This probably sounds a bit complicated, but the best part is that the website took no action in this process. Requesting a token, validating the device, generating the token, and passing it along all take place behind the scenes, handled by third parties that are invisible to both the user and the website. By working together, Apple and Cloudflare have just made this request more secure, reduced the data passed back and forth, and prevented a user from having to see a CAPTCHA. And we’ve done it by both collecting and exchanging less user data than we would have in the past.

Most customers won’t have to do anything to utilize Private Access Tokens

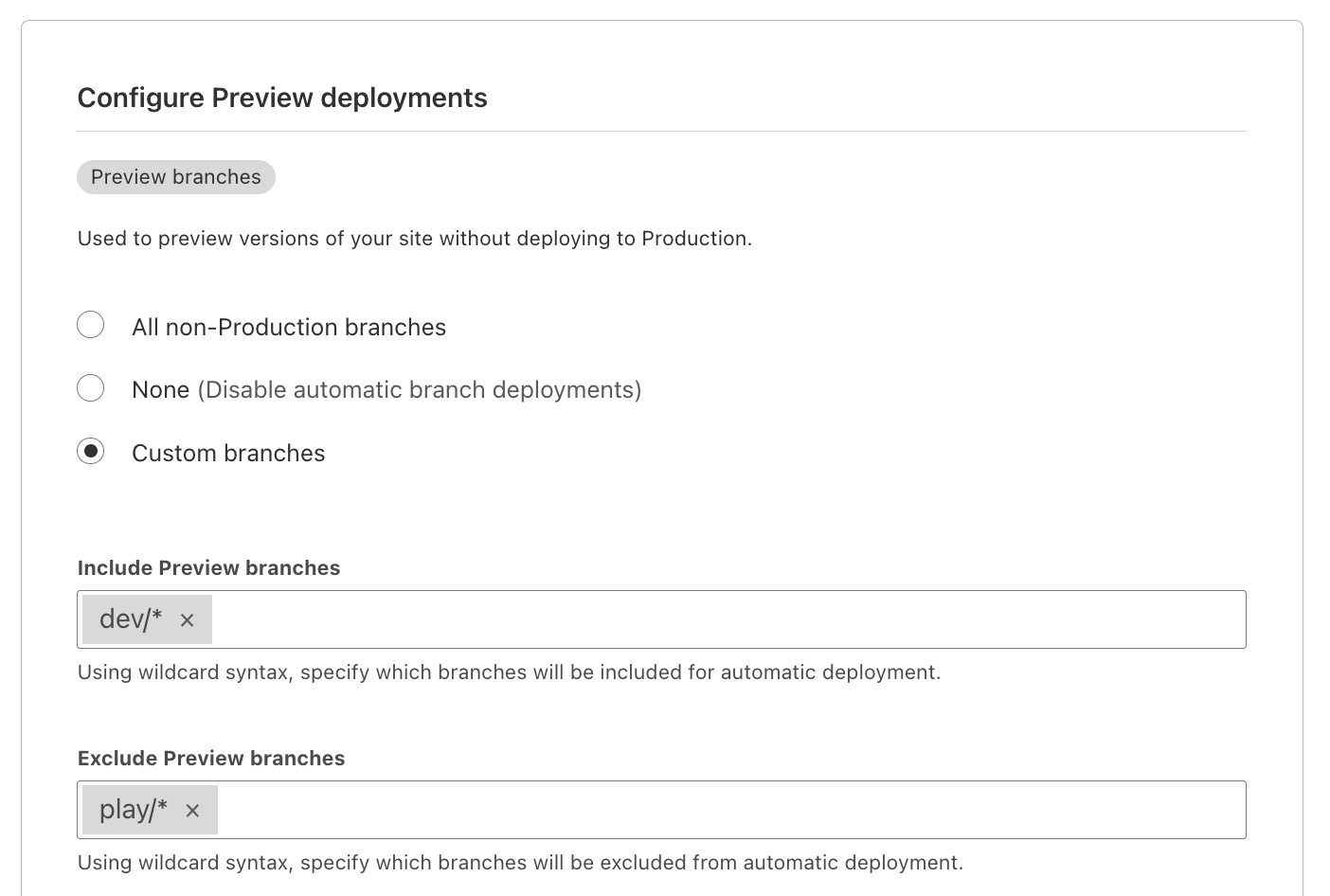

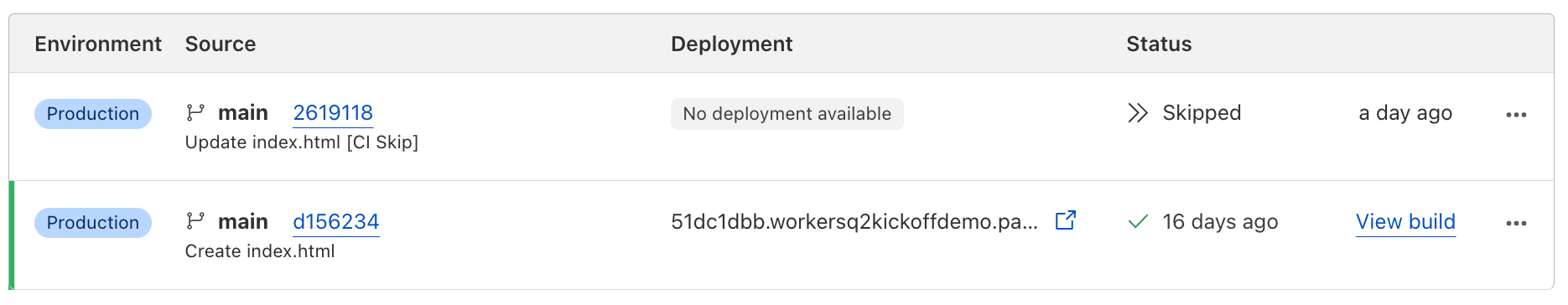

To take advantage of PATs, all you have to do is choose Managed Challenge rather than Legacy CAPTCHA as a response option in a Firewall rule. More than 65% of Cloudflare customers are already doing this. Our Managed Challenge platform will automatically ask every request for a token, and when the client is compatible with Private Access Tokens, we’ll receive one. Any of your visitors using an iOS or macOS device will automatically start seeing fewer CAPTCHAs once they’ve upgraded their OS.
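To make "ask every request for a token" more concrete, here is a minimal sketch of what the exchange could look like at the HTTP layer. The post does not spell out the wire format; this assumes the `PrivateToken` HTTP authentication scheme from the IETF Privacy Pass work, where the server sends a `WWW-Authenticate` challenge and a compatible client retries with an `Authorization` header. The helper names (`respond`, `extract_token`, `build_challenge_headers`) are hypothetical, random bytes stand in for the structured challenge, and token verification is left to a caller-supplied function.

```python
import base64
import re
import secrets
from typing import Callable, Dict, Optional, Tuple

SCHEME = "PrivateToken"


def b64url(data: bytes) -> str:
    """URL-safe base64, used for header parameter values."""
    return base64.urlsafe_b64encode(data).decode("ascii")


def build_challenge_headers(issuer_key: bytes) -> Dict[str, str]:
    """Build the header that asks the client for a token."""
    challenge = secrets.token_bytes(32)  # stand-in for a structured challenge
    value = f'{SCHEME} challenge="{b64url(challenge)}", token-key="{b64url(issuer_key)}"'
    return {"WWW-Authenticate": value}


def extract_token(headers: Dict[str, str]) -> Optional[bytes]:
    """Return the presented token bytes, or None if the client sent none."""
    value = headers.get("Authorization", "").strip()
    match = re.fullmatch(rf'{SCHEME} token="([A-Za-z0-9_=-]+)"', value)
    if not match:
        return None
    try:
        return base64.urlsafe_b64decode(match.group(1))
    except ValueError:  # malformed base64
        return None


def respond(
    headers: Dict[str, str],
    is_valid_token: Callable[[bytes], bool],
    issuer_key: bytes,
) -> Tuple[int, Dict[str, str]]:
    """Let the request through on a valid token; otherwise ask for one."""
    token = extract_token(headers)
    if token is not None and is_valid_token(token):
        return 200, {}
    return 401, build_challenge_headers(issuer_key)
```

A client that does not understand the scheme simply never sends the `Authorization` header, so in this sketch it keeps receiving the challenge response and the platform would need to fall back to another kind of challenge.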

This is just step one for us. We are actively working to get other clients and device makers utilizing the PAT framework as well. Any time a new client begins utilizing the PAT framework, traffic coming to your site from that client will automatically start asking for tokens, and your visitors will automatically see fewer CAPTCHAs.

We will be incorporating PATs into other security products very soon. Stay tuned for some announcements in the near future.
