Post Syndicated from Vittorio Cioe original https://blog.zabbix.com/lift-and-shift-your-zabbix-to-oracle-cloud-with-mysql-database-service/12792/

If you are tired of administering the infrastructure on your own and would prefer to gain time to focus on real monitoring activities rather than costly platform upgrades, you can easily lift and shift your MySQL-based Zabbix installation stack to Oracle Cloud.

Contents

I. Moving to the Cloud (1:46)

II. Moving Zabbix to Oracle Cloud (2:41)

1. Planning migration (3:22)

2. Migrating Zabbix to Oracle Cloud (6:17)

3. Migrating the database to MySQL Database Service (8:47)

III. Questions & Answers (15:12)

Moving to the Cloud

Data is increasingly moving to the cloud: consumer data first, followed by enterprise data, as enterprises tend to be a bit slower in adopting new technologies.

Data moving to the cloud

Oracle Cloud Infrastructure, OCI, is the 4th cloud provider in the Cloud Infrastructure Ranking of the Gartner Magic Quadrant based on ‘Completeness of Vision’ and ‘Ability to Execute’.

OCI is available in 26 regions and has 26 data centers across the world with 12 more planned.

26 Regions Live, 12+ Planned

24+ Industry and Regional Certifications

Moving Zabbix to Oracle Cloud

With Zabbix in the Oracle Cloud you can:

- get the latest updates on the technology stack, minimizing downtime and service windows.

- convert the time you spend managing your monitoring platform into the time you spend monitoring your platforms.

- leverage the most secure and cost-effective cloud platform in the market, including security information and security updates made available by OCI.

Planning migration

An on-premise Zabbix instance consists of clients, proxies, the management server, the interface, and the database. To plan an effective migration, note that only the last three components need to be migrated. Basically, we need:

- the server configuration;

- the on-premise network topology, that is, the topology of clients and proxies, to understand what can communicate with the outside and what would have to go over VPN; and

- the database.

Migration requirements

We also need to set up the following in the OCI tenancy:

- MySQL Database System,

- Compute instance for the Zabbix Server,

- storage for database and backup,

- networking/load balancing.

The target architecture involves setting up the VPN from your data center to the Oracle cloud tenancy and deploying the load balancer, the Zabbix server in redundancy over availability domains, and the MySQL database in a separate subnet.

Required Components:

• Cloud Networking,

• Zabbix Cloud Image,

• MySQL Database Service,

• VPN Connection for client/proxies.

Oracle Cloud target architecture for Zabbix

You can also have a lighter setup, for instance, with proxies communicating over TLS connections over the Internet or communicating directly with the Zabbix Server in the Oracle Cloud, and the Zabbix server interfacing with the database. Here, you will need fewer elements: server, database, and VCN.

Oracle Cloud target architecture for Zabbix — a simpler solution

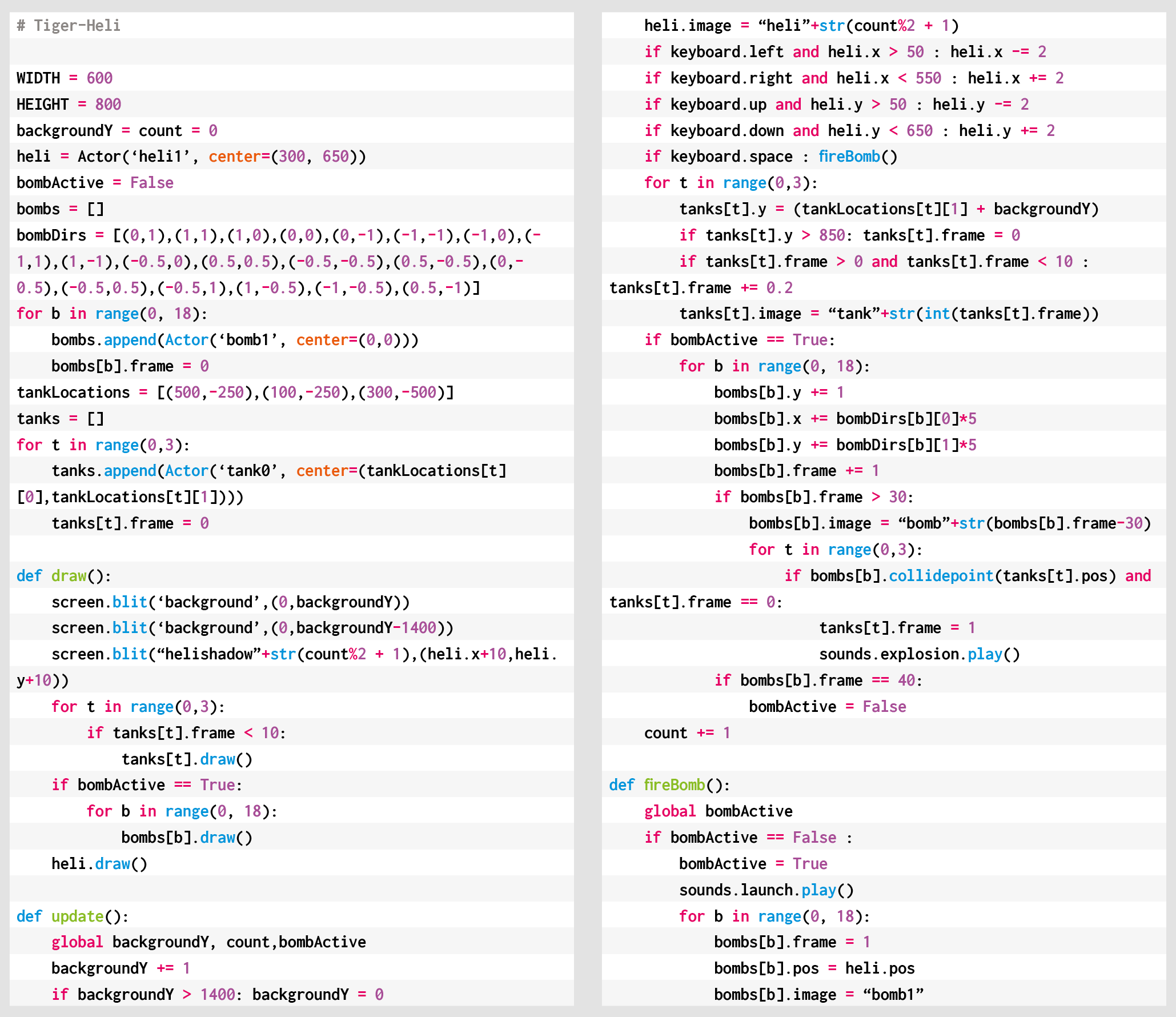

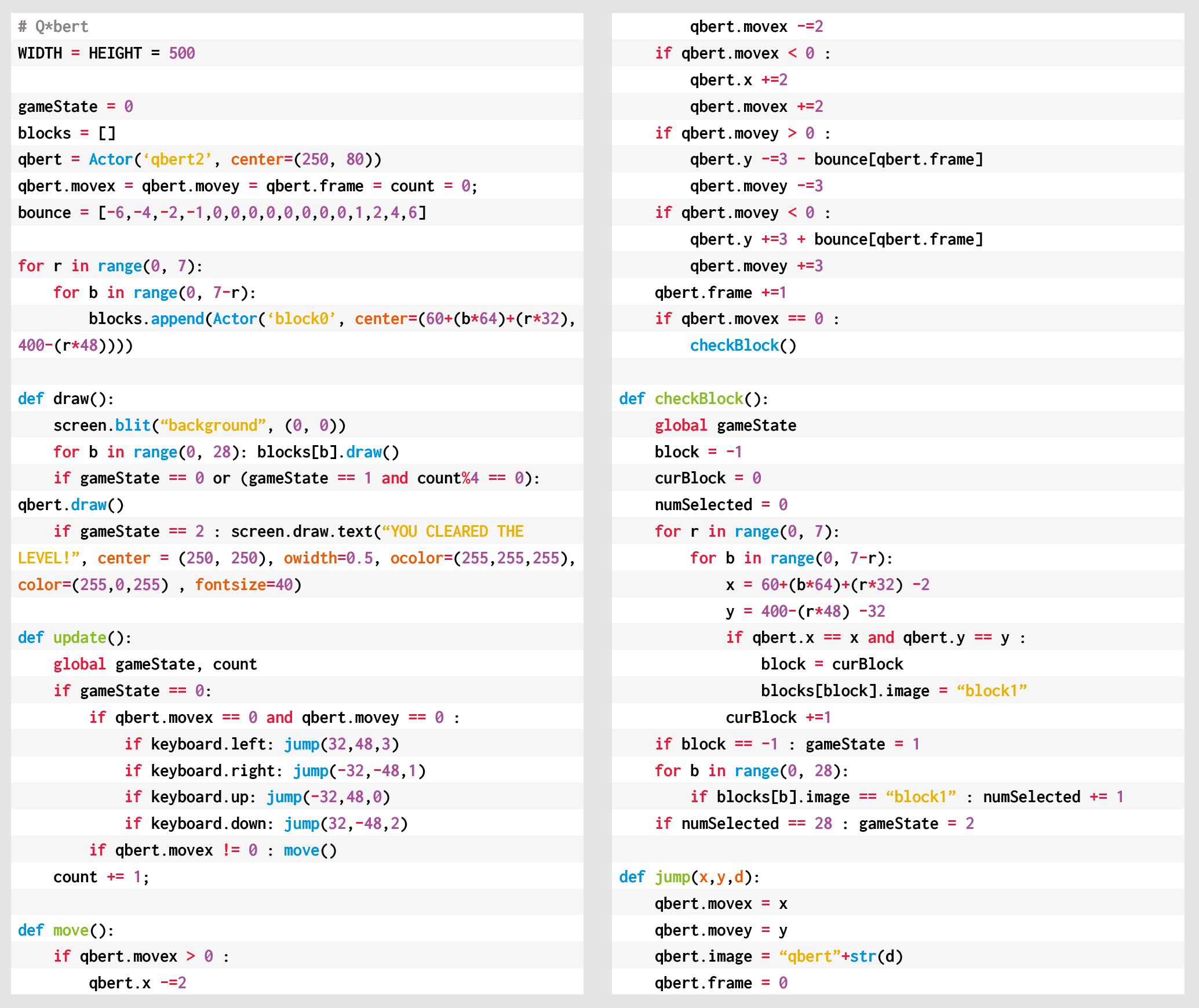

Migrating Zabbix to Oracle Cloud

Zabbix migration to the Oracle Cloud is straightforward.

1. Before you begin:

- set up tenancy and compartments,

- set up cloud networking — public and private VCN.

2. Zabbix deployment on the VM:

- select one-click deployment or DIY — use the official Zabbix OCI Marketplace Image or deploy an OCI Compute Instance and install manually,

- choose the desired Compute ‘shape’ during deployment.

3. Configuration:

- start the instance,

- edit the config file,

- point to the database with the IP address, username, and password (to do that, you’ll need to open several ports in the cloud network via the GUI).
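As an illustration of the configuration step, the database-related part of the Zabbix server configuration file might look like the excerpt below. This is only a sketch: the parameter names are standard `zabbix_server.conf` settings, but the file path, IP address, schema name, user, and password are placeholders and not values from the original setup.

```
# /etc/zabbix/zabbix_server.conf (typical package location; excerpt)
# All values below are placeholders for illustration.
DBHost=10.0.1.10
DBPort=3306
DBName=zabbix
DBUser=zabbix
DBPassword=<your-password>
```

Port 3306 is the classic MySQL protocol port, so it is one of the ports that has to be allowed between the Zabbix server and the database endpoint in the cloud network.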

The OCI infrastructure allows for multiple choices. The Zabbix server is lightweight software, but it still requires resources. In the majority of cases, a powerful VM will be enough. Otherwise, the other compute options of the Oracle Cloud are available.

Compute services for any enterprise use case

In the Oracle Cloud you'll have the bare metal option (physical machines dedicated to a single customer), the Kubernetes container engine, and a lot of fast storage possibilities, which end up being quite cheap.

Migrating the database to MySQL Database Service

MySQL Database Service is the managed offer for MySQL in Oracle Cloud, fully developed, managed, and supported by the MySQL team. It is secure and provides the latest features as it leverages the Oracle Cloud, which has been rated by various sources as one of the most secure cloud platforms.

In addition, the platform is built on the MySQL Enterprise Edition binaries, so it is fully compatible with the platform you might be using. Finally, it costs way less on a yearly basis than a full-blown on-premise MySQL Enterprise subscription.

MySQL Database Service — 100% developed, managed, and supported by the MySQL team

Considerations before migration

Before you begin:

- check your MySQL 8.0 compatibility,

- check your database size (to assess the time needed to migrate), and

- plan a service window.
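To get a first idea of the database size, one option is to query `information_schema` from MySQL Shell in JavaScript mode. The sketch below assumes a classic-protocol session that is already connected to the on-premise server and a schema named `zabbix` (both are assumptions). The figures reported for InnoDB tables are estimates, so treat the result as a rough guide for planning the service window.

```javascript
// Hypothetical schema name; adjust it to your installation.
const ZABBIX_SCHEMA = "zabbix";

// Sum of data and index size for one schema, in bytes (estimated for InnoDB).
const SIZE_QUERY =
  "SELECT COALESCE(SUM(data_length + index_length), 0) AS bytes " +
  "FROM information_schema.tables WHERE table_schema = ?";

// Convert a byte count (number or numeric string) to GiB.
function toGiB(bytes) {
  return Number(bytes) / (1024 ** 3);
}

// `session` and `print` only exist inside MySQL Shell, so the query is
// skipped when the snippet is run anywhere else.
if (typeof session !== "undefined" && session) {
  const row = session.runSql(SIZE_QUERY, [ZABBIX_SCHEMA]).fetchOne();
  print("Schema " + ZABBIX_SCHEMA + ": " + toGiB(row[0]).toFixed(2) + " GiB\n");
}
```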

High-level migration plan

- Set up cloud networking.

- Set up your (on-premise) networking secure connection (to communicate with the cloud).

- Create MySQL Database Service DB System with storage.

- Move the data using MySQL Shell Dump & Load utility.

Creating MySQL DB system with just a few clicks

- Create a customized configuration.

- Start the wizard to create DB system.

- Select Virtual Cloud Network (VCN).

- Select subnet to place your MySQL endpoint.

- Select MySQL configuration (or create customized instances for your workload).

- The shape for the DB System (CPU and RAM) will be set automatically.

- Select the size of the storage for data and backup.

- Create a backup policy or accept the default.

Creating MySQL instances

You can use the MySQL Shell Upgrade Checker Utility to check compatibility with MySQL 8.0.

util.checkForServerUpgrade()
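Called without arguments, the checker uses the current MySQL Shell session. It can also be given a connection string and an options dictionary. The following is a minimal sketch for MySQL Shell in JavaScript mode; the user, host name, and target version are hypothetical and should be replaced with your own values.

```javascript
// Hypothetical connection string for the on-premise MySQL server.
const SOURCE_URI = "zabbix_admin@onprem-db.example.com:3306";

// Options for the upgrade checker: the release to check against and the
// report format ("TEXT" or "JSON").
const checkOptions = {
  targetVersion: "8.0.26", // assumed target; use the version of your DB System
  outputFormat: "TEXT"
};

// `util.checkForServerUpgrade` only exists inside MySQL Shell, so the call
// is skipped when the snippet is run anywhere else.
if (typeof util !== "undefined" && typeof util.checkForServerUpgrade === "function") {
  util.checkForServerUpgrade(SOURCE_URI, checkOptions);
}
```

The report lists errors, warnings, and notices per check, which gives a quick view of what has to be fixed before the migration.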

Loading the data

To move the data, you can use the MySQL Shell Dump & Load utility, which is capable of multi-threading and is callable with the JavaScript methods from MySQL Shell.

So, you can dump the data to, for example, a bastion machine, and then load it into your instance in the cloud. It will take several minutes to load a database of several gigabytes, so it is necessary to plan the service maintenance window accordingly.

In addition, the utility is easy to use. You just need to connect to an instance and dump.
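A dump for MySQL Database Service could be sketched as follows in MySQL Shell JavaScript mode. The output directory and thread count are assumptions. The `ocimds` option turns on compatibility checks for the service, and the listed `compatibility` values are only examples of modifications that may be needed, depending on what the checks report.

```javascript
// Hypothetical output directory on the bastion machine; it should be empty
// or not exist yet.
const DUMP_DIR = "/backup/zabbix-dump";

// Build the options dictionary for util.dumpInstance().
function buildDumpOptions(threads, dryRun) {
  return {
    threads: threads,   // number of parallel dump threads
    ocimds: true,       // check compatibility with MySQL Database Service
    compatibility: ["strip_definers", "strip_restricted_grants"],
    dryRun: dryRun      // true: only report what would be dumped
  };
}

// `util.dumpInstance` only exists inside MySQL Shell (connected to the
// source server), so the call is skipped when run anywhere else.
if (typeof util !== "undefined" && typeof util.dumpInstance === "function") {
  // Start with a dry run to see compatibility issues without writing files.
  util.dumpInstance(DUMP_DIR, buildDumpOptions(8, true));
}
```

Once the dry run reports no remaining issues, the same call with `dryRun` set to `false` writes the dump files.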

MySQL Shell Dump & Load

The operation is pretty straightforward and the migration time will depend on the size of the database.
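As a rough sketch of the load step, assuming a dump directory produced by the MySQL Shell dump utility and a MySQL Shell session connected to the DB System endpoint, the call might look like this. The directory, progress file path, and thread count are hypothetical.

```javascript
// Hypothetical directory that holds the dump files.
const LOAD_DIR = "/backup/zabbix-dump";

// Build the options dictionary for util.loadDump().
function buildLoadOptions(threads) {
  return {
    threads: threads, // number of parallel load threads
    // The progress file lets an interrupted load be resumed.
    progressFile: LOAD_DIR + "-load-progress.json"
  };
}

// `util.loadDump` only exists inside MySQL Shell (connected to the target
// DB System), so the call is skipped when run anywhere else.
if (typeof util !== "undefined" && typeof util.loadDump === "function") {
  util.loadDump(LOAD_DIR, buildLoadOptions(8));
}
```

More threads usually shorten the load, within the limits of the chosen DB System shape and the network link between the bastion machine and the cloud.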

Free trial

You can have a test drive of the MySQL Database Service with $300 in cloud credits, which you can spend in the Oracle Cloud on MySQL Database Service or other cloud services.

Questions & Answers

Question. Do you help with migrating the databases from older versions to MySQL 8.0?

Answer. Yes, this is something we normally do for our customers: we provide guidance, though data migration is normally straightforward.

Question. Does the database size matter? How efficient is MySQL Shell Dump? What if my database is terabytes in size?

Answer. The MySQL Shell Dump & Load utility is much more efficient than MySQL Dump used to be. The database size still matters, though: a database of several terabytes will require more time, but still far less than it used to take.