Post Syndicated from Marshall Jones original https://aws.amazon.com/blogs/security/using-aws-security-services-to-protect-against-detect-and-respond-to-the-log4j-vulnerability/

January 7, 2022: The blog post has been updated to include using Network ACL rules to block potential log4j-related outbound traffic.

January 4, 2022: The blog post has been updated to suggest using WAF rules when correct HTTP Host Header FQDN value is not provided in the request.

December 31, 2021: We made a minor update to the second paragraph in the Amazon Route 53 Resolver DNS Firewall section.

December 29, 2021: A paragraph under the Detect section has been added to provide guidance on validating if log4j exists in an environment.

December 23, 2021: The GuardDuty section has been updated to describe new threat labels added to specific finding to give log4j context.

December 21, 2021: The post includes more info about Route 53 Resolver DNS query logging.

December 20, 2021: The post has been updated to include Amazon Route 53 Resolver DNS Firewall info.

December 17, 2021: The post has been updated to include using Athena to query VPC flow logs.

December 16, 2021: The Respond section of the post has been updated to include IMDSv2 and container mitigation info.

This blog post was first published on December 15, 2021.

Overview

In this post we will provide guidance to help customers who are responding to the recently disclosed log4j vulnerability. This covers what you can do to limit the risk of the vulnerability, how you can try to identify if you are susceptible to the issue, and then what you can do to update your infrastructure with the appropriate patches.

The log4j vulnerability (CVE-2021-44228, CVE-2021-45046) is a critical vulnerability (CVSS 3.1 base score of 10.0) in the ubiquitous logging platform Apache Log4j. This vulnerability allows an attacker to perform a remote code execution on the vulnerable platform. Version 2 of log4j, between versions 2.0-beta-9 and 2.15.0, is affected.

The vulnerability uses the Java Naming and Directory Interface (JNDI) which is used by a Java program to find data, typically through a directory, commonly a LDAP directory in the case of this vulnerability.

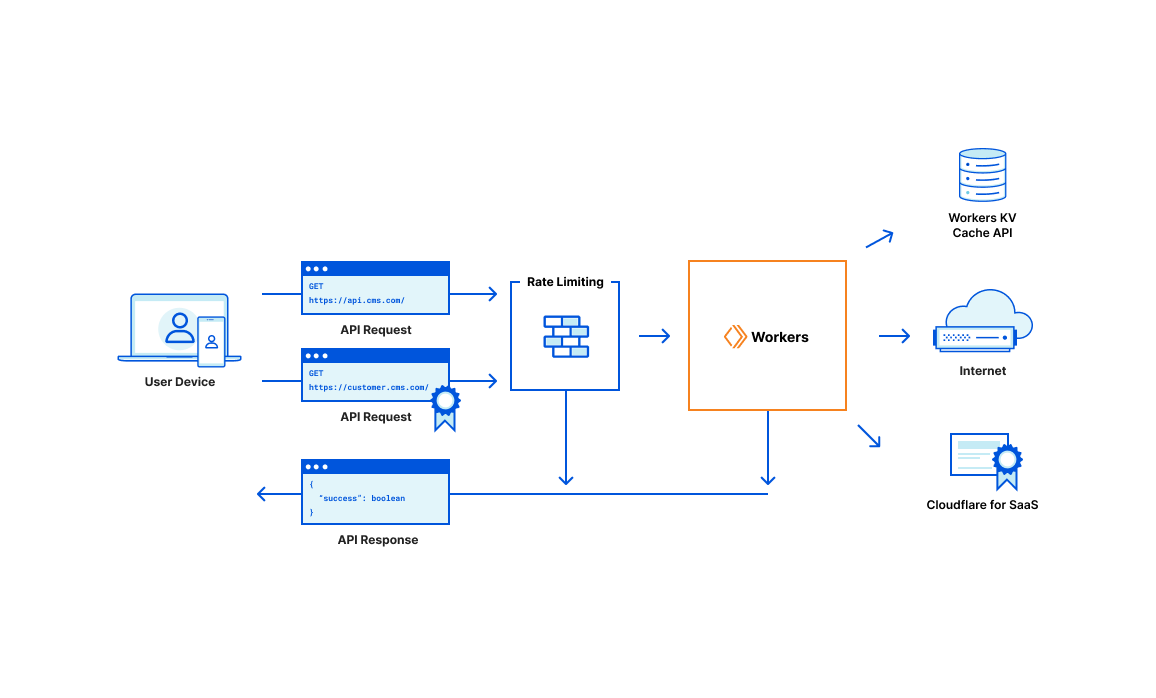

Figure 1, below, highlights the log4j JNDI attack flow.

Figure 1. Log4j attack progression. Source: GovCERT.ch, the Computer Emergency Response Team (GovCERT) of the Swiss government

As an immediate response, follow this blog and use the tool designed to hotpatch a running JVM using any log4j 2.0+. Steve Schmidt, Chief Information Security Officer for AWS, also discussed this hotpatch.

Protect

You can use multiple AWS services to help limit your risk/exposure from the log4j vulnerability. You can build a layered control approach, and/or pick and choose the controls identified below to help limit your exposure.

AWS WAF

Use AWS Web Application Firewall, following AWS Managed Rules for AWS WAF, to help protect your Amazon CloudFront distribution, Amazon API Gateway REST API, Application Load Balancer, or AWS AppSync GraphQL API resources.

- AWSManagedRulesKnownBadInputsRuleSet esp. the Log4JRCE rule which helps inspects the request for the presence of the Log4j vulnerability. Example patterns include ${jndi:ldap://example.com/}.

- AWSManagedRulesAnonymousIpList esp. the AnonymousIPList rule which helps inspect IP addresses of sources known to anonymize client information.

- AWSManagedRulesCommonRuleSet, esp. the SizeRestrictions_BODY rule to verify that the request body size is at most 8 KB (8,192 bytes).

You should also consider implementing WAF rules that deny access, if the correct HTTP Host Header FQDN value is not provided in the request. This can help reduce the likelihood of scanners that are scanning the internet IP address space from reaching your resources protected by WAF via a request with an incorrect Host Header, like an IP address instead of an FQDN. It’s also possible to use custom Application Load Balancer listener rules to achieve this.

If you’re using AWS WAF Classic, you will need to migrate to AWS WAF or create custom regex match conditions.

Have multiple accounts? Follow these instructions to use AWS Firewall Manager to deploy AWS WAF rules centrally across your AWS organization.

Amazon Route 53 Resolver DNS Firewall

You can use Route 53 Resolver DNS Firewall, following AWS Managed Domain Lists, to help proactively protect resources with outbound public DNS resolution. We recommend associating Route 53 Resolver DNS Firewall with a rule configured to block domains on the AWSManagedDomainsMalwareDomainList, which has been updated in all supported AWS regions with domains identified as hosting malware used in conjunction with the log4j vulnerability. AWS will continue to deliver domain updates for Route 53 Resolver DNS Firewall through this list.

Also, you should consider blocking outbound port 53 to prevent the use of external untrusted DNS servers. This helps force all DNS queries through DNS Firewall and ensures DNS traffic is visible for GuardDuty inspection. Using DNS Firewall to block DNS resolution of certain country code top-level domains (ccTLD) that your VPC resources have no legitimate reason to connect out to, may also help. Examples of ccTLDs you may want to block may be included in the known log4j callback domains IOCs.

We also recommend that you enable DNS query logging, which allows you to identify and audit potentially impacted resources within your VPC, by inspecting the DNS logs for the presence of blocked outbound queries due to the log4j vulnerability, or to other known malicious destinations. DNS query logging is also useful in helping identify EC2 instances vulnerable to log4j that are responding to active log4j scans, which may be originating from malicious actors or from legitimate security researchers. In either case, instances responding to these scans potentially have the log4j vulnerability and should be addressed. GreyNoise is monitoring for log4j scans and sharing the callback domains here. Some notable domains customers may want to examine log activity for, but not necessarily block, are: *interact.sh, *leakix.net, *canarytokens.com, *dnslog.cn, *.dnsbin.net, and *cyberwar.nl. It is very likely that instances resolving these domains are vulnerable to log4j.

AWS Network Firewall

Customers can use Suricata-compatible IDS/IPS rules in AWS Network Firewall to deploy network-based detection and protection. While Suricata doesn’t have a protocol detector for LDAP, it is possible to detect these LDAP calls with Suricata. Open-source Suricata rules addressing Log4j are available from corelight, NCC Group, from ET Labs, and from CrowdStrike. These rules can help identify scanning, as well as post exploitation of the log4j vulnerability. Because there is a large amount of benign scanning happening now, we recommend customers focus their time first on potential post-exploitation activities, such as outbound LDAP traffic from their VPC to untrusted internet destinations.

We also recommend customers consider implementing outbound port/protocol enforcement rules that monitor or prevent instances of protocols like LDAP from using non-standard LDAP ports such as 53, 80, 123, and 443. Monitoring or preventing usage of port 1389 outbound may be particularly helpful in identifying systems that have been triggered by internet scanners to make command and control calls outbound. We also recommend that systems without a legitimate business need to initiate network calls out to the internet not be given that ability by default. Outbound network traffic filtering and monitoring is not only very helpful with log4j, but with identifying other classes of vulnerabilities too.

Network Access Control Lists

Customers may be able to use Network Access Control List rules (NACLs) to block some of the known log4j-related outbound ports to help limit further compromise of successfully exploited systems. We recommend customers consider blocking ports 1389, 1388, 1234, 12344, 9999, 8085, 1343 outbound. As NACLs block traffic at the subnet level, careful consideration should be given to ensure any new rules do not block legitimate communications using these outbound ports across internal subnets. Blocking ports 389 and 88 outbound can also be helpful in mitigating log4j, but those ports are commonly used for legitimate applications, especially in a Windows Active Directory environment. See the VPC flow logs section below to get details on how you can validate any ports being considered.

Use IMDSv2

Through the early days of the log4j vulnerability researchers have noted that, once a host has been compromised with the initial JDNI vulnerability, attackers sometimes try to harvest credentials from the host and send those out via some mechanism such as LDAP, HTTP, or DNS lookups. We recommend customers use IAM roles instead of long-term access keys, and not store sensitive information such as credentials in environment variables. Customers can also leverage AWS Secrets Manager to store and automatically rotate database credentials instead of storing long-term database credentials in a host’s environment variables. See prescriptive guidance here and here on how to implement Secrets Manager in your environment.

To help guard against such attacks in AWS when EC2 Roles may be in use — and to help keep all IMDS data private for that matter — customers should consider requiring the use of Instance MetaData Service version 2 (IMDSv2). Since IMDSv2 is enabled by default, you can require its use by disabling IMDSv1 (which is also enabled by default). With IMDSv2, requests are protected by an initial interaction in which the calling process must first obtain a session token with an HTTP PUT, and subsequent requests must contain the token in an HTTP header. This makes it much more difficult for attackers to harvest credentials or any other data from the IMDS. For more information about using IMDSv2, please refer to this blog and documentation. While all recent AWS SDKs and tools support IMDSv2, as with any potentially non-backwards compatible change, test this change on representative systems before deploying it broadly.

Detect

This post has covered how to potentially limit the ability to exploit this vulnerability. Next, we’ll shift our focus to which AWS services can help to detect whether this vulnerability exists in your environment.

Figure 2. Log4j finding in the Inspector console

Amazon Inspector

As shown in Figure 2, the Amazon Inspector team has created coverage for identifying the existence of this vulnerability in your Amazon EC2 instances and Amazon Elastic Container Registry Images (Amazon ECR). With the new Amazon Inspector, scanning is automated and continual. Continual scanning is driven by events such as new software packages, new instances, and new common vulnerability and exposure (CVEs) being published.

For example, once the Inspector team added support for the log4j vulnerability (CVE-2021-44228 & CVE-2021-45046), Inspector immediately began looking for this vulnerability for all supported AWS Systems Manager managed instances where Log4j was installed via OS package managers and where this package was present in Maven-compatible Amazon ECR container images. If this vulnerability is present, findings will begin appearing without any manual action. If you are using Inspector Classic, you will need to ensure you are running an assessment against all of your Amazon EC2 instances. You can follow this documentation to ensure you are creating an assessment target for all of your Amazon EC2 instances. Here are further details on container scanning updates in Amazon ECR private registries.

GuardDuty

In addition to finding the presence of this vulnerability through Inspector, the Amazon GuardDuty team has also begun adding indicators of compromise associated with exploiting the Log4j vulnerability, and will continue to do so. GuardDuty will monitor for attempts to reach known-bad IP addresses or DNS entries, and can also find post-exploit activity through anomaly-based behavioral findings. For example, if an Amazon EC2 instance starts communicating on unusual ports, GuardDuty would detect this activity and create the finding Behavior:EC2/NetworkPortUnusual. This activity is not limited to the NetworkPortUnusual finding, though. GuardDuty has a number of different findings associated with post exploit activity, such as credential compromise, that might be seen in response to a compromised AWS resource. For a list of GuardDuty findings, please refer to this GuardDuty documentation.

To further help you identify and prioritize issues related to CVE-2021-44228 and CVE-2021-45046, the GuardDuty team has added threat labels to the finding detail for the following finding types:

Backdoor:EC2/C&CActivity.B

If the IP queried is Log4j-related, then fields of the associated finding will include the following values:

- service.additionalInfo.threatListName = Amazon

- service.additionalInfo.threatName = Log4j Related

Backdoor:EC2/C&CActivity.B!DNS

If the domain name queried is Log4j-related, then the fields of the associated finding will include the following values:

- service.additionalInfo.threatListName = Amazon

- service.additionalInfo.threatName = Log4j Related

Behavior:EC2/NetworkPortUnusual

If the EC2 instance communicated on port 389 or port 1389, then the associated finding severity will be modified to High, and the finding fields will include the following value:

- service.additionalInfo.context = Possible Log4j callback

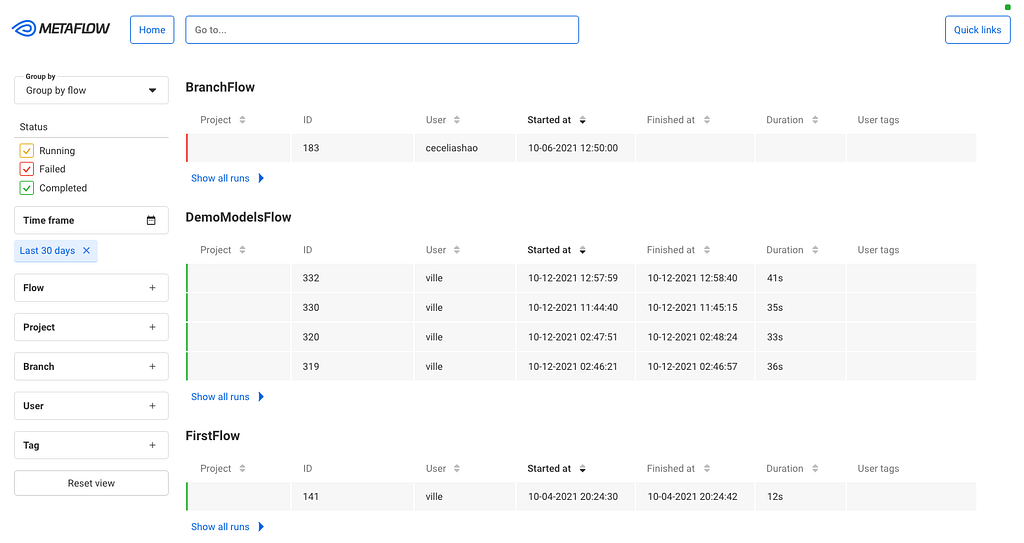

Figure 3. GuardDuty finding with log4j threat labels

Security Hub

Many customers today also use AWS Security Hub with Inspector and GuardDuty to aggregate alerts and enable automatic remediation and response. In the short term, we recommend that you use Security Hub to set up alerting through AWS Chatbot, Amazon Simple Notification Service, or a ticketing system for visibility when Inspector finds this vulnerability in your environment. In the long term, we recommend you use Security Hub to enable automatic remediation and response for security alerts when appropriate. Here are ideas on how to setup automatic remediation and response with Security Hub.

VPC flow logs

Customers can use Athena or CloudWatch Logs Insights queries against their VPC flow logs to help identify VPC resources associated with log4j post exploitation outbound network activity. Version 5 of VPC flow logs is particularly useful, because it includes the “flow-direction” field. We recommend customers start by paying special attention to outbound network calls using destination port 1389 since outbound usage of that port is less common in legitimate applications. Customers should also investigate outbound network calls using destination ports 1388, 1234, 12344, 9999, 8085, 1343, 389, and 88 to untrusted internet destination IP addresses. Free-tier IP reputation services, such as VirusTotal, GreyNoise, NOC.org, and ipinfo.io, can provide helpful insights related to public IP addresses found in the logged activity.

Note: If you have a Microsoft Active Directory environment in the captured VPC flow logs being queried, you might see false positives due to its use of port 389.

Validation with open-source tools

With the evolving nature of the different log4j vulnerabilities, it’s important to validate that upgrades, patches, and mitigations in your environment are indeed working to mitigate potential exploitation of the log4j vulnerability. You can use open-source tools, such as aws_public_ips, to get a list of all your current public IP addresses for an AWS Account, and then actively scan those IPs with log4j-scan using a DNS Canary Token to get notification of which systems still have the log4j vulnerability and can be exploited. We recommend that you run this scan periodically over the next few weeks to validate that any mitigations are still in place, and no new systems are vulnerable to the log4j issue.

Respond

The first two sections have discussed ways to help prevent potential exploitation attempts, and how to detect the presence of the vulnerability and potential exploitation attempts. In this section, we will focus on steps that you can take to mitigate this vulnerability. As we noted in the overview, the immediate response recommended is to follow this blog and use the tool designed to hotpatch a running JVM using any log4j 2.0+. Steve Schmidt, Chief Information Security Officer for AWS, also discussed this hotpatch.

Figure 4. Systems Manager Patch Manager patch baseline approving critical patches immediately

AWS Patch Manager

If you use AWS Systems Manager Patch Manager, and you have critical patches set to install immediately in your patch baseline, your EC2 instances will already have the patch. It is important to note that you’re not done at this point. Next, you will need to update the class path wherever the library is used in your application code, to ensure you are using the most up-to-date version. You can use AWS Patch Manager to patch managed nodes in a hybrid environment. See here for further implementation details.

Container mitigation

To install the hotpatch noted in the overview onto EKS cluster worker nodes AWS has developed an RPM that performs a JVM-level hotpatch which disables JNDI lookups from the log4j2 library. The Apache Log4j2 node agent is an open-source project built by the Kubernetes team at AWS. To learn more about how to install this node agent, please visit the this Github page.

Once identified, ECR container images will need to be updated to use the patched log4j version. Downstream, you will need to ensure that any containers built with a vulnerable ECR container image are updated to use the new image as soon as possible. This can vary depending on the service you are using to deploy these images. For example, if you are using Amazon Elastic Container Service (Amazon ECS), you might want to update the service to force a new deployment, which will pull down the image using the new log4j version. Check the documentation that supports the method you use to deploy containers.

If you’re running Java-based applications on Windows containers, follow Microsoft’s guidance here.

We recommend you vend new application credentials and revoke existing credentials immediately after patching.

Mitigation strategies if you can’t upgrade

In case you either can’t upgrade to a patched version, which disables access to JDNI by default, or if you are still determining your strategy for how you are going to patch your environment, you can mitigate this vulnerability by changing your log4j configuration. To implement this mitigation in releases >=2.10, you will need to remove the JndiLookup class from the classpath: zip -q -d log4j-core-*.jar org/apache/logging/log4j/core/lookup/JndiLookup.class.

For a more comprehensive list about mitigation steps for specific versions, refer to the Apache website.

Conclusion

In this blog post, we outlined key AWS security services that enable you to adopt a layered approach to help protect against, detect, and respond to your risk from the log4j vulnerability. We urge you to continue to monitor our security bulletins; we will continue updating our bulletins with our remediation efforts for our side of the shared-responsibility model.

Given the criticality of this vulnerability, we urge you to pay close attention to the vulnerability, and appropriately prioritize implementing the controls highlighted in this blog.

If you have feedback about this post, submit comments in the Comments section below.

Want more AWS Security news? Follow us on Twitter.

Last AWS re:Invent 2020, we announced

Last AWS re:Invent 2020, we announced  Before the end of the current LTS period, you will be able to use your AWS account to complete the FreeRTOS EMP registration on the FreeRTOS console, review and agree to the associated terms and conditions, select the LTS version, and buy an annual subscription. You will then gain access to the private repository where you’ll receive .zip files containing a git repo with chosen libraries, patches, and related notifications.

Before the end of the current LTS period, you will be able to use your AWS account to complete the FreeRTOS EMP registration on the FreeRTOS console, review and agree to the associated terms and conditions, select the LTS version, and buy an annual subscription. You will then gain access to the private repository where you’ll receive .zip files containing a git repo with chosen libraries, patches, and related notifications.